Notes

Article history

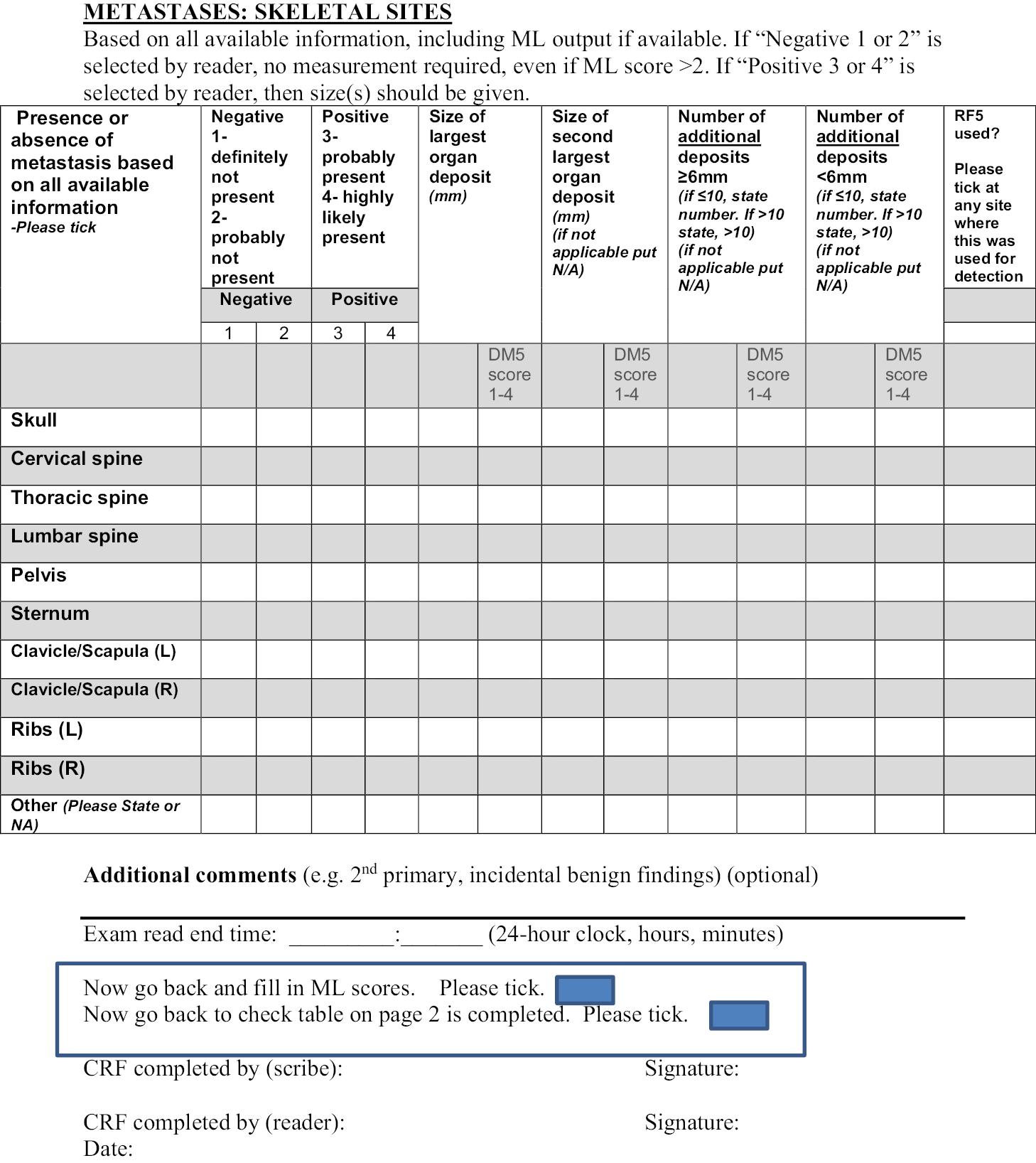

The research reported in this issue of the journal was funded by the EME programme as award number 13/122/01. The contractual start date was in February 2015. The draft manuscript began editorial review in October 2020 and was accepted for publication in July 2023. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The EME editors and production house have tried to ensure the accuracy of the authors’ manuscript and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this article.

Permissions

Copyright statement

Copyright © 2024 Henriksen et al. This work was produced by Henriksen et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.

2024 Henriksen et al.

Chapter 1 Introduction

The use of medical imaging has steadily and rapidly increased, becoming a central pillar in the management of patients, particularly in the setting of cancer care. With increasing use of complex modalities such as computed tomography (CT) and magnetic resonance imaging (MRI) for diagnosis, treatment planning and clinical studies, it has become desirable to use image-vision methods, such as machine-learning (ML) methods, to assist radiological experts in clinical diagnosis, quantification tasks and treatment planning. 1 In recent years, there have been rapid developments in both machine-learning methods as well as MRI techniques. For example, less than 10 years ago the use of whole-body magnetic resonance imaging (WB-MRI) protocols was uncommon due to many limitations, such as the forbidding acquisition times and limited availability. This past decade has shown substantial progress in WB-MRI protocols. This very promising technique has now started to move from the research setting to becoming more commonly used in clinical practice. It is currently recommended by the National Institute for Health and Care Excellence (NICE) for the detection of lesions in the bone marrow in myeloma and is used for detection of metastatic bone disease in prostate and breast cancer. 2–4 This clinical use has been supported by recent technological developments and validation of WB-MRI by multiple studies and consensus papers. 5 The STREAMLINE study of WB-MRI, in patients with newly diagnosed lung or colorectal cancer, reported similar staging accuracy using WB-MRI but with a reduced number of tests needed to reach the final determination of stage of disease and with a reduction in time to staging and cost. 6–8 A single WB-MRI was also preferred by patients. 9,10 As a result, WB-MRI is progressively proposed by radiologists as an efficient examination for an expanding range of indications. 11 Multi-modality WB-MRI is emerging as a new imaging standard for many diseases requiring detection and monitoring of skeletal and soft-tissue involvement in cancer. 12 WB-MRI has been successfully used in several cancers for the detection of bone, lymph nodes and visceral metastases and their monitoring under treatment. 13 Among these, metastatic cancers to bone and hematological malignancies, mainly multiple myeloma, benefit from WB-MRI. The potential benefits of using WB-MRI are considered to be a reduction in ionising radiation exposure, increased health outcomes due to reduced waiting periods and decreased patient anxiety caused by waiting periods for multiple staging investigations.

However, the radiological interpretation of WB-MRI scans requires a high level of expertise and training. Interpretation of WB-MRI requires integration of a large number of image data from multiple sequences. As a result, the reading process can become rather time-consuming for inexperienced readers, with increased risk of misinterpretations. 14 This has hindered the translation of this technique into widespread use and the routine use of WB-MRI is currently limited to a relatively small number of expert centres.

The principal challenge for translating WB-MRI in clinical routine comes from the technical skills for image acquisition and the large number of data to be reviewed. Computer-aided image analysis may alleviate the workflow. Such automatic algorithms could ultimately facilitate the process of reading WB scans by reducing the reading time (RT) and improving the diagnostic accuracy of WB-MRI.

The advent of deep learning has pushed medical image analysis to new levels, rapidly replacing more traditional ML and computer vision pipelines. Detection of anatomical tissue of interest is crucial for quantitative analysis of WB images. Many image detection and segmentation algorithms have been proposed and some machine-learning algorithms can now perform image analysis tasks with performance equal, or even superior, to the one achieved by human experts. 15,16 Automatically derived measurements and visual guides, obtained with machine-learning techniques, may serve as a valuable aid in many clinical tasks and are highly likely to transform the ways we see and use medical imaging. Reliable machine-learning algorithms are required for the accurate delineation of anatomical structures and/or lesions from different modalities of medical images.

In this project, our aim was to develop machine-learning methods for improving the diagnostic performance and reducing the RT of WB-MRI in colon and lung cancer in order to support the translation of WB-MRI into routine clinical care for the benefit of patients. Given the pragmatic setting of MAchine Learning In whole Body Oncology (MALIBO), we believe that the methodological steps and challenges described here can be of invaluable assistance, and can serve as a guide, to groups who would like to apply similar studies in the future, not only for MRI, but in radiology generally. 17

Background

Whole-body MRI, including diffusion-weighted magnetic resonance imaging (DW-MRI), is currently an active research interest in oncology imaging as a non-invasive technique for the detection of metastatic disease, as well as a potential biomarker for clinical use and drug development. 18 Meta-analyses support further development of WB-MRI in clinical practice, in view of the promising sensitivities and specificities for detection of metastases (pooled sensitivity 0.92 and pooled specificity of 0.9319,20). DW-MRI is now standard in WB imaging. DW-MRI allows quantification of water diffusivity in tissues and has been found to be sensitive for detecting tumour sites in organs and bones, with visible changes in the MRI signal intensity due to a reduction in water diffusivity associated with the highly cellular nature of tumour tissue. 21 The characteristic appearances of the bone marrow have been studied in relatively small numbers of patients without metastatic disease, and in patients with breast cancer, myeloma and prostate cancer. 22–24

Other than the requirement for extensive training and potentially slow nature of manual reads, one of the main issues when using WB-MRI for staging of patients with cancer is the potential number of false interpretations. Many ‘normal’ anatomical structures (such as lymph nodes) may reflect similar diffusion properties compared to pathological regions. The possibility of using computer-assisted reading or machine-learning (ML) techniques has been considered in aiding interpretation of complex MRI data sets. One group evaluated the topography of whole- body adipose tissue and proposed an algorithm that enables reliable and completely automatic profiles of adipose distribution from the WB data set, reducing the examination and analysis time to less than half an hour. 25 Another group has developed a parametric modelling approach for computer-aided detection of vertebral column metastases in WB-MRI. 26 ML techniques have previously been developed to differentiate benign (86 cases) from malignant (49 cases) in soft-tissue tumours using a large MRI database of multicentre, multimachine MRI images, but without using diffusion-weighted imaging (DWI). 27 Co-investigators at Imperial College London have previously developed methods for organ localisation in WB DIXON MRI and accurate semantic segmentation on CT. 28–32

Machine learning for image segmentation

Medical image segmentation aims to identify regions of interests (ROIs) from the image volume that are relevant to diagnosis or image interpretation. 33 Numerous researchers have proposed various automated segmentation systems, including, but not limited to, active contour, graph cut and clustering. These segmentation algorithms are built on traditional methods such as edge detection filters and mathematical methods. However, due to developments in neural networks in the past decade, convolutional neural networks (CNNs) dictate the state of the art in biomedical image segmentation. 34,35 One of the notable network architectures is based on encoder–decoder method for semantic segmentation, including fully convolutional networks (FCNs) and U-Net. 36,37 A successful three dimensional (3D) neural network for brain tumour segmentation was DeepMedic, introduced by Kamnitsas et al. 15 Kamnitsas et al. later enhanced an ensemble by combining three different network architectures, namely 3D FCN, 3D U-Net and DeepMedic, trained with different loss functions (Dice loss and cross-entropy) and different normalisation schemes. 38

Whole-body magnetic resonance imaging for oncology

Simultaneously, MRI techniques have experienced rapid development, allowing the use of WB-MRI in evaluating for cancer or vascular disease,12 which was not possible in the last decade. Recently, a meta-analysis was conducted to evaluate the diagnostic performance of WB-DWI technique in detection of primary and metastatic malignancies compared with that of whole-body positron emission tomography/CT (WB-PET/CT). 39 It was found that WB-DWI has similar, good diagnostic performance for the detection of primary and metastatic malignancies compared with WB-PET/CT [area under curve (AUC) of WB-MRI 0.966 vs. AUC of WB-PET/CT 0.984]. This suggests that WB-MRI can be used to replace WB-PET/CT in certain clinical settings, such as some cancer studies.

Cancer is a leading cause of death worldwide, accounting for an estimated 9.6 million deaths in 2018 (www.who.int/news-room/fact-sheets/detail/cancer). Colorectal and lung cancer are the third and fourth most common cancers in the UK, accounting for 13% and 12% of all new cancers, respectively, and they are the leading causes of cancer-related deaths in the UK. In both cancers, detection of metastatic disease is fundamental to treatment strategy. 8 Although a range of imaging tests are available for diagnosis and staging, including CT and PET-CT, there is growing interests in using WB-MRI as an alternative to multimodality staging pathways. This is because WB-MRI does not impart diagnostic ionising radiation to patients, and promising data support its ability to stage. 8,40 The recently reported National Institute for Health and Care Research (NIHR)-funded STREAMLINE study40 supports the use of WB-MRI for lung and colorectal cancer staging and this study was based on the data sourced from the STREAMLINE study. Apart from 51 WB-(DW)-MRI data set that have already been acquired,41 as part of whole-body protocol optimisation study, the trial used the WB-MRI data predominantly from the NIHR-funded STREAMLINE-C and STREAMLINE-L studies. 8 Additional cases were obtained from the CRUK (Cancer Research United Kingdom) funded MELT study (Whole-Body Functional and Anatomical MRI: Accuracy in Staging and Treatment Response Monitoring in Adolescent Hodgkin’s Lymphoma Compared to Conventional Multi-modality Imaging: NCT01459224). 42 Also, data from the MASTER study [MRI Accuracy in STaging and Evaluation of Treatment Response in Cancer (Lymphoma and Prostate-MASTER L and MASTER P)] were employed (12/LO/0428). 43 These data sets demonstrated additional cases of nodal disease and bone metastases, thereby ensuring a variation in the distribution of disease used to develop the ML algorithms. However, due to extensive heterogeneity in the data from the different studies, the STREAMLINE studies provided the final cohort for the MALIBO study.

Existing literature using machine learning for lesion detection from whole-body magnetic resonance imaging study

We searched PubMed for articles with medical subject headings (MeSH) and full-text searched for ‘ML and MRI’, ‘WB-MRI and lesion detection’, ‘WB-MRI segmentation’ and ‘ML and WB-MRI’. We did not set the beginning of the publish time from PubMed, but our ending publish time was until 1 October 2020.

With ‘ML and MRI’ as keyword, we found there were 3325 papers from PubMed. As we can see, the number of publications in ML and MRI is increasing constantly. There are also number of available reviews. 44–50 This covers a wide range of research works from neuronal networks to deep learning and from image segmentation to disease prediction.

A total of 817 papers were found from PubMed using ‘WB-MRI and lesion detection’ as keywords. Many of these are for detection of bone lesions (using ‘WB-MRI and lesion detection and bone’ as keywords there are 356 papers, i.e. 356/817 = 0.44), suggesting there is great interest in applying WB-MRI in general and WB-DWI for bone study in particular.

If we used ‘WB-MRI segmentation’ as keywords from PubMed, there were fewer articles, which include prostate,51 skeleton,52 blood vessel segmentation based on magnetic resonance angiography (MRA)53 and manual tumour segmentation. 54 When limiting the keywords to ‘ML and WB-MRI’, besides our previous studies14,17 and a study for small-animal organ segmentation from WB-MRI using multiple support vector machine (SVM)-kNN (k-nearest neighbour) classifiers,55 there were only the following articles that are described below:

Firstly, a SVM method was used to segment prostate from WB-MRI scans. The method employed 3D neighbourhood information to build classification vectors from automatically generated features and randomly selected 16 MRI examinations for validation. 56 The result suggested that the SVM for prostate segmentation can segment the prostate in WB-MRI scans with good segmentation quality.

Secondly, a combined segmentation method which included image thresholds, Dixon fat-water segmentation and component analysis were adopted to detect the lungs. MRI images are segmented into five tissue classes (not including bone), and each class is assigned a default linear attenuation value. The method was assessed using WB-MRI. 57

Thirdly, a fully automated algorithm for extraction of the 3D-arterial tree and labelling the tree segments from WB-MRA sequences was presented. The algorithm developed consists of two core parts: (1) 3D-volume reconstruction from different stations with simultaneous correction of different types of intensity inhomogeneity, and (2) extraction of the arterial tree and subsequent labelling of the pruned extracted tree. A subjective visual validation of the method, with respect to the extracted tree, was performed. The results indicated clinical potential of the approach in enabling fully automated and accurate analysis of the entire arterial tree. This was the first study that not only automatically extracts the WB-MRA arterial tree, but also labels the vessel tree segments. 58

Thus, there are few studies in the field of using ML for human WB-MRI evaluation. The major reasons for this may include challenges related to segmenting and labelling anatomical regions due to appearance variations, the frequent presence of imaging artefacts, and a paucity and variability of annotated data. In addition, ML, particularly, with deep NN (neural network), has only been more widely developed in the last decade. As a result, there were not many described ML methods for human WB-MRI studies. Furthermore, WB-MRI itself is a relatively new technique which has only been established in the clinical setting in the last decade. 11 Although there were only a few related studies in this field, the application of ML methods to WB-MRI is thought to be of potential value in oncological imaging, to support the radiologist reading a complex imaging study that requires integration of fairly extensive information.

Clinical studies that this study relates to

The MALIBO study proposed to use WB-MRI data predominantly from the NIHR-funded STREAMLINE L and C studies. These are multicentre prospective cohort studies that evaluated WB-MRI in newly diagnosed non-small cell lung cancer (250 patients; STREAMLINE-L; ISRCTN50436483) and colorectal cancer (322 patients; STREAMLINE-C; ISRCTN43958015). 40 The studies initially defined WB-MRI acquisition, quality assurance and analysis protocols applicable to daily NHS practice. The objectives of both studies are the same. The primary objective was to evaluate whether early WB-MRI increases detection rate for metastases compared to standard NICE-approved diagnostic pathways for each of the tumour types studied (a full description of the diagnostic pathways is available). 6–8,40 Secondary objectives included assessing the influence of WB-MRI on time to and nature of first major treatment decision following definitive staging. At 12-month patient follow-up, a multidisciplinary consensus panel defined the reference standard for tumour stage considering all clinical, pathological, post-mortem and imaging follow-up. Accuracy was defined per lesion, per organ and per patient.

The STREAMLINE-C study recruited patients from 16 UK hospitals between March 2013 and August 2016 with a final number of evaluable patients of 299, 68 (23%) of whom had metastasis at baseline. The STREAMLINE-L study recruited patients from 16 UK hospitals between March 2013 and September 2016 with a final number of evaluable patients of 187, 52 (28%) of whom had metastasis at baseline. The ISRCTN for STREAMLINE-L is ISRCTN50436483 and for STREAMLINE-C, it is ISRCTN43958015. 40

Additional cases were obtained from the CRUK-funded MELT study (Whole-Body Functional and Anatomical MRI: Accuracy in Staging and Treatment Response Monitoring in Adolescent Hodgkin’s Lymphoma Compared to Conventional Multi-modality Imaging, NCT01459224)59 and the University College London Hospital (UCLH)-sponsored MASTER study, including cases with lymphoma and prostate cancer was also used. 42,43 The original justification for using these data sets was that they would demonstrate additional cases of nodal disease and sclerotic bone metastases, thereby ensuring a variation in the distribution of disease used to develop the ML algorithm, as the cases from STREAMLINE studies are likely to have more non-nodal metastatic sites, such as liver (LVR) and lytic bone metastases. The purpose of the MELT study was to compare staging accuracy as well as response assessment using WB-MRI with standard investigations in patients with newly diagnosed Hodgkin’s lymphoma. It was a prospective observational cohort study. The primary outcome measures were: per-site sensitivity and specificity of MRI for nodal and extra-nodal sites and concordance in final disease stage with the multimodality reference standard (at staging). The reference standard for the MELT study was contemporaneous multidisciplinary tumour board (MDT) with all other staging, for example, PET-CT and CT at the time of diagnosis and initial staging. Secondary outcome measures included: (1) interobserver agreement for MRI radiologists, and (2) evaluation of different MRI sequences on diagnostic accuracy; simulated effect of MRI on clinical management.

Rationale for the study

In order to make WB-DW-MRI a useful and clinically relevant tool within the NHS, a method that could assist the radiologist in improving diagnostic accuracy while reducing RT would be beneficial to deliver better accuracy, productivity and cost-effectiveness. An important aspect in the development of diagnostic support systems is semantic understanding of input data. In the case of WB-DW-MRI, it is essential to ‘teach’ the computer to automatically detect and localise different anatomical structures and discriminate normal and pathological appearances. A computer system that is able understand what is shown in an image can be effectively used to implement an intelligent radiology inspection tool. Such a tool may greatly support the radiologist when reading the large amount of MRI data, with integration of different MRI sequences. Guided navigation to ROI, automatic adjustment of organ and tissue specific visualisation parameters, and quantification of volume and extent of suspicious regions are some of the features that such a system would provide and thus, potentially reduce the time needed for an expert to perform diagnostic tasks. Previous ML methods (described in Image pre-processing for whole-body-magnetic resonance imaging: correction of fat-water swaps in Dixon magnetic resonance imaging) can be adapted to WB-DW-MRI to allow automatic vertebrae localisation,26 to automatically exclude false-positive detections in suspicious regions and to discriminate malignant from benign structures28–32). These methods have yielded promising results for their respective tasks. They are all based on a particular concept of ML called supervised learning. In supervised learning the assumption is that some annotated training data are available that can be used to train a predictor model. Here, the annotations reflect the output value that one would like to infer for new patient images. The training data can be defined as a set T = {(X!, Y!)} of pairs of input data Y!, here a WB-DW-MRI, and some desired output Y!, for example, a point-wise probability map that indicates the likelihood for each image point to be malignant. Using the training data, the aim of an employed learning procedure is then to estimate the conditional probability distribution (Y|X). Having a good estimate of this distribution allows prediction of output Y for any new input data X. In the context of WB-MRI for staging, the automatically obtained predictions for a new patient image can be integrated in a radiology inspection tool, for example to automatically navigate to or highlight suspicious region.

Objectives of this study

As this is a new area for oncology imaging research, there were no previous ML or CNN developed tools to apply to WB-MRI for tumour staging nor for lesion detection. To the best of our knowledge, the applicability of this CNNs method for lesion segmentation from WB-MRI had not been investigated in a clinical setting at the time the study was commenced. Allowing for this, the main purpose of our project was to study the possibility to apply state-of-the-art CNN methods for lesion detection on human WB-MRI, particularly, for detecting lung and colon cancer and any metastatic lesions. Our plan was to develop, compare and select the most appropriate ML methods to achieve these goals.

Primary and secondary objectives

The primary objective of Phases 1 and 2 of the study was to develop a ML method (or modify an available method) for the detection of cancer lesions on WB-MRI.

The primary objective of Phase 3 of the study was to compare the diagnostic test accuracy of WB-MRI, as read by independent, experienced readers in patients being staged for cancer, with and without the aid of ML support, against the reference standard from the source studies.

Secondary objectives

The planned secondary objectives of this study were:

-

To compare the RT of WB-MRI scans, as recorded by experienced readers, with and without the assistance of ML methods.

-

To determine the interobserver variability of WB-MRI diagnosis of primary and metastatic lesions by different radiologists, with and without the assistance of ML methods.

-

To evaluate the diagnostic accuracy of WB-(DW)-MRI, as reported by non-experienced readers, with and without the assistance of ML methods.

-

To estimate the diagnostic performance of WB-(DW)-MRI when using different combinations of acquired MRI sequences, with and without the support of ML methods.

-

To determine the number of potential, additional staging tests that would be redundant if ML methods were applied in WB-(DW)-MRI, by means of a simple cost-effectiveness analysis.

Most of the study objectives were achieved, step by step, in the three phases of this study.

Study design

This is an observational study (study limited to working with data), using three different patient cohorts, being evaluated in series during three consecutive phases.

Phase 1: segmentation of normal organs

Development and optimisation of a ML pipeline to automatically identify anatomical structures of interest in WB-MRI in healthy volunteers. For automatically labelling anatomical structures of interest, we extended previous work that automatically segments abdominal organs from CT data. 30 More specifically, we used a hierarchical weighting approach in which the anatomical atlases were constructed first at participant level and then followed by atlas construction at organ level and finally at voxel level. This approach has been shown to accommodate the significant body anatomical variability across different participants. By combining this with patch-based segmentation we were able to accurately and robustly annotate anatomical structures of interest. In order to construct the anatomical atlases, whole body MRI data sets from 50 healthy volunteers were used; these were collected under a separate ethics approval [Imperial College Research Ethics Committee (ICREC) 08/H0707/58]. The initial ML output was the ability to automatically segment the normal organs.

Phase 2: ‘training and validation set’ in cancer lesions

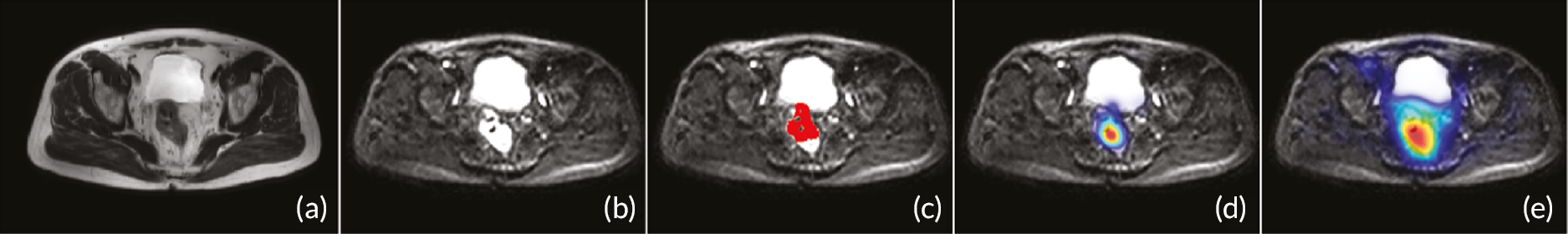

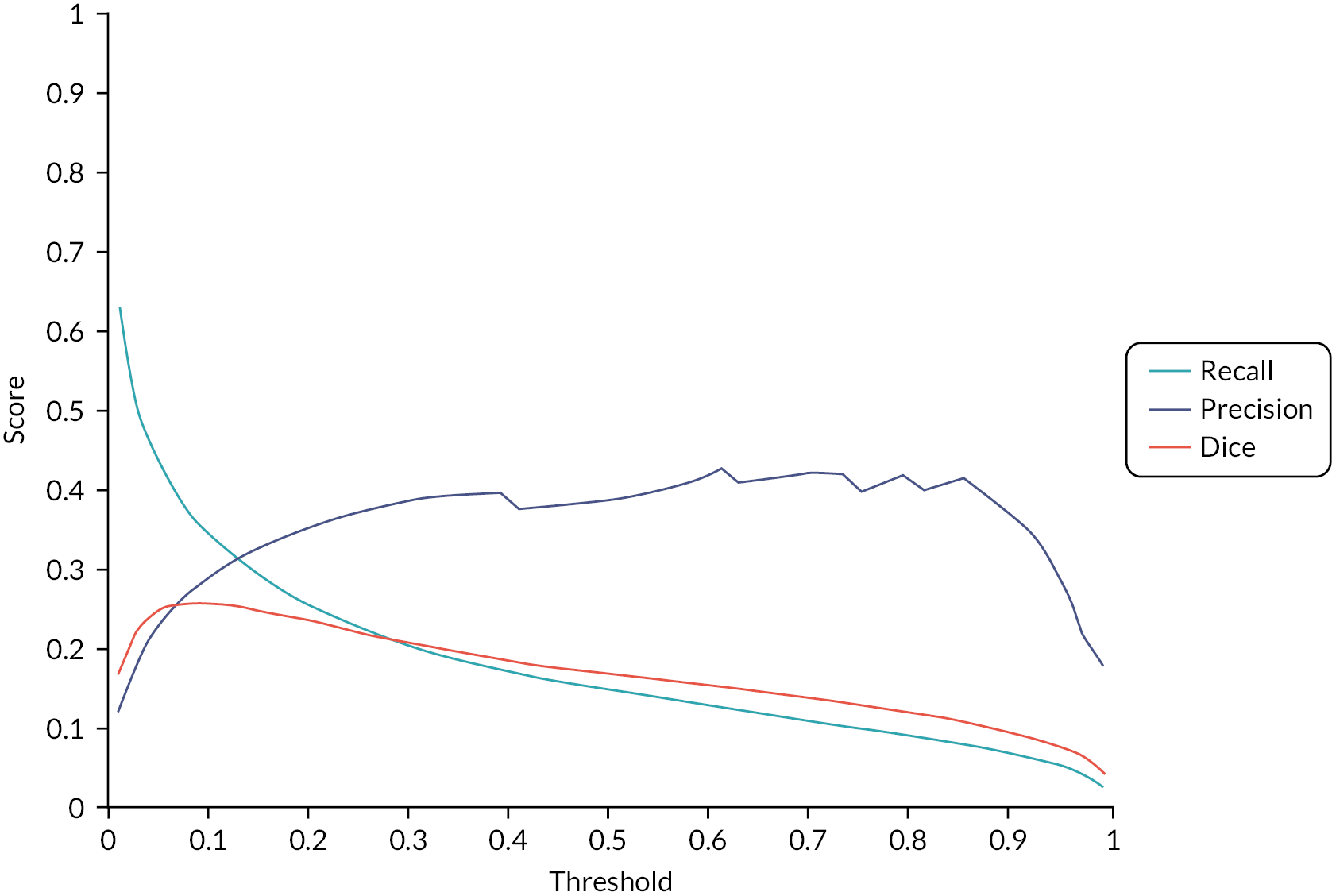

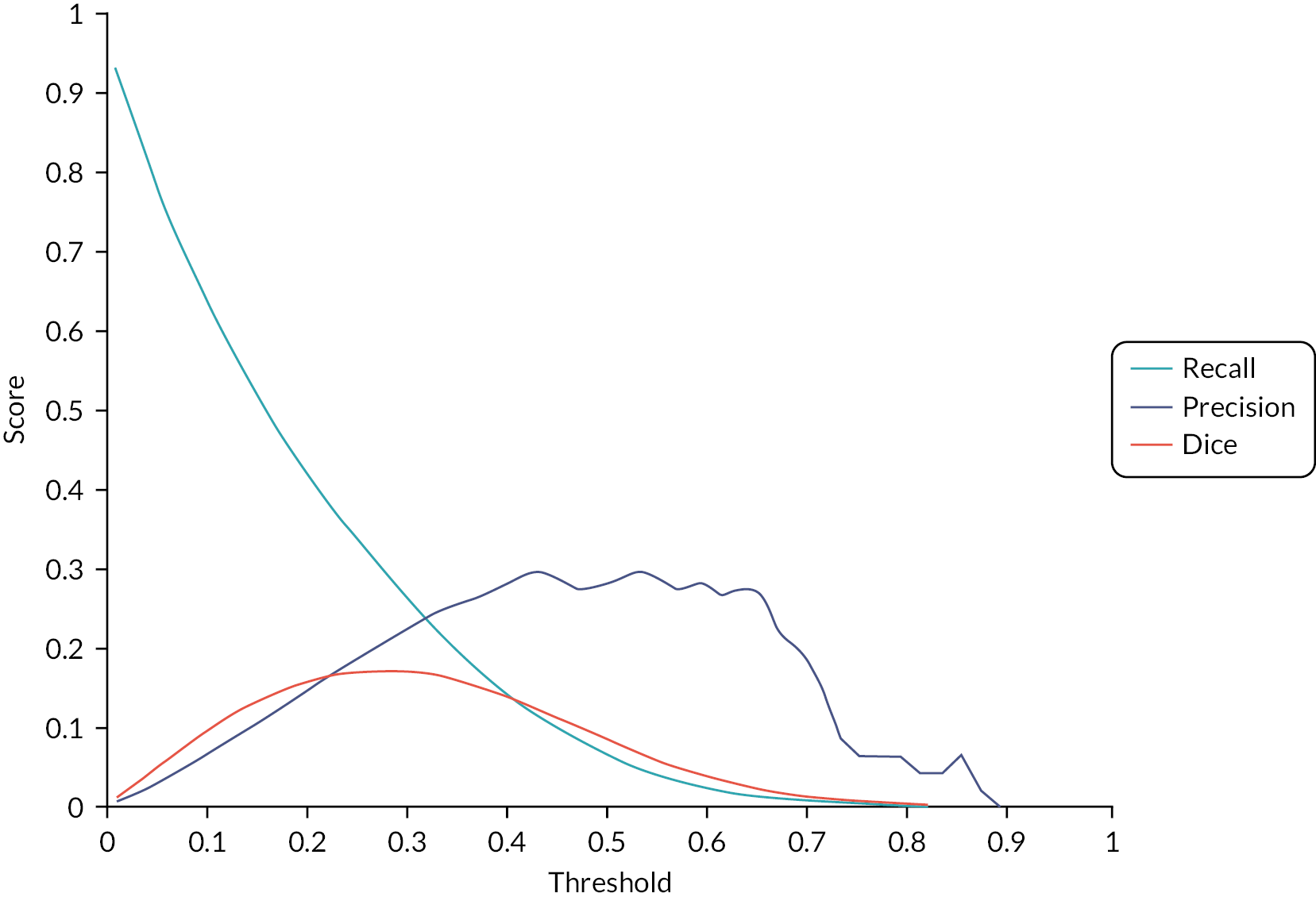

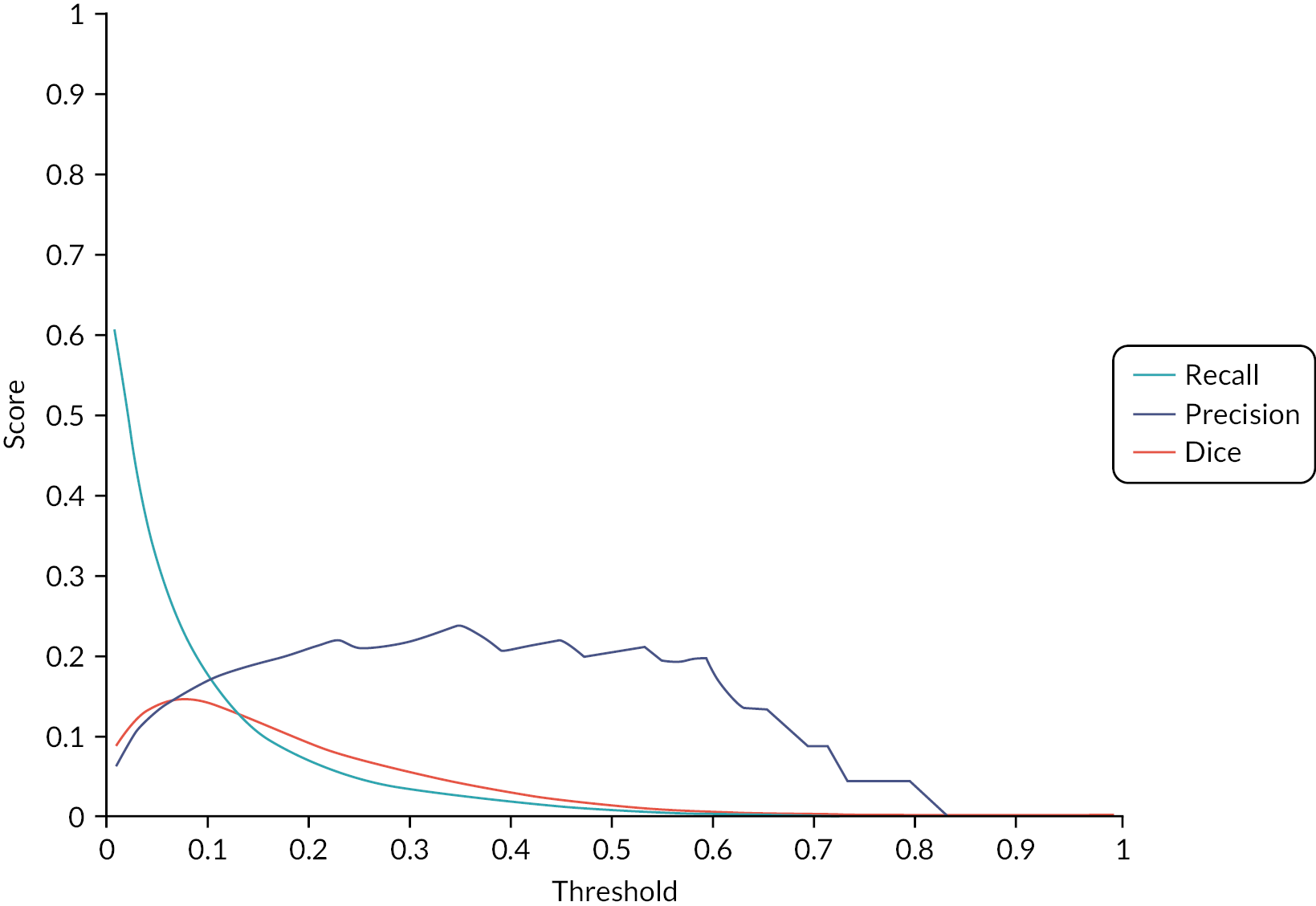

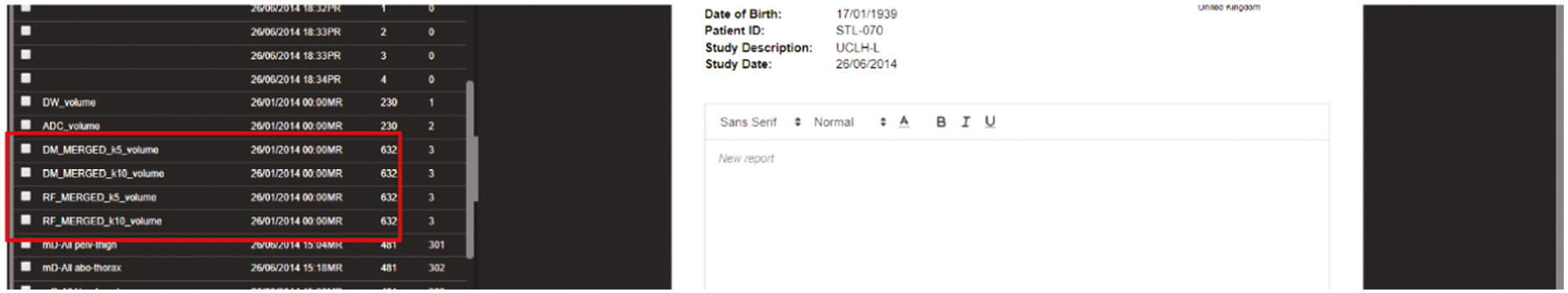

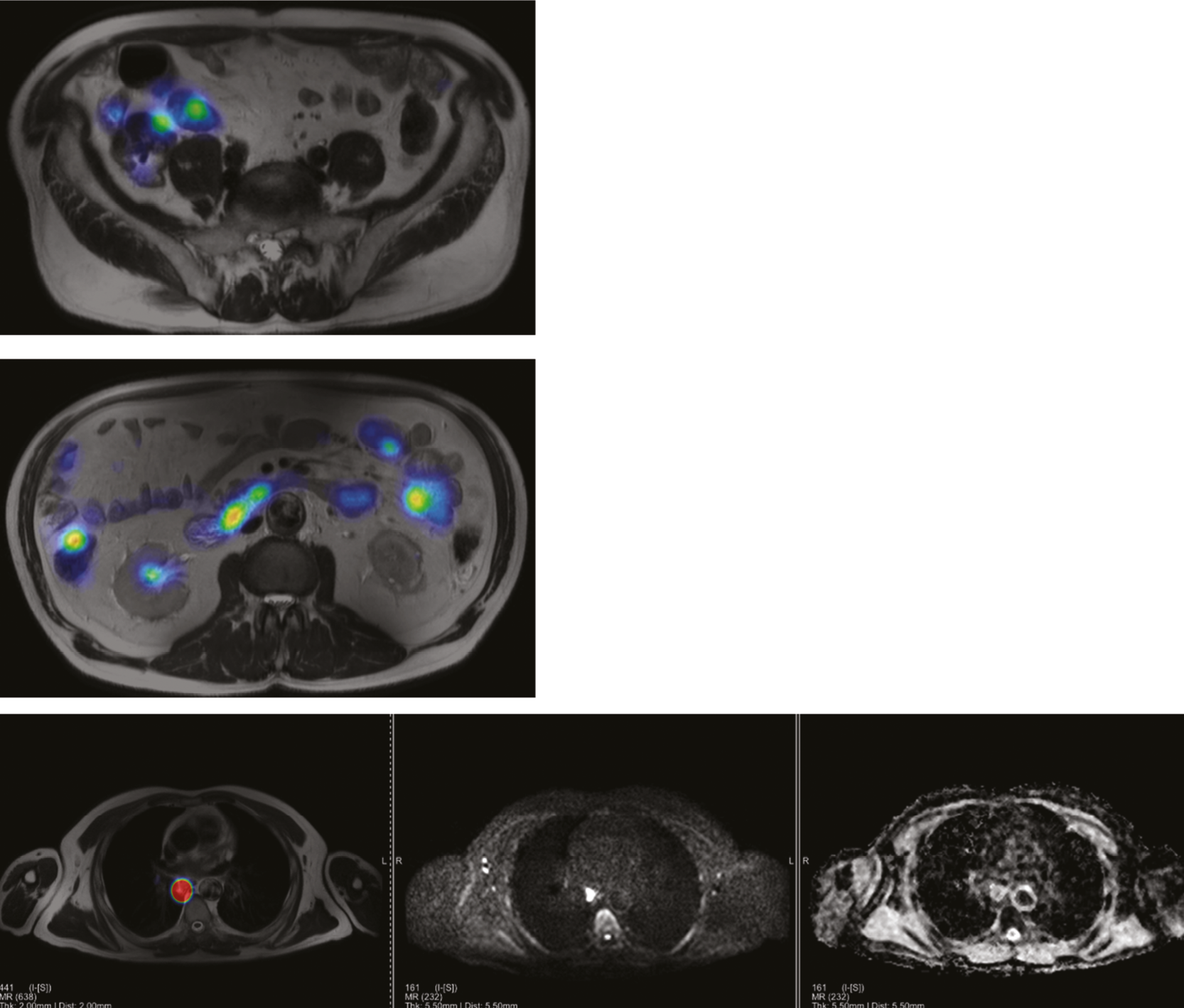

In the second phase, we planned to develop the ML pipeline for the automatic detection and identification of cancer lesions. For this we learnt shape and appearance models that were specific to the anatomical regions identified in Phase 1. These models allowed the probabilistic interpretation of the images in terms of a generative model. Classification was carried out using advanced ML techniques based on ensemble classifiers such as random forests (RFs). 60 WB-MRI scans from the STREAMLINE L and C, MELT and MASTER studies with established disease stage (main study reference standard, described above) were used to train ML detection of metastases. During the course of the study, it became apparent that the differences in sequences and acquisition parameters of the studies were too different to allow appropriate ML training and the study went forward with only the STREAMLINE C (STC) and L (STL) data sets, which had similar MRI protocols. Despite restricting Phases 2 and 3 to the STREAMLINE data, significant challenges were encountered due to the variation in scans acquired at the 16 recruitment centres. We undertook a power calculation to determine the number of cases with and without metastases that would be needed for the Phase 3 study, based on a hypothetical diagnostic test accuracy improvement. All of the remaining eligible data was then used for training. Allocation of cases to Phases 2 and 3 was undertaken by the study statistician in order to allow appropriate cases for training and ‘held-back’ cases for validation. A total of 245 WB-MR scans were allocated to Phase 2 for model development (the original protocol planned for a minimum of 150 scans), of which 19 could not be used due to technical failure, with a final number of 226 WB-MRI available for development. All lesions were segmented on T2 and DWI volumes and checked by an expert (accredited radiologist) using the reference standard to ensure that ground truth (GT) was as accurate as possible. Initial radiology outputs were reviewed by expert radiologist and ML team to identify areas for improvement and the ML development focused on accurate detection of the GT segmented sites of disease. The algorithm was gradually improved and was then tested on the internal validation set to ensure sensitivity of detection was met, before progressing to the clinical testing. A validation step in phase 2 was undertaken on 45 cases that were allocated to Phase 2 training but withheld from model development. Sensitivity for detection of metastatic lesions by the algorithm was evaluated using a Dice coefficient metric, with sufficient overlap and probability thresholds achieved. The threshold was determined as we developed the algorithm in the early stages of Phase 2. Our initially planned reader study, at the end of Phase 2, was not undertaken and the described computational method was employed. Cases used for the sensitivity check at the end of Phase 2 were not used for any ML training or read by radiologists in Phase 3.

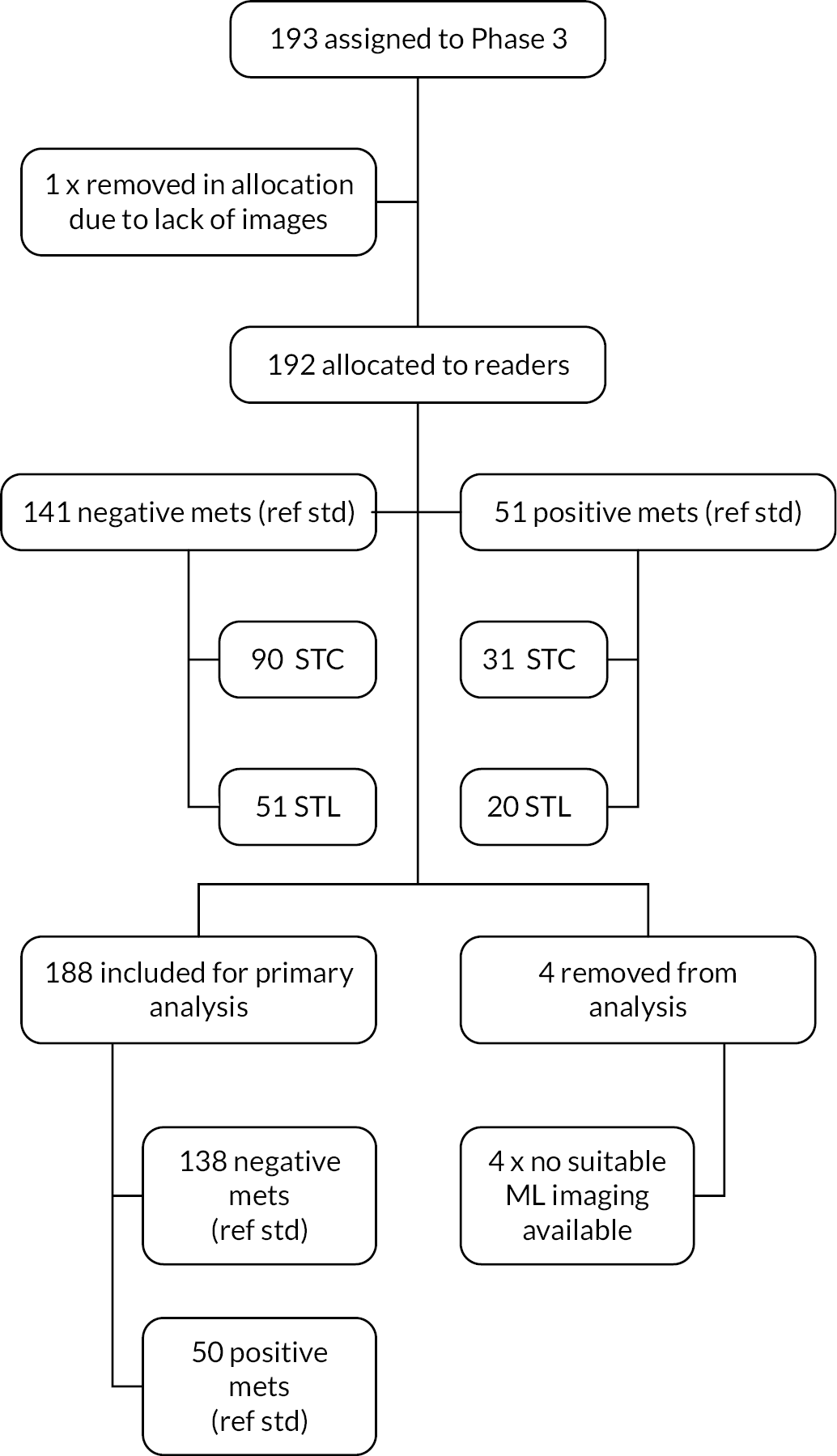

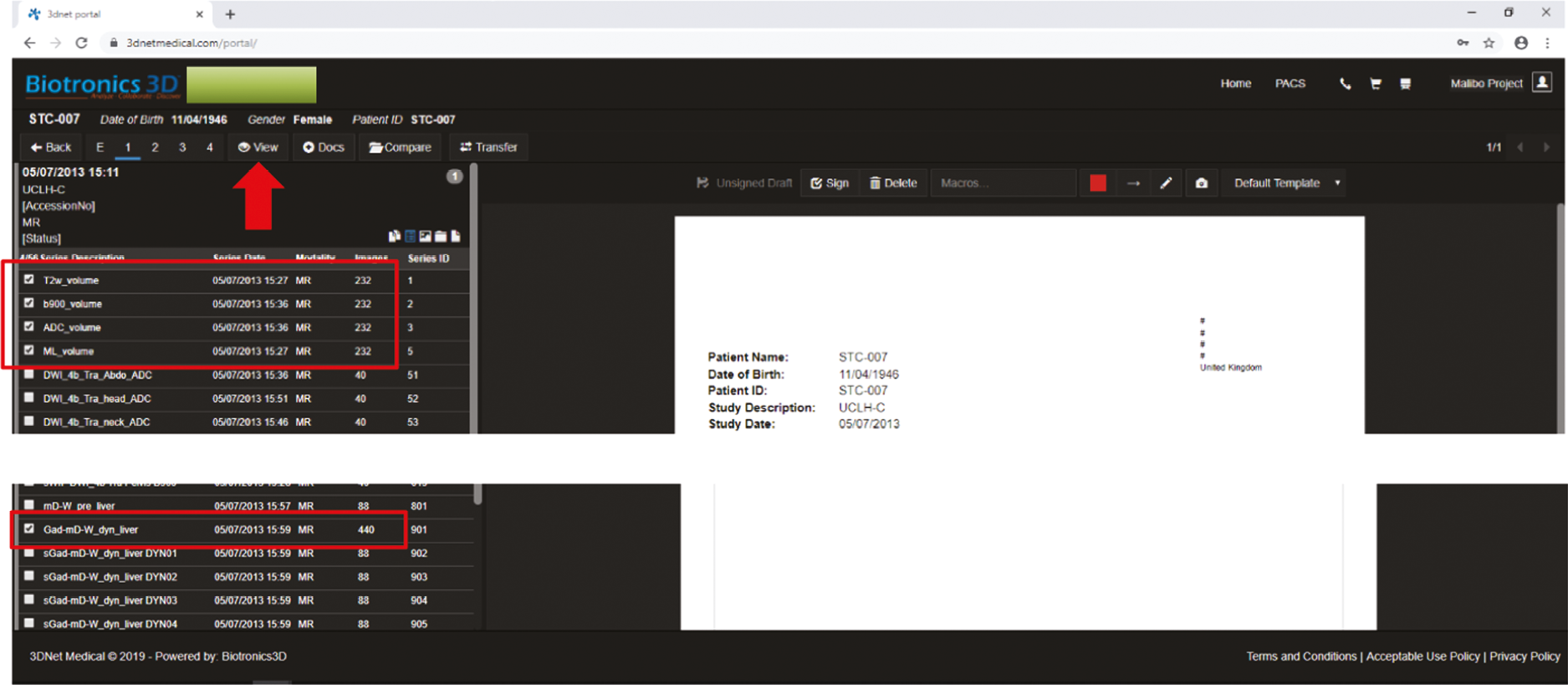

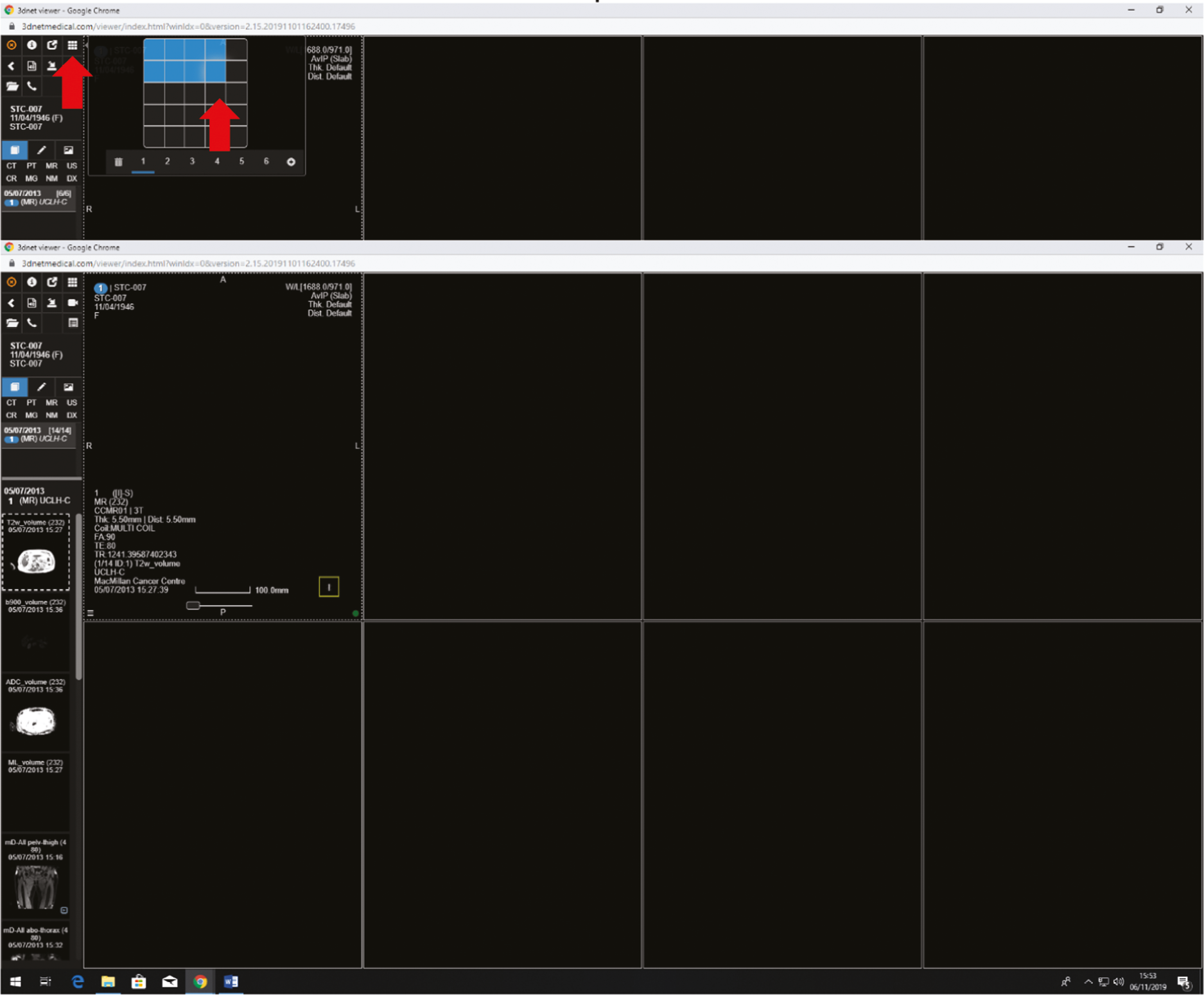

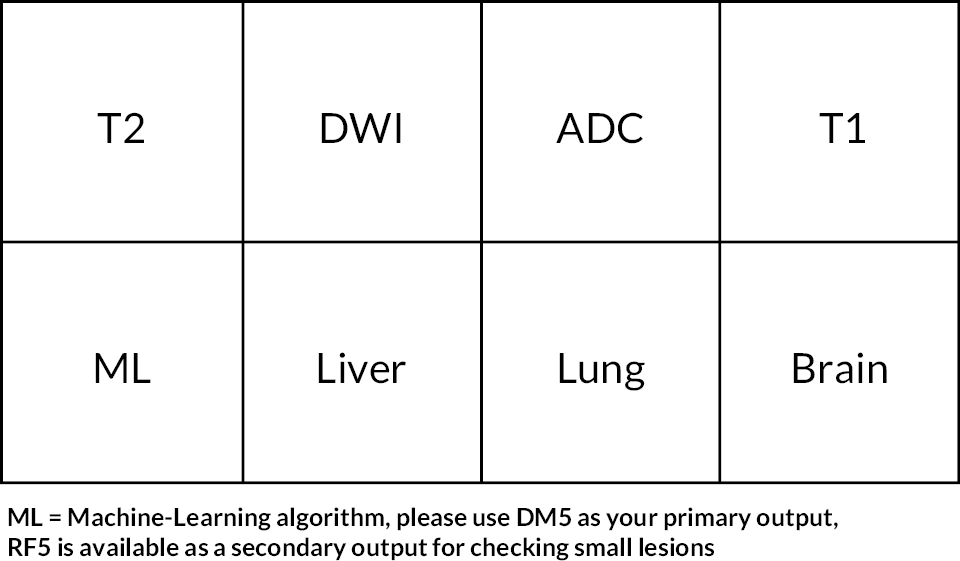

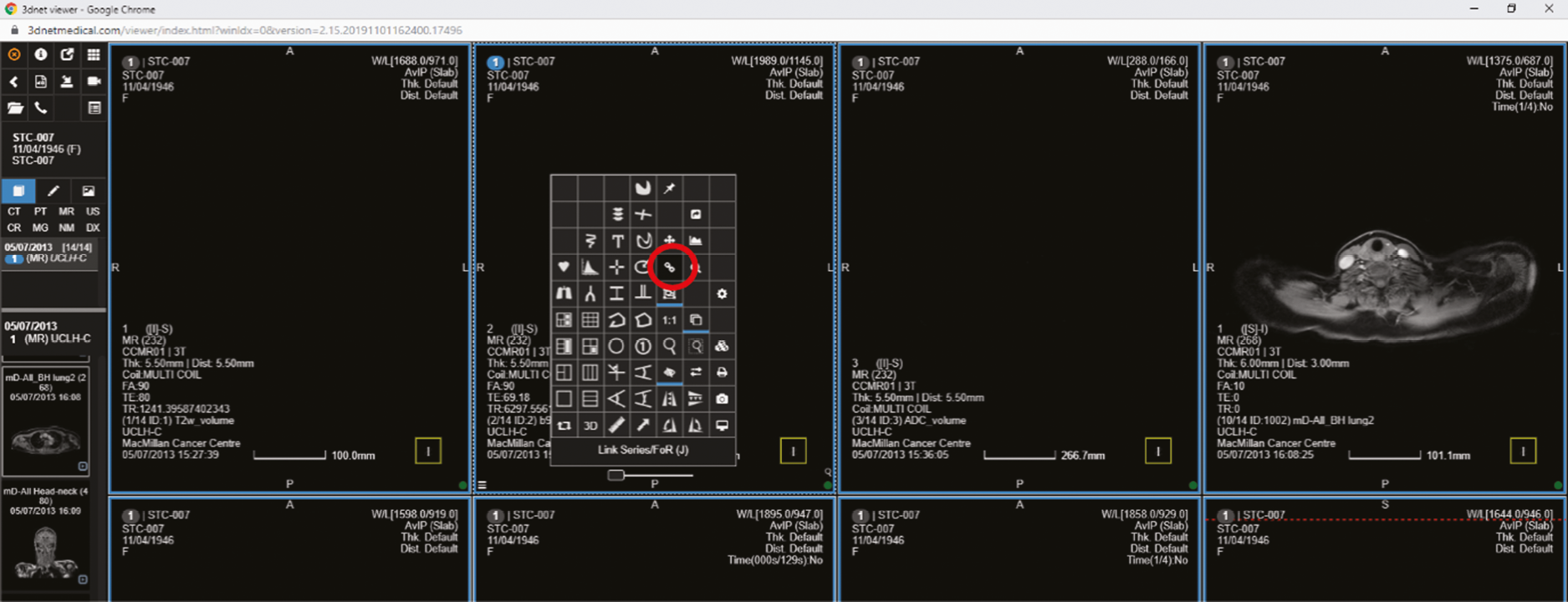

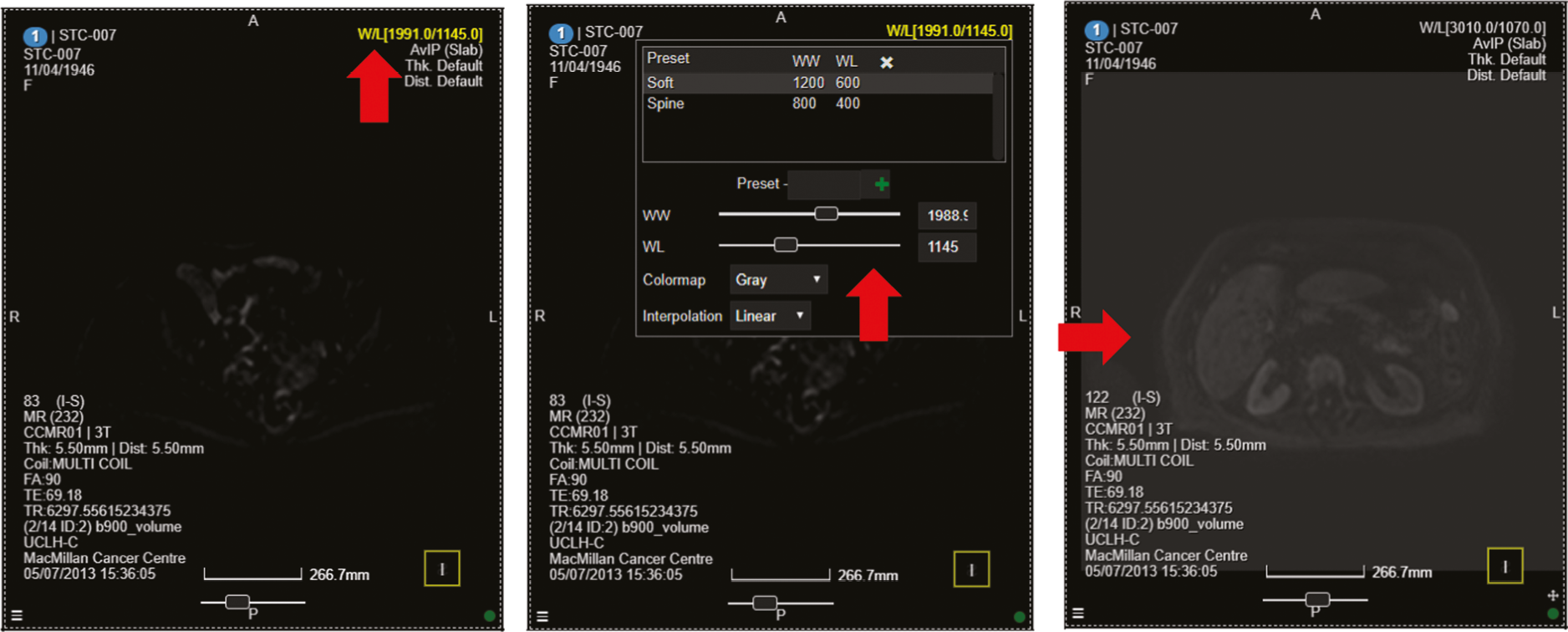

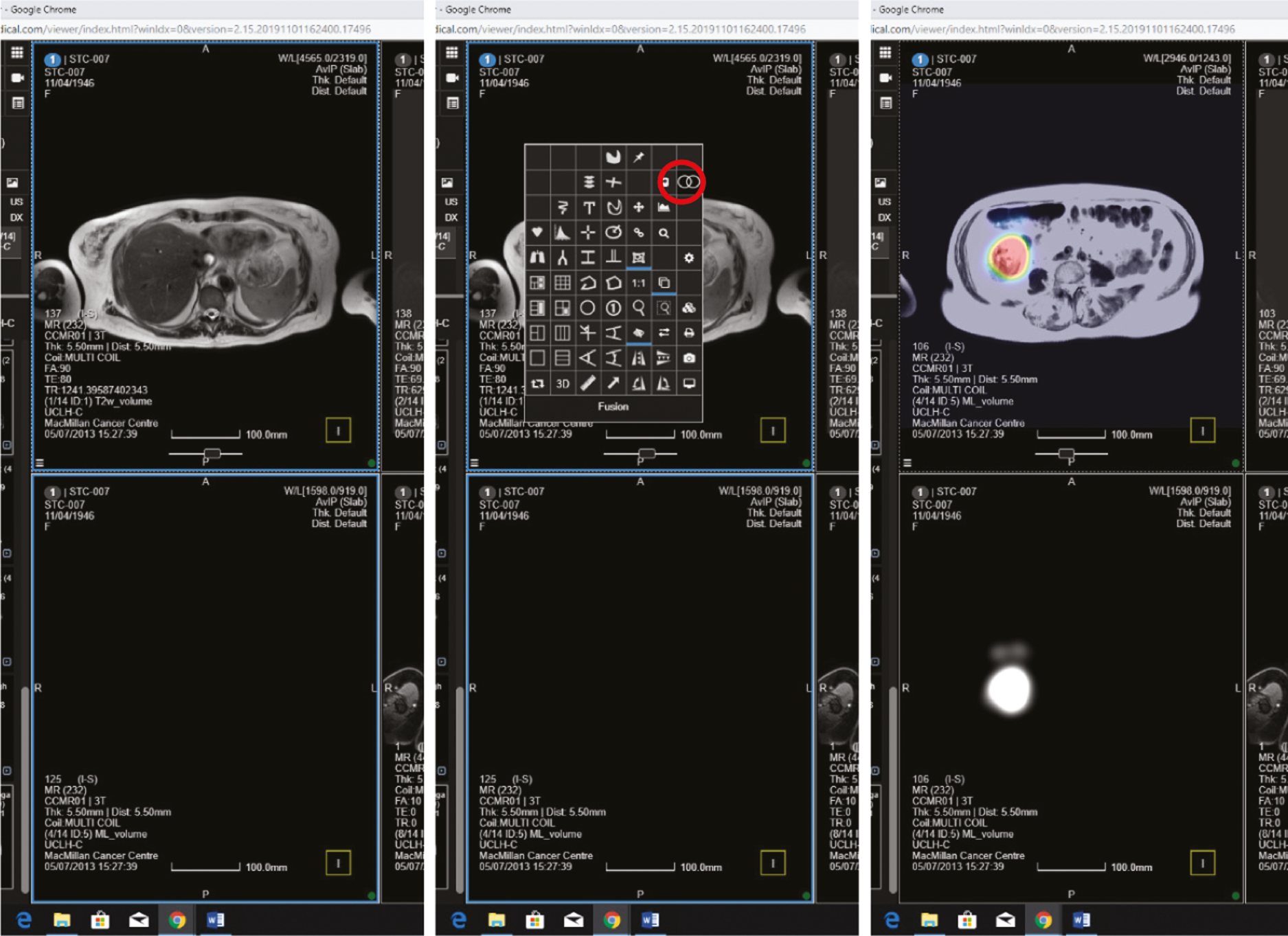

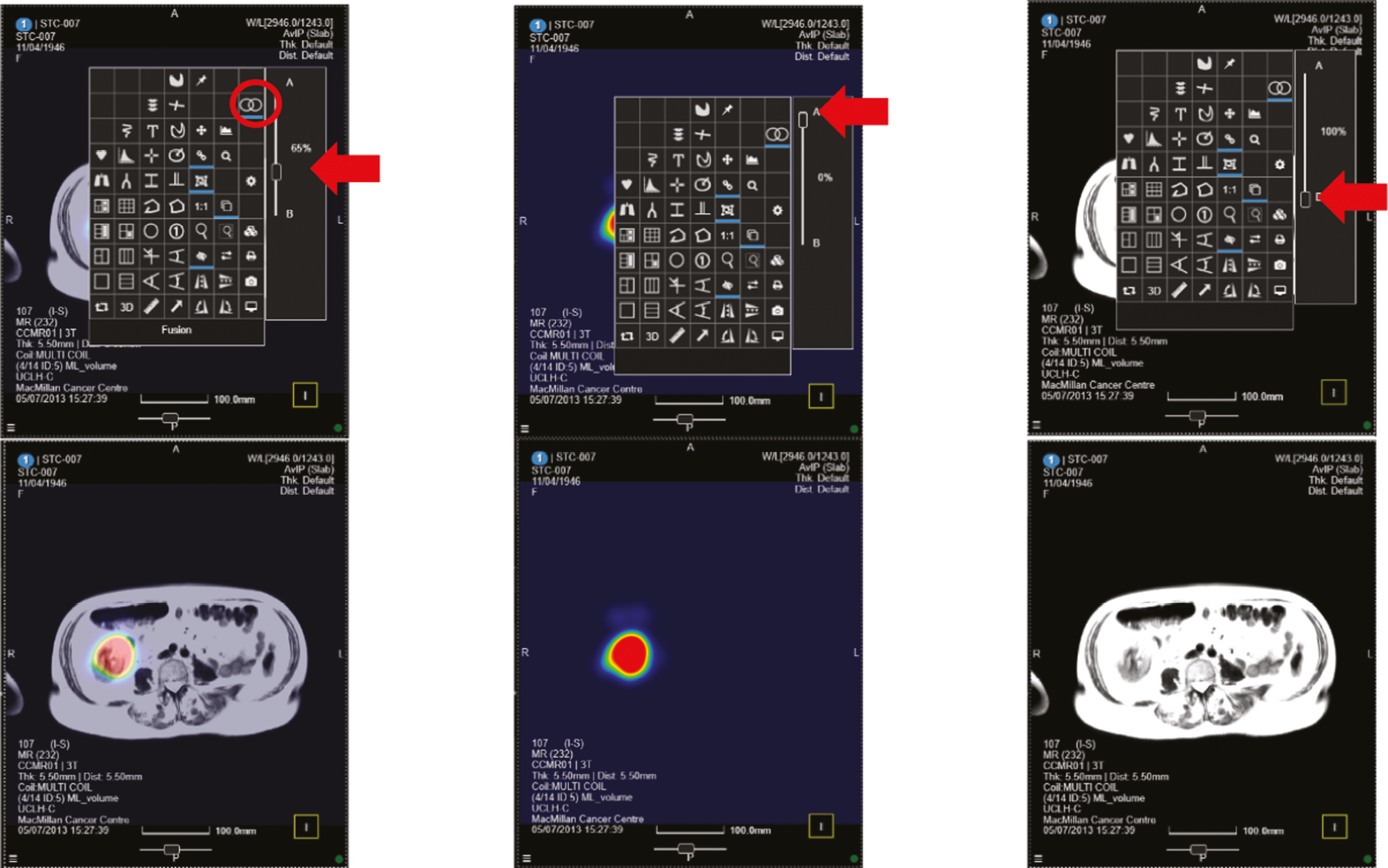

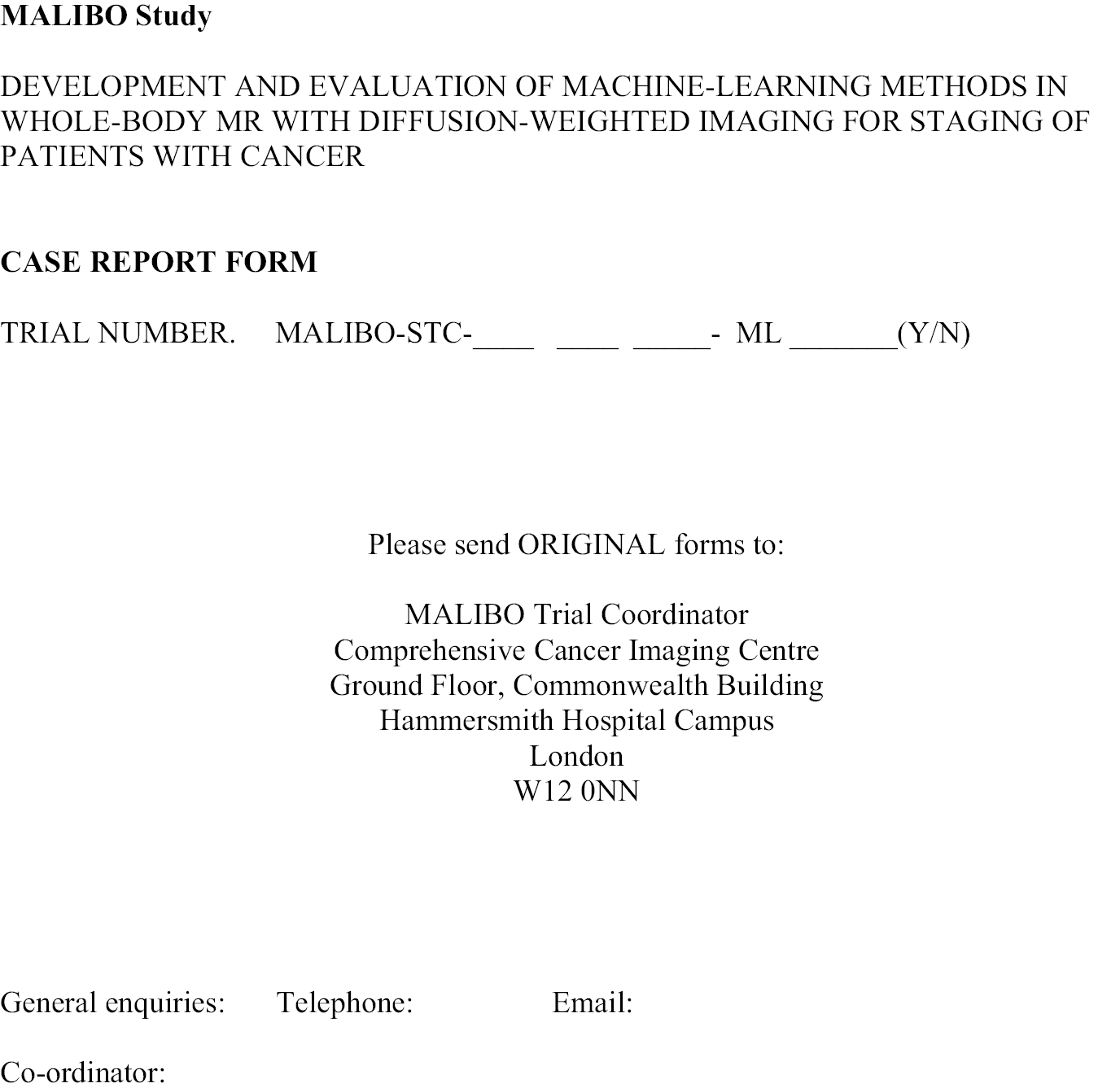

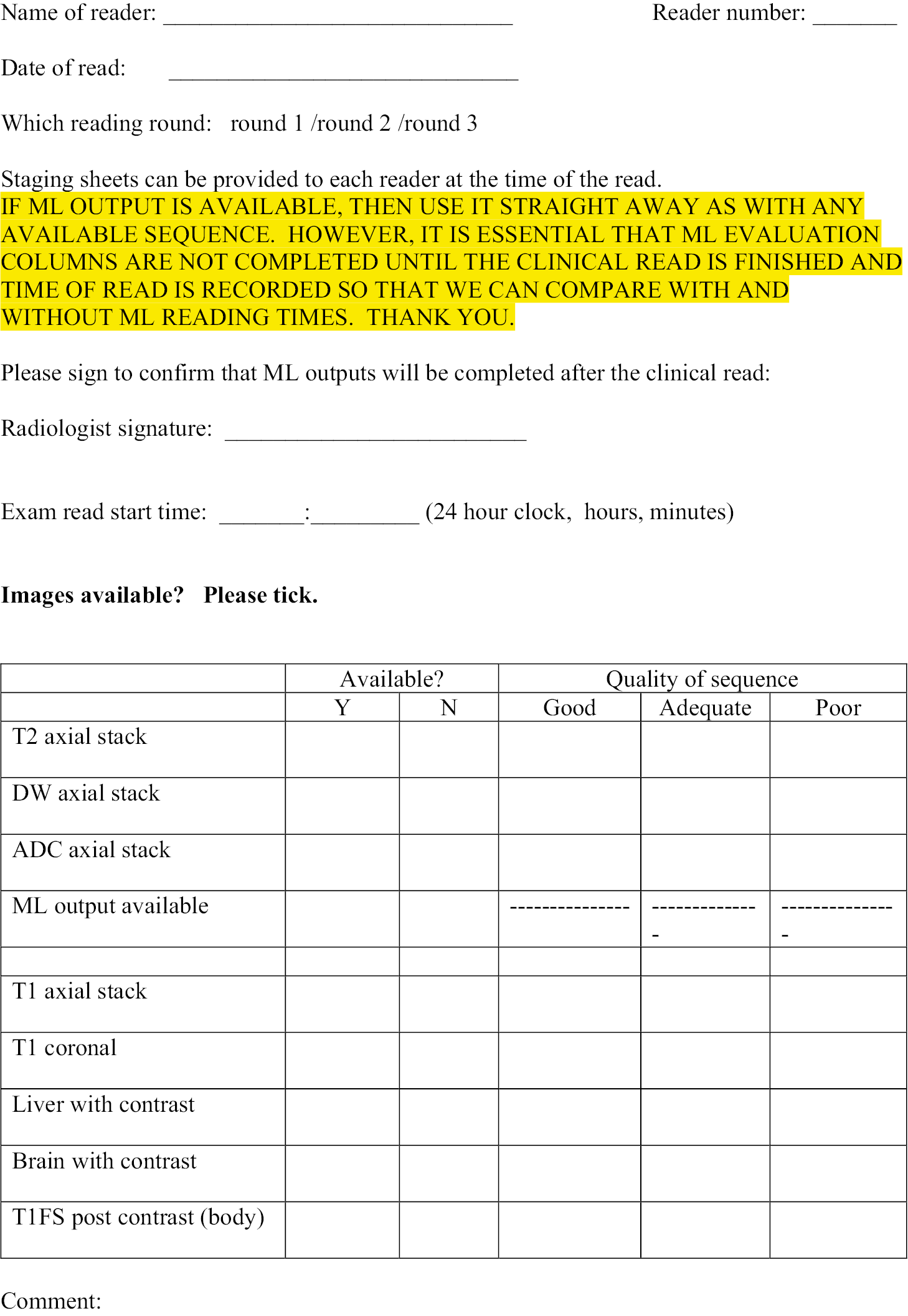

Phase 3: ‘clinical validation set’

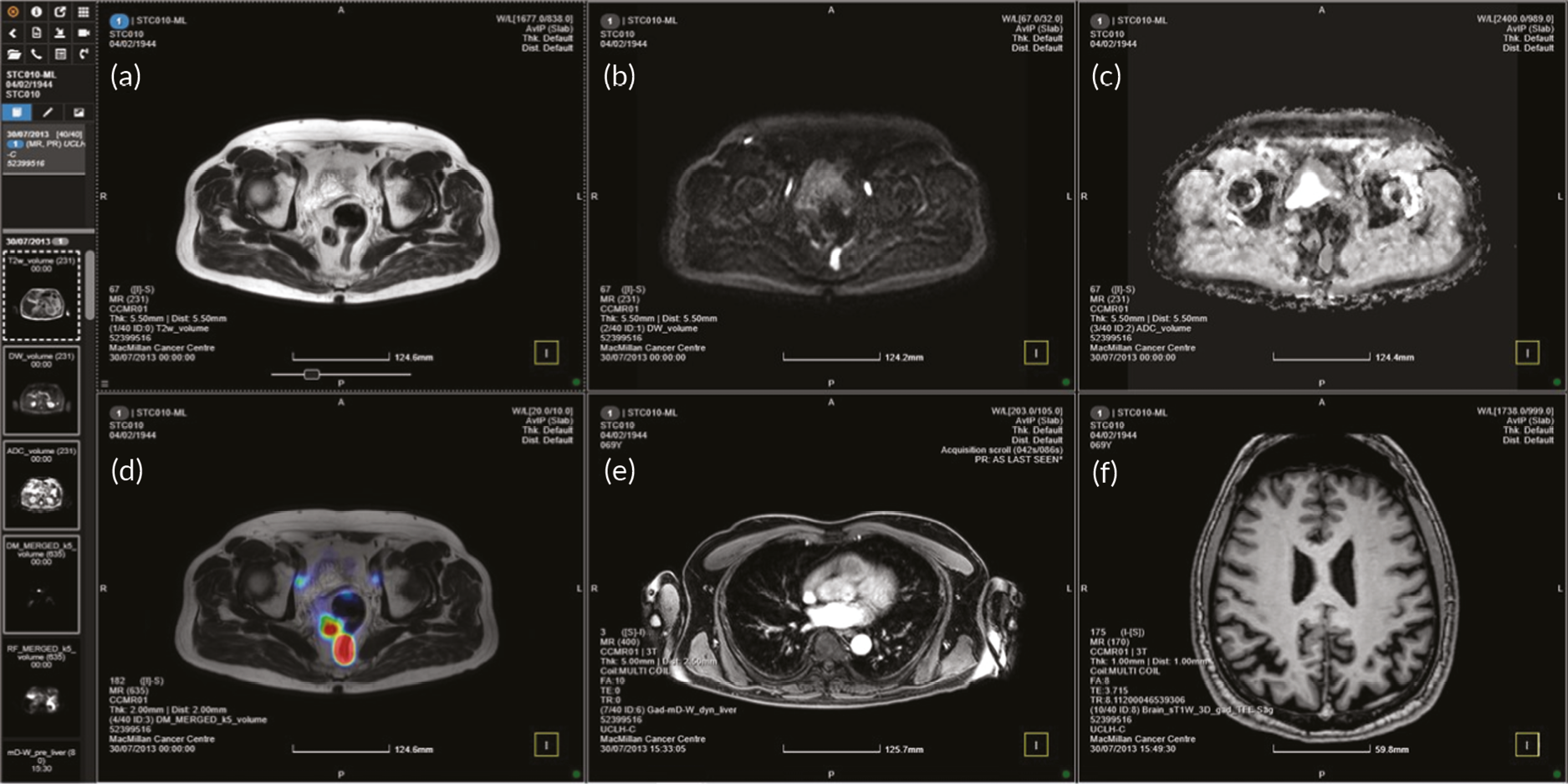

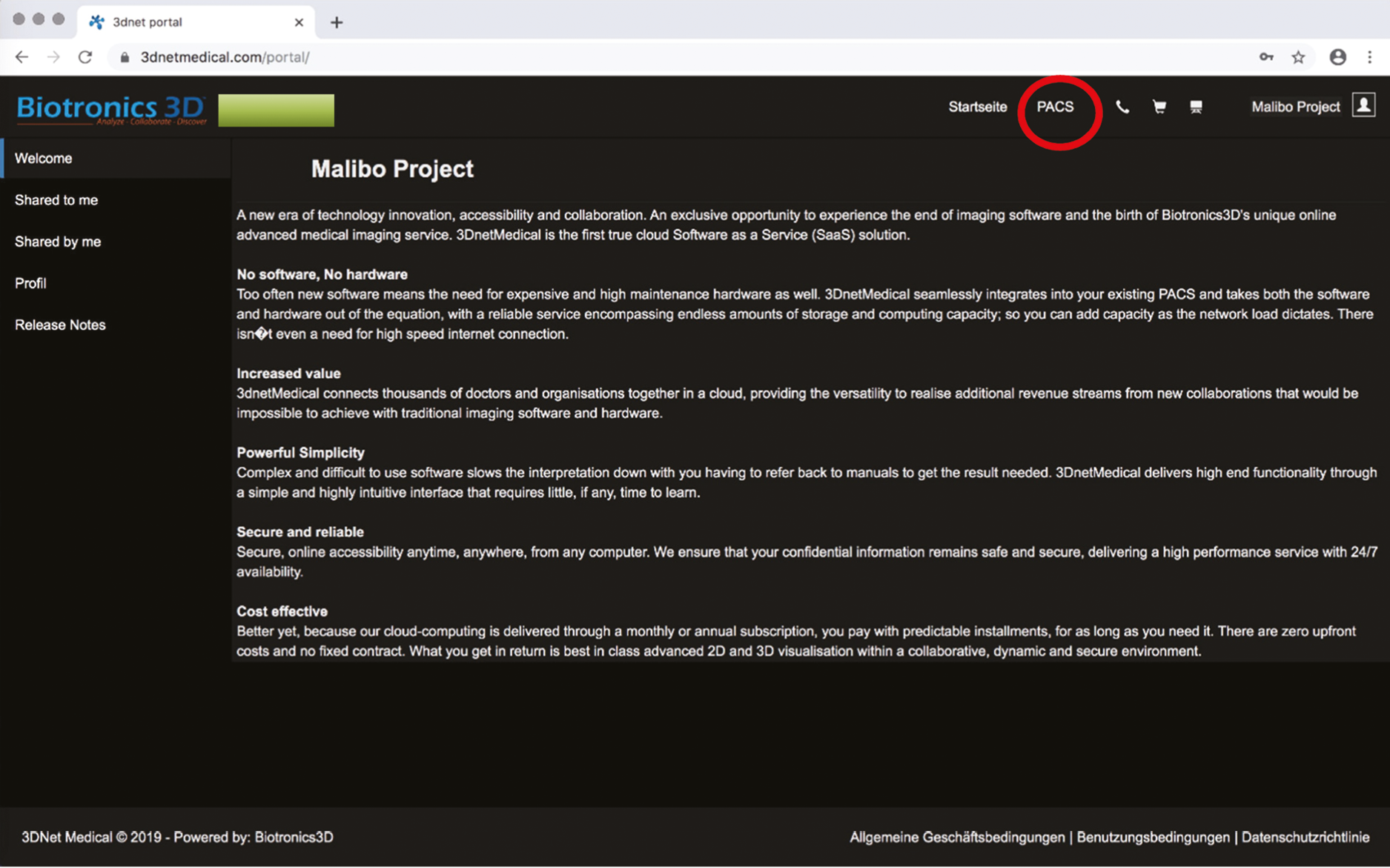

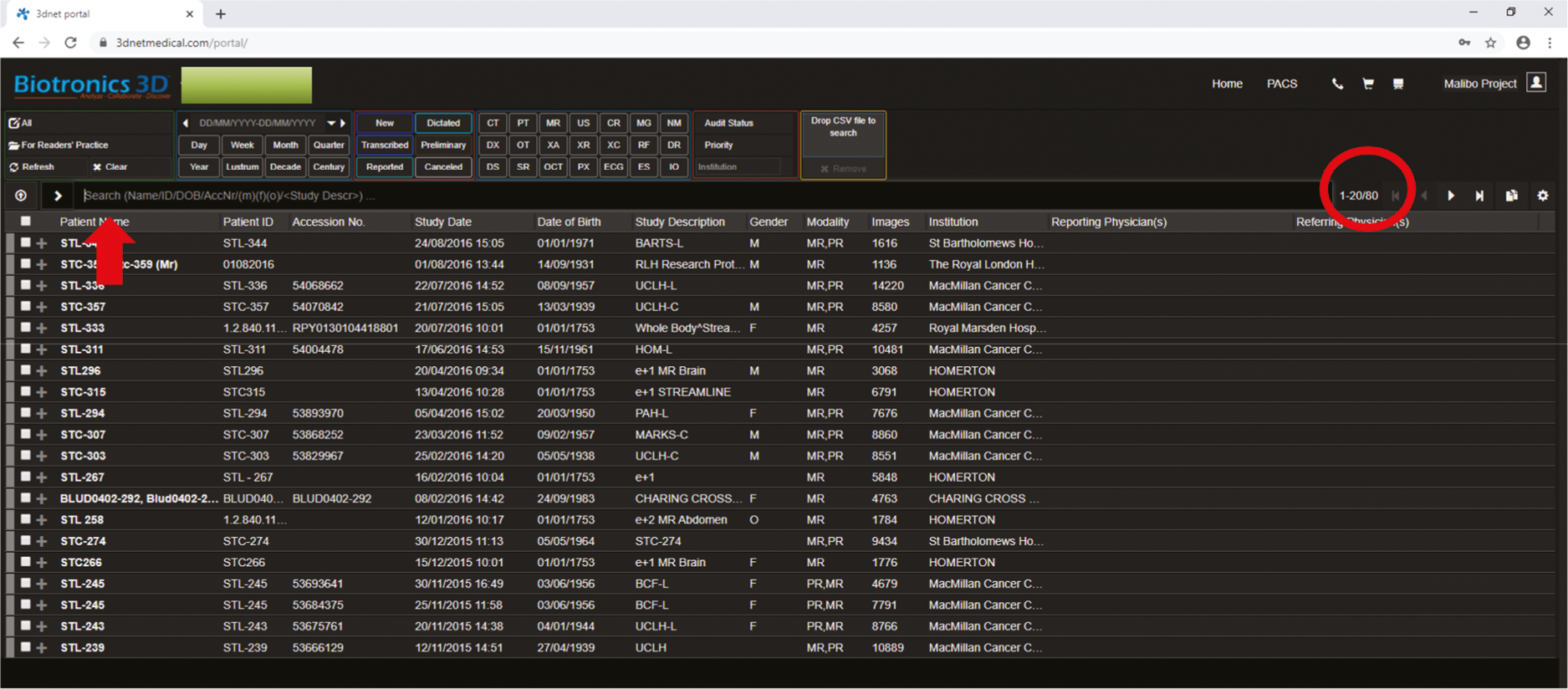

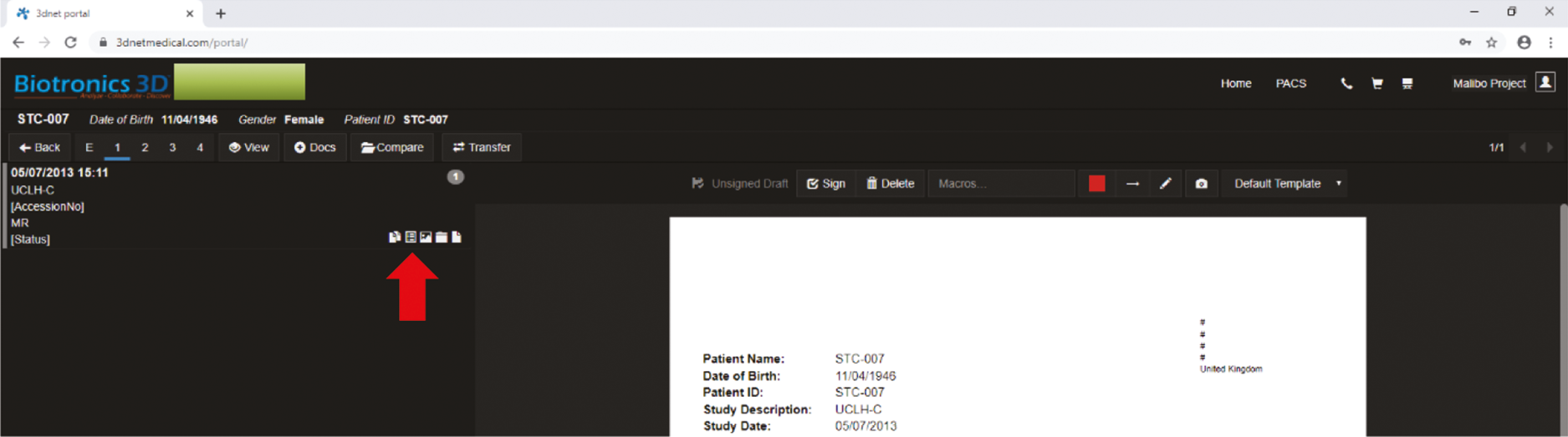

The allocated Phase 3 data set for clinical validation was comprised of 193 WB-MRI (217 were originally planned in the protocol) from the STREAMLINE C and L studies that had not been used for Phase 2 training. These scans were to be read by experienced readers with the fixed final ML support, which was provided as a probability map (a heatmap) which could be overlayed on the T2-weighted stitched volumes of the WB-MRI and this was used as the index test. The WB-MRI reads by experienced radiologists without ML support was the comparator test. The per-patient specificity and sensitivity of WB-MRI assessment, with and without ML support, was determined using the established reference standard from the main study. An interim analysis of the first 50–70 consecutive cases was planned but was not undertaken due to late running of the study. A scribe recorded the reader findings on the study case report form (CRF) and the RT was recorded. Substudies included: (1) Reads by new (inexperienced) WB-MRI readers; (2) Repeat reads (in random order and at time interval) with and without ML support to measure RT and interobserver variation. This ensured parity in computer setup between the reads, as there may have been variation in the original main study reads related to use of either picture archiving and communication system (PACS), Biotronics3D platform or other software, in addition to differences in internet speeds when reads were performed online for the main study.

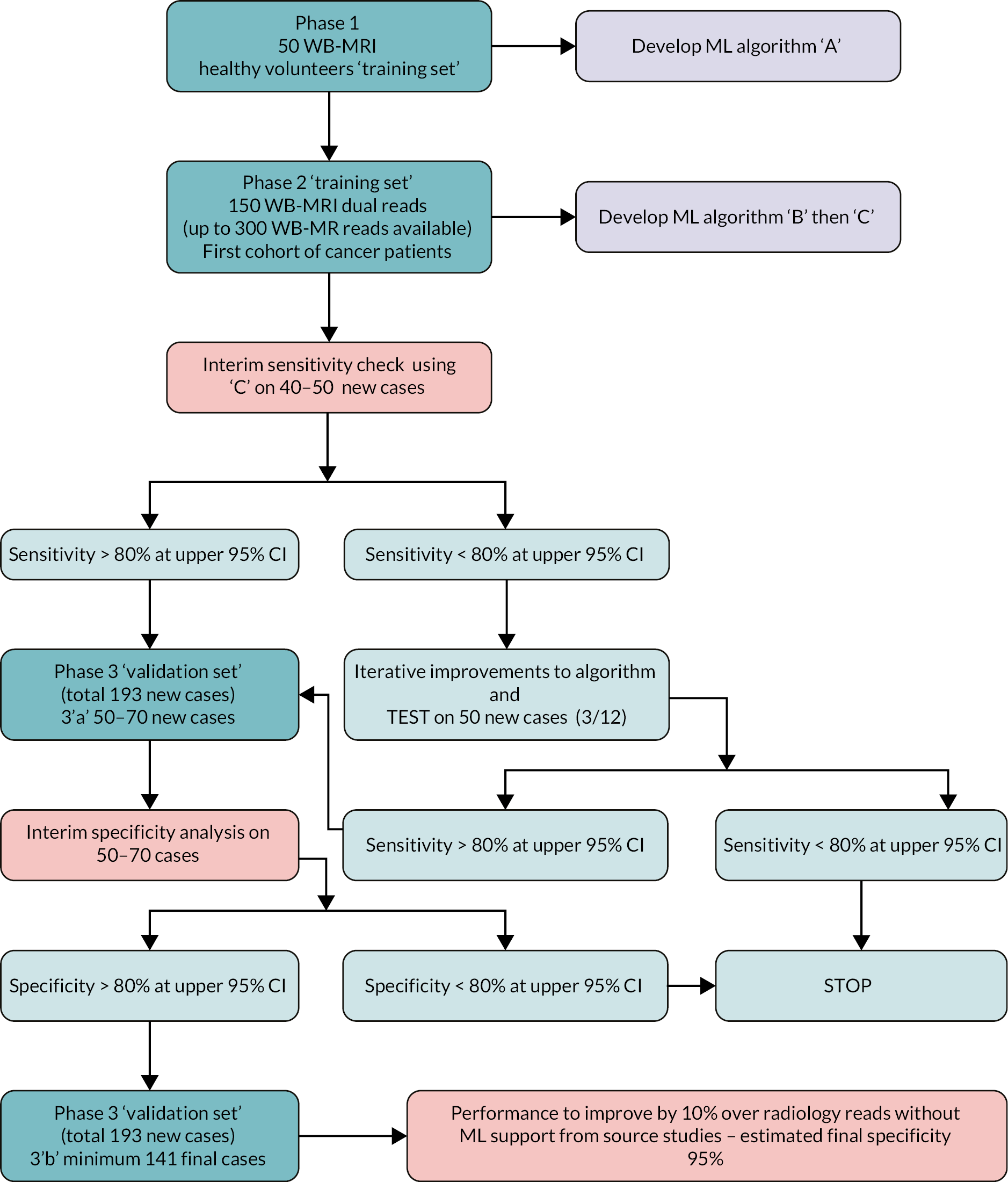

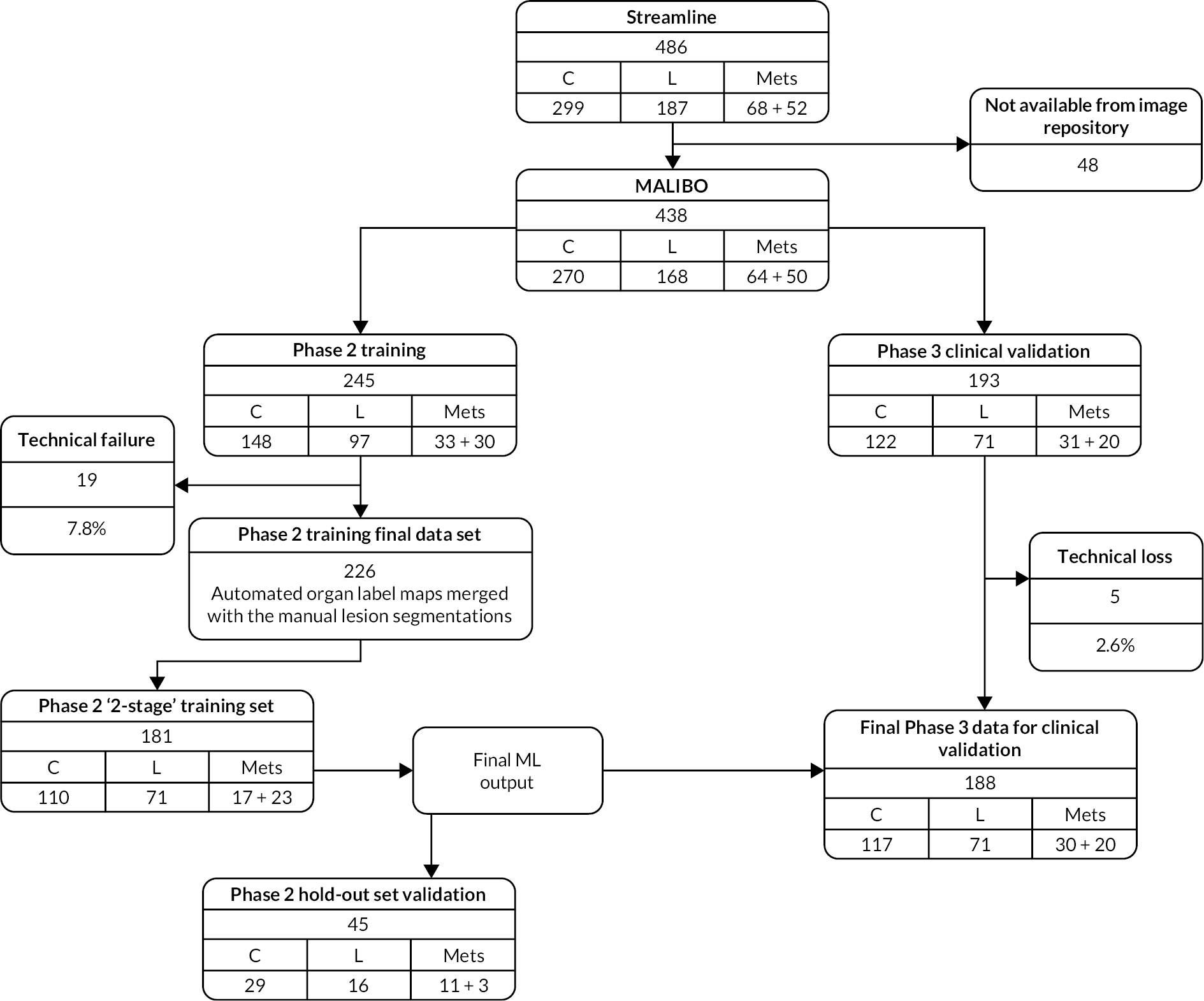

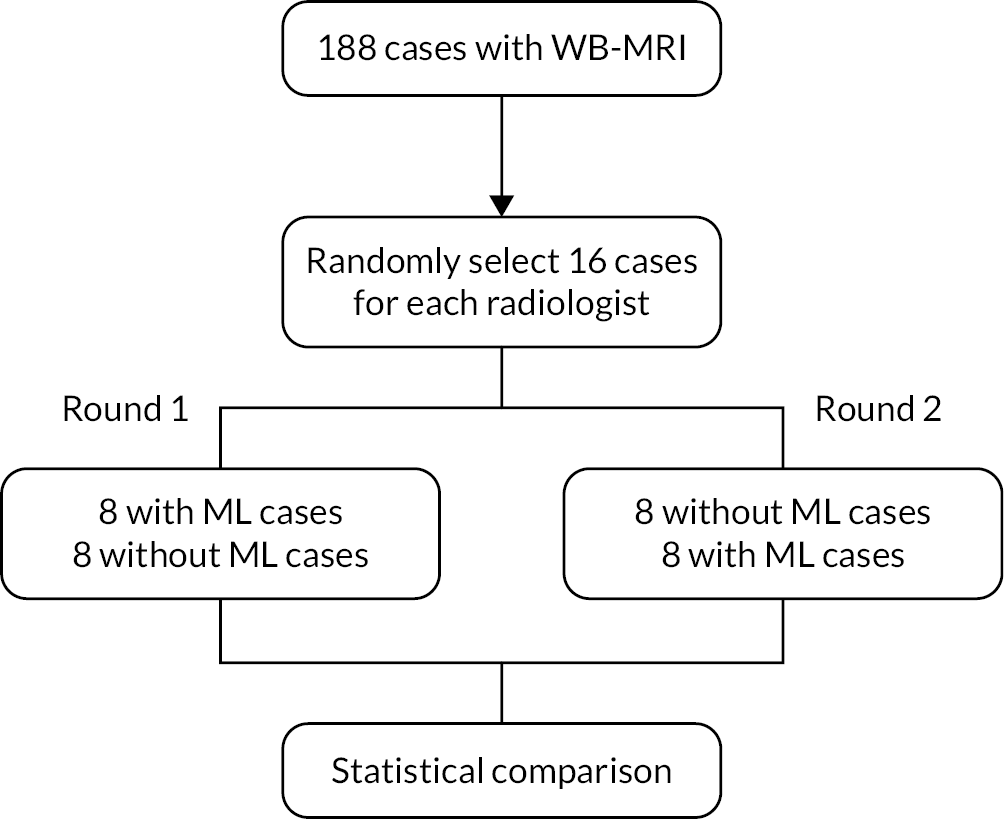

Figure 1 shows the study design flow diagram for this project.

FIGURE 1.

Planned study design flow diagram from the study protocol.

Patient public involvement

A patient representative took part in the development of the grant application, with all study materials being developed during the course of the study planning. The patient representative also was part of the trial steering committee. Discussions concerning use of patient data in de-identified format were constructive and the idea of using ML techniques to potentially improve accuracy was seen as a positive area of research. This study did not recruit patients directly and so there were no patient-facing materials for review. However, the patient representative has been kept abreast of the developments through the course of the study.

Chapter 2 Phase 1: healthy volunteer data collection and pre-processing fat-water swap artefact

This chapter includes material previously published by the authors in references. 41,61 The MRI protocol used for the healthy volunteer WB-MRI acquisition was first published in reference 41 and permission to reproduce the MRI protocol was given by the American Journal of Roentgenology. The development of a methodology for correction of fat-water swap was published in reference 61 as open access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: http://creativecommons.org/licenses/by/4.0/. The text below includes minor additions and formatting changes to the original text.

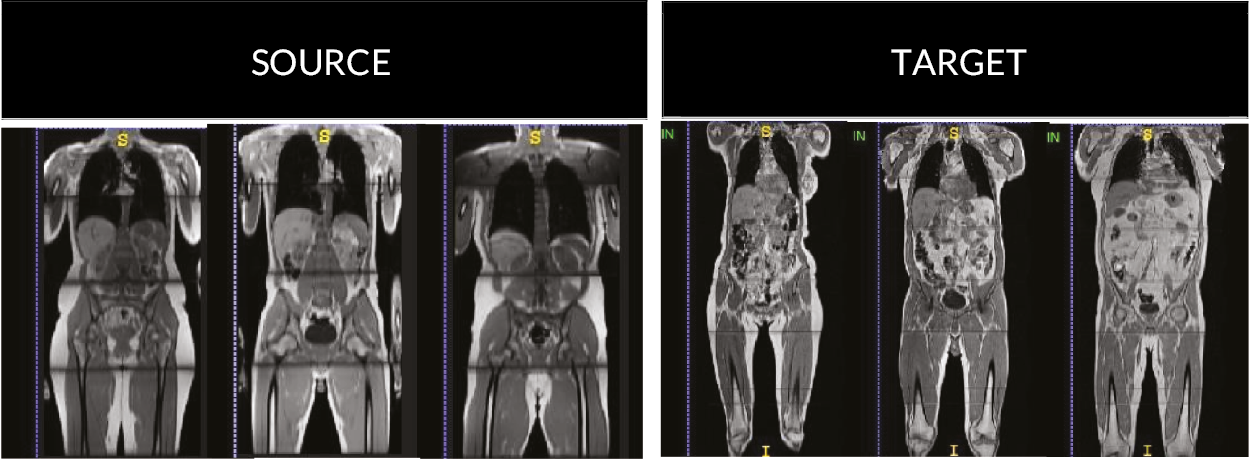

Volunteers and magnetic resonance imaging scans

The source study for the healthy volunteer data was approved by the local ethics committee (ICREC 08/H0707/58 for optimisation of MRI protocols used in clinical practice and translational research) and written consent was obtained from the participants. Fifty-one healthy volunteers [24 men (mean age = 37 years, age range = 23–67 years) and 27 women (mean age = 39 years, range = 23–68 years)] were scanned with WB-MRI from February 2012 to May 2014 at a single institution and scanned on the same machine.

Details about the volunteers’ population and scan protocol can be found in the reference. 41 The inclusion and exclusion criteria were the following:

Inclusion criteria:

-

male or female, healthy volunteers were aged 18–100 years;

-

written, informed consent was provided.

Exclusion criteria:

-

any co-existing medical illness;

-

contraindications to MRI (e.g. patients with pacemakers, metal surgical implants and aneurism clips, patients suffering from claustrophobia).

Magnetic resonance imaging protocol for healthy volunteers

Whole-body magnetic resonance imaging was performed on a moving-table 1.5-T system (Avanto with Syngo MR B17, Siemens Healthcare) using the body coil for transmission and the neck and body phased-array coils as receive coils. Four different imaging stations were used to achieve full body coverage, from the top of the neck to mid-thighs. Axial slices were acquired during free-breathing for DWI, whereas breath-holds were used for the three first stations for anatomic imaging. The acquisition time was approximately 45–50 minutes depending on the breath-holding ability of the examined participant. DWI slice-matched T1-weighted imaging with Dixon and T2-weighted imaging was performed to assist with the delineation of abdominal organs and bone marrow. The MRI protocol is provided in Table 1. 41

| DW-MRI | T1-W MRI | T2-W MRI | |

|---|---|---|---|

| Sequence type | SS SE EPI | VIBE with DIXON | HASTE |

| FOV (mm) | 450 × 366 | 450 × 351 | 450 × 366 |

| Matrix size | 128 × 128 interpolated |

320 × 202 | 256 × 256 |

| No of slices/thickness/distance (mm) | 50/5/0% | 56/5/20% | 50/5/0% |

| TR (ms) | 9000 | 7.54 | 767 |

| TE (ms) | 72 | 2.38/4.76 | 92 |

| Bandwidth (Hz/pixel) | 2056 | 300 | 399 |

| Flip angle | 90 | 10 | 130 |

| N A | 4 | 1 | 2 |

| Fat suppression | STIR (TI = 180 ms) |

N/A | N/A |

| b-values (s/mm2) | 0, 150, 400, 750, 1000 | N/A | N/A |

| Parallel acquisition | GRAPPA 2 | GRAPPA 2 | GRAPPA 2 |

| No stations | 4, free-breathing | 4, (3 with breath-holds) | 4, (3 with breath-holds) |

| TA (min)/station | 8.17 | 0.15 | 1.18 |

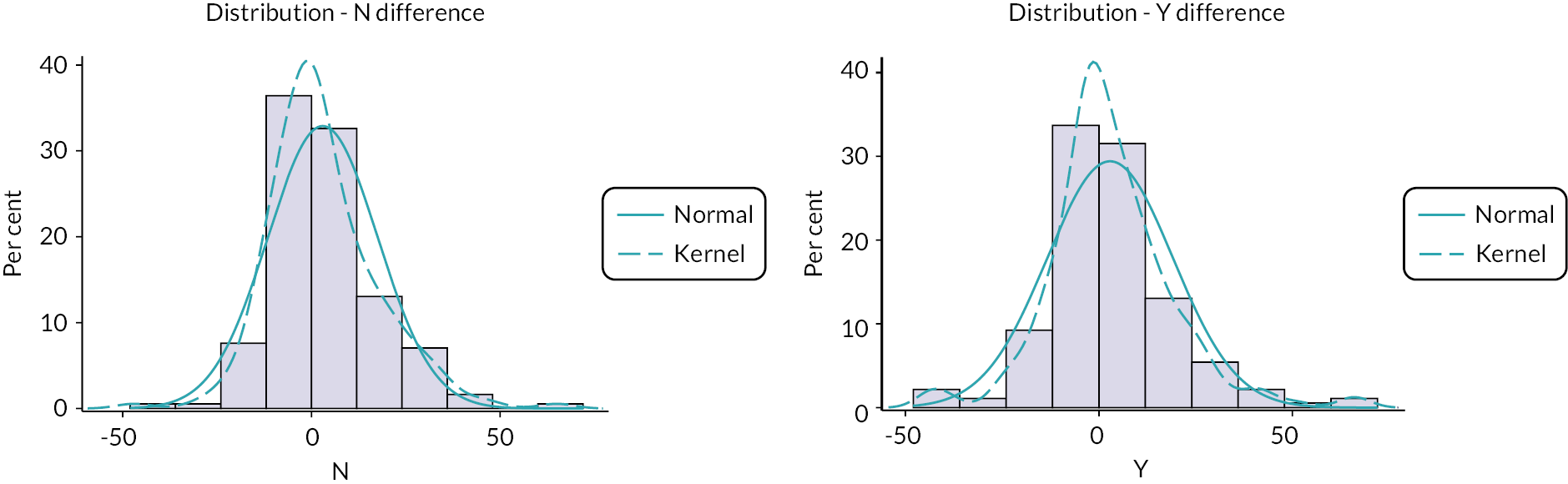

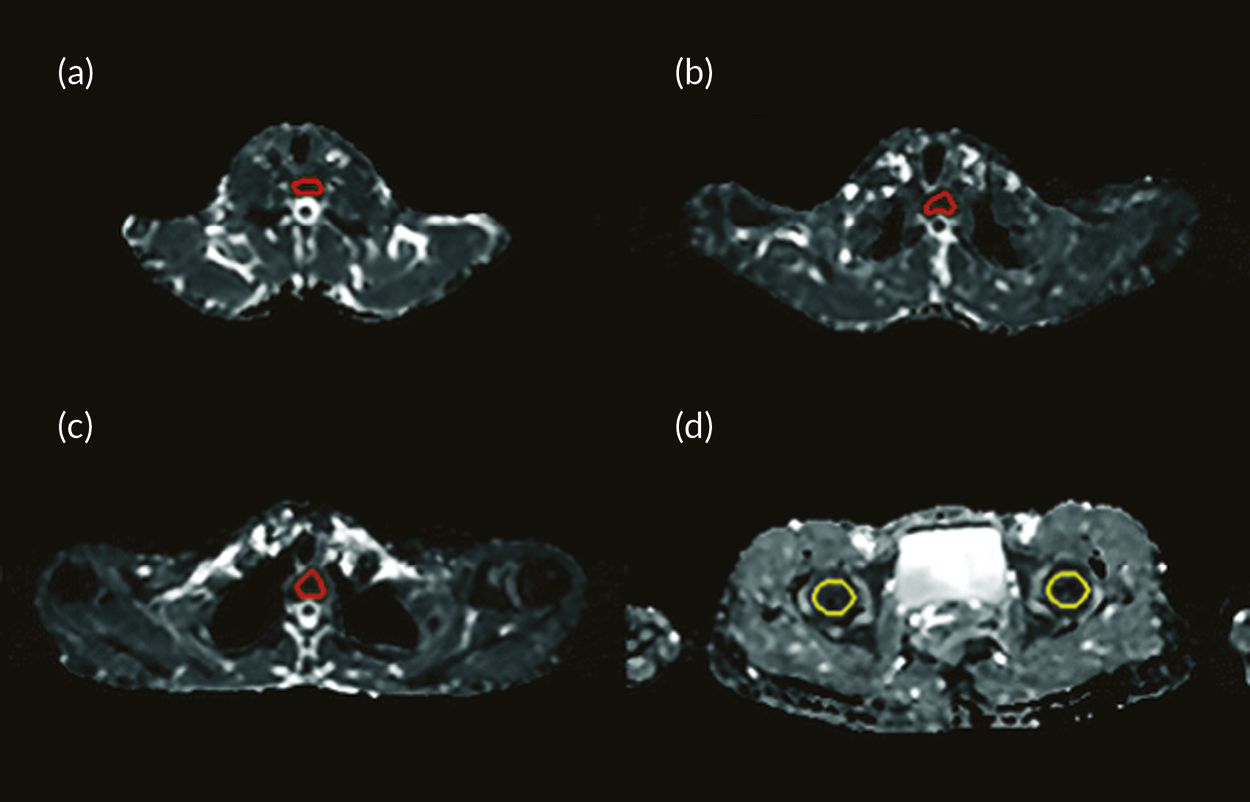

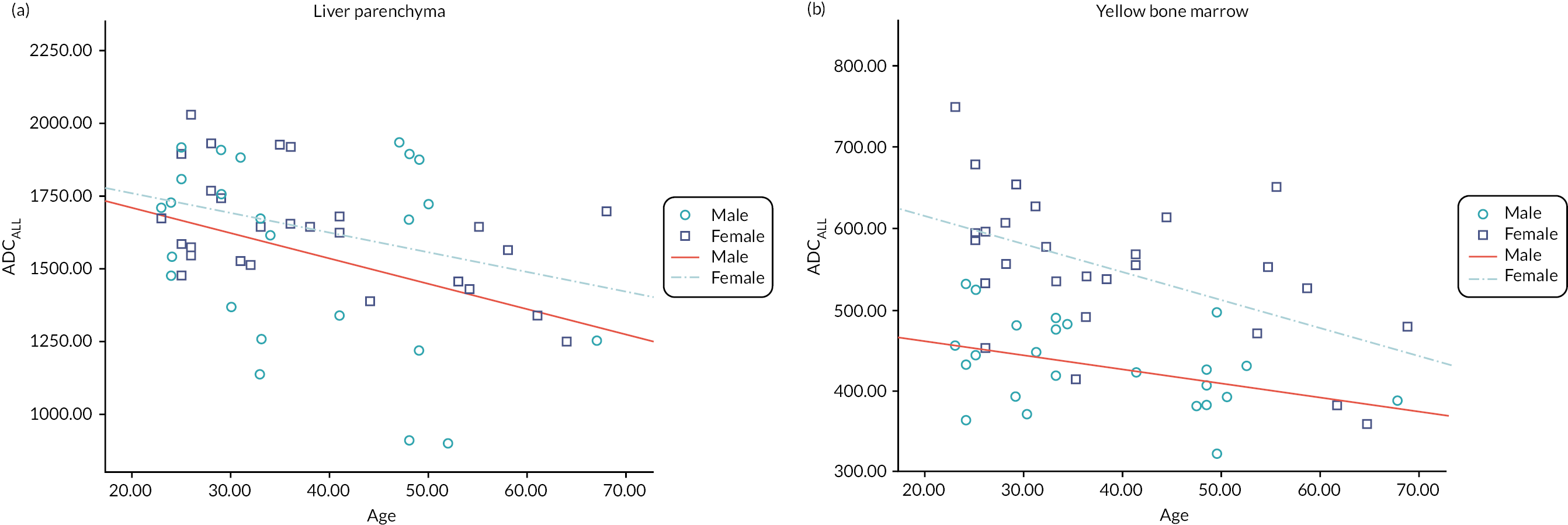

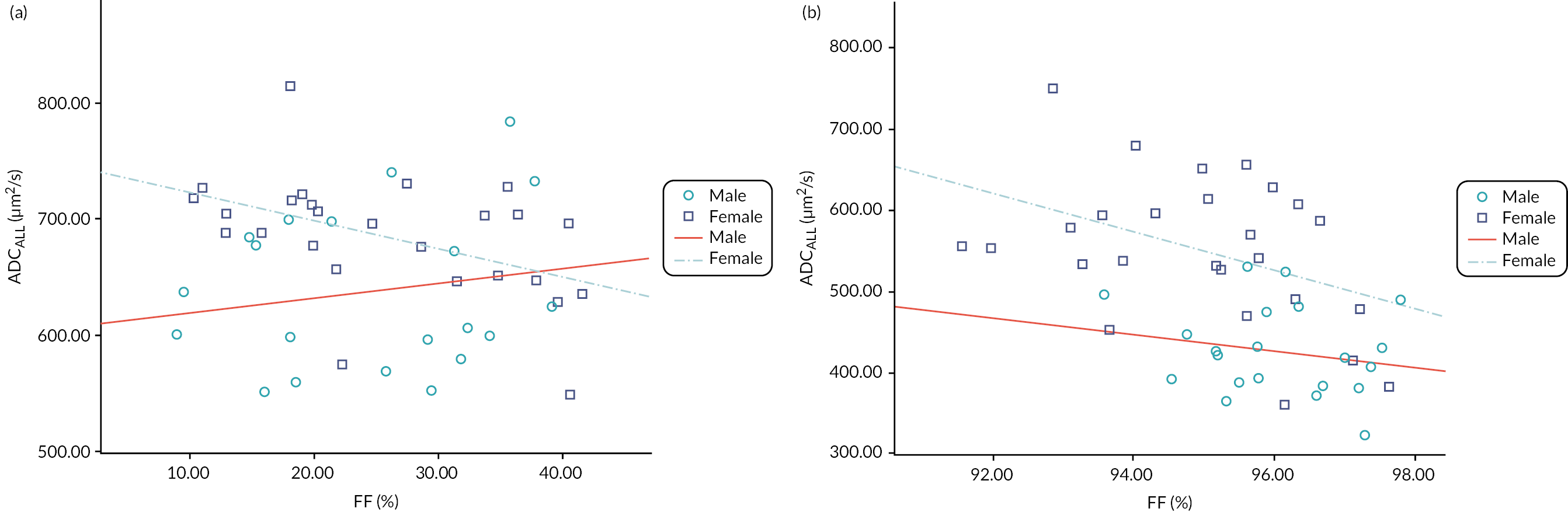

Representative slices showing ROIs used to calculate apparent diffusion coefficient (ADC) values are given in Figure 23 of Appendix 1. Also, scatterplots of ADCALL with age are displayed in Figure 24 of Appendix 1, and ADC values calculated from perfusion-sensitive WB-DWI protocol (ADCALL) vary with fat fraction (FF), shown in Figure 25 of Appendix 1.

Image pre-processing for whole-body magnetic resonance imaging: correction of fat-water swaps in Dixon magnetic resonance imaging61

In Phase 1 of the MALIBO project, we collected WB-MR images in 51 healthy volunteers with a Dixon sequence (Table 1). The Dixon method is a MRI sequence based on chemical shift and designed to achieve uniform fat suppression. However, fat- and water-only swap artefact may occur in up to 10% of scans, impacting on subsequent analysis.

We developed a new method to correct fat-water swap, based on regressing fat- and water-only images from in- and out-of-phase images by learning the conditional distribution of image appearance. We demonstrated the effectiveness of our approach on WB-MRI with various types of fat-water swaps. 61

Chapter 3 Phase 1: fully automatic, multi-organ segmentation in normal whole-body magnetic resonance imaging, using classification forests, convolutional neural networks and a multi-atlas approach14

Parts of this chapter are reproduced from Lavdas et al. 14 under licence agreement with John Wiley and Sons, number 5176490914331. Phase 1 of the MALIBO study involved the development and optimisation of a ML pipeline to automatically identify and segment anatomical structures of interest in normal WB-(DW)-MRI. In order to construct the anatomical atlases, WB-MRI data sets from 51 healthy volunteers were used (see Chapter 2 for description of data set or Lavdas et al. 41). These had already been collected under a separate ethics approval (ICREC 08/H0707/58). During this phase, we developed shape and appearance models that were specific to the normal anatomical regions as identified in WB-MRI. Classification was carried out using advanced supervised ML techniques based on ensemble classifiers, such as RFs,62 deep-learning algorithms such as CNNs-(15) or a multi-atlas (MA) approach. 63 In this phase, three different algorithms for automatic segmentation of the healthy organs and bones were completed. This phase has been successfully completed and published. 14

Machine-learning methods for image segmentation

Imaging protocol

Please see Chapter 2 for the full description of the MRI protocol that was used for scanning the 51 healthy volunteers. The full imaging protocol is shown in Table 1.

Machine-learning pipeline

Digital Imaging and Communications in Medicine (DICOM) data from individual imaging stations were stitched into single Neuroimaging Informatics Technology Initiative (NIfTI) volumes (https://nifti.nimh.nih.gov/). The stitching method was performed in the following: First, MRI images were loaded from the individual stations (or sub-volumes), typically about six but this may vary between participants. Next, the first station that is provided in the list of stations was used as a reference for the in-plane resolution and number of voxels (because these can vary between stations). Using this reference standard, we resampled each station to the same in-plane resolution as the reference station. After that, there is a read out of the physical location of each station from the DICOM header information [this is typically stored in a DICOM tag called ‘Image Position (Patient)’]. Finally, using the physical location of each station, it calculates the output volume where all stations are ‘stitched’ together to form a continuous volume. Adjacent stations often have a small overlapping region. The information of the station that lies cranially (from a head-to-toe direction) was simply used.

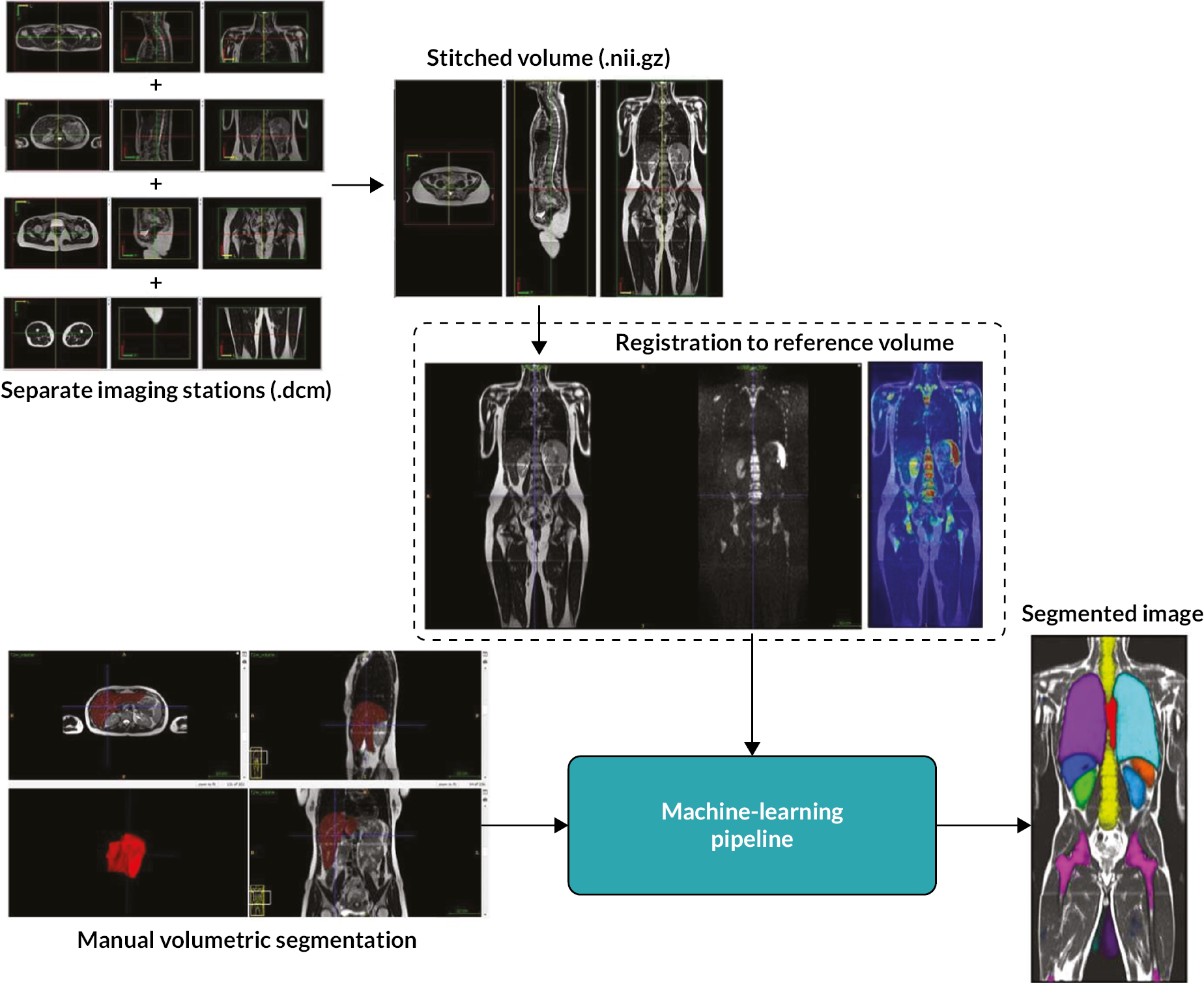

The stitched MRI volume data were used as input data to the algorithms, while training was based on manual annotation of the anatomic ROI on the T2-weighted volumes, first segmented by two radiology trainees and then checked by a MRI expert. The expert checked the segmentations, which were adjusted, if needed, and agreed in consensus. When multimodal MRI data were used as input to classification forests (CFs) and CNNs (e.g. T2w + T1w + DWI data, where T1w refers to T1w in- and opposed-phase images from the DIXON acquisitions, and DWI refers to b = 1000 s/mm2 images and ADC maps), an extra registration step was attempted, to add to the data preparation pipeline. However, in the final pipeline, no registration was undertaken. During this step, T1w and DWI volumes were registered to the T2w volumes using an affine transformation. A schematic overview of the data preparation process, including the registration step, is given in Figure 2. Data from different imaging stations are stitched to single volumes and then intrapatient registration is performed (when using multimodal MRI data as input to the algorithms). Manual segmentation and annotation of the anatomic ROI was also performed to generate training data for the ML algorithms.

FIGURE 2.

Magnetic resonance imaging image data preparation pipeline. Diagrammatic flowchart of the data preparation process for the ML pipelines. CFs algorithm. Reproduced from Lavdas et al. 14

Classification forests are powerful, multilabel classifiers that facilitate simultaneous segmentation of multiple organs. They have very good generalisation properties, meaning that the algorithm can be effectively trained using a relatively small number of annotated example data, a particularly important advantage in the clinical setting. CFs are a supervised, discriminative learning technique, which is based on RFs; an ensemble of weak classifiers called decision trees. Each decision tree is constructed in a way that it produces a partitioning of the training data, for example, image points that carry organ label information, in a way that training data with same labels are grouped together. This is achieved by building the trees from the root node down to the leaf nodes. Internal nodes, so called split nodes, separate the incoming data into two sets. Leaf nodes then correspond to small clusters of training data from which label statistics are computed and are used for predictions at testing time. Data splitting in the trees is based on an objective function, which maximises the information gained over empirical label distributions. The goal is to select discriminative features at split nodes that are best for partitioning the data. Different trees are built by injecting randomness for both feature selection and training data subsampling. This ensures decorrelation between trees and has proven to yield good generalisation properties. During testing, image points from a new image are ‘pushed’ through each tree until a leaf node is reached. The label statistics over training data that are stored in the leaf nodes are aggregated over different trees by simple averaging, and a final decision on the most likely label is made based on this aggregation. Intuitively, image points will fall into leaf nodes that contain similar image points from the training data with respect to the features that are evaluated along the path from root to leaf node. An attractive property of CFs is their ability to automatically select the right image features for a given task, from a potentially very large and high-dimensional pool of possible features. This is important because preselecting or handcrafting image features beforehand can be very difficult, as it is not known in advance which features are discriminative for the task at hand. In CFs, the user only has to provide weak guidance on the ranges of parameters that are used to randomly generate potential features. In this work, we make use of the popular offset box features, which have been shown to provide effective means of capturing local and contextual information. 64–66 Box features are very efficient to compute, which is beneficial for training and testing. In box features, intensity averages are calculated within randomly sized and displaced 3D boxes. Two types of features are computed: single-box and two-box features. Single-box features simply correspond to the average intensity of all voxels from a particular MRI sequence that fall into a 3D box. Two-box features return the difference between the averages computed for each of the two boxes and generalise intensity gradient features. Here, each box can be taken from a different MRI sequence and, thus, yield cross-sequence information.

Tuning parameters for our algorithm have been set accordingly to knowledge from previous applications, such as vertebra localisation in whole-body CT scans. We have used CFs extensively for related tasks for which cross-validation has been used to optimise hyperparameters such as tree depth. In this work, we used 50 trees with a maximum tree depth of 30. The stopping criterion for growing trees is if either the objective function (information gain) cannot be further improved or the number of training samples in a leaf fall below a threshold of four samples. We found that neither increasing the number of trees nor the tree depth increases the segmentation accuracy of the CFs.

Convolutional neural networks algorithm

Convolutional neural networks are feed-forward artificial neural networks, which have recently emerged as powerful ML methods for image analysis tasks, such as segmentation. CNNs are capable of learning complex, nonlinear data associations between the input images and segmentation labels through layers of feature extractors. Each layer performs multiple convolutional filter operations on the data coming in from the previous layer and outputs feature responses, which are then processed by the next layer. The last layer in the network combines all the outputs to make a prediction about the most likely class label for each voxel in an image. The parameters of the convolutions and weights for combining feature responses are learnt during the training stage, using an algorithm called back-propagation. The layered architecture enables CNNs to learn complex features automatically without any need for guidance from the user. The features correspond to a sequence of filter kernels learnt in consecutive layers of the neural network. A final feature that is used for classification thus can correspond to a nonlinear combination of individual features that are extracted hierarchically. This is also called features-of-features, as filter kernels in deeper layers are applied to the feature responses of earlier layers. This is different to CFs, where the user has to define a pool of potential features beforehand from which the most discriminative ones are then selected during CF training. However, CNNs come with an increased computational cost during training, and they have multiple meta-parameters that need to be highly tuned to achieve optimal performance, a process which can be challenging for less experienced users. In addition, defining the right network architecture is a challenge on its own and a field of active research.

In this project, we made use of a recently published CNN approach that we developed originally for the task of brain lesion segmentation in multiparametric MRI. The approach, called DeepMedic,15 uses a dual pathway CNN that processes an image at different levels of resolution simultaneously. This has the advantage that features are based on both local and contextual information, something that can be particularly appealing in the case of whole-body multiorgan segmentation. For example, the left and right kidneys (LKDN and RKDN) might look very similar locally and share similar features at small scale, but the contextual features that cover larger regions of the images allow the discrimination between the left and right body parts.

The CNN configuration used here follows largely the default configuration that has been previously used for brain lesion segmentation. To accommodate for larger context in the case of organ segmentation, the receptive field for the low-resolution pathway has been increased by using an image downsampling factor of 3. We use a dual pathway (two resolutions), 11-layer deep CNN, where the last two layers correspond to fully connected layers, which combine the features extracted on the two resolution pathways. We employ 50–70 feature maps (FMs) (i.e. different kernels) for each layer. The network architecture is fully convolutional and there are no max-pooling layers, which we find to increase segmentation accuracy. The CNN architecture is a balance between model capacity, training efficiency and memory demands.

Multi-atlas algorithm

A MA label propagation approach was also employed in this study. 67 MA segmentation uses a set of atlases (images with corresponding segmentations) that represent the interparticipant variability of the anatomy to be segmented. Each atlas is registered to the new image to be segmented using a deformable image registration. The MA approach accounts for anatomical shape variability and is more robust than single-atlas propagation methods in that at any errors associated with propagation, are averaged out when combining multiple atlases. The approach employed here makes use of efficient 3D intensity-based image registration68 with free-form deformations as the transformation model and correlation coefficient as the similarity measure. Majority voting is used to derive the final tissue label at each voxel.

Implementation, training and validation procedure

Training of CFs and CNNs is a demanding process computationally and in our case took up to 12 hours for CFs and 30 hours for CNNs for a single fold with 27 images, when using a quad-core Intel Xeon 3.5 GHz workstation with 32 GB RAM and a NVIDIA Titan X graphics processor unit (GPU). Our CFs implementation uses all available central processor units (CPUs), while the CNN implementation runs mostly on the GPU. Training only needs to be performed once. Testing of new data points to obtain the full segmentation of an image is a particularly efficient process and takes about a minute for CFs and CNNs. Note that the MA algorithm does not require any training, but has considerably longer running time during testing which scales linearly with the number of atlases. To segment a single image using 27 atlases takes about 15 minutes on CPU.

We ran five-fold cross-validation experiments on 34 artefact-free data sets to assess the agreement of segmentations between the ones from the developed algorithms and the ones from the clinical experts. All data sets were inspected by an expert radiologist before being selected for validation. Data sets with severe motion artefacts or DWI data sets with severe distortion artefacts, and therefore severe misalignment, were excluded from validation.

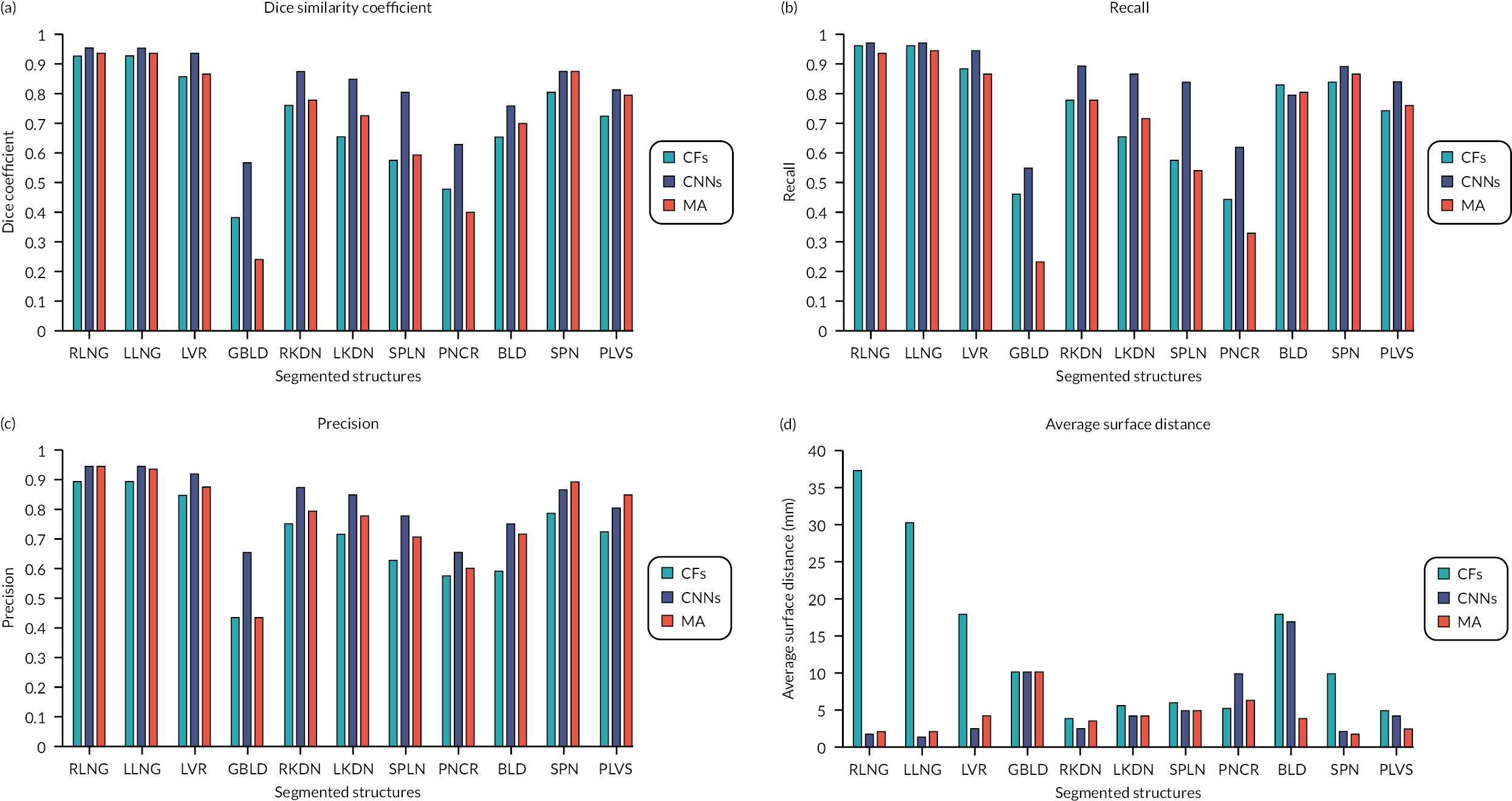

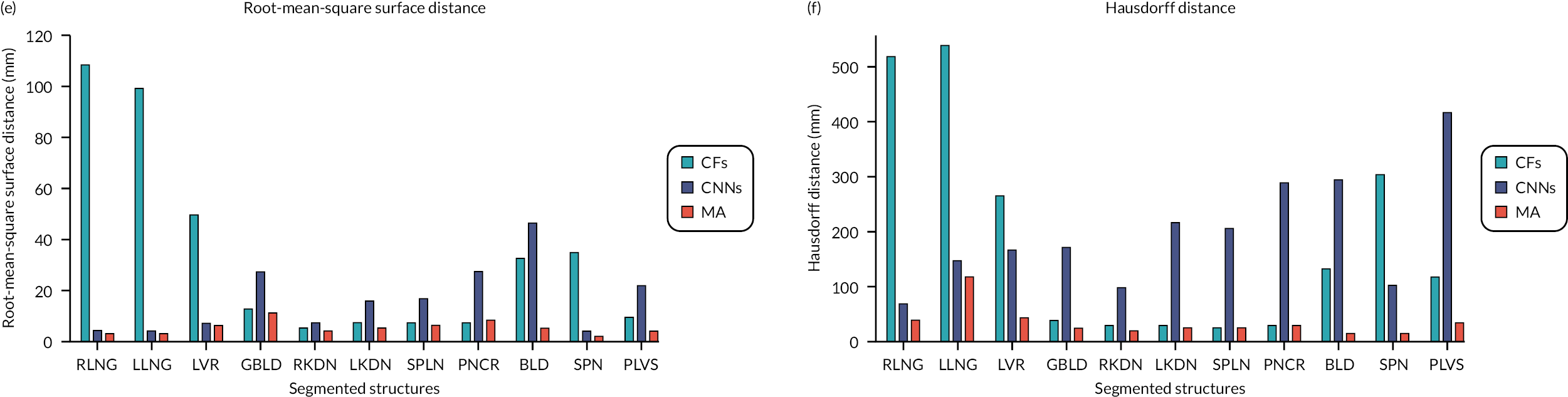

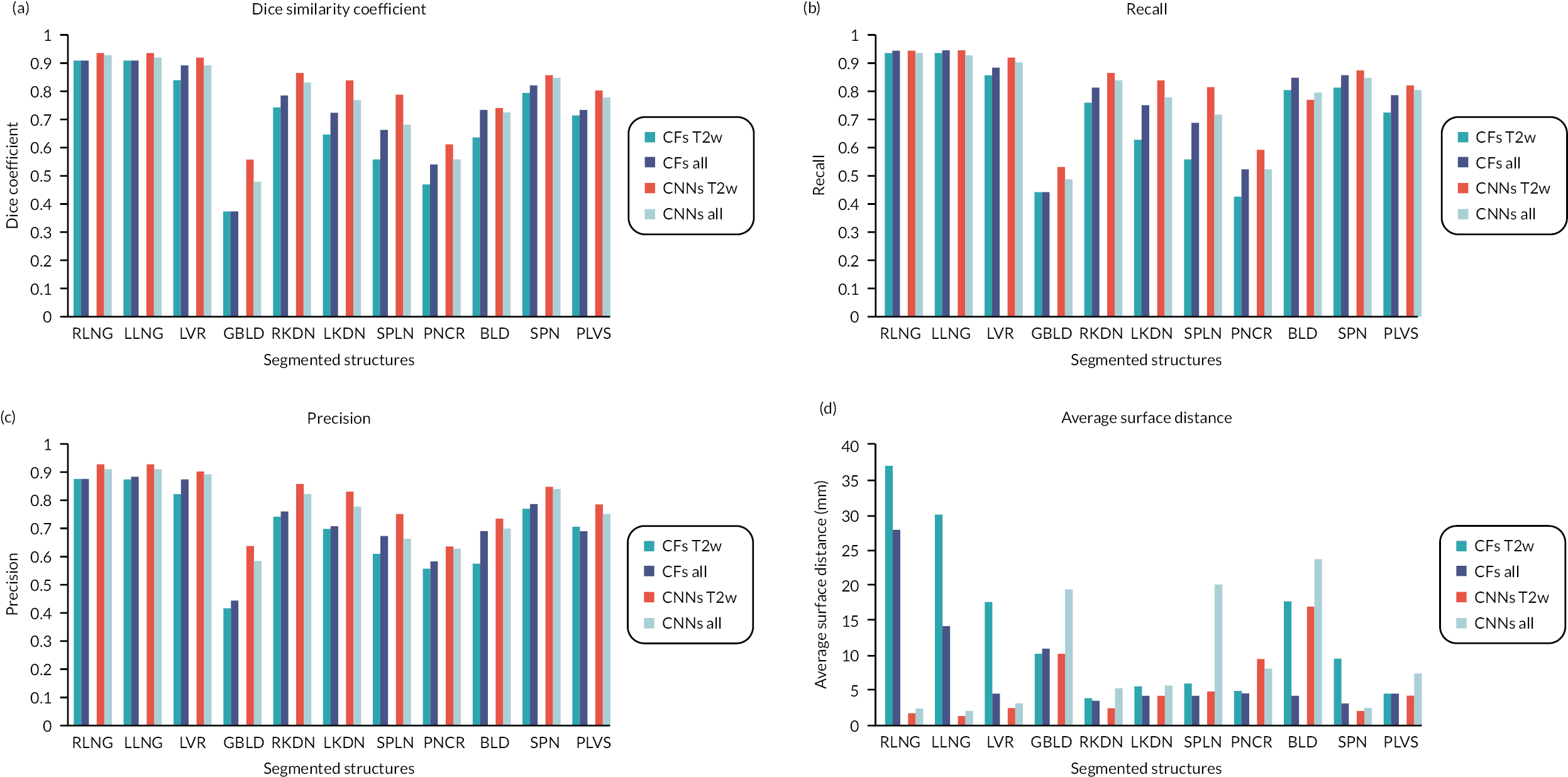

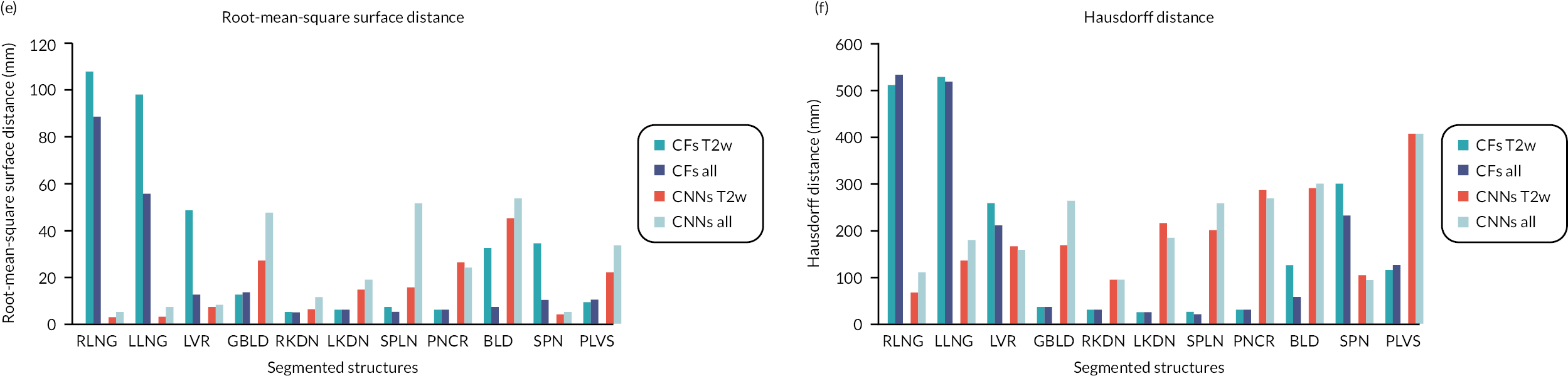

We report six metrics (three overlap and three surface distance-based measures) to assess the agreement between automatic segmentation results from our algorithms and the manual segmentations performed by the clinical experts. The Dice similarity coefficient (DSC) quantifies the match between the two segmentations (1 = complete overlap, 0 = no overlap). Recall (RE) can be expressed in terms of sensitivity (1 = no misses) and precision (PR) can be expressed in terms of specificity (1 = no false positives). The average surface distance (ASD) is the average of all the distances from points on the boundary of the automatic segmentation to the boundary of the manual segmentation (0 = perfect match), the root-mean-square surface distance (RMSSD) is calculated in the same way as the ASD, except that the distances are now squared (0 = perfect match). Finally, the Hausdorff distance (HD) or maximum surface distance is the maximal distance from a point in the first segmentation to a nearest point in manual segmentation (0 = perfect match). 69 The three surface distance metrics are expressed in millimetres and are unbounded.

We measured the above metrics for the right and left lungs (RLNG and LLNG), LVR, gallbladder (GBLD), RKDN and LKDN, spleen (SPLN), pancreas (PNCR), bladder (BLD), spine (SPN) and pelvic bones, including the femurs [pelvis (PLVS)] for all three algorithms, when using T2w volumes as inputs. Then, we did the same when using all imaging combinations (T2w + T1w + DWI) as inputs to CFs and CNNs.

Statistical analysis

One-way analysis of variances (ANOVA) was used to compare the mean metrics for all the examined structures between the three algorithms. Post hoc analysis (multiple comparisons) was performed with a Tukey test. In cases where the homogeneity of variances was violated, a Kruskal–Wallis test was used. A Mann–Whitney U-test was used to compare the performance between CFs and CNNs when using T2w volumes as input to the algorithms and when using all imaging combinations (T2w + T1w + DWI). ANOVA and Mann–Whitney U-tests were similarly used to compare the DSC of individual anatomical labels between the three algorithms and between CFs and CNNs when using different imaging inputs. Finally, a Mann–Whitney U-test was used to compare the DSC between CFs with all imaging combinations (T2w + T1w + DWI) as input and CNNs with T2w images as input only, for each anatomical label. A significance level of 0.05 was used for all tests. Statistical analysis was performed in SPSS 21.0 for Windows (SPSS, Chicago, IL, USA).

Results

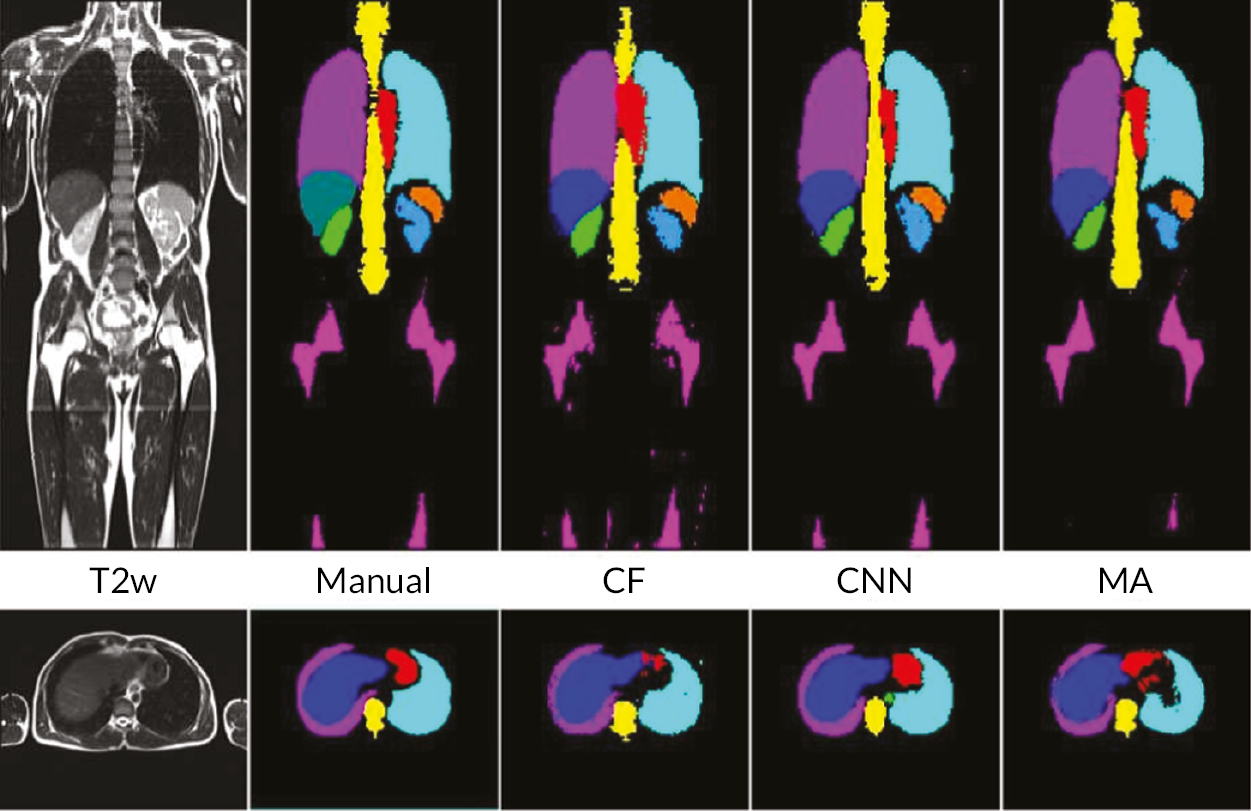

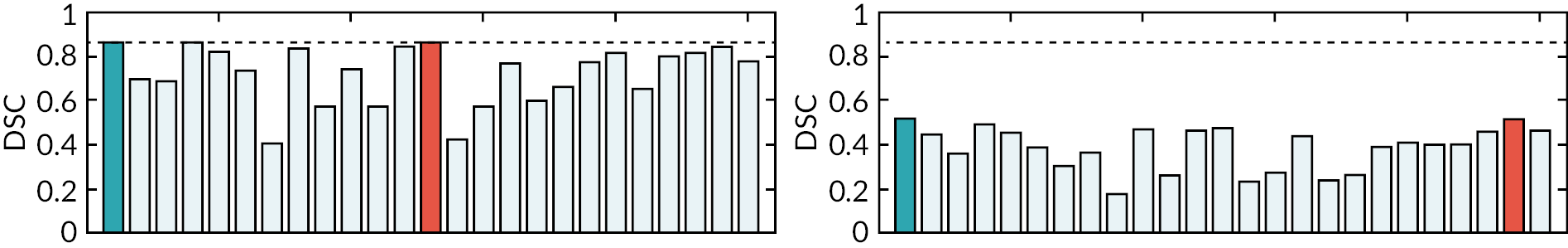

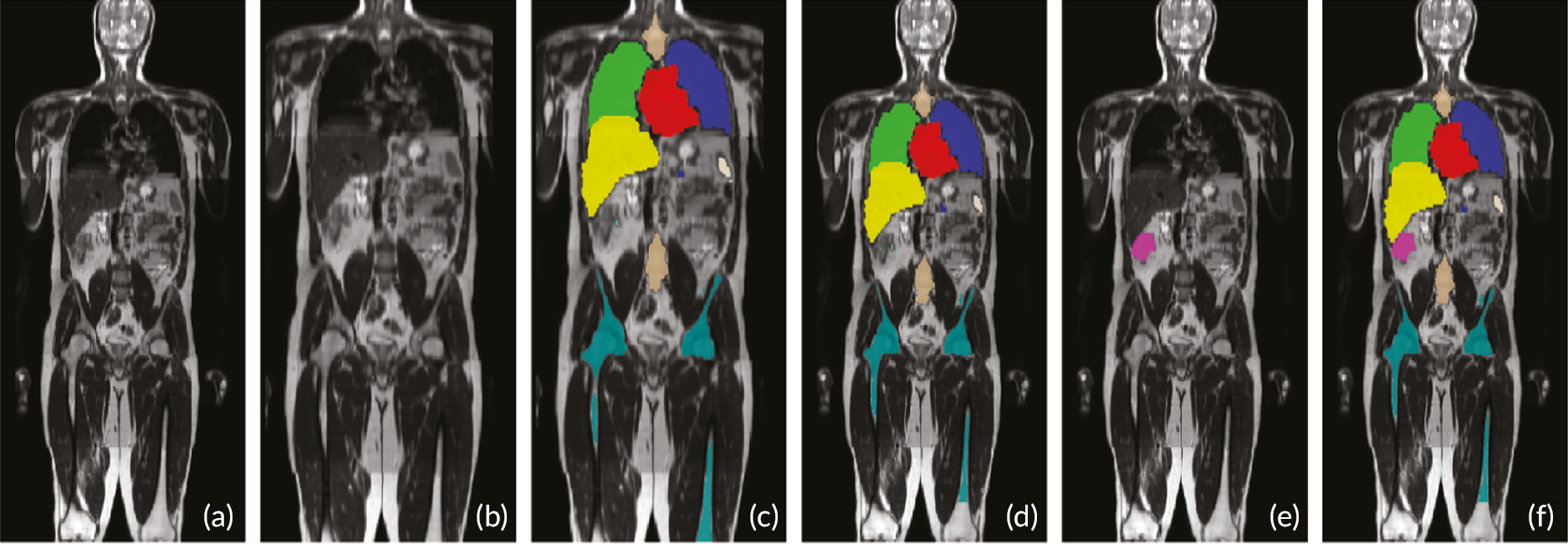

It is noteworthy that an ‘at a glance’ qualitative assessment reveals that CNNs outperform CFs and the MA algorithm in DSC, RE and PR (Figure 3), while the MA algorithm seems to perform best in terms of surface distance metrics, namely ASD, RMSSD and HD.

FIGURE 3.

Examples of segmentation results from three algorithms. T2w representative coronal (top row) and axial slices (bottom row), manual and automatic segmentations of major organs (lungs, heart, kidneys, LVR, and SPLN) and bones (SPN and femurs) from the three algorithms.

A visual example of automatic segmentation results from the three algorithms in the coronal and axial plane is shown in Figure 3.

A bar chart that provides a pictorial representation of the mean metrics (DSC, RE, PR, ASD, RMSSD and HD) for the segmented organs when using T2w volumes as input to all three algorithms is shown in Appendix 1, Figure 26.

Table 2 shows the pooled mean metrics ± standard deviation (SD) from all the segmented structures for the three algorithms. It also shows the p-values from the ANOVA when comparing the metrics between the three algorithms. It is seen that CNNs provide the highest mean DSC (0.81 ± 0.13), RE (0.83 ± 0.14) and PR (0.82 ± 0.10) compared to CFs and the MA algorithm, but not statistically significant (p = 0.271, 0.294 and 0.185, respectively). On the contrary, the MA algorithm returns the lowest ASD (4.22 ± 2.42 mm), RMSSD (6.13 ± 2.55 mm), and HD (38.9 ± 28.9 mm) when compared to CFs and CNNs, which is statistically significant (p = 0.005, 0.004 and 0.001, respectively).

| DSC | RE | PR | ASD (mm) | RMSSD (mm) | HD (mm) | |

|---|---|---|---|---|---|---|

| CFs | 0.70 ± 0.18 | 0.73 ± 0.18 | 0.71 ± 0.14 | 13.5 ± 11.3 | 34.6 ± 37.6 | 185.7 ± 194.0 |

| CNNs | 0.81 ± 0.13 | 0.83 ± 0.14 | 0.82 ± 0.10 | 5.48 ± 4.84 | 17.0 ± 13.3 | 199.0 ± 101.2 |

| MA | 0.71 ± 0.22 | 0.70 ± 0.24 | 0.77 ± 0.15 | 4.22 ± 2.42 | 6.13 ± 2.55 | 38.9 ± 28.9 |

| p | 0.271 | 0.294 | 0.185 | 0.005 | 0.004 | 0.001 |

Table 3 reports the DSC, the most commonly used metric to assess agreement between manual and automatic segmentations, for individual anatomical structures (labels) when the three algorithms (CFs, CNNs and MA) are using the T2w images as inputs only. It also shows the p-values from the ANOVA, when comparing the DSC between the three algorithms for each anatomical label.

| DSC | p-value | |||

|---|---|---|---|---|

| CFs | CNNs | MA | ||

| RLNG | 0.92 ± 0.03 | 0.95 ± 0.01 | 0.93 ± 0.01 | < 0.001 |

| LLNG | 0.92 ± 0.03 | 0.95 ± 0.01 | 0.93 ± 0.01 | < 0.001 |

| LVR | 0.85 ± 0.03 | 0.93 ± 0.01 | 0.86 ± 0.04 | < 0.001 |

| GBLD | 0.38 ± 0.26 | 0.56 ± 0.19 | 0.24 ± 0.26 | < 0.001 |

| RKDN | 0.75 ± 0.09 | 0.87 ± 0.03 | 0.77 ± 0.07 | < 0.001 |

| LKDN | 0.65 ± 0.19 | 0.84 ± 0.11 | 0.72 ± 0.13 | < 0.001 |

| SPLN | 0.57 ± 0.18 | 0.79 ± 0.11 | 0.58 ± 0.14 | < 0.001 |

| PNCR | 0.47 ± 0.13 | 0.62 ± 0.09 | 0.40 ± 0.14 | < 0.001 |

| BLD | 0.65 ± 0.22 | 0.75 ± 0.21 | 0.69 ± 0.23 | 0.162 |

| SPN | 0.80 ± 0.04 | 0.87 ± 0.01 | 0.87 ± 0.02 | < 0.001 |

| PLVS | 0.73 ± 0.05 | 0.81 ± 0.03 | 0.79 ± 0.06 | < 0.001 |

It is worth noting that CNNs performed significantly better (p < 0.001) than CFs and the MA algorithm in segmenting all the anatomies of interest, except for the BLD (p = 0.162).

A bar chart that provides a pictorial representation of the mean metrics (DSC, RE, PR, ASD, RMSSD and HD) for the segmented organs when using T2w volumes and all imaging combinations (T2w + T1w + DWI) as input to CFs and CNNs, is shown in Figure 26 in Appendix 1.

Pooled mean metrics ± SD are presented in Table 31 in Appendix 1. The bar plot of the mean measure metrics is plotted in Figures 26 and 27 of Appendix 1.

It is confirmed that the performance of CFs is improved when all imaging combinations are used (T2w + T1w + DWI) as input, when compared to using T2w volumes only. This is reflected in all metrics (DSC = 0.74 ± 0.16 vs. 0.70 ± 0.17, RE = 0.78 ± 0.16 vs. 0.73 ± 0.18, PR = 0.74 ± 0.13 vs. 0.71 ± 0.14, ASD = 7.89 ± 7.55 mm vs. 13.5 ± 11.2 mm, RMSSD = 20.9 ± 27.1 mm vs. 34.6 ± 37.6 mm and HD = 170.7 ± 194.0 mm vs. 185.7 ± 194.0 mm). On the contrary, the performance of CNNs is better when using T2w volumes only as input, rather than using all imaging combinations (T2w + T1w + DWI). This is again reflected in all metrics (DSC = 0.81 ± 0.12 vs. 0.77 ± 0.14, RE = 0.82 ± 0.14 vs. 0.79 ± 0.15, PR = 0.82 ± 0.10 vs. 0.79 ± 0.11, ASD = 5.48 ± 4.84 mm vs. 9.23 ± 8.04 mm, RMSSD = 17.0 ± 13.3 mm vs. 25.2 ± 19.1 mm and HD = 199.0 ± 101.2 mm vs. 215.9 ± 98.6 mm). No significant differences were found in the performance of CFs and CNNs when using different T2w only and all imaging combinations (T2w + T12w + DWI) as inputs.

Table 31 (in Appendix 1) shows the pooled mean metrics T SD from all the segmented structures for CFs and CNNs, when using T2w only volumes and all imaging combinations (T2w + T1w + DWI) as inputs. It also shows the p-values from the Mann–Whitney U-test when comparing the two-input cases for CFs and CNNs.

Table 4 shows the DSC for all the anatomical labels, when CFs and CNNs are being used with T2w images only (CFs_T2w and CNNs_T2w) as inputs and when using all imaging combinations (T2w + T1w + DWI) as input to the two algorithms (CFs_all and CNNs_all). It also shows the p-values from the Mann–Whitney U-tests when comparing the DSC between CFs and CNNs used with different imaging inputs.

| DSC | p-value | DSC | p-value | |||

|---|---|---|---|---|---|---|

| CFs_T2w | CFs_all | CNNs_T2w | CNNs_all | |||

| RLNG | 0.92 ± 0.03 | 0.92 ± 0.02 | 0.564 | 0.95 ± 0.01 | 0.94 ± 0.01 | 0.001 |

| LLNG | 0.92 ± 0.03 | 0.92 ± 0.02 | 0.500 | 0.95 ± 0.01 | 0.93 ± 0.03 | 0.003 |

| LVR | 0.85 ± 0.03 | 0.90 ± 0.02 | < 0.001 | 0.93 ± 0.01 | 0.91 ± 0.03 | < 0.001 |

| GBLD | 0.38 ± 0.26 | 0.38 ± 0.25 | 0.976 | 0.56 ± 0.19 | 0.49 ± 0.18 | 0.079 |

| RKDN | 0.75 ± 0.09 | 0.79 ± 0.06 | 0.093 | 0.87 ± 0.03 | 0.84 ± 0.05 | < 0.001 |

| LKDN | 0.65 ± 0.19 | 0.73 ± 0.13 | 0.023 | 0.84 ± 0.11 | 0.78 ± 0.13 | < 0.001 |

| SPLN | 0.57 ± 0.18 | 0.67 ± 0.15 | < 0.001 | 0.79 ± 0.11 | 0.69 ± 0.13 | < 0.001 |

| PNCR | 0.47 ± 0.13 | 0.55 ± 0.11 | 0.017 | 0.62 ± 0.09 | 0.57 ± 0.11 | 0.051 |

| BLD | 0.65 ± 0.22 | 0.74 ± 0.18 | 0.046 | 0.75 ± 0.21 | 0.74 ± 0.16 | 0.411 |

| SPN | 0.80 ± 0.04 | 0.83 ± 0.03 | < 0.001 | 0.87 ± 0.01 | 0.85 ± 0.05 | 0.044 |

| PLVS | 0.73 ± 0.05 | 0.74 ± 0.05 | 0.135 | 0.81 ± 0.03 | 0.78 ± 0.06 | 0.069 |

It is seen that the addition of extra imaging modalities (T1w + DWI) as input to CFs_T2w, significantly improves the segmentation performance (p = 0.046) for many anatomical structures (LVR, LKDN, SPLN, PNCR, BLD and SPN). By contrast, the addition of T1w + DWI to CNNs_T2w, significantly deteriorates the DSC (p = 0.044) for most the examined anatomies of interest (RLNG, LLNG, LVR, RKDN, LKDN, SPLN and SPN). Finally, Table 5 shows and compares the DSC from all anatomical labels, when segmented by the two algorithms with the best DSC performance as reported above, namely CFs_all and CNNs_T2w. It also shows the p-values from the Mann–Whitney U-tests to compare the DSC between the two algorithms for all the examined structures.

| DSC | p-value | ||

|---|---|---|---|

| CFs all | CNNs T2w | ||

| RLNG | 0.92 ± 0.02 | 0.95 ± 0.01 | < 0.001 |

| LLNG | 0.92 ± 0.02 | 0.95 ± 0.01 | < 0.001 |

| LVR | 0.90 ± 0.02 | 0.93 ± 0.01 | < 0.001 |

| GBLD | 0.38 ± 0.25 | 0.56 ± 0.19 | 0.002 |

| RKDN | 0.79 ± 0.06 | 0.87 ± 0.03 | < 0.001 |

| LKDN | 0.73 ± 0.13 | 0.84 ± 0.11 | < 0.001 |

| SPLN | 0.67 ± 0.15 | 0.79 ± 0.11 | < 0.001 |

| PNCR | 0.55 ± 0.11 | 0.62 ± 0.09 | 0.008 |

| BLD | 0.74 ± 0.18 | 0.75 ± 0.21 | 0.384 |

| SPN | 0.83 ± 0.03 | 0.87 ± 0.01 | < 0.001 |

| PLVS | 0.74 ± 0.05 | 0.81 ± 0.03 | < 0.001 |

It is striking that CNNs_T2w scored significantly better DSCs than CFs in all the examined organs (p < 0.008), aside from the BLD (p = 0.384). The segmentation performance was notably improved when using CNNs_T2w, even for organs with great variability in appearance, such as the GBLD (0.38 ± 0.25 for CNNs_T2w vs. 0.56 ± 0.19 for CFs_all, p = 0.002).

Discussion

All the algorithms tested in this study permitted automatic, multiorgan segmentation in whole-body MRI of healthy volunteers with very good agreement to the segmentations, performed manually by clinical experts. Accurate, multiorgan automatic segmentation in whole-body MRI is the first step in training ML algorithms to recognise normality. This will lead the way to developing automatic identification and segmentation algorithms for lesions, such as primary or metastatic tumours, with increased sensitivity and specificity. These algorithms could ultimately facilitate the process of reading whole-body scans in cancer patients by reducing the RT, and possibly, improving the diagnostic accuracy of WB-MRI. These algorithms may also assist in quantifying the extent of normal tissues such as muscle or fat.

Our analysis showed that CNNs outperformed CFs and the MA algorithm when T2w volumes were used as input to the algorithms and when using pooled overlap-evaluation metrics (DSC, RE and PR) to assess the accuracy of segmentation. When the performance of the algorithms was assessed with pooled surface distance metrics (ASD, RMSSD and HD), it was the MA algorithm that performed best. Single misinterpreted voxels in CFs and CNNs can greatly elevate ASD, RMSSD and HD; these metrics are particularly sensitive to outliers.

We then assessed the pooled metrics performance of CFs and CNNs when using all imaging combinations (T2w + T1w + DWI) as input, arguing that maximisation of training information to the algorithms might improve the performance of segmentation. We found that the performance of CFs was improved, however not significantly, when using all imaging combinations as input for training. The opposite was observed for CNNs.

The findings for the pooled metrics analysis, described above, were corroborated by a ‘per-organ’ quantitative analysis of the commonly used DSC, to assess the performance of our segmentation algorithms. This analysis confirmed that for all individual anatomical structures (except for the BLD), the algorithm that returned the greatest DSC was CNNs with T2w images only used as input.

Because our structural scans were acquired using breath-holds and the DWI ones with free breathing, we found that there was significant displacement between soft tissues in anatomical areas adjacent to the diaphragm between these types of scans. As the employed affine registration method cannot fully compensate for nonlinear motions caused by breathing, we assume that misregistration could be the reason why the performance of CNNs, despite performing better than the other two algorithms when using T2w volumes as input only, was degraded when using all imaging combinations as input for training. A more robust, nonlinear registration method could improve the accuracy of CNNs and further improve the performance of CFs, so we are currently looking into methods to address this issue. Alternatively, we could have generated training data by manually segmenting the structures of interest on each sequence type separately, but this would be a rather strenuous and time-consuming approach. Further work would need to address the performance limitations of our algorithms when segmenting organs with big variability in appearance (e.g. the GBLD or the PNCR).

Conclusion

In conclusion, in this phase of the MALIBO study we have developed and evaluated three state-of-the-art algorithms that automatically segment healthy organs and bones in whole-body MRI with accuracy comparable to the one achieved manually by clinical experts. An algorithm based on CNNs and trained using T2w only images as input performs favourably when compared to CFs or a MA algorithm, trained with either T2w only images or a combination of imaging inputs (T2w + T1w + DWI). Using multimodal MRI data as input for training, the developed algorithms did not improve the segmentation performance in this work, but it is anticipated to improve the segmentation performance if more effective WB registration between the various imaging modalities can be performed. This investigation is the first step towards developing robust algorithms for the automatic detection and segmentation of benign and malignant lesions in whole-body MRI scans for staging of cancer patients.

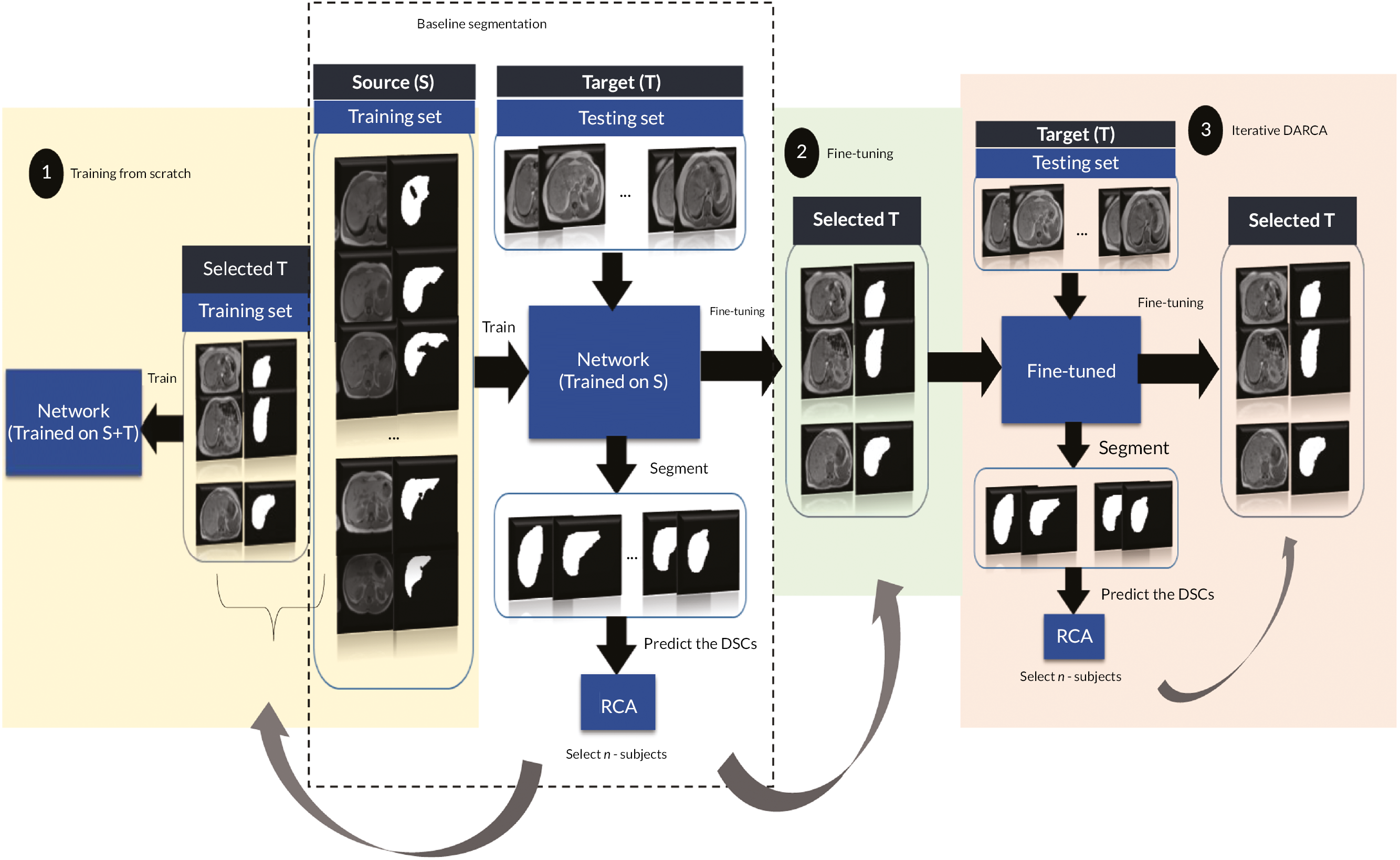

Chapter 4 Reverse classification accuracy and domain adaptation

This chapter is based on material previously published by the authors in Valindria et al. 70,71 with minor modification. This material is reproduced in line with Creative Commons licence BY 4.0.

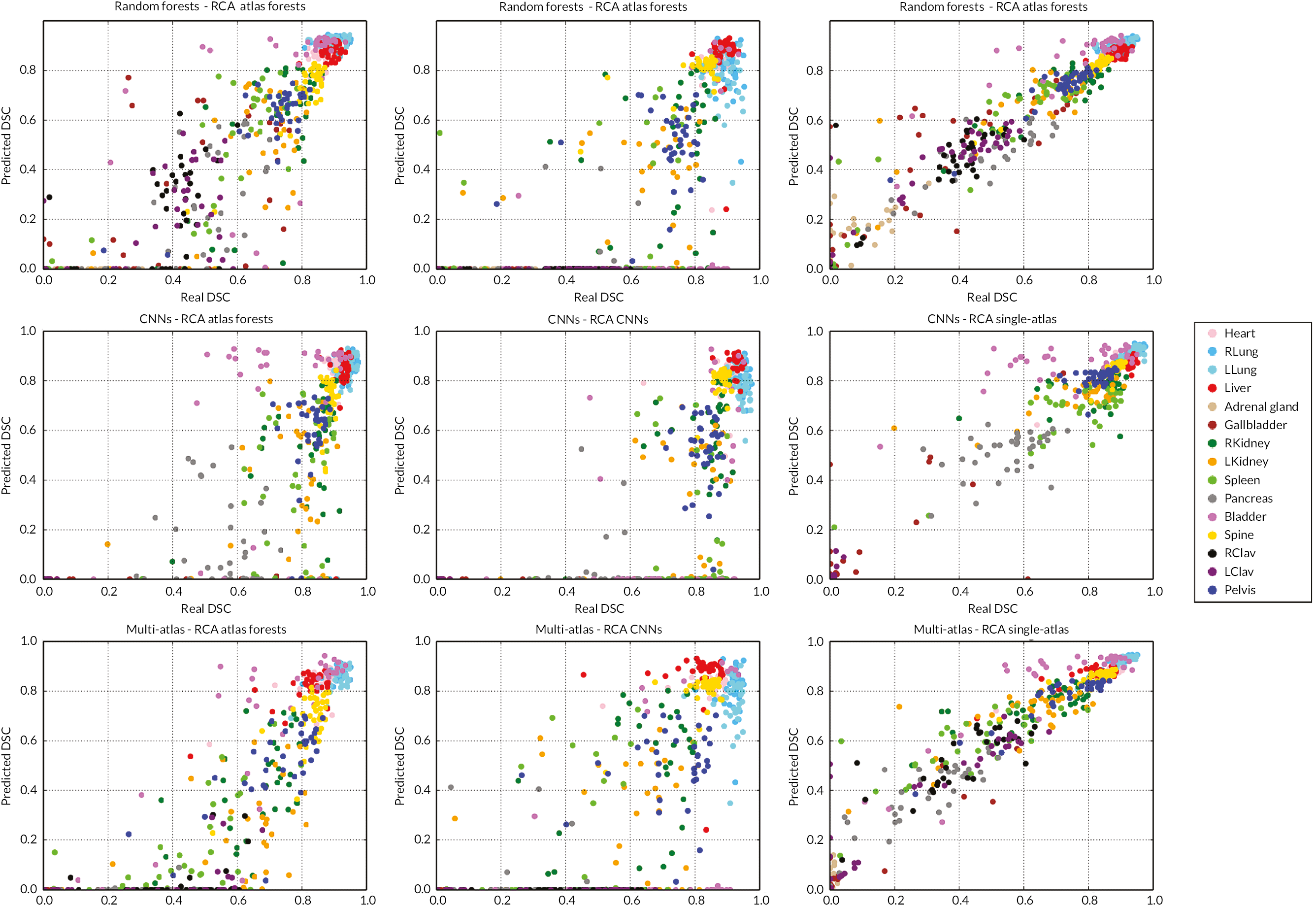

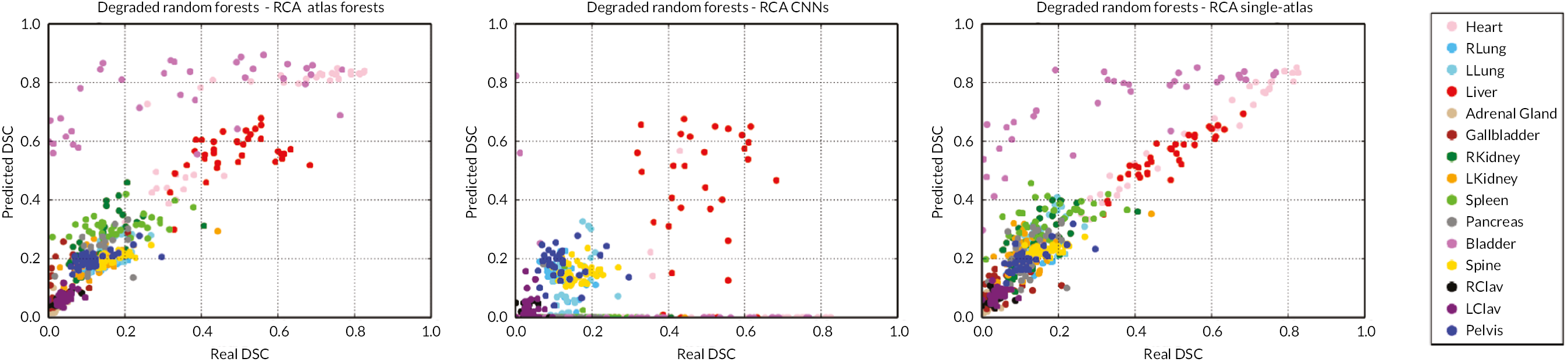

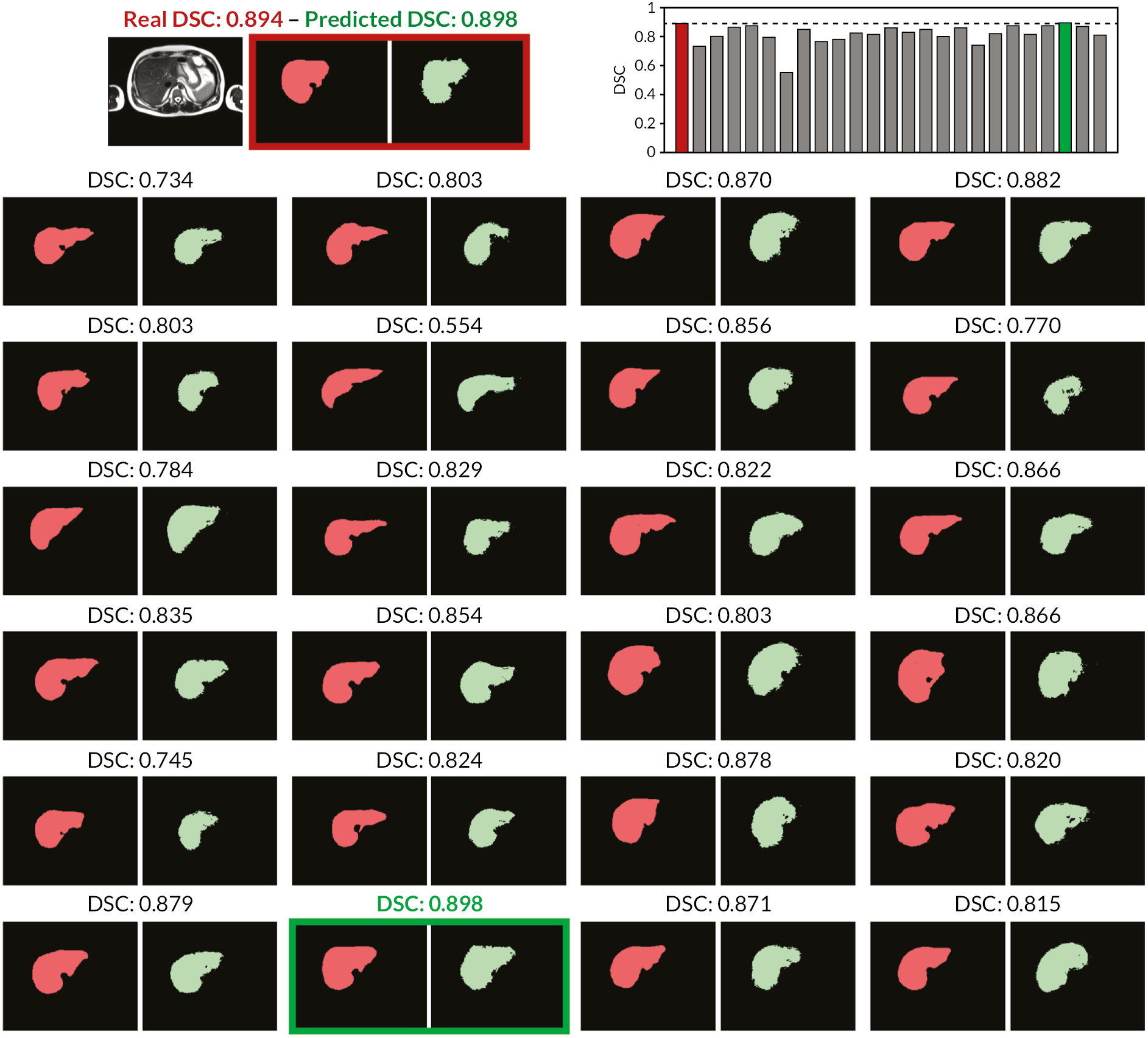

Reverse classification accuracy: predicting segmentation performance in the absence of ground truth70

When integrating computational tools such as automatic segmentation into clinical practice, it is of utmost importance to be able to assess the level of accuracy on new data, and in particular, to detect when an automatic method fails. However, this is difficult to achieve due to absence of GT. Segmentation accuracy on clinical data might be different from what is found through cross-validation because validation data are often used during incremental method development, which can lead to overfitting and unrealistic performance expectations. Before deployment, performance is quantified using different metrics, for which the predicted segmentation is compared to a reference segmentation, often obtained manually by an expert. However, little is known about the real performance after deployment when a reference is unavailable. In this paper, we introduce the concept of reverse classification accuracy (RCA)70 as a framework for predicting the performance of a segmentation method on new data. In RCA, we take the predicted segmentation from a new image to train a reverse classifier which is evaluated on a set of reference images with available GT. The hypothesis is that if the predicted segmentation is of good quality, then the reverse classifier will perform well on at least some of the reference images. We validate our approach on multiorgan segmentation with different classifiers and segmentation methods. Our results indicate that it is indeed possible to predict the quality of individual segmentations, in the absence of GT. Thus, RCA is ideal for integration into automatic processing pipelines in clinical routine and as part of large-scale image analysis studies.

Introduction

Segmentation is an essential component in many image analysis pipelines that aim to extract clinically useful information from medical images to inform clinical decisions in diagnosis, treatment planning, or monitoring of disease progression. A multitude of approaches have been proposed for solving segmentation problems, with popular techniques based on graph cuts,72 MA label propagation,63 statistical models73 and supervised classification. 74 Traditionally, performance of a segmentation method is evaluated on an annotated database using various evaluation metrics in a cross-validation setting. These metrics reflect the performance in terms of agreement75 of a predicted segmentation compared to a reference ‘GT.’ (For simplicity, we use the term GT to refer to the best-known reference, which is typically a manual expert segmentation.) Commonly used metrics include DSC76 and other overlap-based measures,77 but also metrics based on volume differences, surface distances, and others. 78–80 A detailed analysis of common metrics and their suitability for segmentation evaluation can be found in Konukoglu et al. 81

Once a segmentation method is deployed in routine, little is known about its real performance on new data. Due to the absence of GT, it is not possible to assess performance using traditional evaluation measures. However, it is critical to be able to assess the level of accuracy on clinical data,82 and in particular, it is important to detect when an automatic segmentation method fails. Especially when the segmentation is an intermediate step within a larger automated processing pipeline where no visual quality control of the segmentation results is feasible. This is of high importance in large-scale studies such as the UK Biobank Imaging Study83 where automated methods are applied to large cohorts of several thousand images, and the segmentation is to be used for further statistical population analysis. In this study, we are asking the question whether it is possible to assess segmentation performance and detect failure cases when there is no GT available to compare with. One possible approach to monitor the segmentation performance is to occasionally select a random data set, obtain a manual expert segmentation and compare it to the automatic one. While this can merely provide a rough estimate about the average performance of the employed segmentation method, in clinical routine we are interested in the per-case performance and want to detect when the automated method fails. The problem is that the performance of a method might be substantially different on clinical data and is usually lower than what is found through cross-validation on annotated data carried out beforehand due to several reasons. Firstly, the annotated database is normally used during incremental method development for training, model selection and FT of hyper-parameters. This can lead to overfitting84 which is a potential cause for lower performance on new data. Secondly, the clinical data might be different due to varying imaging protocols or artefacts caused by pathology. To this end, we propose a general framework for predicting the real performance of deployed segmentation methods on a per-case basis in the absence of GT.

Related work

Retrieving an objective performance evaluation without GT has been an issue in many domains, from remote sensing,85 graphics,86 to marketing strategies. 87 In computer vision, several works evaluate the segmentation performance by looking at contextual properties,88 by separating the perceptual salient structures89 or by automatically generating semantic GT. 90,91 However, these methods cannot be applied to a more general task, such as an image with many different class labels to be segmented. An attempt to compute objective metrics, such as PR and RE with missing GT, is proposed by Lamiroy and Sun92 but it cannot be used for data sets with partial GT since it applies a probabilistic model under the same assumptions. Another stand-alone method to consider is the meta-evaluation framework, where image features are used in a ML setting to provide a ranking of different methods,93 but this does not allow the estimation of segmentation performance on an individual image level.

Meanwhile, unsupervised methods94,95 aim to estimate the segmentation accuracy directly from the images and label maps using, for example, information-theoretic and geometrical features. While unsupervised methods can be applied to scenarios where the main purpose of segmentation is to yield visually consistent results that are meaningful to a human observer, the application in medical settings is unclear.

When there are multiple reference segmentations available, a similarity measure index can be obtained by comparing an automatic segmentation with the set of references. 96 In medical imaging, the problem of performance analysis with multiple references which may suffer from intrarater and inter-rater variability has been addressed. 97,98 The simultaneous truth and performance level estimation (STAPLE) approach97 has led to the work of Bouix et al. 99 that proposed techniques for comparing the relative performance of different methods without the need of GT. Here, the different segmentation results are treated as plausible references, thus can be evaluated through STAPLE and the concept of common agreement. Another work by Sikka and Deserno100 has quantitatively evaluated the performance of several segmentation algorithms by region correlation matrix. The limitation of this work is that it cannot evaluate the segmentation performance of a particular method on a particular image independently.

Recent work has explored the idea of learning a regressor to directly predict segmentation accuracy from a set of features that are related to various segmentation energy terms. 101 Here, the assumption is that those features are well suited to characterise segmentation quality. In an extension for a security application, the same features as in Kohlberger et al. 101 are extracted and used to learn a generative model of good segmentations that can be used to detect outliers. 102 Similarly, the work of Frounchi et al. 103 considers training of a classifier that is able to discriminate between consistent and inconsistent segmentations. However, the approaches101,103 can only be applied when a training database with good and bad segmentations is available from which a mapping from features to segmentation accuracy can be learnt. Examples of bad segmentations can be generated by altering parameters of automatic methods, but it is unclear whether those examples resemble realistic cases of segmentation failure. The generative model approach in Grady et al. 102 is appealing as it only requires a database of good segmentations. However, there is still the difficulty of choosing appropriate thresholds on the probabilities that indicate bad or failed segmentations. Such an approach cannot not be used to directly predict segmentation scores such as DSC, but can be useful to inform automatic quality control or to automatically select the best segmentation from a set of candidates. In the general ML domain, the lack-of-label problem has been tackled by exploiting transfer learning104 using a reverse validation to perform cross-validation when the number of labelled data are limited. The basic idea of reverse validation104 is based on reverse testing, where a new classifier is trained on predictions on the test data and evaluated again on the training data. This idea of reverse testing is closely related to our approach as we will discuss in the following section.

Contribution

The main contribution of this study is the introduction of the concept of RCA to assess the segmentation quality of an individual image in the absence of GT. RCA can be applied to evaluate the performance of any segmentation method on a per-case basis. To this end, a classifier is trained using a single image with its predicted segmentation acting as pseudo GT. The resulting reverse classifier (or RCA classifier) is then evaluated on images from a reference database for which GT is available. It should be noted that the reference database can be (but does not have to be) the training database that has been used to train, cross-validate and fine-tune the original segmentation method. The assumption is that in ML approaches, such a database is usually already available, but it could also be specifically constructed for the purpose of RCA. Our hypothesis is that if the segmentation quality for a new image is high, then the RCA classifier trained on the predicted segmentation used as pseudo GT will perform well at least on some of the images in the reference database, and similarly, if the segmentation quality is poor, the classifier is likely to perform poorly on the reference images. For the segmentations obtained on the reference images through the RCA classifier, we can quantify the accuracy, for example, using DSC, since reference GT is available. It is expected that the maximum DSC score over all reference images correlates well with the real DSC that one would get on the new image if GT were available. Although the idea of RCA is similar to reverse validation104 and reverse testing,105 the important difference is that in our approach we train a reverse classifier on every single instance while the approaches in references104,105 train single classifiers over the whole test set and its predictions jointly to find out what the best original predictor is. RCA has the advantage of allowing to predict the accuracy for each individual case, while at the same time aggregating over such accuracy predictions allows drawing conclusions for the overall performance of a particular segmentation method.

In the following, we will first present the details of RCA and then evaluate its applicability to a multi-organ segmentation task by exploring the prediction quality of different segmentation metrics for different combinations of segmentation methods and RCA classifiers. Our results indicate that, at least to some extent, it is indeed possible to predict the performance level of a segmentation method on each individual case, in the absence of GT. Thus, RCA is ideal for integration into automatic processing pipelines in clinical routine and as part of large-scale image analysis studies.

Reverse classification accuracy

The RCA framework is based on the idea of training reverse classifiers on individual images utilising their predicted segmentation as pseudo GT. In this work, we employ three different methods for realising the RCA classifier and evaluate each in different combinations with three state-of-the-art image segmentation methods. Details about the different RCA classifiers are provided in the following sections.

Learning reverse classifiers

Given an image I and its predicted segmentation SI, we aim to learn a function fI,SI(x): Rn 7 → C that acts as a classifier by mapping feature vectors x Є Rn extracted for individual image points to class labels c Є C. In theory, any classification approach could be utilised within the RCA framework for learning the function fI,SI. We experiment with three different methods reflecting state-of-the-art ML approaches for voxel-wise classification and atlas-based label propagation.