Notes

Article history

The research reported in this issue of the journal was funded by the HSDR programme or one of its preceding programmes as project number 17/99/89. The contractual start date was in February 2019. The final report began editorial review in August 2021 and was accepted for publication in February 2022. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HSDR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

Copyright © 2022 Allen et al. This work was produced by Allen et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaption in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.

2022 Allen et al.

Chapter 1 General introduction

Stroke

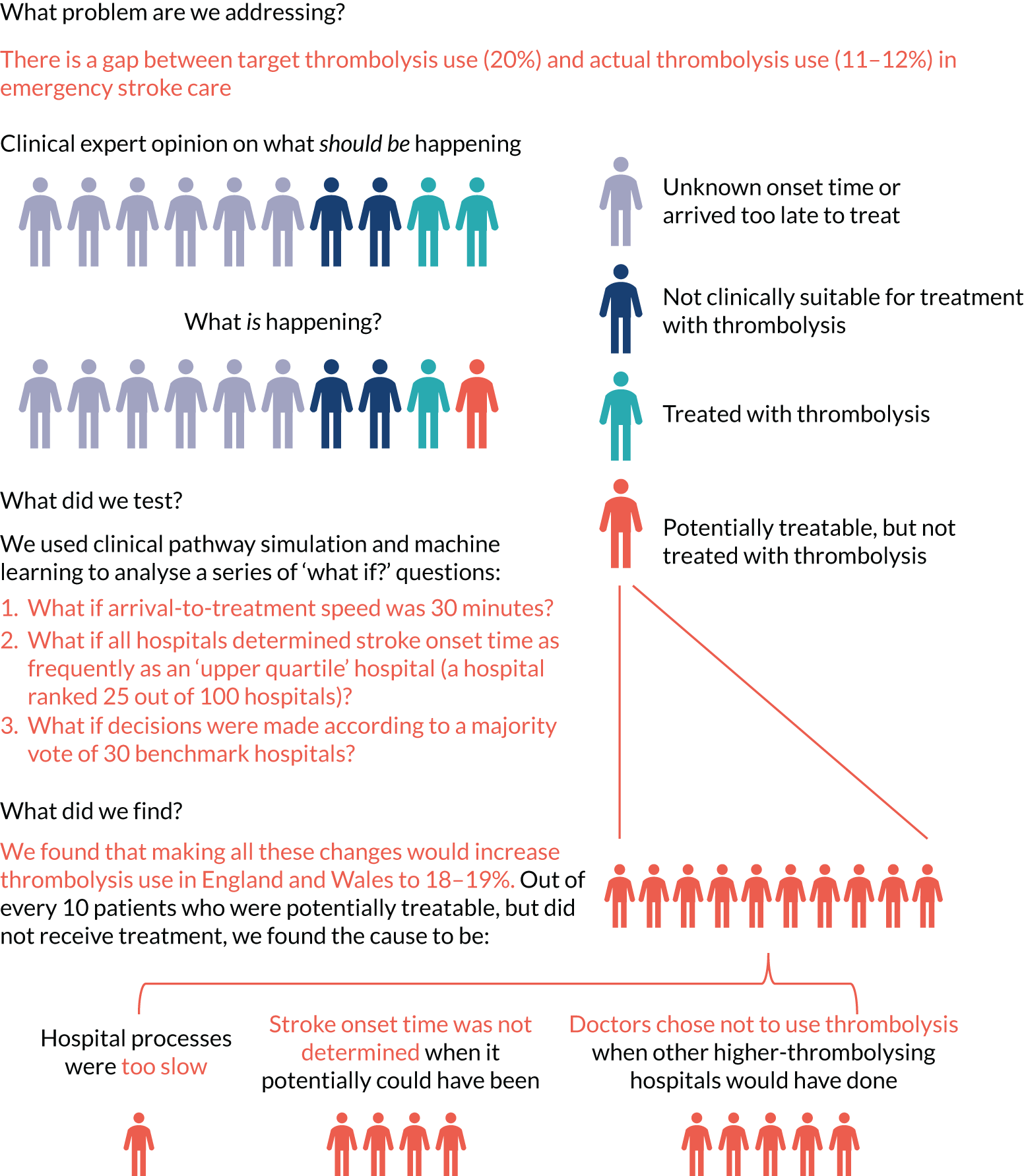

Stroke is a medical condition in which blood flow to an area of the brain has been interrupted, causing cell death. 1 Stroke may be broadly categorised into two types: (1) ischaemic (due to an arterial blockage) and (2) haemorrhagic (due to bleeding).

Stroke is a major cause of adult long-term disability and presents a significant load on health-care services. In 2010, it was reported that, across the world, there were 5.9 million stroke deaths and 33 million stroke survivors. 2 Approximately 85,000 people are hospitalised with stroke each year in England, Wales and Northern Ireland. 3 Over the last 25 years, stroke was the leading cause of lost disability-adjusted life-years, which combine mortality and disability burdens. 4

Intravenous thrombolysis

Intravenous thrombolysis is a form of ‘clot-busting’ therapy developed to treat ischaemic stroke by removing or reducing the blood clot impairing blood flow in the brain.

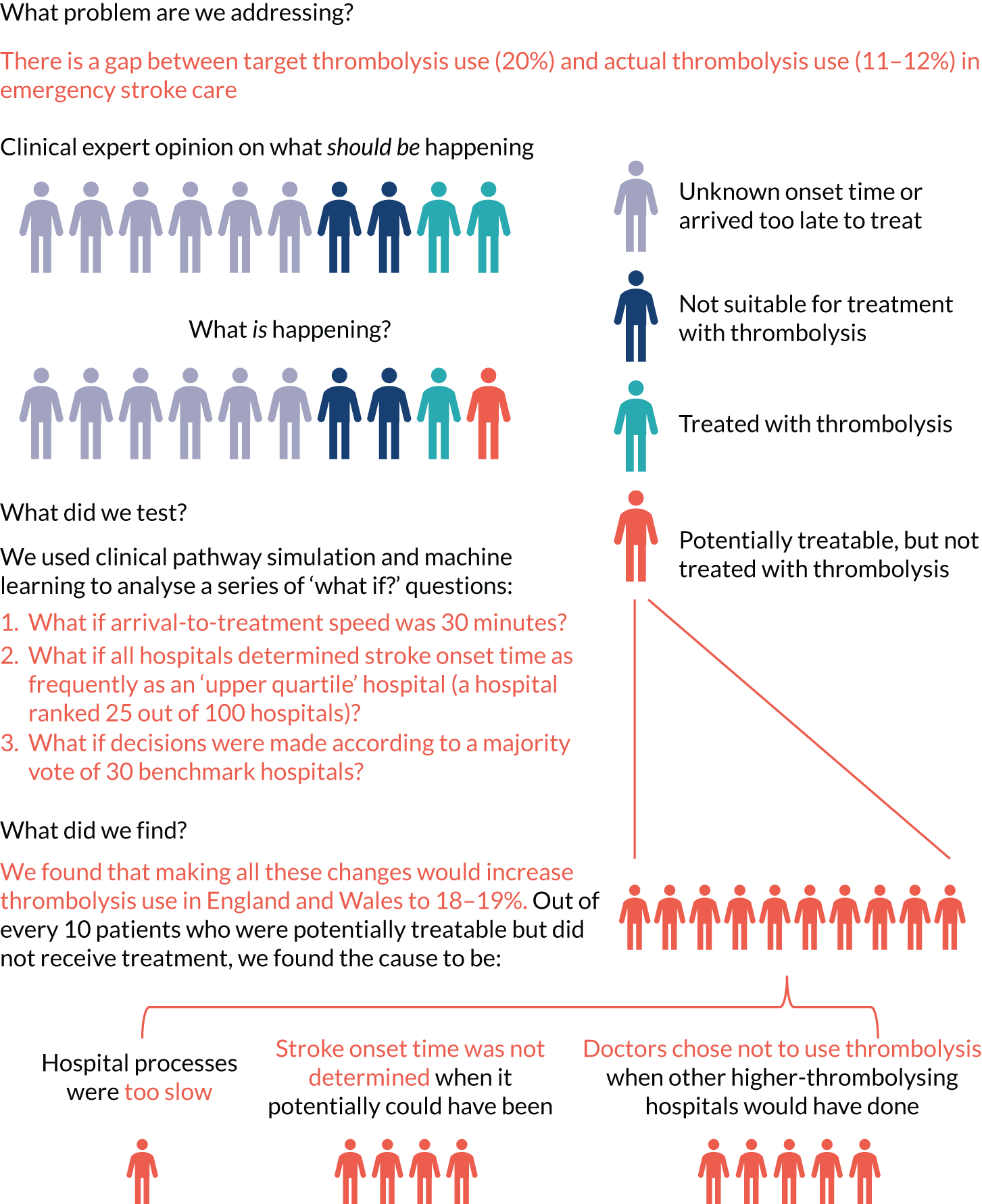

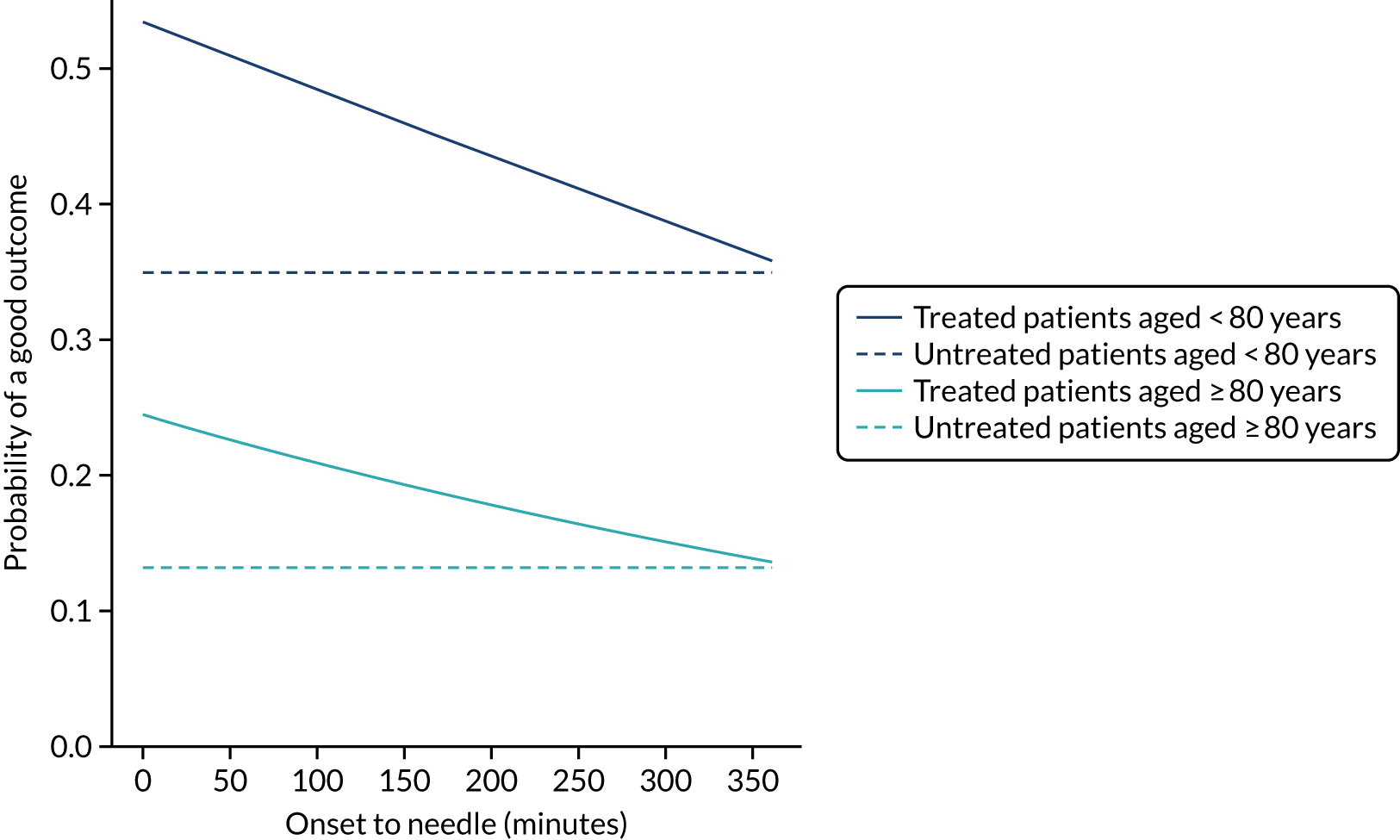

For ischaemic strokes, thrombolysis is an effective treatment for the management of acute stroke if given soon after stroke onset,5 and is recommended for use in many parts of the world, including Europe. 6 The mechanism of action is activation of the body’s own clot breakdown pathway, fibrinolysis. Although the benefit of alteplase (recombinant tissue plasminogen activator) is now well established, individual trials have suffered from uncertainty in the relationship between time to treatment with alteplase and the benefit achieved. Emberson et al. 5 accessed individual patient results from nine clinical trials involving 6756 patients (treated, n = 3391; untreated control, n = 3365). By combining the trials in this meta-analysis, Emberson et al. 5 established a statistically significant benefit of alteplase up to 5 hours after onset of stroke symptoms, using a modified Rankin Scale (mRS) score of 0–1 at 3–6 months as the outcome measure. The decline in odds ratio of an additional good outcome was consistent across age groups, but the differences in baseline (untreated) probability of a good outcome between those aged < 80 years and those aged ≥ 80 years means that the absolute probability of a good outcome after treatment with alteplase is dependent on age group. Figure 1 shows the probability of a good outcome if receiving alteplase, depending on time to treatment, derived from the analysis by Emberson et al. 5

FIGURE 1.

Effect of time to treatment (onset-to-needle time) on the clinical benefit (probability of an outcome with mRS score of 0–1) from thrombolysis, as derived by Emberson et al. 5

Targets for thrombolysis use and speed

The European Stroke Organisation (Basel, Switzerland) has prepared a European stroke action plan7 and has suggested a European target of at least 15% thrombolysis, with median onset-to-needle (also known as onset-to-treatment) times of < 120 minutes, noting that evidence suggests that achieving these targets may be aided by centralisation of stroke services. 8,9 An analysis of the third international stroke trial for thrombolysis concluded that 60% of ischaemic stroke patients arriving within 4 hours of known stroke onset were suitable for thrombolysis. 10 Assuming that 40% of patients arrive within 4 hours of known stroke onset, and assuming that 85% of stroke is ischaemic, then this gives a potential target of 20% thrombolysis. (In 2016–18, in England and Wales, 37% of emergency stroke patients arrived within 4 hours of known stroke onset; see Table 1.) The 2019/20 Sentinel Stroke National Audit Programme (SSNAP) report3 provides an NHS England long-term ambition of 20% of emergency stroke patients receiving thrombolysis. This target of treating up to 20% of patients with thrombolysis is also stated in The NHS Long Term Plan11 and the specification of the Integrated Stroke Delivery Networks. 12

The NHS plan for improving stroke care through the use of Integrated Stroke Delivery Networks sets a target that patients should receive thrombolysis within 60 minutes of arrival, but ideally within 20 minutes. 12 Although this speed of thrombolysis, also called door-to-needle time, provides an ambitious target, it has also been shown to be achievable, as the Helsinki University Central Hospital (Helsinki, Finland) has reported a median of 20 minutes door-to-needle time, with 94% of patients treated within 60 minutes. 13 This speed was achieved using innovative solutions, such as bypassing the emergency department (ED) and taking patients straight to the scanner (with thrombolysis delivered close to the scanner to avoid any transfer-related delay in treatment).

Barriers to thrombolysis use

There have been many studies of barriers to the uptake of thrombolysis. 14–16 Eissa et al. 14 divided barriers into pre- and post-admission phases. Pre-admission barriers included poor patient response (e.g. not recognising symptoms of a stroke and not calling for help soon enough) and paramedic-related barriers (e.g. adding delays in getting the patient to an appropriate hospital in the fastest possible time). Hospital-based barriers include organisational problems (e.g. delay in recognising stroke patients, delays in pathway and poor infrastructure) and physician uncertainty or lack of experience leading to low use of thrombolysis. There has been significant discussion on how services may best be organised to optimise the effectiveness of thrombolysis. 17

Clinical audit

NHS England describes clinical audit in the following way:18

Clinical audit is a way to find out if healthcare is being provided in line with standards and lets care providers and patients know where their service is doing well, and where there could be improvements. The aim is to allow quality improvement to take place where it will be most helpful and will improve outcomes for patients. Clinical audits can look at care nationwide (national clinical audits) and local clinical audits can also be performed locally in trusts, hospitals or GP [general practitioner] practices anywhere healthcare is provided.

In England, the Healthcare Quality Improvement Partnership (HQIP) is responsible for overseeing and commissioning clinical audits. Over 30 clinical audits together form the National Clinical Audit Programme. These audits collect and analyse data supplied by local clinicians.

SSNAP collects data on about 85,000 stroke admission patients per year (i.e. more than 90% of stroke admissions to acute hospitals in England, Wales and Northern Ireland). The data collected include process information and outcomes for stroke care up to 6 months post stroke. SSNAP publishes analysis of results on its website. 19

Clinical pathway simulation

Clinical pathway simulation aims to mimic the passage of individual patients through a clinical pathway. Each patient may take a different route through the pathway, and may take different amounts of time in process steps, depending on the model logic and distributions of timings used.

Computer simulation of stroke pathways has previously allowed investigation and improvement of thrombolysis use in individual hospitals, including both increasing the number of patients treated and reducing door-to-needle times. 20,21 These models have usually focused solely on the speed of the acute stroke pathway from arrival at hospital to treatment with thrombolysis. 20 Interest in the use of simulation for improving the performance of the acute stroke pathway has reached such a level that a common framework has been proposed. 22

Modelling clinical decision-making

A component of clinical pathway simulation that has not usually been included in modelling is patient-level clinical decision-making. For example, in previous modelling studies, when there was time to administer patient thrombolysis, the model component ‘Does a patient receive thrombolysis?’ was modelled as a stochastic event independent of clinical characteristics of the patient. 20,21

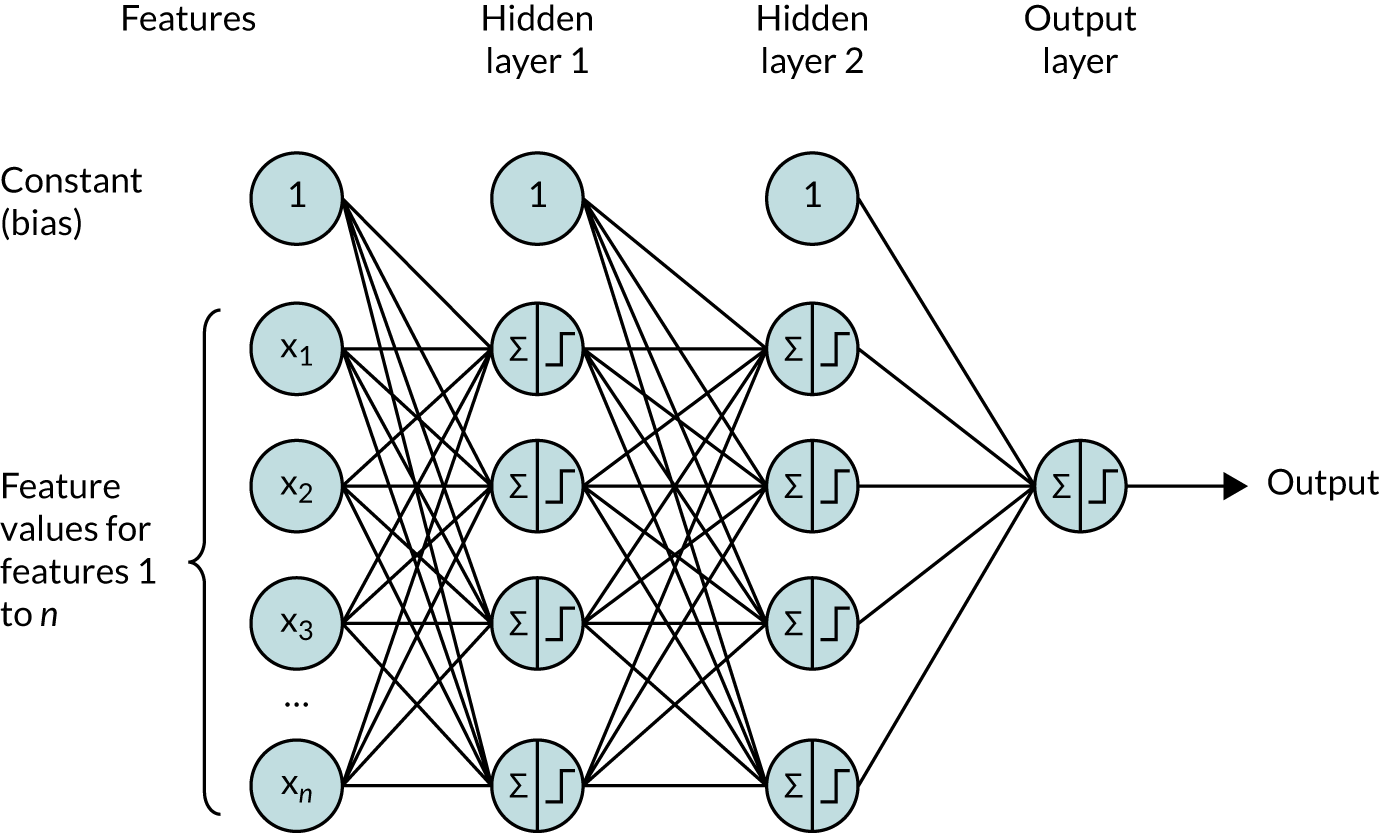

In this project, we seek to use machine learning to predict the probability of a patient receiving thrombolysis, based on a range of features about the patient and also based on which stroke team (hospital) they attend, with the aim of being able to answer the counterfactual question ‘What treatment would this patient probably receive at other hospitals?’. This is a classical supervised learning problem in machine learning, and we explore three machine learning methods: (1) logistic regression, (2) random forest and (3) neural network.

Combining clinical pathway simulation and clinical decision-making

Traditional clinical pathway simulation mimics a process, using times and decisions sampled from appropriate distributions. In this project, we combine simulation with machine learning, by using probabilities of receiving thrombolysis derived from machine learning models (e.g. by ‘replacing’ the decision-making in one hospital with a majority vote of a standard set of benchmark hospital decision models).

Pilot work

Pilot work for this project, based on seven regional stroke teams, has been published. 23

Chapter 2 Open science

In conducting this research we have tried to follow, where possible, the recommendations for open and reproducible data science from The Alan Turing Institute. 24 Detailed methods (including all code used) and results are available online. 25

At this stage, it is not possible to share original data.

Chapter 3 Data

Data were obtained from the Sentinel Stroke National Audit,19 managed through HQIP. 26 SSNAP collects patient-level data on acute stroke admissions, with pre-hospital data recently added. SSNAP has near-complete coverage of all acute stroke admissions in the UK (outside Scotland) and is building up coverage of outcomes at 6 months. All hospitals admitting acute stroke participate in the audit, and year-on-year comparison with Hospital Episode Statistics27 confirms estimated case ascertainment of 95% of coded cases of acute stroke.

The NHS Health Research Authority (HRA) decision tool28 was used to confirm that ethics approval was not required to access the data. Data access was authorised by HQIP (reference HQIP303).

Data were retrieved for 246,676 emergency stroke admissions to acute stroke teams in England and Wales between 2016 and 2018 (i.e. 3 full years).

Data fields are given in Appendix 1.

Chapter 4 General descriptive statistics

What is in this section?

This section provides an analysis of descriptive statistics of the SSNAP data.

This section contains the following analyses:

-

descriptive analysis of stroke pathway data, describing mean patient and pathway characteristics, and describing variation across stroke teams and between age groups (i.e. age < 80 years vs. ≥ 80 years)

-

association between patient features on use of thrombolysis, examining the relationship between patient features and use of thrombolysis, including analysis by disability (mRS) before stroke, stroke severity [using the National Institutes of Health Stroke Scale (NIHSS)], gender, ethnicity, age group, onset known, arrival by ambulance and comorbidities

-

comparison of average values for patients who receive thrombolysis and those who do not, comparing feature means for patients who receive thrombolysis and those who do not

-

pathway patterns throughout the day, analysing key pathway statistics broken down by time of day (3-hour epochs)

-

pathway patterns throughout the week, analysing key pathway statistics broken down by day of week

-

pathway patterns throughout the day in an example single stroke team, analysing key pathway statistics broken down by time of day (3-hour epochs) in a single stroke team

-

pathway patterns throughout the week in an example single stroke team, analysing key pathway statistics broken down by day of week in a single stroke team

-

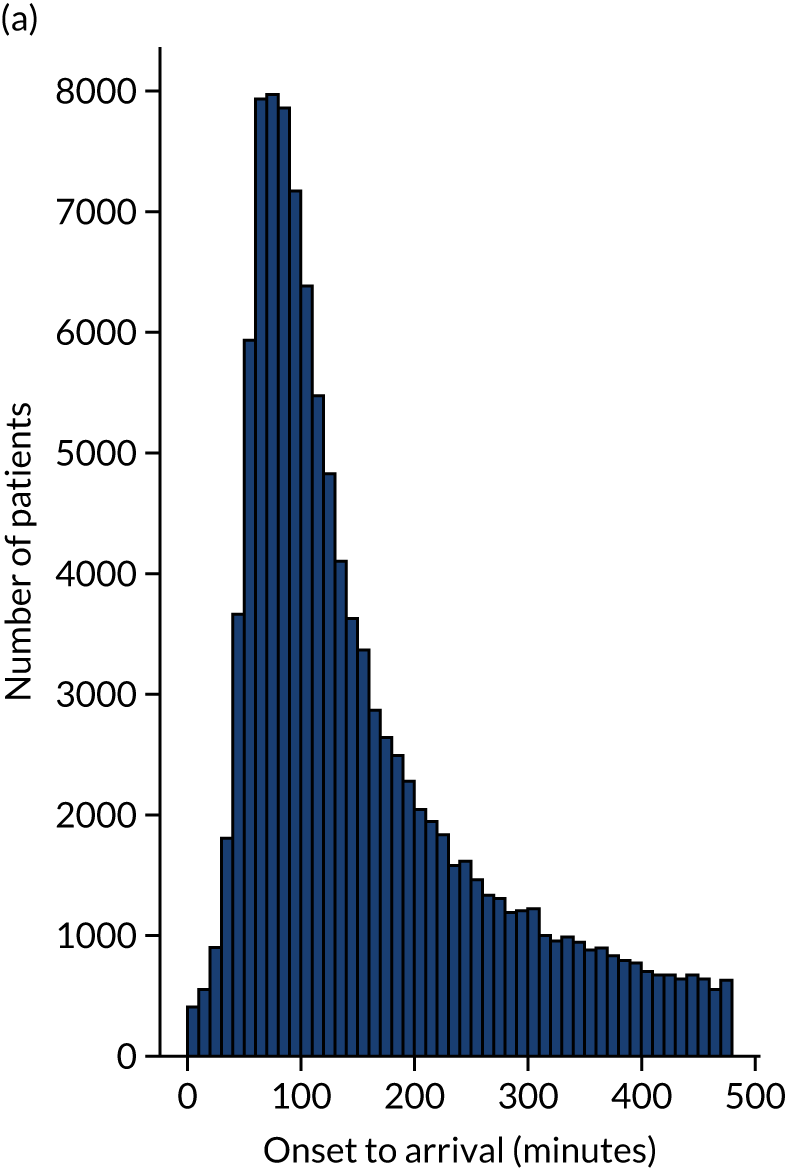

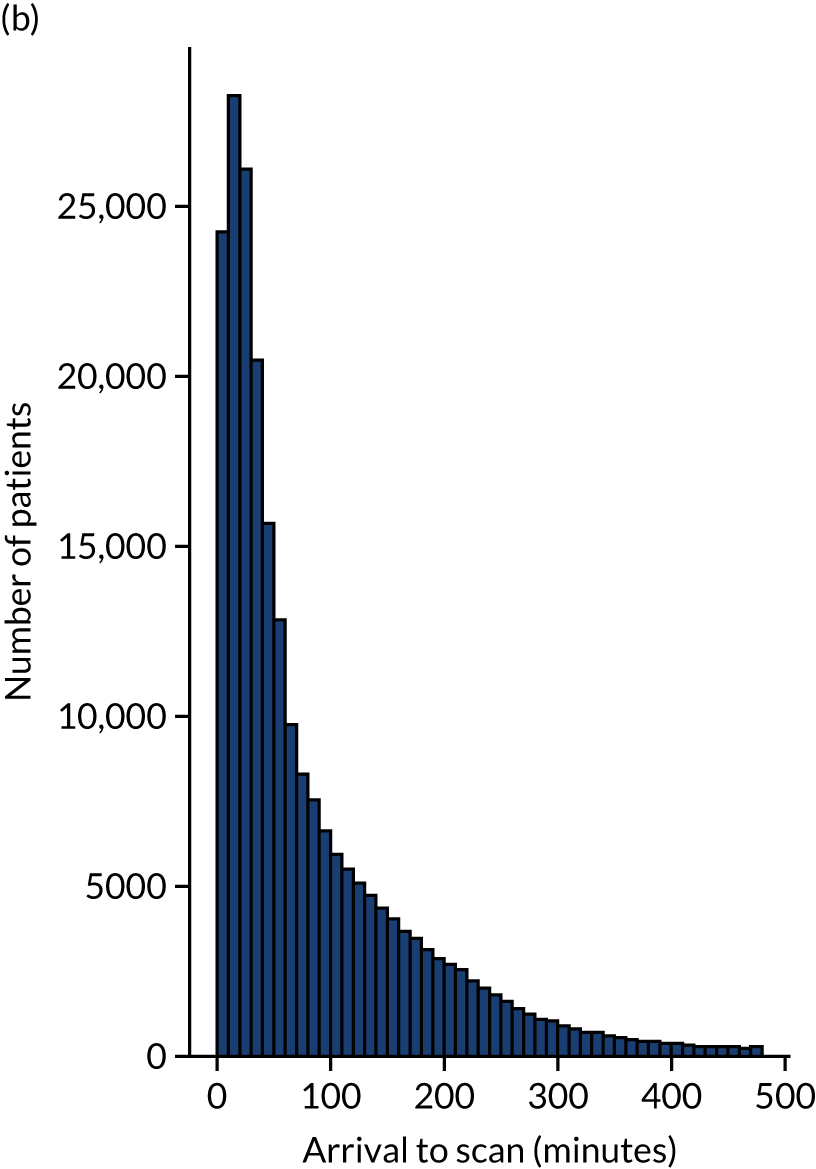

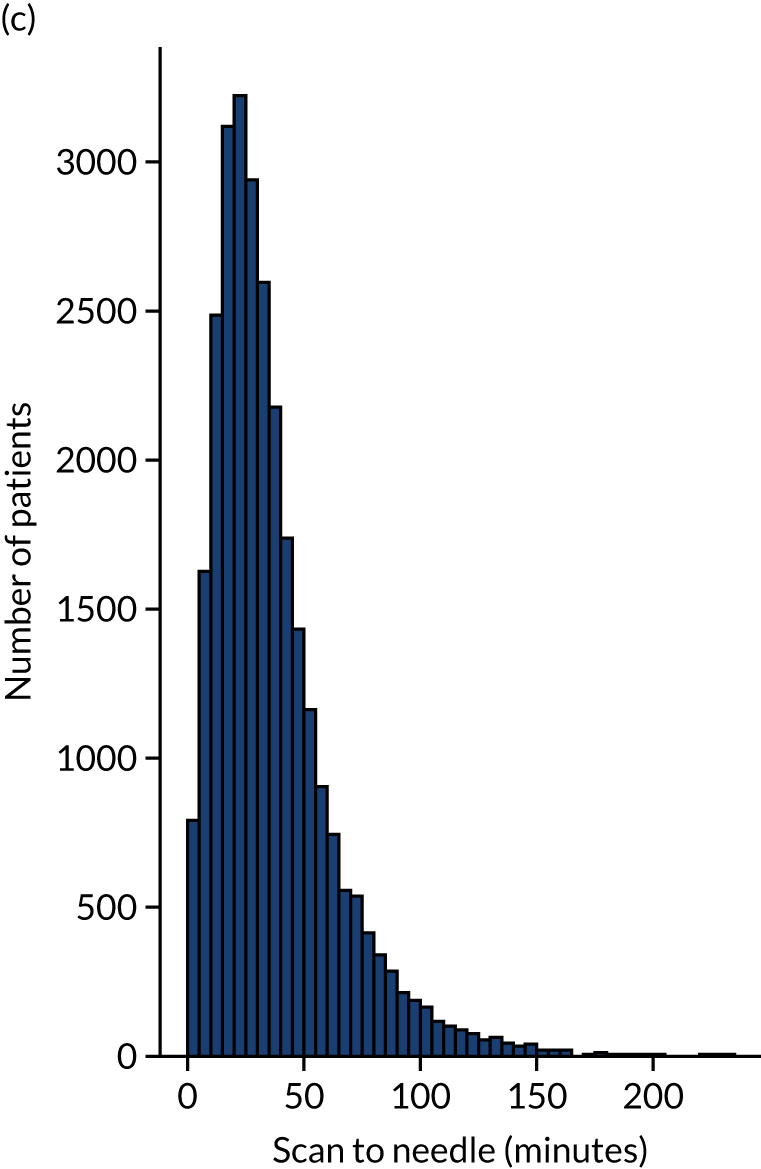

stroke pathway timing distribution, visualising distributions for onset to arrival, arrival to scan and scan to needle

-

covariance between all feature pairs.

Detailed code and results are available online. 29

Key findings in this section

Some key general statistics include the following:

-

Between 2016 and 2018, there were 239,505 admissions to 132 stroke teams that had received at least 300 admissions and provided thrombolysis to at least 10 patients over the course of 3 years (2016–18).

-

A total of 5.3% of patients had an in-hospital onset of stroke, 12.3% of whom received thrombolysis.

-

A total of 94.7% of patients had an out-of-hospital onset of stroke, 11.8% of whom received thrombolysis.

-

There was generally little covariance between features, with 96% of feature pairs having a R2 of < 0.1.

The following statistics apply to patients with an out-of-hospital onset of stroke only:

-

A total of 43% of patient arrivals were aged ≥ 80 years.

-

A total of 67% of all patients had a determined stroke time of onset, 60% of whom arrived within 4 hours of known stroke onset. This equates to 40% of all arrivals arriving within 4 hours of known stroke onset.

The following statistics apply to patients with an out-of-hospital onset of stroke who also arrived within 4 hours of known stroke onset:

-

The mean onset-to-arrival time was 111 minutes.

-

Most (95%) patients underwent scanning within 4 hours of arrival, with a mean arrival-to-scan time of 43 minutes.

-

Among patients who underwent scanning within 4 hours of known stroke onset, 30% received thrombolysis.

-

Overall, 11.8% of patients received thrombolysis.

-

The mean scan-to-needle time was 40 minutes, the mean arrival-to-needle time was 63 minutes and the mean onset-to-needle time was 158 minutes.

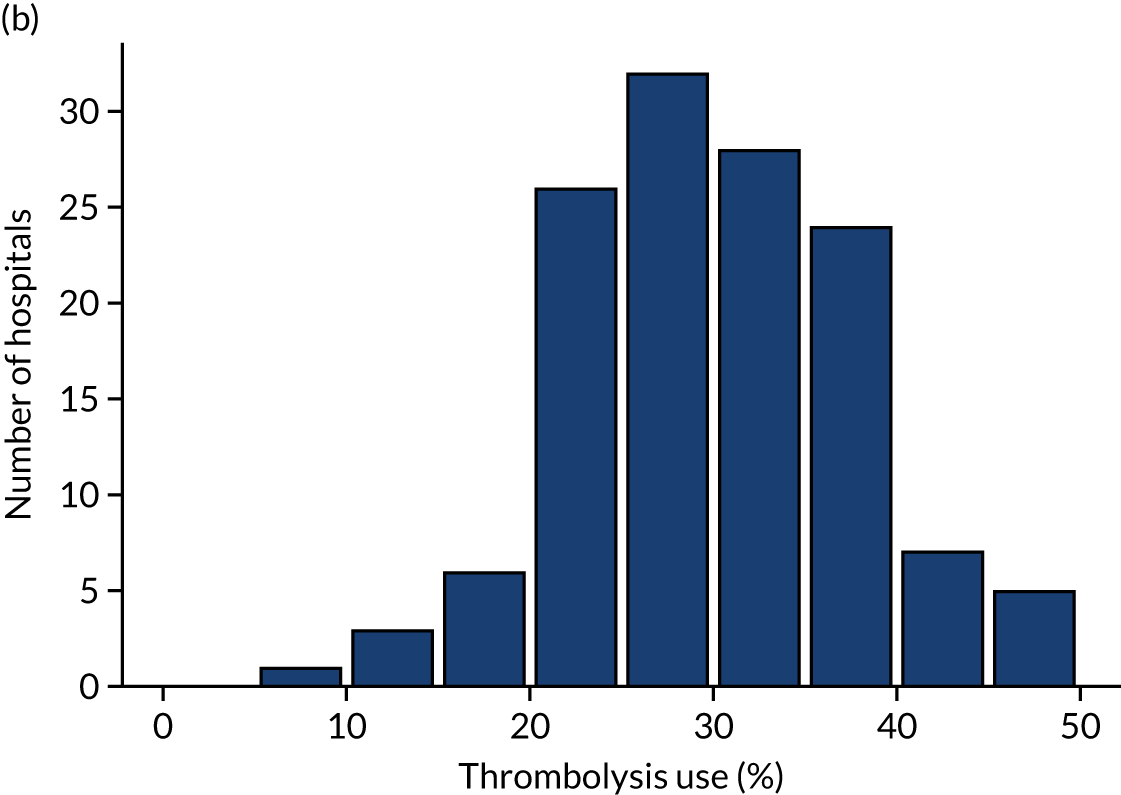

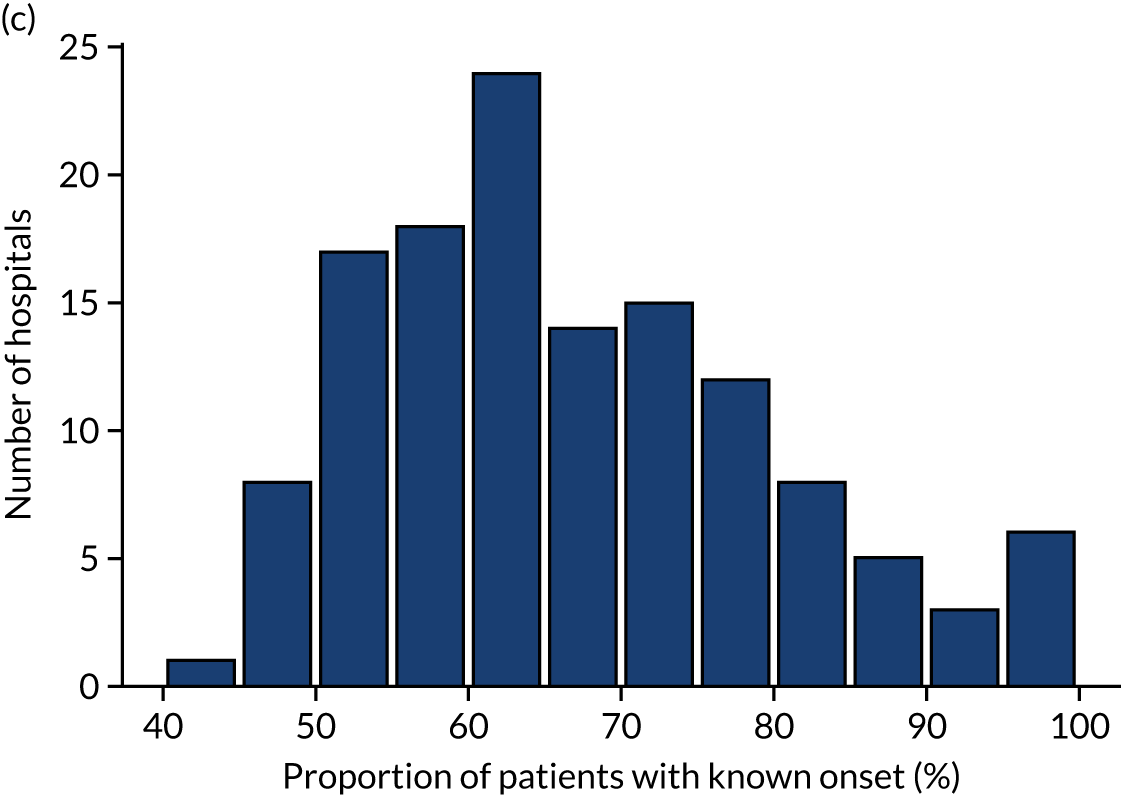

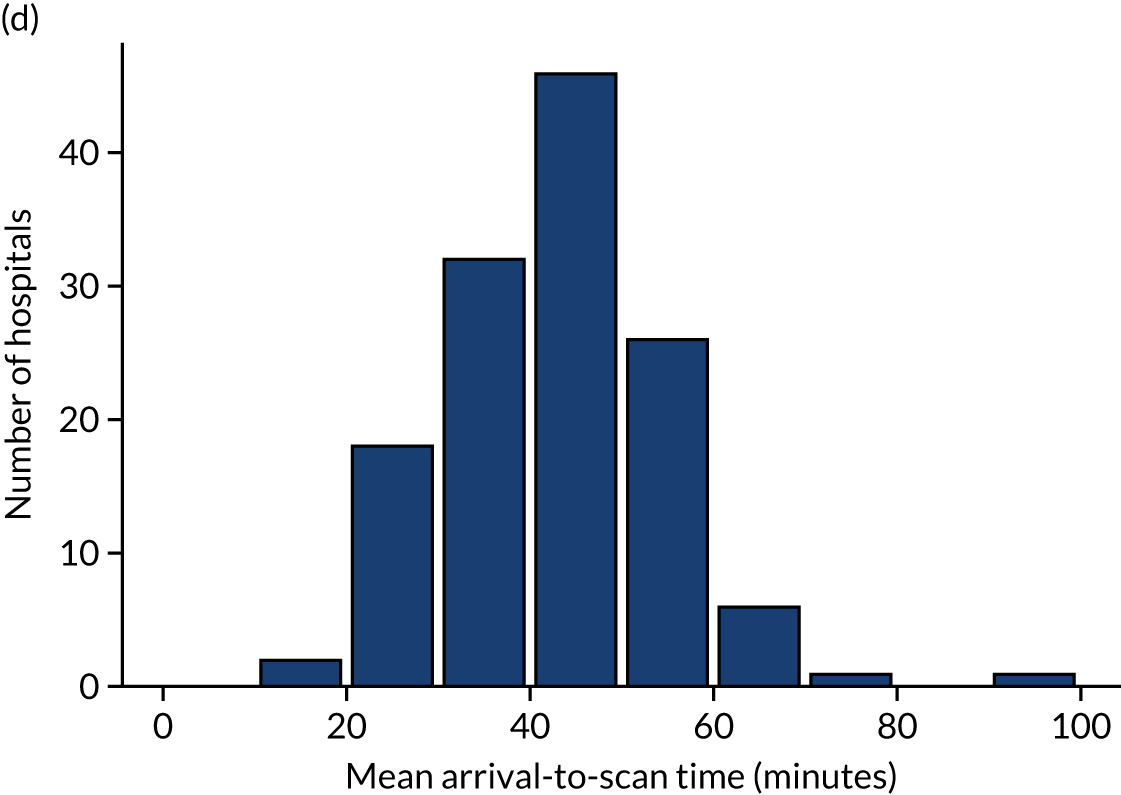

Statistics on inter-hospital variation include the following:

-

Thrombolysis use varied from 1.5% to 24.3% of all patients and from 7.3% to 49.7% of patients arriving within 4 hours of known stroke onset.

-

The proportion of patients with a determined stroke onset time ranged from 34% to 99%.

-

The proportion of patients arriving within 4 hours of known stroke onset ranged from 22% to 56%.

-

The proportion of patients scanned within 4 hours of arrival ranged from 85% to 100%.

-

Mean arrival-to-scan time (for those arriving within 4 hours of known stroke onset and scanned within 4 hours of arrival) ranged from 19 to 93 minutes.

-

Mean arrival-to-needle time varied from 26 to 111 minutes.

-

The proportion of patients aged ≥ 80 years varied from 29% to 58%.

-

The mean NIHSS score (i.e. stroke severity) ranged from 6.1 to 11.7.

Statistics on differences by age group (i.e. patients aged < 80 years vs. ≥ 80 years) and gender include the following:

-

A total of 10.1% of arrivals aged ≥ 80 years received thrombolysis (compared with 13.0% of arrivals aged < 80 years). There was a steady decline in use of thrombolysis over the age of 55 years.

-

A total of 39% of arrivals aged ≥ 80 years arrived within 4 hours of known stroke onset (compared with 40% of arrivals aged < 80 years).

-

The mean disability (mRS) score before stroke was 1.7 among patients aged ≥ 80 years (compared with 0.6 among patients aged < 80 years).

-

The mean stroke severity (NIHSS) score on arrival was 10.7 for patients aged ≥ 80 years (compared with 8.2 for patients aged < 80 years).

-

Of the patients scanned within 4 hours, 26.3% of those aged ≥ 80 years received thrombolysis (compared with 34.7% of those aged < 80 years).

-

Of the patients arriving within 4 hours of known stroke onset, 30.8% of all male arrivals received thrombolysis (compared with 28.2% of all female arrivals).

Statistics on ethnicity include the following:

-

There was a weak relationship between ethnicity and thrombolysis use, with 10.2% of black people receiving thrombolysis, compared with 11.7% of white people. This difference was mostly explained by a lower proportion of black people arriving within 4 hours of known stroke onset.

Statistics on the relationship between clinical features and use of thrombolysis include the following:

-

Use of thrombolysis fell as disability (mRS) score before stroke increased.

-

Use of thrombolysis was low for low stroke severity on arrival and then increased and reached a plateau (NIHSS score 6–25) before falling at higher stroke severity on arrival.

-

The presence or absence of comorbidities could be a strong indicator of the use of thrombolysis. For example, patients on anticoagulant therapies were less likely to receive thrombolysis than those who were not on anticoagulant therapies, but patients on antiplatelet therapies were more likely to receive thrombolysis than those who were not on antiplatelet therapies.

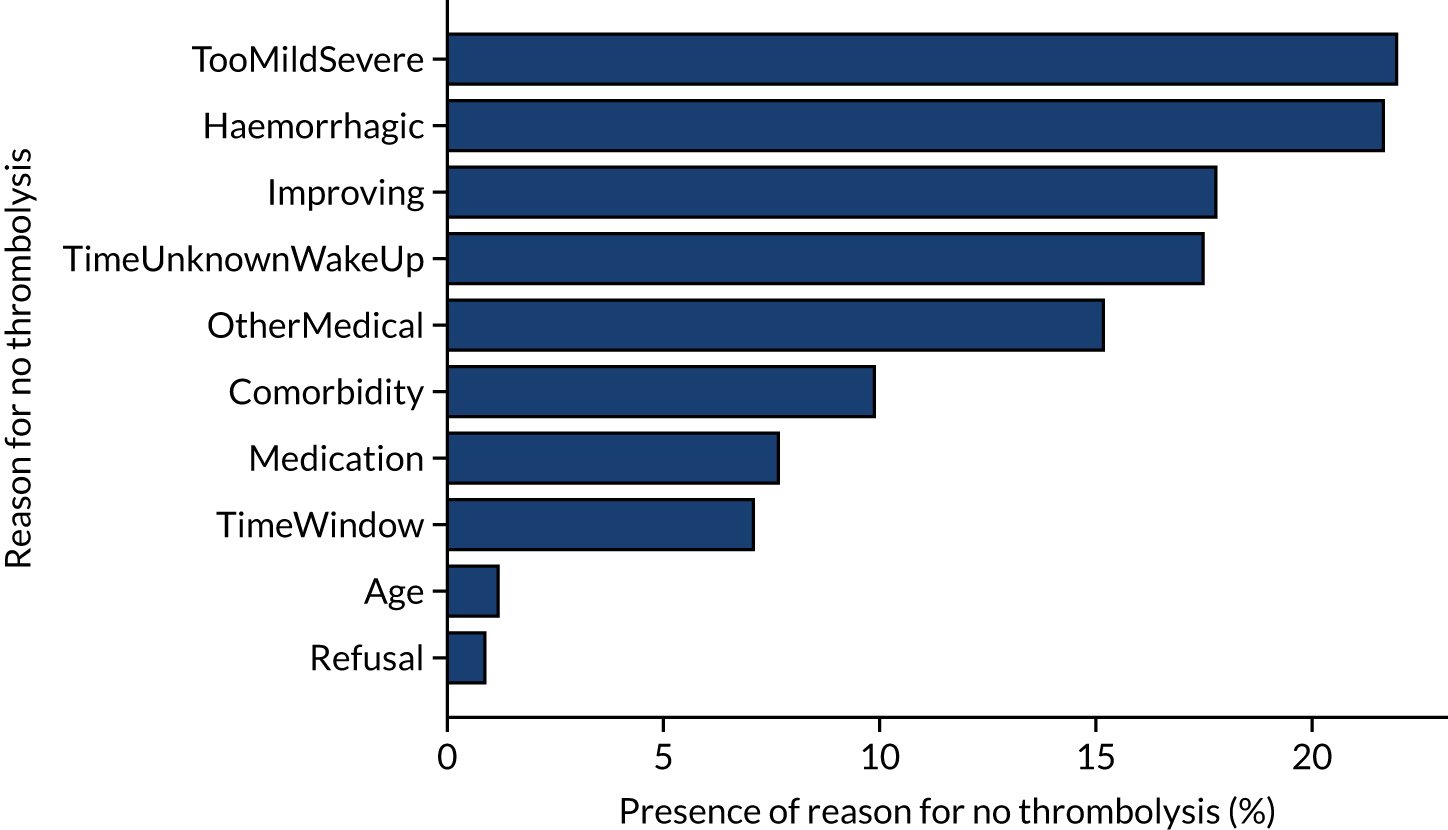

The three most common reasons stated for not giving thrombolysis were:

-

stroke was too mild/severe

-

haemorrhagic stroke

-

patient improving.

Statistics on the relationship of time of day and day of week to use of thrombolysis include the following:

-

Nationally, there was a significant fall in the use of thrombolysis among patients arriving between 3 a.m. and 6 a.m. (with about 6% of those arriving between 3 a.m. and 6 a.m. receiving thrombolysis, compared with 11–13% of patients arriving at other times of the day); however, patients arriving during this period accounted for only about 3% of all arrivals (compared with the 12.5% that would be expected if the arrival rate were uniform).

-

Nationally, there was a weak relationship between day of week and use of thrombolysis, with thrombolysis use ranging from 11.2% to 12.6% by day of week (increasing Monday through to Sunday).

General descriptive statistics stratified by age group

Table 1 shows general statistics for those patients with an out-of-hospital onset of stroke (i.e. 94.7% of all patients), stratified by age group (patients aged ≤ 80 years vs. aged ≥ 80 years).

| Pathway parameter | All | Age group (years) | |

|---|---|---|---|

| < 80 | ≥ 80 | ||

| Thrombolysis use (%) | 11.8 | 13.0 | 10.1 |

| Admissions/year, n | 75,607 | 44,134 | 31,473 |

| Aged ≥ 80 years (%) | 42.3 | 0.0 | 100.0 |

| mRS score before stroke | 1.0 | 0.7 | 1.6 |

| NIHSS score on arrival | 7.5 | 6.4 | 9.1 |

| Onset determined (%) | 66.9 | 68.2 | 65.0 |

| Known stroke onset arriving within 4 hours (%) | 58.6 | 56.8 | 61.3 |

| All arriving within 4 hours (%) | 39.2 | 38.7 | 39.9 |

| mRS score before stroke (4-hour arrivals)a | 1.1 | 0.6 | 1.7 |

| NIHSS score on arrival (4-hour arrivals)a | 9.2 | 8.2 | 10.7 |

| Mean onset-to-arrival time (minutes)a | 111.4 | 109.3 | 114.3 |

| SD onset-to-arrival time (minutes)a | 52.7 | 52.8 | 52.4 |

| Receiving scan within 4 hours of onset (%)a | 94.8 | 95.0 | 94.5 |

| Mean arrival-to-scan time (minutes)b | 42.1 | 41.6 | 42.8 |

| SD arrival-to-scan time (minutes)b | 44.8 | 44.6 | 45.1 |

| Receiving thrombolysis (%)b | 31.1 | 34.7 | 26.3 |

| Mean scan-to-needle time (minutes) | 36.0 | 36.5 | 35.0 |

| SD scan-to-needle time (minutes) | 28.4 | 29.3 | 26.6 |

| Mean arrival-to-needle time (minutes) | 58.8 | 60.0 | 56.7 |

| SD arrival-to-needle time (minutes) | 35.2 | 36.7 | 32.2 |

| Mean onset-to-needle time (minutes) | 155.8 | 156.5 | 154.5 |

| SD onset-to-needle time (minutes) | 54.0 | 55.2 | 51.8 |

| Thrombolysis given at > 180 minutes (%) | 29.5 | 30.3 | 28.0 |

| Thrombolysis given at > 240 minutes (%) | 1.7 | 1.8 | 1.4 |

Relationship between patient features and use of thrombolysis

In this section, we examine the relationship between particular features and the use of thrombolysis.

Note that the association of particular features with use of thrombolysis does not imply that these relationships are necessarily causal.

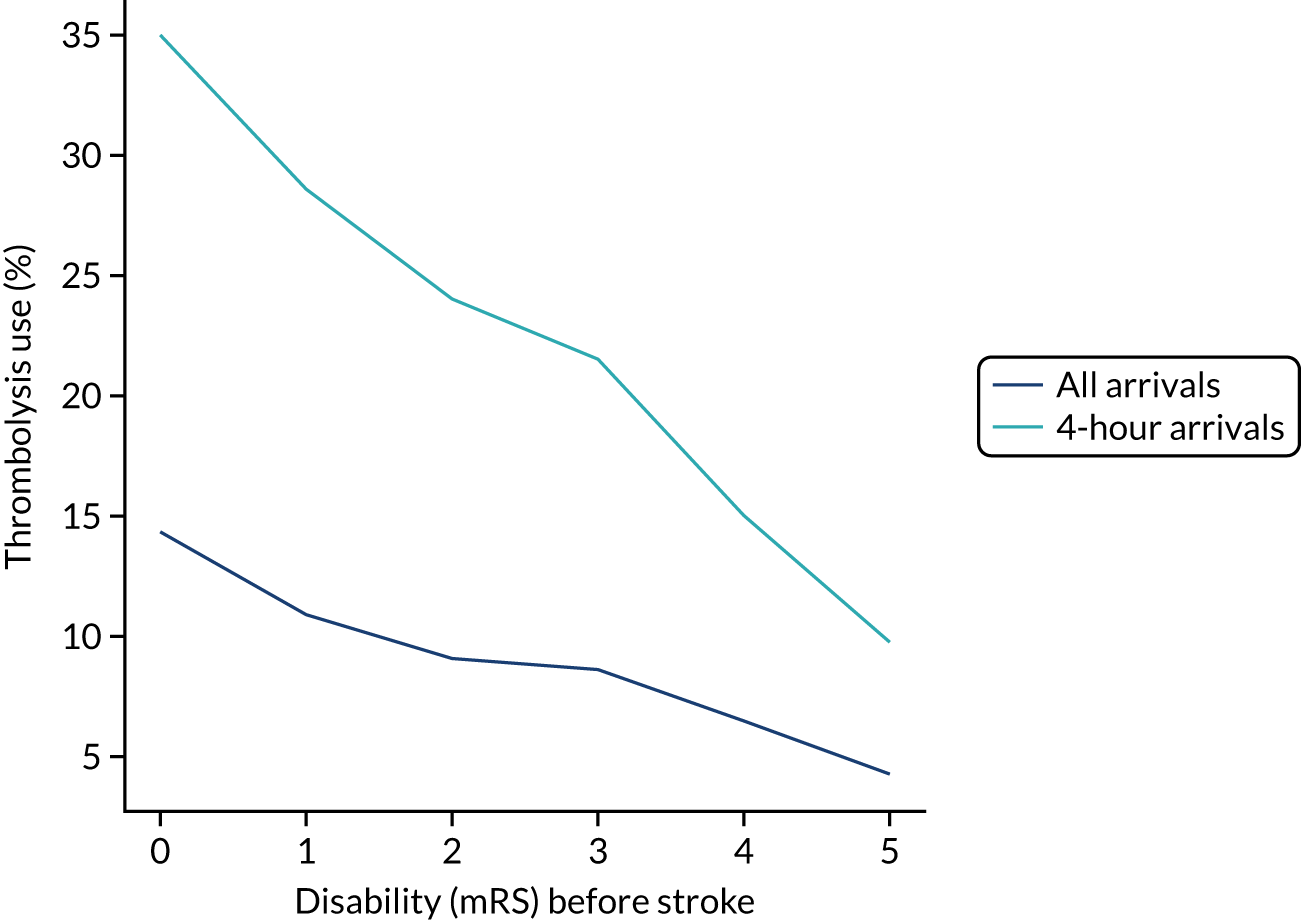

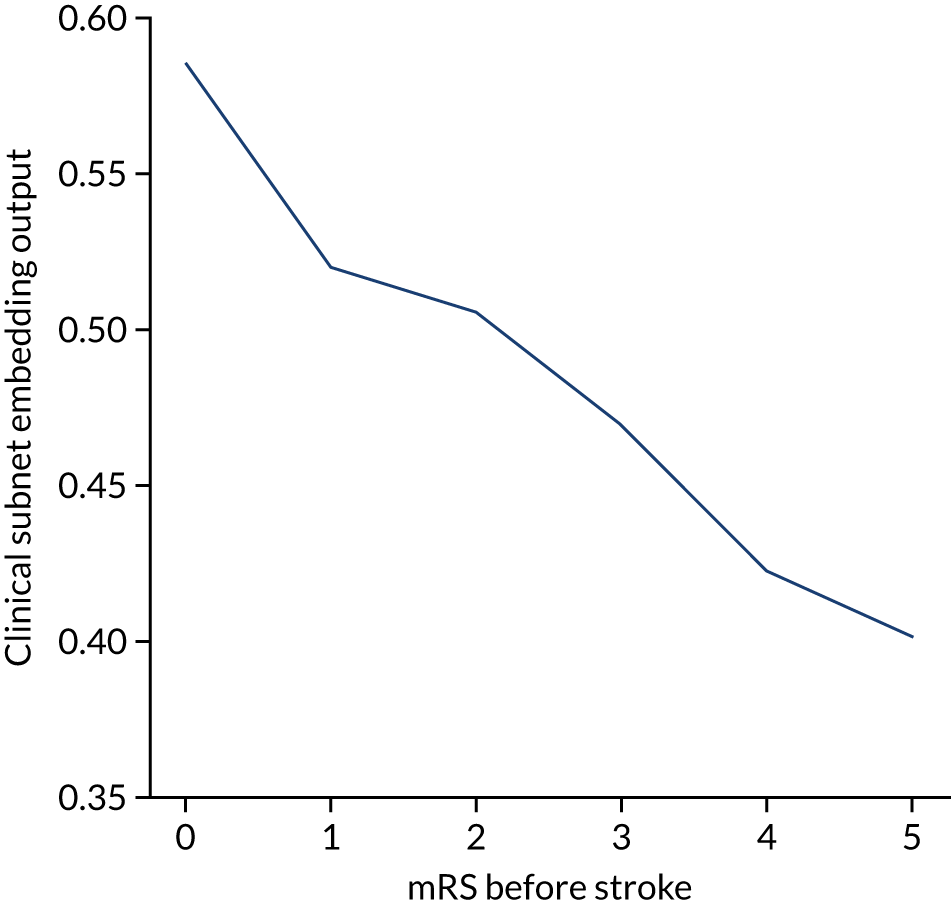

Disability before stroke

The higher the level of disability before stroke (as described by the mRS) then the lower the use of thrombolysis (Figure 2). As a percentage of arrivals with an out-of-hospital onset of stroke, thrombolysis use dropped from 14.2% in those with no disability before stroke to 4.3% in those with a mRS score of 5. As a percentage of arrivals with an out-of-hospital onset of stroke who arrive within 4 hours of known stroke onset, thrombolysis use dropped from 34.9% in those with no disability before stroke to 9.8% in those with a mRS score of 5.

FIGURE 2.

Thrombolysis use by disability before stroke: mRS. The dark blue line shows thrombolysis use in all arrivals, and the light blue line shows thrombolysis use in those arriving within 4 hours of known stroke onset.

Stroke severity on arrival

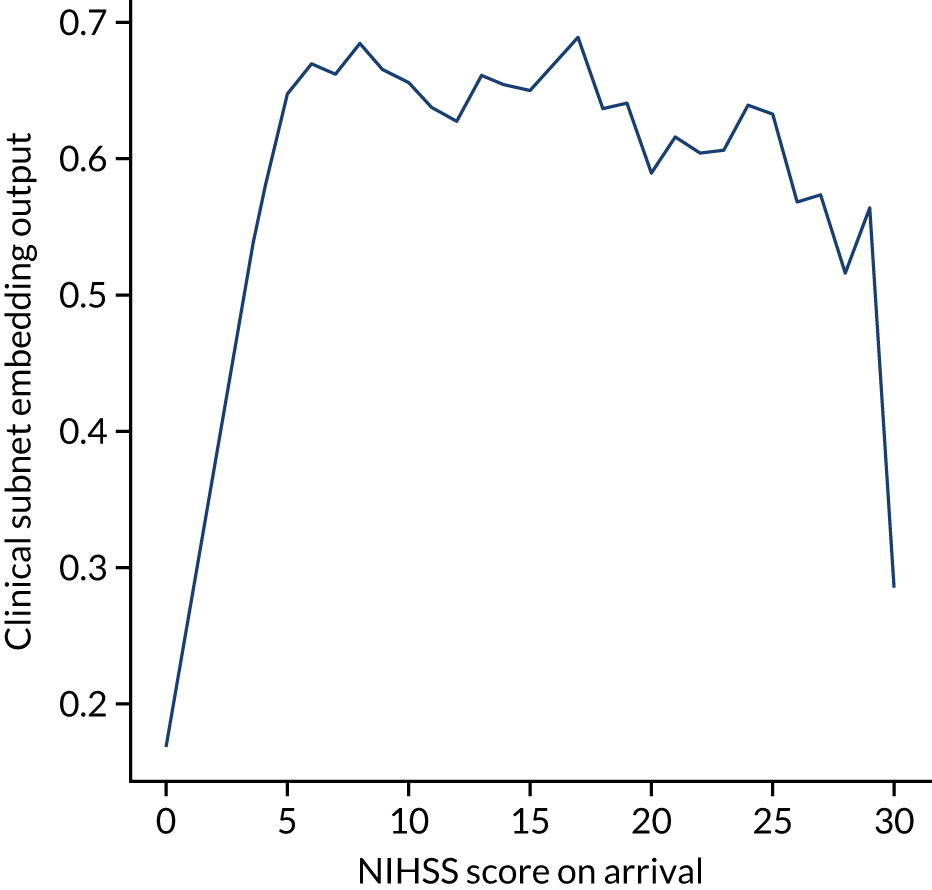

The use of thrombolysis varied very significantly with stroke severity (i.e. NIHSS score) on arrival (Figure 3). Thrombolysis use was very low at extreme NIHSS scores, with a plateau of use at NIHSS scores of approximately 6–25.

FIGURE 3.

Thrombolysis use by stroke severity on arrival: NIHSS. The dark blue line shows thrombolysis use in all arrivals, and the light blue line shows thrombolysis use in those arriving within 4 hours of known stroke onset.

Gender

There was a weak relationship between gender and use of thrombolysis. A total of 12.3% of all male arrivals received thrombolysis, compared with 11.3% of all female arrivals. Among patients arriving within 4 hours of known stroke onset, 30.8% of all males received thrombolysis, compared with 28.2% of all females.

Ethnicity

There was a weak relationship between ethnicity and thrombolysis use in all arrivals (Table 2), with use of thrombolysis being lowest in black people, but use of thrombolysis showed little variation with ethnicity among those arriving within 4 hours of known stroke onset, suggesting that the cause of lower use of thrombolysis was likely to be the lower proportion of black people arriving at hospital within 4 hours of known stroke onset.

| Ethnicity | Thrombolysis use (%) | |

|---|---|---|

| All arrivals | Arrivals within 4 hours of known stroke onset | |

| Asian | 12.1 | 30.0 |

| Black | 10.2 | 29.2 |

| Mixed | 11.7 | 32.3 |

| White | 11.6 | 29.1 |

Age

Thrombolysis use declined with age (Figure 4). Among patients aged 25–55 years, thrombolysis was used in about 15% of all patients and in about 35% of those arriving within 4 hours of known stroke onset. Above this age band, there was a decline in use; for example, in the age band 85–89 years, thrombolysis was used in about 10% of all patients and in 25% of patients arriving within 4 hours of known stroke onset.

FIGURE 4.

Thrombolysis use by age band (5-year bands). The dark blue line shows thrombolysis use in all arrivals, and the light blue line shows thrombolysis use in those arriving within 4 hours of known stroke onset.

Knowledge of time of stroke onset

Knowledge of time of onset was split almost evenly (33–34% each) among ‘not known’, ‘best estimate’ and ‘precise’. Type of knowledge of stroke onset showed a significant association with use of thrombolysis (Table 3). Of patients arriving within 4 hours of known onset, 39.0% received thrombolysis if the time was recorded as being known precisely, compared with 14.0% receiving thrombolysis if the time was a best estimate.

| Knowledge of time of onset | Thrombolysis use (%) | |

|---|---|---|

| All arrivals | Arrivals within 4 hours of known stroke onset | |

| Not known | 0.4 | NA |

| Best estimate | 6.3 | 14.0 |

| Precise | 28.8 | 39.0 |

Arrival mode

A total of 8.4% of patients arrived by ambulance. There was a significant association between mode of arrival and use of thrombolysis. Use of thrombolysis was 13.7% among patients arriving by ambulance and 3.8% among those arriving by other means.

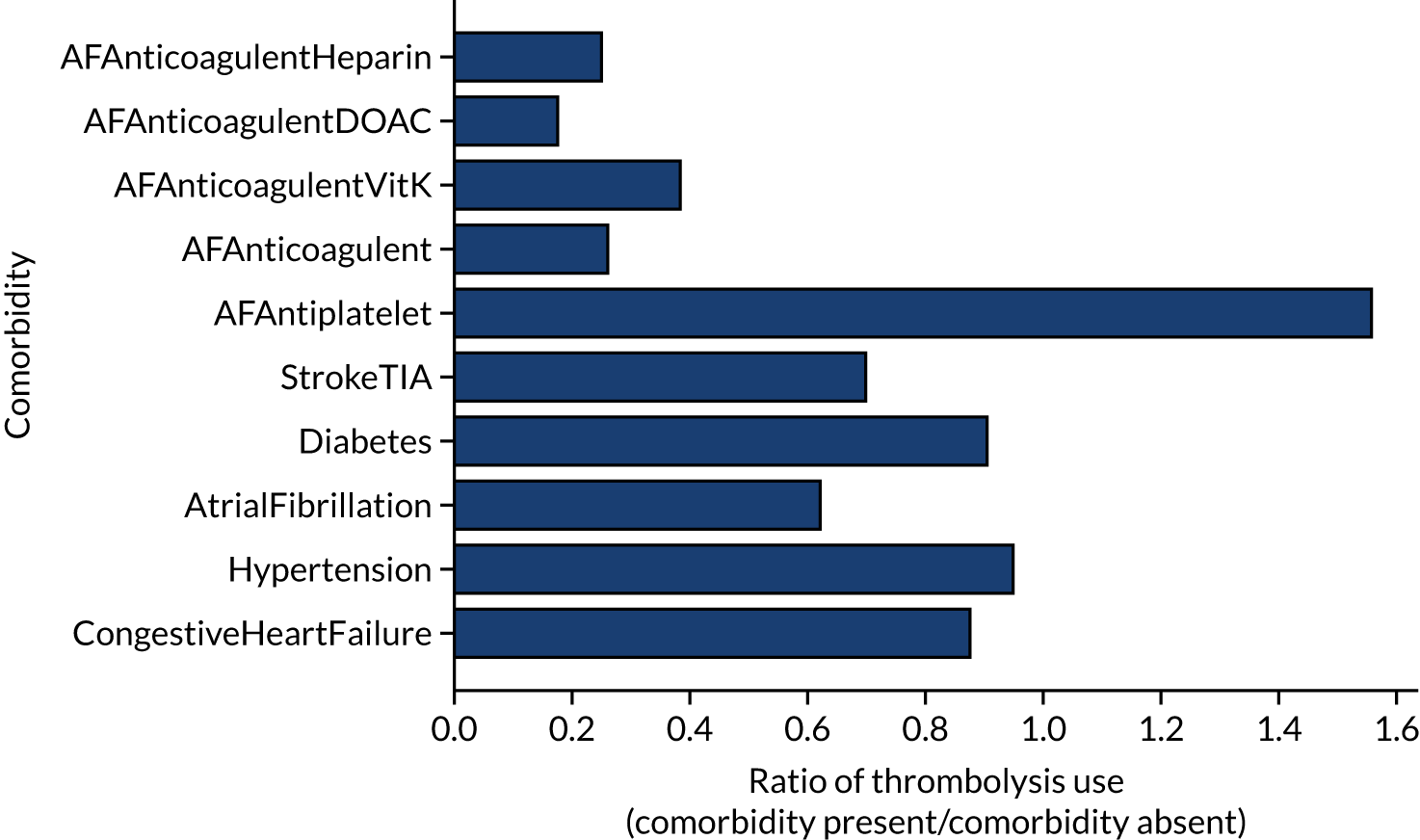

Comorbidities

The presence or absence of comorbidities could be a strong indicator of the use of thrombolysis (Figure 5). For example, patients on anticoagulant therapies were less likely than patients who were not on anticoagulant therapies to receive thrombolysis (by ratios of 0.18–0.39, depending on the type of anticoagulant), whereas those on antiplatelet therapies were more likely than those not on antiplatelet therapies to receive thrombolysis (by a ratio of 1.56).

FIGURE 5.

The relationship between the presence of comorbidities and the use of thrombolysis. The results show the ratio of patients treated with the comorbidity present to the use when the comorbidity is absent. AF, atrial fibrillation; DOAC, direct oral anticoagulant; TIA, transient ischaemic attack.

Stated reasons for not giving thrombolysis

Figure 6 shows the reasons given for not giving thrombolysis (note that more than one reason may be given). Haemorrhagic stroke or a stroke that is too mild/severe were the most common causes (each present for 22% of patients not treated). An improving condition was given as the reason for no treatment in 17% of non-treated patients. It is noteworthy that patient refusal was given as a reason for non-treatment in only 0.9% of untreated patients.

FIGURE 6.

Indicated reasons for not giving thrombolysis among patients who did not receive thrombolysis. Note that more than one reason may be given.

Inter-hospital variation in general descriptive statistics

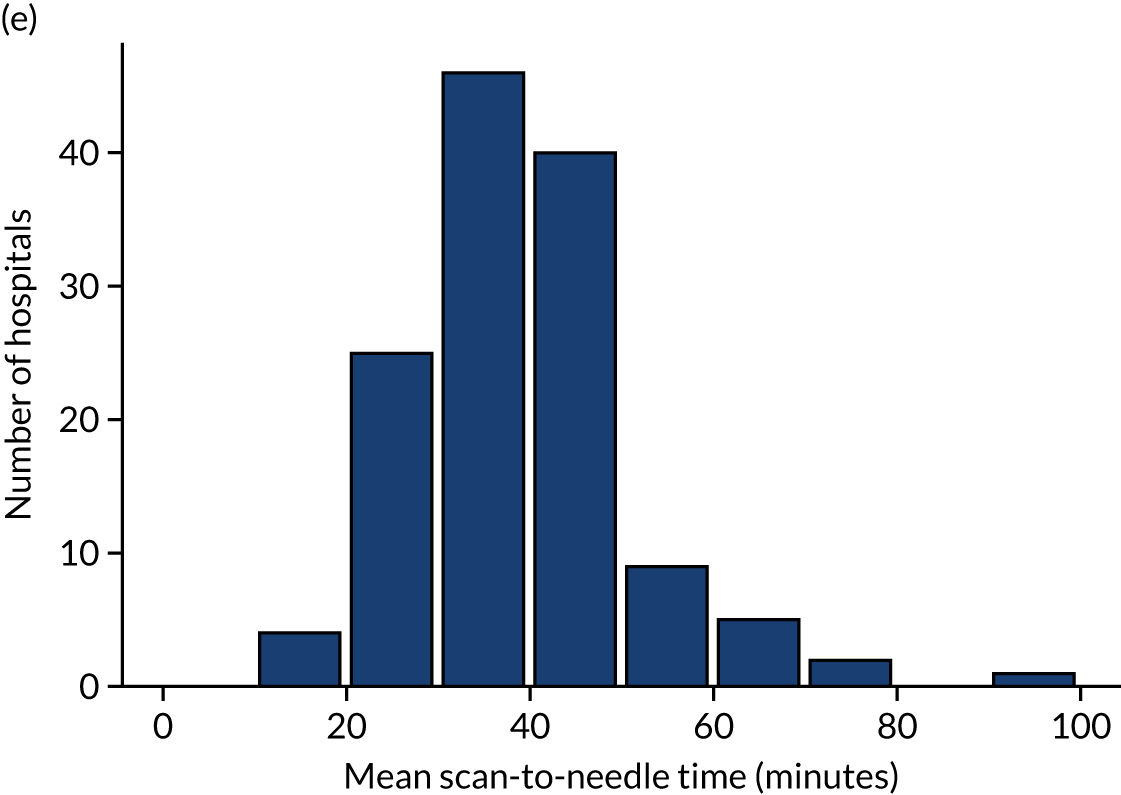

Table 4 shows inter-hospital variation in general descriptive statistics for patients with an out-of-hospital onset of stroke. Distributions of key parameters are also shown as histograms in Figure 7.

| Pathway parameter | Mean | SD | Minimum | First quartile | Median | Third quartile | Maximum |

|---|---|---|---|---|---|---|---|

| Thrombolysis use (%) | 11.5 | 3.5 | 1.5 | 9.3 | 11.0 | 13.3 | 24.3 |

| Admissions/year, n | 573 | 277 | 101 | 378 | 544 | 755 | 2039 |

| Aged ≥ 80 years (%) | 42.6 | 5.6 | 29.2 | 38.7 | 42.8 | 45.8 | 57.6 |

| mRS score before stroke | 1.0 | 0.3 | 0.5 | 0.8 | 1.0 | 1.2 | 1.9 |

| NIHSS score on arrival | 7.4 | 0.9 | 4.5 | 6.7 | 7.4 | 8.1 | 10.3 |

| Onset determined (%) | 66.6 | 13.4 | 34.5 | 57.4 | 64.2 | 75.3 | 98.8 |

| Known onset arriving within 4 hours (%) | 60.1 | 9.0 | 25.7 | 55.8 | 60.9 | 66.2 | 81.4 |

| All arriving within 4 hours (%) | 39.2 | 6.2 | 22.3 | 35.3 | 39.2 | 43.1 | 55.8 |

| mRS score before stroke (4-hour arrivals)a | 1.1 | 0.3 | 0.5 | 0.9 | 1.1 | 1.2 | 1.8 |

| NIHSS score on arrival (4-hour arrivals)a | 9.1 | 1.1 | 6.1 | 8.4 | 9.2 | 9.9 | 11.7 |

| Mean onset-to-arrival time (minutes)a | 110.6 | 6.7 | 90.3 | 106.9 | 110.8 | 115.1 | 132.6 |

| SD onset-to-arrival time (minutes)a | 52.4 | 2.3 | 46.5 | 50.8 | 52.1 | 53.7 | 63.9 |

| Receiving scan within 4 hours of onset (%)a | 94.8 | 3.0 | 84.7 | 93.3 | 95.4 | 96.9 | 100.0 |

| Mean arrival-to-scan time (minutes)b | 43.0 | 11.5 | 18.6 | 35.6 | 42.9 | 50.6 | 92.9 |

| SD arrival-to-scan time (minutes)b | 43.2 | 6.9 | 24.0 | 38.9 | 42.7 | 47.5 | 64.4 |

| Receiving thrombolysis (%)b | 30.1 | 7.8 | 7.3 | 24.9 | 29.3 | 36.0 | 49.7 |

| Mean scan-to-needle time (minutes) | 39.7 | 12.0 | 19.1 | 30.7 | 37.4 | 45.9 | 92.1 |

| SD scan-to-needle time (minutes) | 26.5 | 8.5 | 7.9 | 21.3 | 25.1 | 29.9 | 65.9 |

| Mean arrival-to-needle time (minutes) | 63.5 | 15.1 | 26.5 | 54.5 | 60.0 | 71.7 | 111.4 |

| SD arrival-to-needle time (minutes) | 32.8 | 8.4 | 8.2 | 27.9 | 32.2 | 36.5 | 69.8 |

| Mean onset-to-needle time (minutes) | 158.4 | 13.1 | 127.0 | 149.7 | 156.5 | 166.7 | 189.7 |

| SD onset-to-needle time (minutes) | 52.3 | 5.9 | 39.1 | 48.6 | 51.3 | 54.9 | 83.3 |

| Thrombolysis given at > 180 minutes (%) | 31.0 | 9.3 | 8.0 | 25.0 | 29.0 | 35.6 | 58.8 |

| Thrombolysis given at > 240 minutes (%) | 1.8 | 1.5 | 0.0 | 0.8 | 1.5 | 2.6 | 7.0 |

FIGURE 7.

Histograms of inter-hospital variation in key descriptive statistics for those patients with an out-of-hospital onset of stroke. (a) Thrombolysis use: all admissions; (b) thrombolysis use: arrivals within 4 hours of known onset; (c) proportion of patients with known onset; (d) mean arrival-to-scan time for patients arriving within 4 hours of known onset and receiving scan within 4 hours of arrival; (e) mean scan-to-needle time; and (f) mean arrival-to-needle time.

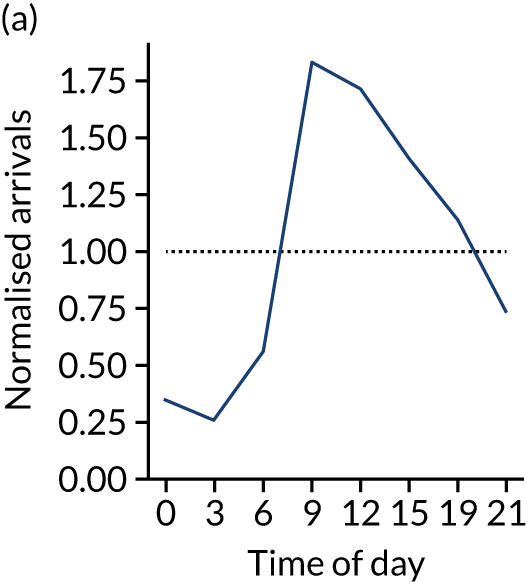

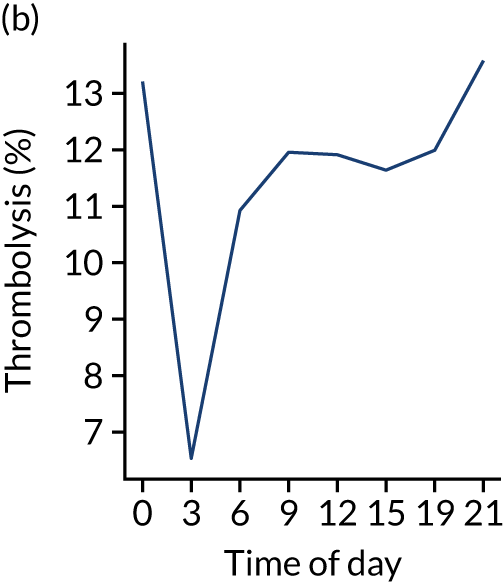

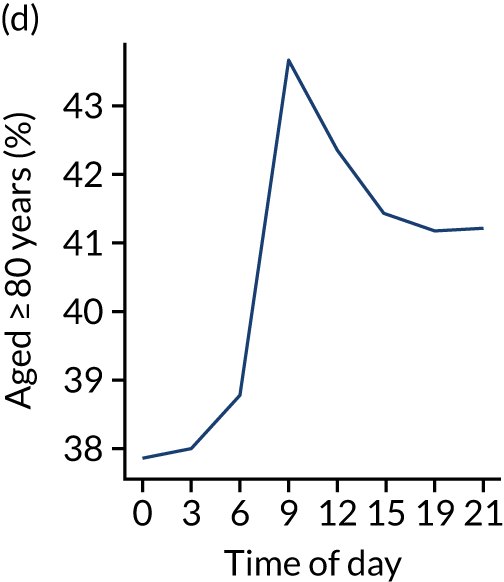

Relationship between time of day and day of week on use of thrombolysis

At a national scale

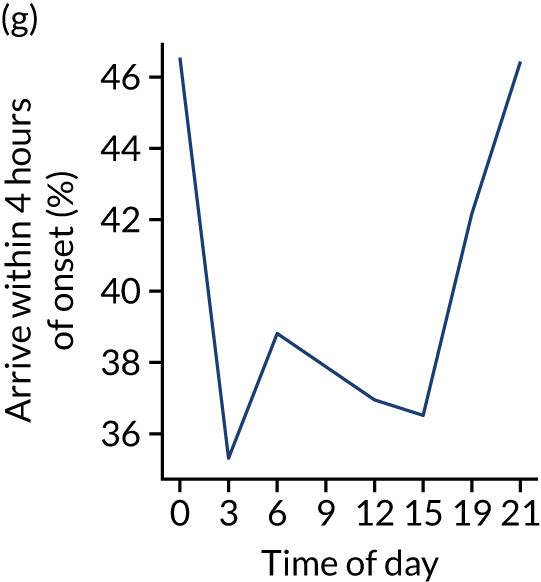

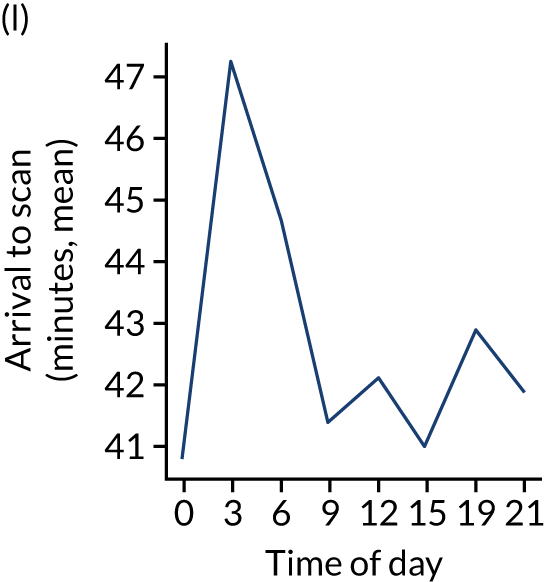

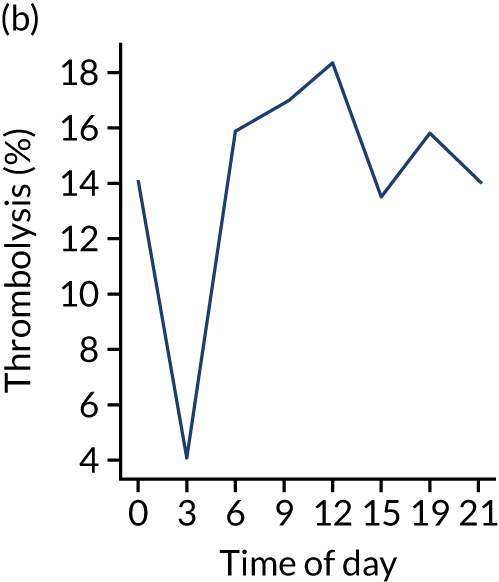

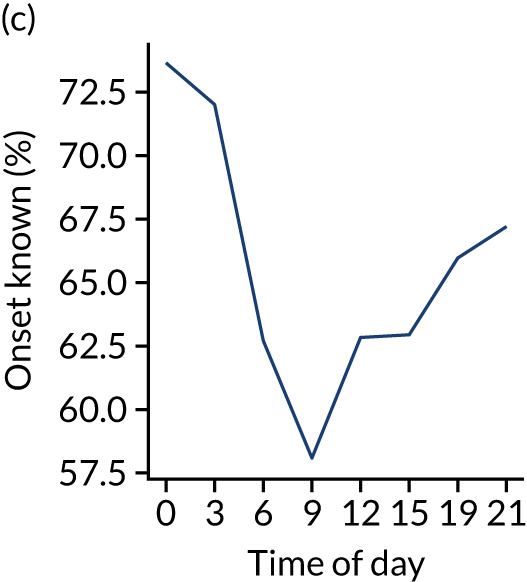

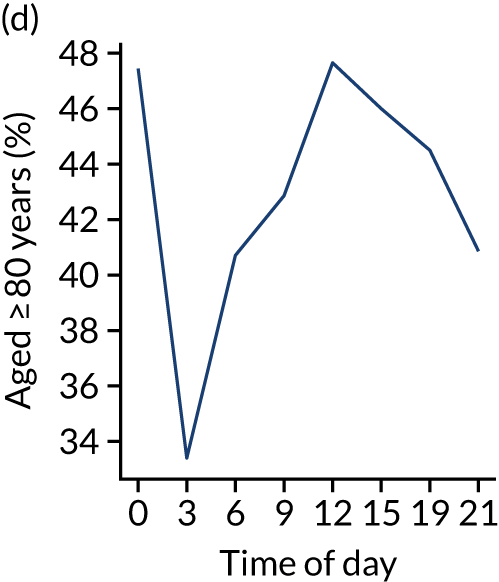

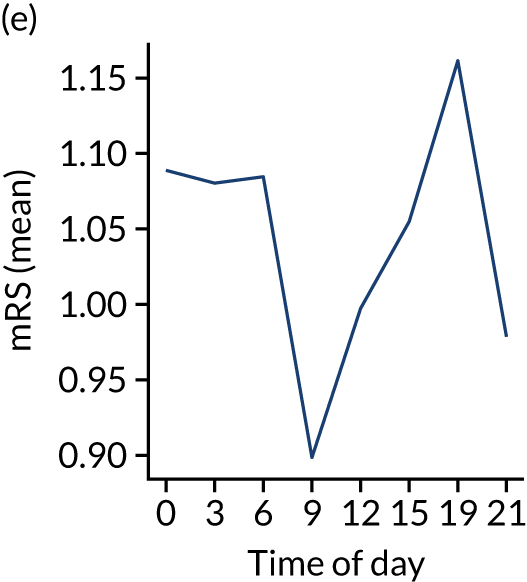

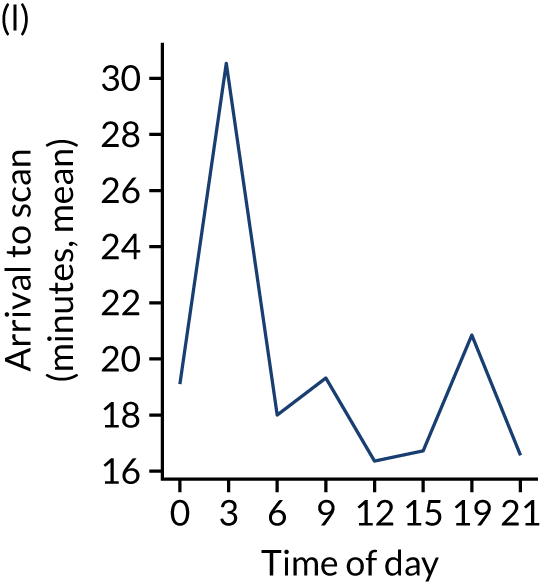

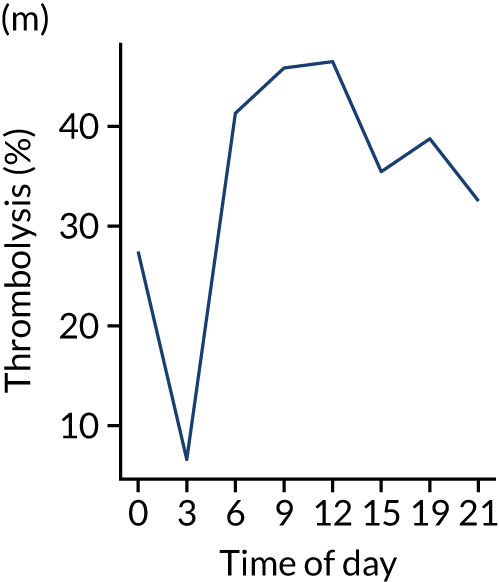

Figure 8 shows changes in key pathway statistics throughout the day, as averaged across all patients. Arrivals peaked between 9 a.m. and 3 p.m., and then dropped steadily to a low between 3 a.m. and 6 a.m. Thrombolysis use was significantly lower in the 3 a.m. to 6 a.m. epoch. At this point, the proportion of patients who arrived within 4 hours of known stroke onset was lower than during the day. Processes appeared a little slower; for example, the arrival-to-scan time was 47 minutes on average, compared with about 42 minutes during the day. The largest coincidental association at this time (i.e. the 3 a.m. to 6 a.m. epoch) was that fewer patients who were scanned within 4 hours of known stroke onset were deemed suitable for thrombolysis (22%, compared with about 35% of patients who arrived later in the day). When considering the performance of the pathway at this time, it may be worth noting that only about 3% of all patients arrived between 3 a.m. and 6 a.m. (and we would expect this to be 12.5% of all arrivals in this time period if there were a uniform arrival rate).

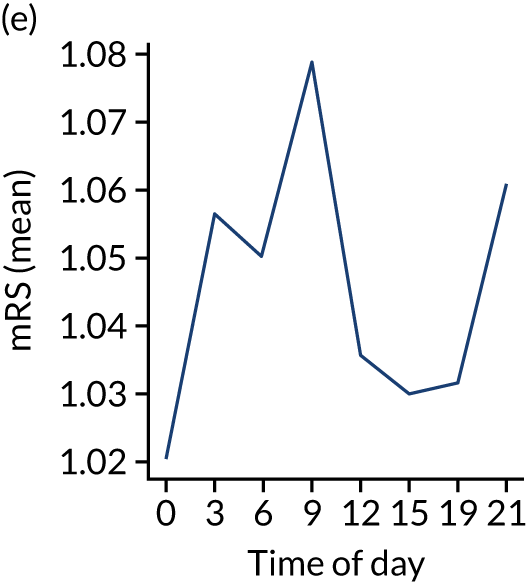

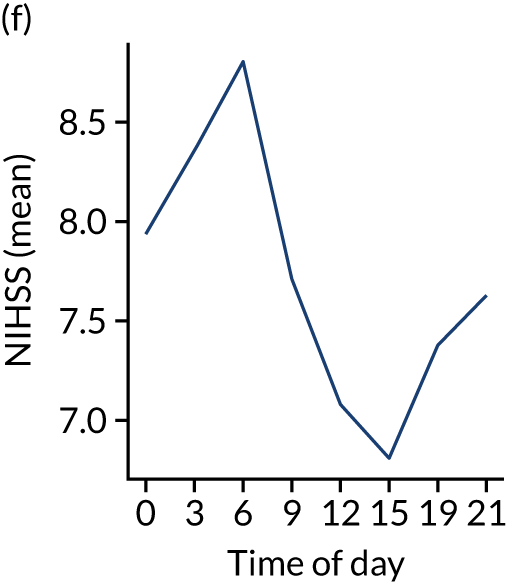

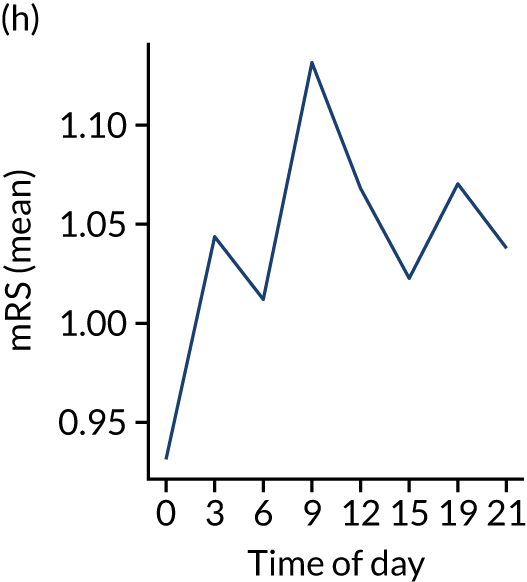

FIGURE 8.

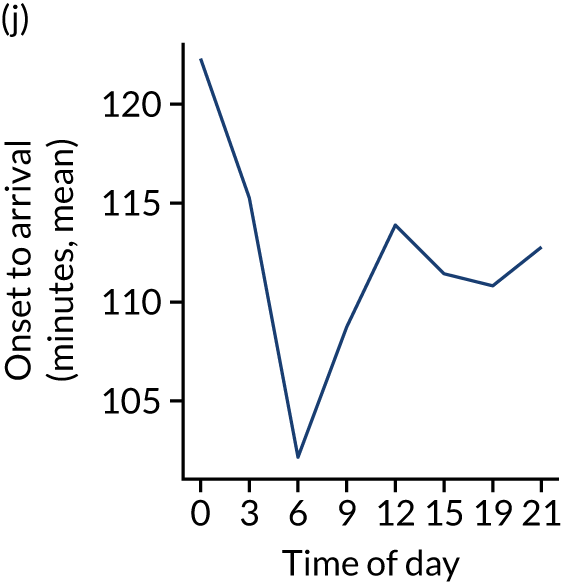

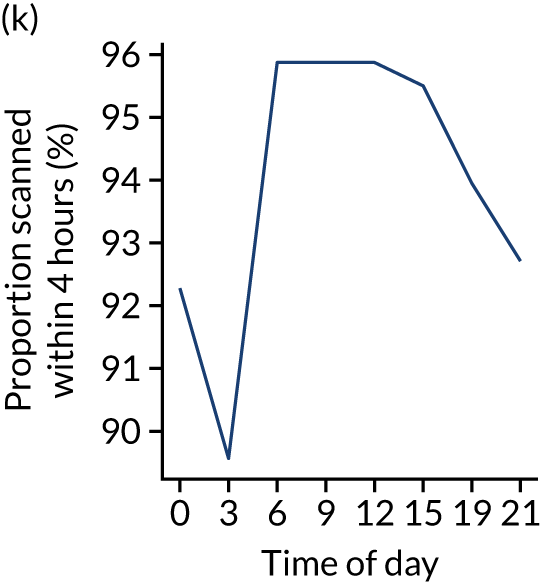

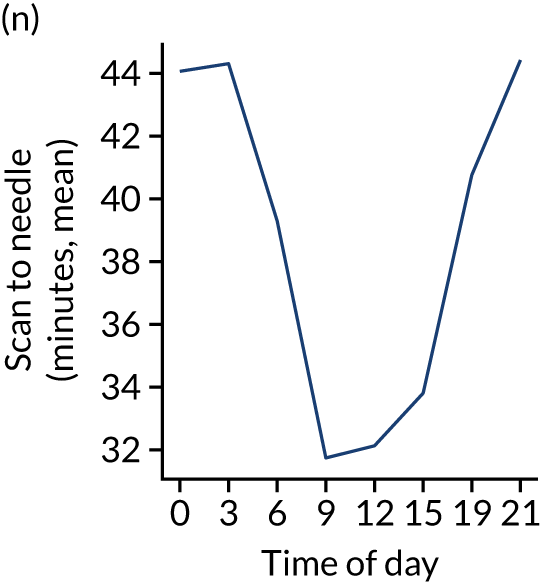

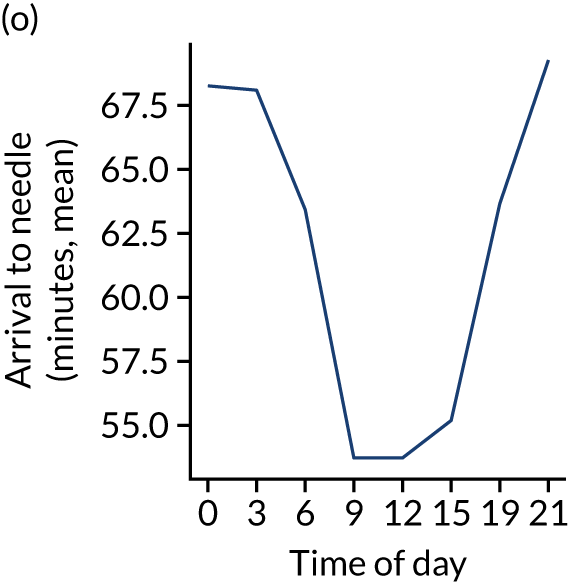

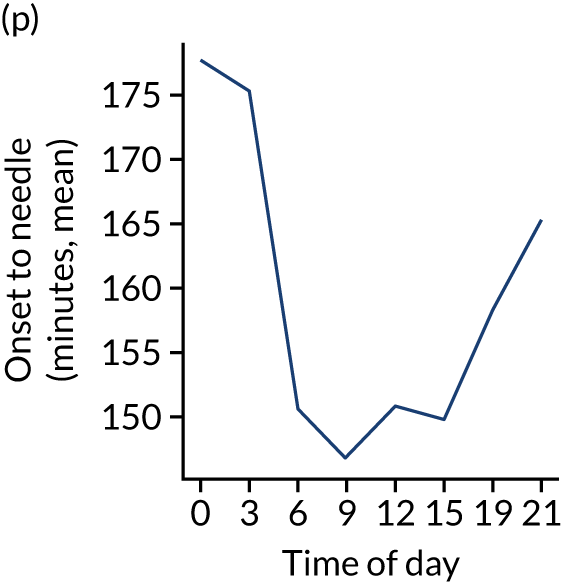

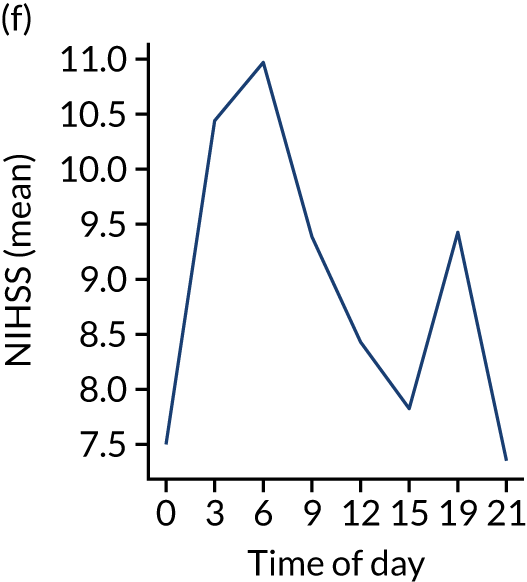

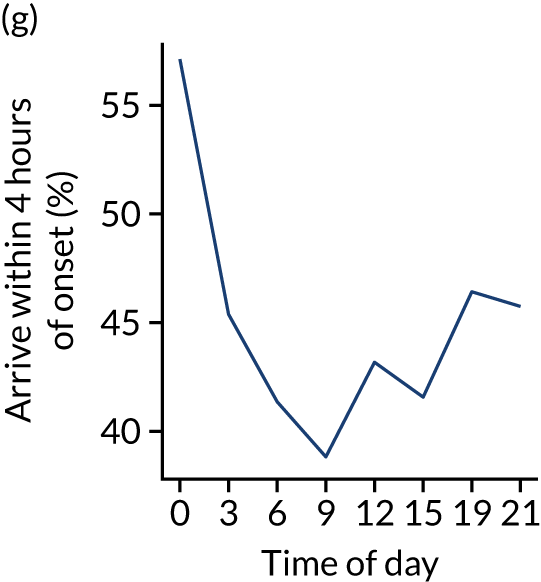

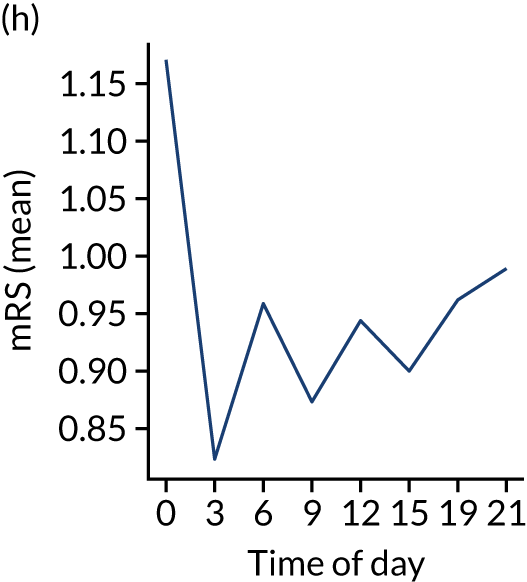

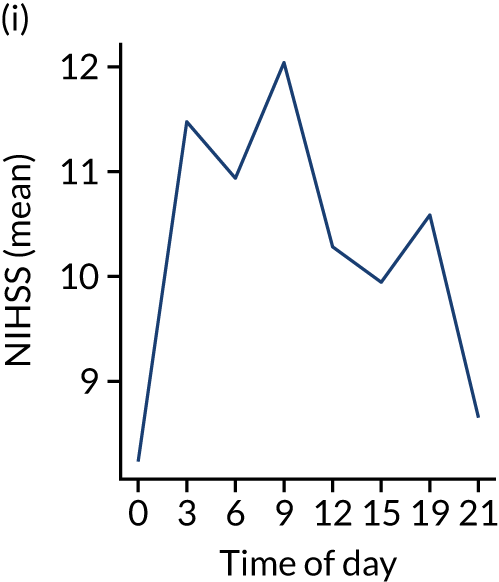

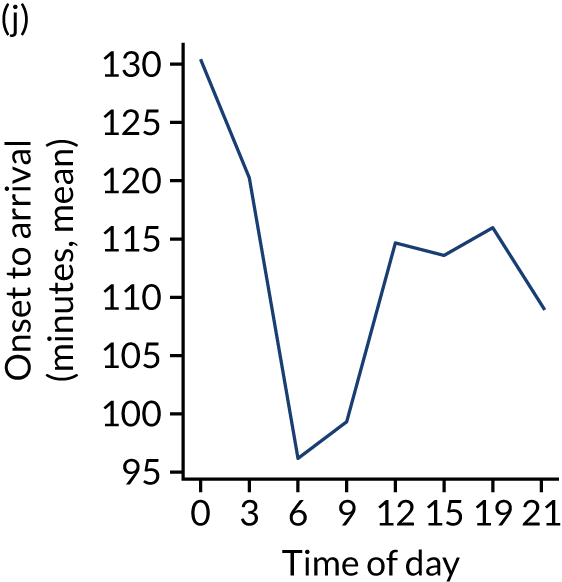

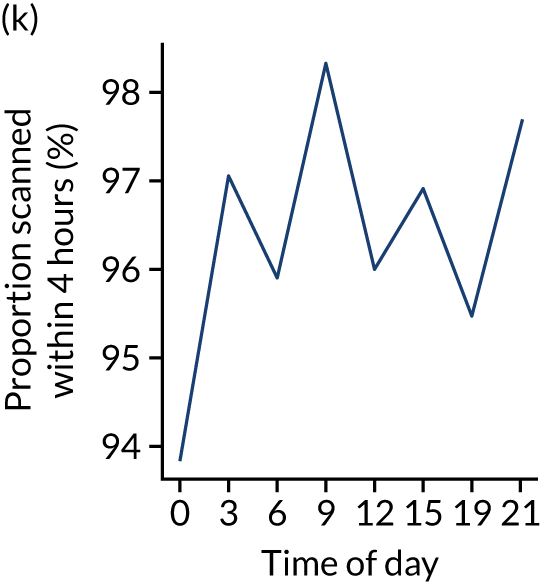

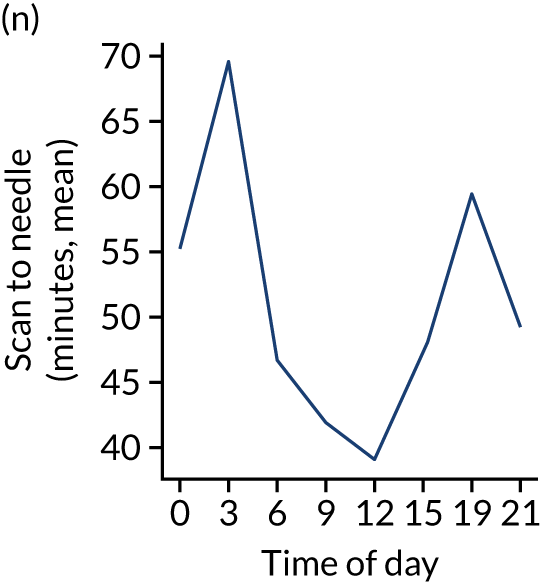

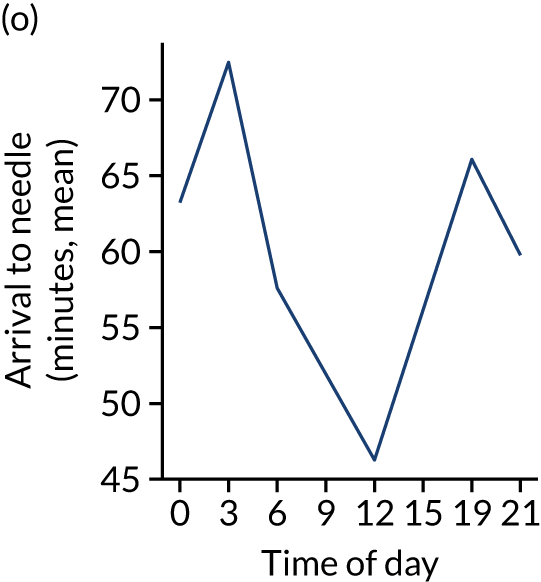

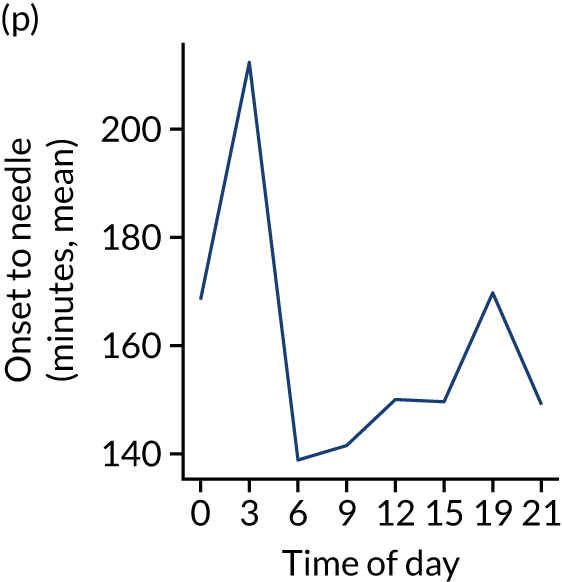

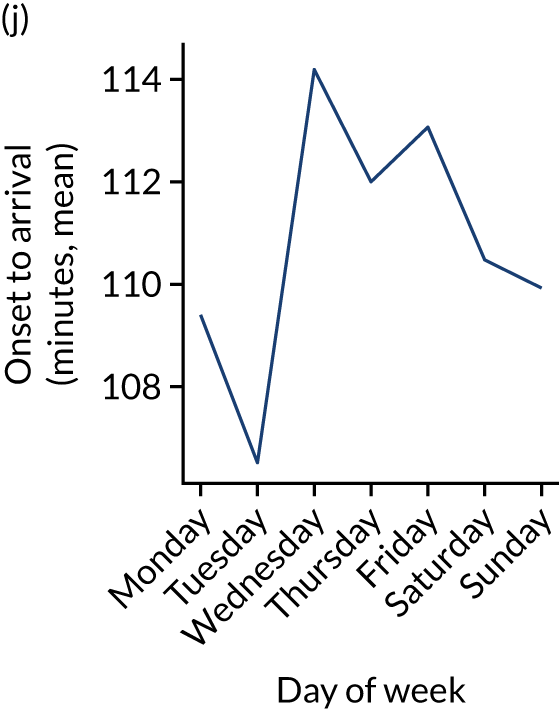

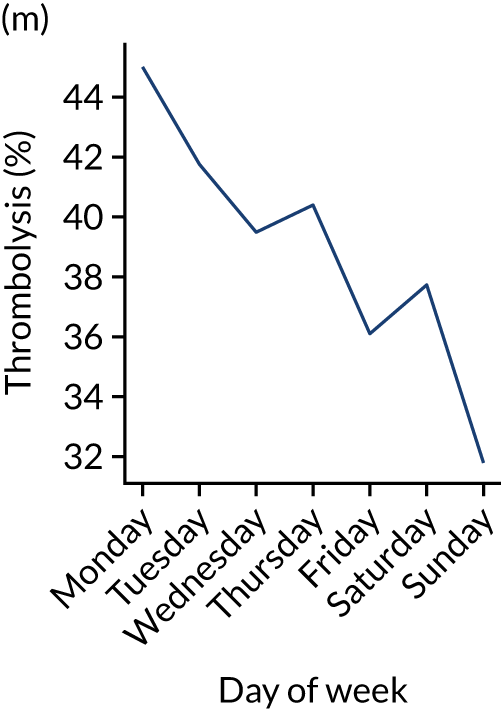

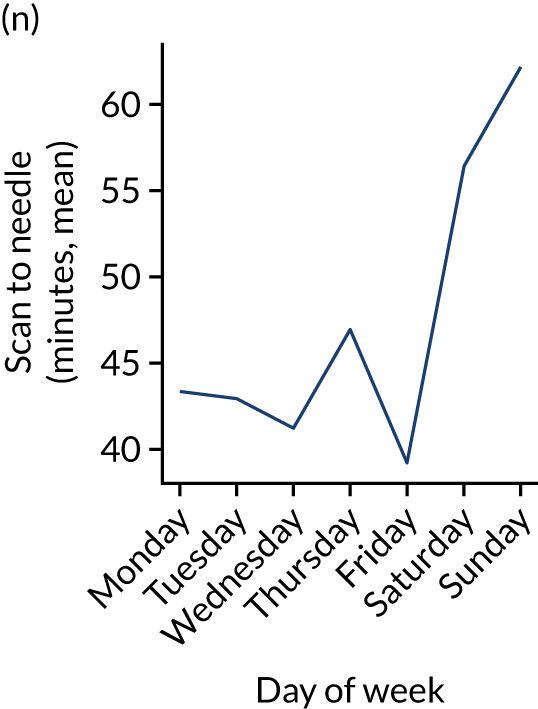

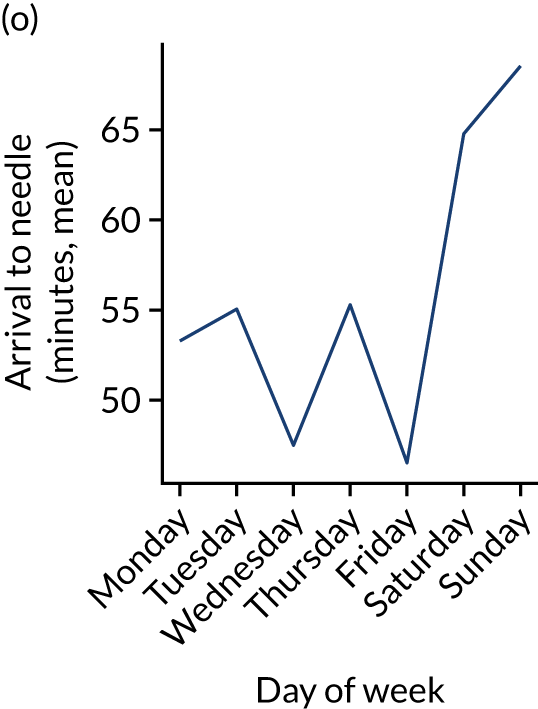

Changes in key pathway statistics by time of day. Results are mean results across all stroke units. Time is given as the start of a 3-hour epoch (e.g. ‘3’ is 3 a.m. to 6 a.m.). (a) Arrivals: normalised to average; (b) thrombolysis use: all arrivals; (c) proportion of patients with known onset; (d) proportion of patients aged ≥ 80 years; (e) mean pre-stroke mRS: all arrivals; (f) mean NIHSS score: all arrivals; (g) proportion of patients arriving within 4 hours of known onset; (h) mean pre-stroke mRS: arrivals 4 hours from onset; (i) mean NIHSS score: arrivals 4 hours from onset; (j) mean onset-to-arrival time (minutes): arrivals 4 hours from onset; (k) proportion of patients scanned within 4 hours of arrival: arrivals 4 hours from onset; (l) mean arrival-to-scan time (minutes): scanned 4 hours from onset; (m) thrombolysis use: scanned 4 hours from onset; (n) mean scan-to-needle time (minutes); (o) mean arrival-to-needle time (minutes); and (p) mean onset-to-needle time.

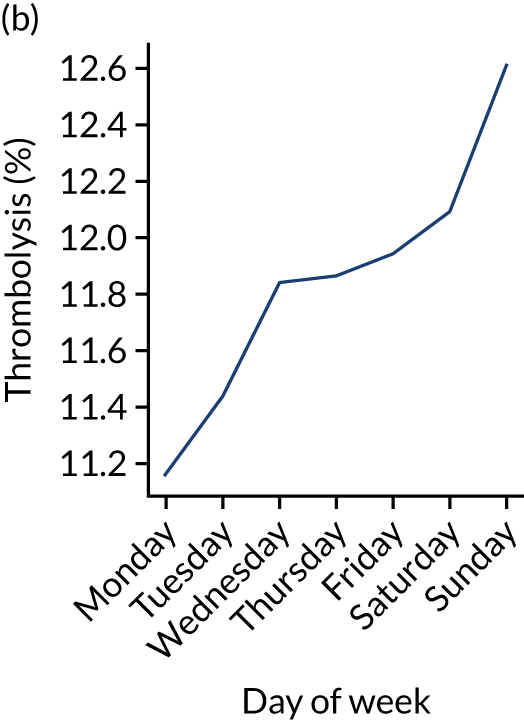

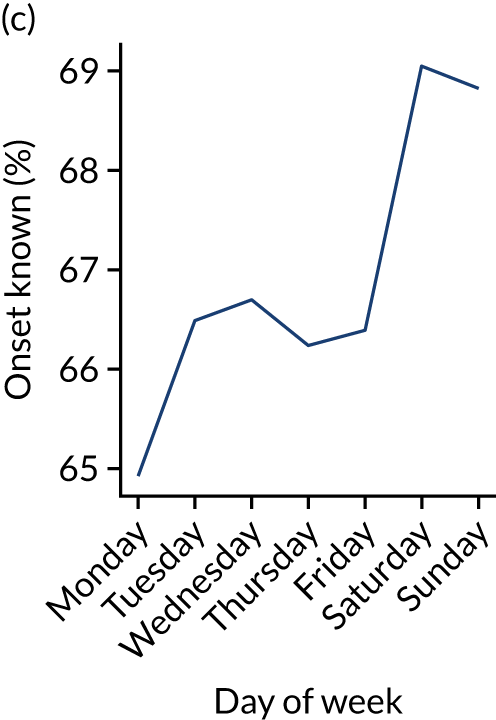

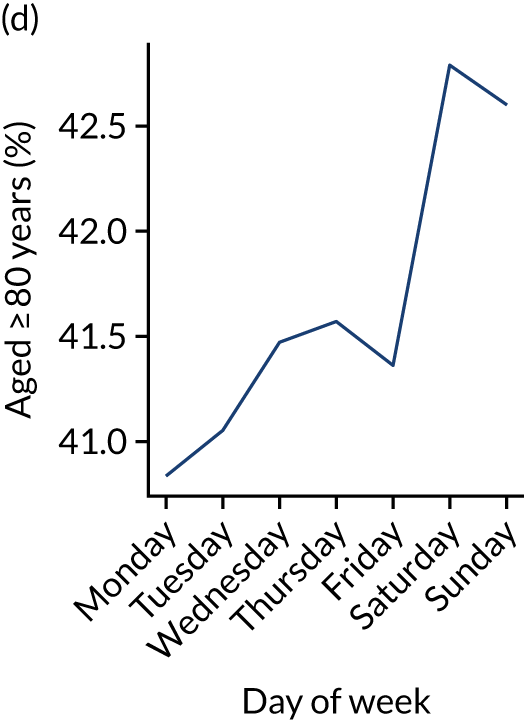

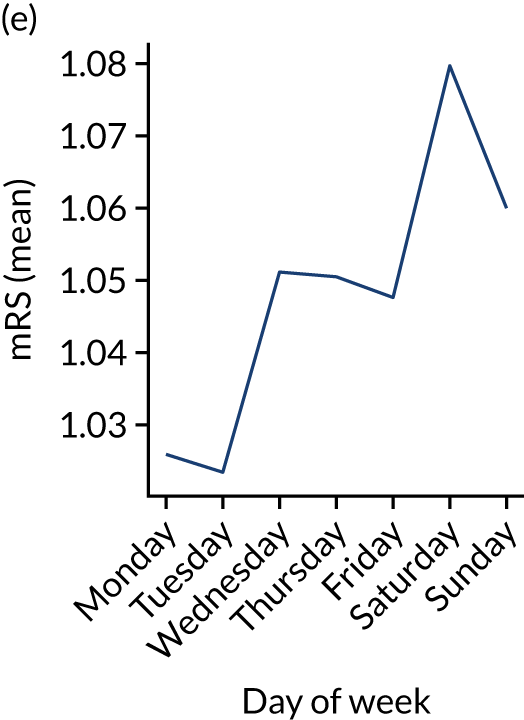

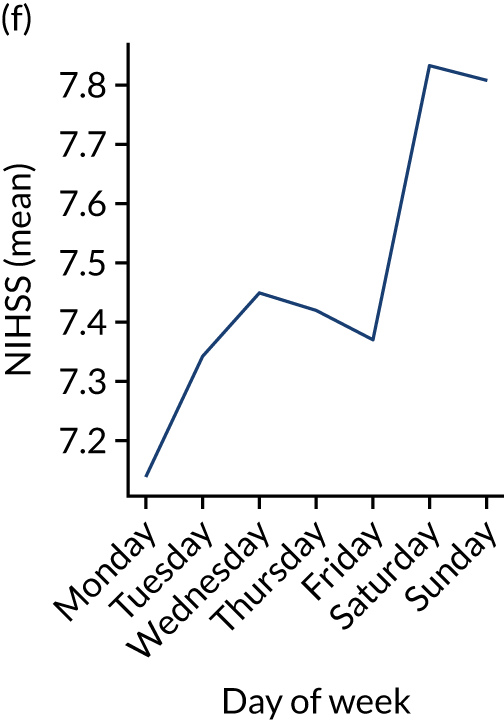

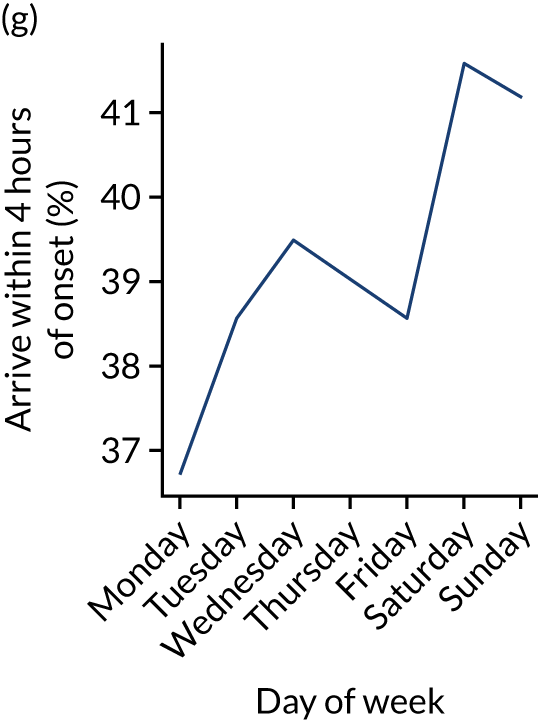

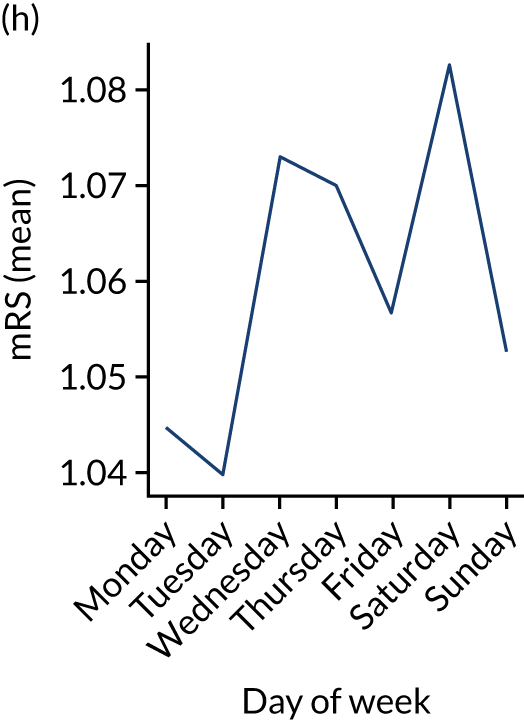

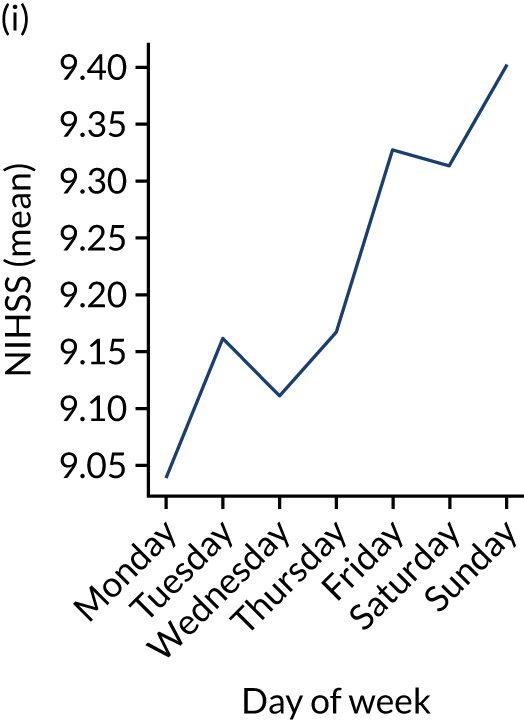

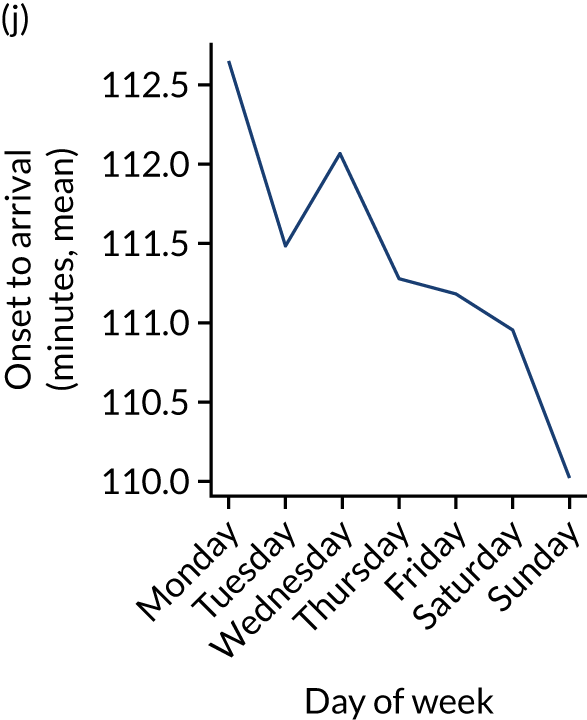

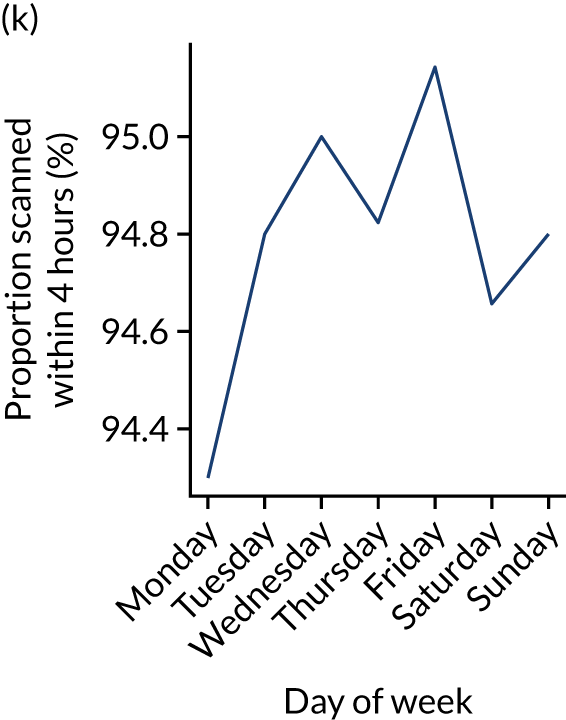

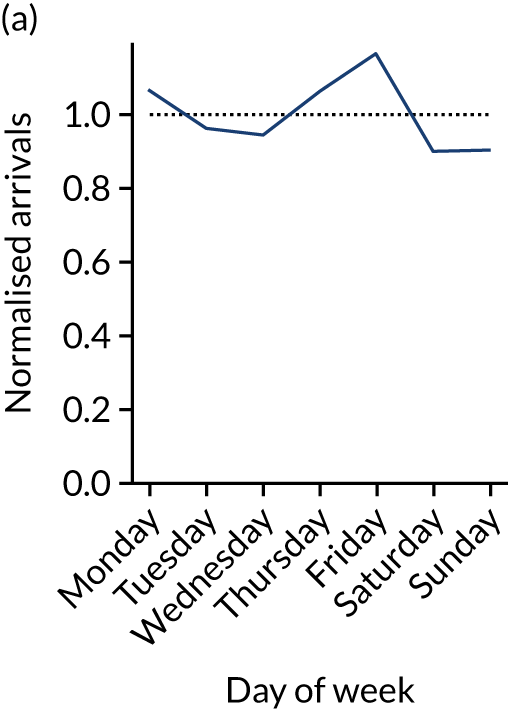

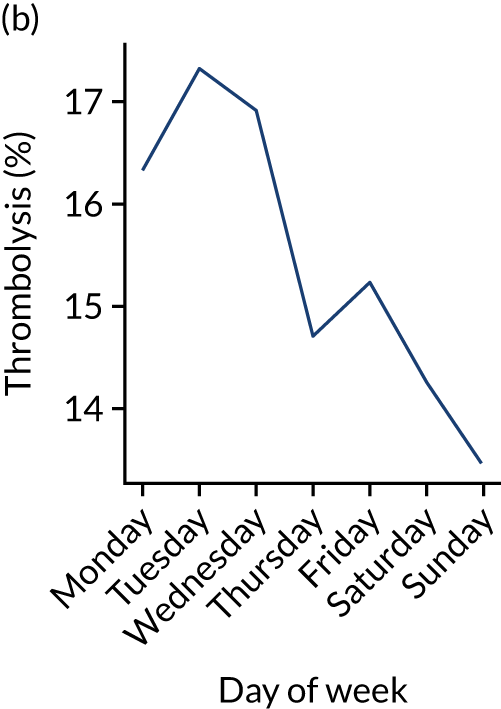

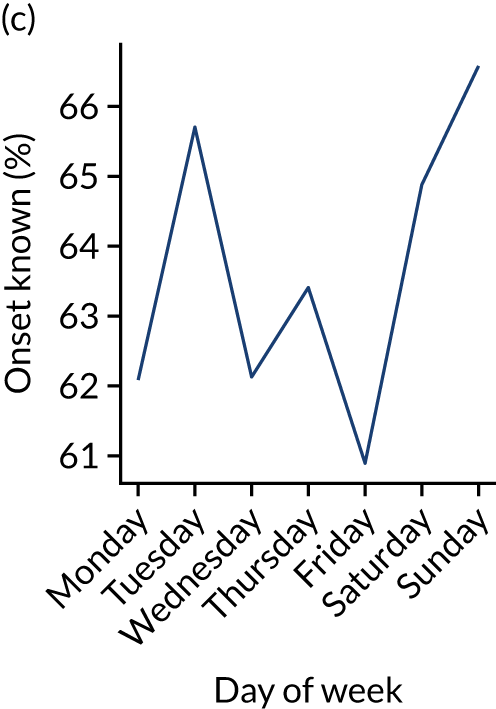

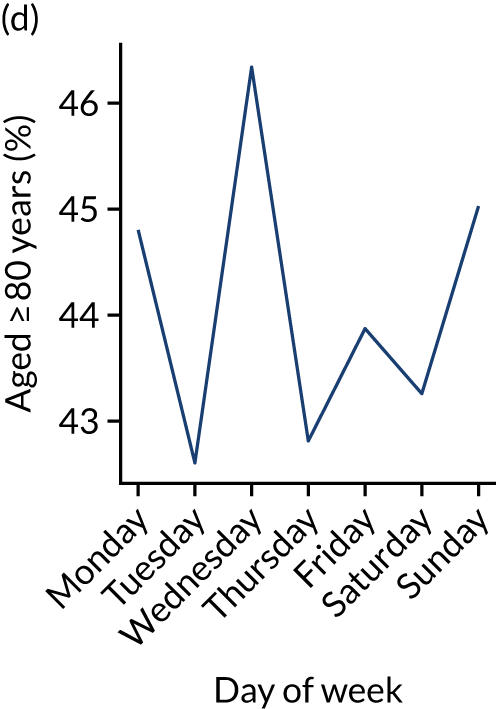

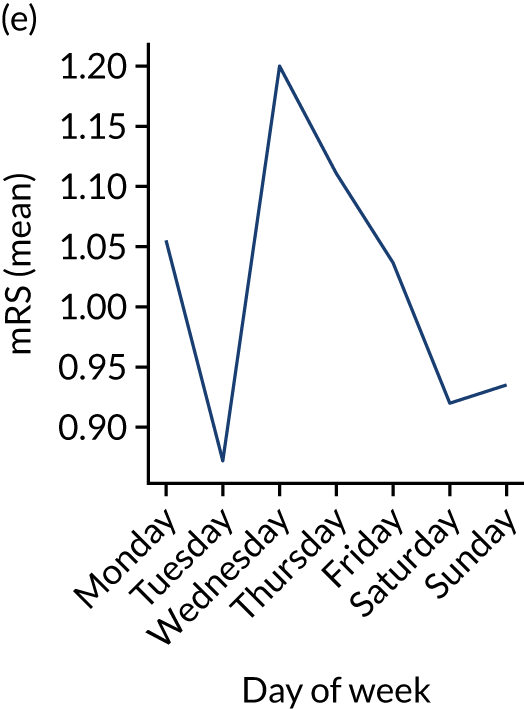

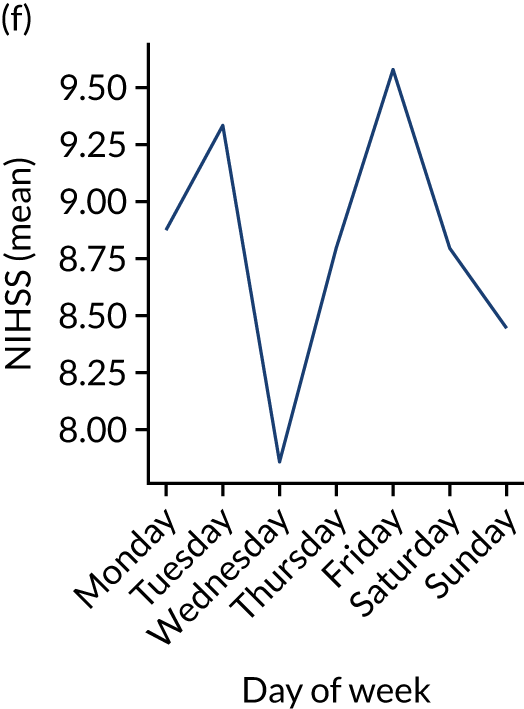

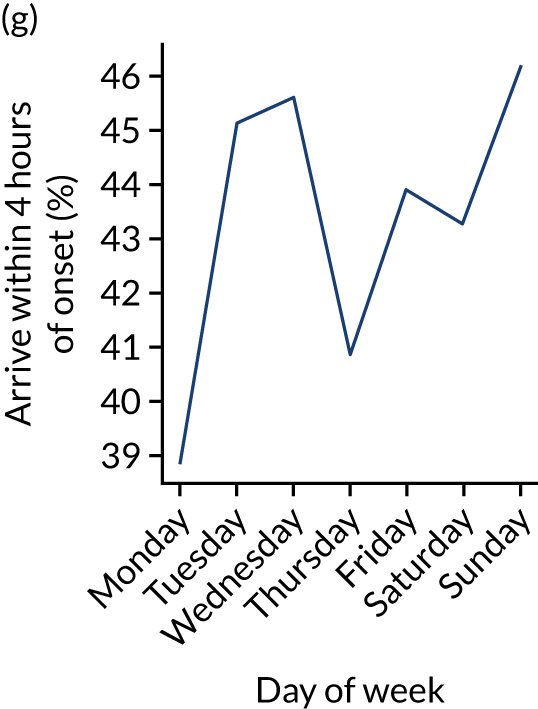

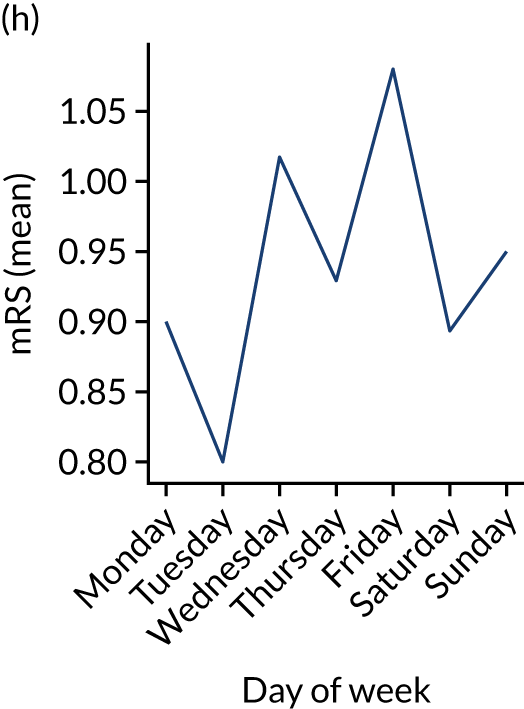

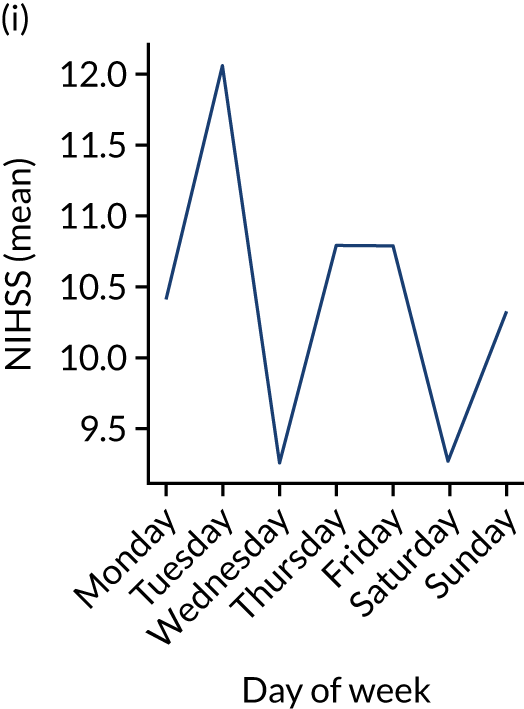

Figure 9 shows changes in key pathway statistics throughout the week, as averaged across all patients. Compared with the time-of-day associations (see Figure 8), the association between day of week and thrombolysis use was more modest. There were slightly fewer admissions at weekends than during the week (i.e. weekend arrivals were about 12% lower than during the week). The proportion of patients arriving within about 4 hours of known stroke onset was a little higher at weekends, and this was associated with a slightly higher thrombolysis use in all arrivals, without any change in thrombolysis use in patients who received a scan within 4 hours of known onset. Arrival-to-scan time was similar at the weekend to on weekdays, but scan-to-needle time tended to be a little (about 5 minutes) longer at weekends.

FIGURE 9.

Changes in key pathway statistics by day of week. Results are mean results across all stroke units. (a) Arrivals: normalised to average; (b) thrombolysis use: all arrivals; (c) proportion of patients with known onset; (d) proportion of patients aged ≥ 80 years; (e) mean pre-stroke mRS: all arrivals; (f) mean NIHSS score: all arrivals; (g) proportion of patients arriving within 4 hours of known onset; (h) mean pre-stroke mRS: arrivals 4 hours from onset; (i) mean NIHSS score: arrivals 4 hours from onset; (j) mean onset-to-arrival time (minutes): arrivals 4 hours from onset; (k) proportion of patients scanned within 4 hours of arrival: arrivals 4 hours from onset; (l) mean arrival-to-scan time (minutes): scanned 4 hours from onset; (m) thrombolysis use: scanned 4 hours from onset; (n) mean scan-to-needle time (minutes); (o) mean arrival-to-needle time (minutes); and (p) mean onset-to-needle time.

At the level of an individual stroke team

Individual hospitals may show similarities and differences in diurnal patterns compared with the national average, and results may be produced at the individual hospital/team level, as shown in Figures 10 and 11.

FIGURE 10.

Changes in key pathway statistics by time of day. Results show a single team (with 672 admissions/year). Time is given as the start of a 3-hour epoch (e.g. ‘3’ is 3 a.m. to 6 a.m.). (a) Arrivals: normalised to average; (b) thrombolysis use: all arrivals; (c) proportion of patients with known onset; (d) proportion of patients aged ≥ 80 years; (e) mean pre-stroke mRS: all arrivals; (f) mean NIHSS score: all arrivals; (g) proportion of patients arriving within 4 hours of known onset; (h) mean pre-stroke mRS: arrivals 4 hours from onset; (i) mean NIHSS score: arrivals 4 hours from onset; (j) mean onset-to-arrival time (minutes): arrivals 4 hours from onset; (k) proportion of patients scanned within 4 hours of arrival: arrivals 4 hours from onset; (l) mean arrival-to-scan time (minutes): scanned 4 hours from onset; (m) thrombolysis use: scanned 4 hours from onset; (n) mean scan-to-needle time (minutes); (o) mean arrival-to-needle time (minutes); and (p) mean onset-to-needle time.

FIGURE 11.

Changes in key pathway statistics by day of week. Results show a single team (with 672 admissions/year). (a) Arrivals: normalised to average; (b) thrombolysis use: all arrivals; (c) proportion of patients with known onset; (d) proportion of patients aged ≥ 80 years; (e) mean pre-stroke mRS: all arrivals; (f) mean NIHSS score: all arrivals; (g) proportion of patients arriving within 4 hours of known onset; (h) mean pre-stroke mRS: arrivals 4 hours from onset; (i) mean NIHSS score: arrivals 4 hours from onset; (j) mean onset-to-arrival time (minutes): arrivals 4 hours from onset; (k) proportion of patients scanned within 4 hours of arrival: arrivals 4 hours from onset; (l) mean arrival-to-scan time (minutes): scanned 4 hours from onset; (m) thrombolysis use: scanned 4 hours from onset; (n) mean scan-to-needle time (minutes); (o) mean arrival-to-needle time (minutes); and (p) mean onset-to-needle time.

One hospital showed a more marked reduction in night-time (i.e. midnight to 3 a.m.) use of thrombolysis than the national average, and showed a marked reduction in use of thrombolysis at weekends. This reduced use of thrombolysis was associated with a lower use of thrombolysis in patients scanned within 4 hours and significantly slower scan-to-needle times. These observations may suggest that this unit struggled to maintain the post-scan thrombolysis pathway at weekends.

Comparison of average values for patients who receive thrombolysis and patients who do not

Among patients arriving within 4 hours of known stroke onset, those who received thrombolysis had the following characteristics (vs. patients who did not receive thrombolysis):

-

Patients were younger (mean age 73 years vs. 76 years).

-

Patients arrived sooner (mean onset-to-arrival time 97 minutes vs. 117 minutes).

-

Patients had higher stroke severity (mean NIHSS score 11.6 vs. 8.4).

-

Patients were scanned within 4 hours of arrival (100% vs. 93%) and within 4 hours of onset (99% vs. 77%).

-

Patients were more likely to have a precisely determined stroke onset time (97% vs. 87%).

-

Patients arrived by ambulance (94% vs. 91%).

-

Patients did not have atrial fibrillation (AF) (14% vs. 24% having AF).

-

Patients did not have a history of transient ischaemic attack (TIA) (21% vs. 30% having had a TIA).

-

Patients were not on anticoagulant therapy (e.g. of those receiving thrombolysis, 3.7% were on an anticoagulant, whereas of those not receiving thrombolysis, 15.7% were on an anticoagulant).

Distribution of process times

The code and all results for distribution fitting may be found online. 30

Figure 12 shows the distribution of process times across all hospitals. All timings show a right skew.

FIGURE 12.

Histograms of distribution of timings for (a) onset to arrival; (b) arrival to scan; and (c) scan to needle.

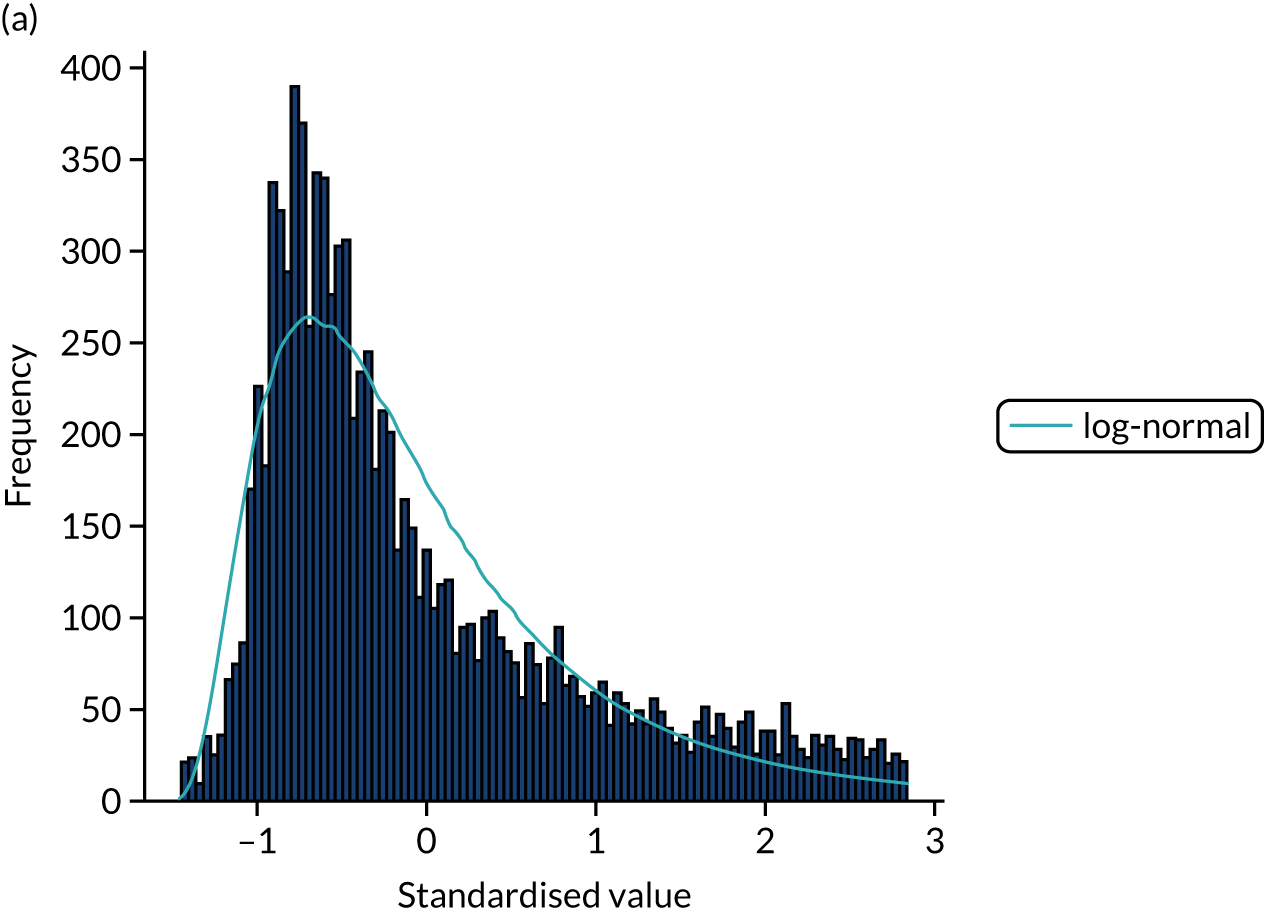

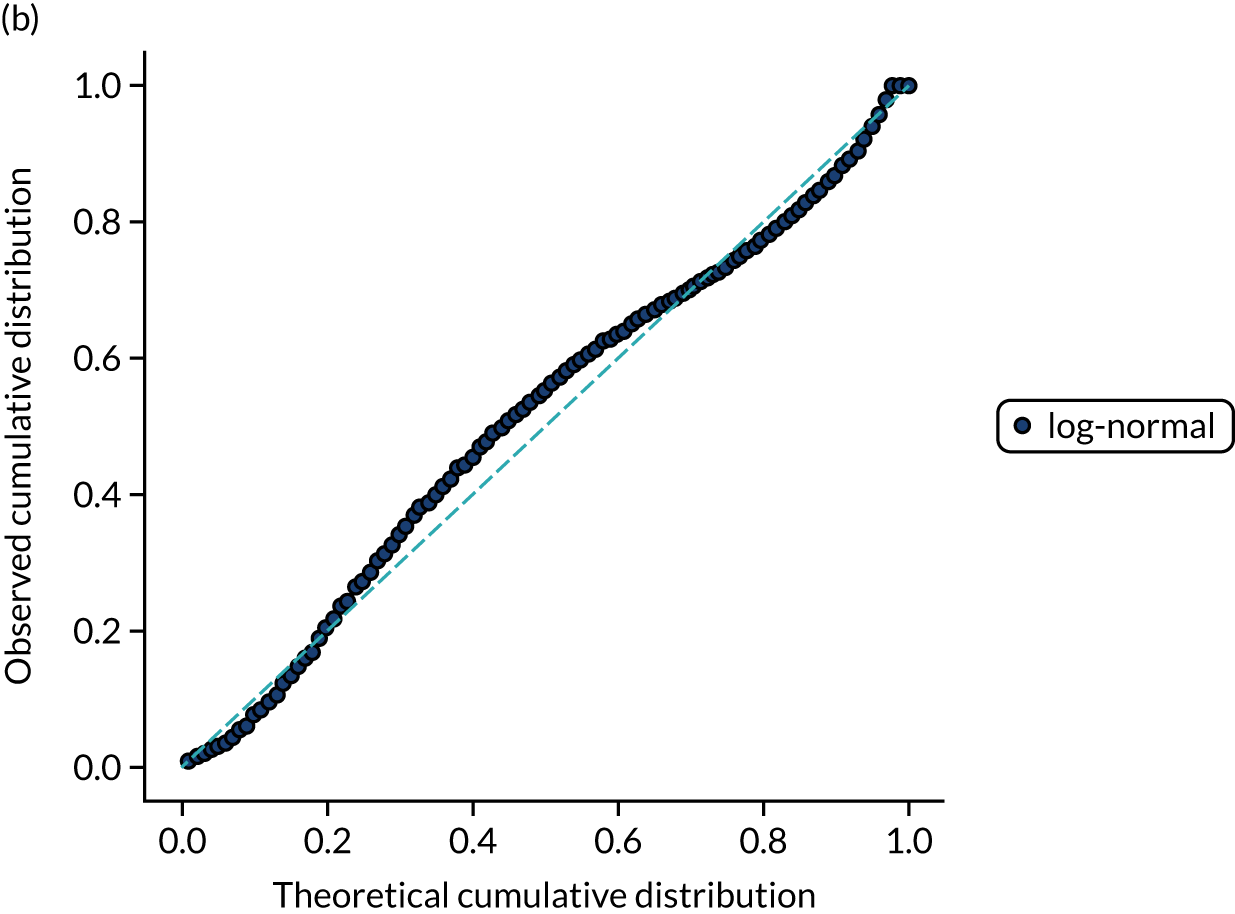

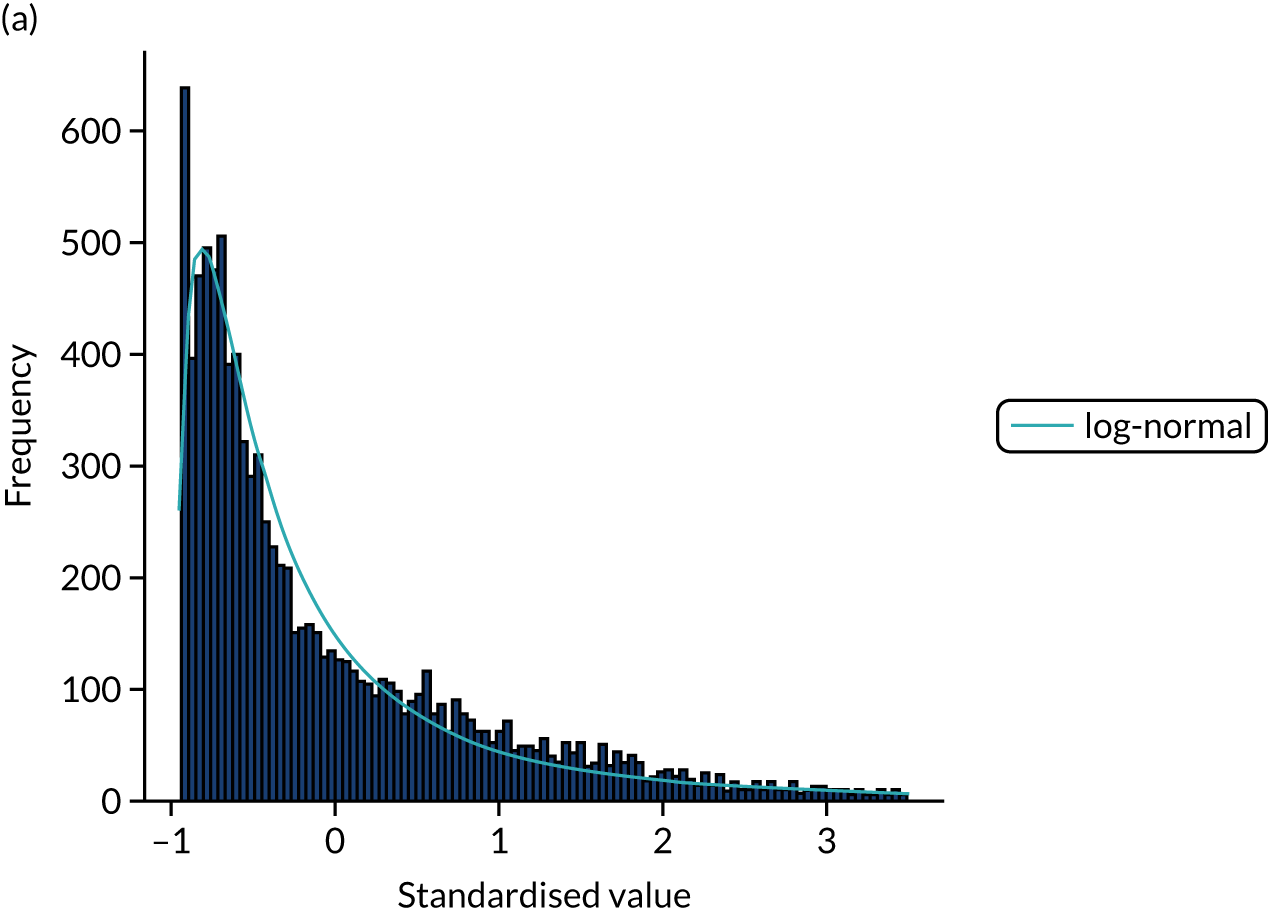

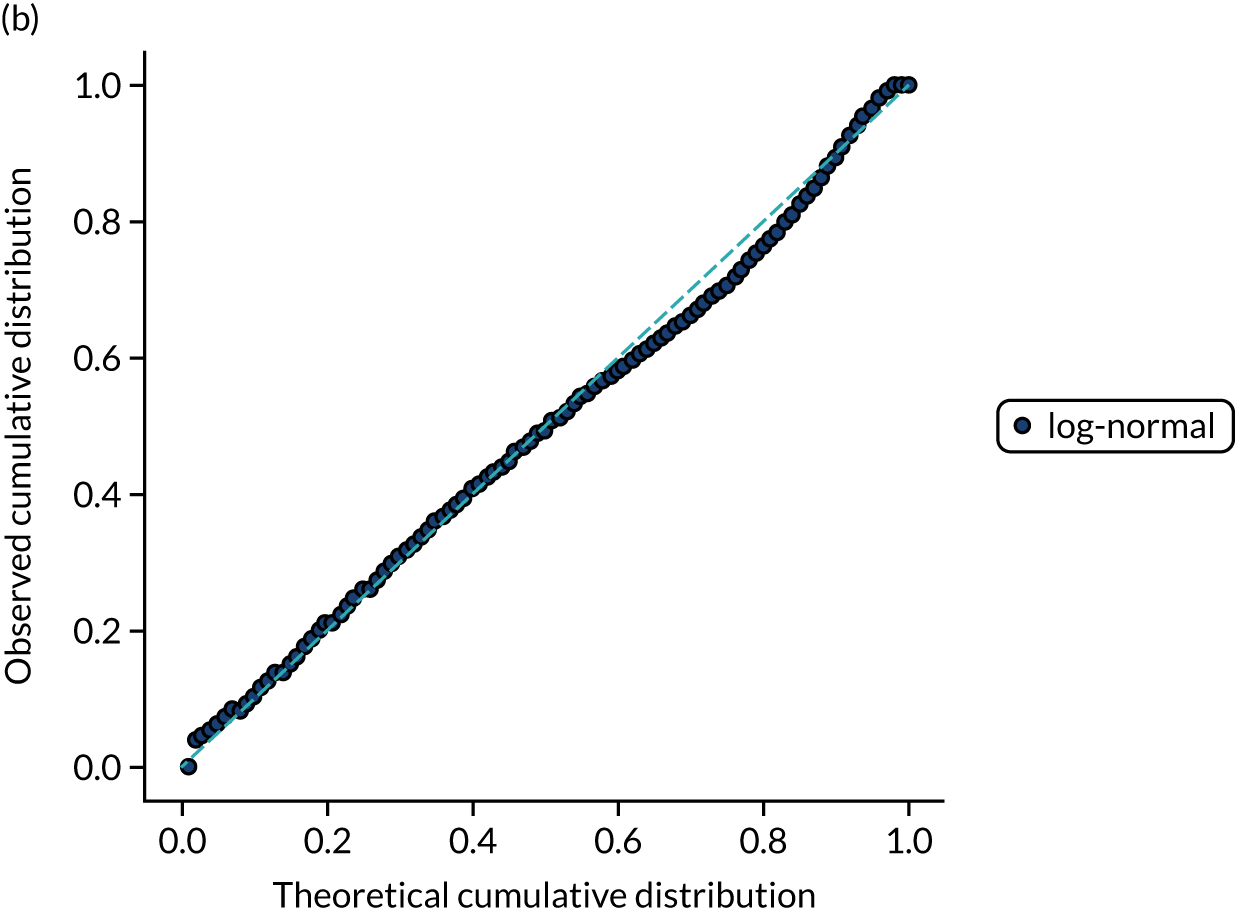

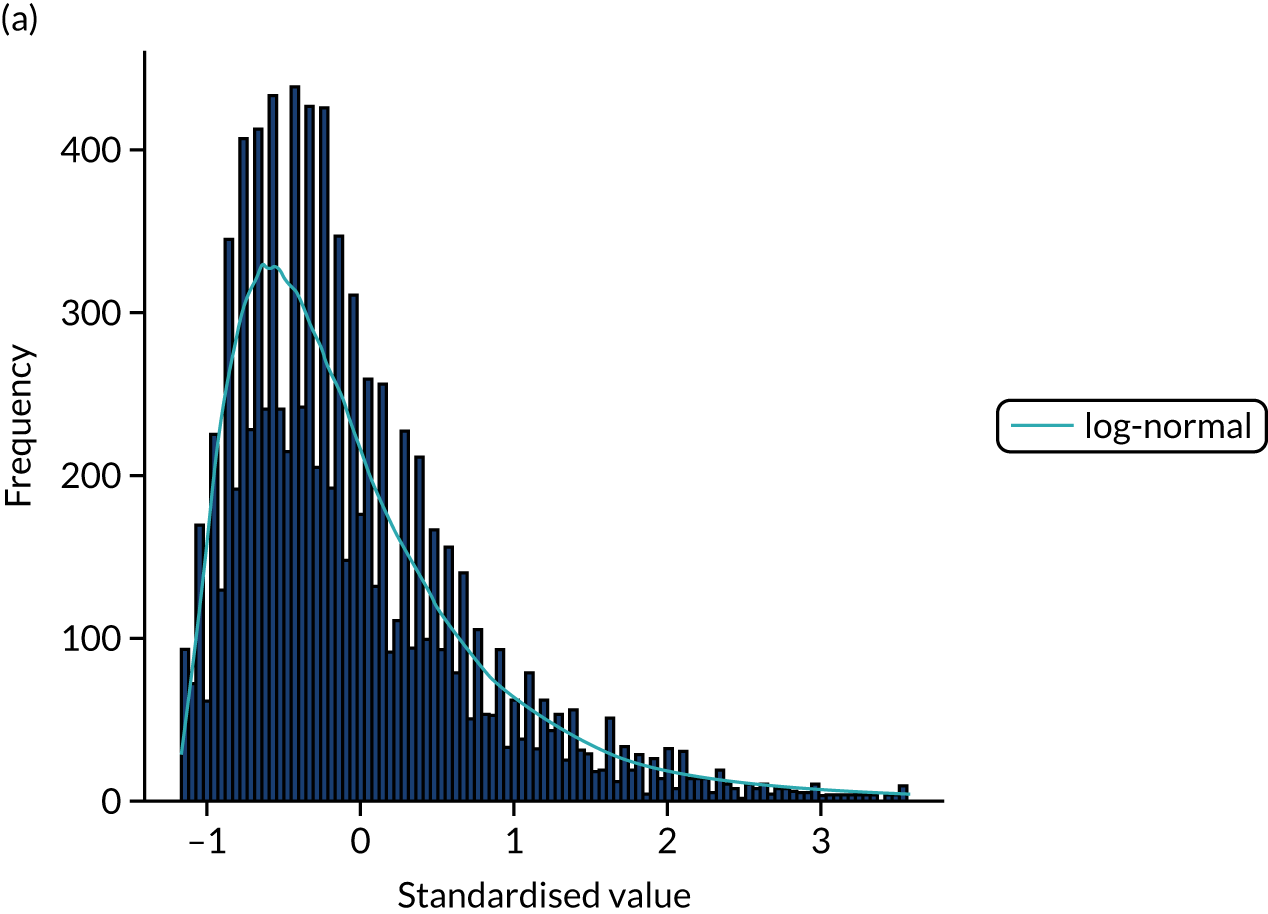

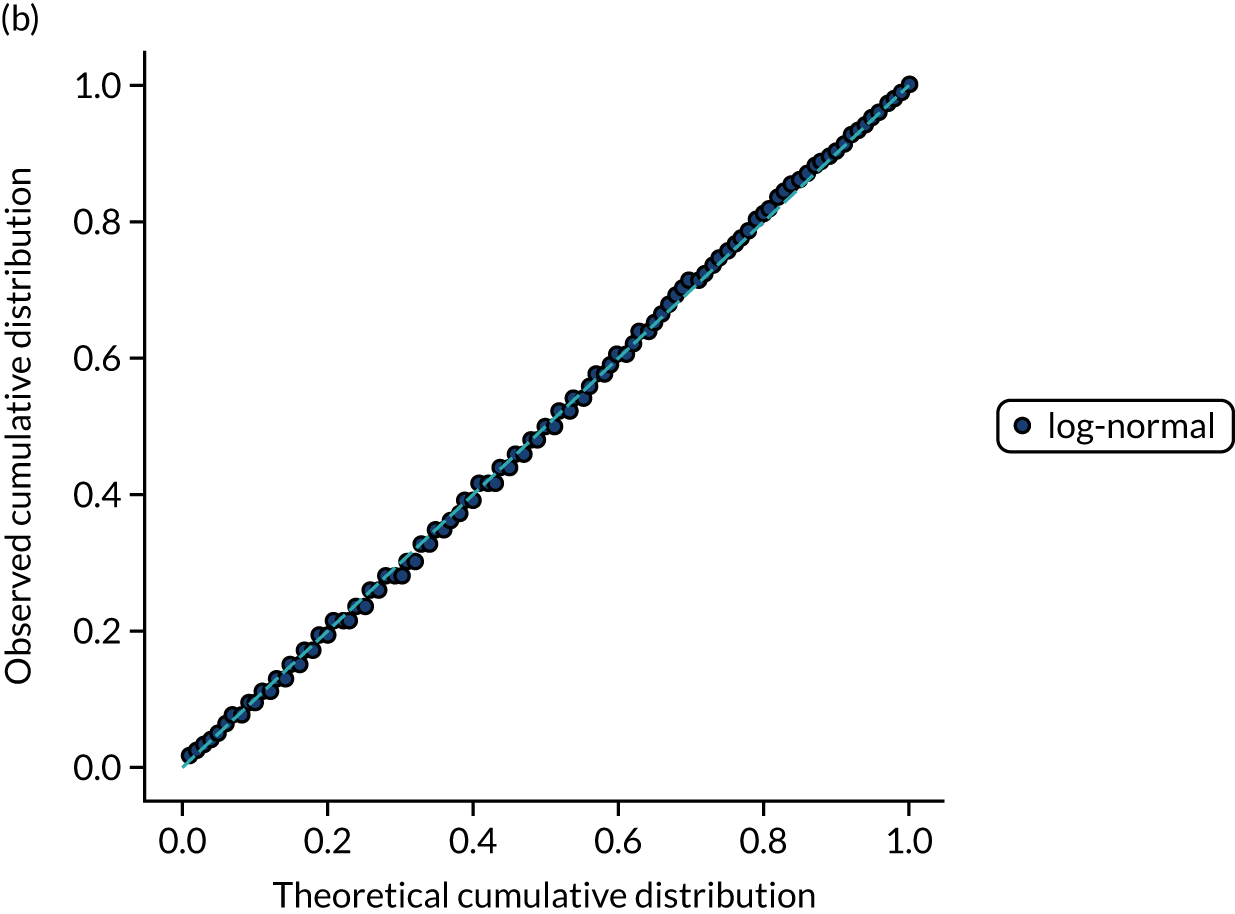

Ten candidate distribution types were fitted to the data. All three process times were best fitted by a log-normal distribution (chosen by lowest chi-squared; distributions were fitted to 10,000 bootstrapped samples for each process time). Figures 13–15 show log-normal distribution fits for the three process times.

FIGURE 13.

Distribution fitting to onset-to-arrival times. (a) Histogram of standardised values and fitted distribution (log-normal); and (b) P–P plot of actual vs. theoretical (fitted) probabilities.

FIGURE 14.

Distribution fitting to arrival-to-scan times. (a) Histogram of standardised values and fitted distribution (log-normal); and (b) P–P plot of actual vs. theoretical (fitted) probabilities.

FIGURE 15.

Distribution fitting to scan-to-needle times. (a) Histogram of standardised values and fitted distribution (log-normal); and (b) P–P plot of actual vs. theoretical (fitted) probabilities.

Covariance between features

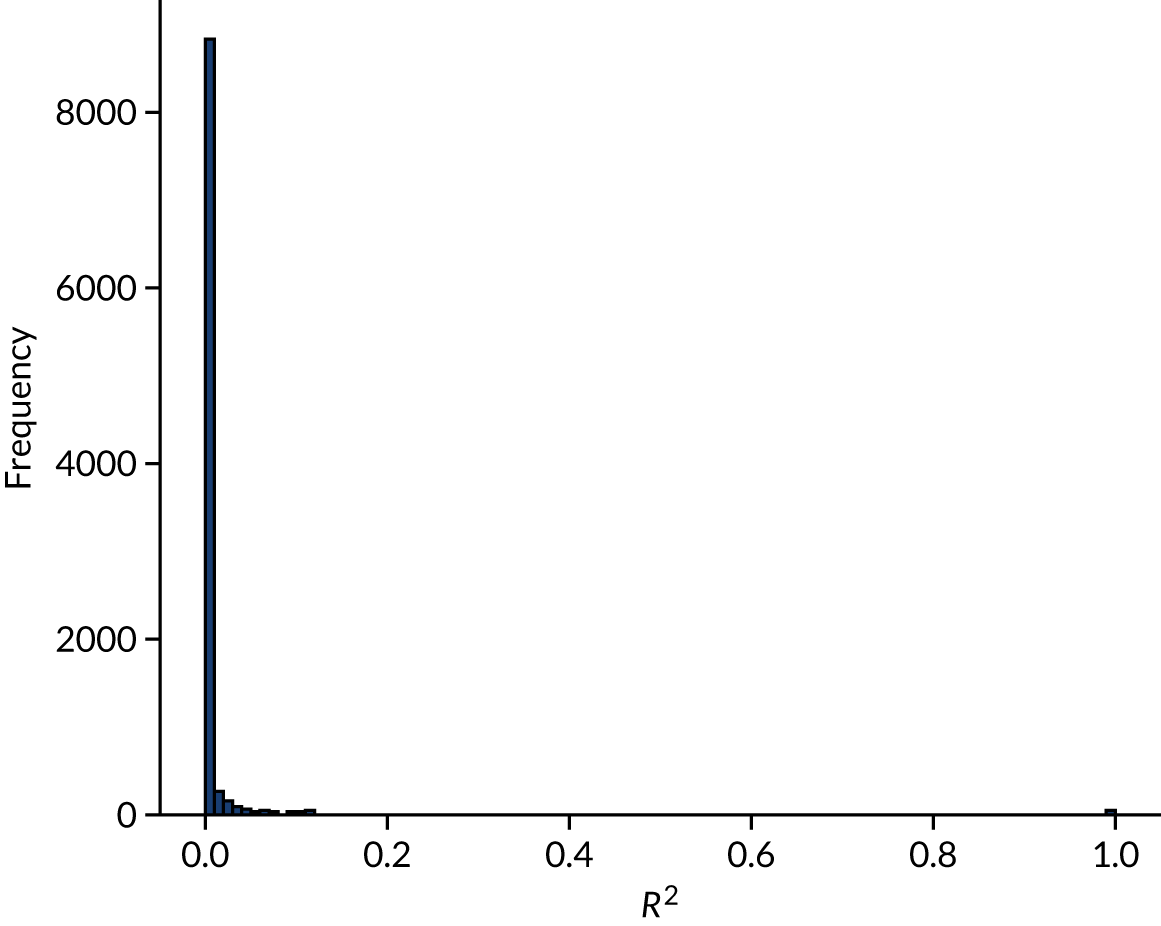

The code and full results for analysis of covariance are available online. 31

Figure 16 shows the distribution of R2 between feature pairs. Fewer than 4% of the feature pairs had a R2 ≥ 0.1.

FIGURE 16.

Coefficient of determination (R2) between feature pairs.

Table 5 shows those feature pairs with a R2 ≥ 0.25. Many of these feature pairs are pairs of data from within the same subset of data, such as stroke type, anticoagulant use or NIHSS score.

| Feature pair | R 2 | |

|---|---|---|

| Feature 1 | Feature 2 | |

| S2StrokeType_Infarction | S2StrokeType_Primary Intracerebral Haemorrhage | 0.988 |

| AFAnticoagulentHeparin_No | AFAnticoagulentVitK_No | 0.917 |

| AFAnticoagulentDOAC_No | AFAnticoagulentHeparin_No | 0.869 |

| AFAnticoagulentDOAC_No | AFAnticoagulentVitK_No | 0.790 |

| MotorLegRight | MotorArmRight | 0.712 |

| MotorArmLeft | MotorLegLeft | 0.693 |

| S2NewAFDiagnosis_No | AFAnticoagulentDOAC_No | 0.618 |

| S2NewAFDiagnosis_No | AFAnticoagulentVitK_No | 0.600 |

| AtrialFibrillation_No | AFAntiplatelet_No | 0.598 |

| AFAntiplatelet_No | AtrialFibrillation_Yes | 0.598 |

| S1Ethnicity_White | S1Ethnicity_Other | 0.579 |

| S2NewAFDiagnosis_No | AFAnticoagulentHeparin_No | 0.573 |

| MoreEqual80y_Yes | S1AgeOnArrival | 0.571 |

| MoreEqual80y_No | S1AgeOnArrival | 0.571 |

| S1OnsetDateType_Precise | S1OnsetDateType_Best estimate | 0.528 |

| AFAnticoagulent_No | AFAnticoagulentDOAC_No | 0.520 |

| S2NewAFDiagnosis_No | AFAnticoagulent_No | 0.503 |

| AFAnticoagulent_No | AFAnticoagulentVitK_No | 0.487 |

| BestLanguage | LocQuestions | 0.487 |

| S2NihssArrival | ExtinctionInattention | 0.462 |

| BestGaze | S2NihssArrival | 0.456 |

| BestLanguage | S2NihssArrival | 0.455 |

| AFAnticoagulentHeparin_No | AFAnticoagulent_No | 0.440 |

| LocCommands | LocQuestions | 0.433 |

| S2NihssArrival | LocCommands | 0.430 |

| AtrialFibrillation_Yes | AFAnticoagulent_Yes | 0.425 |

| AFAnticoagulent_Yes | AtrialFibrillation_No | 0.425 |

| S2NihssArrival | MotorLegRight | 0.422 |

| S1OnsetDateType_Stroke during sleep | S1OnsetDateType_Precise | 0.419 |

| LocQuestions | S2NihssArrival | 0.404 |

| LocCommands | BestLanguage | 0.403 |

| S2NihssArrival | MotorArmRight | 0.386 |

| AFAnticoagulent_Yes | AFAntiplatelet_No | 0.362 |

| Visual | S2NihssArrival | 0.358 |

| Dysarthria | S2NihssArrival | 0.341 |

| BestGaze | ExtinctionInattention | 0.329 |

| Visual | ExtinctionInattention | 0.317 |

| S2NihssArrival | Loc | 0.310 |

| S2NihssArrival | FacialPalsy | 0.308 |

| S2NihssArrival | MotorLegLeft | 0.303 |

| S2NihssArrival | Sensory | 0.303 |

| Visual | BestGaze | 0.296 |

| MotorArmRight | BestLanguage | 0.275 |

| ExtinctionInattention | Sensory | 0.257 |

| MotorLegRight | BestLanguage | 0.255 |

Chapter 5 Machine learning (modelling clinical decision-making)

General machine learning methodology

Machine learning models were used to predict whether or not a patient would receive thrombolysis, based on a range of patient-related features in SSNAP, including which hospital the patient attended. Machine learning models were restricted to patients arriving at hospital within 4 hours of known stroke onset and to 132 stroke teams that had received at least 300 admissions and provided thrombolysis to at least 10 patients over the course of 3 years (2016–18).

As we restrict machine learning to those patients who arrive within 4 hours of known stroke onset, the mean use of thrombolysis is 29.5% (compared with 11.8% of all arrivals receiving thrombolysis).

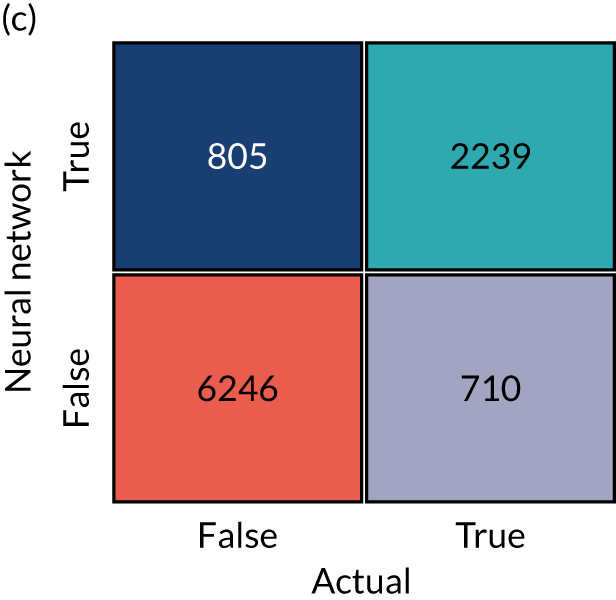

This is a supervised learning problem where we train a model using a training set of data that has all the features (i.e. variables) for the patient with a corresponding label of whether or not a patient received thrombolysis. The model is then tested on data that have not been used in training (see Stratified k-fold validation). We test three different types of machine learning: (1) logistic regression, (2) random forest and (3) neural networks (with alternative architectures).

Handling hospital identification

We use three different ways of handling hospital identification (ID) in our models.

One-hot encoding

A single model is built, which predicts use of thrombolysis in all hospitals. Hospital ID is encoded in a vector whose length is equal to the number of hospitals. All values are set to zero, except one value is set to 1 (i.e. one-hot) using the hospital ID as the index. For example, if there were five hospitals, then the one-hot encoding of hospital 2 would be (0, 1, 0, 0, 0). This one-hot vector is then joined to the rest of the input data. In our models, the one-hot vector has 132 values, one of which has the value 1, with all others having the value 0.

Hospital-specific model

A model is built for each hospital. No encoding of hospital is needed.

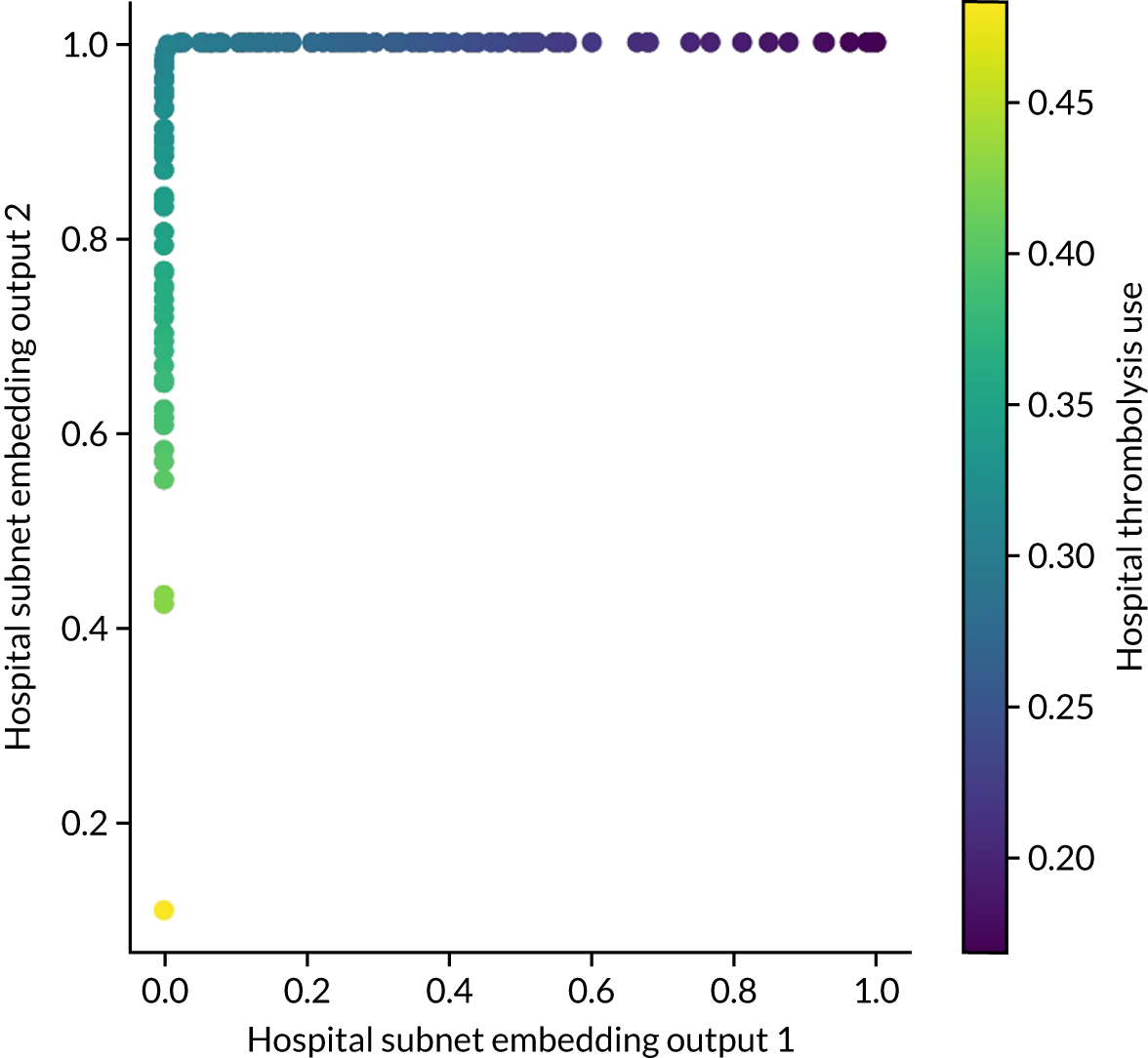

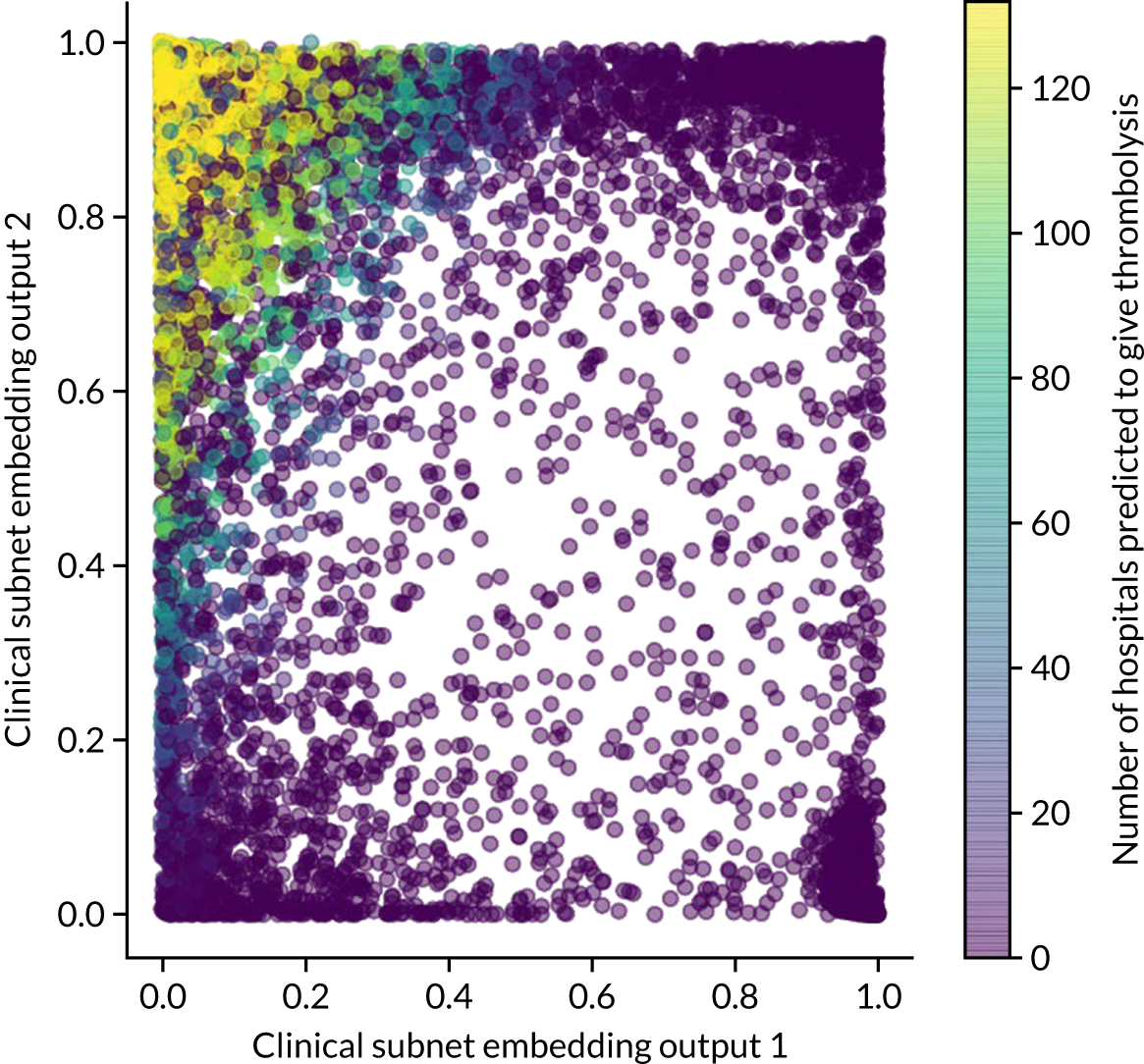

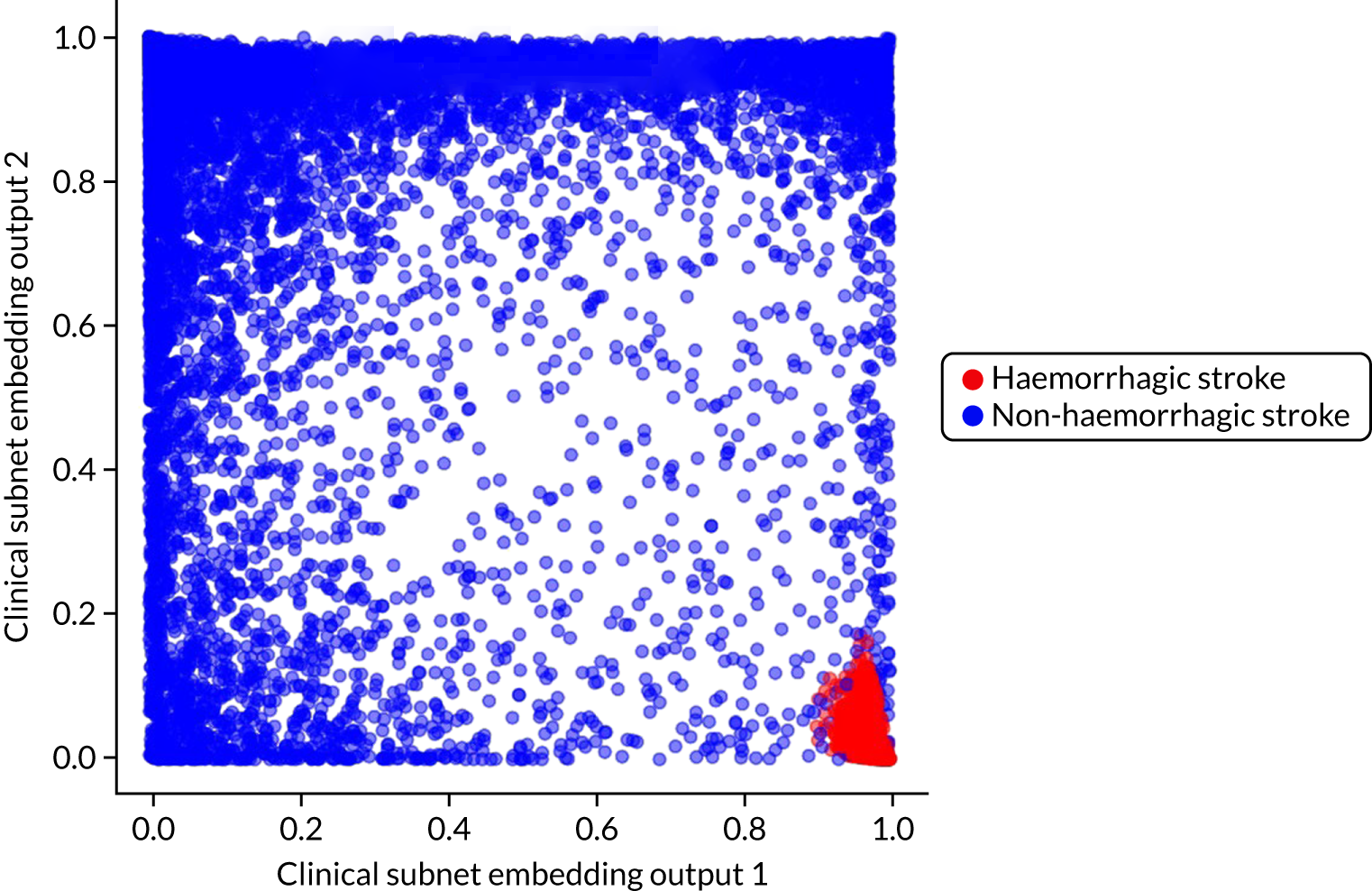

Embedding layer (neural networks only)

The hospital ID is first one-hot vector encoded. The one-hot vector is used as the input into an embedding layer, which reduces the one-hot vector to a reduced size [in this project, we reduce the one-hot vector to either a one-dimensional (1D) or a two-dimensional (2D) vector]. The embedding value is optimised during neural network training so that similar hospitals (from the perspective of decision-making) have embedding values that are similar.

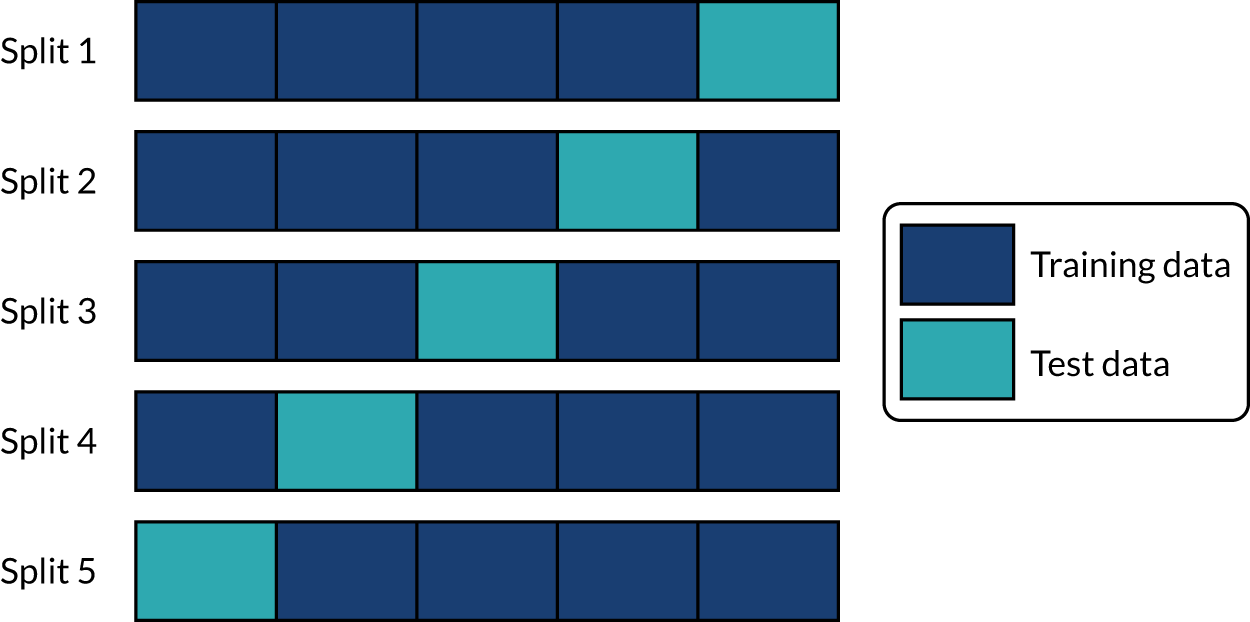

Stratified k-fold validation

When assessing accuracy of the machine learning models, stratified k-fold splits were used. We used fivefold splits where each data point is in one, and only one, of five test sets (the same point is in the training set for the four other splits). This is represented schematically in Figure 17. Data are stratified such that the test set is representative of hospital mix in the whole data population, and within the hospital-level data the use of thrombolysis is representative of the whole data for that hospital.

FIGURE 17.

Schematic representation of k-fold splits with five splits.

Logistic regression

What is in this section?

This section describes experiments predicting, using logistic regression, whether or not a patient will receive thrombolysis in a given hospital.

This section contains the following analyses:

-

Fitting to all stroke teams together, that is, a logistic regression classifier that is fitted to all data together, with each stroke team being a one-hot encoded feature. The models were analysed for (1) various accuracy scores, (2) a receiver operating characteristic (ROC) area under curve (AUC), (3) a sensitivity–specificity curve, (4) feature weights, (5) learning rate and (6) model calibration.

-

Fitting hospital-specific models, that is, a logistic regression classifier that has a fitted model for each hospital. The models were analysed for (1) various accuracy scores, (2) a ROC AUC, (3) a sensitivity–specificity curve and (4) model calibration.

Detailed code and results can be found online. 32

Key findings in this section

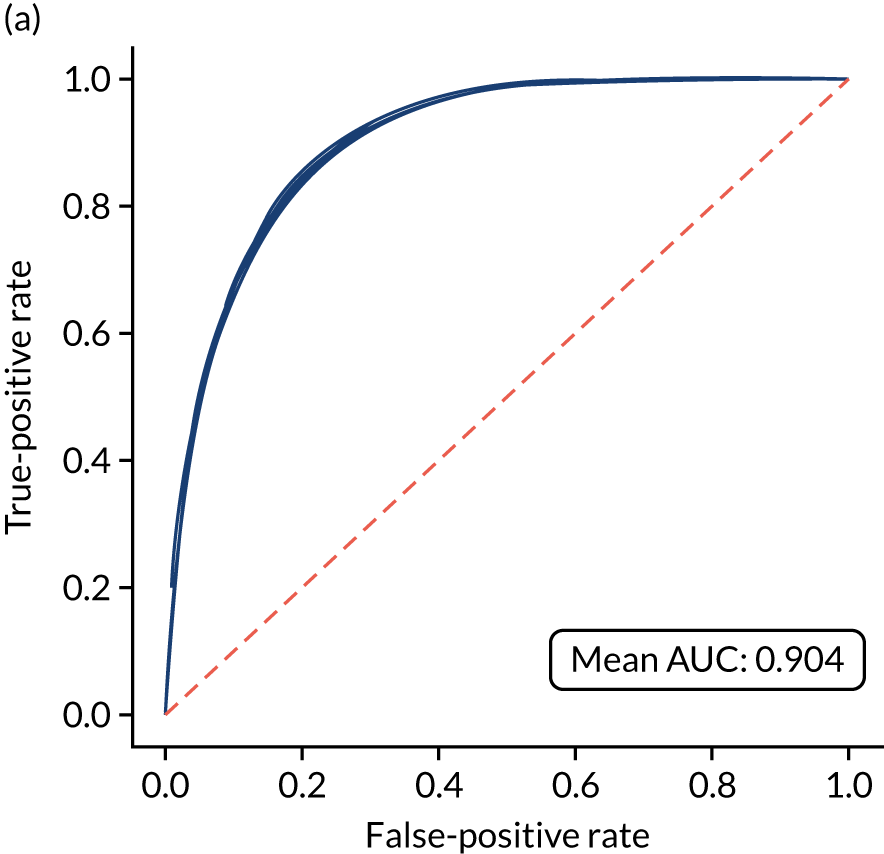

Using a single model fitted to all hospitals, we found the following:

-

The overall accuracy was 83.2%.

-

The model can achieve 82.0% sensitivity and specificity simultaneously.

-

The mean ROC AUC was 0.904.

Using models fitted to each hospital, we found the following:

-

The overall accuracy was 82.6%.

-

The model can achieve 78.9% sensitivity and specificity simultaneously.

-

The ROC AUC was 0.870.

Introduction to logistic regression methodology

Logistic regression is a probabilistic model, meaning that it assigns a class probability to each data point. Probabilities are calculated using a logistic function:

Here, x is a linear combination of the variables of each data point, that is, a + bx1 + cx2 + ., where x1 is the value of one variable, x2 the value of another, etc. The function f maps x to a value between 0 and 1, which may be viewed as a class probability. If the class probability is greater than the decision threshold, then the data point is classified as belonging to class 1 (i.e. receives thrombolysis). For probabilities less than the threshold, it is placed in class 0 (i.e. does not receive thrombolysis).

During training, the logistic regression uses the examples in the training data to find the values of the coefficients in x (a, b, c, . . .) that lead to the highest possible accuracy in the training data. The values of these parameters determine the importance of each variable for the classification and, therefore, the decision-making process. A variable with a larger coefficient (positive or negative) is more important when predicting whether or not a patient will receive thrombolysis.

The logistic regression classifier used was from scikit-learn [URL: https://samuel-book.github.io/samuel-1/introduction/software.html (accessed 23 May 2022)]. Default settings were used.

Logistic regression model fitted as a single model

Logistic regression models were fitted to standardised data. These results are for a model that is fitted to all hospitals simultaneously, with hospital encoded as a ‘one-hot’ feature (i.e. all hospitals are present as separate features in the model and the hospital attended has a feature value of 1, whereas all other hospitals have a feature value of 0).

Accuracy

The logistic regression model had an overall accuracy of 83.2%. With a default classification threshold, sensitivity (71.7%) is lower than specificity (88.1%), which is likely due to the imbalance of class weights in the data set. Full accuracy measures are given in Table 6.

| Accuracy measure | Mean (95% CI)a |

|---|---|

| Actual positive rate | 0.295 (0.000) |

| Predicted positive rate | 0.295 (0.000) |

| Accuracy | 0.833 (0.001) |

| Precision | 0.717 (0.002) |

| Recall/sensitivity | 0.717 (0.002) |

| F1 score | 0.717 (0.002) |

| Specificity | 0.881 (0.001) |

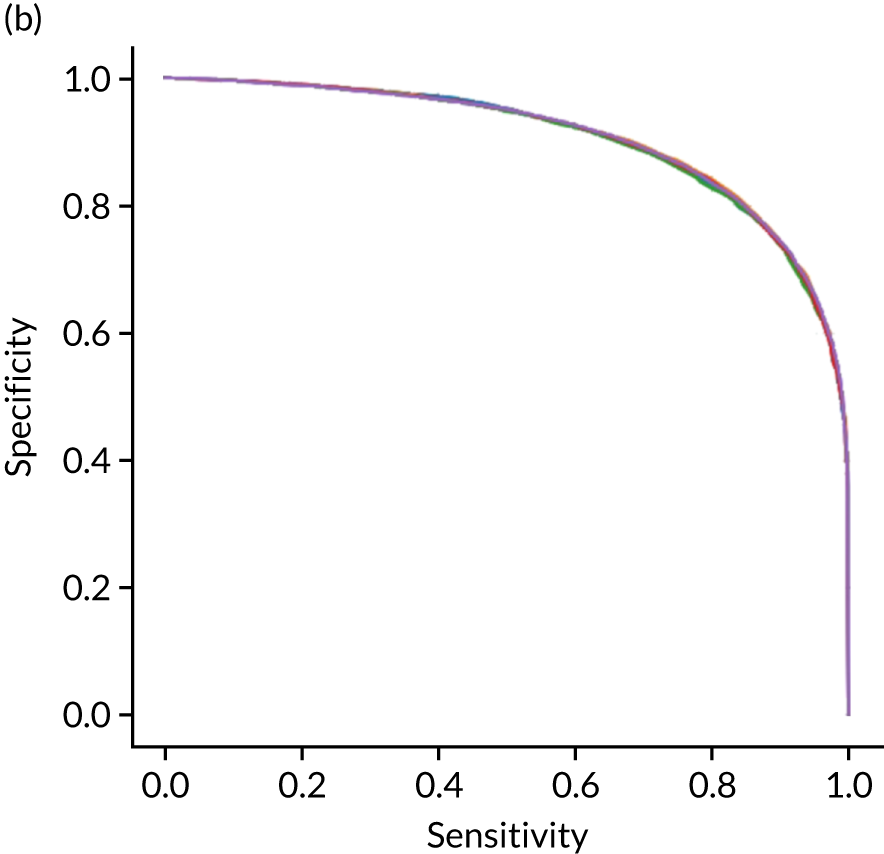

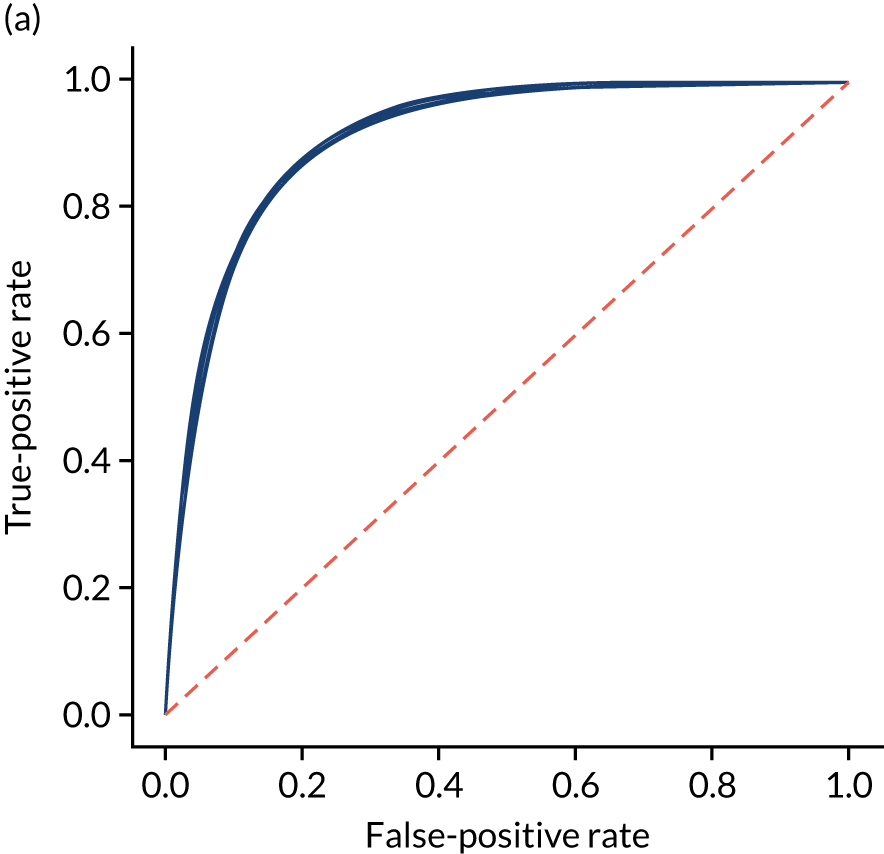

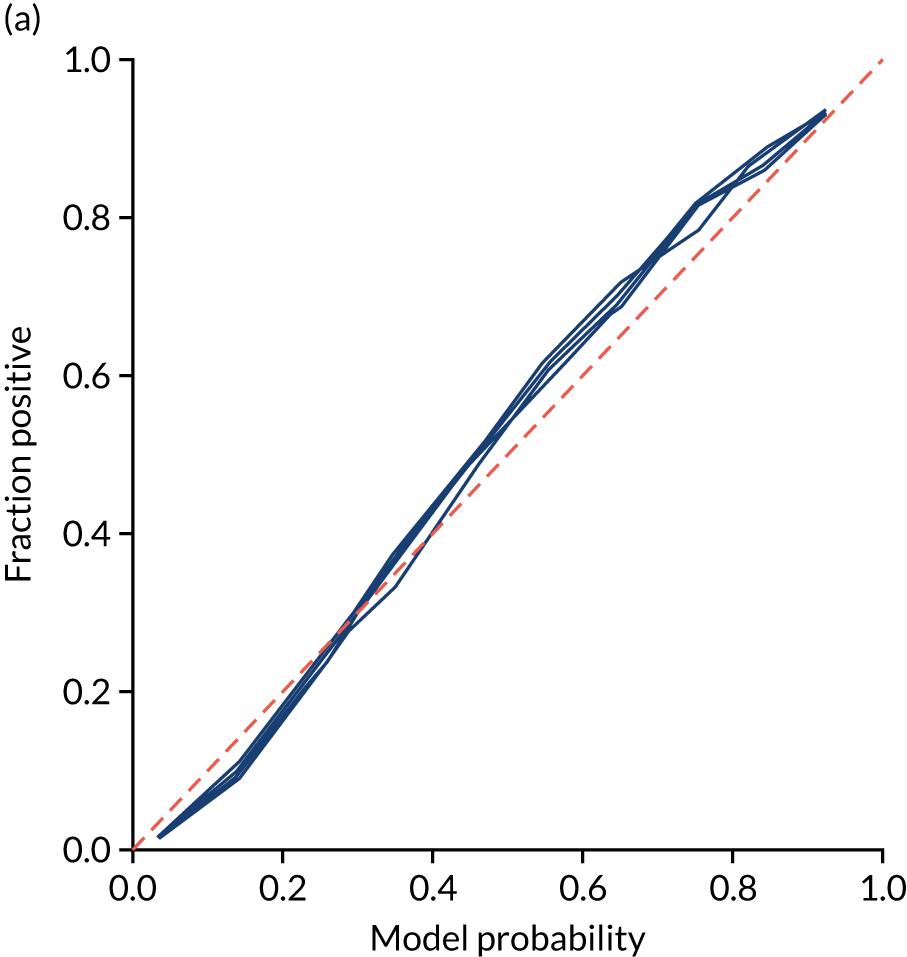

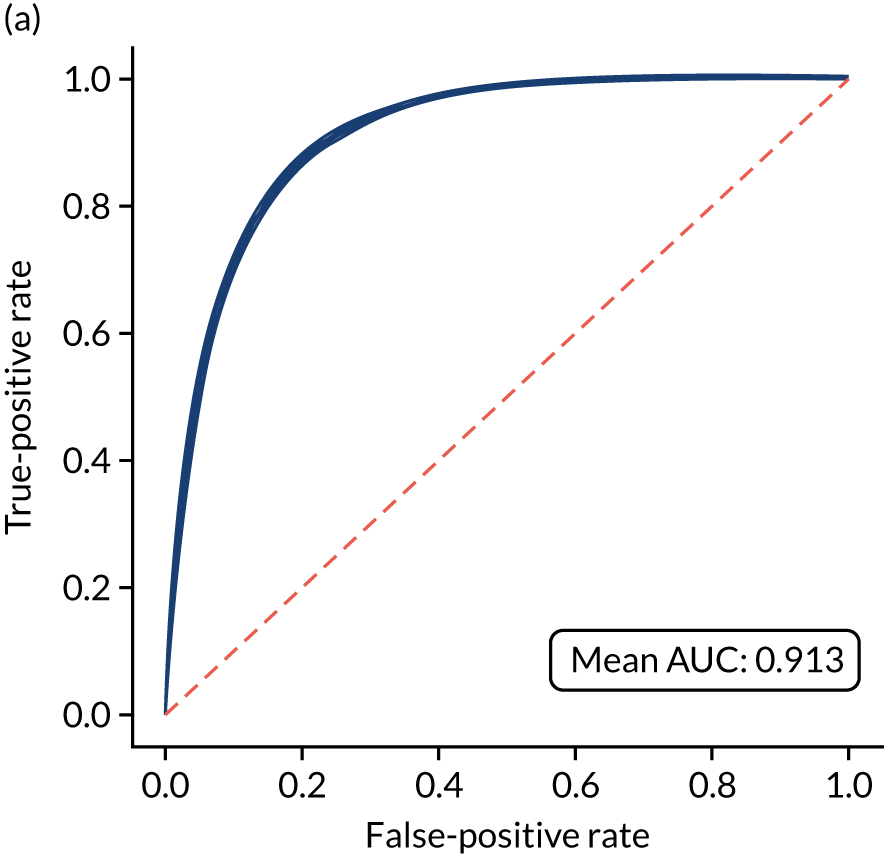

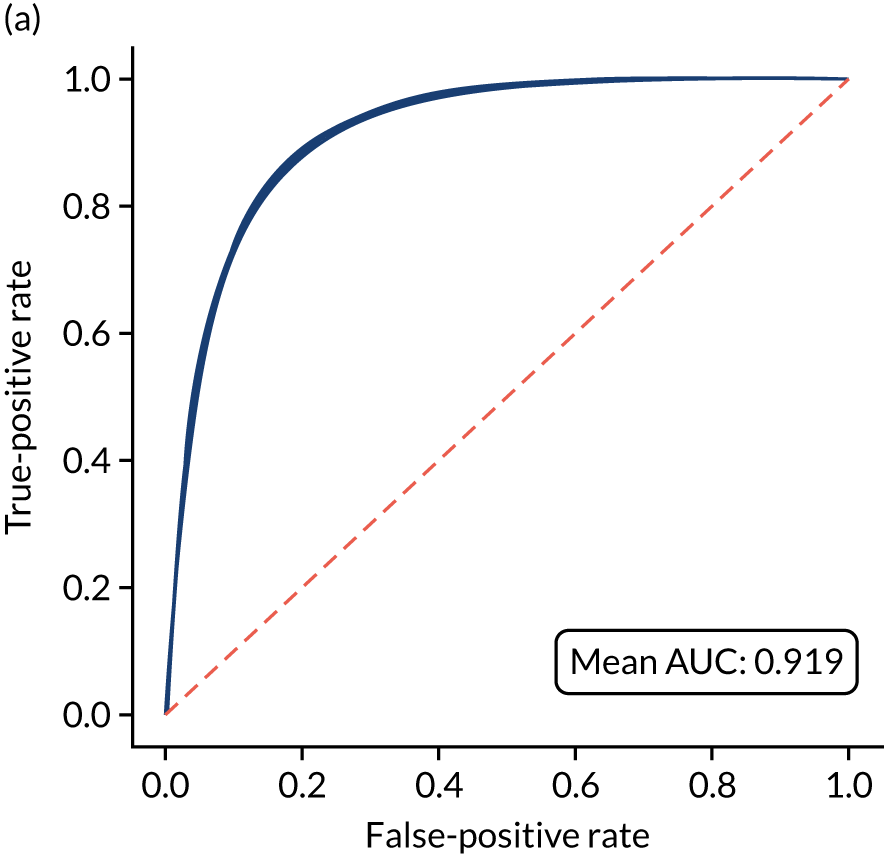

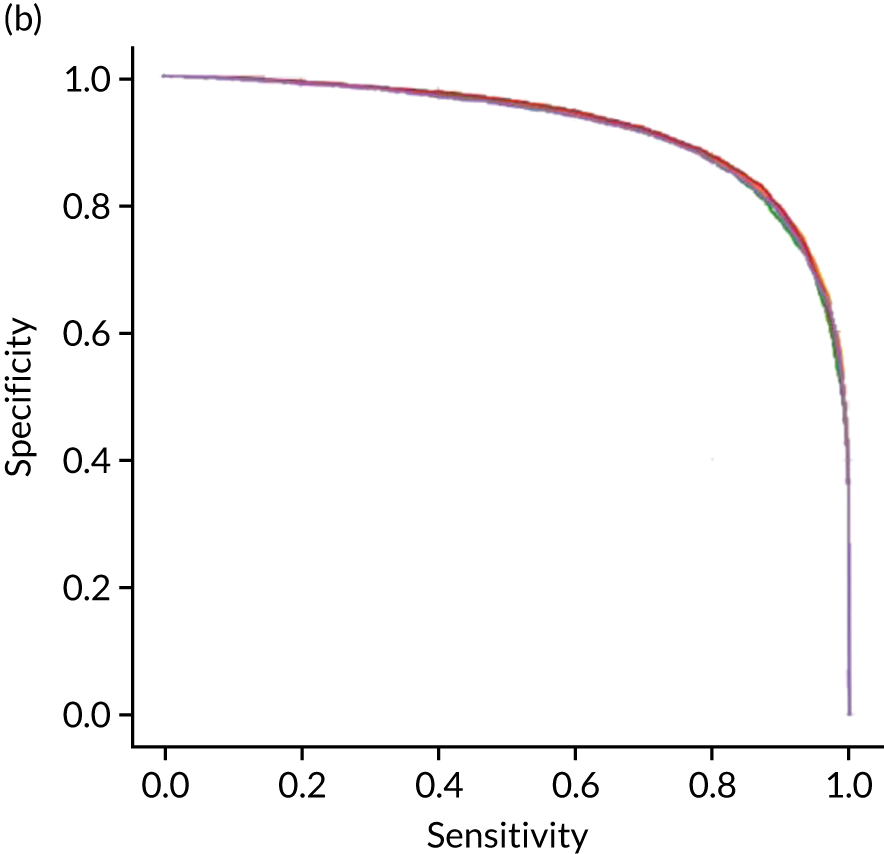

Receiver operating characteristic and sensitivity–specificity curves

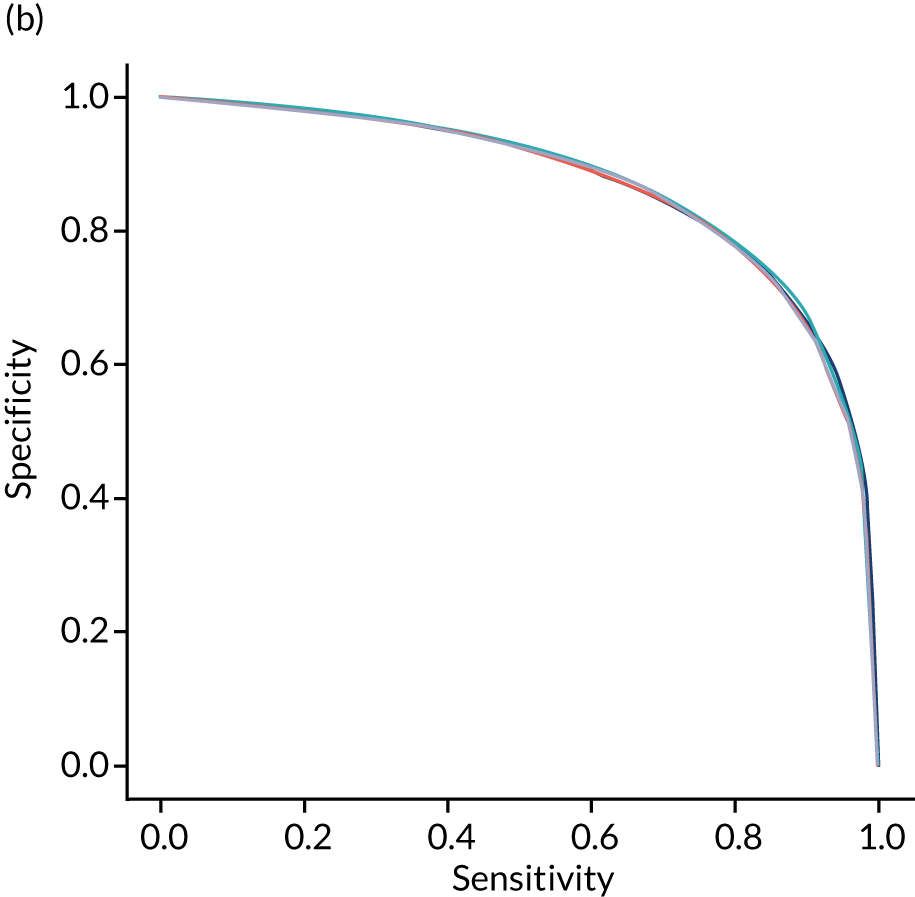

Figure 18 shows ROC and sensitivity–specificity curves for the single-fit logistic regression model. Analyses were performed using fivefold validation. The mean ROC AUC was 0.904. By using a different classification threshold to the default, the model can achieve 82.0% sensitivity and specificity simultaneously.

FIGURE 18.

(a) ROC curve; and (b) sensitivity–specificity curve for a logistic regression model with hospital as a feature (one-hot encoded). Curves show separate fivefold validation results; however, multiple lines are not easily visible as they overlap each other.

Feature weights

Figure 19 shows the top 25 feature weights (i.e. model coefficients using standardised feature values). The probability of receiving thrombolysis is dominated by arrival-to-scan time (a shorter duration increases the probability of receiving thrombolysis) and stroke type (an infarction increases the probability of receiving thrombolysis), and this is followed by disability before stroke (with higher disability scores meaning that a patient is less likely to receive thrombolysis), receiving anticoagulants (reduces probability of receiving thrombolysis), level of consciousness (lower consciousness leads to lower probability of receiving thrombolysis) and best language (poorer language leads to lower probability of receiving thrombolysis). It should be noted that this logistic regression model does not allow for complex interactions (e.g. bell-shaped or U-shaped curves) between feature values and probability of receiving thrombolysis (see Figure 3 as an example, which shows that thrombolysis use is low when stroke severity is either low or high, with thrombolysis use having a high plateau with intermediate stroke severity scores).

FIGURE 19.

Feature weights (coefficients) for a logistic regression model with hospital as a feature (one-hot encoded). Results show mean values from fivefold validation.

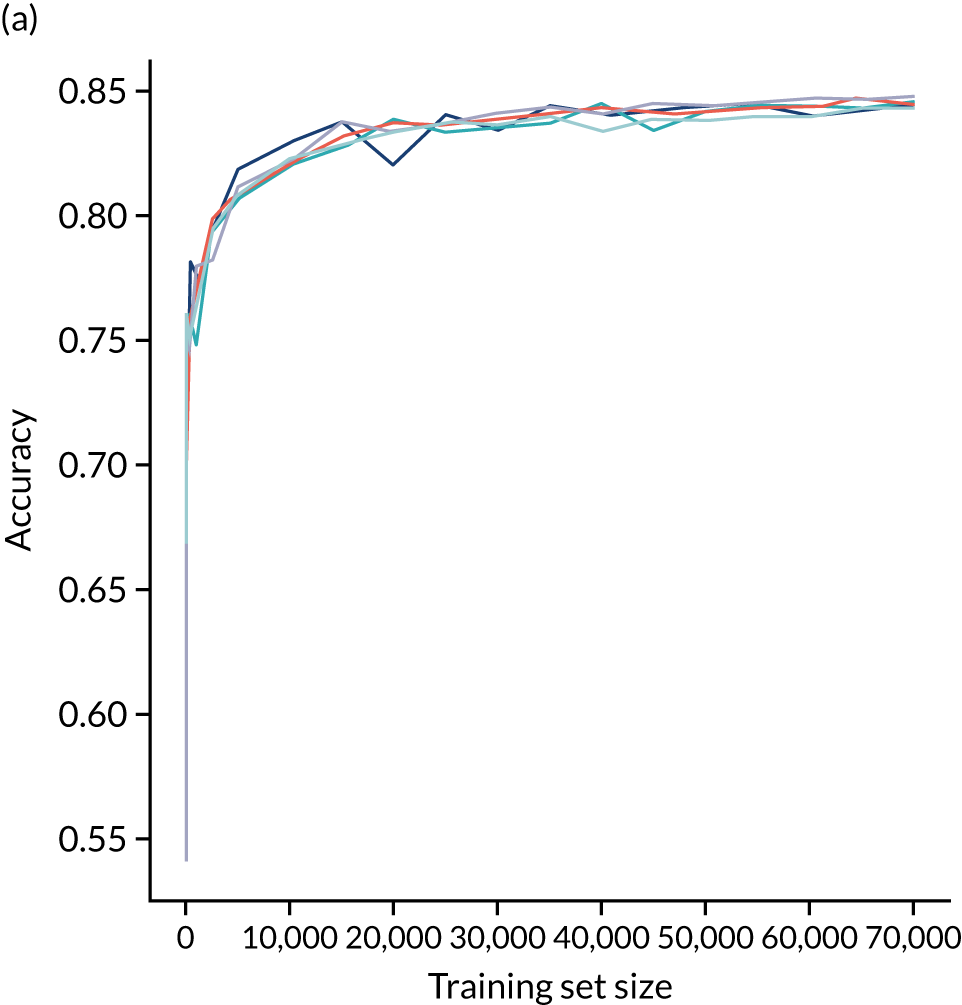

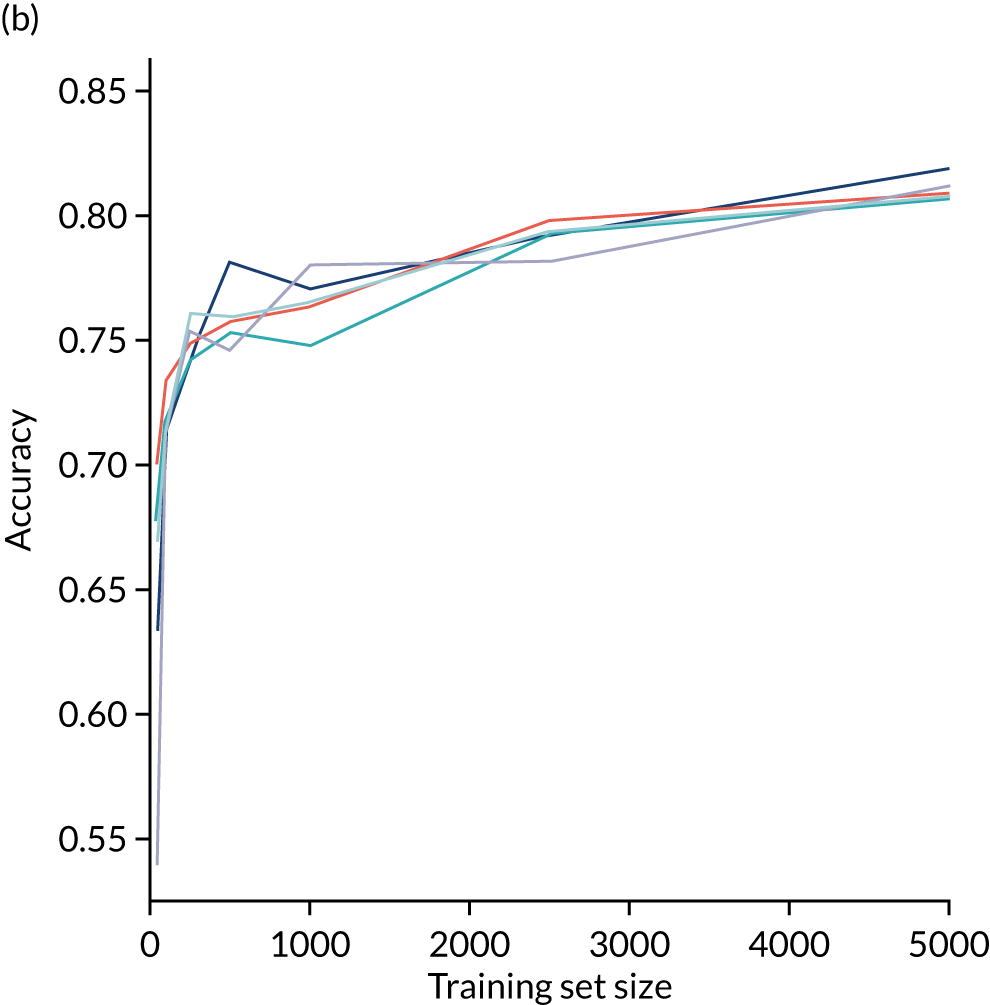

Learning curve

A learning curve shows the relationship between size of training data and accuracy of model. Learning curves that show a clear plateau are indicative of models where accessing more data would not improve model accuracy. For the single-fit logistic regression model, accuracy appears to have reached a plateau with a training set size of 50,000 individuals (Figure 20), suggesting that the accuracy of this model is not limited by the number of training data available.

FIGURE 20.

Learning curve (relationship between training set size and model accuracy) for a logistic regression model with hospital as a feature (one-hot encoded). Results show mean values from 5-fold validation.

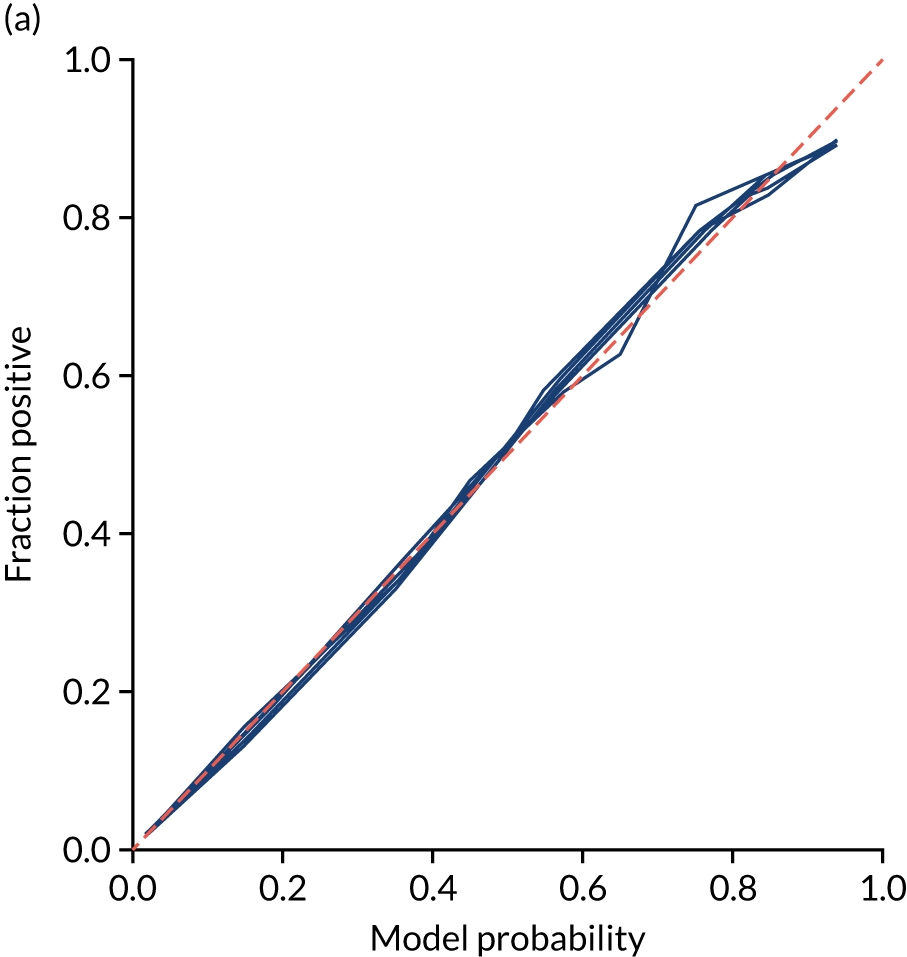

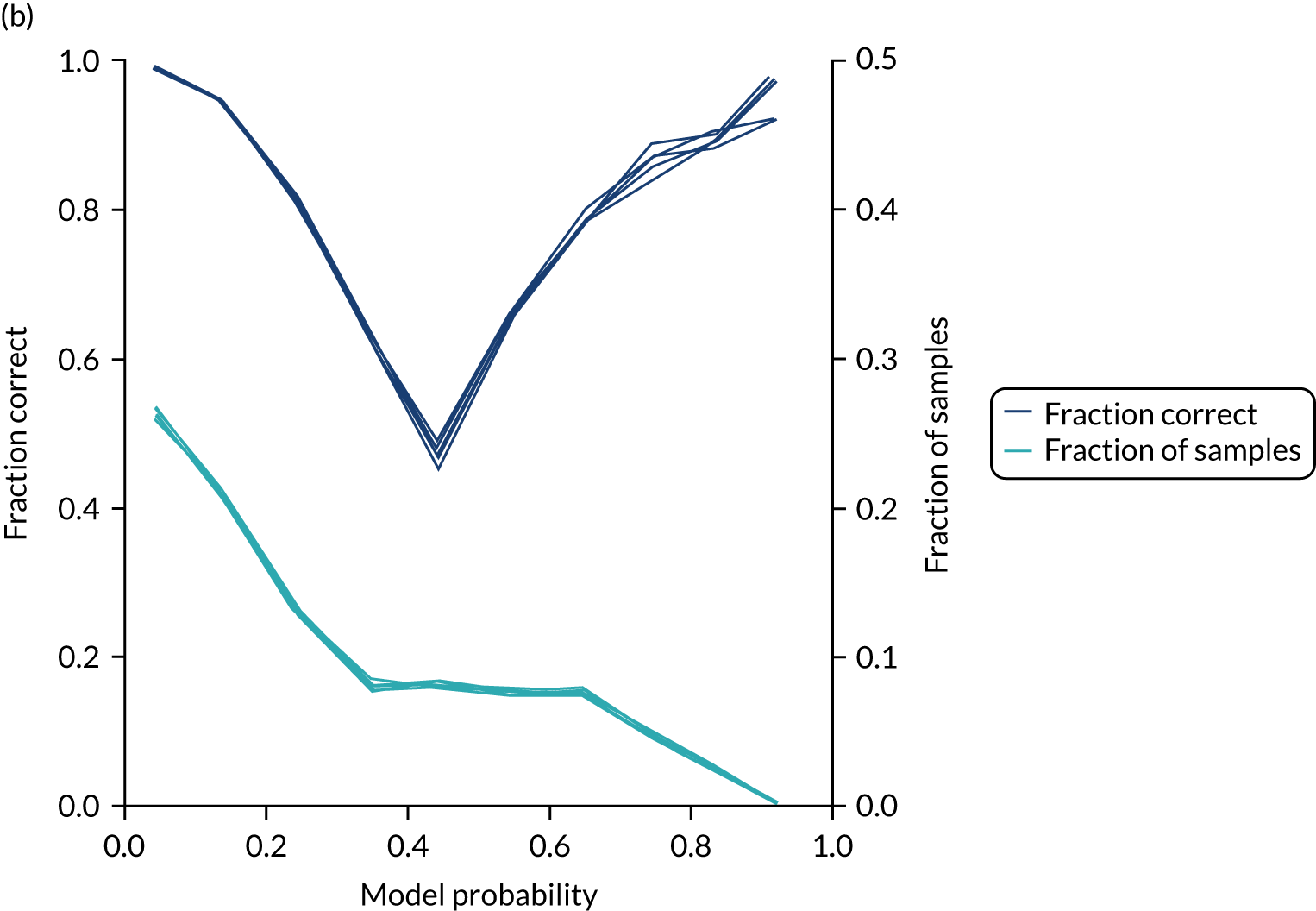

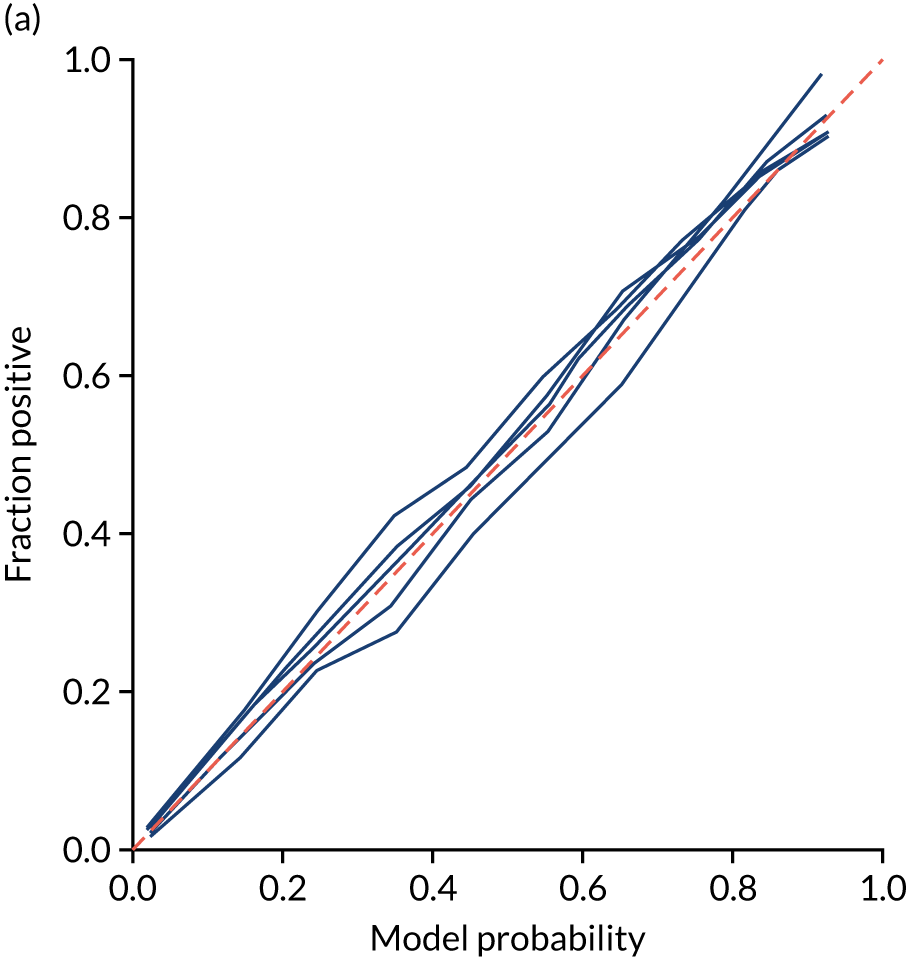

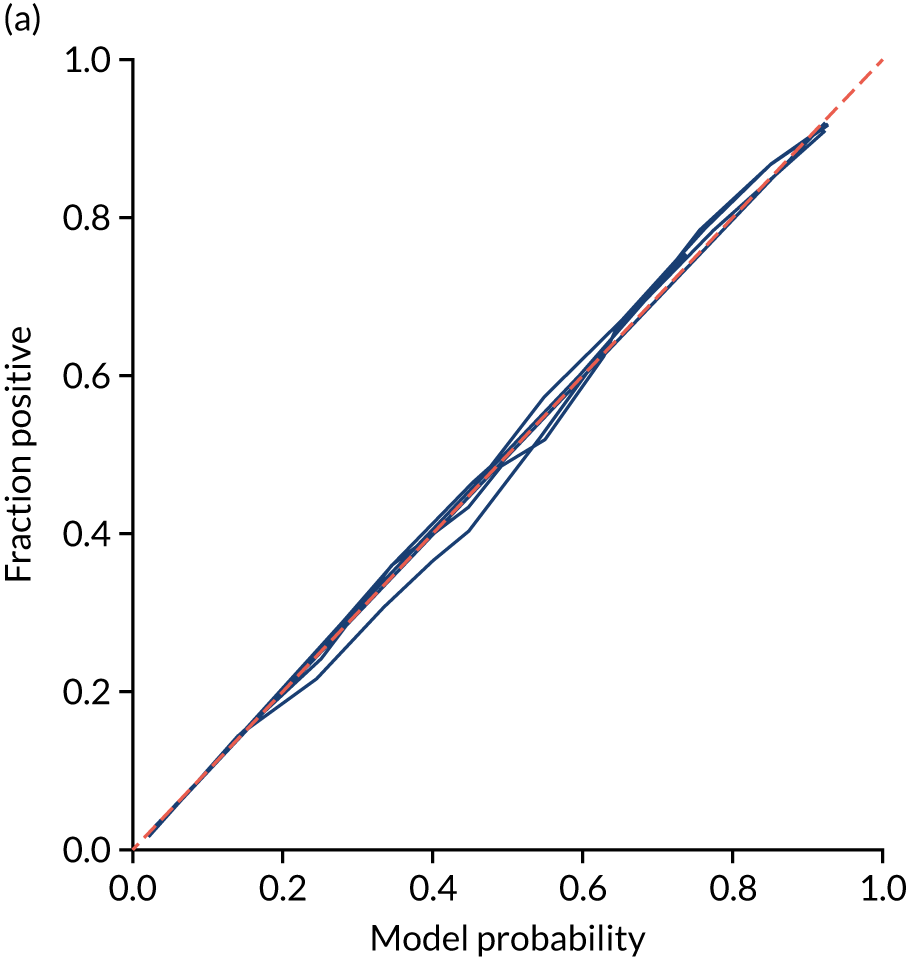

Calibration and assessment of accuracy when model has high confidence

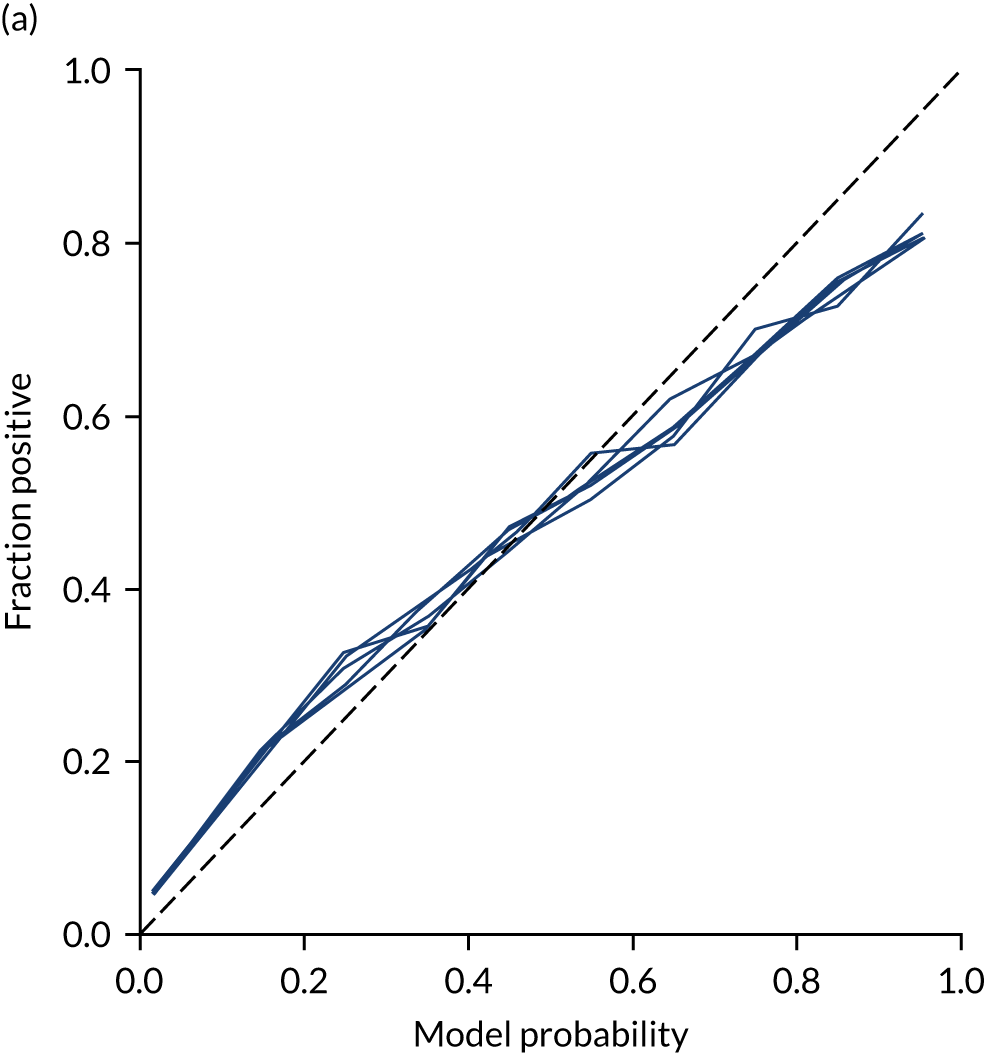

Ideally, machine learning model probability output should be well calibrated with actual incidence of receiving thrombolysis. For example, 9 out of 10 patients with a model prediction of 90% probability of receiving thrombolysis should receive thrombolysis. Calibration may be tested by separating output into probability bins and comparing mean probability and the proportion of patients receiving thrombolysis.

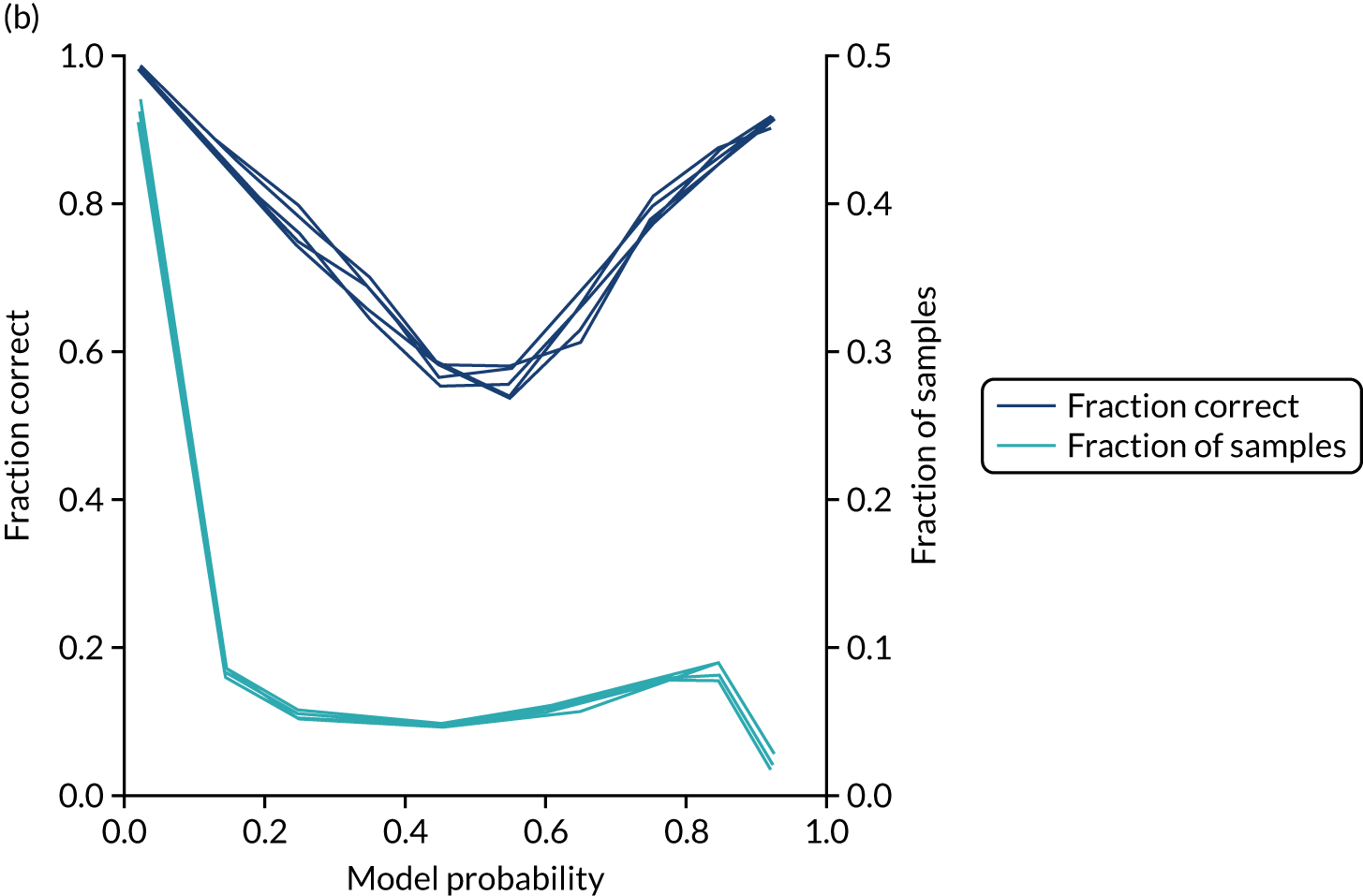

This is shown for the single-fit logistic regression model in Figure 21a. A well-calibrated model has a good match between the mean probability (x-axis) and the fraction of those patients with a positive output category (y-axis). We can see that the single-fit logistic regression model is well calibrated (see Figure 21b).

FIGURE 21.

(a) Model probability calibration; and (b) model accuracy vs. confidence for a logistic regression model with hospital as a feature (one-hot encoded). Results show separate fivefold validation results.

In addition, the proportion that is correct may be tested against predicted probability, that is, if a model is well calibrated, then the proportion that is correct should align with the probability of classification of the ‘most likely’ predicted class (e.g. for those individuals given a 10% probability of receiving thrombolysis, 90% should be correct in a well-calibrated model). This is shown for the single-fit logistic regression model in Figure 21b. The light blue line is a distribution function that shows the spread of instances that have a model probability output. The dark blue line shows the fraction of instances that are correctly predicted across the different model probability values. This is shown as a V-shaped relationship, which means, as we would expect, getting correct classifications at the two extremes of the probabilities (< 0.2 and > 0.8) and fewer correct classifications when the model is less certain (0.4–0.6).

Although the overall accuracy of the model is 83.2%, the accuracy of those 60% samples with at least 80% confidence in prediction is 89.6% (calculated separately).

Logistic regression models fitted to individual hospital stroke teams

As an alternative to using hospital stroke team as a feature in the model, models may be fitted to each hospital separately. When we fitted models, we standardised data for each hospital individually and calibrated each model so that a threshold was used that gave the same thrombolysis use rate as observed for that hospital.

Fitting models to individual hospitals has the advantage that each model may learn the hospital-specific relationship between features and probability of thrombolysis, but the disadvantage that each model has a much smaller number of data to fit to than a single-fit model.

Accuracy

Accuracy of the model (Table 7) was lower than in the single-fit model (overall accuracy 82.6% vs. 83.3%, and ROC-AUC 0.870 vs. 0.904 compared with a single-fit model).

| Accuracy measure | Mean (95% CI)a |

|---|---|

| Actual positive rate | 0.295 (0.000) |

| Predicted positive rate | 0.296 (0.000) |

| Accuracy | 0.801 (0.002) |

| Precision | 0.671 (0.003) |

| Recall/sensitivity | 0.672 (0.003) |

| F1 score | 0.672 (0.003) |

| Specificity | 0.862 (0.003) |

Receiver operating characteristic and sensitivity–specificity curves

Figure 22 shows ROC and sensitivity–specificity curves for logistic regression models fitted to individual hospitals. The mean ROC AUC was significantly lower than a single-fit model (0.870 vs. 0.904) and sensitivity–specificity trade-off was poorer, with the model achieving 78.9% sensitivity and specificity simultaneously (compared with 82.0% for the single-fit model).

FIGURE 22.

(a) ROC curve; and (b) sensitivity–specificity curve for logistic regression models fitted to individual hospitals. Curves show separate fivefold validation results; however, multiple lines are not easily visible as they overlap each other.

Feature weights

As models are fitted to each hospital individually, we do not report overall feature weights.

Learning curve

As different hospitals had different numbers of data available for fitting, a learning curve was not constructed.

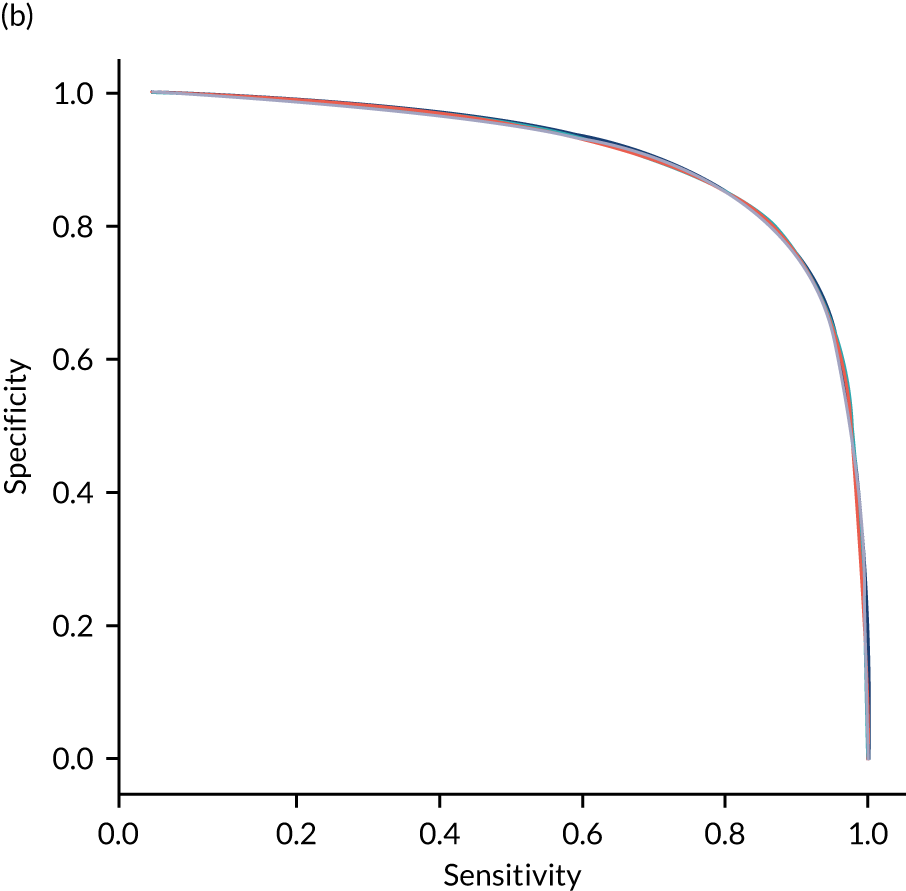

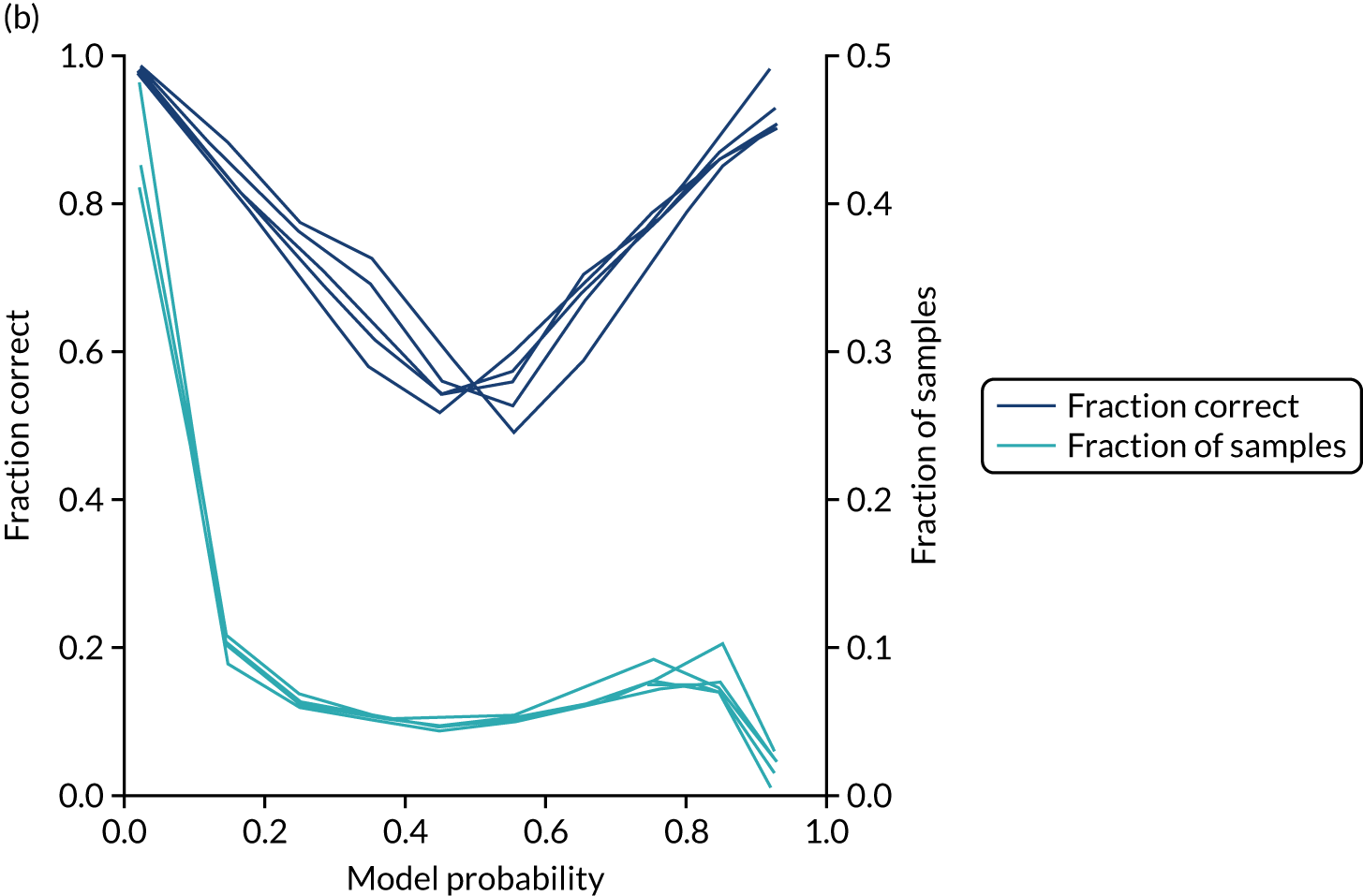

Calibration and assessment of accuracy when model has high confidence

Logistic regression models fitted to individual hospitals were not as well calibrated as the single-fit mode (Figure 23). For example, among individuals with a predicted probability of receiving thrombolysis of 80–90%, the mean probability of receiving thrombolysis was 85.1%, but only 74.6% actually received thrombolysis.

FIGURE 23.

(a) Model probability calibration; and (b) model accuracy vs. confidence for logistic regression models fitted to individual hospitals. Results show separate fivefold validation results.

Machine learning: random forest

What is in this section?

This section describes experiments predicting, using random forest, whether or not a patient will receive thrombolysis in a given hospital.

This section contains the following analyses: a random forest classifier fitting to all stroke teams together; a random forest classifier fitting to hospital-specific models; a comparison of the level of agreement in clinical decision-making between hospitals; a comparison of thrombolysis rates of benchmark hospitals with actual thrombolysis rates; a comparison of similarity in decision-making between hospitals; a comparison of hospital decision-making with the benchmark hospital set, identifying individual patients of interest; and an analysis to find similar patients who are treated differently within the same hospital. Details of these analyses are provided below.

A random forest classifier fitting to all stroke teams together

This analysis involved a random forest classifier that is fitted to all data together, with each stroke team being a one-hot encoded feature. The models were analysed for (1) various accuracy scores, (2) a ROC AUC, (3) a sensitivity–specificity curve, (4) feature importance, (5) learning rate and (6) model calibration.

A random forest classifier fitting hospital-specific models

This analysis involved a random forest classifier that has a fitted model for each hospital. The models were analysed for (1) various accuracy scores, (2) a ROC AUC, (3) a sensitivity–specificity curve and (4) model calibration.

A comparison of the level of agreement in clinical decision-making between hospitals using random forest models

This analysis passed all patients through all hospital decision models and investigated if there was a level of agreement between hospitals.

Benchmark hospitals

In this analysis, we identified the 30 hospitals with highest thrombolysis use, using the same 10,000 reference set of patients at all hospitals (note the 10,000 reference set of patients is a random sample of patients arriving within 4 hours of known stroke onset). For all hospitals and all patients, we predicted the decisions made by those 30 benchmark hospitals and took a majority decision to determine whether or not each hospital’s patients would be given thrombolysis. We then compared these benchmark thrombolysis rates with actual thrombolysis rates.

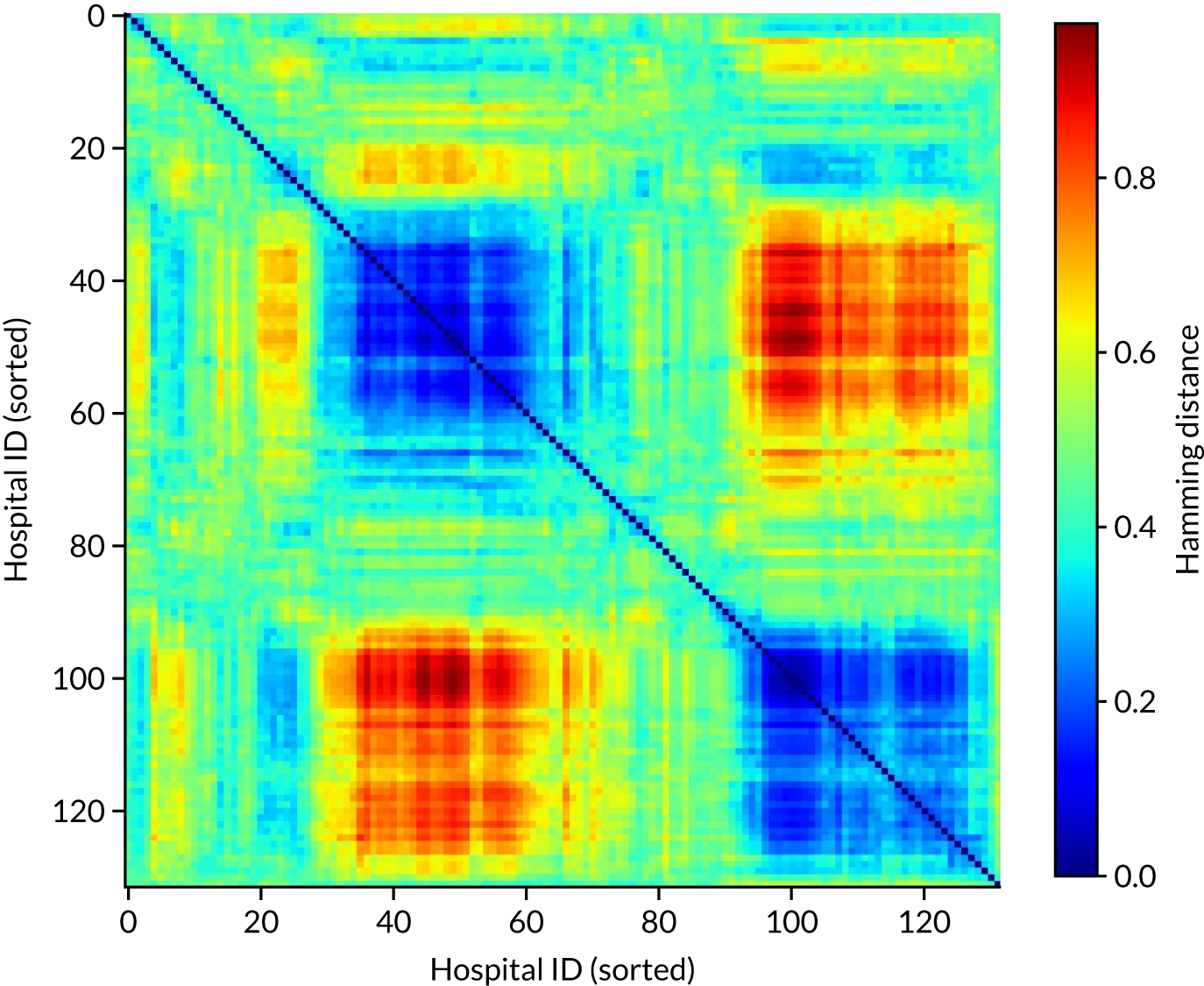

Grouping hospitals by similarities in decision-making

In this analysis, we compared the extent of similarity in decision-making between hospitals, and identified groups of hospitals making similar decisions.

Thrombolysis hospitals versus benchmark diagrams and patient vignettes

In this analysis, we Iooked at the differences in decision-making between hospitals and the benchmark set and asked how much overlap there is and how much difference. We created synthetic patient vignettes (based on SSNAP data) to illustrate examples of differences in decision-making (e.g. hospitals with lower-than-usual use of thrombolysis in either patients with milder strokes or patients with prior disability).

Similar patients who are treated differently within the same hospital

In this analysis, within a hospital, we identified patients who did not receive thrombolysis, when the model had high confidence that they would have, and then looked for the most similar patients who did actually receive thrombolysis.

Detailed code and results are available online. 33

Key findings in this section

Using a single model fitted to all hospitals, we found the following:

-

The overall accuracy was 84.6%.

-

The model can achieve 83.7% sensitivity and specificity simultaneously.

-

The ROC AUC was 0.914.

Using models fitted to each hospital, we found the following:

-

The overall accuracy was 84.3%.

-

The model can achieve 83.2% sensitivity and specificity simultaneously.

-

The ROC AUC was 0.906.

When comparing predicted decisions between hospitals, we found the following:

-

It is easier to find majority agreement on who not to thrombolyse than who to thrombolyse. A total of 77.5% of all patients had a treatment decision that was agreed by 80% of hospitals. Of patients who were not given thrombolysis, 84.6% had agreement from 80% of hospitals. Of patients who were given thrombolysis, 60.4% had agreement from 80% of hospitals.

-

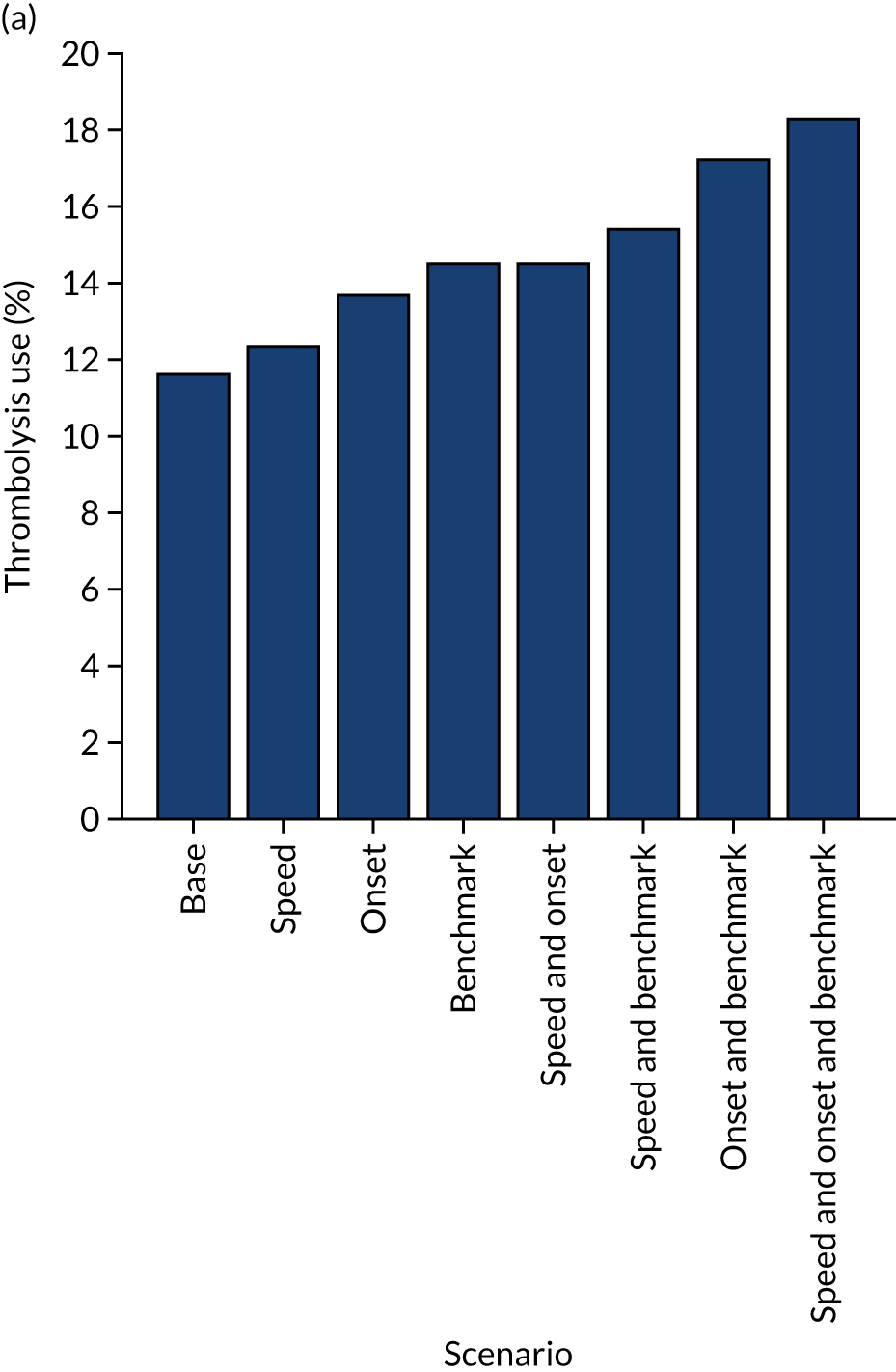

A benchmark set of hospitals was created by passing the same 10,000 patient cohort set through all hospitals and selecting the 30 hospitals with the highest thrombolysis use. If all thrombolysis decisions were made by a majority vote of these 30 hospitals, then thrombolysis use (in those arriving within 4 hours of known stroke onset) would be expected to increase from 29.5% to 36.9%.

-

Decisions at each hospital may be compared to the benchmark majority vote decision.

-

These models may be used to identify the following types of patients:

-

Patients for whom the hospital model has high confidence in prediction but who, in reality, were treated the other way (e.g. a patient who appears to have high suitability for thrombolysis, but did not receive it or, conversely, a patient who appears to not be suitable for thrombolysis, but received it).

-

Patients who were treated in accordance with the prediction of the hospital model but whom the majority of the benchmark hospitals would have treated differently.

-

-

Patient vignettes may be constructed to illustrate particular types of patients, for example a patient in a hospital that has low treatment rates of patients with previous disability. These vignettes are potentially useful for clinical discussions.

-

When hospitals are making decisions for a set of common patients, hospitals may be grouped according to the proportion of patients who would be expected to have the same thrombolysis decision. This grouping is made clearer by using a subset of patients with higher divergence of decisions (i.e. those patients who 30–70% of hospitals would thrombolyse).

-

For patients not treated as expected in real life, we can use the structure of the random forest model to find similar patients who were treated as expected.

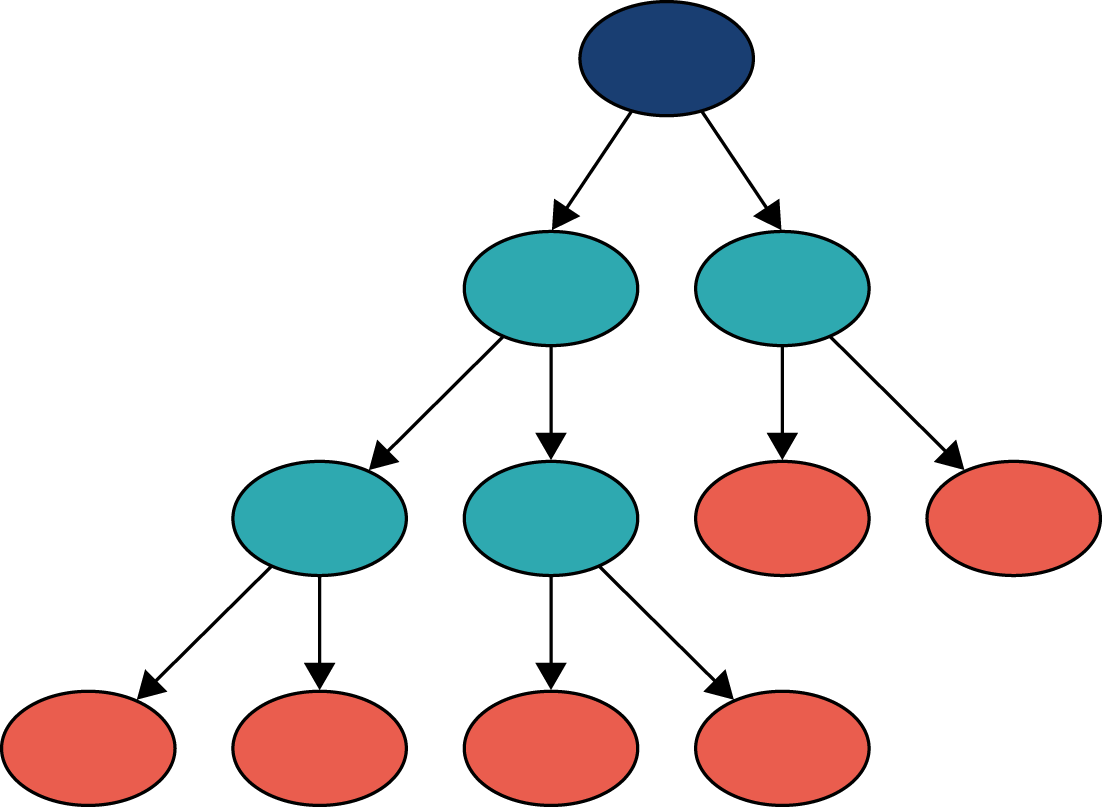

Introduction to random forest methodology

A random forest is an example of an ensemble algorithm, and the outcome (i.e. whether or not a patient receives thrombolysis) is decided by a majority vote of other algorithms. In the case of a random forest, these ‘other algorithms’ are decision trees (each of which is trained on a random subset of examples and a random subset of features). A random forest is an ensemble of decision trees. Each tree is considered a weak learner, but the collection of trees together forms a robust classifier that is less prone to overfitting than a single full decision tree.

We can think of a decision tree as similar to a flow chart. In Figure 24, we can see that a decision tree comprises a set of nodes and branches. The node at the top of the tree is called the root node, and the nodes at the bottom are leaf nodes. Every node in the tree, except for the leaf nodes, splits into two branches leading to two nodes that are further down the tree. A path through the decision tree always starts at the root node. Each step in the path involves moving along a branch to a node lower down the tree. The path ends at a leaf node where there are no further branches to move along. Leaf nodes will each have a particular classification (e.g. patient receives thrombolysis or does not receive thrombolysis).

FIGURE 24.

Schematic of a decision tree showing root node (dark blue), splitting nodes (light blue) and terminal leaf nodes (orange). A random forest takes the majority vote from a multitude of decision trees.

The path taken through a tree is determined by the rules associated with each node. The decision tree learns these rules during the training process. The goal of the training process is to find rules for each node, such that a leaf node contains samples from one class only. The leaf node a patient ends up in determines the predicted outcome of the decision tree.

Specifically, given some training data (i.e. variables and outcomes), the decision tree algorithm will find the variable that is most discriminative (i.e. provides the best separation of data based on the outcome). This variable will be used for the root node. The rule for the root node consists of this variable and a threshold value. For any data point, if the value of the variable is less than or equal to the threshold value at the root node, then the data point will take the left branch, and if it is greater than the threshold value, then it will take the right branch. The process of finding the most discriminative feature and a threshold value is repeated to determine the rules of the internal nodes lower down the tree. Once all data points in a node have the same outcome, then that node is a leaf node, representing the end of a path through a tree. The training process is complete once all paths through the tree end in a leaf node.

A random forest is an ensemble of decision trees. During training, the algorithm will select, with replacement, a random sample of the training data and, using a subset of the features, will train a decision tree. This process is repeated many times, with the exact number being a parameter of the algorithm corresponding to the number of decision trees in the random forest.

The resulting random forest is a classifier that can be used to determine whether a data point belongs to class 0 (i.e. patient does not receive thrombolysis) or to class 1 (i.e. patient receives thrombolysis). The path of the data point through every decision tree ends in a leaf node. If there are 100 decision trees in the random forest, and the data point’s path ends in a leaf node with class 0 in 30 of the decision trees and a leaf node of class 1 in 70, then the random forest takes the majority outcome and classifies the data point as belonging to class 1 (i.e. patient receives thrombolysis) with a probability of 0.7 [number of trees voting class 1/total number of trees (70/100)].

The random forest classifier used was from scikit-learn. Default settings were used, apart from balanced class weighting, where weights for samples are inversely proportional to the frequency of the class label.

Random forest model fitted as a single model

These results are for a model that is fitted to all hospitals simultaneously, with hospital encoded as a ‘one-hot’ feature. The random forest model is fitted to raw (non-standardised) data.

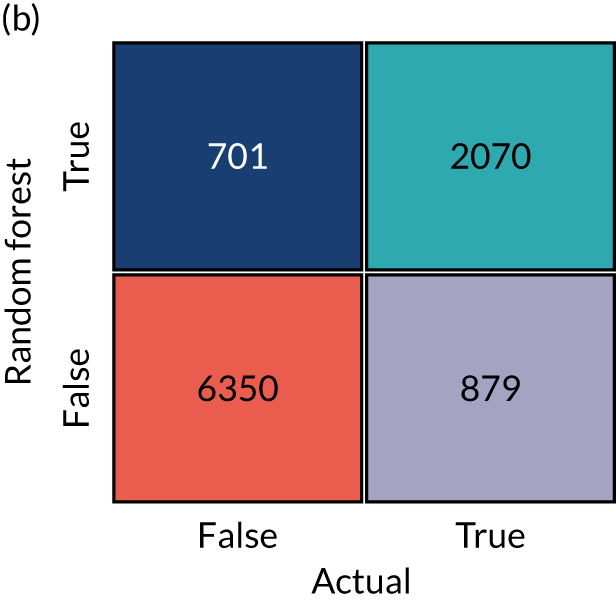

Accuracy

The random forest model had an overall accuracy of 84.6%. With a default classification threshold, sensitivity (74.2%) is lower than specificity (88.9%), which is likely to be due to the imbalance of class weights in the data set. Full accuracy measures are given in Table 8.

| Accuracy measure | Mean (95% CI)a |

|---|---|

| Observed positive rate | 0.295 (0.000) |

| Predicted positive rate | 0.297 (0.001) |

| Accuracy | 0.846 (0.002) |

| Precision | 0.738 (0.003) |

| Recall/sensitivity | 0.742 (0.002) |

| F1 score | 0.740 (0.002) |

| Specificity | 0.889 (0.002) |

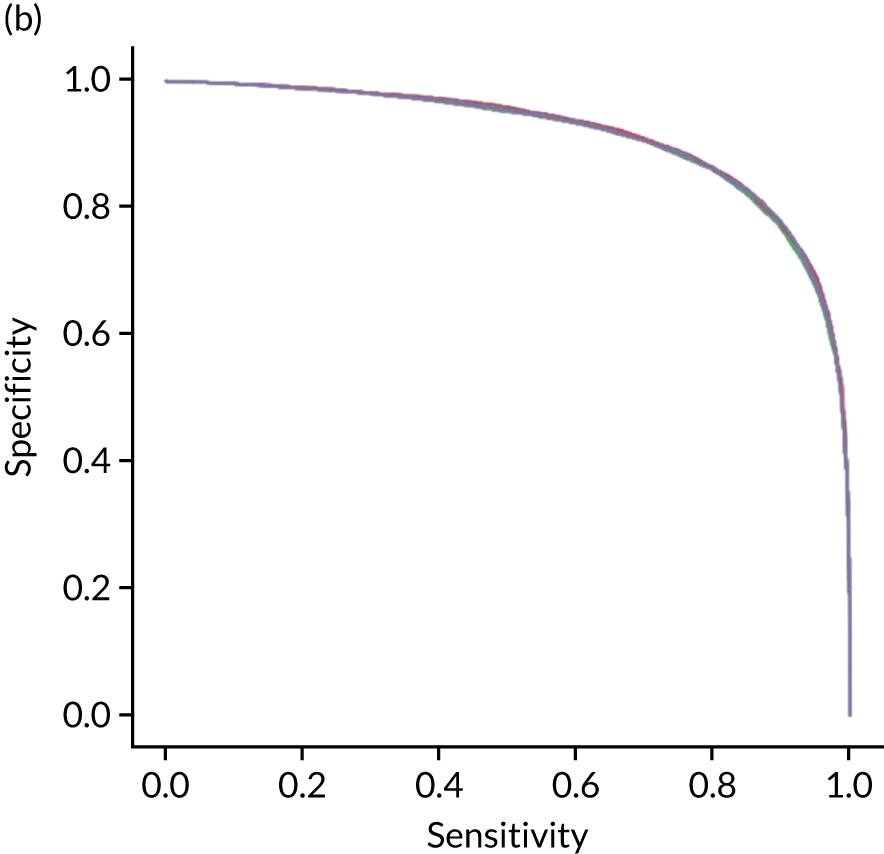

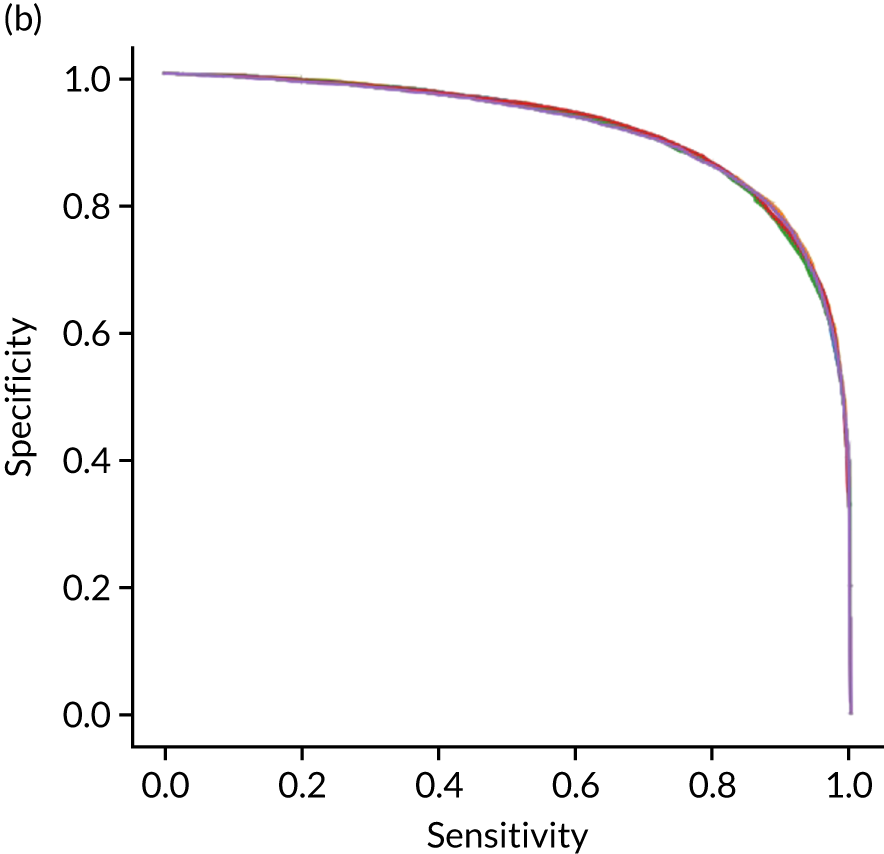

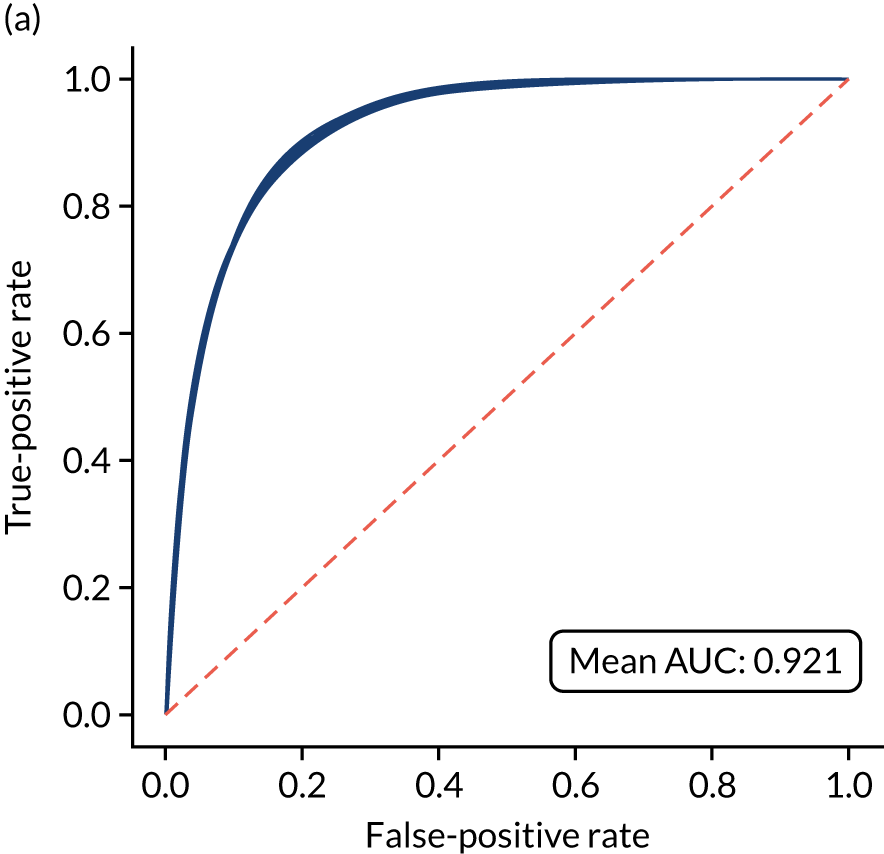

Receiver operating characteristic and sensitivity–specificity curves

Figure 25 shows ROC and sensitivity–specificity curves for the single-fit random forest model. Analyses were performed using fivefold validation. The mean ROC AUC was 0.914 (compared with 0.904 for the single-fit logistic regression model). By using a different classification threshold to the default, the model can achieve 83.7% sensitivity and specificity simultaneously (compared with 82.0% for the single-fit logistic regression model).

FIGURE 25.

(a) ROC curve; and (b) sensitivity–specificity curve for a random forest model with hospital as a feature (one-hot encoded). Curves show separate fivefold validation results; however, multiple lines are not easily visible as they overlap each other.

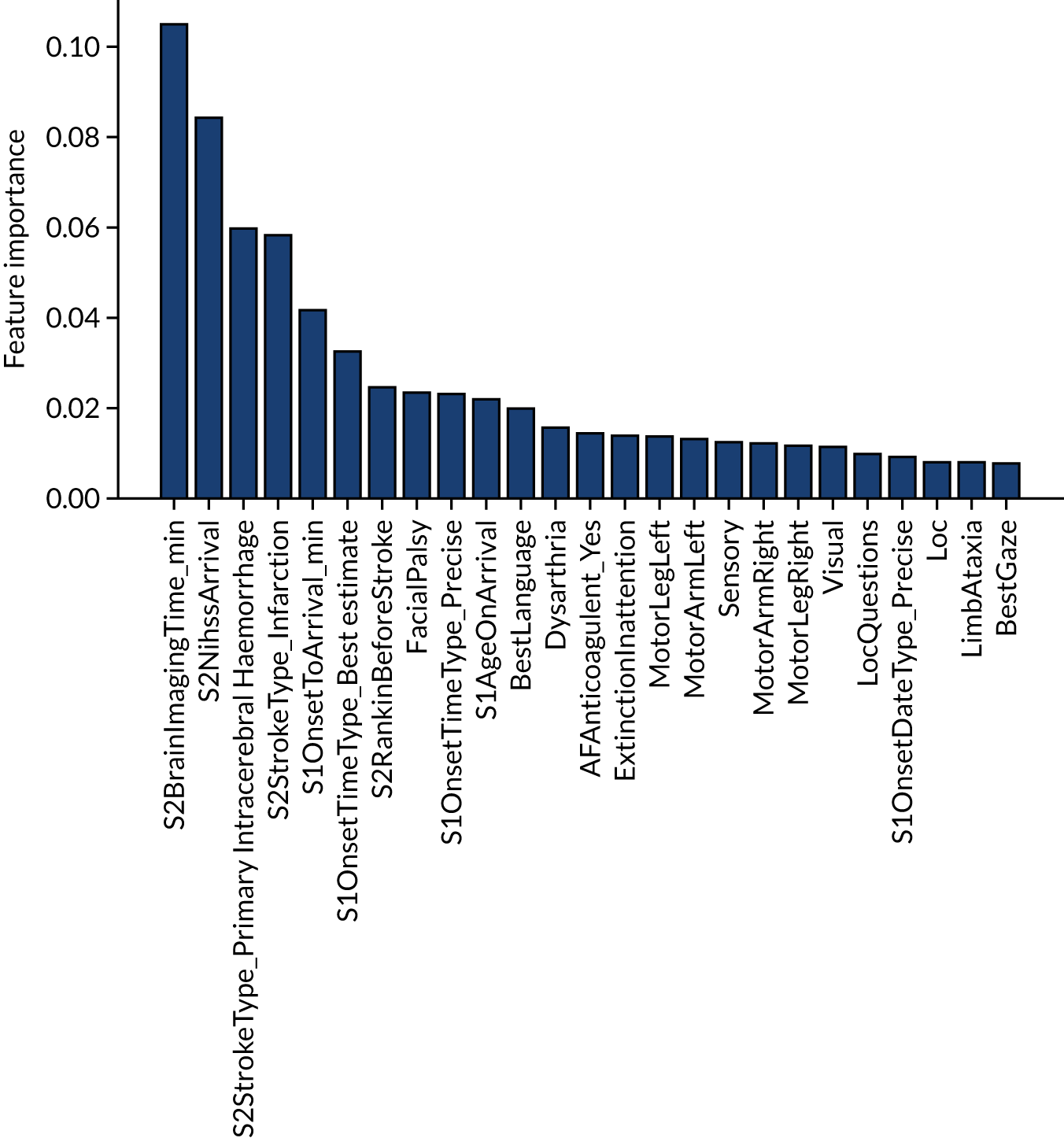

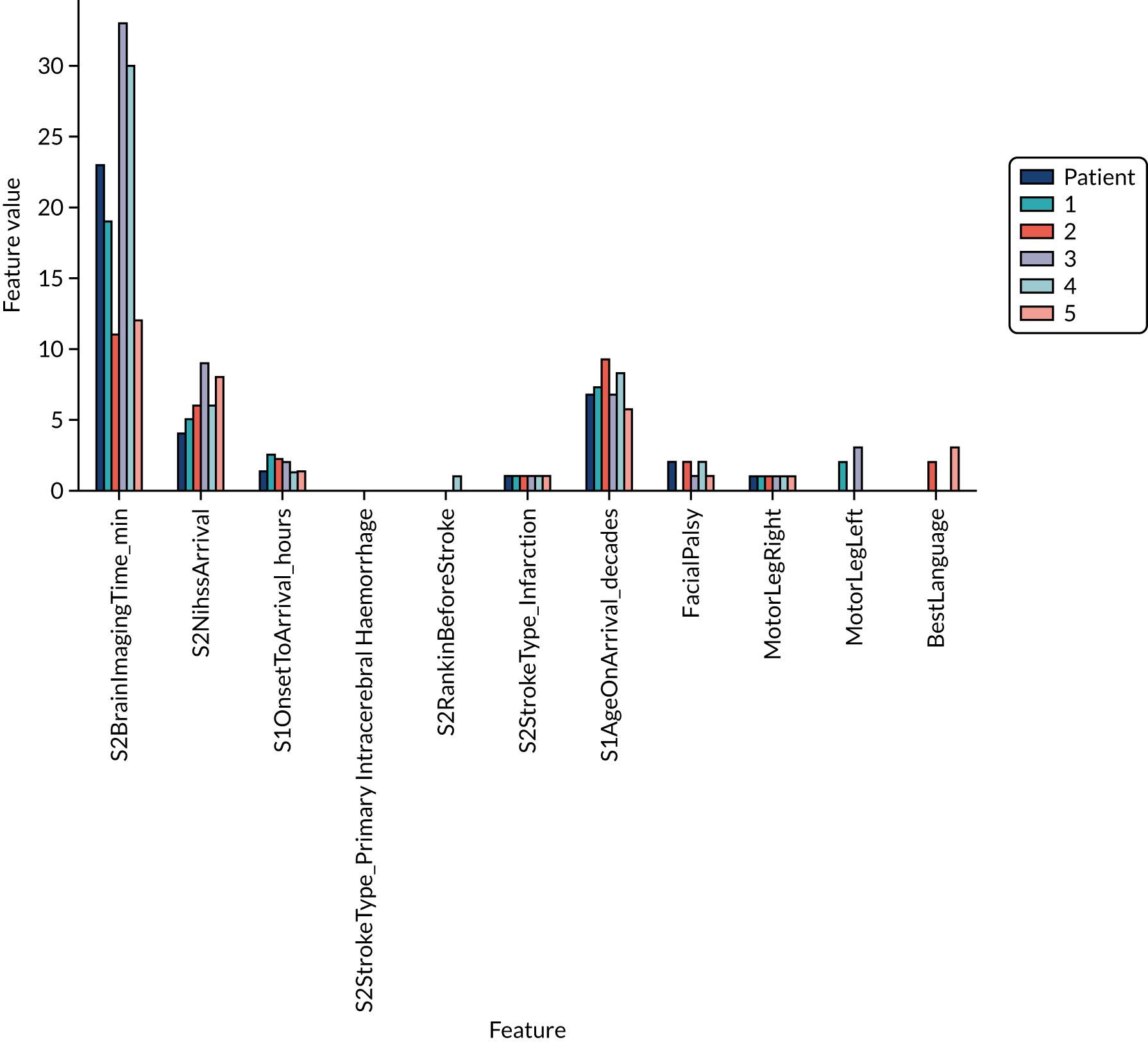

Feature importance

The influence of features in a random forest model may be calculated in various ways. Here we report the standard scikit-learn feature importance, which is based on how much each feature, on average, reduces the impurity of the split data. The feature importance of the 25 most influential features is shown in Figure 26. The five most influential features are arrival-to-scan time, stroke severity on arrival, stroke type, onset-to-arrival time and whether onset has been determined precisely or has been estimated.

FIGURE 26.

Feature importance for a random forest model with hospital as a feature (one-hot encoded). Results show mean values from fivefold validation.

The single-fit random forest and single-fit logistic regression models have the same two features in their top three: (1) arrival-to-scan time and (2) stroke type. Stroke severity on arrival is present in the random forest model top three but not the logistic regression, and this is likely to be because of the logistic regression not being able to model the non-linear relationship between stroke severity on arrival and use of thrombolysis.

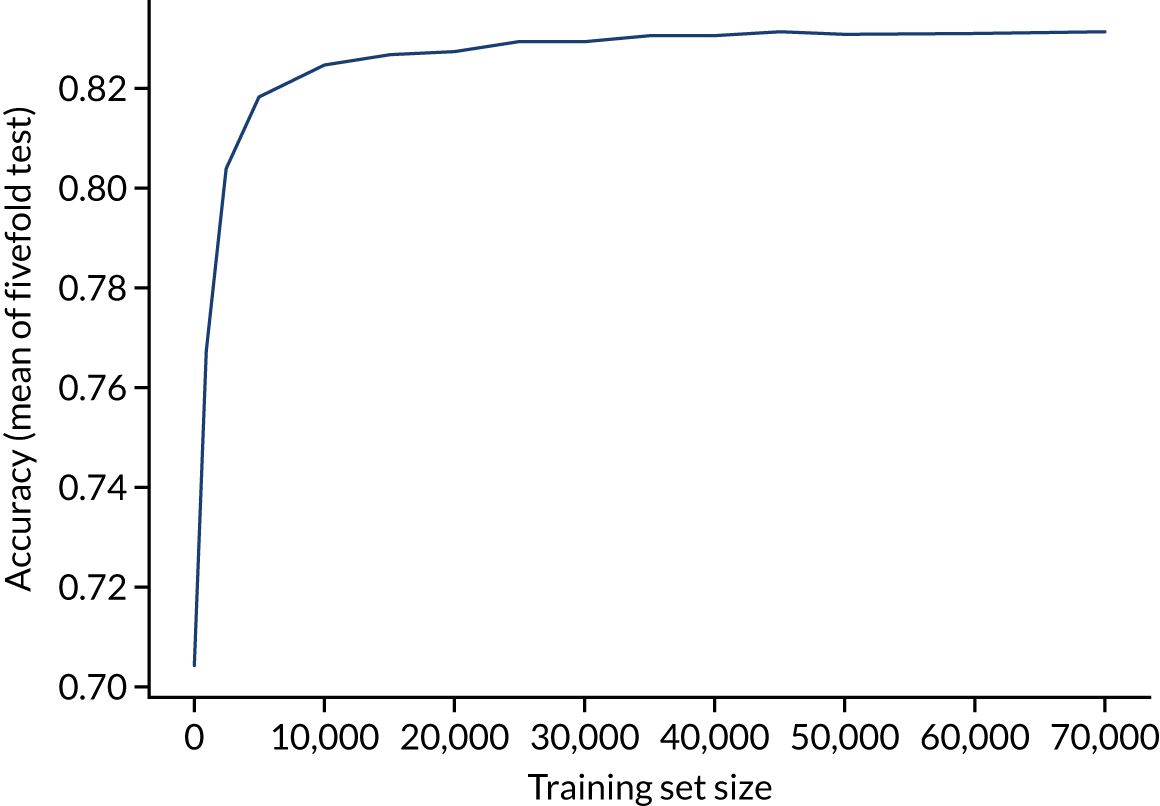

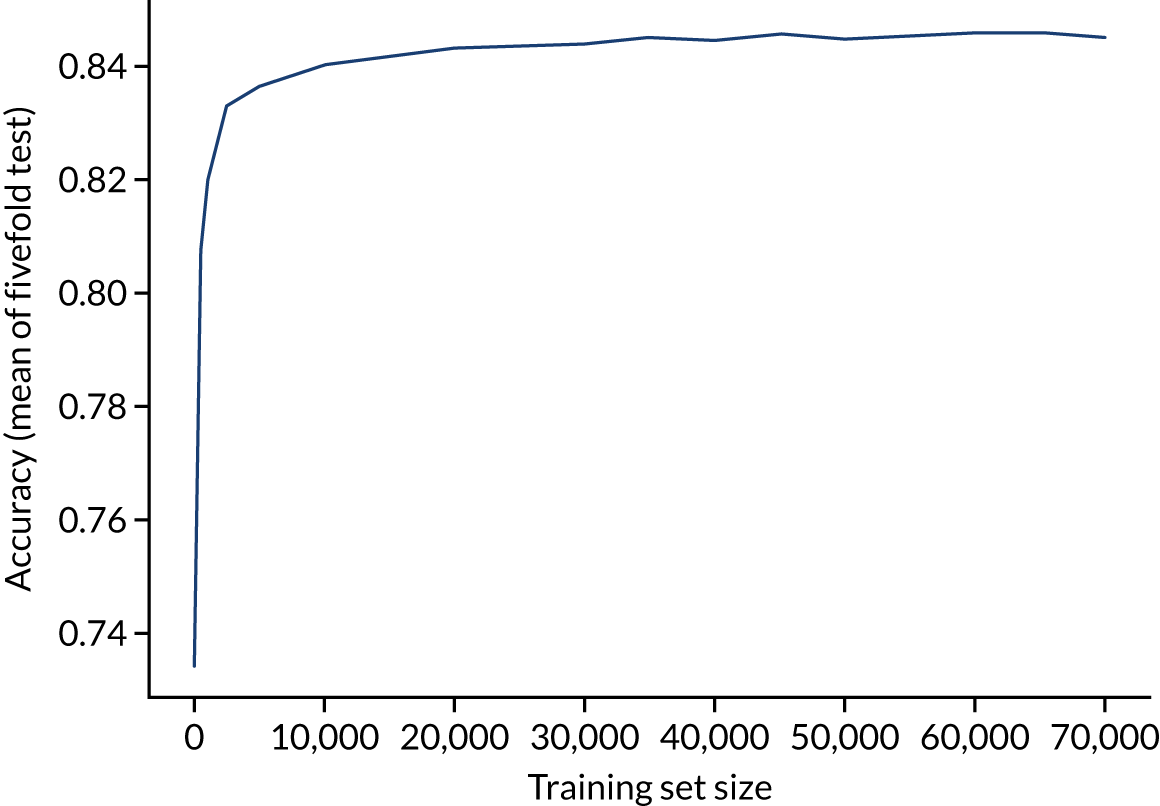

Learning curve

The accuracy of the random forest model reaches a plateau by about 40,000 training points (Figure 27), suggesting that the accuracy of this model is not limited by the number of training data available.

FIGURE 27.

Learning curve (relationship between training set size and model accuracy) for a random forest model with hospital as a feature (one-hot encoded). Results show mean values from fivefold validation.

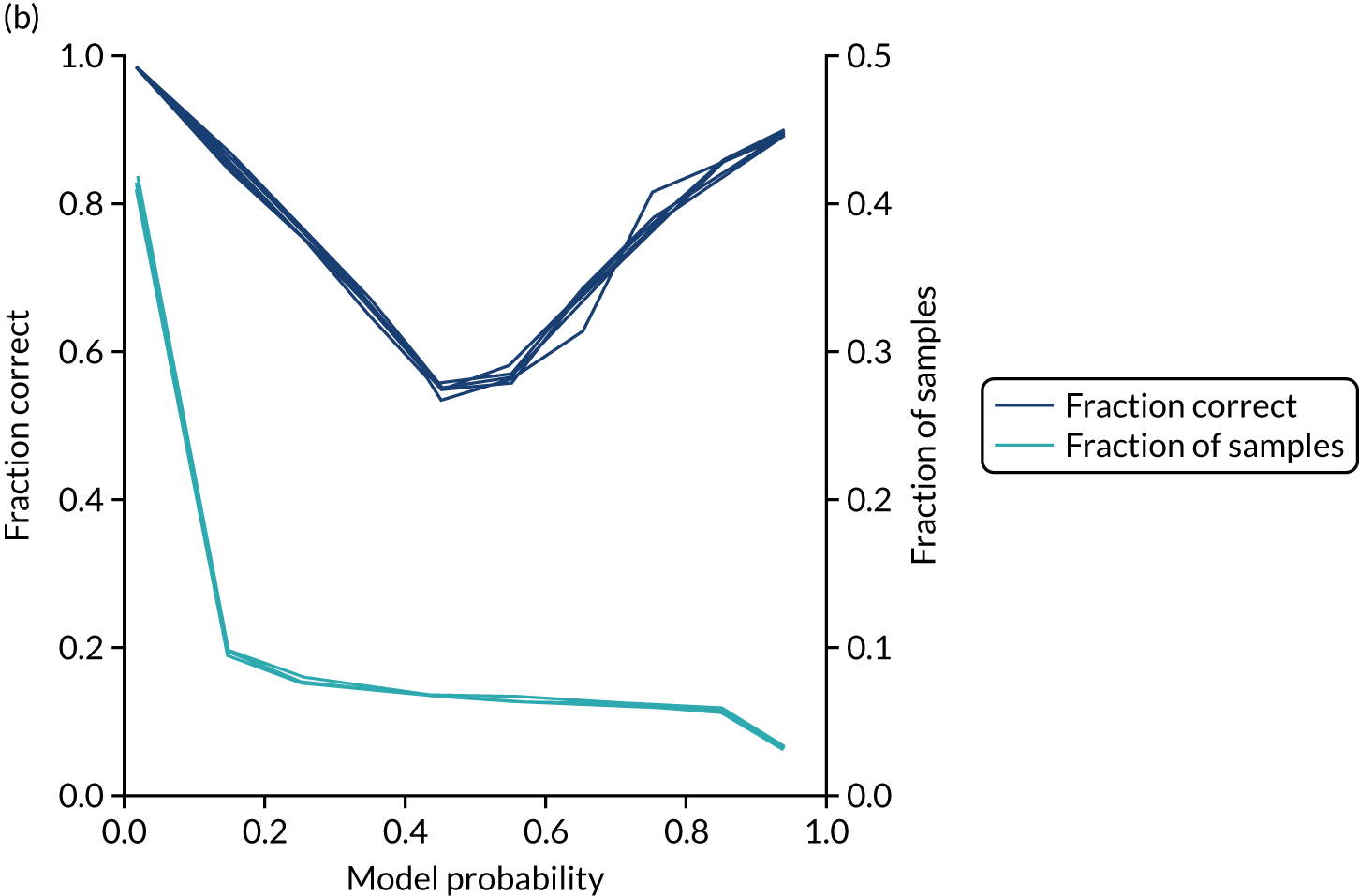

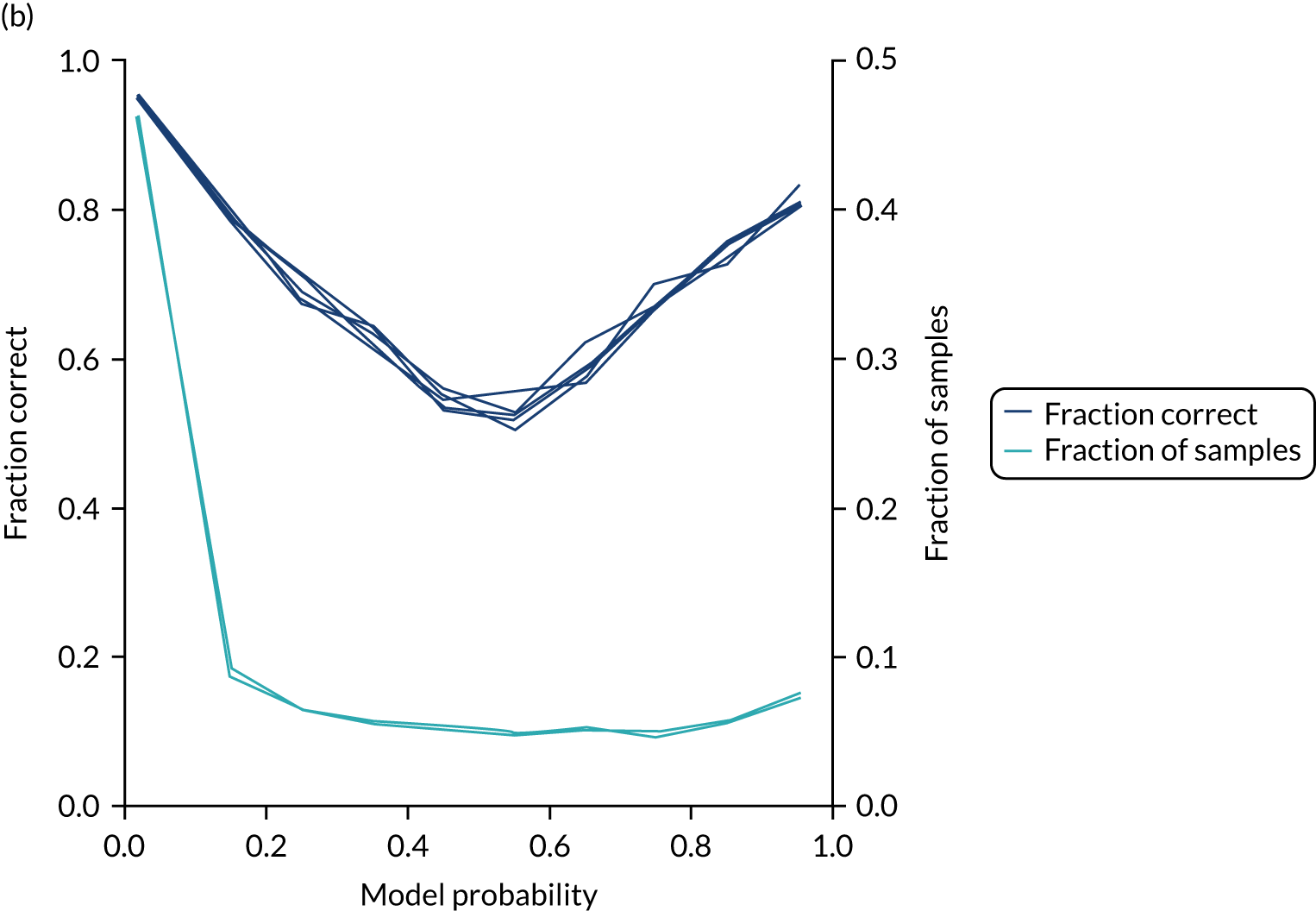

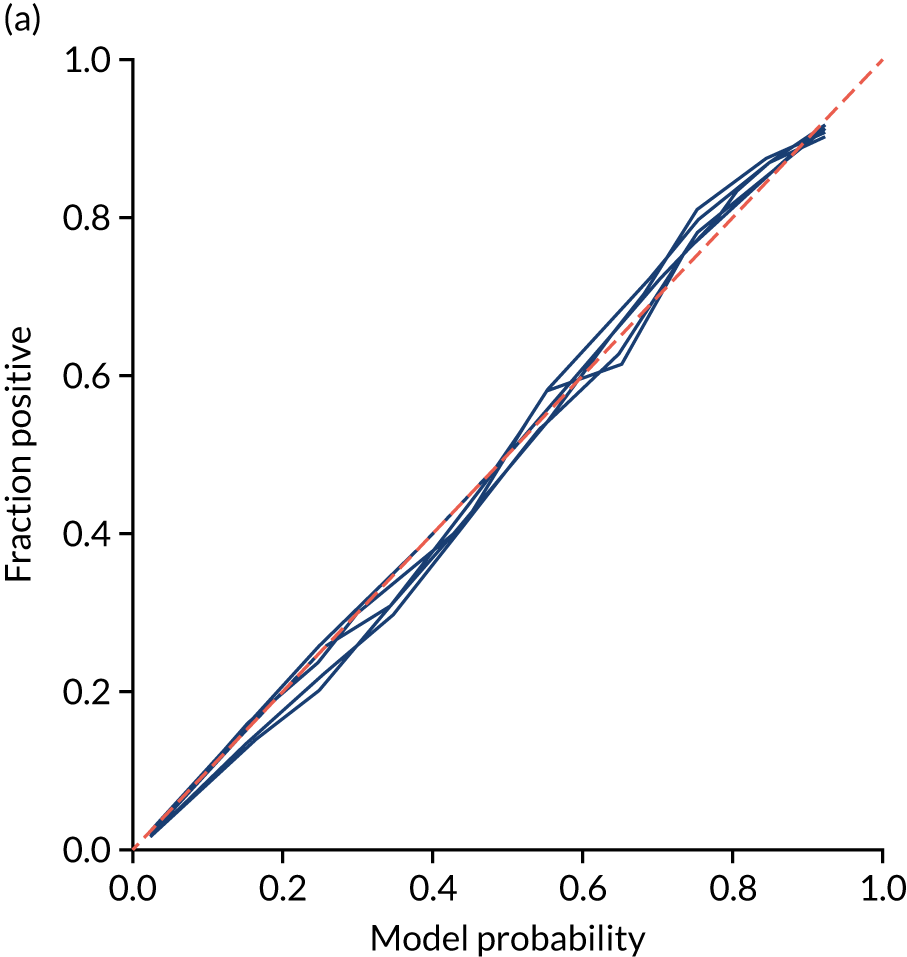

Calibration and assessment of accuracy when model has high confidence

The single-fit random forest model is well calibrated (Figure 28). Although the overall accuracy of the model is 84.6%, the accuracy of those 59% of samples with at least 80% confidence in prediction is 92.4% (calculated separately).

FIGURE 28.

(a) Model probability calibration; and (b) model accuracy vs. confidence for a random forest model with hospital as a feature (one-hot encoded). Results show separate fivefold validation results.

Random forest model fitted to individual hospital stroke teams

As with logistic regression, random forest models were also fitted for individual hospitals, rather than using hospital as a feature. Again, data were left raw (i.e. non-standardised). We calibrated each model so that a classification threshold was used that gave the same thrombolysis use rate as observed for that hospital.

Accuracy

The model accuracy was slightly lower than the single-fit model (overall accuracy 84.3% vs. 84.6% for a single-fit model), although the loss of accuracy was less than that observed with logistic regression. Full accuracy results are shown in Table 9.

| Accuracy measure | Mean (95% CI)a |

|---|---|

| Actual positive rate | 0.295 (0.001) |

| Predicted positive rate | 0.300 (0.001) |

| Accuracy | 0.843 (0.001) |

| Precision | 0.730 (0.001) |

| Recall/sensitivity | 0.743 (0.001) |

| F1 score | 0.737 (0.001) |

| Specificity | 0.885 (0.001) |

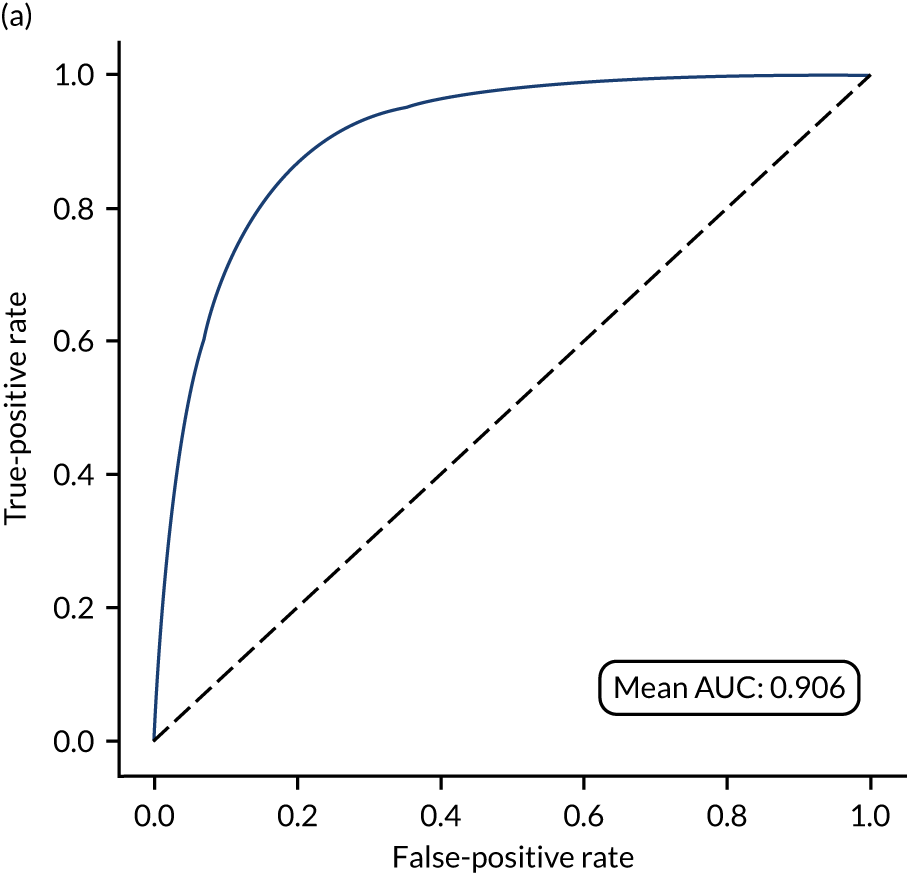

Receiver operating characteristic and sensitivity–specificity curves

Figure 29 shows ROC and sensitivity–specificity curves for random forest models fitted to individual hospitals. The mean ROC AUC was slightly lower than a single-fit random forest model (0.906 vs. 0.914). Sensitivity–specificity trade-off was also slightly poorer, with the model achieving approximately 83.2% sensitivity and specificity simultaneously (compared with 83.7% for the single-fit random forest model).

FIGURE 29.

(a) ROC curve; and (b) sensitivity–specificity curve for random forest models fitted to individual hospitals. Curves show separate fivefold validation results; however, multiple lines are not easily visible as they overlap each other.

Feature importance

As models are fitted to each hospital individually, we do not report overall feature importance.

Learning curve

As different hospitals had different numbers of data available for fitting, a learning curve was not constructed.

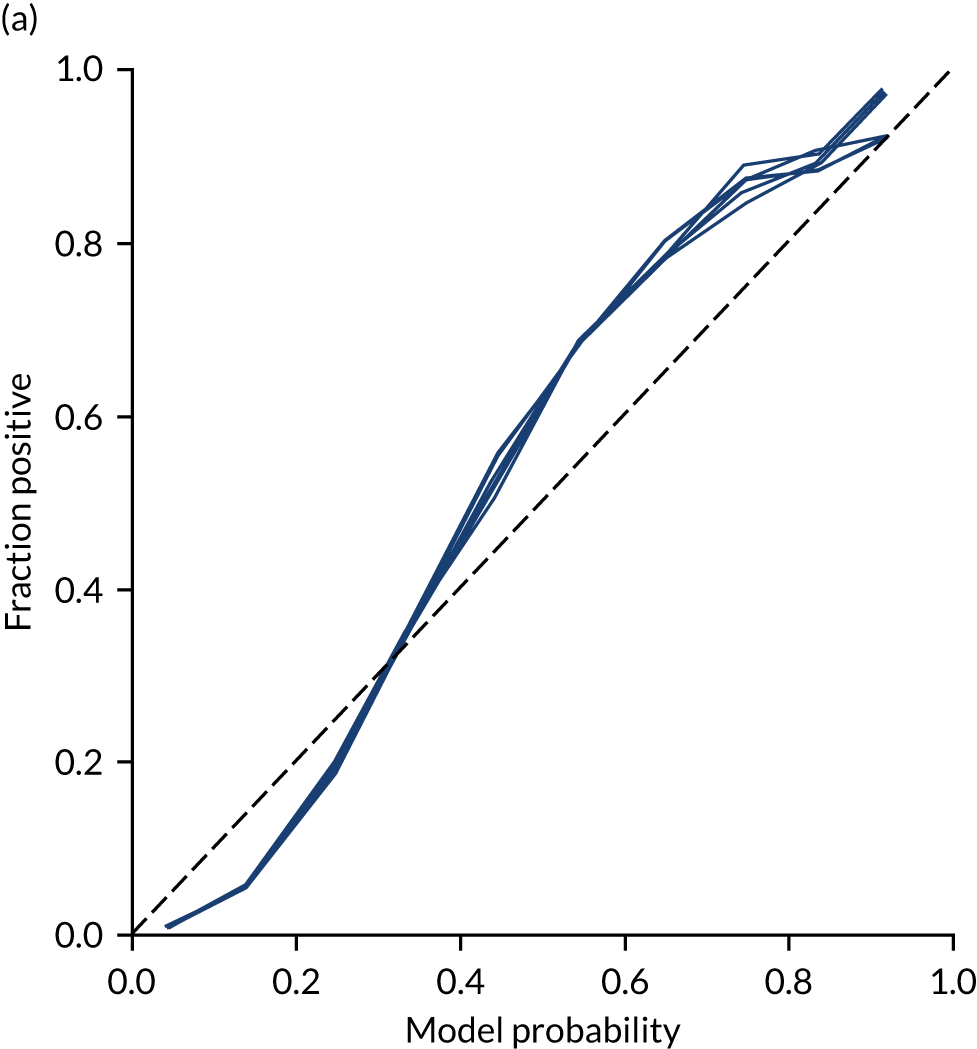

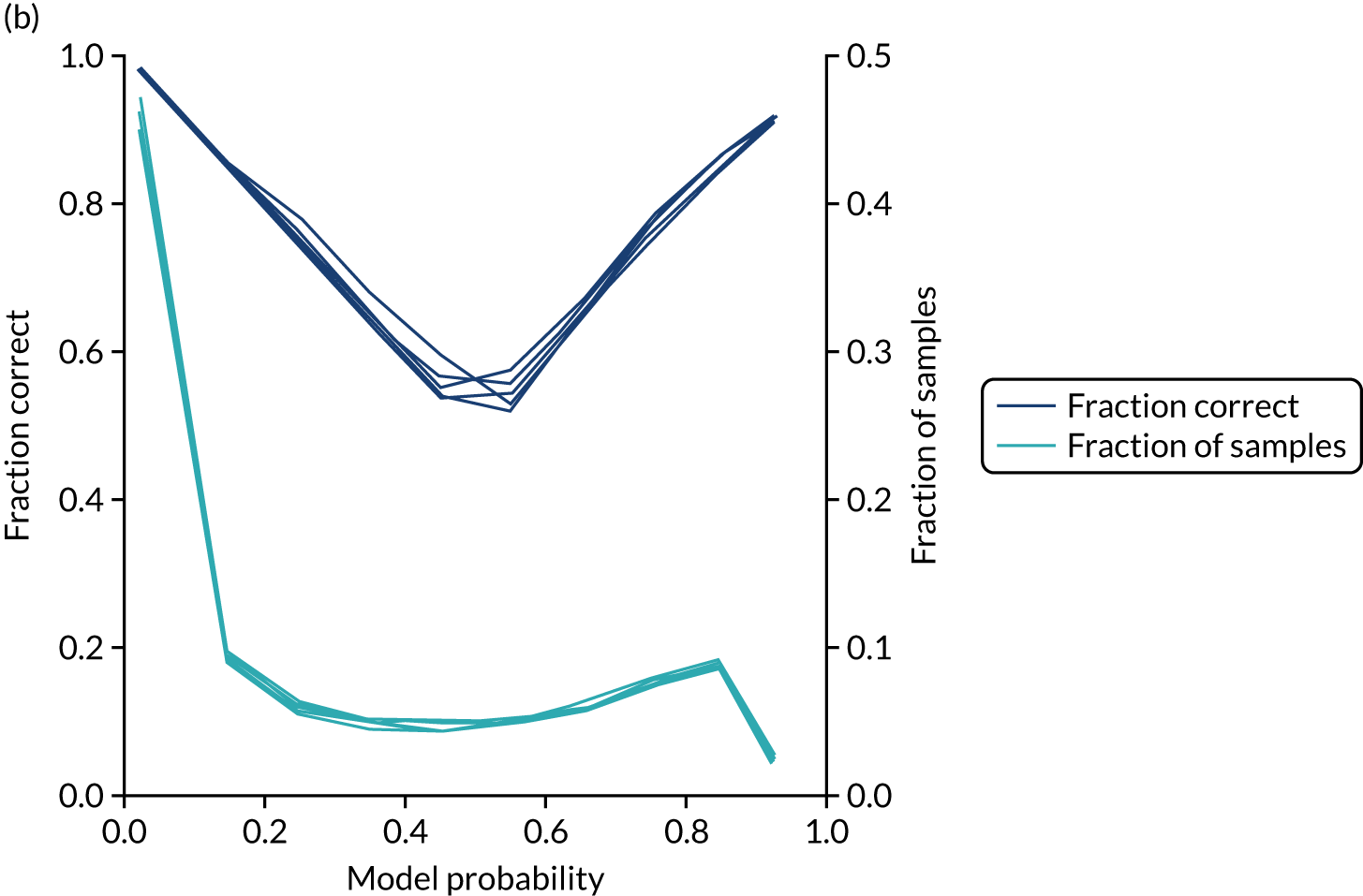

Calibration and assessment of accuracy when model has high confidence

Unlike logistic regression, individual models fitted to hospitals maintain reasonable calibration (Figure 30). Although the overall accuracy of the model is 84.3%, the accuracy of those 50% of samples with at least 80% confidence in prediction is 94.4% (calculated separately).

FIGURE 30.

(a) Model probability calibration; and (b) model accuracy vs. confidence for random forest models fitted to individual hospitals. Results show separate fivefold validation results.

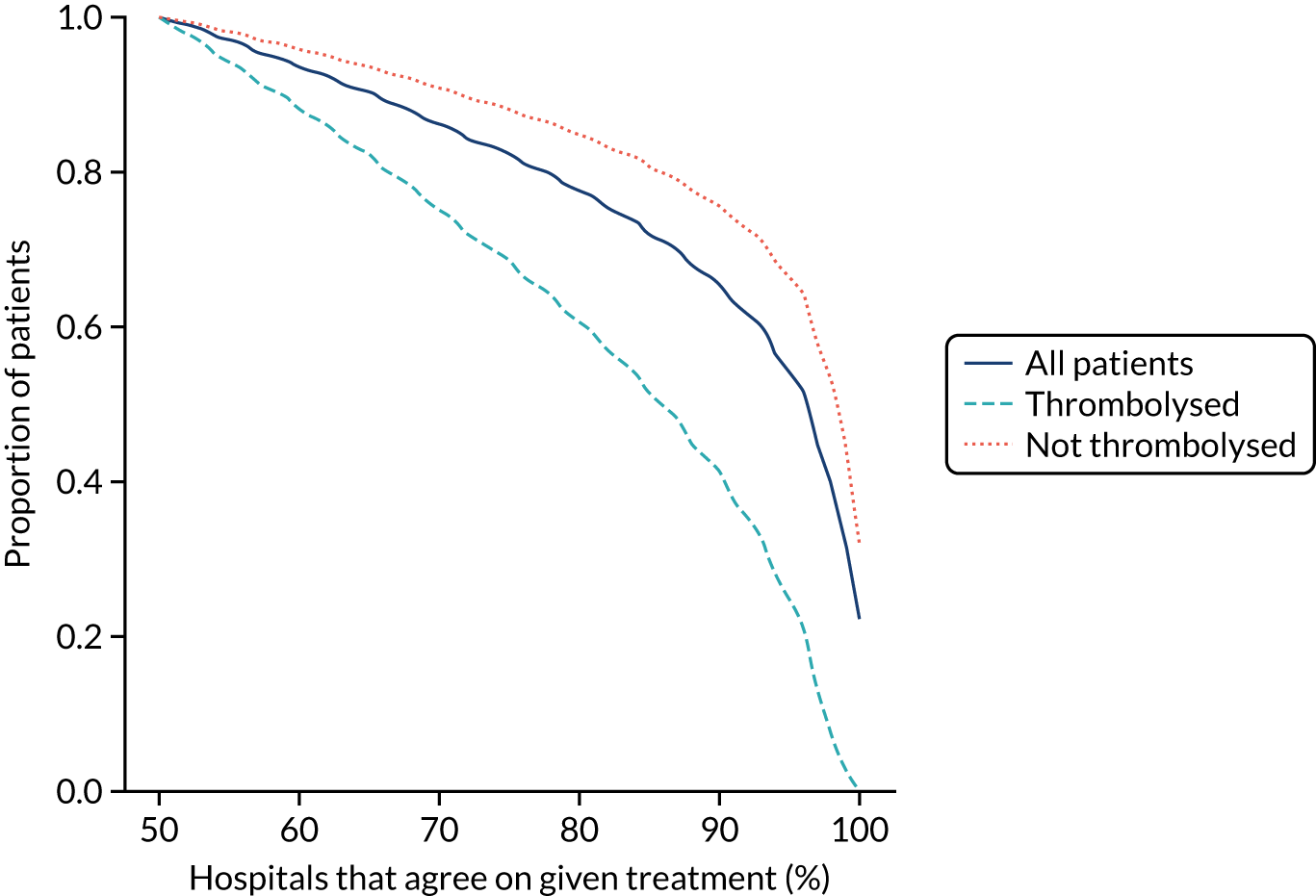

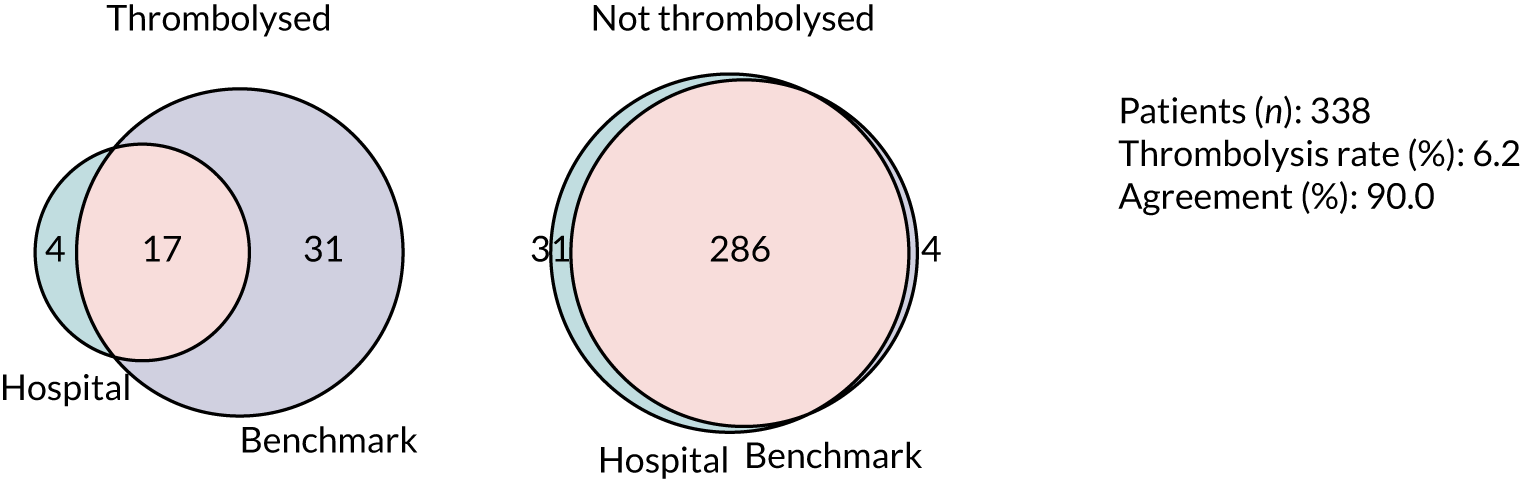

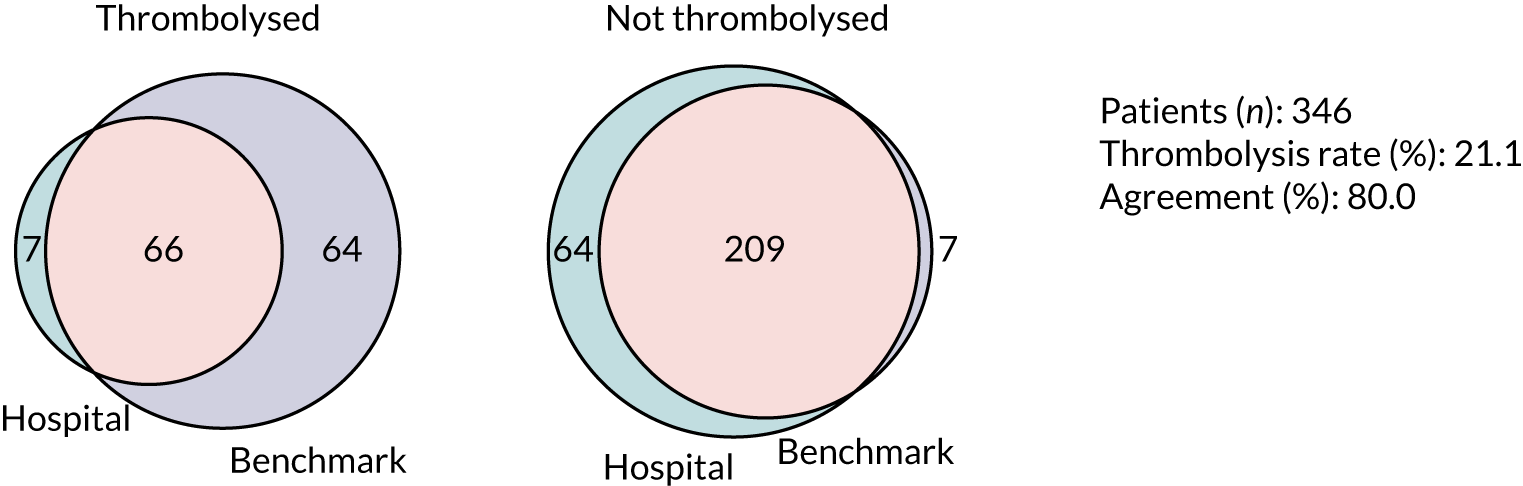

Agreement of decision-making between hospitals

Using the random forest models fitted for individual hospitals to determine the treatment for each patient at each hospital, Figure 31 shows the level of agreement between hospitals on decision-making. Hospitals agree more on patients who will not receive thrombolysis than those who do. For example, 76% of patients have decisions that are agreed by 80% of hospitals, but 85% of patients who did not receive thrombolysis have agreement from 80% of hospitals and 60% of patients who did receive thrombolysis have agreement from 80% of hospitals.

FIGURE 31.

Agreement on decision-making between hospitals (using the random forest models fitted on individual hospitals). The x-axis shows the proportion of hospitals that must agree on a decision and the y-axis shows the proportion of patients who have that level of agreement. Analysis is for all decisions (dark blue solid), for those patients who did receive thrombolysis (light blue dashed) and for those patients who did not receive thrombolysis (orange dotted).

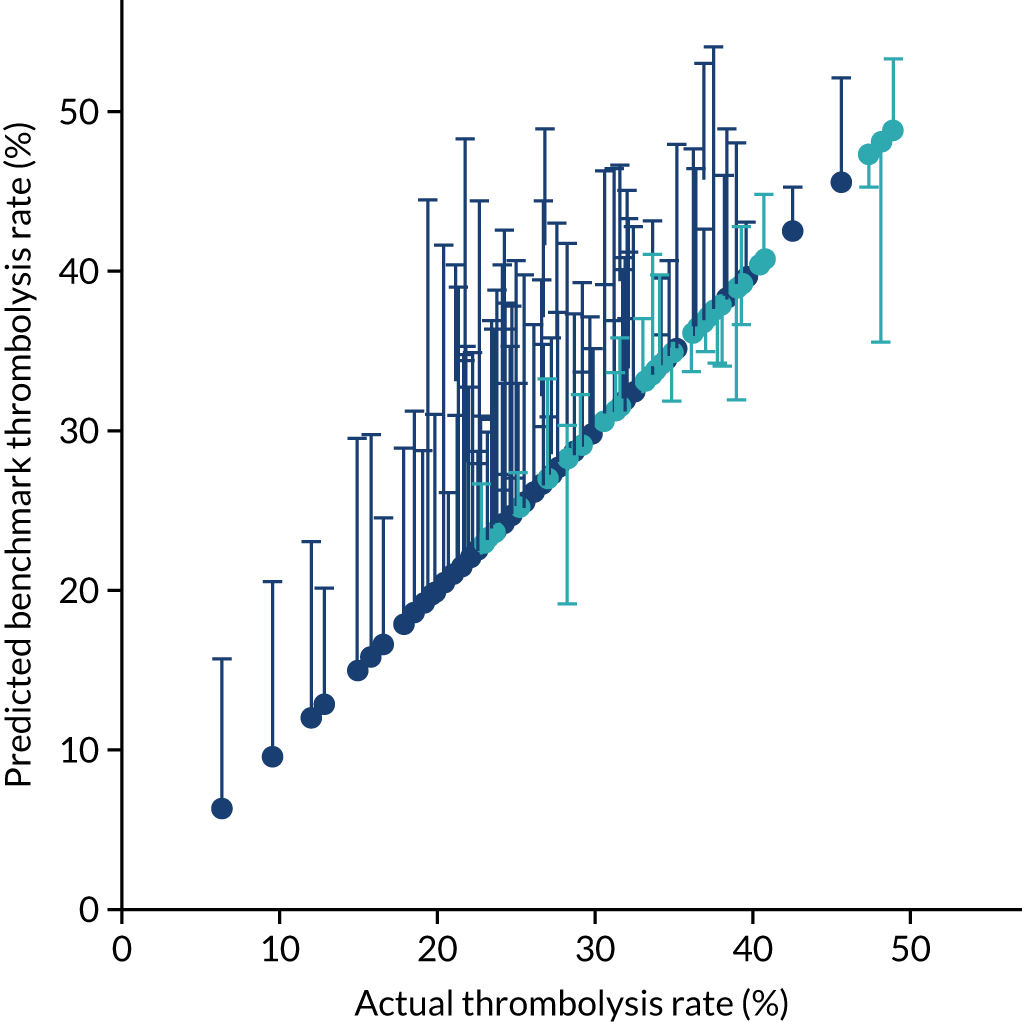

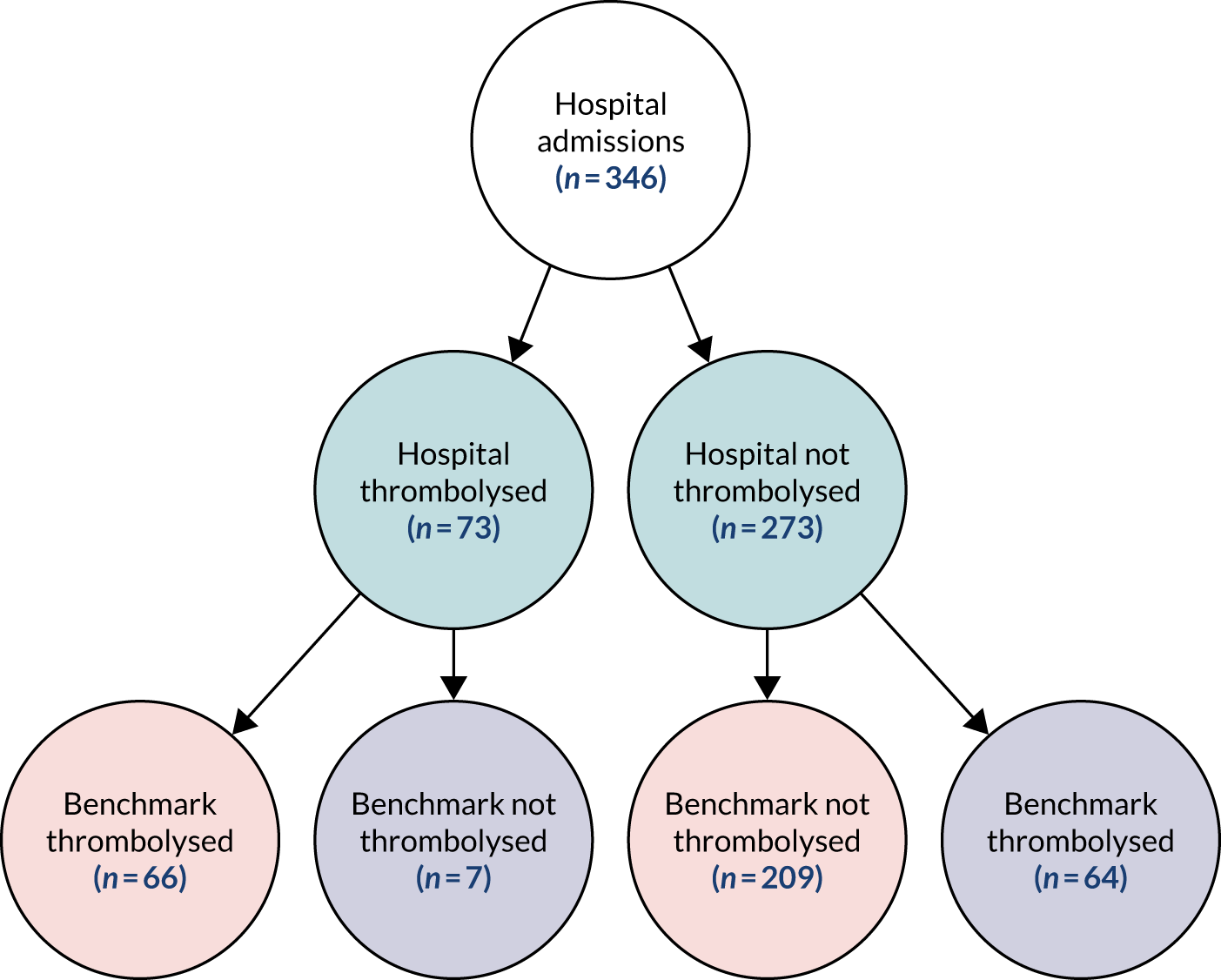

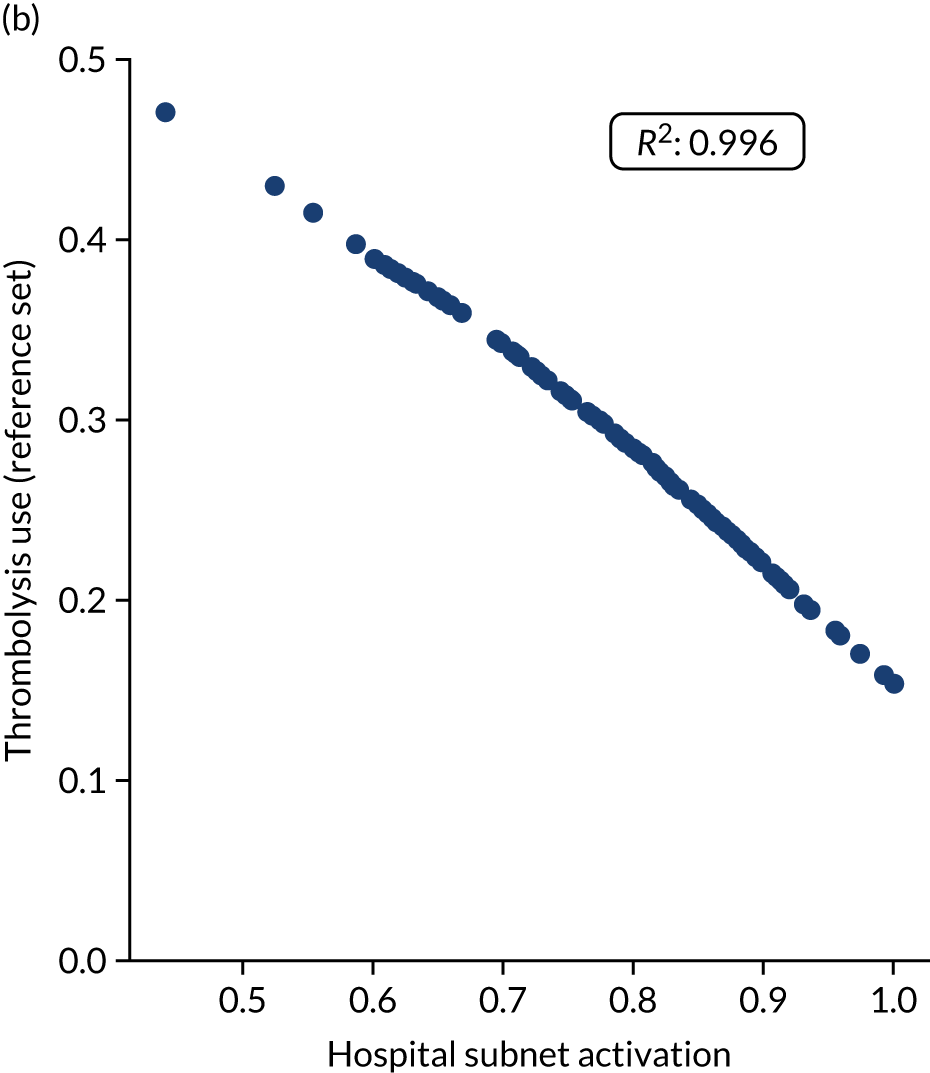

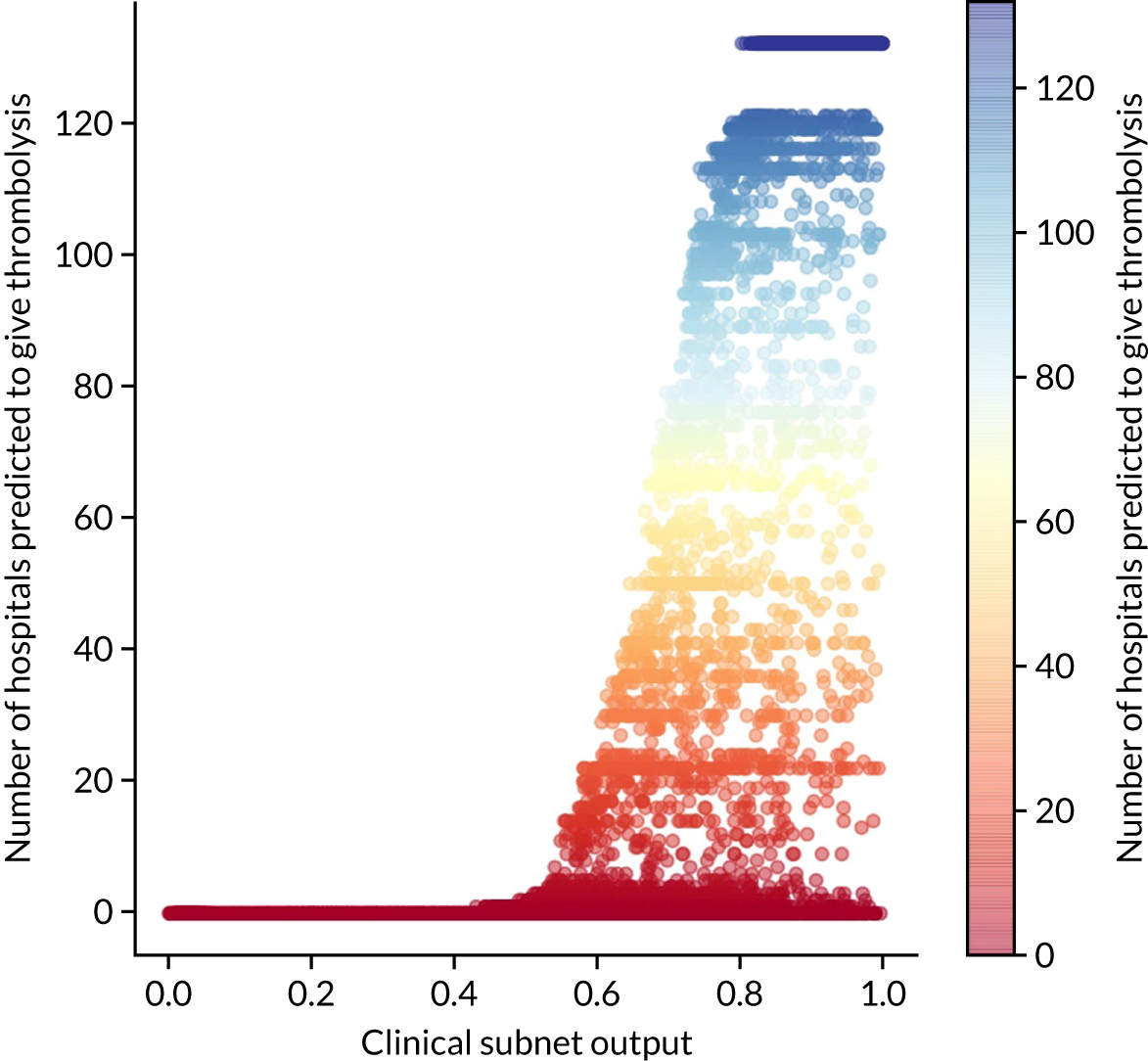

Benchmarking hospitals

Comparison of thrombolysis use between hospitals, using raw thrombolysis use, is confounded by different patient populations (see Table 4). If a hospital has a lower-than-average thrombolysis rate in patients who arrive and are scanned in time for thrombolysis, then is that because decision-making is different or because the patient population is different? Our aim is to compare decisions each hospital would make on a standard set of patients so that we can compare decision-making independently of local patient populations.

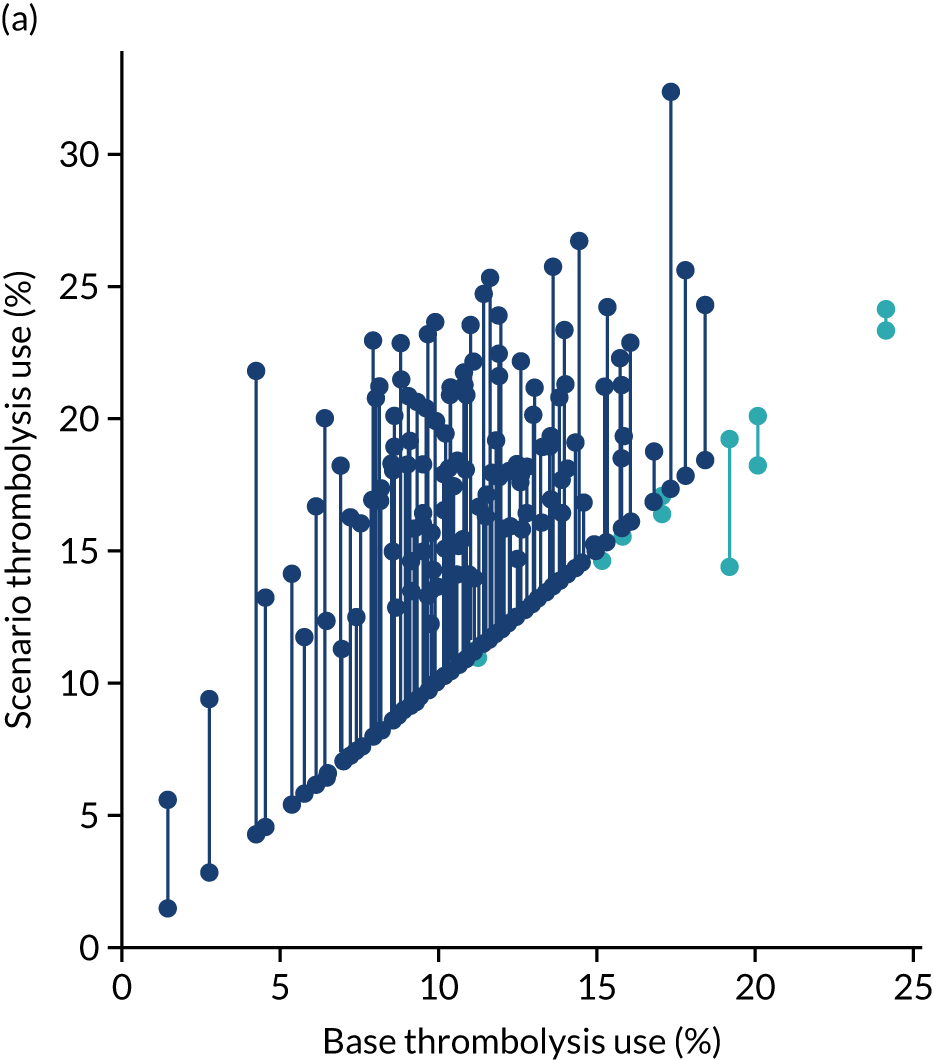

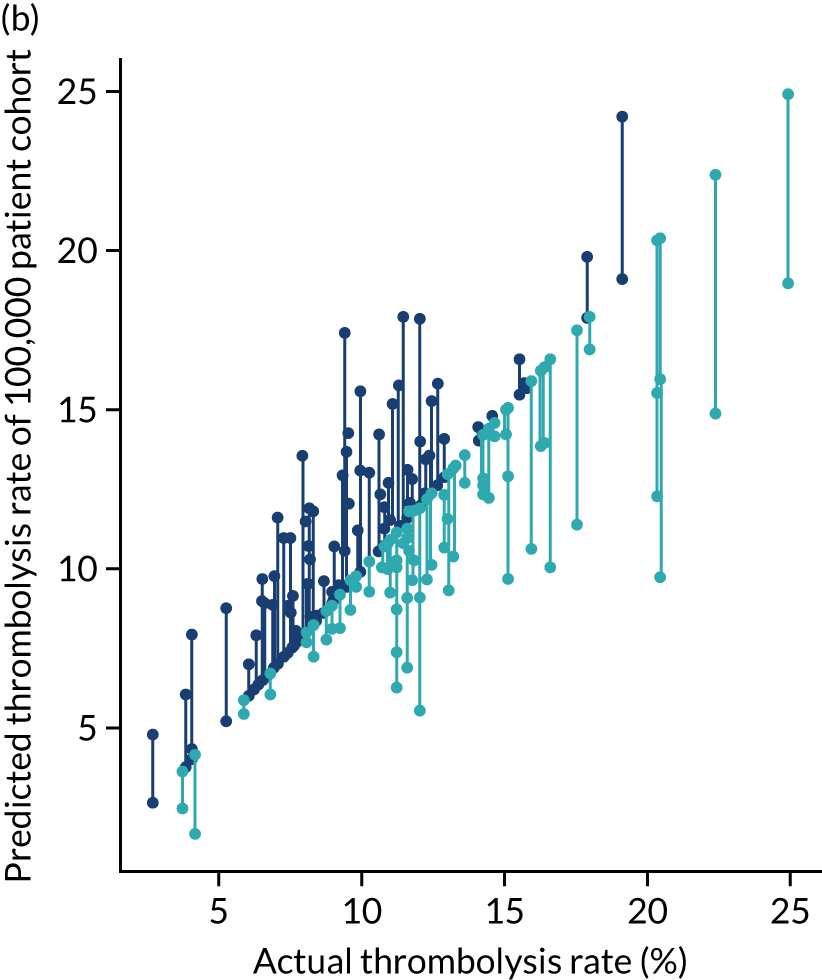

To compare decision-making between hospitals, we compare decisions predicted (using the random forest models fitted for individual hospitals) for a cohort of 10,000 patients (i.e. a random selection of patients who arrive within 4 hours of known stroke onset) and this gives us what we call a predicted cohort thrombolysis rate (i.e. the predicted thrombolysis use in a standard cohort of patients).

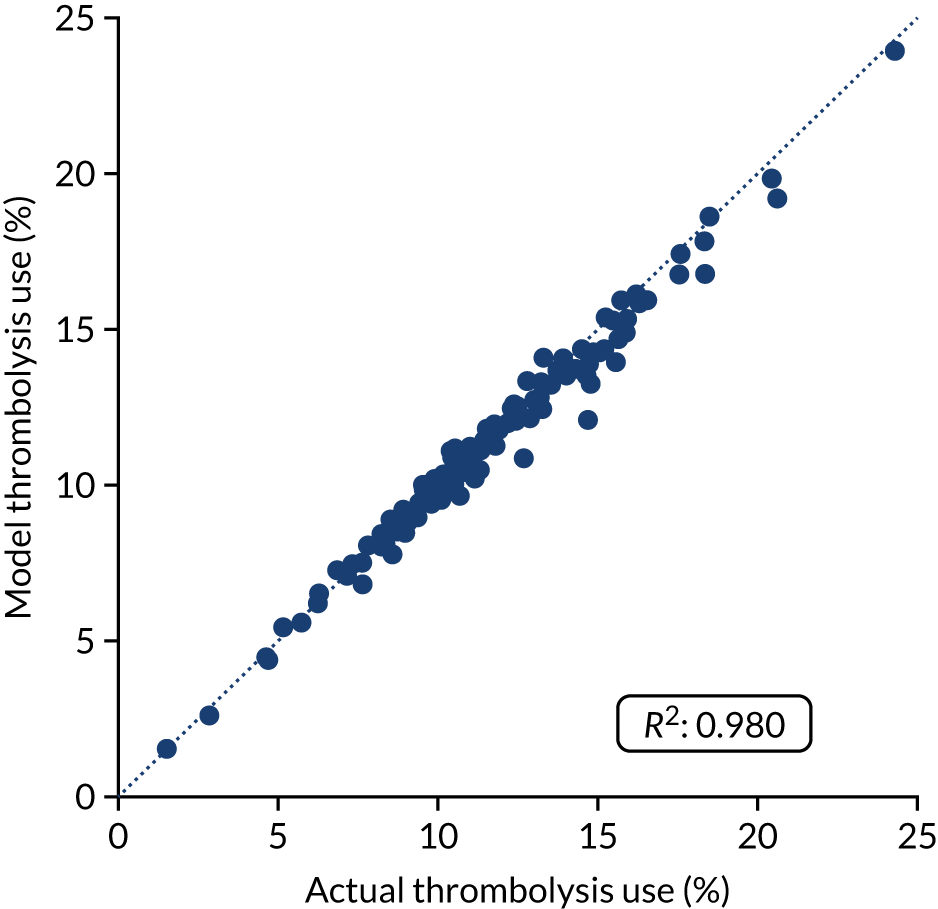

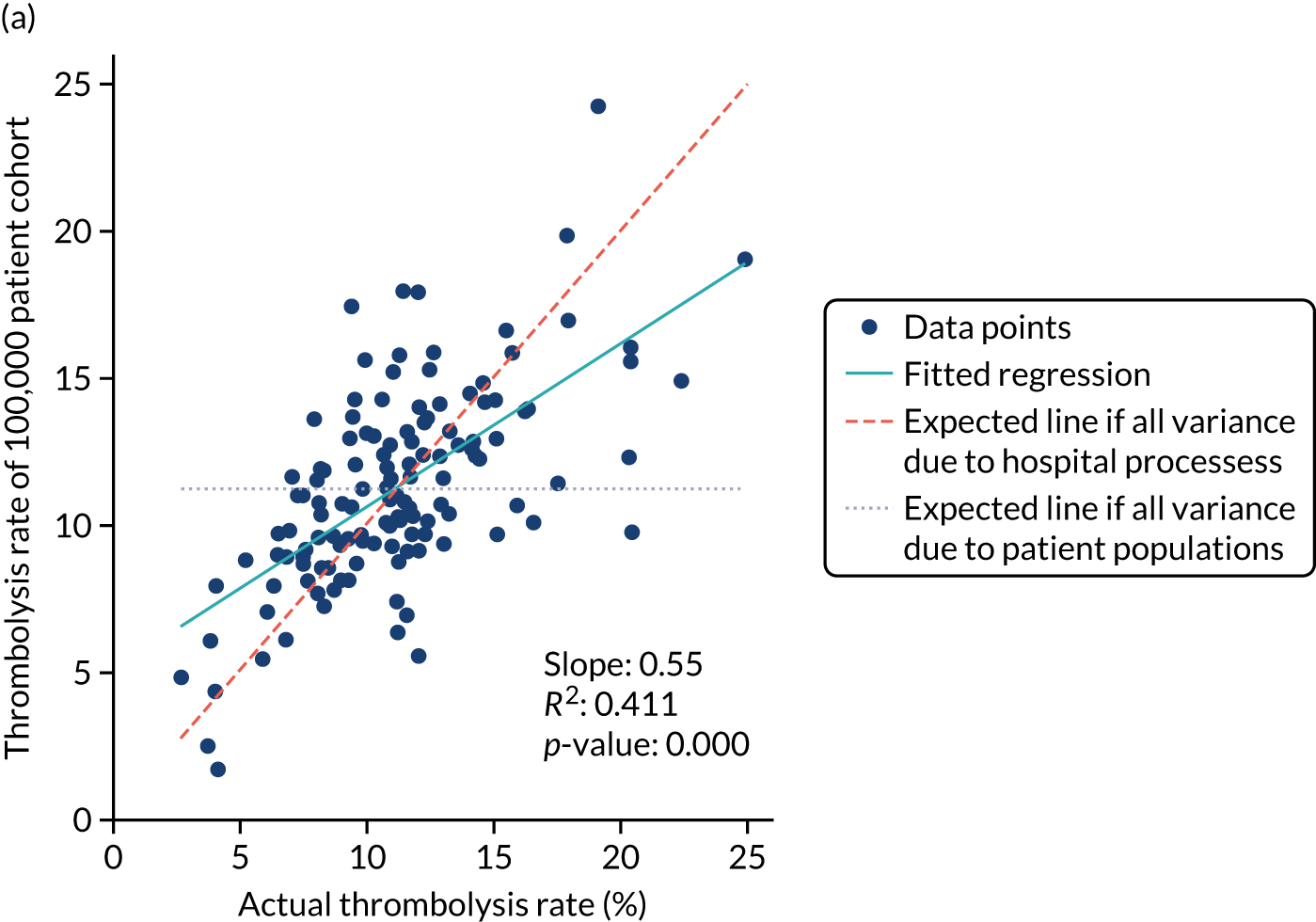

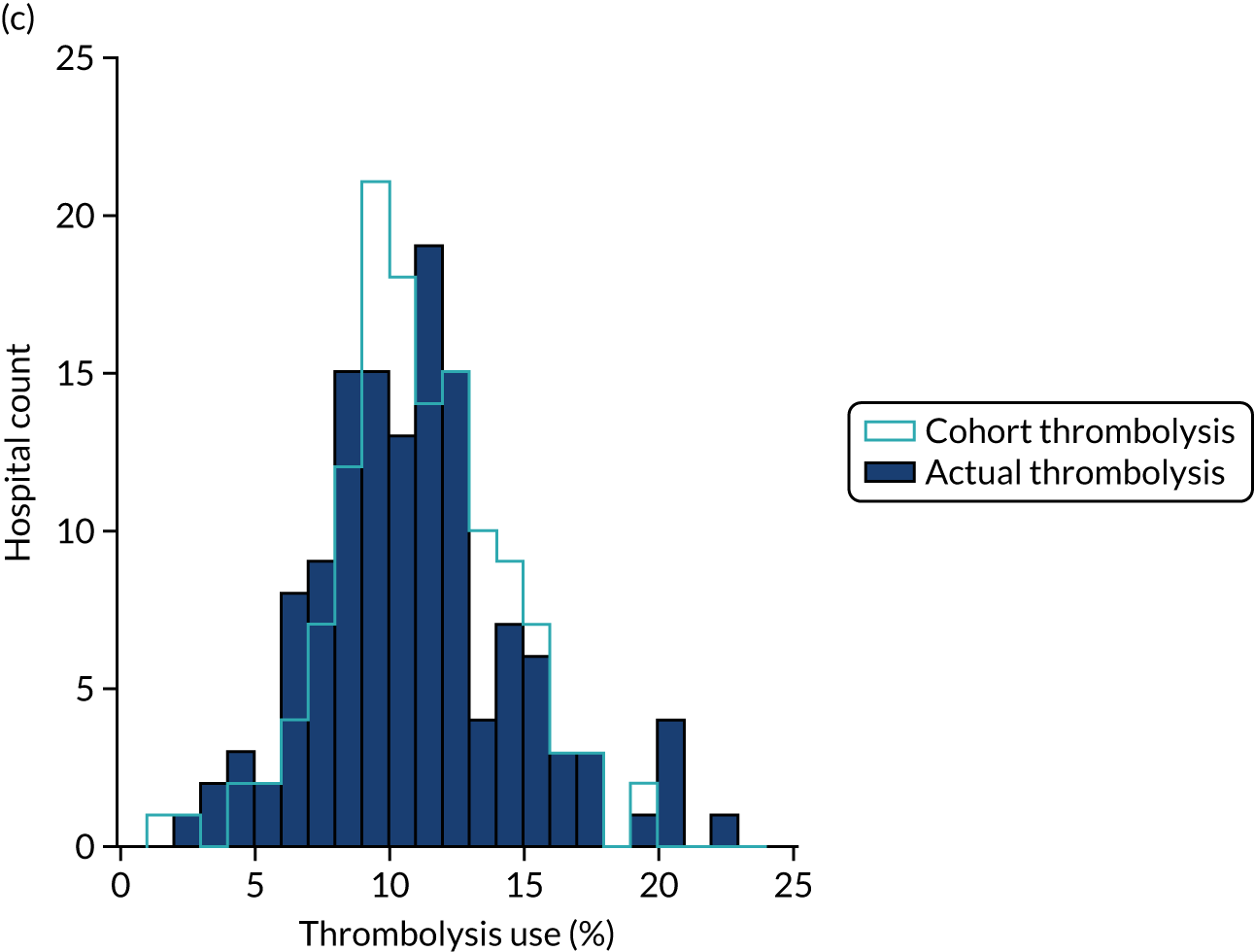

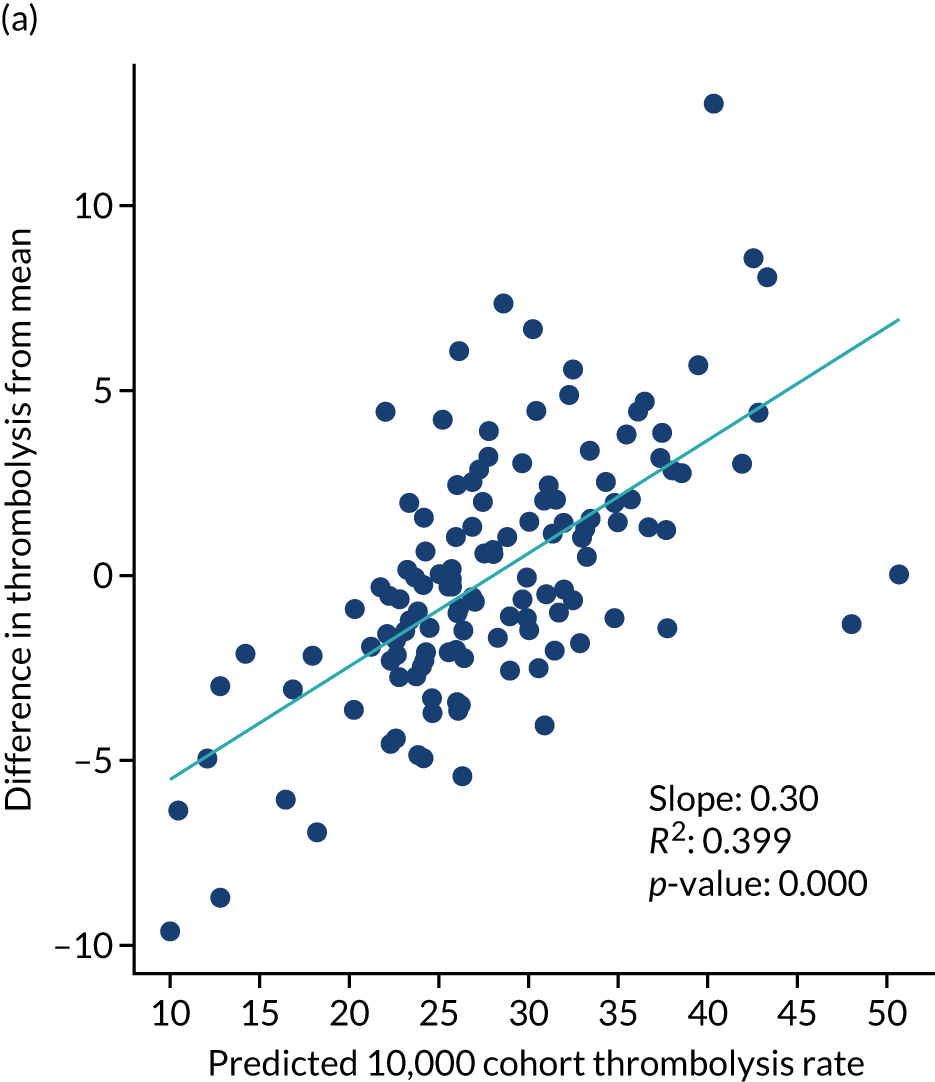

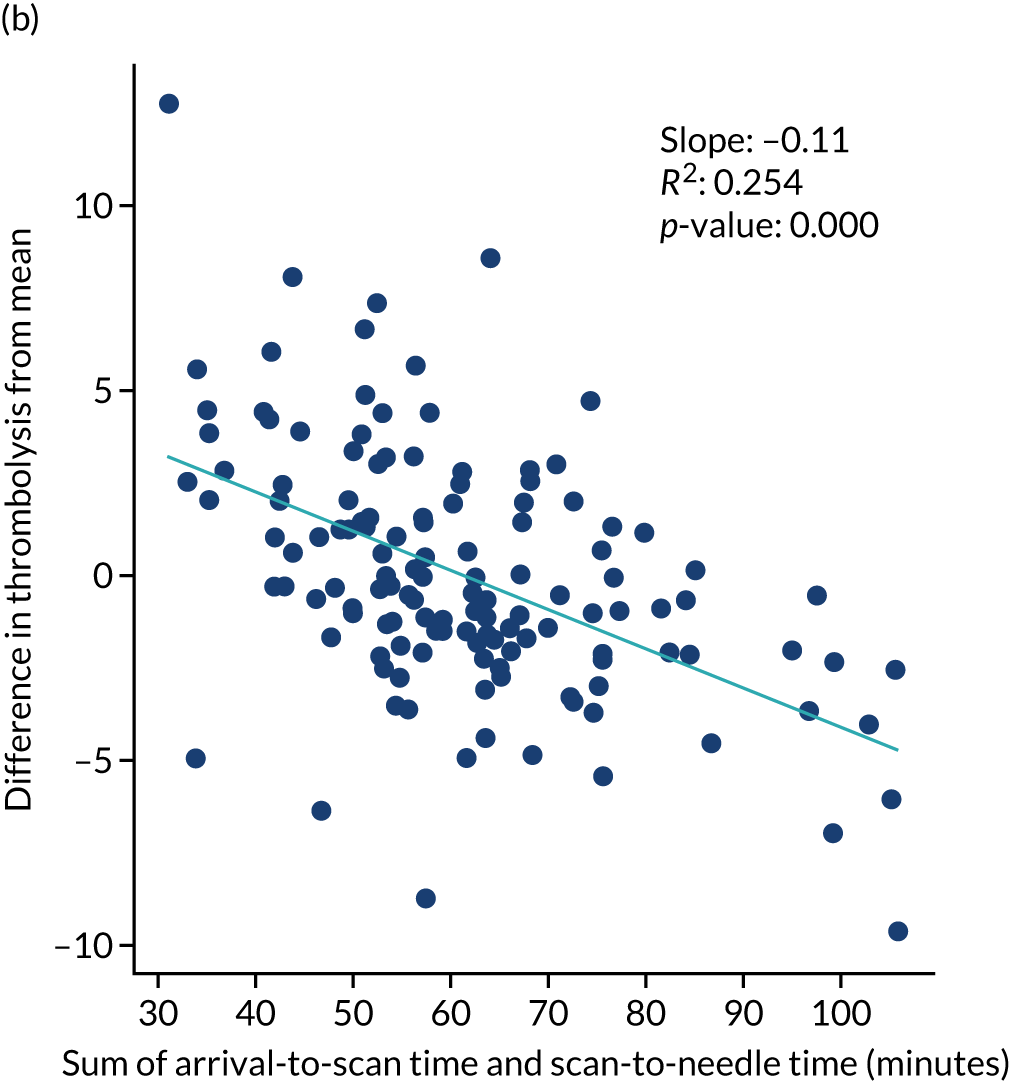

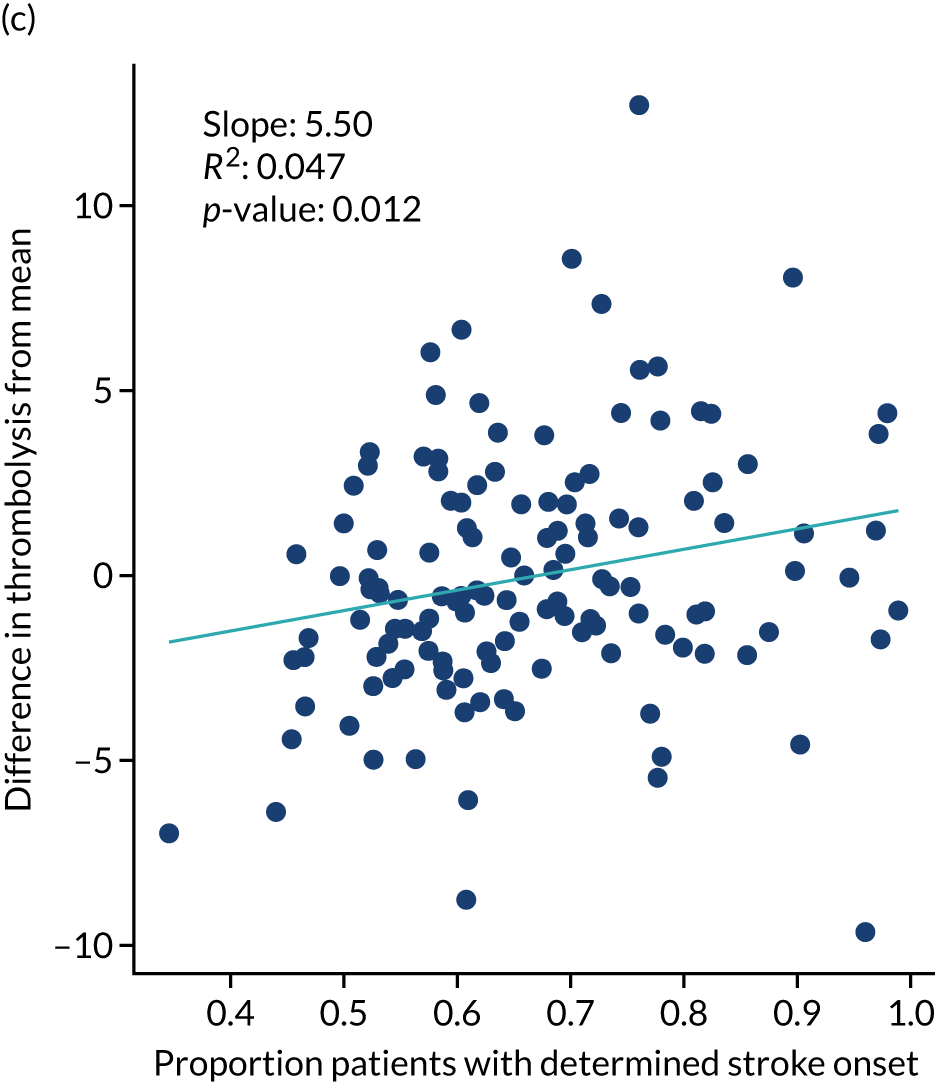

Figure 32 shows a comparison of true thrombolysis rate (i.e. the hospital’s actual thrombolysis rate on the patients who attended their hospital) and predicted cohort thrombolysis rate. There is a trend for predicted cohort thrombolysis to be higher in those hospitals that have a higher true thrombolysis rate, but there is also significant variation (R2 = 0.393), suggesting that patient populations play a significant role in determining a hospital’s level of use of thrombolysis, even after removing those patients who did not arrive within 4 hours of known stroke onset.

FIGURE 32.

A comparison of true (actual) thrombolysis rate and the predicted cohort thrombolysis rate when a decision model for each hospital makes predictions for thrombolysis use on a standard cohort of 10,000 patients (each arriving within 4 hours of known stroke onset). Each point represents a single hospital. The orange points are those hospitals that are in the top 30 of hospitals when cohort thrombolysis rate is predicted, with other hospitals coloured light purple. The dashed mid-blue line shows the threshold for selection of the top 30 hospitals. The dotted dark blue line shows a 1 : 1 relationship between true thrombolysis rate and predicted cohort thrombolysis rate.

After analysing the predicted thrombolysis use in a standard cohort of patients, we take the 30 hospitals with the highest predicted cohort thrombolysis rate as a set of benchmark hospitals. We can use the hospital models of these 30 benchmark hospitals and predict what thrombolysis use would be at each hospital if the decision made was the same as the majority vote of the benchmark hospitals. For example, we take all the patients from hospital X and pass them through the decision models for the 30 benchmark hospitals. Each benchmark hospital model predicts a yes/no output on whether or not each patient would be predicted to receive thrombolysis at that hospital. If 15 or more of the 30 benchmark hospitals predict thrombolysis would be given for any patient, then we assign ‘receive thrombolysis’ to that patient.

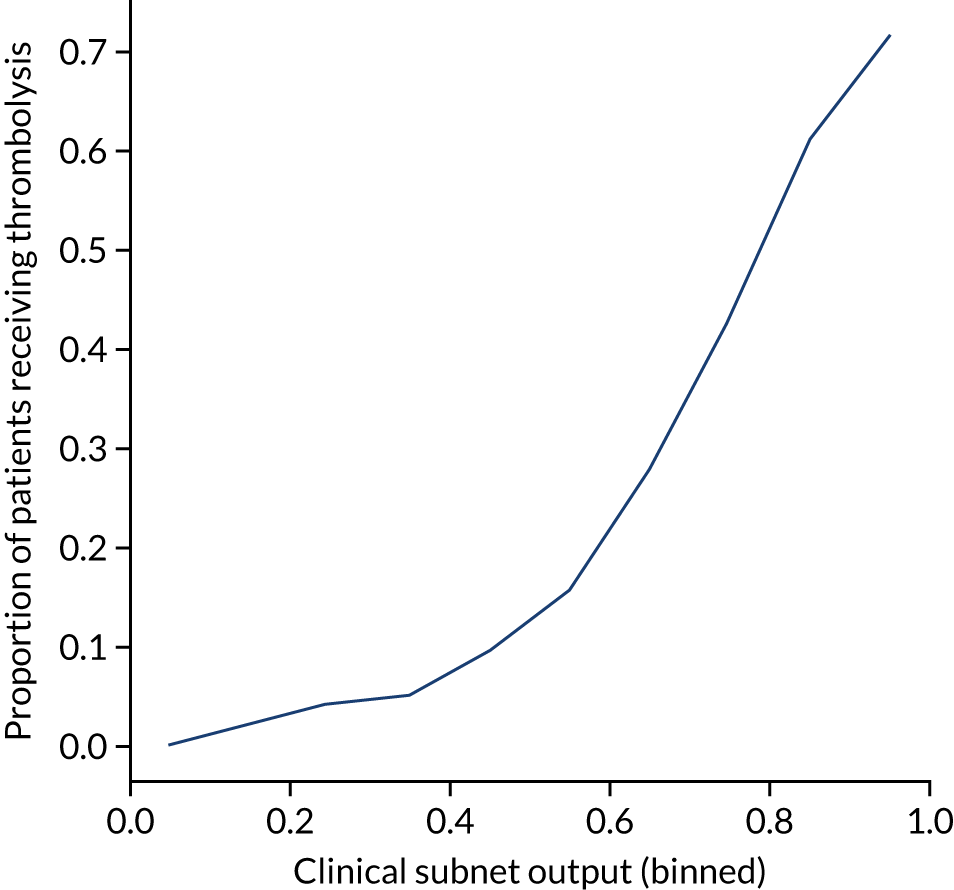

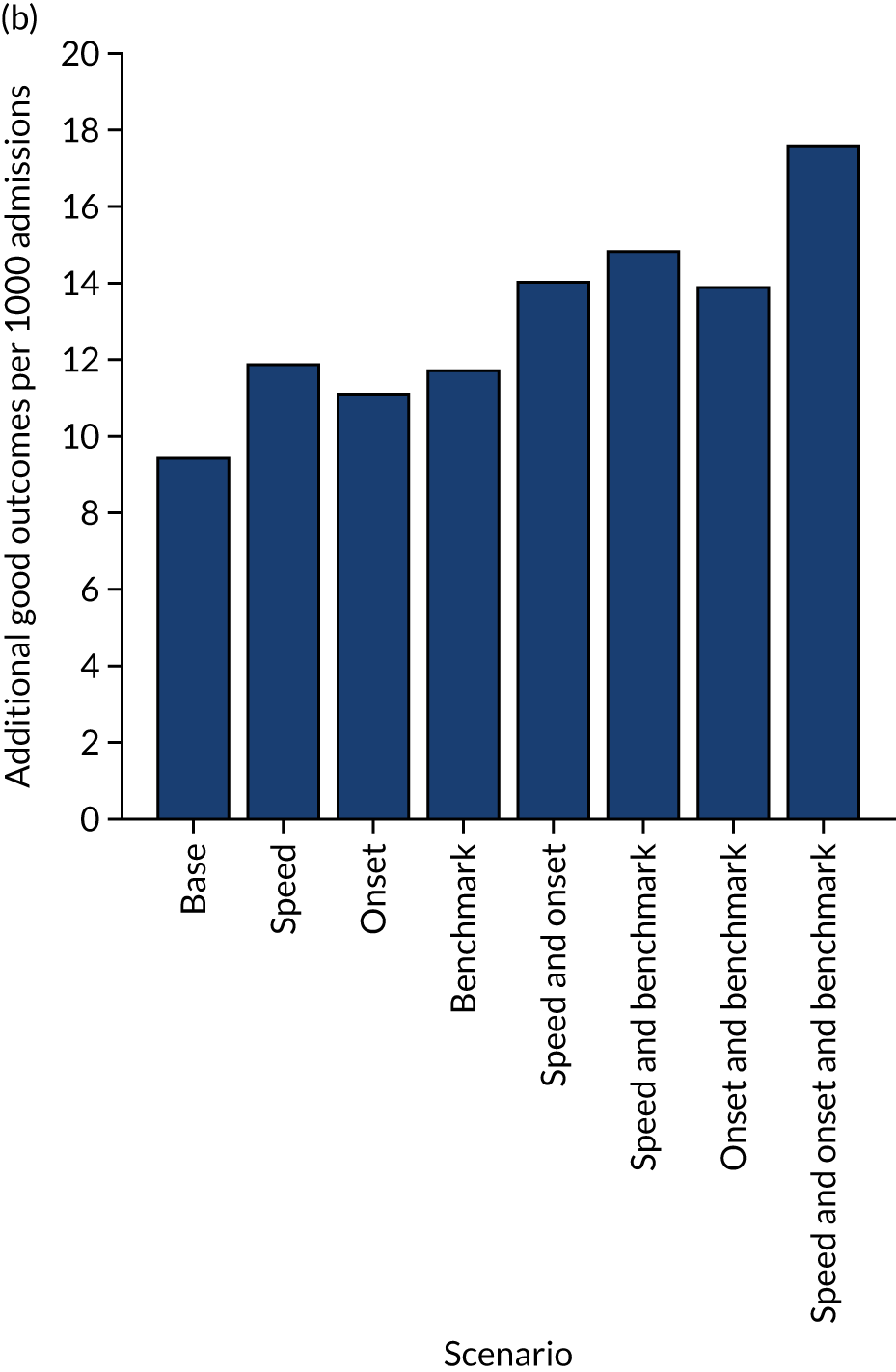

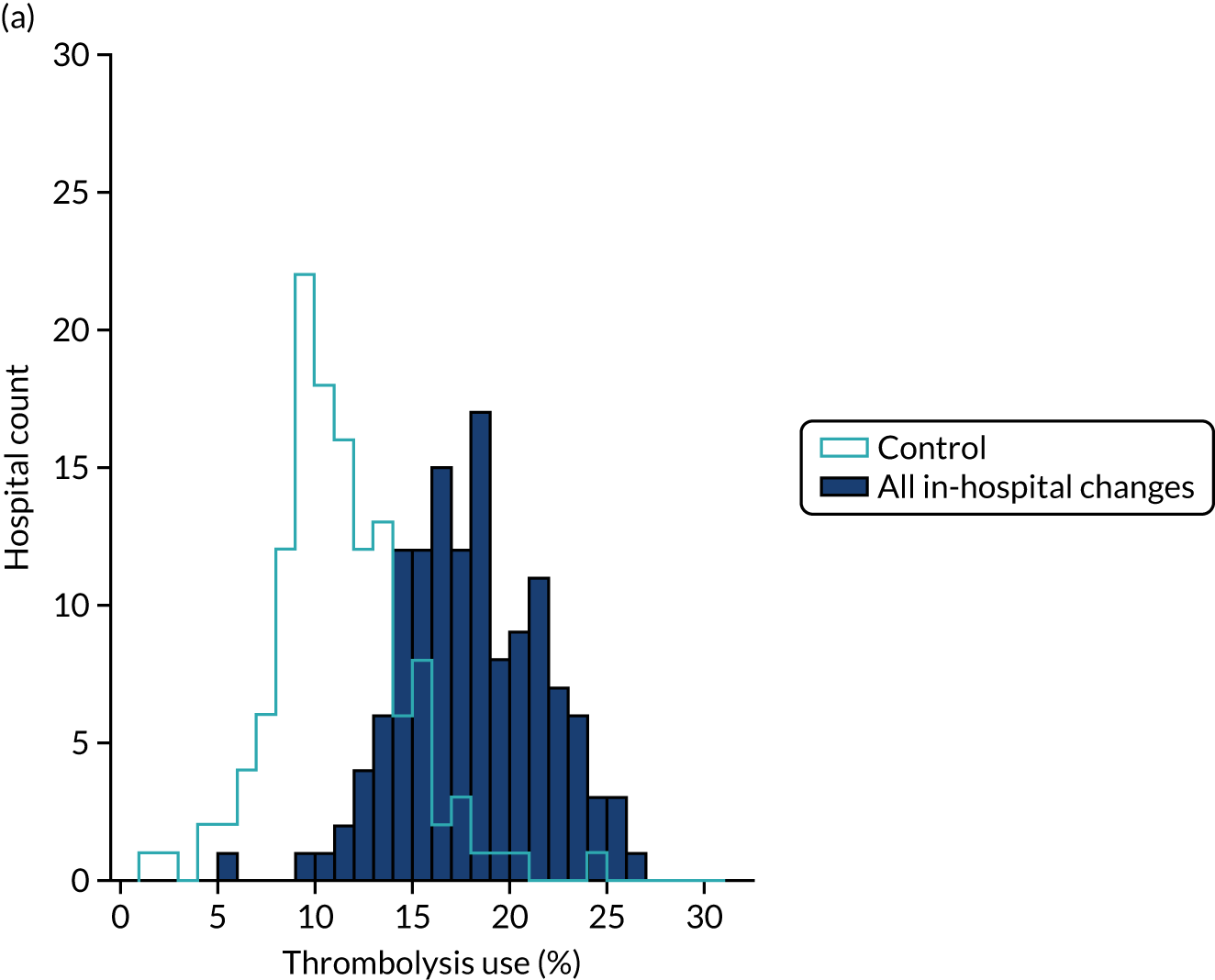

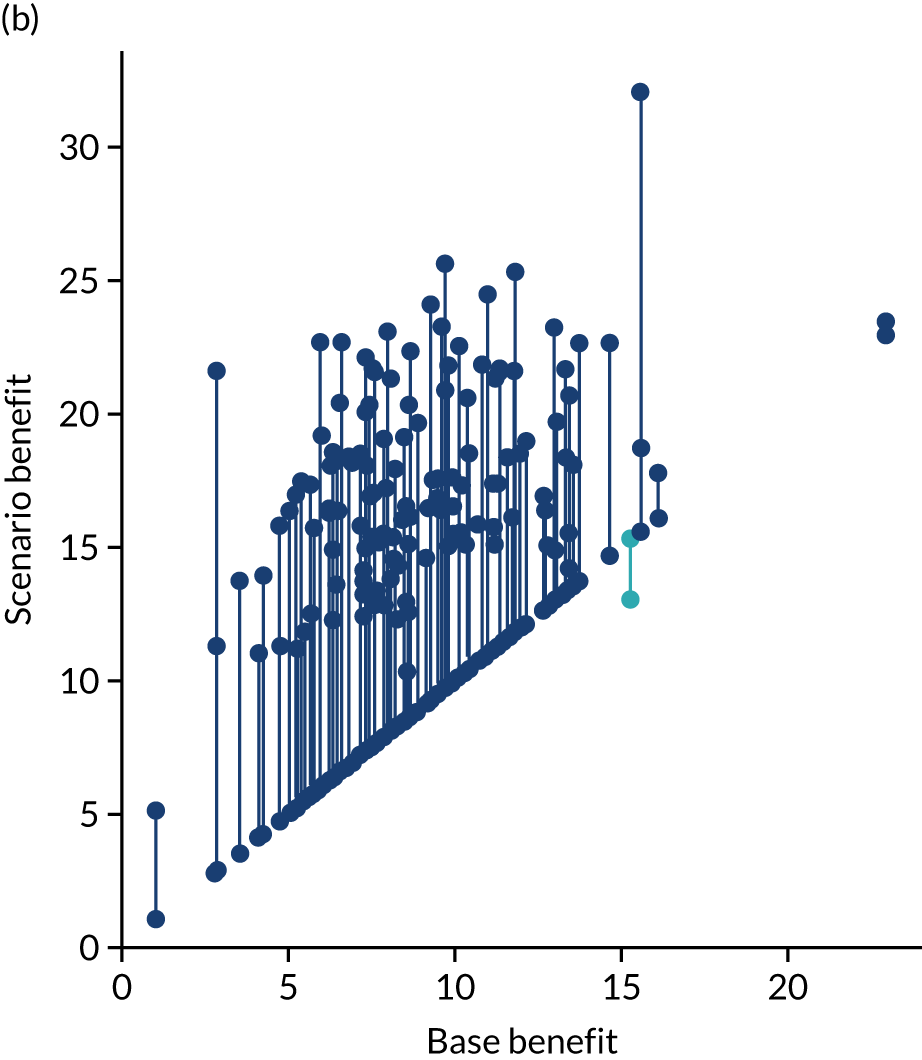

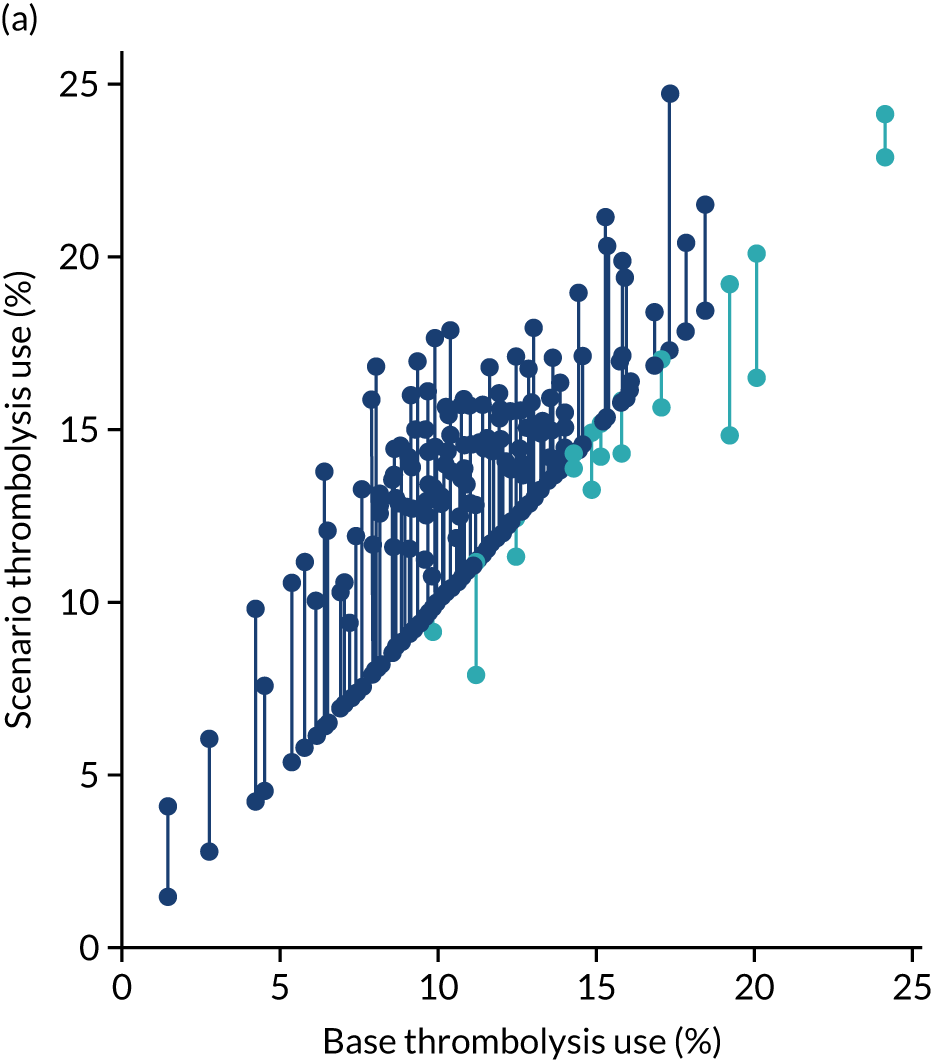

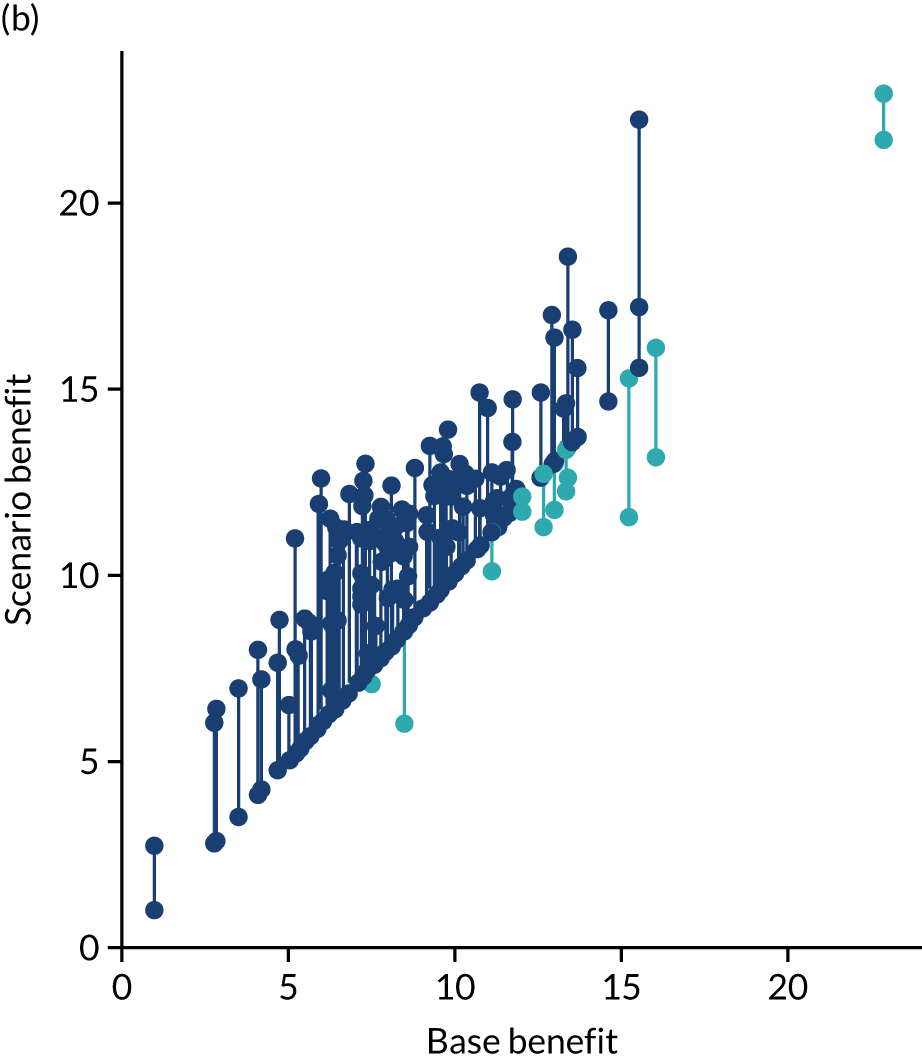

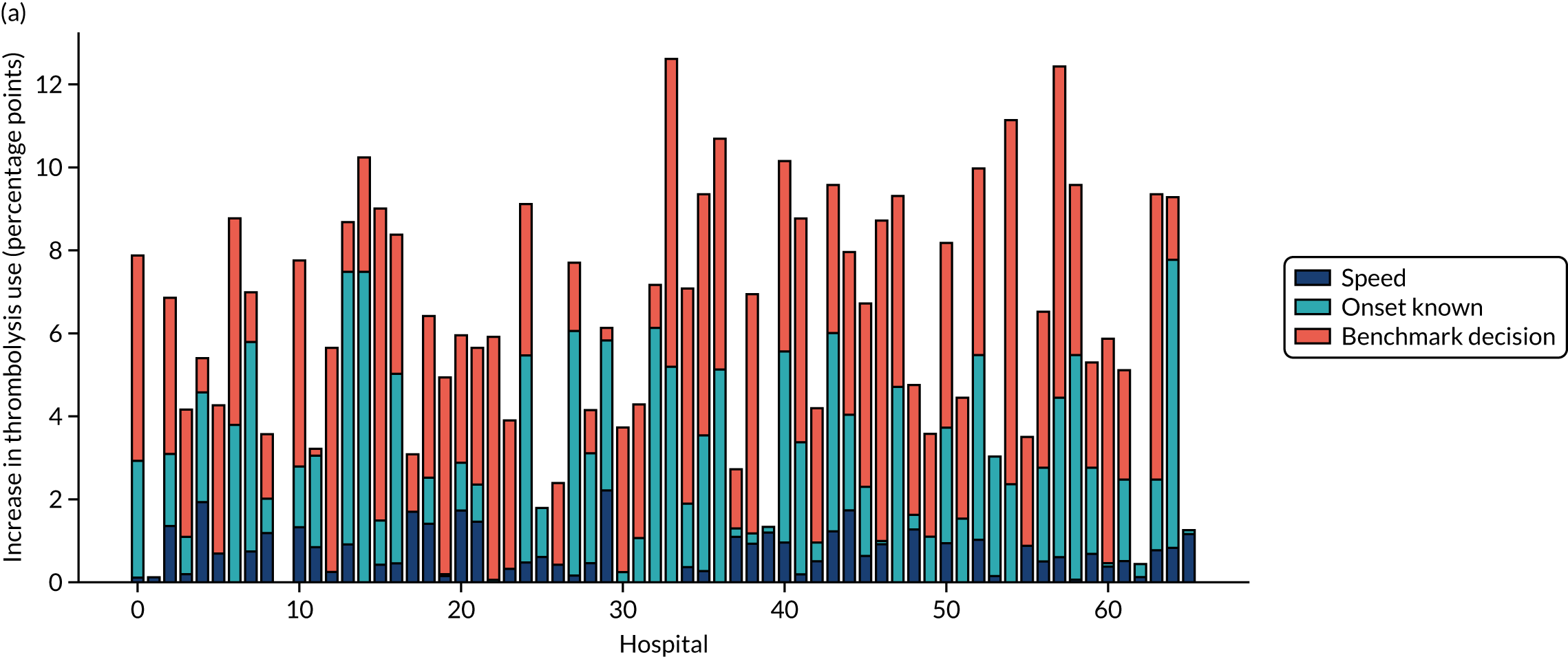

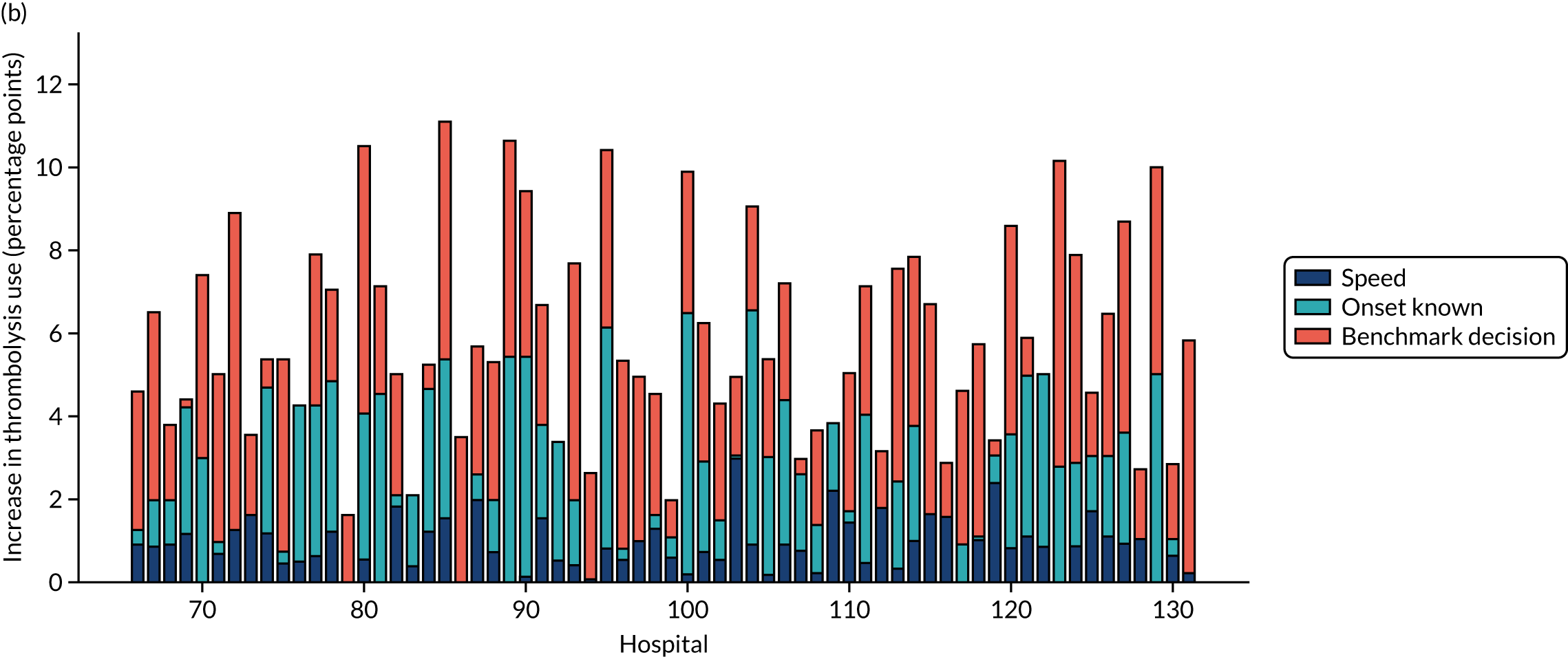

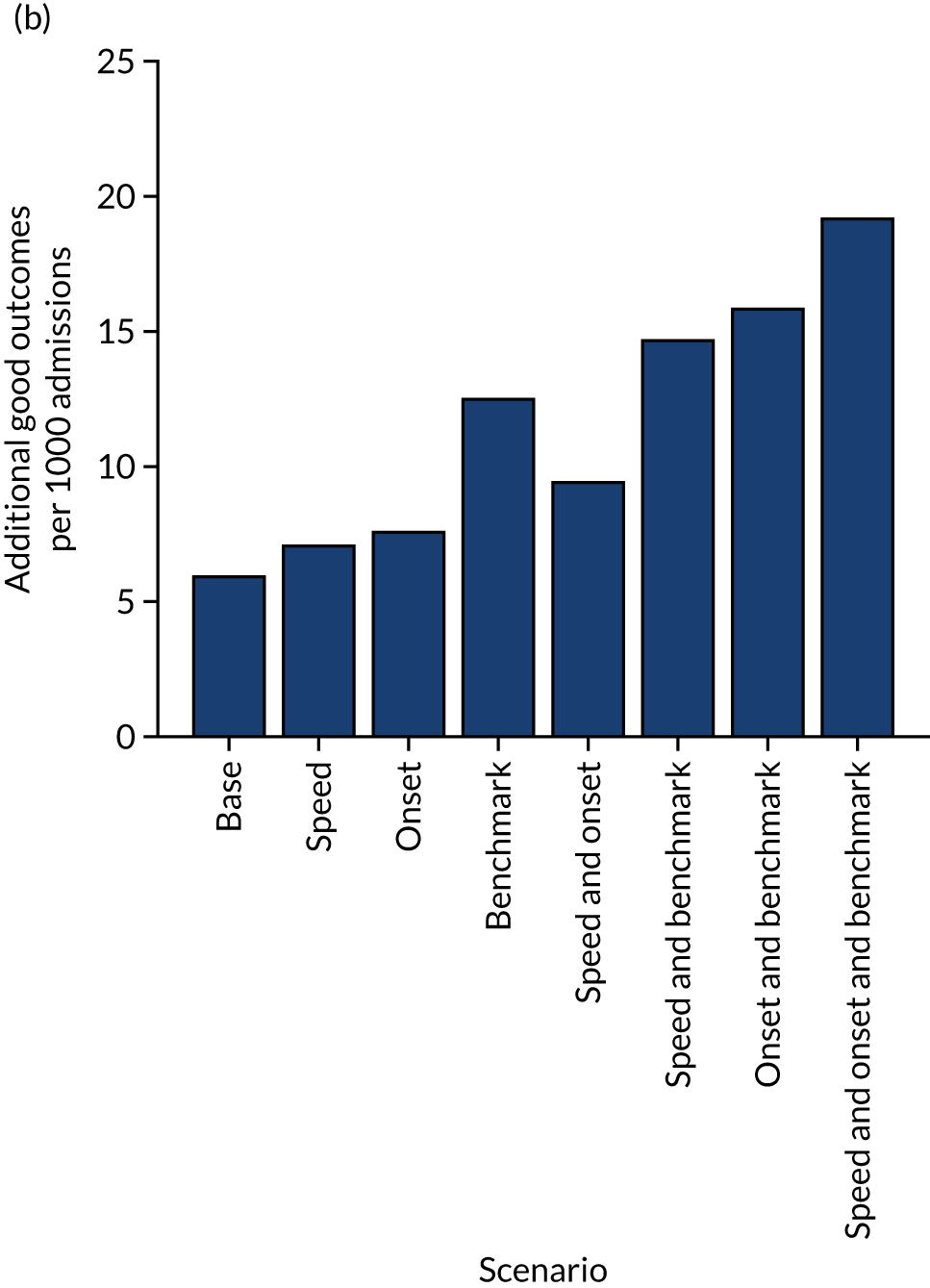

Figure 33 shows the predicted thrombolysis use at each hospital in accordance with the majority vote of the benchmark hospital models. When results are weighted by the number of patients attending each hospital, using the 30 benchmark hospital models to decide if a patient would receive thrombolysis, then this would increase national thrombolysis use from 29.5% to 36.9% of patients arriving within 4 hours of known stroke onset. If decisions were, instead, made by the majority vote of the top 30 hospitals with the highest true (actual) thrombolysis rate, rather than choosing benchmark hospitals using a standard patient cohort, then overall thrombolysis rate would increase from 29.5% to 32.7% of patients arriving within 4 hours of known stroke onset.

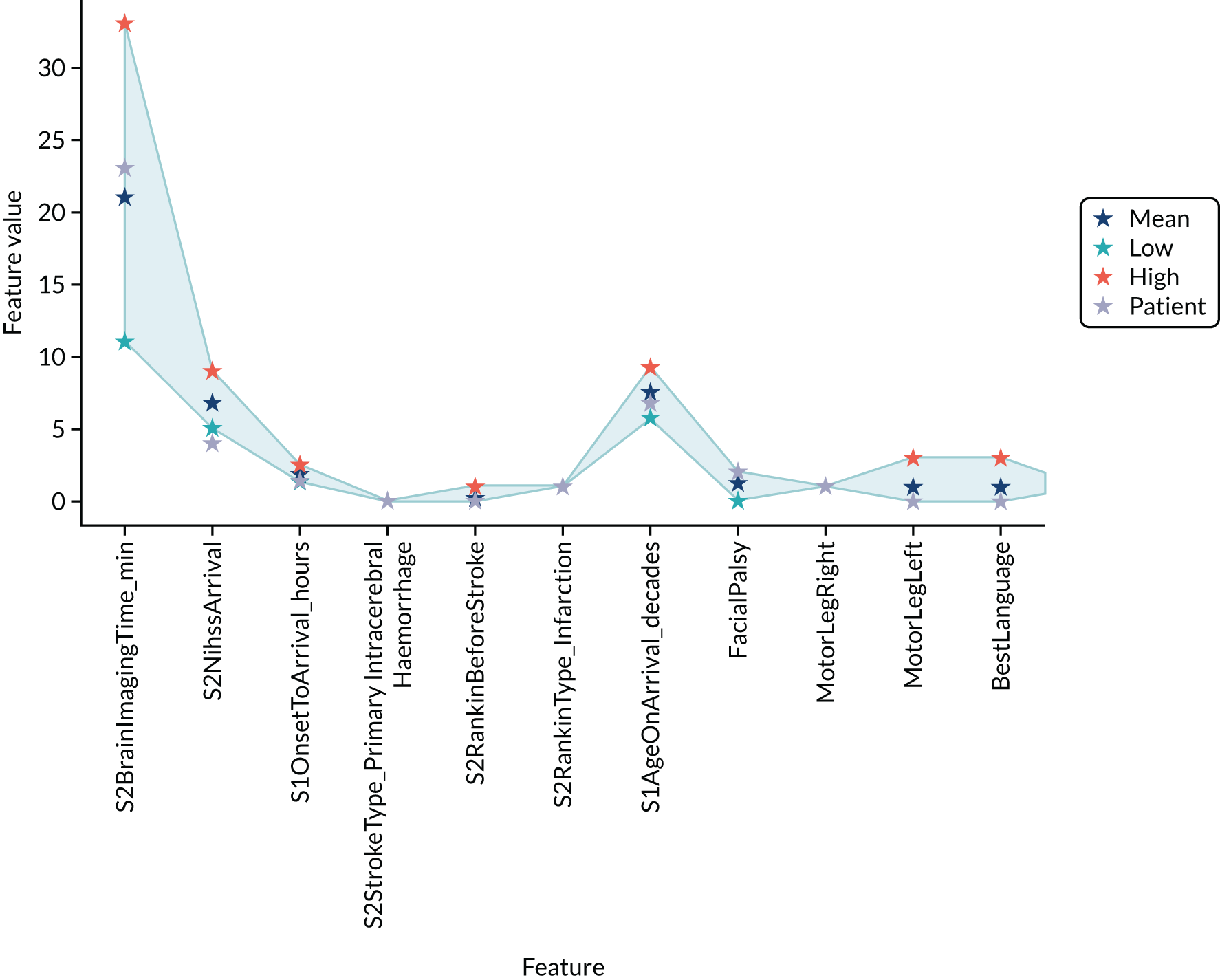

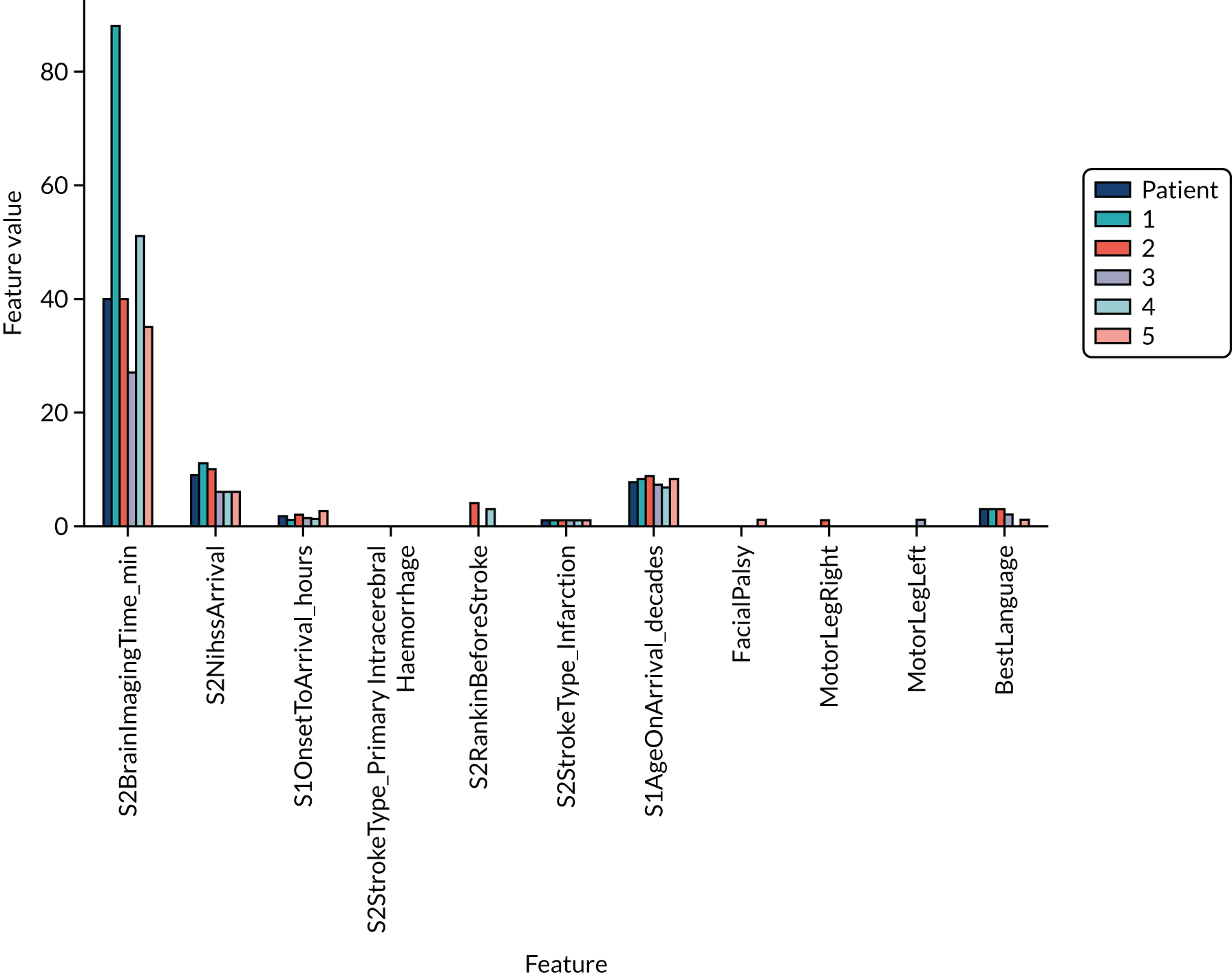

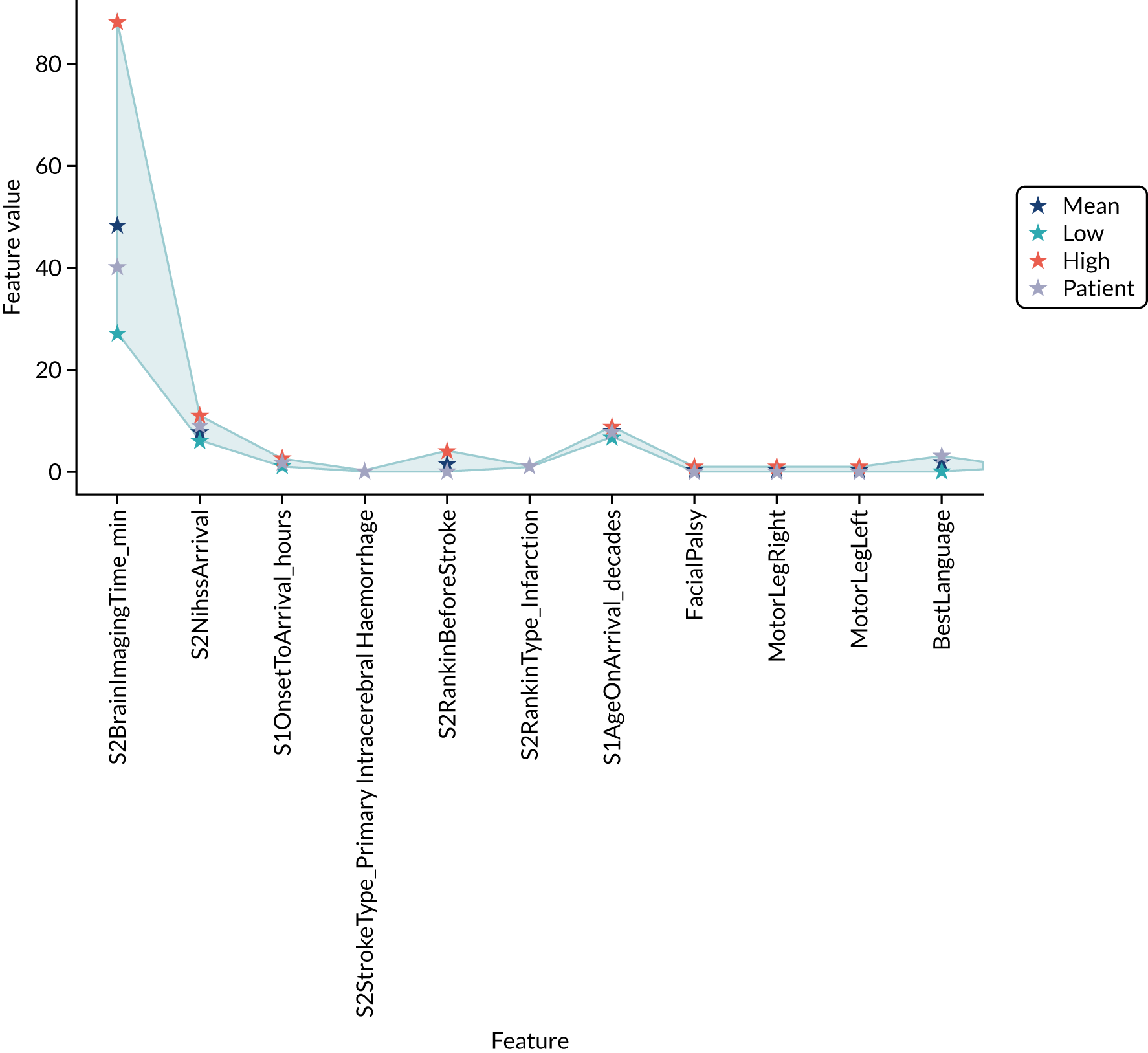

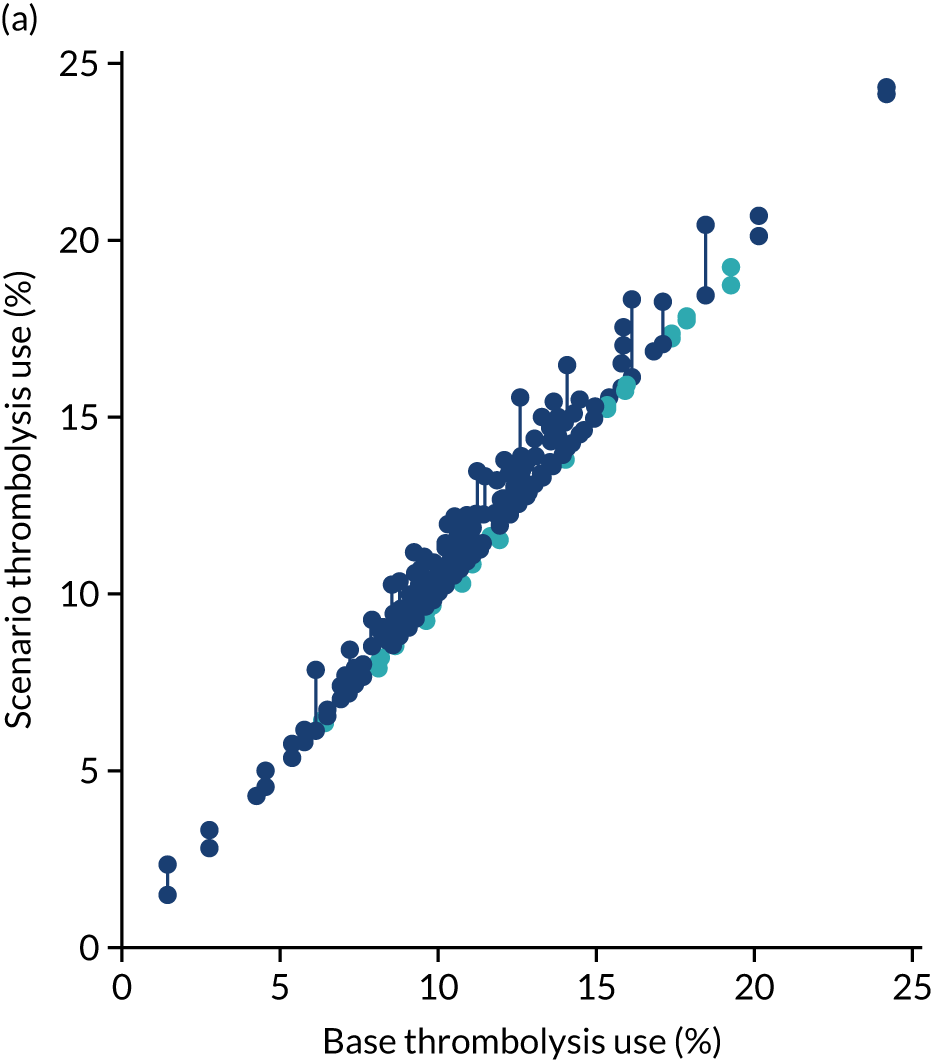

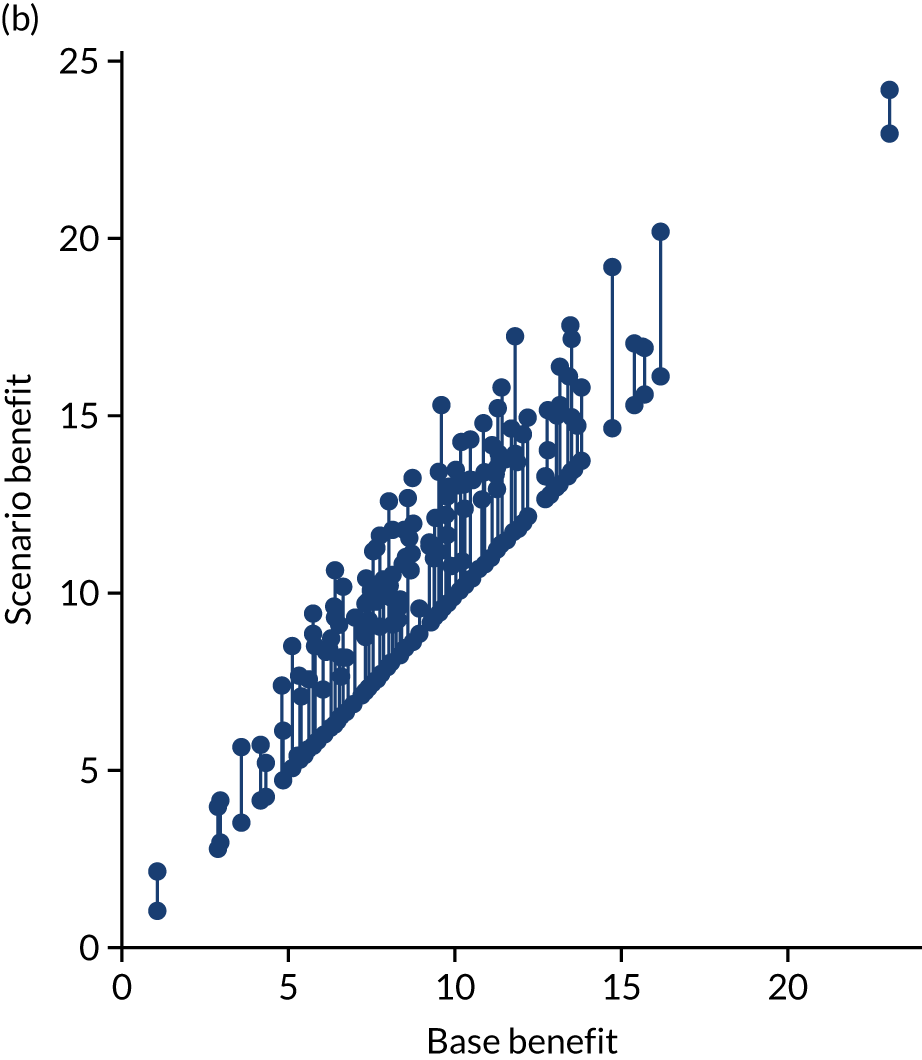

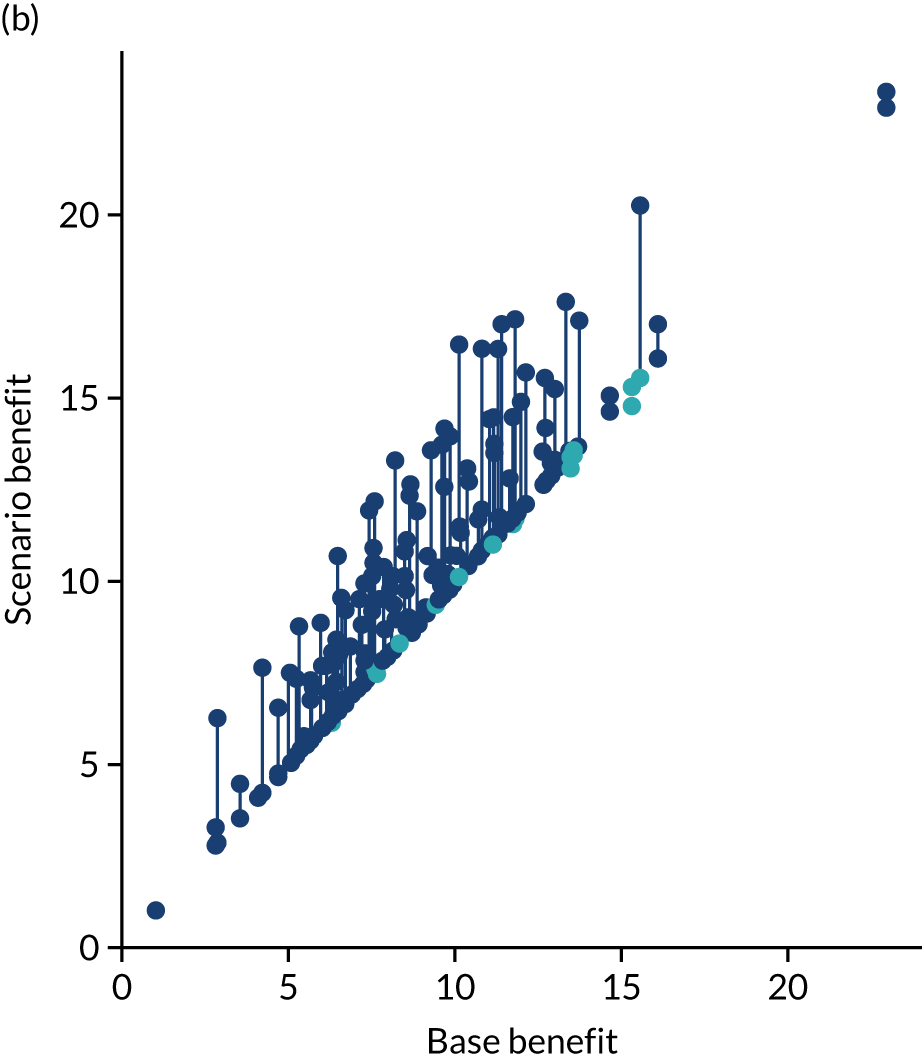

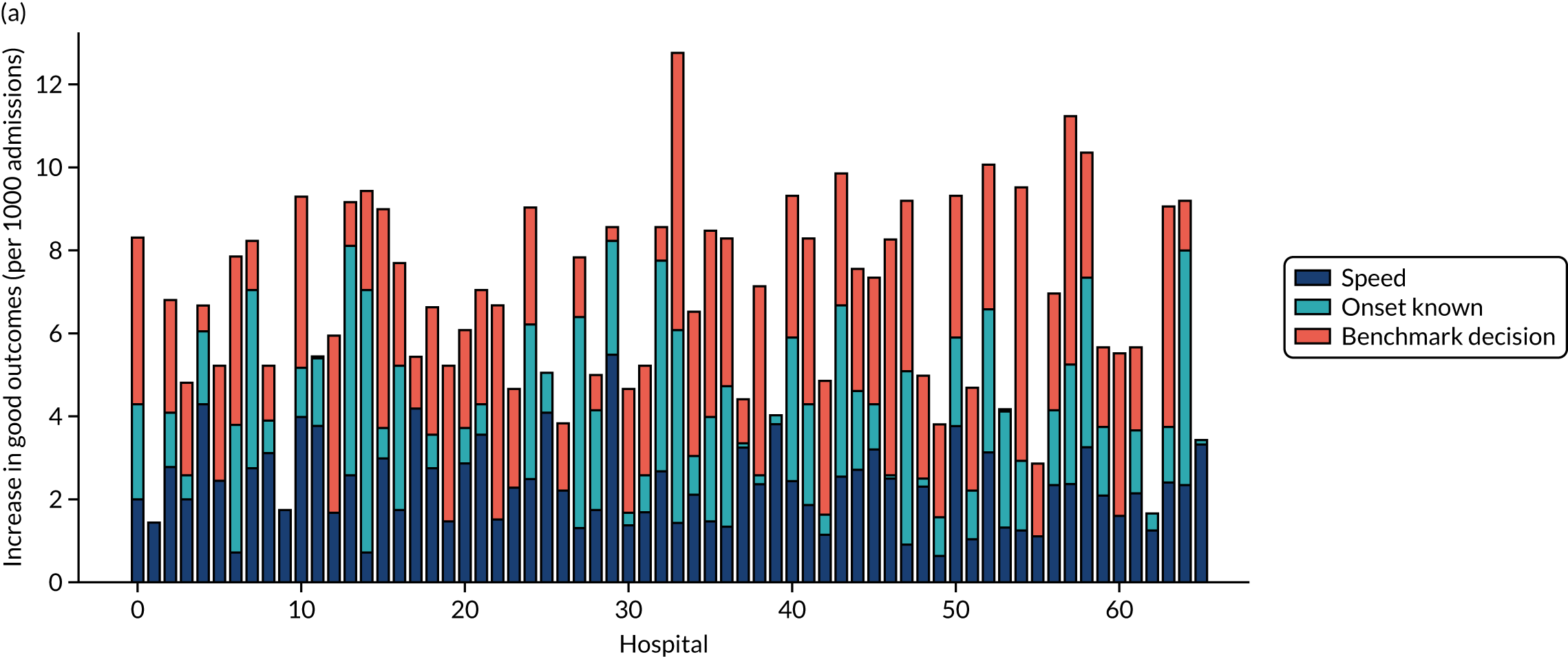

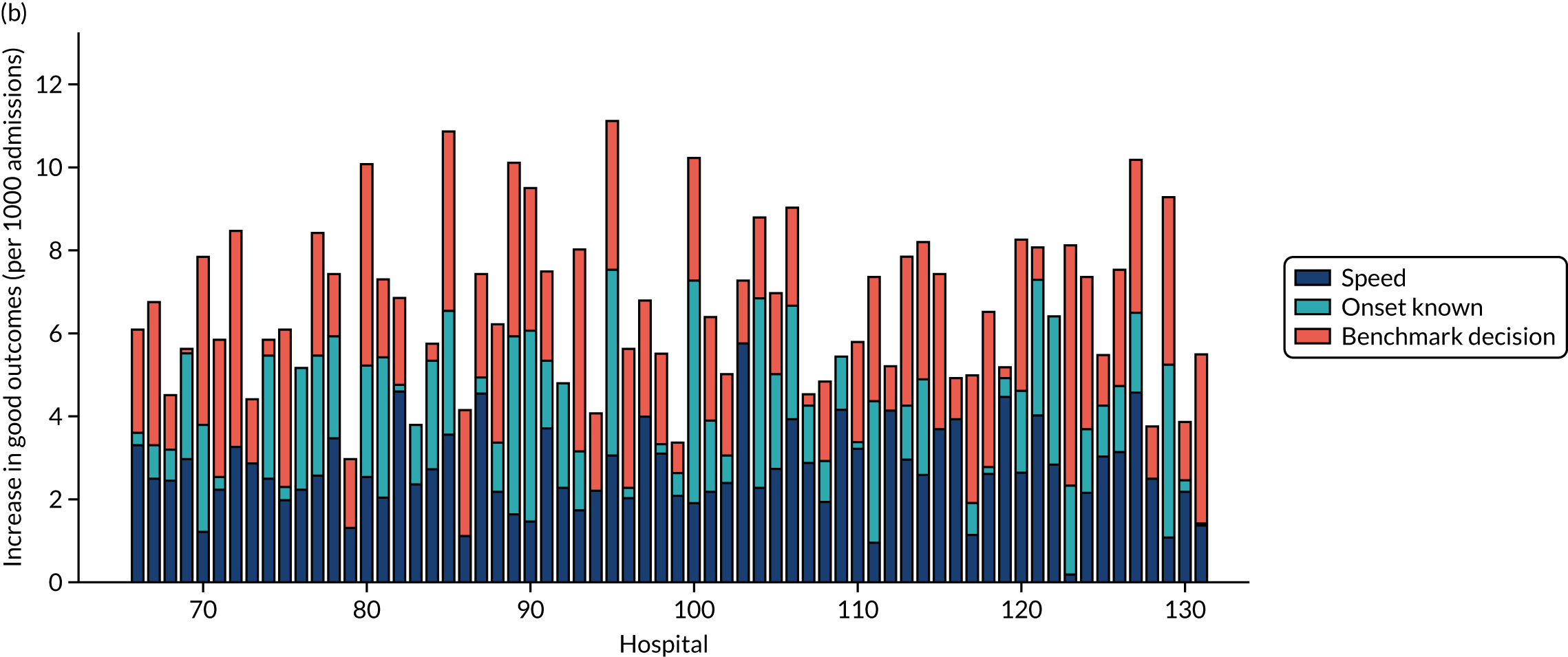

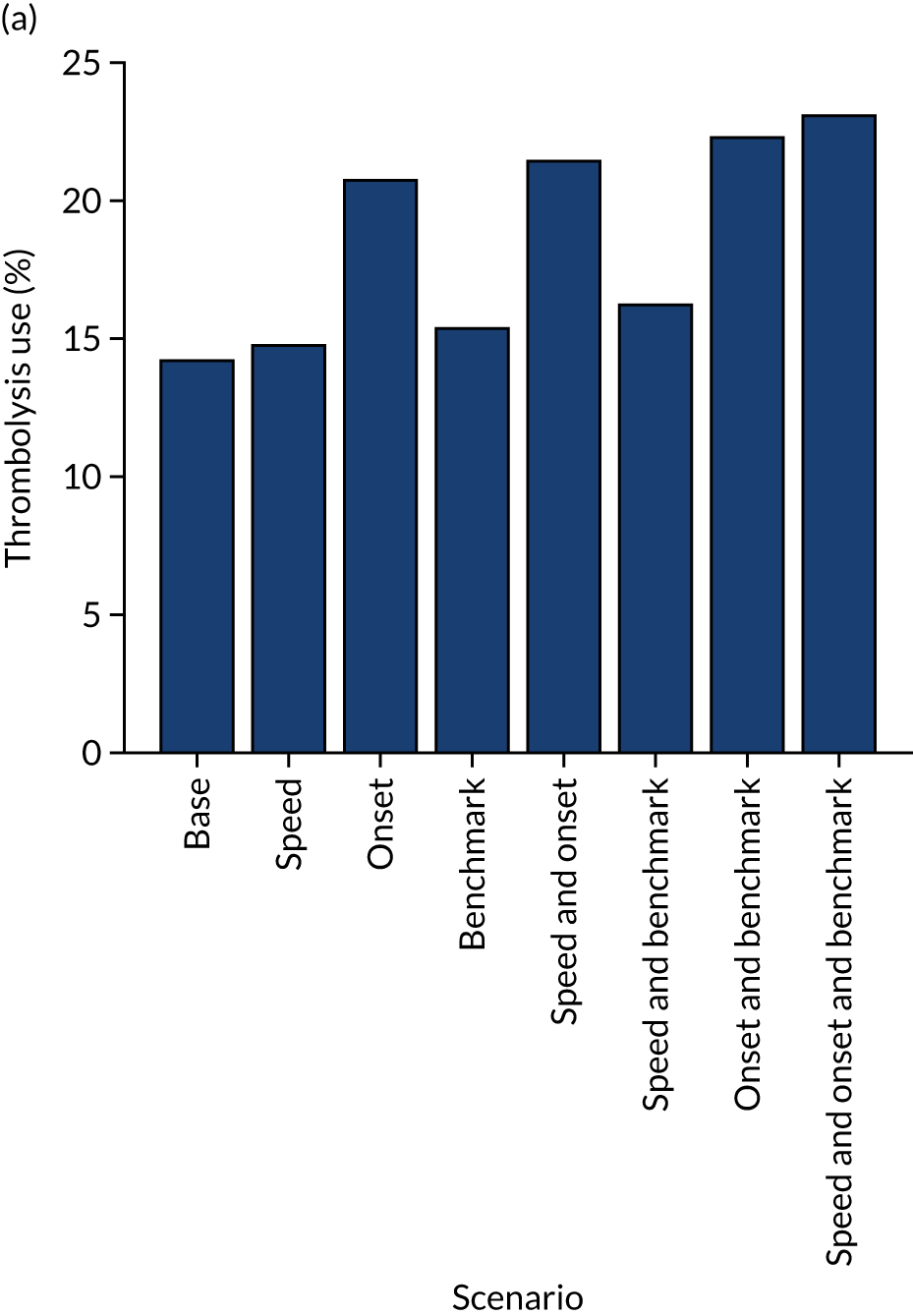

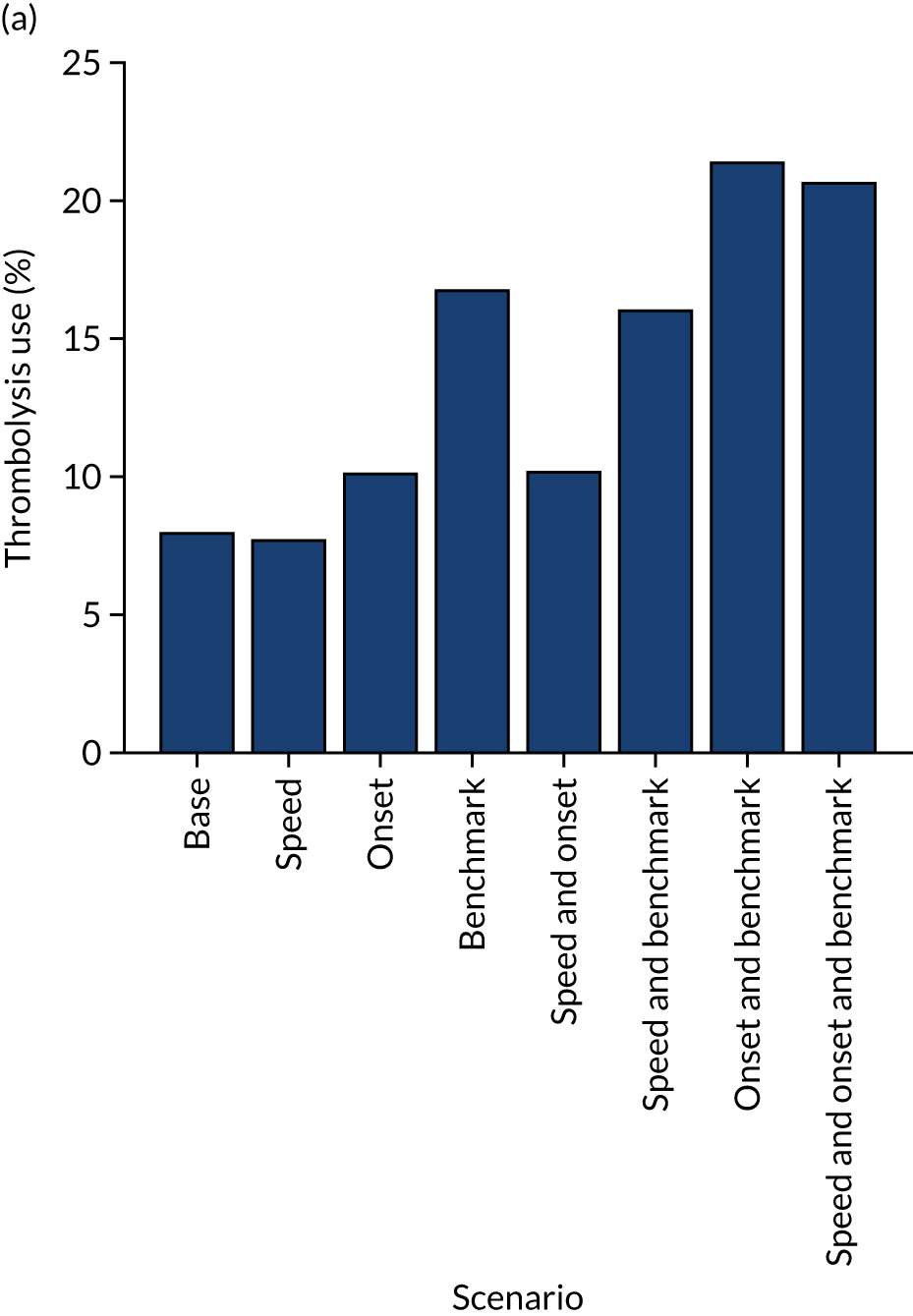

FIGURE 33.