Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 10/1008/07. The contractual start date was in April 2011. The final report began editorial review in July 2013 and was accepted for publication in January 2014. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2014. This work was produced by Wong et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Background

Academics and policy-makers are increasingly interested in policy-friendly approaches to evidence synthesis which seek to illuminate issues and understand contextual influences on whether or not, why and how interventions might work. 1–4 A number of different approaches have been used to try to address this goal, such as meta-ethnography, grounded theory, thematic synthesis, textual narrative synthesis, meta-study, critical interpretive synthesis, ecological triangulation and framework synthesis. 5 Qualitative and mixed-method reviews are often used to supplement, extend and, in some circumstances, replace Cochrane-style systematic reviews. 6–12 Theory-driven approaches to such reviews include realist and meta-narrative reviews. Realist review was originally developed by Pawson for complex social interventions to explore systematically how contextual factors influence the link between intervention and outcome (summed up in the question: what works, how, for whom, in what circumstances and to what extent?). 13,14 Greenhalgh et al. 15 developed a meta-narrative review for use when a policy-related topic has been researched in different ways by multiple groups of scientists, especially when key terms have different meanings in different literatures.

Quality checklists and publication standards are common (and, increasingly, expected) in health services research – see for example CONSORT (Consolidated Standards of Reporting Trials) for randomised controlled trials,16 AGREE (Appraisal of Guidelines for Research and Evaluation) for clinical guidelines,17 PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) for Cochrane-style systematic reviews18 and SQUIRE (Standards for Quality Improvement Reporting Excellence) for quality improvement studies. 19 They have two main purposes: they help researchers design and undertake robust studies, and they help reviewers and potential users of research outputs assess validity and reliability. This project seeks to produce a set of quality criteria and comparable publication standards for realist and meta-narrative reviews.

What are realist and meta-narrative reviews?

Realist and meta-narrative reviews are systematic, theory-driven interpretative techniques, which were developed to help make sense of heterogeneous evidence about complex interventions applied in diverse contexts in a way that informs policy. Interventions have been described as theory incarnate,20 driven by hypotheses, hunches, conjectures and aspirations about individual and social betterment. Strengthening a review process that helps to sift and sort these theories may be an important step in producing better interventions.

Realist reviews seek to unpack the relationships between context, mechanism and outcomes (sometimes abbreviated as CMO), i.e. how particular contexts have triggered (or interfered with) mechanisms to generate the observed outcomes. 14 Their philosophical basis is realism, which assumes the existence of an external reality (a real world) but one that is filtered (i.e. perceived, interpreted and responded to) through human senses, volitions, language and culture. Such human processing initiates a constant process of self-generated change in all social institutions, a vital process that has to be accommodated in evaluating social programmes.

In order to understand how outcomes are generated, the roles of both external reality and human understanding and response need to be incorporated. Realism does this through the concept of mechanisms, whose precise definition is contested but for which a working definition is, ‘. . . underlying entities, processes, or structures which operate in particular contexts to generate outcomes of interest’. 21 Different contexts interact with different mechanisms to make particular outcomes more or less likely; hence, a realist review produces recommendations of the general format ‘in situations [X], complex intervention [Y], modified in this way and taking account of these contingencies, may be appropriate’. Realist reviews can be undertaken in parallel with traditional Cochrane reviews (see, for example, the complementary Cochrane and realist reviews of school feeding programmes in disadvantaged children). 22,23 The Cochrane review produced an estimate of effect size, whereas the realist review addressed why and how school feeding programmes worked, explained examples of when they did not work, and produced practical recommendations for policy-makers.

Meta-narrative reviews were originally developed by Greenhalgh et al. 15,24 to try to explain the apparently disparate data encountered in their review of diffusion of innovation in health-care organisations. Core concepts such as diffusion, innovation, adoption and routinisation had been conceptualised and studied very differently by researchers from a wide range of primary disciplines including psychology, sociology, economics, management and even philosophy. While some studies had been framed as the implementation of a complex intervention in a social context (thus lending themselves to a realist analysis), others had not. Preliminary questions needed to be asked, such as ‘What exactly did these researchers mean when they used the terms ‘diffusion’, ‘innovation’ and so on?’; ‘How did they link the different concepts in a theoretical model – either as a context–mechanism–outcome proposition or otherwise?’; and ‘What explicit or implicit assumptions were made by different researchers about the nature of reality?’

These questions prompted the development of meta-narrative review, which sought to illuminate the different paradigmatic approaches to a complex topic area by considering how the same topic had been differently conceptualised, theorised and empirically studied by different groups of researchers. Meta-narrative review is particularly suited to topics where there is dissent about the nature of what is being studied and what is the best empirical approach to studying it. For example, Best et al.,25 in a review of knowledge translation and exchange, asked how different research teams had conceptualised the terms knowledge, translation and exchange – and what different theoretical models and empirical approaches had been built on these different conceptualisations. Thus, meta-narrative review potentially offers another strategy to assist policy-makers to understand and interpret a conflicting body of research and, therefore, to use it more effectively in their work.

The need for standards in theory-driven systematic reviews

Realist and meta-narrative approaches can capitalise on and help build common ground between social researchers and policy teams. Many researchers are attracted to these approaches because they allow systematic exploration of how and why complex interventions work. Policy-makers are attracted to them because they are potentially able to answer questions relevant to practical decisions (not merely ‘What is the impact of X?’ but ‘If we invest in X, to which particular sectors should we target it, how might implementation be improved and how might we maximise its impact?’). As interest in such approaches is burgeoning, it is our experience that these approaches are sometimes being applied in ways that are not always true to the core principles set out in previous methodological guidance. 4,14,15,26 Some reviews published under the realist banner are not systematic, not theory driven and/or not consistent with realist philosophy. The meta-narrative label has also been misapplied in reviews which have no systematic methodology. For these reasons, we believe that the time has come to develop formal standards and training materials.

There is a philosophical problem here, however. Realist and meta-narrative approaches are interpretive processes (that is, they are based on building plausible evidenced explanations of observed outcomes, presented predominantly in narrative form); hence, they do not easily lend themselves to a formal procedure for quality checking. Indeed, we have argued previously that the core tasks in such reviews are thinking, reflecting and interpreting. 4,15 In these respects realist and meta-narrative reviews face a problem similar to that encountered in assessing qualitative research, namely the extent to which guidelines, standards and checklists can ever capture the essence of quality. Some qualitative researchers are openly dismissive of the technical checklist approach as an assurance of quality in systematic review. 27 While we acknowledge such views, we believe that from a pragmatic perspective, formal quality criteria – with appropriate caveats – are likely to add to, rather than detract from, the overall quality of outputs in this field. Scientific discovery is never the mere mechanical application of set procedures. 28 Accordingly, research protocols should aim to guide rather than dictate.

The online Delphi method

This study used the online Delphi method, and in this section we introduce, explain and justify our use of this method. The essence of the Delphi technique is to engender reflection and discussion among a panel of experts with a view to getting as close as possible to a consensus and documenting both the agreements reached and the nature and extent of residual disagreement. 29 It was used, for example, to set the original care standards which formed the basis of the Quality and Outcomes Framework for UK general practitioners. 30 Factors which have been shown to influence quality in the Delphi process include:

- composition (expertise, diversity) of the expert panel
- selection of background papers and evidence to be discussed by that panel (completeness, validity, representativeness)
- adequacy of opportunities to read and reflect (balance between accommodating experts’ busy schedules and keeping to study milestones)
- qualitative analysis of responses (depth of reflection and scholarship, articulation of key issues)
- quantitative analysis of responses (appropriateness and accuracy of statistical analysis, clarity of presentation when this is fed back)
- how dissent and ambiguity are treated (e.g. avoidance of groupthink, openness to dissenting voices). 29,31,32

Evidence suggests that the online medium is more likely to improve than jeopardise the quality of the consensus development process. Mail-only Delphi panels have been shown to be as reliable as face-to-face panels. 33 Asynchronous online communication has well-established benefits in promoting reflection and knowledge construction. 34 There are over 100 empirical examples of successful online Delphi studies conducted between geographically dispersed participants (for examples see Keeney et al.,32 Elwyn et al.,35 Greenhalgh and Wengraf,36 Hart et al.,37 Holliday and Robotin,38 and Pye and Greenhalgh39). We were unable to find any online Delphi study which identified the communication medium as a significant limitation. On the contrary, many authors described significant advantages of the online approach, especially when dealing with an international sample of experts. One group commented ‘our online review process was less costly, quicker and more flexible with regard to reviewer time commitment, because the process could accommodate their individual schedules’. 38

Critical commentaries on the Delphi process have identified a number of issues which may prove problematic, for example ‘issues surrounding problem identification, researcher skills and data presentation’29 or defining consensus; issues of anonymity; time requirements for data collection, analysis, feedback to participants and obtaining responses on feedback; defining and selecting experts; enhancing response rates and deciding on how many rounds to undertake. 40 These comments suggest that it is the underlying design and rigour of the research process which is key to the quality of the study and not the medium through which this process happens.

Chapter 2 Objectives

For this project we set out to:

1. collate and summarise the literature on the principles of good practice in realist and meta-narrative reviews, highlighting in particular how and why these differ from conventional forms of systematic review and from each other
2. consider the extent to which these principles have been followed by published and in-progress reviews, thereby identifying how rigour may be lost and how existing principles could be improved
3. produce, in draft form, an explicit and accessible set of methodological guidance and publication standards using an online Delphi method with an interdisciplinary panel of experts from academia and policy
4. produce training materials with learning objectives linked to these steps and standards
5. refine these standards and training materials prospectively on real reviews-in-progress, capturing methodological and other challenges as they arise
6. synthesise expert input, evidence review and real-time problem analysis into more definitive guidance and standards
7. disseminate these guidance and standards to audiences in academia and policy.

Chapter 3 Methods

Overview of methods

We used a range of methods to meet the objectives we set out above. In this section we provide a brief overview of the methods we used and how they related to each other. The following methods sections outline specific aspects of the methods we used in more detail.

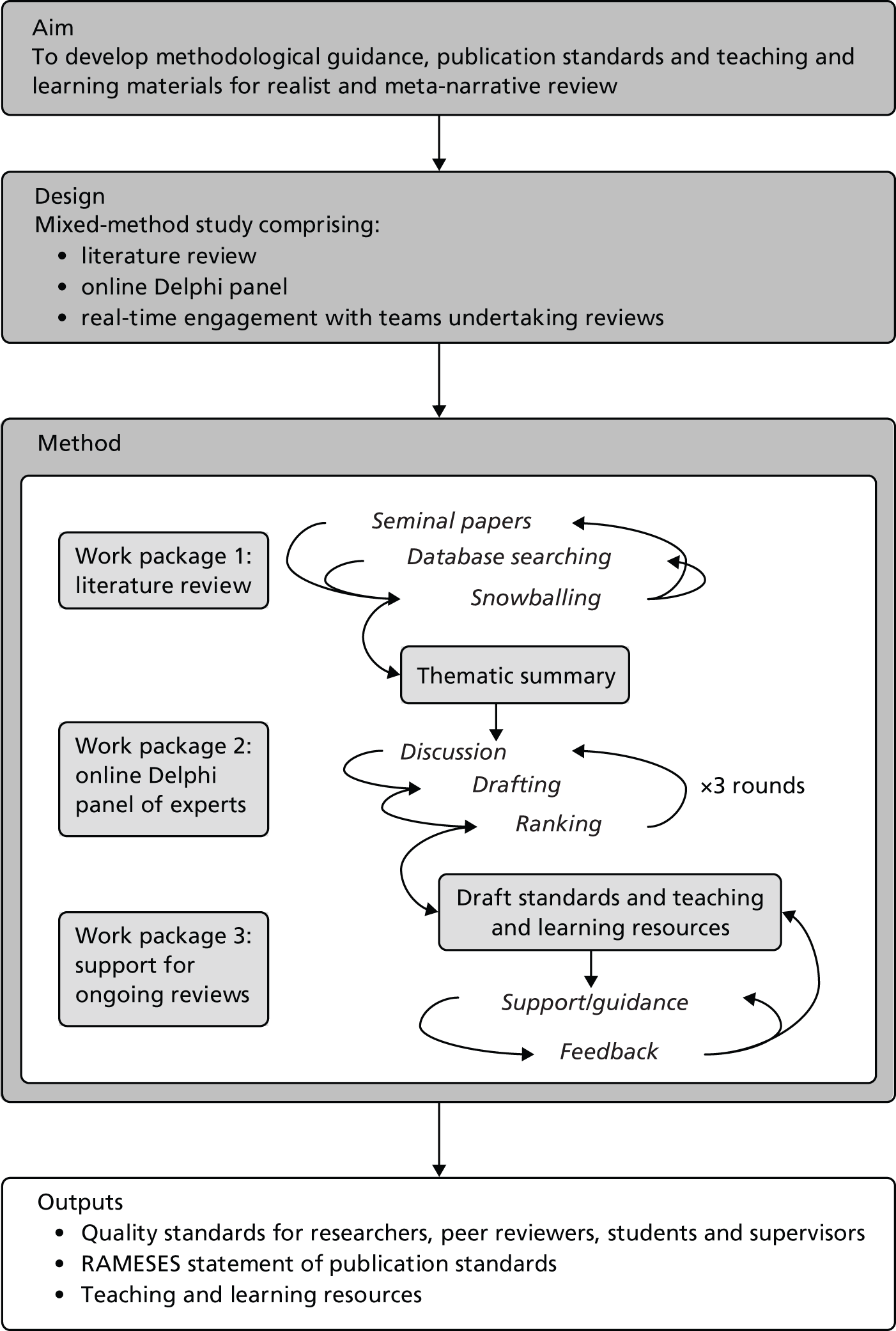

To fulfil objectives 1 and 2 we undertook a narrative review of the literature that was supplemented by collating feedback from presentations and workshops. We synthesised our findings into briefing materials [one for realist synthesis (RS) and another for meta-narrative reviews]. We recruited members to two Delphi panels, which had wide representation from researchers, students, policy-makers, theorists and research sponsors. We used the briefing materials to brief the Delphi panel, so they could help us in fulfilling objective 3. For objective 4, we drew not only on our experience in developing and delivering education materials, but also relevant feedback from the Delphi panel, an e-mail list we set up specifically for this project (www.jiscmail.ac.uk/RAMESES), training workshops and the review teams we supported methodologically. To help us refine our publication standards (objective 5) we captured methodological and other challenges that arose within the realist or meta-narrative review teams we provided methodological support to. To produce the definitive publication standards, quality standards and training materials (objective 6), we synthesised expert input (from the Delphi panel), literature review and real-time problem analysis (e.g. feedback from the e-mail list, training sessions and workshops, and presentations). Throughout this project we did not set specific time points when we would refine the drafts of our project outputs. Instead, we iteratively and contemporaneously fed any data we captured into our draft publication standards, quality standards and training materials, making changes gradually. Only our Delphi panels ran within a specific time frame. The definitive guidance and standards were, therefore, the product of continuous refinements. We addressed objective 7 through academic publications, online resources and delivery of presentations and workshops. 
Figure 1 provides a pictorial overview of how the different methods we used fed into each other.

FIGURE 1. Overview of study processes. RAMESES, Realist And Meta-narrative Evidence Syntheses – Evolving Standards.

Details of literature search methods

Prior to the start of this project we had undertaken initial exploratory searches. These were rapid searches that were not intended to be comprehensive: they involved each project team member identifying in their personal files examples of realist and/or meta-narrative reviews. To identify further reviews, we undertook a search of the reference lists of each retrieved review. This two-step process yielded 13 reviews that were later used by our expert librarian to develop and refine our searches (see Appendix 1). We found that the literature in this field was small but expanding rapidly, of broad scope, variable quality and inconsistently indexed. Our purpose for identifying published reviews was not to complete a census of realist and meta-narrative studies. We make no claims that the review we undertook was exhaustive; we have not published it, and never intended that it should be published, as a stand-alone piece of research. The main purpose of this review was to enable us to produce the briefing materials for our two Delphi panels (objective 3), not to produce an exhaustive summary of all research ever published on the topic. As such, the review we undertook would best be considered as being a rapid, accelerated or truncated narrative review. Such an approach will predictably produce limitations and these are discussed in Chapter 5, Limitations.

We wanted our search to allow us to pinpoint real examples (or publications claiming to be examples) that provide rich detail on their usage of those review activities we wish to scrutinise and formalise. To that end, and drawing on a previous study which demonstrated the effectiveness and efficiency of the methods proposed,41 and employing the skills of a specialist librarian, we searched 16 electronic databases from inception (where applicable) to 15 June 2010. The databases searched are listed below (the number of hits found with each database searched may be found in Table 1):

- Academic Search Complete
- Cumulative Index to Nursing and Allied Health Literature (CINAHL)
- The Cochrane Library
- Dissertation Abstracts
- EMBASE
- Education Resources Information Center (ERIC)
- Global Health
- Google
- HealthSTAR
- MEDLINE
- PASCAL [database of INIST (Institut de l’Information Scientifique et Téchnique)]
- PsycINFO
- Scopus
- Sociological Abstracts
- Social Policy and Practice
- Web of Science [Science Citation Index (SCI), Social Science Citation Index (SSCI), Arts and Humanities Citation Index (AHCI)].

| Database | Hits returned |
|---|---|
| Academic Search Complete | 69 |
| CINAHL | 28 |
| The Cochrane Library | 4 |
| Dissertation Abstracts | 9 |
| EMBASE | 39 |
| ERIC | 2 |
| Global Health | 10 |
| HealthSTAR | 20 |
| MEDLINE | 43 |
| PASCAL | 6 |
| PsycINFO | 14 |
| Scopus | 182 |
| Web of Science (SCI and SSCI combined) | 190 |
| Sociological Abstracts | 0 |
| Social Policy and Practice | 0 |
| 0 | |
| Total retrieved | 616 |
| Duplicates | 368 |
| Files screened | 248 |
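The totals in Table 1 can be checked with a few lines of arithmetic. The sketch below is illustrative only: it copies the per-database hit counts from the table (leaving out the one row that has no database name) and confirms that they sum to the reported total, and that removing the reported duplicates leaves the reported number of files screened. The variable names are our own.

```python
# Hit counts copied from Table 1. The row without a database name is omitted;
# it records 0 hits, so it does not change the sum.
hits = {
    "Academic Search Complete": 69,
    "CINAHL": 28,
    "The Cochrane Library": 4,
    "Dissertation Abstracts": 9,
    "EMBASE": 39,
    "ERIC": 2,
    "Global Health": 10,
    "HealthSTAR": 20,
    "MEDLINE": 43,
    "PASCAL": 6,
    "PsycINFO": 14,
    "Scopus": 182,
    "Web of Science (SCI and SSCI combined)": 190,
    "Sociological Abstracts": 0,
    "Social Policy and Practice": 0,
}

total_retrieved = sum(hits.values())      # reported in Table 1 as 616
duplicates = 368                          # reported in Table 1
files_screened = total_retrieved - duplicates

print(total_retrieved, files_screened)    # prints "616 248"
```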

We used the following approaches in our searches:

- A simple truncated text-word search was conducted on all databases. Where adjacency (ADJ) was available as a search operator (Ovid databases), we used: (Meta-narrative OR metanarrative OR realist) ADJ (review* OR protocol* OR synthesis OR syntheses OR technic OR technics OR technique*). Where adjacency was not available as a search operator, we used: (metanarrative OR meta-narrative OR realist) AND (review* OR synthesis OR syntheses OR protocol* OR technic OR technics OR technique*). In the latter case, the search was limited to the title field. The strategy was developed based on a collection of 13 published reviews we had identified in our exploratory searches and was piloted and refined to produce the most sensitive search strategy for the topic.
- Citation chaining was performed on databases where this feature was available (Scopus and Web of Science at the time of the search). Seminal citations were followed, with the reasoning that anyone using realist or meta-narrative techniques would be likely to cite these references. 4,14,15
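The two variants of the text-word search described above differ only in the operator that joins the two groups of terms. A minimal sketch of how such query strings might be assembled is given below; the function name `build_query` and its parameter are hypothetical, and the exact syntax accepted by each database (including how a title-field limit is expressed) will differ by platform and is not shown.

```python
# Term groups taken from the search strategy described in the text.
METHOD_TERMS = ["meta-narrative", "metanarrative", "realist"]
REVIEW_TERMS = [
    "review*", "protocol*", "synthesis", "syntheses",
    "technic", "technics", "technique*",
]


def build_query(use_adjacency: bool) -> str:
    """Join the two term groups with ADJ where the platform supports
    adjacency, and with AND otherwise (hypothetical helper)."""
    operator = "ADJ" if use_adjacency else "AND"
    left = " OR ".join(METHOD_TERMS)
    right = " OR ".join(REVIEW_TERMS)
    return f"({left}) {operator} ({right})"


# Adjacency variant, as used on platforms with an ADJ operator.
print(build_query(use_adjacency=True))
# Fallback variant; in the study this form was limited to the title field.
print(build_query(use_adjacency=False))
```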

Results were kept separate for each database in RefWorks (version 5; RefWorks-COS, Bethesda, MD, USA) reference management software and were then collated into a separate merged file from which duplicates were removed.
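The merge-and-deduplicate step was carried out in RefWorks, and its matching rules are not described here. Purely as an illustration of the idea, the sketch below merges per-database result lists and treats two records as duplicates when their normalised title and year match. The matching rule, the record fields and the example records are all assumptions made for the illustration, not a description of what RefWorks does.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and spacing so that
    trivially different renderings compare equal (assumed rule)."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_and_deduplicate(results_by_database):
    """Merge per-database record lists, keeping the first copy of each
    record and counting later copies as duplicates."""
    seen = set()
    merged = []
    duplicates = 0
    for database, records in results_by_database.items():
        for record in records:
            key = (normalise_title(record["title"]), record["year"])
            if key in seen:
                duplicates += 1
                continue
            seen.add(key)
            merged.append({**record, "source": database})
    return merged, duplicates


# Hypothetical records, for illustration only.
example = {
    "MEDLINE": [{"title": "A Realist Review of X.", "year": 2009}],
    "Scopus": [
        {"title": "A realist review of X", "year": 2009},
        {"title": "A meta-narrative review of Y", "year": 2010},
    ],
}
merged, duplicates = merge_and_deduplicate(example)
print(len(merged), duplicates)  # prints "2 1"
```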

No language or study design filters were applied. To construct our sample for further analysis (in which we intended to study both exemplary reviews and those that had flaws), we included any review that claimed to be a realist review or a meta-narrative review. Documents were excluded if they were not a review (e.g. editorials, opinion pieces, commentaries, methods papers) or did not claim to be a realist or meta-narrative review. We did not undertake any independent screening or an audit of a random subset for quality control purposes. The whole searching process from start to the retrieval of all full-text documents took approximately 1 month.

We conducted a thematic analysis of this literature which was initially oriented to addressing seven key areas:

- What are the strengths and weaknesses of realist and meta-narrative review from both a theoretical and a practical perspective?
- How have these approaches actually been used? Are there areas where they appear to be particularly fit (or unfit) for purpose?
- What, broadly, are the characteristics of high- and low-quality reviews undertaken by realist or meta-narrative methods? What can we learn from the best (and worst) examples so far?
- What challenges have reviewers themselves identified (e.g. in the introduction or discussion sections of their papers) in applying these approaches? Are there systematic gaps between the theory and the steps actually taken?
- What is the link between realist and meta-narrative review and the policy-making process? How have published reviews been commissioned or sponsored? How have policy-makers been involved in shaping the review? How have they been involved in disseminating and applying its findings? Are there models of good practice (and of approaches to avoid) for academic–policy linkage in this area?
- How have front-line staff and service users been involved in realist and meta-narrative reviews? If the answer to this is ‘usually, not much’, how might they have been involved and are there examples of potentially better practice which might be taken forward?
- How should one choose between realist, meta-narrative and other theory-driven approaches when selecting a review methodology? How might (for example) the review question, purpose and intended audience(s) influence the choice of review method?

The thematic analysis was led by one member of the review team (GWo). He undertook all stages of the review and shared findings with the rest of the project team so that discussion, debate and refinement of his interpretations of the data in the included reviews could take place. Findings were shared by e-mail and, where necessary, face-to-face meetings took place to discuss any interpretations made of the data. In undertaking our thematic analysis, we familiarised ourselves with the included reviews to identify patterns in the data. We used the questions above, which relate to seven key areas, as a starting point in our sense-making of the data, and as a project team we were aware that the purpose of the review was to produce briefing documents for the Delphi panels. In these panels we wanted to achieve a consensus on quality and reporting standards, and so what we needed from our review of the literature were data to inform us on what might constitute quality in executing and reporting reviews. We accepted that we might need to refine, discard or add questions and topic areas to explore in order to better capture our analysis and understanding of the literature as these emerged from our reading of the papers.

Data were extracted to a Microsoft Excel spreadsheet (Microsoft Corporation, Redmond, WA, USA), which we iteratively refined to capture the data needed to produce our briefing materials. This review was undertaken in a short timeframe, such that the time taken from obtaining full-text documents to producing the final draft for circulation of the briefing documents was approximately 10 weeks. The output of this phase was a provisional summary for each review method that addressed the questions above and highlighted for each question the key areas of knowledge, ignorance, ambiguity and uncertainty. This was distributed to the Delphi panel (as our briefing document) as the starting point for their guidance development work.

Details of online Delphi process

We followed an online adaptation of the Delphi method (see Chapter 1, The online Delphi method) which we had developed and used in a previous study to produce guidance on how to critically appraise research on illness narratives. 36 In that study, a key component of a successful Delphi process was recruiting a wide range of experts, policy-makers, practitioners and potential users of the guidance who could approach the problem from different angles and, especially, people who would respond to academic suggestions by asking ‘so what?’ questions.

Placing the academic–policy/practice tension central to this phase of the research, we planned to construct our Delphi panel to include a majority of experienced academics (e.g. those who have published on theory and method in realist and/or meta-narrative review). We also planned to recruit policy-makers, research sponsors and representatives of third-sector organisations. These individuals were recruited by approaching relevant organisations and e-mail lists [e.g. professional networks of systematic reviewers, CHAIN (Contact, Help, Advice and Information Network; http://chain.ulcc.ac.uk) and INVOLVE (www.invo.org.uk/)]. We approached INVOLVE as we were interested in exploring if we could identify a lay person who might have interest in secondary research and/or informing policy/decision-making through reviews. Those interested in participating were provided with an outline of the study and individuals who indicated greatest commitment and potential to balance the sample were selected. We drew on our own experience of developing standards and guidance, as well as on published papers by CONSORT, PRISMA, SQUIRE, AGREE and other teams working on comparable projects. 16,18,19,42

The Delphi panel was conducted entirely via the internet using a combination of e-mail and an online survey tool (www.surveymonkey.com). We began with a brainstorm round (round 1) in which participants were invited to submit personal views, exchange theoretical and empirical papers on the topic and suggest items that might be included in the publication standards. This was done as a warm-up exercise and panel members were sent our own preliminary summary or briefing document (see Chapter 3, Details of literature search methods). These early contributions, along with our summary, were collated and summarised in a set of provisional items, which were developed into an online survey and sent electronically (via the online survey tool, SurveyMonkey®; Survey Monkey, Palo Alto, CA, USA) to participants for ranking (round 2). Participants were asked to rank each item twice on a 7-point Likert scale (1 = strongly disagree to 7 = strongly agree), once for relevance (i.e. should an item on this theme/topic be included at all in the guidance?) and once for validity (i.e. to what extent do you agree with this item as currently worded?). Those who agreed that an item was relevant, but disagreed on its wording, were invited to suggest changes to the wording via a free-text comments box. In this second round, participants were again invited to suggest additional topic areas and items.

Each participant’s responses were collated and the numerical rankings entered onto an Excel spreadsheet. The response rate, average, mode, median and interquartile range of participants’ responses to each item were calculated. Items that scored low on relevance were omitted from subsequent rounds. Further online discussion was invited on items that scored high on relevance but low on validity (indicating that a rephrased version of the item was needed) and on those where there was wide disagreement about relevance or validity. Following analysis and discussion within the project team, a second list of statements was drawn up and circulated for ranking (round 3). We planned that the process of collation of responses, further e-mail discussion, and reranking would be repeated until a maximum consensus was reached (round 4 et seq.). In practice, very few Delphi panels, online or face to face, go beyond three rounds as participants tend to ‘agree to differ’ rather than move towards further consensus. 36
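The per-item summary statistics and the triage of items described above can be sketched as follows. This is an illustration, not the spreadsheet analysis used in the study: the text does not state numerical cut-offs for 'low', 'high' or 'wide disagreement', so the thresholds `low`, `high` and `wide_iqr` below are hypothetical, as are the function names, the 'retain' outcome and the example ratings.

```python
from statistics import mean, median, multimode, quantiles


def summarise(ratings, panel_size):
    """Summary statistics for one item's 7-point Likert ratings."""
    q1, _, q3 = quantiles(ratings, n=4)  # quartile cut points
    return {
        "response_rate": len(ratings) / panel_size,
        "mean": mean(ratings),
        "median": median(ratings),
        "modes": multimode(ratings),
        "iqr": q3 - q1,
    }


def triage(relevance, validity, low=4, high=6, wide_iqr=2):
    """Decide what happens to an item in the next round.
    All thresholds are hypothetical; the source gives none."""
    if relevance["median"] < low:
        return "omit"
    if relevance["iqr"] > wide_iqr or validity["iqr"] > wide_iqr:
        return "discuss: wide disagreement"
    if relevance["median"] >= high and validity["median"] < high:
        return "discuss: reword"
    return "retain"


# Hypothetical ratings from 7 respondents on a panel of 10.
relevance = summarise([7, 6, 7, 6, 7, 5, 6], panel_size=10)
validity = summarise([3, 4, 2, 4, 3, 5, 3], panel_size=10)
print(triage(relevance, validity))  # prints "discuss: reword"
```

In this example the item is rated as relevant but its wording attracts low validity scores, which corresponds to the case where a rephrased version of the item is needed.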

We had planned to report residual non-consensus as such and to describe the nature of the dissent. Making such dissent explicit tends to expose inherent ambiguities (which may be philosophical or practical) and acknowledges that not everything can be resolved; such findings may be of more use to reviewers than a firm statement that implies that all tensions have been fixed.

Preparing teaching and learning resources

A key aim of our project was to produce publicly accessible resources to support training in realist and meta-narrative review. We anticipate that these resources will need to be adapted and perhaps supplemented for different groups of learners, and interactive learning activities added. 43 We developed, and iteratively refined, draft learning objectives, example course materials and teaching and learning support methods. We drew on our previous work on course development, quality assurance and support for interactive and peer-supported learning in health-care professionals for this aspect of our project. 34,43–45

Real-time refinement

The sponsor of this study, the National Institute for Health Research (NIHR) Health Services and Delivery Research (HSDR) programme, supports secondary research calls for rapid, policy-relevant reviews, some, though not all, of which seek to use realist or meta-narrative methods. We were asked to work with a select sample of teams funded under such calls, as well as other teams engaged in relevant ongoing reviews (selected to balance our sample), to share emerging recommendations and gather real-time data on how feasible and appropriate these recommendations are in a range of different reviews. Over the 27-month duration of this study, we used the feedback we gathered to iteratively refine our draft training materials. Training and support offered to these review teams consisted of three overlapping and complementary packages:

- An all-comers online discussion forum via JISCmail (www.jiscmail.ac.uk/RAMESES) for interested reviewers who were doing or had previously attempted a realist or meta-narrative review. This was run via light-touch facilitation in which we invited discussion on particular topics and periodically summarised themes and conclusions (a technique known in online teaching as weaving). Such a format typically accommodates large numbers of participants since most people tend to lurk most of the time. Such discussion groups tend to generate peer support through their informal, non-compulsory ethos and a strong sense of reciprocity (i.e. people helping one another out because they share an identity and commitment)46 and they are often rich sources of qualitative data. We anticipated that this forum would contribute key themes and ideas to the quality and reporting standards and learning materials throughout the duration of the study.
- Responsive support to our designated review teams. We anticipated that our input to these teams would depend on their needs, interests and previous experience. In our previous dealings with review teams we have been called upon (for example) to assist them in distinguishing context from mechanism in a particular paper, extracting and formalising programme theories, distinguishing middle-range theories from macro or micro theories and developing or adapting data extraction tools, and to advise on data extraction techniques and train researchers in the use of qualitative software for systematic review.
- A series of workshops for designated review teams and other reviewers. We planned to run a series of workshops both to provide training to fellow reviewers interested in using realist or meta-narrative reviews and to get feedback from them about what challenges they faced either learning about or undertaking such reviews.

Chapter 4 Results

In this project we produced three specific outputs for realist and meta-narrative reviews:

- publication standards
- quality standards
- teaching and learning materials (also known as training materials).

We used a range of methods to gather the data that informed the content of each of our intended outputs. This section provides details of the results we obtained from the methods we used and how they contributed to the content of our outputs.

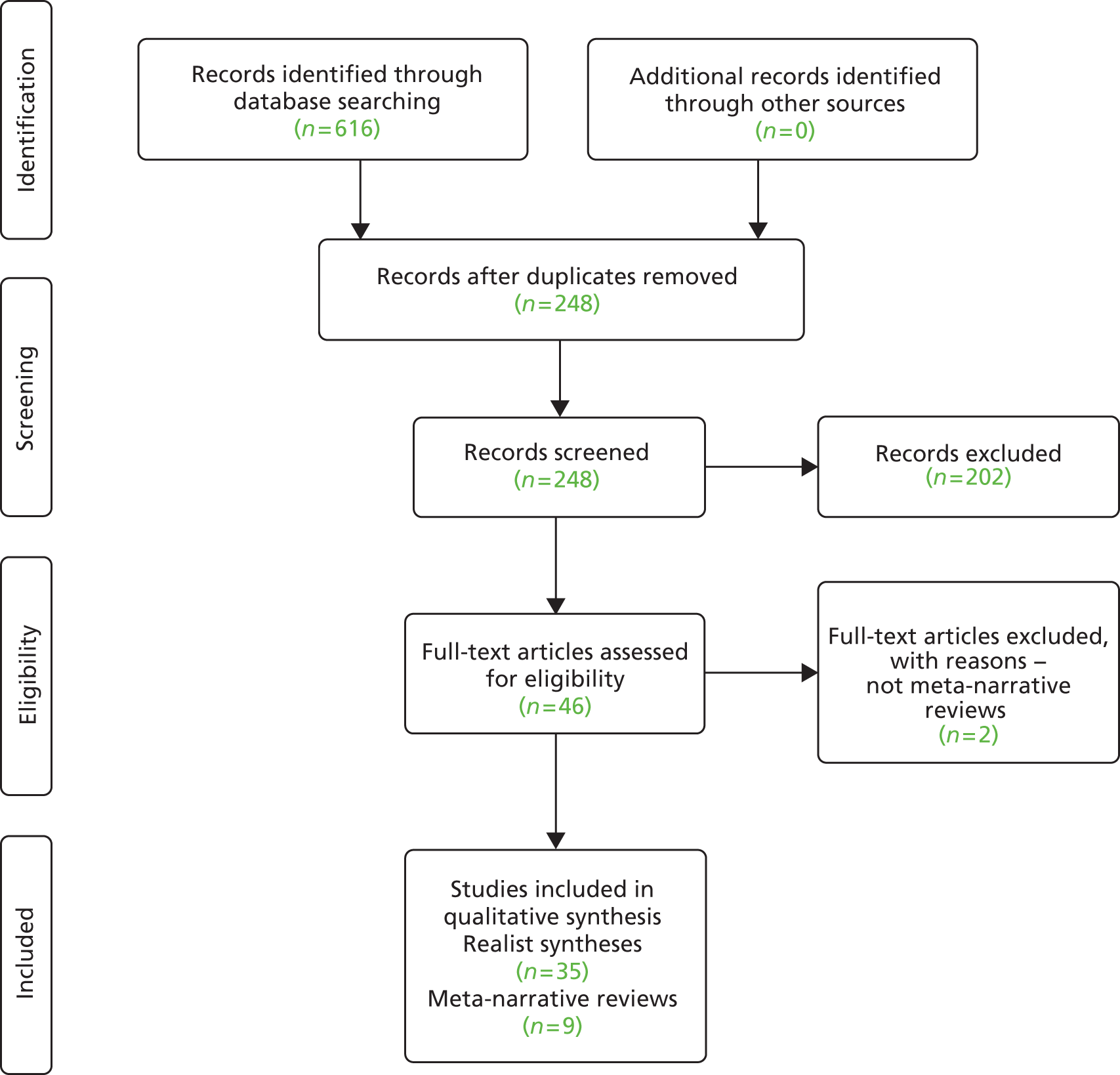

Literature search

Sixteen electronic databases were searched from inception to June 2011 and citation tracking was undertaken generating 248 documents. A flow diagram outlining the disposition of documents can be seen in Figure 2. Table 1 shows the number of hits returned for the databases we searched.

FIGURE 2. Flow diagram outlining the disposition of documents.

One member of the project team (GWo) screened the titles and abstracts and included documents that claimed to be realist or meta-narrative reviews. All the documents judged to be possible realist or meta-narrative reviews were circulated to the whole project team and, through discussion and debate, a final set of included documents was retained. We retrieved what we judged to be 35 possible realist reviews and 11 possible meta-narrative reviews. For the possible realist reviews there was no disagreement within the project team as to inclusion (35 out of 35 were included for analysis). Out of the 11 possible meta-narrative reviews, two were judged not to be meta-narrative reviews, leaving nine documents. Tables 2 and 3 show characteristics of the documents (review title, type of document, year published and topic area) that we drew on for realist reviews and meta-narrative reviews respectively. We conducted a thematic analysis guided initially by the seven questions set out above (see Chapter 3, Details of literature search methods) to produce the briefing documents for the realist and meta-narrative Delphi panels (see Appendix 2). All the data we extracted were either entered into an Excel spreadsheet or written up directly into a draft of our briefing documents.

| Review title | Type of document | Year published | Topic area |

|---|---|---|---|

| Vocational rehabilitation: what works and in what circumstances47 | Journal article | 2004 | Vocational rehabilitation |

| A systematic review of controlled trials of interventions to prevent childhood obesity and overweight: a realistic synthesis of the evidence48 | Journal article | 2006 | Interventions to reduce childhood obesity and overweight |

| A realist synthesis of evidence relating to practice development: Final report to NHS Education for Scotland and NHS Quality Improvement Scotland49 | Full report | 2006 | Practice development |

| aRealist review to understand the efficacy of school feeding programmes22 | Journal article | 2007 | Efficacy of school feeding programmes |

| Evaluating the impact of patient and public involvement initiatives on UK health services: a systematic review50 | Journal article | 2007 | Patient and public involvement |

| Marketing mix standardization in multinational corporations: a review of the evidence51 | Journal article | 2007 | Marketing mix standardisation in multinational corporations |

| Human resource management interventions to improve health workers’ performance in low and middle income countries: a realist review52 | Journal article | 2008 | Human resource management interventions to improve health workers’ performance |

| Does moving from a high-poverty to lower-poverty neighborhood improve mental health? A realist review of ‘Moving to Opportunity’53 | Journal article | 2008 | US Moving to Opportunity programme |

| Primary health care delivery models in rural and remote Australia – a systematic review54 | Journal article | 2008 | Primary health-care delivery models in rural and remote Australia |

| Independent learning literature review (research report DCSF-RR051)55 | Report | 2008 | Independent learning in school children |

| A realist synthesis of randomised control trials involving use of community health workers for delivering child health interventions in low and middle income countries56 | Journal article | 2009 | Community health workers |

| Community-based services for homeless adults experiencing concurrent mental health and substance use disorders: a realist approach to synthesising evidence57 | Journal article | 2009 | Community-based services for homeless adults with concurrent mental health and substance use disorders |

| Water, sanitation and hygiene interventions to combat childhood diarrhoea in developing countries58 | Report | 2009 | Water, sanitation and hygiene interventions in reducing childhood diarrhoea |

| aInternet-based medical education: a realist review of what works, for whom and in what circumstances43 | Journal article | 2009 | Internet-based learning |

| Interventions to promote social cohesion in sub-Saharan Africa59 | Full report | 2010 | Interventions to promote social cohesion in sub-Saharan Africa |

| Implementation of antiretroviral therapy adherence interventions: a realist synthesis of evidence60 | Journal article | 2010 | Antiretroviral adherence interventions |

| Lean thinking in healthcare: a realist review of the literature61 | Journal article | 2010 | Lean thinking |

| District nurses’ role in palliative care provision: a realist review62 | Journal article | 2010 | Role of district nurses in palliative care provision |

| A realist review of evidence to guide targeted approaches to HIV/AIDS prevention among immigrants living in high-income countries63 | PhD thesis | 2010 | Evidence to guide targeted approaches to HIV infection or AIDS prevention among immigrants living in high-income countries |

| Effectiveness of telemedicine: a systematic review of reviews64 | Journal article | 2010 | Effectiveness of telemedicine |

| How equitable are colorectal cancer screening programs which include FOBTs? A review of qualitative and quantitative studies65 | Journal article | 2010 | Equitability of colorectal screening programmes |

| Evidence-based health policy: a preliminary systematic review66 | Journal article | 2010 | Evidence-based health policy |

| Behavioral caregiving for adults with traumatic brain injury living in nursing homes: developing a practice model67 | Journal article | 2010 | Behavioural caregiving for adults with traumatic brain injury living in nursing homes |

| Addressing locational disadvantage effectively68 | Journal article | 2010 | Addressing locational disadvantage |

| Realist review and synthesis of retention studies for health workers in rural and remote areas69 | Report | 2011 | Access to health workers in rural and remote areas |

| aPolicy guidance on threats to legislative interventions in public health: a realist synthesis70 | Journal article | 2011 | Public health legislation |

| Implementing successful intimate partner violence screening programs in health care settings: evidence generated from a realist-informed systematic review71 | Journal article | 2011 | Intimate partner violence |

| An evidence synthesis of qualitative and quantitative research on component intervention techniques, effectiveness, cost-effectiveness, equity and acceptability of different versions of health-related lifestyle advisor role in improving health72 | Report | 2011 | Health-related lifestyle advisors |

| The gradient in health inequalities among families and children: a review of evaluation frameworks73 | Journal article | 2011 | Health inequalities among families and children |

| Effectiveness of the geriatric day hospital – a realist review74 | Journal article | 2011 | Effectiveness of geriatric day hospital |

| Are journal clubs effective in supporting evidence-based decision making? A systematic review. BEME Guide No.1675 | Journal article | 2011 | Effectiveness of journal club in supporting evidence-based decision-making |

| Conducting a realist review of a complex concept in the pharmacy practice literature: methodological issues76 | Journal article | 2011 | Culture in community pharmacy organisations |

| Getting inside acupuncture trials – exploring intervention theory and rationale77 | Journal article | 2011 | Acupuncture |

| Unleashing their potential: a critical realist scoping review of the influence of dogs on physical activity for dog-owners and non-owners78 | Journal article | 2011 | Influence of dogs on physical activity for dog- and non-owners |

| Social networks, social capital and chronic illness self-management: a realist review79 | Journal article | 2011 | Social networks, social capital and chronic illness self-management |

| Review title | Type of document | Year published | Topic area |

|---|---|---|---|

| aDiffusion of innovations in service organisations: systematic literature review and recommendations for future research24 | Journal article | 2004 | Diffusion of innovations |

| Environmental health and vulnerable populations in Canada: mapping an integrated equity-focused research agenda80 | Journal article | 2008 | Environmental health and vulnerable populations |

| Tensions and paradoxes in electronic patient record research: a systematic literature review using the meta-narrative method81 | Journal article | 2009 | Electronic health record |

| The health, social care and housing needs of lesbian, gay, bisexual and transgender older people: a review of the literature82 | Journal article | 2009 | Health, social care and housing needs of lesbian, gay, bisexual and transgender older people |

| The role of urban municipal governments in reducing health inequities: a meta-narrative mapping analysis83 | Journal article | 2010 | Municipal urban governments in reducing health inequalities |

| Knowledge exchange processes in organizations and policy arenas: a narrative systematic review of the literature84 | Journal article | 2010 | Knowledge exchange processes in organisational policy arenas |

| aMeasuring quality in the therapeutic relationship – parts 1 and 285,86 | Journal article | 2010 | Measuring quality in therapeutic relationships |

| Defining the fundamentals of care87 | Journal article | 2010 | Defining the fundamentals of nursing care |

| How can we improve guideline use? A conceptual framework of implementability88 | Journal article | 2011 | Improving guideline use |

Our briefing documents were based on our thematic analysis which was guided by seven initial key areas (see Chapter 3, Details of literature search methods for a list of the key areas). We needed differing levels of immersion and analysis for each of the items we included in our briefing documents. Some were more straightforward to derive from our initial questions and our reading of the literature. We noted that three out of the initial seven questions [(1) What are the strengths and weaknesses of realist and meta-narrative review from both a theoretical and a practical perspective?; (2) How have these approaches actually been used? Are there areas where they appear to be particularly fit (or unfit) for purpose?; and (7) How should one choose between realist, meta-narrative and other theory-driven approaches when selecting a review methodology? How might (for example) the review question, purpose and intended audience(s) influence the choice of review method?] had overlaps and could be collapsed into one topic area for consideration by our Delphi panels. We judged that questions 1, 2 and 7 were related to matching the research question to the method. We noted that in our included reviews, most researchers had also considered this an important topic to address – often through an explanation of why they had deliberately chosen either a realist or meta-narrative review. We therefore included this issue as items 6 and 5 (for meta-narrative and realist reviews respectively) in our briefing document for our Delphi panel. These items asked the Delphi panel members to clarify what a research question would look like in a meta-narrative or realist review.

When doing realist and meta-narrative reviews ourselves, we had previously noted that such reviews often covered broad topics and needed to be progressively focused. Two of our initial questions related to these issues: (5) What is the link between realist and meta-narrative review and the policy-making process? How have published reviews been commissioned or sponsored? How have policy-makers been involved in shaping the review? How have they been involved in disseminating and applying its findings? Are there models of good practice (and of approaches to avoid) for academic-policy linkage in this area?; and (6) How have front-line staff and service users been involved in realist and meta-narrative reviews? If the answer to this is ‘usually, not much’, how might they have been involved and are there examples of potentially better practice which might be taken forward? We had asked questions 5 and 6 to ascertain if other researchers had noted this as an important process and, if they had, what approaches had they used. Within our included reviews, the breadth of the initial topic areas had been identified as a challenge and a range of different approaches had been used to focus reviews. The issue of the need to focus reviews thus seemed to us an important one to include in our briefing documents (as items 9 and 8 for meta-narrative and realist reviews respectively). Items 9 and 8 in our briefing documents asked the Delphi panel members to consider if it was important for researchers to explain how and why their review was shaped and contained.

Question 3 [What, broadly, are the characteristics of high- and low-quality reviews undertaken by realist or meta-narrative methods? What can we learn from the best (and worst) examples so far?] of our initial questions required the most immersion and analysis. With this question we had wanted to understand what processes a review team had to undertake to produce a high-quality review. As a project team we had our own ideas but wanted to explore if these were reflected in our reading of the included reviews. We first had to decide if we could agree among ourselves on which of the included reviews were high, mixed or low quality. To do this, each review was read in detail (GWo) and the characteristics of each review that determined its quality were extracted into an Excel spreadsheet. The headings on this spreadsheet were: study name; type of document; year submitted; topic area; purpose of review; understood method?; methodological comments; lessons for methods; methods for reporting; and challenges reported by reviewers and notes.

Once completed (one for realist reviews and another for meta-narrative reviews), the spreadsheets and the full-text documents were circulated to the rest of the project team and, through e-mail discussion and debate, a consensus was achieved. The next step was to reread each of the included reviews to determine which review processes were necessary to produce a high-quality review. Again, this was led by GWo and each review process was added to a draft of the briefing documents. These drafts were circulated to the rest of the project team and a consensus was achieved through discussion and debate. The briefing materials were the result of seven rounds of revisions.

The contents of our briefing materials were as follows:

- an explanation of how we would like the Delphi panel members to contribute
- background to the review methods
- methodological issues we identified for each method
- a summary of the published examples
- our preliminary thoughts on what might be included as publication standards items.

The complete briefing document circulated to the Delphi panels for realist reviews and meta-narrative reviews can be found in Appendix 2.

Delphi panel

Realist review

We ran the realist review Delphi panels between September 2011 and March 2012. We recruited 37 individuals from 27 organisations in six countries. These comprised researchers in: public or population health (8); evidence synthesis (6); health services research (8); international development (2); and education (2). We also recruited experts in research methodology (6), publishing (1), nursing (2) and policy and decision-making (2). We started round 1 in mid-September 2011 and circulated the briefing document to the panel. We sent two chasing e-mails to all panel members, and within 4 weeks all panel members who indicated that they wanted to provide comments had done so. Twenty-two Delphi panel members provided suggestions of items that should be included in the publication standards. We used the suggestions from the panel members and the briefing document as the basis of the online survey for round 2. Round 2 started at the end of November 2011 and ran until early January 2012. Panel members were invited to complete our online survey and asked to rate each potential item for relevance and clarity. A copy of this survey can be found in Appendix 3. Two reminder e-mails were sent to the panel members. Once the panel had completed their survey we analysed their ratings for relevance and clarity (Table 4).

| Item | Relevance: response rate (%) | Relevance: mode | Relevance: median | Relevance: interquartile range | Content: response rate (%) | Content: mode | Content: median | Content: interquartile range |

|---|---|---|---|---|---|---|---|---|

| Title | 33/37 (89) | 7 | 7 | 6–7 | 31/37 (84) | 7 | 7 | 6–7 |

| Abstract | 34/37 (92) | 7 | 7 | 7–7 | 34/37 (92) | 7 | 6.5 | 5–7 |

| Rationale for review | 35/37 (95) | 7 | 7 | 6–7 | 35/37 (95) | 7 | 7 | 5–7 |

| Objective and focus of review | 33/37 (89) | 7 | 7 | 6–7 | 33/37 (89) | 7 | 7 | 6–7 |

| Changes in review processa | 35/37 (95) | 7 | 6 | 5–7 | 34/37 (92) | 7 | 5.5 | 3–6.75 |

| Rationale for using realist review | 34/37 (92) | 7 | 7 | 6–7 | 33/37 (89) | 7 | 6 | 5–7 |

| Scoping the literature | 35/37 (95) | 7 | 7 | 5.5–7 | 34/37 (92) | 7 | 6 | 5–7 |

| Searching process | 34/37 (92) | 7 | 7 | 6–7 | 34/37 (92) | 7 | 6 | 5–7 |

| Selection and appraisal of documentsa | 35/37 (95) | 7 | 7 | 6–7 | 35/37 (95) | 7 | 6 | 4.5–7 |

| Data extraction | 34/37 (92) | 7 | 7 | 6–7 | 33/37 (89) | 7 | 6 | 5–7 |

| Analysis and synthesis processes | 35/37 (95) | 7 | 7 | 6–7 | 35/37 (95) | 7 | 6 | 5–7 |

| Document flow diagram | 35/37 (95) | 7 | 6 | 6–7 | 35/37 (95) | 7 | 6 | 5–7 |

| Document characteristicsa | 35/37 (95) | 7 | 6 | 5–7 | 35/37 (95) | 7 | 6 | 4.5–7 |

| Main findings | 34/37 (92) | 7 | 7 | 6–7 | 31/37 (84) | 7 | 6.5 | 5–7 |

| Summary of findings | 35/37 (95) | 7 | 7 | 7–7 | 34/37 (95) | 7 | 7 | 6–7 |

| Strength, limitations and future research directions | 35/37 (95) | 7 | 7 | 6–7 | 35/37 (95) | 7 | 6 | 6–7 |

| Comparison with existing literature | 35/37 (95) | 7 | 6 | 5–7 | 35/37 (95) | 7 | 6 | 5–7 |

| Conclusion and Recommendations | 34/37 (92) | 7 | 7 | 6–7 | 34/37 (92) | 7 | 6.5 | 6–7 |

| Funding | 35/37 (95) | 7 | 7 | 7–7 | 35/37 (95) | 7 | 7 | 6–7 |

From round 2, only three items did not achieve a consensus: items 5, 9 and 13 (see Table 4). Based on the suggestions made by the panel members we refined the text for these items. We had asked panel members if they had a preference between the terms realist review or RS. Fourteen (39%) preferred RS, 10 (28%) realist review and 12 (33%) had no preference. Our conclusion was that the terms RS and realist review are synonymous. We also produced a post-round briefing document from round 2, which detailed for each item:

- the response rate
- mode
- median
- interquartile range
- the action we took for each item based on the panel’s ratings
- an anonymised list of all the free-text comments made.
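
As an illustration only, the per-item summary statistics listed above (response rate, mode, median and interquartile range) could be computed along the following lines. This is a minimal sketch and not the project's actual analysis: the function name `summarise_ratings`, the example ratings, the assumed 1 to 7 rating scale and the choice of the inclusive (interpolating) quartile method are all assumptions made for the example. Missing responses are represented as `None`.

```python
from statistics import median, multimode, quantiles


def summarise_ratings(ratings, panel_size):
    """Summarise one item's Delphi ratings (hypothetical helper).

    ratings    -- one entry per panel member; None means no response
    panel_size -- number of panel members invited to rate the item
    """
    responses = [r for r in ratings if r is not None]
    # Assumption: quartiles interpolate between data points ("inclusive" method).
    q1, _, q3 = quantiles(responses, n=4, method="inclusive")
    rate = round(100 * len(responses) / panel_size)
    return {
        "response_rate": f"{len(responses)}/{panel_size} ({rate})",
        "mode": multimode(responses)[0],  # first mode if there are ties
        "median": median(responses),
        "iqr": (q1, q3),
    }


# Hypothetical ratings for a single item from a 37-member panel.
example = [7] * 20 + [6] * 8 + [5] * 4 + [3] * 2 + [None] * 3
print(summarise_ratings(example, panel_size=37))
```

Other quartile conventions exist and can give slightly different interquartile ranges for small panels, so the method used should be stated alongside the results.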

For round 3, we asked the panel to reconsider only the items for which a consensus had not been reached in round 2, namely items 5, 9 and 13. We produced an online survey for round 3 and again asked the panel to rate items 5, 9 and 13 for relevance and clarity. To keep the panel updated we provided them with our post-round briefing document from round 2 (available on request from authors). Round 3 ran from mid-February to mid-March 2012. A copy of this survey can be found in Appendix 4. Two reminder e-mails were sent to the panel members. Once the panel had completed their survey we analysed their ratings for relevance and clarity (Table 5).

| Item | Relevance: response rate (%) | Relevance: mode | Relevance: median | Relevance: interquartile range | Content: response rate (%) | Content: mode | Content: median | Content: interquartile range |

|---|---|---|---|---|---|---|---|---|

| 5. Changes in review process | 34/37 (92) | 7 | 7 | 6–7 | 34/37 (92) | 7 | 6 | 6–7 |

| 9. Selection and appraisal of documents | 33/37 (89) | 7 | 7 | 6–7 | 33/37 (89) | 7 | 7 | 6–7 |

| 13. Document characteristics | 33/37 (89) | 7 | 7 | 6–7 | 33/37 (89) | 7 | 6 | 6–7 |

By the end of round 3 a consensus was reached on all items. We produced a post-round briefing document from round 3 and circulated this to all our panel members for the sake of completeness (available on request from authors).

Using the data we gathered from the three rounds of the Delphi panel for realist reviews, we produced a final set of items to be included in the publication standards for realist reviews. These were published simultaneously in January 2013 in BMC Medicine 89 and the Journal of Advanced Nursing. 90 Our publication standards have also been accepted and listed on the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) network (a resource centre for good reporting of health research studies; www.equator-network.org).

Meta-narrative review

We ran the meta-narrative review Delphi panels between September 2011 and March 2012. We recruited 33 individuals from 25 organisations in six countries. These comprised researchers in public or population health (five); evidence synthesis (five); health services research (eight); international development (two); and education (two). We also recruited experts in research methodology (six), publishing (one), nursing (two) and policy and decision-making (two). We started round 1 in mid-September 2011 and circulated the briefing document to the panel. We sent two chasing e-mails to all panel members and within 4 weeks all panel members who indicated that they wanted to provide comments had done so. Twenty-two Delphi panel members provided suggestions of items that should be included in the publication standards. One of these items, on whether or not the concept of epistemic tradition should be included in a meta-narrative review, caused a degree of disagreement within the project team. As a result, we specifically put this issue to the Delphi panel. We used the suggestions from the panel members and the briefing document as the basis of the online survey for round 2. Round 2 started at the end of November 2011 and ran until early January 2012. Panel members were invited to complete our online survey and asked to rate each potential item for relevance and clarity. A copy of this survey can be found in Appendix 5. Two reminder e-mails were sent to the panel members. Once the panel had completed their survey we analysed their ratings for relevance and clarity (Table 6).

| Item | Relevance: response rate (%) | Relevance: mode | Relevance: median | Relevance: interquartile range | Content: response rate (%) | Content: mode | Content: median | Content: interquartile range |

|---|---|---|---|---|---|---|---|---|

| Title | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 7 | 6–7 |

| Abstract | 32/33 (97) | 7 | 7 | 6–7 | 32/33 (97) | 7 | 6.5 | 6–7 |

| Rationale for review | 32/33 (97) | 7 | 7 | 6–7 | 32/33 (97) | 7 | 7 | 6–7 |

| Objectives and focus of review | 32/33 (97) | 7 | 7 | 6–7 | 32/33 (97) | 7 | 7 | 6–7 |

| Changes in the review processa | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 6 | 6–7 |

| Rationale for using the meta-narrative approacha | 27/33 (82) | 7 | 7 | 6–7 | 27/33 (82) | 7 | 6 | 5–7 |

| Evidence of adherence to guiding principles of meta-narrative review | 31/33 (94) | 7 | 6 | 5–7 | 31/33 (94) | 7 | 6 | 5–7 |

| Scoping the literature | 30/33 (91) | 7 | 7 | 6–7 | 30/33 (91) | 7 | 7 | 6–7 |

| Searching processes | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 7 | 6–7 |

| Selection and appraisal of documents | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 6 | 6–7 |

| Data extraction | 30/33 (91) | 7 | 6 | 6–7 | 30/33 (91) | 7 | 6 | 5–7 |

| Analysis and synthesis processes | 31/33 (94) | 7 | 6 | 6–7 | 31/33 (94) | 6 | 6 | 5.5–7 |

| Document flow diagram | 31/33 (94) | 7 | 7 | 5–7 | 31/33 (94) | 7 | 6 | 4.5–7 |

| Document characteristics | 31/33 (94) | 7 | 6 | 5–7 | 30/33 (91) | 7 | 6 | 5–7 |

| Main findings | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 7 | 6–7 |

| Summary of findings | 31/33 (94) | 7 | 7 | 6–7 | 30/33 (91) | 7 | 7 | 6–7 |

| Strengths, limitations and future research directions | 30/33 (91) | 7 | 7 | 6–7 | 30/33 (91) | 7 | 7 | 6–7 |

| Comparison with existing literature | 30/33 (94) | 7 | 6 | 5–7 | 30/33 (94) | 7 | 6 | 5–7 |

| Conclusion and recommendations | 31/33 (94) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 7 | 6–7 |

| Funding | 29/33 (88) | 7 | 7 | 6–7 | 29/33 (88) | 7 | 7 | 6–7 |

From round 2, only two items did not achieve a consensus: items 6 and 13 (see Table 6). Item 5 had reached a consensus on relevance and content on the numerical scores, but there were sufficient concerns raised in the free text that we felt it needed to be amended and returned to the panel. Based on the suggestions made by the panel members, we refined the text for these three items. We had asked panel members if they had a preference between the terms meta-narrative review and meta-narrative synthesis. Thirteen (41%) preferred meta-narrative synthesis, six (18%) meta-narrative review and 13 (41%) had no preference. Our conclusion was that the terms meta-narrative synthesis and meta-narrative review are synonymous. In response to the question of whether or not epistemic tradition should be included in a meta-narrative review, 16 (59%) agreed that it should be, four (15%) disagreed and seven (26%) did not know. As a result, we decided that epistemic tradition should be included in meta-narrative reviews, and it was incorporated into item 6. We also produced a post-round briefing document from round 2, which detailed for each item:

- the response rate
- mode
- median
- interquartile range
- the action we took for each item based on the panel’s ratings
- an anonymised list of all the free-text comments made.

For round 3, we asked the panel to reconsider only the items that needed further work after round 2, namely items 5, 6 and 13. Two additional individuals who had initially decided not to respond to round 2 agreed to provide ratings. To ensure consistency they were briefed on the process and results from round 2. We produced an online survey for round 3 and again asked the panel to rate items 5, 6 and 13 for relevance and clarity. To keep the panel updated we provided them with our post-round briefing document from round 2 (available on request from authors). Round 3 ran from mid-February to mid-March 2012. A copy of this survey can be found in Appendix 6. Two reminder e-mails were sent to the panel members. Once the panel had completed their survey we analysed their ratings for relevance and clarity (Table 7).

| Item | Relevance: response rate (%) | Relevance: mode | Relevance: median | Relevance: interquartile range | Content: response rate (%) | Content: mode | Content: median | Content: interquartile range |

|---|---|---|---|---|---|---|---|---|

| 5. Changes in the review process | 29/35 (83) | 7 | 7 | 6–7 | 29/35 (83) | 7 | 7 | 6–7 |

| 6. Rationale for using the meta-narrative approach | 31/35 (89) | 7 | 7 | 6–7 | 31/35 (89) | 7 | 7 | 6–7 |

| 13. Document flow diagram | 32/35 (91) | 7 | 7 | 6–7 | 31/33 (94) | 7 | 6 | 6–7 |

By the end of round 3 a consensus was reached on all items. We produced a post-round briefing document from round 3 and circulated this to all our panel members for the sake of completeness (available on request from authors).

Using the data we gathered from the three rounds of the Delphi panel for meta-narrative reviews, we produced a final set of items to be included in the publication standards for meta-narrative reviews. These were published simultaneously in January 2013 in BMC Medicine 91 and the Journal of Advanced Nursing. 92 Our publication standards have also been accepted and listed on the EQUATOR network.

Developing quality standards, teaching and learning resources using real-time refinement

We used a range of sources in real time to help us develop and refine our quality standards and teaching and learning resources. The data we used came from the following sources:

- JISCMail (www.jiscmail.ac.uk/RAMESES). At the start of the project we set up an e-mail list and membership of this list grew rapidly. As of June 2014, the list has 326 members and there are regular discussions on a range of topics relating to realist and meta-narrative reviews.

- Methodological support to review teams. Over the course of this project the project team members have provided differing levels of methodological support to reviewers undertaking realist and meta-narrative reviews. This has ranged from providing answers to questions raised by e-mail or on JISCMail to regular face-to-face meetings. The level of support needed by each team differed considerably depending on each team’s initial level of expertise. Table 8 provides an overview of the projects to which we provided more in-depth methodological support, with brief details of the nature of the support provided. When providing methodological support we made contemporaneous notes on issues and topics that might be relevant in helping us in this part of the project. An example of the records we made of our discussions with a review team that we supported can be found in Appendix 7.

| Review title | Research question(s) | Funder | Review type | Type of support provided |

|---|---|---|---|---|

| Risk models and scores for type 2 diabetes: systematic review | What are the different risk scores for identifying adults at risk of type 2 diabetes and which scores work for whom in what circumstances? | Local primary care trusts/London Deanery, UK | Realist synthesis linked to systematic review | One of us (TG), as the lead member of this team, provided the following: training for all other team members on realist review principles; lead researcher on the realist review, undertaking all data extraction, tabulation, analysis and preparation of draft realist section of a mixed Cochrane-style and realist review (one other team member cross-checked this work); writing up the mixed-method review for British Medical Journal |

| Uncovering the benefits of participatory research: implications of a realist review for health research and practice | | Canadian Institute of Health Research, Canada | Realist synthesis | This novice realist review team was supported in the following ways: |

| Realist review of multicomponent interventions to reduce harms of college binge drinking | What were the underlying theories and assumptions about why these programmes work, and what appear to be the mechanisms and associated contextual influences that led to their intended outcomes? | Dartmouth College, USA | Realist synthesis | This novice realist review team was supported in the following ways: |

| How design of places promotes or inhibits mobility of older adults: realist synthesis of 30 years of research | How do characteristics of the environment (place) support mobility and what circumstances appear to facilitate or hinder mobility in older adults? | Centers for Disease Control and Prevention, USA | Realist synthesis | This novice realist review team was supported in the following ways: |

| Systematically synthesizing IMCI implementation: what works for whom and in what circumstances? | | The Alliance for Health Policy and Systems Research, Switzerland | Realist synthesis | This moderately experienced realist review team was supported in the following ways: |

| Evidence synthesis on the occurrence, causes, consequences, prevention and management of bullying and harassing behaviours to inform decision-making in the NHS | What is known about the occurrence, causes, consequences and management of bullying and inappropriate behaviour in the workplace? | National Institute for Health Research Health Services and Delivery Research programme | Realist synthesis | This novice realist review team was supported in the following ways: |

| The effective and cost-effective use of intermediate, step-down, hospital at home and other forms of community care as an alternative to acute inpatient care: a realist review | Produce a conceptual framework and summary of the evidence of initiatives that have been designed to provide care closer to home in order to reduce reliance on acute care hospital beds | National Institute for Health Research Health Services and Delivery Research programme | Realist synthesis | This experienced realist review team took part in a 2-day roundtable discussion covering: |

| What are the impacts of preschool feeding programmes for disadvantaged young children? | What is the impact of school feeding on growth and educational attainment in preschool children and what explains the successes, failures and partial successes of such programmes | International Initiative for Impact Evaluation (3ie), USA | Realist synthesis | One of us (TG), as the lead on realist review elements for this review, provided the following:

|

| Hospital patient safety: a realist analysis | Examine the introduction of three safety interventions (improving leadership, reducing infection rates, and implementing surgical checklists) in seven hospitals | National Institute for Health Research Health Services and Delivery Research programme | Realist synthesis | E-mail advice for team members on practical application of realist review principles |

| The relevance of complexity concepts and systems thinking to public and population health intervention research: a meta-narrative synthesis | Examine a variety of theoretical frameworks that use the concept of complexity science to help understand the social processes and systems of a constantly evolving environment within which public health interventions have to adapt | Canadian Institute of Health Research, Canada | Meta-narrative review | One of us (TG) was a co-applicant on this study and provided:

|

| Mining and aboriginal community health: impacts and interventions | Address the knowledge gap regarding mining impacts on Aboriginal health through a multidisciplinary knowledge synthesis of material from both academic and professional realms as held by Aboriginal communities, mining firms, governments, consultancies and civil society | Canadian Institute of Health Research and Social Sciences and Humanities Research Council, Canada | Meta-narrative review | E-mail advice for team members on practical application of meta-narrative review principles |

Workshops

We ran a number of methods training workshops during this project; these are listed in Table 9. Once again, we made contemporaneous notes during these workshops, and an example of these notes can be found in Appendix 8.

| Date | Purpose | Venue |
|---|---|---|
| 2011 March | Realist review training | Queen Mary, University of London, UK |
| 2011 October | Realist evaluation and review webinar | National Institute for Health Research Health Services and Delivery Research webinar, UK |
| 2011 October | Meta-narrative review training | Queen Mary, University of London, UK |
| 2011 November | Meta-narrative review training | McGill University, Montreal, QC, Canada |
| 2012 March | Realist review training | Karolinska Institute, Stockholm, Sweden |
| 2012 April | Realist review training | University of Leeds, UK |
| 2012 April | Realist review training | University of Sheffield, UK |
| 2012 October | Realist review training | Keele University, UK |
| 2012 October | Plenary: realist synthesis | University of Southern Denmark, Copenhagen, Denmark |
| 2012 November | Realist review training | Queen’s University Belfast, UK |
| 2012 November | Introduction to realism | Global Health Symposium on Health Systems Research, Beijing, China |
| 2013 March | Realist synthesis and evaluation | Erasmus University, Rotterdam, the Netherlands |
| 2013 April | Realist review training | University of East Anglia, UK |
| 2013 June | Realist review training | University of Leeds, UK |

Quality standards

The data from the sources above were channelled and collated contemporaneously by GWo and used to initially develop the quality standards for researchers using the realist or meta-narrative method. The initial drafts were circulated within the project team and were iteratively refined for content and clarity. Box 1 provides an illustration of how we drew on the data sources to produce the quality standards using an example for realist syntheses.

As researchers and trainers in RS we had noted that there was some confusion amongst researchers about the nature, need and role of realist programme theory (theories) in realist syntheses. To develop the briefing materials and initial drafts of the reporting standards for realist syntheses, we searched for and analysed a number of published syntheses and noted that our impressions were well founded.

When we ran a 1-day conference in March 2011, the issue of the nature, need and role of realist programme theory (theories) in realist syntheses emerged again. In our Delphi process we encouraged participants to provide free-text comments. These closely reflected the comments we received from our 1-day conference.

Development of the quality criteria

We drew on our content expertise of the topic area and published methodological literature to develop the quality criteria. In addition, we found that some of our Delphi panel participants provided us with clear indications that supported the criteria we set. For example, we suggested that a RS should develop a programme theory and that one that did not was inadequate. Delphi panel participants’ free-text comments echoed our suggestion:

How could identification of programme theories not be appropriate . . .

. . . it cannot be an RS without candidate [programme] theories.

We were also able to draw on the discussions that took place on JISCMail to find support for some of our criteria. For example, under ‘excellent’ in our suggested quality standards for programme theory, we suggested that: ‘The relationship between the programme theory and relevant substantive theory is identified.’

As illustration, a comment from JISCMail that we drew upon to support this criterion was:

In a review, one focus[es] first on what is reported but one can – and probably should, in order to produce some added value – reflect the findings and outcomes of the study under review against the theories and/or best practice that already exist.

For both realist syntheses and meta-narrative reviews we developed two sets of quality standards. The two sets have been developed for the following user groups:

- researchers and peer reviewers using these methods
- funders/commissioners of research.

Although the core components of the quality standards we have developed are the same for each of the two ‘versions’ listed above, we have adapted each in an attempt to make it more focused and useful for its intended users. All the quality standards for realist syntheses and meta-narrative reviews are freely available online. 93

Quality standards for researchers using the methods and peer reviewers

The quality standards for these user groups are set out using rubrics. By peer reviewers here, we specifically refer to individuals who have been asked to appraise the quality of completed reviews. For each review process that requires a judgement about its quality, we have provided a brief description of why the process is important and also descriptors of criteria against which a decision about quality might be arrived at. The quality standards for realist syntheses for researchers are set out in Table 10, while the quality standards for meta-narrative reviews are presented in Table 11.

| Quality standards for RS (for researchers and peer reviewers) | | | | |
|---|---|---|---|---|
| 1. The research problem | | | | |
| Realist synthesis is a theory-driven method that is firmly rooted in a realist philosophy of science and places particular emphasis on understanding causation and how causal mechanisms are shaped and constrained by social context. This makes it particularly suitable for reviews of certain topics and questions, for example complex social programmes that involve human decisions and actions. A realist research question contains some or all of the elements of what works, how, why, for whom, to what extent and in what circumstances, in what respect and over what duration and applies realist logic to address the question. Above all, realist research seeks to answer the why question. Realist synthesis always has explanatory ambitions. It assumes that programme effectiveness will always be partial and conditional and seeks to improve understanding of the key contributions and caveats | | | | |
| Criterion | Inadequate | Adequate | Good | Excellent |
| The research topic is appropriate for a realist approach | The research topic is: | The research topic is appropriate for secondary research. It requires understanding of how and why outcomes are generated and why they vary across contexts | Adequate plus: framing of the research topic reflects a thorough understanding of a realist philosophy of science (generative causation in contexts; mechanisms operating at other levels of reality than the outcomes they generate) | Good plus: there is a coherent argument as to why a realist approach is more appropriate for the topic than other approaches, including other theory-based approaches |
| The research question is constructed in such a way as to be suitable for a RS | The research question is not structured to reflect the elements of realist explanation. For example, it: | The research question includes a focus on how and why the intervention, or programme (or similar classes of interventions or programmes – where relevant) generates its outcomes, and contains at least some of the additional elements, ‘for whom, in what contexts, in what respects, to what extent and over what durations’ | Adequate plus: the rationale for excluding any elements of ‘the realist question’ from the research question is explicit; the question has a narrow enough focus to be managed within a realist review | Good plus: the research question is a model of clarity and as simple as possible |
| 2. Understanding and applying the underpinning principles of realist reviews | | | | |
| Realist syntheses apply realist philosophy and a realist logic of enquiry. This influences everything from the type of research question to a review’s processes (e.g. the construction of a realist programme theory, search, data extraction, analysis and synthesis to recommendations). The key analytic process in realist reviews involves iterative testing and refinement of theoretically based explanations using empirical findings in data sources. The pertinence and effectiveness of each constituent idea is then tested using relevant evidence (qualitative, quantitative, comparative, administrative, and so on) from the primary literature on that class of programmes. In this testing, the ideas within a programme theory are recast and conceptualised in realist terms. Reviewers may draw on any appropriate analytic techniques to undertake this testing | | | | |
| Criterion | Inadequate | Adequate | Good | Excellent |

| The review demonstrates understanding and application of realist philosophy and realist logic which underpins a realist analysis | Significant misunderstandings of realist philosophy and/or logic of analysis are evident. Common examples include:

|