Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its proceeding programmes as project number 09/2001/32. The contractual start date was in September 2010. The final report began editorial review in March 2014 and was accepted for publication in August 2014. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

The Dr Foster Unit at Imperial College London is principally funded by Dr Foster Intelligence, an independent health-care information company. The Dr Foster Unit is affiliated with the National Institute for Health Research (NIHR) Imperial Patient Safety Translational Research Centre. Dr Alex Bottle and Professor Paul Aylin are part funded by a grant to the unit from Dr Foster Intelligence outside the submitted work.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2014. This work was produced by Bottle et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Background and research objectives

Measuring and comparing health-care performance are essential components of driving quality improvement. When comparing patient outcomes such as mortality, it is usually necessary to appropriately adjust for patient factors such as age and disease (collectively known as casemix) using statistical models. The NHS collects a wealth of administrative data that are currently underutilised to support improvements in health-care provision. The aim of this project was to use a core NHS data set, Hospital Episode Statistics (HES), to develop risk-prediction and casemix adjustment models to predict and compare outcomes between health-care units. The outcomes we have chosen are mortality, unplanned readmission, unplanned returns to theatre for selected specialties and non-attendance in outpatients departments (OPDs). As well as patient age and sex, a key casemix factor for risk-adjustment models is comorbidity, which has been measured in various ways, such as the Charlson index,1 that warrant comparison. Modelling for binary outcomes is typically done using logistic regression (LR), but there are a number of machine learning approaches that have been tried and are worth investigating here. Finally, just as statistical methodology has developed, HES itself is expanding and now covers OPD appointments since the financial year 2003/4 and accident and emergency (A&E) attendances since 2007/8. Information from these sources may provide useful information if the data quality is sufficiently high.

In view of all this, the project had these main objectives:

-

to derive robust casemix adjustment models for these outcomes adjusting for available covariates using HES

-

to update the weights and codes for the widely used Charlson index of comorbidity, recalibrate it for the NHS and assess its use for mortality and also non-mortality outcomes

-

to assess if more sophisticated statistical methods based on machine learning such as artificial neural networks (ANNs) outperform traditional LR for risk prediction

-

to assess the usefulness of outpatient data for these models.

The first of these may be considered an overarching aim, with the other three as elements of that aim.

The structure of this report is as follows. Chapter 2, Methods, begins by describing the HES database as held and processed by the Dr Foster Unit at Imperial. It briefly describes the assessment of data quality of the two newer elements used in this project: OPD and A&E records (the Diagnostic Imaging Dataset was released too late for us to obtain it). There are subsections on the outcome measures, particularly the (unplanned) return to theatre (RTT) ones, a review of the literature on comorbidity measures, including a summary of our published systematic review of studies comparing two or more such measures, and the statistical methods for risk adjustment, together with their implementation issues, in particular (re)calibration. That chapter ends by discussing count models as an alternative to considering binary outcomes, particularly readmissions. Chapter 3, Results, begins by giving the results for the updating of the Charlson index using HES data and illustrates the performance of Charlson compared with that of the popular Elixhauser index. It illustrates different methods for incorporating information from prior admissions for two conditions: heart failure (HF) and acute coronary syndrome (ACS). This is followed by a section comparing risk-adjustment methods, which comprises a comparison between LR and machine learning approaches for mortality and readmission. The results of the RTT analyses are then presented. Following a literature review of factors relating to OPD non-attendance, we present the results of the prediction models. Finally, we go beyond the common 28- or 30-day readmission indicator to cover other time periods and future bed-days. We focus on HF patients; the basic results were similar for chronic obstructive pulmonary disease (COPD). Chapter 4 gives the discussion and dissemination activity to date and suggests further research, and Chapter 5 offers some conclusions.

Chapter 2 Methods

Hospital Episode Statistics database

Our unit holds inpatient and day case HES and Secondary Uses Service (SUS) data from 1996/7 to the present with monthly SUS feeds. We apply published HES cleaning rules to the SUS feed. Details of this and our other processing, such as episode and spell linkage, can be found on documents on our website (www1.imperial.ac.uk/publichealth/departments/pcph/research/drfosters/reports/ under ‘HSMR methodology’). HES data cleaning rules may be found on the NHS Information Centre website under HES. Throughout this report, we refer to all these records as ‘HES data’. Briefly, each record in the inpatient part of the database is a finished consultant episode, representing the continuous period of time during which the patient is under the care of a consultant or allied health professional. We link episodes into ‘spells’ (admissions to one provider) and link spells into ‘superspells’, the latter combining any interhospital transfers. We will refer to superspells as ‘admissions’ and in general use them as the unit of analysis throughout.

We hold outpatient HES and SUS data since they became part of HES in 2003/4 and hold A&E records since they became part of HES in 2007/8. The most recent A&E records we had for the project were for 2011/12. The Information Centre’s contractors provide a file to enable us to attach the date and cause of death to HES records, with the latest date of death being August 2011.

We derive a number of fields, in particular the main diagnosis group, the main procedure group and the Carstairs deprivation quintile. We use the Agency for Healthcare Research and Quality (AHRQ) Clinical Classification System (CCS) to turn the International Classification of Diseases, Tenth Edition (ICD-10) codes of the primary diagnosis field into one of 259 diagnosis groups designed for health services research (see www.ahrq.org for details). For procedures, no such system exists for the UK’s Office for Population, Censuses and Surveys (OPCS) procedure codes. Over several years, in conjunction with clinicians, we have created a number of procedure groups. 2 The Carstairs quintile3 is assigned at the super output area geographical level using information from the 2001 census; the necessary 2011 census information was not available in time for this project. Although Carstairs is therefore based on older information than the Index of Multiple Deprivation, its resolution is greater.

We now briefly consider the quality of HES data.

Assessment of inpatient and day case data quality

Submission of HES records is mandatory and coverage is very high, though the frequency and timeliness of submission to SUS varies. Apart from ethnicity, which is fast improving, there are few clearly duplicate records or missing or invalid values in HES for patient identifiers and demographics, dates of admission, discharge or procedure, method of admission and other key fields:2,4 < 1% of records were dropped because of missing values. Most debate around HES data quality concerns the primary and secondary diagnostic and procedure fields. A sample of records is externally audited for its coding and the results published online by trust by the Audit Commission. 5 These reports show that the variation by trusts is notable but narrowing each year. The estimates of coding accuracy in these reports are not sufficiently robust or detailed to allow us to estimate the ‘correct’ level of comorbidity at a given hospital, however. A recent systematic review on this subject by our group6 found coding accuracy to be high (97%), particularly since the introduction of Payment by Results. The Health and Social Care Information Centre have now written two reports on English hospital and social care data (see www.hscic.gov.uk/catalogue/PUB08687 for the first one).

Assessment of outpatient data quality

Unlike with A&E, there is no independent source with which to compare the number of records. We assessed data quality in terms of the proportion of duplicates and the proportion of records with missing values for the primary diagnosis, primary procedure, and date and outcome of attendance (whether the patient attended or not). Most records lacked both a primary procedure (though we cannot ascertain what proportion of OPD appointments actually involved a procedure) and a primary diagnosis field. The use of codes for invalid or unknown was low for the other key fields. The proportion of duplicate records was < 1%, but these should still be removed.

Assessment of accident and emergency data quality

We compared for each hospital the number of electronic records in our data set with counts from the Accident and Emergency Quarterly Monitoring Data Set (QMAE). Overall in the first 2 years of A&E HES, 2007/8 and 2008/9, it was missing more than 6 million of the 19 million attendances reported by QMAE, a shortfall of around a third. As many as 40% of hospitals with A&E departments failed to submit any data. Then 2009/10 saw a jump of around 3 million electronic records to include 16 million out of the 20 million QMAE total, a jump that was maintained but not improved upon in 2010/11. We felt that the shortfall was still too large and therefore decided not to use A&E records in the project. Records for 2011/12 arrived too late for inclusion but may be worth using in future analyses.

Definition of outcome measures and predictors

The two most commonly used mortality measures are in-hospital mortality (any time during the index stay) and 30-day total mortality (in or out of hospital within 30 days of the index admission or procedure date). For readmission, the most commonly used internationally is the 30-day all-cause version; in the UK, 28 days is typically used. Unless otherwise stated, ‘readmission’ as an outcome means within 28 days; for the HF analyses we used 7-, 30-, 90-, 182- and 365-day follow-up periods rather than 28 days.

For HF, the number of admissions and inpatient bed-days during the 365 days following discharge were also counted; these and also the first readmission were split by primary diagnosis into those for HF and those for any other cause (non-HF). OPD non-attendance was simply defined using the ATENTYPE (attendance type) field. The first appointment following inpatient discharge for selected acute conditions was readily identified using dates; of particular interest here was whether or not non-attendance rate varied by the time interval between discharge and the appointment, as if so this would represent an opportunity for the hospitals to reduce missed appointments. The patient’s first new appointment for the specialties of general medicine and general surgery were identified using the field FIRSTATT and also by tracking back 3 years in the database to ensure that this was each patient’s first for that specialty (or at least their first for 3 years).

The construction of the RTT measures followed the same outline for each specialty as for cystectomy in urology. 7 All procedure fields with dates between 1 and 29 days inclusive of the index procedure date, even if performed after transfer or discharge from the index stay, were extracted from the database and inspected by a senior surgeon and clinical coder to determine which were likely to be reinterventions. These can potentially encompass surgical operations, both surgical and non-surgical procedures, including radiological interventions. For orthopaedics, we defined RTT between 1 and 89 days after the index procedure in order to capture infections, which may take a few weeks to develop. The index procedures of hip and knee replacements were each divided into subgroups with advice from a senior surgeon. Hip operations were divided into total with cement, total without cement, hybrid and hip resurfacing. Knee operations were divided into total, unicondylar (or unicompartmental) and patellofemoral replacement, taking into account the national changes in coding practice in 2009/10 regarding Z845 (tibiofemoral joint) and W581 (resurfacing). The small number of otherwise unclassified procedures were excluded. Using laterality Z codes, we matched the RTT to the joint where possible – around 95% of joint procedures had laterality codes.

Potential covariates for the risk-adjustment models were taken from our national monitoring system that uses HES:2 age, sex, deprivation quintile, year, month of admission for respiratory conditions, admission method (elective or emergency if the patient group included both), emergency admissions in the year before the index stay, comorbidities and potentially an interaction between age and comorbidity. For the HF readmission models, we also tried incorporating previous admissions using more sophisticated methods, and we used the number of prior OPD appointments attended and the number missed. HF models also included some procedures. Further details are given in the relevant later sections.

Table 1 summarises which outcomes will be presented for which patient groups.

| Patient group | Outcomes modelled |

|---|---|

| All emergency inpatients combined | Mortality |

| Acute myocardial infarction | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| COPD | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Stroke | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| HF | Mortality (in-hospital and 30-day total); readmission (7-, 28-, 30-, 90- and 365-day, both all-cause and diagnosis-specific); number of emergency bed-days and readmissions within a year of index discharge (also converted into membership of high-resource ‘buckets’) |

| Pneumonia | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Fracture of the neck of femur | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Hip replacement (primary procedure) | Mortality (in-hospital and 30-day total); readmission (7- and 28-day); return to theatre within 90 days |

| Knee replacement (primary procedure) | Mortality (in-hospital and 30-day total); readmission (7- and 28-day); return to theatre within 90 days |

| First-time isolated coronary artery bypass graft (primary procedure) | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Abdominal aortic aneurysm repair (primary procedure) | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Colorectal excision (primary procedure) | Mortality (in-hospital and 30-day total); readmission (7- and 28-day) |

| Patients having their first general medical or general surgical OPD appointment | Non-attendance |

| Patients admitted for any of acute myocardial infarction, coronary heart disease, stroke, HF, acute bronchitis, COPD and pneumonia | Non-attendance in first OPD appointment after discharge |

Review of comorbidity indices

The two most commonly used indices are the Charlson1 and Elixhauser,8 originally described using International Classification of Diseases, Ninth Edition (ICD-9) with US data. In each index, points are given for the presence of a set of codes representing diseases associated with higher or sometimes lower risk than if the disease is not present. The points are then summed to give a score for the admission. 9 The Charlson index is now over 20 years old and both indices need calibrating on the data set of interest; to our knowledge, very little has been published from the UK on this. The weights (or scores) for these two indices may be inappropriate for the UK because of differing populations and/or coding practices. We have to date been using an Australian version of Charlson10 in our risk-adjustment models (there are a variety of others for ICD-9), but discussions with clinical coders raised questions over the suitability of some codes when used in the UK. According to their advice, we modified the codes for acute myocardial infarction (AMI) and dementia in particular. 11

A decade or so later, Elixhauser et al. 8 constructed a more extensive index covering 30 conditions. This index was designed for administrative databases such as HES that lack present on admission information, which means that they cannot distinguish between comorbidities and complications that arise during the stay. This is why Elixhauser does not include AMI or stroke, which are both part of Charlson. Some comorbidities were associated with lower risk of mortality and were given negative scores, confirmed in a Canadian analysis. 12 One reason for this was given by Elixhauser: low-risk patients may be given more codes for less acute problems than acutely ill people, for whom coders will focus on problems relevant to the acute situation. The presence of codes for non-life-threatening disease may therefore be a marker for relatively healthy patients.

Including Charlson and Elixhauser, there have been different approaches of selecting sets of comorbidities. The most common typically involved considering the prevalence of each condition and medical expert opinion. 13–17 Gagne et al. 18 and Thombs et al. 19 simply combined all the conditions listed in Elixhauser and the Deyo adaptation of the Charlson index except those conditions thought to be related to the main diagnosis. The simplest approach is to count the number of conditions, thereby giving them equal weight.

To find the best-performing one(s), we conducted a systematic review of multiple comparison studies on comorbidity measures/indices in use with administrative data. The review used a new meta-analytical approach, hypergeometric tests, to identify the best-performing indices. We now briefly outline the search strategy, results and conclusions. Full details may be found elsewhere. 20

The literature search was conducted first using three electronic databases, MEDLINE, EMBASE and PubMed, up to 18 March 2011. Search terms included Charlson, Elixhauser, comorbidity, casemix, case-mix, mortality and morbidity. In addition, the bibliography of the chosen articles and the relevant reviews were searched. 21–25 Two authors independently reviewed the titles and abstracts and the methodology section of the articles and selected the relevant potential articles. All disagreements were resolved by consensus.

Articles were included if administrative data were used and comparisons between predictive performances of at least two indices (perhaps also including common covariates) were made. Articles were excluded if clinical databases without ICD codes were used or if indices were used for the purpose of adjustment only, without any comparison of indices.

The c-statistics and the confidence intervals, or Akaike information criterion (AIC) or other appropriate statistics, were looked at if they were available, to compare the predictive performances of the measures/indices. If c-statistics alone without confidence intervals (or p-values) were provided, an arbitrary ≥ 0.02 cut-off point was chosen to define the difference between two measures/indices as significant.

All mortality outcomes were divided into two major groups: (1) ‘short term’ – all inpatient mortality and any mortality within 30 days of admission; and (2) ‘long term’ – outpatient mortality or that 30 or more days from admission.

After removing duplicates from different databases, we retrieved 1312 studies to review, subsequently reduced to 54 articles eligible for data abstraction. Of these, 90% were carried out in North America.

In summary, for short-term mortality (up to 30 days), the use of empirical weights (of whichever index) or the small group of various other comorbidity measures performed best. For long-term mortality (30+ days), these ‘other measures’, the Romano version of Charlson and the Elixhauser measure performed significantly better. As others have suggested, there may be useful gains if the two indices are combined. In practice, this would mean taking the Elixhauser set and adding dementia if we excluded AMI and stroke (Charlson components), which could occur after admission as complications but whose timing cannot be determined using HES. Following a review of the Sundararajan et al. comorbidity ICD definitions10 by external clinical coders, we amended the Charlson algorithm so that it uses extra codes for AMI and dementia in particular.

Statistical methods

Modelling framework

The terms risk prediction and risk adjustment are closely related despite their differing aims, but a model for predicting mortality, for example, might not include the same set of variables as a risk-adjustment model used to compare hospitals’ mortality rates. Risk prediction values parsimony and interpretability, whereas risk adjustment can focus more on confounder control. Risk-prediction models could encompass factors such as staffing and bed numbers or other factors that are (at least partly) under the hospital’s control, whereas this would be wrong for risk-adjustment models for comparing providers. For the most part we restrict ourselves to adjustment except for part of the HF analysis that covers the prediction of future inpatient activity and for OPD non-attendance prediction.

Prediction of binary outcomes such as mortality or readmission is usually done using LR with records from single institutions. It is therefore prone to problems such as poor reproducibility due to small sample sizes and variations in patient characteristics between study centres. 26 Combining a larger number of institutions would increase the sample size, but the modeller then in principle needs to account for the ‘clustering’ of patients within institutions. The common way of accounting for this is to use multilevel models, particularly with random intercepts for surgeons and hospitals and fixed effects for covariates. For this, we used SAS’s procedure for binary outcomes, PROC GLIMMIX, version 9.2 (SAS Institute Inc., Cary, NC, USA). From this we derived relative risks (RRs) for each hospital using predicted probabilities from only the fixed effects part of the model. 27,28 We used the noblup ilink options within PROC GLIMMIX to achieve this. These RRs are akin to standardised mortality ratios (SMRs), which represent the ratio of the hospital’s rate to the national average rate. The Center for Medicare and Medicaid Services (CMS) in the USA uses empirical Bayes ‘shrunken’ estimates of the SMRs for its publicly reported outcome measures. 29 Confidence intervals for the CMS measures are constructed using a complicated bootstrap procedure. A discussion of the different SMRs calculable from multilevel modelling is given by Mohammed et al. ,30 though the article is unnecessarily critical of mortality measures in general. Several studies have compared the results from fixed and random effects models with regard to provider profiling, in which a key aim is the identification of statistical outliers, especially units with higher than expected mortality. In general, these conclude as Austin et al. 31 did: ‘when the distribution of hospital-specific log-odds of death was normal, random-effects models had greater specificity and positive predictive value than fixed-effects models. However, fixed-effects models had greater sensitivity than random-effects models’ (p. 526).

Bayesian methods in provider profiling are of growing interest, and four such approaches are reviewed by Austin. 32 He concluded that there was often little agreement in the hospital rankings between the methods considered. We will not consider Bayesian methods further.

Multilevel models allow for the estimation of the amount of variation between units at each level, for example between surgeons or hospitals. The residual intraclass correlation coefficient (ICC), a measure of clustering used in hierarchical modelling, expresses the proportion of variability explained by the presence of clusters as, for instance, a hospital level. 33 It is computed as:

where τH is the hospital-level variance and π = 3.14159.

By building up the levels in a hierarchical model, one can assess, for example, how much of the variation in outcomes at hospital level is due to the variation between surgeons or to differences in the distribution of patient factors. In this project, this is particularly useful with surgical outcomes such as RTT, and we will illustrate it for orthopaedics.

Model fitting

The modelling in this project served different purposes, and we therefore took different approaches accordingly. For the comparison of LR and machine learning methods, we needed to mitigate overfitting; in our experience before and during this project, this is not a problem with regression and HES data, and therefore we did not routinely split the data subsets into a training and a testing set. For the comparison of methods, however, we did. For this part of the analysis, we took 2 years of data, randomly split the records into two portions and then recombined them to form a test data set and a training data set so that each of the resulting sets contained a random mix of each year. This was to avoid any effect of the data year itself, for instance on account of national outcome trends or coding changes. We included a binary variable to indicate the year. As the aim was to see to what extent more complex methods can go beyond standard regression, no interactions or non-linear terms were included.

For LR, all candidate covariates were retained: we did not use any stepwise methods given their well-known drawbacks. For the most part we did not test for interactions.

The machine learning methods tend to be slower than LR and, given their complexities, often need an expert as an operator and to choose which implementation to use. While many packages offer ‘out-of-the-box’ ways of running the code, doing so is usually suboptimal, and an expert is needed to make decisions on implementation issues. The sections that follow are therefore the most technical in this report.

Machine learning methods

Logistic regression is familiar to many, easy to use, with easy-to-interpret output consistent across platforms, be it SAS, R or any other. This is in stark contrast to machine learning methods, which may fall into different broad categories. As an alternative to regression, researchers have applied various machine learning methods, especially ANNs and support vector machines (SVMs), and the initial results have been promising. 34–37 There are also various tree-based methods that offer an appealing output that, unlike ANNs and SVMs, shows the relations between predictors. Of these, we consider random forests, as we doubted that our data would benefit from the extra complexity inherent in boosted trees. The essential question here that we tackle is: do these sophisticated methods offer substantial benefits over the humble LR?

These methods have a number of implementation issues and decisions to be taken, as there is much less consensus than for LR. For each method, we now describe these issues and how we addressed them. A short subsection outlines how we assessed the performance of each model. Finally in this chapter, we consider the problem of poor calibration and how, for this comparison of methods set of analyses, we tackled it.

Support vector machines

Support vector machines are designed to find a hypersurface (hyperplane in the linear case) that separates positive outcomes from negative ones (e.g. death vs. survival). SVMs are therefore classifiers. The SVM output is a number detailing how far on one side of the hypersurface or the other a test vector (set of patient characteristics) lies. This number induces an ordering on the data; however, the ordering needs to be recalibrated if one wants a probability estimate. If no hypersurface can be found that separates the data according to outcome, there will be misclassification. The c-parameter controls the leniency of such misclassifications.

For a given set of parameters a SVM is trained on a training set. The trained model is then applied to a test set, and we calculate the c-statistic. A grid-based search is then performed to determine the optimal set of parameters. The optimal parameters depend on the outcome and set of covariates.

A crucial ingredient for SVMs is the so-called kernel, the most prominent ones being linear and Gaussian. We also studied a Mahalanobis distance-based kernel in more detail (see next paragraph), and there are many others which we briefly looked at. There is no uniquely correct choice of kernel. The kernel will contain parameters of its own in addition to C. As mentioned above, another problem is that SVMs generally output not probability estimates but just a rank. This needs to be calibrated in an extra step, which comes with its own issues. Furthermore, care needs to be taken with ‘unbalanced’ data sets. An imbalance in outcome frequencies is common in medical data sets, that is there are usually many more survivors than non-survivors. LR handles this well, but other methods such as SVMs have a tendency to overemphasise the majority class (which is usually survival). This can be solved by either undersampling the majority class (i.e. randomly dropping patients belong to the majority class until the class distribution is balanced) or oversampling the minority class (i.e. counting patients in the minority class several times until numbers are balanced). The SVM code we used implements a similar but somewhat more sophisticated method. It allows the classes to be weighted differently. Since no data are dropped in this process, this method is similar to oversampling but without adding any data, thus not needlessly increasing the data sets. The last point is important, as SVMs are computationally intensive, and speed is a consideration.

Support vector machines work by measuring the similarity, or ‘distance’, of patient characteristics, which are stored as vectors. The problem is: what is a meaningful ‘distance’ in an abstract space of such vectors? How does one compare the presence of, say, diabetes, with the patient’s gender, or either with a 1-year difference in age? There is therefore no natural distance measure. Standard linear or Gaussian kernels are therefore not necessarily the best, and neither are many others, as all the elements of a vector are treated on the same footing. A data-driven distance measure, the Mahalanobis distance,38 does exist, but to implement it directly would be computationally impossible. However, transforming the data by a principal components analysis results in the Euclidian distance measure in the Gaussian kernel becoming the Mahalanobis distance. We also used the transformed data with the linear kernel.

We chose the free package svm-light39 to implement the SVMs. Its advantage is that it is written in C and thus allows for easy implementation of alternative kernels. In general, for a given kernel, a numerical grid search is employed varying the control parameter C and any other kernel parameters if present. Particularly for more complex kernels and limited computer resources, this is prohibitively inefficient. We therefore performed such a grid search for the popular linear kernels (parameter C only) and the Gaussian kernel (parameter C and the width of the Gaussian). We implemented several other kernels, such as Jaccard–Needham, Cosine, Maryland bridge, Correlation, Coulomb, Polynomial and Rogers–Tanimoto. Full testing was not feasible, so we compared the different kernels using only a small subset of the possible parameters (default and similar). We found no indication of any other kernel outperforming the Gaussian kernel and decided not to pursue these other kernels in more detail.

Svm-light was run in ‘regression mode’. In standard mode, that is ‘classification mode’, SVMs yield an optimal hypersurface separating the two classes, such as deaths and survivors. In regression mode the algorithm will try to fit a function so that it comes as close as possible to the outcome values and therefore may contain information in addition to just being on one side or the other of a hypersurface. In practice we did not see much of a difference in SVM performance between ‘regression mode’ and ‘classification mode’.

With the exception of age, all the covariates were binary, so the data are located on the corners of a hypercube. Unsurprisingly, building the model was very slow. Given that one learning run needs to be performed for each combination of SVM parameters, we had to limit each SVM learning run to 24 hours. The resulting model then also contains a large fraction (> 90%) of all data vectors as support vectors – SVM are meant to be able to find a model with few support vectors (support vectors are points that lie on the margins of the separating hyperplanes).

Artificial neural networks

Artificial neural networks come in a multitude of different types. There are far too many adjustable aspects of ANNs to systematically optimise all of them. Hertz et al. discuss important standard implementations of ANNs such as feed-forward and recurrent ANNs, but also unsupervised ANNs such as Kohonen maps. 40 After waning for a few years because of the popularity of SVMs, interest has revived recently under the heading of Deep Learning. 41 We concentrate on what is probably the standard: feed-forward ANNs, which are conceptually quite similar to LR. Whereas in LR a weighted sum feeds into a logit function, in feed-forward ANNs several such structures are fed into a further LR-like computational structure layer by layer. Such ANNs are usually trained using the back-propagation algorithm. This is a local optimisation algorithm. The exact computational methodology for solving the resulting optimisation problem is also not as clear-cut as in LR. One may minimise several different measures such as the Brier score (mean square error), a log-likelihood-based measure as in LR, or some combination of the two, but even then there is no unique solution as is the case in LR. This means that different optimisation methods are used in conjunction with several overall measures, generally resulting in different solutions (local minima) with no unique and obvious choice of the optimal combination. However, the result will usually be a meaningful estimate of a probability. The scoring function which was optimised could interpolate between the mean square error and a LR-like log-likelihood-based measure. This affects the position of the optima and the speed with which they are reached. We chose an equal mix of both. No systematic changes with respect to the result were observed, but some speeding up was observed. For this reason we also added a momentum term and damping into the stepwise updates of the optimisation.

We terminated this procedure at one so-called hidden layer, which is the standard choice. One must decide how many nodes to use. When choosing the number of nodes in the hidden layer, we kept to a common rule of thumb: the number of nodes should be of the order of the square root of the number of variables in the model. Any number of hidden nodes beyond one will give results better than LR. We found that, as long as the number of hidden nodes was greater than two, no systematic changes regarding the c-statistic were observed, so we settled on five as per the rule of thumb. In ANNs as in LR, there is no unique functional form of the non-linear transform, although logit is a common choice.

We added a LR-like direct link from the input layer to the output layer. While this did not improve overall performance it has a few advantages. By setting the links to the hidden layer to zero we are left with a LR-like architecture. The optimisation can then be initialised with a LR solution, which saves time. In addition, back-propagation is then guaranteed to reach an optimum that is at least as good as LR.

Random forests

Random forests is a decision tree-based method. 42 A decision tree is a set of if–then clauses (nodes) with a tree-like structure, with each leaf being a decision on the expected outcome: ‘if (age > 65 years) then if (no diabetes) equals survival’, etc. The trees in random forests use a randomly chosen subset of all explanatory variables at each node. A random forest then consists of many such trees, each one grown on a bootstrapped sample of the training data set (bootstrapping a data set means generating new data sets by drawing from the original one with replacement). The outcome or outcome probability is then derived from a committee decision made by all the trees in the forest. This method may also be combined with other methods. Calibration issues may also be prominent, and there are potentially many parameters to tweak.

Individual trees are grown in the following manner:

-

Sample N cases at random with replacement to create a subset of the data.

-

At each tree node:

-

From the total number of explanatory variables, M, select at random a subset of m explanatory variables.

-

The explanatory variable that provides the best split, according to the classification and regression trees methodology43 or some other objective function, is used to do a binary split on that node.

-

At the next node, choose another m variable at random from all explanatory variables and do the same.

-

For each case, the numbers of trees that ‘vote’ for a particular class (e.g. death) are used as an estimate of the probability of being in that class. In addition to the randomforest package version 4.6–7 (University of California, Berkeley, CA, USA) default of 500 trees and m = sqrt(M), the advice given by Genuer et al. 44 was followed on selecting the number of trees and m.

The random forests were implemented as described by Breiman45 using R version 3.0.2 (The R Foundation for Statistical Computing, Vienna, Austria) and the randomforest package version 4.6–7.

Assessment of model performance

With any risk model comes the need to assess its performance. For binary outcomes, two standard measures for LR are the area under the receiver operating characteristic (ROC) curve, or c-statistic, and the Hosmer–Lemeshow (HL) test output. The former measures discrimination, the ability of the model to predict a higher probability of death for those who died than for those who survived. It is generally considered that values of c above 0.70 represent good discrimination and values above 0.80 represent excellent discrimination. The maximum value obtainable is often quoted as 1 but in fact varies with the distribution of risk in the population (see Cook46 for a full discussion on this statistic). The HL test describes the model’s calibration and divides the data set into risk deciles. The observed and predicted number of events are compared in each decile – which often shows poor calibration at the extremes – and summarised in a chi-squared statistic. It has been criticised for having high type I and II error rates. 47 While a simple plot of observed versus predicted rates may be more useful, we will nonetheless report HL test results because of the large number of models fitted and the need to be concise.

For the HL chi-squared values, we give right-sided p-values. We used 10 bins for the HL test, as is standard. Any constraints of the fitting procedure (e.g. that the total sum of estimated probabilities equals total sum observed events) which reduce the effective number of degrees of freedom apply to the HL test with respect to the training data set. When running LR for a range of patient groups, we used eight degrees of freedom as is standard and as used by SAS. However, in the comparison of methods section, as we present results for the test set, no such constraints exist, and the HL test will be distributed according to the full 10 degrees of freedom.

Recalibration

Being built around a sigmoid output node, ANNs produce output that can be interpreted as a probability estimate, as does LR. The greater variational freedom inherent in the algorithm then means that the result is potentially better calibrated. Therefore we did not recalibrate the ANN output.

Methods that are known to have calibration issues, such as random forests,48 or are not even designed to produce meaningful probability estimates, such as SVMs, have to be recalibrated. We also found a number of the LR models to show overprediction of low risk in particular. As LR may be viewed as an ANN with no hidden nodes, back-propagation is applicable and can be adapted easily to optimise the internal shape parameters of the output node. Adjusting the shape parameters changes the calibration. Standard LR is based on optimising a log-likelihood-based measure and results in perfect overall calibration (i.e. total observed counts equal total predicted counts). Optimising the mean square error does not result in perfect overall calibration. However, the difference in probability estimates using each optimisation scheme encodes the deviance from an optimal model for which the difference would be zero. (This follows from the fact that both measures are so-called ‘strictly proper scoring rules’.) We found that feeding the probability estimates back into a further LR iteration in conjunction with the other covariates improved both discrimination and calibration. This will be labelled ‘LR+’ in the results. Only feeding back in the standard LR probability estimates also improved the final result but to a much lesser degree.

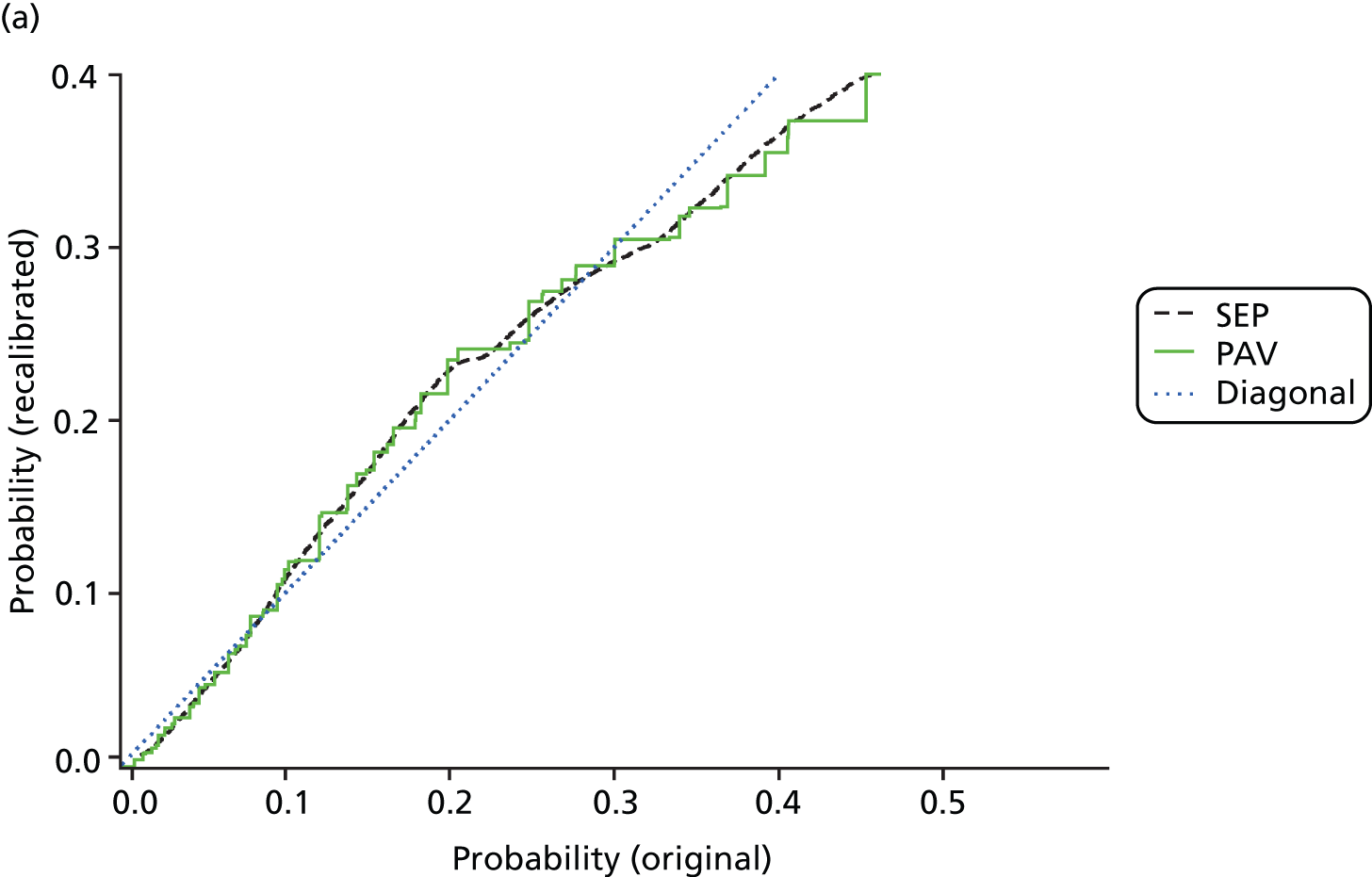

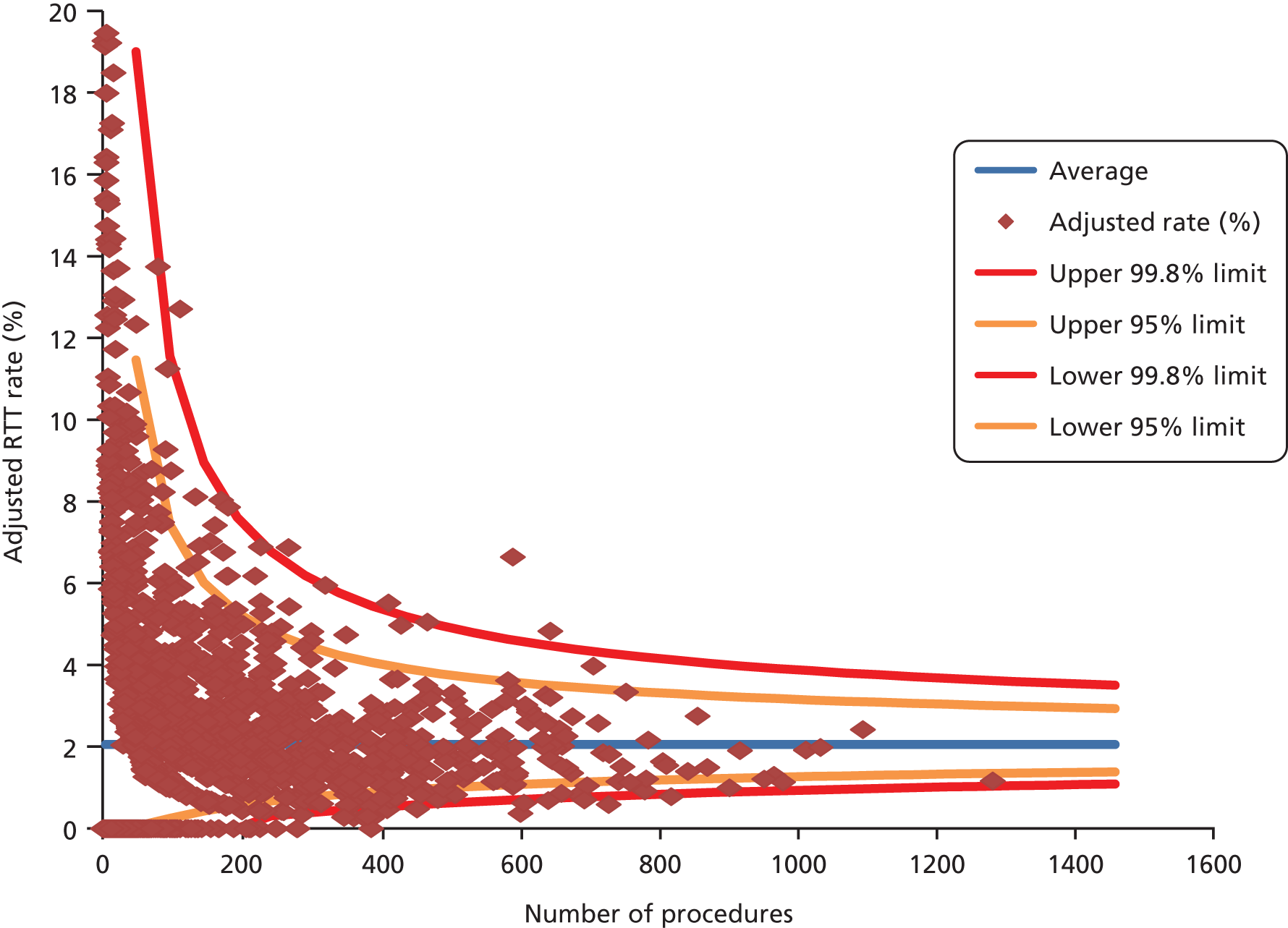

The standard method for recalibration is isotonic regression pool-adjacent-violator algorithm (IR-PAV),49 which we have used throughout. However, this results in a series of steps in the predicted probability curve, which is biologically implausible. Later in the project, we developed a smoothing technique using splines – full details are found elsewhere. 50 To illustrate, the original LR probability estimates for 30-day total mortality following AMI are mapped onto recalibrated estimates for the two methods, compared in Figure 1. The blue ‘diagonal’ line represents no recalibration. The effect of the recalibration is to lower very low probabilities and high probabilities and to push up those in the middle. In other words, LR first over-, then under- and then overestimates probabilities as one moves from low to high values. Depending on the exact use, correct calibration in addition to discrimination may be of importance. Note that the bulk of the patients in this example are to be found between 0.05 and 0.4 so the large discrepancy for very large values affects only few patients.

FIGURE 1.

Comparison of standard (PAV) and alternative (SEP) recalibration methods. Graph (a) shows probabilities up to 0.5; and graph (b) shows the whole data set. SEPs, splined empirical probabilities.

Methods for including information from previous admissions into the prediction models

Many patients, particularly the elderly and those with chronic conditions, have a number of health service contacts. As mentioned before, data quality considerations currently limit the use of A&E attendances (we decided not to use these) and OPD appointments to counting OPD appointments attended and missed; see the last section of Chapter 3. With previous admissions, one may use the procedure and diagnosis information (the latter can be used to augment comorbidity codes as mentioned earlier) and also the timing of them relative to the index contact. A simple but crude approach is to count the number of emergency admissions for any reason within the previous year. 2 To use the primary diagnosis of these previous admissions, one needs to use some grouping algorithm such as the AHRQ’s CCS groups and then combine the infrequent ones. The relative timing, which is recorded in number of days between admission dates, may also be incorporated, as in principle may the duration of each stay.

Intuitively, one may think that a recent readmission better predicts the outcome of the index admission than one that lies further back in time. If we ignore the durations and consider just the fact of admission, then a 3-year lookback period generates roughly 1000 binary variables – one for each day. Determining the effect of each of these variables cannot be done by simply adding them to a LR model. Some aggregation is needed. This also smooths out small time differences (e.g. whether a previous admission is 673 days in the past or 674 is unlikely to be of major significance). We used a two-step process as follows.

First step:

-

We generated the 60 lowest-order orthogonal polynomials of our 1000-dimensional vector space of admission dates. The base polynomial (a horizontal line) then is equivalent to just summing the number of admissions; the next polynomial (a diagonal) translates into a sum weighted by the time difference to the present admission, etc.

-

The value of the sums weighted by these polynomials results in 60 new variables. These were used in a LR model.

-

Using the LR coefficients yields a weighting of polynomials, which results in a single weighted sum that in a LR model would give the same result.

-

As expected, this weighted sum puts about five times greater emphasis on very recent previous admissions than those further in the distant past. The decline in weights occurs over about the first half-year or so.

Second step:

-

The weighted sum above may be used to generate new weighted polynomials. These will give greater prominence in magnitude and resolution to where the weighting is largest, that is to recent admissions. The base polynomial stays the same.

-

One or more such polynomials may now be used as weighted sums to generate variables that can be added to a LR model.

-

We also included a flag for no previous admission within the last 3 years.

To test this, we considered two years of index HF admissions as used in the readmission analyses. Our outcome was unplanned readmission within 28 days. All of these models contained the set of casemix variables used in the comparison of methods above plus the flag for no previous admission. The orthogonal polynomials were generated in SAS using PROC IML, and the weighted sums were implemented in SAS using PROC FCMP.

The effect of the semi-competing risk of death on readmission-type measures

As death precludes subsequent readmission, using LR – which ignores any effect of death either during or following the index discharge – may be potentially misleading. We therefore also applied cause-specific proportional hazards modelling and subdistribution proportional hazards modelling. These two survival analysis methods make different assumptions regarding post-discharge deaths;51,52 other methods exist, but these are the two most widely used. The PSHREG macro in SAS was run for subdistribution hazards. 53 If the odds ratios (ORs) and two sets of hazard ratios all agree, then we can be fairly confident that the effect of post-discharge deaths is minor. For the proportional hazards models we inspected the standard errors and deviance and Schoenfeld residuals; as our focus here was on the validity of using LR as the standard method rather than on the precise specification of a survival analysis model, we did not perform the usual formal tests of proportionality.

As is standard, our analyses of readmissions were restricted to patients discharged alive from their index admission. We did not attempt to take account of deaths during the index admission, and we acknowledge that this is a potential limitation.

Methods for predicting future bed-days in heart failure patients

As well as modelling the first unplanned readmission, as is common practice, we also wanted to consider broader measures of future hospital use. Internationally, the literature on HF readmissions is dominated by studies predicting a single readmission, usually 30-day readmissions,54,55 though some used longer follow-up periods of 90 days56 and 12 months. 57 Braunstein et al. 58 modelled the number of all-cause and HF ambulatory care-sensitive conditions (ACSCs) and all-cause ACSC hospitalisations during 12 months. The ACSC literature tends to focus on trying to predict the high-risk patients. 59,60 Few studies have modelled the number of further admissions. Johnson et al. 61 considered the number of HF-related hospitalisations during 3 years of follow-up using a negative binomial model. Although Chun et al. 62 modelled cardiac and non-cardiac hospitalisations from discharge until death, they took a survival analysis approach that included repeated-events time-to-event analysis. We found no study that considered total future bed-days in HF patients.

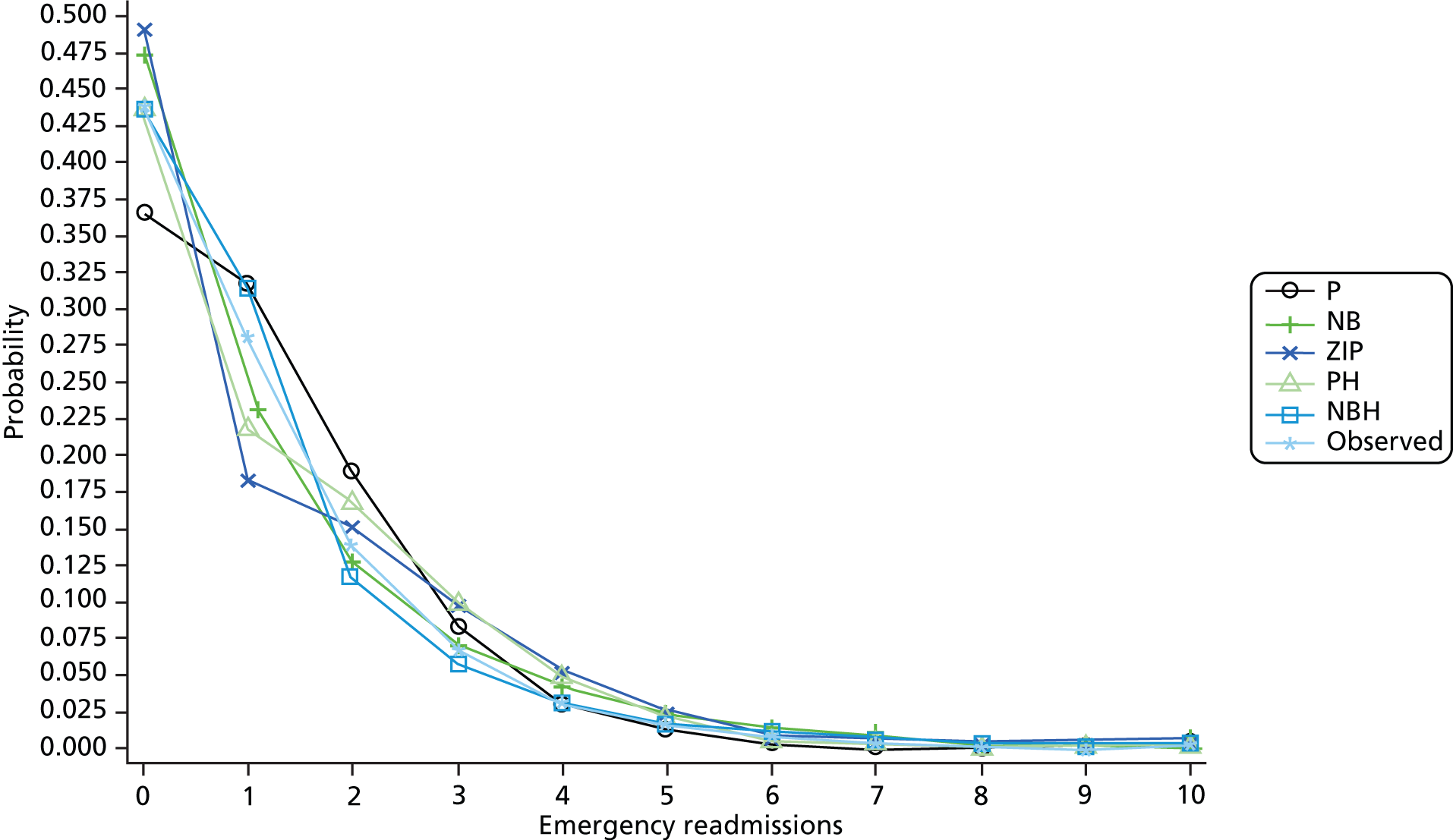

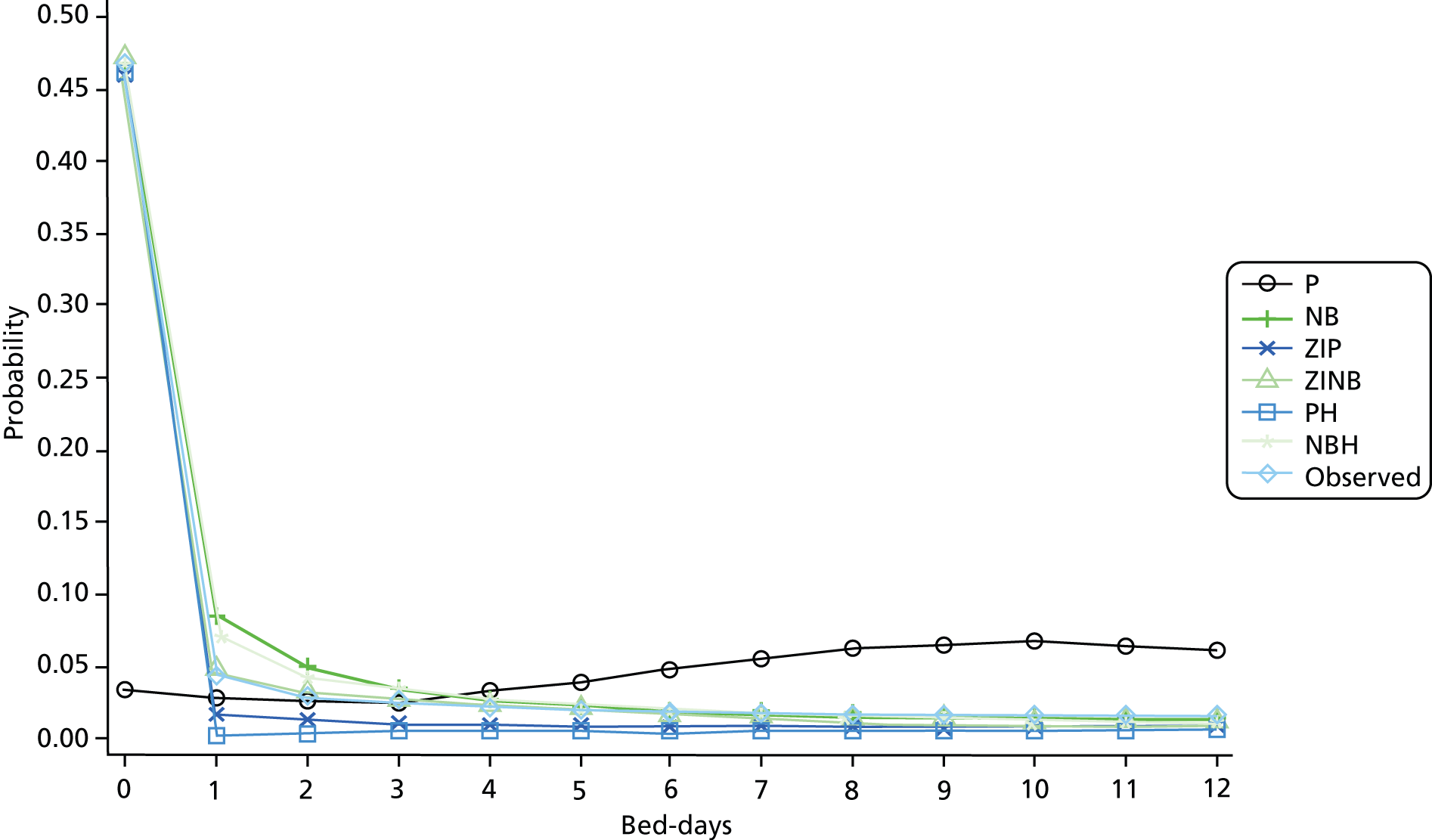

Resource-use measures are often characterised by distributions displaying overdispersion and an excess number of zero counts, so a range of count data models including Poisson, negative binomial with quadratic variance function (NB2), zero-inflated Poisson, zero-inflated negative binomial (ZINB), Poisson hurdle and negative binomial hurdle (NBH) models were fitted. The NB2 model allows for overdispersion by relaxing the Poisson model assumption of equal mean and variance. The zero-inflated and hurdle models provide alternative ways of capturing the excess zero counts. The former treats zeroes as arising from one of two sources – always zero and possibly zero – and the latter treats zeroes as arising from only one source. For a review of such models, see Hu et al. 63

Mortality rates in the year after discharge from the index HF admission are high. Accordingly, the number of days the patient survived following discharge from their index HF admission, capped at 365 days, was used as on offset term in the regression models to control for differing opportunity to accrue readmissions and bed-days. 61

‘Buckets’ approach

An alternative approach to modelling the number of bed-days directly is to categorise the count. We assigned patients with a positive number of bed-days to one of five resource-use buckets. The thresholds for each bucket were allocated such that the total resource use (number of patients × number of bed-days) in each bucket was approximately equal. The factors influencing membership of the highest (and two highest) resource-use buckets were examined using LR.

Other modelling details

The models described above assume that observations are independent, so the conditional ICC was calculated to assess the need for models taking account of the clustering of patients in hospitals. We used the same set of covariates as for the first readmission. This time, we also examined the additional value of including specific ICD-10 Z-codes as proxies for care in the community, patient support networks and likely adherence to post-discharge advice. One candidate was the code for living alone, whose recording in HES has been mandatory since 2009.

Nested models were compared using likelihood ratio tests, log-likelihood, AIC and Bayesian information criterion. For pairs of generalised linear models we additionally compared the deviance. Non-nested models were compared using the tests of Vuong64 and Clarke. 65

The mean predicted probability for each count value and observed probabilities were compared graphically. Pearson’s chi-square test and the chi-square goodness of fit test proposed by Cameron and Trivedi (p. 195)66 were not used, as they are not informative with large sample sizes such as ours.

The discrimination and calibration of the logistic models were assessed using the c-statistic and HL statistics respectively.

Chapter 3 Results

We now present results to illustrate the foregoing methods and questions rather than give a large number of tables covering AMI, stroke, HF, COPD, ACS, coronary artery bypass graft (CABG), hip and knee replacements, colorectal surgery and the other patient groups that we analysed. For instance, RTT metrics were defined for colorectal and orthopaedic surgery, and to illustrate this we present results for elective hip and knee replacements. When comparing machine learning and regression, we present results for AMI and colorectal excision.

Adjusting for comorbidity: results from updating the Charlson index

Amending the ICD codes following the expert clinical coder review and literature search led to increases in prevalence for dementia and AMI in particular. Comparison with the original 1987 published weights showed notable declines in relative importance for human immunodeficiency virus (HIV) and increases for dementia, a condition that was not even included in the original 1998 formulation of the Elixhauser index. For illustration, we present the weights derived from 2008/9 inpatients (Table 2).

| Comorbidity variable | Original Charlson weights | Original Charlson codes, new HES-based weights, all inpatients 2008/9 | Amended Charlson codes, new HES-based weights, all inpatients 2008/9 |

|---|---|---|---|

| Cancer | 2 | 5 | 4 |

| Connective tissue disorder | 1 | 3 | 2 |

| Cerebrovascular accident | 1 | 7 | 5 |

| Dementia | 1 | 8 | 7 |

| Diabetes with long-term complications | 2 | 0 | –1 |

| Diabetes without long-term complications | 1 | 2 | 1 |

| Congestive HF | 1 | 8 | 6 |

| HIV | 6 | 0 | 0 |

| Mild or moderate liver disease | 1 | 4 | 4 |

| Pulmonary disease | 1 | 3 | 2 |

| Metastatic cancer | 3 | 8 | 7 |

| AMI | 1 | 5 | 2 |

| Paraplegia | 2 | 1 | 1 |

| Peptic ulcer | 1 | 5 | 4 |

| Peripheral vascular disease | 1 | 4 | 3 |

| Renal disease | 2 | 7 | 5 |

| Severe liver disease | 3 | 11 | 9 |

As we found in our systematic review, Elixhauser generally outperformed Charlson for both mortality and readmissions. To illustrate, Table 3 gives the c-statistic and adjusted R2 values for both indices for AMI and COPD for mortality. More details are given elsewhere. 67

| Measure | Original Charlson codes and weights | Original Charlson codes, empirical weights | Amended Charlson codes, empirical weights | Modified Elixhauser, empirical weights |

|---|---|---|---|---|

| c-statistic | ||||

| All inpatients | 0.719 | 0.726 | 0.757 | 0.799 |

| AMI admissions | 0.624 | 0.641 | 0.654 | 0.668 |

| COPD admissions | 0.577 | 0.601 | 0.611 | 0.646 |

| Adjusted R2 statistic | ||||

| All inpatients | 0.100 | 0.106 | 0.123 | 0.142 |

| AMI admissions | 0.046 | 0.051 | 0.058 | 0.058 |

| COPD admissions | 0.020 | 0.030 | 0.032 | 0.044 |

Incorporating information from prior admissions is often done using a 1-year lookback period but sometimes using a longer one. Table 4 compares the ORs for a set of comorbidities relevant to ACSs when using a 1- and a 5-year lookback and when using none, i.e. when using only the secondary diagnosis codes from the index admission. Some effects were modified when using prior admissions. Overall, a 5-year period offered little benefit over a 1-year period, and a 1-year period offered little over no lookback. Further details are found elsewhere. 67

| Factor | One-year lookback | Five-year lookback | ||

|---|---|---|---|---|

| OR (95% CI) | p-value | OR (95% CI) | p-value | |

| Cancer | 1.50 (1.40 to 1.59) | < 0.001 | 1.25 (1.19 to 1.31) | < 0.001 |

| Atherosclerosis | 0.96 (0.87 to 1.06) | 0.420 | 0.93 (0.86 to 0.99) | 0.034 |

| Valvular disease | 1.81 (1.49 to 2.19) | < 0.001 | 1.76 (1.53 to 2.01) | < 0.001 |

| HF | 1.40 (1.30 to 1.50) | < 0.001 | 1.40 (1.32 to 1.47) | < 0.001 |

| Lower respiratory | 1.34 (1.22 to 1.46) | < 0.001 | 1.30 (1.22 to 1.39) | < 0.001 |

| Diabetes | 1.84 (1.58 to 2.15) | < 0.001 | 1.62 (1.47 to 1.78) | < 0.001 |

| Renal failure | 1.74 (1.52 to 2.00) | < 0.001 | 1.80 (1.63 to 1.99) | < 0.001 |

| Stroke | 1.16 (1.04 to 1.29) | 0.009 | 1.21 (1.13 to 1.29) | < 0.001 |

| Hypertension | 1.33 (1.10 to 1.61) | 0.003 | 1.18 (1.04 to 1.35) | 0.014 |

| Atrial fibrillation | 0.97 (0.87 to 1.08) | 0.554 | 0.90 (0.84 to 0.97) | 0.005 |

| Peripheral vascular disease | 1.43 (1.22 to 1.69) | < 0.001 | 1.42 (1.29 to 1.55) | < 0.001 |

| All other diagnoses | 1.06 (1.03 to 1.09) | < 0.001 | 0.99 (0.96 to 1.02) | 0.369 |

Such lookback approaches ignore the potential impact of the timing in relation to the index admission. More sophisticated approaches using polynomials as described earlier were applied to our HF cohort, and we now give the results.

Including information regarding the timing of previous health service contacts in the prediction models

Since using a straightforward sum for the previous admissions had no great effect in terms of the c-statistic on casemix adjustment, it is unsurprising that the weighted sum we derived did not add much either. This, however, does not mean that previous admissions or the non-constant weights used here are unimportant. Here are the results for 30-day readmission following live discharge from an index HF admission:

-

Without additional variables (i.e. just age, sex, comorbidities, etc.) the c-statistic was 0.579, and the HL test gave a chi-squared of 17.2 (p = 0.025).

-

With a constant sum only for the previous admissions the c-statistic rose to 0.586, and the HL test gave a chi-squared of 17.1 (p = 0.030).

-

With the time-weighted base polynomial for the previous admissions the c-statistic was 0.591, and the HL test gave a slightly improved chi-squared of 11.5 (p = 0.177).

-

The two lowest time-weighted polynomials for the previous admissions yielded a c-statistic of 0.592, and the HL test gave a lower chi-squared of 7.4 (p = 0.499).

-

The three lowest time-weighted polynomials for the previous admissions also yielded a c-statistic of 0.592, and the HL test gave a similar chi-squared of 5.8 (p = 0.675).

-

The four lowest time-weighted polynomials for the previous admissions also yielded a c-statistic of 0.592, and the HL test gave a chi-squared of 5.8 (p = 0.668).

Adding one or two time-weighted polynomials improved the c-statistic by about as much again as did an unweighted sum of previous admissions. This procedure also noticeably improved the calibration. Adding a third polynomial improved the calibration even more, but beyond that we saw no further improvement. We conclude that using these (two or three) time-weighted polynomials was appreciably better than the simple count approach.

Mortality and readmission from logistic regression

Following on from the previous section, we used the Elixhauser set plus dementia to adjust for comorbidity in this section. Model performance in terms of discrimination varied considerably by patient group and outcome (Table 5), with readmissions of any follow-up length being harder to predict than death. Discrimination was generally higher for 28- than for 7-day readmission but similar for the two death outcomes. Because of the large sample sizes, the confidence intervals for the c-statistics were very narrow and are not shown in this report.

| Patient group | 7-day readmission | 28-day readmission | Death | Total 30-day death | ||||

|---|---|---|---|---|---|---|---|---|

| c | HL (p-value) | c | HL (p-value) | c | HL (p-value) | c | HL (p-value) | |

| AMI | 0.623 | 21 (0.006) | 0.644 | 72 | 0.775 | 376 | 0.770 | 361 |

| Stroke | 0.599 | 14 (0.071) | 0.618 | 69 | 0.737 | 707 | 0.732 | 590 |

| HF | 0.600 | 11 (0.165) | 0.618 | 11 (0.166) | 0.684 | 80 | 0.680 | 78 |

| Pneumonia | 0.618 | 60 | 0.657 | 285 | 0.800 | 445 | 0.795 | 459 |

| COPD | 0.650 | 36 | 0.682 | 170 | 0.718 | 73 | 0.714 | 66 |

| FNOF | 0.617 | 38 | 0.624 | 106 | 0.773 | 209 | 0.773 | 183 |

| THR | 0.607 | 5.0 (0.756) | 0.613 | 10 (0.278) | 0.864 | 13 (0.123) | 0.845 | 8.9 (0.348) |

| TKR | 0.615 | 16 (0.039) | 0.61 | 13 (0.111) | 0.811 | 10 (0.273) | 0.804 | 7.5 (0.481) |

| CABG | 0.608 | 8.5 (0.382) | 0.604 | 3.0 (0.933) | 0.835 | 10 (0.237) | 0.830 | 15 (0.061) |

| AAA repair | 0.610 | 2.8 (0.945) | 0.615 | 9.0 (0.339) | 0.770 | 6.3 (0.611) | 0.771 | 4.1 (0.851) |

| Colorectal excision | 0.580 | 9.1 (0.333) | 0.593 | 12 (0.142) | 0.834 | 21 (0.006) | 0.830 | 19 (0.016) |

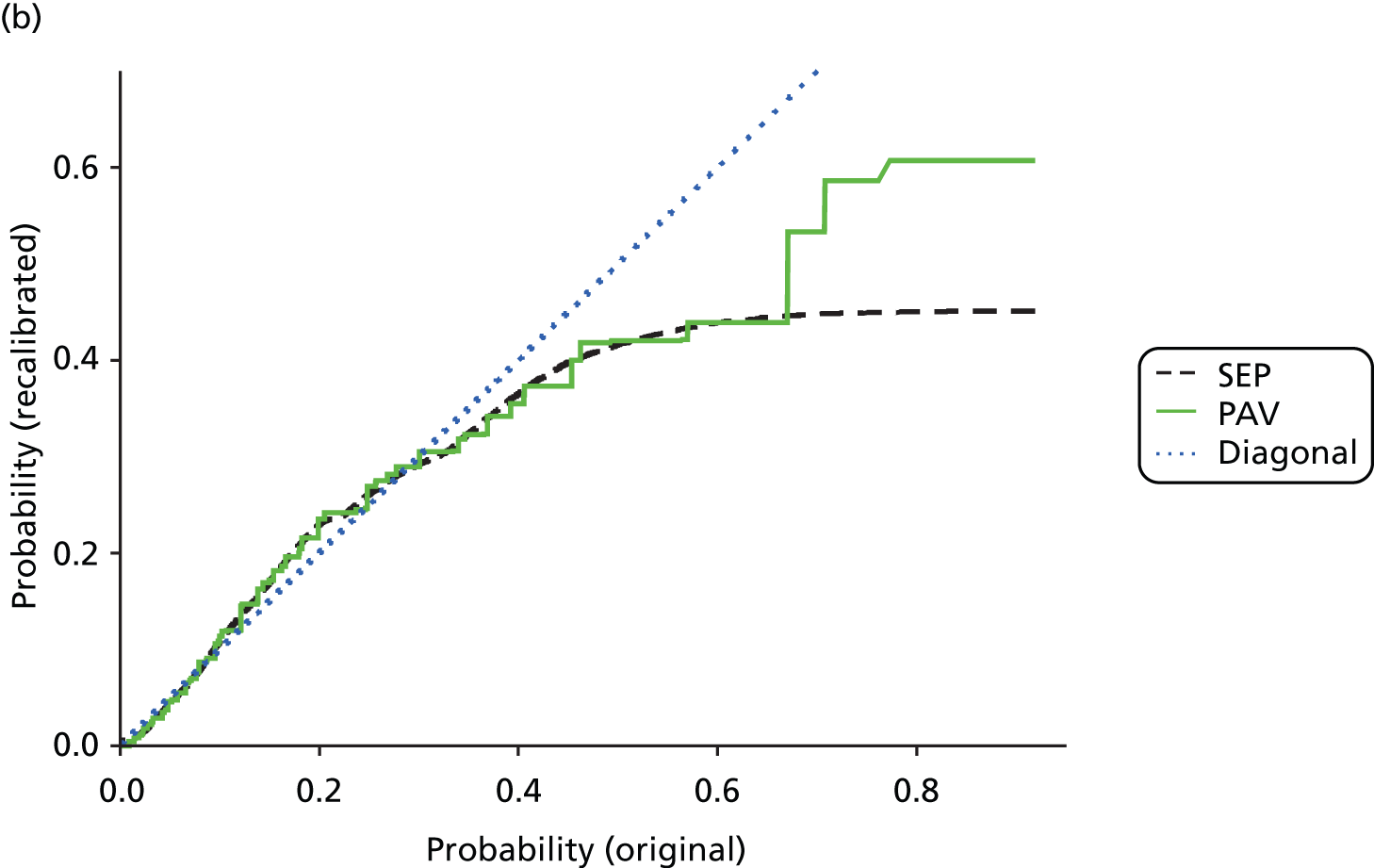

Calibration was much better for the procedures than for the diagnosis groups. It is common to find that LR models are not always well calibrated, with overprediction of low risk and underprediction of high risk common problems. Where calibration was found to be unacceptable as judged by the HL p-value (though see earlier commentary on this measure), we found overprediction of low risk to be a common explanation. A typical example is given below for 30-day total mortality following AMI. Figure 2 plots the ratio of the observed to predicted deaths.

FIGURE 2.

Ratio of observed to predicted deaths for each risk decile for 30-day mortality following AMI.

Comparison of methods: logistic regression and machine learning

For the LR models in this section of the analysis, we therefore took output from both the usual LR models and those with the extra recalibration step as described in the earlier recalibration subsection. We compared these with several machine learning approaches. Table 6 gives the resulting discrimination for AMI and elective colorectal surgery for mortality and readmission (results were similar for pneumonia and are not shown). In general, ANNs sometimes gave slightly better c-statistics, but LR performed comparatively well. Overfitting was a problem for the random forests in particular: for example, for AMI mortality, the training set c-statistic reached 0.92, which fell to 0.74 for the test set. For SVMs, only the best results are shown; performance varied by the SVMs’ parameters. In general, we found that overfitting was severe on the raw data for any kernel. However, transforming the data to principal components analysis space, as described earlier, massively reduced overfitting and also stabilised the algorithm. The total predicted outcomes differed from the total observed outcomes because the results are for the test set, whereas any calibration is learnt/trained on the training data set. Even the best method would suffer from at least some such statistical fluctuations.

| Outcome and method | AMI | Colorectal excision | ||||

|---|---|---|---|---|---|---|

| c | HL statistic | p-value | c | HL statistic | p-value | |

| Mortality | ||||||

| LR | 0.773 | 124.5 | < 0.001 | 0.823 | 26.4 | 0.003 |

| LR+ | 0.777 | 17.3 | 0.067 | 0.826 | 51.7 | < 0.001 |

| ANN | 0.777 | 36.4 | < 0.001 | 0.824 | 86.3 | < 0.001 |

| ANN-LR | 0.777 | 36.5 | < 0.001 | 0.826 | 31.9 | < 0.001 |

| SVM linear kernel | 0.771 | 13.4 | 0.201 | 0.806 | 26.8 | 0.003 |

| SVM Gaussian kernel | 0.771 | 8.9 | 0.544 | 0.823 | 49.1 | < 0.001 |

| Random forest | 0.743 | See text | < 0.001 | 0.768 | See text | < 0.001 |

| Readmission | ||||||

| LR | 0.640 | 33.0 | < 0.001 | 0.576 | 8.6 | 0.567 |

| LR+ | 0.638 | 20.2 | 0.027 | 0.570 | 9.6 | 0.479 |

| ANN | 0.638 | 36.5 | < 0.001 | 0.571 | 104.0 | < 0.001 |

| ANN-LR | 0.638 | 42.7 | < 0.001 | 0.570 | 31.9 | < 0.001 |

| SVM linear kernel | 0.637 | 32.0 | < 0.001 | 0.575 | 5.8 | 0.830 |

| SVM Gaussian kernel | 0.636 | 25.1 | 0.005 | 0.578 | 19.7 | 0.032 |

| Random forest | 0.550 | See text | < 0.001 | 0.532 | See text | < 0.001 |

It is noteworthy that random forests suffered more from overfitting than the other methods. This also had repercussions for recalibration: the raw probability estimates were generally too low. In particular we observed that random forests picked up a sizeable number of positive outcomes and grouped them together. Pool adjacent violators recalibration then maps these onto high-probability estimates. Unfortunately, these high probabilities were accurate only on the training data set (overfitting), and hence the corresponding HL test resulted in extremely large chi-squared values, which we have not given in the table.

When these models are used in risk adjustment, the resulting RRs at unit level are also of interest. These were derived by summing the observed and summing the predicted for each hospital and dividing the former sum by the latter sum. Table 7 compares the RRs derived from LR (both standard and recalibrated) with those derived from the other methods for AMI in terms of their funnel plot outlier status. Hospital trusts with at least 50 AMIs in two years were included. The patterns for colorectal surgery were similar and are not shown.

| Outcome and method | Low 95% outliers | High 95% outliers | Low 99.8% outliers | High 99.8% outliers |

|---|---|---|---|---|

| Mortality | ||||

| ANN | 2 | 5 | 1 | 0 |

| ANN-LR | 9 | 3 | 1 | 0 |

| LR | 6 | 3 | 1 | 0 |

| LR+ | 10 | 3 | 1 | 0 |

| SVM linear kernel | 8 | 3 | 1 | 0 |

| SVM Gaussian | 7 | 3 | 1 | 0 |

| Random forest | 19 | 4 | 2 | 0 |

| Readmission | ||||

| ANN | 2 | 0 | 1 | 0 |

| ANN-LR | 3 | 0 | 1 | 0 |

| LR | 4 | 1 | 1 | 0 |

| LR+ | 2 | 0 | 1 | 0 |

| SVM linear kernel | 3 | 1 | 1 | 0 |

| SVM Gaussian | 3 | 1 | 1 | 0 |

| Random forest | 7 | 2 | 1 | 0 |

There were more low than high outliers. In general, there was a lot of similarity between the sets of SMRs themselves and between the numbers of outliers across six of the seven methods. The exception was the random forests, for which recalibration consistently gave the highest total expected count for the four sets (AMI and colorectal, mortality and readmission) and resulted in by far the highest number of hospitals flagged as significantly low on the funnel plots. This was due to overfitting and the assigning of a very high predicted risk to too many patients.

Return-to-theatre metrics for orthopaedics

Following on from our earlier work in urology for cystectomy,7 we give results for total or partial hip replacement (HR) and knee replacement (KR); colorectal excision results may be found elsewhere. 68

There were 260,206 index HR procedures and 286,590 index KR procedures during the 6 years 2007/8 to 2012/13 combined. Because of invalid or unknown consultant team codes, 2153 HRs and 2874 KRs were excluded. A further 112 HRs and 29 KRs were removed at hospitals performing fewer than 30 procedures over the 6 years. This left 260,370 HRs among 2029 surgeons and 315,454 KRs among 2061 surgeons for analysis. Of these, there were 5353 RTTs within 90 days for a rate of 2.1% for HR and 5508 RTTs within 90 days for a rate of 1.8% for KR.

The most common reinterventions were closed reduction of dislocated total prosthetic replacement for HR (34% of the total) and attention to total prosthetic replacement of knee joint not elsewhere classified for KR (28% of the total), the latter mostly representing manipulations under anaesthesia for stiffness. Age, sex and many comorbidities were significant predictors for both index procedures. Age had different relations for hips (ages over 75 years had higher odds) from those for knees (ages under 60 years had higher odds, particularly those under 45 years). Some comorbidities, such as arrhythmias, dementia, obesity, fluid disorders and Parkinson’s disease, were associated with higher odds of RTT for both index procedures, but others, such as liver disease, depression and alcohol abuse, raised the odds only for HR. There was no relation with area deprivation. Hip resurfacing had around half the odds of other hip subgroups; partial knee replacements had around half the odds of other knee subgroups.

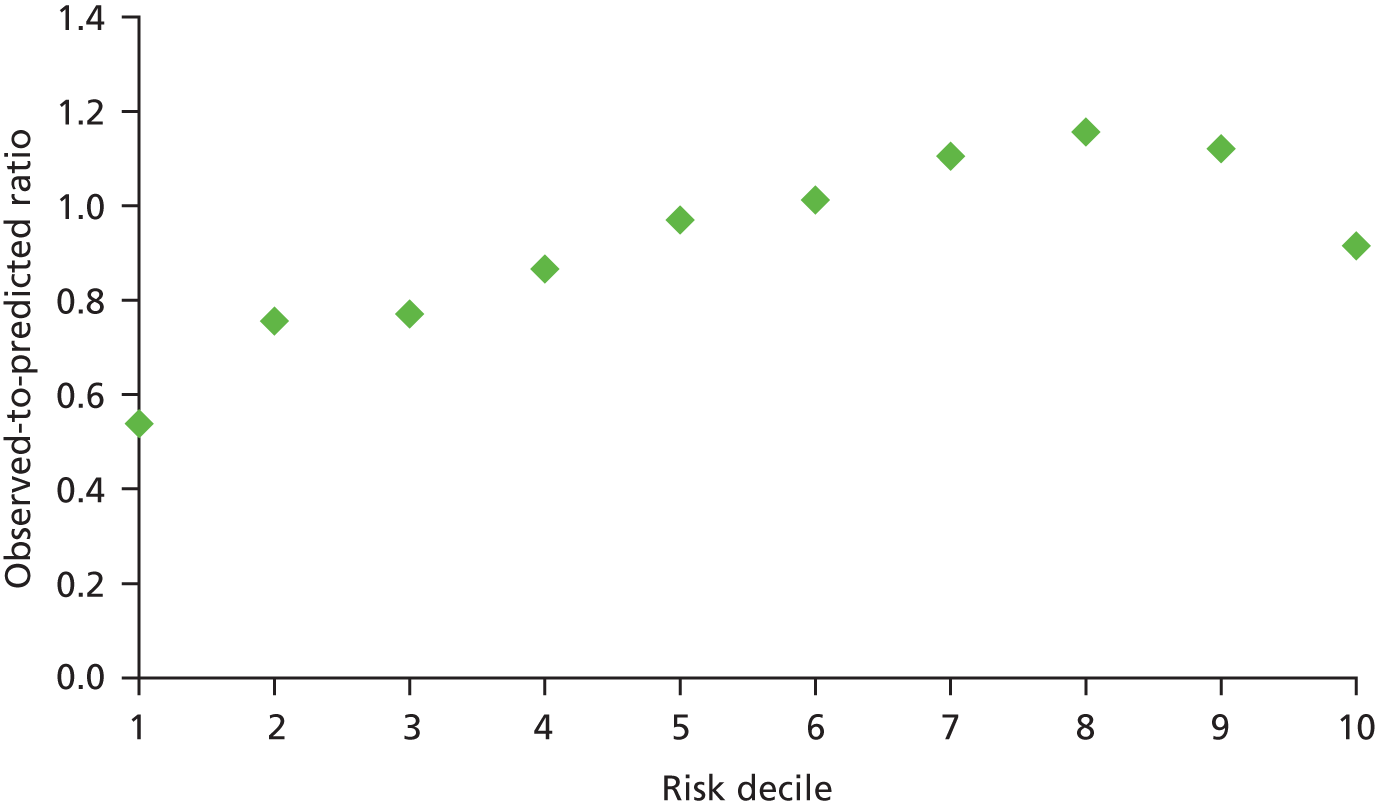

Figure 3 shows the variation for RTT following HR by surgeon (the plot for KRs is very similar and is not shown). Because of the known unreliability of consultant codes in HES for unplanned activity, we analysed and give the figures for elective procedures only.

FIGURE 3.

Funnel plot for RTT following HR by surgeon.

The discrimination of the two models was low (c = 0.61 for HR and 0.60 for KR) but the calibration was acceptable using the HL test (chi-squared 9.2, p = 0.324 for HR, and chi-squared 13.3, p = 0.102 for KR).

Variation by surgeon for HR was similar to that for KR (standard deviation 0.22, Table 8). Patient factors explained little of this for either procedure. Accounting for the hospital explained around a quarter of the variation between surgeons for HR and nearly half (44%) for KR. This suggests that three-quarters of a surgeon’s RTT rate for HR and half their rate for KR are explained by factors other than patient factors or the hospital at which they operate.

| Index procedure and model | AIC | Standard deviation of surgeon effects | % change from surgeon only |

|---|---|---|---|

| HR | |||

| Surgeon only | 51,841 | 0.219 (0.021) | 0 |

| Surgeon + patient factors | 51,153 | 0.210 (0.020) | –4.1 |

| Surgeon + hospital | 51,806 | 0.162 (0.019) | –26.0 |

| Surgeon + hospital + patient factors | 51,124 | 0.160 (0.019) | –26.0 |

| KR | |||

| Surgeon only | 55,094 | 0.220 (0.019) | 0 |

| Surgeon + patient factors | 54,458 | 0.212 (0.019) | –3.6 |

| Surgeon + hospital | 55,000 | 0.123 (0.016) | –44.1 |

| Surgeon + hospital + patient factors | 54,361 | 0.116 (0.016) | –47.3 |

Outpatients department non-attendance

Many reasons for patients missing their OPD appointment have been identified. We now give a brief summary of the literature that is not meant to be exhaustive. Reasons for missing include simple forgetting or confusion over the appointment’s time or location,69 illness severity (either mild, including recovery from symptoms, or severe),70,71 administrative and/or communication errors,69,72–74 being busy or unable to take time off work or arrange child care. 75,76 Previous unsatisfactory experience of health care, such as long waiting times and unhelpful staff with poor communication skills, also has an impact,77,78 as do not being involved in the referral decision-making process69,71 and not understanding the reasons for the appointment. 79 Previous non-attendance is a strong predictor for future behaviour related to attendance,78 with the probability of missing a second appointment being much higher than the probability of not turning up to the first. 75 There is an association between the number of appointments and rates of non-attendance, with high users of outpatient services being more likely to default on an appointment. 78 These patients are likely to have long-term chronic diseases, which may have not changed since their last appointment. 73 Because of this factor, there is often a difference in attendance rates between those attending their first appointment and those attending subsequent follow-up appointments. King et al. 80 found that patients with diabetes and glaucoma felt that, as their condition was stable, missing an appointment was unlikely to harm them.

Several studies have looked at the relation between non-attendance and factors such as demographic information and personal circumstances, for example family size or socioeconomic deprivation. With age, it is likely that non-attendance rates have a bimodal distribution, with young adults and teenagers as well as the elderly being those most likely to miss their appointments. 71 It has been reported that alcoholics, intravenous drug users and those who are pregnant have higher non-attendance rates,75 as well as past and current smokers. 81,82 Patients who are single parents or have children living at home are more likely to be non-attenders. 81 Those with young, large families are also more likely to miss appointments,76,83 as well as those with a poor family support system. 69 Area-level deprivation scores are often strong predictors of non-attendance. 70,84 Gatrad85 found higher rates of non-attendance in Asian populations for both new and follow-up appointments, though these attendance rates improved when culturally specific interventions were introduced.

As well as analysing first-time appointments, we also considered patients recently discharged from inpatient treatment. Just as the time interval between referral and appointment has been found to be a strong predictor of non-attendance,86 so is the time interval between discharge from inpatient admission and the first subsequent outpatient appointment. 70 If there is a lengthy delay then patients are less likely to attend the appointment for a number of reasons, including the appointment being unnecessary as their condition has improved, or simply the patient forgetting the appointment. 78 Two studies found that those waiting more than 2 months from referral to appointment were least likely to attend. 73,87 On the other hand, too short an interval, such as less than 3 days or a week, between referral and appointment had a higher rate of non-attendance, as this short notice does not give patients enough time to reschedule their commitments. 73,79 Hamilton et al. 70 found that, for every 1-week increase in interval, patients were 5–9% more likely to miss their appointment.

While many of the foregoing factors such as personal circumstances are not available in HES, several key ones are, such as age, area-level deprivation, prior non-attendance and time interval between inpatient discharge and the first subsequent appointment. We also used HES to track later hospital activity in our patient cohorts.

Table 9 gives the results for the first appointment in general medical and general surgical OPD; Table 10 gives the same but for the first appointment following inpatient discharge. In both tables, to reduce size, only a few age groups and none of the many diagnosis group and subgroup combinations have been shown.

| Patient factor | OR (95% CI) for general medicine appointments | p-value | OR (95% CI) for general surgery appointments | p-value |

|---|---|---|---|---|

| Age group (years): < 1 (compared with 65–69) | 3.52 (2.67 to 4.63) | < 0.0001 | 2.64 (2.26 to 3.09) | < 0.0001 |

| 1–4 | 3.34 (2.73 to 4.09) | < 0.0001 | 1.82 (1.62 to 2.03) | < 0.0001 |

| 5–9 | 2.26 (1.76 to 2.92) | < 0.0001 | 1.61 (1.42 to 1.83) | < 0.0001 |

| 10–14 | 1.99 (1.60 to 2.48) | < 0.0001 | 1.96 (1.74 to 2.20) | < 0.0001 |

| 15–19 | 2.55 (2.36 to 2.77) | < 0.0001 | 2.86 (2.68 to 3.06) | < 0.0001 |

| 20–24 | 3.06 (2.85 to 3.28) | < 0.0001 | 3.47 (3.29 to 3.66) | < 0.0001 |

| 25–29 | 2.88 (2.69 to 3.08) | < 0.0001 | 3.24 (3.08 to 3.41) | < 0.0001 |

| (rows omitted) | ||||

| 90+ | 1.27 (1.14 to 1.42) | < 0.0001 | 1.58 (1.42 to 1.77) | < 0.0001 |

| Male sex | 1.21 (1.17 to 1.24) | < 0.0001 | 1.29 (1.27 to 1.32) | < 0.0001 |

| Deprivation quintile 2 (compared with least deprived) | 1.06 (1.01 to 1.11) | 0.0238 | 1.07 (1.03 to 1.11) | 0.0002 |

| 3 | 1.25 (1.20 to 1.31) | < 0.0001 | 1.24 (1.19 to 1.28) | < 0.0001 |

| 4 | 1.51 (1.45 to 1.58) | < 0.0001 | 1.60 (1.55 to 1.65) | < 0.0001 |

| 5 (most deprived) | 1.94 (1.86 to 2.03) | < 0.0001 | 2.06 (2.00 to 2.13) | < 0.0001 |

| Waiting (per week) | 1.02 (1.02 to 1.02) | < 0.0001 | 1.03 (1.02 to 1.03) | < 0.0001 |

| Earlier appointments | 0.96 (0.96 to 0.96) | < 0.0001 | 0.97 (0.96 to 0.97) | < 0.0001 |

| Earlier DNAs | 1.32 (1.31 to 1.34) | < 0.0001 | 1.34 (1.33 to 1.35) | < 0.0001 |

| Earlier elective admissions | 0.99 (0.98 to 1.00) | 0.0049 | 0.98 (0.97 to 0.99) | < 0.0001 |

| Earlier emergency admissions | 1.10 (1.09 to 1.11) | < 0.0001 | 1.14 (1.13 to 1.15) | < 0.0001 |

| Earlier total bed-days | 1.01 (1.00 to 1.01) | < 0.0001 | 1.01 (1.00 to 1.01) | < 0.0001 |

| Patient factor | Factor value | OR (95% CI) | p-value |

|---|---|---|---|

| Age (compared with ages 60–64 years) | < 1 | 1.63 (1.41 to 1.89) | < 0.0001 |

| 1–4 | 1.38 (1.19 to 1.59) | < 0.0001 | |

| 5–9 | 1.21 (1.02 to 1.45) | < 0.0001 | |

| 10–14 | 1.49 (1.22 to 1.83) | 0.033 | |

| 15–19 | 1.69 (1.44 to 1.97) | 0.0001 | |

| (rows omitted) | |||

| 85–89 | 1.26 (1.20 to 1.33) | < 0.0001 | |

| 90+ | 1.44 (1.35 to 1.53) | < 0.0001 | |

| Sex | Male | 0.99 (0.97 to 1.01) | 0.375 |

| Charlson comorbidity score | 1.01 (1.01 to 1.02) | < 0.0001 | |

| Number of previous OPD appointments attended | 0.98 (0.98 to 0.98) | < 0.0001 | |

| Number of previous OPD appointments missed | 1.25 (1.24 to 1.26) | < 0.0001 | |

| Number of previous elective admissions | 0.99 (0.98 to 0.99) | < 0.0001 | |

| Number of previous emergency admissions | 1.03 (1.02 to 1.03) | < 0.0001 | |

| LOS of index admission (per night) | 1.01 (1.01 to 1.01) | < 0.0001 | |

| Quintile (compared with least deprived) | 2 | 1.04 (1.00 to 1.08) | 0.073 |

| 3 | 1.21 (1.16 to 1.26) | < 0.0001 | |

| 4 | 1.48 (1.43 to 1.54) | < 0.0001 | |

| 5 (most deprived) | 1.80 (1.74 to 1.87) | < 0.0001 | |