Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 11/2004/39. The contractual start date was in January 2013. The final report began editorial review in December 2015 and was accepted for publication in March 2016. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Richard Cookson and Peter Goldblatt report grants for related work received during this study and that they are members of the NHS Outcomes Framework Technical Advisory Group, and Brian Ferguson reports that he is Chief Economist, Public Health England.

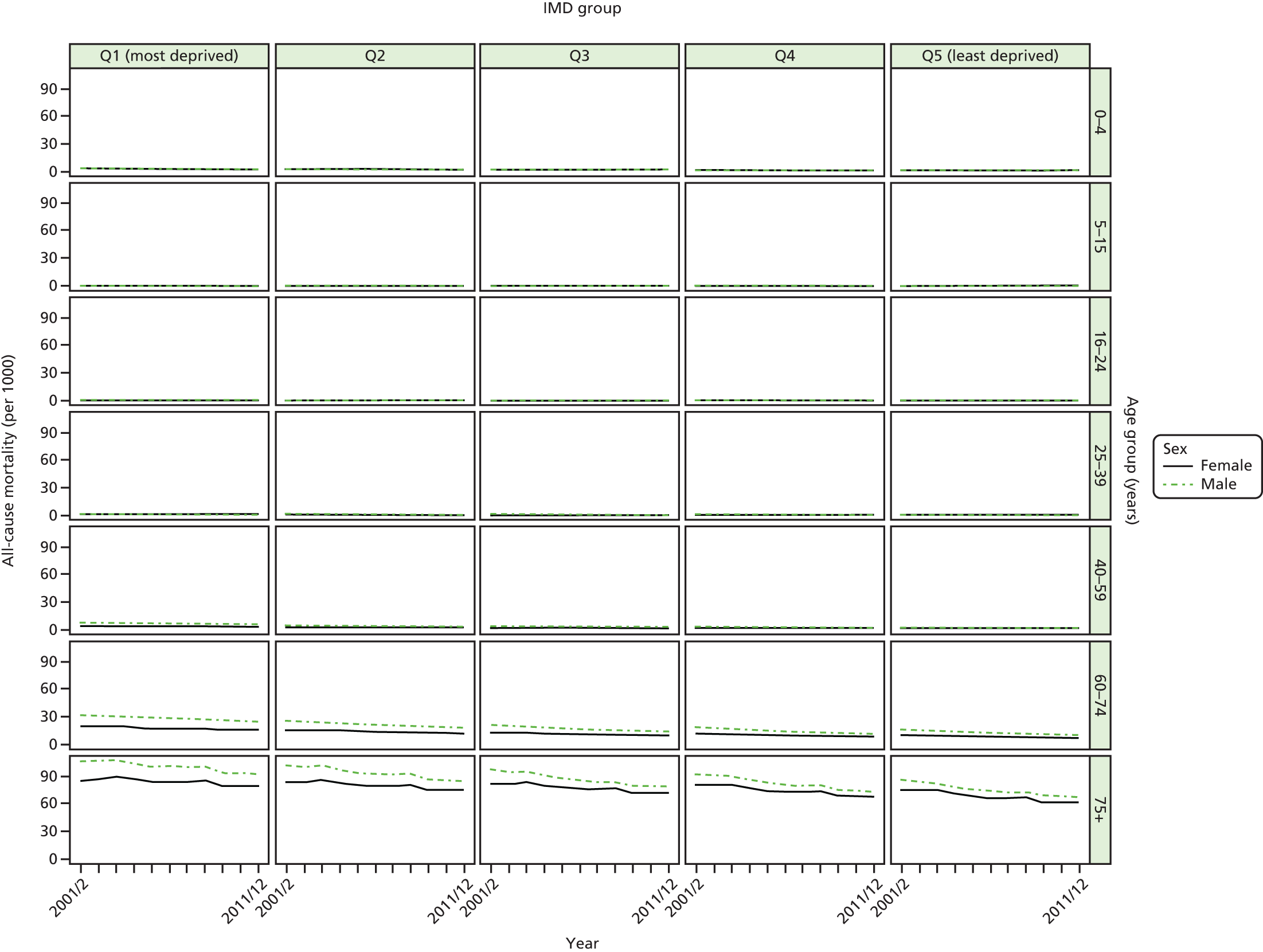

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2016. This work was produced by Cookson et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Introduction

[T]he isolation of disparities from mainstream quality assurance has impeded progress in addressing them.

Fiscella et al., 2000. 1

Overview

This report describes the findings of independent research to develop health equity indicators for monitoring socioeconomic inequalities in health-care access and outcomes in England. Inequalities of this kind persist, raising important public policy concerns about both quality of care and social justice. However, progress in addressing these concerns is hampered because socioeconomic inequalities in health-care access and outcomes are not yet monitored systematically in England at either national or local levels. 2

We developed an integrated suite of equity indicators for two different kinds of monitoring:

- annual monitoring of change in national health-care equity
- annual monitoring of local within-area health-care equity against a national equity benchmark, for Clinical Commissioning Groups (CCGs) or other subnational areas comprising ≥ 100,000 people.

Our equity indicators are designed to help national and local decision-makers in England discharge the NHS health inequalities duties introduced in the Health and Social Care Act 2012. 3 The local duty for CCGs is as follows:

Each clinical commissioning group must, in the exercise of its functions, have regard to the need to –

(a) reduce inequalities between patients with respect to their ability to access health services, and

(b) reduce inequalities between patients with respect to the outcomes achieved for them by the provision of health services.

Health and Social Care Act 2012. 3 Contains public sector information licensed under the Open Government Licence v3.0

The national duty for NHS England is phrased in the same way, and the national duty for the Secretary of State is as follows:

In exercising functions in relation to the health service, the Secretary of State must have regard to the need to reduce inequalities between the people of England with respect to the benefits that they can obtain from the health service.

Health and Social Care Act 2012. 3 Contains public sector information licensed under the Open Government Licence v3.0

Our indicators will also help to monitor the health inequalities elements of the NHS duties as to promoting integration of care that were introduced in the Health and Social Care Act 2012. 3 Improving the integration of care is a central policy priority for the English NHS, including not only integration within NHS-funded services across different specialties and different primary and acute care settings, but also integration between NHS-funded services and other services that impact on patient outcomes. NHS England announced in 2013 the establishment of a ‘Better Care Fund’ for integrated care across health-care and social-care boundaries, and announced in 2014 a programme of ‘new models of care’ or ‘vanguard sites’ for integrating care between specialties and settings. 4 The relevant local duties on integration and inequalities are phrased as follows:

(1) Each clinical commissioning group must exercise its functions with a view to securing that health services are provided in an integrated way where it considers that this would —

(a) improve the quality of those services (including the outcomes that are achieved from their provision),

(b) reduce inequalities between persons with respect to their ability to access those services, or

(c) reduce inequalities between persons with respect to the outcomes achieved for them by the provision of those services.

(2) Each clinical commissioning group must exercise its functions with a view to securing that the provision of health services is integrated with the provision of health-related services or social care services where it considers that this would —

(a) improve the quality of the health services (including the outcomes that are achieved from the provision of those services),

(b) reduce inequalities between persons with respect to their ability to access those services, or

(c) reduce inequalities between persons with respect to the outcomes achieved for them by the provision of those services.

(3) In this section —

‘health-related services’ means services that may have an effect on the health of individuals but are not health services or social care services;

‘social care services’ means services that are provided in pursuance of the social services functions of local authorities (within the meaning of the Local Authority Social Services Act 1970).

Health and Social Care Act 2012. 3 Contains public sector information licensed under the Open Government Licence v3.0

The phrasing of the Health and Social Care Act 2012 3 makes it clear that the NHS health inequalities duties include (1) concern for reducing inequalities in the health outcomes or benefits of health care, as well as concern for reducing inequalities of access to health care, and (2) concern for improving the co-ordination of health care with social care and other public services which impact on health outcomes. These two concerns go to the heart of what it means to be a national health service, rather than a national sickness service, and are also reflected in the NHS Constitution, published in 2012. 5 The first principle of the NHS Constitution is that:

The NHS provides a comprehensive service, available to all . . . At the same time, it has a wider social duty to promote equality through the services it provides and to pay particular attention to groups or sections of society where improvements in health and life expectancy are not keeping pace with the rest of the population.

The NHS Constitution for England 2012. 5 Contains public sector information licensed under the Open Government Licence v3.0

The fifth principle is that:

The NHS works across organisational boundaries and in partnership with other organisations in the interest of patients, local communities and the wider population. The NHS is an integrated system of organisations and services bound together by the principles and values reflected in the Constitution. The NHS is committed to working jointly with other local authority services, other public sector organisations and a wide range of private and voluntary sector organisations to provide and deliver improvements in health and wellbeing.

The NHS Constitution for England 2012. 5 Contains public sector information licensed under the Open Government Licence v3.0

These concerns relate to the wider health equity concern for reducing social inequality in population health. Social inequalities in life expectancy and health raise important concerns about social justice, because health is essential to human flourishing. 6 In economic terms, health is both a consumption good that people value for its own sake and a capital good that allows people to do the things they value in life. Health care is, of course, only one of many social determinants of health and survival over the life-course, along with in utero and childhood circumstances, income, education, working and living conditions, social support networks, long-term care, lifestyle factors such as smoking, poor diet and physical inactivity, and many other factors. 7–9 However, although health care cannot eliminate social inequalities in health, it can play a role in helping to reduce them. 10–12 Therefore, we sought to ensure that our equity indicators are relevant from a wider-population health perspective, as well as from a health-care perspective, and that our indicators are relevant to the integration of care across different specialties, settings and services.

Our equity indicators are intended for use by NHS and local authority decision-makers for quality improvement purposes, to help policy-makers and managers learn how to improve the delivery of health-care services including integration with social care and other health-related services. They are also intended for use by a wide range of organisations which play external scrutiny roles in helping to hold the NHS to account, including Public Health England and local Health and Wellbeing Boards, health sector regulators (such as the National Audit Office and the Health Select Committee), professional associations [such as the NHS Confederation, British Medical Association (BMA) and Royal Colleges], think tanks (such as the Health Foundation, The King’s Fund and Nuffield Trust), and national and local media organisations. Our indicators are also intended for public reporting, to facilitate more direct forms of public accountability. In principle, our equity indicators can also be used to monitor health-care equity in other high-income countries with well-developed administrative health data sets, to make international comparisons of equity in health care, and to help evaluate the health-care equity impacts of interventions in trials and quasi-experimental studies.

The aims of our study were to:

- develop indicators of socioeconomic inequality in health-care access and outcomes at different stages of the patient pathway
- develop methods for monitoring local NHS equity performance in tackling socioeconomic health-care inequalities
- track the evolution of socioeconomic health-care inequalities in the 2000s
- develop ‘equity dashboards’ for communicating equity indicator findings to decision-makers in a clear and concise format.

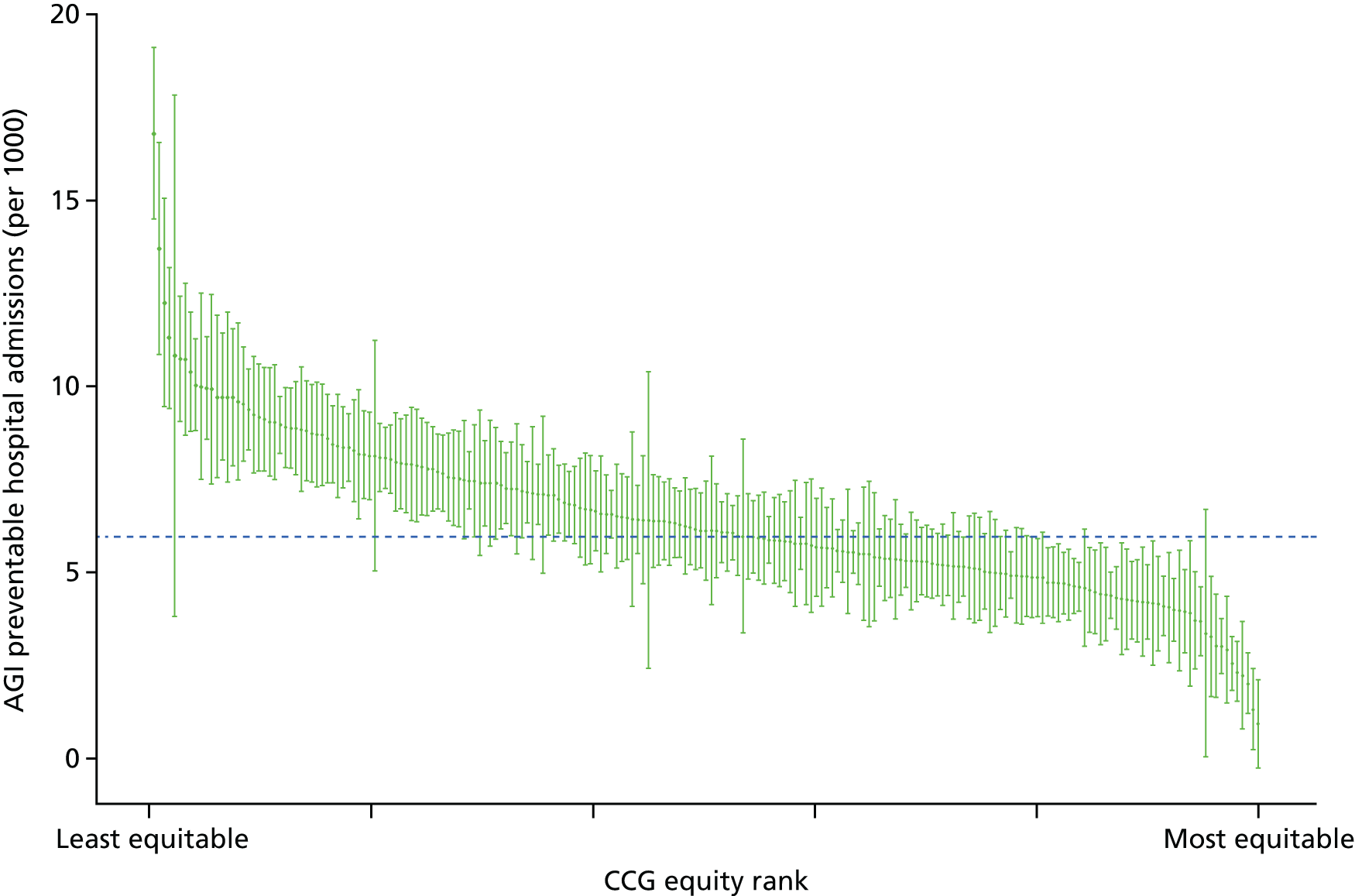

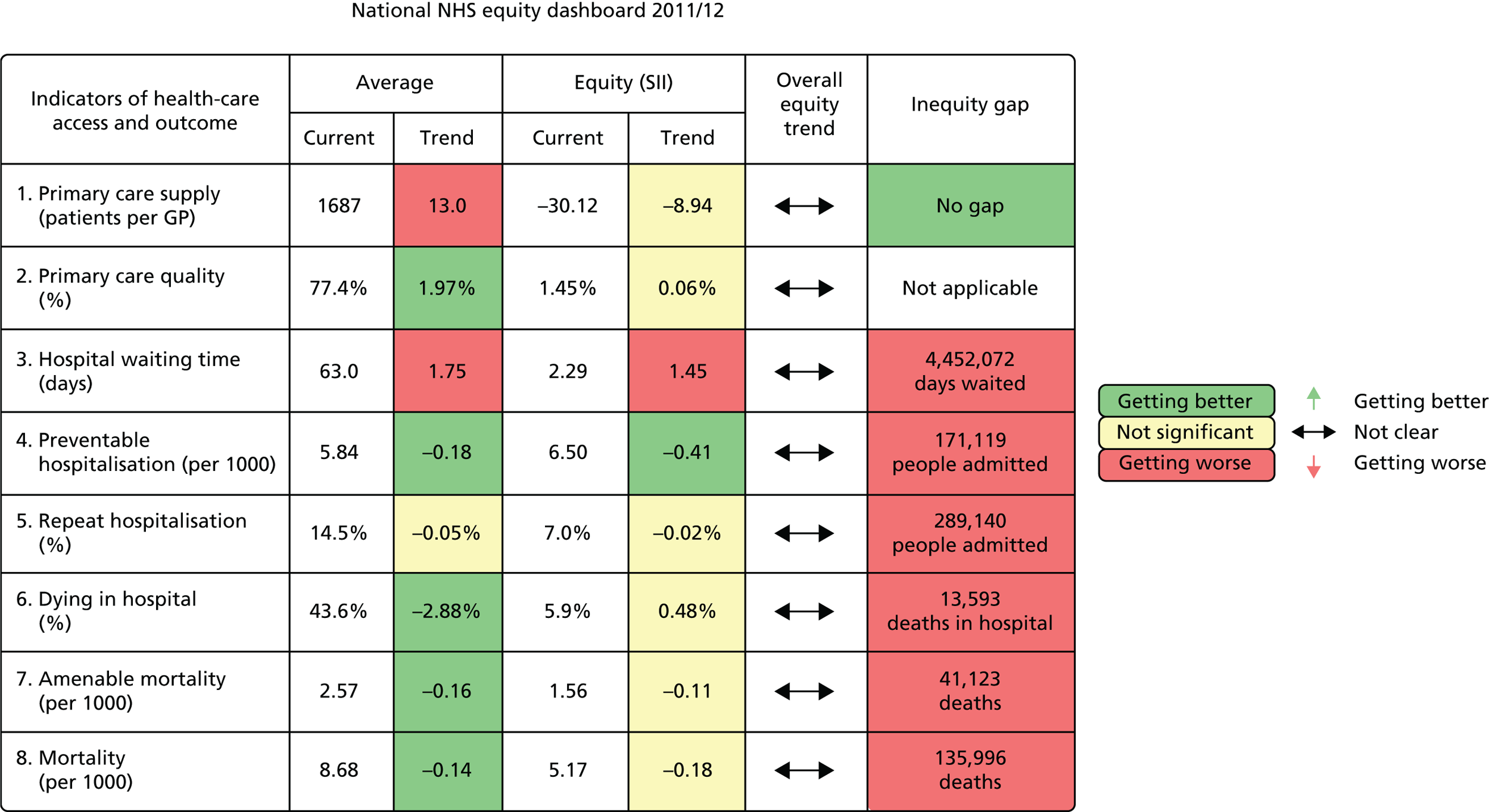

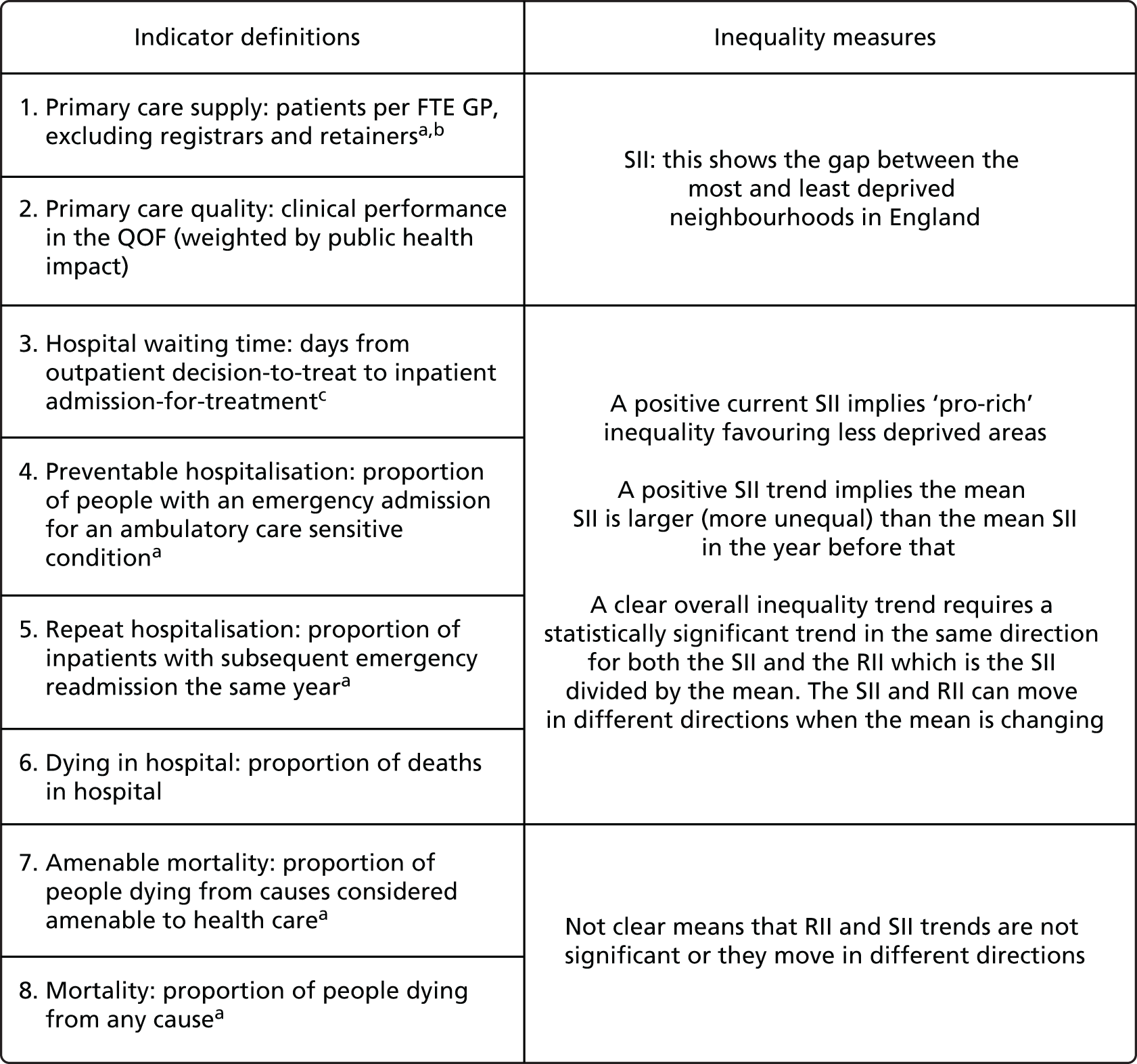

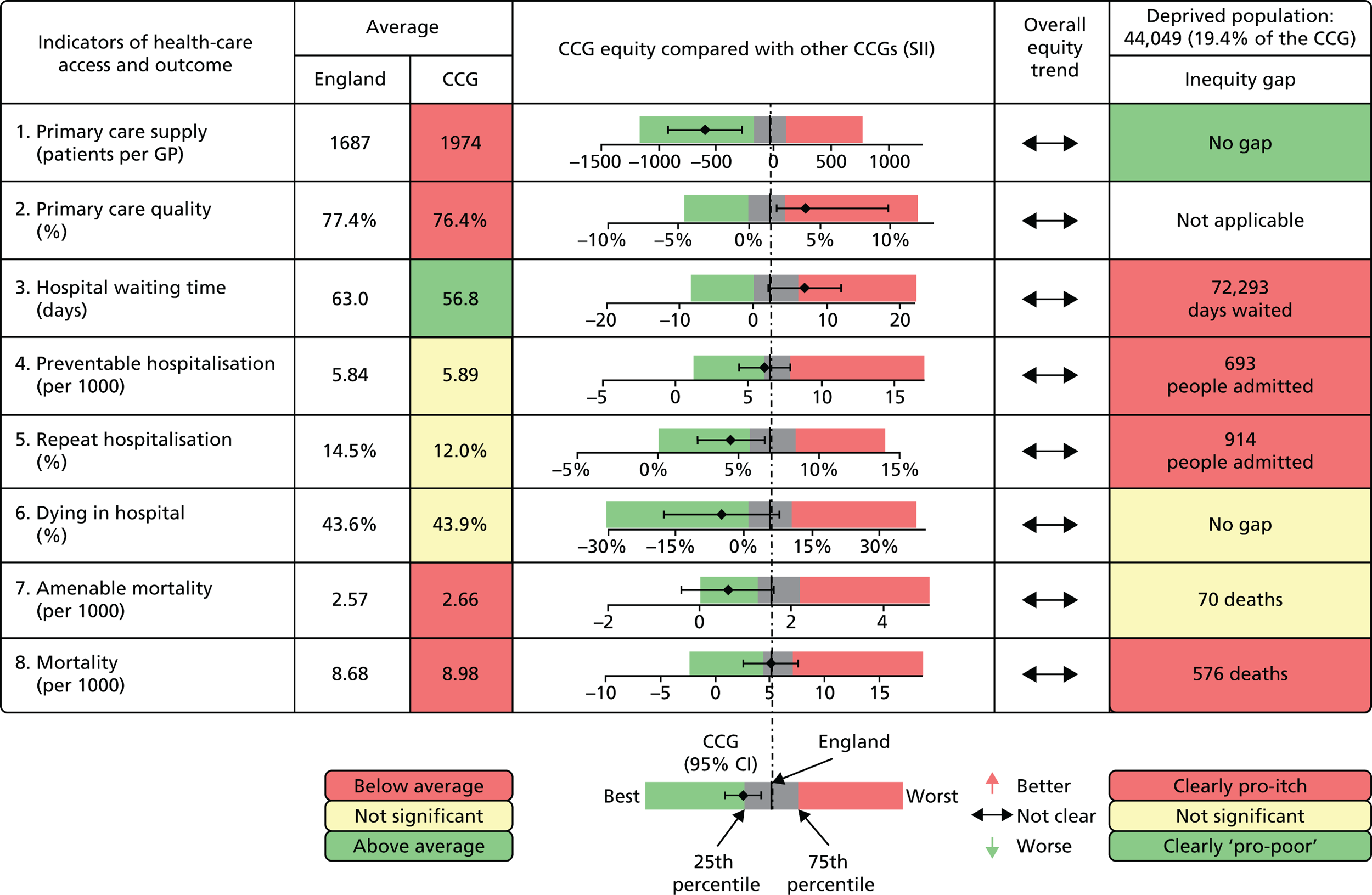

The main contributions of our study were as follows. First, we have developed the first indicators for local NHS equity monitoring against a national NHS equity benchmark, including new methods for national benchmarking as well as a new suite of indicators. Our approach has subsequently been adopted by NHS England in the Clinical Commissioning Group Improvement and Assessment Framework, starting with publication of an up-to-date version of one of our key local equity monitoring indicators: inequality in potentially avoidable emergency hospitalisation (NHS England 2016, 13 NHS Choices 2016, 14 University of York 2016 15). This aspect of our work was also cited by the independent think tank, The King’s Fund, as being a potentially useful way of incorporating equity into routine CCG performance monitoring by NHS England, in a report commissioned by the Department of Health,16 and the University College London Institute of Health Equity is discussing piloting the use of these local equity indicators to monitor progress in vanguard sites. Second, we have developed a more comprehensive suite of national NHS equity indicators than the inequalities breakdowns currently produced in the NHS Outcomes Framework, by including indicators of inequality in health-care access as well as health-care outcomes. Third, by producing our indicators from 2001/2 to 2011/12, we have provided the first comprehensive assessment of health-care equity trends during a key period of sustained effort by the NHS to reduce socioeconomic health inequalities through primary care strengthening. Finally, we have developed a comprehensive suite of visualisation tools for presenting and communicating our equity indicator findings to decision-makers. This includes a one-page ‘equity dashboard’ presenting summary information, automated ‘equity chart packs’ providing in-depth information underpinning the dashboard, and a web-based tool that allows users to create their own graphs.
Visualisation is an essential component of equity monitoring, because inequality is a complex concept and judgements about ‘fairness’, ‘justice’ or ‘equity’ often involve controversial value judgements about which reasonable people can disagree. A single ‘one-size-fits-all’ headline inequality measure can therefore be misleading. So, it is essential to show people the underlying inequality patterns and trends, to help them understand the meaning and importance of the trends, and draw their own conclusions about equity based on their own value judgements.

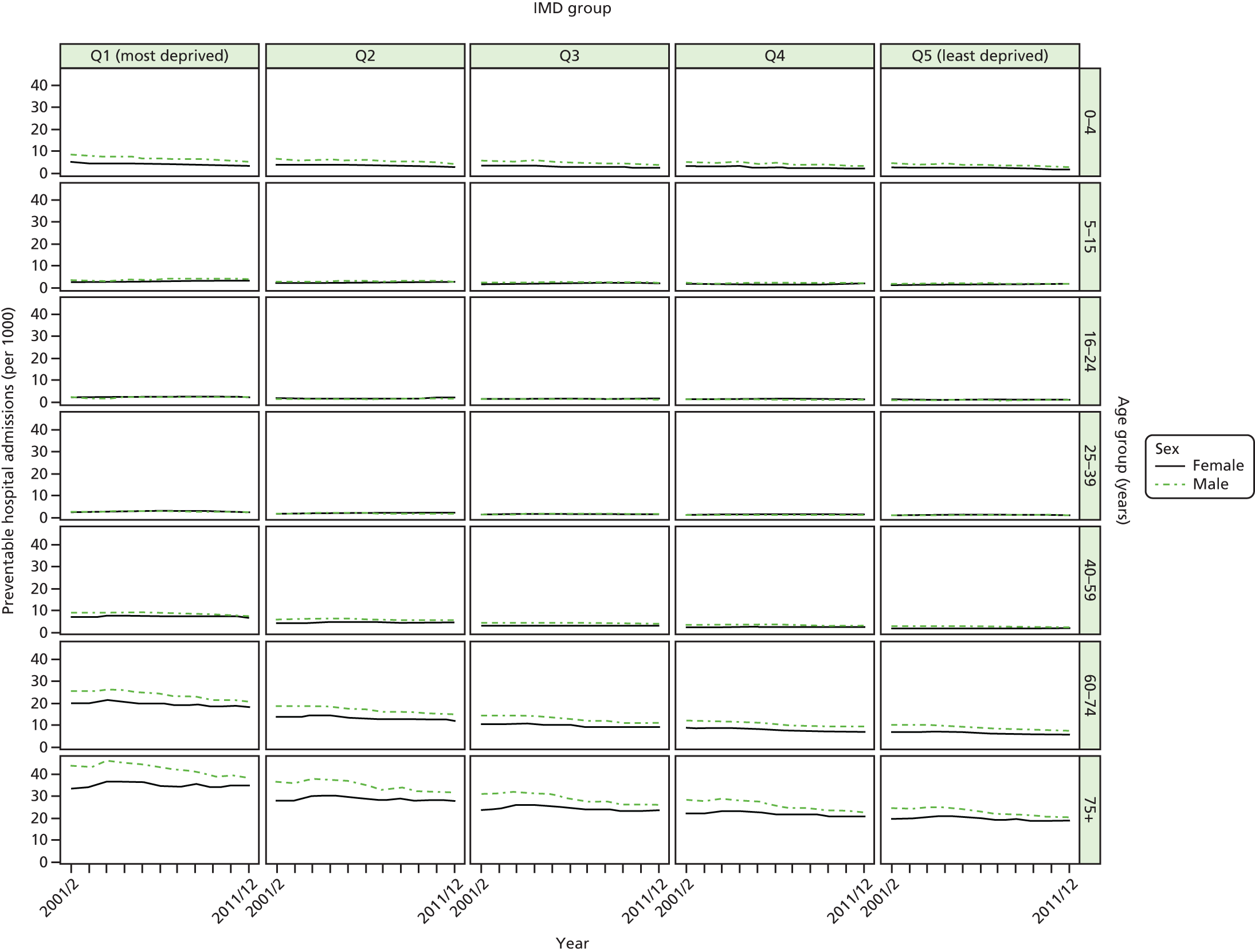

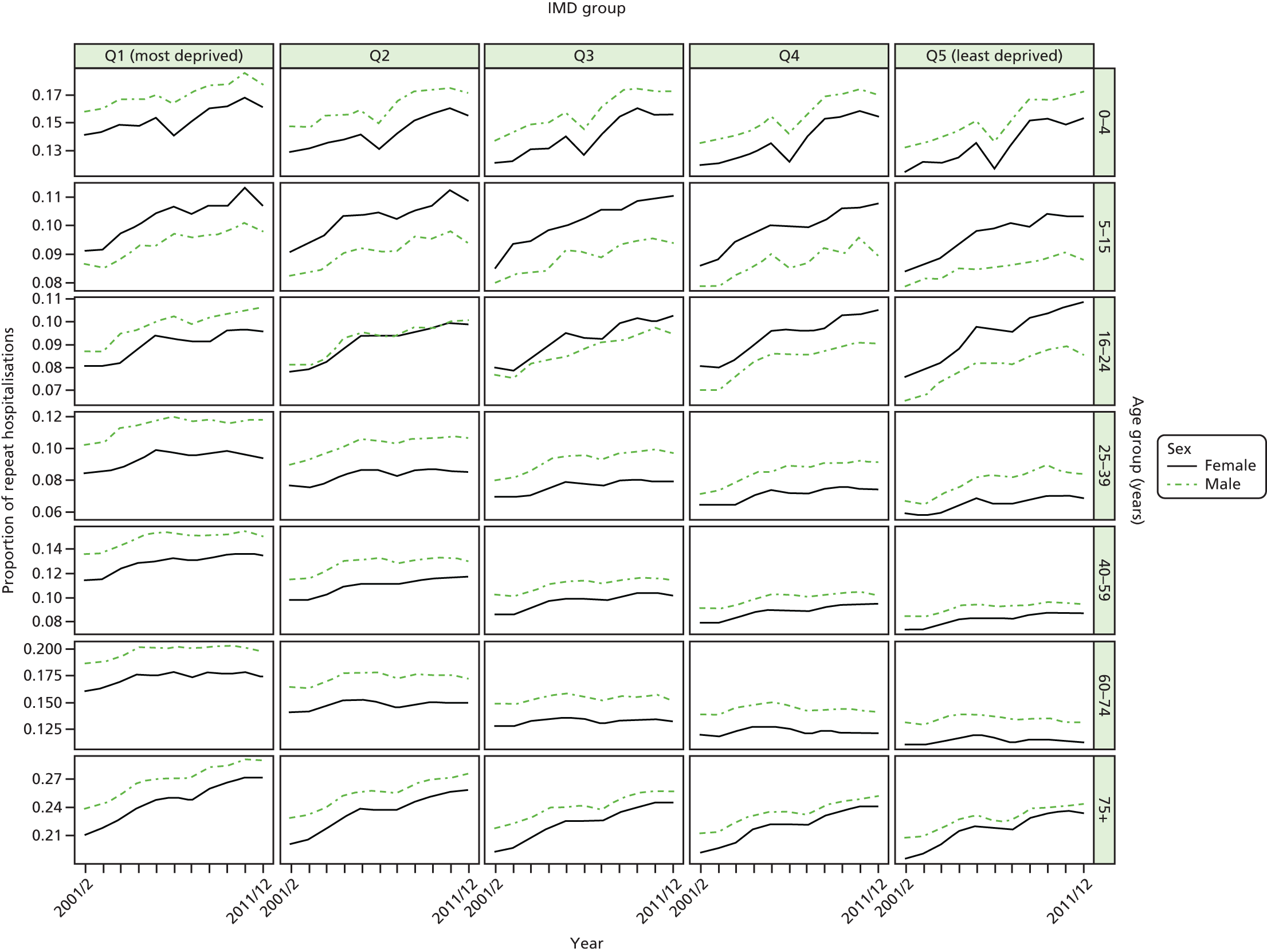

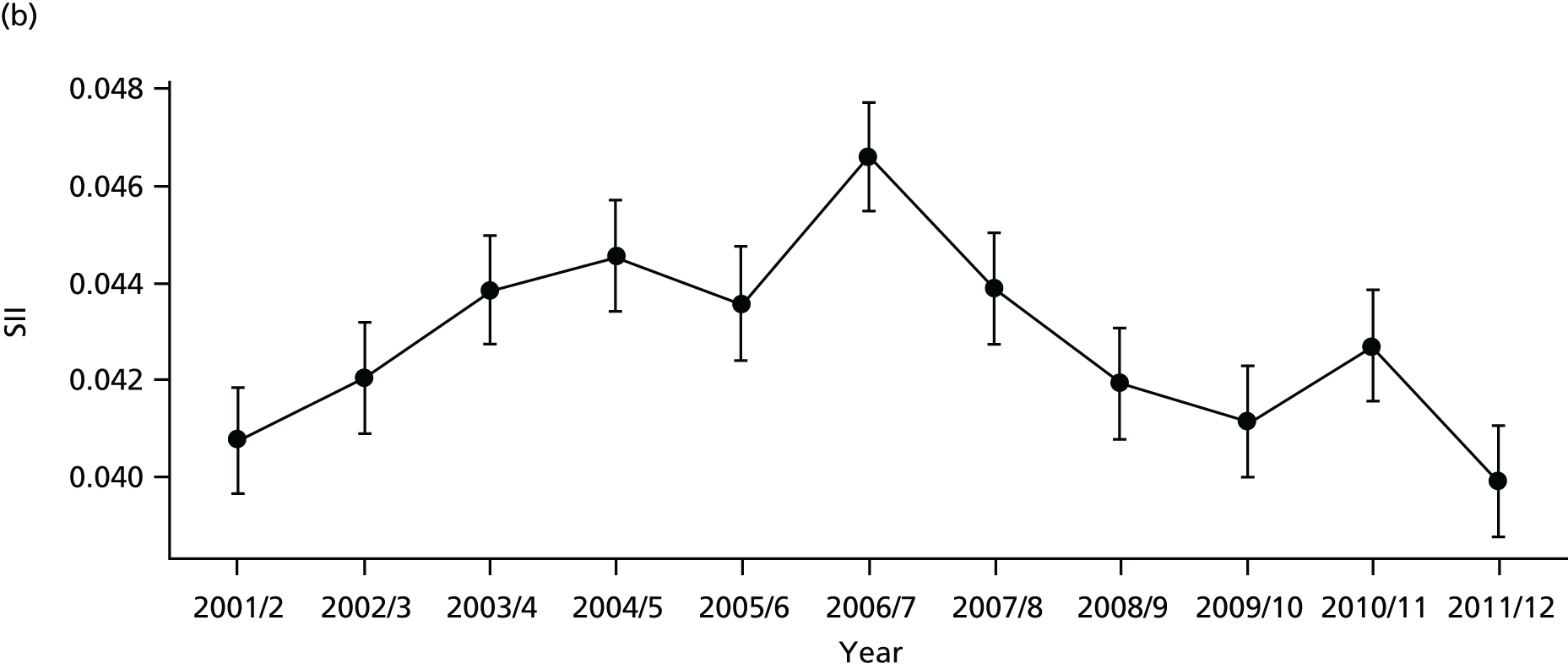

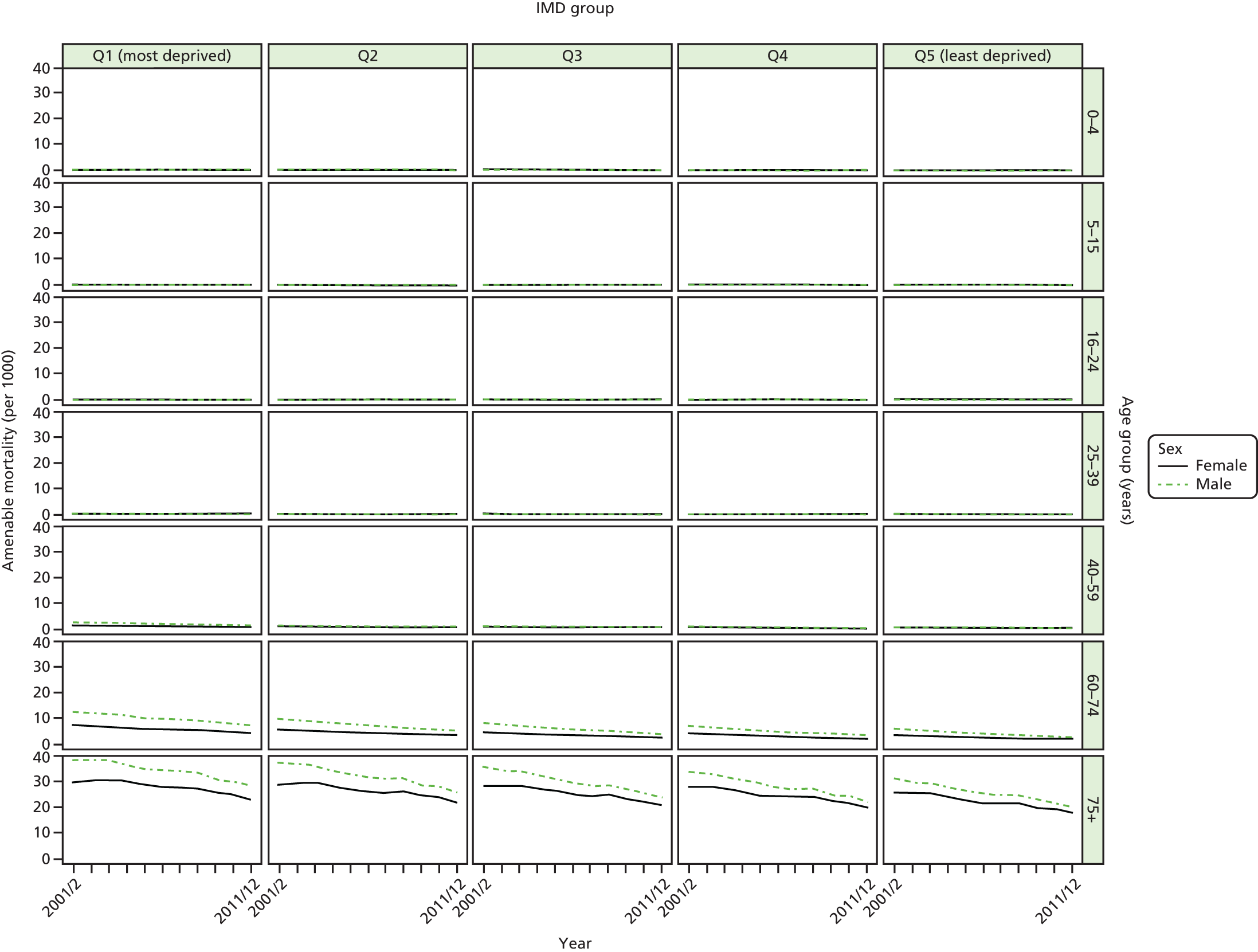

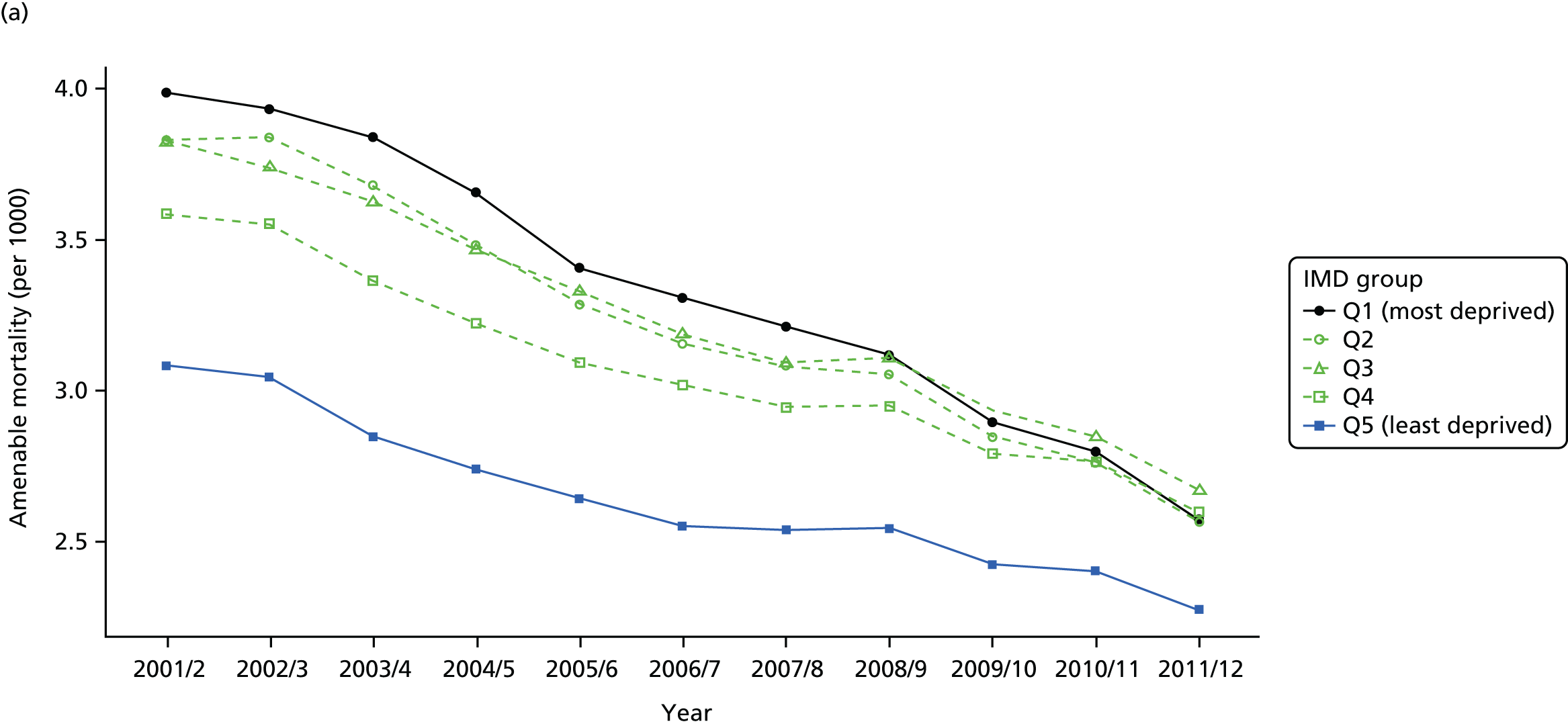

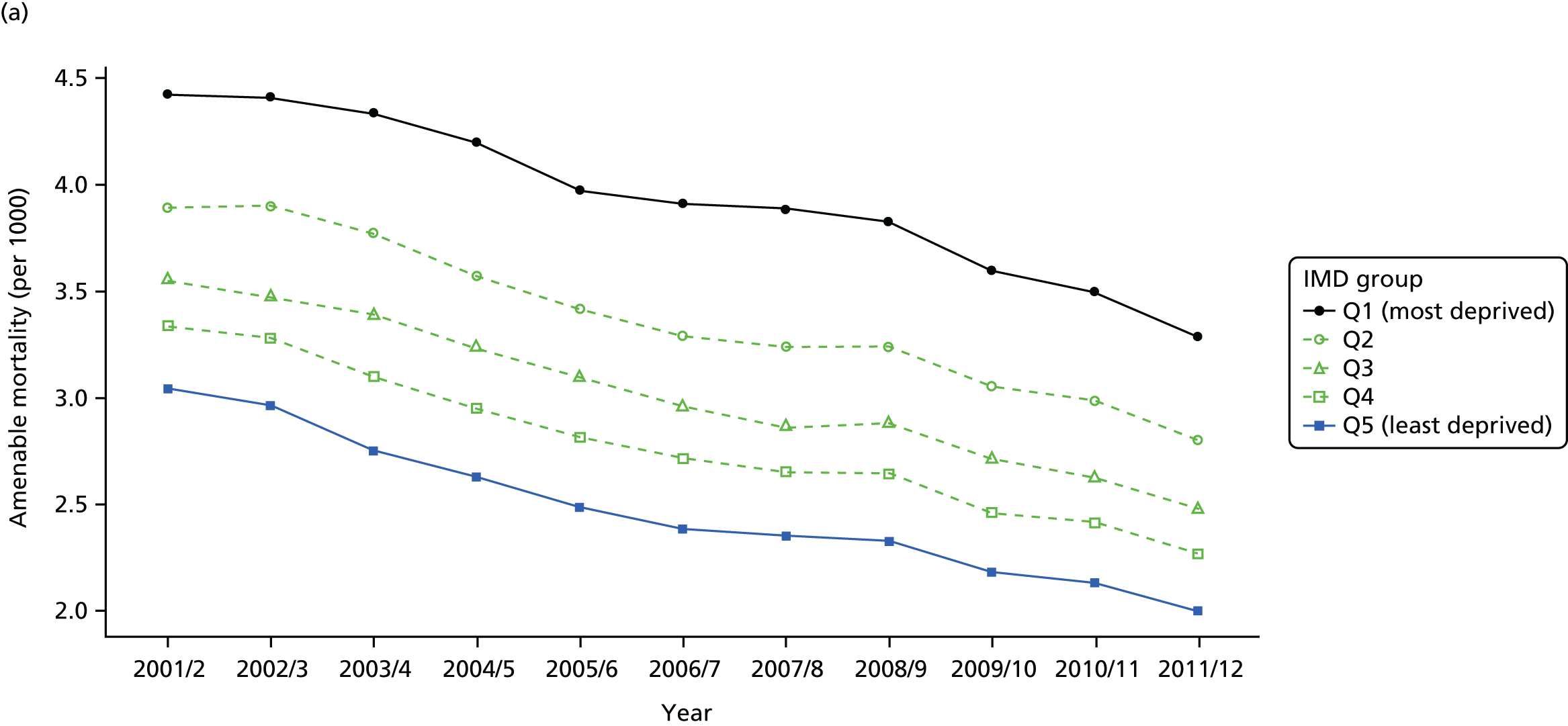

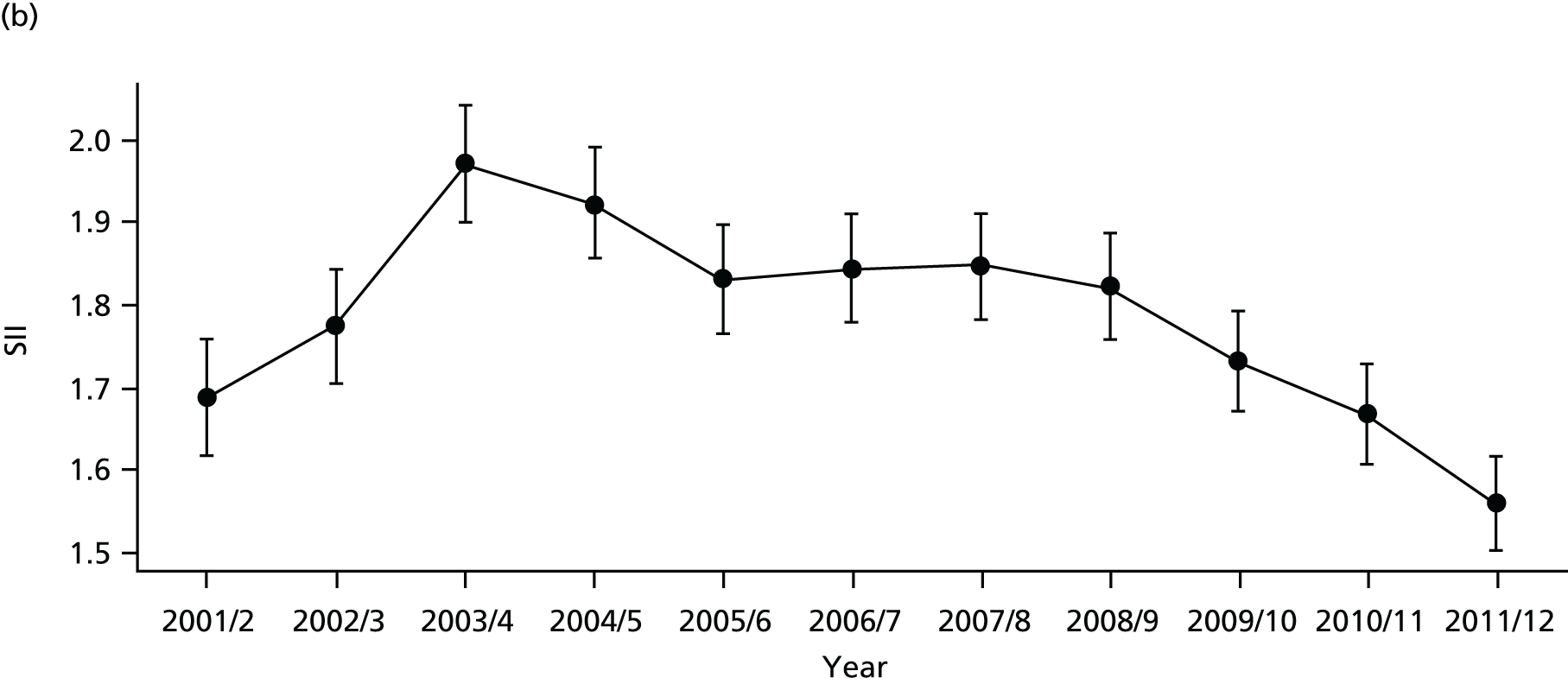

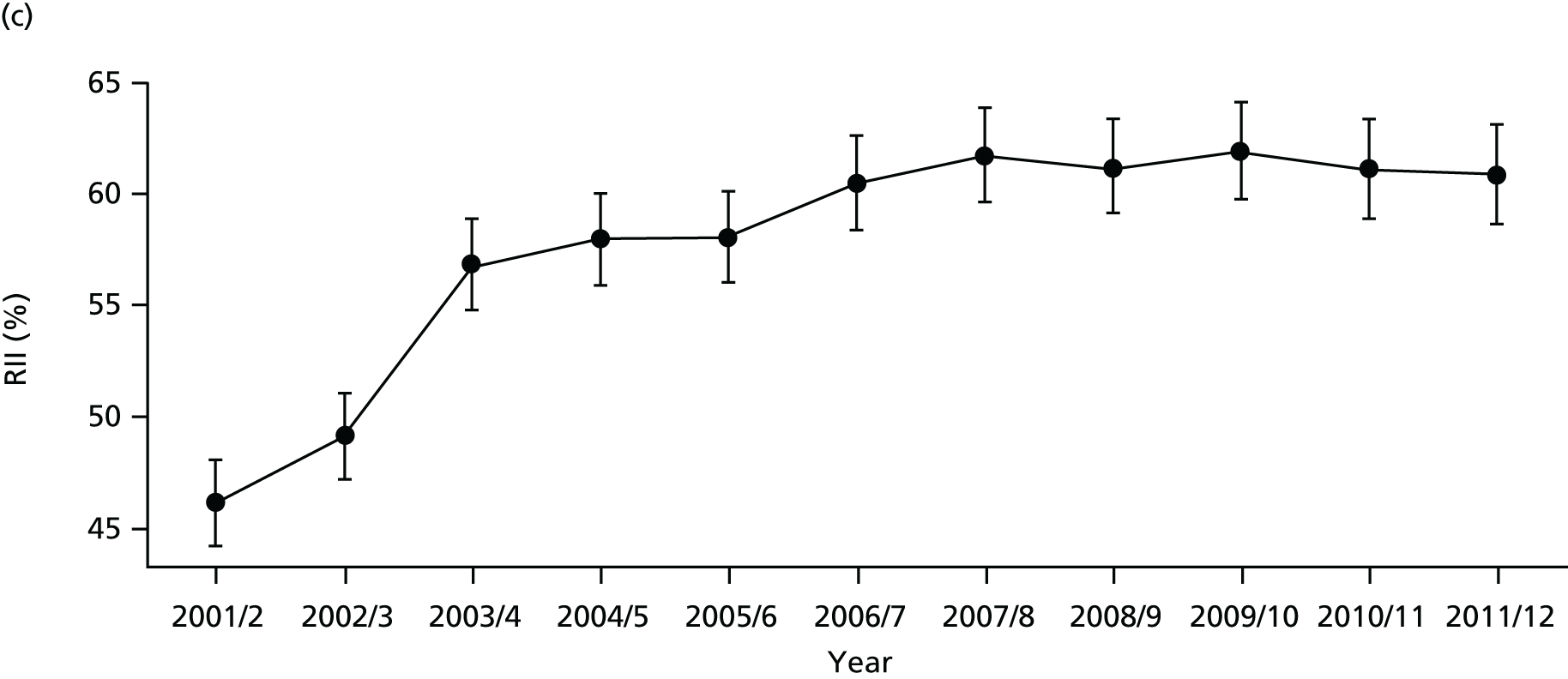

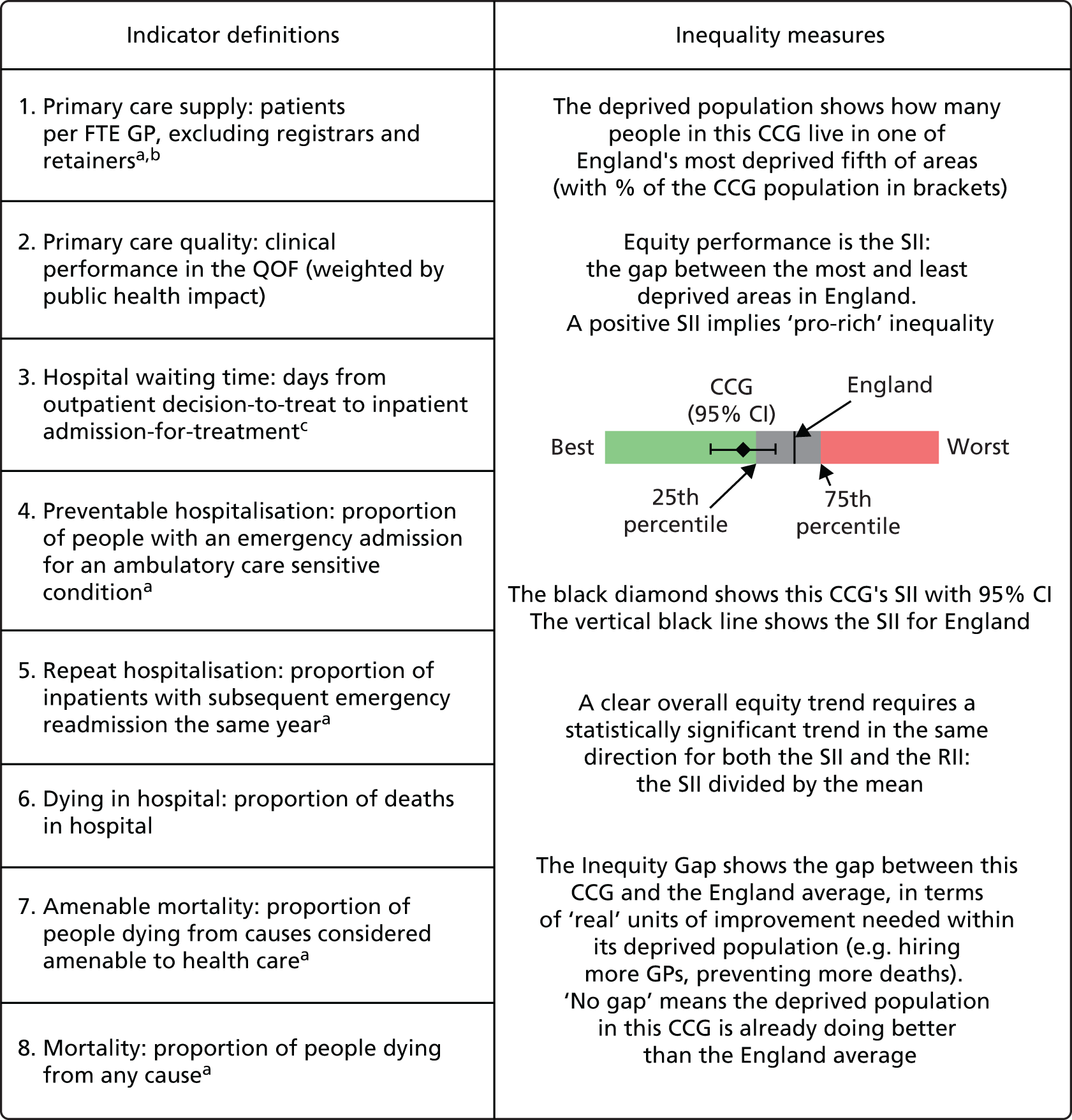

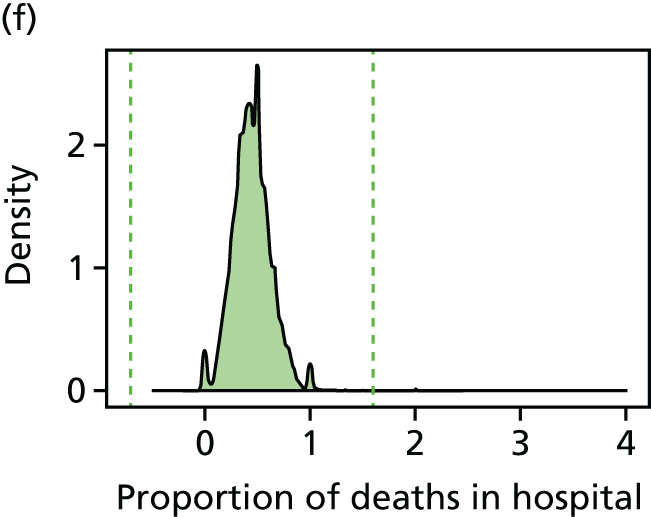

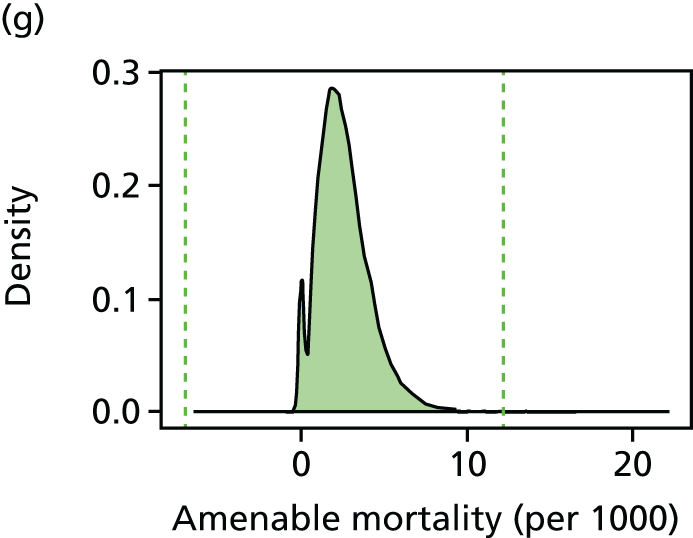

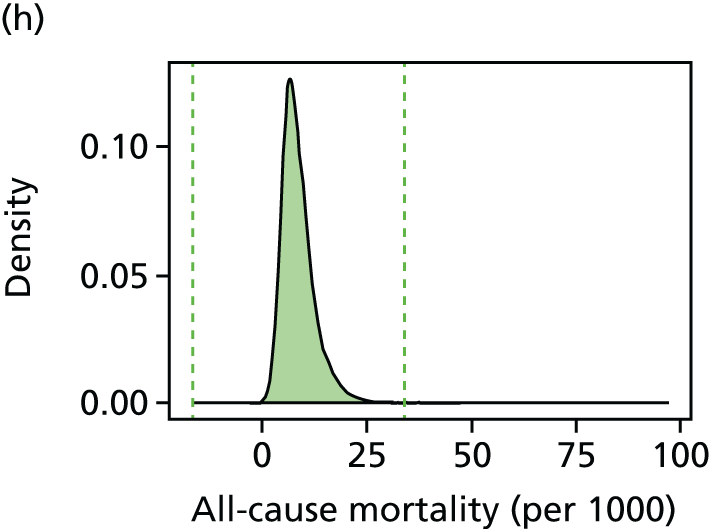

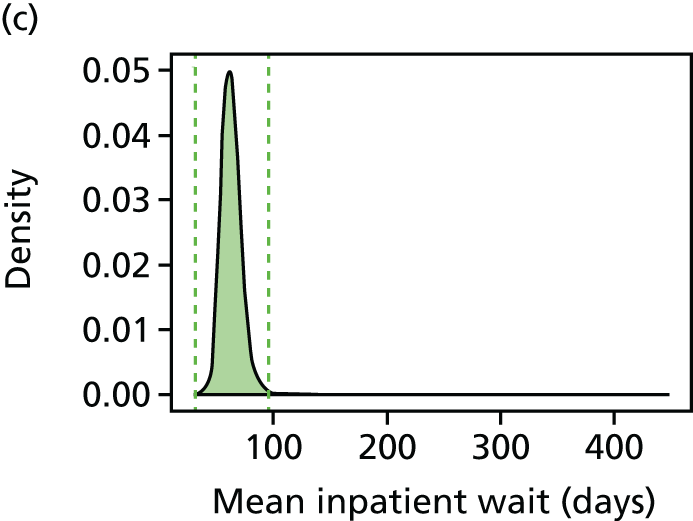

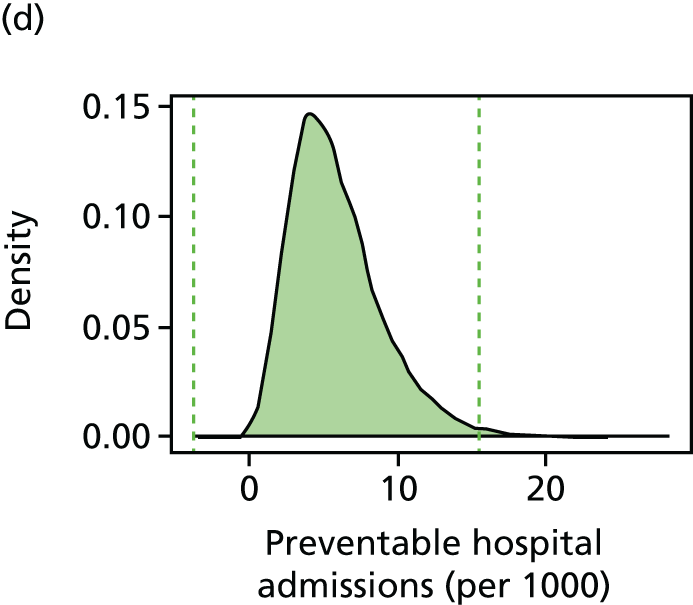

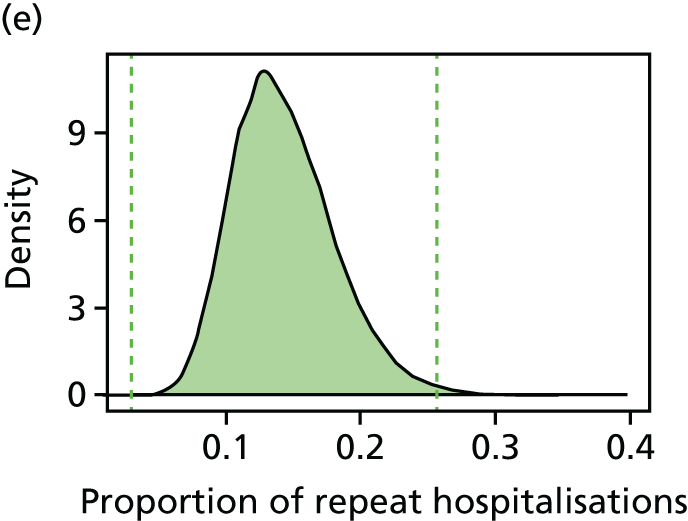

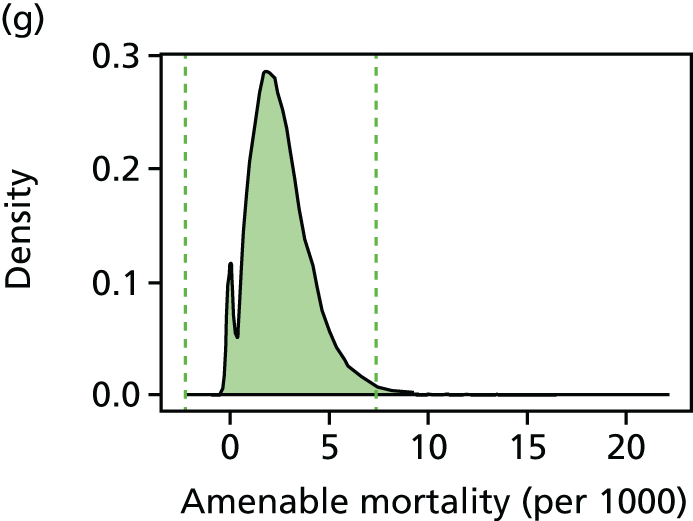

We have developed eight general indicators of health-care equity that examine socioeconomic inequalities in health-care access and outcomes at different stages of the patient pathway: (1) primary care supply, (2) primary care quality, (3) hospital waiting time, (4) preventable hospitalisation, (5) repeat hospitalisation, (6) dying in hospital, (7) amenable mortality and (8) overall mortality. We did not include general indicators of socioeconomic inequality in health-care utilisation, such as the total number of non-emergency inpatient or outpatient hospital visits, because when diverse health-care services are grouped together it is hard to tell whether more utilisation reflects better access to care, worse quality of care or worse health.

All eight of our general indicators are potentially suitable for national equity monitoring. However, we found that the last three indicators do not fully meet the more demanding data requirements for local equity monitoring. The main issue was that there are relatively few deaths in any given local CCG area in any given year, making it hard to tell from a statistical perspective whether or not observed differences in social gradients between different local areas are merely a result of the random play of chance. We recommend three indicators as a high priority for local equity monitoring against a national equity benchmark: primary care supply, primary care quality and preventable hospitalisation. Two other indicators could also be used for local equity monitoring: hospital waiting time and repeat hospitalisation. However, as explained in Chapters 8 and 9, these indicators may require further validation and refinement before being used for routine monitoring purposes.
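
The small-numbers problem described above can be illustrated with a minimal sketch. It is not the method used to produce the indicators in this report: it applies the standard large-sample approximation for the confidence interval of a rate ratio, and all counts, population sizes and the function name `rate_ratio_ci` are hypothetical values chosen purely for illustration.

```python
import math


def rate_ratio_ci(events_a, pop_a, events_b, pop_b, z=1.96):
    """Rate ratio of group A to group B with an approximate 95% interval.

    Uses the common large-sample approximation
    SE[log(rate ratio)] = sqrt(1/events_a + 1/events_b).
    """
    ratio = (events_a / pop_a) / (events_b / pop_b)
    se_log = math.sqrt(1 / events_a + 1 / events_b)
    lower = ratio * math.exp(-z * se_log)
    upper = ratio * math.exp(z * se_log)
    return ratio, lower, upper


# Hypothetical deaths in the most deprived (A) and least deprived (B) groups.
# The underlying rate ratio is 1.5 in both cases; only the scale differs.
local = rate_ratio_ci(30, 50_000, 20, 50_000)
national = rate_ratio_ci(30_000, 50_000_000, 20_000, 50_000_000)

for label, (ratio, lower, upper) in (("local", local), ("national", national)):
    print(f"{label}: ratio {ratio:.2f}, interval {lower:.2f} to {upper:.2f}")
```

With these made-up counts the local interval spans 1 (no detectable gradient) even though the point estimate matches the national one, which is the sense in which observed local differences may reflect the play of chance.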

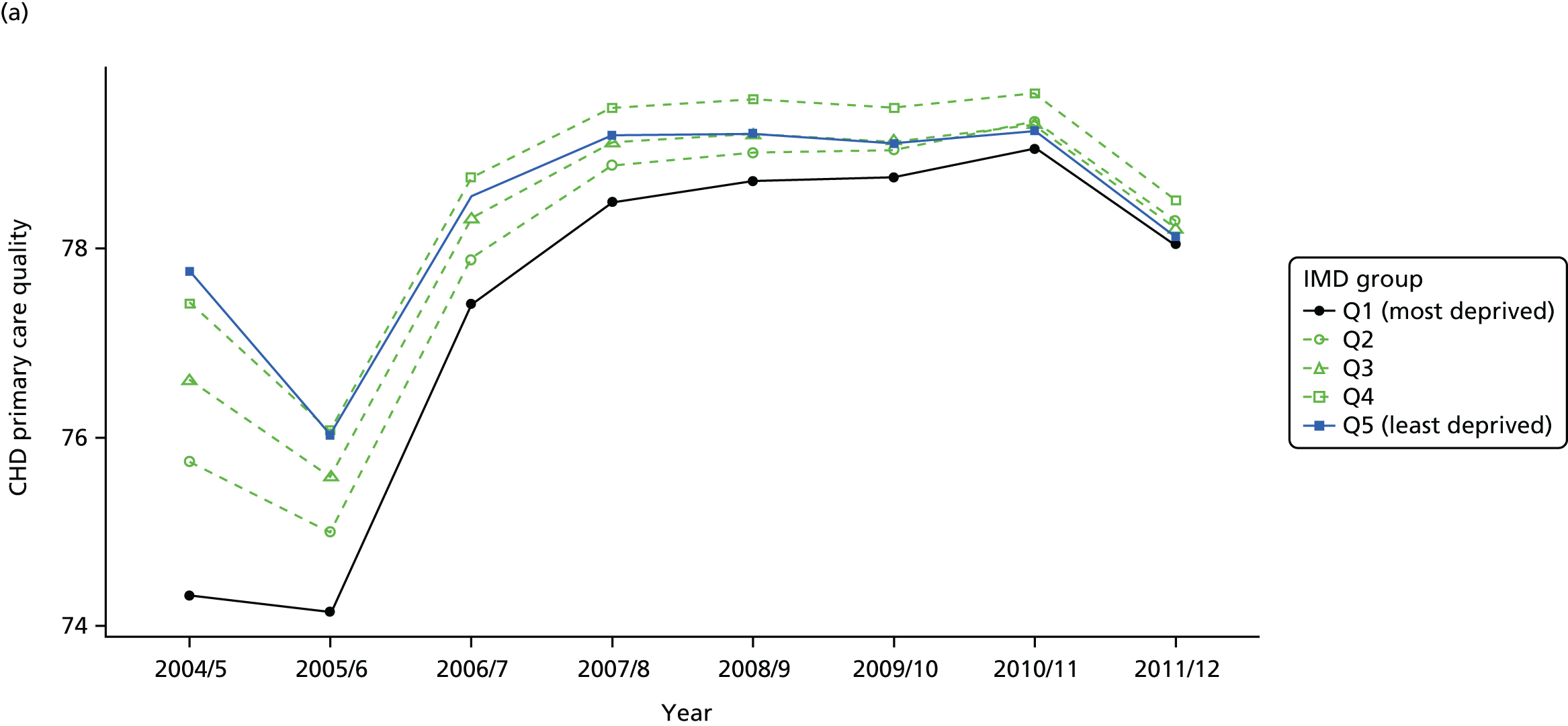

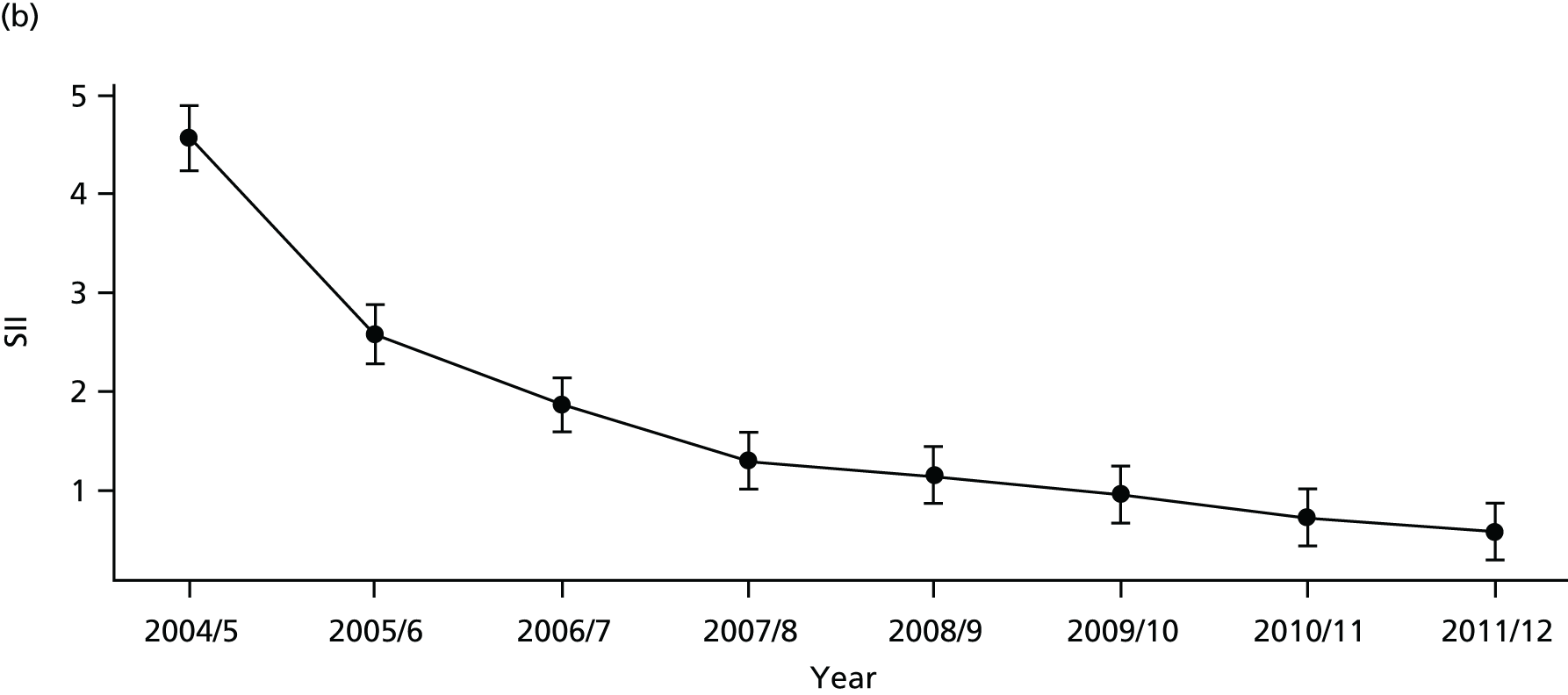

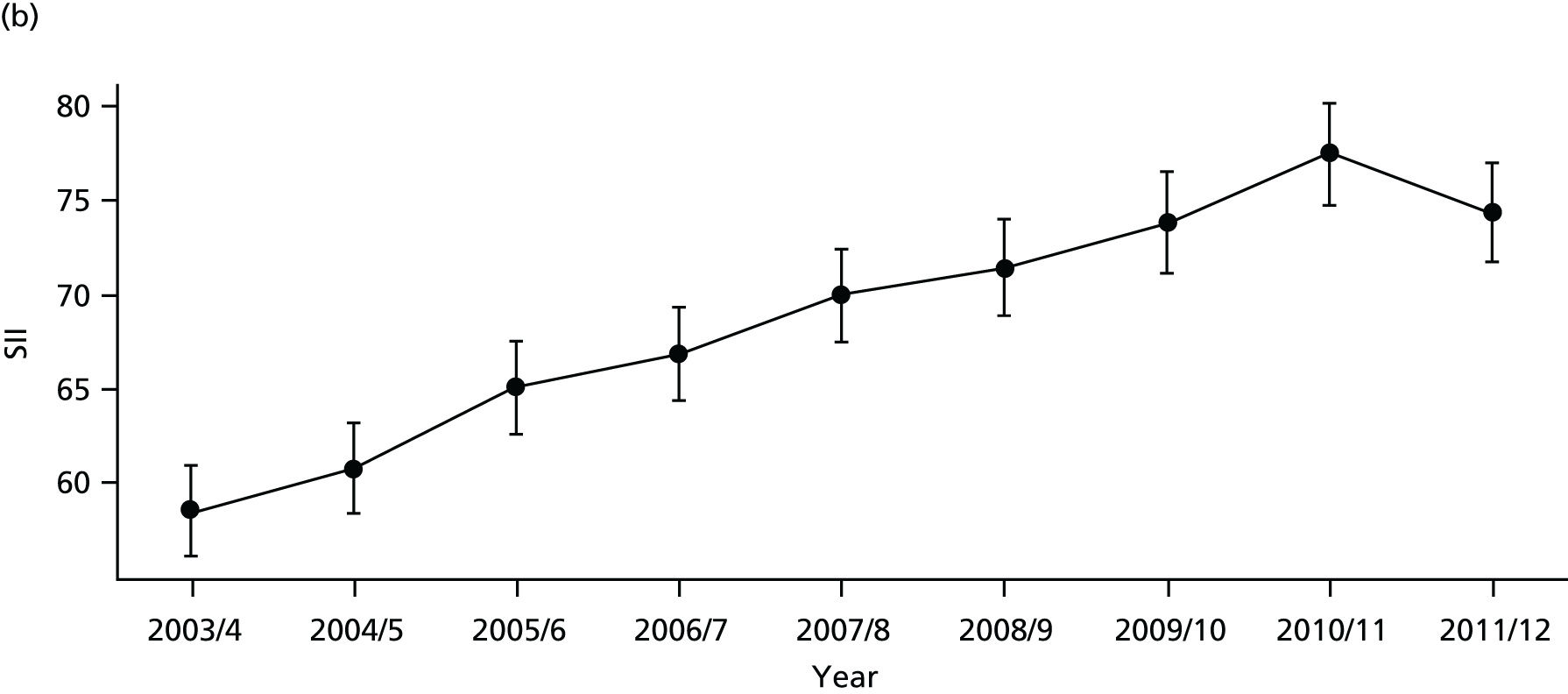

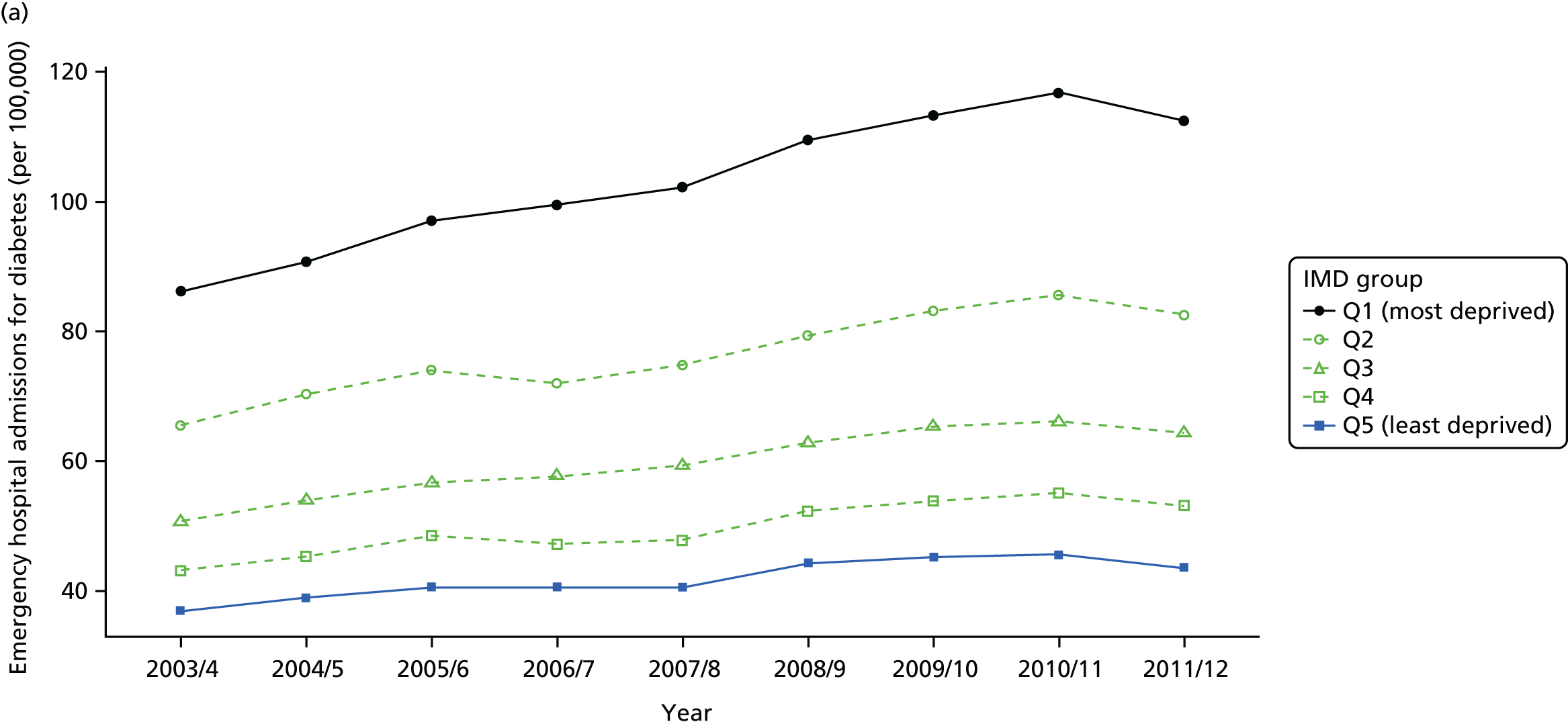

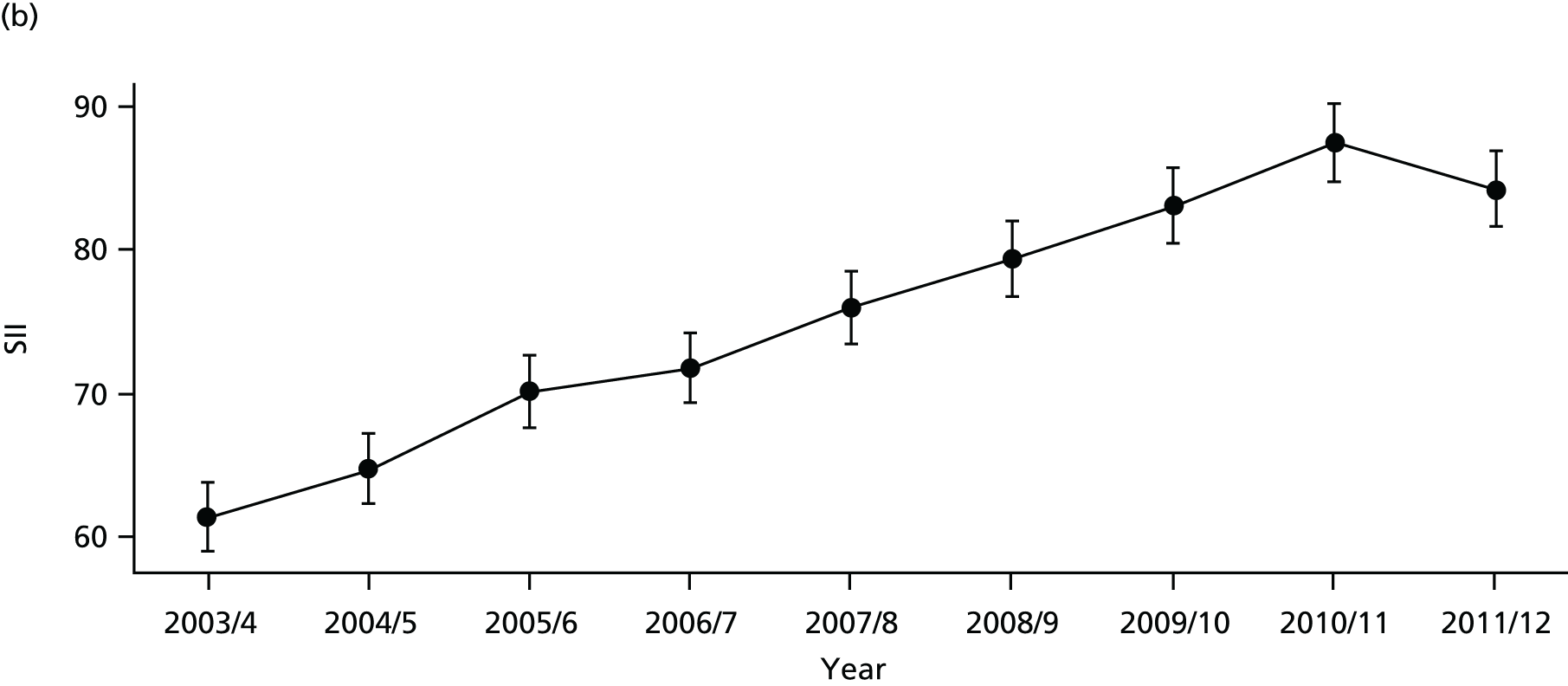

Our general indicators measure socioeconomic inequality across the full range of health-care activity, rather than focusing on one particular condition. General indicators can be used for local monitoring against a national benchmark, whereas at the present time disease-specific indicators can only be used for national equity monitoring of health-care outcomes. This is because the kinds of health-care outcomes we can currently measure on a comprehensive national basis involve rare events, for example hospitalisations or deaths. This is not problematic when we examine the total number of events across all disease areas, which can add up to a large number. But when we focus on one specific disease, the numbers become too small to detect statistically significant differences between local inequality and the national inequality benchmark. However, to illustrate the potential use of disease-specific indicators at a national level we have developed national disease-specific indicators of equity in the areas of coronary heart disease (CHD) and diabetes, which are presented in Appendices 1 and 2.

Our indicators can be used to assess the degree to which health-care equity in England is getting better or worse over time. They can also be used to identify local NHS areas that are performing better or worse than the national NHS average in reducing within-area socioeconomic inequalities in health-care access and outcomes. This information can be used to facilitate health-care quality improvement efforts, to understand why some areas are doing well or badly, to learn lessons, and to share good practice.

However, we would caution against using our equity indicators for setting performance targets with rewards or penalties attached, at least until further experience and understanding of equity monitoring has been built up. The Health and Social Care Act 2012 3 suggests the use of financial payments to reward CCGs that succeed in reducing inequalities, as one factor to be taken into account when making end-of-year payments to CCGs to reward quality. Specifically, section 223K of the Act, entitled ‘Payments in respect of quality’, states that NHS England:

may, after the end of a financial year, make a payment to a clinical commissioning group . . . For that purpose, the Board may also take into account either or both of the following factors – (a) relevant inequalities identified during that year; (b) any reduction in relevant inequalities identified during that year (in comparison to relevant inequalities identified during previous financial years).

Health and Social Care Act 2012. 3 Contains public sector information licensed under the Open Government Licence v3.0

The process of paying CCGs for quality was subsequently implemented in a process known as the ‘quality premium’, although so far health inequality has not been incorporated into this process (www.england.nhs.uk/resources/resources-for-ccgs/ccg-out-tool/ccg-ois/qual-prem/; accessed 12 July 2015). We would caution against too ambitious a timescale for incorporating our CCG-level equity indicators into decisions on this process for two reasons. The first reason is that health-care equity monitoring is still in its infancy and is less well developed than the monitoring of health-care quality for the average patient. For example, health-care decision-makers have a reasonably good idea about how to reduce average hospital waiting times, supported by a strong evidence base from decades of international policy experimentation, monitoring and evaluation. By contrast, rather less is known about how to reduce socioeconomic inequality in hospital waiting times or other forms of health-care access and outcome. The second reason is that the causal links between policy action and health-care outcome are more complex, delayed and uncertain for some of the health-care outcomes we measure, such as preventable hospitalisation and amenable mortality, compared with health-care outcomes traditionally used for performance management, such as rates of antibiotic-resistant bloodstream infections in hospitals. This can make it hard to attribute change in inequality in these outcomes straightforwardly to recent actions taken by CCG managers or the services they commission. Given the current state of knowledge, therefore, the most appropriate initial uses of our indicators are (1) to hold the NHS to account, and (2) to improve quality by helping decision-makers learn how to reduce social gradients in health care and by helping researchers build a stronger evidence base, rather than (3) to set high-powered financial and managerial incentives.

Throughout the study the research team was guided by an advisory group including academic and clinical experts, NHS and public health officials, and lay members, whose membership is listed in Appendix 3. All key decisions around indicator selection and the development of analytical methods and visualisation tools were taken in consultation with the advisory group. The team is grateful for its advice and support, although the responsibility for all decisions rests with the research team.

The next two sections of this introductory chapter set out the background to this study and present the conceptual framework we developed for monitoring equity in health care. Chapter 2 of the report describes how members of the public were involved in selecting our indicators and designing our visualisation tools, through a public consultation exercise in York based on an online survey and a citizens’ panel meeting, and through the participation of the two lay members of our advisory group. Chapter 3 describes the indicator selection process, which included reviewing existing indicators used by the NHS to monitor health-care quality, consulting health indicator experts about technical feasibility and consulting NHS and public health experts about policy relevance. Chapter 4 describes the data and analytical methods used for health-care equity indicator production and visualisation at both national and local levels. Chapter 5 presents the main results for all eight of our general indicators, including national health-care equity in 2011/12, national health-care equity time trends during the 2000s and local health-care equity monitoring in 2011/12 against a national benchmark. Chapter 6 describes the NHS engagement process we undertook to develop and refine our visualisation tools. Chapter 7 presents our prototype ‘equity dashboards’. Finally, Chapter 8 discusses our findings and Chapter 9 summarises our conclusions and research recommendations.

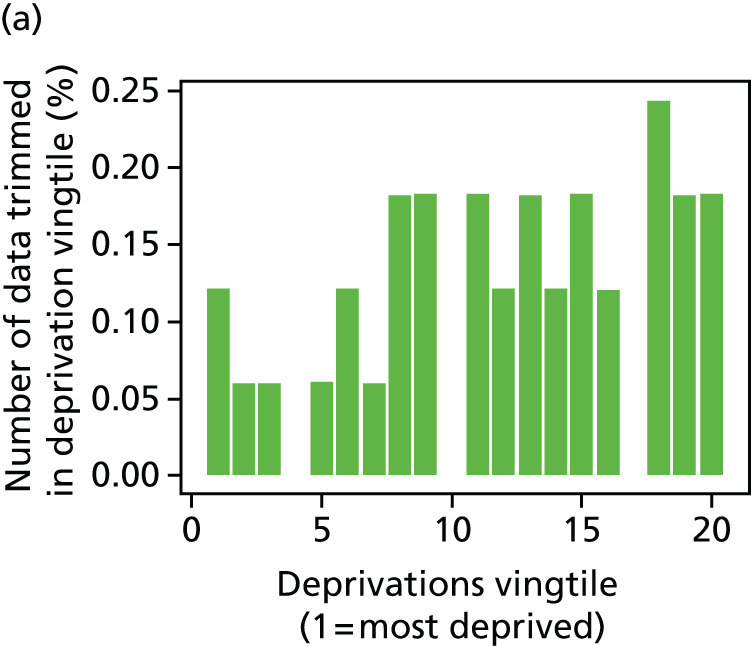

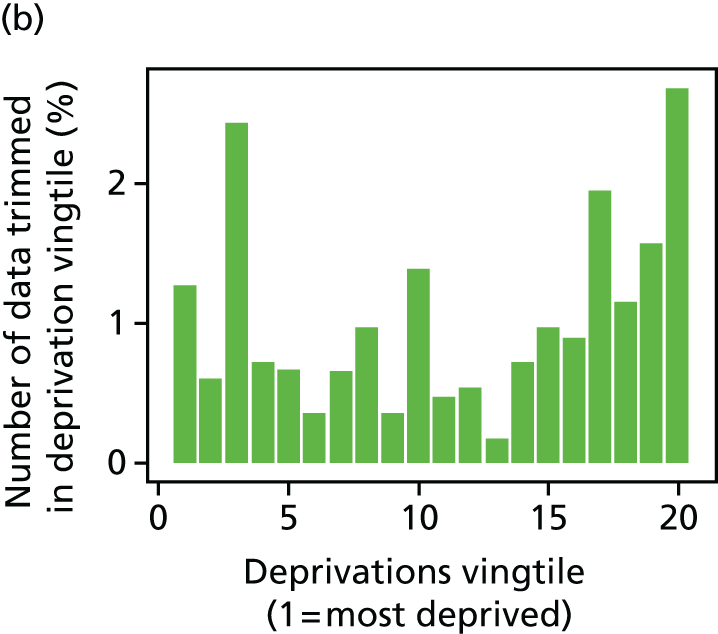

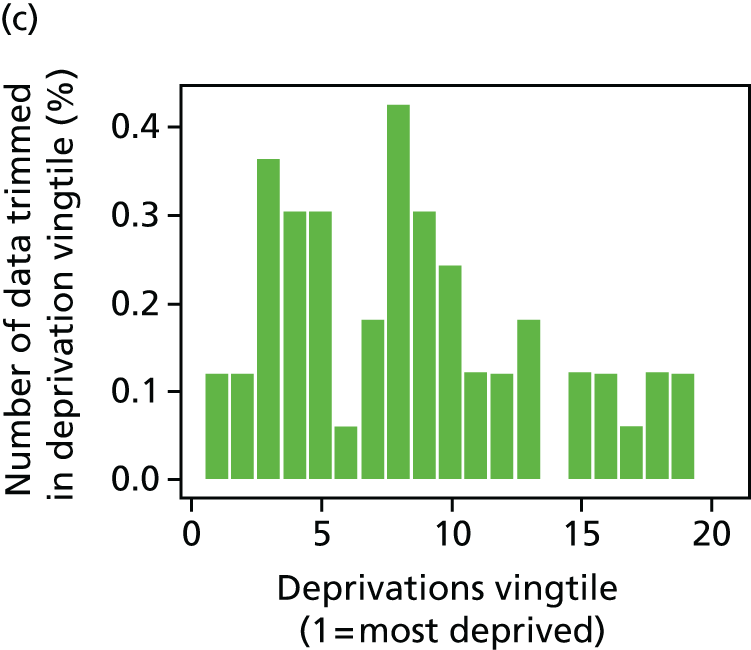

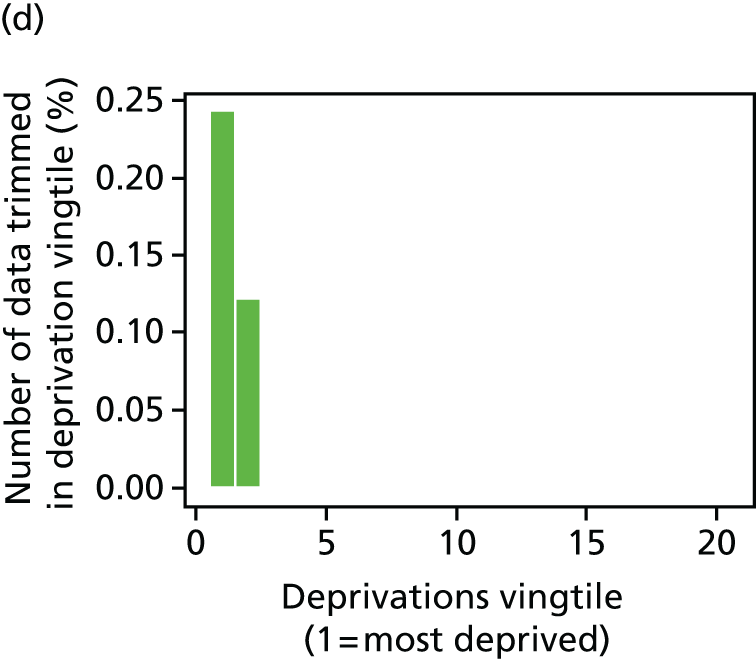

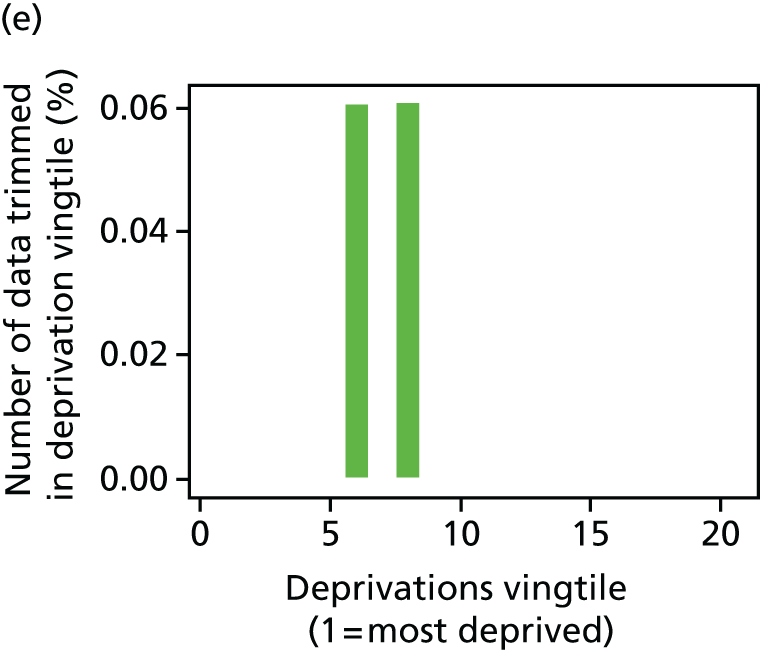

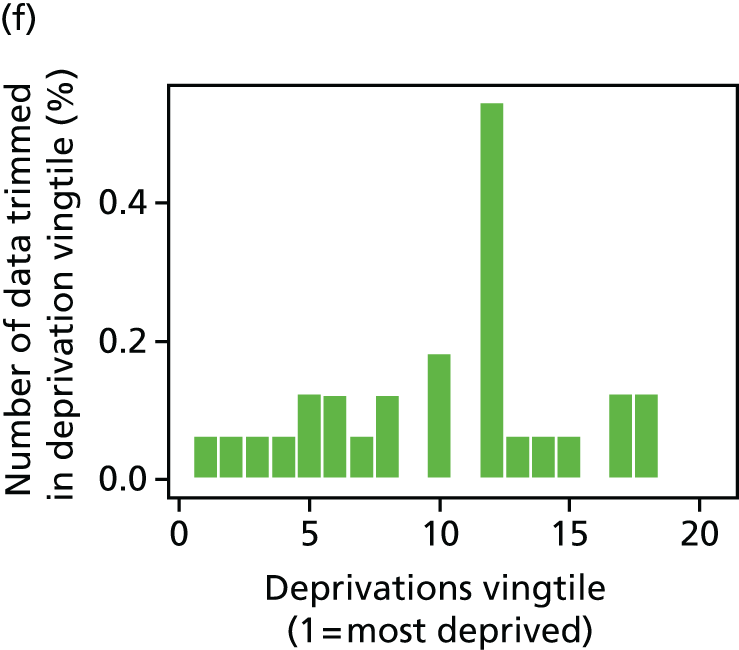

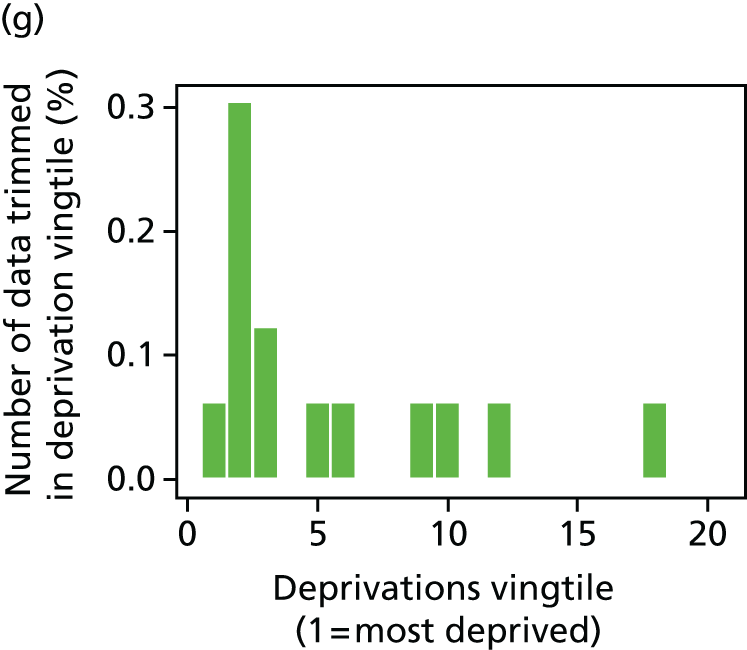

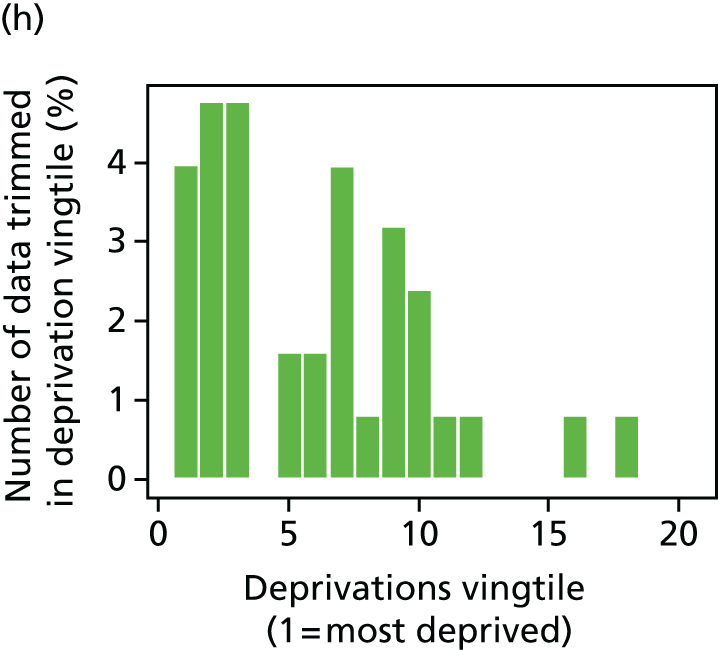

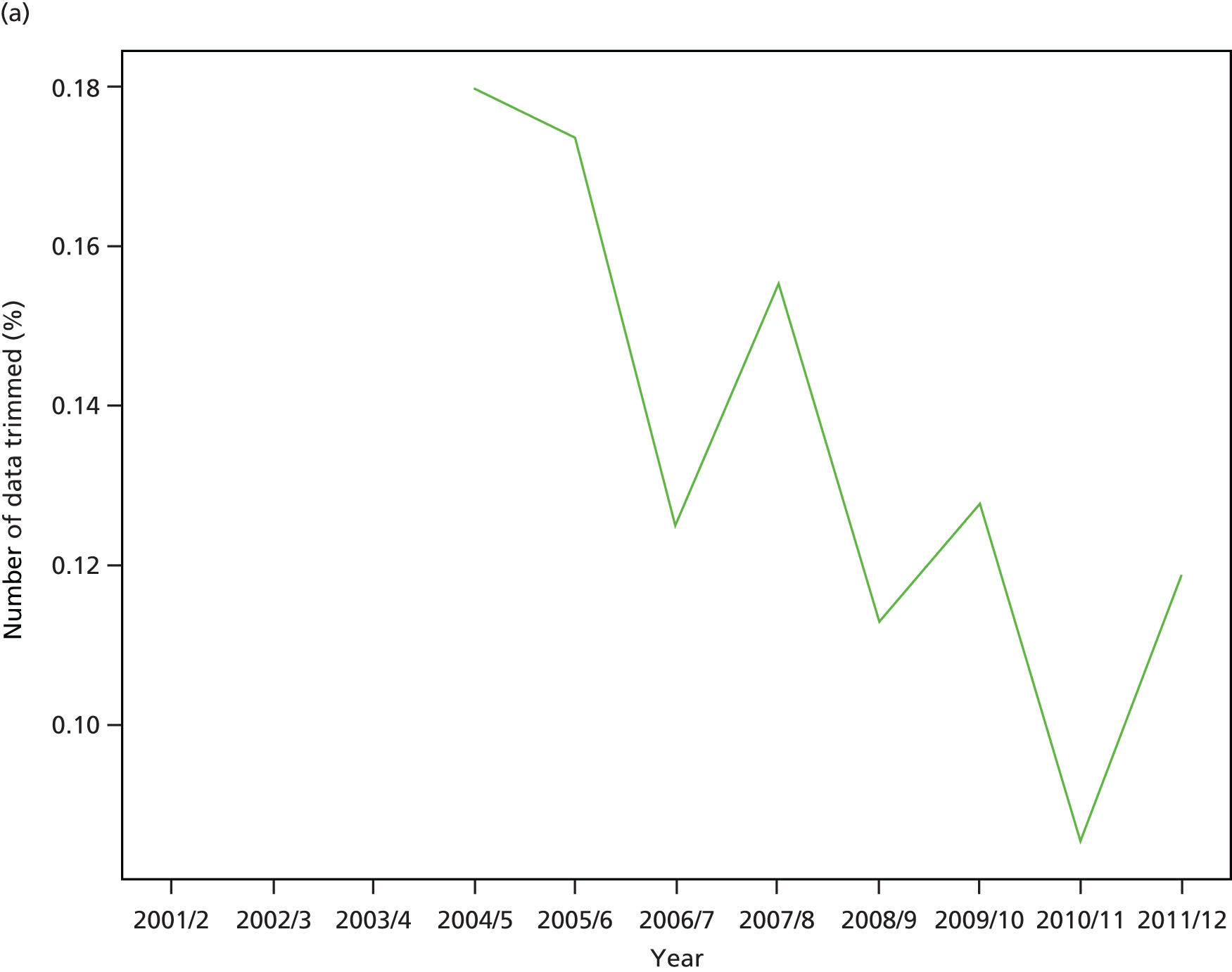

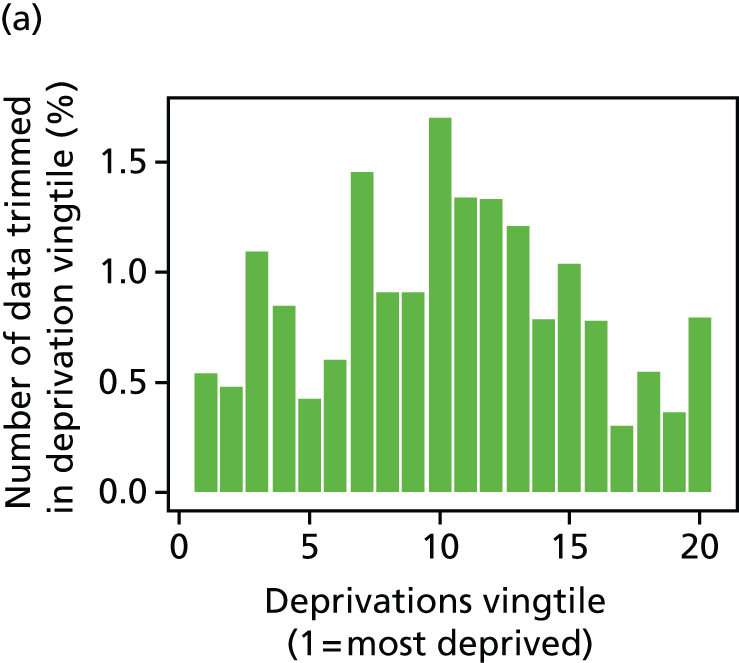

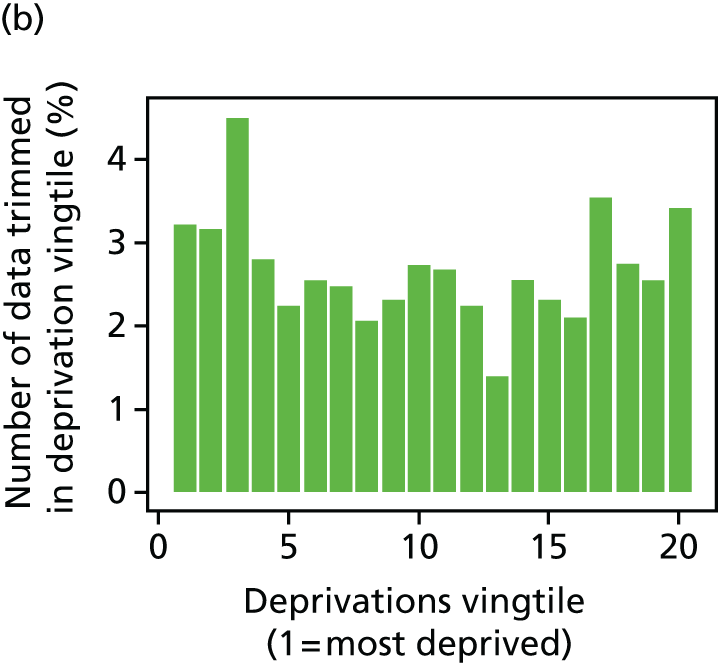

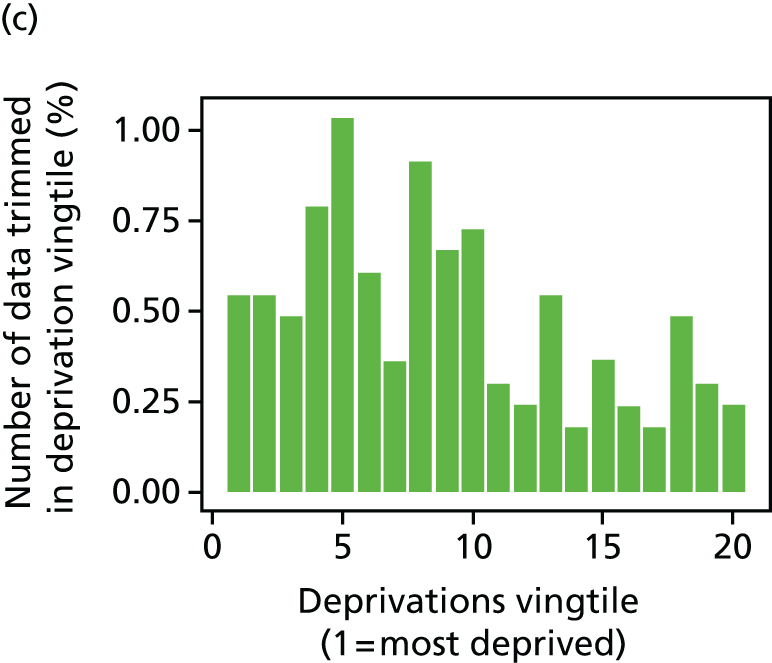

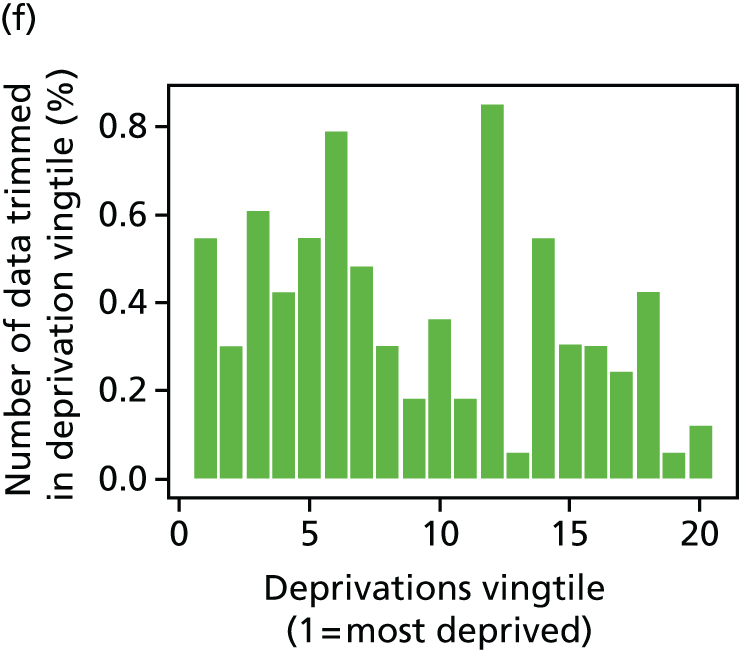

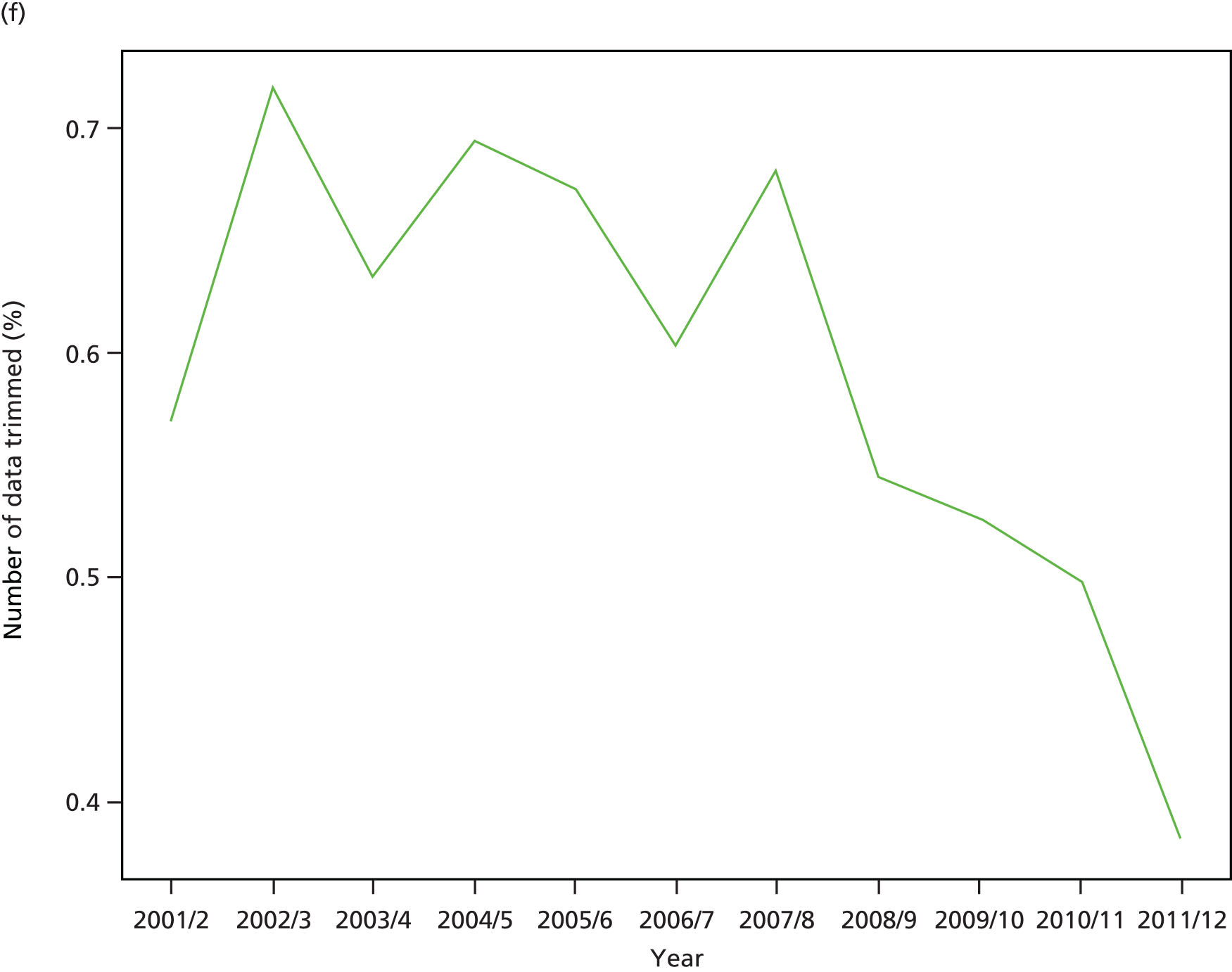

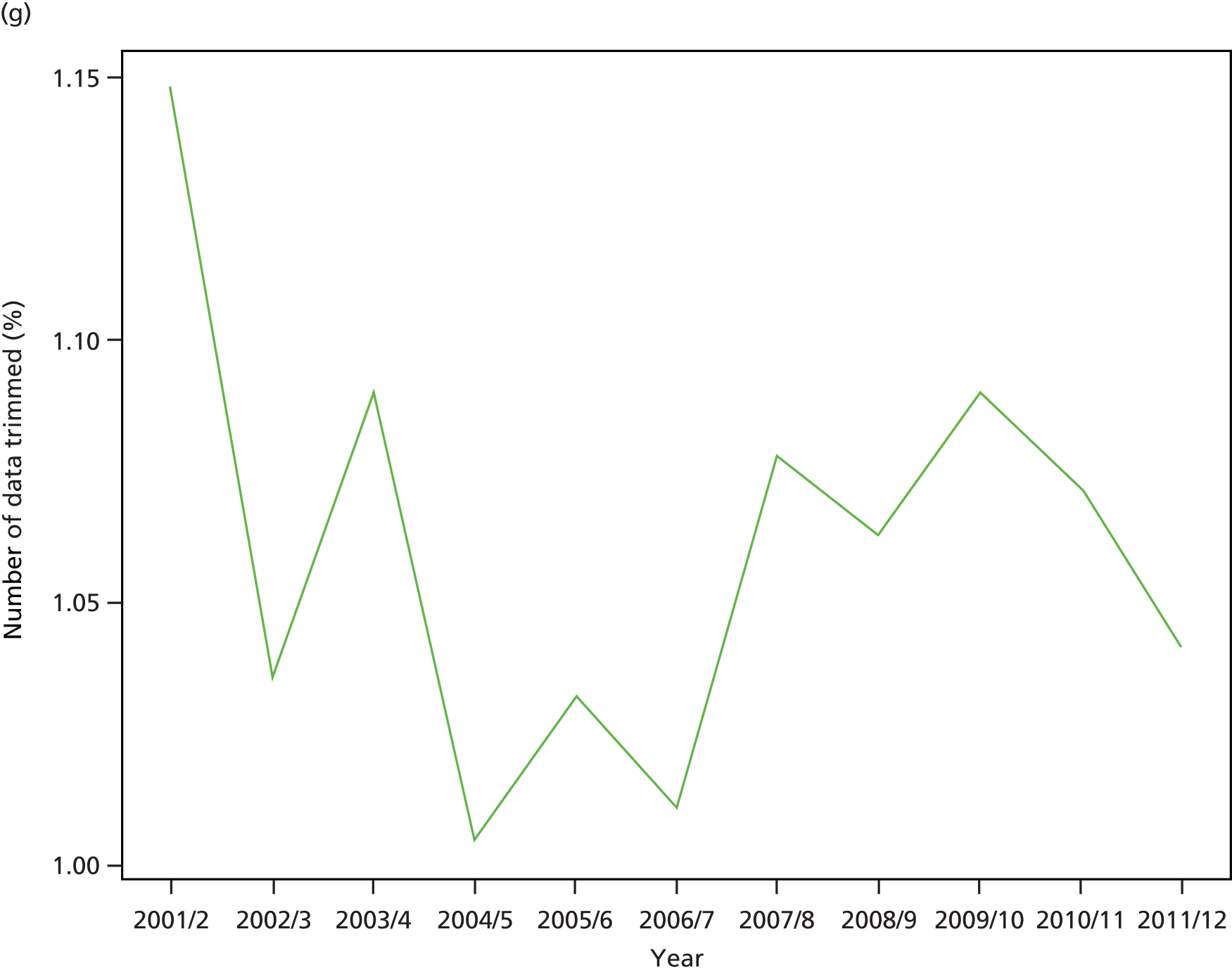

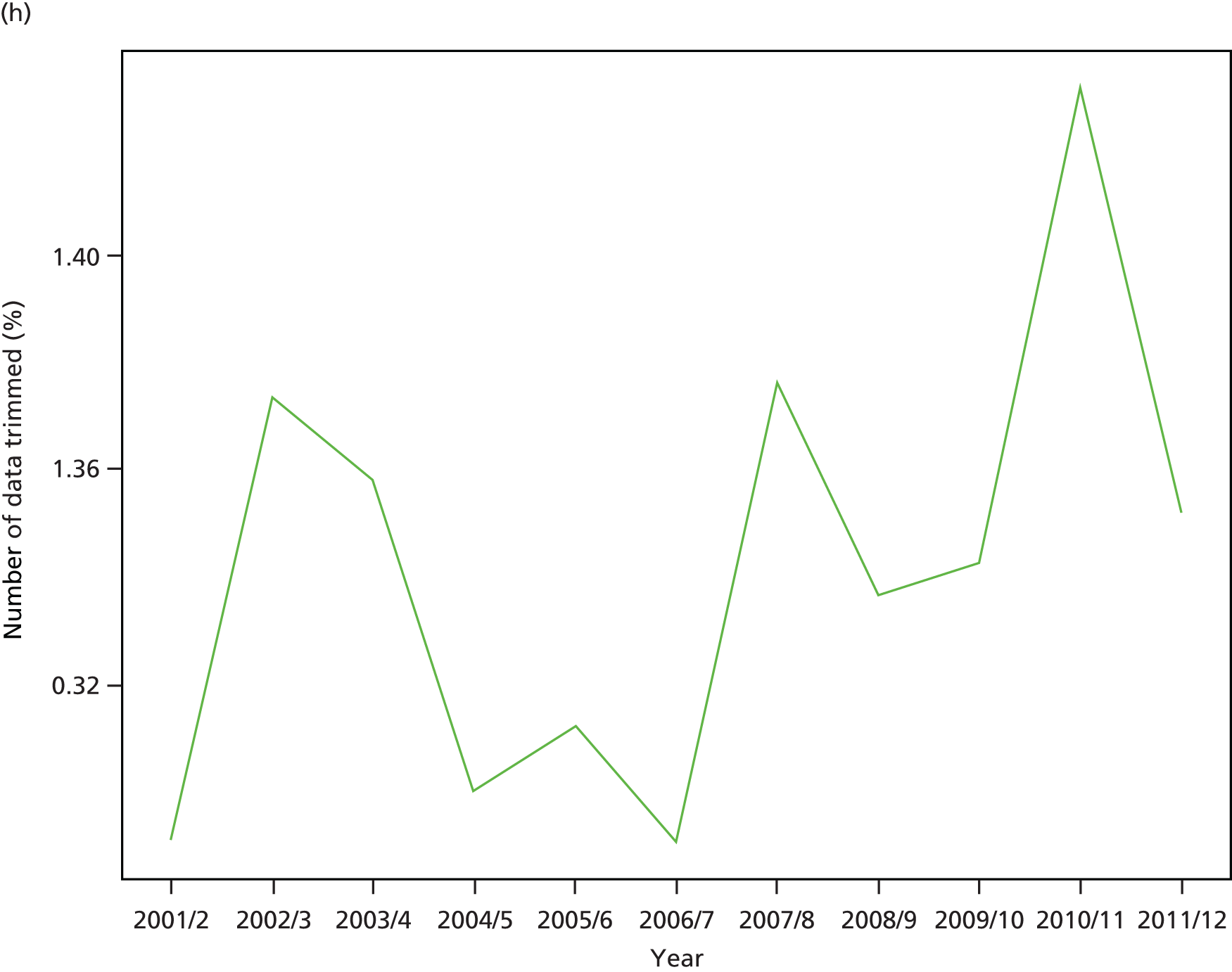

The report also contains extensive appendices. Appendices 1 and 2 present national disease-specific health-care equity indicators for CHD and diabetes, respectively. Appendix 3 lists the advisory group members. Appendix 4 contains full technical specifications of our main general indicators. Appendix 5 presents sensitivity analysis around different ways of cleaning our data by trimming outliers. Appendix 6 contains materials from the public consultation exercise. Finally, Appendix 7 contains letters from the three key NHS organisations we consulted during the development process confirming their interest in seeing our equity indicators routinely produced and used for NHS quality improvement: the NHS England Inequality and Health Inequalities Unit, Hull CCG and Vale of York CCG.

Background on equity in health care

Why monitoring health-care equity is important

The World Health Organization (WHO) has called for universal health care and routine monitoring of health-care equity in all countries. 6,17,18 It is fairly obvious why health-care equity monitoring is needed in countries that lack universal health-care systems. In such countries, many people cannot afford high-quality health care and have limited protection against the financial risk of catastrophic health-care costs and impoverishment as a result of ill health. Limited access to health care and limited financial protection are both typically associated with a low level of wealth, ethnicity, rural location and other social variables giving rise to equity concerns. Furthermore, there is good evidence that introducing universal health care, and, in particular, universal primary care, can contribute to reducing wider social inequalities in population health. 10 Therefore, it is important for countries seeking to establish universal health-care systems to monitor progress in reducing three different kinds of inequality in health care:

- inequality in health-care financing
- inequality in health-care access
- inequality in health-care outcomes.

But why is health-care equity monitoring also important in a high-income country like England, which introduced universal health care as long ago as 1948? The answer is that important inequalities in health-care access and outcomes persist in such countries, even though universal health care has succeeded in reducing them. Monitoring of inequality in health-care financing, by contrast, may be more important in countries with less comprehensive and generous systems of universal health care than the English NHS. Detailed local monitoring of the unequal impact of out-of-pocket health-care costs on household finances can be considered less important in England, which regularly tops international league tables of fairness in health-care financing and has succeeded in virtually eliminating the threat of catastrophic health-care costs: relatively few people in England report financial difficulties in paying health-care bills or face catastrophic medical expenditures. 19,20

The fact that social inequalities in health-care access and outcomes persist in universal health-care systems has been known for some time,21–24 and the findings of our study provide further evidence. Furthermore, there is a risk that some of these inequalities could potentially worsen in future decades as universal health-care systems come under increasing financial strain even in high-income countries. Over the next 50 years, rising care costs may make it increasingly hard for high-income countries to provide fully comprehensive packages of health care that are fully supported by long-term care and other public services that influence patient outcomes. 25,26 This is not just a short-term issue relating to public sector austerity in the aftermath of the global economic crisis in 2008. There are also concerns about long-term health-care cost inflation as a result of medical innovation, demographic change and wage inflation in a labour-intensive high-skill industry. Health-care expenditure has absorbed an increasing share of national income in Organisation for Economic Co-operation and Development (OECD) countries over the past 50 years, and this trend is projected to continue. 27 A recent study forecast that public spending on health care and long-term care as a share of national income in OECD countries will rise substantially over the next 50 years, from an average of 5.5% in 2006–10 to between 9.5% and 13.9% by 2060, more than doubling at the upper end of that range. 26 Faced with tensions between the rising cost of public care and what people are willing to pay in higher taxes, rich-country governments may face increasingly hard choices in the coming decades about what services to include in the universal health package at what level of quality.
This has the potential to exacerbate existing inequalities of health-care access and outcome, especially inequalities related to income, as (1) income inequalities are also projected to continue growing in the coming decades,28 and (2) financial strain on public health-care systems may increase the role of privately funded health and social care in future.
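
To make the scale of the forecast quoted above concrete, the following short sketch computes the implied multiples of the baseline spending share. It uses only the three percentages given in the text; the variable names are our own and carry no meaning beyond this illustration.

```python
# Shares of national income quoted in the text (per cent).
baseline_share = 5.5         # OECD average, 2006-10
low_forecast_share = 9.5     # lower end of the 2060 forecast range
high_forecast_share = 13.9   # upper end of the 2060 forecast range

# Implied multiples of the baseline share.
low_multiple = low_forecast_share / baseline_share
high_multiple = high_forecast_share / baseline_share

print(f"Lower end: {low_multiple:.2f} times the baseline share")
print(f"Upper end: {high_multiple:.2f} times the baseline share")
```

On these figures the spending share rises to roughly 1.7 times the baseline at the lower end of the range and roughly 2.5 times at the upper end.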

In summary, important inequalities in health-care access and outcomes remain, and are at risk of growing in future decades. That is why it is important to establish systems for health-care equity monitoring, even in high-income countries with universal health-care systems.

Concepts of equity in health care

This section briefly reviews the main concepts of equity in health care that underpin all empirical measurement work in this area, including the indicators developed in this study. We focus on equity in health-care delivery, because relatively few people in England report financial difficulties in paying health-care bills or face catastrophic medical expenditures. We focus on socioeconomic inequality because (1) this is an important type of inequality at risk of growing in the coming decades and (2) the available data sources for measuring socioeconomic inequality in health care are relatively well developed. Socioeconomic inequality is, therefore, a useful test case to see if robust equity monitoring systems can be developed. Data sources for measuring other dimensions of equity are improving, and so it may be possible in future to apply similar methods to examine health-care inequalities relating to ethnicity, mental health, homelessness and other equity-relevant variables.

The literature on socioeconomic inequality in health-care delivery usually adopts a normative perspective that seeks to distinguish ‘appropriate’ or ‘fair’ inequalities in health care from ‘inappropriate’ or ‘unfair’ inequalities. To mark this distinction, it is common in the literature to use the word ‘inequities’ (in Europe) or ‘disparities’ (in the USA) to reflect what may be regarded as ‘unfair’ social inequalities in health care. However, there is considerable variation in usage and the term ‘disparities’ is sometimes used to indicate the mere fact of variation without any normative implication. By contrast, the term ‘inequity’ always has a normative connotation and is the term we use in this report. The basic idea is to measure departures from ‘horizontal equity’ in health-care delivery: the equal treatment of people in equal need. We can distinguish three main kinds of health-care inequality that policy-makers may be concerned to reduce, based on three different definitions of ‘equal treatment’:

- inequality of health-care access between people with equal need for health care
- inequality of health-care utilisation between people with equal need for health care
- inequality of health-care outcome between people with equal need for health care.

These three types of inequality are progressively more challenging to reduce. Providing equal access to a service does not guarantee the service will be used equally, and using the same service does not guarantee the same benefits will be gained. The first and third principles are both central to this report, and so we compare and contrast them in more detail below. First, however, we review the concept of ‘need for health care’ which is common to all three principles and raises a host of thorny conceptual issues.

One important preliminary issue is how far ‘need for health care’ may extend beyond traditional health-care boundaries to include need for other non-health-care goods and services that may improve health. As mentioned earlier, it is now well known that health care is just one of many important social determinants of individual health over the life-course, along with childhood development, living and working conditions, job control, social status anxiety, and all of the lifestyle health behaviours that are causally associated with these social factors. 6 It might be stretching things to argue that ‘need for health care’ is the same thing as ‘need for health’, and that, therefore, it includes need for all the social and biological determinants of health. This would imply, for example, that health-care providers are responsible for providing people with the strong genes, loving parents and high incomes they need in order to live long and healthy lives. However, it might be reasonable to expect health-care staff to deliver preventative health-care services including not only narrowly medical interventions such as vaccination and immunisation but also a broader range of screening and disease awareness services to facilitate the early detection of disease and interventions to help reduce behavioural health risk factors such as smoking, physical inactivity and poor diet. It might also be reasonable to expect health-care staff from different specialties to work together in multidisciplinary teams when treating a complex patient with multiple conditions, to co-ordinate across primary and acute care settings, and to liaise with staff in social care and other public services to help improve the patient’s prospects for a sustained recovery. 
So, need for health care may extend to need for co-ordinated care efforts by health-care providers, need for travel services that allow people to use health care, and need for social services that help to improve recovery and long-term patient outcomes such as avoidable episodes of ill health. We return to these boundary issues in more detail below.

Another important debate is about the role of individual preferences, or what we might call ‘subjective need for health care’ as seen from the patient’s own internal perspective, as opposed to ‘objective need for health care’ as seen from an external clinical or policy perspective. 29 Some authors argue that it is important to respect individual preferences about how far to seek, accept and adhere to health care that is only seen as needed from an external perspective. 30 By contrast, other authors emphasise that preferences are socially determined and may reflect entrenched deprivations, and so the focus for the purpose of assessing unfair inequality should be on ‘objective’ need as assessed from an external perspective. 31,32 There is a social gradient in self-reported ill health, such that poorer individuals generally report greater ill health than richer individuals. However, for a given level of ‘objective’ ill health, as assessed by a clinician using biomedical measures, richer individuals are likely to report greater subjective ill health than poorer individuals and to express greater demand for health services that are free at the point of delivery. 33,34 Those who wish to respect individual preferences may be content to use ‘subjective’ measures of ill health and need for health care, or to focus on reducing inequality of health-care access. By contrast, others may prefer to focus on the more demanding equity objectives of reducing inequality of health-care utilisation and outcome for people with the same ‘objective need’.

A third conceptual issue is whether need for health care should be defined in terms of severity of illness or capacity to benefit. 35 Severely ill patients are worse off than other patients in a relevant sense, and, to that extent, may have a greater claim on health-care resources. On the other hand, if a severely ill patient has zero capacity to benefit from a costly new medical treatment, over and above the benefits they receive from their existing package of care, then it seems odd to say that they ‘need’ that costly additional treatment. It may be unfair as well as inefficient to spend money on ineffective health care for severely ill patients rather than effective health care for less severely ill patients, although it is of course important to adopt a broad view of what counts as ‘effective’ care that does not merely focus on life extension and biomedical functioning but also includes broader aspects of quality of life, including being treated with dignity and compassion, perhaps especially in relation to palliative care for severely and terminally ill patients. In relation to equality of health-care outcomes, the case for defining need as capacity to benefit is that it may not be possible for the health-care system to deliver equal outcomes to people with equal severity of illness. For example, imagine one patient has an incurable disease, while another has an equally severe disease with a fully effective remedy. Furthermore, assume that the incurable nature of the disease was not caused by a failure on the part of health-care services to deliver diagnosis, effective treatment and prevention services at an earlier stage in the patient pathway. In that case, the patient with the incurable disease may have less capacity to benefit from health care, and so the unequal health-care outcome for these two people with equal severity of illness may not be the responsibility of the health service and hence not an indicator of unfair treatment.

This raises a fourth, thorny, question: should need for health care (including preventative services) be assessed from the perspective of the current situation, at whatever point the patient has currently reached in the disease pathway, or from an earlier point when severity of illness may be lower but capacity to benefit greater? This relates to a more general question about time perspective. Should equity in health care be assessed from a cross-sectional perspective, focusing on health-care delivery this year for health-care needs this year, or from a longitudinal perspective looking at health-care delivery over a longer time window that may include past, present and future time periods, perhaps even the individual’s entire life-course?

Unfortunately, empirical studies often have limited ability to address these important conceptual debates about ‘need’, because they often rely on imperfect need variables such as age, sex and various indicators of morbidity which are typically only measured at a point in time or across time in just one part of the system (e.g. primary or secondary care). The basic strategy used in the empirical literature on socioeconomic inequity in health care is to measure associations between (current) socioeconomic status and (current) health care after adjusting for (current) need variables. Our study also follows this strategy, and our need variables are also imperfect. Although we have time series cross-sectional data on small-area populations going back several years, we do not follow each individual within those small areas longitudinally to assess their historical levels of need, health-care delivery and socioeconomic status at earlier points in the patient pathway. The assessment of equity in health care from a longitudinal perspective is an important avenue for future research using longitudinal or linkable data at an individual level.

Our need variables are especially imperfect in the case of health-care outcomes, in which we are able to adjust only for age and sex, but not for morbidity. Failure to adjust for morbidity means that we typically underestimate the risk of poor health-care outcomes in deprived populations. To put this another way, we typically overestimate short-term capacity to benefit from health care and underestimate level of need in deprived populations from the cross-sectional perspective of the current indicator year. As discussed previously, however, capacity to benefit from health care from a longitudinal perspective will be greater than short-term capacity to benefit because of potential benefits in the past and in the future. Nevertheless, from the cross-sectional perspective of the current indicator year we typically overestimate the extent of ‘pro-rich’ socioeconomic inequity in the following three health-care outcomes: preventable hospitalisation, repeat hospitalisation and amenable mortality. For this reason, we usually refer to socioeconomic ‘inequality’ in health-care outcomes throughout the report, rather than socioeconomic ‘inequity’. This does not apply to our three indicators of inequality of access, however, that is primary care supply, primary care quality and hospital waiting time. Indeed, in the case of primary care supply, imperfect measurement of need generates a bias that works in the opposite direction. In this case, as explained in Chapters 4 and 8, we typically underestimate need for primary care supply in more deprived populations. This means that we typically underestimate the extent of pro-rich inequality in primary care supply. These issues are discussed in more detail in Chapters 4 and 7, and in Appendix 4.

In the health outcomes literature, adjusting for age, sex and other risk factors is usually called ‘risk adjustment’ rather than ‘need adjustment’. The basic idea is to adjust the observed outcomes for exogenous risk factors that are beyond the control of the health-care provider, so that the ‘risk-adjusted outcomes’ can be attributed to the actions of the health-care provider and interpreted as an indicator of the quality of care. However, in our context we can also think of this as a form of ‘need adjustment’, for which need is interpreted as short-term capacity to benefit from health care in the current period. We adjust the observed outcomes from health care for exogenous risk factors that determine short-term capacity to benefit from health care in the current indicator period. The remaining differences in adjusted outcomes then reflect ‘unfair’ differences in the benefit achieved by health care rather than ‘fair’ differences in the capacity to benefit from health care. As ‘risk adjustment’ is the more familiar phrase in relation to health outcomes, however, we use that phrase in the rest of this report.
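The logic of age and sex risk adjustment can be illustrated with a minimal Python sketch of indirect standardisation. This is one common way of adjusting for exogenous risk factors and is not necessarily the method used for the indicators in this report; the stratum rates, population counts and function names are all invented for illustration.

```python
# Hypothetical illustration of age-sex risk adjustment by indirect standardisation.
# All rates and counts below are invented for the example.

# Assumed national event rates per person per year, by (age band, sex) stratum.
NATIONAL_RATES = {
    ("0-64", "F"): 0.004,
    ("0-64", "M"): 0.005,
    ("65+", "F"): 0.030,
    ("65+", "M"): 0.040,
}

NATIONAL_CRUDE_RATE = 0.010  # assumed national events per person per year


def expected_events(population_by_stratum, rates=NATIONAL_RATES):
    """Events expected if the area experienced the national age-sex specific rates."""
    return sum(rates[stratum] * count for stratum, count in population_by_stratum.items())


def risk_adjusted_rate(observed_events, population_by_stratum,
                       national_crude_rate=NATIONAL_CRUDE_RATE):
    """Indirectly standardised rate: (observed / expected) x national crude rate."""
    return observed_events / expected_events(population_by_stratum) * national_crude_rate


# Two hypothetical neighbourhoods of 1500 people with the same number of
# observed events but different age structures.
older_area = {("0-64", "F"): 500, ("0-64", "M"): 500, ("65+", "F"): 300, ("65+", "M"): 200}
younger_area = {("0-64", "F"): 700, ("0-64", "M"): 700, ("65+", "F"): 60, ("65+", "M"): 40}

older_adjusted = risk_adjusted_rate(20, older_area)
younger_adjusted = risk_adjusted_rate(20, younger_area)

print(f"older area:   {older_adjusted:.4f}")
print(f"younger area: {younger_adjusted:.4f}")
```

Both hypothetical areas have identical crude rates (20 events among 1500 people), but the younger area has the higher adjusted rate because fewer events would have been expected given its age structure.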

We now return to the question of why reducing socioeconomic inequality of health-care outcomes is a more demanding principle of justice than reducing socioeconomic inequalities of access to health care. The basic reason is that access to health care is just one input into the production of health outcomes. 7,9,10,36 One set of issues relates to individual resilience. Poorer patients may tend to recover more slowly and less completely following health-care intervention because of greater comorbidity, less biological, physiological and psychological resilience and less supportive home and community environments in which to recover, including worse access to supportive informal care from friends and relatives (e.g. in noticing when public care quality falls short and taking corrective action). Another set of issues relates to individual health-seeking behaviour. Poorer patients may be less likely to invest time and other resources in improving their own health by seeking medical information, using medical care and engaging in healthy lifestyle activities, as they face higher opportunity costs (e.g. time required at the expense of domestic and work duties, travel costs) relative to their more limited wealth and human capital, have less social capital to draw on (e.g. support from friends, family and wider social and professional networks) and, more controversially, may be less able to find enjoyable jobs, and to afford pleasant and fulfilling leisure activities, and so may see less point investing time and money to gain additional days of life. Other things being equal, poorer individuals will tend to use less preventative health care when facing no immediate pain or disability, and to present to health-care providers at a later stage of illness. The quality of medical care received may also depend in part on the intensity and effectiveness of patient care-seeking behaviour (e.g. in navigating through a complex health-care system, lobbying providers for the best-quality care) and self-care behaviour (e.g. in adhering to medication regimes). For all of these reasons, poorer patients tend to have greater needs for co-ordinated care and support across diverse service providers in order to achieve good health-care outcomes, including co-ordination between primary, secondary and community care providers, between specialties, and between health-care and social-care services.

Socioeconomic inequalities in health-care outcomes may therefore arise as a result of socioeconomic-related differences in (1) the life-course of the patient, because of the accumulated effect of advantage or disadvantage on the risk of ill health and the prospects of recovery from episodes of ill health; (2) patient behaviour including health-care-seeking behaviour, self-care behaviour and lifestyle behaviour; (3) the behaviour of primary, secondary and community care providers in patient encounters; (4) informal health and community care provided to patients by family and friends; (5) formal long-term care including both publicly and privately funded care and social services provided in the home as well as in institutions; and (6) the co-ordination of care between primary, secondary and community care providers, between specialties, and between health and non-health services. Some of these factors may be considered ‘exogenous’ capacity to benefit factors that lie entirely outside the remit of the health-care system. Others may be considered ‘endogenous’ factors under the control of the health-care system. Still others may lie in a ‘grey area’ of overlap, in which the boundaries of responsibility are not clear-cut. These boundary issues can raise challenging ethical questions for health-care providers. For instance, if a poor patient has a worse post-surgical outcome than a rich patient as a result of their lack of a supportive home environment in which to recover, how far should health-care providers be held responsible for stepping in to remedy the situation? One view is that health-care providers are indeed responsible for stepping in, as the poor patient needs additional support during their recovery period, whereas the rich patient does not. Another view might be that providing a supportive home environment including reminders to take medication, follow physiotherapy regimes and other medical advice is not properly the responsibility of the health service. 
Our report does not seek to take a prescriptive ethical view on such matters. Rather, we seek to provide data and evidence to help decision-makers draw their own conclusions about equity based on their own value judgements.

Monitoring of equity in health and health care in England

This section briefly reviews the recent history of monitoring of equity in health and health care in England since the early 2000s, and summarises the equity indicators that are already produced by Public Health England and NHS England. By way of comparison, the section then reviews the system of health-care equity monitoring in the USA, which at the current time is arguably the most comprehensive in the world, as explained below.

Monitoring of equity in health care is in its infancy, and remains isolated from mainstream quality assurance. Although health-care policy-makers, regulators, purchasers and providers have become accustomed to paying close attention to routine comparative data on health-care quality for the average patient, they lack routine comparative data on social inequalities in health-care quality. 2 This hampers efforts to improve equity, as what is not measured may be marginalised. 1 So, although NHS decision-makers know that health-care inequalities exist, they do not yet have a routine approach to quantifying the influence of the NHS on those inequalities. They cannot routinely pinpoint changes in health-care inequalities at local level, and do not know what impact their actions are having on such inequalities. Prior to 2015, there was essentially no routine monitoring of equity in health care in the English NHS. The NHS Outcomes Framework started producing national breakdowns of inequalities in selected health-care outcomes for internal use in 2015 and plans to start publishing these breakdowns from 2016. 37 However, there is currently no national monitoring of inequality in health-care access and no local monitoring of equity in the NHS. 2

By contrast, monitoring of inequality in health is more advanced and monitoring of health inequalities within local areas started in the early 2010s, as explained below. In the early 2000s, England introduced national health inequality targets as part of the world’s first cross-government strategy for tackling health inequality. 38,39 However, these targets were limited from a health-care quality improvement perspective. First, they focused on life expectancy and infant mortality, over which health-care providers have little direct control as they are strongly influenced by non-NHS social and economic factors (e.g. living and working conditions) and related lifestyle behaviours (e.g. smoking, diet and exercise). Second, they were defined in terms of inequalities between local government areas, known as ‘spearhead areas’, and the rest of the country, thus masking important inequalities within these areas. This second issue was noted in the 2010 Marmot Review 8 of health inequalities in England, as follows: ‘around half of disadvantaged individuals and families live outside spearhead areas . . . By measuring changes only at local authority level, we cannot tell whether any improvements being made are confined only to the more affluent members of a generally deprived population.’

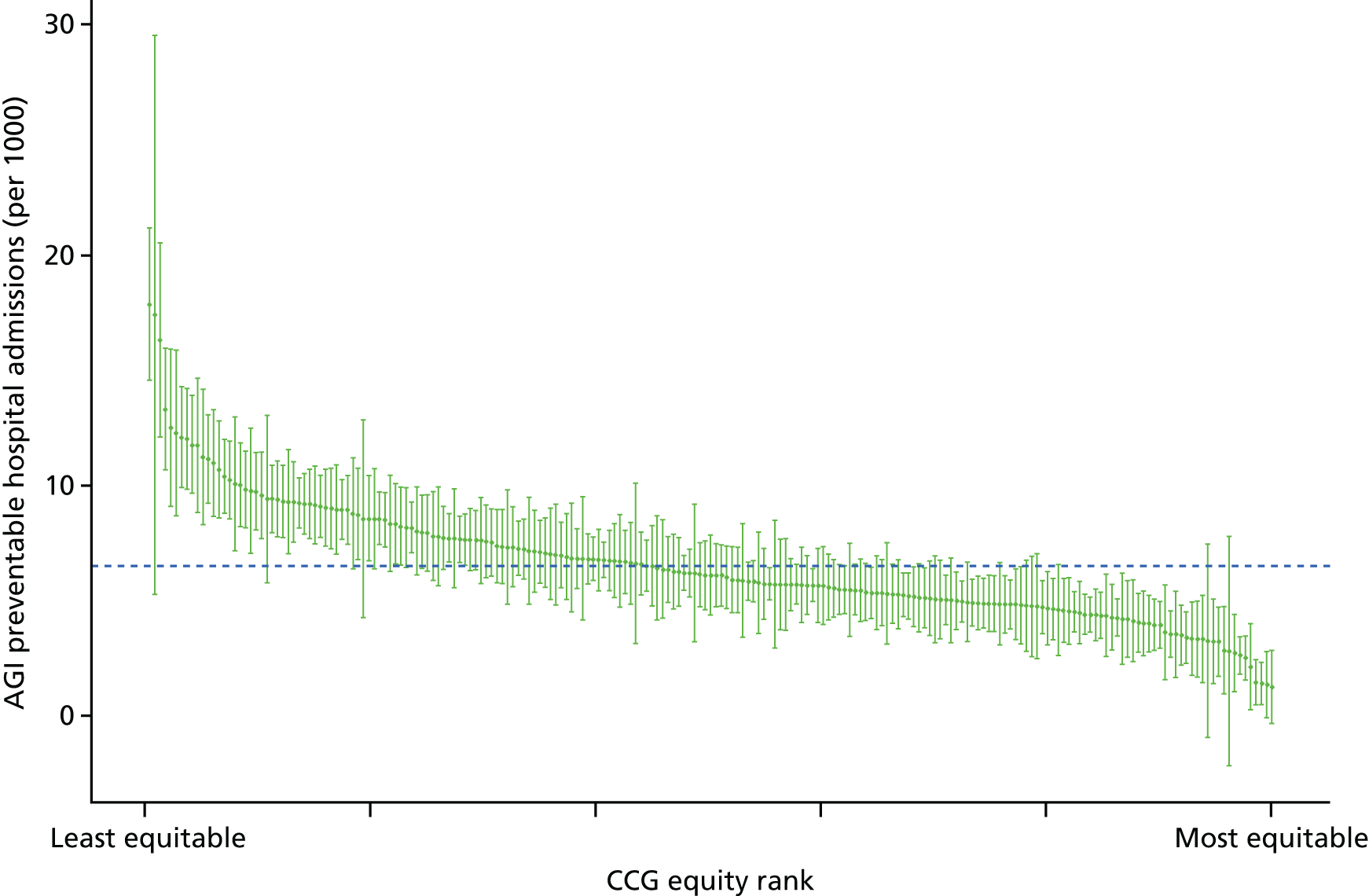

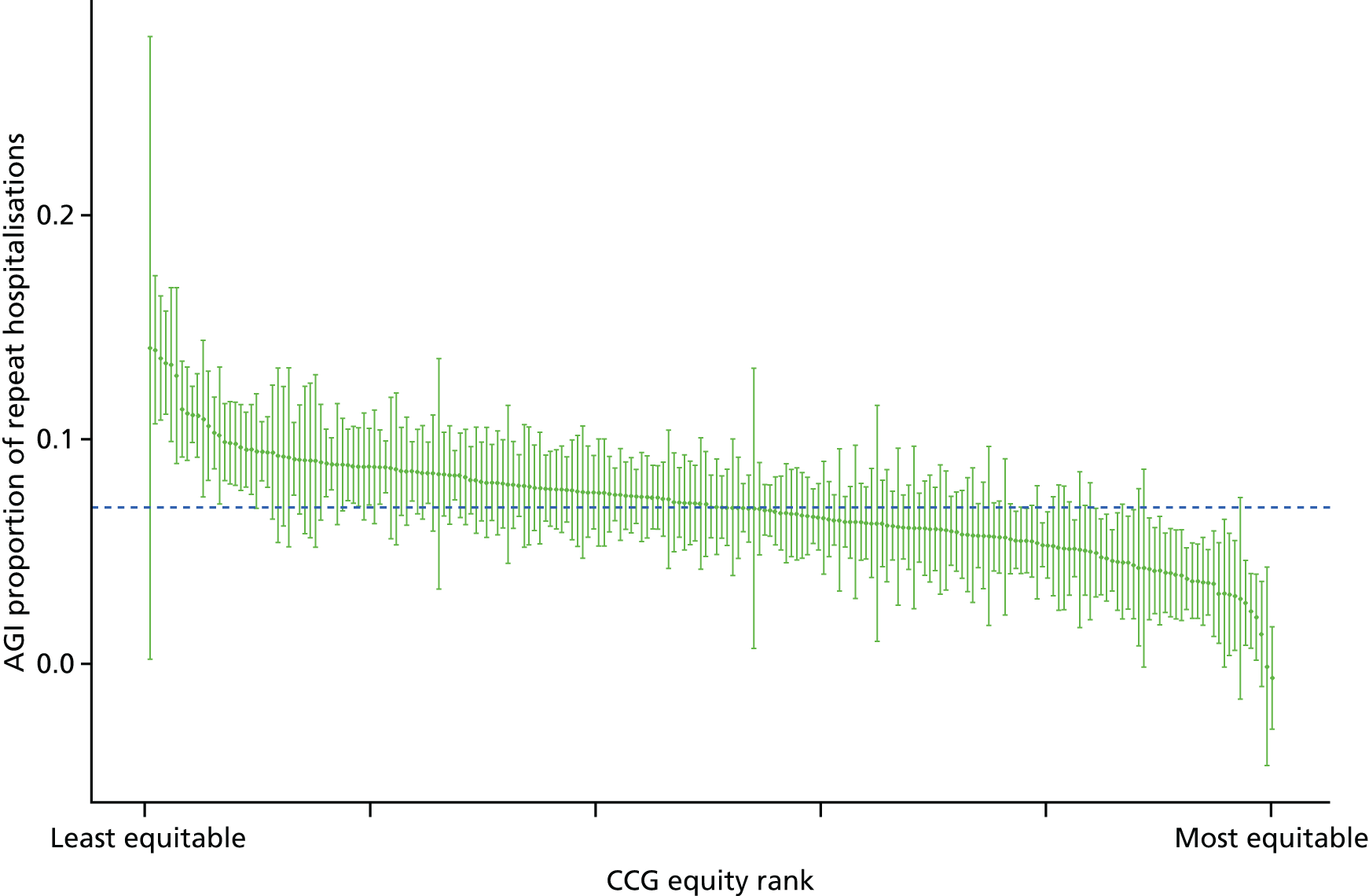

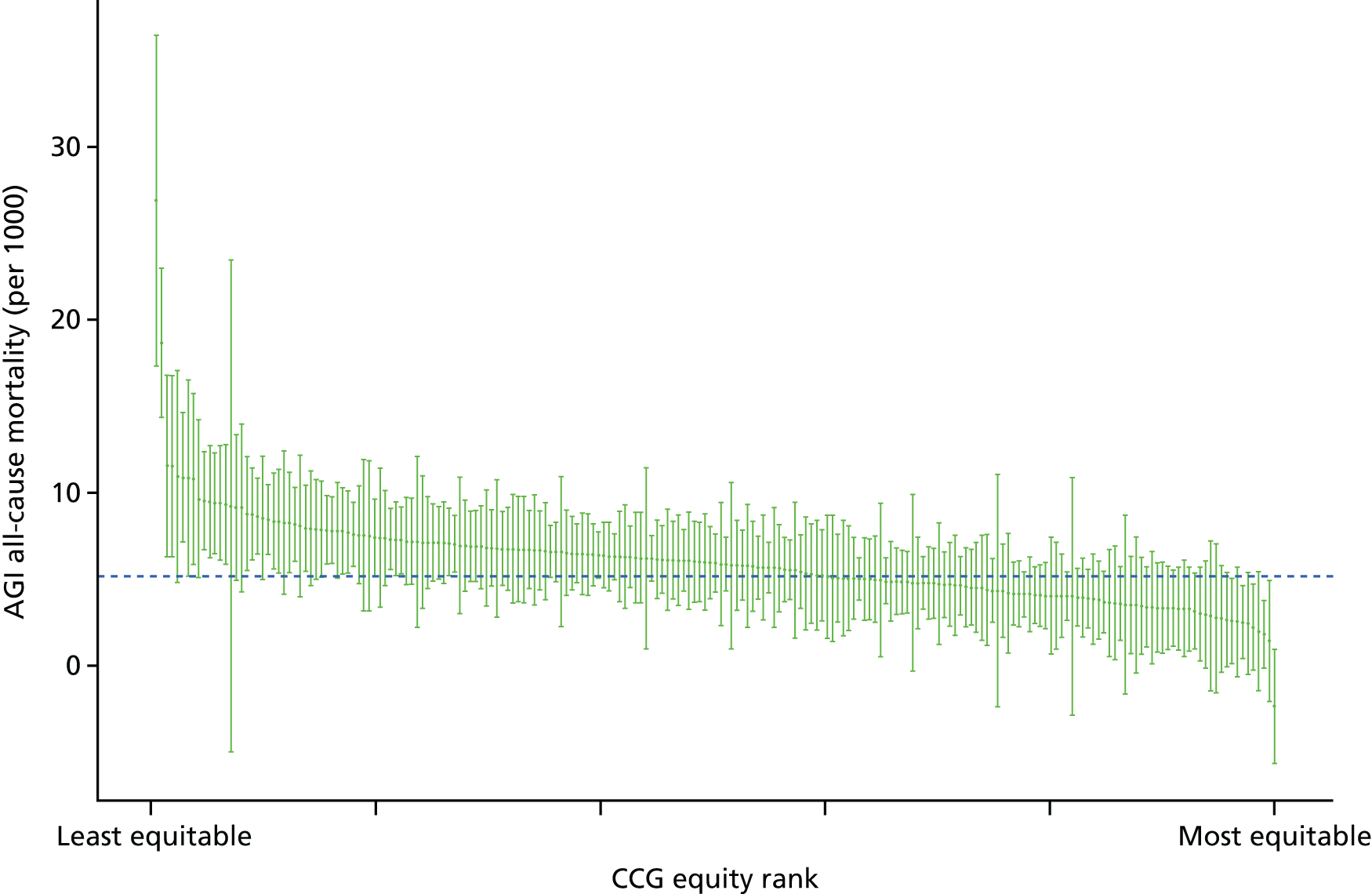

Subsequently, in the early 2010s, a more comprehensive and sophisticated set of local authority level health inequality indicators, known as the ‘Marmot indicators’, was developed by the Institute of Health Equity in collaboration with the London Health Observatory. 40 These include indicators of average health and the social determinants of health that broadly correspond to the policy recommendations proposed in Fair Society, Healthy Lives: The Marmot Review. 8 Importantly, they also include indicators of inequality in life expectancy within each local authority, based on small-area-level data. The Public Health Outcomes Framework (PHOF) has also produced local as well as national indicators of inequality in life expectancy. 41 These local indicators use a local version of the slope index of inequality (SII), based on ranking small areas into local deprivation decile groups by deprivation score within the local authority. This is a different approach to the one used in the present study, which is based on the national deprivation rank within England as a whole, as explained later in the report in Chapter 4. The primary aim of the PHOF local health inequality indicators is to compare change over time in each local authority, rather than to compare local performance against a national benchmark. The PHOF local deprivation approach is not appropriate for the latter task, as local deprivation ranks cannot be compared with national deprivation ranks for the country as a whole. For that reason, we use national deprivation ranks so that we can compare the gradient in health-care outcomes within the local area with the national gradient. To distinguish our approach from the PHOF approach, we label our local inequality index the ‘absolute gradient index’ (AGI) rather than the slope index.
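The difference between local and national deprivation ranking can be seen in a small Python sketch. The area names and deprivation scores are invented, and the midpoint ranking convention and the assumption of equal-sized areas with no ties are simplifications made for illustration, not details taken from the PHOF or from this report.

```python
def fractional_ranks(scores):
    """Map each area to a rank in (0, 1): near 0 = least deprived, near 1 = most deprived.

    Uses the midpoint convention (position + 0.5) / n and assumes equal-sized
    areas with no tied scores (both are simplifying assumptions).
    """
    order = sorted(scores, key=scores.get)
    n = len(order)
    return {area: (position + 0.5) / n for position, area in enumerate(order)}


# Invented deprivation scores (higher = more deprived) for ten small areas.
national_scores = {
    "A1": 5, "A2": 12, "A3": 18, "A4": 25, "A5": 31,
    "B1": 44, "B2": 52, "B3": 60, "B4": 71, "B5": 80,
}

# Hypothetical local authority made up of the five "B" areas only.
local_areas = ["B1", "B2", "B3", "B4", "B5"]

national_rank = fractional_ranks(national_scores)
local_rank = fractional_ranks({area: national_scores[area] for area in local_areas})

for area in local_areas:
    print(area, "national rank:", national_rank[area], "local rank:", local_rank[area])
```

In this invented example, area B1 is the least deprived area locally but sits in the more deprived half of the national distribution, which is why a gradient fitted on local ranks cannot be set directly against a gradient fitted on national ranks.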

In contrast to England, the USA has had a fairly comprehensive system of national health-care equity monitoring since 2003. The US health-care equity monitoring system was initiated following landmark reports by the US Institute of Medicine on the safety of care, 42 the quality of care 43 and racial disparities in both. 44 Since 2003, the Agency for Healthcare Research and Quality (AHRQ) has published an annual report on health-care disparities within the general US population by racial, ethnic and socioeconomic group, and by state. 45 In 2014, this was integrated with the AHRQ annual report on health-care quality to form the National Healthcare Quality and Disparities Report. 46 This report summarises national US time trends in more than 250 different indicators of health-care access, process quality and outcomes. The indicators mostly focus on health-care access and process quality, in line with relatively narrowly defined health-care quality improvement objectives. However, there are also some indicators of health-care outcomes that go under the heading of ‘care co-ordination’ indicators, such as preventable hospitalisation for ambulatory care-sensitive conditions. Although these are likely to be more sensitive to variations in health-care access in a US setting, compared with a country such as England which has universal health care, these indicators may also pick up concerns for population health improvement and the co-ordination of care across different health-care settings and between health care and long-term care. Most of the indicators in the 2014 report, published in May 2015, end in 2012, a data lag of more than 2 years, although some indicators such as the proportion of Americans with health-care insurance are measured up to 2014. The AHRQ also publishes a web-based ‘States Snapshots’ tool for comparing quality and disparities between states. 47 This focuses mainly on comparisons of average quality between states, although it also compares racial disparities between states by dividing the average of the black, Hispanic and Asian scores by the white score, ranking states on this ratio, and then listing states by quartile group. However, there is still no attempt to compare socioeconomic disparities between states, or to perform statistical tests of whether or not states are performing significantly differently from the national average on racial disparity.
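The ratio-and-quartile procedure described above can be sketched in a few lines of Python. The state labels and scores are invented, and the function names, the simple unweighted average and the way quartile groups are cut are assumptions for illustration; the AHRQ tool itself may handle weighting, ties and score direction differently.

```python
from statistics import mean


def disparity_ratio(scores):
    """Average of the black, Hispanic and Asian scores divided by the white score."""
    minority_mean = mean(scores[group] for group in ("black", "hispanic", "asian"))
    return minority_mean / scores["white"]


def quartile_groups(ratios):
    """Rank states by ratio (ascending) and assign quartile groups 1 to 4."""
    ranked = sorted(ratios, key=ratios.get)
    n = len(ranked)
    return {state: (position * 4) // n + 1 for position, state in enumerate(ranked)}


# Invented quality scores for eight hypothetical states.
state_scores = {
    "S1": {"white": 80, "black": 72, "hispanic": 70, "asian": 74},
    "S2": {"white": 80, "black": 80, "hispanic": 80, "asian": 80},
    "S3": {"white": 50, "black": 40, "hispanic": 40, "asian": 40},
    "S4": {"white": 100, "black": 95, "hispanic": 95, "asian": 95},
    "S5": {"white": 60, "black": 51, "hispanic": 51, "asian": 51},
    "S6": {"white": 100, "black": 70, "hispanic": 70, "asian": 70},
    "S7": {"white": 100, "black": 75, "hispanic": 75, "asian": 75},
    "S8": {"white": 100, "black": 60, "hispanic": 60, "asian": 60},
}

ratios = {state: disparity_ratio(scores) for state, scores in state_scores.items()}
groups = quartile_groups(ratios)

for state in sorted(groups, key=ratios.get):
    print(state, round(ratios[state], 2), "quartile group", groups[state])
```

Note that this ranking only orders states relative to one another; it involves no statistical test of whether a state differs from the national average, which is the gap identified in the text.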

Conceptual framework

Our monitoring framework has the following general design objectives:

- to monitor equity in both health-care access and outcomes, after appropriate need or risk adjustment
- to monitor overall equity in health care for the general population, while allowing disaggregation by age, sex and disease category
- to monitor the equity performance of the health service as a whole, including the integration of care across different specialties, different primary and acute care settings, and different health care, social care and other public services
- to monitor equity at all main stages of the patient pathway
- to monitor local equity performance against a national equity benchmark
- to monitor equity trends alongside equity levels, and average performance alongside equity performance
- to summarise all key findings in a one-page summary (‘equity dashboard’)
- to provide visual information about underpinning inequality patterns and trends (‘equity chart packs’)
- to provide a battery of inequality measures that are easy to understand and capture importantly different concepts of inequality that can trend in different directions
- to ensure indicators can be understood by members of the general public.

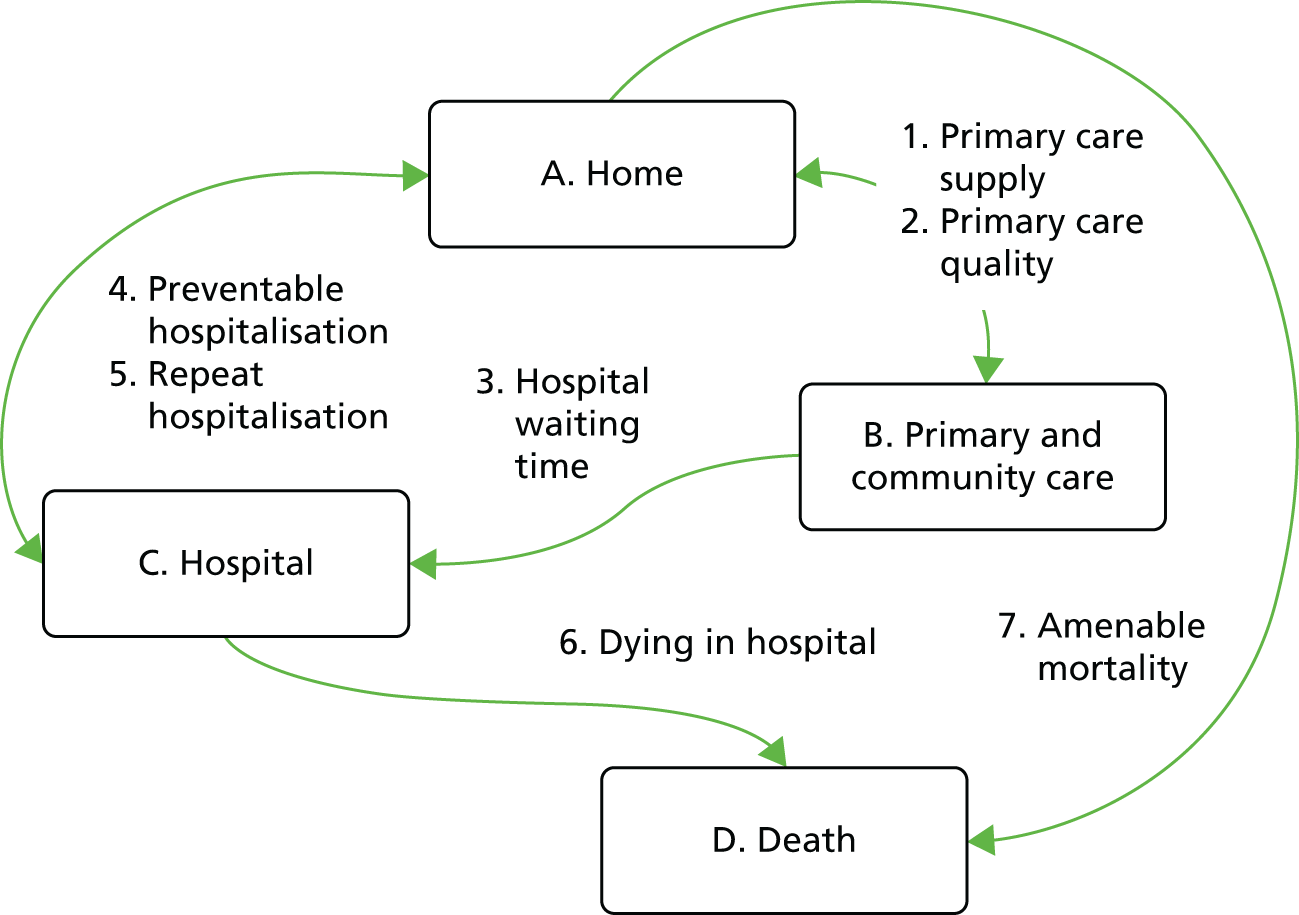

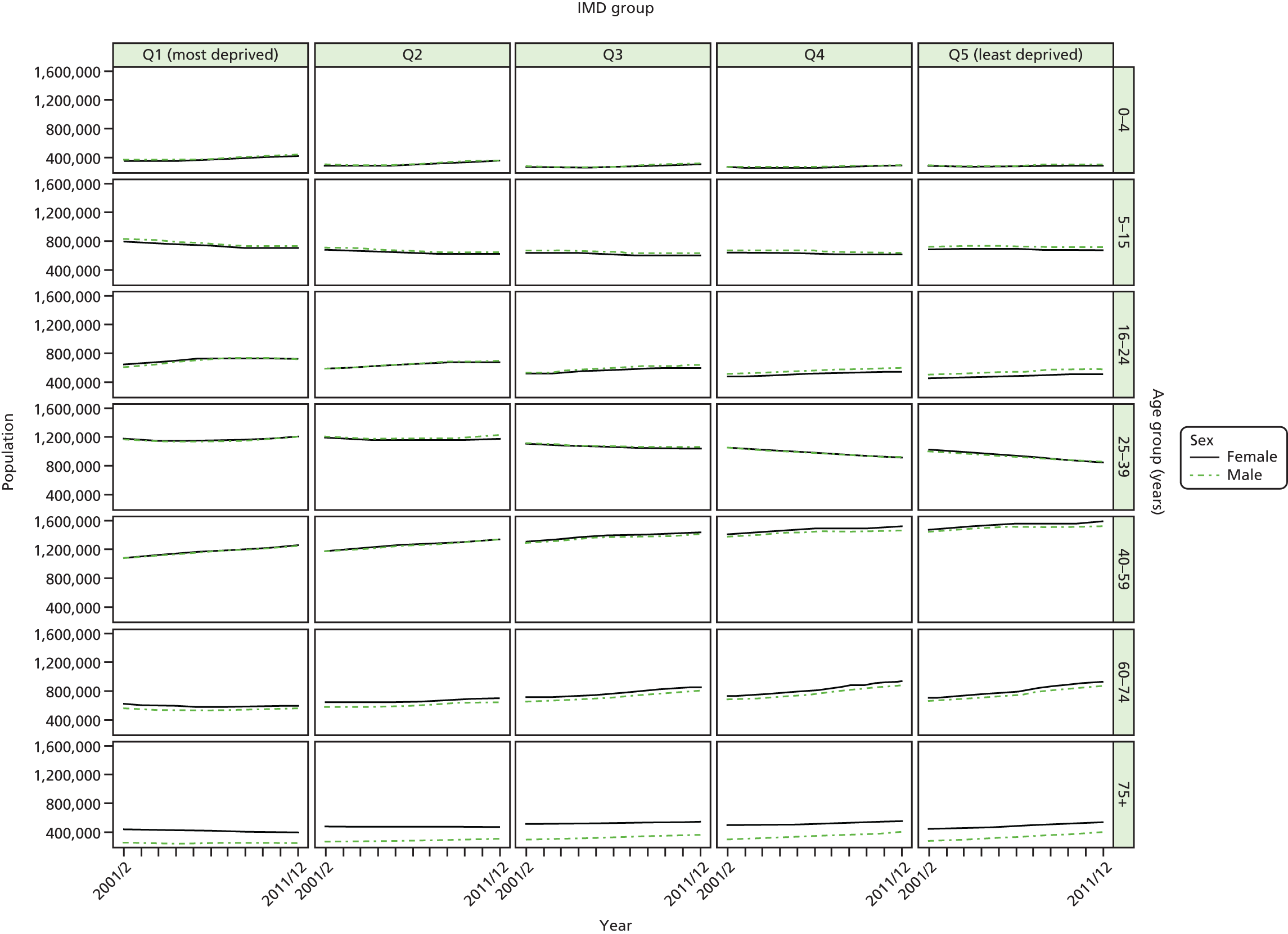

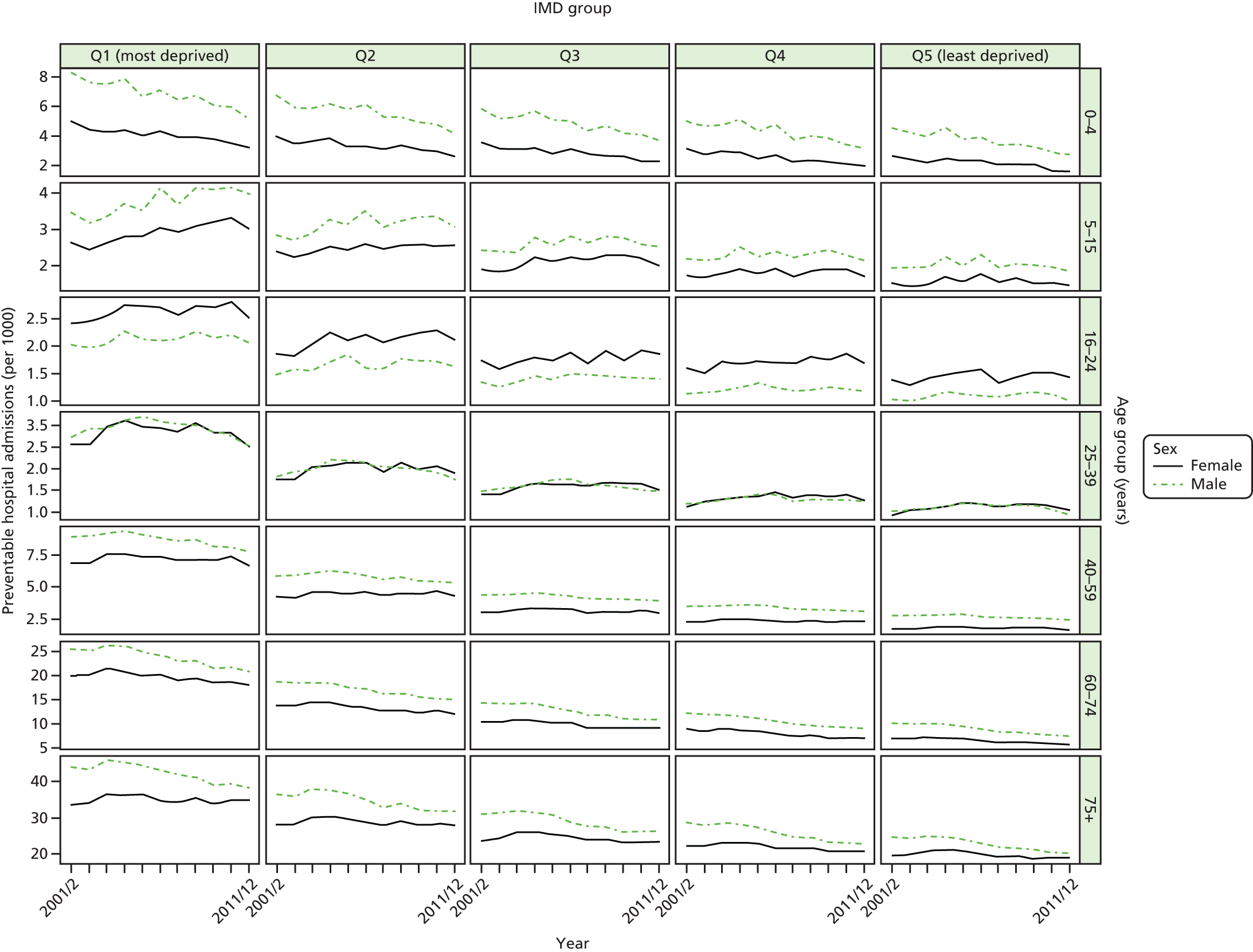

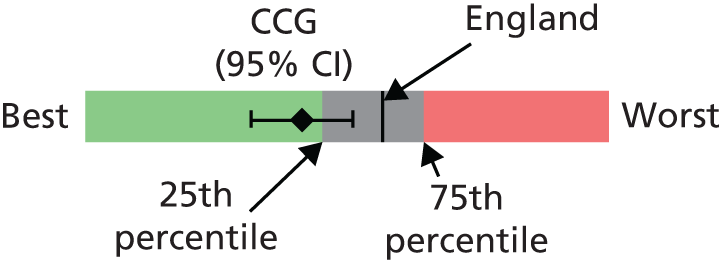

Figure 1 illustrates our framework for monitoring inequality in health-care access and outcomes at key stages of the patient pathway and shows how our eight general indicators fit into this framework.

FIGURE 1.

Conceptual framework for monitoring inequality in health-care access and outcomes at key stages of the patient pathway.

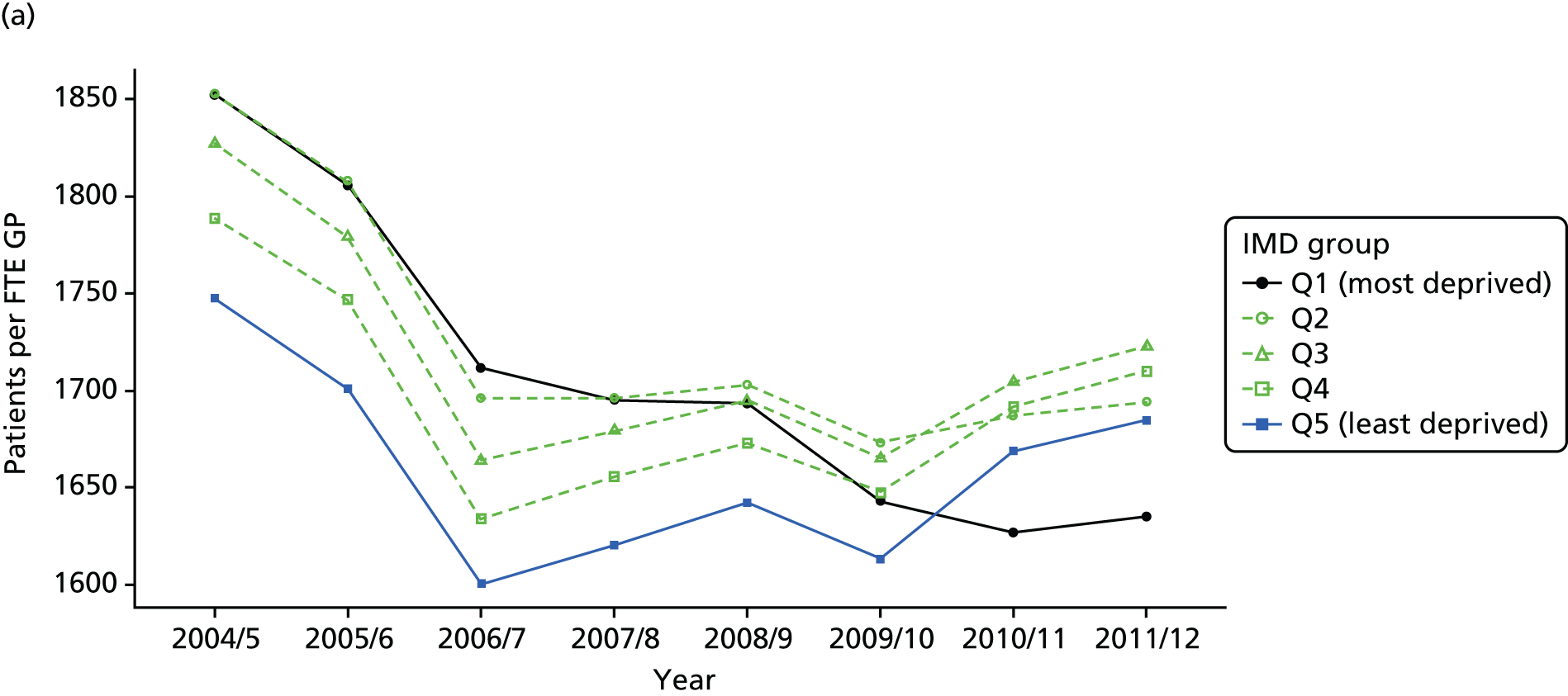

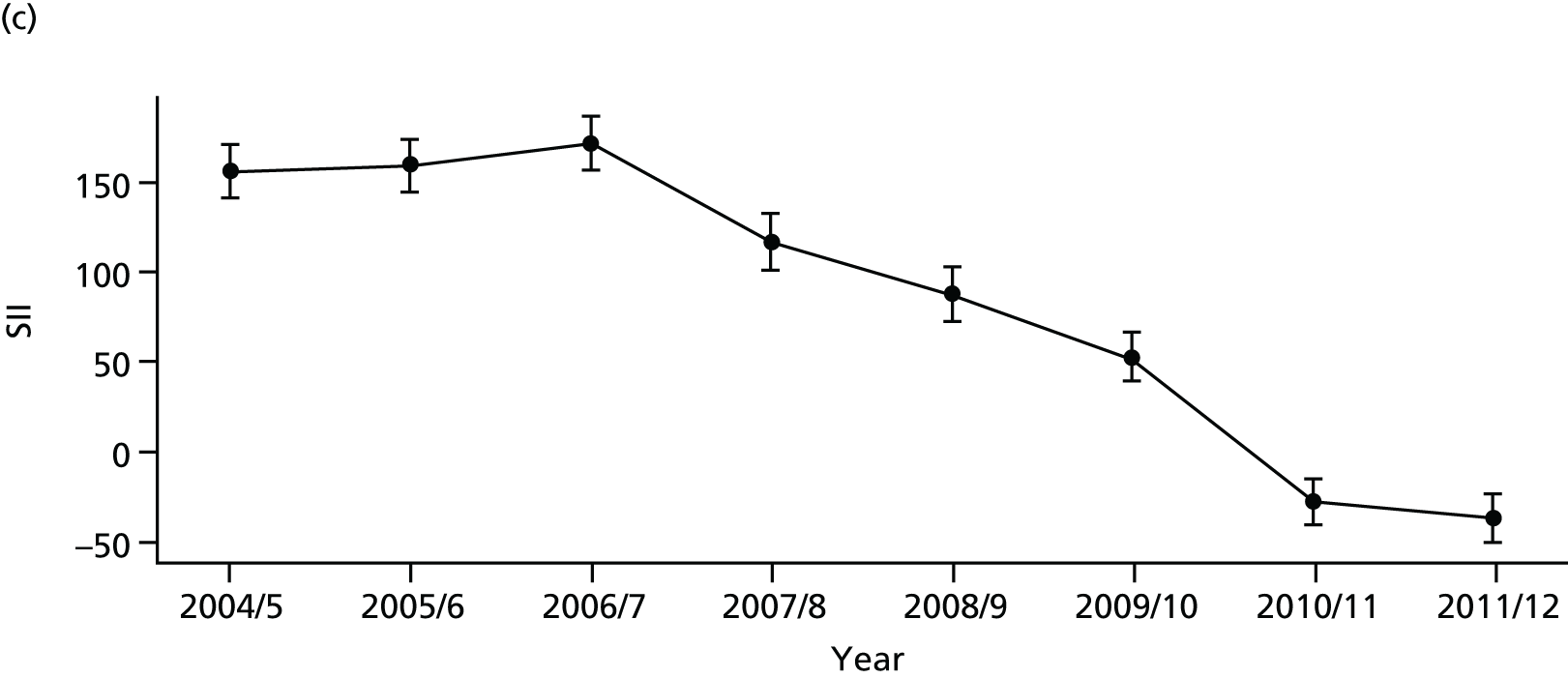

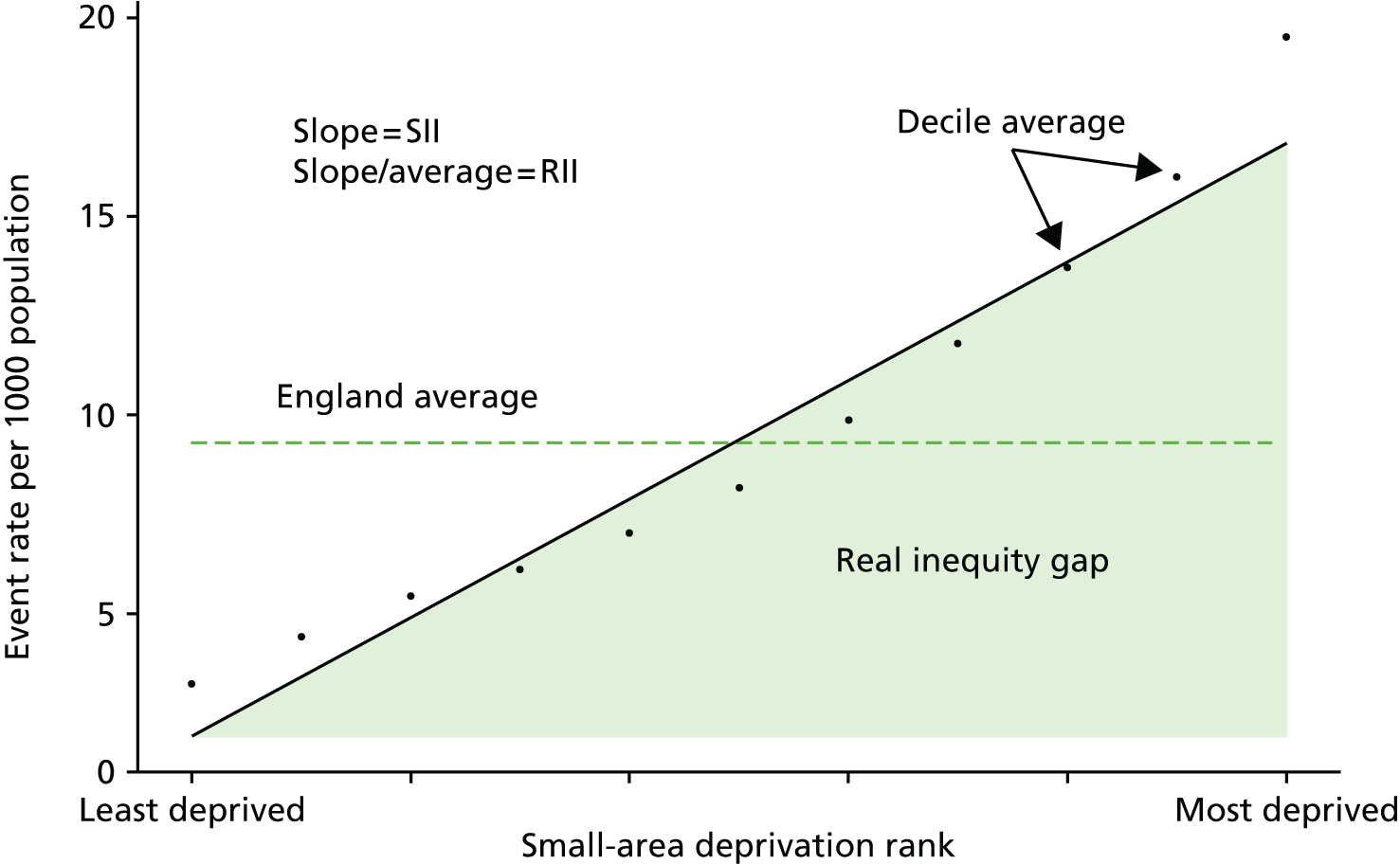

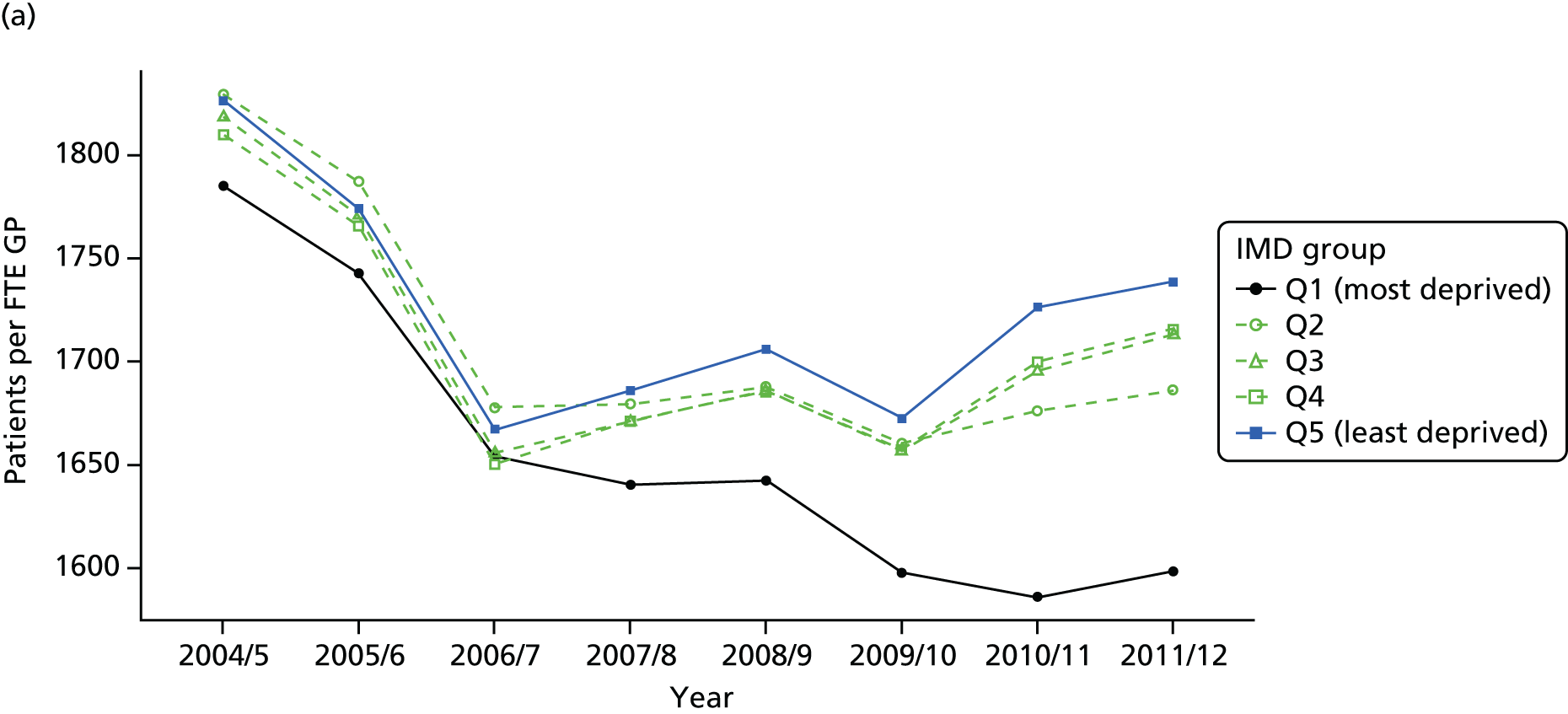

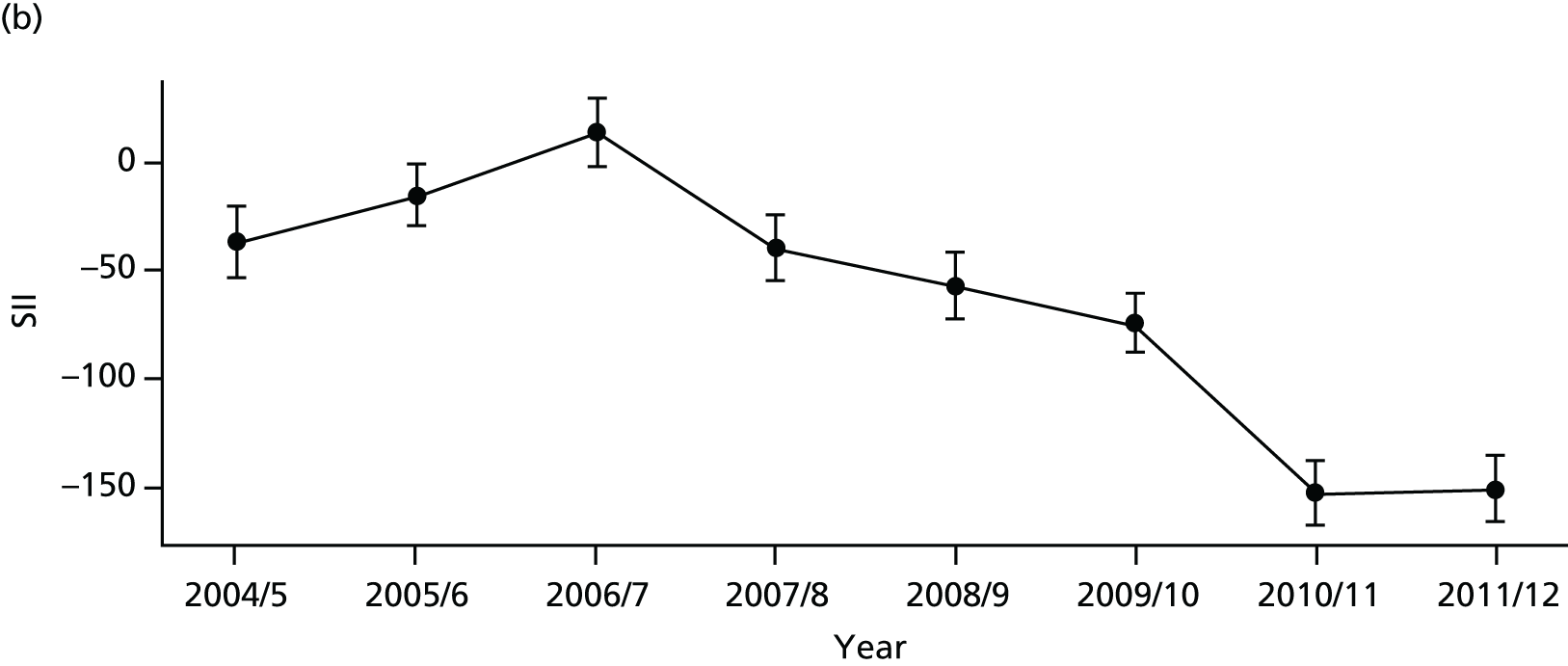

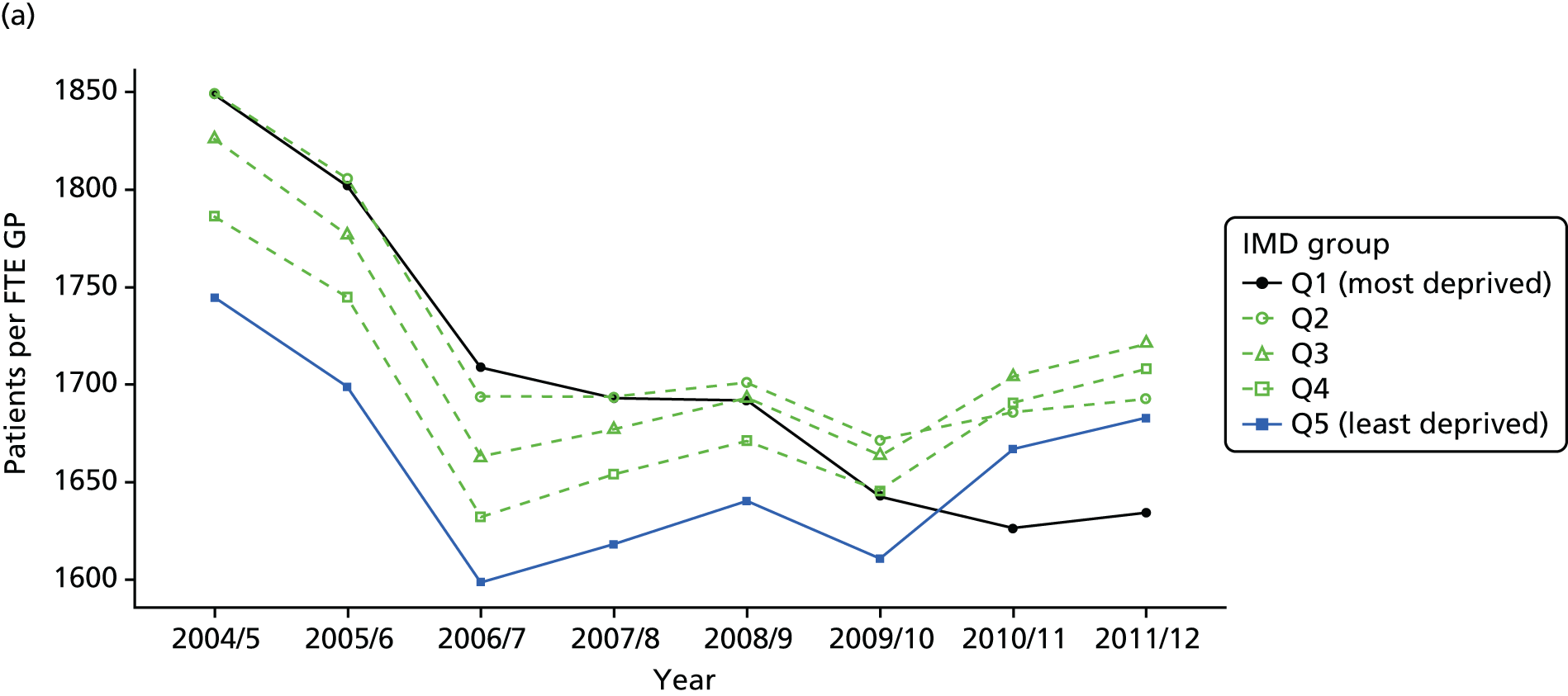

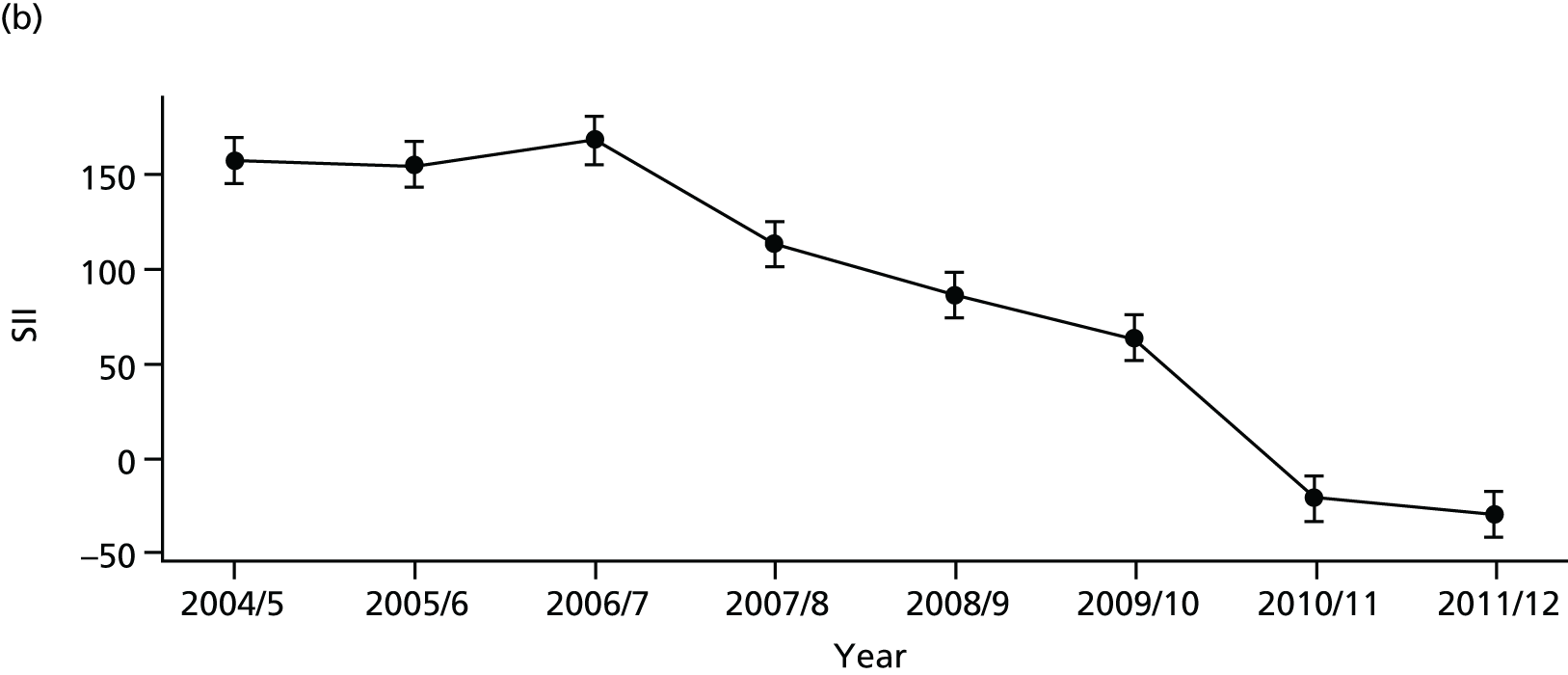

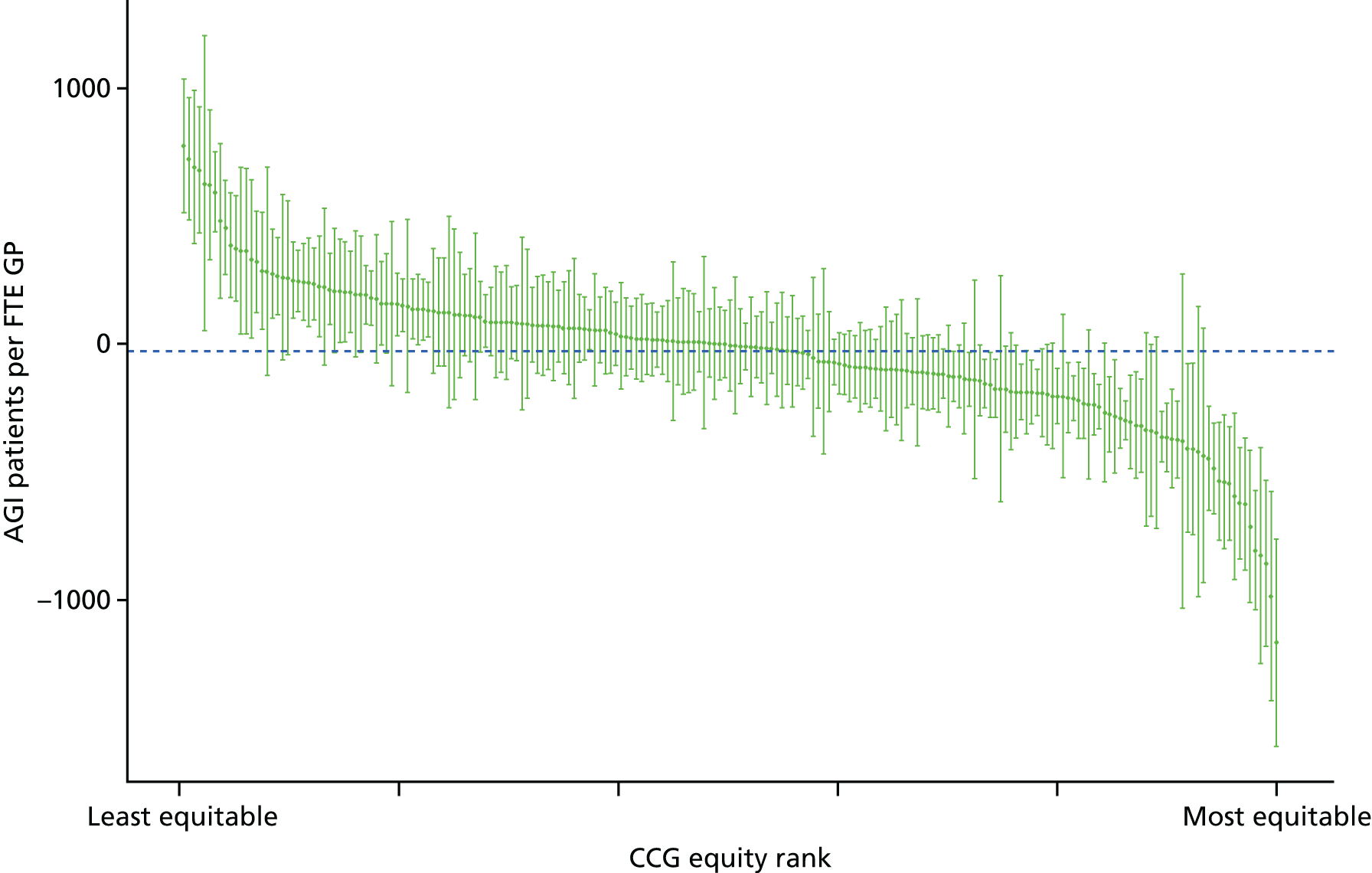

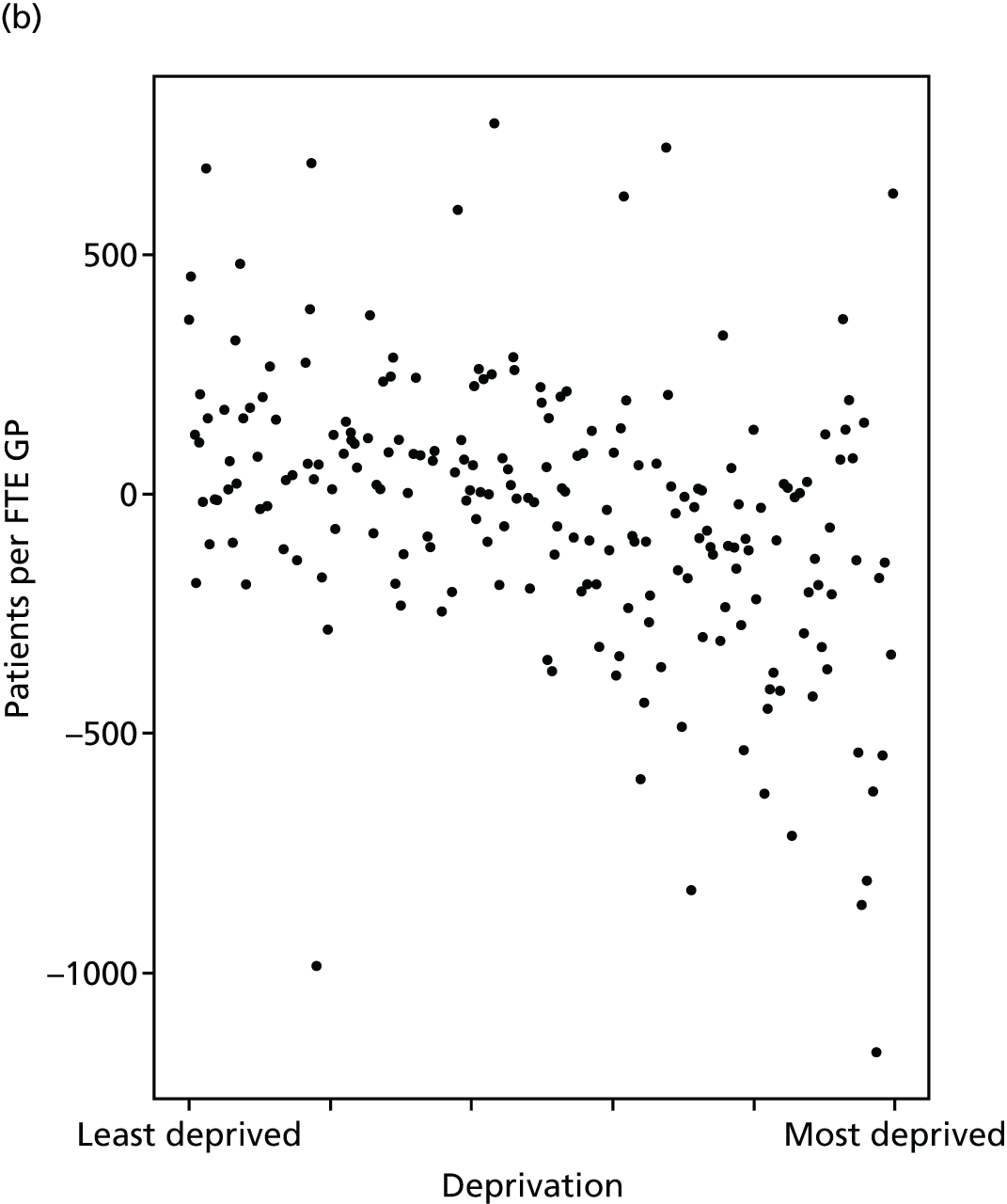

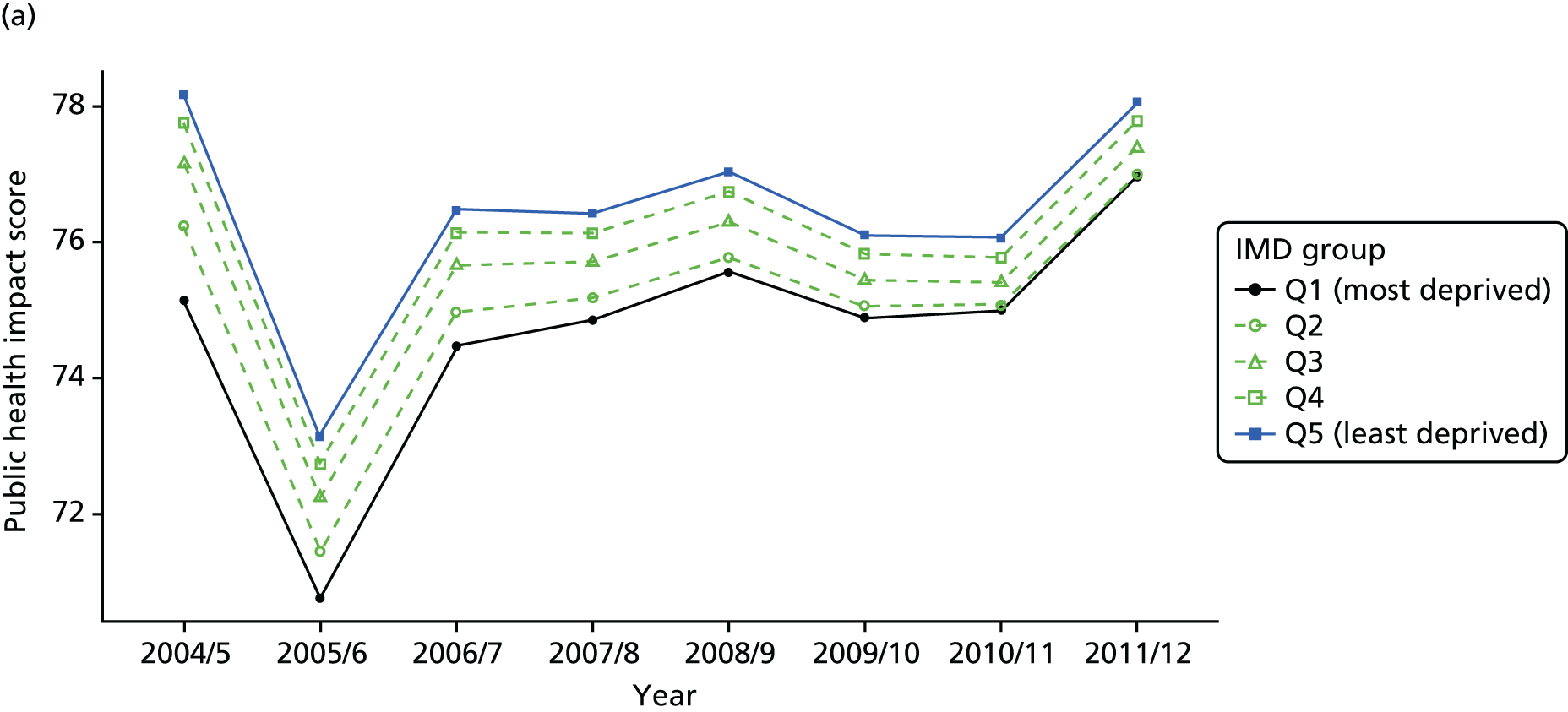

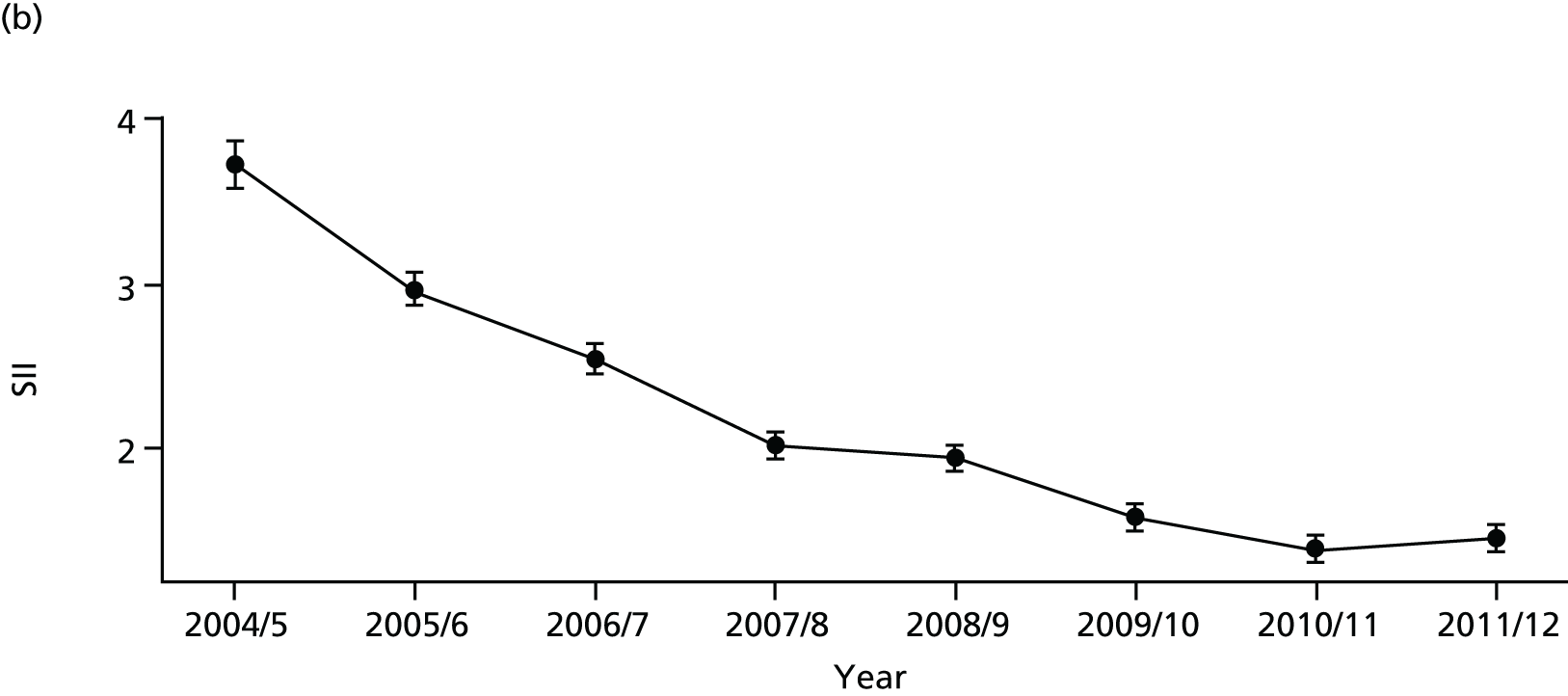

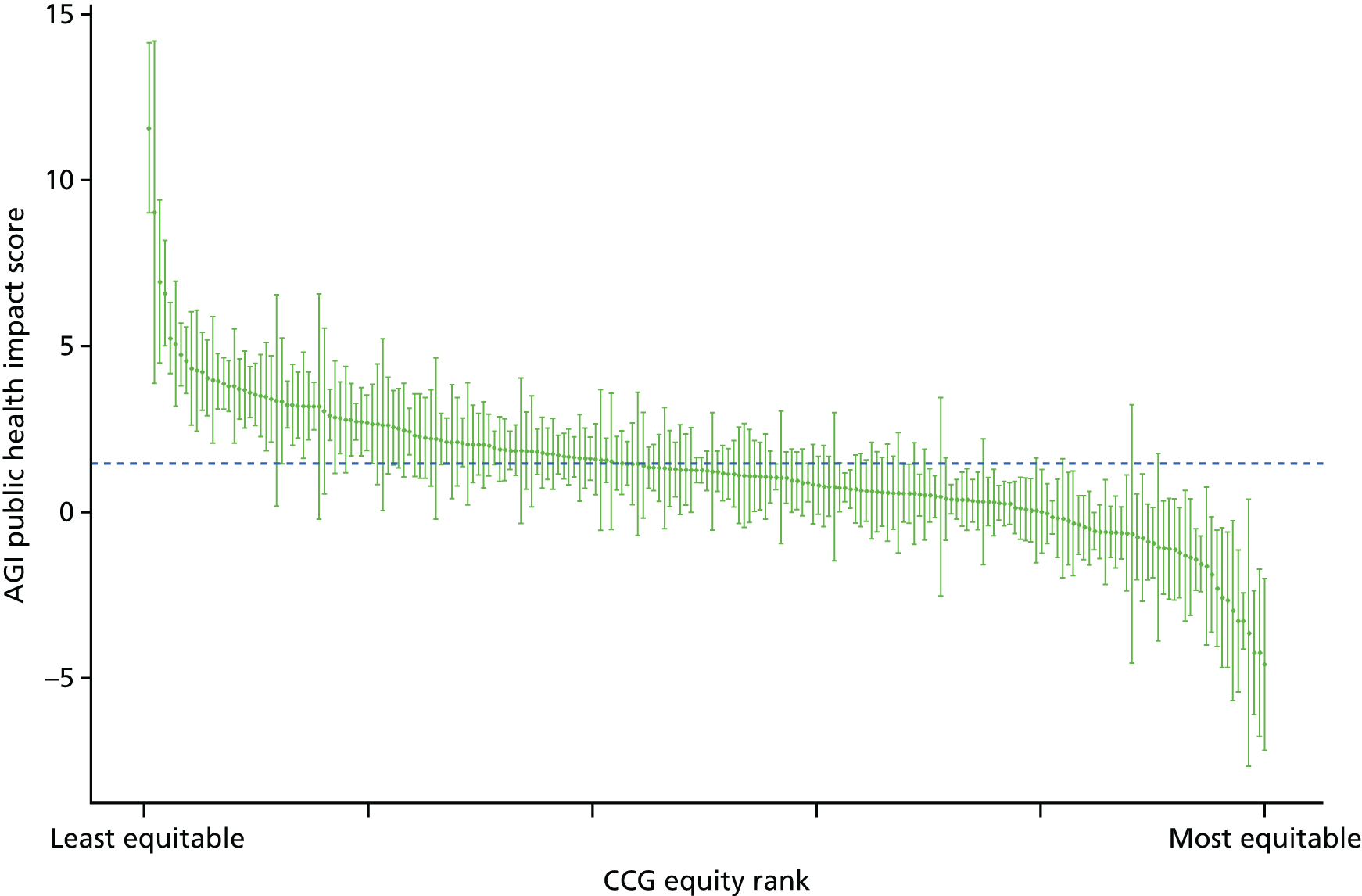

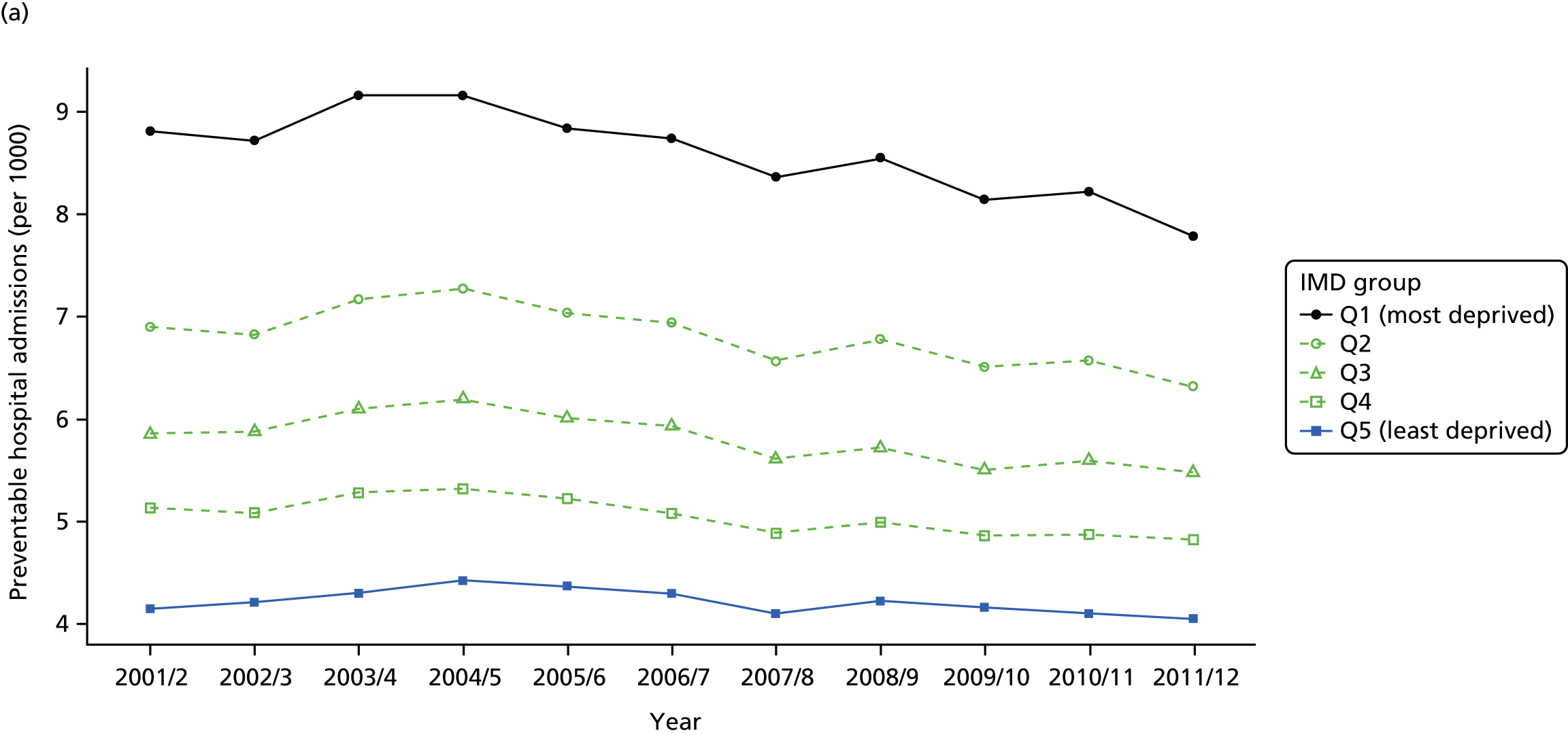

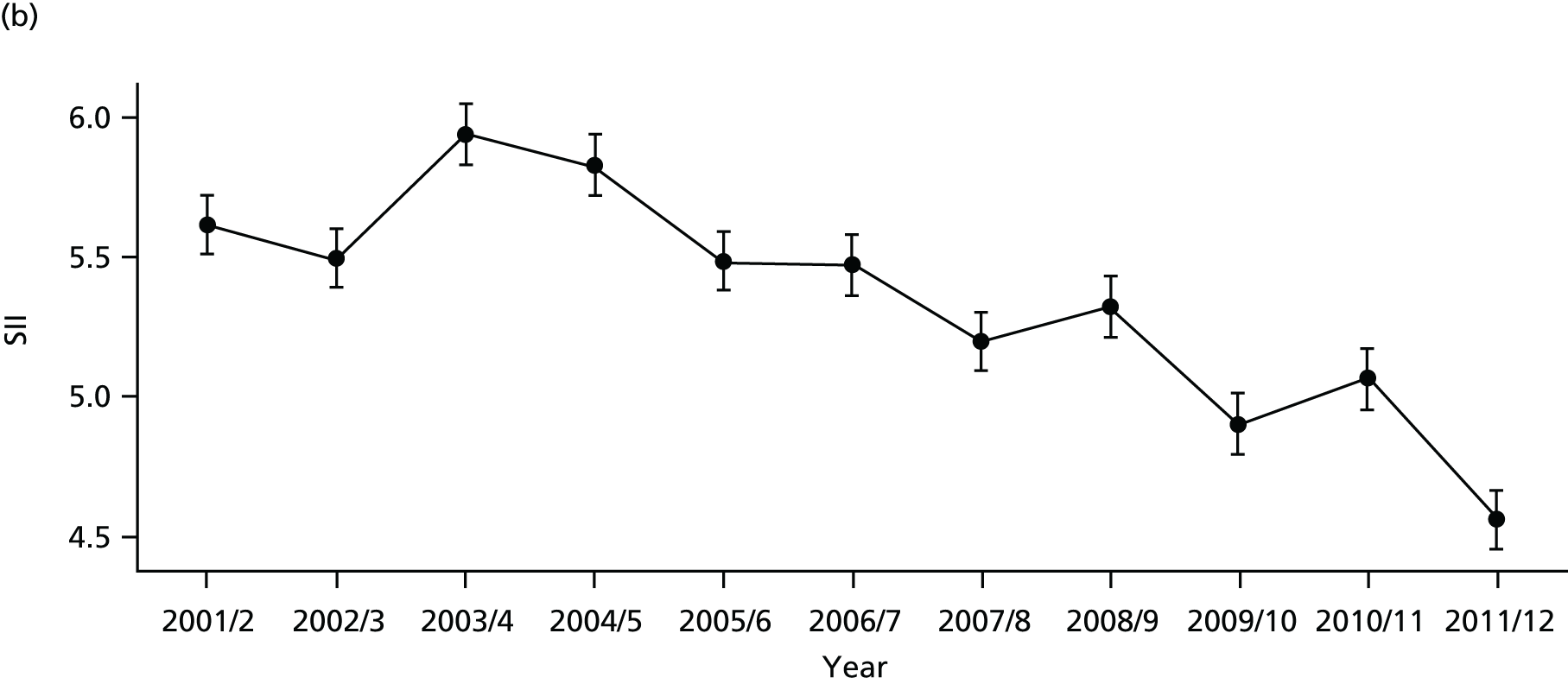

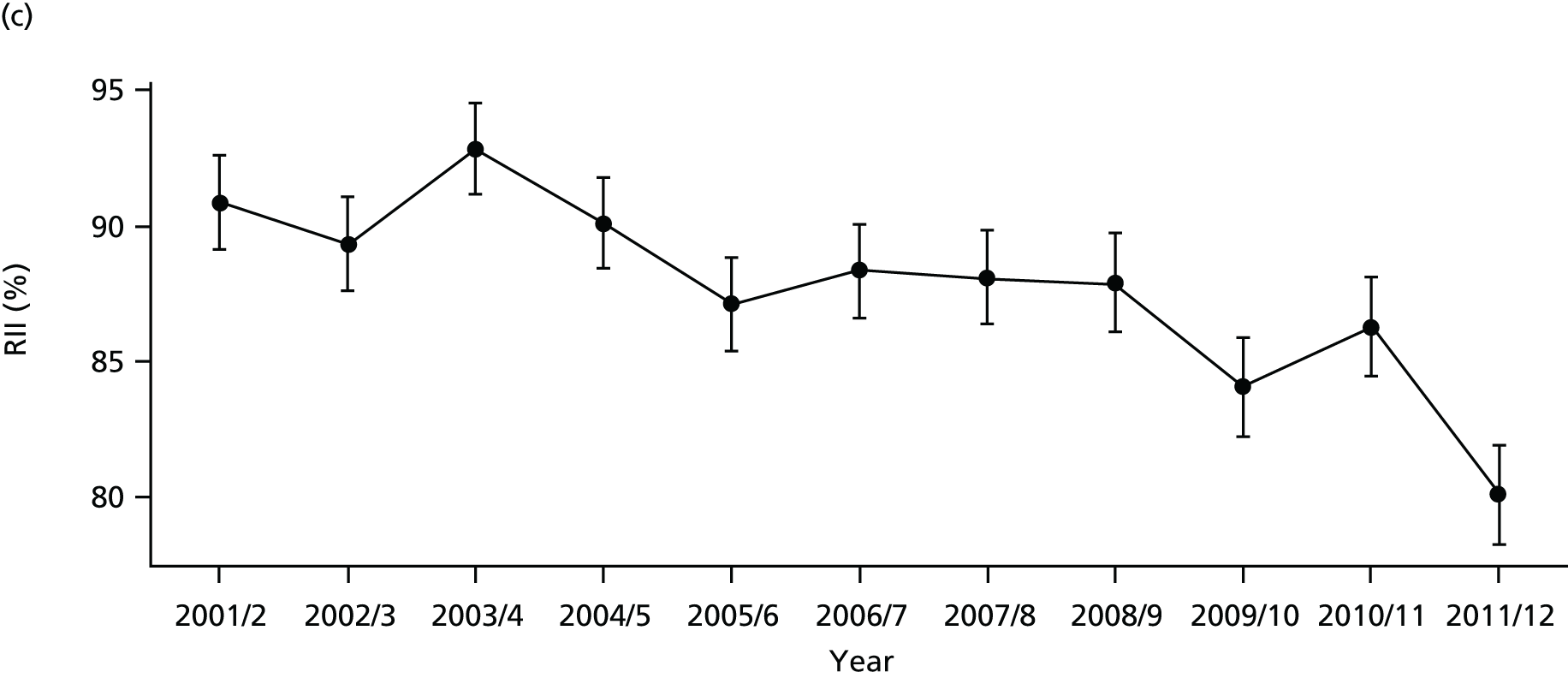

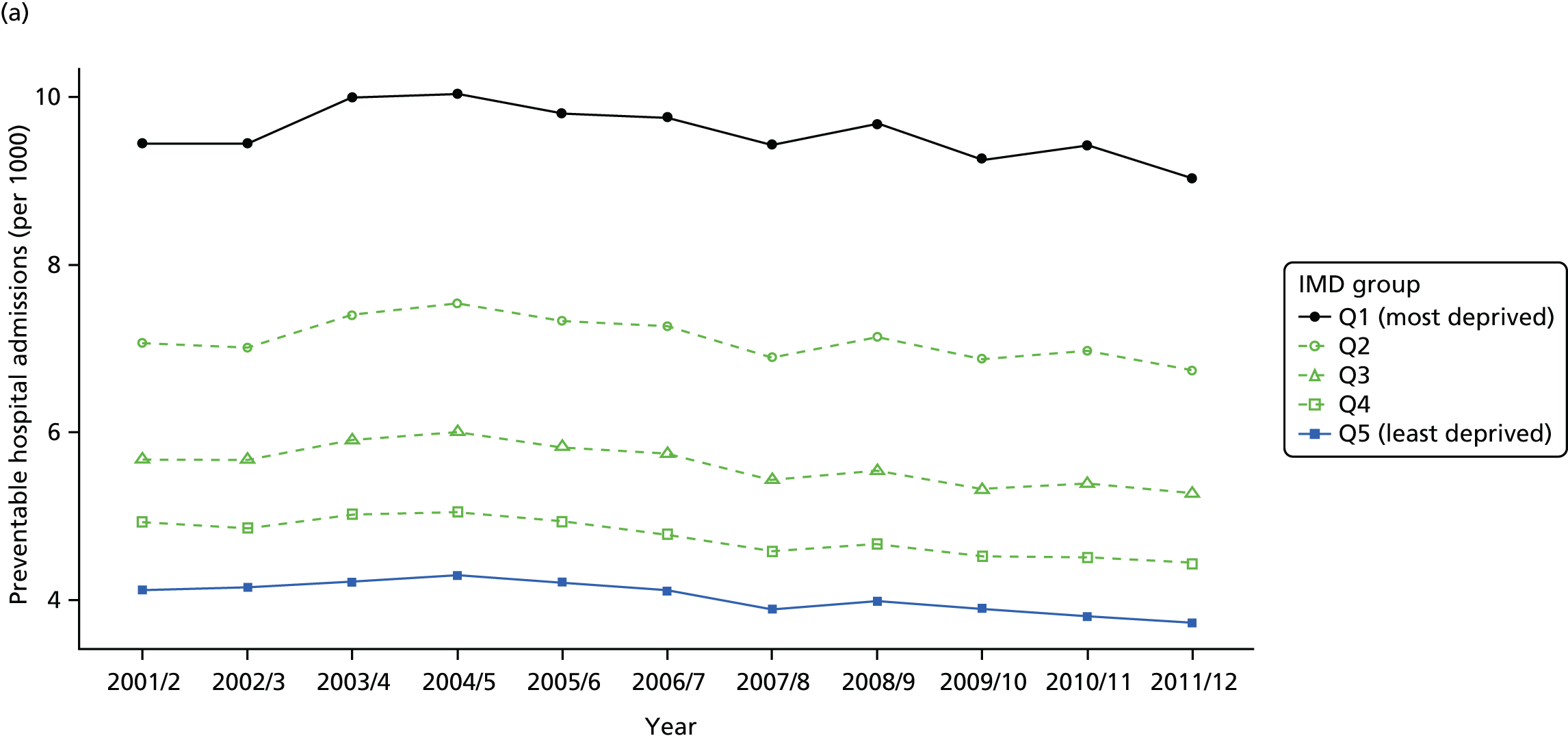

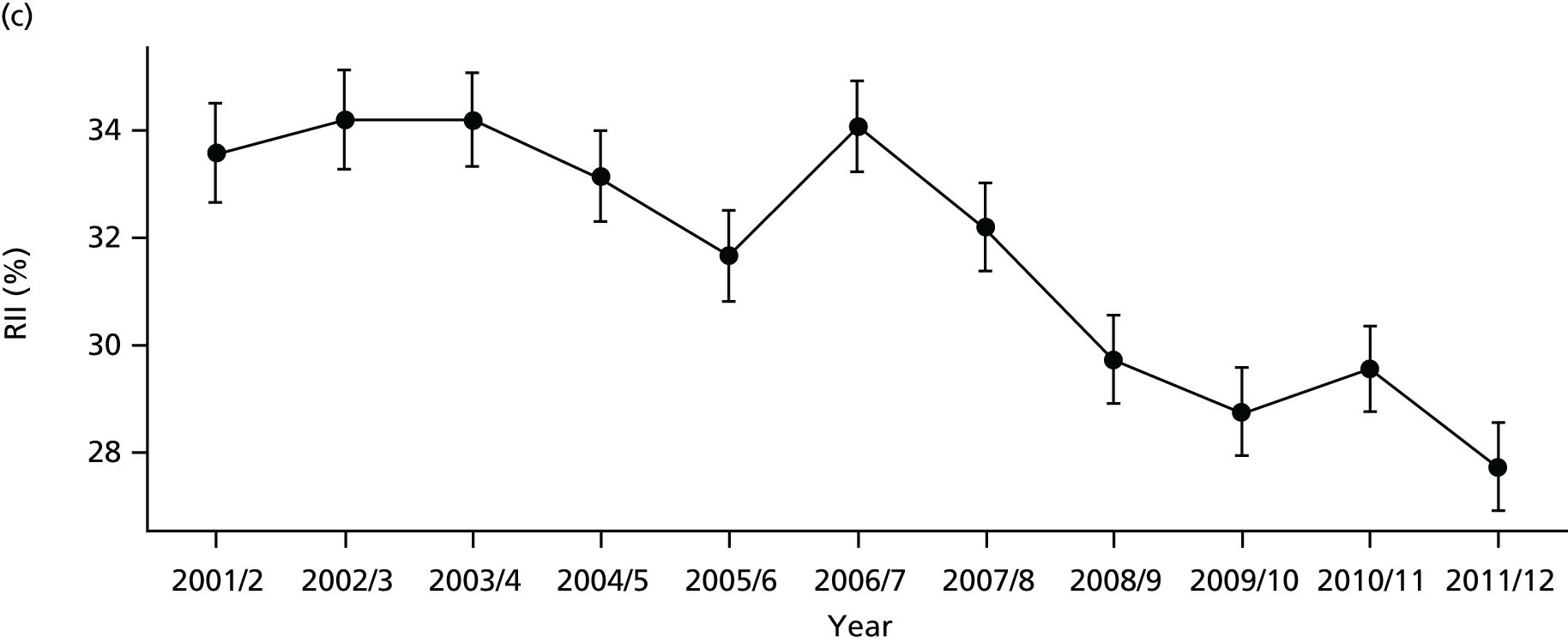

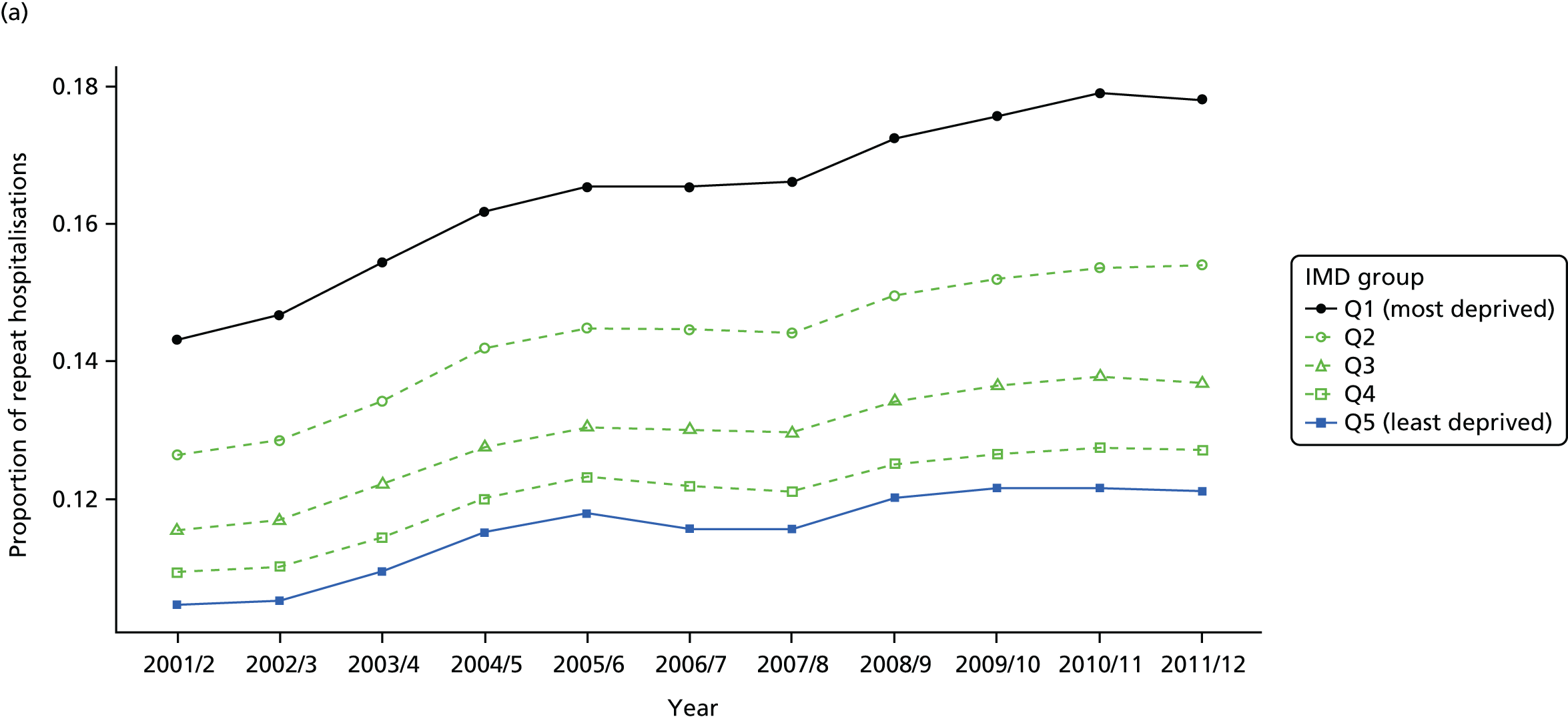

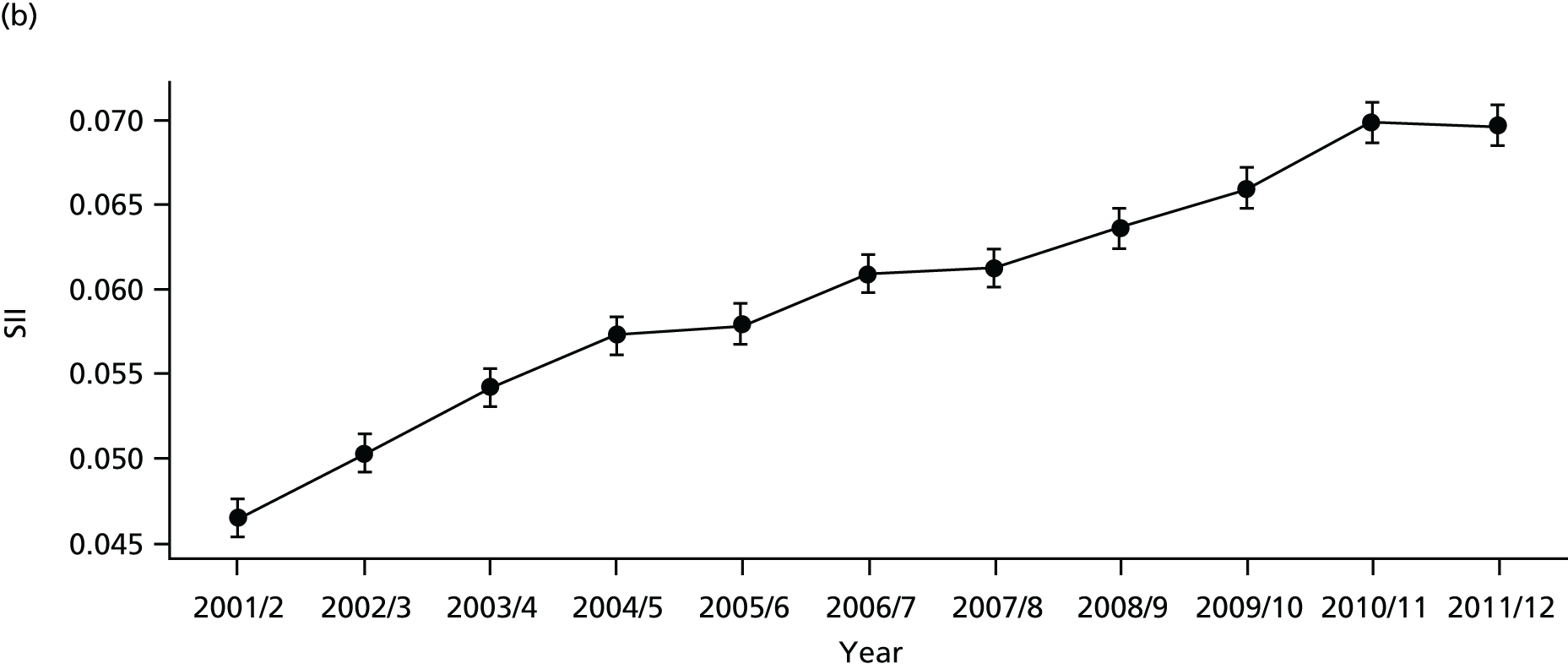

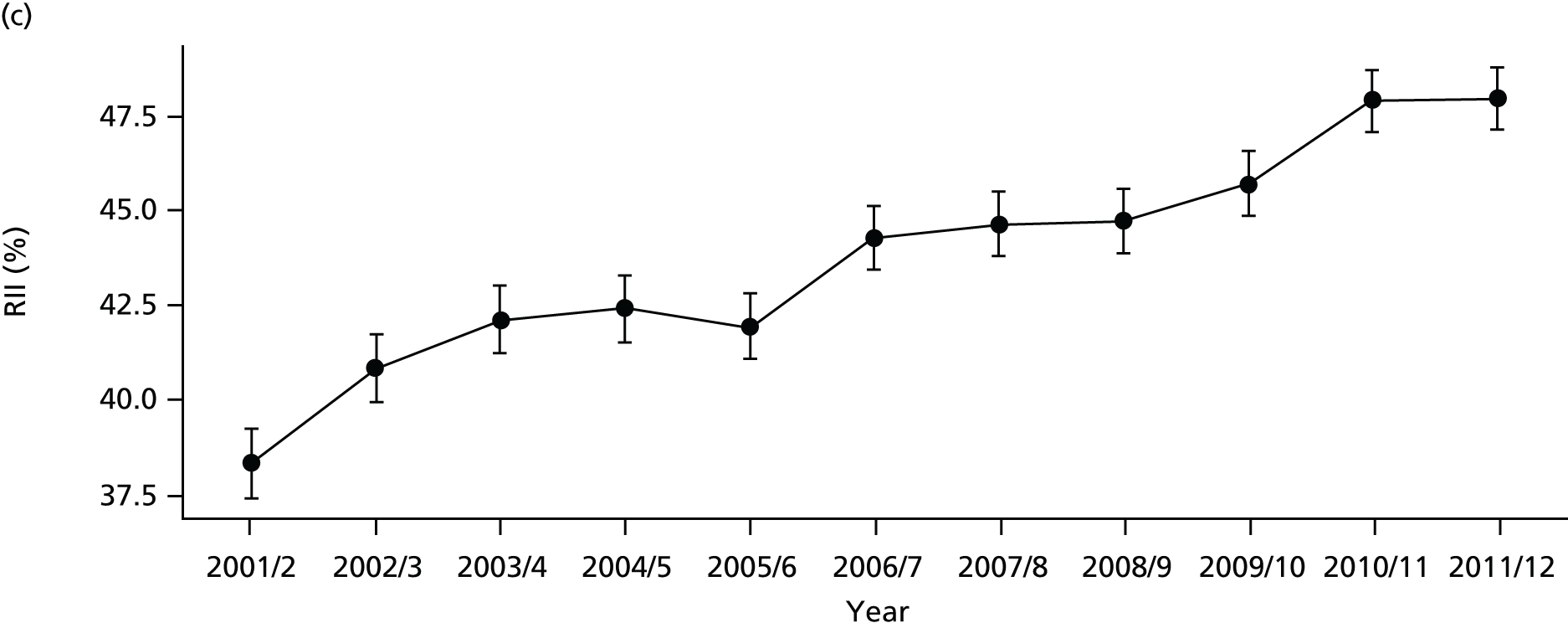

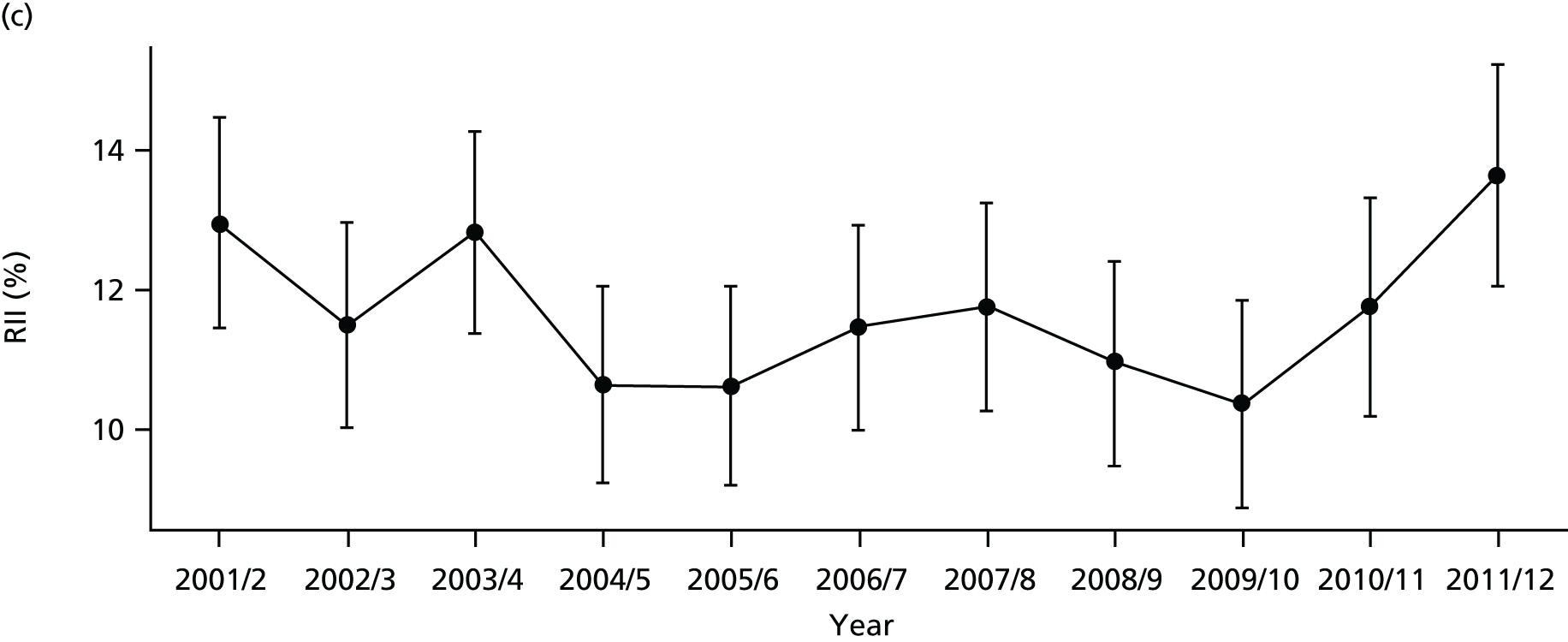

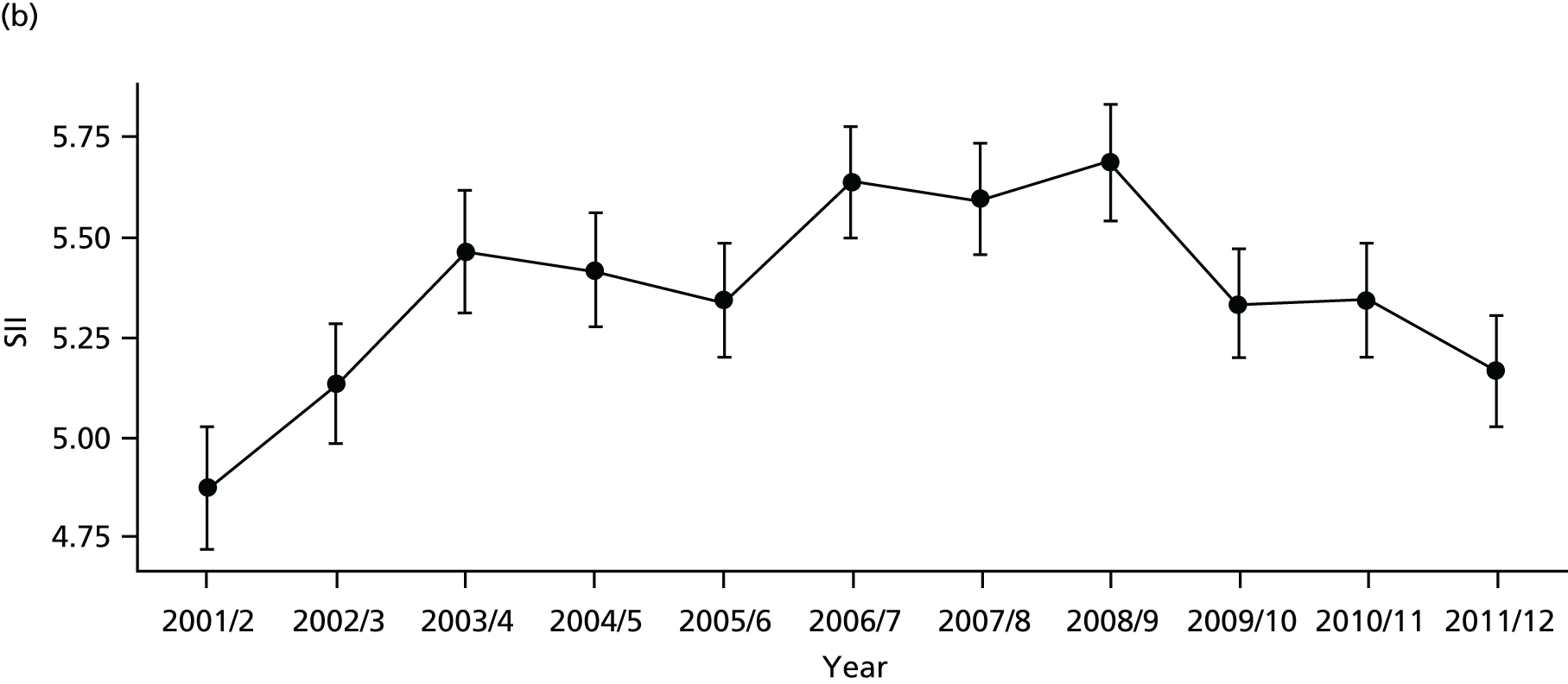

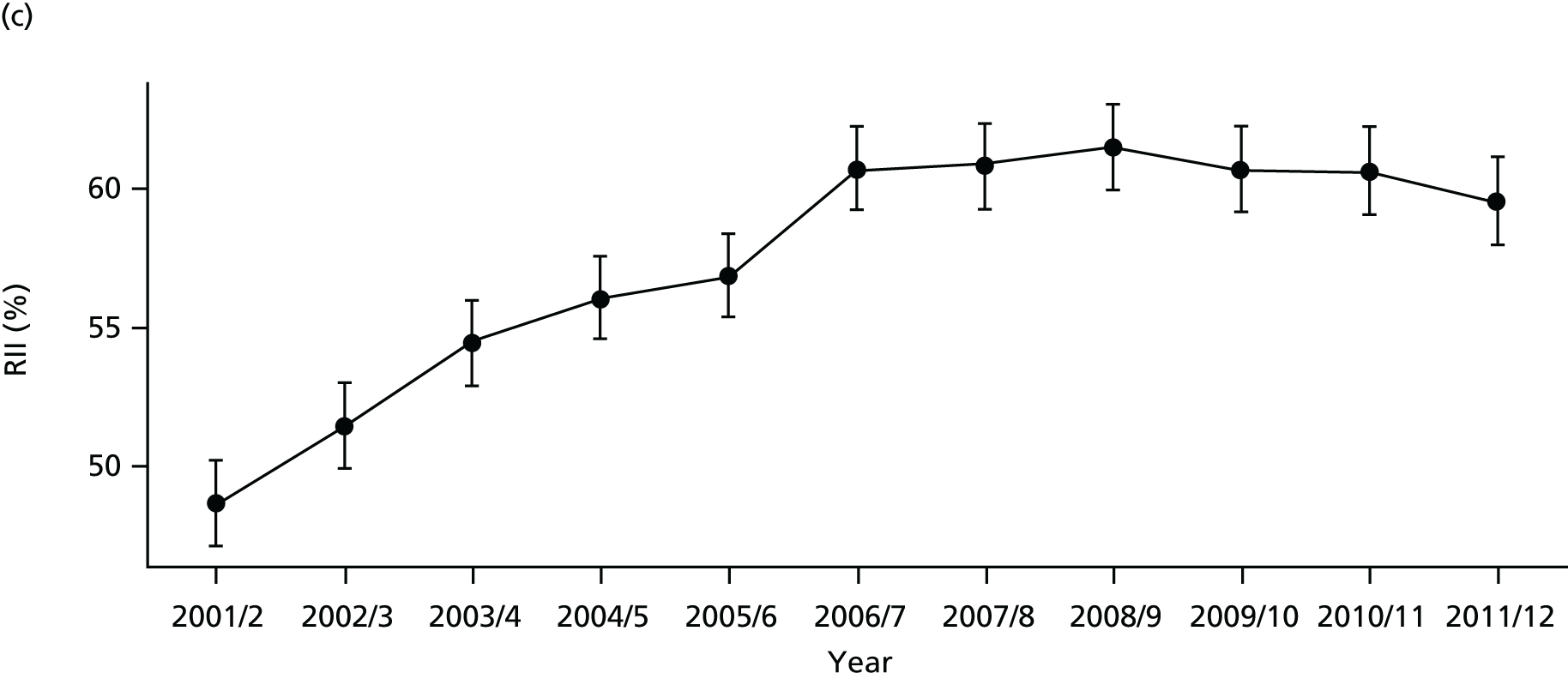

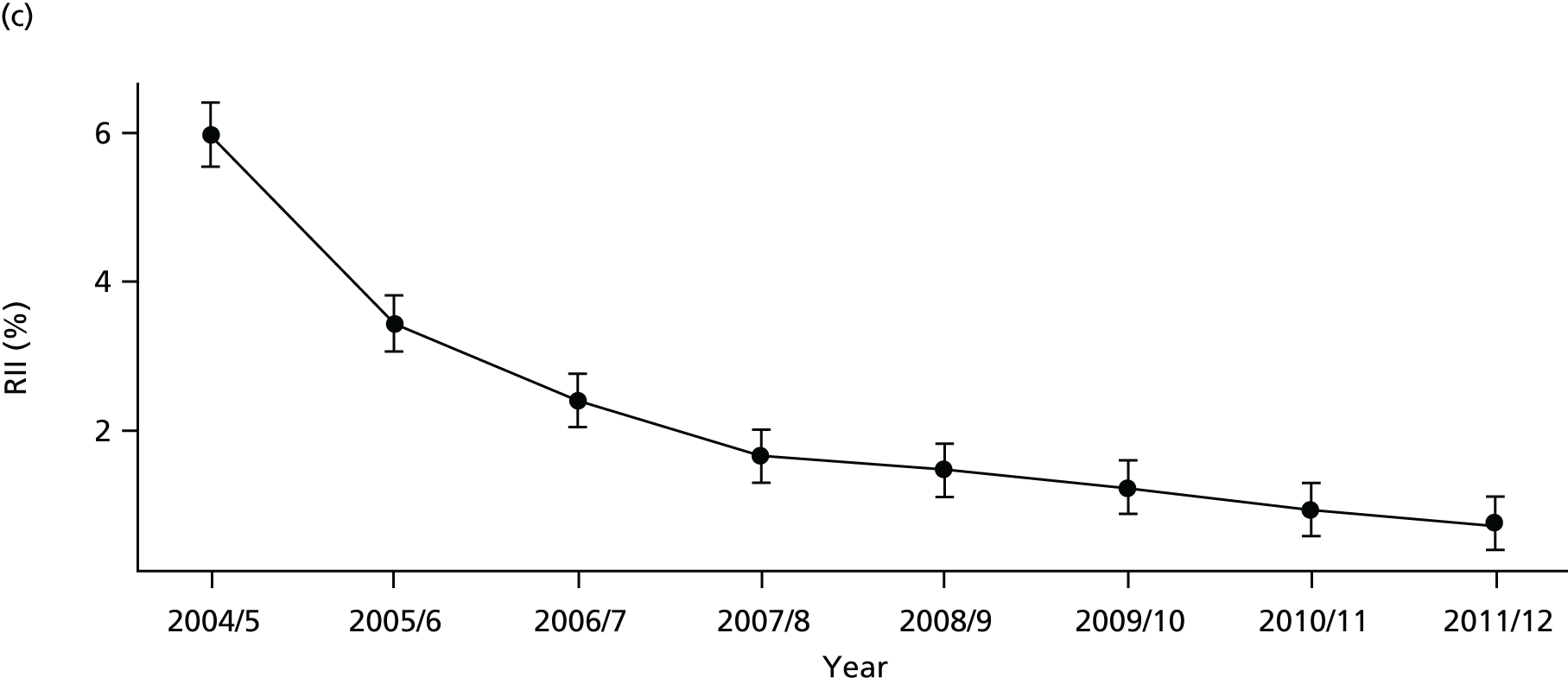

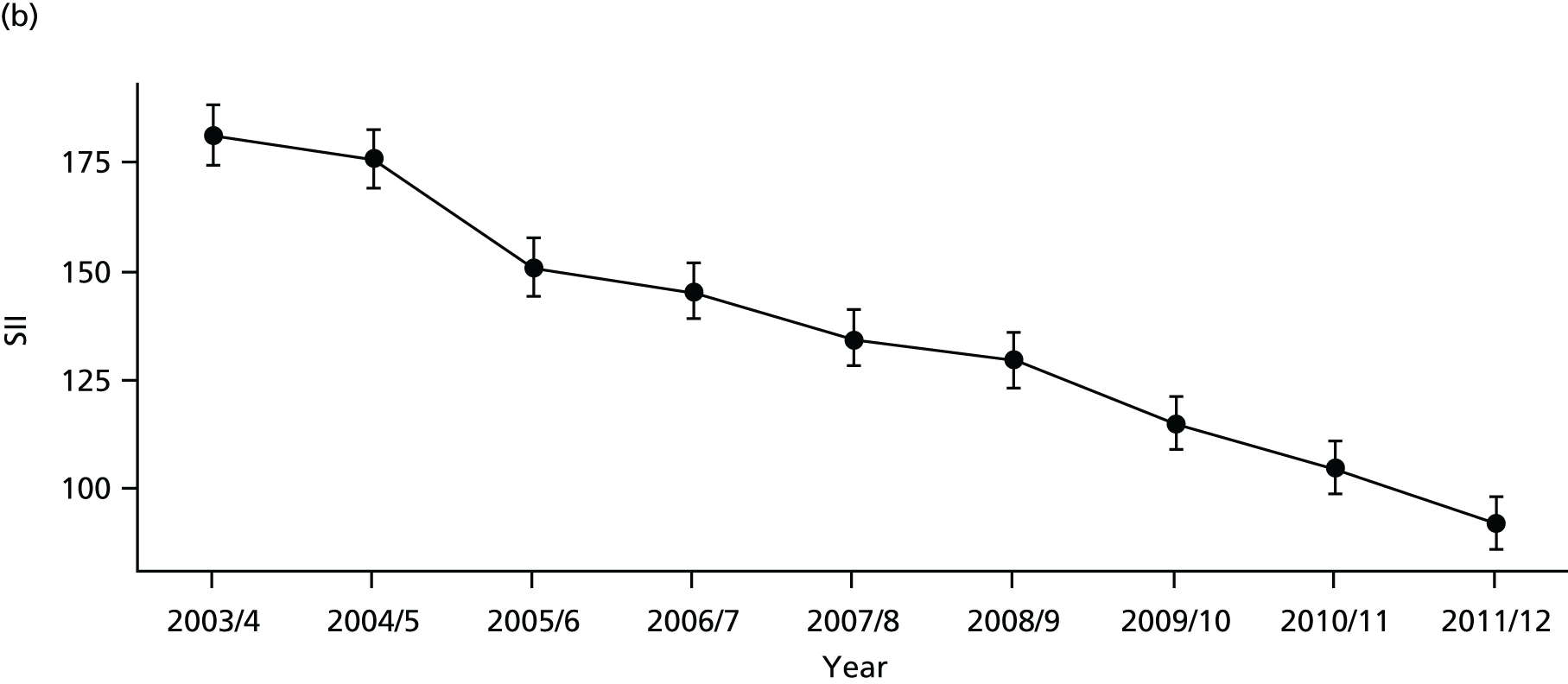

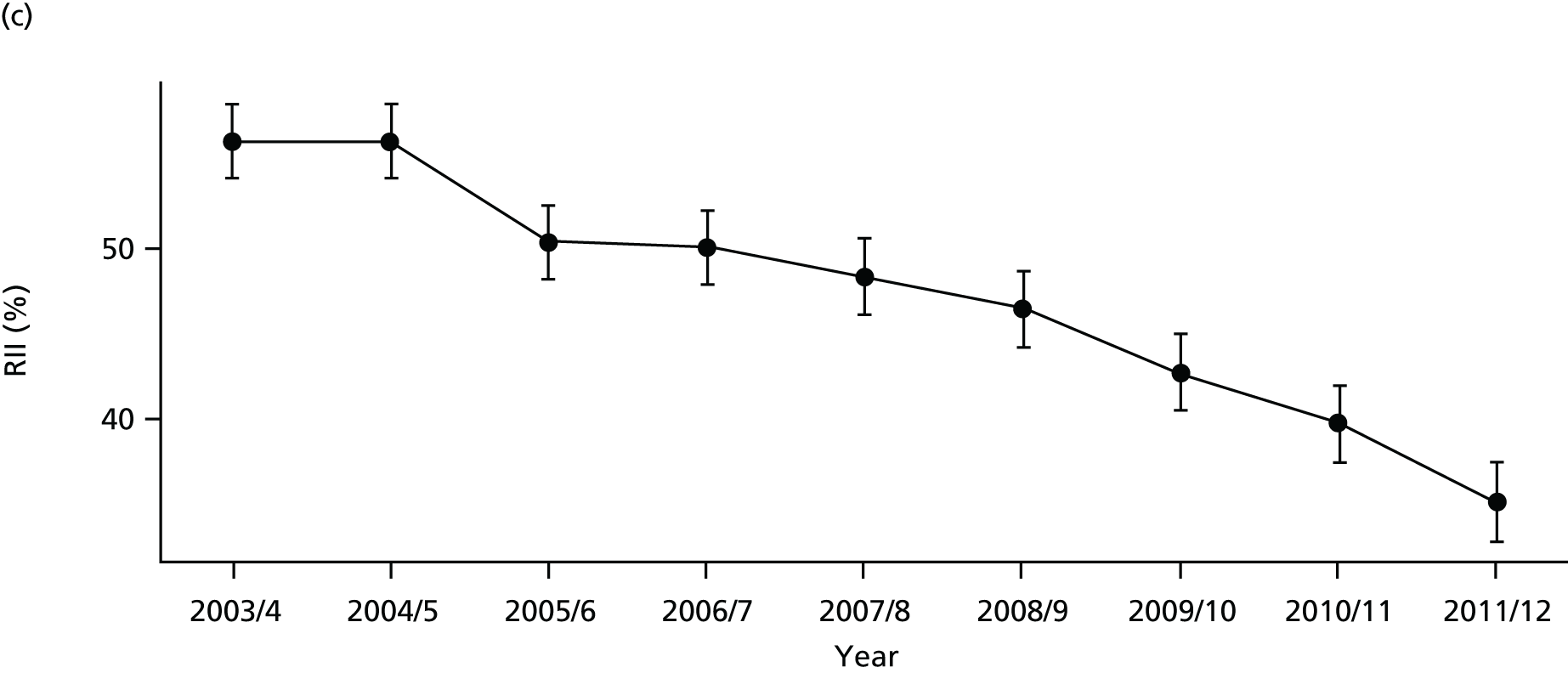

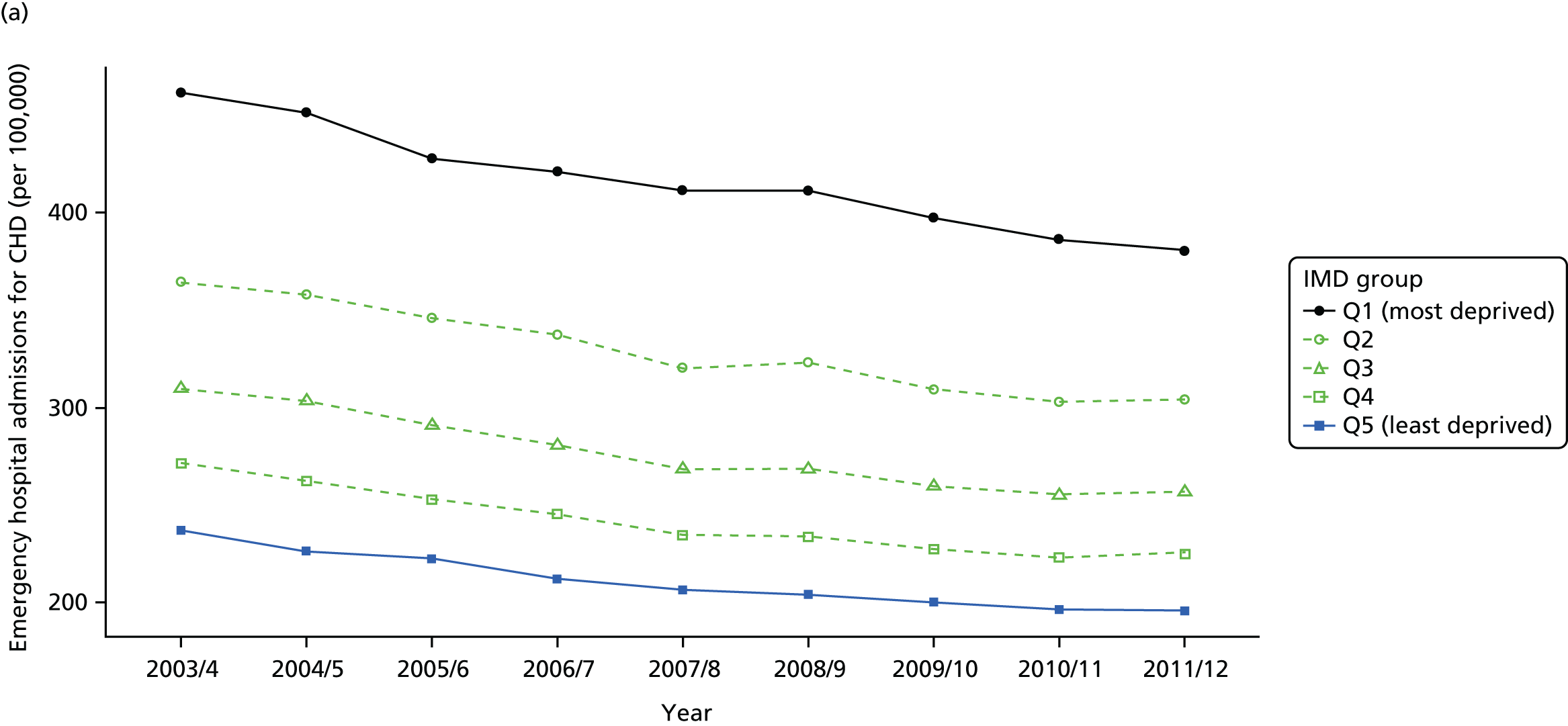

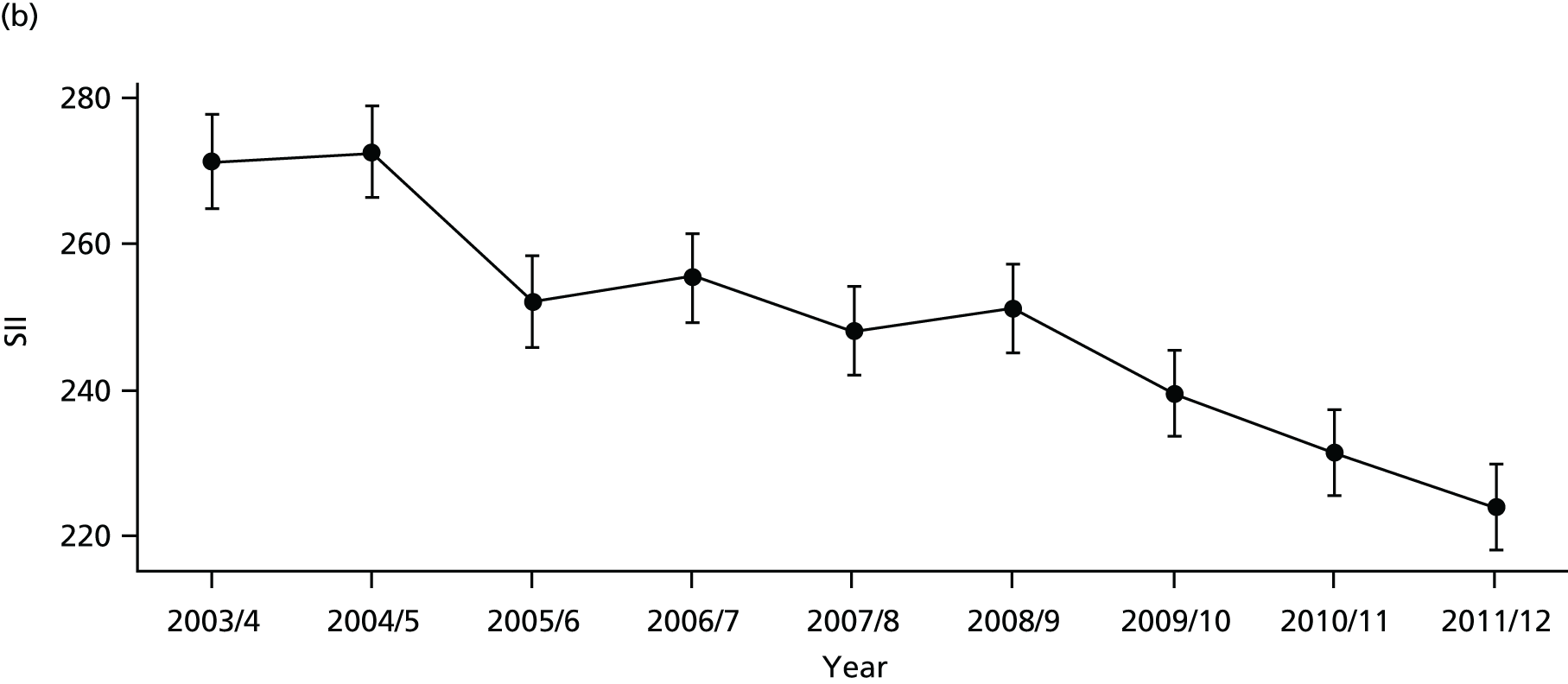

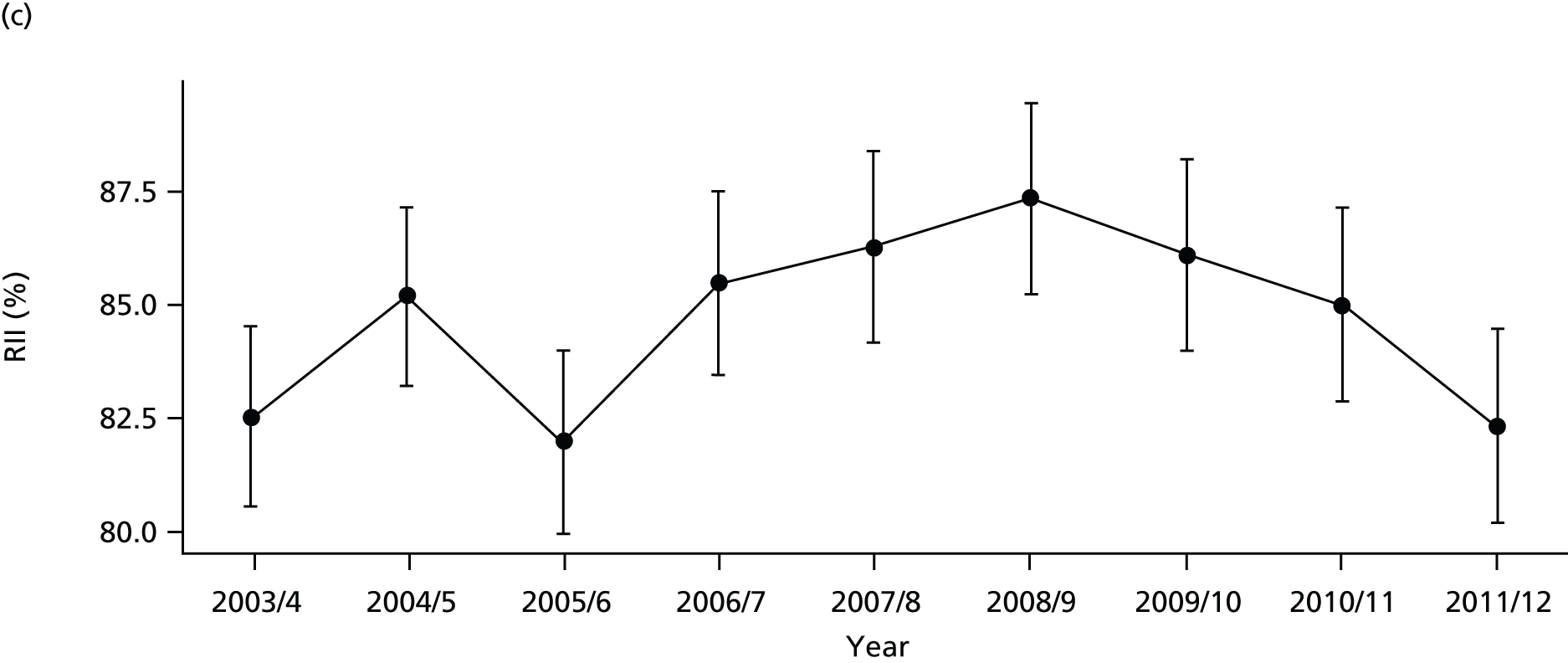

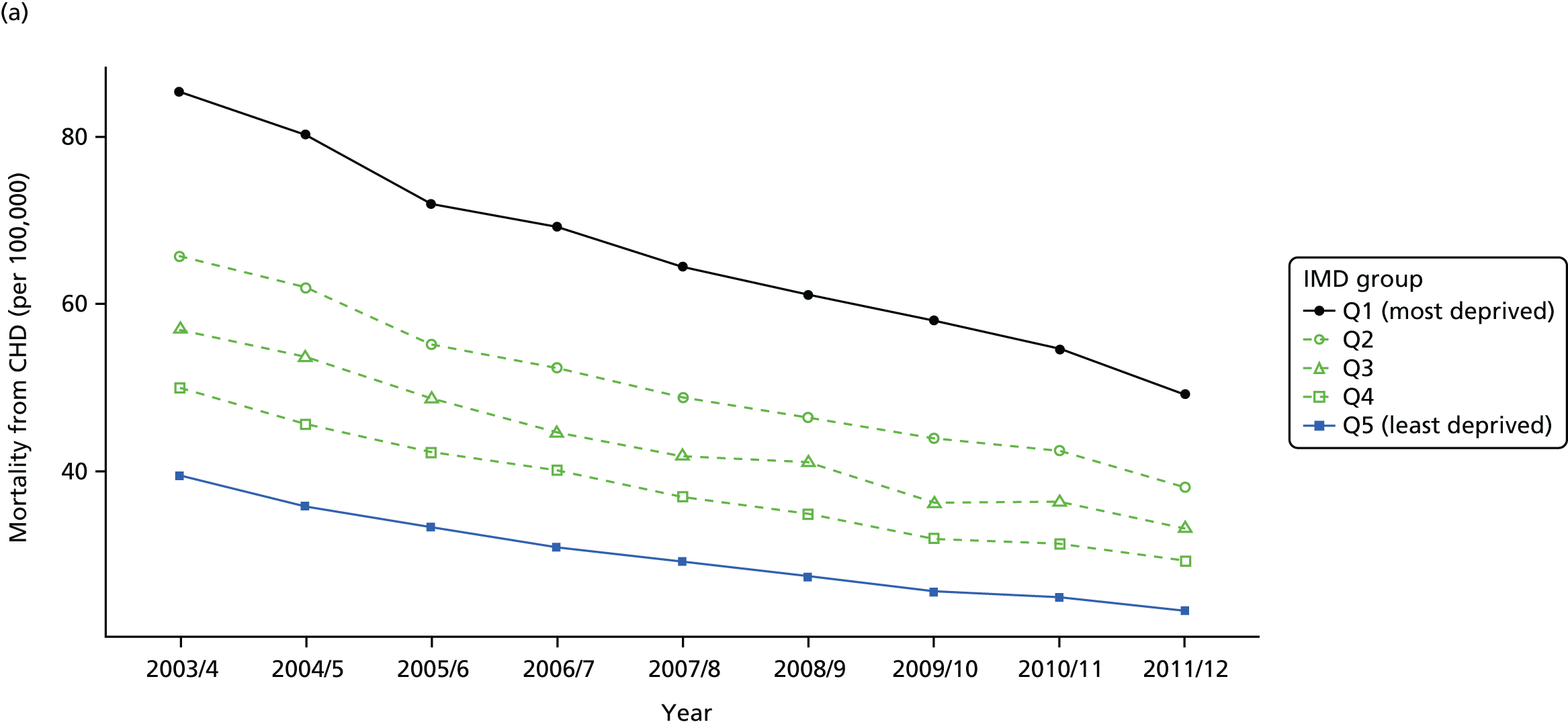

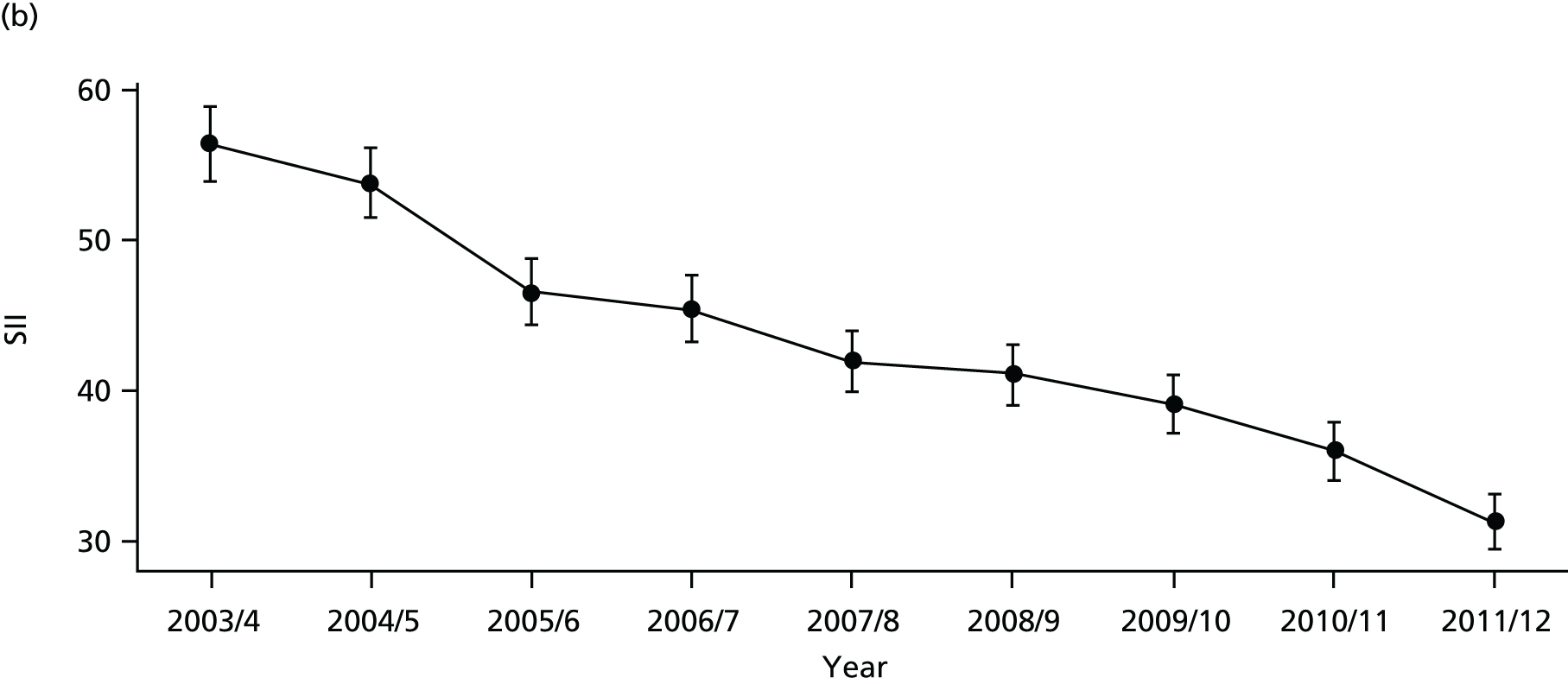

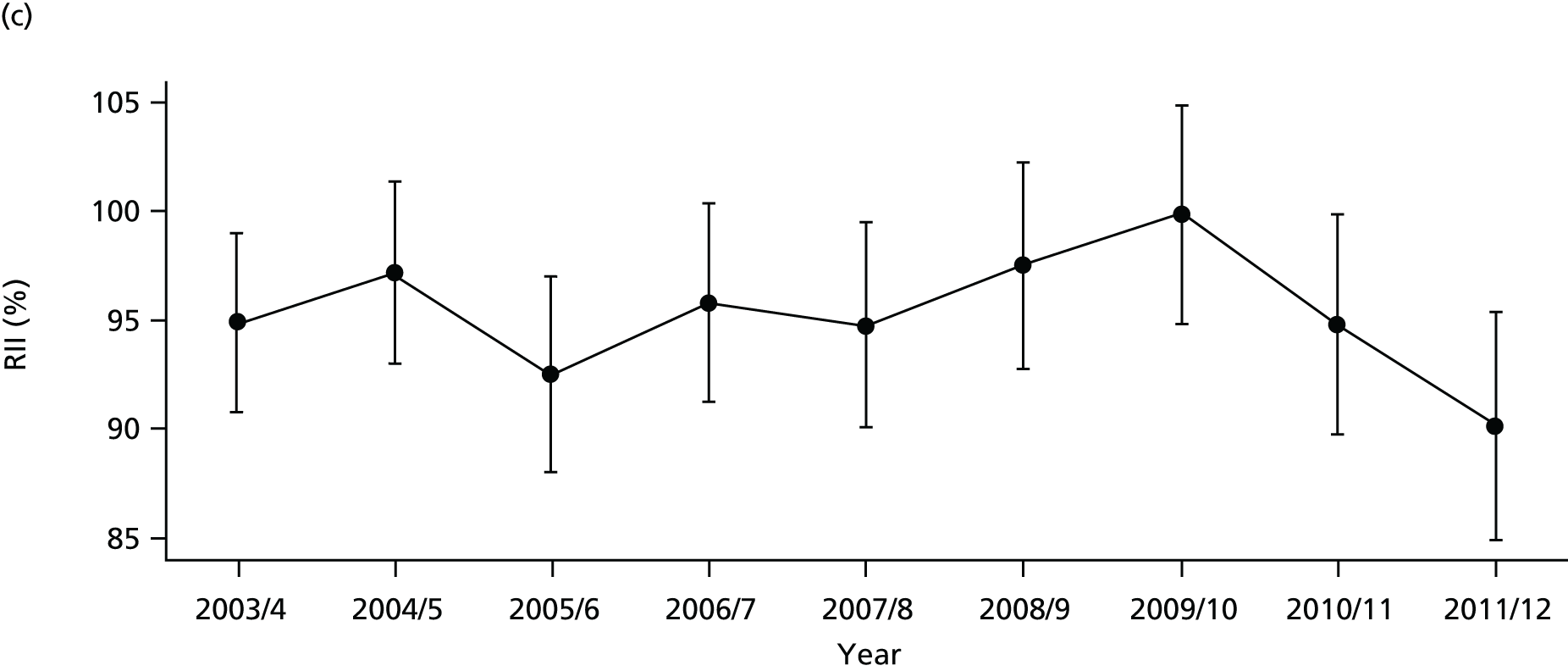

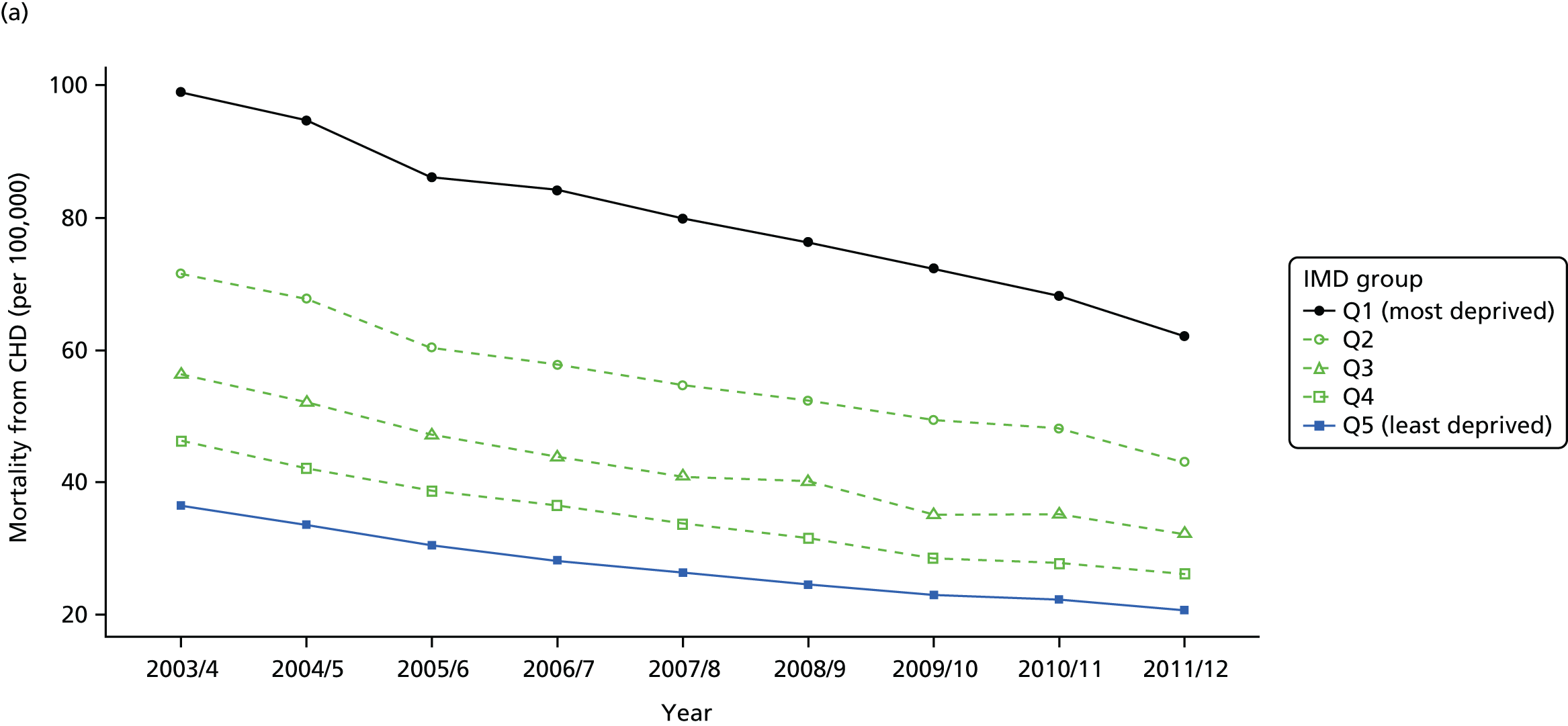

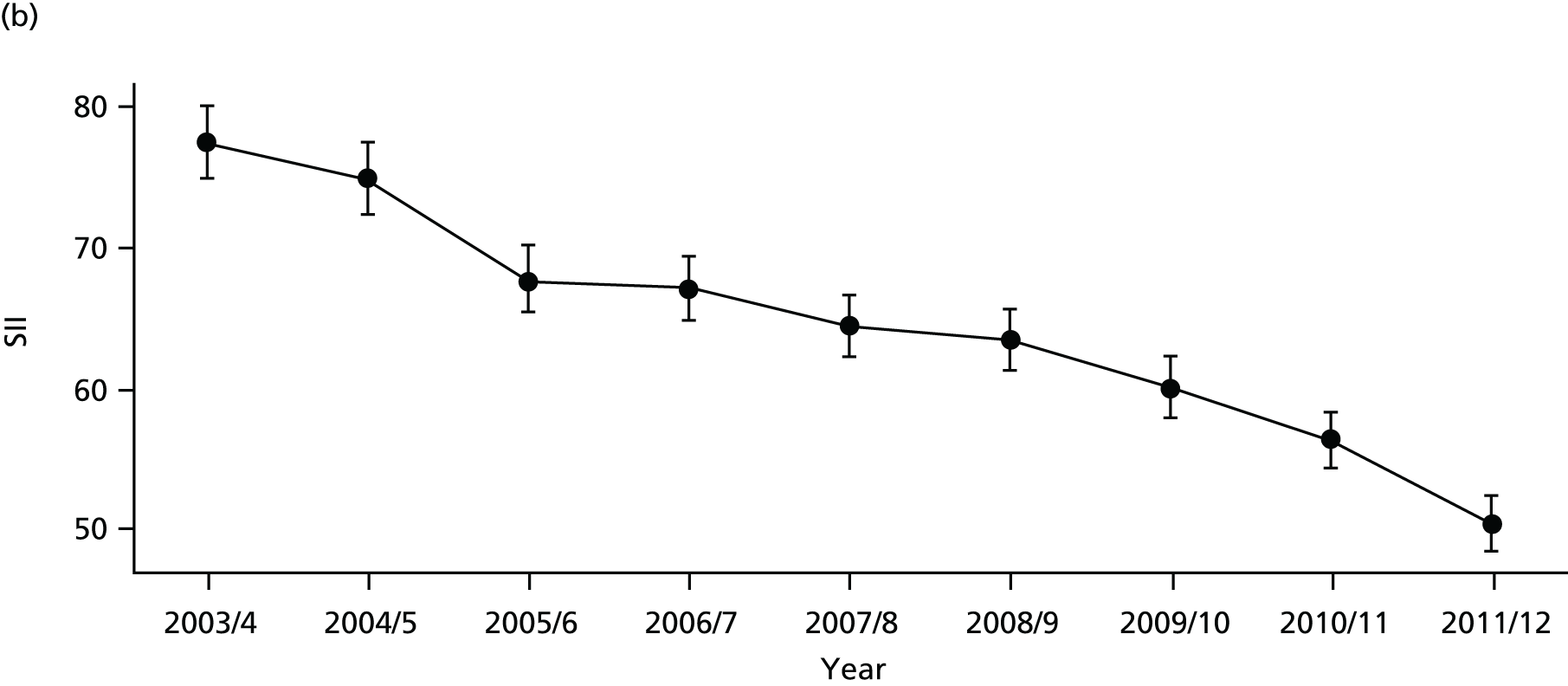

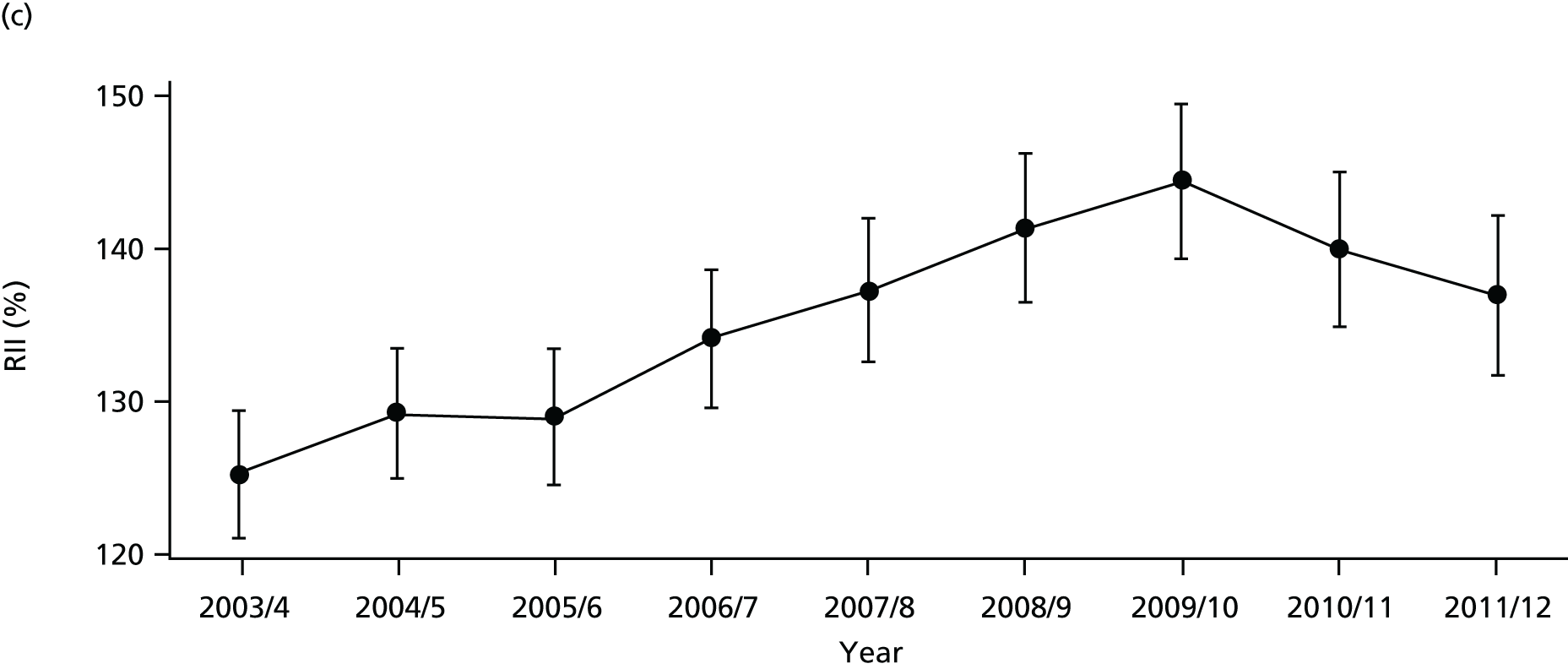

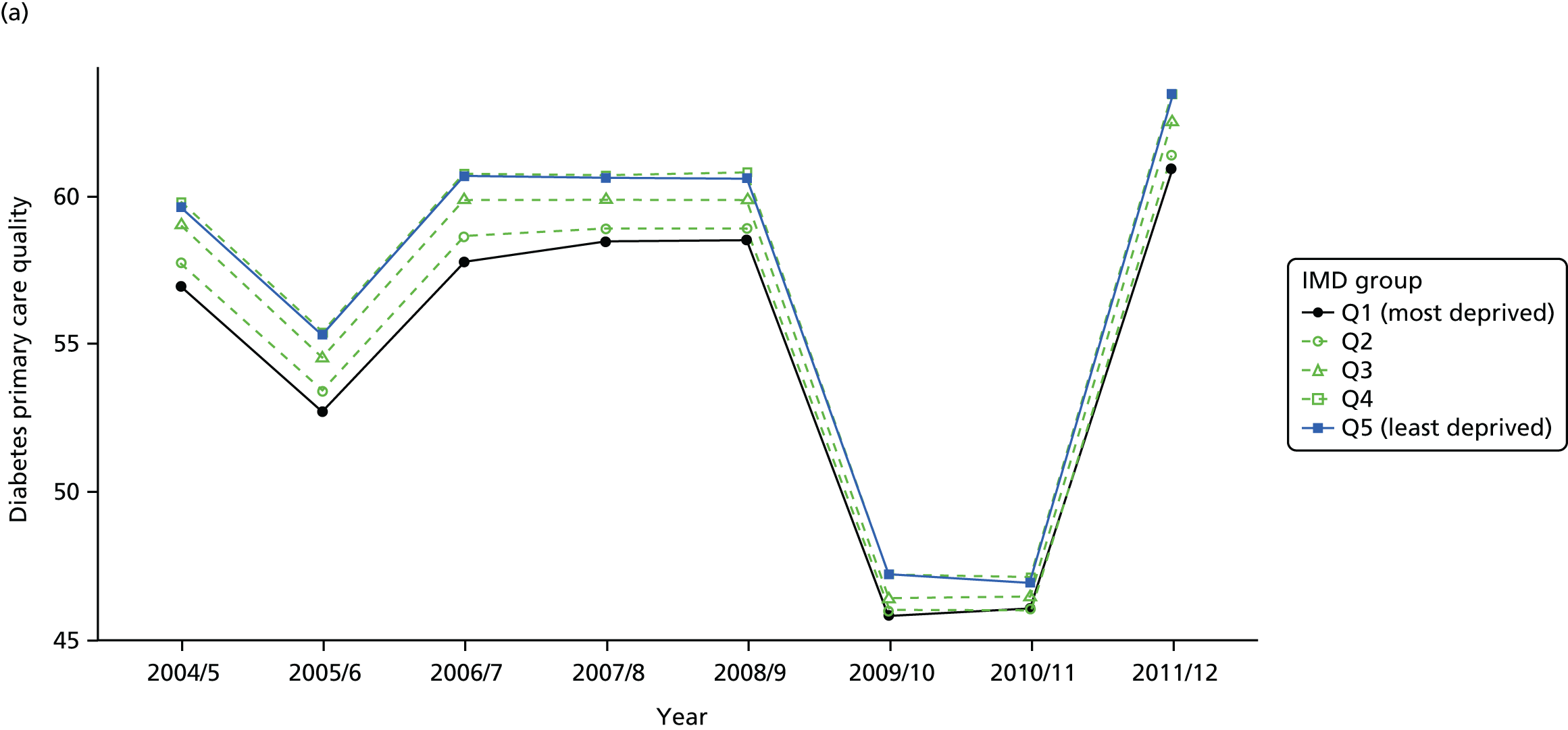

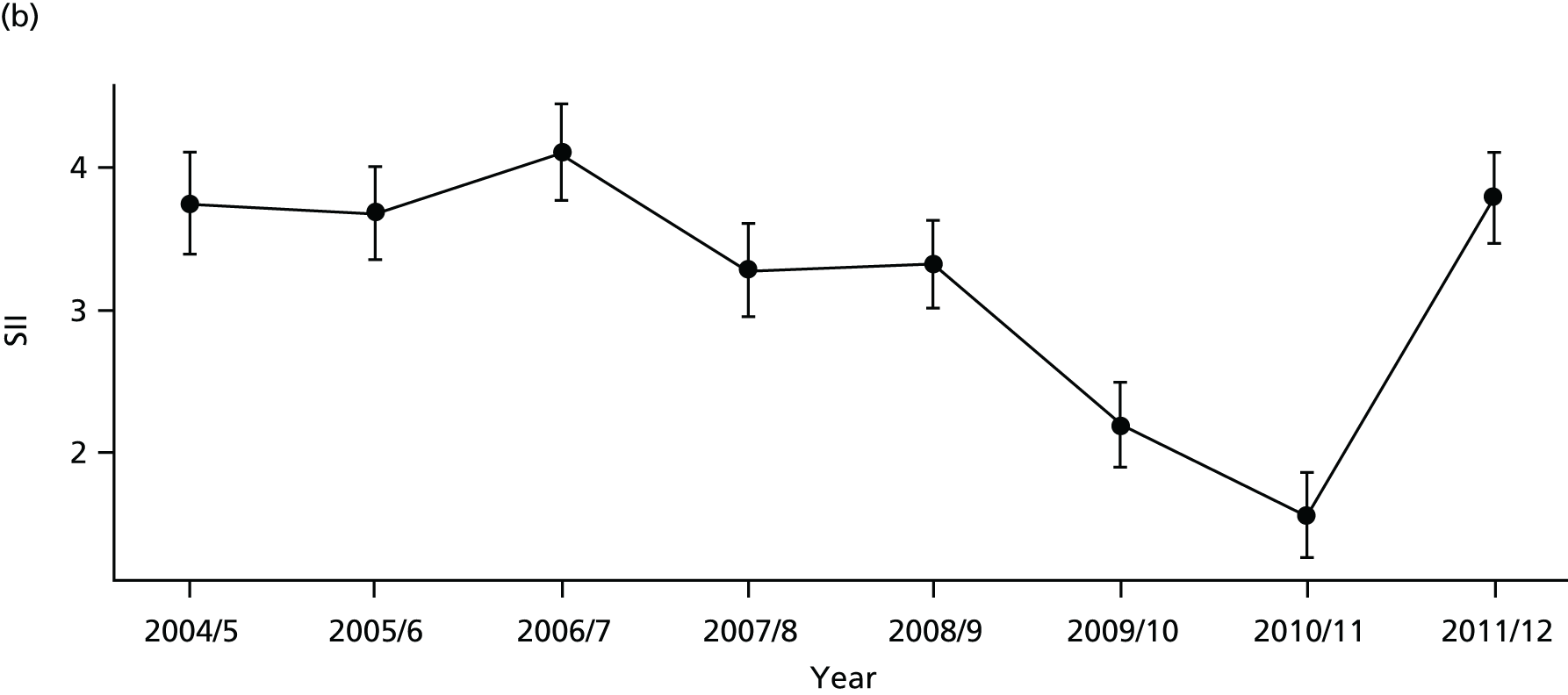

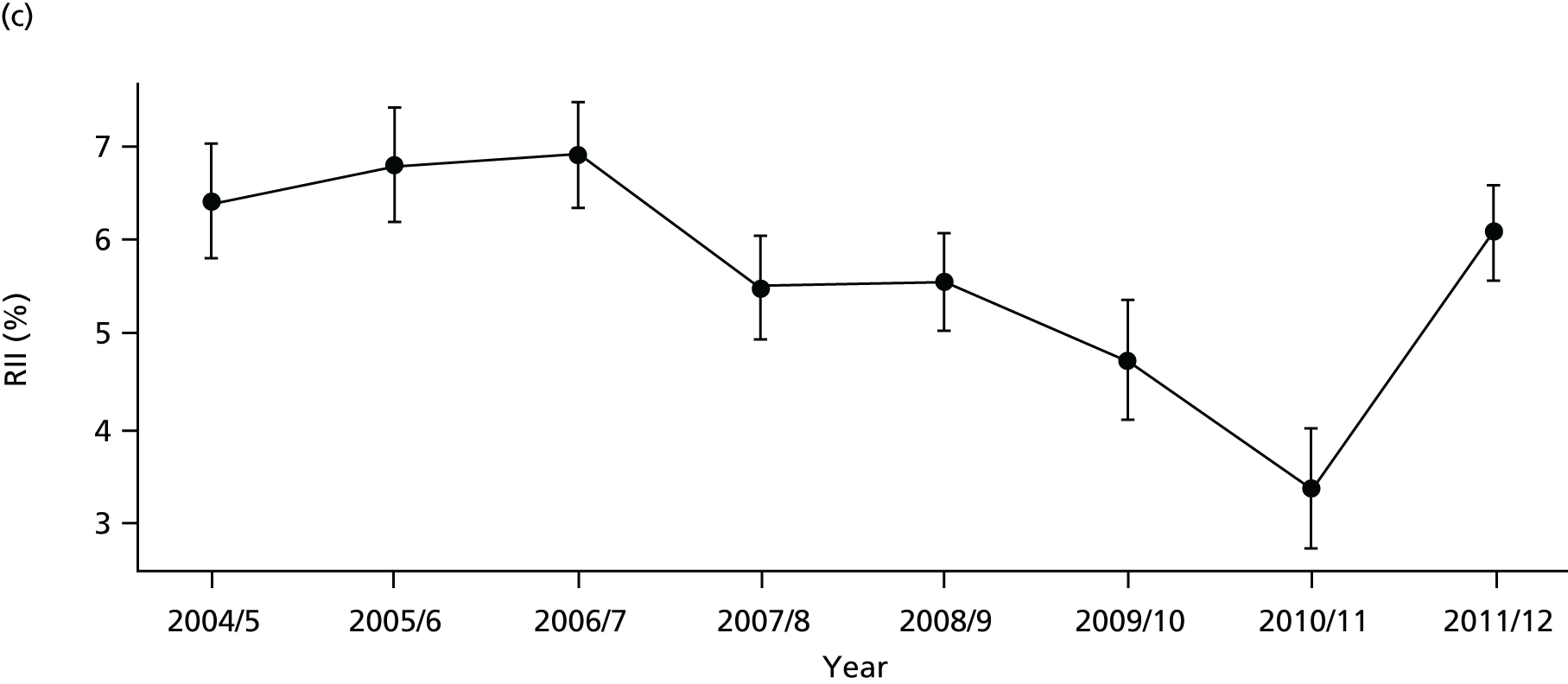

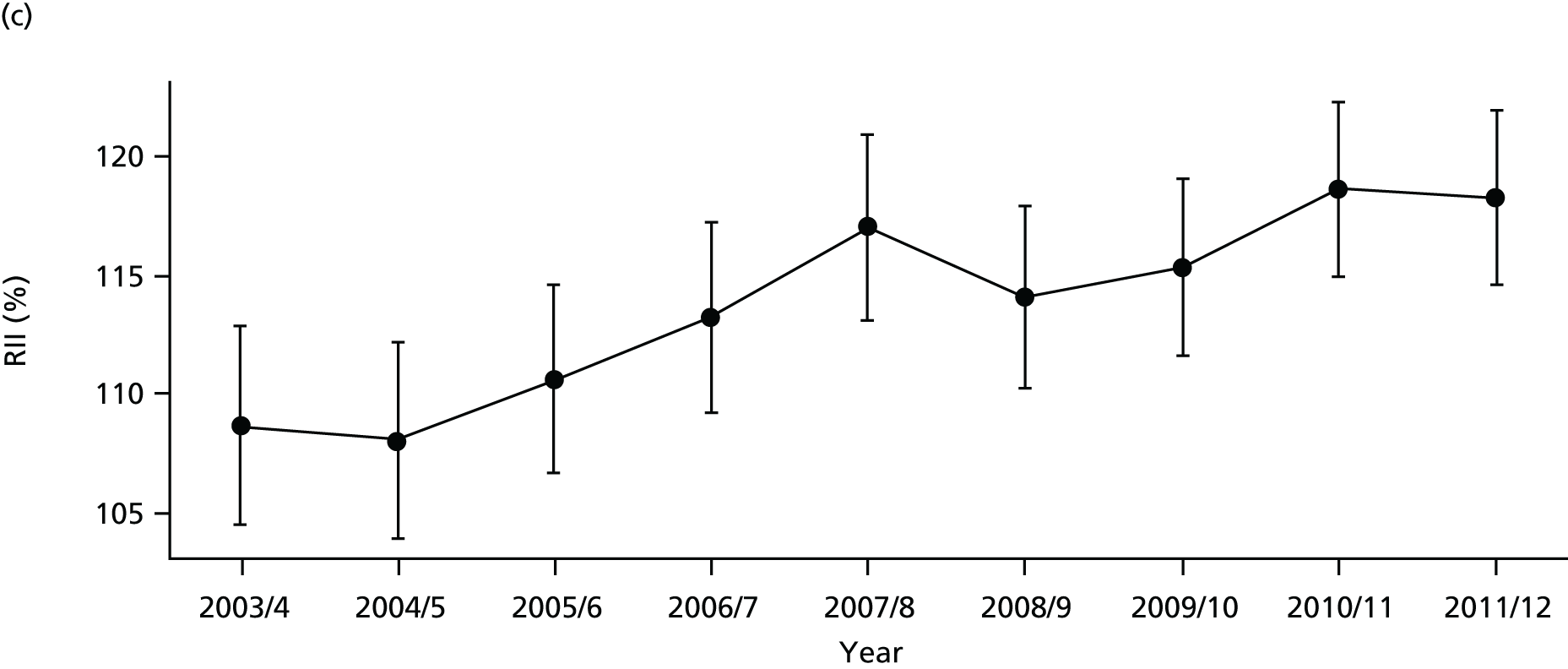

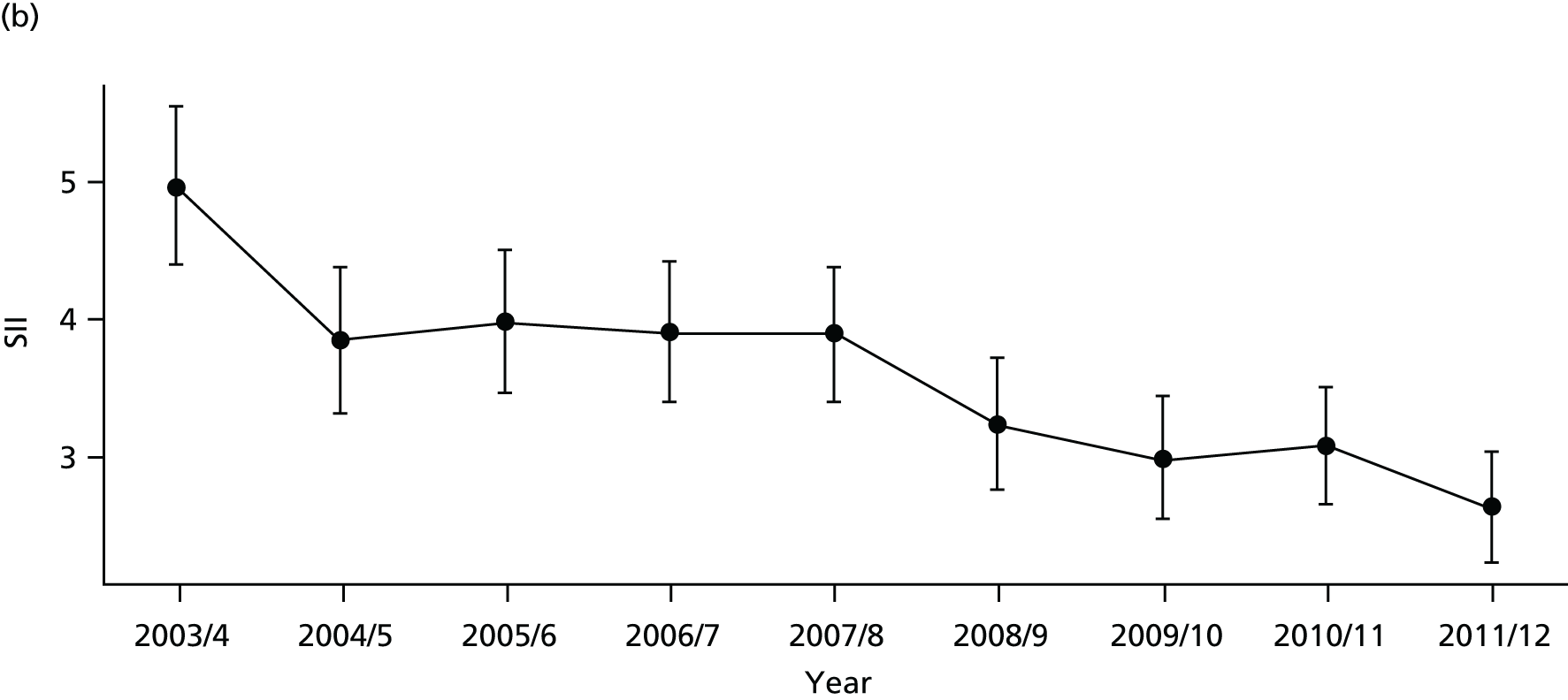

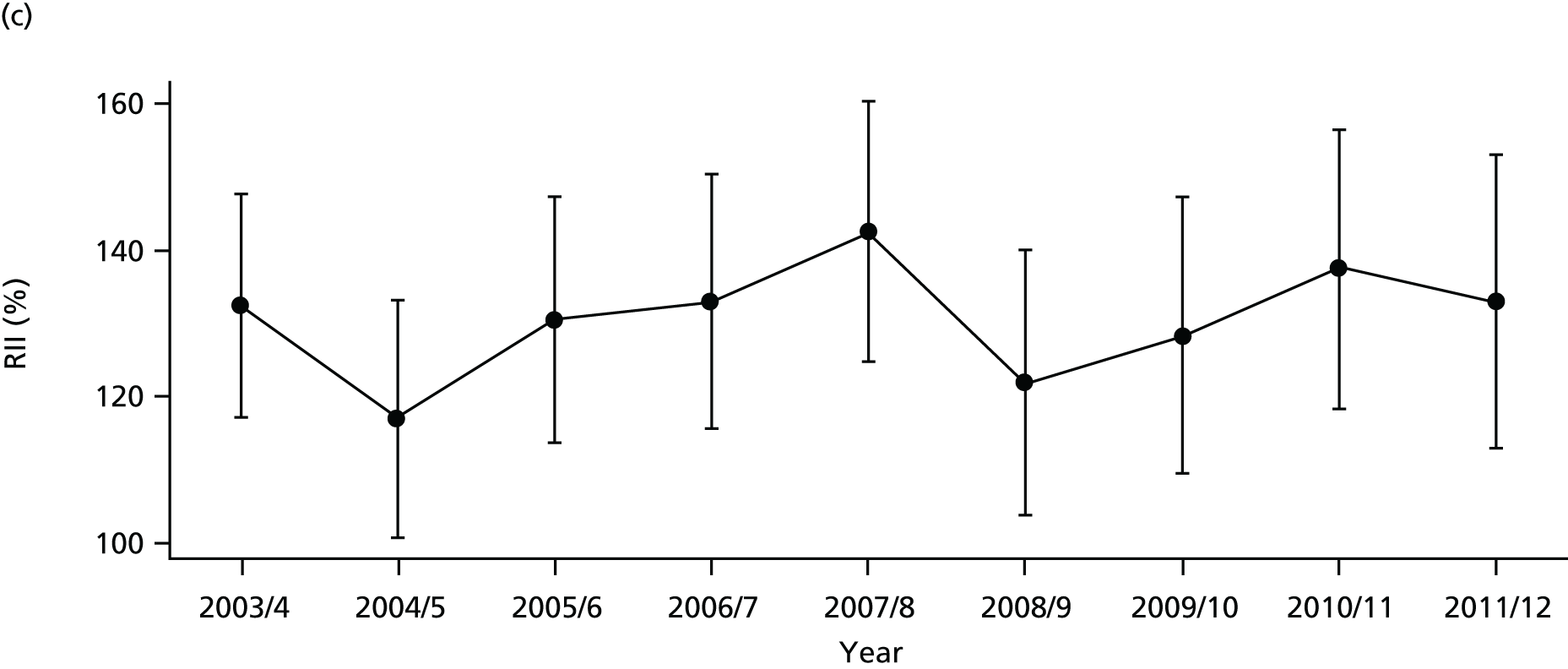

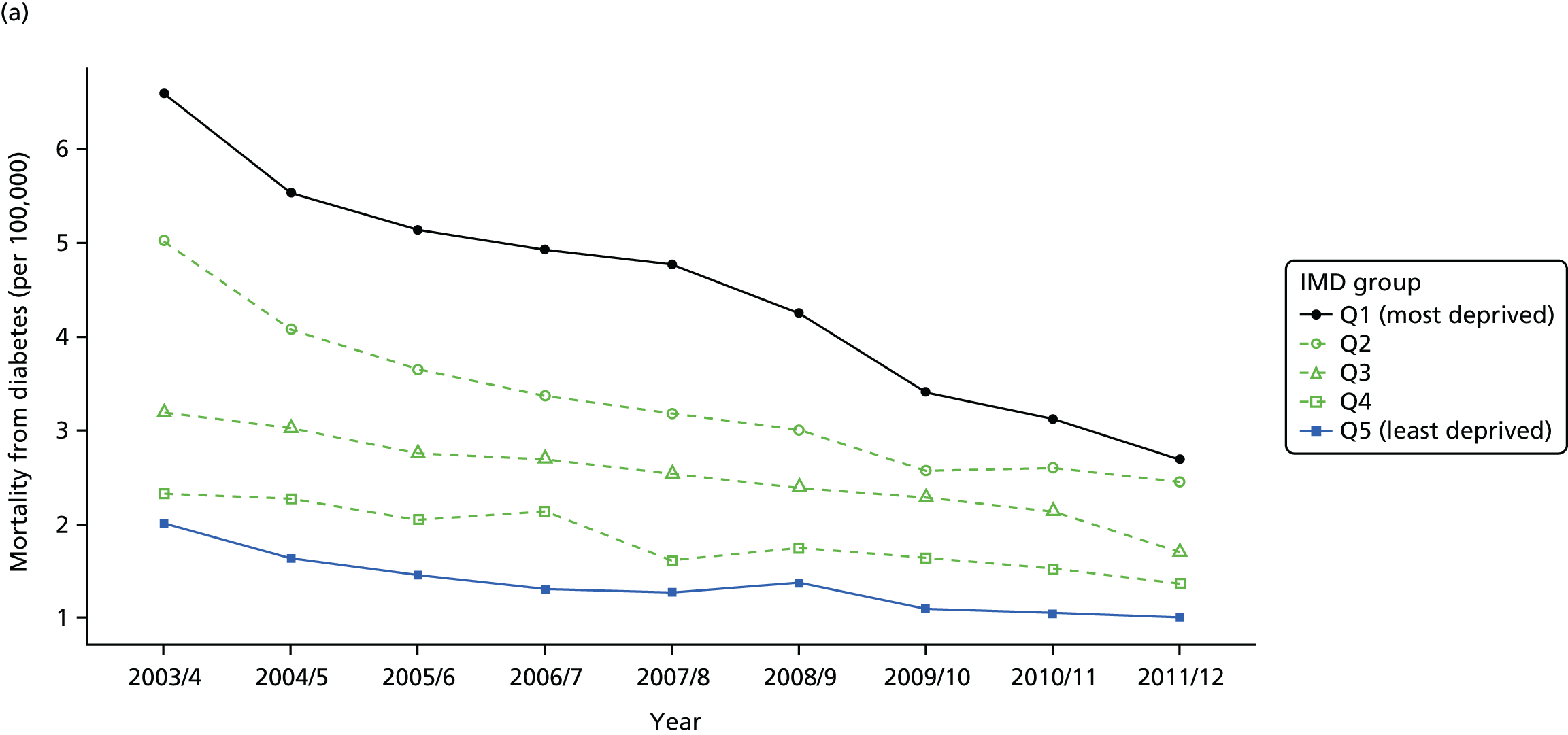

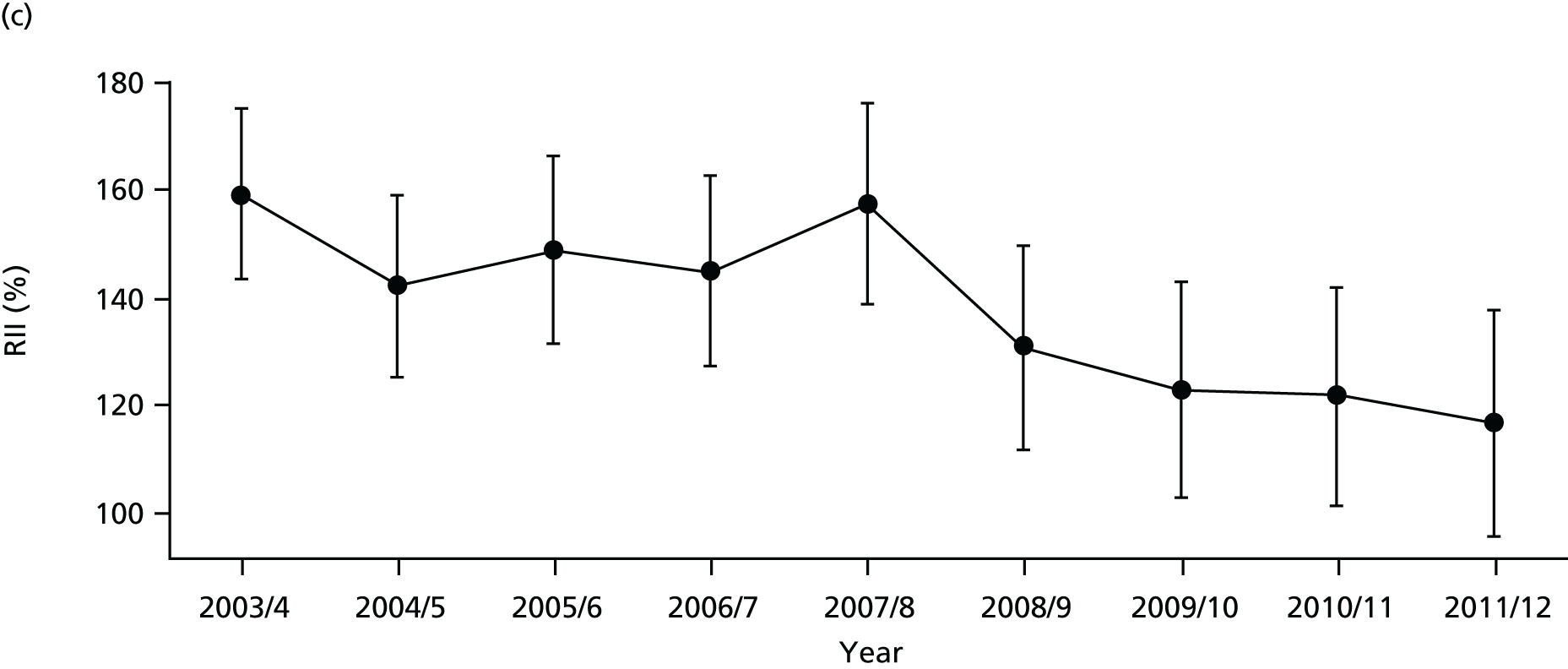

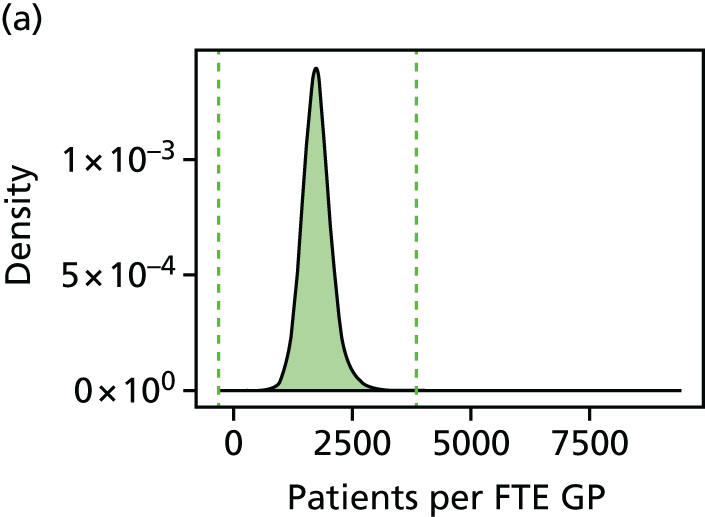

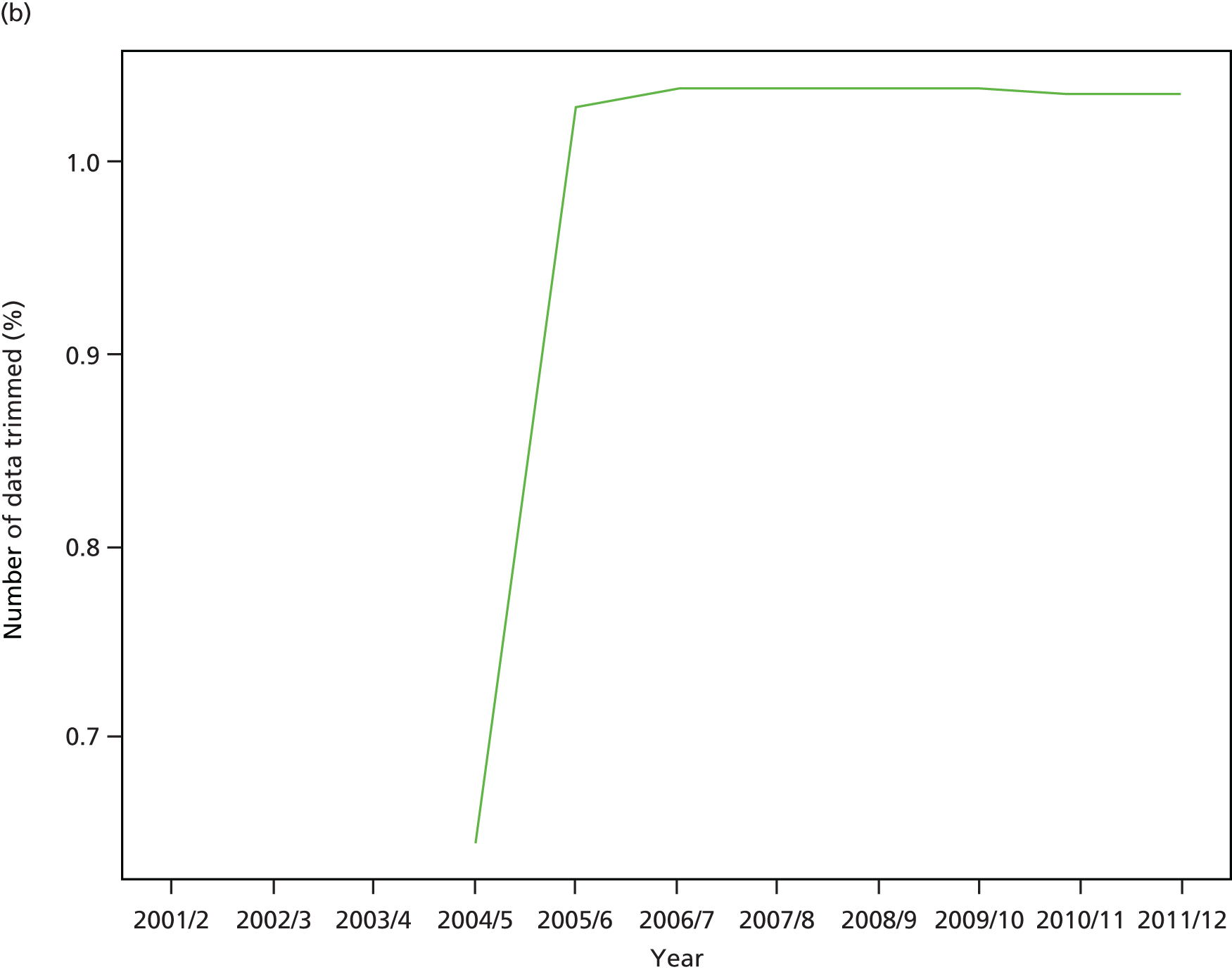

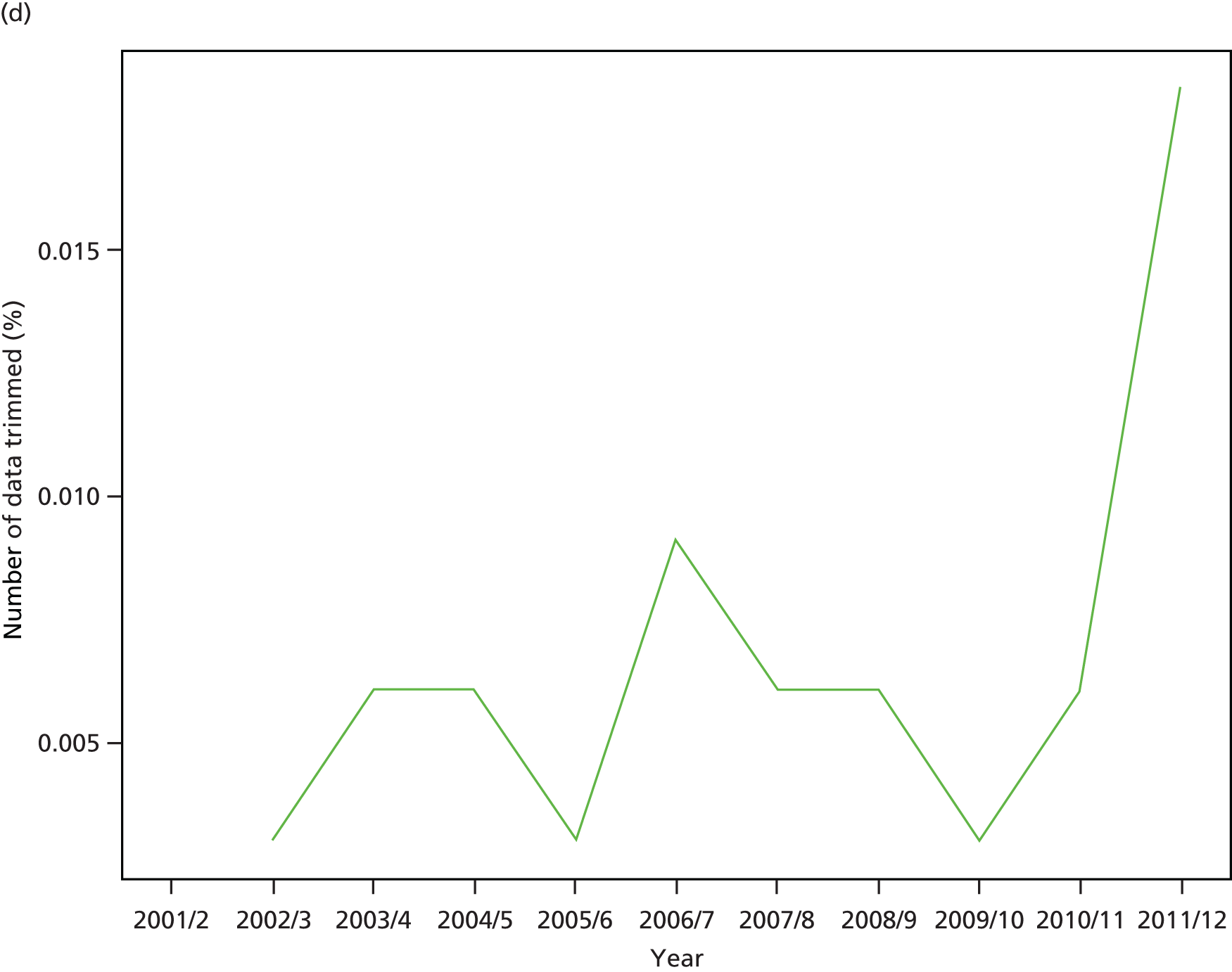

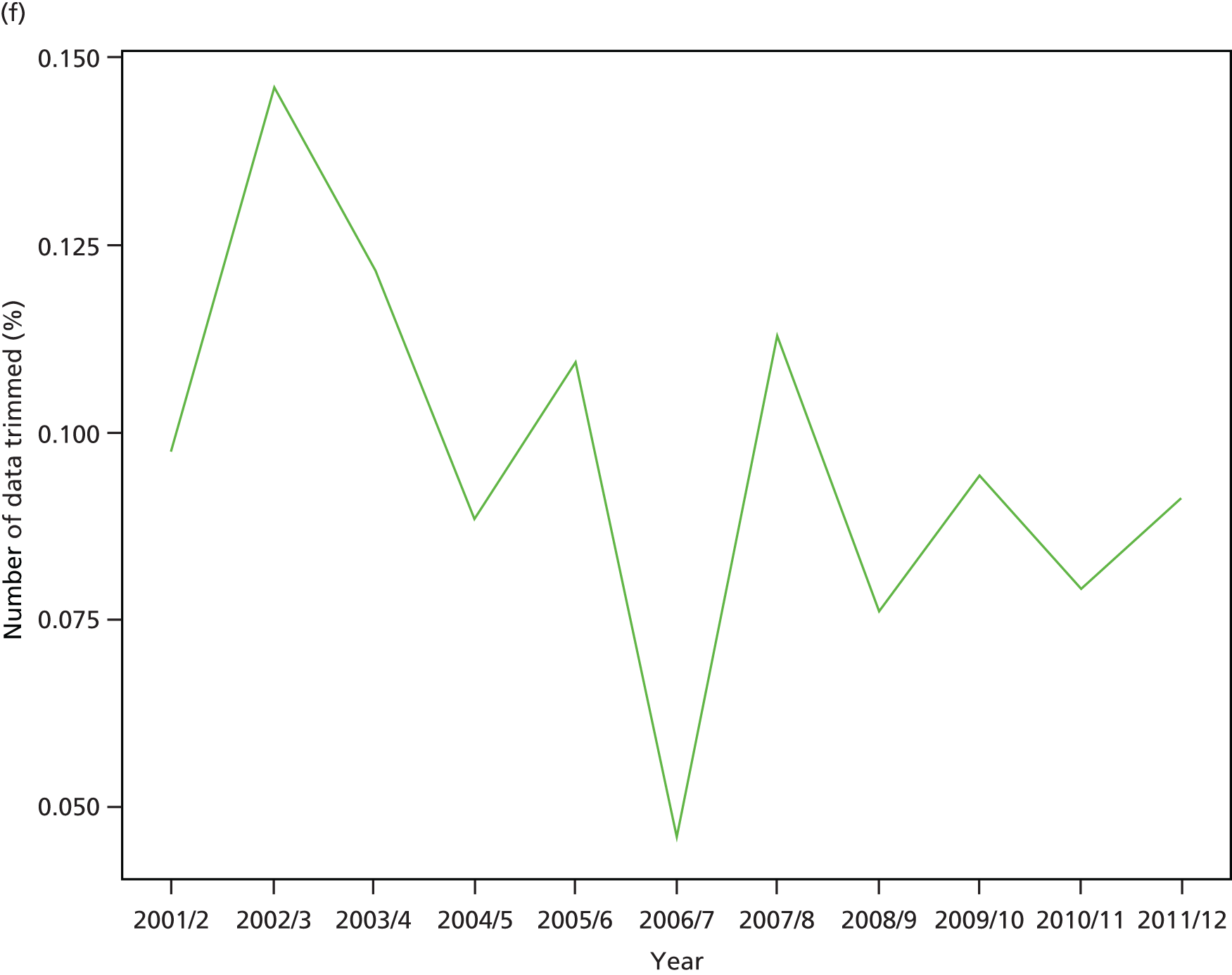

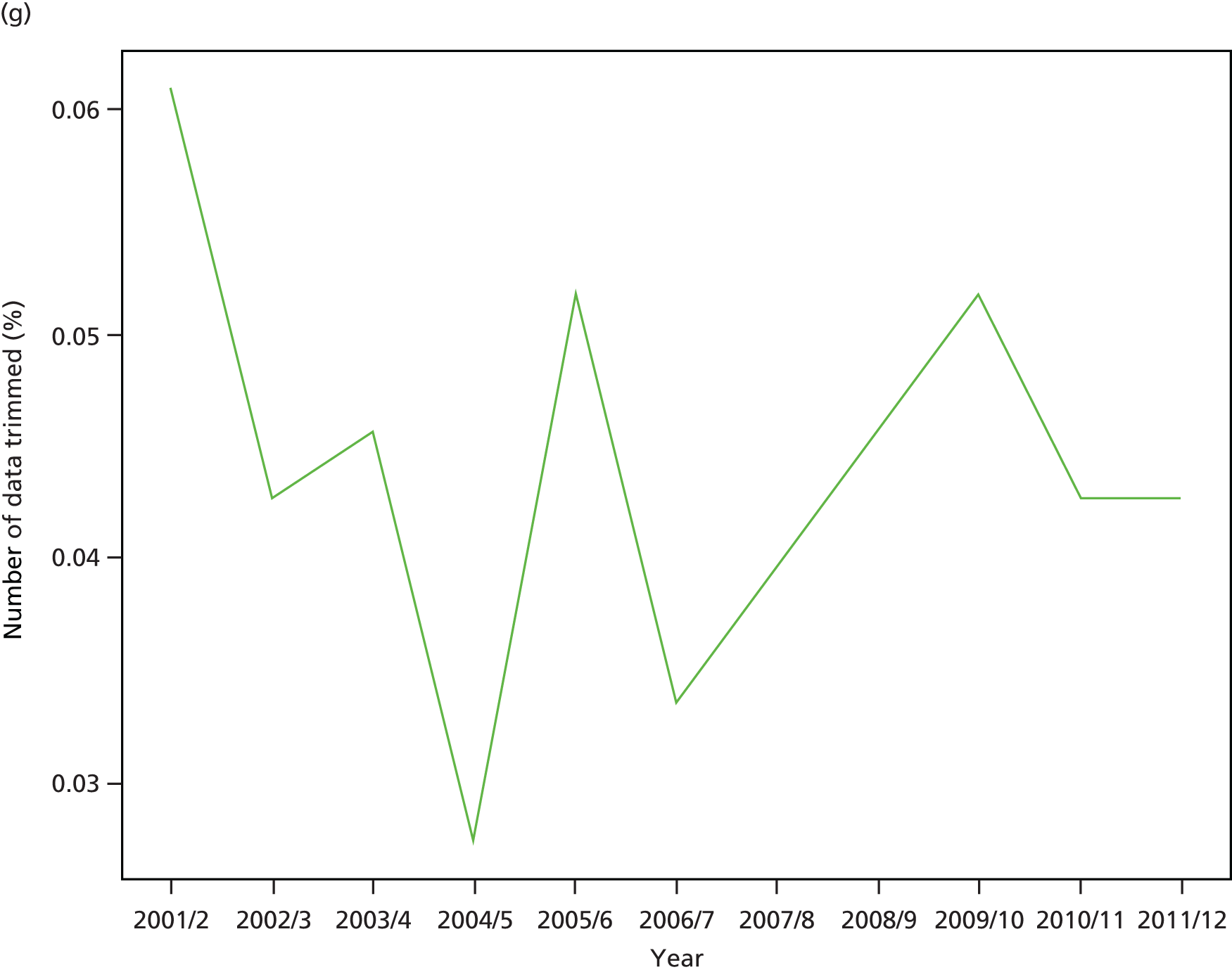

As an example, Figure 2 illustrates how we monitor national equity trends using indicator 1, primary care supply. The top panel shows a breakdown of patients per full-time equivalent (FTE) general practitioner (GP) by deprivation quintile group, allowing for need and population change, and the bottom panels show how this translates into two standard inequality measures that look at the whole of the social gradient in health care: the SII and the relative index of inequality (RII). These measures and graphs are explained in more detail in Chapter 4.
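As a rough guide to how a quintile breakdown translates into these two summary measures, the following Python sketch uses one common formulation: the SII as the population-weighted regression slope of the outcome on fractional deprivation rank, and the RII as the SII divided by the mean. The report's own definitions are given in Chapter 4 and may differ in detail; the figures and function name here are invented for illustration.

```python
def sii_and_mean(group_values, group_populations):
    """Return (SII, population-weighted mean) for groups ordered least to most deprived.

    The SII is computed as the population-weighted least-squares slope of the
    outcome on each group's midpoint in the cumulative population distribution.
    """
    total = sum(group_populations)
    weights = [population / total for population in group_populations]

    # Midpoint of each group's range on the 0-1 deprivation rank scale.
    midpoints = []
    cumulative = 0.0
    for weight in weights:
        midpoints.append(cumulative + weight / 2)
        cumulative += weight

    mean_rank = sum(w * x for w, x in zip(weights, midpoints))
    mean_value = sum(w * y for w, y in zip(weights, group_values))
    covariance = sum(w * (x - mean_rank) * (y - mean_value)
                     for w, x, y in zip(weights, midpoints, group_values))
    variance = sum(w * (x - mean_rank) ** 2 for w, x in zip(weights, midpoints))
    return covariance / variance, mean_value


# Invented figures: patients per FTE GP by deprivation quintile group,
# ordered from least to most deprived, with equal group populations.
patients_per_gp = [1600, 1650, 1700, 1750, 1800]
populations = [1000, 1000, 1000, 1000, 1000]

sii, mean_value = sii_and_mean(patients_per_gp, populations)
rii = sii / mean_value

print(f"SII: {sii:.1f} patients per FTE GP across the whole deprivation range")
print(f"RII: {rii:.3f} (SII as a proportion of the mean)")
```

Because both measures use every group rather than only the two extremes, they summarise the whole of the social gradient, which is the property the text highlights.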

FIGURE 2.

National monitoring of change in equity over time. (a) Indicator 1: primary care supply; (b) RII; and (c) SII. The top panel shows a breakdown of patients per FTE GP by deprivation quintile group, allowing for need and population change, and the bottom panels show how this translates into two standard inequality measures that look at the whole of the social gradient in health care: the SII and RII. FTE, full-time equivalent; GP, general practitioner; IMD, Index of Multiple Deprivation; RII, relative index of inequality; SII, slope index of inequality.

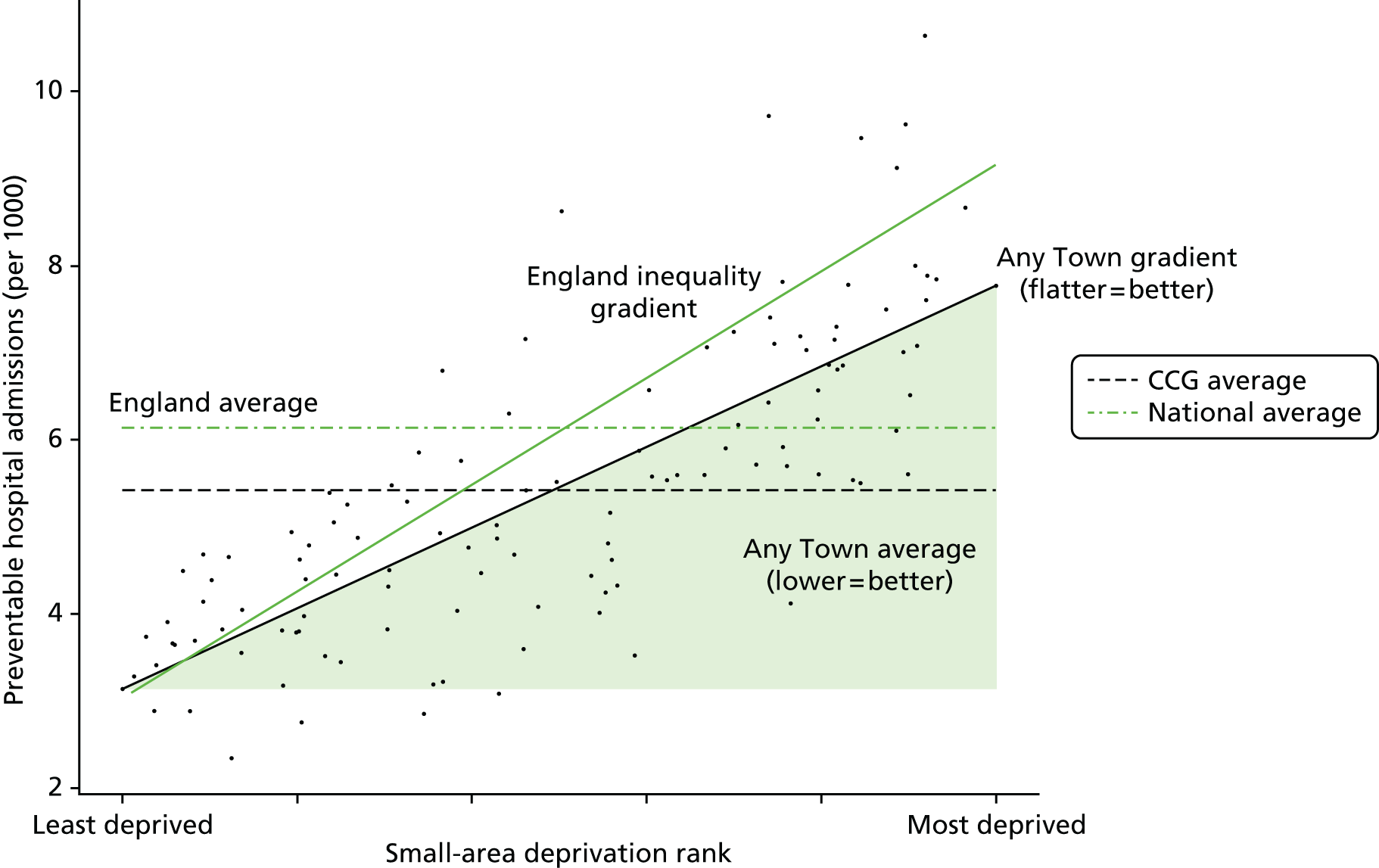

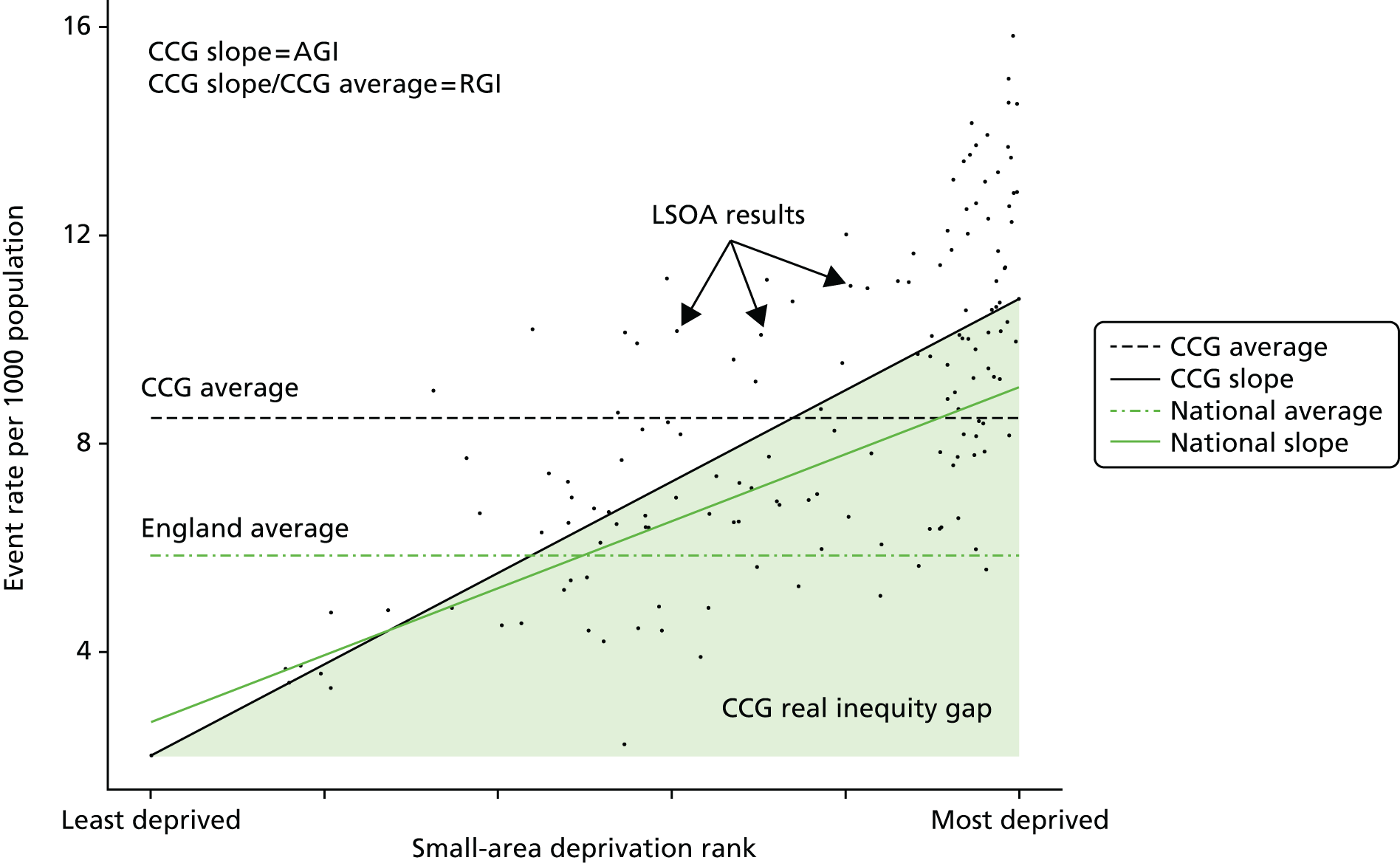

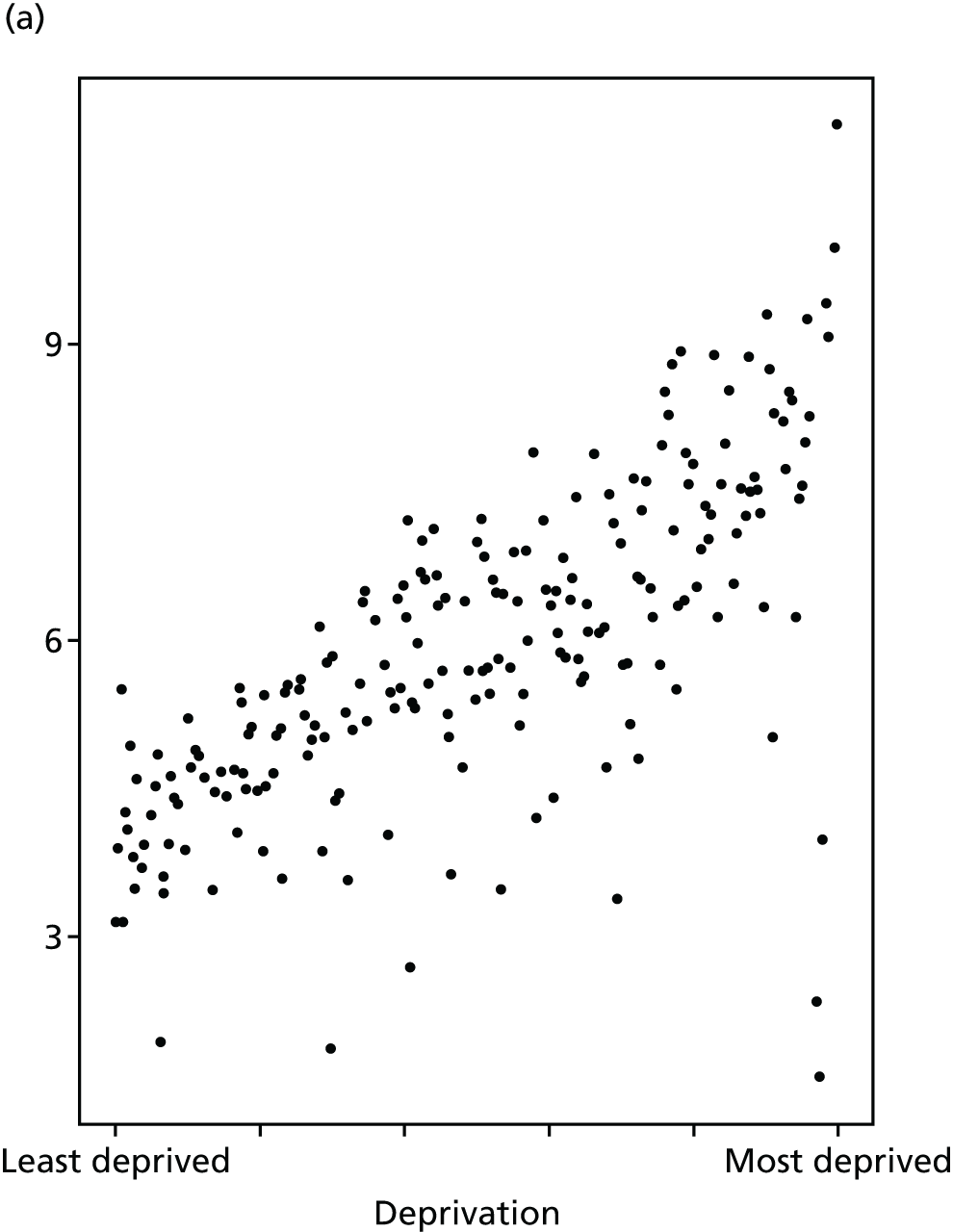

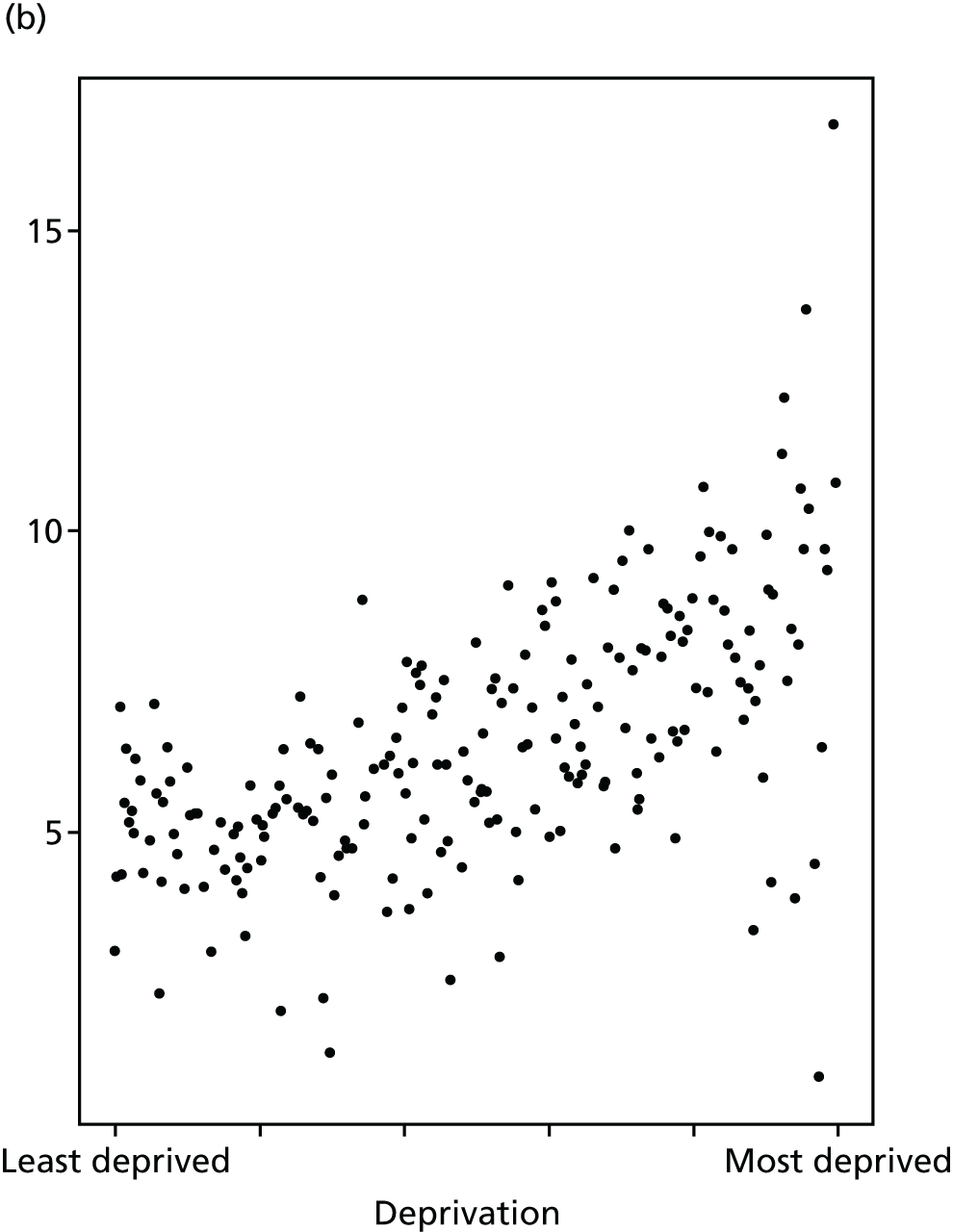

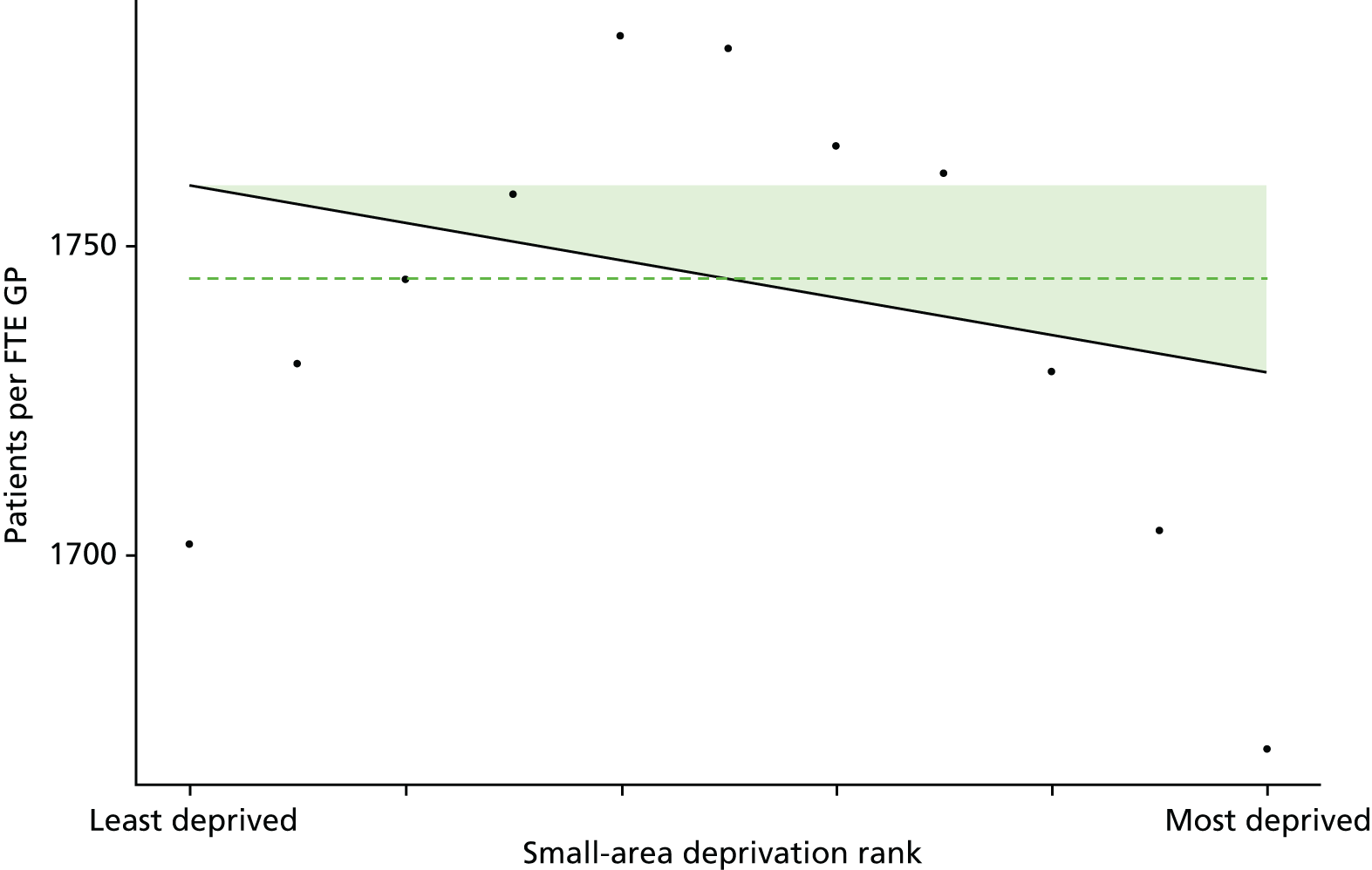

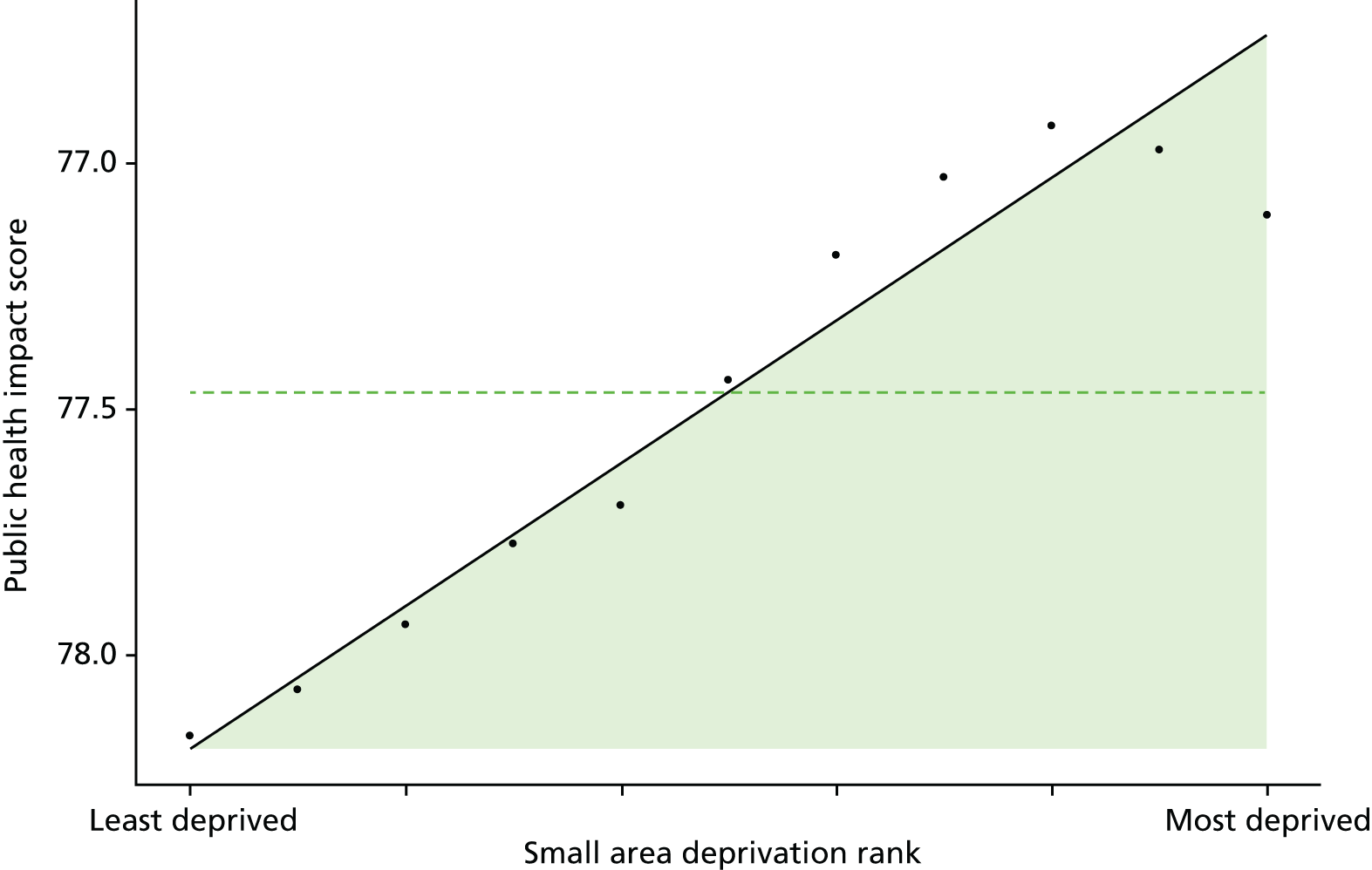

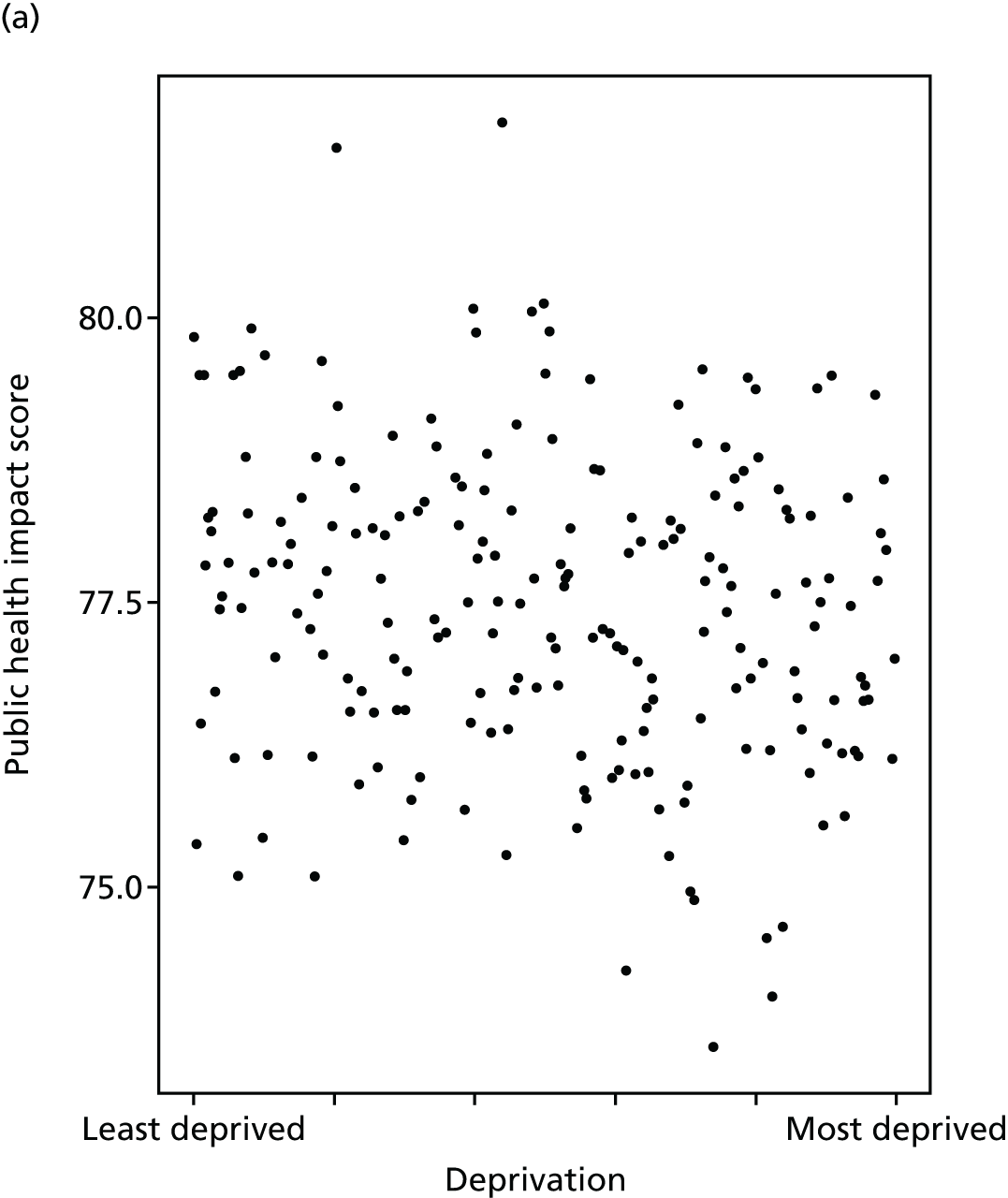

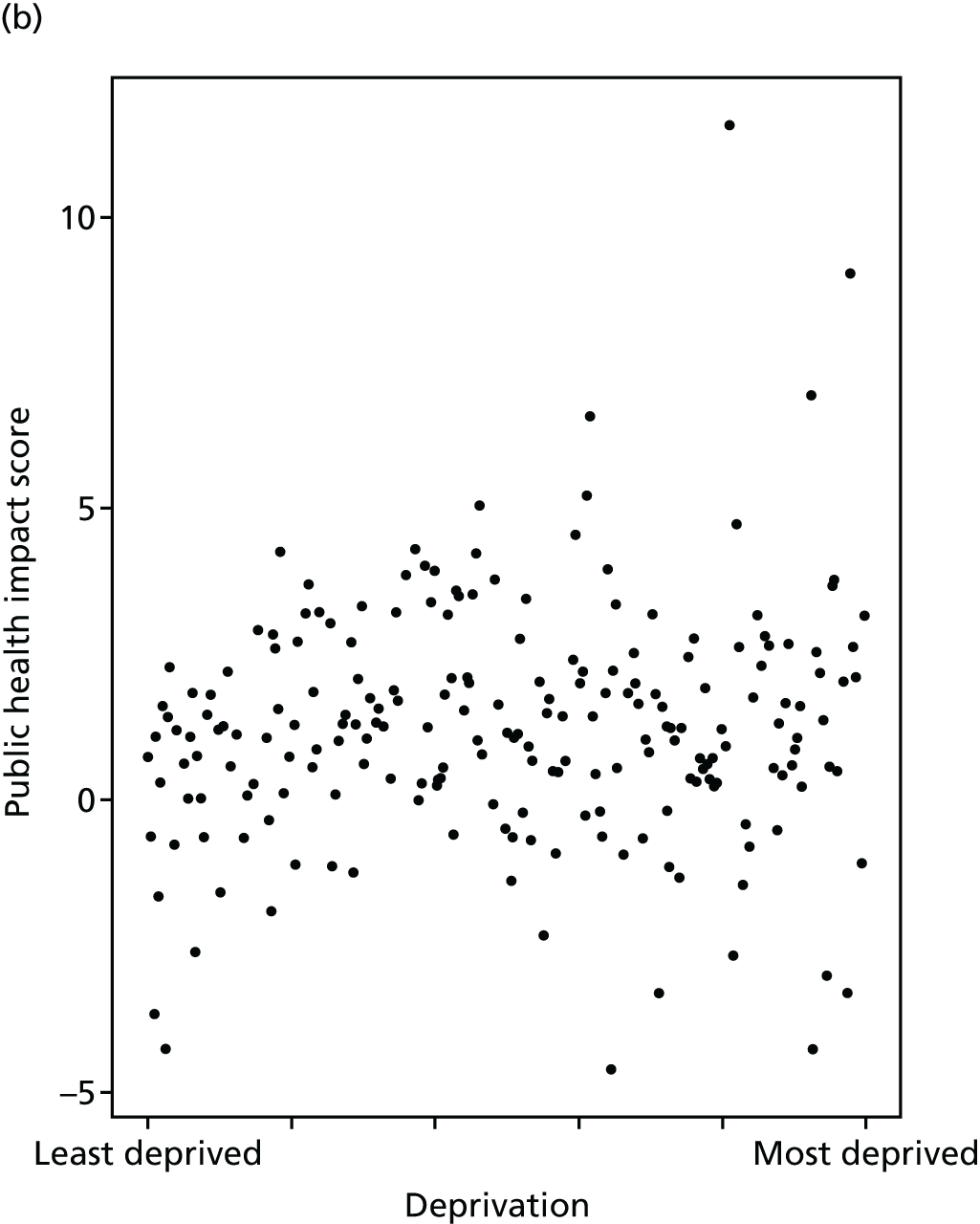

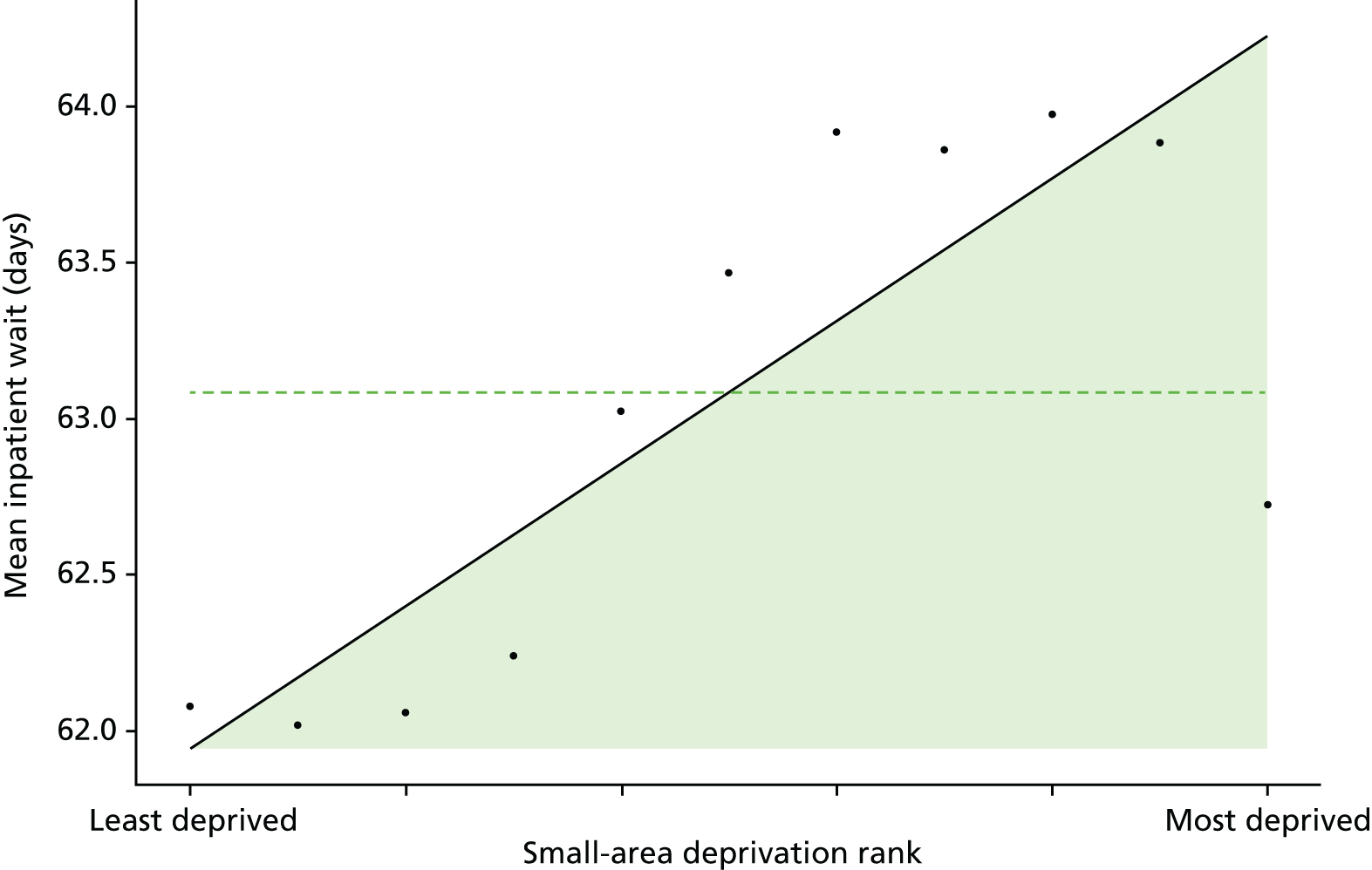

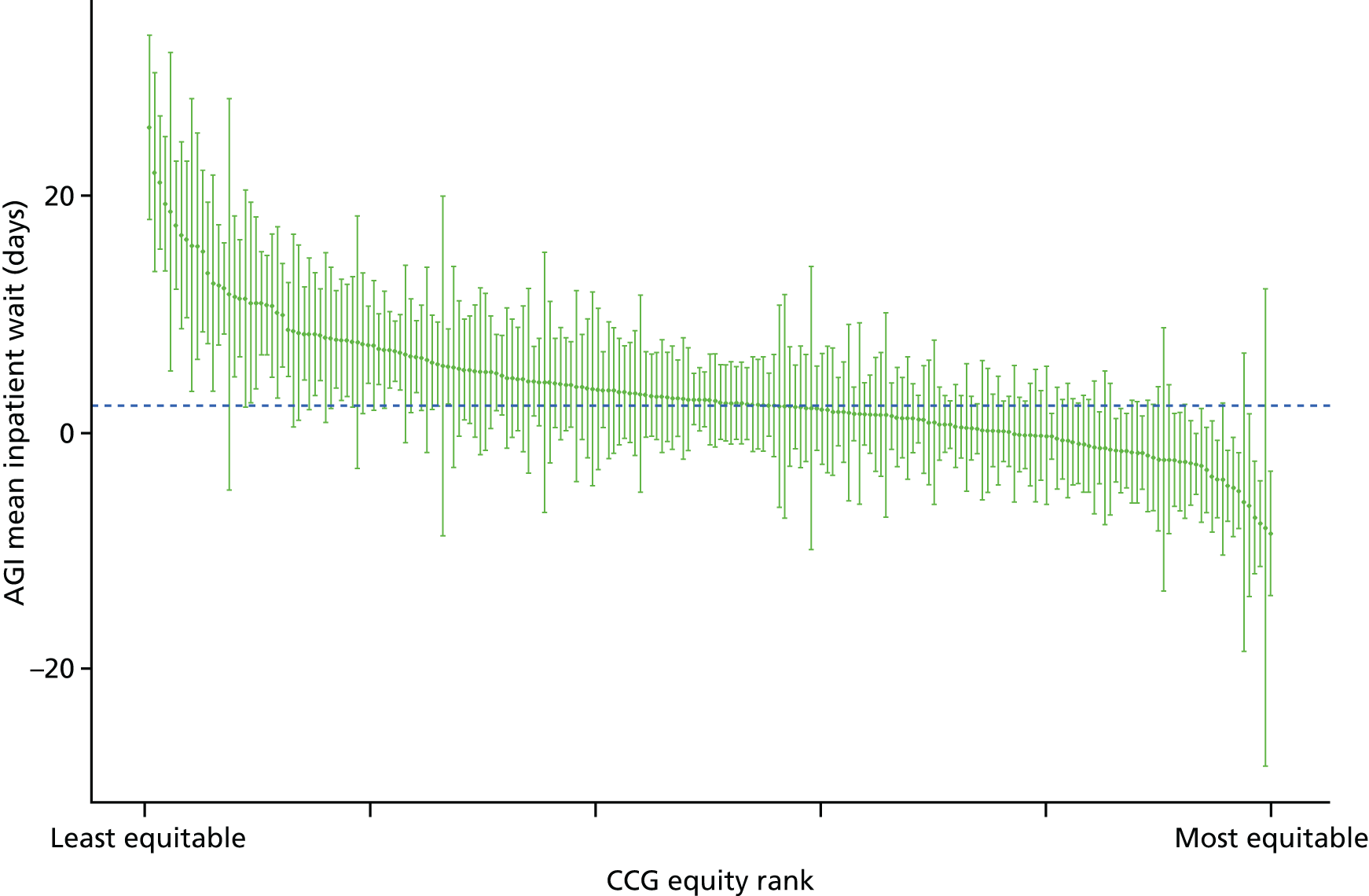

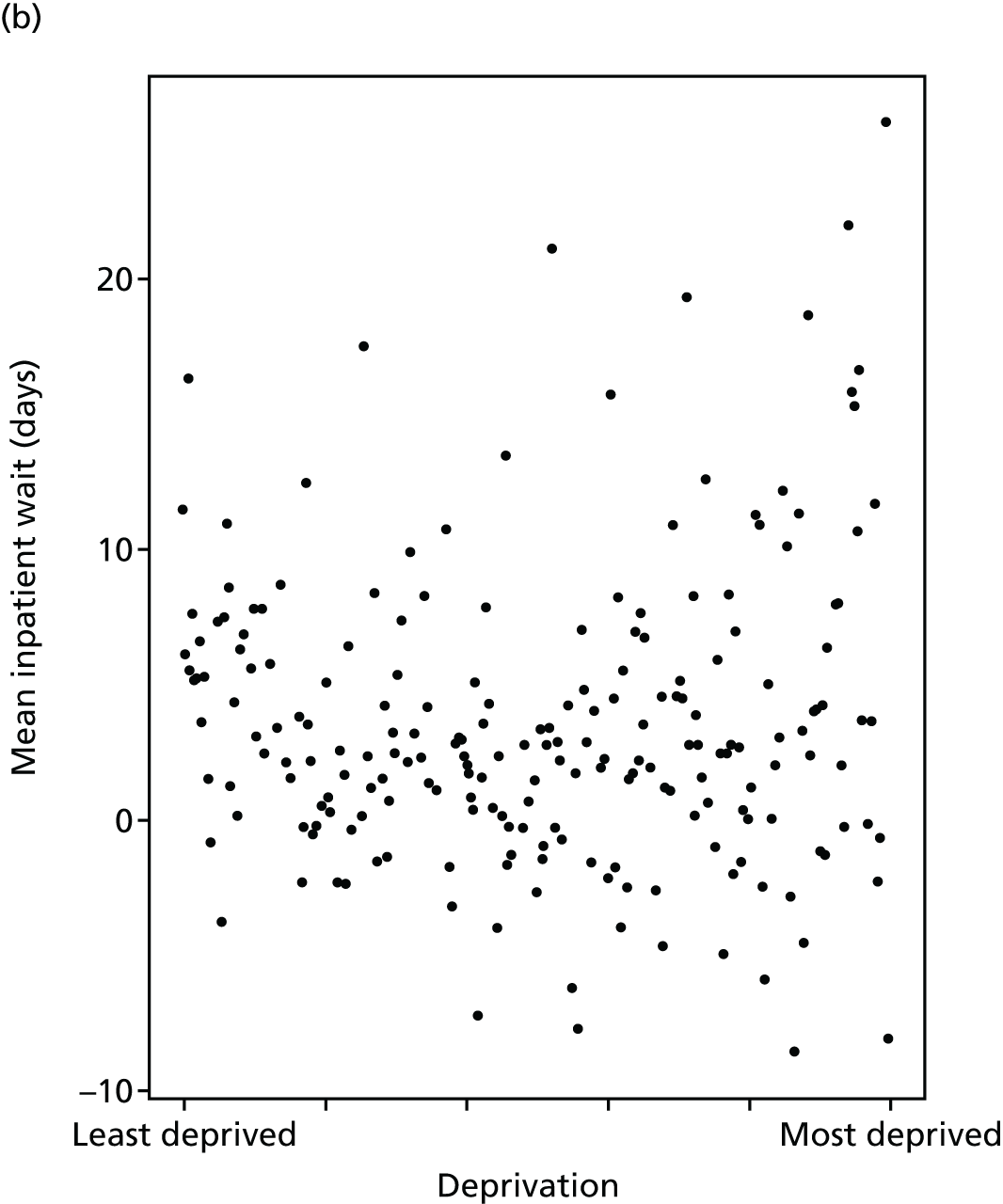

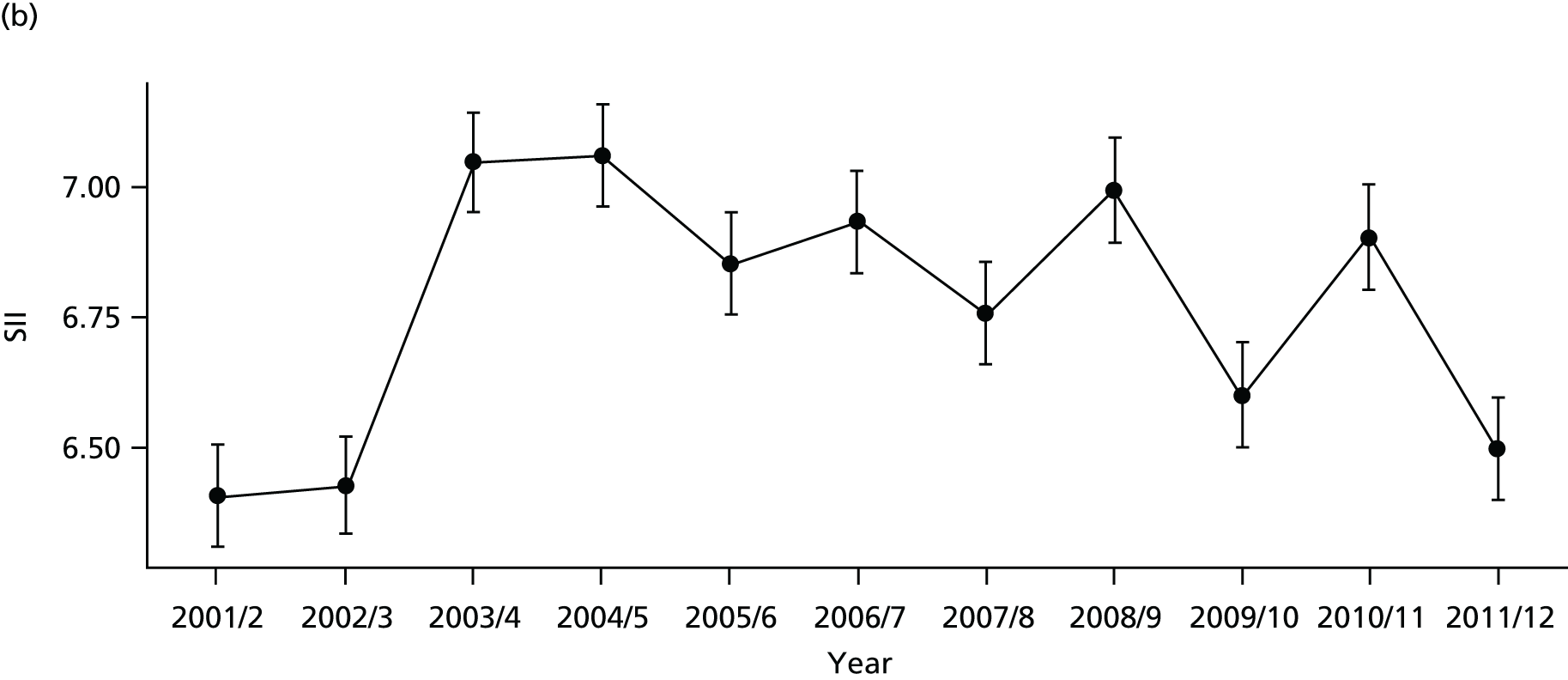

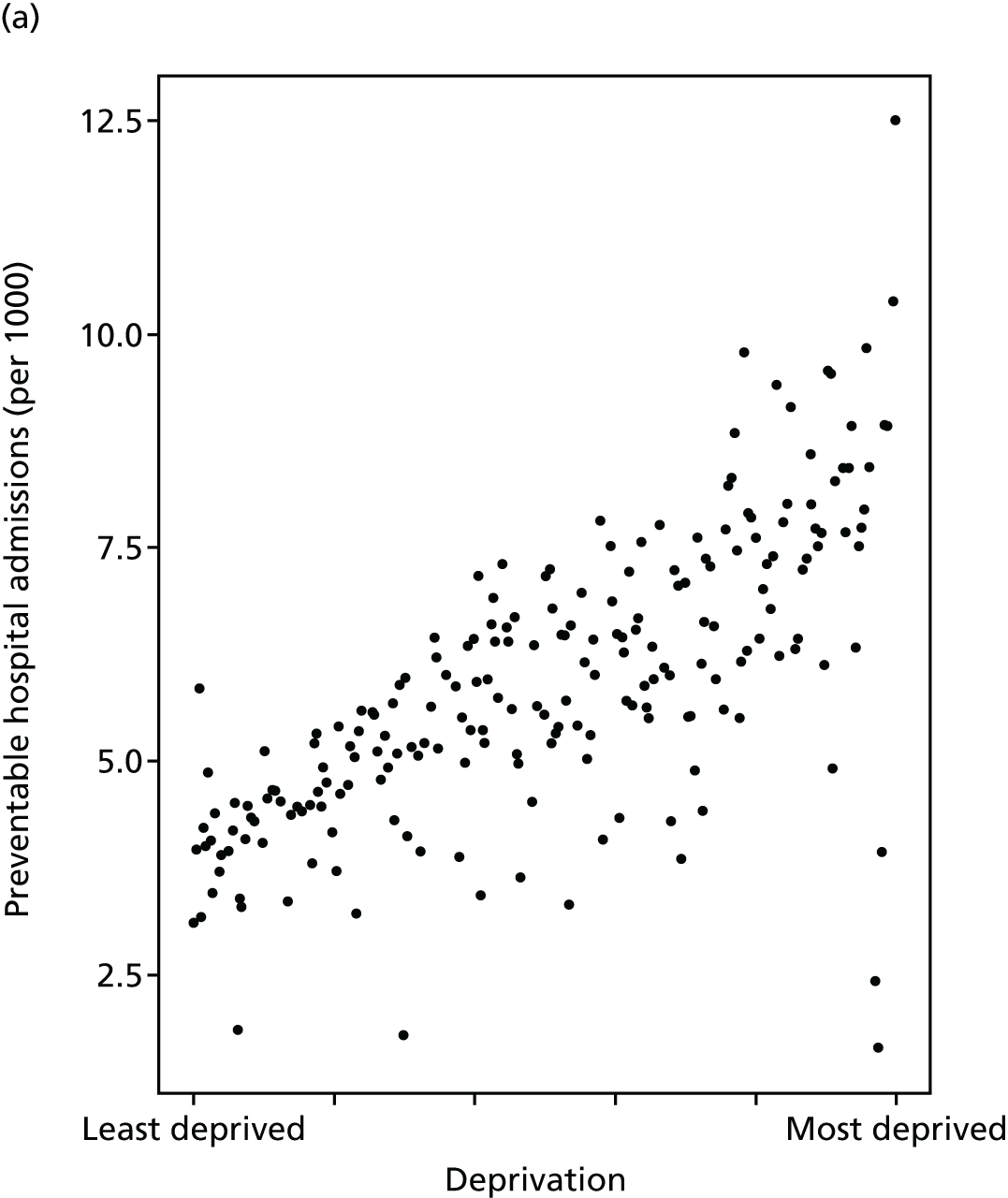

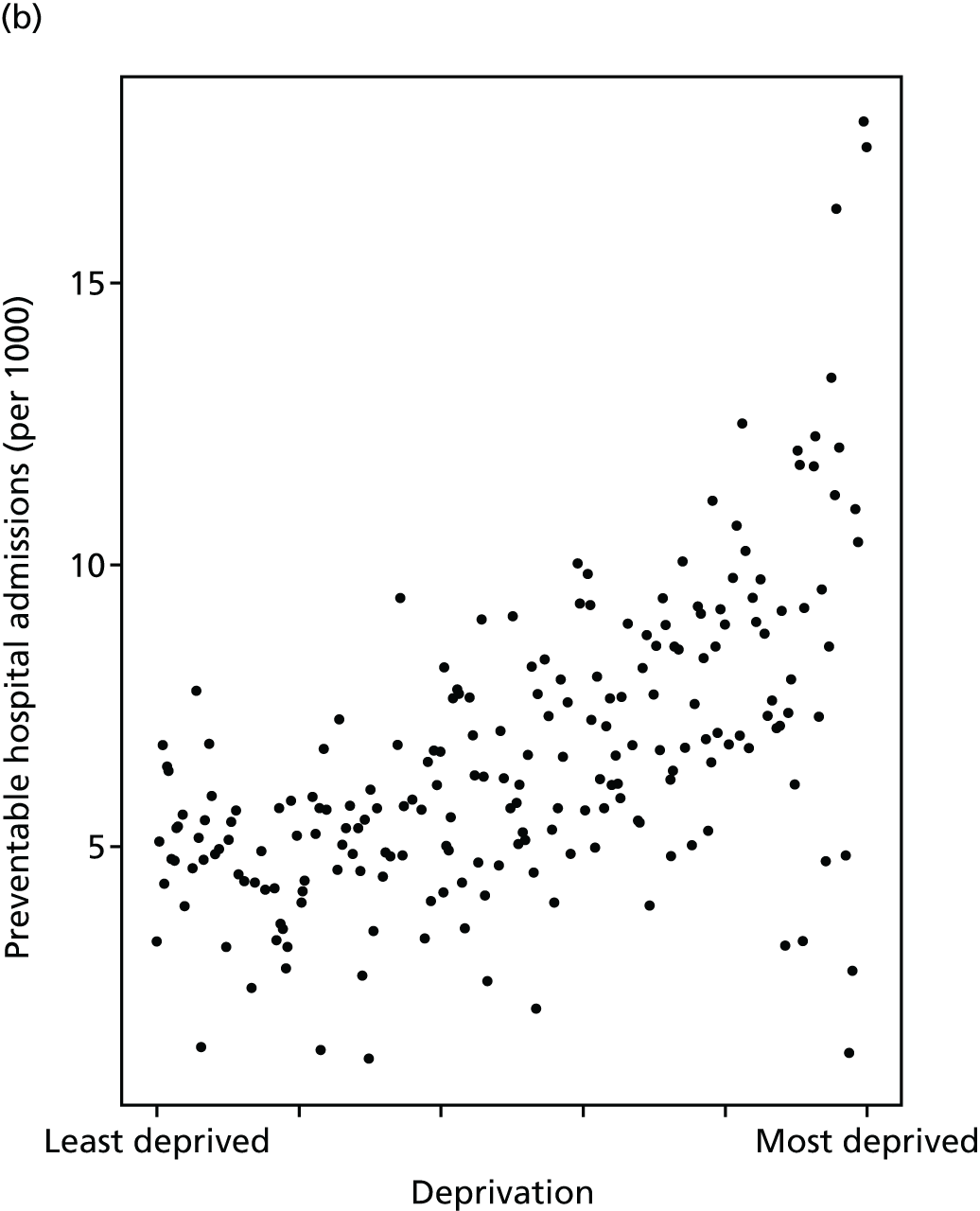

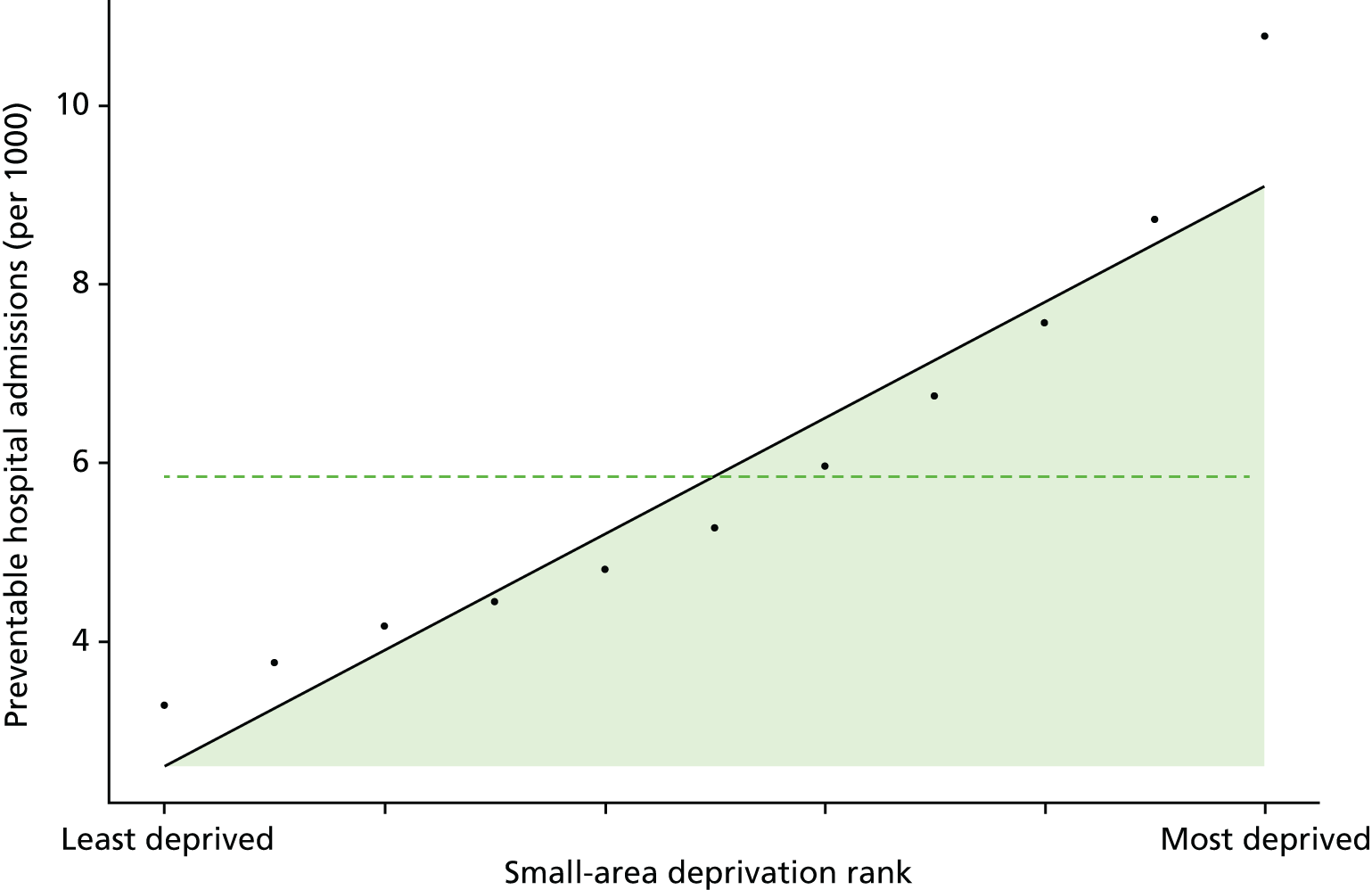

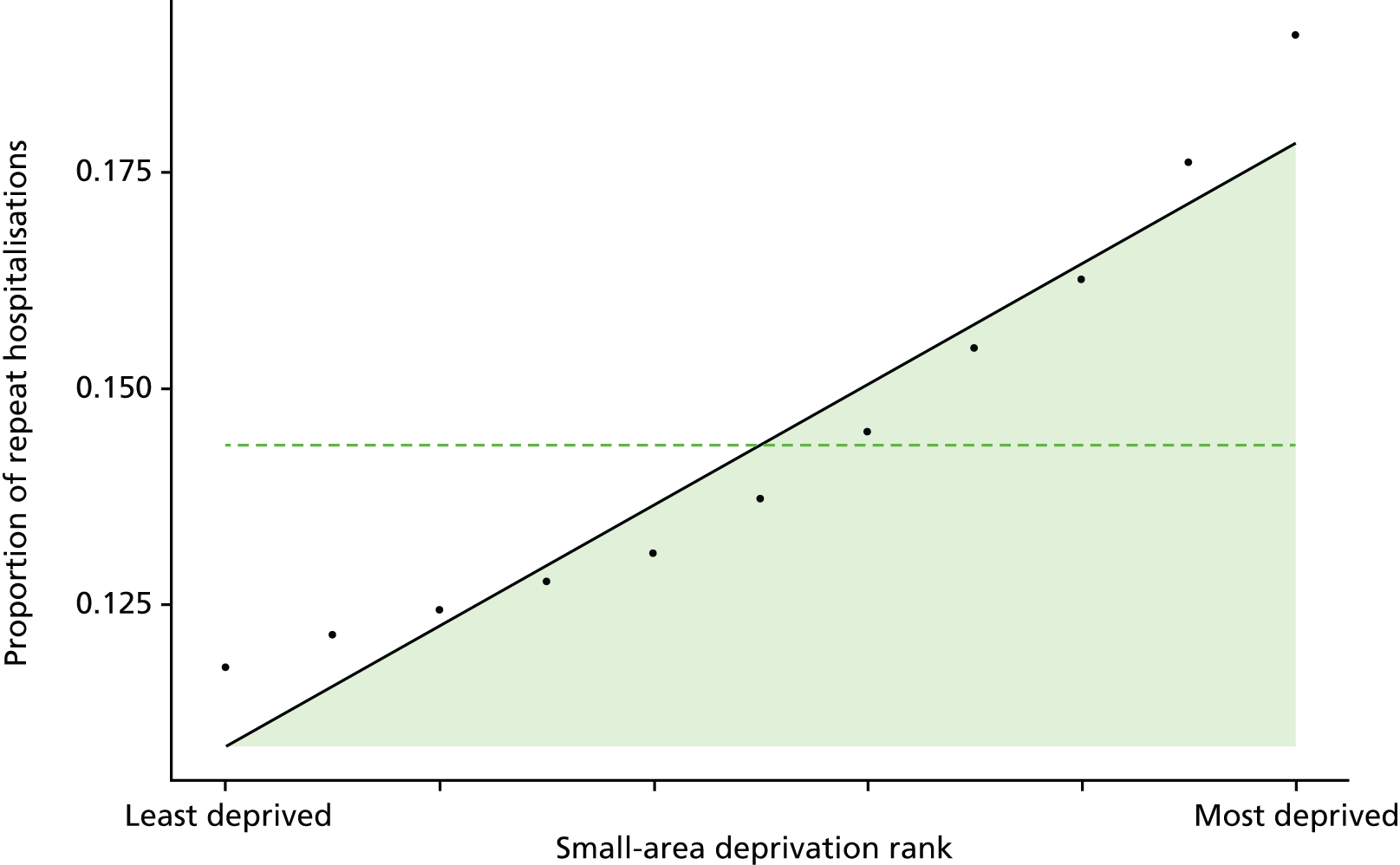

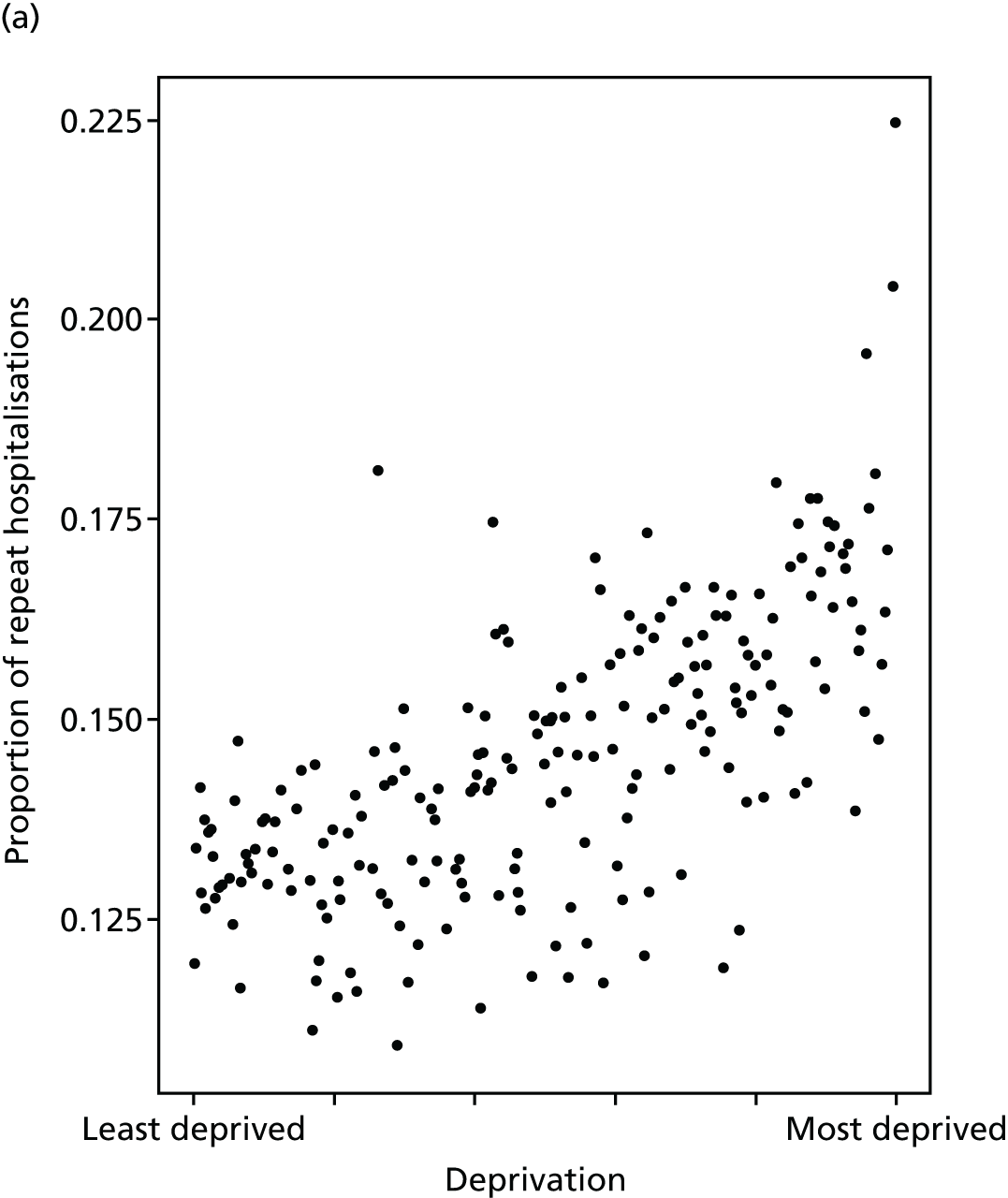

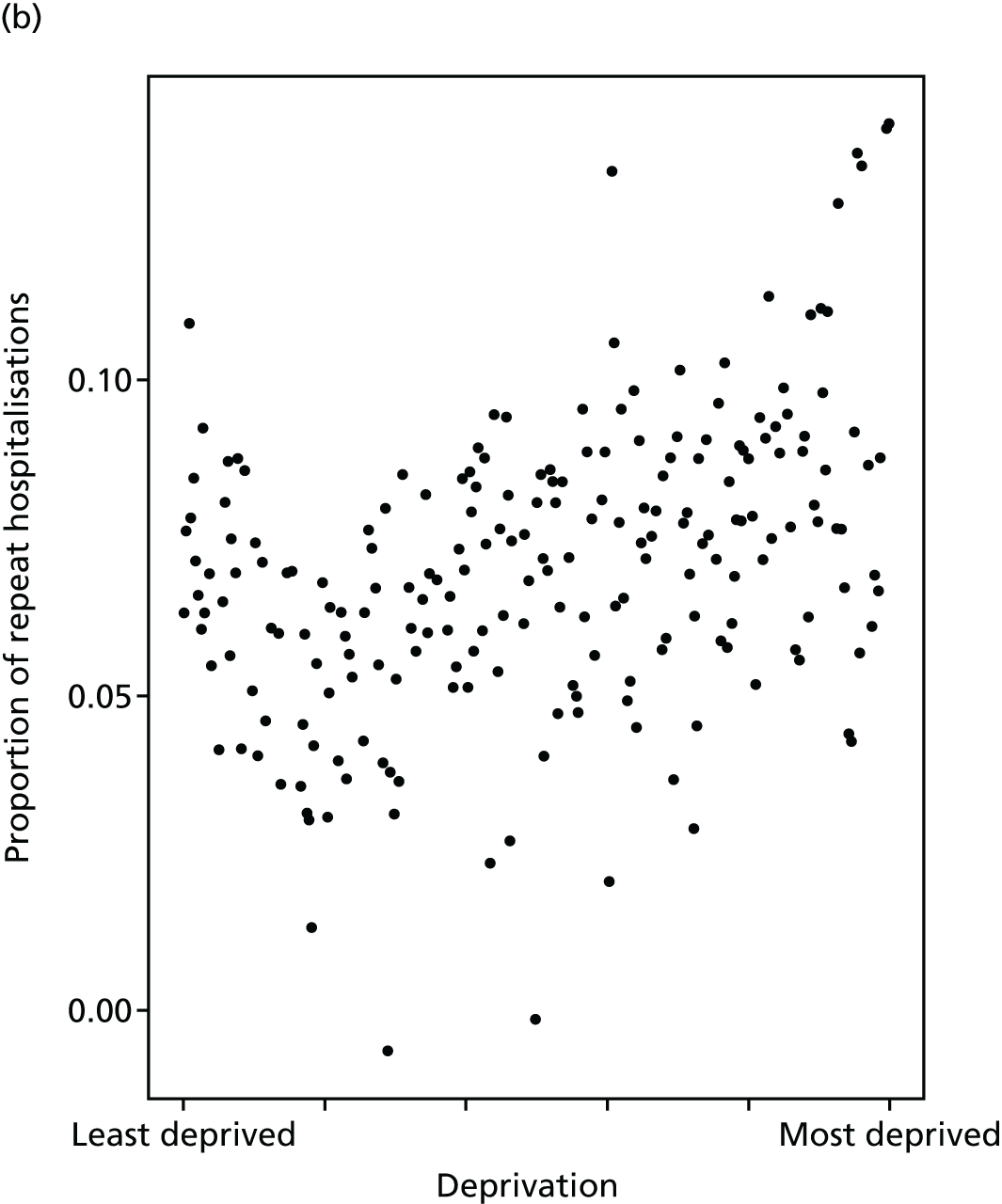

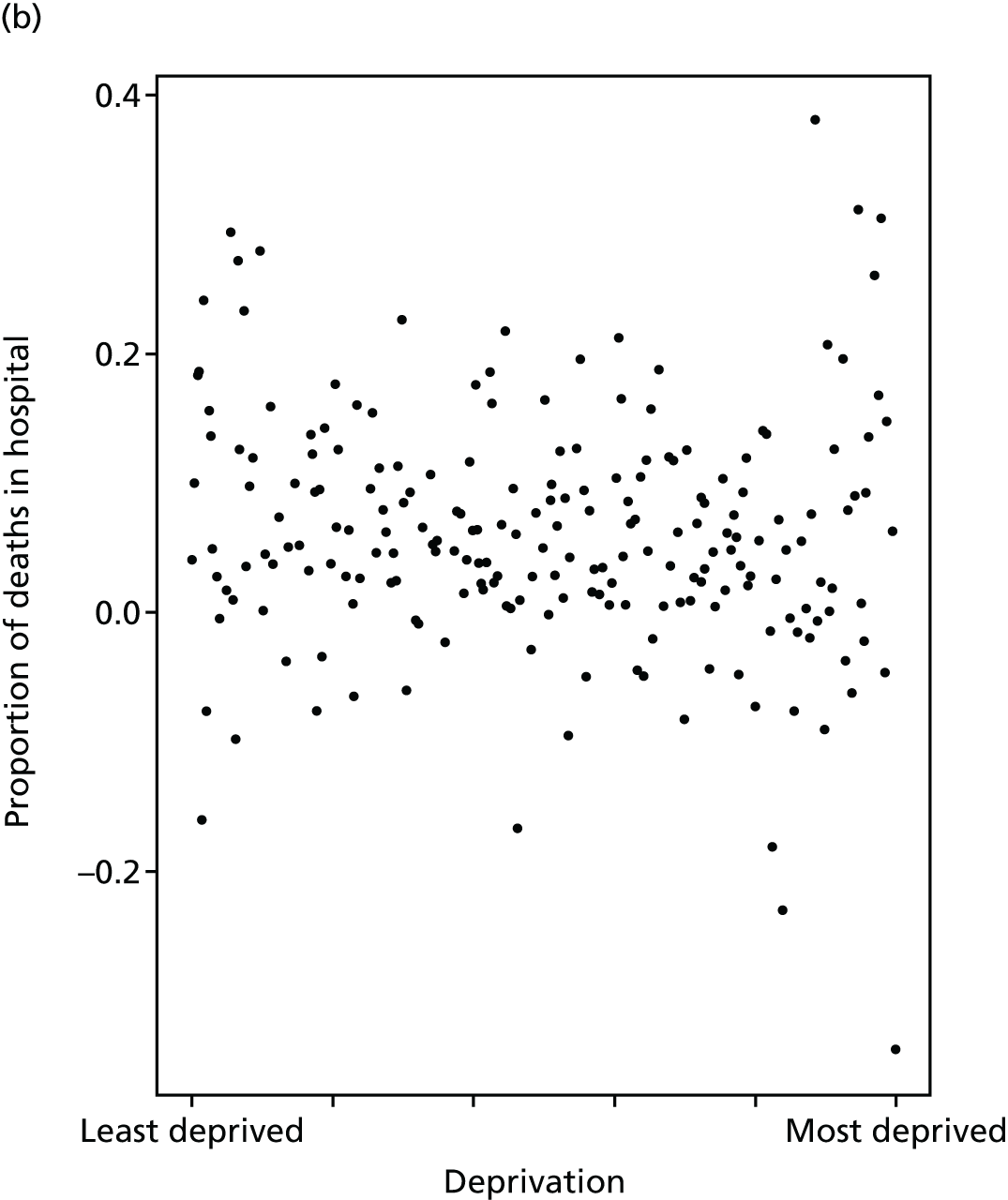

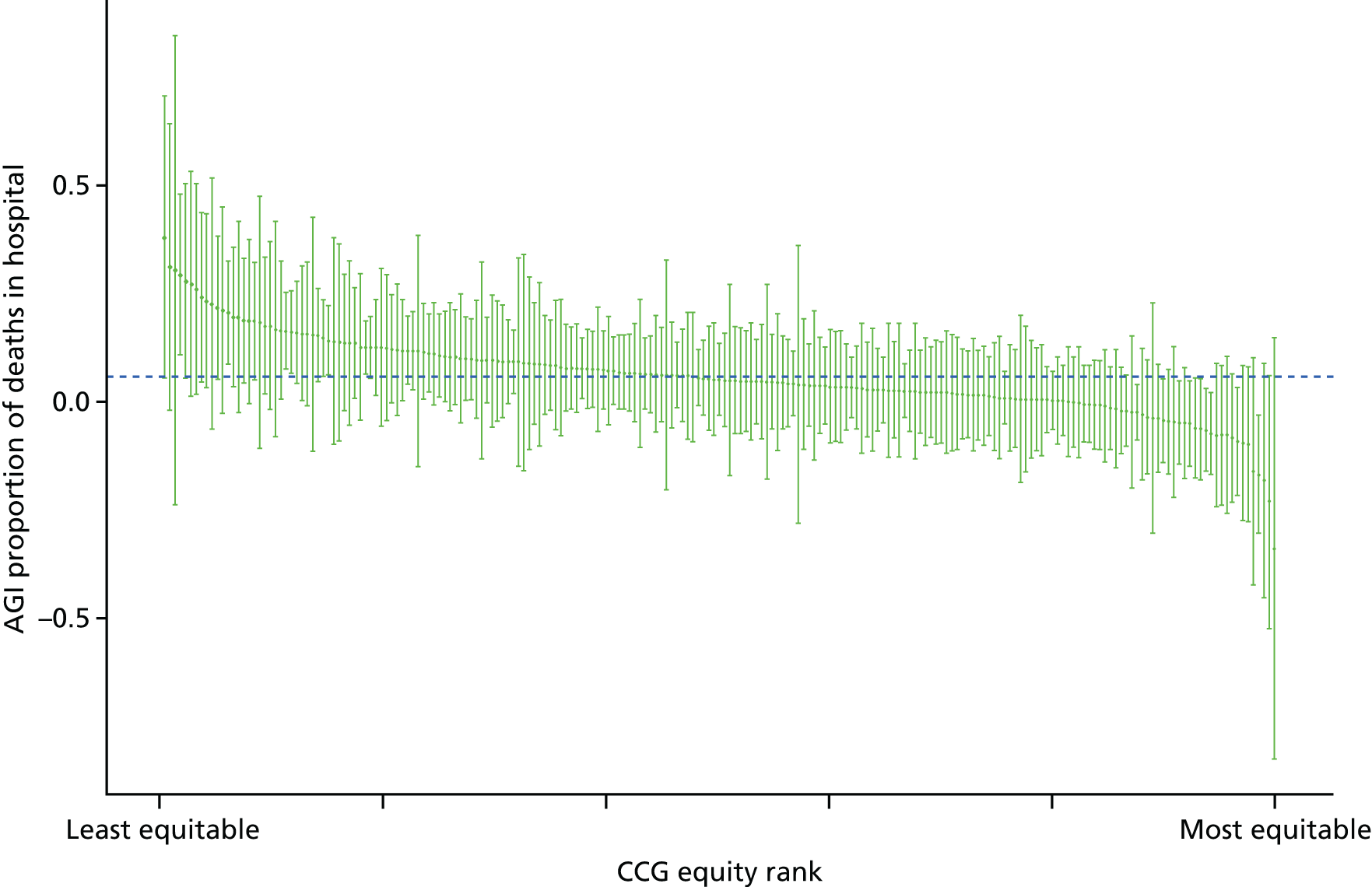

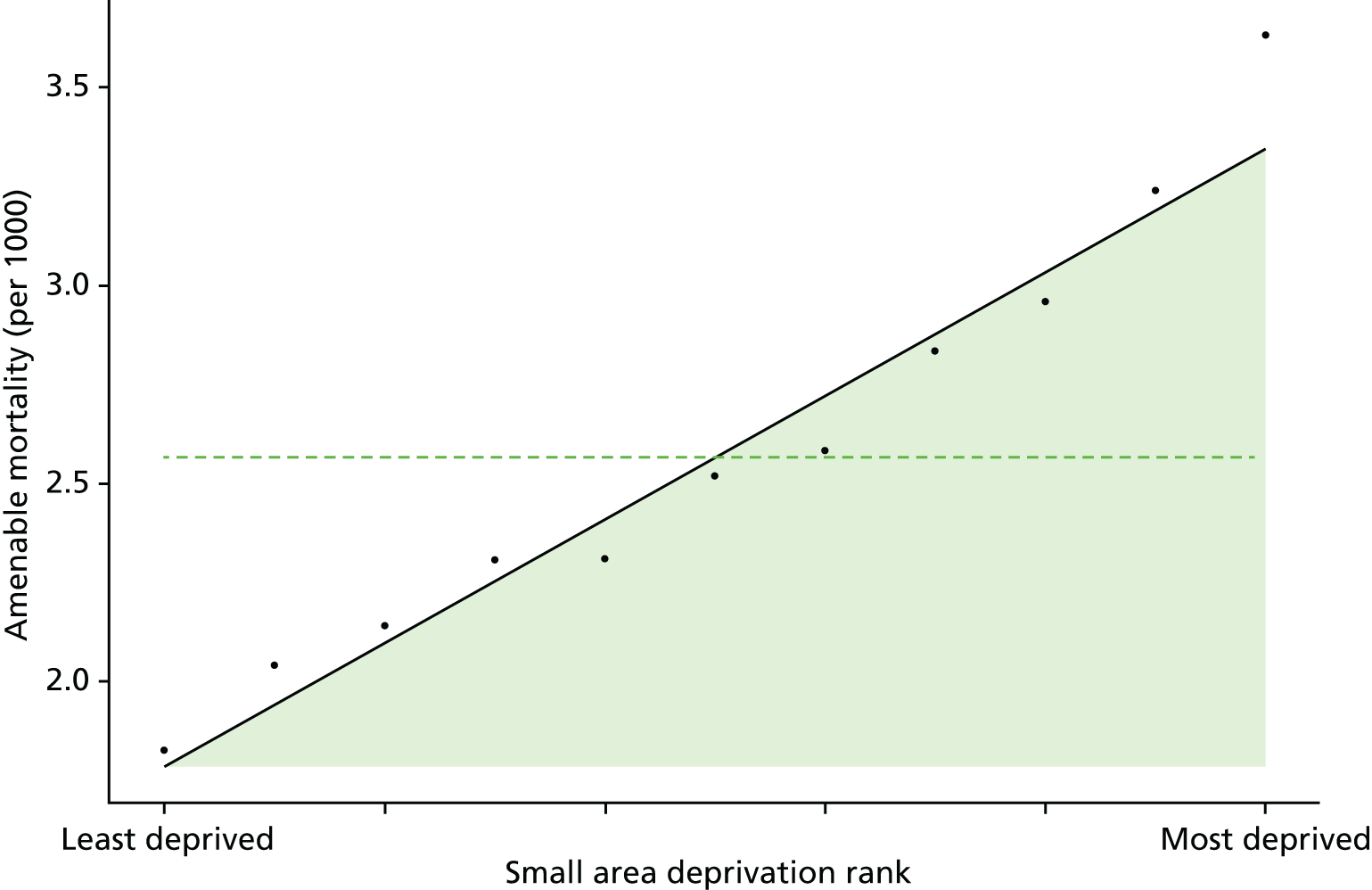

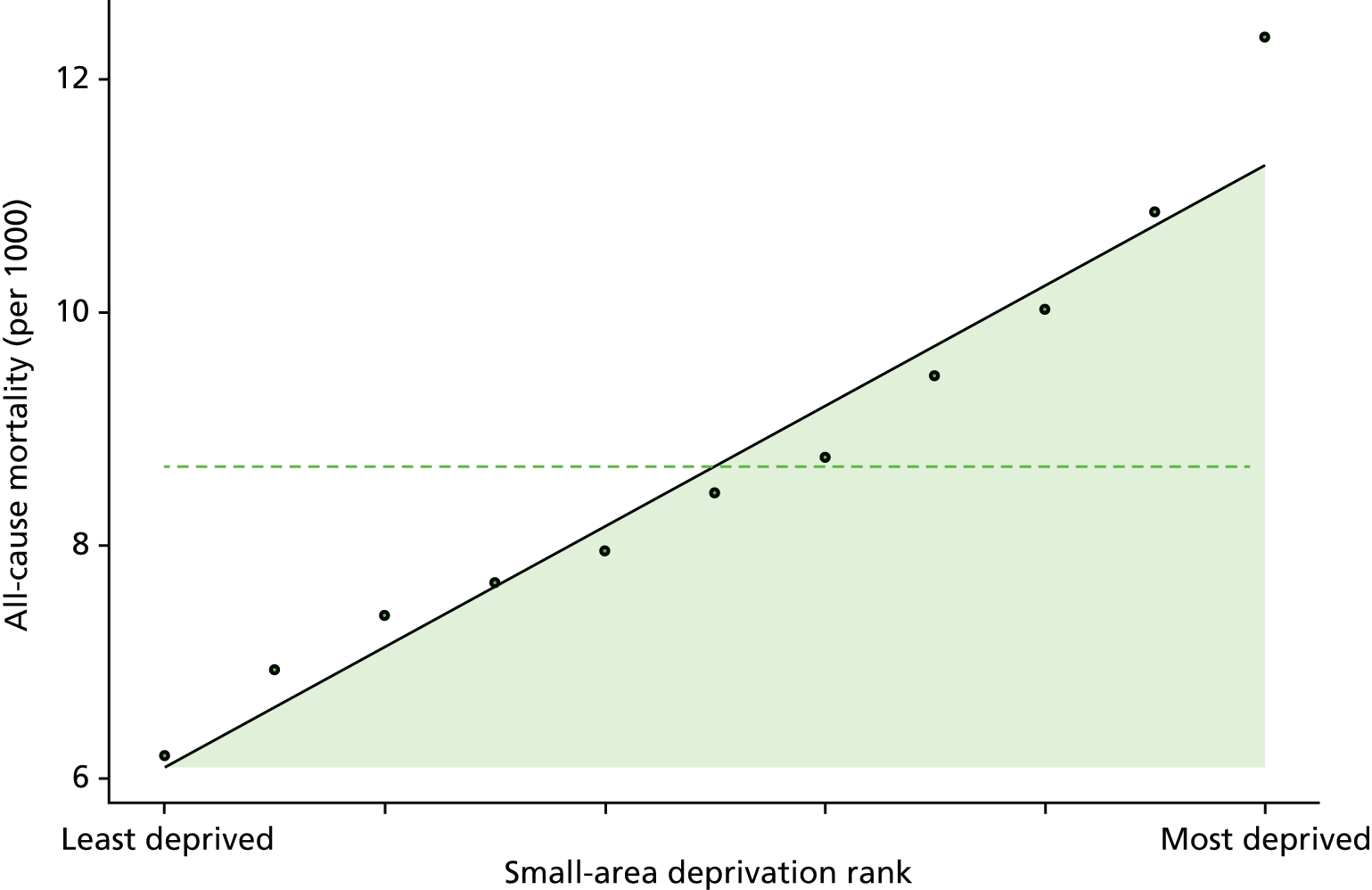

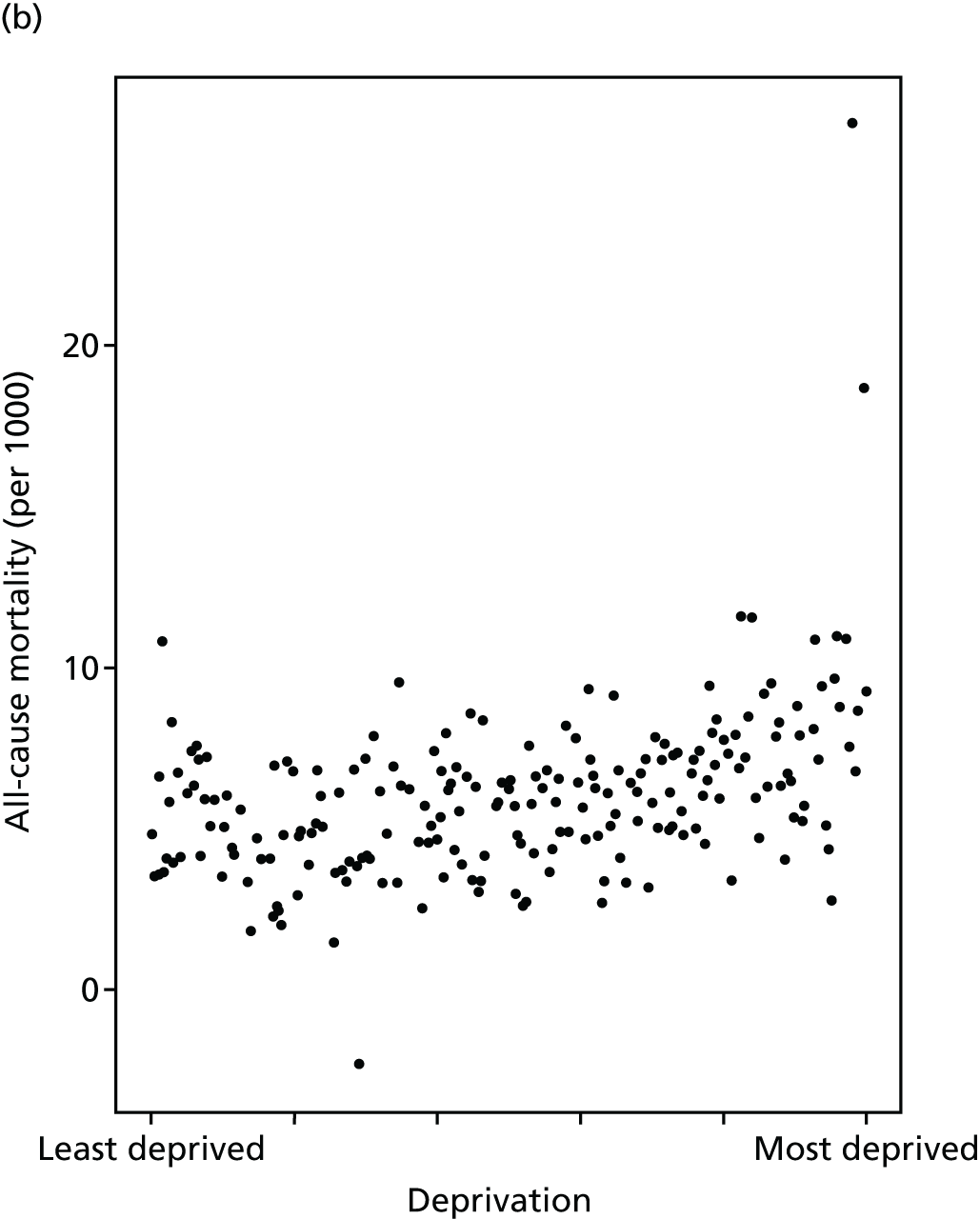

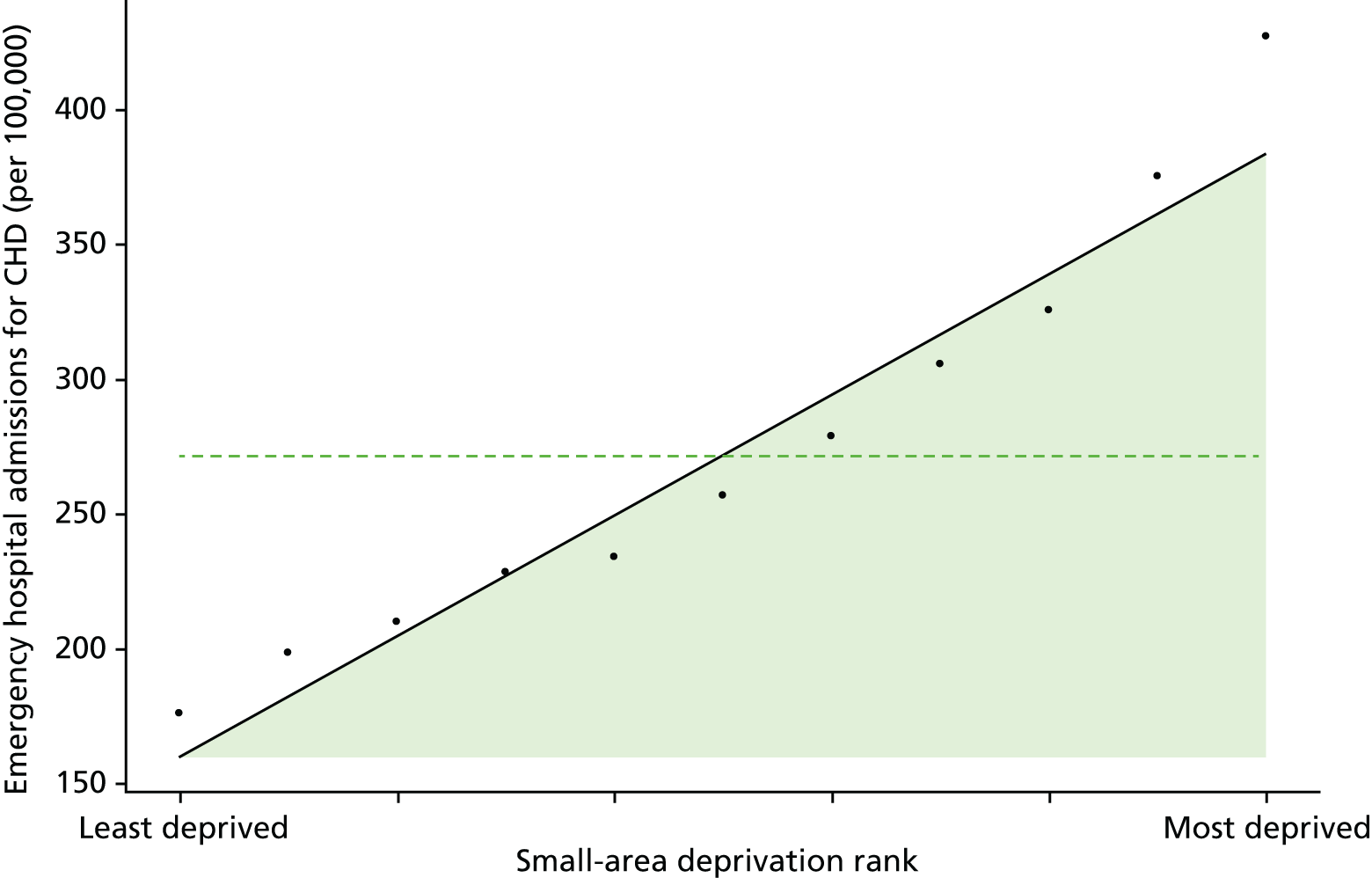

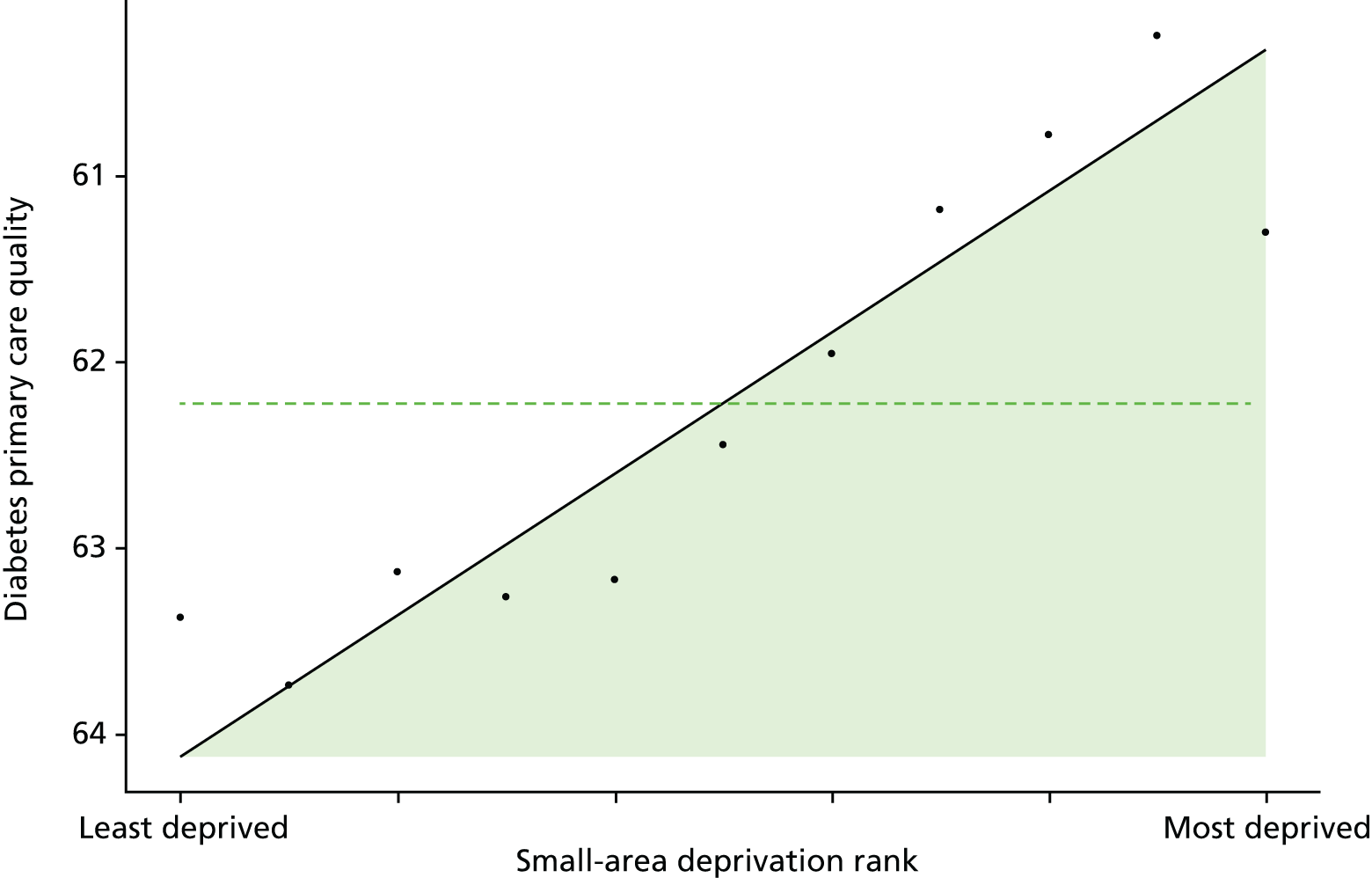

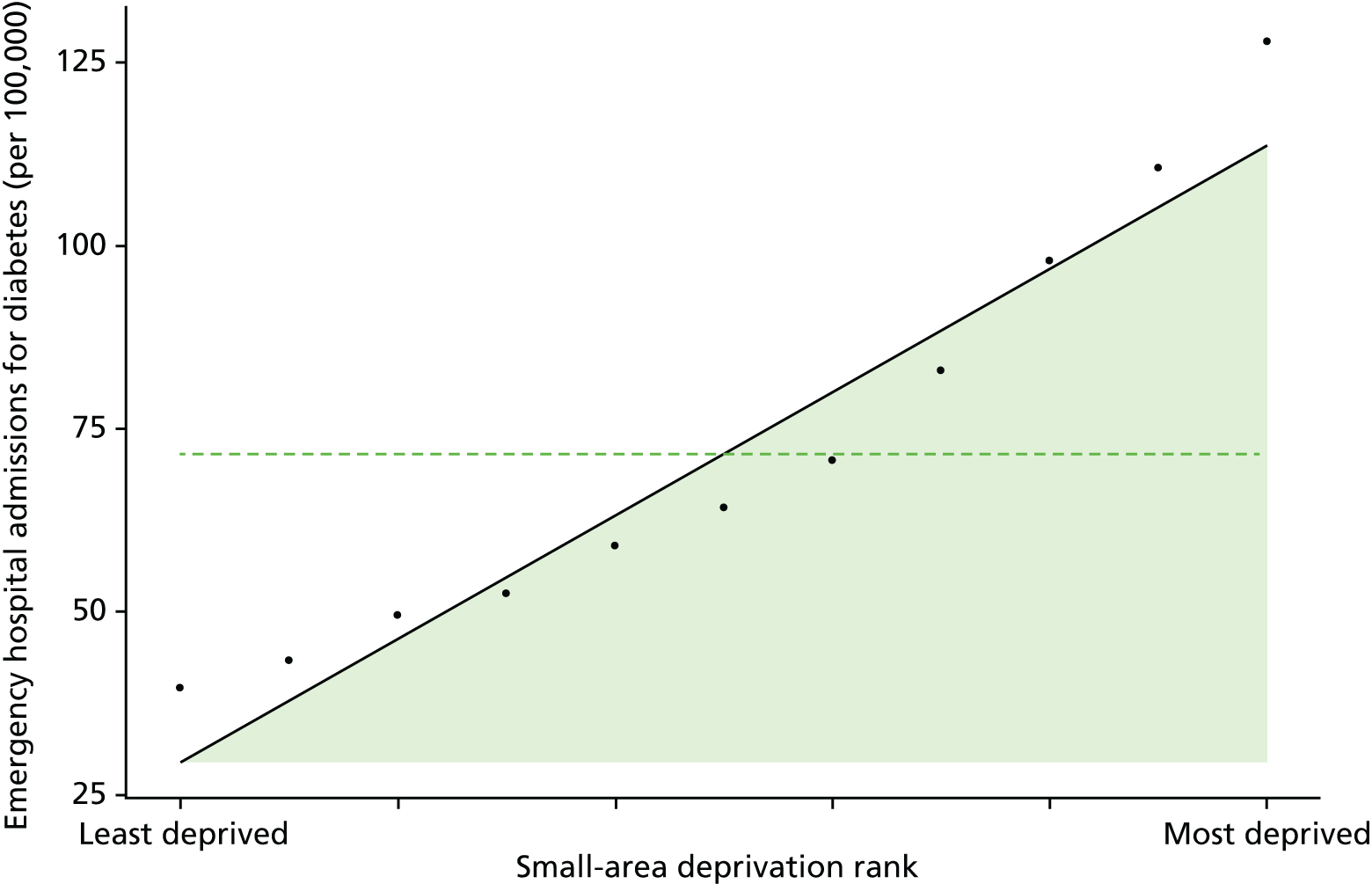

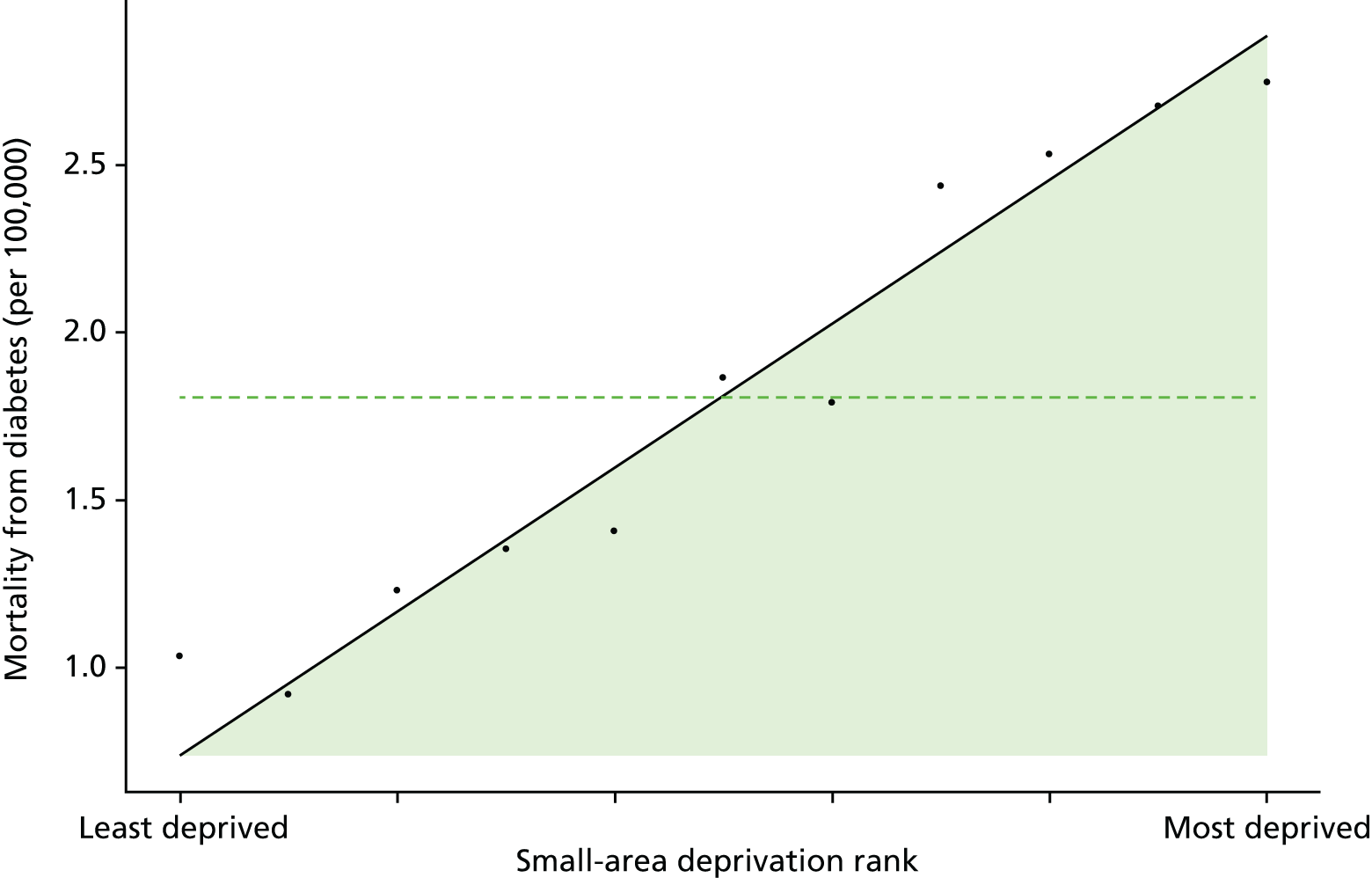

Finally, Figure 3 illustrates our framework for local equity monitoring against a national benchmark. This figure shows socioeconomic inequality in preventable hospitalisation within a fictional local NHS area called ‘Any Town’. The basic idea is to compare the social gradient in health care within Any Town against the social gradient in health care within England as a whole. The social gradient shows the pro-rich link between socioeconomic status and preventable hospitalisation, after allowing for exogenous risk factors influencing preventable hospitalisation that are not under the control of the NHS, in this case, age and sex. As explained above, in Concepts of equity in health care, we would ideally also want to adjust for morbidity, or, more precisely, that part of morbidity that is not under the control of the NHS, but were unable to do so because of data limitations. The relevant NHS equity objective is to reduce the social gradient in health care, both in Any Town and in England as a whole.

FIGURE 3.

Local equity monitoring against a national benchmark: preventable hospitalisation in neighbourhoods in a fictional NHS area (Any Town). Neighbourhoods are ranked by deprivation, with more deprived neighbourhoods to the right.

Any Town has a population of approximately 200,000 people. Each dot represents one of the 125 neighbourhoods in Any Town, each containing approximately 1500 people. The Any Town inequality gradient is simply a regression line fitted through these 125 dots. The England inequality gradient is a regression line fitted through all 32,482 neighbourhoods in England. In this example, Any Town is doing better than England as a whole both for the average patient (a lower average line) and in terms of reducing inequality (a flatter inequality gradient). In this example, these differences are statistically significant and unlikely to be due merely to chance. The NHS may, therefore, be able to learn lessons from Any Town about how to tackle inequality in preventable hospitalisation.
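The comparison of a local gradient with the national gradient can be sketched as two ordinary least-squares fits on the same national deprivation rank scale. The data points below are invented and far fewer than the 125 local and 32,482 national neighbourhoods described above, the function name is hypothetical, and the test of statistical significance mentioned in the text is not reproduced here.

```python
def fit_line(ranks, rates):
    """Ordinary least-squares fit of rate on deprivation rank; returns (intercept, slope)."""
    n = len(ranks)
    mean_x = sum(ranks) / n
    mean_y = sum(rates) / n
    sxx = sum((x - mean_x) ** 2 for x in ranks)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(ranks, rates))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Invented risk-adjusted preventable hospitalisation rates per 1000 people.
# Ranks are national deprivation ranks scaled to 0-1 (1 = most deprived).
national_ranks = [0.1, 0.3, 0.5, 0.7, 0.9]
national_rates = [11.0, 13.0, 15.0, 17.0, 19.0]

# Hypothetical local neighbourhoods, placed on the same national rank scale.
local_ranks = [0.2, 0.4, 0.6, 0.8]
local_rates = [8.8, 9.6, 10.4, 11.2]

national_intercept, national_slope = fit_line(national_ranks, national_rates)
local_intercept, local_slope = fit_line(local_ranks, local_rates)

local_average = sum(local_rates) / len(local_rates)
national_average = sum(national_rates) / len(national_rates)

print(f"national gradient: {national_slope:.1f}, local gradient: {local_slope:.1f}")
print(f"national average: {national_average:.1f}, local average: {local_average:.1f}")
```

In this invented example the local area has both a lower average rate and a flatter gradient than the national benchmark, mirroring the pattern described for Any Town.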

Chapter 2 Public involvement

Introduction

Public involvement was important to our study because one of the main purposes of our indicators is public reporting for democratic accountability, as well as facilitating quality improvement efforts by national and local decision-makers. We therefore wanted to select indicators of socioeconomic inequality in health care that members of the general public will consider meaningful and important. Before selecting our indicators, we considered it important to ask the general public what they view as the most unfair socioeconomic inequalities in health care. We also sought feedback from members of the public to help refine our visualisation tools for communicating the findings of our indicators and to ensure that members of the public can understand our indicators.

This chapter describes how members of the public were involved in this study. They were involved in two ways. First, we conducted a small-scale public consultation exercise in York at the beginning of the study to give us a better understanding of what kinds of socioeconomic inequality in health care are of most concern to members of the public. This involved both an online survey (with 155 responses) and a full-day citizens’ panel meeting (with 29 participants) to gather more in-depth views. Second, two members of the public, recruited via our public consultation exercise, gave feedback throughout the project through their membership of our advisory group.

The primary aim of the public consultation was to identify a list of priority areas for monitoring NHS equity performance. This was achieved by asking the public to consider different types of socioeconomic inequality in health and health care and assess which ones they thought were the most unfair. Our key finding was that the public are concerned to reduce inequality in health-care outcomes, but that their concern for reducing inequalities of access, specifically, for GP supply and hospital waiting times, is at least as strong. This finding influenced the selection of equity indicators for our subsequent analysis. At the inception of the project we had presumed that our indicators would focus on health-care utilisation and outcomes, which are the focus of much current academic literature on equity in health care. However, as a result of our public consultation exercise, as well as further development of our conceptual framework in monitoring equity at multiple stages of the patient pathway, we ensured that both GP supply and hospital waiting time were selected for inclusion in our suite of equity indicators.

A secondary aim of the public consultation was to identify two lay members of the public to join our advisory group to contribute further to the indicator selection process and provide feedback on the design of equity dashboards and other visualisation tools for monitoring equity performance.

This chapter is organised as follows. We start with consultation exercise methods, including the sampling approach, development of questionnaire and data collection. We then present the main quantitative results of the public consultation in terms of people’s responses to questions asking them to assess and rank different kinds of inequality in health and health care by degree of unfairness. The results are presented separately for our online survey and citizens’ panel. We then discuss the process of recruitment of lay members and their contribution to the advisory group and, in particular, the design of visualisation tools. Finally, we conclude by discussing the implications of public involvement for our indicator selection.

Methods of public consultation

Sampling

The survey was conducted in the York area using two modes of administration: (1) a 1-day face-to-face citizens’ panel event (n = 29), and (2) an online survey (n = 155). Participants in both forms of public consultation were recruited in the same way, through advertising and leafleting in the York area as described below. The citizens’ panel event was held in York city on Saturday, 21 September 2013. The online survey was administered between July and September 2013, using a web portal called SmartSurvey™ (SmartSurvey Ltd, Tewkesbury, UK). Citizens’ panel members were paid expenses and an honorarium for devoting a whole day of their time to this, according to National Institute for Health Research (NIHR) and INVOLVE guidance, whereas online survey participants were unpaid. The sampling strategies for both approaches are described below.

The citizens’ panel meeting was advertised in a local monthly magazine called Your Local Link in July and August 2013. The magazine is free of charge and distributed to all homes and businesses across York (35 postcode sectors), targeting all sociodemographic groups. In addition, we distributed 810 leaflets door to door to 10 of the most deprived streets in York [identified as being within the most deprived fifth of neighbourhoods in England according to the Index of Multiple Deprivation (IMD) 2010] to reach a diverse group of participants. We also distributed flyers at two public events as part of the University of York’s Festival of Ideas, which was held in June 2013. Finally, we issued a University of York press release about the citizens’ panel event. A selection of our recruitment materials, together with the participant consent form, is presented in Appendix 6.

A total of 103 individuals made contact with the project administrator for the citizens’ panel event. The contact was made by telephone, e-mail or completion of an online registration form. Thirty places were offered after stratifying respondents based on age, sex and socioeconomic background [established using respondents’ postcode data and information from the Office for National Statistics (ONS) neighbourhood statistics website derived from IMD 2010 deprivation score] and then selecting participants on a ‘first-come-first-served’ basis. A total of 29 participants attended the citizens’ panel event. This resulted in a sample which was 37.9% male (n = 11) and 62.1% female (n = 18); had approximately one-quarter from each main age group (18–34 years, 35–49 years, 50–64 years and ≥ 65 years), although slightly more (around 30%) in the 50–64 years age group; and had respondents in all five deprivation quintile groups with a mean deprivation rank of around 3, which is about average for the England population (see Table 1 for more details).

The online survey was publicised in Your Local Link magazine, on door-to-door leaflets, on the Centre for Health Economics website and on the JISCMail mailing list for health economists. It was also advertised on social media from June 2013, particularly using the Twitter (Twitter, Inc., San Francisco, CA, USA; www.twitter.com) handles of the Centre for Health Economics and the University of York, and Facebook (Facebook, Inc., Menlo Park, CA, USA; www.facebook.com). In addition, individuals who contacted us about the citizens’ panel but were not offered a place were also informed about the online questionnaire.

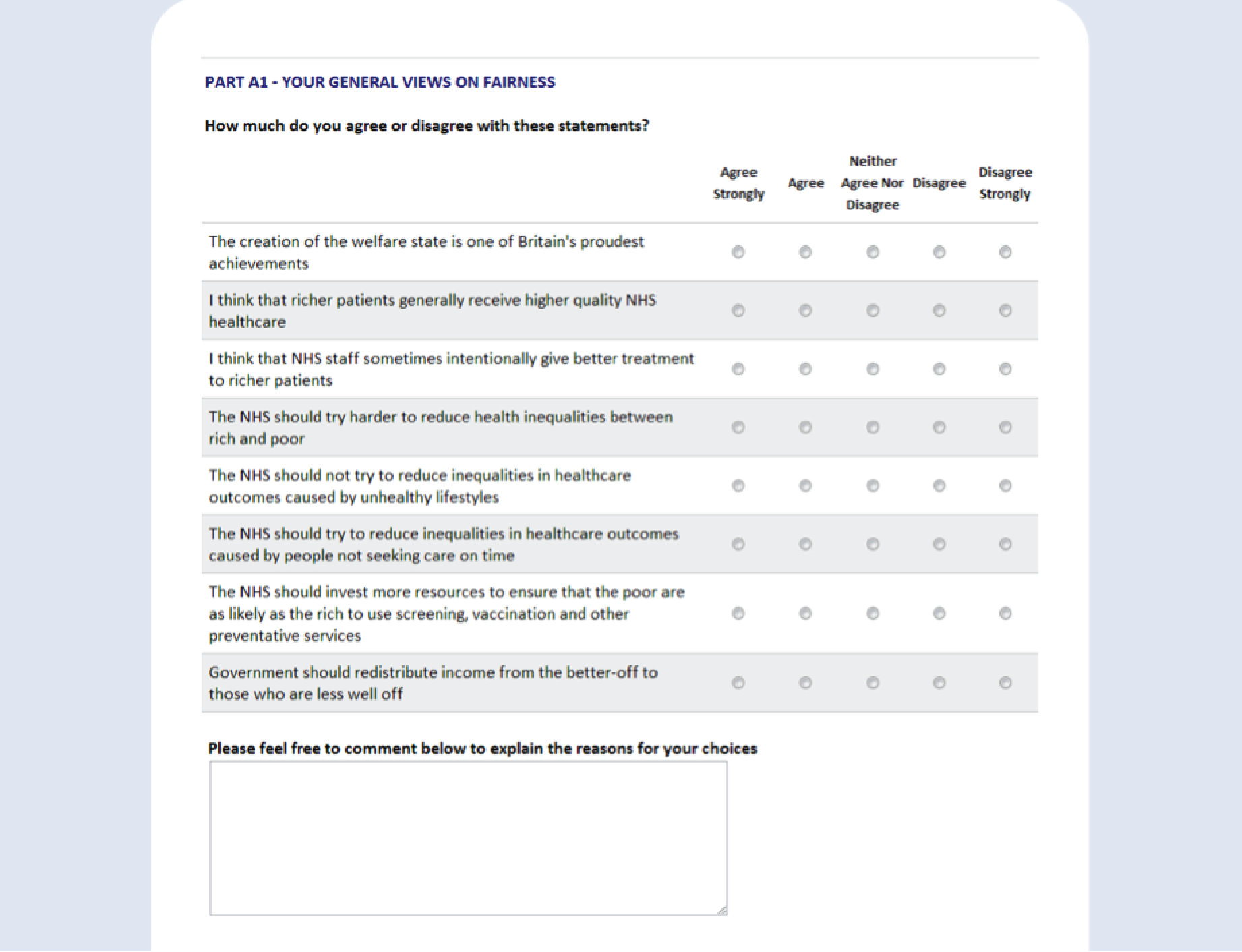

Questionnaire

The questionnaire focused on socioeconomic inequality in the supply, process and outcomes of health care. Statements about inequalities in general (non-disease-specific) health and health care were presented to all participants, who were asked to rate them on a scale of 1 to 10, where 1 is ‘not at all unfair’ and 10 is ‘extremely unfair’ (see Appendix 6). To elicit views about the unfairness of different kinds of inequality, we developed a questionnaire based on the statements listed below, each describing a general kind of socioeconomic inequality in health-care access or outcome. We restricted our selection to inequalities that can in principle be monitored using available data. This constrained our choices considerably, but it was a necessary step because the ultimate aim was to measure and monitor inequality. We piloted these statements on a sample of administrative staff members at the University of York. Based on their feedback, we improved the presentation and clarity of the statements.

- The richest fifth of people in England are more likely than the poorest fifth to have a healthy diet and a healthy level of physical exercise.
- The richest fifth of people in England are served by more GPs than the poorest fifth.
- The richest fifth of people in England are more likely than the poorest fifth to receive routine screening tests (e.g. for bowel cancer).
- The richest fifth of people in England are more likely than the poorest fifth to see a medical specialist when they are ill.
- The richest fifth of people in England wait less time for NHS surgery than the poorest fifth.
- The richest fifth of people in England are less likely than the poorest fifth to die after high-risk surgery (e.g. heart or cancer surgery).
- The richest fifth of people in England are less likely than the poorest fifth to have an emergency hospitalisation preventable by good-quality health care.
- The richest fifth of people in England are less likely than the poorest fifth to die from conditions preventable by good-quality health care.

Respondents were then asked to indicate which of the above inequalities they saw as the most and least unfair. This rating question and a screenshot of the online questionnaire are reproduced in Appendix 6.

We did not present statements about specific clinical disease areas because it was not possible to provide members of the public with adequate clinical and epidemiological information about all the different disease areas that we could have selected. This would have required a series of clinical tutorials taking up more than the full day of discussion. Furthermore, asking people to compare disease areas would probably have shifted the focus of discussion to which diseases are more important, rather than to socioeconomic inequality and fairness in health and health care within each disease area.

Data collection

There were two samples: the citizens’ panel sample and the online sample. The citizens’ panel event involved presentations by facilitators to introduce the questionnaire, interactive discussions in small and large groups, and individual completion of a paper version of the questionnaire. Respondents were split into five pre-arranged groups (four groups of five and one group of four), which were mixed according to age, sex and socioeconomic background. The following people each facilitated a group: Shehzad Ali, Miqdad Asaria, Richard Cookson, Paul Toner and Aki Tsuchiya. A gift payment of £70.00 was offered to all participants in the citizens’ panel event. It was accepted by all except one, who asked for it to be donated to charity.

The online survey was posted on SmartSurvey with the following web link: www.smart-survey.co.uk/s/NHSFairness. The survey included the same inequality statements as the citizens’ panel questionnaire and followed the same format (see Appendix 6). Our online questionnaire was active between June 2013 and September 2013. Respondents could complete the survey anonymously, or leave their name and e-mail address to receive a copy of the findings. No financial incentive was offered for taking part in the online survey because of budget limitations and technical difficulty of arranging payments.

Results of public consultation

Survey sample

In total, 29 individuals participated in the citizens’ panel event in York and 155 individuals completed the online survey. The baseline characteristics of the sample are presented in Table 1. The majority of respondents were female: 62.1% in the citizens’ panel and 66.5% in the online group. The age distribution was similar in both groups and indicates that the survey reached a diverse group of participants. Based on respondents’ postcode information, we calculated their deprivation level using the IMD 2010 data available at the small-area level. Respondents in the two groups were from all five deprivation quintile groups, with the mean deprivation quintile group rank being 3.2 and 3.3 for the citizens’ panel and online groups, respectively (i.e. the average person was in the middle of the five deprivation groups). Respondents were also asked to complete standard questions from the British Social Attitudes survey about attitudes to the welfare state and income redistribution (1 = strongly agree and 5 = strongly disagree). The average score on the statement ‘The creation of the welfare state is one of Britain’s proudest achievements’ was 1.4, showing a high level of agreement (93.1% and 94.8% of respondents agreed or strongly agreed with this statement in the citizens’ panel and online samples, respectively). This is much higher than in the 2014 British Social Attitudes survey, in which 56% of respondents agreed or strongly agreed with this statement. This reflects the general point that public consultation exercises about equity are more likely to recruit individuals who care about equity issues.

| Variable | Citizens’ panel (n = 29): statistic | Citizens’ panel (n = 29): n | Online group (n = 155): statistic | Online group (n = 155): n |
|---|---|---|---|---|
| Baseline | | | | |
| Male (%) | 37.9 | 11 | 33.5 | 52 |
| Age, years (%) | | | | |
| < 18 | 0.0 | 0 | 0.6 | 1 |
| 18–34 | 27.6 | 8 | 24.5 | 38 |
| 35–49 | 20.7 | 6 | 23.2 | 36 |
| 50–64 | 31.0 | 9 | 34.8 | 54 |
| ≥ 65 | 20.7 | 6 | 16.8 | 26 |
| Deprivation quintile (%) | | | | |
| Quintile 1 (most deprived quintile) | 13.8 | 4 | 16.2 | 19 |
| Quintile 2 | 20.7 | 6 | 17.1 | 20 |
| Quintile 3 | 20.7 | 6 | 18.8 | 22 |
| Quintile 4 | 20.7 | 6 | 19.7 | 23 |
| Quintile 5 (least deprived quintile) | 24.1 | 7 | 28.2 | 33a |
| Social attitude statementsb (mean) (1 = strongly agree; 5 = strongly disagree) | | | | |
| The creation of the welfare state is one of Britain’s proudest achievements | 1.4 | 29 | 1.4 | 154 |
| Government should redistribute income from the better off to those who are less well off | 3.0 | 29 | 2.2 | 154 |
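
The mean deprivation quintile ranks quoted in the text (3.2 and 3.3) can be checked against the quintile counts in Table 1. The short Python sketch below is illustrative only and is not part of the original analysis. It assumes that the mean rank is a simple count-weighted average of the quintile numbers 1 to 5, and the variable and function names are hypothetical. The online counts sum to 117 rather than 155, which this sketch takes as given.

```python
# Quintile counts copied from Table 1 (quintile 1 = most deprived).
panel_counts = {1: 4, 2: 6, 3: 6, 4: 6, 5: 7}
online_counts = {1: 19, 2: 20, 3: 22, 4: 23, 5: 33}


def mean_quintile(counts):
    """Count-weighted mean of the quintile ranks (assumed definition)."""
    total = sum(counts.values())
    return sum(rank * n for rank, n in counts.items()) / total


panel_mean = mean_quintile(panel_counts)
online_mean = mean_quintile(online_counts)

print(round(panel_mean, 1), round(online_mean, 1))  # prints: 3.2 3.3
```

Under that assumption, both values agree with the figures reported in the text to one decimal place.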

Ranking of unfair inequalities

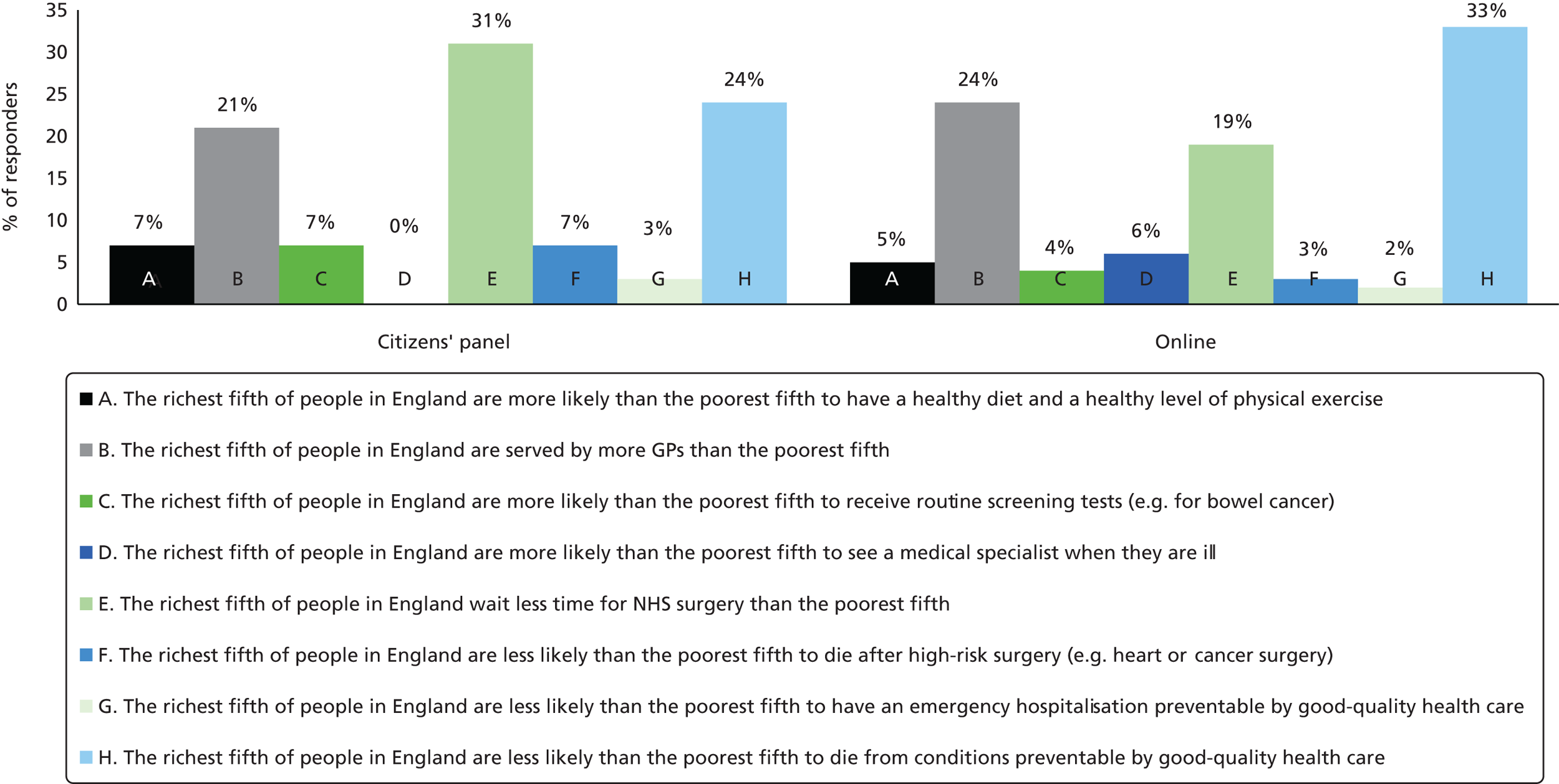

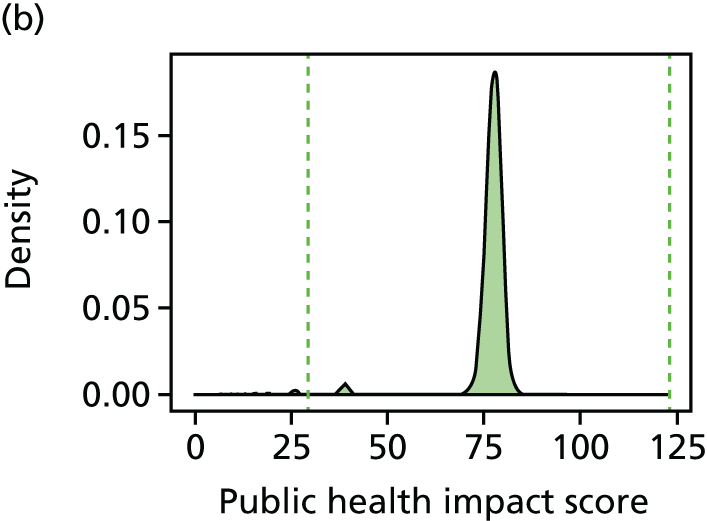

All participants responded to the question about the most unfair socioeconomic inequalities in health and health care. Figure 4 presents the full distribution of responses to the question about the most unfair inequality. The citizens’ panel group ranked socioeconomic inequality in waiting time for surgery as the most unfair (31%), whereas the online group ranked inequality in death from conditions preventable by good-quality health care as most unfair (33%).

FIGURE 4. Choice of the most unfair type of inequality in the citizens’ panel and online samples.

However, the same three types of inequality were chosen most often as the most unfair by both citizens’ panel participants and online survey respondents:

- The richest fifth of people in England wait less time for NHS surgery than the poorest fifth (31% of citizens’ panel participants and 19% of online respondents ranked this as the most unfair inequality).
- The richest fifth of people in England are less likely than the poorest fifth to die from conditions preventable by good-quality health care (24% of citizens’ panel participants and 33% of online respondents ranked this as the most unfair inequality).
- The richest fifth of people in England are served by more GPs than the poorest fifth (21% of citizens’ panel participants and 24% of online respondents ranked this as the most unfair inequality).

Rating of unfair inequalities

We also asked respondents to rate how unfair they think each type of inequality is on a scale of 1–10, where 1 is ‘not at all unfair’ and 10 is ‘extremely unfair’. All respondents in the citizens’ panel and online groups completed the rating scale. Table 2 summarises the results of the level of perceived unfairness of different types of inequality. The table shows that all forms of socioeconomic inequalities in health and health care were considered unfair by both the citizens’ panel and online groups. Based on mean scores, the citizens’ panel group rated the following inequalities as particularly unfair: waiting time for NHS surgery; supply of GPs; and routine screening tests. Similarly, based on average scores, the online group rated the following inequalities as particularly unfair: waiting time for NHS surgery; supply of GPs; and death from conditions preventable by good-quality health care.

| Statements | Citizens’ panel: mean | Citizens’ panel: median | Citizens’ panel: % of responses with a score of ≥ 6 | Online sample: mean | Online sample: median | Online sample: % of responses with a score of ≥ 6 |
|---|---|---|---|---|---|---|
| A. The richest fifth of people in England are more likely than the poorest fifth to have a healthy diet and a healthy level of physical exercise | 6.69 | 7 | 62 | 6.52 | 7 | 60 |
| B. The richest fifth of people in England are served by more GPs than the poorest fifth | 8.07 | 8 | 83 | 8.67 | 10 | 91 |
| C. The richest fifth of people in England are more likely than the poorest fifth to receive routine screening tests (e.g. for bowel cancer) | 8.10 | 8 | 83 | 7.92 | 9 | 80 |
| D. The richest fifth of people in England are more likely than the poorest fifth to see a medical specialist when they are ill | 7.31 | 8 | 76 | 8.02 | 9 | 83 |
| E. The richest fifth of people in England wait less time for NHS surgery than the poorest fifth | 8.41 | 8 | 86 | 8.76 | 10 | 91 |
| F. The richest fifth of people in England are less likely than the poorest fifth to die after high-risk surgery (e.g. heart or cancer surgery) | 7.79 | 9 | 79 | 7.51 | 8 | 72 |
| G. The richest fifth of people in England are less likely than the poorest fifth to have an emergency hospitalisation preventable by good-quality health care | 7.34 | 8 | 79 | 7.99 | 9 | 82 |
| H. The richest fifth of people in England are less likely than the poorest fifth to die from conditions preventable by good-quality health care | 7.93 | 9 | 79 | 8.05 | 9 | 82 |
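
The statements described in the text as ‘particularly unfair’ correspond to the three highest mean scores in each sample in Table 2. The Python sketch below is an illustrative check rather than the authors’ own procedure. It assumes that ‘particularly unfair’ means the top three statements by mean rating, and the dictionary and function names are hypothetical.

```python
# Mean unfairness ratings copied from Table 2 (statement label -> mean).
panel_means = {"A": 6.69, "B": 8.07, "C": 8.10, "D": 7.31,
               "E": 8.41, "F": 7.79, "G": 7.34, "H": 7.93}
online_means = {"A": 6.52, "B": 8.67, "C": 7.92, "D": 8.02,
                "E": 8.76, "F": 7.51, "G": 7.99, "H": 8.05}


def top_three(means):
    """Return the three statement labels with the highest mean rating."""
    return sorted(means, key=means.get, reverse=True)[:3]


print(top_three(panel_means))   # prints: ['E', 'C', 'B']
print(top_three(online_means))  # prints: ['E', 'B', 'H']
```

Statements E (waiting time for NHS surgery) and B (supply of GPs) appear in the top three for both samples. The third place goes to C (routine screening tests) in the citizens’ panel and to H (death from preventable conditions) in the online sample.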

Role of the lay members of the advisory group

Two participants at the citizens’ panel event were invited to join our advisory group as lay members, to contribute to the research design and, in particular, the process of indicator selection and design of dashboards and other visualisation tools for monitoring changes in NHS equity performance. The selection of lay members was based on their interest in the subject of inequalities in health and health care, willingness to contribute to this project, experience of using NHS health services, ability to communicate with members of the team and availability to join meetings in York and London. Based on these criteria, one male and one female participant were invited to join the advisory group.

The lay members attended all three advisory group meetings in London, took part in additional face-to-face discussions, and reviewed and commented on relevant documents. More specifically, the lay members contributed to the project in the following ways:

- They contributed to discussions on the choice of equity indicators that matter to the general public and, therefore, should be considered for monitoring equity performance.
- They provided useful advice about dashboard design to improve presentation and interpretation.

The lay members commented on the prototype NHS equity dashboard designs, as a result of which we revised and simplified the designs to reduce ‘clutter’ on the graphical displays, and added arrows as well as traffic-light colours to help colour-blind users and people who print out in black and white. The lay members also commented on the different types of graph in the chart pack, and reassured us that our graphs were clear and informative to non-expert audiences.

Our lay members agreed in the final project meeting that the current one-page summary dashboard style presents useful information to NHS and public health experts. However, they thought that this concise format may not be appropriate for communication to the public, as it provides too much information in a small space. They advised that public reporting would require a different kind of infographic design tailored to public audiences. They suggested that the dashboard presentation would be useful to health experts once they are familiar with the dashboard design and have read the accompanying material, but proposed that clear notes accompanying the dashboard would be useful to help interpretation.

Conclusion