Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 12/136/31. The contractual start date was in March 2014. The final report began editorial review in December 2015 and was accepted for publication in May 2016. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None.

Disclaimer

This report contains transcripts of interviews from studies identified during the course of the research and contains language that may offend some readers.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2017. This work was produced by Greenhalgh et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Introduction and overview

Background and rationale for the review

In this chapter we provide an overview of the background of, and rationale for, the review. We then summarise the aims and objectives of the review, and provide an overview of our methodological approach: realist synthesis. We then outline the structure of the report.

Definitions and policy context

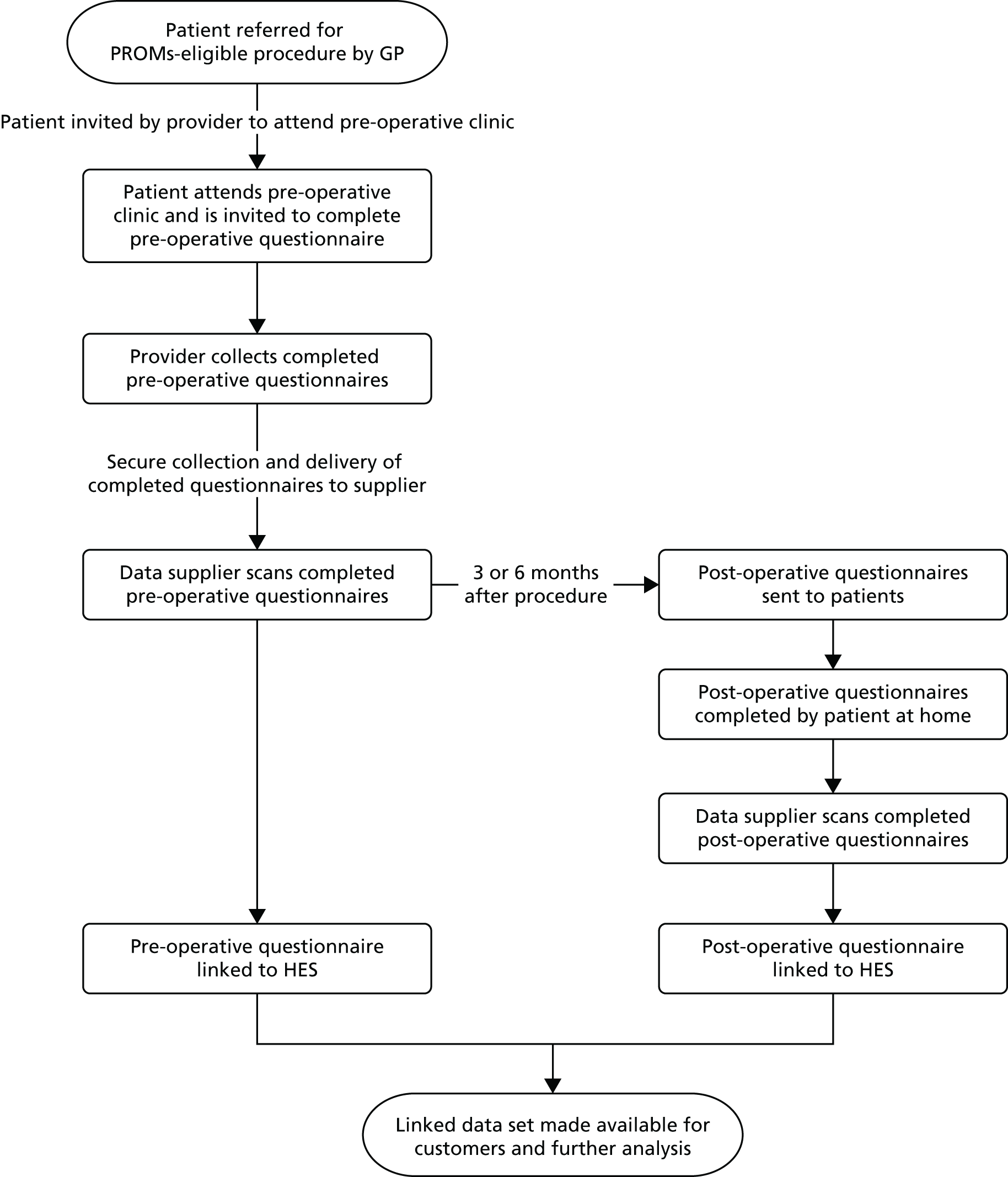

Patient-reported outcome measures (PROMs) are questionnaires that measure patients’ perceptions of the impact of a condition and its treatment on their health. 1 Many of these measures were originally designed for use in research to ensure that the patient’s perspective was integrated into assessments of the effectiveness and cost-effectiveness of care and treatment. 2 Over the last 5 years, the routine collection of PROMs data has played an increasing role in health policy in England, with the introduction of the national PROMs programme in the NHS. The original challenge that led the Department of Health to pilot the routine collection of PROMs data in 2007 was a demand management issue: to assess whether or not surgery for certain conditions was overutilised. 3 However, more recent findings showing the gradient in use of these interventions by social class and ethnicity have led to calls for PROMs to be used to improve the equity of care. 4 In 2008, the Darzi report5 called for the routine collection of PROMs data to benchmark provider performance, assess the appropriateness of referrals, support the payment of providers by results and support patient choice of provider. The public reporting of PROMs data to support the patient choice agenda was given further impetus in the 2010 government White Paper,6 which set out that ‘Success will be measured . . . against results that really matter to patients’ and that ‘Patients . . . will have more choice and control, helped by easy access to the information they need about the best GPs [general practitioners] and hospitals’ (© Crown Copyright; contains public sector information licensed under the Open Government Licence v3.0). Most recently, in the light of the Francis report,7 it is planned to introduce single aggregated ratings, and to develop ratings of hospital performance at department level to support public accountability and patient choice. 8

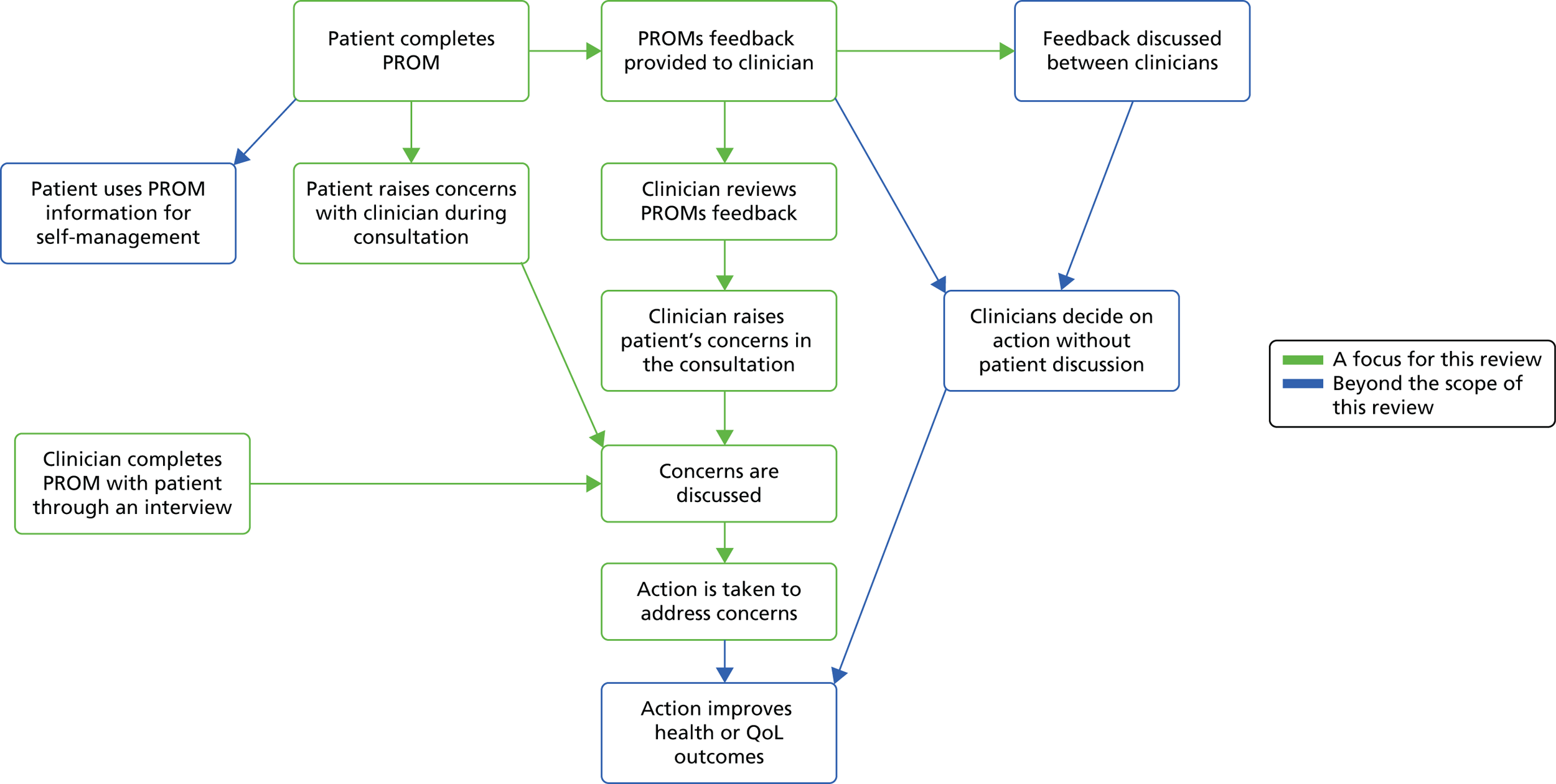

Alongside the use of PROMs data at an aggregate level, the routine collection and use of PROMs data at the individual patient level has also become more widespread, although in a less co-ordinated way, with individual clinicians using PROMs on an ad hoc basis, often with little guidance. 9–11 At the individual level, the intention of PROMs feedback is to improve the detection of patient problems, to support clinical decision-making about treatment through ongoing monitoring and to empower patients to become more involved in their care. 12,13

Despite the fact that the ambitions for the usage of PROMs data have multiplied, PROMs research has focused on form rather than function. There is a substantial body of evidence on the psychometric properties of PROMs, but less attention has been given to clarifying the subsequent decisions and actions that the measures are intended to support. 12 For example, careful deliberation went into selecting the instruments for the UK PROMs programme and piloting the feasibility of their collection,14 but the precise mechanisms through which PROMs data will improve the quality of patient care for each of their intended functions have been less well articulated. 12 Furthermore, there are inherent tensions between the different uses of PROMs data that may influence how these data are collated and interpreted and, thus, the success of PROMs initiatives. 15,16 For example, individualised measures, where the patient specifies the domains to be measured, may be more relevant to patients and, thus, better support patient involvement in their care than standardised measures. 17 However, such personalised measures may lose their meaning when used at the group level, and thus may not be adequate reflections of the quality of patient care. Accordingly, there is a significant need for research that clarifies the different functions of PROMs feedback and delineates more clearly the processes through which they are expected to achieve their intended outcomes.

Despite the relatively recent introduction of PROMs to the NHS, the underlying reasoning about how PROMs data will be mobilised is familiar, and has a long and somewhat chequered history. For example, the use of aggregated PROMs data to benchmark provider performance and the public reporting of these data to inform consumer choice share many of the assumptions and some of the drawbacks of other ‘feedback’ or ‘public disclosure’ interventions (e.g. hospital star ratings in the UK18 and surgical mortality report cards in the USA19). These interventions may improve patient care through a ‘change’ pathway [whereby providers initiate quality improvement (QI) activities to improve the quality of patient care] or a ‘selection’ pathway (whereby patients choose a high-quality hospital). 20 Evidence across a range of different forms of this intervention suggests that the public reporting of performance data results in improvements in performance in situations in which the named party is motivated to maintain their market share; the reporting occurs alongside other market sanctions (e.g. financial incentives); the public reporting carries intensive but controllable media interest; the disclosed data are unambiguous in classifying poor and high performers; and the reporting authority is trusted by those who receive the data. 21 The evaluation and implementation of the public reporting and feedback of PROMs data will benefit from a careful review of the extent to which equivalent conditions apply to the impact of public dissemination of these data on the quality of patient care. At the individual level, PROMs feedback for detection and monitoring of patients’ problems can be seen as an attempt to modify clinical judgement with encoded, standardised knowledge as part of the move towards scientific-bureaucratic medicine. 22 The intention of increasing patient involvement in the consultation bears the hallmarks of other collaborative care interventions,23 and much can be learned by reviewing common underlying mechanisms. As PROMs feedback is rolled out to other services and settings, it is vital that such cumulative evidence on parallel interventions informs future implementation.

Existing evidence

There are currently no systematic reviews examining the feedback of aggregate PROMs data to improve patient care. Boyce and Browne’s24 systematic review examined PROMs feedback in the care of individual patients and at the aggregate level. They found only one study25 examining the use of aggregate PROMs data, in which physicians were randomised either to receive peer comparison feedback on the functioning of older patients in their care or to be told that the functioning of their older patients would be monitored. This study found no statistically significant differences in patient functional status between patients in the intervention and control groups. There are four reviews of the feedback of performance data. 19,26–28 In general, these reviews found a small decline in mortality following public reporting after controlling for underlying downward trends in mortality; however, individual studies varied in their findings. For example, studies examining the impact of cardiac public reporting programmes on mortality rates found a variable picture: eight studies found a decrease in mortality rates over time,29–36 while another four studies37–40 found no changes in mortality rates over time. Similarly, although most studies examining the impact of public reporting on process indicators found an improvement in hospital quality, this varied from a ‘slight’ improvement to a ‘significant’ improvement. However, the reviews also found little evidence that the public reporting of performance data stimulated changes in hospitals’ market share, suggesting that patients may not change hospitals in response to the public reporting of quality data. We consider these reviews in more detail in Chapter 4 of this report.

There are 16 reviews of the quantitative/randomised controlled trial (RCT) literature on the feedback of individual-level PROMs data24,41–56 and one review currently in progress. 57 There is also one review of qualitative studies58 and four mixed-method reviews. 59–62 Thus, there are a total of 21 existing reviews examining the feedback of individual PROMs data in patient care. Of these reviews, one focused on screening for mental health problems51 in primary and secondary care, and four others focused on the use of PROMs feedback in specialist mental health settings. 41,45,47,50 Four reviews focused on the use of PROMs in oncology settings,42,43,46,55 one review focused on the use of PROMs as a means of screening for cancer-related distress56 and two reviews focused on the use of PROMs feedback in palliative care settings. 59,60 One review focused on feedback of PROMs data to allied health professionals. 61 Three reviews attempted to identify the ‘barriers and facilitators’ to PROMs feedback in clinical practice;58,59,61 two reviews adopted a theory-driven approach;60,62 and one review combined a ‘review of reviews’ with existing conceptual frameworks of PROMs feedback, but focused on synthesising the quantitative evidence. 43

Thus, there is a large volume of literature examining the impact of individual PROMs feedback in clinical practice, and reviewers have dissected and grouped the literature in a number of different ways, for example by condition or by setting. Furthermore, even for those reviews that have focused on the same condition or setting, differences in search methods and in inclusion and exclusion criteria have resulted in these reviews including overlapping, but different, groups of studies. For example, both Chen et al. 43 and Kotronoulas et al. 42 examined the impact of PROMs feedback in oncology settings, and both reviews included both RCTs and quasi-experimental studies. Chen et al. 43 identified 27 eligible studies and Kotronoulas et al. 42 identified 24 eligible studies, reported in 26 papers. However, only 16 papers are common to both reviews; 10 papers appear only in Chen et al. 43 and nine papers appear only in Kotronoulas et al. 42 The reviews also vary in their synthesis methodology; most adopted a narrative overview, but those with a more narrowly focused review question used a meta-analysis. 47,51 However, although a range of synthesis methods has been used, the reviews are dominated by traditional systematic reviews of RCTs.

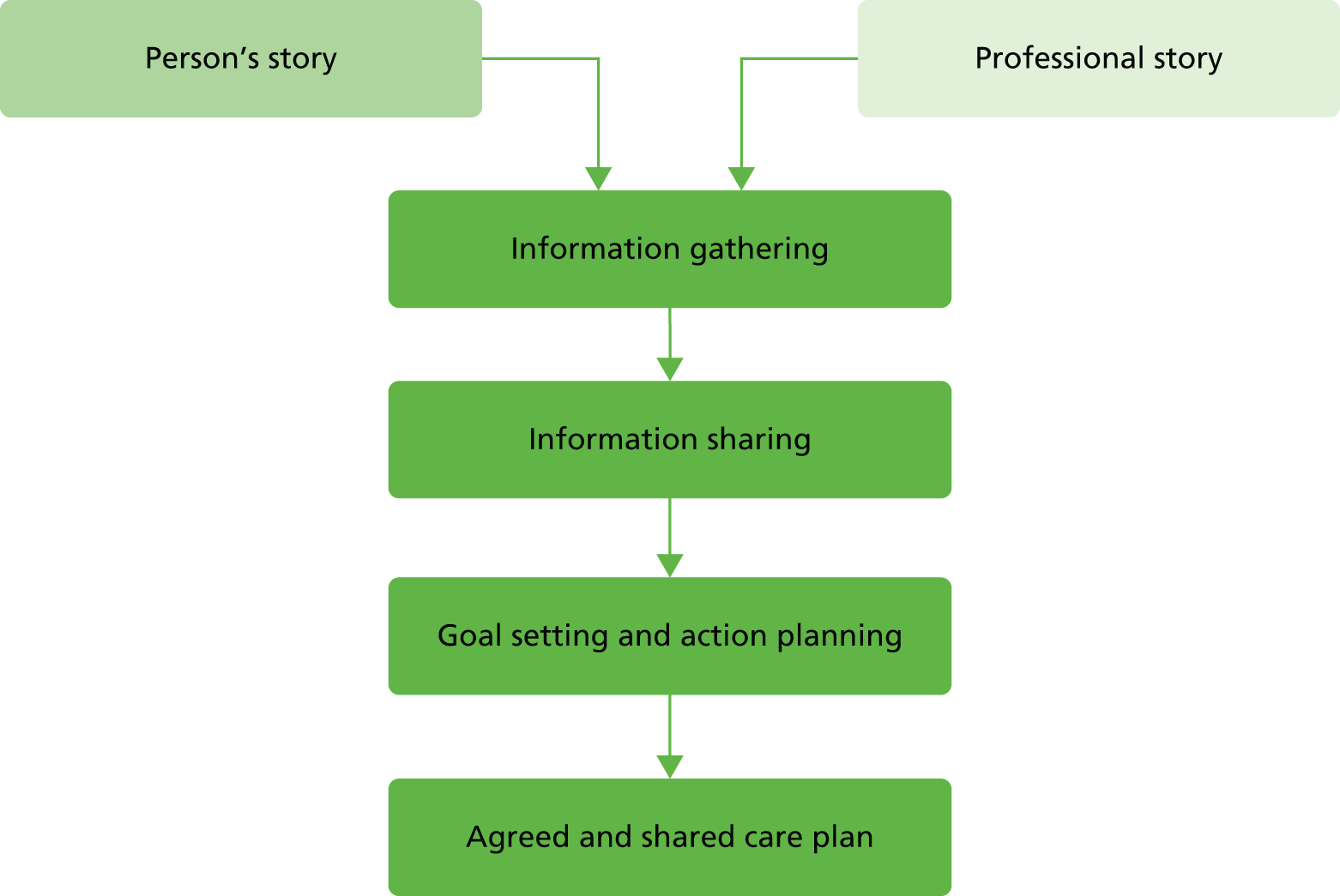

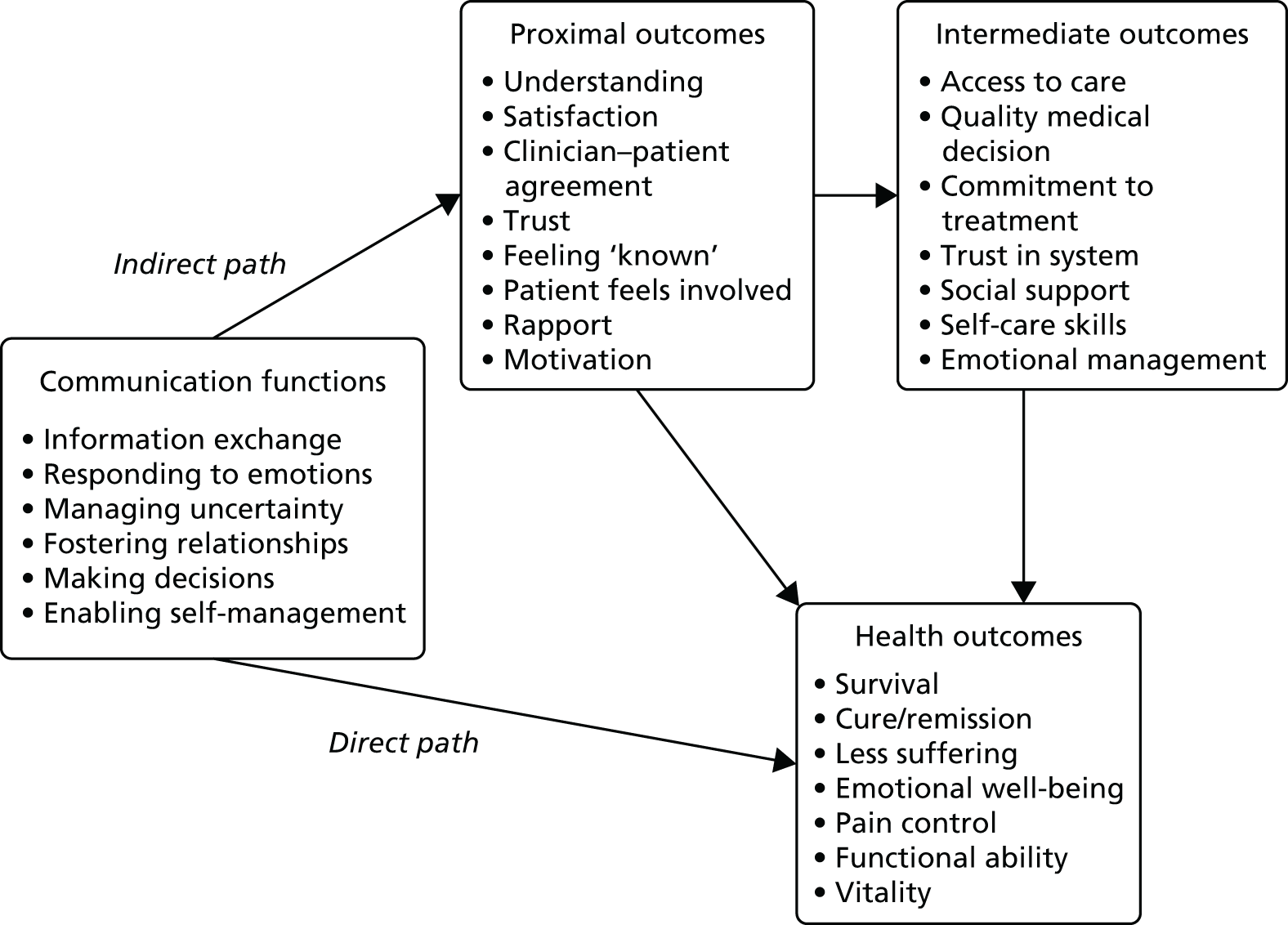

It is not our intention to provide a detailed analysis of the findings of each review. Here we present a brief overview of their findings in order to highlight outcome patterns that will be explored during our synthesis. Those adopting a traditional systematic review methodology to survey the entire literature have, in general, found it difficult to reach firm conclusions about the impact of PROMs feedback on the process and outcomes of patient care, largely owing to the heterogeneity of the intervention itself, and the wide range of indicators used to assess its impact. 48 There is some evidence to suggest that the purpose or function of PROMs feedback may influence its impact, with greater impact on patient outcomes when PROMs are used to monitor patient progress over time in specific disease populations, rather than as a screening tool. 24 One common pattern evident in these reviews is that PROMs feedback has a greater impact on clinician–patient communication, the provision of advice or counselling and the detection of problems than on patient management and subsequent patient outcomes. 48,49

This general conclusion is also mirrored in the reviews focusing on oncology. 42,43 For example, Chen et al. 43 found ‘strong’ evidence that the feedback of PROMs data improves patient–clinician communication, and ‘some’ evidence that it improves the monitoring of treatment response and the detection of patients’ problems. However, they found ‘weak but positive evidence’ that PROMs feedback leads to changes in patient management, and ‘a great degree of uncertainty’ regarding whether or not PROMs feedback improves patient outcomes. Chen et al. 43 suggested that greater impact of PROMs feedback may be found where PROMs are fed back for a sustained period of time to multiple stakeholders, with feedback that is clear and easy to understand, and sufficient training for health professionals. Kotronoulas et al. 42 found significant increases in the frequency of discussions ‘pertinent to patient outcomes’, but little impact on referrals or clinical actions in response to PROMs data. This suggests that there may be a ‘blockage’ between the identification and discussion of the issues raised by PROMs and the ways in which clinicians respond to these issues.

The review of qualitative evidence58 provides some further possible explanations for these findings, which can be explored in our synthesis. This review found that clinicians sometimes questioned the validity of PROMs data, and expressed concerns about the lack of clarity regarding whether PROMs data were intended for use to inform clinical care or to monitor the quality of the service. PROMs feedback was more likely to inform patient management when it provided new information to clinicians. This review also identified a number of unintended consequences of PROMs feedback. In line with some of the theories we discuss in Chapter 8, the intrusive nature of incorporating discussion of PROMs data into the consultation was, in some circumstances, perceived to affect the patient–health-care practitioner interaction. The review found some evidence that, rather than opening up the consultation, PROMs feedback may narrow its focus, and that certain questions may distress patients and, thus, damage the patient–health-care practitioner relationship.

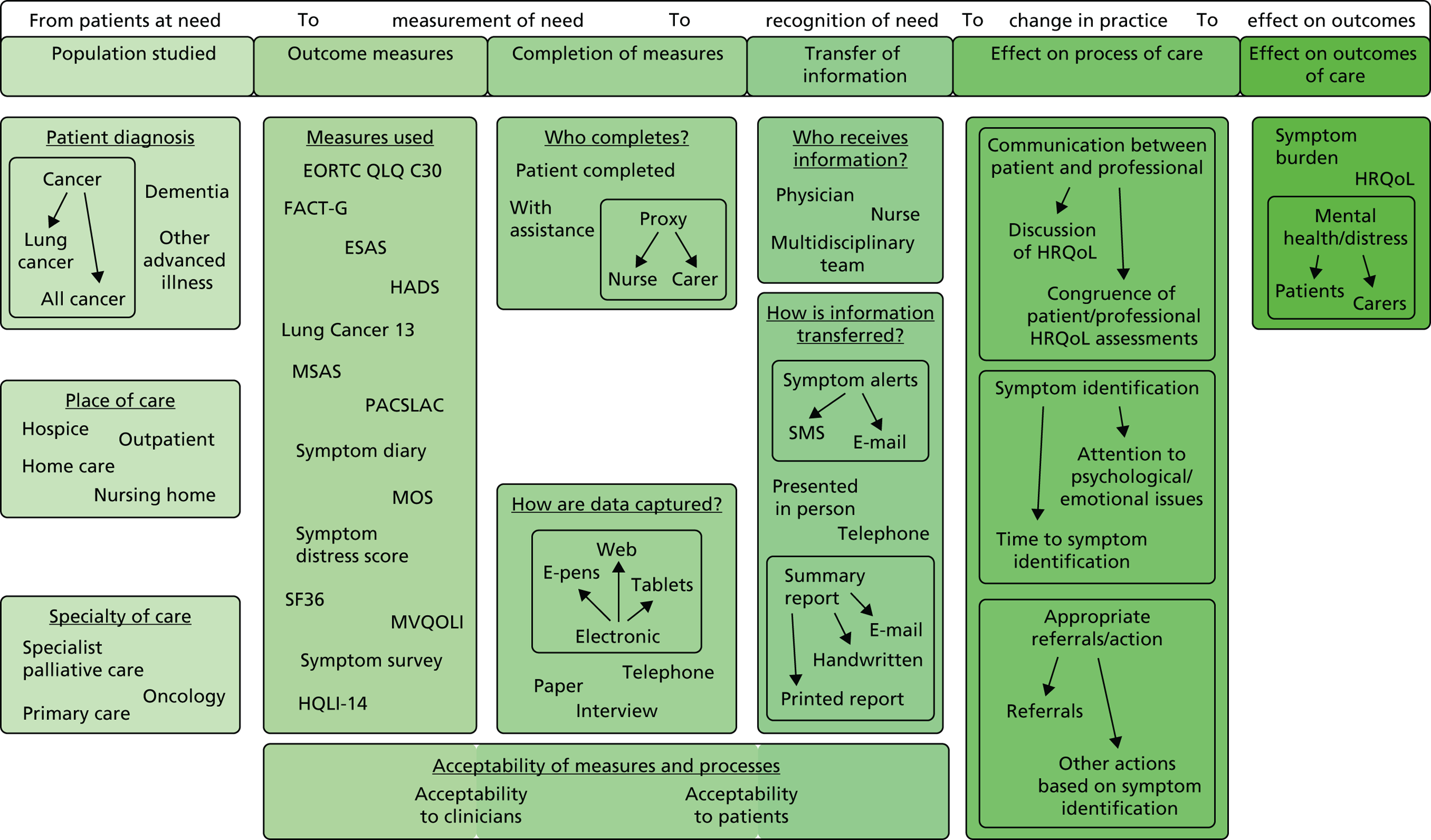

Thus, evaluating and reviewing the evidence of PROMs feedback is a challenge for several reasons, all of which arise from the complexity of the intervention. First, PROMs feedback is unavoidably heterogeneous and varies by PROM used, the purpose of the feedback, the patient population, the setting, the format and timing of feedback, the recipients of the information and the level of aggregation of the data. 12 Therefore, there is a need for review methods that explicitly take into account the heterogeneity of the intervention, and seek to understand how this shapes intervention success.

Second, the implementation chain from feedback to improvement has many intermediate steps and may only be as strong as its weakest link. 62 At an individual level, PROMs feedback may improve communication and detection of patient problems, but may have less impact on patient management or health status. 48 However, its impact on communication during the consultation is not uniform and depends on the nature of patients’ problems. In oncology, where there is most evidence that PROMs influence communication, clinicians were more likely to discuss symptoms with their patients in response to PROMs feedback, but not psychosocial issues. 63,64 We are confronted with the cautionary hypothesis that PROMs feedback may not result in further discussion or the offer of symptomatic treatment because high PROMs scores (suggesting high disease impact) do not always represent a problem for the patient or a problem that clinicians perceive as falling within their remit to address. 65

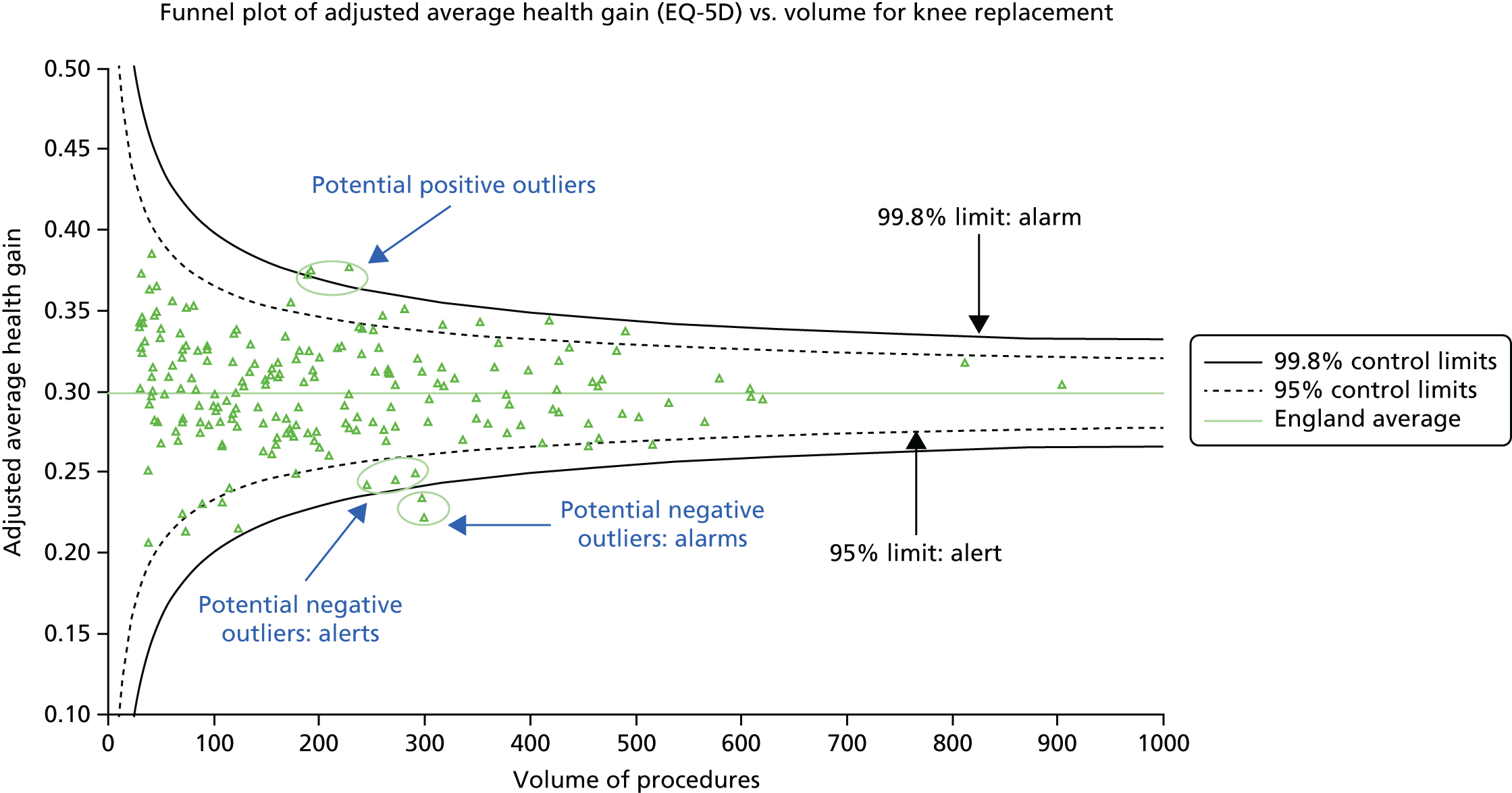

At an aggregate level, there are many organisational, methodological and logistical challenges to the collation, interpretation and then utilisation of PROMs data. 66 These include reducing the risk of selection bias, as older, sicker patients are less likely to complete PROMs;67 reducing the variation in recruitment rates in PROMs data collection across NHS trusts;68 ensuring that procedures are in place to adequately adjust for case mix;69,70 collecting the data at the right point in the patient’s pathway; and summarising this information in a way that is interpretable to different audiences. 71 In summary, a number of potential obstacles may prevent PROMs feedback from achieving its intended outcome of improving patient care, or may allow it only partial success. There is a need to pinpoint these obstacles or blockages more systematically in terms of their location in the implementation chain, and to identify the circumstances in which they occur and those in which they can be overcome.

Third, the success of PROMs feedback is context dependent, and these contextual differences influence the precise mechanisms through which it works and, thus, its impact on patient care. For example, using PROMs data as an indicator of service quality for surgical interventions in acute care is very different from their use as a quality indicator of GPs’ management of long-term conditions in primary care. The impact of surgery on disease-specific PROMs and knowledge of the natural variability of scores has been well documented,72 but this knowledge is lacking regarding the impact of primary care on PROM scores. 73 At an individual level, surgeons are specialised and need only interpret the PROMs data in their specialty. In contrast, GPs manage patients with different long-term conditions, and need to make sense of data from different PROMs, or to disentangle the impact of different conditions on PROMs scores. The interpretation of the meaning of changes is, therefore, very different in each context.

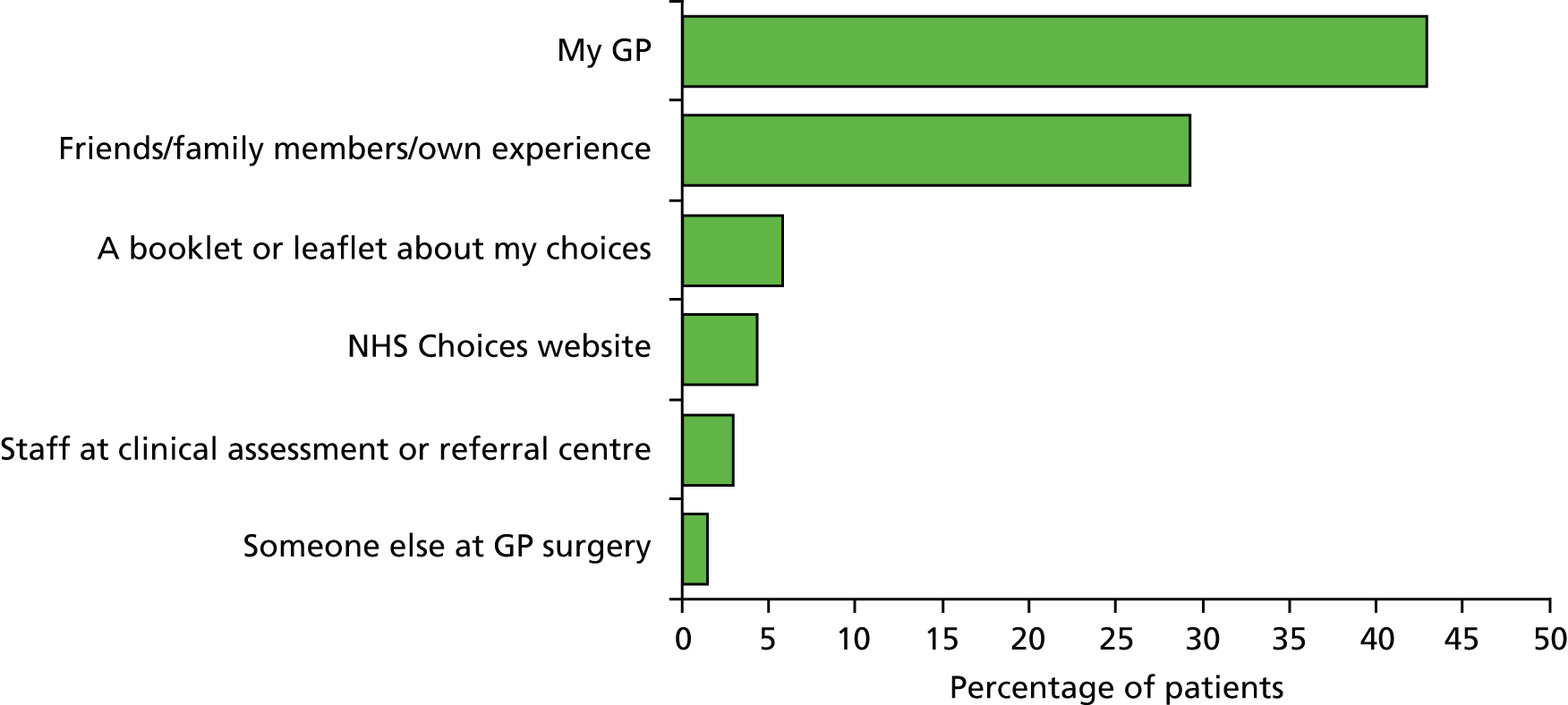

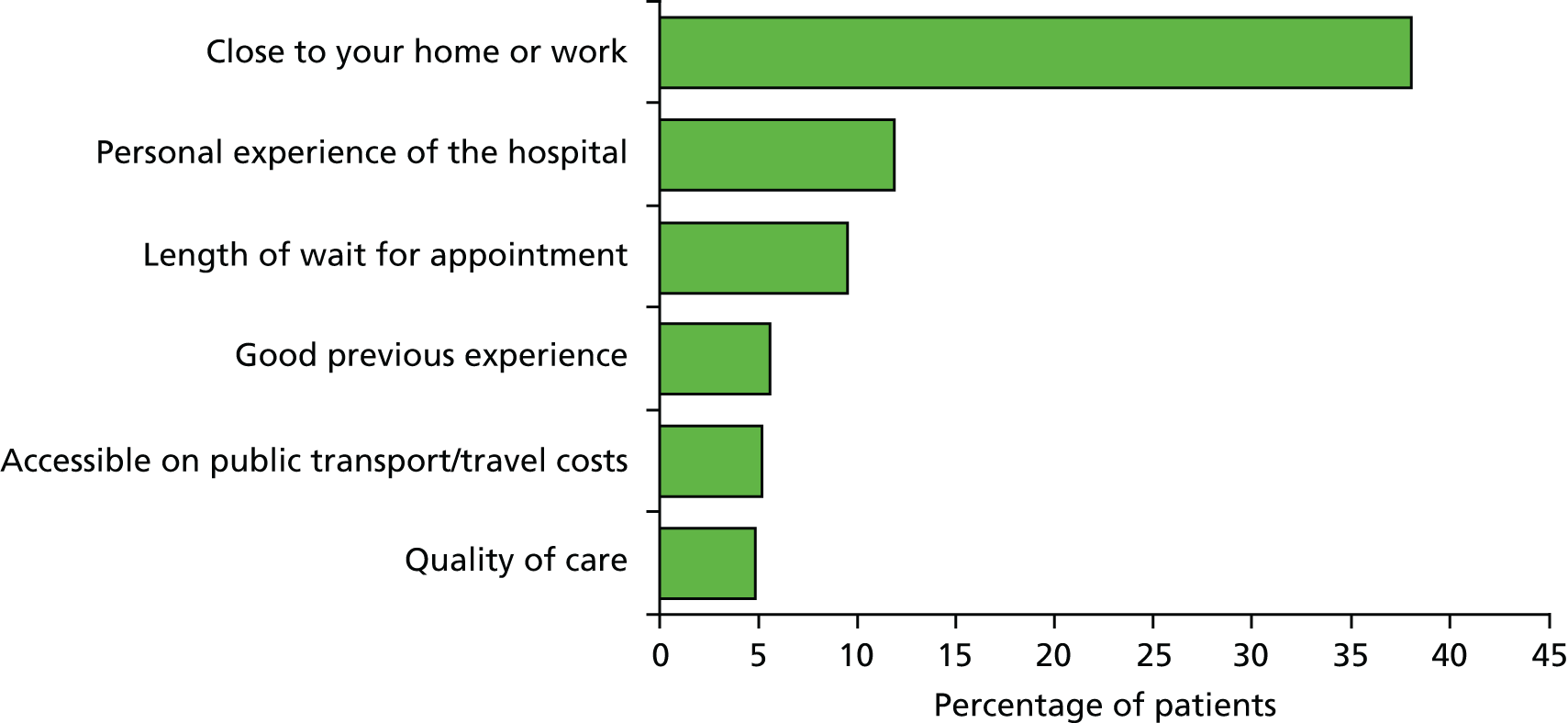

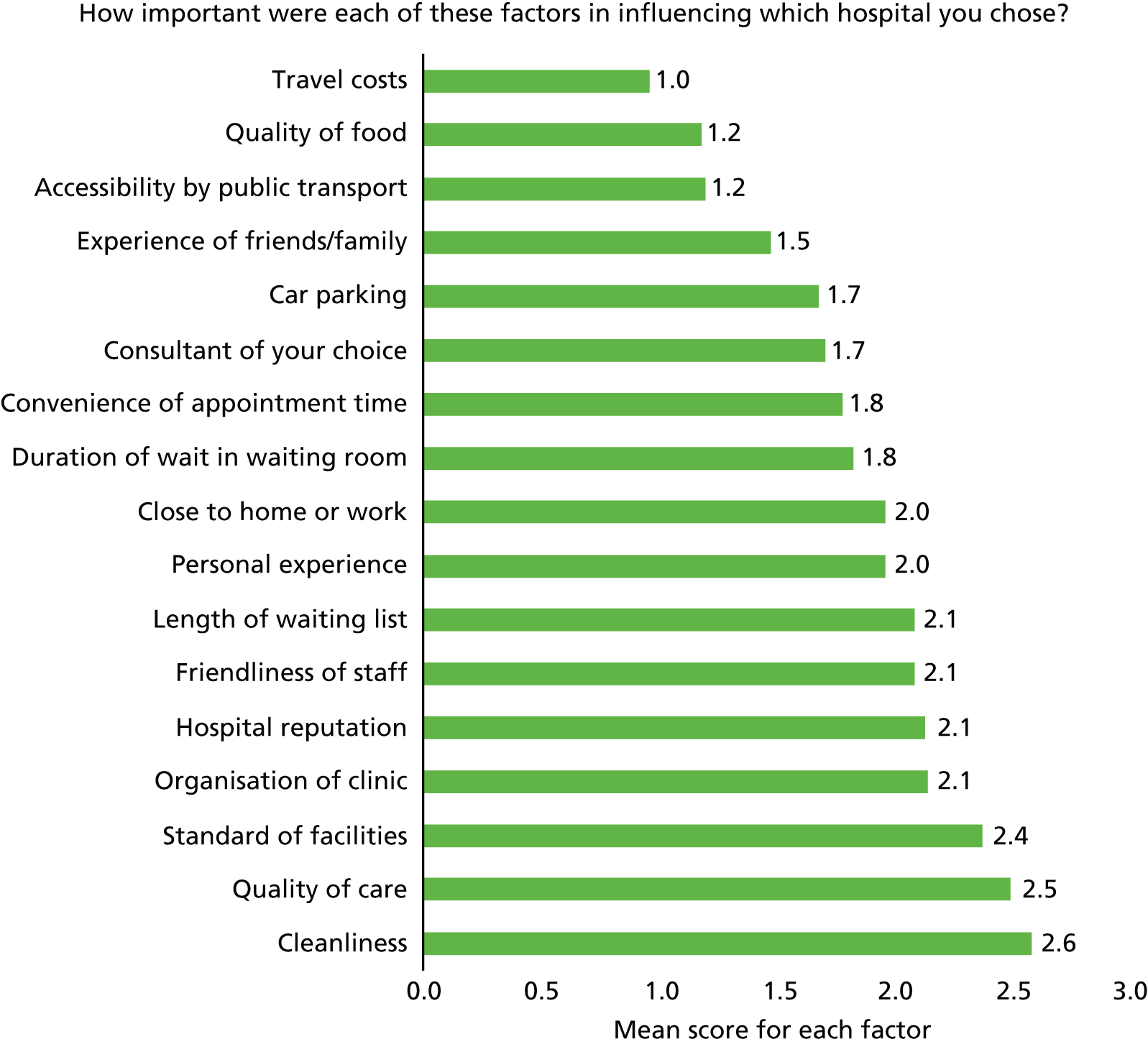

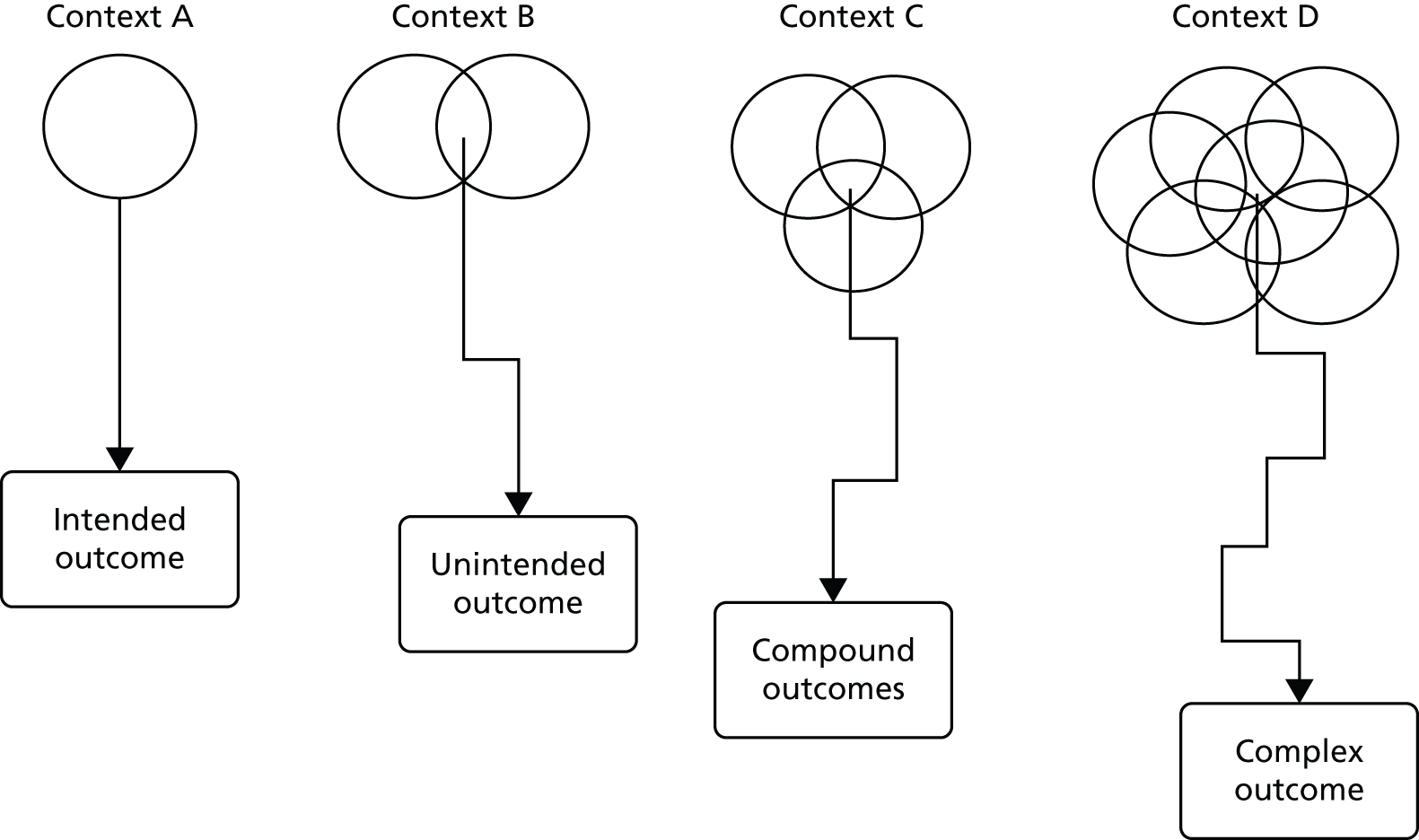

Furthermore, differences in context can result in the intervention not working through the intended mechanisms, leading to unintended consequences. 74 For example, the feedback and public release of performance data may stimulate improvement activity at hospital level through increased involvement of leadership or a refocusing of organisational priorities,75 but it has also been shown to lower morale, and may focus attention on what is measured to the exclusion of other areas. 18 Others have cautioned that it may also lead to surgeons refusing to treat the sickest patients to avoid poor outcomes and lower publicly reported ratings. 74 Data from the national PROMs programme have been misinterpreted by some as indicating that a significant proportion of varicose vein, hernia, and hip and knee replacement operations should not take place. 76 Public reporting of performance data may not improve patient care, as intended, through informing patient choice. 19,77 Rather, patients are often ambivalent about performance data and rely on their GP’s opinion when choosing a hospital. 78,79 Thus, there is a need to highlight the potential unintended consequences of PROMs feedback and to distinguish between the circumstances in which they arise.

Fourth, PROMs have been implemented against a backdrop of other initiatives designed to drive up the quality of patient care, which can potentially either support or derail the intended impact of PROMs feedback. For example, Quality and Outcomes Framework (QOF) payments are dependent on the use of a standardised questionnaire for depression screening, resulting in GPs sometimes avoiding coding a person as suffering from depression in order to circumvent the completion of a questionnaire viewed by many GPs as unnecessary. 80,81

Finally, despite PROMs feedback having many functions and aspirations, research coverage of them is uneven, with more studies (trials and qualitative case studies) examining PROMs feedback at an individual level and few studies examining their use as a performance indicator at a group level.

Aims and objectives

The purpose of this review is to take stock of the evidence to understand by what means and in what circumstances the feedback of PROMs data leads to the intended service improvements. For any application of PROMs feedback, its impact on the quality of patient care depends on a long, complex chain of inputs and outputs, and is greatly affected by where and how it is implemented. This complexity has made it difficult for existing systematic reviews to provide a definitive answer regarding whether PROMs feedback leads to improvements in patient care at either the individual patient level48 or the level of health-care organisations. 19 In this project, we will use a different review method, realist synthesis,82 to clarify how the different applications of PROMs feedback are intended to work and to identify the circumstances under which PROMs feedback works best and why, in order to inform its future implementation in the NHS.

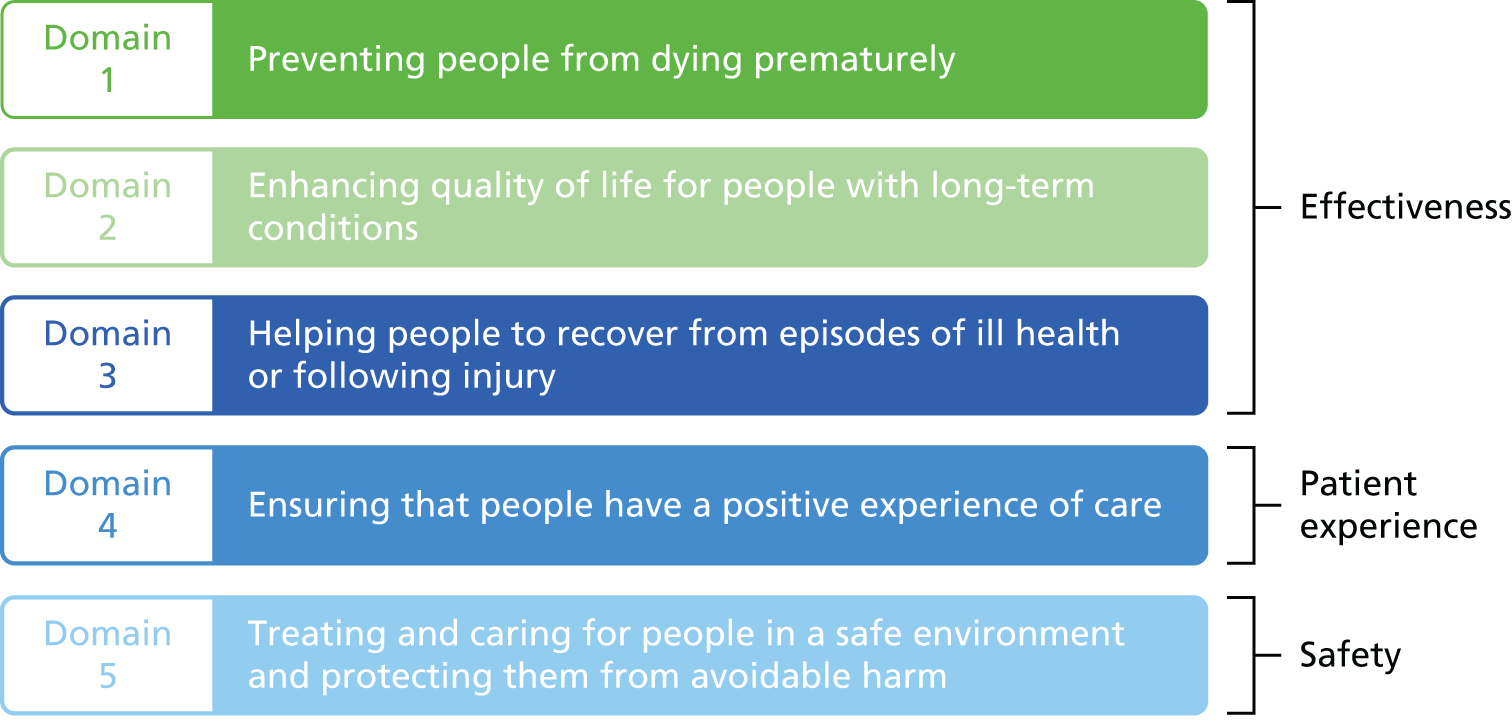

As the applications of PROMs data continue to multiply, our first aim is to identify and classify the various ambitions of PROMs feedback. At the individual level, PROMs data are utilised to improve patient care by (1) screening for undetected problems, (2) monitoring patients’ problems over time and (3) involving the patient in decisions about their care. At the group level, PROMs data may improve patient care by (4) improving the appropriateness of the use of interventions, (5) stimulating QI activities through benchmarking provider performance or (6) informing decision-making about choice of provider. 6 Our objectives are to:

- Produce a comprehensive taxonomy of the ‘programme theories’ underlying these different functions, and capture their subtle differences and the tensions that may lie between them.

- Produce a logic model of the organisational logistics, social processes and decision-making sequences that underlie the collation, interpretation and utilisation of PROMs data. We will use this model to identify the potential blockages and unintended consequences of PROMs feedback that may prevent the intervention from achieving its intended outcome of improving patient care. This will provide a framework for the review.

To inform the future implementation of PROMs feedback, our second aim is to test and refine these programme theories about how PROMs feedback is supposed to work against existing evidence of how it works in practice. We will synthesise existing evidence on each application of PROMs feedback including, where necessary, evidence from other quality reporting initiatives. The specific objectives of this synthesis are to:

- identify the implementation processes that support or constrain the successful collation, interpretation and utilisation of PROMs data

- identify the mechanisms and circumstances through which the unintended consequences of PROMs data arise and those through which they can be avoided.

Our third aim is to use the findings from this synthesis to identify what support is needed to optimise the impact of PROMs feedback and distinguish the conditions (e.g. settings, patient populations, nature and format of feedback) in which PROMs feedback might work best. We will produce guidance to enable NHS decision-makers to tailor the collection and utilisation of PROMs data to local circumstances and maximise its impact on the quality of patient care.

During initial project team discussions, we established that the feedback and public reporting of aggregate PROMs data to stimulate QI efforts by providers was based on a different set of programme theories from the feedback of individual PROMs data in the care of individual patients. Therefore, to meet these aims and objectives, we decided to carry out two separate, albeit related, reviews:

- Review 1, which examined the feedback and public reporting of aggregate PROMs data to providers, aimed to explore in what circumstances and through what processes the feedback of aggregate PROMs data leads to improvements in patient care.

- Review 2, which examined the feedback of individual PROMs data to clinicians, aimed to explore in what circumstances and through what processes the feedback of individual PROMs data leads to improvements in patient care.

Public and patient involvement

We involved patients in a number of ways throughout the reviews. Laurence Wood, a public and patient involvement (PPI) representative, was a member of the project team throughout the review. He attended project team meetings, helped to inform the development of our programme theories and commented on our findings. He chaired a PPI group consisting of three PPI members, Gill Riley, Eileen Exeter and Rosie Hassaman. The group met twice during the project; this was less often than we had anticipated and was a result of a long-term condition of one of the members. The group helped to inform our programme theories and reviewed our findings to date. Laurence Wood and Eileen Exeter also attended our Stakeholder Group meeting (described in Chapters 2 and 6) to help to focus the review. Laurence also read and commented on our plain English summary.

Rationale and overview of methodology

In this section we explain why we chose realist synthesis to conduct our review, and provide an overview of the methodology of realist synthesis. We provide a more detailed description of the application of this methodology for review 1 in Chapter 2 and for review 2 in Chapter 6.

Why realist synthesis?

Realist synthesis82 is designed to disentangle the heterogeneity and complexity of the intervention, and to make sense of the various contingencies, blockages and unintended consequences that may influence its success. The methodology was developed by one of the coauthors of this report (RP). It is an approach that is finding increasing use in the health-care field, and a number of current and recently completed Health Services and Delivery Research projects are making use of the approach (e.g. project 11/1022/04 led by Pawson with Greenhalgh as coapplicant, project 13/97/24 led by Wong and project 14/194/20 led by Burton). Pawson was also a team member of another key Health Services and Delivery Research project (10/101/51, ‘Realist And Meta-narrative Evidence Synthesis: Evolving Standards – RAMESES’), which led to the development of reporting standards for realist synthesis. 83 The methodology now forms one of the approaches used by the National Institute for Health and Care Excellence (NICE) to develop public health guidance. 84

We have chosen to use realist synthesis because it:

- permits us to understand in what circumstances and through what processes the feedback of PROMs data improves patient care and why (rather than just answering ‘does it work?’)

- recognises that the success of PROMs feedback is shaped by the ways in which it is implemented and the contexts in which it is implemented

- allows us to combine evidence from different types of empirical studies (both qualitative and quantitative).

What is realist synthesis?

We do not intend to provide a detailed description of the origins and basic assumptions underpinning realist synthesis; this can be found elsewhere. 82 However, we assume that some readers of this report will not be familiar with the methodology and, therefore, we provide a basic introduction to its modus operandi. Realist synthesis is a review methodology that is based on the premise that social programmes or interventions constitute ideas and assumptions, or theories, about how and why they are supposed to work. As Pawson and Tilley argue, social programmes are theories incarnate. 85 As such, the unit of analysis of realist synthesis is not the intervention per se but the programme theories that underpin it. Therefore, the task of realist synthesis is an iterative process of identifying, testing and refining these programme theories to build explanations about how, and in what circumstances, these interventions work and why. In practical terms, this means that we can draw on evidence from interventions that share the same programme theories within the synthesis. For example, as we discuss in subsequent chapters of this report, the feedback of aggregated PROMs data shares many of the same ideas and assumptions about how it is intended to work as the public reporting of hospital report cards and patient experience data. Therefore, it is legitimate to include studies that have evaluated these interventions in the synthesis; even though they are different interventions, they share the same programme theory.

Realist synthesis is also premised on the idea that it is not the intervention (in our case, PROMs feedback) itself that gives rise to its outcomes. Rather, interventions offer resources to people, and it is people choosing to act, or not to act, on these resources (known as mechanisms) that will determine their impact on patient care. Furthermore, complex interventions, such as PROMs feedback, are never universally successful, as people differ in their response to the intervention and their responses are supported or constrained by the social, organisational and political circumstances in which PROMs feedback is implemented (context). What realist synthesis aims to do is explain why PROMs feedback works in some circumstances and not others. It does so through a process of developing, testing and refining theories about how the intervention works, expressed as context–mechanism–outcome configurations. These are hypotheses which specify that, in this situation (context), the intervention works through these processes (mechanisms) and gives rise to these outcomes.

Initially, these theories focus on practitioner, policy-maker and participant ideas and assumptions about how the intervention is intended to work (or not). These ideas can then be formulated into programme theories to specify hypotheses that certain outcomes (intended or unintended) will occur as a result of particular mechanisms being fired in particular contexts. As synthesis progresses and these theories are tested across a range of contexts through a review of the empirical literature, these theories are refined to develop explanations at a level of abstraction that can allow generalisation beyond a single setting. The ‘end product’ of realist synthesis is explanation through the formulation of ‘middle-range’ theories that are limited in scope, conceptual range and claims, rather than offering general laws about behaviour and structure at a societal level. 86 Middle-range theories are identified by drawing across the literature to explain why regularities in the patterns of contexts, mechanisms and outcomes occur. Thus, they provide the basis for guidance to help policy-makers to target PROMs feedback interventions to local circumstances, and highlight what support they may need to put in place in order to maximise their impact on patient care.
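For readers who find a concrete data structure helpful, the context–mechanism–outcome configuration described above can be sketched as a simple record, with configurations grouped by mechanism to look for regularities across contexts. This is only an illustrative sketch: the class name, the function name and the three example configurations are our own assumptions, loosely paraphrased from conditions mentioned earlier in this chapter, and they are not findings or tools of the review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOConfiguration:
    """One hypothesis: in this context, this mechanism gives rise to this outcome."""
    context: str
    mechanism: str
    outcome: str

    def as_hypothesis(self) -> str:
        # Render the configuration as a single testable statement.
        return (
            f"Where {self.context}, the intervention works through "
            f"{self.mechanism}, giving rise to {self.outcome}."
        )


def group_by_mechanism(configurations):
    """Group configurations that share a mechanism, so that the contexts
    in which the same mechanism operates can be compared side by side."""
    grouped = defaultdict(list)
    for cfg in configurations:
        grouped[cfg.mechanism].append(cfg)
    return dict(grouped)


# Hypothetical examples for illustration only (not results of the review).
EXAMPLES = [
    CMOConfiguration(
        context="the reporting authority is trusted by providers",
        mechanism="providers acting to protect their market share",
        outcome="quality improvement activity",
    ),
    CMOConfiguration(
        context="public reporting is accompanied by financial incentives",
        mechanism="providers acting to protect their market share",
        outcome="quality improvement activity",
    ),
    CMOConfiguration(
        context="patients rely on their GP's opinion when choosing a hospital",
        mechanism="patients disregarding published performance data",
        outcome="little change in hospitals' market share",
    ),
]

if __name__ == "__main__":
    for mechanism, cfgs in group_by_mechanism(EXAMPLES).items():
        print(mechanism)
        for cfg in cfgs:
            print("  -", cfg.as_hypothesis())
```

Grouping by mechanism is one possible design choice; grouping by outcome or by context would serve equally well, depending on which regularity is being examined.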

How is realist synthesis conducted?

Again, we do not intend to provide a detailed description of the process of conducting a realist synthesis; this can be found elsewhere. 82 However, here we do offer a blueprint, so that readers who are not familiar with the methodology can make sense of how we operationalised our synthesis. It also makes explicit our understanding of how a realist synthesis ought to be conducted, which can be subject to scrutiny in judging the rigour and quality of the review.

Realist synthesis is an iterative review methodology, consisting of five main steps. For ease, these are described sequentially, but, in practice, there is considerable movement back and forth between different steps. Furthermore, a number of these steps, for example searching, quality appraisal, data abstraction and synthesis, are integrated rather than conducted separately.

Step 1: searching for and identifying programme theories

The basic unit of analysis in realist synthesis is not the intervention, but the ideas and assumptions or programme theories that underpin it. Thus, the starting point of realist synthesis is to search for and catalogue the different ideas and assumptions about how interventions are supposed to work. Initially, these programme theories focus on practitioner, policy-maker and participant ideas and assumptions about how the intervention is intended to work (or not). These may specify the sequences of steps required to deliver the intervention, and the organisational and social processes required in order for the intervention to achieve its intermediate and final outcomes: that is, an ‘implementation chain’. They may also identify potential blockages in this process, as well as potential unintended consequences. They often contain ideas about the different reactions or responses that participants may have to an intervention (mechanisms) that will determine whether or not the intervention is successful (outcome). They may also include ideas about the circumstances (or context) that determine the kind of reactions participants may have to an intervention, and the blockages that may occur, which thus influence the impact of the intervention.

These ideas often remain tacit and unexpressed in empirical evaluations of the intervention, which frequently assume shared knowledge regarding how the intervention is intended to work or consider that knowing how the intervention works is not important: the task is simply to know whether or not it works. Therefore, unearthing and cataloguing these ideas is best achieved through searching and analysing policy documents, position pieces, comments, letters, editorials, critical pieces and websites or blogs that express and debate these tacit assumptions and explain how the intervention in question is intended to work. For some interventions, it can be a useful exercise to deconstruct empirical investigations or policy documents of a given intervention to surface the implicit assumptions underpinning the design of the intervention itself. 62 This often requires a considerable amount of ‘detective’ work and reflection on the part of the researcher. We explain how we searched for the programme theories underlying PROMs feedback in the more detailed description of methodology in Chapters 2 and 6.

Step 2: focusing the review and selecting programme theories

Inevitably, the search for programme theories results in the identification of many different ideas about how the intervention is supposed to work, and its potential blockages and unintended consequences. It is not possible to review all of these, and the next stage of realist synthesis involves a process of (1) identifying common mechanisms or issues across the different programme theories, and (2) prioritising which set of programme theories to review.

The first is an important initial step in developing ‘middle range’ theory, which allows transferable lessons to be made. It requires the researcher to think ‘what are these programme theories an example of?’ and ‘how do these programme theories relate to more formal or abstract theories?’. Thus, it represents a process of moving up and down a ladder of abstraction, from practitioners’ ideas about how a specific intervention works, to more abstract ideas about how the family of interventions which share that programme theory are expected to work. This plays an important part in defining the boundaries of the review, as it serves to identify other interventions that also share the same programme theory, evaluations of which might therefore be included in the theory-testing phase of the review.

The second is a process of narrowing and deciding which of these theories we might focus on. There are no set criteria to govern these decisions,82 but they can focus on:

- which aspects of the programme theory stakeholders and practitioners consider most important or would like to be answered
- understanding how and why one particular section of the implementation chain works or becomes ‘blocked’
- considering how the same programme theory fares in different contexts
- adjudicating between rival ideas about the mechanisms through which an intervention is intended to work.

These decisions then form the framework for the review. However, it must be recognised that this process is iterative. Inevitably, the process of testing one programme theory uncovers a number of ‘sub’ or ‘mini’ theories within the review. Furthermore, the review is also likely to focus on a smaller number of main theories. Therefore, defining and redefining the boundaries of the review is an ongoing and iterative process. We explain how we identified relevant abstract theories and how we narrowed down the focus of our review in Chapter 2 for review 1 and Chapter 6 for review 2.

Step 3: searching for empirical evidence

The programme theories to be tested provide the backbone of the review, and determine the search strategy and decisions about study inclusion into the review in order to test and refine these theories. The next stage of the review thus involves an evidence search to identify primary studies that will provide empirical tests of each component of the theory. This involves electronic database searches, as well as forwards and backwards citation tracking. Searching and synthesis are interwoven, and, as the synthesis progresses, the emergence of new subtheories or mini theories often requires further iterative searches to identify empirical evidence to test them. Furthermore, the review is also likely to focus on a smaller number of main theories as the synthesis progresses. In Chapters 2 and 6, we describe in some detail the processes we used to identify the empirical evidence on which this review is based.

Step 4: quality appraisal and data extraction

These are combined in realist synthesis. Different programme theories require substantiation in divergent bodies of evidence. For example, hypotheses about the optimal contexts for the utilisation of PROMs data are tested by comparing the outcomes of experimental studies in different settings, whereas claims about the reactions of different recipients of PROMs data are tested using qualitative data. Studies (or parts thereof) are included in the review depending on their relevance to the programme theory being tested.

Quality appraisal is conducted throughout the review process, and goes beyond the traditional approach that focuses on only the methodological quality of studies. 87 In realist synthesis, the assessment of study rigour occurs alongside an assessment of the relevance of the study, and occurs throughout the process of synthesis. Quality appraisal is done on a case-by-case basis, as appropriate to the method utilised in the original study. Both qualitative and quantitative data are compiled. In addition, the inferences and conclusions drawn by the authors of the studies are also extracted as data in realist synthesis, as they often permit the identification of subtheories that can then be further tested with empirical evidence. Different fragments of evidence are sought and utilised from each study. Each fragment of evidence is appraised, as it is extracted, for its relevance to theory testing and the rigour with which it has been produced. 87 In many instances, only a subset of findings from each study that relate specifically to the theory being tested are included in the synthesis. Therefore, quality appraisal relates specifically to the validity of the causal claims made in this subset of findings, rather than to the study as a whole. Trust in these causal claims is also enhanced by the accumulation of evidence from a number of different studies, which provides further lateral support for the theory being tested, discussed in more detail in the following section. Finally, quality appraisal is integrated into the synthesis narrative, rather than reported separately.

Step 5: synthesis

The goal of realist synthesis is to refine our understanding of how the programme works and the conditions and caveats that influence its success, rather than offering a verdict, descriptive summary or mean effect calculation on an intervention or family of programmes. Synthesis takes several forms. At its most basic, realist synthesis involves building ‘lateral support’ for a theory by bringing together information from different primary studies and different study types to explain why a pattern of outcomes may occur. Another form of synthesis, particularly useful when there is disagreement on the merits of an intervention, is to ‘adjudicate’ between the contending positions. This is not a matter of providing evidence to declare a certain standpoint correct and another invalid. Rather, adjudication assists in understanding the respects in which a particular programme theory holds and those in which it does not. Finally, the main form of synthesis is known as ‘contingency building’. All PROMs feedback programmes make assumptions that they will work under implementation conditions A, B, C, applied in contexts P, Q, R. The purpose of the review is to refine many such hypotheses, enabling us to say that, more probably, A, C, D, E and P, Q, S are the vital ingredients. In Chapter 2, we will provide short examples of how we carried out our synthesis.

Structure of the report

This report is divided into two parts. Review 1, consisting of Chapters 2–5, reports our realist synthesis of the feedback of aggregate PROMs and performance data to providers. Review 2, consisting of Chapters 6–9, considers the feedback of individual PROMs data to inform the care of individual patients.

Review 1: a realist synthesis of the feedback of aggregate patient-reported outcome measures and performance data to improve patient care

Chapter 2 provides a description of the methodology of review 1, and details the process of searching for programme theories, the process of searching for evidence to test these theories, how studies were selected for inclusion in the synthesis, and how data were extracted and synthesised. In Chapter 3, we provide a comprehensive taxonomy of the ideas and assumptions, or programme theories, underlying the feedback of aggregate PROMs data to providers. In Chapters 4 and 5, we report the findings of our evidence synthesis for the feedback of PROMs and other performance data to providers. Chapter 4 interrogates the mechanisms through which this is intended to occur, while Chapter 5 considers how different contextual configurations influence which of these mechanisms occur and the subsequent intended (or unintended) outcomes.

Review 2: a realist synthesis of the feedback of individual patient-reported outcome measures data to improve patient care

Chapter 6 provides a description of the methodology for review 2, again detailing the process of searching for programme theories and for evidence to test these theories, and the ways in which we selected studies for inclusion and extracted and synthesised data. Chapter 7 offers a taxonomy of the ideas and assumptions, or programme theories, underlying the feedback of PROMs data at the individual level. In Chapters 8 and 9, we report on the evidence synthesis of the implementation chain through which the feedback of PROMs data in the care of individual patients is expected to work. In Chapter 8, we explore the circumstances in which, and process through which, PROMs completion may support patients to raise issues with clinicians. In Chapter 9, we examine clinicians’ use of PROM feedback to support their care of individual patients. Finally, Chapter 10 brings our findings together and discusses their implications for practice and future research.

Chapter 2 Review methodology: feedback and public reporting of aggregate patient-reported outcome measures data

A protocol for our realist synthesis has been published,88 and in this chapter we describe how the boundaries and focus of our review of the feedback of aggregate PROMs data were defined. This enables the reader to understand why and how changes were made to the original protocol, as suggested by the RAMESES guidelines for reporting realist syntheses. 83 We describe how we carried out our review, using the five steps outlined in Chapter 1 as a structure.

Searching for and identifying programme theories

As discussed in Chapter 1, identifying opinions and commentaries for a realist synthesis is the first stage in identifying theories for which evidence is later sought. The purpose of this search is to map the range and diversity of different programme theories underlying PROMs feedback, rather than identify and include every single paper discussing the ideas and assumptions underlying PROMs feedback. We conducted one search for programme theories for PROMs feedback at both the aggregate and individual level. Opinion pieces and commentaries on PROMs feedback were identified in database searches, JG’s personal library (89 known relevant studies) and citation tracking activities including forwards and backwards citation searching.

In April–May 2014, we searched the following databases:

- Cochrane Database of Systematic Reviews (via Wiley Online Library), issue 5 of 12, May 2014
- Cochrane Methodology Register (via Wiley Online Library), issue 3 of 4, July 2012
- Database of Abstracts of Reviews of Effects (via Wiley Online Library), issue 4 of 4, October 2014
- EMBASE Classic+EMBASE (via Ovid), 1947–30 April 2014
- Health Management Information Consortium (via Ovid), 1983–present
- (Ovid) MEDLINE®, 1946–week 3 April 2014
- (Ovid) MEDLINE® In-Process & Other Non-Indexed Citations, 1966–29 April 2014
- NHS Economic Evaluation Database (via Wiley Online Library), issue 4 of 4, October 2014.

Two search strategies were run on the Ovid databases: one aimed at identifying review papers and one aimed at identifying commentaries and opinion pieces. The Cochrane Library databases were searched with one strategy to identify reviews only, as they were unlikely to contain opinion pieces. The searches were developed iteratively; initial searches developed by the information specialist (JW) were discussed with JG and SD, who provided feedback on whether or not useful papers were being captured. JW then revised the search strategy.

All search strategies included search concepts for PROMs and the ‘Outcomes of Feedback’. Subject headings and free-text words were identified for use in the search concepts by JW and project team members. Further terms were identified and tested from the personal library (known relevant) papers. 57 Care was taken to avoid retrieving papers that simply reported PROMs outcomes, and to identify those with discussion of the feedback of PROMs.

An ‘opinion pieces’ search strategy from a previous realist synthesis,89 conducted by the same authors, was tested against the known relevant papers and used (with a minor adaption in MEDLINE to include the search term ‘comment.cm’). An example of the PROMs feedback ‘opinion pieces’ search is presented in Appendix 1. The search strategies for review papers used the Clinical Queries – Reviews specificity maximising filter in Ovid databases plus a series of specific free-text searches to identify reviews (see Appendix 1).

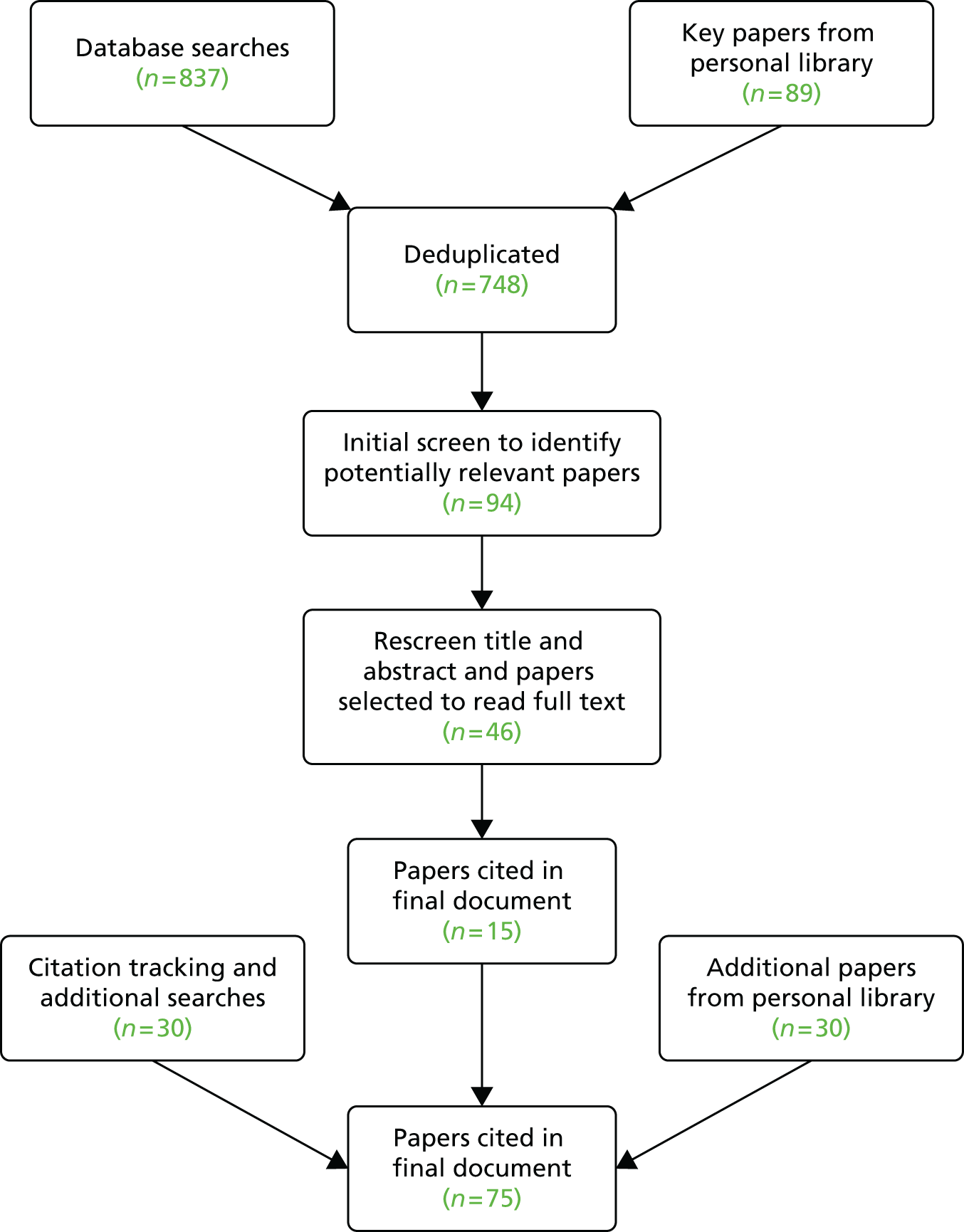

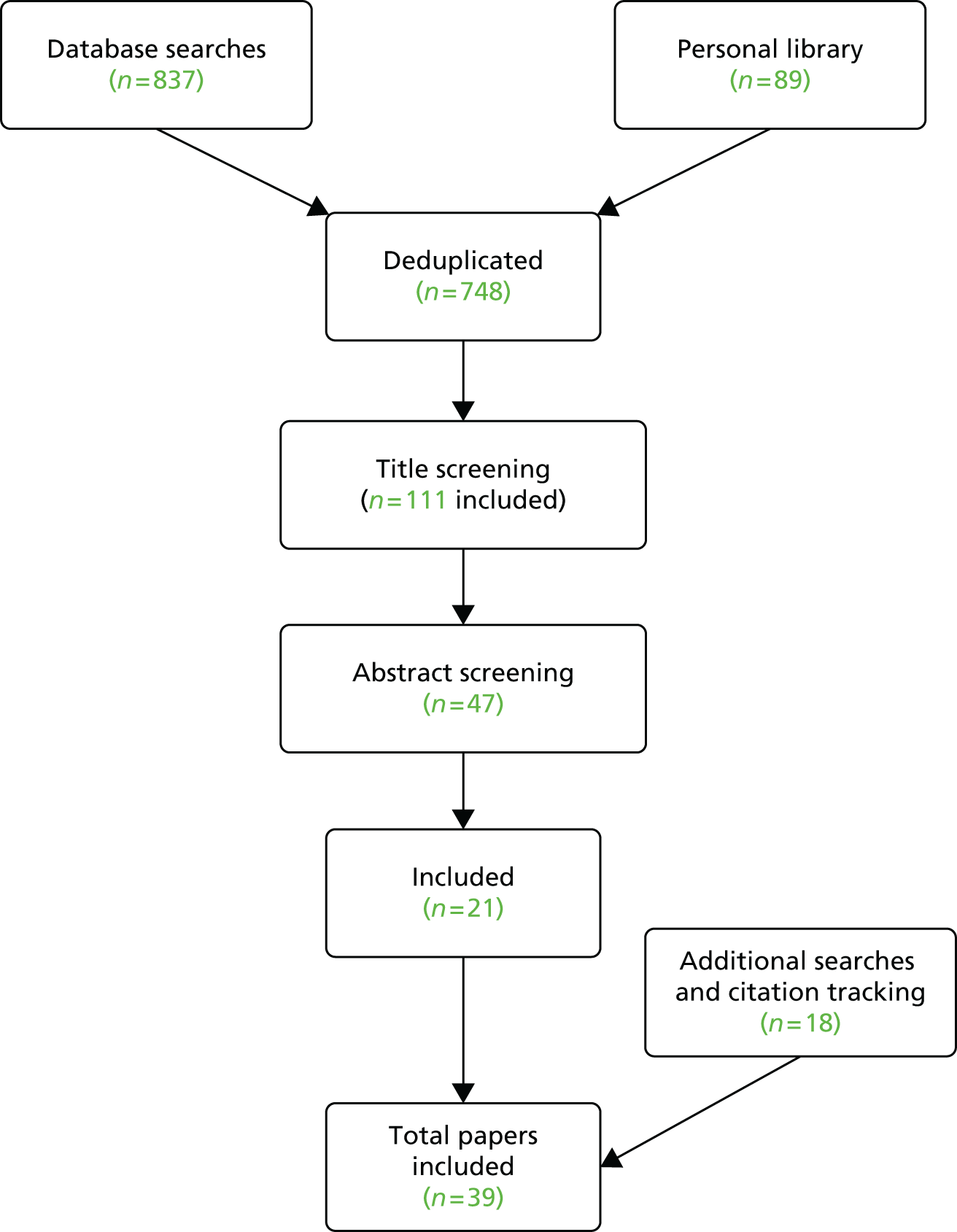

The database searches identified 1011 references, which reduced to 659 when duplicates were removed. These records were stored in an EndNote (Thomson Reuters, CA, USA) library alongside the 89 personal library references to create a set of 748 references.
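The de-duplication and merging step can be illustrated with a short sketch. In the review itself this was handled within the EndNote library; the code below is not that procedure, only a minimal illustration assuming that duplicates are matched on a normalised title and publication year. The records, field names and function names are invented for the example.

```python
import re


def normalise(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace so near-identical records match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record seen for each (normalised title, year) key."""
    seen = set()
    unique = []
    for record in records:
        key = (normalise(record["title"]), record["year"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Invented toy records standing in for hits exported from two databases.
database_hits = [
    {"title": "Feedback of PROMs data: a commentary", "year": 2012, "source": "MEDLINE"},
    {"title": "Feedback of PROMs data - a commentary.", "year": 2012, "source": "EMBASE"},
    {"title": "Public reporting and provider behaviour", "year": 2010, "source": "EMBASE"},
]
# Invented toy record standing in for a known relevant paper from a personal library.
personal_library = [
    {"title": "Using outcome measures in routine practice", "year": 2009, "source": "library"},
]

unique_hits = deduplicate(database_hits)
combined = unique_hits + personal_library
print(len(database_hits), len(unique_hits), len(combined))
```

Matching on title and year is a deliberately simple rule; reference managers typically also compare authors and journal details.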

JG and SD screened the titles and abstracts of the 748 references to identify potentially relevant papers according to the following criteria.

Inclusion criteria

- The paper describes how aggregate PROMs feedback is intended to work.
- The paper provides a theoretical framework that describes how aggregate PROMs feedback is intended to work.
- The paper provides a critique of the ideas underlying how aggregate PROMs feedback is intended to work.
- The paper reviews ideas about how aggregate PROMs feedback is intended to work.
- The paper provides stakeholder accounts or opinions of how aggregate PROMs feedback does/does not work.
- The paper outlines, discusses or reviews potential unintended consequences of aggregate PROMs feedback.

Exclusion criteria

- The paper reports findings in which a PROM is used as a research tool [e.g. an evaluation of an intervention, a study exploring the health-related quality of life (HRQoL) of specific populations].
- The paper is focused on evaluating the psychometric properties of a PROM.
- The paper reviews the psychometric properties of a PROM or a collection of PROMs.
- The paper provides advice or recommendations about which PROM to use in a research context.

An initial screen identified 94 potentially useful papers; the titles and abstracts of these papers were then rereviewed by JG and categorised according to the different theories they articulated. All of these papers contributed to the process of mapping the programme theories underlying PROMs feedback. Following this process, 46 were selected for inclusion, as they provided the clearest examples of the ideas underpinning the feedback and public reporting of PROMs and performance data. These papers represented the same ideas and assumptions contained within the full set of 94 papers and, in essence, were a purposive sample of these papers. The full texts of these papers were then read, together with an additional 30 papers from JG’s existing library, which included key policy documents and grey literature. Notes were taken about the key ideas and assumptions regarding how the feedback and public reporting of PROMs data was intended to work. Of the 46 papers identified from the literature searches, 15 were purposively selected as ‘best exemplars’ of the ideas reflected in the papers as a whole. These were cited in the final draft of the paper cataloguing and summarising the programme theories underlying the feedback and public reporting of aggregated PROMs data, reported in Chapter 3. However, again, they represented similar ideas and assumptions to the 94 papers initially selected for inclusion. Forwards and backwards citation tracking of key articles, and additional, iterative searches in Google Scholar (Google, Inc., Mountain View, CA, USA) as key subtheories emerged, identified a further 30 papers that were cited in the final draft, reported in Chapter 3. In all, 75 papers contributed to the development of programme theories. Regular discussion of these ideas among the project group, together with the circulation of, and feedback on, draft working papers outlining the theories, ensured that the full range of different theories was represented.
Figure 1 summarises the flow of studies from identification through to inclusion in the final document.

FIGURE 1.

Summary of searching and selection process for aggregate programme theories.
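The counts reported for this selection process can be checked with a few lines of arithmetic. The sketch below assumes that the 75 contributing papers are the sum of the 15 ‘best exemplars’, the 30 papers from JG’s existing library and the 30 papers found through citation tracking and Google Scholar searches; this decomposition is inferred from the text rather than stated explicitly, and the variable names are illustrative.

```python
# Screening funnel for papers identified from the database searches and personal library.
screening_funnel = [
    ("titles and abstracts screened", 748),
    ("potentially useful papers", 94),
    ("selected as clearest examples", 46),
    ("cited as best exemplars", 15),
]

# Assumed decomposition of the papers that contributed to the programme theories.
contributing = {
    "best exemplars from the literature searches": 15,
    "papers from JG's existing library": 30,
    "citation tracking and Google Scholar searches": 30,
}

# Each stage of the funnel should retain no more papers than the stage before it.
counts = [n for _, n in screening_funnel]
funnel_is_monotonic = all(a >= b for a, b in zip(counts, counts[1:]))

total = sum(contributing.values())
print(funnel_is_monotonic, total)
```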

Alongside these searches, we also held informal meetings with a number of stakeholders. These included the insight account manager for PROMs and a senior analyst from NHS England, an information analyst for the national PROMs programme from the Health and Social Care Information Centre (HSCIC), a GP commissioner from a Clinical Commissioning Group and a NHS trust lead for patient experience. In these meetings, we explored stakeholders’ views on how the national PROMs programme and the national surveys of patient-reported experience measures (PREMs) were intended to improve patient care. These interviews were neither tape recorded nor formally analysed. Rather, they were used to clarify our ideas about how these programmes were intended to work, and to support and expand the programme theories we identified from the literature.

Focusing the review and selecting programme theories

Agreeing the focus of the review

The process of cataloguing the different programme theories underlying PROMs feedback at the aggregate level (reported in Chapter 3 of this report) allowed us to identify the inner workings of these interventions as perceived by those who design, implement and receive these interventions. To agree the focus of the review, we presented our initial programme theories and a basic logic model of the feedback of aggregate PROMs data to our patient group and at a 1-day stakeholder workshop (also attended by two members of our patient group). Our patient group consisted of three ‘expert’ patients: one was a retired GP, one had previously worked for a NHS Commissioning Board and the third worked for a national charity, Arthritis UK. Our stakeholder event included the following stakeholders:

- three analysts on the national PROMs programme from NHS England
- analyst working on the national PROMs programme from the HSCIC
- Matron for Surgery, Anaesthesia and Theatre
- Senior Sister for Surgical Pre-Assessment
- Director of Operations at a NHS trust
- representative from the Royal College of Nursing with expertise in PROMs
- consultant surgeon
- two academics with expertise in orthopaedics and PROMs
- two patient representatives.

We presented our initial programme theories as ‘propositions about how PROMs feedback is intended to work’ at these meetings, and invited participants to comment on these ideas and refine, extend and prioritise them. Stakeholder discussions focused on aspects of the national PROMs programme that they found challenging, which included:

- Variations in how providers used the PROMs data provided, which was perceived to depend on the size of the trust’s information technology (IT) department, and thus the resources available to interrogate and analyse these data.
- Variations in how PROMs data were disseminated, in terms of who these data were shared with and how.
- Whether or not staff on the ground felt that PROMs data provided information to enable them to identify the causes of poor care and solutions to address them.
- Whether or not PROMs data could be linked or interpreted in relation to other locally collected data to enable trust boards to utilise the data effectively.
- Scepticism about whether or not PROMs data would inform patient choice of hospital; patients felt that the data were more likely to be used to reassure patients that the care they received was of a high standard (i.e. for public accountability).

Following the stakeholder workshops, we held a project team meeting to reflect on the issues raised and agree the focus of the review. Although stakeholders had not actively prioritised our theories in the workshop, they had provided a valuable perspective on ‘why PROMs feedback may not work as intended’. It was felt that much research had focused on how the data are collected, but the key issue that emerged from the stakeholder workshop was the difficulties that providers experienced in responding to the data. Therefore, we decided to focus our review on how different stakeholders were expected to respond to the feedback and public reporting of PROMs data. There was some debate about whether or not the review should consider the use of PROMs as a tool for patient choice. The stakeholder group had talked about PROMs as a means of public accountability in terms of providing reassurance to the public of good care, rather than informing decisions about which hospital to go to. Our patient group had expressed some scepticism about the idea that PROMs data would be used by patients to inform their choice of hospital. We also recognised that systematic reviews had found little evidence that patients used performance data to inform their choice of hospital. 19 Therefore, we agreed we would focus on how providers were expected to respond to PROMs data. Thus, our review question was:

- In what circumstances and through what processes do providers respond to the feedback and public reporting of PROMs data to improve patient care, and why?

However, during the review itself, we found that the process of testing the hypothesis that providers respond to performance feedback to protect their market share led us to synthesise some aspects of the literature exploring how patients made choices about which hospital to attend.

Identifying and searching for abstract theories

The next step in our review was to make connections between these lower-level practitioner theories and higher-level, more abstract theories to develop a series of hypotheses that could be tested against empirical studies in our review, and produce transferable lessons about how and in what circumstances PROMs feedback produces its intended outcomes.

To identify the abstract theories, JG, SD and RP engaged in a series of joint brainstorming sessions and analysis of the PROMs programme theories. The aim of these sessions was to identify the abstract, higher-level theories relevant to PROMs feedback. To do this, we tried to answer the questions ‘what is this intervention an example of?’, ‘what is the core underlying idea at work here?’ and ‘what other interventions also share these ideas?’ We identified three key ideas underlying PROMs feedback at the aggregate level:

- Audit and feedback: PROMs feedback is an example of an audit and feedback intervention, as it involves generating a ‘summary of clinical performance of health care over a specified period of time aimed at providing information to health professionals to allow them to assess and adjust their performance’. 90
- Benchmarking: PROMs feedback also has a comparative element, such that providers can also compare their own performance with that of other providers in their locality or across England.
- Public disclosure: PROMs data are made publicly available to a range of stakeholders, including patients, who are expected to exert pressure on providers to improve patient care.

We discuss these ideas in more detail in Chapter 3. To identify abstract theories relating to these ideas, we conducted searches using search terms ‘feedback NHS’, ‘benchmarking NHS’, ‘audit and feedback NHS’ on Google and Google Scholar (August 2014). For each search, we screened the first five pages and selected papers according to the following criteria:

- presents or discusses an abstract theory
- presents propositions about how (mechanisms) and in what circumstances (contexts) the intervention may work best
- contains a map, model or implementation chain of the theory.

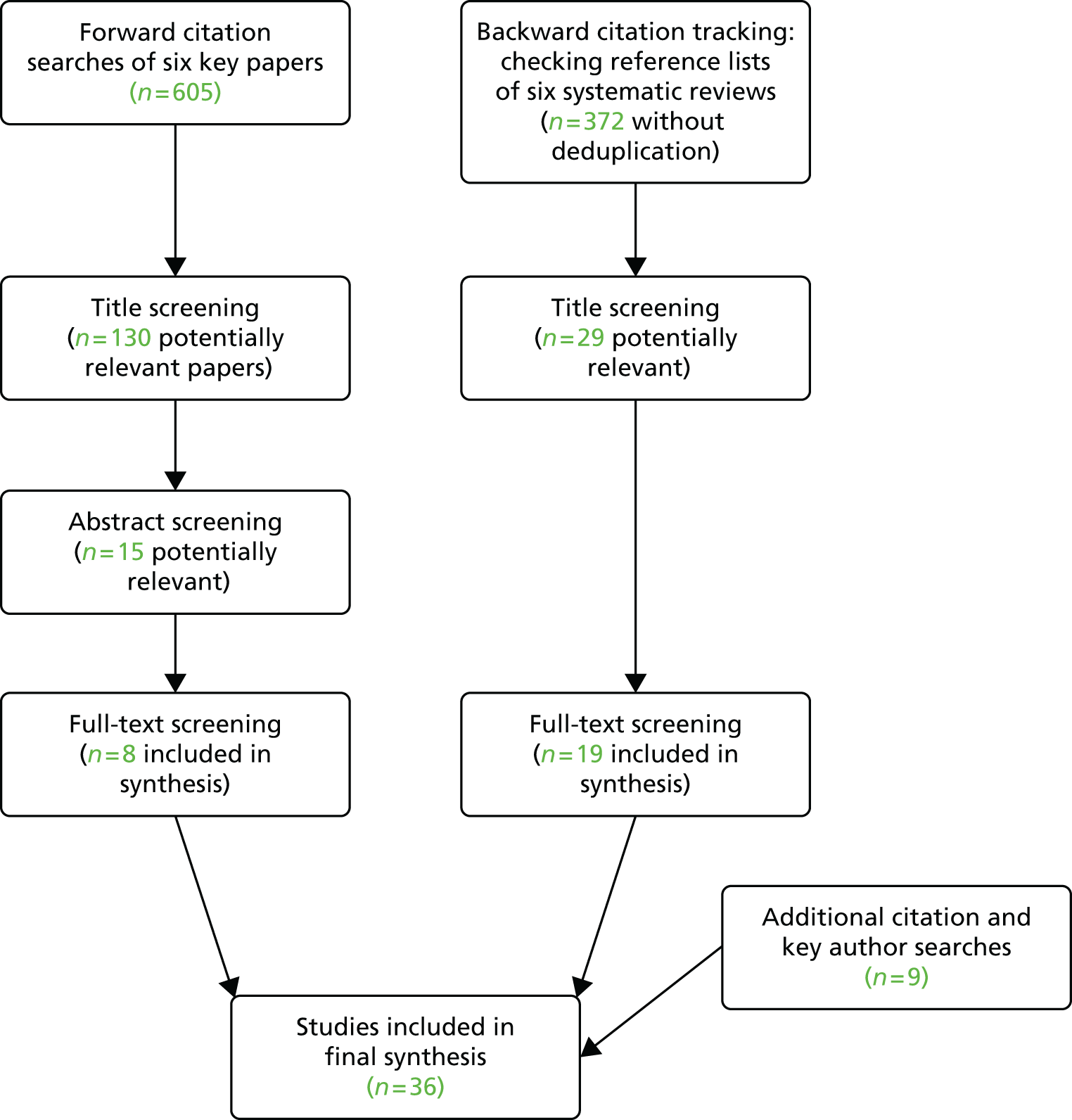

From 300 references, we identified six papers as meeting our inclusion criteria. For each selected paper, we checked the references to identify other related papers and also undertook a process of forwards citation tracking of four key papers91–94 to identify papers that cited these. We also consulted existing systematic reviews90,95,96 to identify references to abstract theories.

The searches were run in August 2014 in the following resources:

- Google Scholar
- Science Citation Index (via Thomson Reuters Web of Science) – 1900–present.

These searches identified 69 references, which were reduced to 65 when duplicates were removed. We drew on a total of 27 papers from all of these searches to inform our thinking about abstract theories underlying PROMs feedback, which formed the basis of a working paper discussed among the project team. From this working paper, we cited 13 papers in our final report, which are reported in Chapter 3. Figure 2 provides a summary of these searches.

FIGURE 2.

Summary of abstract theory searches.

Searching for empirical evidence and selection of studies

The next stage of our realist synthesis involved searching for empirical evidence in order to test and refine our programme theories. JW developed a search strategy, with input from SD and JG, to search for published studies against which to test our theories. We were aware that there were very few papers looking at how providers have responded to PROMs feedback per se. Following our analysis of abstract theories described in the previous section, we identified a number of interventions that shared the same underlying programme theory, so we also searched for studies that had evaluated these interventions. These included provider views on and responses to:

- feedback of patient experience data (e.g. the National Inpatient Survey, the GP Experience Survey, etc.)
- National Clinical Audits
- other forms of publicly reported ‘performance data’, for example mortality data or process data; many of these come from the USA, where there is a long history of public reporting.

The development of the search strategy was iterative; JW ran a strategy and sent JG and SD initial results; SD and JG provided feedback on whether or not the resulting papers were useful for theory testing. After several iterations, this resulted in the agreement of a final search strategy. All search strategies included search concepts for PROMs or other performance indicators, outcomes of feedback (e.g. decision-making, improved participation and communication) and qualitative research (see Appendix 1). In October 2014 we searched the following databases:

- EMBASE Classic+EMBASE (via Ovid) 1947–2014 October 17
- Health Management Information Consortium (via Ovid) 1983–present
- (Ovid) MEDLINE® 1946–week 2 October 2014
- (Ovid) MEDLINE® In-Process & Other Non-Indexed Citations, 1966–29 April 2014.

The searches identified 2080 records, which reduced to 1617 after removing duplicates found across the searches and duplicates of records that had already been identified from previous (theories) searches.

JG and SD independently reviewed the titles and abstracts of the first 160 references (approximately 10% of the total) using a broad set of inclusion and exclusion criteria, and compared and discussed our included studies to check that we were making comparable judgements. We then split the papers between us, and screened the titles and abstracts using a broad set of inclusion and exclusion criteria.
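The size of the double-screened sample and the comparison of reviewers’ judgements can be sketched as follows. The record counts are those reported above; the include/exclude decisions and record identifiers are hypothetical, and the comparison is a simple list of disagreements for discussion rather than a formal agreement statistic, which the text does not report.

```python
total_records = 1617       # records remaining after duplicates were removed
calibration_sample = 160   # records screened independently by both reviewers

share = calibration_sample / total_records
print(f"Double-screened share: {share:.1%}")

# Hypothetical include (True) / exclude (False) decisions for four records.
decisions_reviewer_a = {"rec1": True, "rec2": False, "rec3": True, "rec4": False}
decisions_reviewer_b = {"rec1": True, "rec2": True, "rec3": True, "rec4": False}

# Records on which the two reviewers differ are flagged for discussion.
disagreements = sorted(
    record_id
    for record_id in decisions_reviewer_a
    if decisions_reviewer_a[record_id] != decisions_reviewer_b[record_id]
)
print(disagreements)
```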

Inclusion criteria

- Studies about provider or commissioner views of, responses to, use of, or interpretation of PROMs data, national clinical audits, patient experience data, clinical outcomes (as an indicator of treatment effectiveness and, thus, performance) and mortality data (as an indicator of performance).
- Reports on the process of implementation of local PROMs/patient experience data collection for use as an indicator of service quality, reporting not just the results of the data collection but how data collection was implemented and/or how it was used.
- Reports on the process of implementation and use of local audit to improve care – how it was implemented and how people responded – not just reporting the results of the audit.

Exclusion criteria

- Articles on the development or validation of PROMs data, patient experience data, national clinical audits, mortality data, clinical outcomes.
- Articles about patient involvement in patient experience data.
- Articles reporting just the findings/analysis of audit data.
- Articles evaluating the impact of other QI programmes that did not involve some sort of feedback or public reporting of data.

At this stage we included 124 papers. After rereading the titles and abstracts, we developed a more restrictive set of inclusion and exclusion criteria that were focused on our evolving theories, as follows.

Inclusion criteria

Studies about how clinicians or managers have used or responded to, or about clinicians’ views of:

- national or local patient experience data collection and feedback
- mortality report cards or anything described as ‘performance data’
- hospital report cards
- aggregate clinical outcome indicators or process data.

Studies contributing to testing theories about:

- the mechanisms through which feedback is intended to work (intrinsic desire to improve, peer comparison, protecting market share, protecting professional reputation)
- the contextual configurations that might influence how performance feedback works (financial incentives, credibility, ‘actionability’ of these data).

Exclusion criteria

Studies examining:

- views/experiences or implementation of general QI activities
- implementation of, or response to, local clinical audit or guidelines
- results/findings of audits or patient experience data.

Following the application of these criteria, we included 28 studies. We also checked the references of an existing systematic review of public reporting of performance data,27 which identified a further 18 studies, and the reference lists of five key papers18,75,97–99 on which we had conducted a preliminary synthesis, which identified 26 studies. We conducted an additional search in MEDLINE and EMBASE for feedback of patient experience data (see Appendix 1) and selected three studies from the 194 identified. Together with three papers drawn from EG’s personal library (see Table 1), this gave us a total of 78 references. We then checked for duplicates and rescreened the papers using the more focused set of inclusion and exclusion criteria listed above, and included 51 papers. These papers focused on providers’ views of, and responses to, performance data and indicators and patient experience data. Table 1 provides a summary of the different sources of papers.

| Source | Number identified | How screened | Number potentially relevant |
|---|---|---|---|
| A: electronic database search | 1617 (after removal of duplicates) | JG and SD coscreened approximately 160 to check the application of the criteria, and then each independently screened 811 and 806 | 124; 28 after second screening |
| B: five index papers backwards citation tracking | | JG went through five index papers18,75,97–99 and identified potentially relevant papers | 26 |
| C: EG’s personal library: patient experience measures | 7 | EG selected the papers and JG and SD screened them together to identify if they focused on provider responses and the use of patient experience data | 3 |
| D: citation tracking from Totten et al.’s 27 Agency for Healthcare Research and Quality review of public reporting | 40 | JG went through the report and judged that potentially relevant references were those related to answering Q3 (did feedback result in changes) (n = 8) and qualitative studies reporting provider awareness or views of performance measures (n = 10) or their reported use of them in practice (n = 22); these were then screened to evaluate potential relevance to our review | 18 |
| E: JG patient experience searches | 194 (after removal of duplicates from EMBASE/MEDLINE) | JG screened to check if papers were about provider responses to PREMs data | 3 |
| Total (after removal of duplicates) | | | 78; 51 after second screening |
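
The counts in Table 1 can be cross-checked with a few lines of arithmetic. The Python sketch below is illustrative only: the dictionary keys are shorthand labels for the five sources (A to E) introduced here, not names used in the review, and the numbers are copied from the table.

```python
# Papers judged potentially relevant from each source in Table 1.
# Keys are shorthand labels introduced for this illustration.
potentially_relevant = {
    "A_database_search": 28,          # after second screening of the 124
    "B_index_paper_citations": 26,
    "C_personal_library": 3,
    "D_public_reporting_review": 18,
    "E_patient_experience_search": 3,
}

# Records from the database search screened by each of the two reviewers.
screened_by_each = (811, 806)

total_screened = sum(screened_by_each)
total_relevant = sum(potentially_relevant.values())

print(total_screened)  # 1617
print(total_relevant)  # 78
```

The first total matches the 1617 de-duplicated database records and the second matches the 78 references carried forward to the second screening.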

We read the 51 papers and began our synthesis, described in the next section (see Data extraction, quality assessment and synthesis). As the synthesis progressed, we further focused our synthesis on a smaller number of main theories but, at the same time, a number of ‘subtheories’ within this focused selection of ‘main’ theories were identified. This required us to revisit our original search results, further examine documents in JG’s personal library and carry out additional citation tracking of key studies to further test these subtheories.

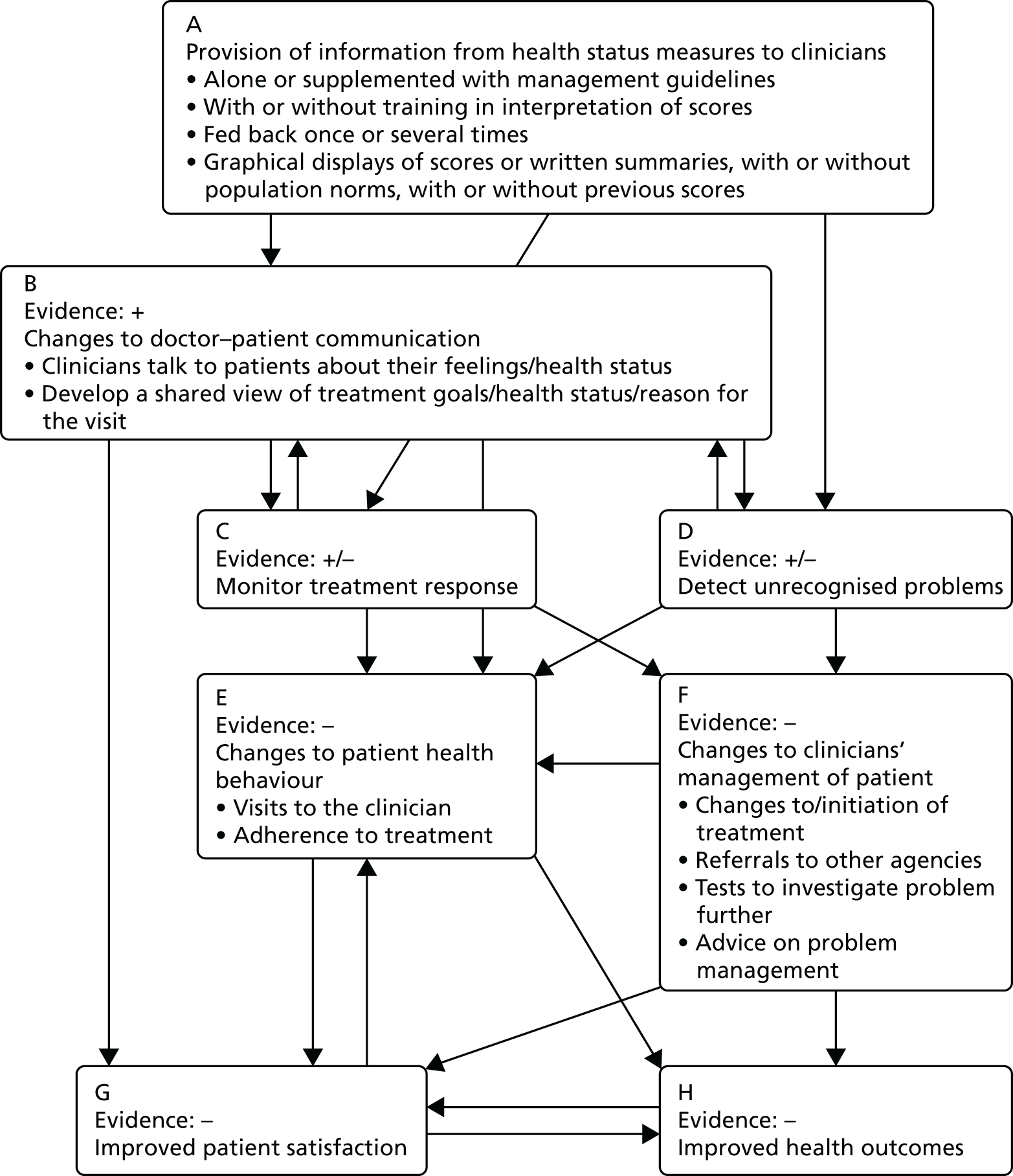

This identified an additional 30 papers that were included in the final synthesis. At the same time, we found that some of the papers we had originally included were no longer relevant to the main theories in the synthesis. Of the original 51 papers identified from the initial searches, 28 made it into the final synthesis; 23 papers were left out of the final synthesis because they were not relevant to the final theory testing phase of the synthesis or did not progress the theory testing further. Thus, in total, 58 papers were cited in the final synthesis of the feedback of aggregated PROMs and performance data, reported in Chapters 4 and 5. A flow chart of the search process is shown in Figure 3.

FIGURE 3.

Flow diagram of the evidence search process for the feedback of aggregate PROMs data.
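
The flow of papers through the search process reduces to simple bookkeeping. The following is a minimal Python sketch using only the counts reported in the text; the variable names are illustrative and do not come from the review.

```python
# Counts reported in the text for the aggregate-data evidence search.
records_identified = 2080     # records returned by the database searches
records_after_dedup = 1617    # after removing duplicates
initially_included = 51       # after the second, more focused screening
retained_from_initial = 28    # initial papers kept in the final synthesis
added_later = 30              # found by revisiting searches and citation tracking

duplicates_removed = records_identified - records_after_dedup
dropped_from_initial = initially_included - retained_from_initial
final_synthesis = retained_from_initial + added_later

print(duplicates_removed)    # 463
print(dropped_from_initial)  # 23
print(final_synthesis)       # 58
```

The derived figures agree with the 23 papers left out and the 58 papers cited in the final synthesis.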

Data extraction, quality assessment and synthesis

This was an iterative process undertaken by JG, SD and EG, with feedback from the wider project group (NB, CV, DM, LW, JJ and LL). Data extraction, quality assessment, literature searching and synthesis occurred simultaneously. To begin the synthesis process, we identified five key index papers18,75,97–99 from JG’s personal library and conducted a ‘mini’ or ‘pilot’ synthesis on the papers. The papers were selected to represent a range of countries (the USA and the UK), settings (secondary care and primary care) and different types of performance data (PROMs, star ratings and mortality data). In this pilot synthesis, we attempted to understand:

- In what circumstances and through what mechanisms do providers interpret performance data, identify a solution to the problem and then implement that solution to improve the quality of patient care?
- In what circumstances and through what mechanisms do providers respond to performance feedback in a way that does not lead to the initiation of QI activities?

Together with our programme theories, we developed an initial logic model of how providers were expected to respond to performance data and used this as a framework to read the papers. We read the papers with our initial programme theories and logic model in mind and the theories acted as a lens through which to identify salient findings in the paper and relate them to the theory. We used the theories to make sense of the findings of each paper in and of itself, but also in comparison with other papers. After a first reading of the papers, we began to chart the potential contextual factors that might influence how performance data are responded to and the different provider responses identified within the paper. We then reread the papers to begin to develop ideas about how these different factors might come together as context–mechanism–outcome configurations: that is, ideas or hypotheses that would explain in what circumstances and through what processes performance data feedback led to initiation of QI initiatives (or not). This was both a within-paper and a cross-paper analysis. Within papers, the analysis consisted of identifying patterns in which particular clusters of contextual factors reported in a paper gave rise to a particular provider response or responses. For the cross-paper analysis, we compared these patterns across papers and attempted to explain or hypothesise why similar or different patterns might have arisen.

We discussed the findings of this pilot synthesis with the wider project group, and this informed the screening process for the papers identified via database searches and citation tracking. We screened the papers as discussed above and had an initial selection of 51 papers to begin the synthesis ‘proper’. We developed a data extraction template to extract details of study title, aims, methodology and quality assessment, main findings and links to theory (see Appendix 2 for an example of a completed data extraction template). In realist synthesis, data include not just the findings of the study but also the authors’ interpretations of their findings, and we made these distinctions clear during data abstraction.
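
As an illustration only, the fields of the data extraction template could be represented as a simple record. The Python sketch below is an assumption about structure, not the template actually used (Appendix 2 gives a completed example of that); the class name, field names and example values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtractionRecord:
    """Hypothetical record mirroring the template fields named in the text."""
    title: str
    aims: str
    methodology: str
    quality_assessment: str
    # Findings and the authors' own interpretations are stored separately,
    # because realist synthesis treats both as data.
    main_findings: List[str] = field(default_factory=list)
    author_interpretations: List[str] = field(default_factory=list)
    links_to_theory: List[str] = field(default_factory=list)


# Placeholder values only; this is not a study from the review.
record = ExtractionRecord(
    title="Example study",
    aims="Placeholder aim",
    methodology="Qualitative interviews",
    quality_assessment="Placeholder appraisal",
)
record.main_findings.append("Placeholder finding")
record.author_interpretations.append("Placeholder interpretation")
record.links_to_theory.append("market share")

print(record.links_to_theory)
```

Keeping findings and interpretations in separate fields is one way to preserve the distinction between the two kinds of data during extraction.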

We extracted these data for all 51 studies initially, and linked the study findings to our initial programme theories. This enabled us to have a detailed understanding of each study and to begin the process of using each individual study to test and refine our theories. We discussed findings through face-to-face meetings and via Skype (Skype™, Microsoft Corporation, Redmond, WA, USA). To enable us to compare study findings in terms of the theories about when, how and why feedback and public reporting of performance data prompted providers to respond, we produced a summary table that listed key theories for each study. We considered providers in the broadest sense to mean both individuals and organisations and both primary and secondary care. However, we also recognised that different theories related to different levels of the organisations; for example, ‘intrinsic’ motivation theories were more relevant to individuals, whereas ‘market share’ theories could refer both to individuals and organisations. We then produced another table that identified common ‘theory themes’ across the papers (see Appendix 3). These included:

- whether or not market competition is necessary for providers to respond to performance data
- whether or not rewards and incentives are necessary for providers to respond to performance data
- how the quality of data collection and analysis (e.g. timing of data collection, process of case-mix adjustment) influences the extent to which it is trusted by providers
- how the ‘sponsorship’ of performance data influences the nature of the data collected and the extent to which they are trusted by providers
- whether low or high performers viewed or responded differently to performance data
- different intended and unintended consequences and what was perceived to be driving them (e.g. when does focusing leadership attention on poor areas of care become ‘tunnel vision’ and when does it gain acceptance from clinical staff and lead to change?)
- the role of the media in driving or prompting providers to respond to performance data
- whether providers are motivated to change to protect their market share because they want to be as good as or better than their peers, or from an intrinsic desire to improve care
- the relative impact of ‘external’ indicators and providers’ own ‘internal data’ in driving a response to performance indicators
- the role of previous experience in public reporting, QI initiatives and involvement in broader QI activities in influencing how providers respond to public reporting
- the ‘actionability’ of the indicators and what makes them actionable or not.
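
Moving from a per-study summary of theories to a table of themes shared across papers amounts to inverting a mapping. The Python sketch below shows that step under stated assumptions: the study labels and theme tags are hypothetical placeholders, not entries from the review's summary tables.

```python
from collections import defaultdict

# Hypothetical per-study listing of theory themes (placeholders only).
study_themes = {
    "Study A": ["market competition", "actionability"],
    "Study B": ["role of the media", "actionability"],
    "Study C": ["market competition"],
}

# Invert the per-study listing into a per-theme listing of studies.
themes_to_studies = defaultdict(list)
for study, themes in study_themes.items():
    for theme in themes:
        themes_to_studies[theme].append(study)

for theme in sorted(themes_to_studies):
    print(theme, themes_to_studies[theme])
```

Themes supported by several studies then stand out as candidates for cross-paper comparison.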

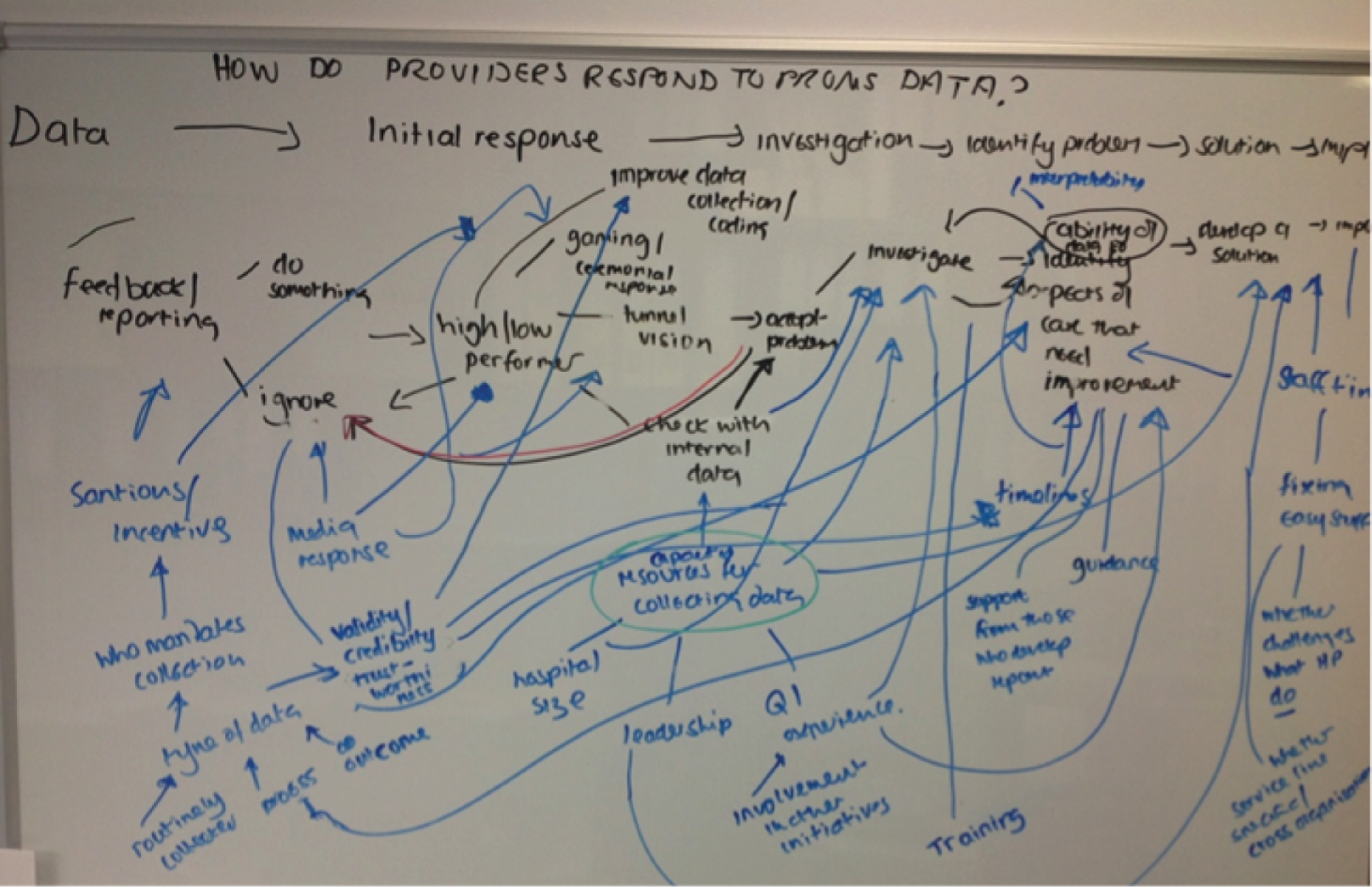

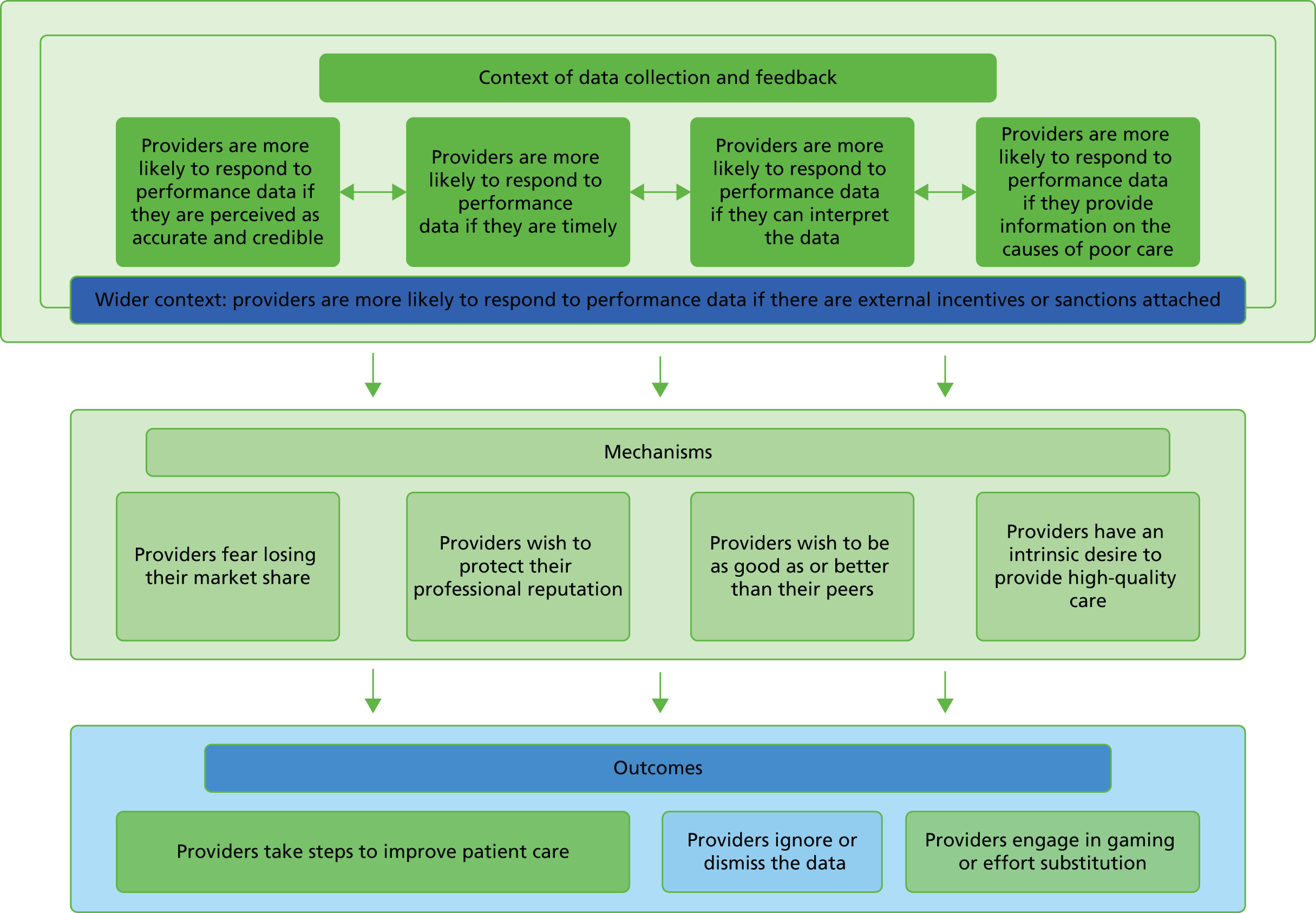

We also produced a table summarising study findings by type of performance data, to clarify the ways in which provider responses were influenced by the characteristics of each indicator, who mandated its collection, what sanctions or incentives were attached to it and how actionable the indicator was perceived to be. We then tried to establish how these different subtheories (listed above) linked together to explain the process through which providers responded to performance data. To do this, we returned to our original logic model of the process through which providers are expected to respond to performance data. We used a whiteboard to plot the mechanisms and contextual factors that influenced the different stages of providers’ responses to performance data, based on the findings of the summaries we had read. A photograph of this is shown below in Figure 4.

FIGURE 4.

Mind map of the process through which providers respond to performance data.

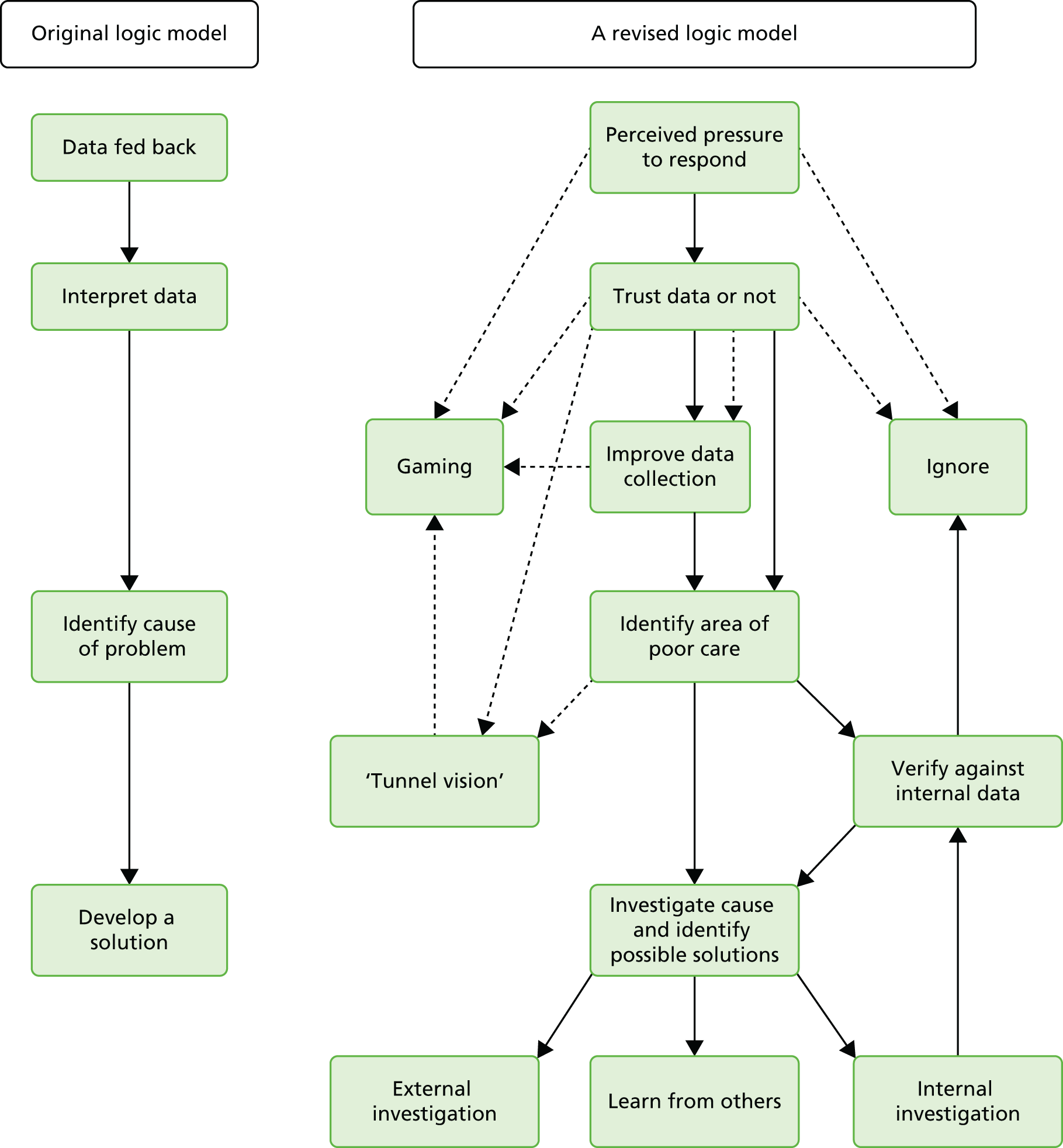

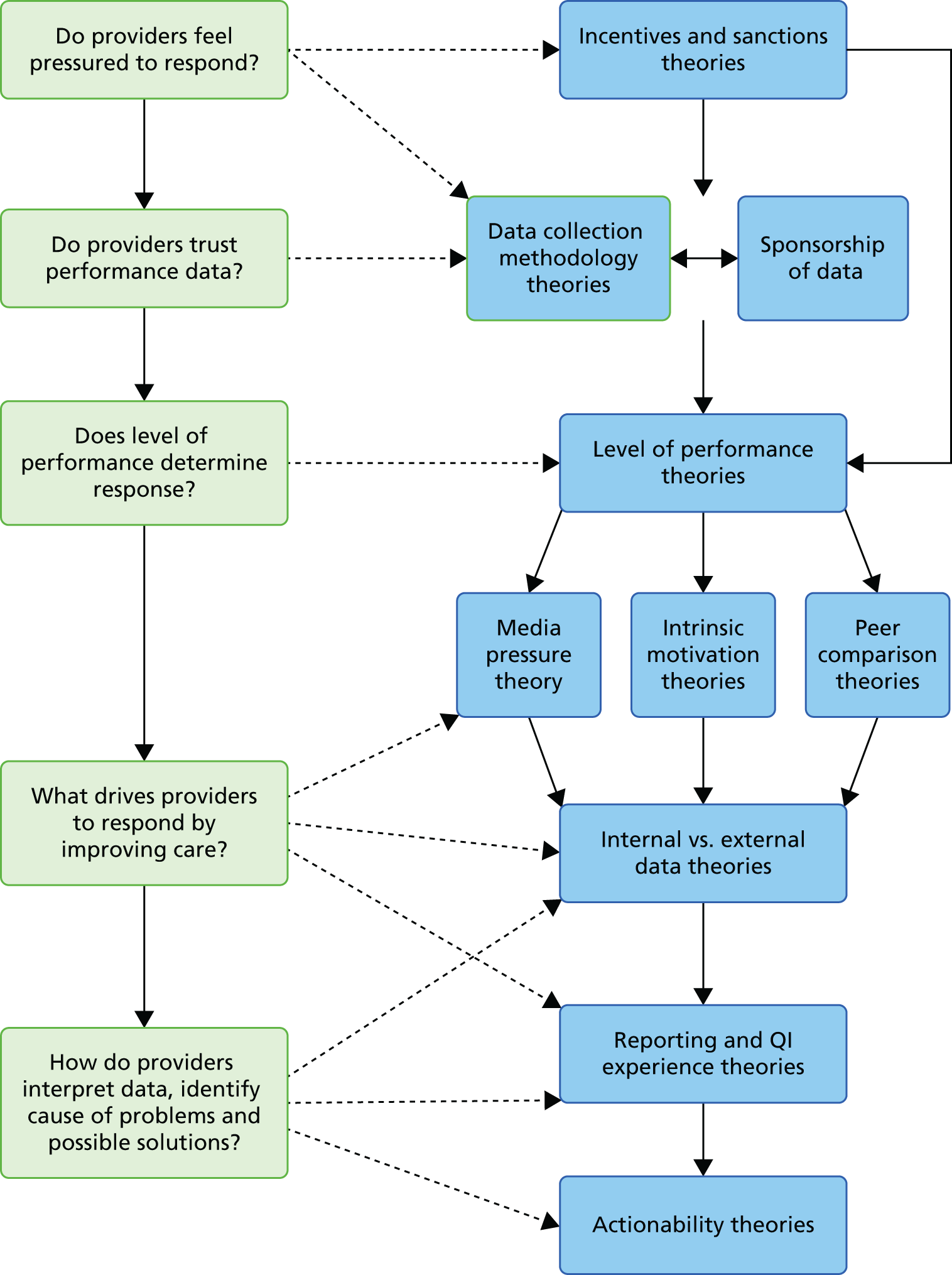

Throughout our analysis, we revised the original logic model developed in our pilot synthesis, which set out the process through which providers respond to performance data, to incorporate various intermediate actions or steps that providers might take in their response, and the feedback between these steps. We also produced a corresponding sequence of the different interlinked subtheories. Figure 5 presents both our original and one of our revised logic models side by side (indicating how providers might be expected to respond following feedback of ‘poor’ performance). In the revised model, the dashed lines represent routes to ‘unintended consequences’, while the solid lines represent ‘intended consequences’. Figure 6 takes the different steps depicted in the logic model and links them to one or more of the different subtheories that seek to explain how, why and when providers may respond in a particular way. This model was revised throughout the synthesis.

FIGURE 5.

Original and a revised logic model of provider responses to performance data following feedback of ‘poor’ performance.

FIGURE 6.

Sequence of ‘middle range’ theories about how and why providers respond to performance data.

As our synthesis progressed, we focused on a number of specific theories that addressed the mechanisms through which providers were expected to respond to performance data, and the key contextual factors that influenced this response. The mechanisms we explored were that providers respond to performance data because of:

- ‘Intrinsic motivation’ theory: Their professional ethos means that they are intrinsically motivated to maintain good patient care, and will take steps to improve if feedback highlights that there is a gap between their performance and expected standards of patient care.
- ‘Market share’ theory: They feel threatened by the potential loss of market share that could occur if patients decided to choose alternative, higher-performing providers.

-