Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 14/19/13. The contractual start date was in April 2015. The final report began editorial review in July 2016 and was accepted for publication in February 2017. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Christina Pagel, Sonya Crowe and Martin Utley report personal fees from University College London Consultants, outside the submitted work, from royalties from the sale of the original PRAiS software in 2013. No fees were received for work in this project. Rodney Franklin reports that he is Clinical Lead of the National Congenital Heart Disease Audit (NCHDA) within the National Institute of Cardiovascular Outcomes Research. Katherine Brown reports grants from Great Ormond Street Hospital Children’s Charity (grant number V1498) and the National Institute for Health Research {for Health Services and Delivery Research programme projects 10/2002/29 [Brown KL, Wray J, Knowles RL, Crowe S, Tregay J, Ridout D, et al. Infant deaths in the UK community following successful cardiac surgery: building the evidence base for optimal surveillance, a mixed-methods study. Health Serv Deliv Res 2016;4(19)] and 12/5005/06 [under way]} outside the submitted work. Katherine Brown also sits on the steering committee of the NCHDA.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2017. This work was produced by Pagel et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Background and research objectives

Background

Why is mortality monitored and why should we try to adjust for risk?

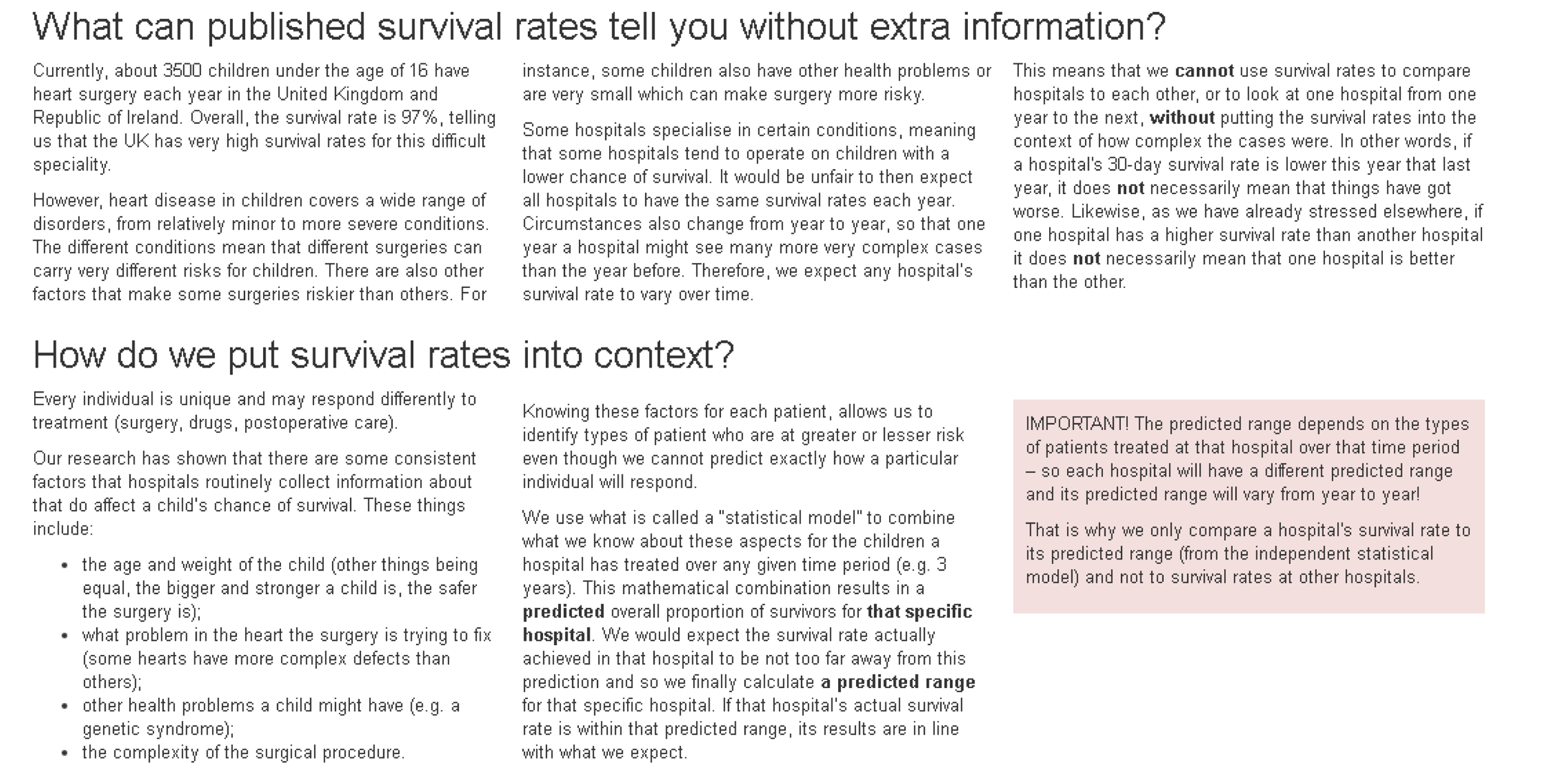

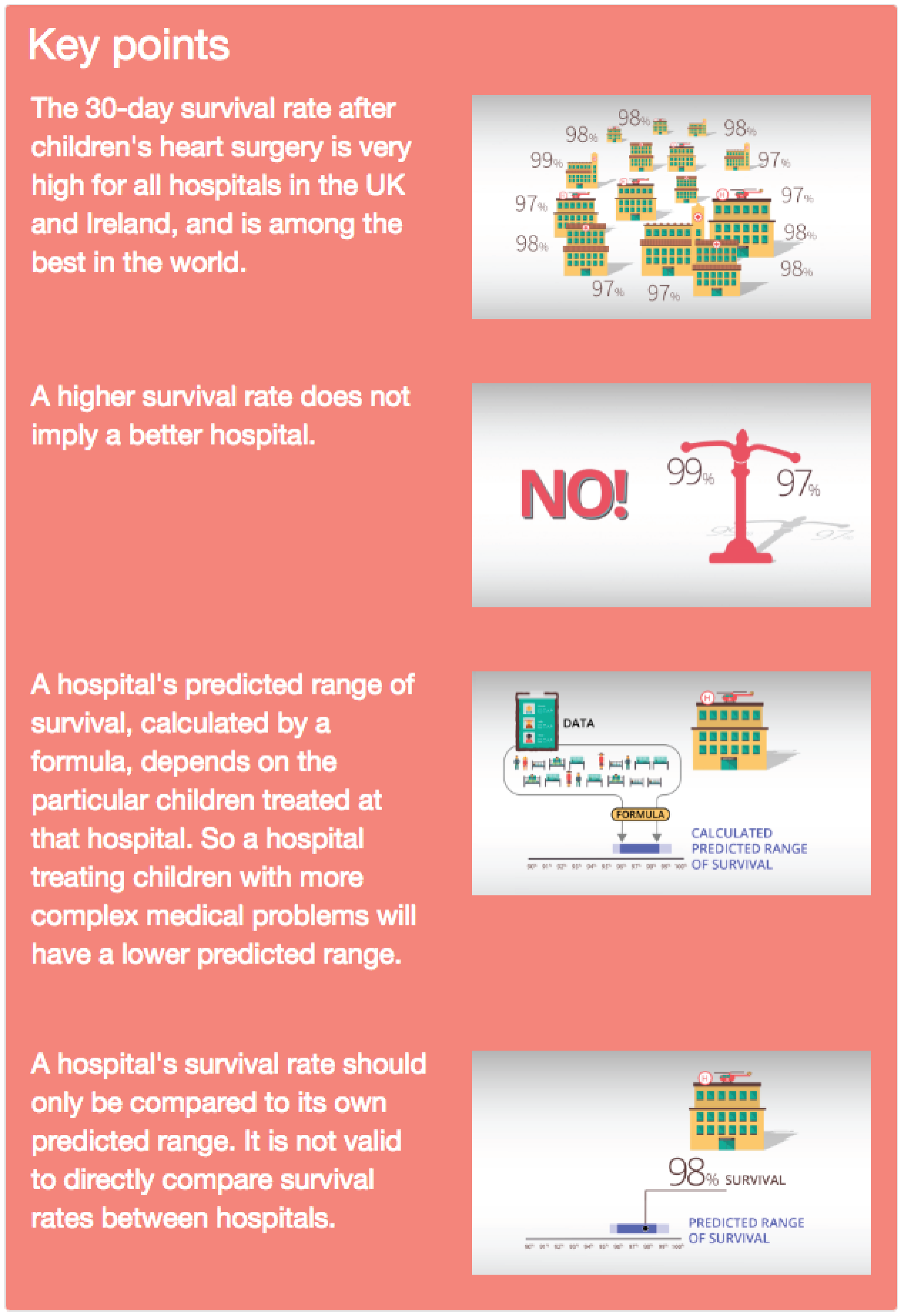

Approximately 3500 children aged < 16 years have heart surgery each year in the UK. 1 Although overall 30-day survival is over 97%, congenital heart disease (CHD) is a spectrum of disorders, and the more serious and complex abnormalities are an important cause of childhood mortality, morbidity and disability.

Since 2000, all UK specialist centres have contributed procedure data to the National Congenital Heart Disease Audit (NCHDA), one of seven national audits within the National Institute of Cardiovascular Outcomes Research (NICOR). Life status is independently obtained from the Office for National Statistics (ONS); when no ONS tracking is available, discharge status is used instead and checked with individual units. Centre-specific mortality outcomes for individual procedure categories have been published online by NICOR since 2007. 2

Outcomes following children’s heart surgery have long been the subject of clinical, regulatory, media and public scrutiny in the UK. Complex surgical procedures on extremely small hearts are among the most technically challenging and resource intensive in the field. In the UK, past events, public inquiries and plans to reduce the number of centres performing such surgery all provide a rich source of backstories and a level of public awareness that make this specialty ripe for comment and journalism. 3–6 Mortality has, understandably, always been the dominant reported outcome and is often perceived as a straightforward measure of performance by the media and the public.

There is a reasonable expectation from the paediatric cardiac profession that, in publishing their outcomes, audit will be ‘fair’ to clinical teams. That is, the reporting of outcomes should take into account the hugely diverse set of diagnoses and comorbid conditions that patients present with, the wide range of surgical procedures performed, the differences in case mix between centres and the impact of relatively small numbers of patients on what can reliably be inferred from data. These characteristics of the specialty make risk adjustment in the presentation of outcomes analysis essential, but they also make it very difficult to achieve.

Until recently, the only risk adjustment method easily available to NICOR was to report mortality within procedure categories, thus partially accounting for variations in case mix. One example is the arterial switch operation, a definitive surgical procedure to repair the heart when the main artery and vein connections to the heart are the opposite of what they should be. The structure of such hearts can vary drastically, from a ‘simple’ isolated inversion of the great arteries to a complex physiology with several accompanying abnormalities. A child may also have additional non-cardiac problems and/or an underlying chromosomal abnormality. These sorts of issues highlight the importance of incorporating case mix into interpreting observed short-term outcomes following heart surgery in children, beyond consideration of the procedure performed. 6

Why did we develop the PRAiS risk model?

There have been several models aiming to incorporate risk assessment into outcome measures. In the early 2000s, the Risk Adjustment for Congenital Heart Surgery (RACHS-1) score was introduced. 7 This method involves gathering a panel of experts who assign patients to one of six predefined risk categories on the basis of the presence or absence of individual diagnosis and procedure codes. Cases with combinations of cardiac surgical procedures are placed in the category of the highest risk procedure. Around the same time, the Aristotle tool emerged to evaluate quality of care based on the complexity of the operation and on specific patient characteristics. 8 Also based on a review by a panel of experts, Aristotle gives a precise score for the complexity of 145 specific paediatric cardiac operations. Both RACHS-1 and Aristotle are examples of ‘consensus-based’, subjective, risk stratification tools, essentially meaning that experts have sat down and decided how a particular operation compares with others in terms of risk, which for these systems is usually considered synonymous with complexity. Although certainly valuable and useful, these methods have started to give way to recent empirical approaches, based on the emerging availability of databases incorporating the outcomes of tens of thousands of patients. The Society of Thoracic Surgeons-European Association of Cardiothoracic Surgery (STS-EACTS) score, or STAT-score, introduced in 2009, was based on data from > 75,000 paediatric cardiac surgery procedures performed between 2002 and 2007 in Europe and North America and was an important step towards monitoring mortality, as clinical teams could benchmark current outcomes against achieved outcomes in the recent past. 9

None of the above risk models is easy to use in the routine monitoring of outcomes using UK national audit data, none was calibrated on UK data and all mainly used procedure information. In our previous National Institute for Health Research (NIHR) Health Services and Delivery Research-funded project (09/2001/13), which ended in 2011, we (VT, KB, MU, CP and SC) developed a new risk model for 30-day outcome after paediatric cardiac surgery, referred to as PRAiS (Partial Risk Adjustment in Surgery). 10,11 This empirical model was developed for the purpose of routine local in-house monitoring of risk-adjusted outcomes within UK paediatric cardiac surgical units, and incorporated not only the procedure but also cardiac diagnosis, the number of functioning ventricles and age category (neonate, infant or child), as well as continuous age, continuous weight, presence of a non-Down syndrome comorbidity and whether or not surgery was performed on cardiopulmonary bypass. The model was developed using data from all paediatric cardiac surgery procedures performed in the UK between 2000 and 2010, and in validation it compared well with RACHS-1, Aristotle and the STS-EACTS score. The intention was that, by facilitating local routine monitoring, units could regularly examine their recent outcomes (with a time lag of 30 days), compared with the annual results published by NICOR, which could represent a time lag of up to 18 months.

What happened with PRAiS after the end of the first National Institute for Health Research grant?

Following the development of the PRAiS risk model, several units expressed an interest in piloting the model for the in-house routine monitoring of outcomes. In 2012, the analytical team at the University College London (UCL) Clinical Operational Research Unit (CORU) (CP, MU and SC) worked with Great Ormond Street Hospital, Evelina London Children’s Hospital and Glasgow Royal Hospital for Sick Children in a pilot study to implement the new risk model. We developed prototype software to allow units to use their own routinely collected data to produce variable life-adjusted display (VLAD) charts for 30-day mortality after children’s heart surgery after partial risk adjustment with PRAiS. 12,13 The software was codesigned with the clinical teams to be robust and easy to use and to produce output that was helpful for team discussion of outcomes. The advantage of using VLAD charts is that they show the accumulation of outcomes over time, allowing trends in outcomes (both negative and positive) to be spotted quickly and discussed.
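As a minimal sketch of how a VLAD series accumulates, the following assumes the standard VLAD construction: each episode adds the model's predicted risk of death if the patient survived and subtracts one minus that risk if the patient died, so the running total is expected minus observed deaths. The function and argument names are hypothetical and this is not the PRAiS software's implementation.

```python
from itertools import accumulate


def vlad_series(predicted_risks, died):
    """Return the cumulative VLAD value after each episode.

    predicted_risks: predicted probability of 30-day death for each episode,
                     in chronological order (hypothetical input format).
    died:            matching booleans, True if the patient died within 30 days.
    """
    if len(predicted_risks) != len(died):
        raise ValueError("inputs must have the same length")
    # Each episode contributes expected deaths minus observed deaths:
    # +p for a survivor, p - 1 for a death.
    steps = [p - (1.0 if d else 0.0) for p, d in zip(predicted_risks, died)]
    return list(accumulate(steps))


if __name__ == "__main__":
    # Three illustrative episodes; the second patient died.
    print(vlad_series([0.1, 0.5, 0.2], [False, True, False]))
```

A rising line indicates more survivors than the risk model predicted over that period, and a falling line indicates fewer, which is why trends in either direction are visible at a glance.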

The pilot study was successful, with the pilot units keen to continue using the software and other units also showing an interest. 14 As a result, the team at UCL CORU decided to further develop the software that implements PRAiS into a package that could be rolled out across the UK. This involved including comprehensive error checking of any entered data, further work on the software in response to feedback from pilot units, the development of user manuals and the recalibration of the PRAiS risk model on all national data from 2007 to 2010, in part to address an observed imbalance in neonatal outcomes in the original development and validation data sets. 11 The software to implement PRAiS was licensed by UCL Business in April 2013 and licences were purchased by NICOR with funding from NHS England for all English hospitals that perform children’s heart surgery and for the NICOR congenital heart audit itself. All units that contribute data to NICOR’s congenital heart audit now possess licensed copies of the PRAiS software.

In the following 6 months, all English hospitals downloaded the software to implement PRAiS, and it was also used by NICOR to include risk adjustment in the reporting of national outcomes for the first time. 1 An important part of the software licence was the inclusion of a half-day consultancy visit to units, to discuss not only the practical use of the software, but also, and more importantly in the analytical team’s view, the caveats of using risk adjustment to monitor outcomes. As the PRAiS model began to be used to monitor outcomes both locally within units and nationally as part of NICOR’s audit, data quality for information previously collected but not actively used (such as comorbidity and diagnosis codes) improved rapidly (and retrospectively, as recent data were revisited by hospitals back to 2009). In particular, the proportion of surgical episodes with a recorded comorbidity (excluding Down syndrome) doubled from 15% (2000–10 original data set) to 30% of cases. It also appeared that national outcomes between 2009 and 2012 had improved since the time period that the PRAiS risk model was calibrated on (2007–10). 1 In July 2013, at NICOR’s request, CORU analysts (CP and SC) recalibrated the risk model on the 2009–12 data set (but left the risk factors identified in the original development process unchanged) and updated the software to implement the model. 15 These changes are documented on UCL CORU’s website (www.ucl.ac.uk/operational-research/AnalysisTools/PRAiS).

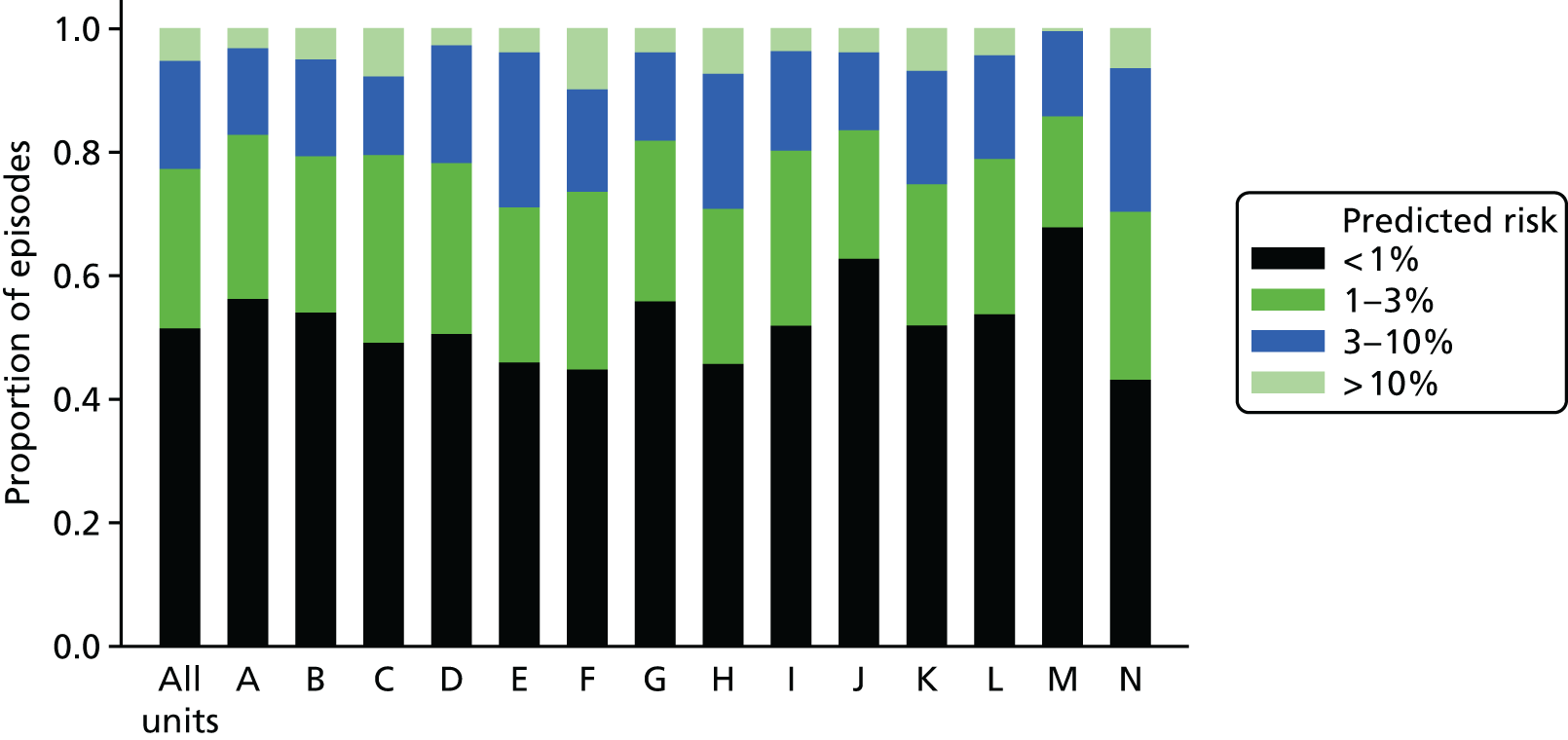

PRAiS is now being used in three main ways.

- Local quality assurance and improvement, for which it is used every month to monitor survival trends, so that any upticks or downticks can be discussed as a team. It is also used to audit particular population cohorts or operation types (e.g. all shunt operations or all neonates).
- National quality assurance to ensure that no hospital has a survival rate that is potentially concerning because it is much lower than expected using PRAiS.
- National audit annual benchmarking between hospitals and also against historic national performance (e.g. is one hospital doing markedly better and can the clinical community learn anything from those processes? Are national outcomes overall improving compared with historical data and, if so, do we understand why?).

What prompted this project to update the model?

Data completeness and quality of comorbidity information was poor in the original 2000–10 data set that was used to develop PRAiS. Although we explored different methods for incorporating information about different types of comorbidity and multiple comorbidity as part of our original project, none of the models using such methods proved to be robust. 10 Faced with the choice of excluding comorbidity entirely as a risk factor or using a very crude measure of comorbidity as a ‘yes/no’ variable, we chose the latter. This was because the definite presence of at least one non-Down syndrome comorbidity was significantly associated with mortality in multivariable analysis, because comorbidity was considered extremely important in risk adjustment by clinical collaborators (VT and KB) and because it was hoped that inclusion of the crude risk factor would drive future improvement in data quality concerning comorbidities. 10,11 In addition, other international risk adjustment systems have started to use comorbidity information as a risk factor, in recognition of its importance. 16,17

Our consultancy visits to English hospitals with the PRAiS software are now complete. Feedback on the software to implement PRAiS and the usefulness of the VLAD charts was very positive; however, a consistent concern, expressed during almost all visits, was the treatment of comorbidity within the PRAiS risk model, highlighting its importance as a risk factor. On the one hand, this justified the inclusion of comorbidity within PRAiS, but, on the other hand, it emphasised the need to revisit how comorbidity is incorporated within the model. The improvement in national audit data quality since 2009 (noted above) means that by 2015 there were enough data with better-quality comorbidity information for us to explore a more sophisticated inclusion of comorbidity within the PRAiS risk model.

We proposed to return to PRAiS model development to incorporate more detailed comorbidity information and thereby improve the risk adjustment achieved through the use of PRAiS in local and national audit.

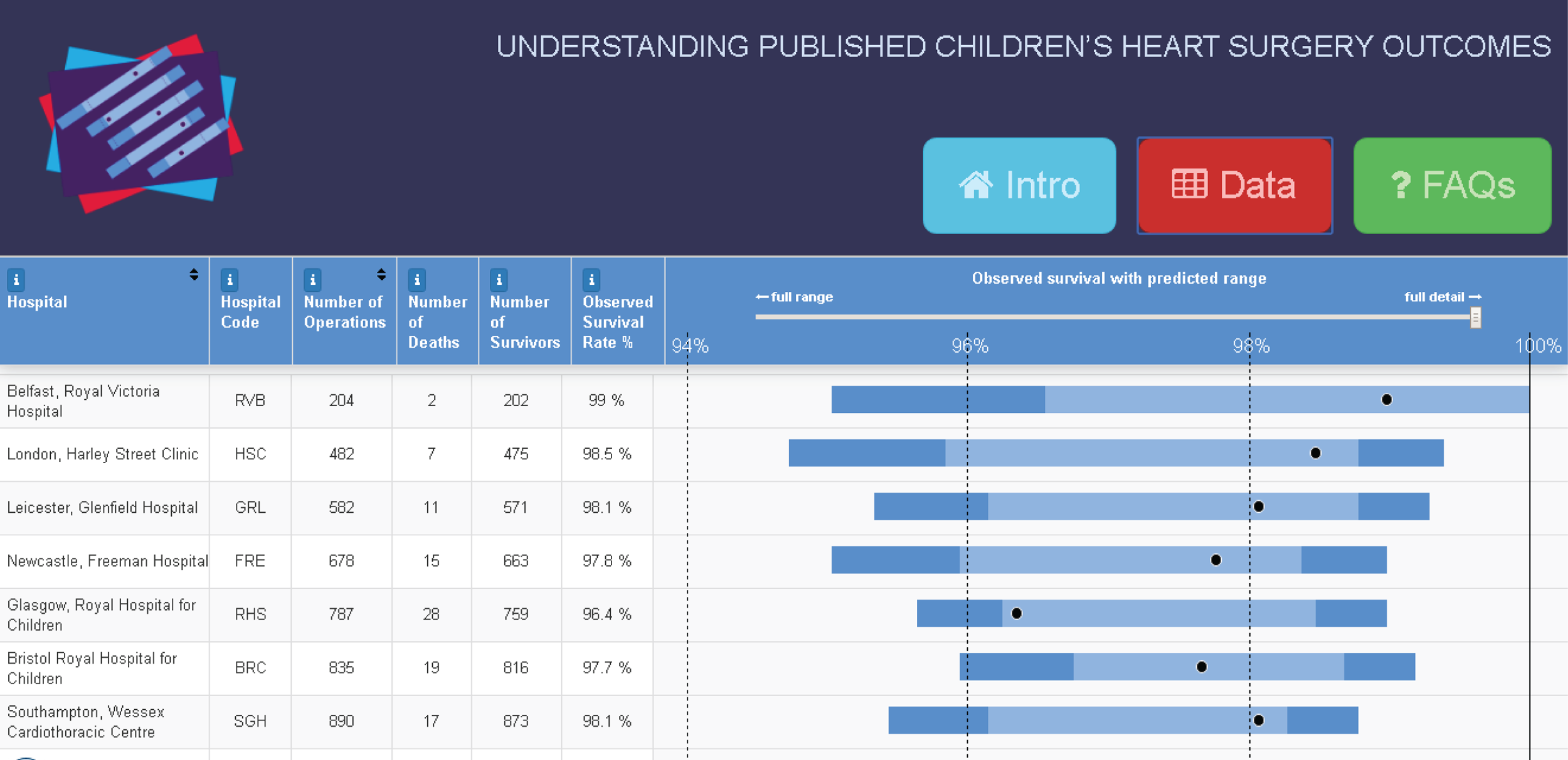

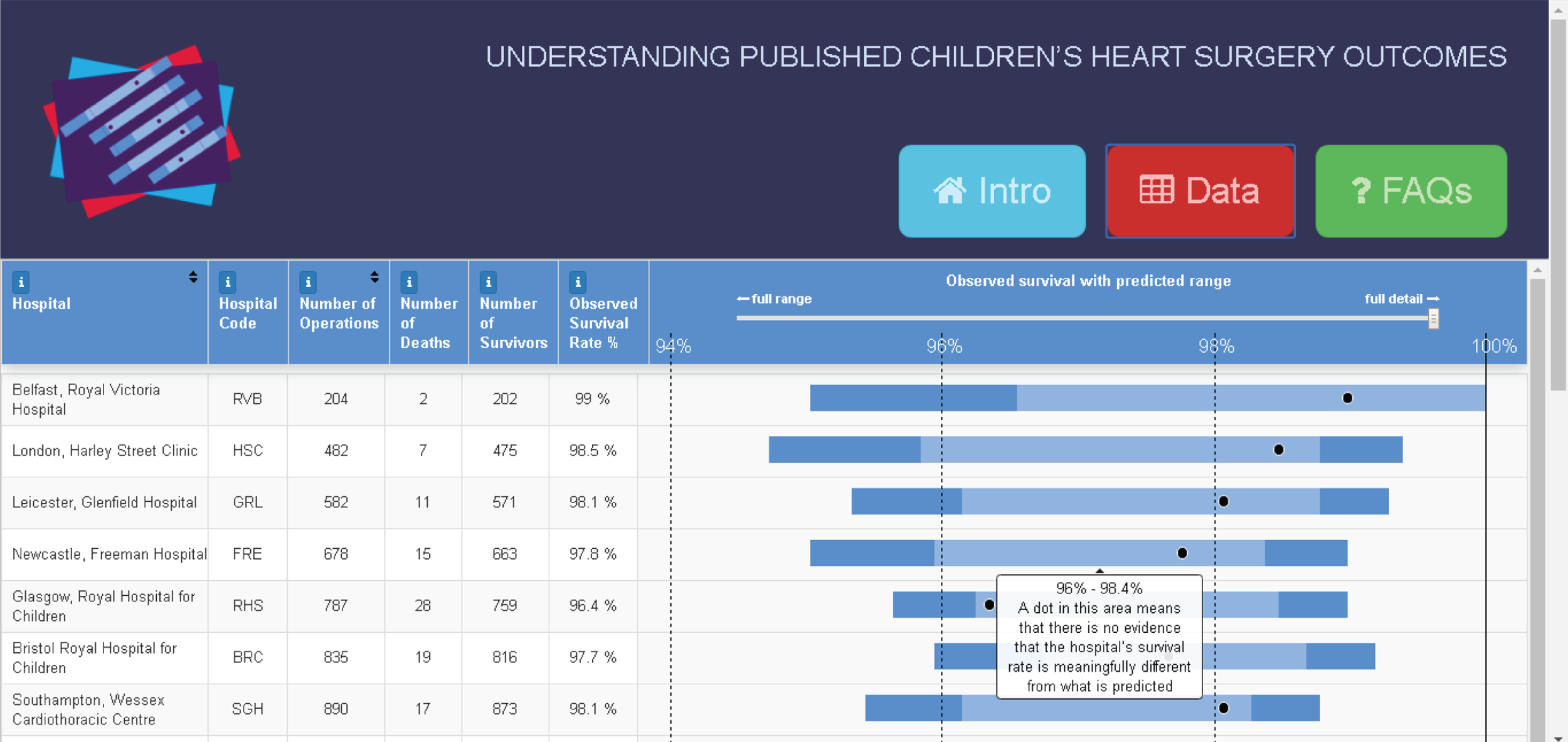

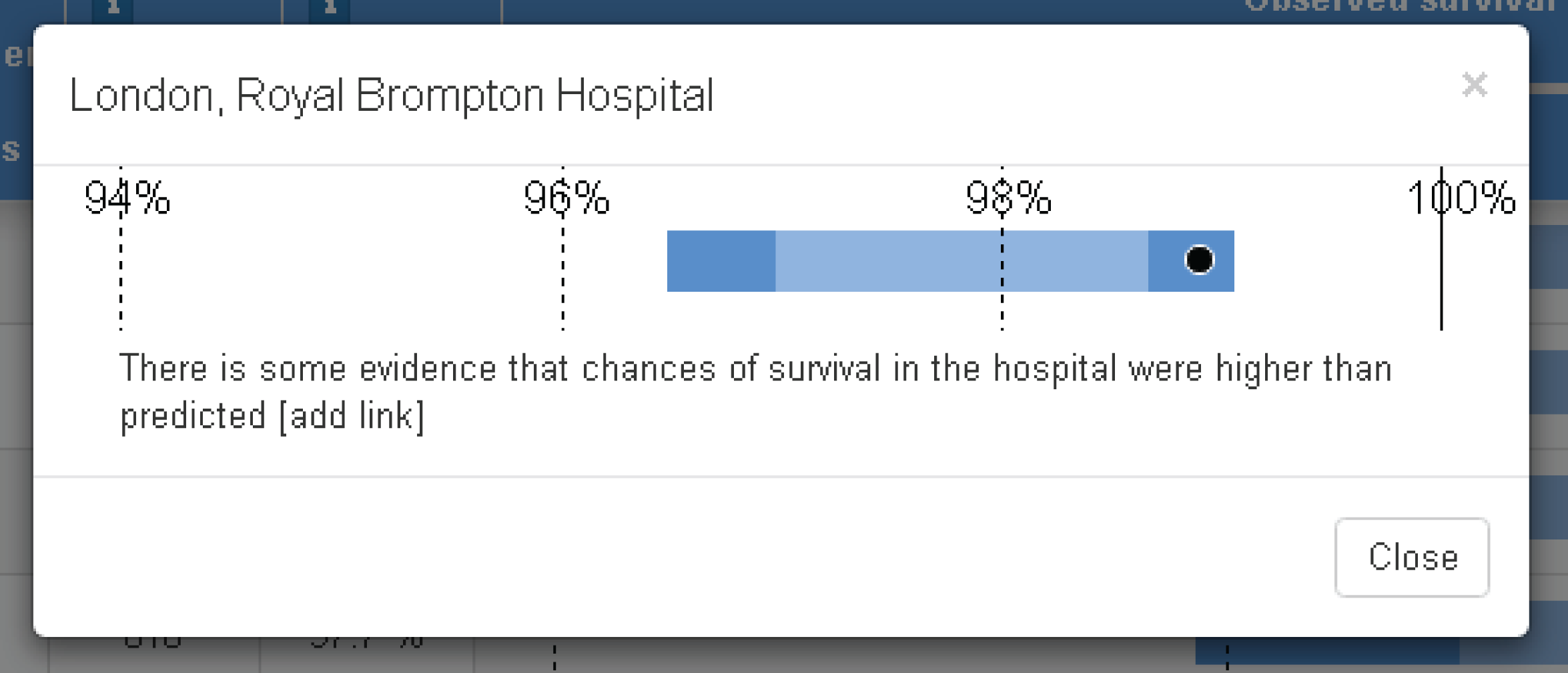

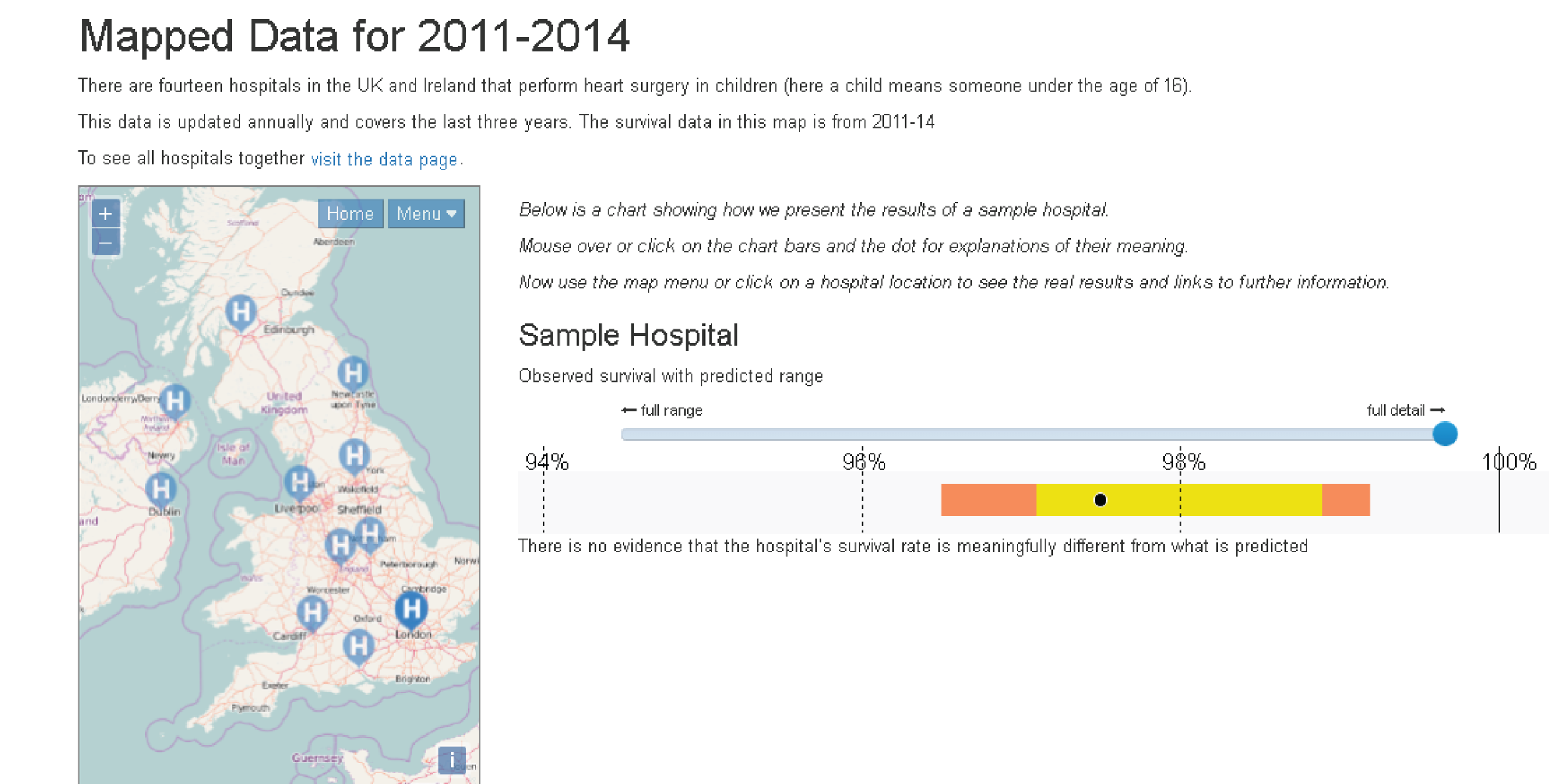

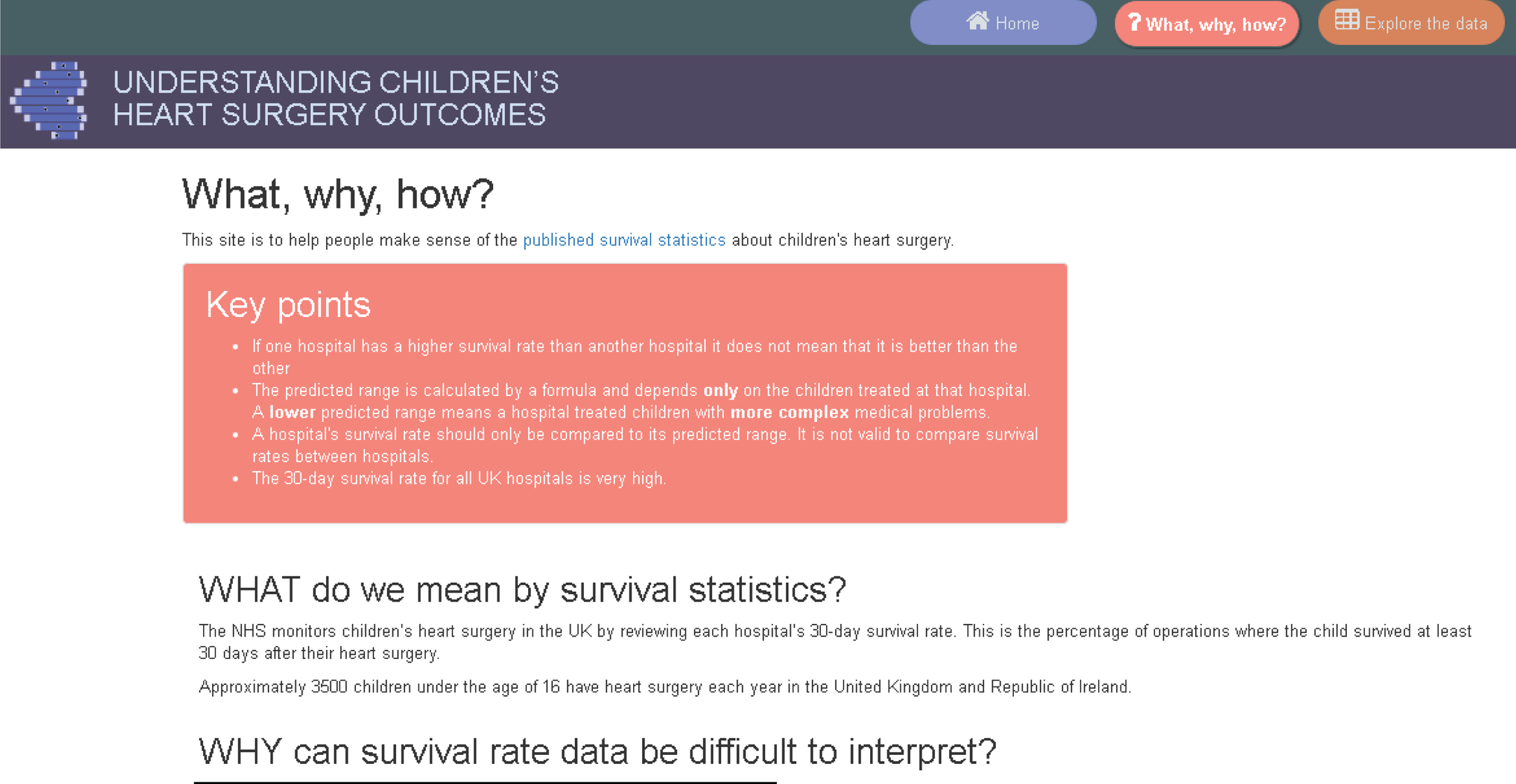

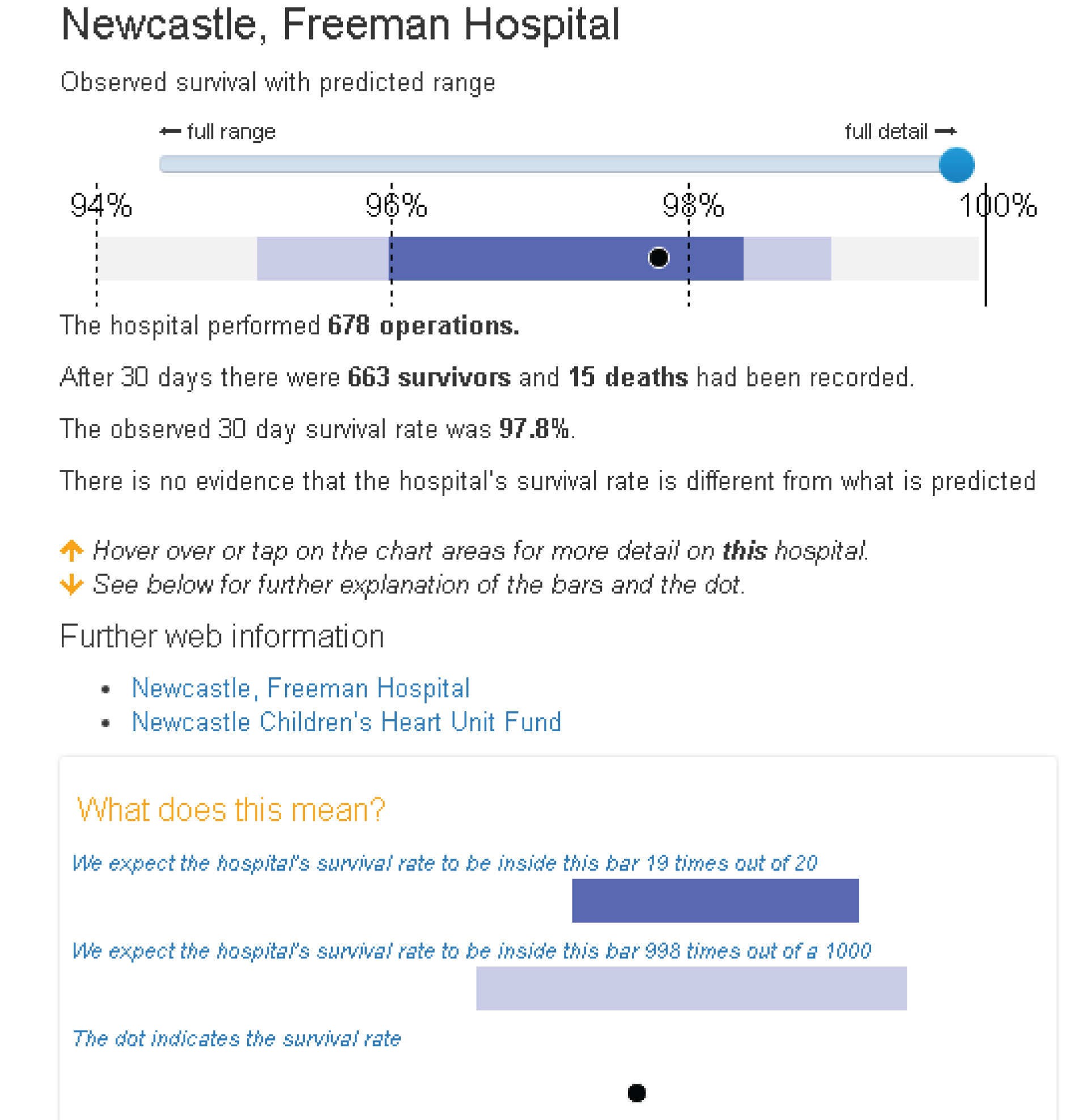

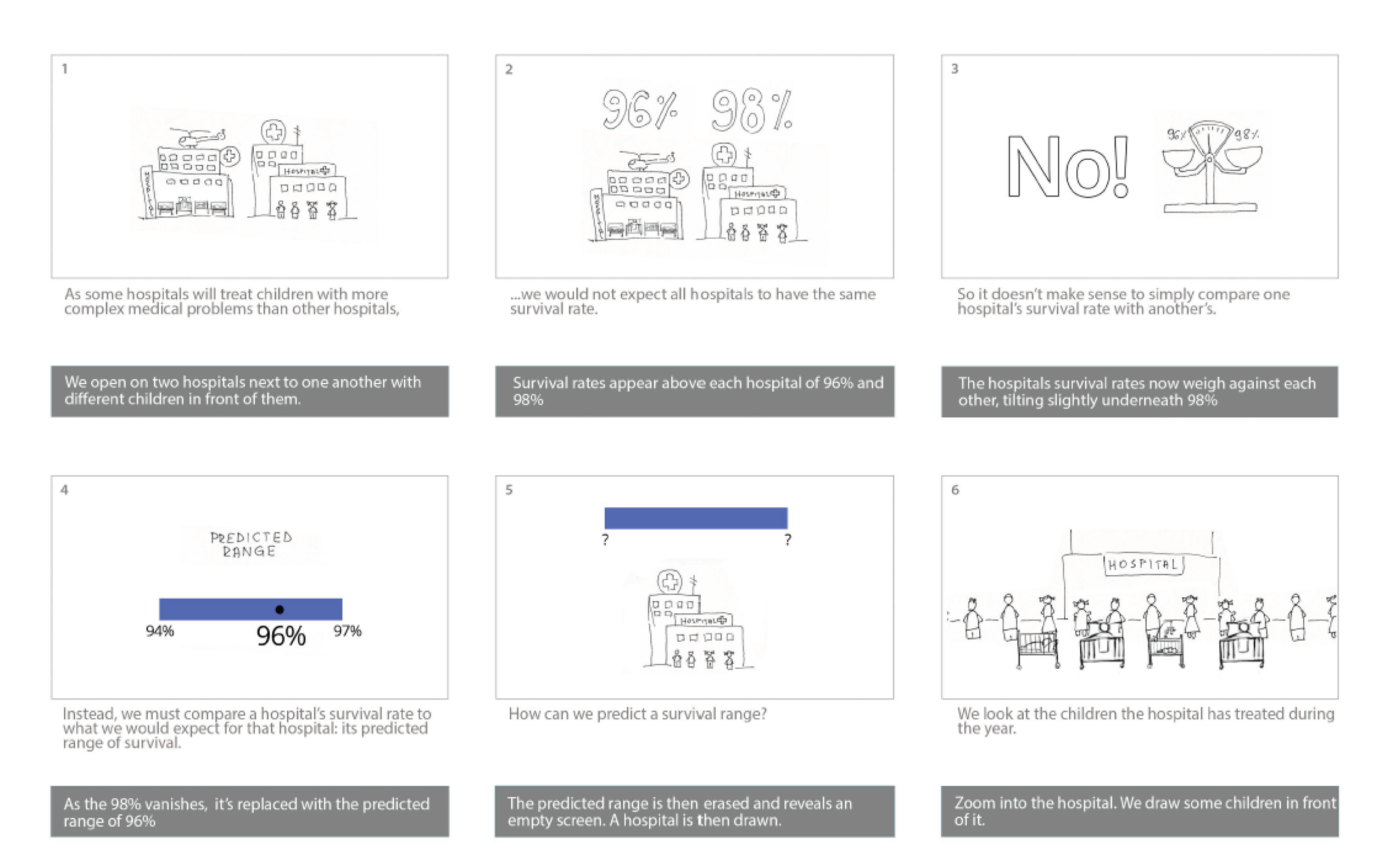

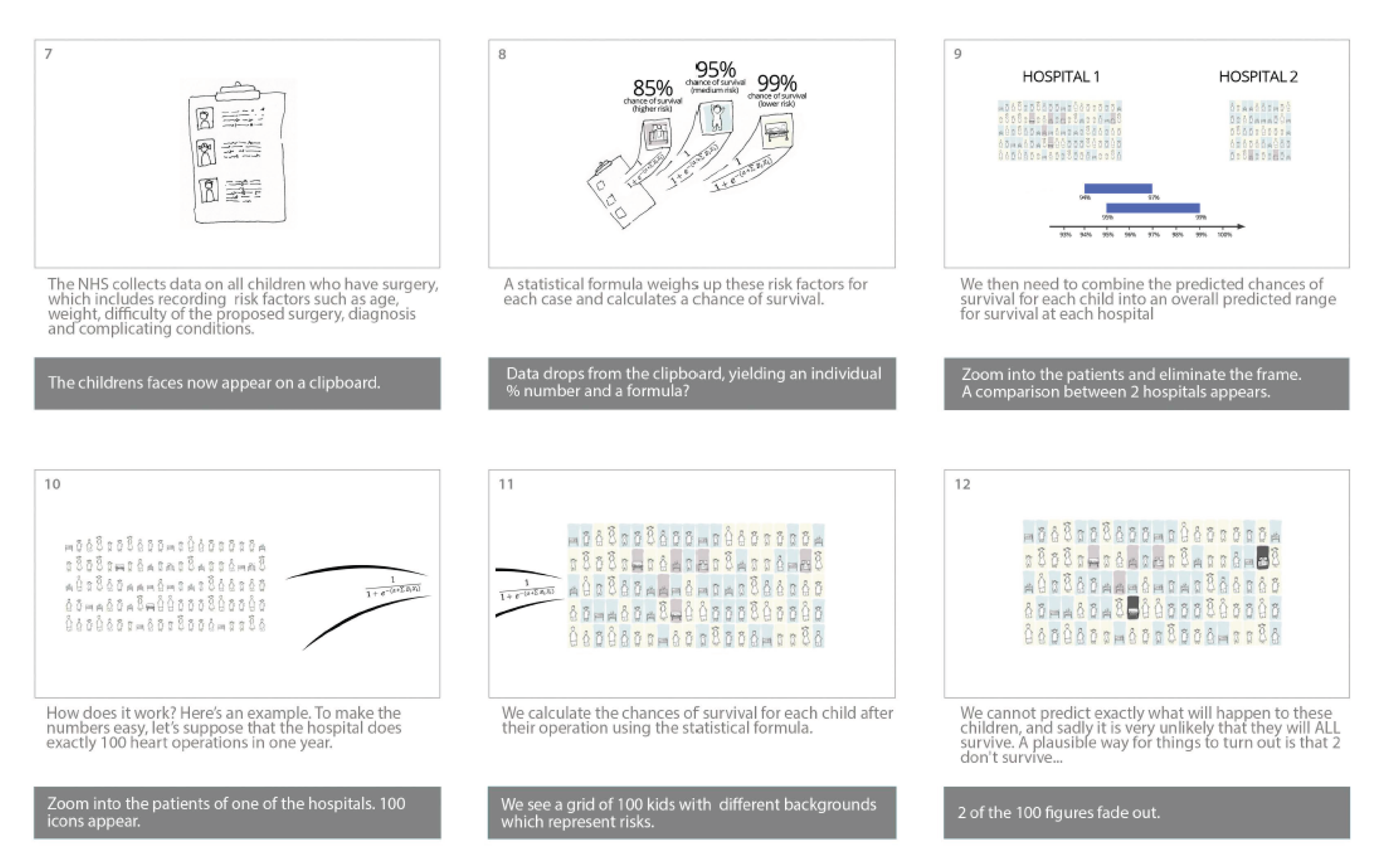

Public understanding of mortality outcomes following children’s heart surgery

The public and media response relating to the cessation of children’s heart surgery in one unit in 2010, the brief suspension of heart surgery in another in 2013 and other recent coverage all prove the immense public interest in understanding what happens to children after heart surgery and, in particular, fears about what deaths after heart surgery mean about the care provided within units. 18–27 The UK is one of the few countries that publish mortality outcomes after children’s heart surgery, and NICOR’s results are, understandably, used by journalists, politicians and the public to make judgements about whether or not heart surgery is ‘safe’. 28 Such judgements are fraught with difficulties and are very stressful both for the families of children who have heart disease and for the clinical teams treating these children.

Although the PRAiS risk model was originally developed for the local in-house routine monitoring of outcomes, it has also been adopted by NICOR’s congenital heart audit for reporting annual outcomes for each UK centre. 1 Using risk models for comparative audit is fairer than using raw mortality, but risk adjustment does not in and of itself make comparisons ‘fair’. 14,15 Comparing the number of deaths seen in different units may seem straightforward but, with or without risk adjustment, it is not that simple (whether in congenital audit or elsewhere). 15,29,30 We have written on the difficulties of interpreting comparative mortality data using PRAiS, and the NICOR Congenital Audit has also written resources for the public on its public portal; however, these sources are not easily found without prior knowledge of their existence and are not necessarily easily digested by the non-expert. 14,15,31,32

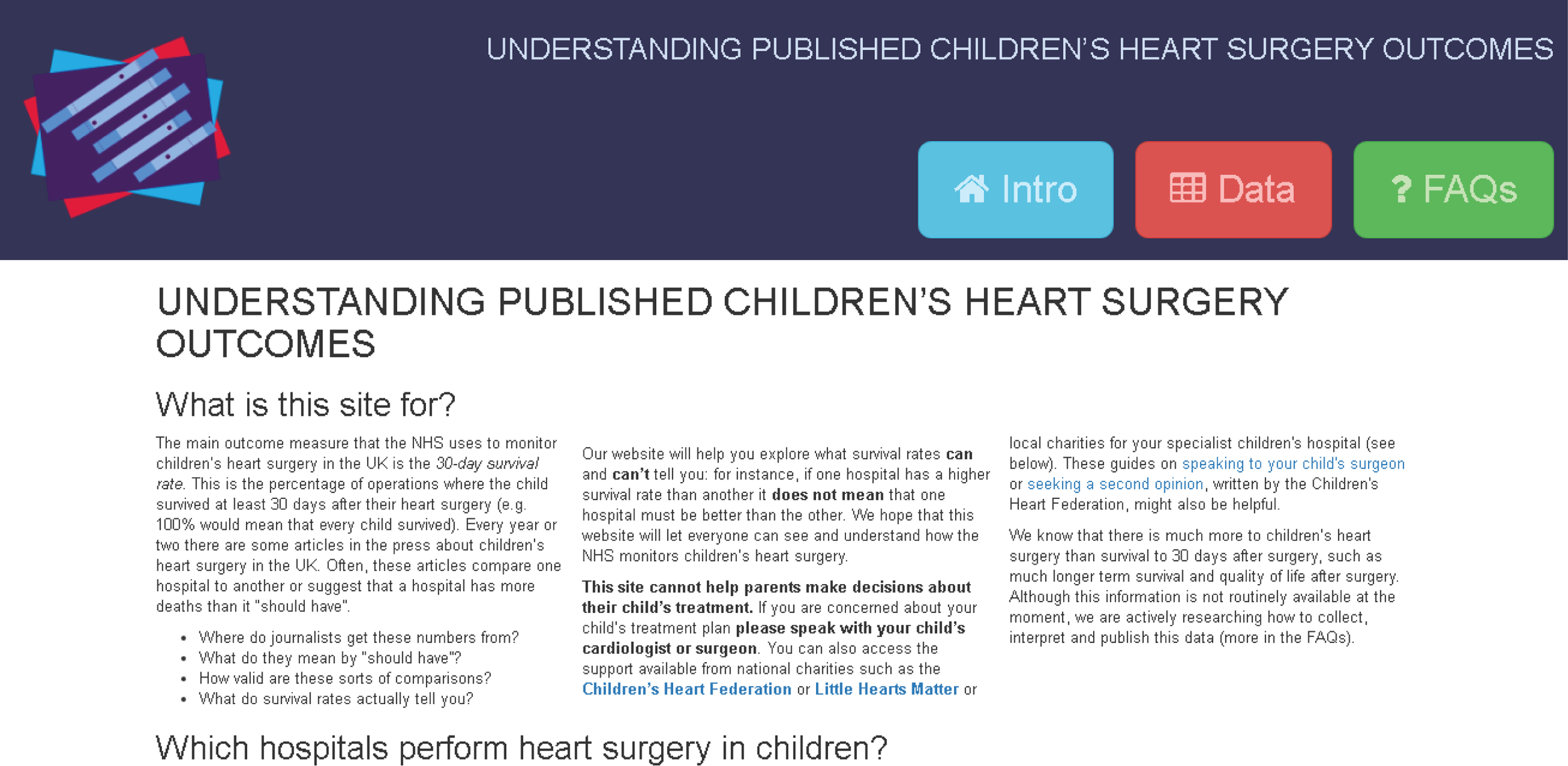

We believed that there was a real need, first, to develop better resources for the public about how to interpret evidence on mortality following children’s heart surgery and, second, to disseminate these resources widely. We had the enthusiastic support of two charities: the Children’s Heart Federation (CHF) (a user group for families of children with heart disease) and Sense about Science (a charity dedicated to the public understanding of science and evidence). The second strand of our project was (originally) to develop a video animation on the interpretation of mortality outcomes for the public, with help and user input from both charities.

Aims and objectives

Aim 1: updating the PRAiS risk model for 30-day mortality following paediatric cardiac surgery

Aim 1 was to improve the PRAiS risk model for 30-day mortality following paediatric cardiac surgery by incorporating more detailed information about comorbid conditions.

The objectives to achieve aim 1 were to:

- explore the relationship between the existing six comorbidity groupings (defined as part of the original risk model development process but not included in final model) and mortality, both in the presence and in the absence of other risk factors, and the consequent potential impact on the robustness of the PRAiS risk model (1.1)
- decide on the suitability of existing comorbidity groups in the light of initial exploratory analysis, devise any necessary modifications and consider options for changing current groupings of specific procedure and diagnosis categories, with expert input from clinicians and data managers from multiple centres (1.2)
- modify the existing mapping of individual comorbidity codes to broader comorbidity categories and to a single 30-day patient episode with expert clinical input (1.3)
- explore trade-offs in reducing detail in existing risk factors (e.g. specific procedure categories) to incorporate new comorbidity categories within the PRAiS risk model while maintaining a robust calibration (1.4)
- calibrate a new PRAiS risk model, after deciding on the final risk factors by consideration of statistical goodness of fit and clinical face validity (1.5)
- update the software that implements PRAiS with the new parameterisation (1.6).

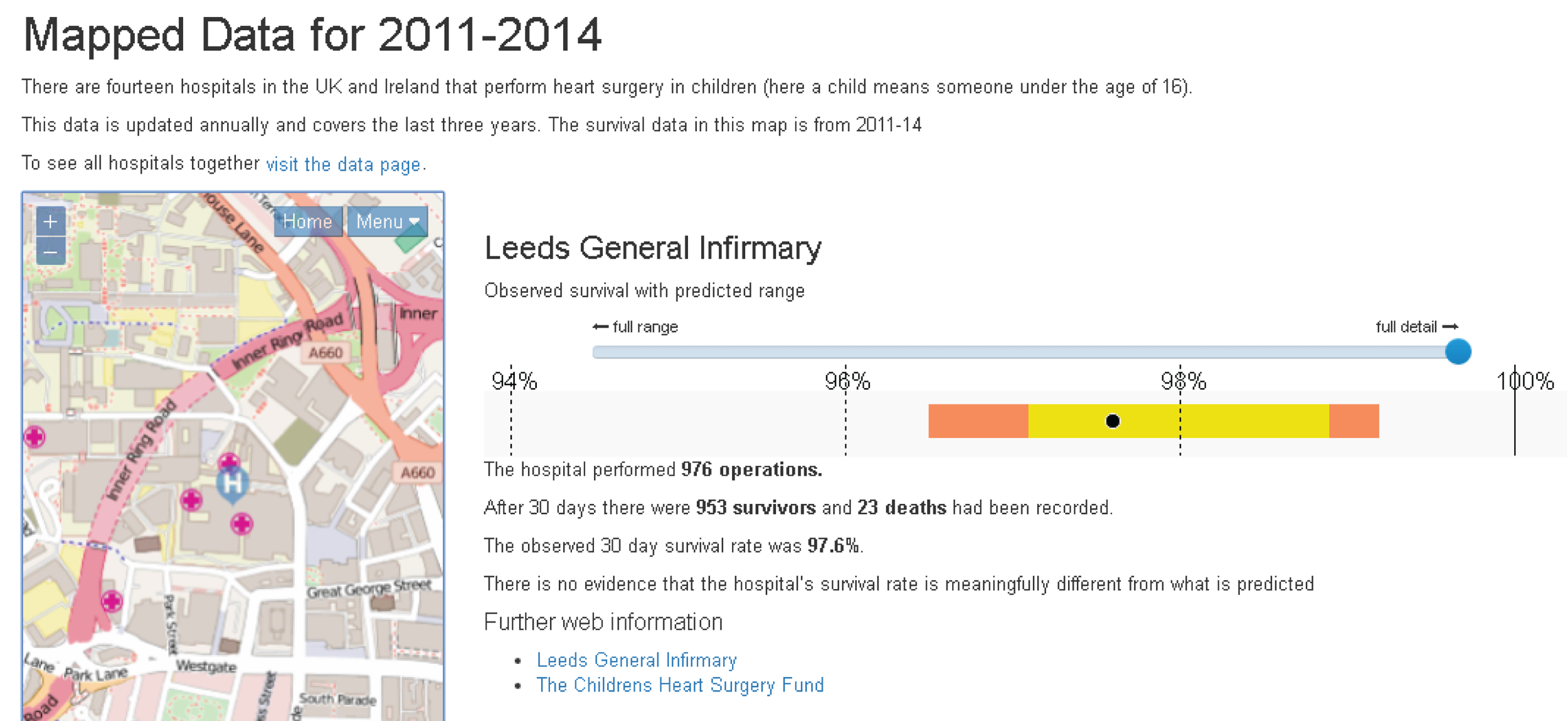

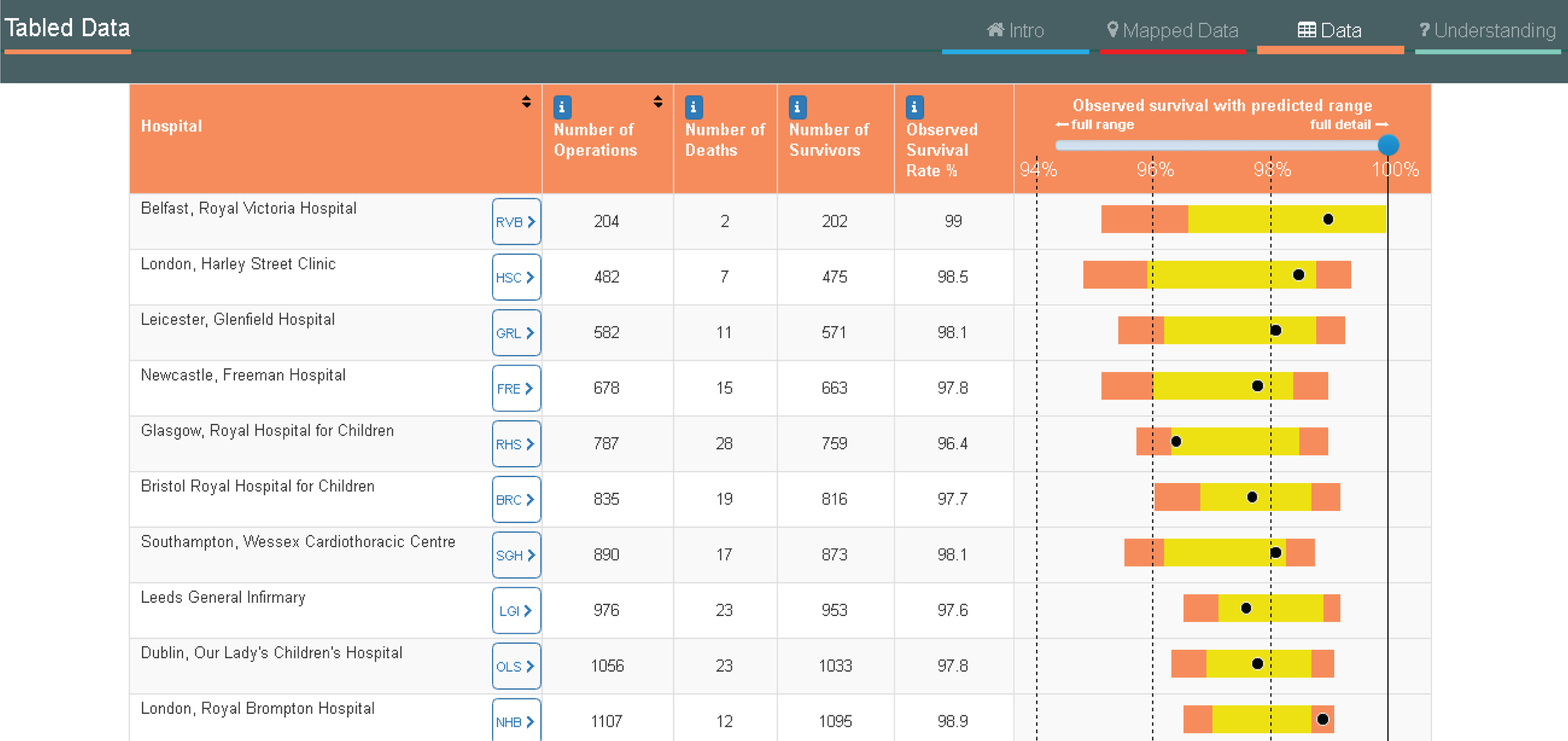

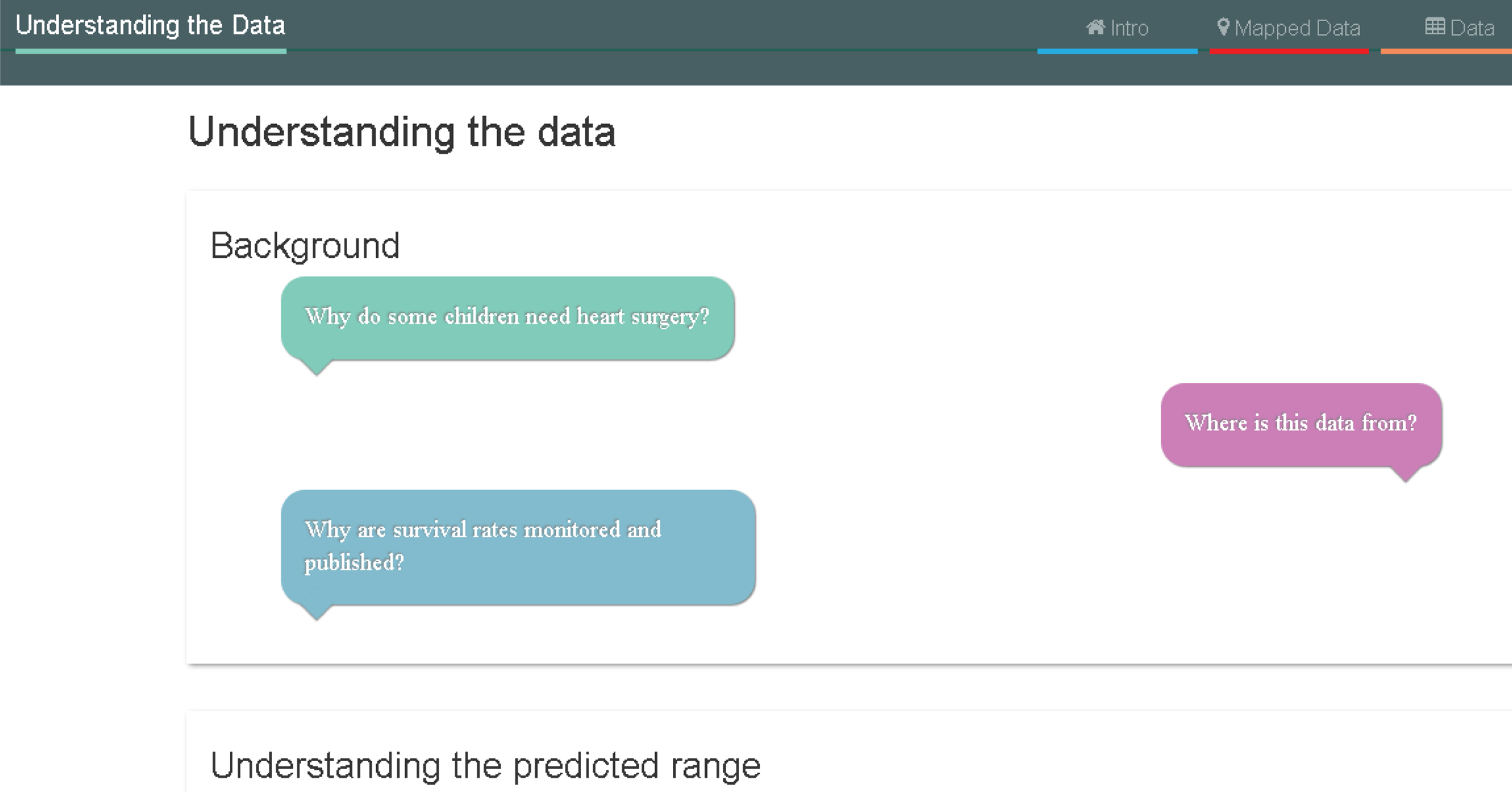

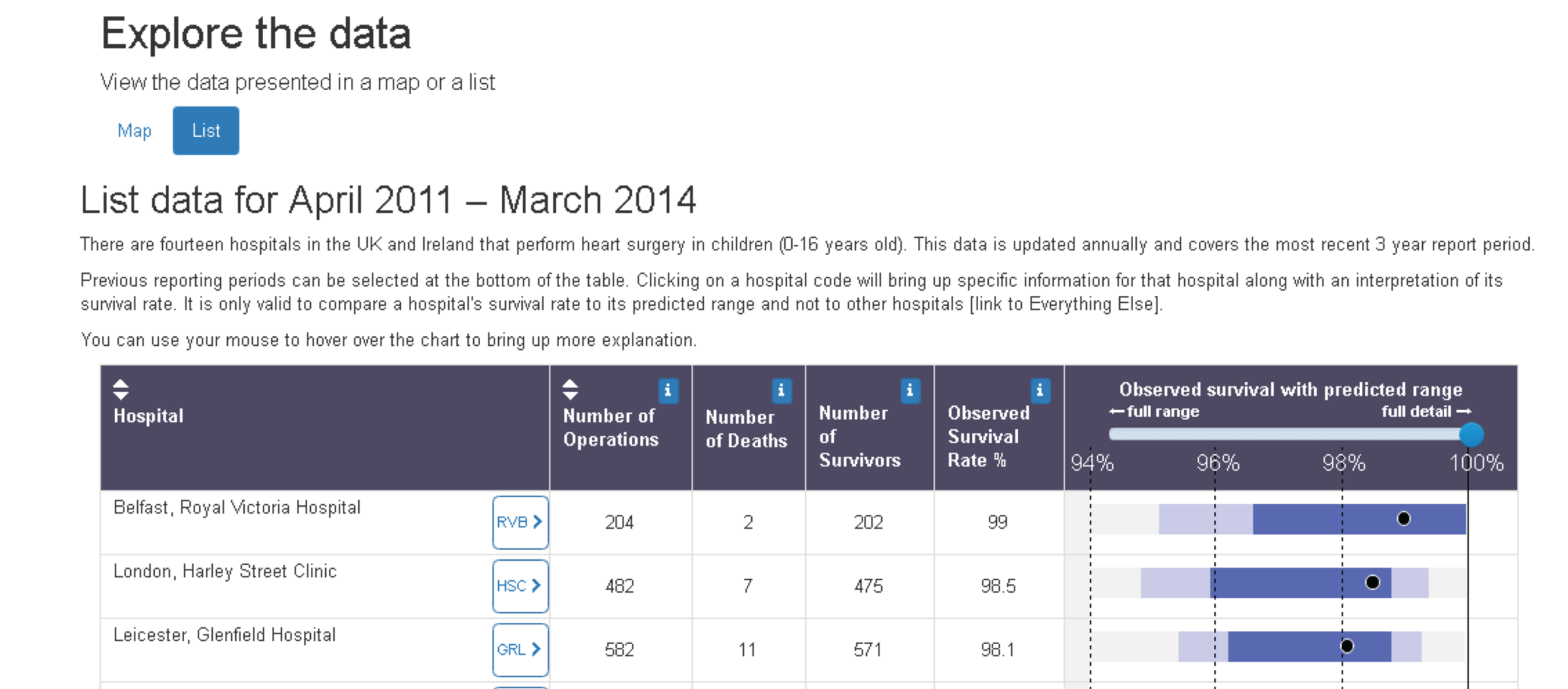

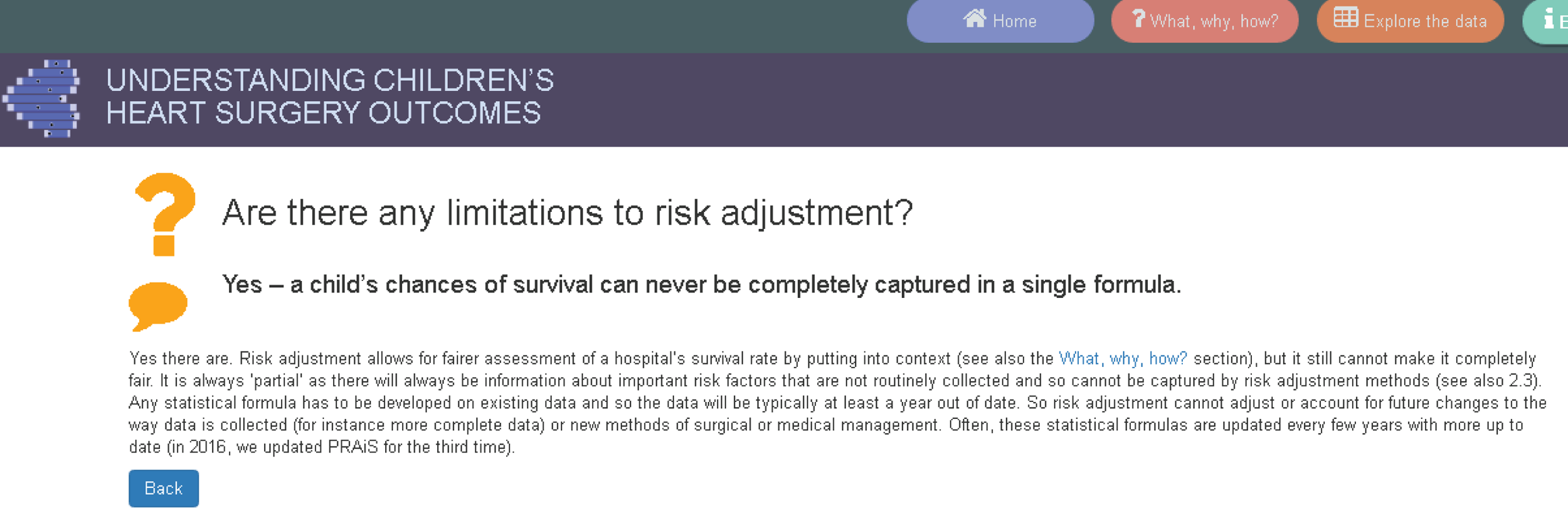

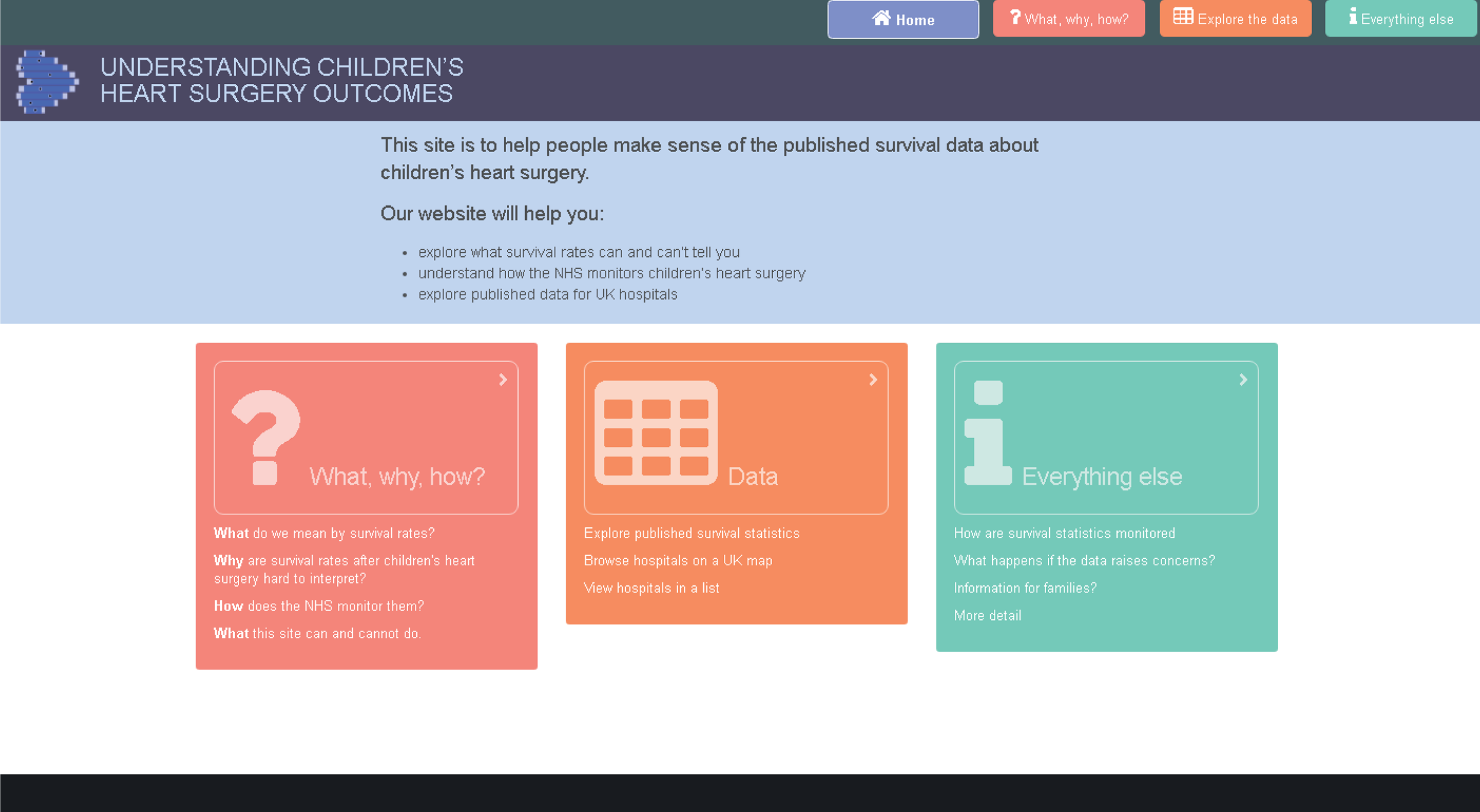

Aim 2: developing a public website to communicate how PRAiS is used to monitor children’s heart surgery

Aim 2 was to develop, test and disseminate online resources for families affected by CHD in children, the public and the media to facilitate the appropriate interpretation of published mortality data following paediatric cardiac surgery.

The objectives to achieve aim 2 were to:

- confirm our understanding of the current and planned presentations of mortality outcome data by the NICOR congenital audit (2.1)
- coproduce a web tool with patient groups and interested users that includes an explanatory website, an interactive animation and a short video to facilitate the interpretation of mortality outcomes (2.2)
- undertake a formative mixed-methods evaluation of the web tool to strengthen the final outputs (2.3)
- disseminate the developed material via the CHF and Sense about Science and to evaluate the usability and efficacy of the final web tool as an aid to the public understanding of outcome data; in addition, to share the material with other charities such as the British Heart Foundation and also with the NICOR Congenital Audit (2.4).

Structure of the report

The report is separated into two main sections: the development of an updated PRAiS risk model, discussed in Chapter 2, and the development of the website, discussed in Chapter 3.

In Chapter 2, we discuss the national data set used, the role of the expert panel, the development of the PRAiS 2 risk model, its final performance and its incorporation into updated PRAiS Microsoft Excel® software (2013, Microsoft Corporation, Redmond, WA, USA).

In Chapter 3, we discuss the interdisciplinary team assembled for the project, our overall strategy for building the web resource, the evolution of the resource and its launch and reception. We end by discussing the key learning from this innovative methodology and how the multidisciplinary aspects contributed to the whole.

Chapter 2 Aim 1: updating the PRAiS risk model for 30-day mortality following paediatric cardiac surgery

This part of the project aimed to improve the PRAiS risk model for 30-day mortality following paediatric cardiac surgery by incorporating more detailed information about comorbid conditions.

The starting point: the PRAiS 1 risk model

The original PRAiS 1 risk model included the following risk factors:

- age
- weight
- 30 specific procedure groupings
- procedure type (bypass/non-bypass)
- three diagnosis groups (high, medium and low risk)
- univentricular heart (UVH) status
- presence of a non-Down syndrome comorbidity
- three categorical age groups (neonate, infant and child)
- procedure performed pre 2007.

As discussed in Chapter 1 (see Aims and objectives, Aim 1: updating the PRAiS risk model for 30-day mortality following paediatric cardiac surgery), the main aim of the update to the PRAiS risk model (hereafter called PRAiS 2) was to explore incorporating more comorbidity information into the risk model than the binary absence/presence of a non-Down syndrome comorbidity. Given that the raw mortality rate is low (< 3%), there is a practical upper limit to how many free parameters can be reasonably included in the model. We also intended to explore the inclusion of more diagnostic information than the three broad groupings in PRAiS 1. Thus, it was likely that a desire to include more detailed information about comorbidity and diagnosis would necessitate a trade-off in grouping together some other categorical risk factors, most probably the current 30 specific procedure groupings used within PRAiS.

With respect to the other PRAiS 1 risk factors, we initially kept treatment of age, weight, procedure type and UVH status unchanged.

We used the same process as for PRAiS 1 for excluding non-cardiac procedures, reoperations within 30 days (see Defining episodes of surgical management for analysis) and catheter procedures. 10,33

Defining episodes of surgical management for analysis

Using the same approach as for PRAiS 1, a ‘30-day episode of care’ was the unit of analysis. A 30-day episode starts with the first surgical procedure on a patient. 10 This episode is then assigned an outcome of alive or dead, according to the vital status of the patient 30 days after this first surgical procedure. Any further surgical procedures that the same patient underwent within 30 days of this first procedure were not included in model development. The next procedure recorded for the same patient > 30 days after the first surgical procedure was treated as the start of a new 30-day episode.
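The allocation rule described above can be sketched as follows. This is an illustrative reading of the text rather than the project's code: procedures are represented as hypothetical day offsets for a single patient, a procedure exactly 30 days after the episode start is treated as falling inside the episode, and a death is attributed to an episode if it occurs no more than 30 days after that episode's first procedure. Both boundary choices are assumptions.

```python
def allocate_episodes(procedure_days, death_day=None, window=30):
    """Group one patient's surgical procedures into 30-day episodes.

    procedure_days: day offsets of each surgical procedure for one patient
                    (hypothetical representation).
    death_day:      day offset of death, or None if the patient is alive.
    Returns a list of dicts with the episode start day, the later procedures
    that fall inside the window (excluded from analysis) and the outcome.
    """
    episodes = []
    for day in sorted(procedure_days):
        if episodes and day - episodes[-1]["start"] <= window:
            # Within 30 days of the episode's first procedure: not a new episode.
            episodes[-1]["excluded"].append(day)
        else:
            # More than 30 days after the current episode's start: new episode.
            episodes.append({"start": day, "excluded": [], "outcome": "alive"})
    for episode in episodes:
        # Outcome is the vital status 30 days after the first procedure.
        if death_day is not None and death_day - episode["start"] <= window:
            episode["outcome"] = "dead"
    return episodes


if __name__ == "__main__":
    for episode in allocate_episodes([0, 10, 31, 45, 70], death_day=80):
        print(episode)
```

In this example the procedures on days 10 and 45 are absorbed into the episodes starting on days 0 and 31, and only the episode starting on day 70 is assigned an outcome of dead.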

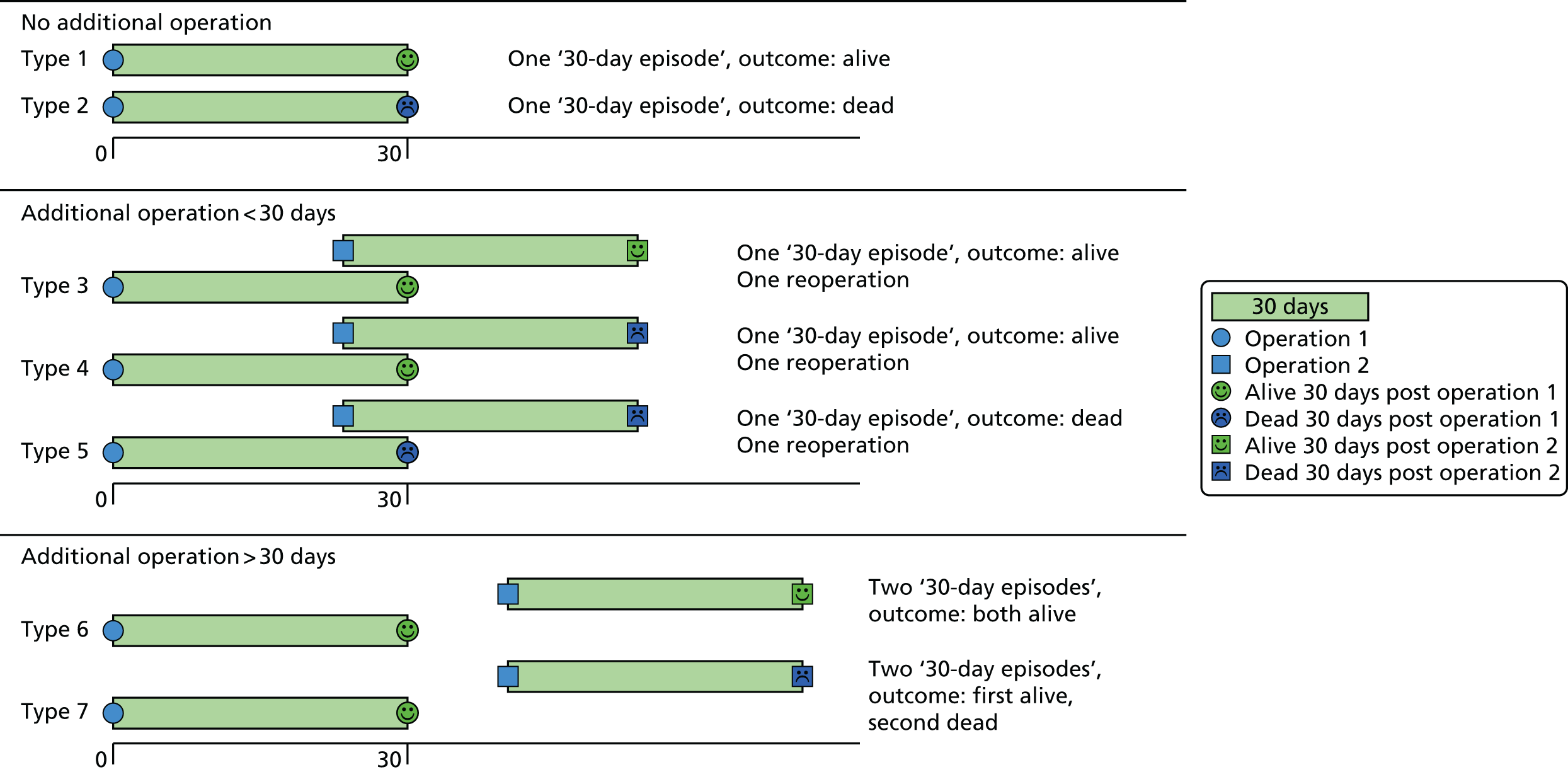

Examples of how the 30-day episode was allocated are shown in Figure 1.

FIGURE 1. Illustration of how the ‘30-day episode’ was allocated: example patient histories.

Role of the expert panel

Comorbidities have a complex impact on risk of death, depending on the number of comorbidities present, the particular combinations of comorbidity, age and other covariates. It is not feasible to include all potential groupings of comorbidity information within the risk model and, as discussed above, it is unlikely that further comorbidity information can be included without some trade-offs in the detail included for procedure. The options for dealing with comorbidity and any resultant trade-offs with specific procedure should not be decided only by the analysts (CP, LR, MU and SC) but also need input from the clinical community.

The case mix of units is different not only in terms of primary cardiac diagnosis but also by pattern of comorbid conditions. It is also possible that an intensive care consultant will see the risk of comorbidity differently from a surgeon, who may see it differently from a cardiologist. In addition, each procedure can have several comorbidities entered (typically up to eight) and there may be variations in coding practice between centres. Prematurity and/or extremely low-weight babies are important comorbidities and there may be scope for inferring their presence from age and weight information in the absence of relevant comorbidity codes. Thus, it is crucial to have input from a range of centres, a range of clinical expertise, and experienced data managers who have an excellent understanding of how comorbidities are actually coded within the data. To this end, we assembled an expert advisory panel of nine people from five centres, comprising three surgeons (VT, DA and DB), two cardiologists (KE and RF), two intensivists (KB and ST) and two data management experts (TW and JS).

The role of the expert panel was to advise on the update to the model from analytical, clinical and data management perspectives. The expert panel was consulted on:

- how more comorbidity information could be included
- potential new groupings of specific procedures and diagnoses
- new ways of incorporating age and weight information
- how to incorporate a new, rare and high-risk procedure
- options for taking into account national changes in outcome over time
- the exclusion/inclusion of specialist centres.

The expert panel met in July 2015 and February 2016, and regular updates of model development were provided by the analytical team and clinical co-applicants KB and RF to the rest of the expert panel between meetings. Key examples of the material shared with the expert panel are in Report Supplementary Material 1.

The new data set

We used the NCHDA data set covering procedures from April 2009 to March 2014 (‘the main data set’), received in April 2015 after our successful data application on confirmation of the NIHR grant award. All data were stored on the UCL Data Safe Haven, which has been certified to the ISO27001 information security standard and conforms to the NHS Information Governance Toolkit. The data set included all cardiac procedures carried out in children in the UK and Ireland. The NCHDA uses specialised detailed codes for categorising the procedural, diagnostic and comorbid information for each child using the European Paediatric Cardiac Coding (EPCC) Short List. Each operation can have up to eight procedural codes recorded (out of a list of 679 EPCC procedure codes), six diagnostic codes (out of a list of 1292 EPCC diagnostic and diagnostic procedure codes) and up to eight comorbidity codes (out of a list of 145 comorbidity codes).

The data fields used for data cleaning and model development were:

- pseudonymised hospital patient identification
- pseudonymised patient NHS number
- pseudonymised unit (hospital) identification
- age at operation (to three decimal places)
- sex
- six diagnostic code fields
- weight
- a single comorbidity field that can contain many individual EPCC codes
- year and month of procedure
- procedure type
- sternotomy sequence (used for data cleaning only)
- eight procedure code fields
- discharge life status
- (most recent) life status
- age at discharge (to three decimal places)
- age at life status (to three decimal places).

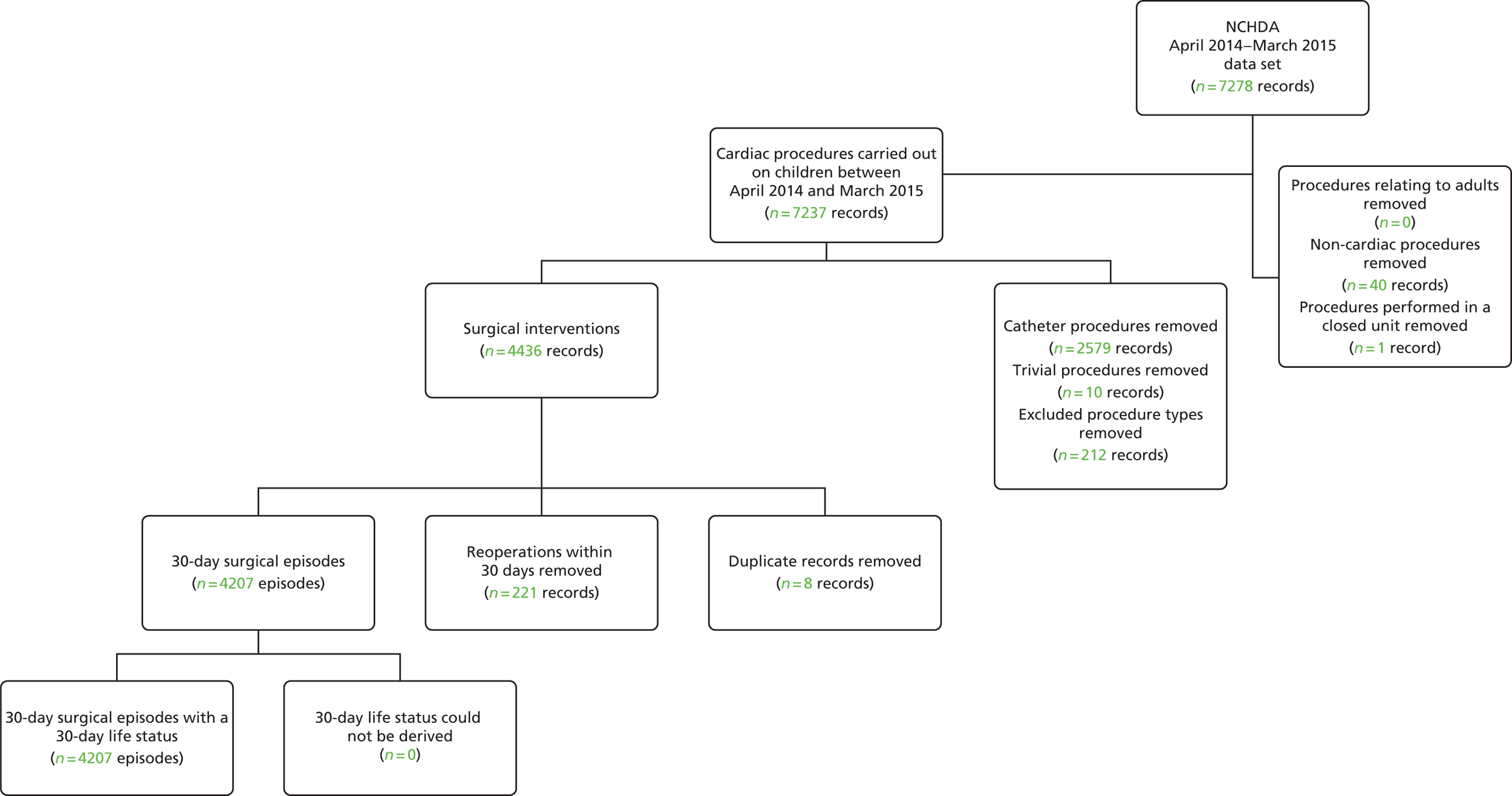

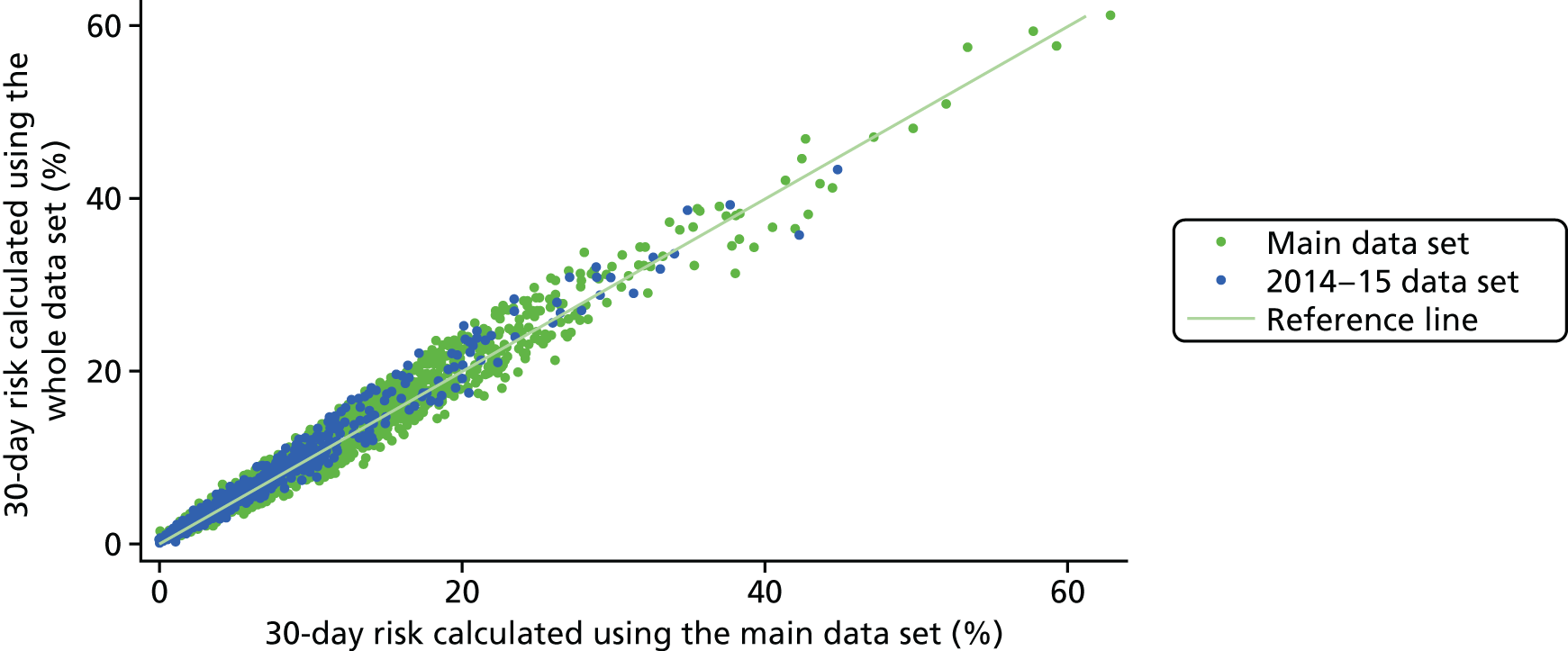

In February 2016, towards the end of the modelling process, we were offered another year of NCHDA data, from April 2014 to March 2015 (‘the 2014–15 data set’). Given that the model development was well under way, it was decided that this would be used as an external temporal validation set for the model developed on the main data set, with the final model used for roll-out to the hospitals as part of the PRAiS software being recalibrated using all data from April 2009 to March 2015 (‘the full data set’).

Data preparation and descriptive analysis in the main data set

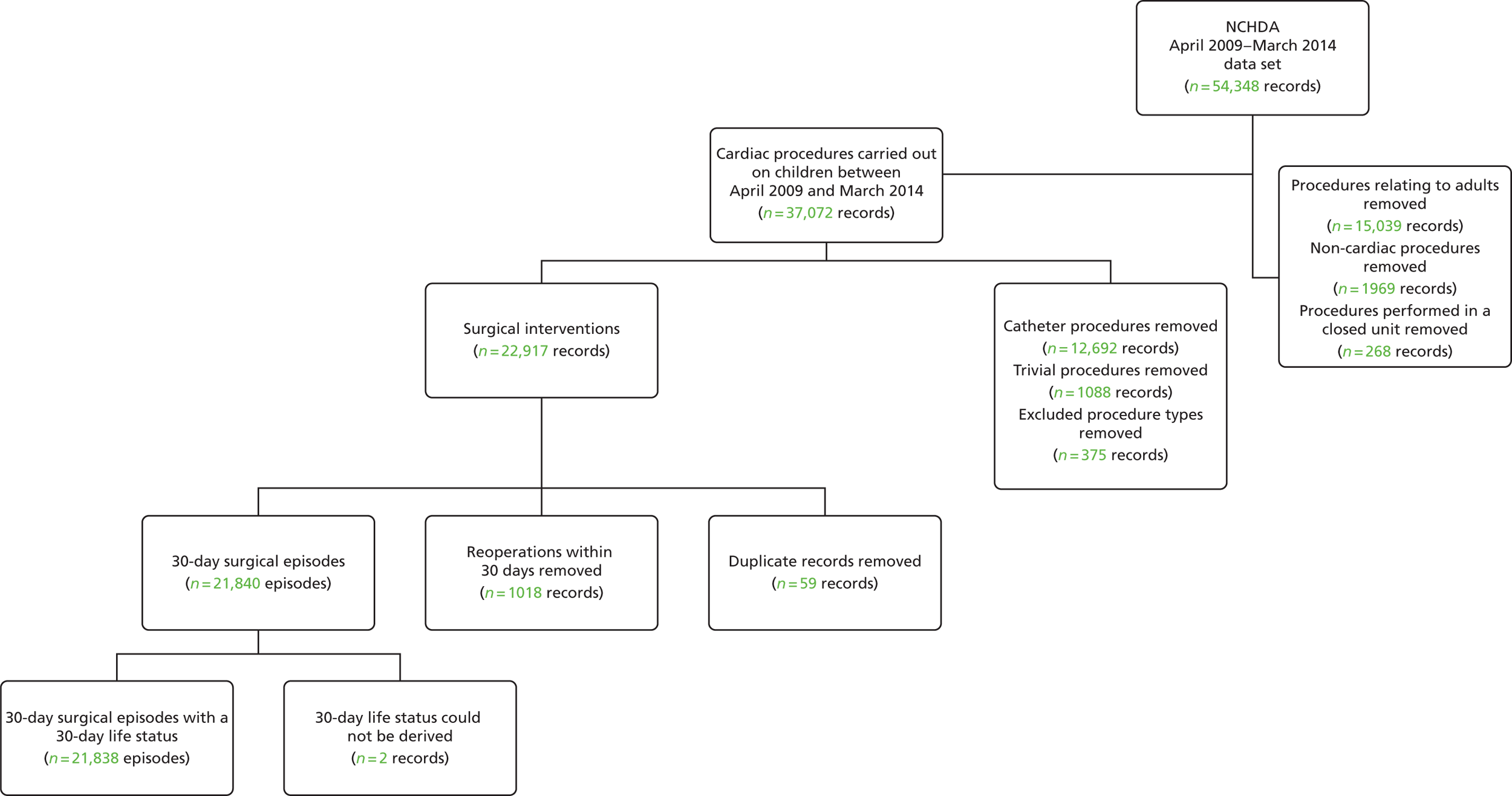

A summary of the records included and excluded from the main data set is included in Figure 2.

FIGURE 2. Inclusions and exclusions from the main data set.

Initial removal of records outside the scope of the project

From the main data set, we initially removed all records in which the patient was > 16 years old at the time of the procedure; any procedure performed before April 2009; records relating to catheter and ‘other’ type (i.e. non-surgical) procedures; and non-cardiac or trivial/minor procedures.

The PRAiS 1 risk model had also excluded ‘hybrid’-type procedures, as these were not considered surgical cardiac operations. Since then, a new hybrid procedure has evolved to treat the most serious congenital heart condition, hypoplastic left heart syndrome (HLHS). On the advice of the expert panel and the NCHDA at the first expert panel meeting, HLHS hybrid procedures were also included in our model development. All other hybrid-type procedures were excluded.

Oxford’s John Radcliffe Hospital stopped performing paediatric cardiac surgery in 2010. Following discussion with the expert panel, it was decided that procedures performed at this unit should be excluded, as they would not be indicative of future outcomes.

To construct 30-day episodes, it was essential to identify records within the data set relating to the same individual patient. To do this, we initially linked all procedure records that had the same pseudonymised NHS and/or the same pseudonymised hospital number.
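One way to express this "same NHS number and/or same hospital number" linkage is a union-find over records, sketched below. It assumes linkage is transitive (records A and B sharing an NHS number and B and C sharing a hospital number all belong to one patient) and that a hospital number is unique across units. Neither assumption is stated in the report, and all names are hypothetical.

```python
def link_patient_records(records):
    """Assign a patient-group label to each procedure record.

    records: list of (nhs_id, hospital_id) tuples; either value may be None
             when the identifier is missing.
    Returns a list of integer labels; records sharing a label are treated
    as belonging to the same patient.
    """
    parent = list(range(len(records)))

    def find(i):
        # Follow parent pointers to the group root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    first_seen = {}
    for idx, (nhs_id, hospital_id) in enumerate(records):
        for key in (("nhs", nhs_id), ("hospital", hospital_id)):
            if key[1] is None:
                continue  # a missing identifier cannot link records
            if key in first_seen:
                # Merge this record's group with the group that first used the identifier.
                parent[find(idx)] = find(first_seen[key])
            else:
                first_seen[key] = idx
    return [find(i) for i in range(len(records))]


if __name__ == "__main__":
    example = [("A", "h1"), ("A", "h2"), (None, "h2"), ("B", "h3")]
    print(link_patient_records(example))
```

The first three example records end up in one group because the second shares an NHS number with the first and a hospital number with the third. Groups formed this way would still need the consistency checks described in the text.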

We then checked for inconsistencies between records now identified as being for the same patient. We first identified all records for an apparently single patient for whom sequential ages at operation were inconsistent with procedure dates. The manual inspection of procedural, diagnostic and other demographic factors for these patient records determined whether the patients were, in fact, different, or whether a mistake had been made in the recording of date of birth or procedure dates. However, neither episode allocation nor 30-day life status was affected in those cases in which it was determined that records apparently relating to the same patient were, in fact, for different patients. For patients for whom there appeared to be an error in the date of birth or date of procedure, we used indicators such as weight and sternotomy sequence instead of the procedure date to infer the correct order and the timing of procedures for determining episode allocation.

This reduced data set contained 22,917 uncleaned procedure records, corresponding to 18,836 unique patients.

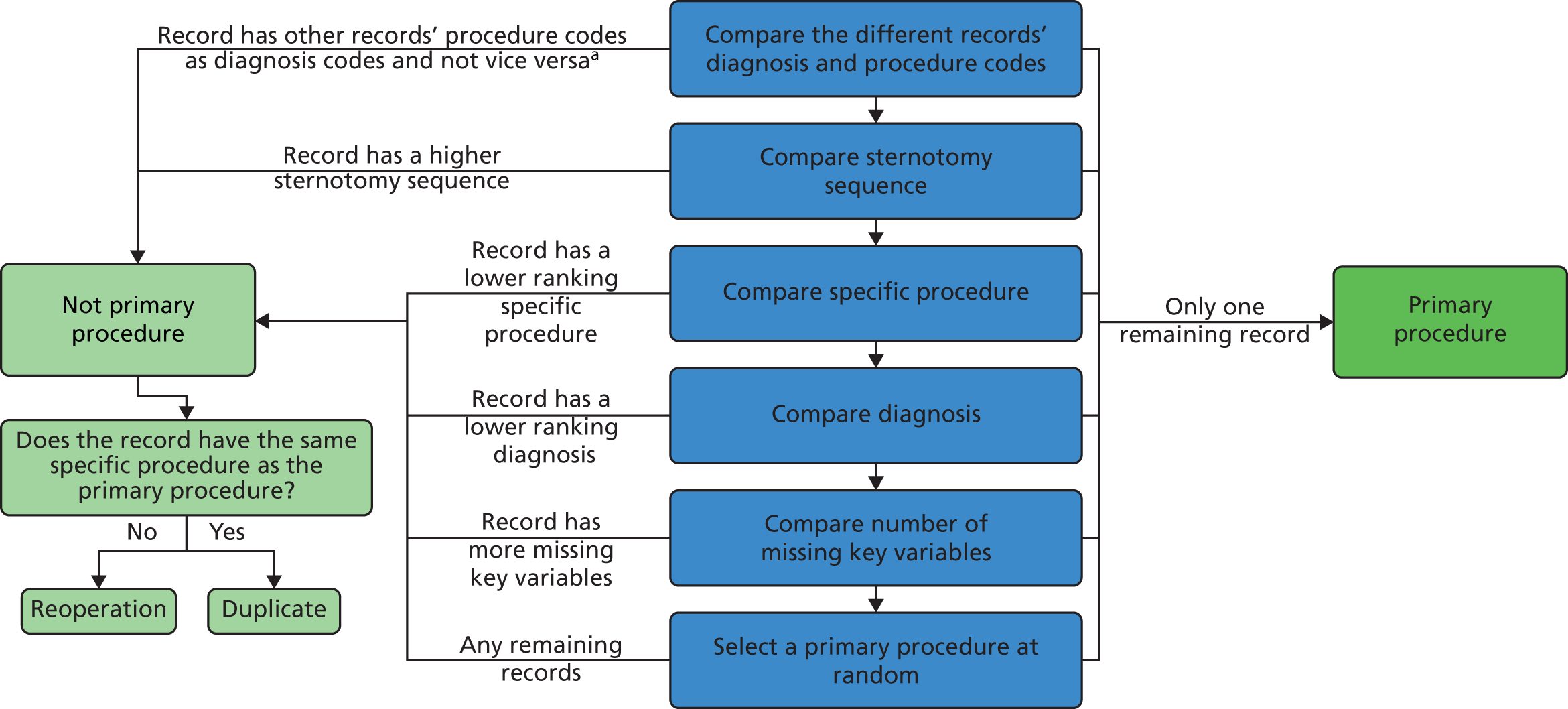

Removal of duplicate records

Some records appeared to be duplicates based on systematic comparison of pseudonymised patient NHS number or hospital number, year and month of operation, age-specific procedure, procedure type and procedure and diagnosis codes included. There is a plausible mechanism for record duplication: if an institution tries to update an individual record within NCHDA by changing either the patient’s hospital identification number or the procedure date (both of which are key fields), a second, duplicate, record will be created instead of the original record being overwritten. We used a formal protocol (see Appendix 1) to determine possible duplicates, and to determine which record of each pair to retain, based on the completeness and plausibility of the data contained in the records. Our identification of duplicates was checked with the clinical co-applicants and shared with the NCHDA. A total of 59 duplicate records in the main data set were removed from the analysis.

We then proceeded to allocate 30-day surgical episodes of care as described in Figure 1, resulting in 21,840 episodes.

Allocation of 30-day life status

The NCHDA receives independent life tracking from the ONS. However, a patient is not recorded as dead in ONS until any ongoing inquest is complete, which can lead to delays in updating the life status of a minority of children who have died. The ONS life status in the data set was the most recent life status at data extraction (2015), and if a hospital discharge life status was ‘dead’ and the ONS life status was ‘alive’, we used the hospital discharge status, consistent with current practice at NCHDA. We note that the only records likely to be affected by the ONS inquest issue would be children operated on in 2014–15 with a discharge status of alive who then died following discharge, which is unlikely to affect more than a very small number of episodes, especially as we received the 2014–15 data in March 2016, allowing almost an extra year for any inquest to be completed.

Note that ONS tracking is not available for children from overseas or for those who do not have an NHS number for any other reason. The NCHDA includes these children in its public reports and so these children must also be included in the development of the PRAiS risk model, using the same method for allocating life status.

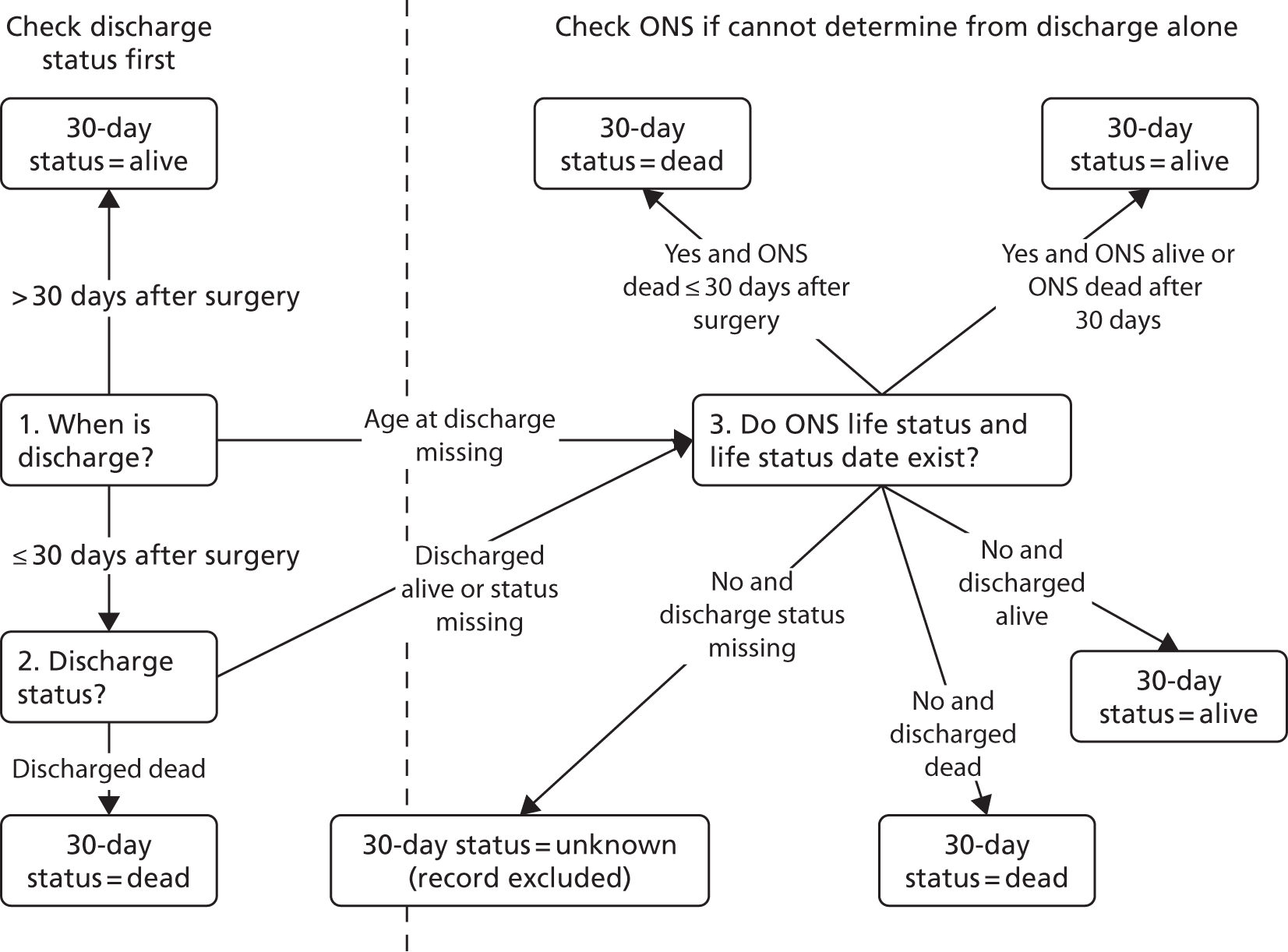

The overall algorithm we used for assigning the episode life status is shown in Figure 3 and is consistent with the method used in the NCHDA.

FIGURE 3.

Allocation of 30-day status.

In addition, subsequent records for the same patient were used to check the 30-day life status. For example, if a patient had a further operation at least 30 days after their previous procedure, they were considered alive at 30 days for the initial procedure, even if this was not supported by discharge or life status.

When the life status was not known at 30 days post procedure, the discharge status prior to 30 days was used as a proxy for 30-day life status, which is consistent with NCHDA practice and the PRAiS 1 process. This affected 3165 episodes (14% of records) in the main data set (99% of which correspond to patients from outside England and Wales for whom no ONS tracking is available and who were discharged alive before 30 days).

We were able to allocate the 30-day life status for all but two surgical episodes. These two records were excluded from the analysis (see Figure 2), leaving us with a final data set comprising 21,838 30-day surgical episodes.
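The rules described in the text above can be sketched as a single function. This is a hedged reading of the prose only, not a transcription of Figure 3: the function name, argument names, the order in which the rules are applied and the treatment of day 30 itself as ‘within 30 days’ are all assumptions.

```python
from datetime import date, timedelta


def status_at_30_days(procedure_date, ons_status=None, ons_death_date=None,
                      discharge_status=None, discharge_date=None,
                      next_procedure_date=None):
    """Return 'alive', 'dead' or 'unknown' at 30 days after the procedure."""
    cutoff = procedure_date + timedelta(days=30)

    # A further operation at least 30 days later implies survival to 30 days.
    if next_procedure_date is not None and next_procedure_date >= cutoff:
        return "alive"

    # A discharge status of 'dead' takes precedence over an ONS 'alive'.
    if discharge_status == "dead":
        if discharge_date is None:
            return "unknown"
        return "dead" if discharge_date <= cutoff else "alive"

    # Otherwise rely on ONS tracking where it is available.
    if ons_status == "dead" and ons_death_date is not None:
        return "dead" if ons_death_date <= cutoff else "alive"
    if ons_status == "alive":
        return "alive"

    # No tracking: discharge alive before 30 days is used as a proxy.
    if (discharge_status == "alive" and discharge_date is not None
            and discharge_date <= cutoff):
        return "alive"
    return "unknown"


op = date(2012, 1, 1)
overridden = status_at_30_days(op, ons_status="alive",
                               discharge_status="dead",
                               discharge_date=date(2012, 1, 10))
proxy = status_at_30_days(op, discharge_status="alive",
                          discharge_date=date(2012, 1, 8))
reoperated = status_at_30_days(op, next_procedure_date=date(2012, 3, 1))
untraceable = status_at_30_days(op)
```

Episodes for which the function returns 'unknown' correspond to those that could not be allocated a status and were excluded.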

Data cleaning of key variables

The NCHDA data set is widely recognised as being of extremely high quality (arguably the best of its type in the world) and the data quality has increased steadily over time; however, given the sheer size of the data set and the complexity of paediatric cardiac diagnoses and surgery, it is inevitable that there were some errors and anomalies in the data. The analytical team spent considerable amounts of time understanding the data set and, when necessary, cleaning it.

Age and weight fields

Some of the ages, weights and combinations of age/weight recorded for an episode were biologically implausible. When a weight was recorded as identically zero, it was treated as missing data. There were five patients in the main data set whose weight was determined as having been recorded in grams instead of kilograms (all children in the first month of life whose weights were recorded as > 600 kg). These weights were converted manually into kilograms.

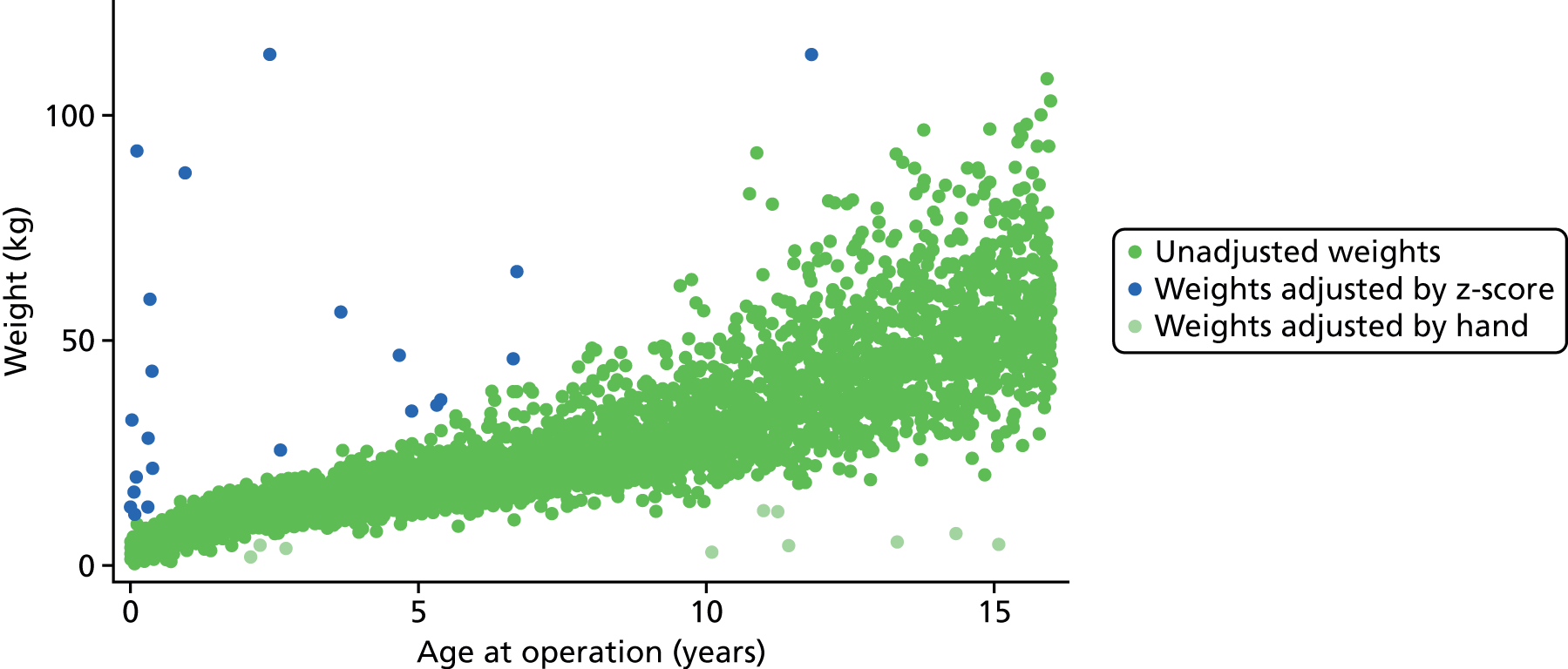

The data set was then subdivided into the same 23 age bands that were used for the development of PRAiS 1 (narrower at younger ages), and the means and standard deviations (SDs) of the recorded weights within each band were calculated. We then calculated the weight-for-age z-scores for each episode within the data set. Episodes in the main data set, with missing weights (n = 3) or a z-score of either < –5 or > 5 (considered infeasible) (n = 23, shown in blue in Figure 4), were assigned the mean weight corresponding to their age band. The z-score thresholds were chosen after consultation with the expert panel and an independent statistician, and were chosen to ensure that patient weights outside these boundaries were definitely biologically implausible.
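A minimal sketch of the z-score screening follows, assuming each episode is a dictionary with hypothetical keys `age_band` and `weight` (in kilograms, or `None` when missing) and that every age band contains at least two recorded weights. It illustrates the rule described above rather than reproducing the analysis code.

```python
from statistics import mean, stdev


def clean_weights(episodes, z_limit=5.0):
    """Replace missing or implausible weights with the age-band mean weight."""
    recorded = {}
    for ep in episodes:
        if ep["weight"]:  # None and 0 are both treated as missing
            recorded.setdefault(ep["age_band"], []).append(ep["weight"])

    # Mean and SD of the recorded weights within each age band.
    band_stats = {band: (mean(ws), stdev(ws)) for band, ws in recorded.items()}

    cleaned = []
    for ep in episodes:
        band_mean, band_sd = band_stats[ep["age_band"]]
        weight = ep["weight"]
        if not weight:
            cleaned.append(band_mean)
            continue
        z_score = (weight - band_mean) / band_sd
        cleaned.append(band_mean if abs(z_score) > z_limit else weight)
    return cleaned


# One age band: 50 plausible weights, one implausible weight, one missing.
raw = [3.0, 4.0] * 25 + [40.0, None]
episodes = [{"age_band": 0, "weight": w} for w in raw]
cleaned = clean_weights(episodes)
```

Note that a single extreme value inflates the band SD, so a z-score beyond ±5 is attainable only in bands with a reasonable number of records; this is one reason a clinician's review of remaining low weights is a useful complement.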

FIGURE 4.

Adjustments made to weight.

Finally, a further 10 episodes had their weight manually assigned to the mean weight for age following inspection by a clinician (KB) on the grounds of biological implausibility (all considered too low to be feasible). These episodes are shown in light green in Figure 4.

Figure 4 shows the distribution of age and weight in the main data set prior to adjustment with the identified anomalous weights highlighted.

Diagnosis fields

Our use of diagnostic information in the model development relied on us being able to identify each recorded diagnosis for an episode by its EPCC short codes. Some of the information recorded in the six possible diagnosis fields for each episode in the data set was not automatically identifiable as an official EPCC code. For fields for which this information was ambiguous, we replaced that diagnosis with ‘empty/unknown’. In other instances we were able to allocate an official EPCC code to a diagnosis unambiguously, for example when there was an anomalous numerical code format that was nonetheless identifiable (e.g. with additional spaces or dots). When multiple codes were recorded in a single diagnosis field, we split these and moved them to separate diagnosis fields in sequential order for that record. Any repeated codes were ignored. We investigated the data using additional text searches for episodes that were being allocated an ‘empty/unknown’ diagnosis, but they did not provide any further information.
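The cleaning steps can be sketched as below. The separators treated as dividing multiple codes (comma, semicolon, slash) and the function name are assumptions for illustration; `known_codes` stands in for the official EPCC code list, and an empty result corresponds to an ‘empty/unknown’ diagnosis.

```python
import re


def clean_code_fields(fields, known_codes):
    """Return the recognised codes from all fields, in order, without repeats."""
    cleaned = []
    for field in fields:
        # Split a field holding several codes (assumed separators: , ; /).
        for piece in re.split(r"[,;/]", field or ""):
            # Remove stray spaces and dots inside an otherwise valid code.
            candidate = re.sub(r"[\s.]", "", piece)
            if candidate in known_codes and candidate not in cleaned:
                cleaned.append(candidate)
            # Anything not recognised is treated as ambiguous and dropped.
    return cleaned


known = {"010119", "159060", "010309"}
fields = ["010119", " 01.01.19 ", "159060; 010309", "VSD?", None]
codes = clean_code_fields(fields, known)
```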

Procedure fields

In general, the quality of the procedural information in the data set was very high, although there were some examples of information recorded in the procedure fields that was not automatically identifiable as an official EPCC code. When the code had an anomalous format (e.g. additional spaces or dots), we replaced the information with the official EPCC code. When multiple codes were recorded in a single procedure field, we split these into separate procedure fields in sequential order for that record. In cases when this information was ambiguous, the field was ignored. Any repeated codes were ignored. After the procedural information was cleaned, those records containing only non-cardiac procedures (using the same criteria as those for PRAiS 1;10 see also Report Supplementary Material 2) were removed from the data set (n = 1991; see Initial removal of records outside the scope of the project).

Comorbidity fields

Comorbidity codes provided information on other health problems that a child had in addition to his or her primary cardiac diagnosis. We extracted all of the comorbidity codes stored in the single NCHDA data field for each episode. These were then cleaned in the same way as the diagnosis fields.

We note that, to capture all of the diagnosis and other illness information possible, we searched all diagnosis and comorbidity fields for both diagnosis and other illness EPCC codes.

Consideration of risk factors

Specific procedure information

The specific procedure algorithm was first developed by the NCHDA steering committee over 10 years ago to combine the individual EPCC codes submitted to NCHDA as part of a patient’s procedure record to define a standard procedure category (‘a specific procedure’). The algorithm defines the series of EPCC codes that may be included to identify an individual operation, and in a proportion of operations in which the definition may be more complicated it also defines the list of EPCC codes that must be excluded. The algorithm contains a hierarchy that ranks the recognisable procedures in order, with the most complex at the top (grouped first) and the least complex at the bottom (grouped last). The algorithm has been refined and improved by the NCHDA steering committee year on year to match evolving practice, such that the definitions of each operation remain tight and consistent. There are currently 49 surgical specific procedure categories.

We used the most recent specific procedure algorithm available to determine specific procedures for all surgical episodes in the main data set. By the end of the model development, this was the Specific Procedure Algorithm v5.05 from May 2016 (https://nicor4.nicor.org.uk/chd/an_paeds.nsf/vwcontent/technical%20information?opendocument). In recent years a new procedure to treat HLHS, called the HLHS hybrid approach procedure, has become increasingly common and is expected to become more widespread in the future. Although this procedure is not currently included in the specific procedure algorithm, the expert panel felt that, owing to the expected increase in its use and the high risk of the procedure, it should be included in PRAiS 2. This inclusion of HLHS hybrids was then discussed and affirmed by the NCHDA steering committee. The expert panel developed a coding addition to the specific procedure algorithm to include this procedure, taking into account how the procedure had been coded in the past and how it should be coded prospectively.

One of the main aims of the update for the PRAiS model was to balance an increase in diagnosis and comorbidity information included in the model with a reduction in the number of degrees of freedom used by procedural information. The increase in the number of specific procedures included in the specific procedure algorithm, from 41 to 49, since PRAiS 1 was developed made this problem more acute. This balance was discussed at the expert panel meeting in July 2015. The analysts (CP and LR) demonstrated how risk model performance could be maintained with some illustrative broader specific groupings offset by increased comorbidity and diagnostic information. The expert panel agreed that the analytical team would investigate different methods of grouping specific procedures into broader procedural risk groups and share the most statistically promising groupings at the next expert panel meeting.

The analytical team initially grouped specific procedures by the ages at which they occurred and the risk associated with them using classification and regression tree (CART) analysis. 34 These groups were then adjusted iteratively by the analysts (LR and CP) after consultation with the clinical expert panel and testing of the stability and performance of the resultant risk models under cross-validation, to ensure that the groups were clinically valid and that they demonstrated consistently good performance. The expert panel provided advice on procedures for which the observed mortality was not considered representative of the risk or about instances when procedures that were qualitatively different should not be combined in a single risk group.

Procedure type

In PRAiS 1, only bypass and non-bypass surgical procedures were included in the model. The relatively new HLHS hybrid approach procedure involves both surgical and catheter techniques, so it did not strictly fit into a bypass/non-bypass procedure type category. 35 How to allocate a procedure type for HLHS hybrid procedures was discussed with the expert panel.

Diagnostic information

The diagnosis allocated to episodes from the individual recorded EPCC codes was a slightly modified version of the scheme used by coauthors KB and SC in their work on longer-term infant heart surgery outcomes, which itself was adapted from the scheme originally developed by coauthors for the PRAiS 1 risk model. 36,37 The diagnosis allocation algorithm results in 29 hierarchical diagnosis groups, and full details are provided in Report Supplementary Material 2. In short, for each episode, each recorded diagnosis code is mapped uniquely to one of the 29 primary diagnosis groups, and then the highest ranked (most serious) primary diagnosis is allocated as the overall primary diagnosis group for that episode. In PRAiS 1, these primary diagnosis groups were then further allocated to one of three broad diagnosis groups used in the final model. 10
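The hierarchical allocation can be sketched as follows. The code labels, the two-group mapping and the rank values are placeholders invented for illustration; the real mapping of EPCC codes to the 29 groups is given in Report Supplementary Material 2.

```python
def primary_diagnosis_group(codes, code_to_group, group_rank):
    """Map each code to its group and return the highest-ranked group.

    A lower rank number means a more serious diagnosis group.
    """
    groups = [code_to_group[c] for c in codes if c in code_to_group]
    if not groups:
        return "empty/unknown"
    return min(groups, key=lambda group: group_rank[group])


# Hypothetical two-group example (placeholder codes, not real EPCC codes).
code_to_group = {"code_a": "HLHS", "code_b": "VSD"}
group_rank = {"HLHS": 1, "VSD": 2}
allocated = primary_diagnosis_group(["code_b", "code_a"], code_to_group, group_rank)
```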

The reduction in the number of degrees of freedom used by procedure information meant that we could explore increasing the number of diagnosis risk groups used for PRAiS 2. New diagnosis risk groups were developed in a similar way to, and alongside, the broader procedure risk groups, using CART analysis to initially group diagnoses by the age at which patients with that diagnosis had procedures, and the risk associated with that diagnosis. These groups were then adjusted iteratively by the analysts after consultation with the expert panel and testing of the stability and performance under cross-validation, to ensure that the groups were clinically valid and were not unduly affected by the apparent risk shown in the data set if the expert panel did not feel that this reflected the true risk of the diagnosis. We also took into consideration when there was significant correlation between procedures and diagnosis (e.g. some procedures are only performed for a single diagnosis) and strived to develop parsimonious groupings.

Definite indication of univentricular heart allocation

An episode was determined to relate to a patient with a UVH structure if they had a diagnosis code implying a UVH or if they had a procedure that would be performed only on a patient with a UVH. A patient was thus assigned to the UVH category if they met at least one of the following criteria.

- Had one of the following overall diagnosis groups:
  - HLHS
  - functionally UVH
  - pulmonary atresia and intact ventricular septum (IVS).
- Had one of the following EPCC diagnosis codes:
  - 010119 – double outlet right ventricle: with non-committed ventricular septal defect (VSD)
  - 159060 – ‘failed’ Fontan-type circulation
  - 010309 – atrioventricular and/or ventriculoarterial connections abnormal.
- Had one of the following specific procedures:
  - Norwood procedure
  - HLHS hybrid approach procedure
  - Fontan procedure
  - bidirectional cavopulmonary shunt.
- Had one of the following EPCC procedure codes:
  - 121000 – Norwood-type procedure
  - 123001 – Fontan-type procedure
  - 123005 – total cavopulmonary connection (TCPC) using extracardiac inferior caval vein/pulmonary artery conduit with fenestration
  - 123006 – TCPC with fenestrated lateral atrial tunnel
  - 123013 – Fontan procedure with atrioventricular connection
  - 123027 – fenestration of Fontan-type connection
  - 123028 – Fontan-type connection without fenestration
  - 123031 – takedown of Fontan-type procedure
  - 123032 – Fontan procedure with direct atriopulmonary anastomosis
  - 123034 – conversion of Fontan repair to TCPC
  - 123037 – Fontan-type procedure revision or conversion
  - 123050 – TCPC
  - 123051 – TCPC with lateral atrial tunnel
  - 123054 – TCPC using extracardiac inferior caval vein/pulmonary artery conduit
  - 123056 – takedown of TCPC
  - 123111 – bidirectional superior cavopulmonary (Glenn) anastomosis
  - 123115 – hemi-Fontan procedure
  - 123144 – bilateral bidirectional superior cavopulmonary (Glenn) anastomoses
  - 123172 – superior caval vein to pulmonary artery anastomosis.
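The ‘at least one criterion’ rule can be expressed as a set-membership check. The codes and names below are taken from the lists above; the function name, argument layout and the exact strings used for group and procedure names are assumptions.

```python
UVH_DIAGNOSIS_GROUPS = {
    "HLHS", "functionally UVH",
    "pulmonary atresia and intact ventricular septum",
}
UVH_DIAGNOSIS_CODES = {"010119", "159060", "010309"}
UVH_SPECIFIC_PROCEDURES = {
    "Norwood procedure", "HLHS hybrid approach procedure",
    "Fontan procedure", "bidirectional cavopulmonary shunt",
}
UVH_PROCEDURE_CODES = {
    "121000", "123001", "123005", "123006", "123013", "123027", "123028",
    "123031", "123032", "123034", "123037", "123050", "123051", "123054",
    "123056", "123111", "123115", "123144", "123172",
}


def is_uvh(diagnosis_group, diagnosis_codes, specific_procedure, procedure_codes):
    """True if the episode meets at least one of the four UVH criteria."""
    return (
        diagnosis_group in UVH_DIAGNOSIS_GROUPS
        or bool(UVH_DIAGNOSIS_CODES & set(diagnosis_codes))
        or specific_procedure in UVH_SPECIFIC_PROCEDURES
        or bool(UVH_PROCEDURE_CODES & set(procedure_codes))
    )


# Flagged by a procedure code alone, despite an uninformative diagnosis.
by_procedure = is_uvh("empty/unknown", [], None, ["123050"])
not_flagged = is_uvh("VSD", [], None, [])
```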

Comorbidity information

During the development of PRAiS 1, coapplicant KB devised a scheme whereby EPCC comorbidity codes were placed into one of the following groups:

- acquired comorbidity
- congenital non-Down syndrome comorbidity
- prematurity
- Down syndrome.

The binary group of ‘any indication of a non-Down syndrome comorbidity’ was included in the PRAiS 1 model. The original four groupings developed by KB formed the basis of the expert panel’s discussion on how to add more detailed comorbidity information to the PRAiS risk model. Other particular additional risk factors considered by the panel were the most common EPCC codes in the data set and those highlighted by Jacobs et al. 16,38 in the US context, not all of which had been included in the above categories.

Other categories of additional risk factor that were considered were acquired heart conditions not directly related to a patient’s primary congenital cardiac diagnosis and indicators that a patient was severely ill (e.g. shock).

Possible ways to include more comorbidity information that were discussed included:

- a simple count of the number of non-duplicate EPCC comorbidity codes recorded
- definite indication of different comorbidity/additional risk factor groups
- a count of the number of instances in each of these groups
- common combinations of types of additional risk factor.

We also explicitly considered the potential for ‘gaming’ by including risk factors. A candidate risk factor could be open to gaming if additional risk could be counted in the calculation based on factors included in the data that did not necessarily reflect an actual increase in risk. This would lead to an artificially high predicted risk, leading to a more favourable conclusion of predicted versus actual 30-day mortality and potentially allowing a poorly performing hospital to avoid investigation as a result of national audit reporting. A possible way that a candidate risk factor could be ‘gamed’ would be if several different diagnostic or comorbidity codes relating to the same condition were counted as making individual contributions to the risk for that episode, or if risk factors that are present in many patients with CHD without necessarily leading to an increased risk of 30-day mortality, such as cyanosis, were included in the model.

Age and weight

The association of both age and weight with mortality is non-linear. We investigated several methods of accounting for this non-linearity. In PRAiS 1, a continuous linear age and a categorical age band variable (neonate, infant and child) were included as risk factors in the model alongside continuous weight. 10 However, this inevitably resulted in relatively large, unrealistic changes in predicted risk at age band boundaries.

For PRAiS 2, with encouragement from the expert panel, we explored using cubic splines and fractional polynomials to model the non-linear association of age and weight with mortality. Cubic splines are piecewise third-order polynomials that pass through a set of ‘knots’. 39 Fractional polynomials are continuous functions that can contain repeated, integer, non-integer and logarithmic terms. 40
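As an illustration of the fractional polynomial family, the sketch below builds the basis terms for a positive covariate using the usual convention that a power of 0 denotes the natural logarithm and that each repeat of a power multiplies the term by a further ln x. The function name is hypothetical, and the powers shown are examples only, not the terms chosen for PRAiS 2.

```python
import math


def fp_terms(x, powers):
    """Fractional polynomial basis terms for a positive covariate x.

    Power 0 means ln(x); a repeated power p contributes x**p * ln(x).
    """
    if x <= 0:
        raise ValueError("fractional polynomials require x > 0")
    terms = []
    previous = None
    repeat = 0
    for p in sorted(powers):  # sorting places repeated powers side by side
        base = math.log(x) if p == 0 else x ** p
        repeat = repeat + 1 if p == previous else 0
        terms.append(base * math.log(x) ** repeat)
        previous = p
    return terms


sqrt_and_square = fp_terms(4.0, [2, 0.5])   # sqrt(x) and x**2
repeated_log = fp_terms(math.e, [0, 0])     # ln(x) and ln(x)**2
```

Each term would enter the logistic regression with its own coefficient, giving a smooth risk curve without the jumps that categorical age bands produce at their boundaries.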

During discussion about comorbidities and additional risk factors, a low weight (< 2.5 kg) risk factor was also discussed as a possible proxy for prematurity.

Descriptive analysis of the data

Field completeness

Since PRAiS 1 was developed, the quality of the data in the NCHDA data set has improved greatly. Missing data were not a significant problem in model development. Data completeness is summarised in Table 1.

| Variable | Number (%) of missing records | Treatment |
|---|---|---|
| Age | 42 (0.11) | Removed with records relating to adults (see Figure 3) |
| Procedure codes | 45 (0.11) | Removed with non-cardiac procedures (see Figure 3) |
| Procedure type | 29 (0.07) | Removed with excluded procedure types (see Figure 3) |
| Diagnosis codes | 42 (0.11) | Included with ‘empty/unknown’ diagnosis |
| Weight | 79 (0.20) | Included and adjusted as detailed in Data cleaning of key variables, Age and weight fields |

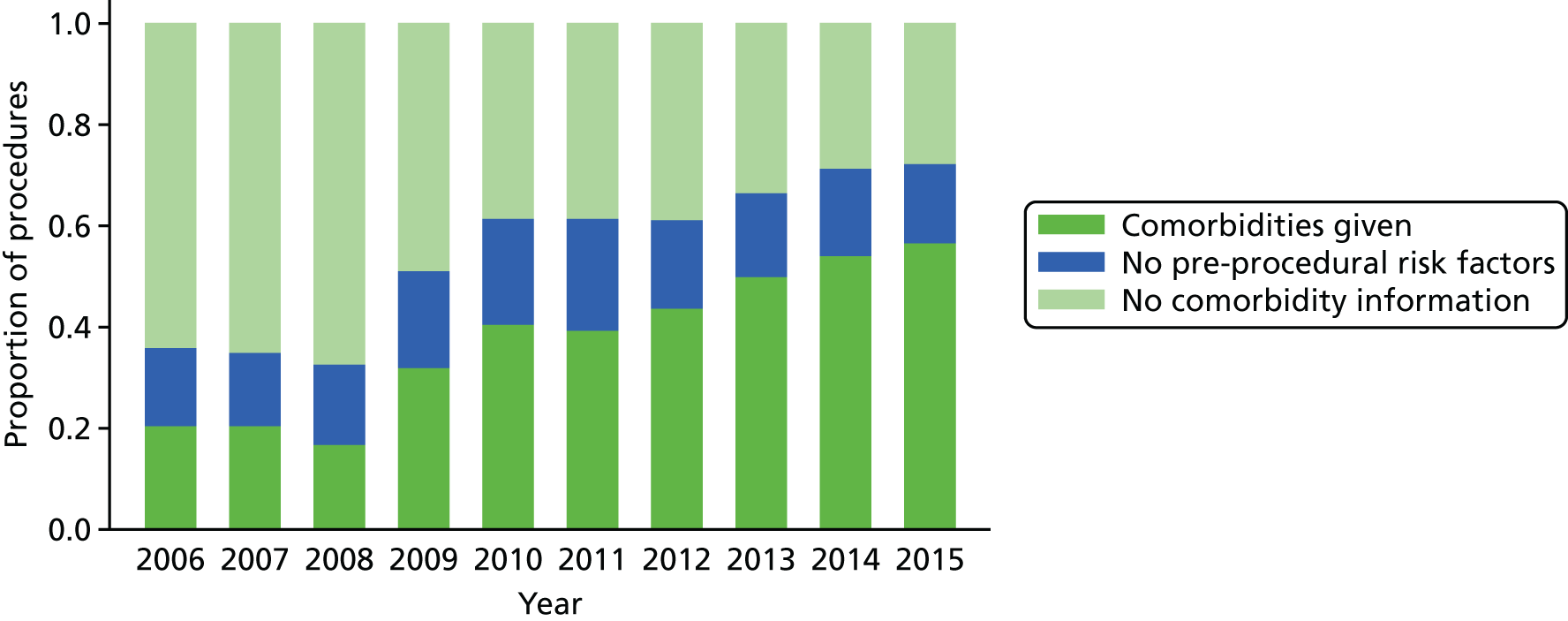

The increase in the amount of comorbidity information included in the NCHDA data is shown in Figure 5, while the proportion of procedures in the data set that have the explicit ‘no pre-procedural risk factors’ comorbidity code has stayed reasonably stable over the years.

FIGURE 5.

The completeness of the comorbidity field over time for surgical procedures.

Specific procedures

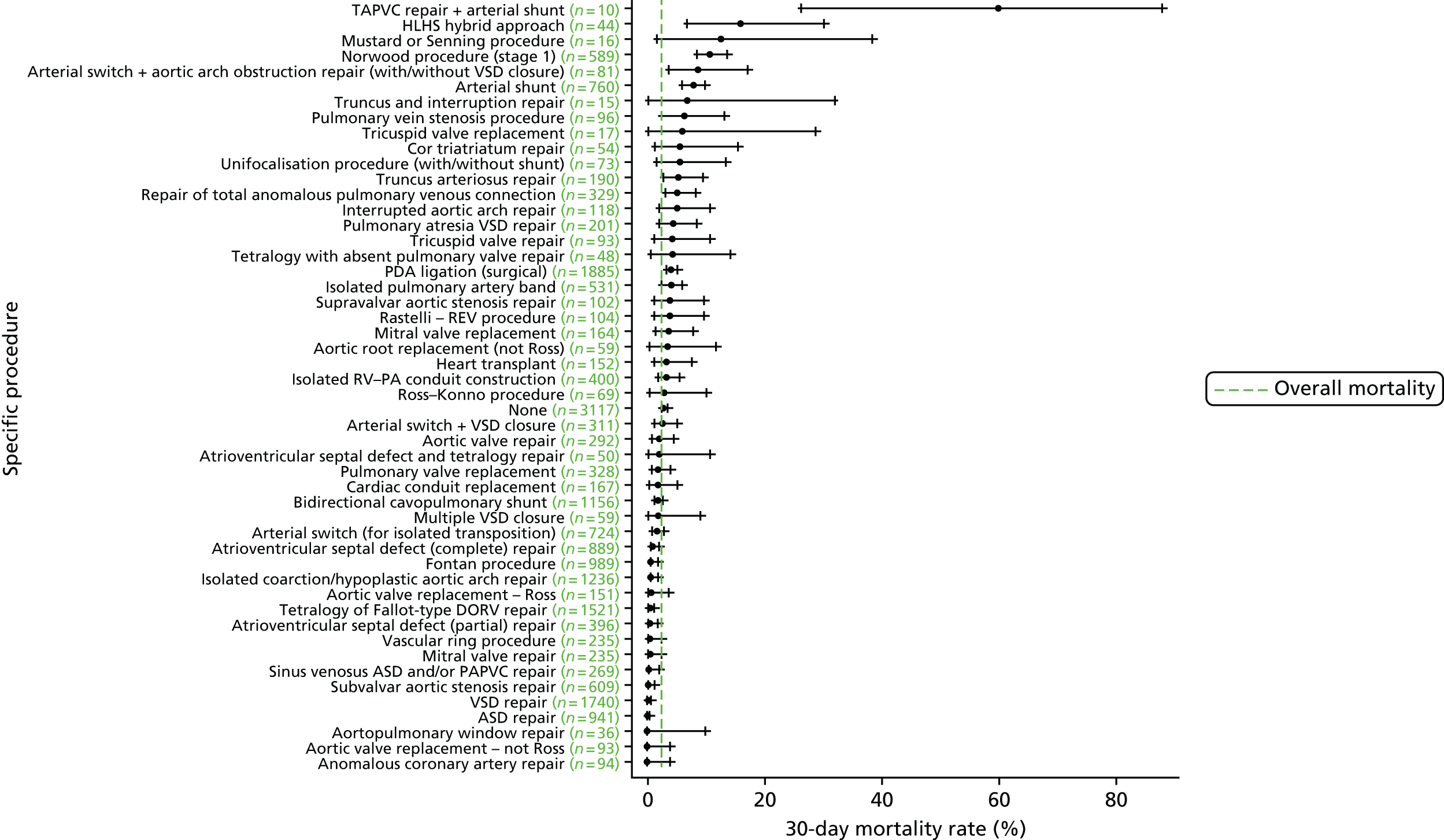

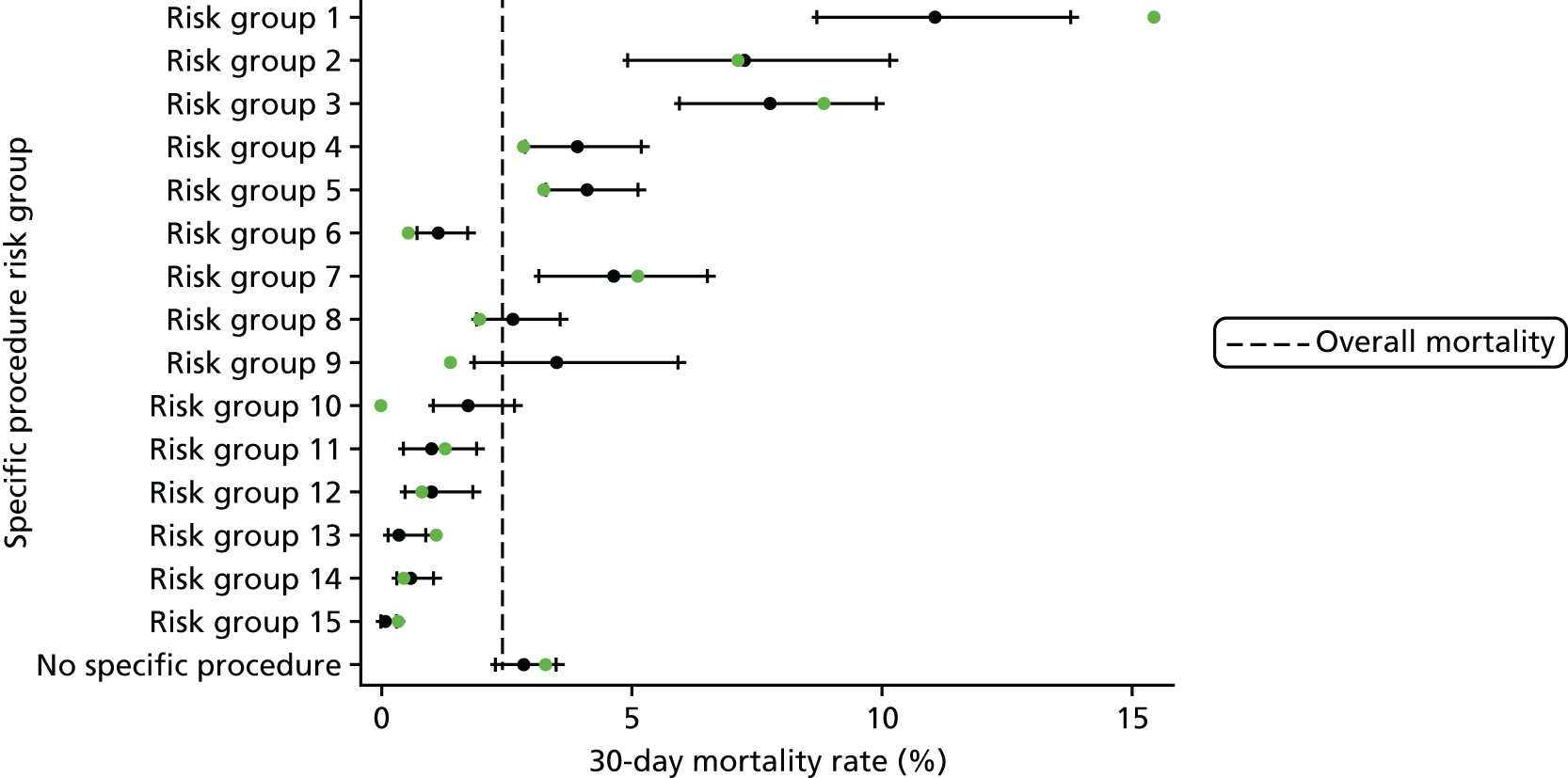

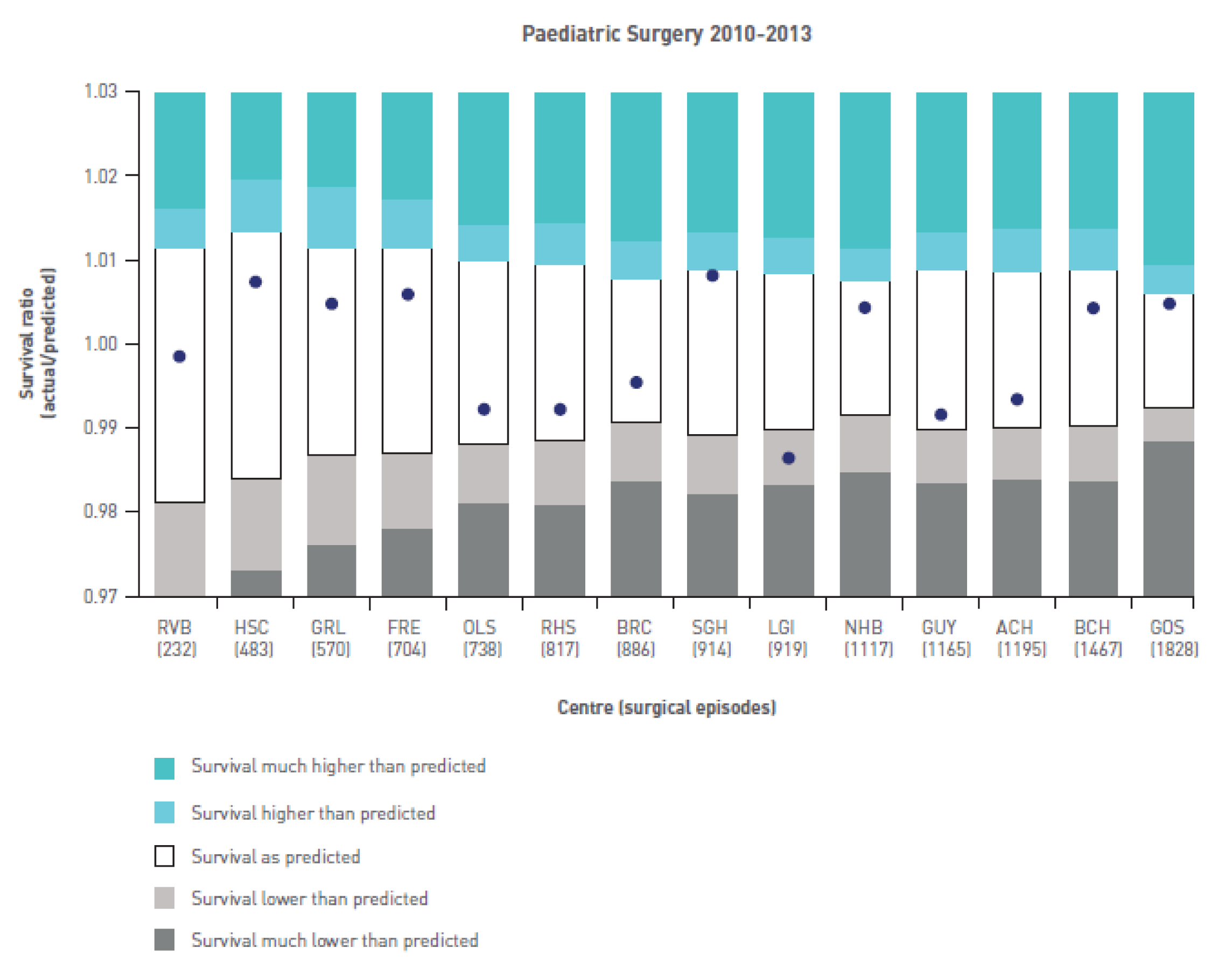

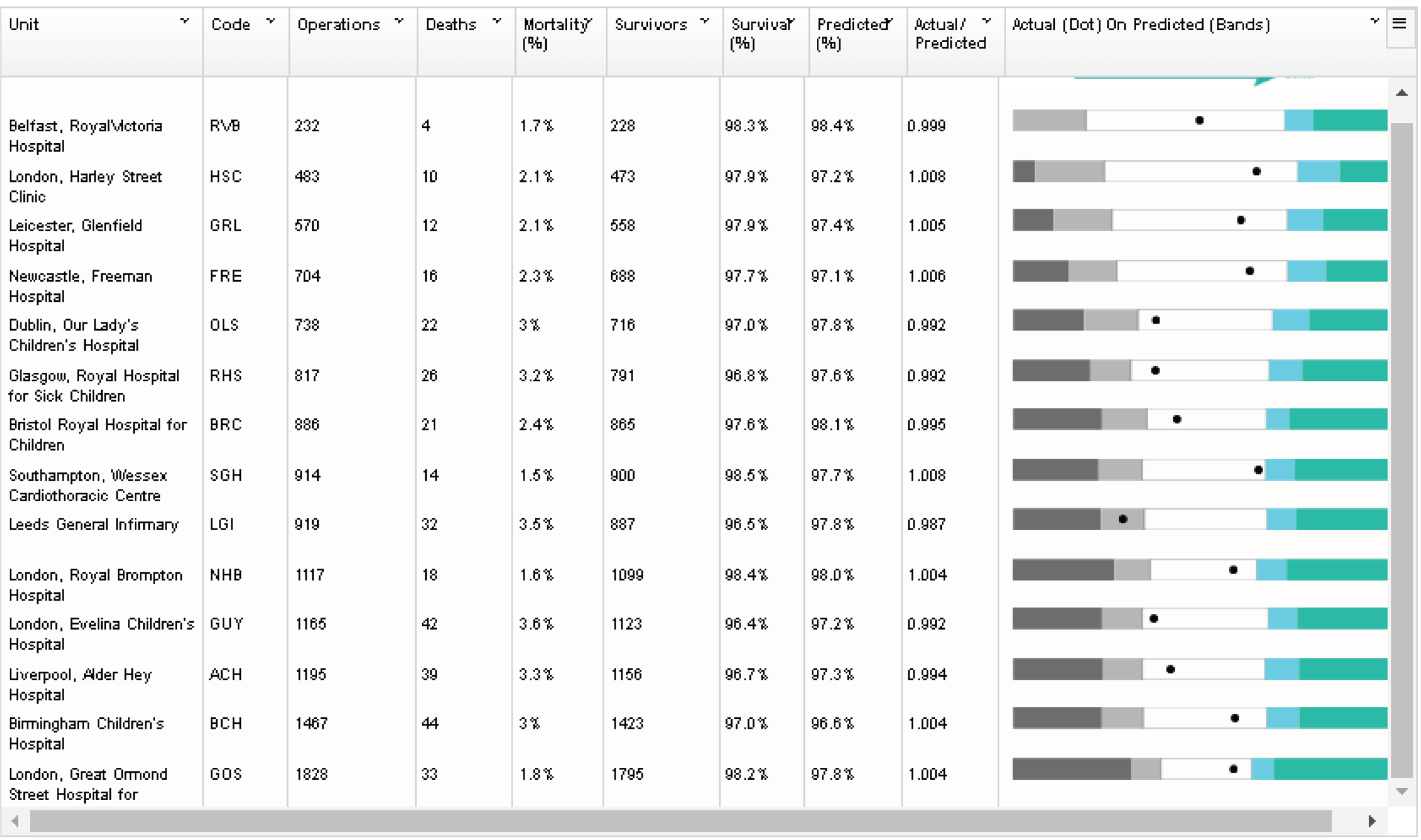

We show the mortality rate by specific procedure category in Figure 6.

FIGURE 6.

Mortality rates (95% CIs) based on 30-day status for specific procedures within the main data set (n = 21,838). The dotted green line indicates the overall average mortality rate (2.5%). ASD, atrial septal defect; CI, confidence interval; DORV, double outlet right ventricle; PAPVC, partial anomalous pulmonary venous connection; REV, Réparation à l’Etage Ventriculaire; RV–PA, right ventricle to pulmonary artery; TAPVC, total anomalous pulmonary venous connection.

Diagnosis

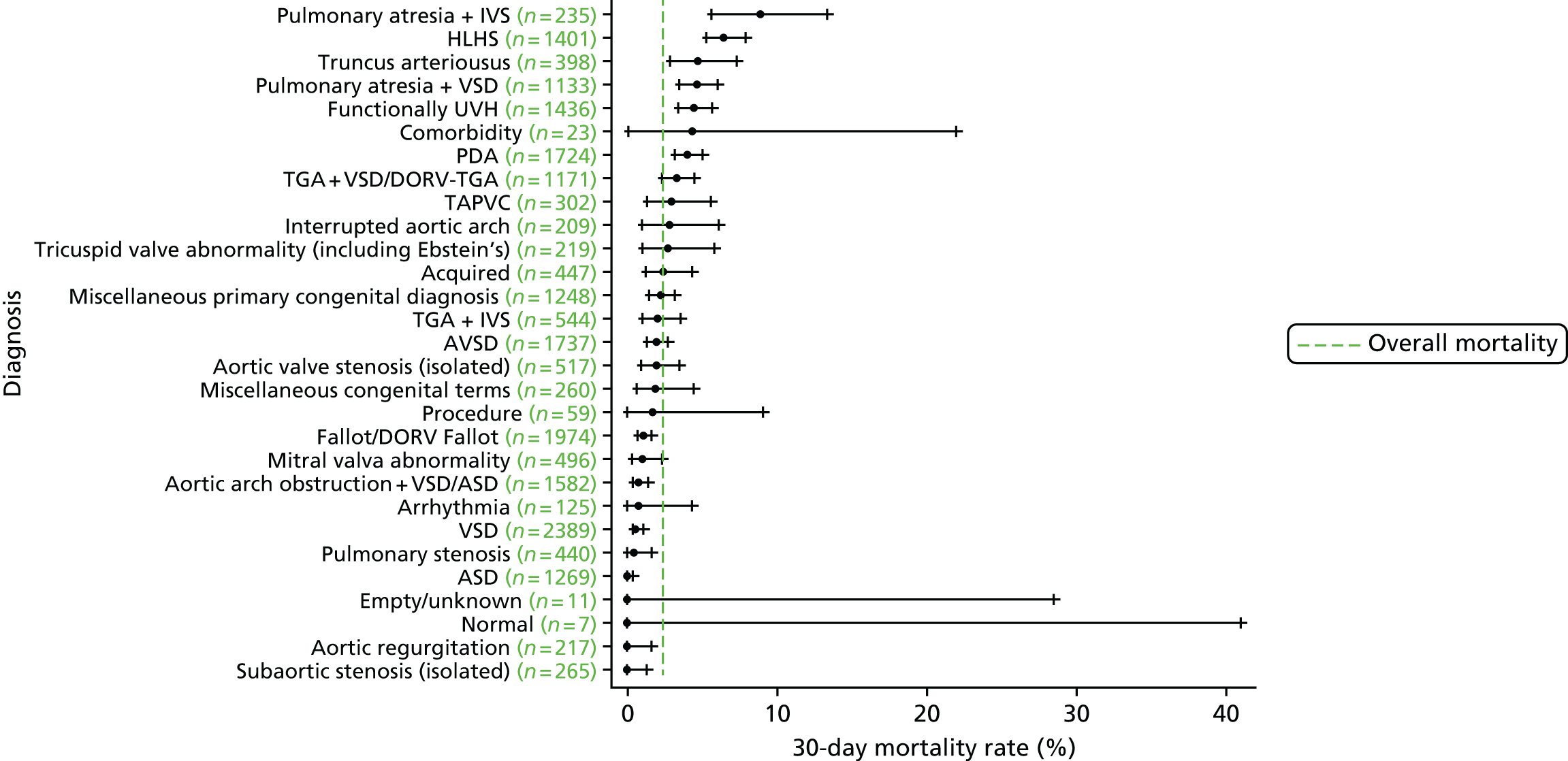

We show the mortality rate by diagnosis group in Figure 7.

FIGURE 7.

Mortality rates (95% CIs) based on 30-day status for diagnosis within the main data set (n = 21,838). The dotted green line indicates the overall average mortality rate (2.5%). ASD, atrial septal defect; AVSD, atrioventricular septal defect; CI, confidence interval; DORV, double outlet right ventricle; TAPVC, total anomalous pulmonary venous connection; TGA, transposition of the great arteries.

Age and weight

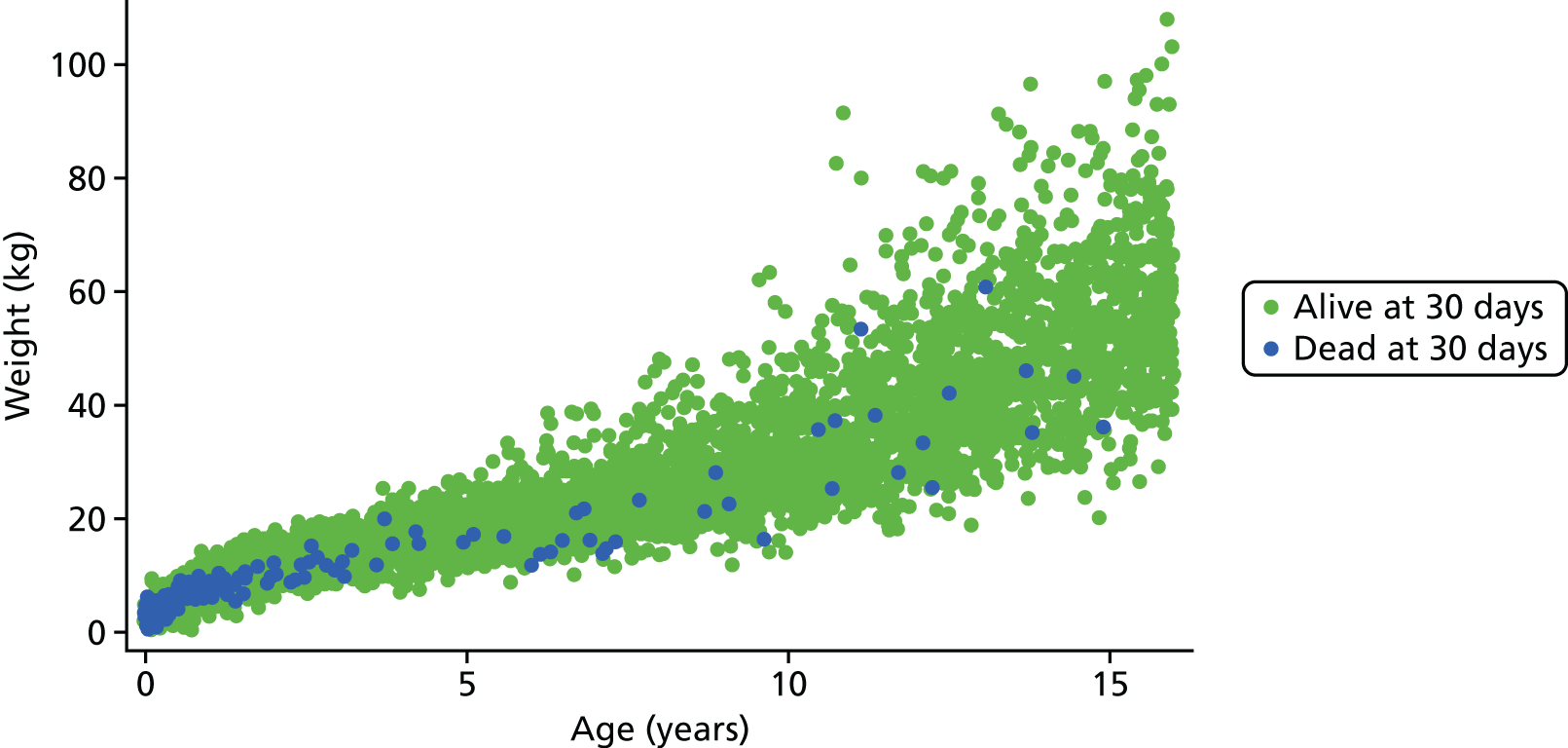

Figure 8 shows the higher incidence of death within 30-day surgical episodes for younger patients and for patients with lower weight.

FIGURE 8.

Weight vs. age scatterplot for episodes in the main data set (n = 21,838).

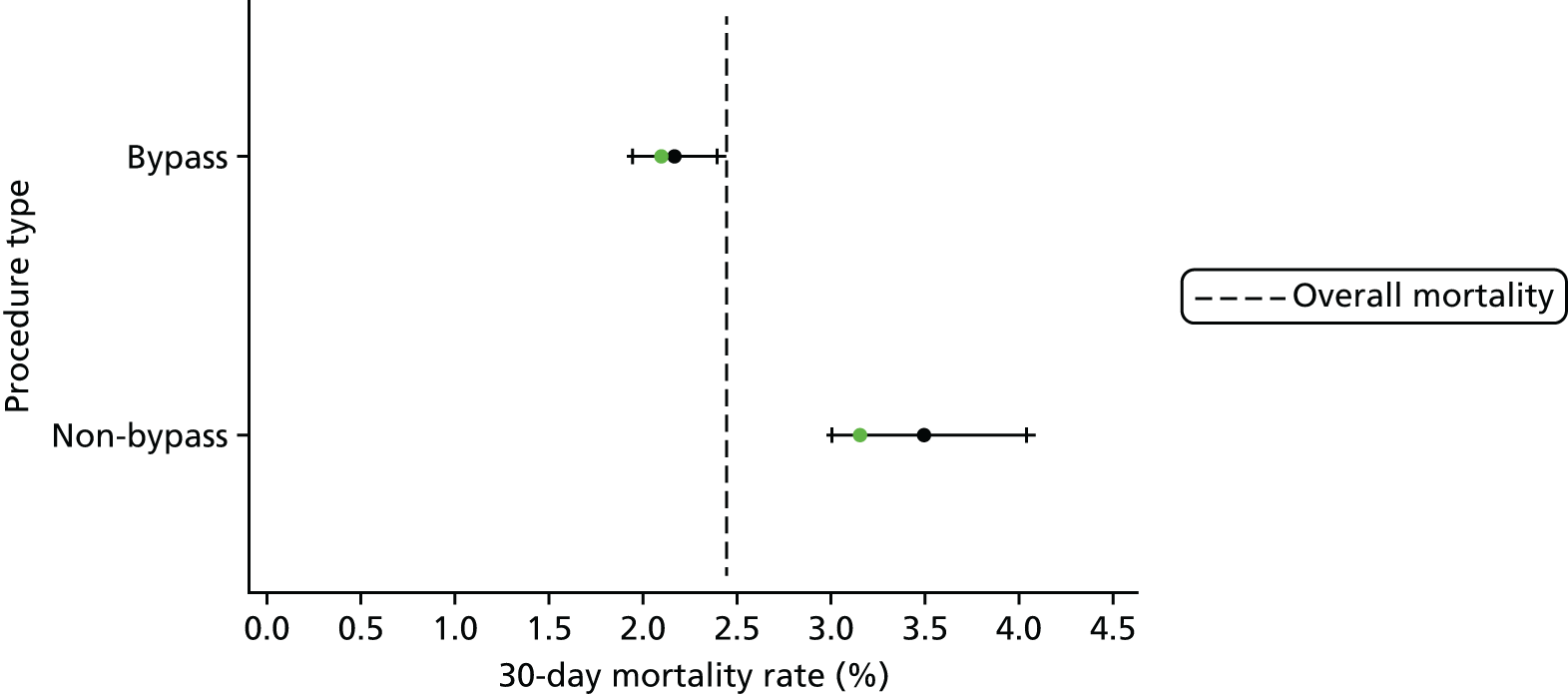

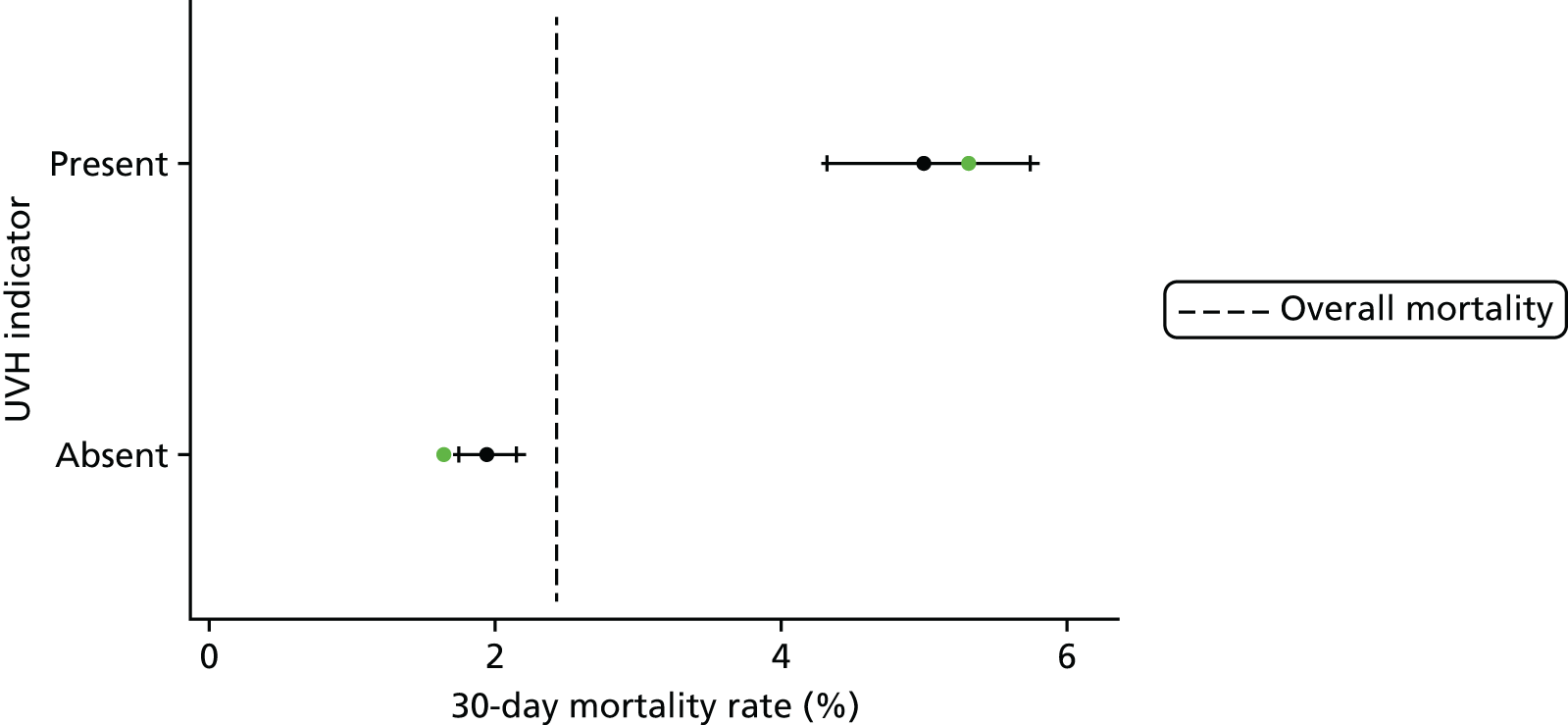

Procedure type and univentricular heart status

The frequency of episodes by UVH status and procedure type is given in Table 2, along with observed mortality rates.

| Risk factor | Frequency, n (%) | Mortality (%) |
|---|---|---|
| UVH status | | |
| Not UVH | 17,749 (82.9) | 1.9 |
| UVH | 3550 (17.1) | 5.0 |
| Procedure type | | |
| Bypass | 16,443 (77.0) | 2.2 |
| Non-bypass | 4819 (22.8) | 3.4 |
| Hybrid | 37 (0.2) | 15.9 |

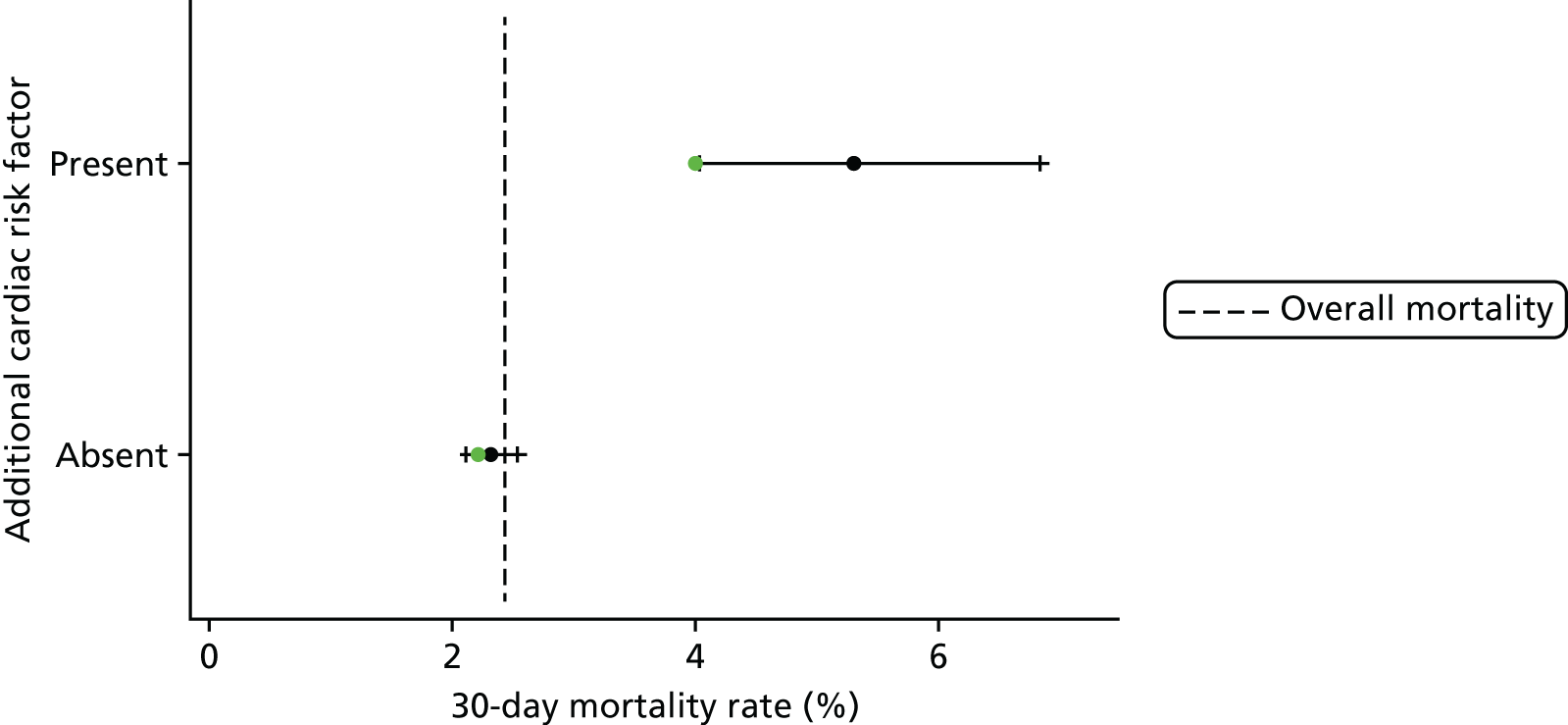

Additional risk factors

The frequency of episodes by additional risk factor and number of additional risk factors are given in Tables 3 and 4, respectively, along with the observed mortality rate.

| Additional risk factor | Frequency, n (%) | Mortality (%) |
|---|---|---|
| Down syndrome absent | 19,635 (92.3) | 2.5 |
| Down syndrome present | 1664 (7.7) | 1.5 |
| Prematurity absent | 18,742 (87.8) | 2.3 |
| Prematurity present | 2557 (12.2) | 4.0 |
| Acquired diagnosis absent | 18,448 (86.5) | 2.4 |
| Acquired diagnosis present | 2851 (13.5) | 3.1 |
| Acquired comorbidity absent | 17,628 (82.1) | 1.7 |
| Acquired comorbidity present | 3671 (17.9) | 5.8 |
| Congenital non-Down syndrome comorbidity absent | 18,890 (88.5) | 2.3 |
| Congenital non-Down syndrome comorbidity present | 2409 (11.5) | 3.8 |

| Number of PRAiS 1 comorbidities (including Down syndrome) | Frequency, n (%) | Mortality (%) |
|---|---|---|
| 0 | 13,215 (61.5) | 1.6 |
| 1 | 4028 (19.0) | 3.0 |
| 2 | 2198 (10.4) | 3.0 |
| 3 | 1284 (6.1) | 4.0 |
| 4 | 391 (2.0) | 9.9 |
| ≥ 5 | 132 (1.0) | 13.7 |

Methods: model development and selection

Benefit of the expert panel

The clinical validity of the risk factors to be included in PRAiS 2 was discussed at length and in considerable detail by the expert panel, both during and between expert panel meetings. In particular, the clinical knowledge of the panel was combined with the evidence from the data to determine the groups of EPCC codes to be included as comorbidities and additional risk factors in the model, how specific procedures and diagnosis should be allocated to broad risk groups and how developments in treatment and outcomes should be incorporated into the updated model. This discussion continued throughout the modelling process.

The process adopted for constructing the final risk model thus consisted of the iterative use of the steps described in the rest of this section.

Multivariable logistic regression

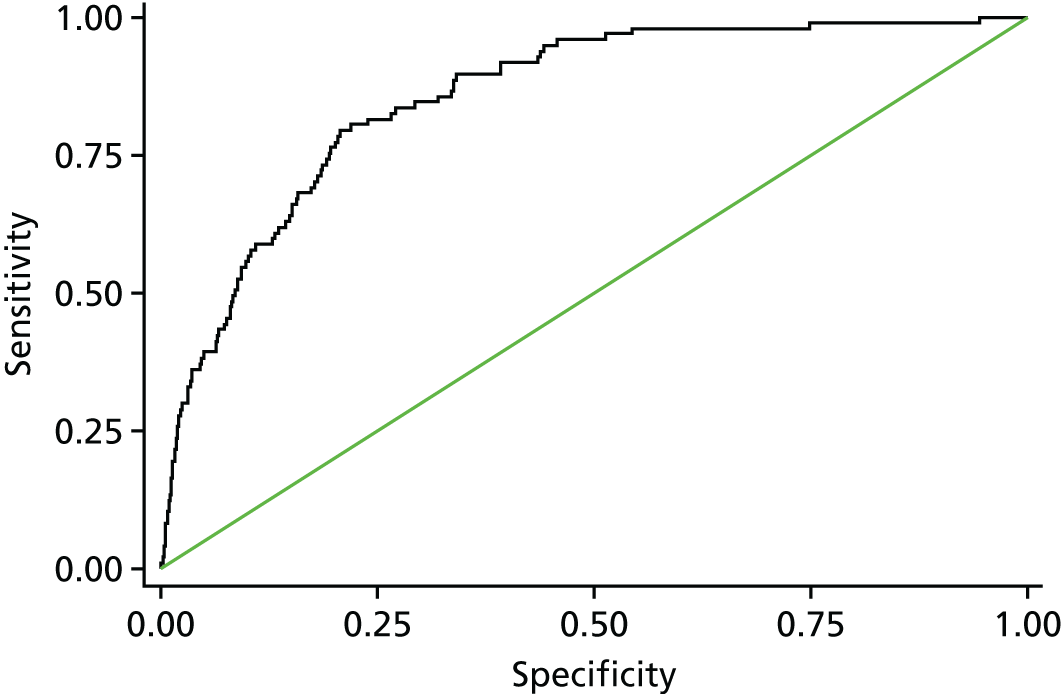

The final set of risk factors included in the model was determined based on a combination of their ability to discriminate between high- and low-risk patients consistently over subsets of the data, their clinical face validity and their ease of use prospectively. All multivariable logistic regression analysis was conducted using the statistical software package Stata, release version 13.0 (2013; StataCorp LP, College Station, TX, USA). The regression output included the risk factor coefficients, the area under the receiver operating characteristic (AUROC) curve, the Akaike information criterion (AIC) and the predicted risk for each episode. The AUROC curve measures the discriminative power of a model (i.e. its ability to distinguish between high- and low-risk episodes). 39 A model with an AUROC of 1 would assign a higher predicted risk to every death than to every survivor, and a model with an AUROC of 0.5 discriminates no better than chance. The AIC is a measure of the goodness of fit of a model that penalises a higher number of model parameters. When comparing models, a lower AIC is preferable. 41
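Both measures are simple to compute from the regression output. The sketch below uses plain Python rather than Stata and is illustrative only: the AUROC is calculated as the proportion of death/survivor pairs in which the death has the higher predicted risk (ties counted as half), and the AIC as 2k − 2 ln L for k parameters and maximised log-likelihood ln L. Function names are assumptions.

```python
import math


def auroc(outcomes, predicted):
    """Proportion of (death, survivor) pairs ranked correctly; ties count 0.5."""
    deaths = [p for y, p in zip(outcomes, predicted) if y == 1]
    survivors = [p for y, p in zip(outcomes, predicted) if y == 0]
    score = sum((d > s) + 0.5 * (d == s) for d in deaths for s in survivors)
    return score / (len(deaths) * len(survivors))


def log_likelihood(outcomes, predicted):
    """Bernoulli log-likelihood of the observed outcomes."""
    return sum(math.log(p) if y == 1 else math.log(1.0 - p)
               for y, p in zip(outcomes, predicted))


def aic(log_lik, n_parameters):
    """Akaike information criterion: lower values are preferred."""
    return 2 * n_parameters - 2 * log_lik


example_auroc = auroc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
example_aic = aic(-100.0, 5)
```

The pairwise loop is quadratic in the number of episodes; a rank-based calculation would be preferable for a data set of this size.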

As discussed in Defining episodes of surgical management for analysis, the data set contained more than one episode for some patients. Our aim was to develop a risk model for use at episode rather than patient level in monitoring short-term risk-adjusted outcomes for an entire paediatric cardiac surgical programme, not just for those undergoing their first operation; hence, we treat each surgical episode as independent in running the logistic regressions.

For this reason, the logistic regression output should not be used to infer risk at a patient level, as not all observations used in the regression were independent (with respect to patients). When running our cross-validation procedure (see next section), the data split was adjusted so that each record pertaining to the same patient was in the same cross-validation fold, so that none of the models produced by the cross-validation was tested on patients who had also featured in the development of that model.

25 × fivefold cross-validation

The original PRAiS 1 model developed in 2011 was validated by splitting the data into a development set (70%) and a test set (30%). All analyses were carried out on the development set, and, after calibration within the development set, the model was validated on the test set. 10,33 This was also the original protocol for PRAiS 2. However, there are inherent problems with this method of model validation. As the data are split only once, natural variation in the episodes included in the development and test sets can lead to an imprecise estimate of predictive accuracy. It is also not an efficient method as 30% of the data are never used as part of model development. After discussion with GA from UCL Medical Statistics and our independent statistical expert, DS, we decided to use fivefold cross-validation repeated 25 times (25 × fivefold cross-validation) to develop and test the PRAiS 2 risk model. 42–44 We preferred cross-validation to bootstrapping to ensure that all of the data were used in developing and testing the model, particularly as there exist small but high-risk subpopulations that are important to include.

For a fivefold cross-validation, the process is as follows.

- The data are randomly split into five equally sized subsets; the model is developed on four subsets (80% of the data) and tested on the subset that was excluded (20% of the data). This is repeated so that all five subsets are treated as the test set once.
- This fivefold process can be repeated on different partitions of the data to increase the reliability of the performance estimates. We did this 25 times (25 × fivefold cross-validation).
- The results for the predictive accuracy are averaged over all of the cross-validations to obtain a measure of the predictive accuracy of the final model.
- The chosen model is then calibrated on the entire data set.

The advantages of 25 × fivefold cross-validation over maintaining a single quarantined test set are as follows. 43

- As each entry in the data is used an equal amount to test the model, there is less variability in the performance estimate.
- The model development process and the chosen risk factors are validated and use all of the available data.
- Repeating the fivefold partition leads to a more accurate performance estimate.

The data splits were stratified by year and unit to ensure a representative case mix and, as discussed above, all episodes for a patient were constrained to lie in the same data split.
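The patient-level constraint can be sketched as below: folds are assigned to patients rather than to episodes, so that all episodes for a patient fall in the same fold. This is a simplified illustration with hypothetical names; the stratification by year and unit described above is deliberately omitted.

```python
import random


def patient_grouped_folds(patient_ids, n_folds=5, n_repeats=25, seed=0):
    """Yield (repeat, fold, test_indices) for repeated k-fold cross-validation.

    Folds are drawn over patients, so a patient's episodes are never split
    between the development and test sets of the same fold.
    """
    rng = random.Random(seed)
    patients = sorted(set(patient_ids))
    for repeat in range(n_repeats):
        shuffled = patients[:]
        rng.shuffle(shuffled)  # a fresh random partition for each repeat
        fold_of = {pid: i % n_folds for i, pid in enumerate(shuffled)}
        for fold in range(n_folds):
            test = [i for i, pid in enumerate(patient_ids)
                    if fold_of[pid] == fold]
            yield repeat, fold, test


# Forty episodes from 20 hypothetical patients (two episodes each).
patient_ids = ["p%d" % (i // 2) for i in range(40)]
splits = list(patient_grouped_folds(patient_ids))
```

With the defaults this produces 125 test sets; the development set for each is simply every episode not in the corresponding test set.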

To mimic prospective use, no adjustment of weights of this nature was made when using an episode in the test set for each cross-validation run.

Assessment of model performance for each considered model

To assess model performance we used the following measures.

In the main data set:

- the AUROC curve and AIC of the model developed on the whole main data set.

Under cross-validation:

- The median and range of the AUROC curve over the cross-validated test sets. We used the somersd command in Stata to calculate an overall AUROC curve for each of the 25 repeats of the cross-validation. 45 The median and full range of these 25 values were then calculated.
- The median and range of the calibration slope and intercept across the 125 cross-validation test sets. For each cross-validation fold, a calibration slope (the regression coefficient, β) and the intercept (the constant term, α) were calculated by regressing the outcome on the linear prediction in the test set for that cross-validation. If a model was perfectly calibrated, we would expect β = 1 and α = 0. If β < 1, this can be evidence of overfitting, whereas β > 1 can be evidence of underfitting. If α < 0 the model has overpredicted observed deaths, and if α > 0 the model has underpredicted observed deaths. 46 The coefficient β can also be interpreted as the ‘shrinkage factor’, with a value of much less than 1 being a possible indication of overfitting and of the possible need to apply shrinkage to the model. The calibration slope and intercept were used to test for calibration rather than the more traditional Hosmer–Lemeshow test, as the former method is now considered superior. 41

In the external 2014–15 test data set following final model selection:

- the AUROC curve of the model developed on the main data set and tested on the 2014–15 data, including confidence intervals (CIs)
- the calibration slope and intercept of the linear predictor developed on the main data set and regressed on the 2014–15 data set, including CIs.
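The calibration slope and intercept come from a logistic regression of the observed outcome on the model's linear predictor, logit P(death) = α + β × linear predictor. The sketch below fits this two-parameter model by Newton–Raphson in plain Python; it is an illustration under that assumption, not the Stata procedure used in the project, and the function name and toy data are hypothetical.

```python
import math


def calibration_slope_intercept(outcomes, linear_predictor, n_iter=25):
    """Fit logit P(y = 1) = alpha + beta * lp by Newton-Raphson."""
    alpha, beta = 0.0, 0.0
    for _ in range(n_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for y, lp in zip(outcomes, linear_predictor):
            p = 1.0 / (1.0 + math.exp(-(alpha + beta * lp)))
            w = p * (1.0 - p)
            g0 += y - p              # score with respect to alpha
            g1 += (y - p) * lp       # score with respect to beta
            h00 += w                 # information matrix entries
            h01 += w * lp
            h11 += w * lp * lp
        det = h00 * h11 - h01 * h01
        alpha += (h11 * g0 - h01 * g1) / det
        beta += (h00 * g1 - h01 * g0) / det
    return alpha, beta


# Toy test set in which observed death rates match the predicted risks:
# predicted 0.25 (1 death in 4), 0.5 (1 in 2) and 0.75 (3 in 4).
lp = [math.log(1 / 3)] * 4 + [0.0] * 2 + [math.log(3)] * 4
deaths = [1, 0, 0, 0, 1, 0, 1, 1, 1, 0]
alpha, beta = calibration_slope_intercept(deaths, lp)
```

Because the toy outcomes agree exactly with the predicted risks, the fit returns an intercept of approximately 0 and a slope of approximately 1, the values expected of a perfectly calibrated model.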

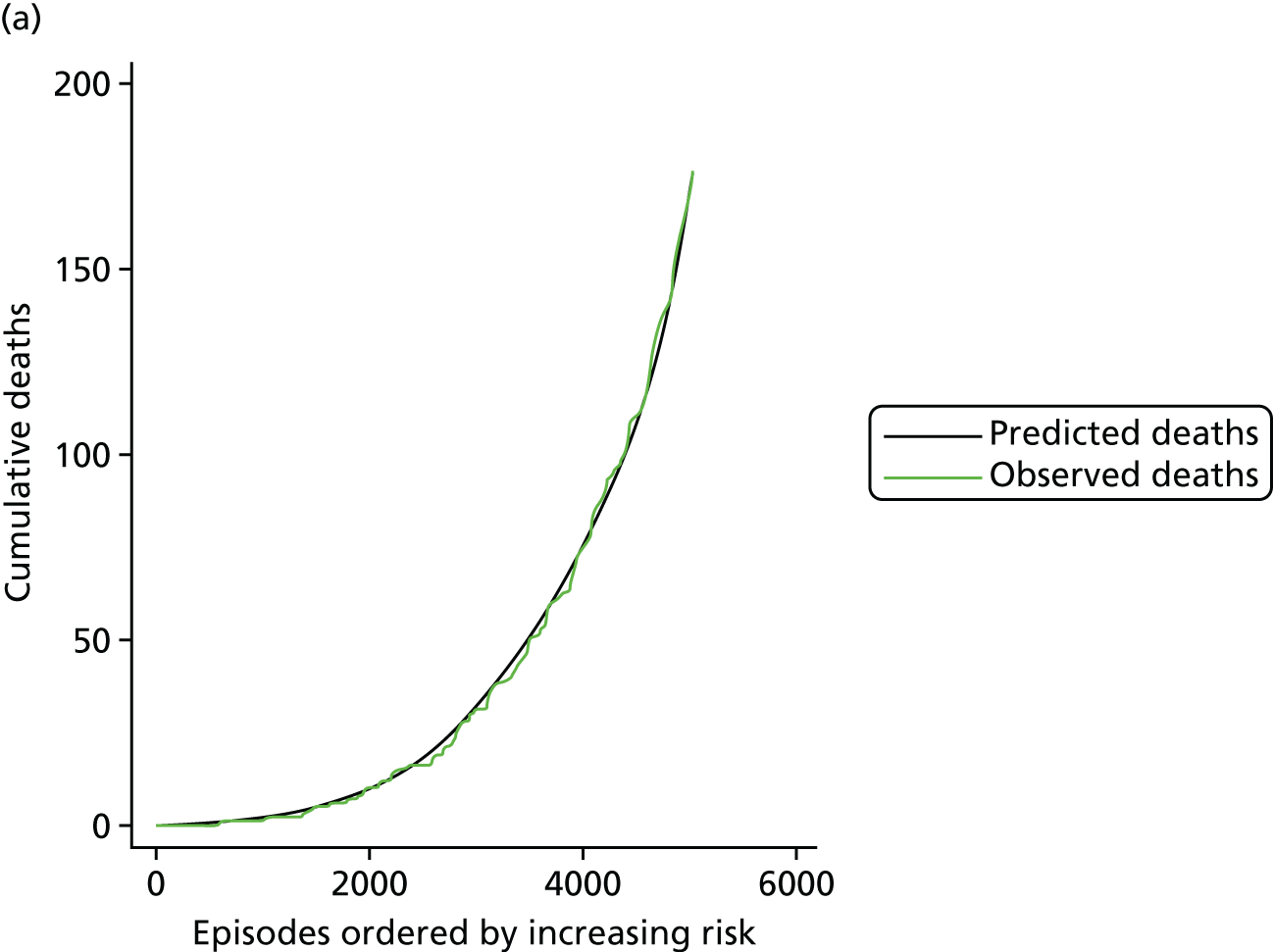

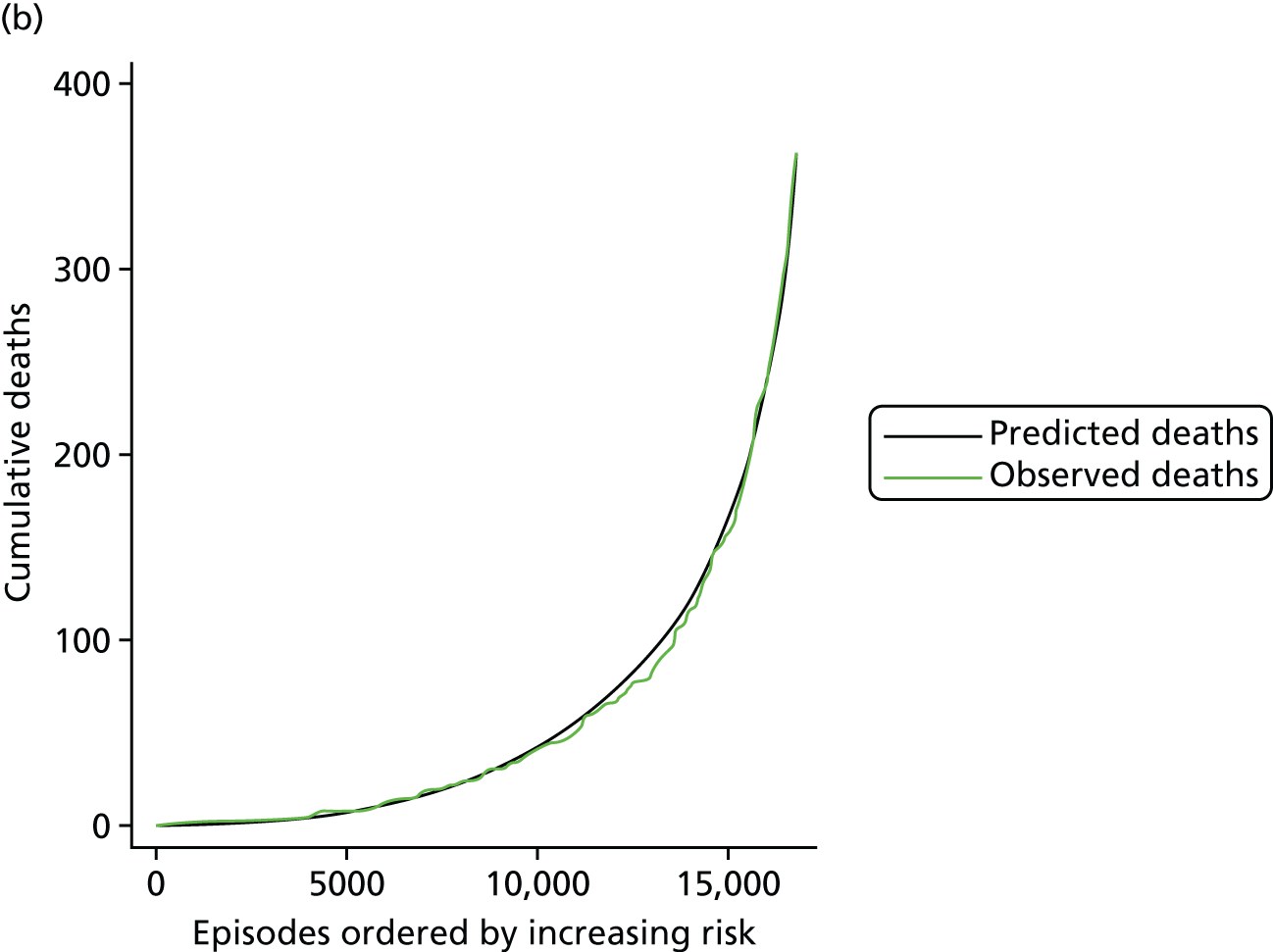

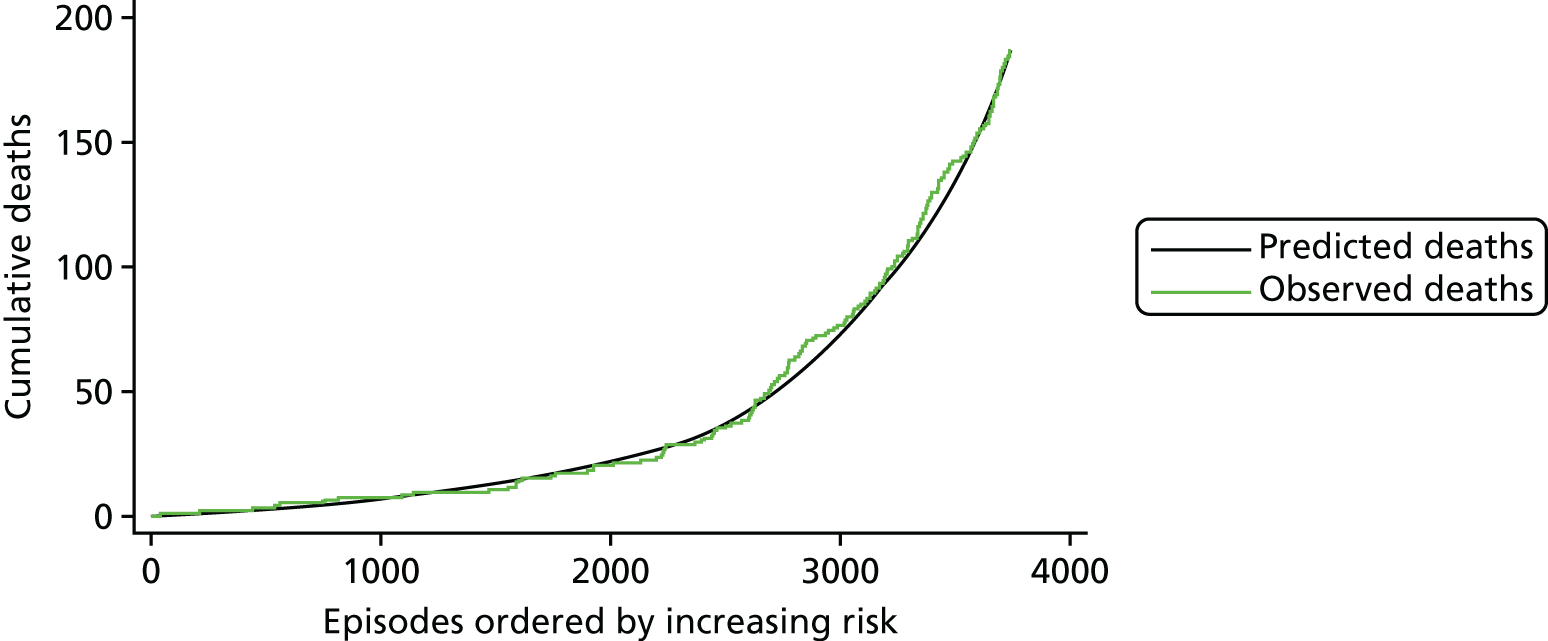

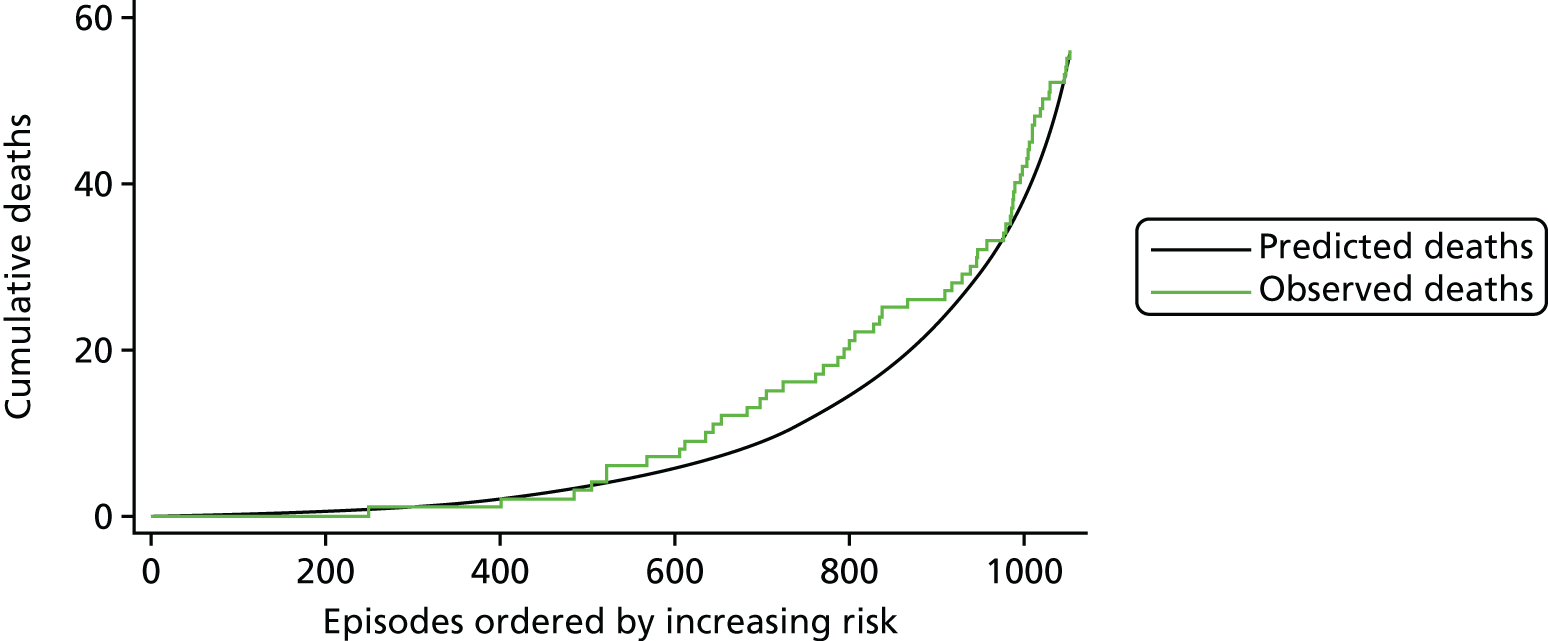

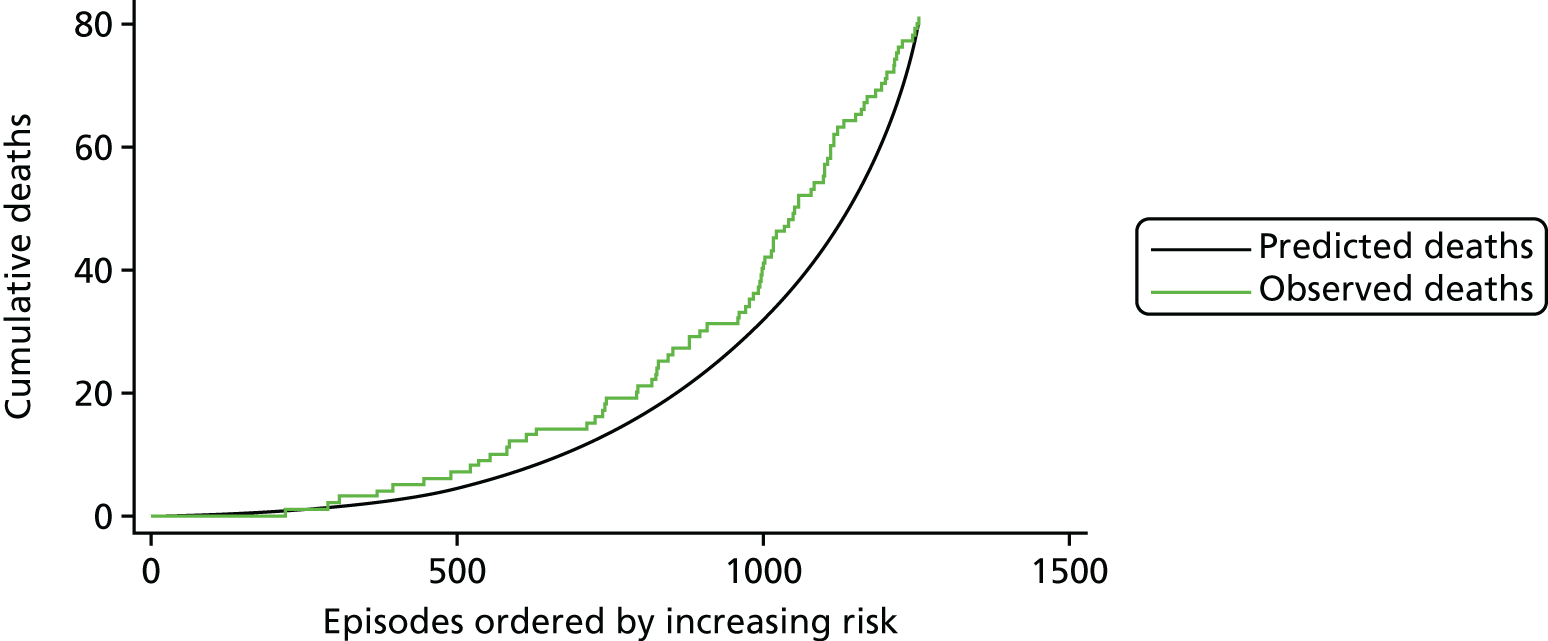

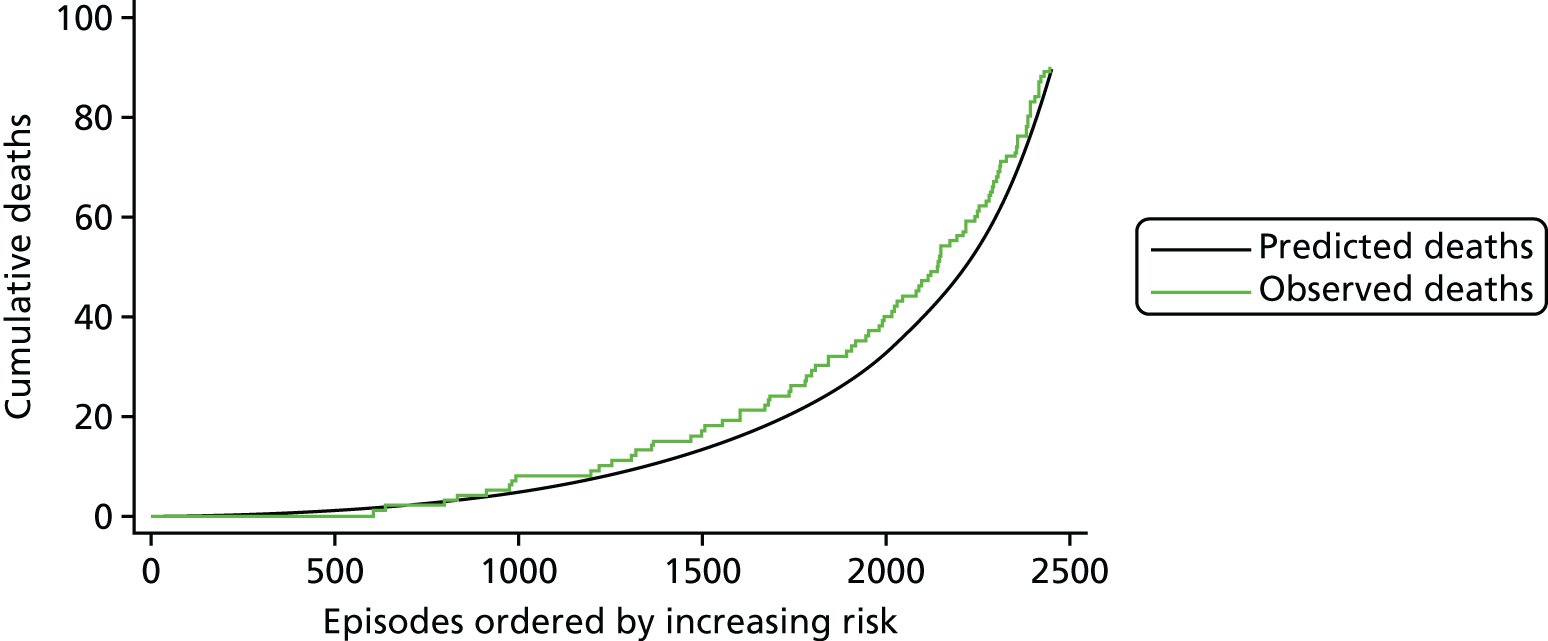

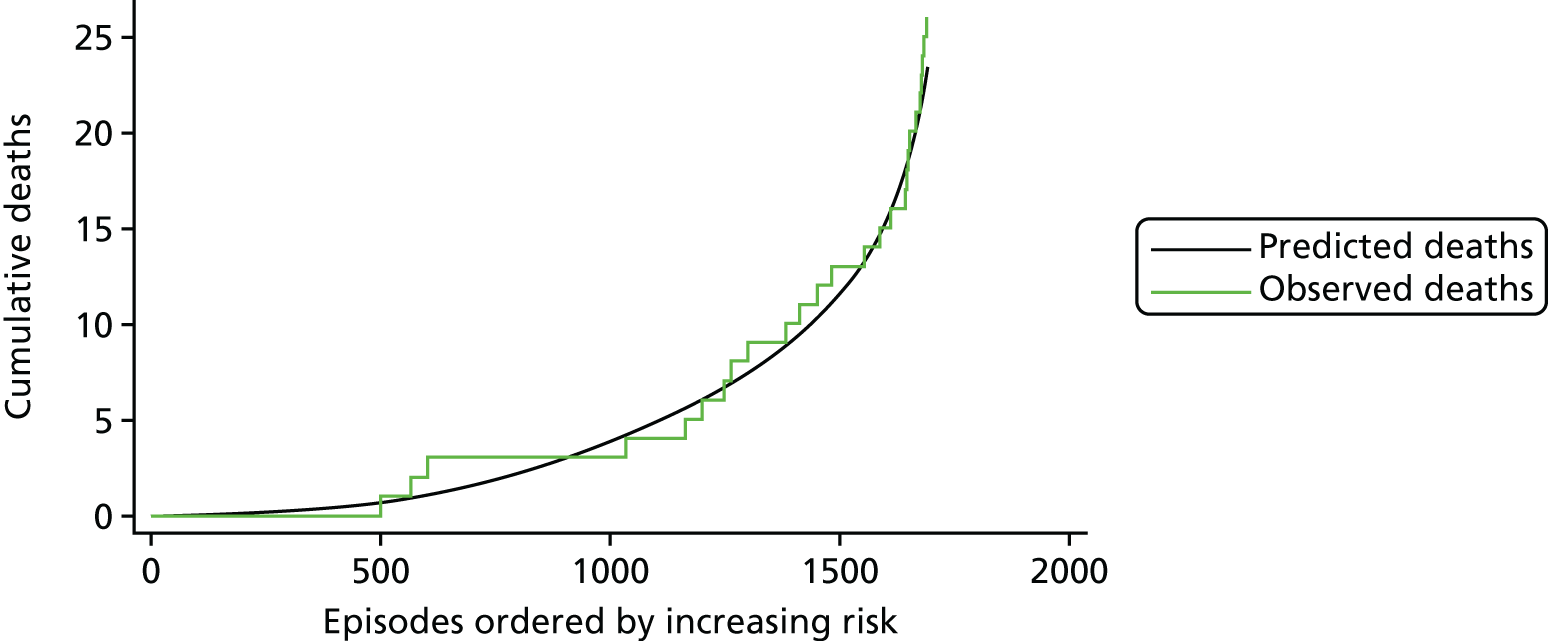

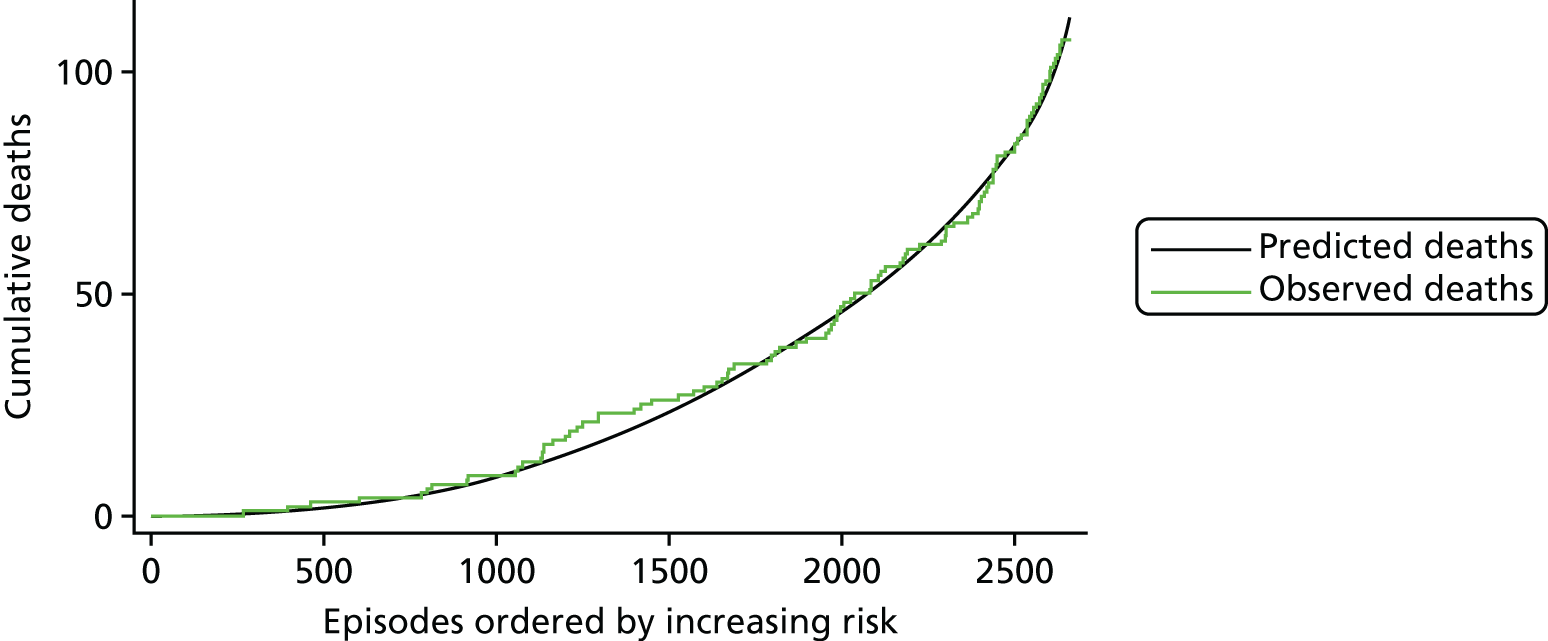

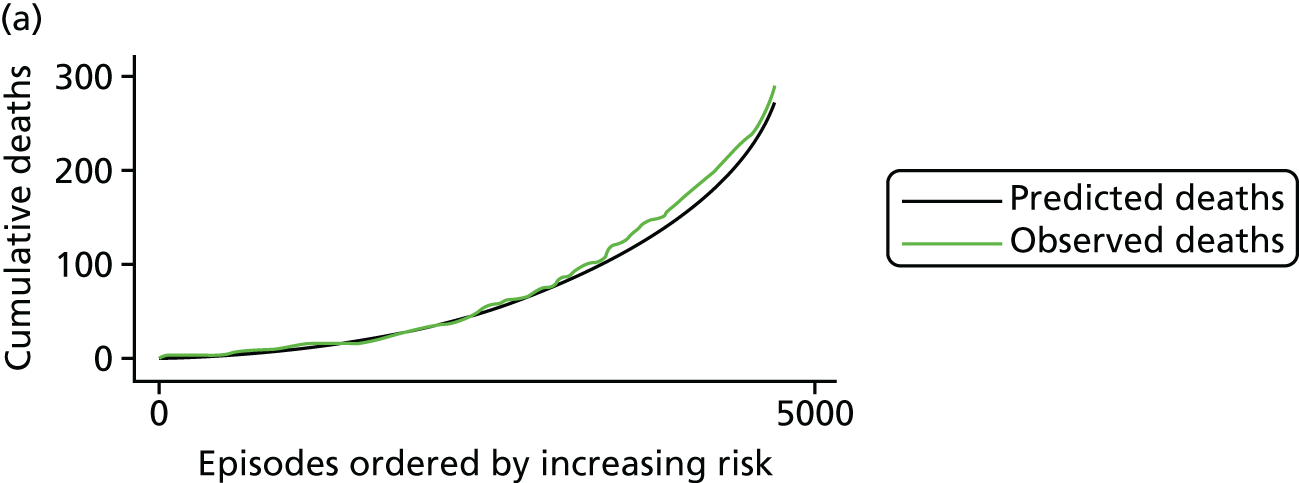

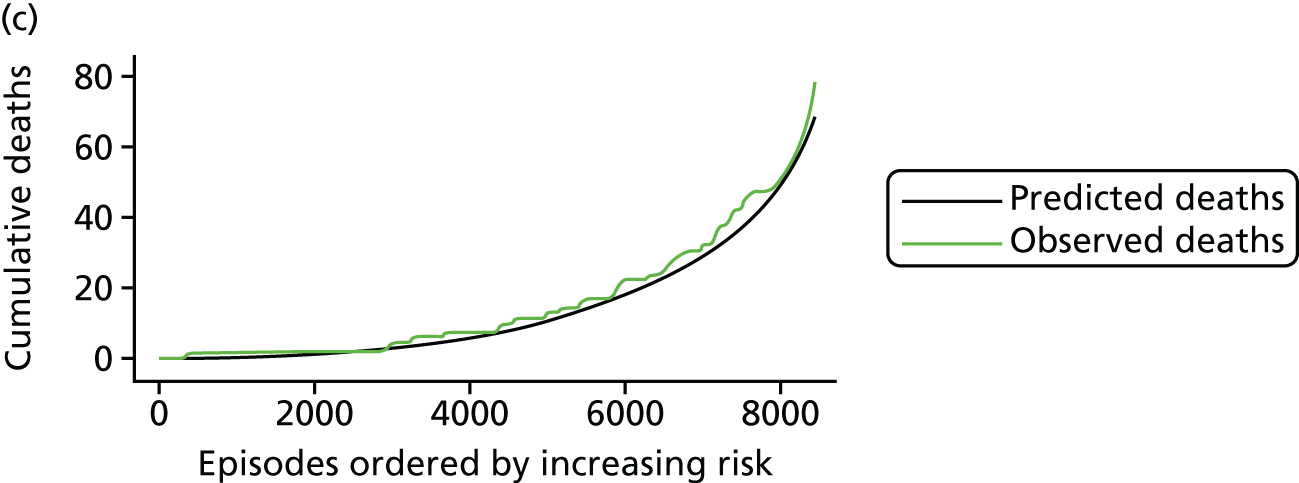

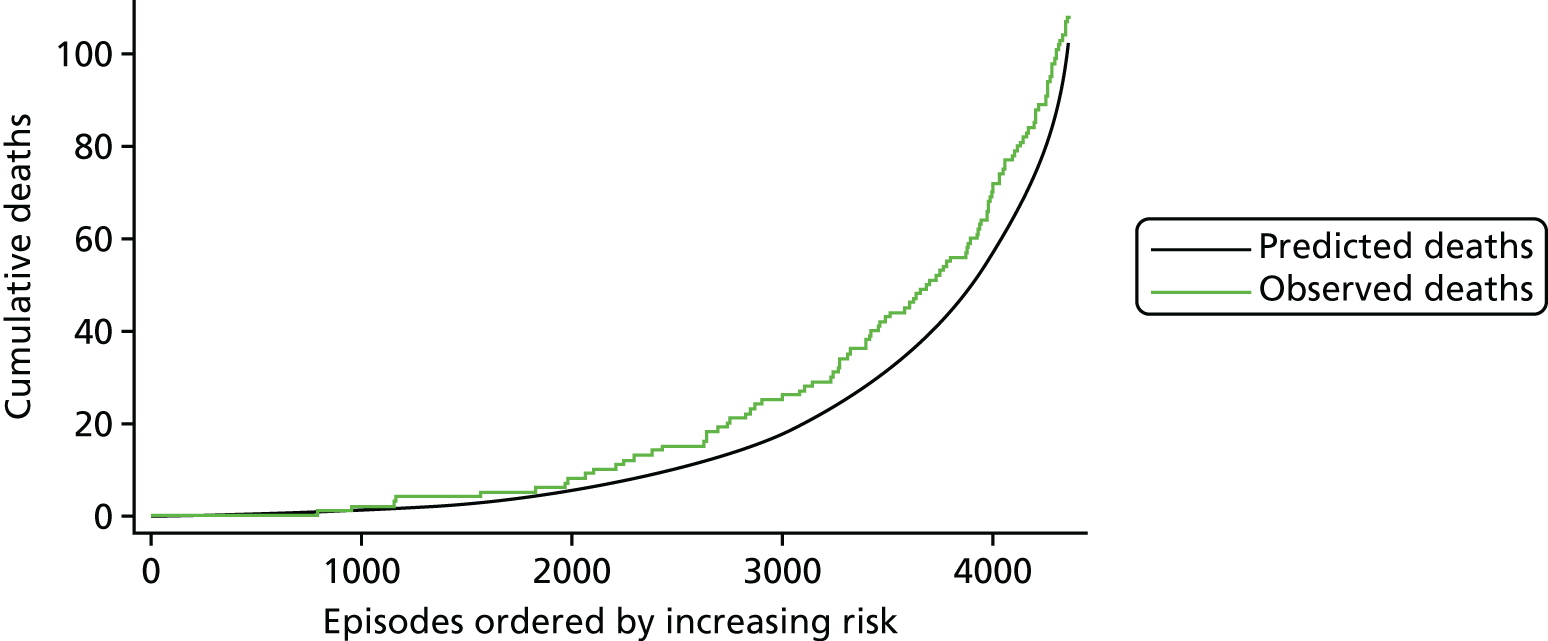

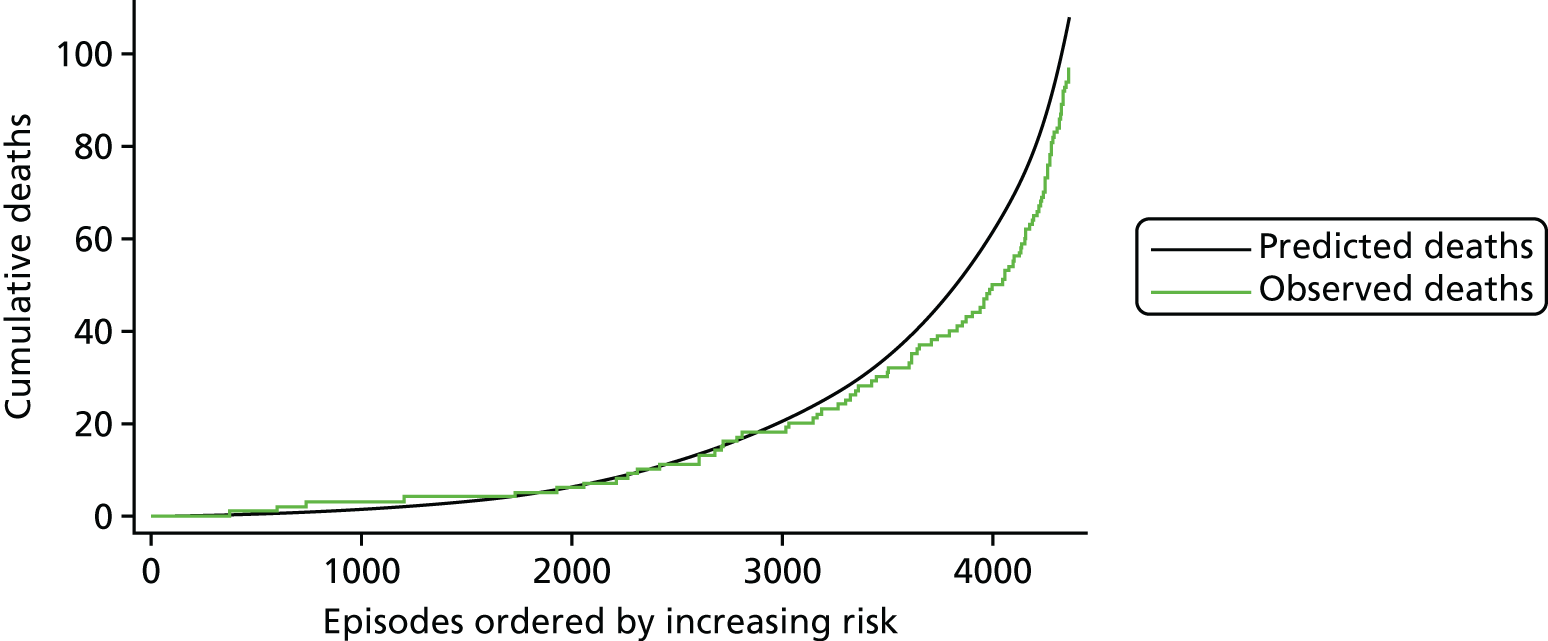

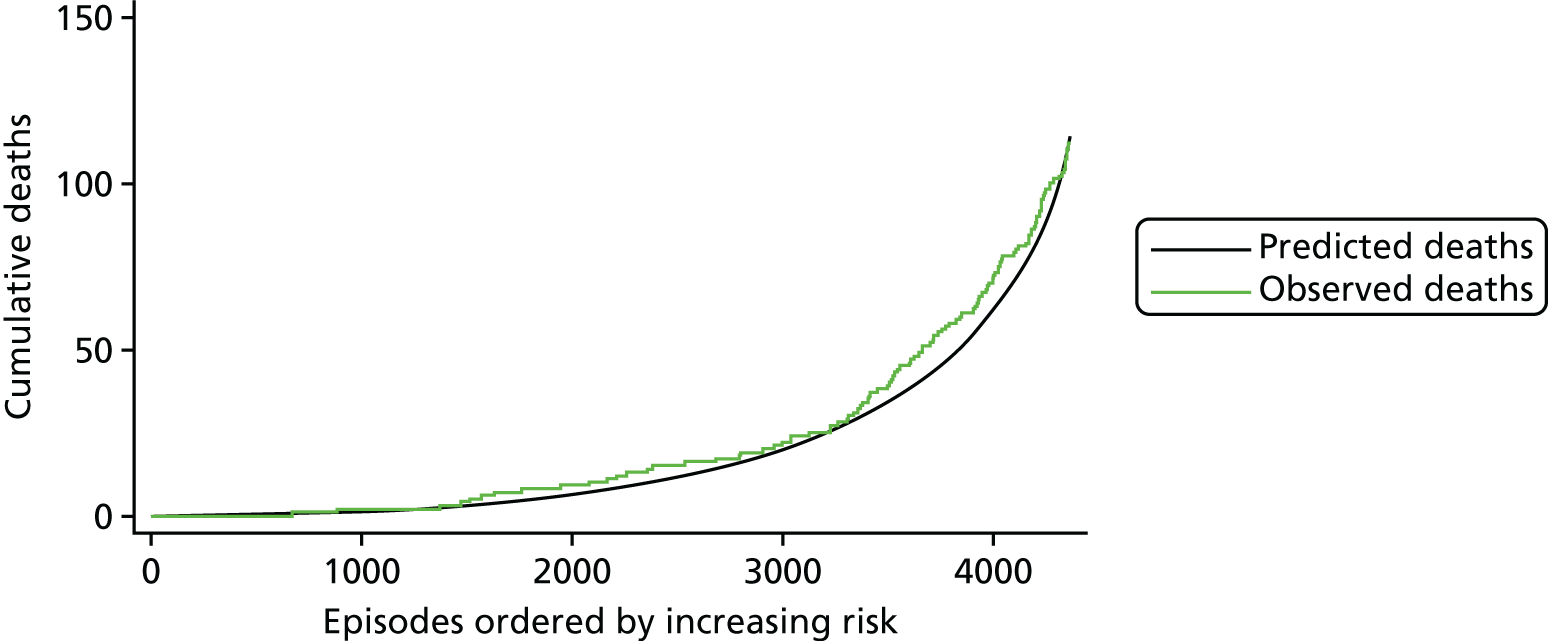

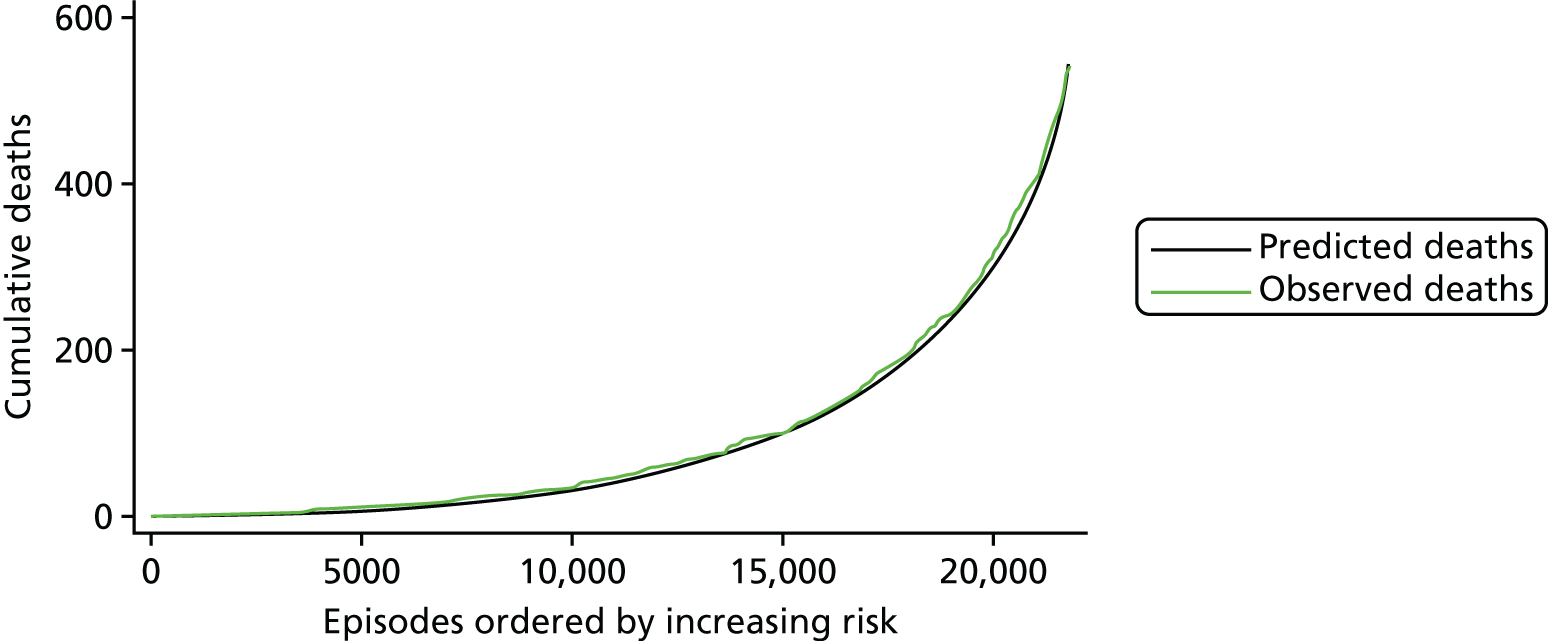

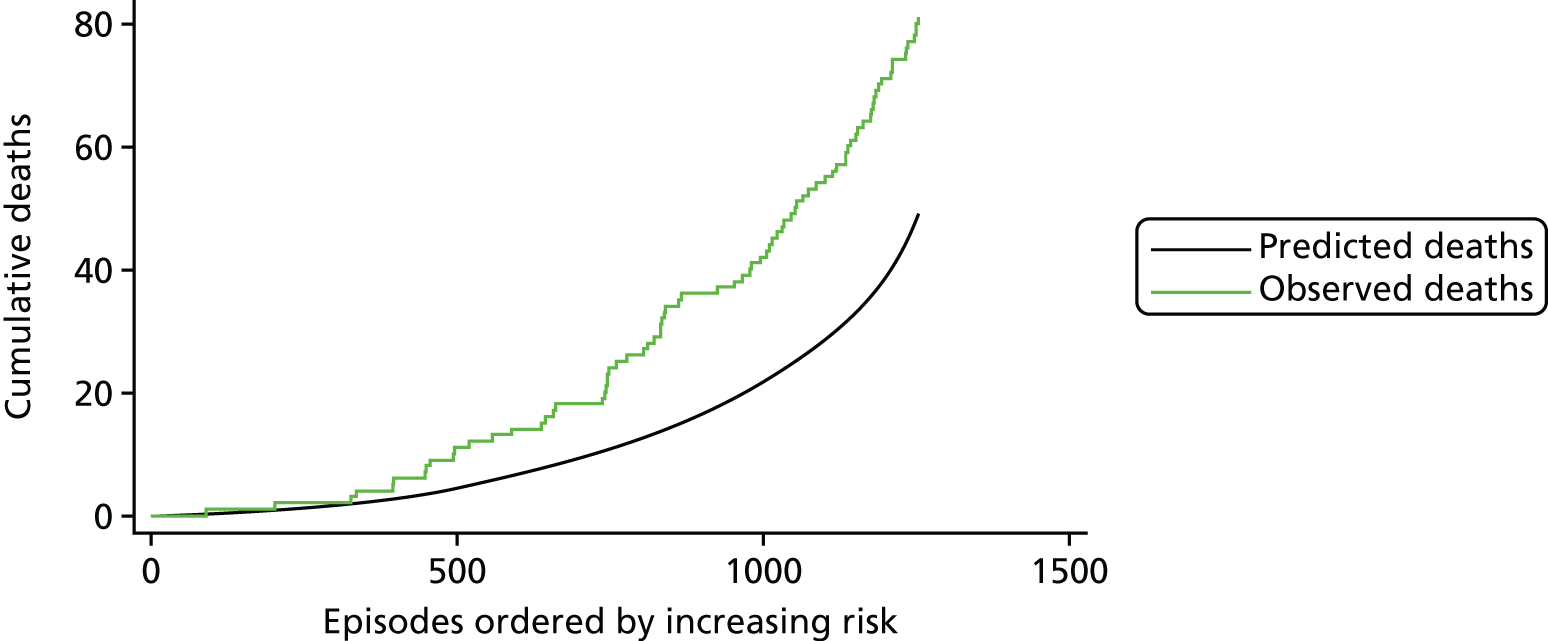

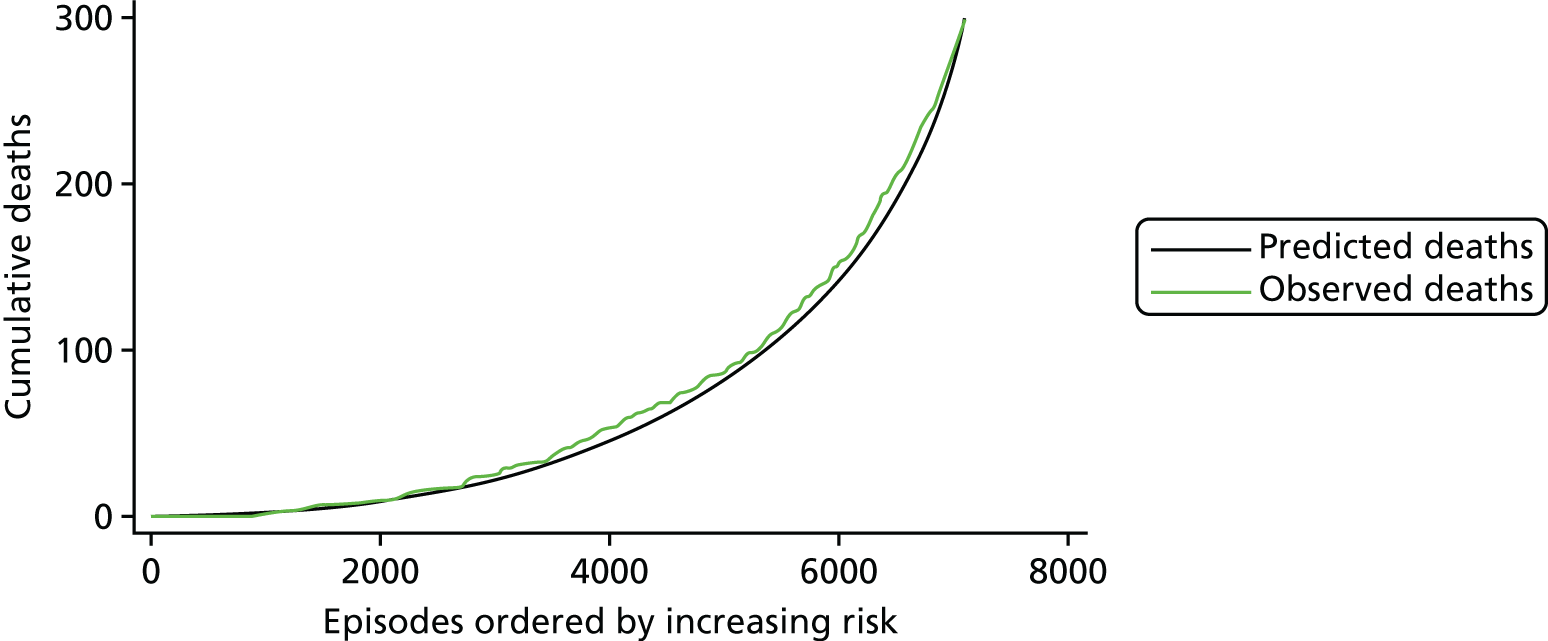

The regression output was also used to construct charts, known as MADCAP (Mean Adjusted Deaths Compared Against Predictions) charts,47 of cumulative predicted and observed deaths against episode number, with episodes ordered by increasing predicted risk. These give a graphical means of summarising the performance of the risk model with respect to discrimination and calibration. The end points of the two lines indicate the overall numbers of deaths predicted and observed over a series of cases. The extent to which the slope of the cumulative observed deaths increases with episode number gives information about the discrimination of the model: the greater the ‘bowing’ towards the bottom right-hand corner of the MADCAP chart, the better the discrimination of the model. Any major deviations of the ‘observed’ curve from the ‘predicted’ curve provide information on where the model may work less well across the spectrum of predicted risk.

Comparison of MADCAP charts between different models was used as a way of informing decisions about the value added or lost in adopting different approaches to how specific procedures, diagnoses and comorbidities were included in the model.
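The two curves of a MADCAP chart, as described above, can be computed from per-episode predicted risks and outcomes. The sketch below is a minimal illustration with invented data; the function name `madcap_curves` is hypothetical and plotting is left out.

```python
from itertools import accumulate

def madcap_curves(predicted_risk, died):
    """Return (cumulative predicted deaths, cumulative observed deaths),
    with episodes ordered by increasing predicted risk."""
    order = sorted(range(len(predicted_risk)), key=lambda i: predicted_risk[i])
    cum_predicted = list(accumulate(predicted_risk[i] for i in order))
    cum_observed = list(accumulate(died[i] for i in order))
    return cum_predicted, cum_observed

# Invented example: five episodes with predicted risk and 30-day outcome.
risk = [0.02, 0.30, 0.01, 0.10, 0.05]
died = [0, 1, 0, 0, 0]

cum_pred, cum_obs = madcap_curves(risk, died)
# The last points give the total predicted and total observed deaths.
print("total predicted:", round(cum_pred[-1], 2), "total observed:", cum_obs[-1])
```

Plotting both lists against episode number (1, 2, 3, ...) gives the chart: the end points compare total predicted and observed deaths, and an observed curve that stays flat until the highest-risk episodes corresponds to the 'bowing' that indicates good discrimination.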

Finally, we investigated any episodes that had particularly large leverage or influence39 to see whether they were genuine outliers or data errors.

Clinical considerations

Comorbidities and additional risk factors

A large part of the clinical discussion centred on how to incorporate more information on comorbidities and other additional risk factors in the model in a clinically meaningful way. A key issue was to reflect the increase in risk posed by other health problems, while balancing the need to make the new risk factors robust in use and not open to gaming in prospective use.

The expert panel felt that a simple count of comorbidities could lead to inflated predicted risk if several comorbidity codes for similar conditions were used, when the actual additional risk from the extra codes would be minimal. For this reason we decided not to include an overall count of comorbidities or a count within different categories. A yes/no indicator for each different category of additional risk meant that predicted risk would be increased if an episode contained different types of comorbidities or additional risk factors, while meaning that records with several similar additional risk factors did not have any additional predicted risk.
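The difference between a count and a yes/no indicator per category can be shown with a small sketch. The codes `X1`, `X2` and `Y1` and the mapping below are invented for illustration; only the four category names follow the groupings retained in the final model.

```python
CATEGORIES = (
    "congenital_comorbidity",
    "acquired_comorbidity",
    "severity_of_illness",
    "additional_cardiac_risk",
)

def category_flags(episode_codes, code_to_category):
    """Return one 0/1 indicator per category; several codes that fall in
    the same category still set that category's flag only once."""
    present = {code_to_category[c] for c in episode_codes if c in code_to_category}
    return {category: int(category in present) for category in CATEGORIES}

# Hypothetical code list: X1 and X2 describe similar acquired conditions.
CODE_MAP = {
    "X1": "acquired_comorbidity",
    "X2": "acquired_comorbidity",
    "Y1": "severity_of_illness",
}

print(category_flags(["X1"], CODE_MAP))
print(category_flags(["X1", "X2"], CODE_MAP))  # same flags as the line above
print(category_flags(["X1", "Y1"], CODE_MAP))  # two different categories set
```

Adding a second code from the same category leaves the model inputs unchanged, whereas a code from a different category switches on a further indicator.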

The additional cardiac risk factor group of codes was further split into categories of codes relating to different conditions within this group. The clinical members of the expert panel then decided which of these categories would relate to a real increase in risk for any patient in the data set (e.g. conditions that were an expected consequence of a certain diagnosis were not included). Any codes that were too general, and could therefore be applied to many patients with CHD without a real change to their risk, were also excluded. The categories of codes included in the final model were those relating to pulmonary hypertension and poor ventricular function. Any patient who had an acquired diagnosis as their primary diagnosis was excluded from this category to avoid double-counting the risk associated with these conditions.

As this aspect represents both a significant amount of work and novel research, we have included as an appendix (see Appendix 2) a more detailed, clinical account of how this comorbidity and additional risk factor allocation was carried out by the expert panel (written by coauthor KB).

Procedure types

The panel decided to include HLHS hybrid approach procedures in the same procedure type category as non-bypass procedures. As these procedures are still very rare, it is not feasible to include them in the model as a category of their own.

Specific procedure and diagnostic risk groupings

At the second expert panel meeting in February 2016, a possible set of specific procedure and diagnosis risk groups was presented to the panel. These groups had been derived by the analytical team using CART analysis based on the risk associated with a procedure/diagnosis and the age at which the episodes occurred.

The panel discussed the groups and suggested a set of alternatives that it considered clinically more appropriate.

Results: model development and variable selection

Age and weight

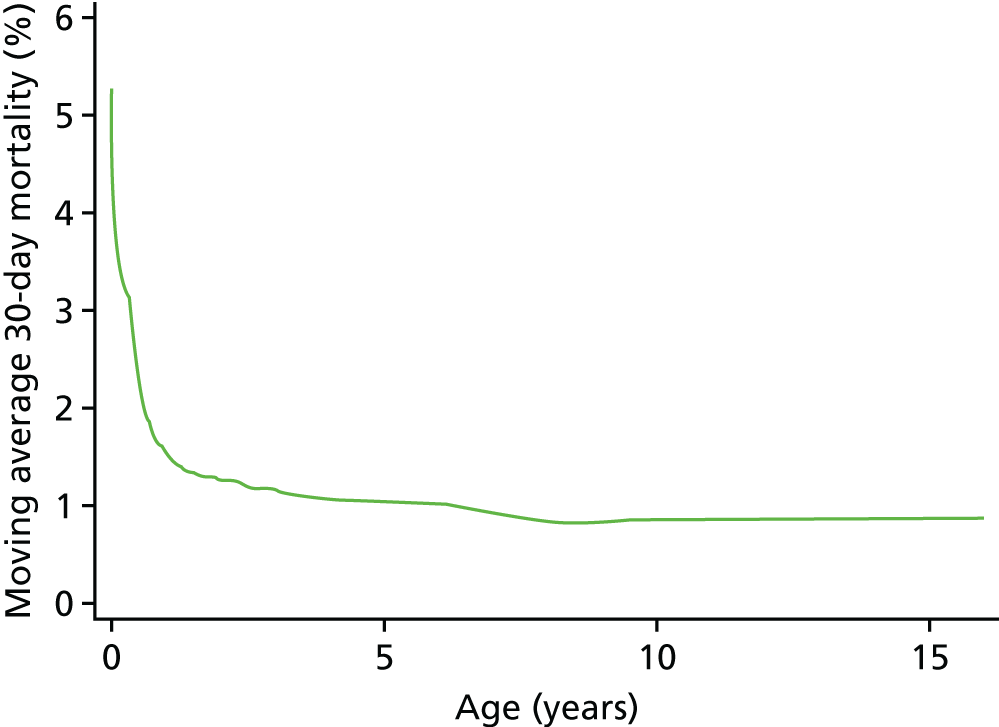

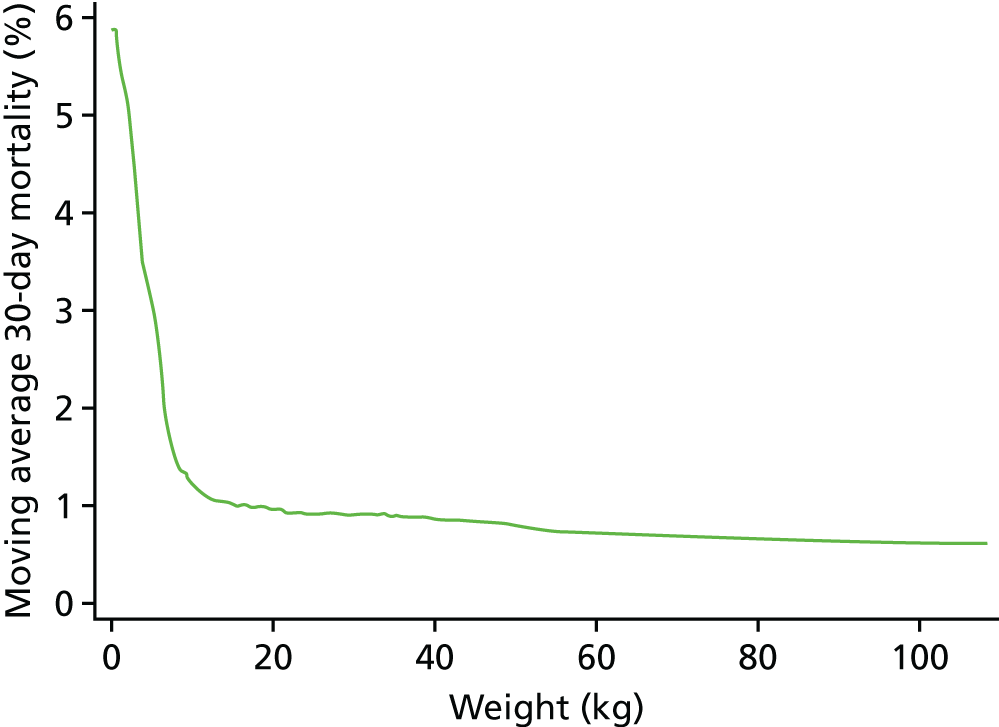

The relationships of age and weight with 30-day mortality are shown in Figures 9 and 10, respectively, which show the weighted moving average of mortality over the range of ages and weights in the data set. These figures show that low ages and weights are very strongly associated with higher mortality, which drops quickly and levels off as patients in this data set get older and heavier.

FIGURE 9. The weighted moving average 30-day mortality by age.

FIGURE 10. The weighted moving average 30-day mortality by weight.

As the non-linear relationship of age and weight with mortality was now going to be accounted for more accurately, we did not include a specific low weight (< 2.5 kg) risk factor in the model.

We found that a cubic spline could not adequately model the relationship at all ages, but that fractional polynomials were able to capture the non-linear association for both age and weight.

After considering various two-dimensional fractional polynomials (with terms drawn from powers of ±2, ±1, ±0.5 and 3, and logarithms) that gave the best fit in different cross-validation runs, we used a√x + bx for both age and weight, as it was a simple function that performed very well. Moving to these non-linear functions of age allowed us to discard the categorical age bands used in PRAiS 1.
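To illustrate why a two-term fractional polynomial can capture a risk that falls steeply at first and then levels off, the sketch below evaluates a function with a square-root term and a linear term (powers 0.5 and 1 from the candidate list above, which is an assumption of this sketch). The coefficients are invented and are not the fitted PRAiS 2 values; in the model itself such terms would enter the linear predictor of the logistic regression.

```python
import math

def fp_contribution(x, a, b):
    """Contribution of a covariate x (> 0) to the linear predictor using a
    square-root term and a linear term."""
    return a * math.sqrt(x) + b * x

def levelling_point(a, b):
    """Value of x at which the curve stops falling (slope zero) when
    a < 0 and b > 0: a / (2 * sqrt(x)) + b = 0."""
    return (a / (2.0 * b)) ** 2

# Invented coefficients: a negative square-root term gives a steep early drop,
# and a small positive linear term flattens the curve at larger x.
a, b = -1.0, 0.25
for x in (0.01, 1.0, 4.0, 9.0):
    print(x, round(fp_contribution(x, a, b), 3))
print("levels off near x =", levelling_point(a, b))
```

With these illustrative values the drop between x = 0.01 and x = 1 is much larger than any change between x = 4 and x = 9, mirroring the shape described for age and weight.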

Comorbidities and additional risk factors

Several months of iterative refinement of comorbidity and additional risk factor categorisation resulted in six final groupings for consideration, shown in Table 5. The details of how these factors are allocated are given in Report Supplementary Material 2.

| Risk factor | Frequency, n (%) | 30-day mortality (%) |

|---|---|---|

| Congenital comorbidity absent | 19,393 (88.8) | 2.3 |

| Congenital comorbidity present | 2445 (11.2) | 3.7 |

| Acquired comorbidity absent | 20,584 (94.3) | 2.2 |

| Acquired comorbidity present | 1254 (5.7) | 6.5 |

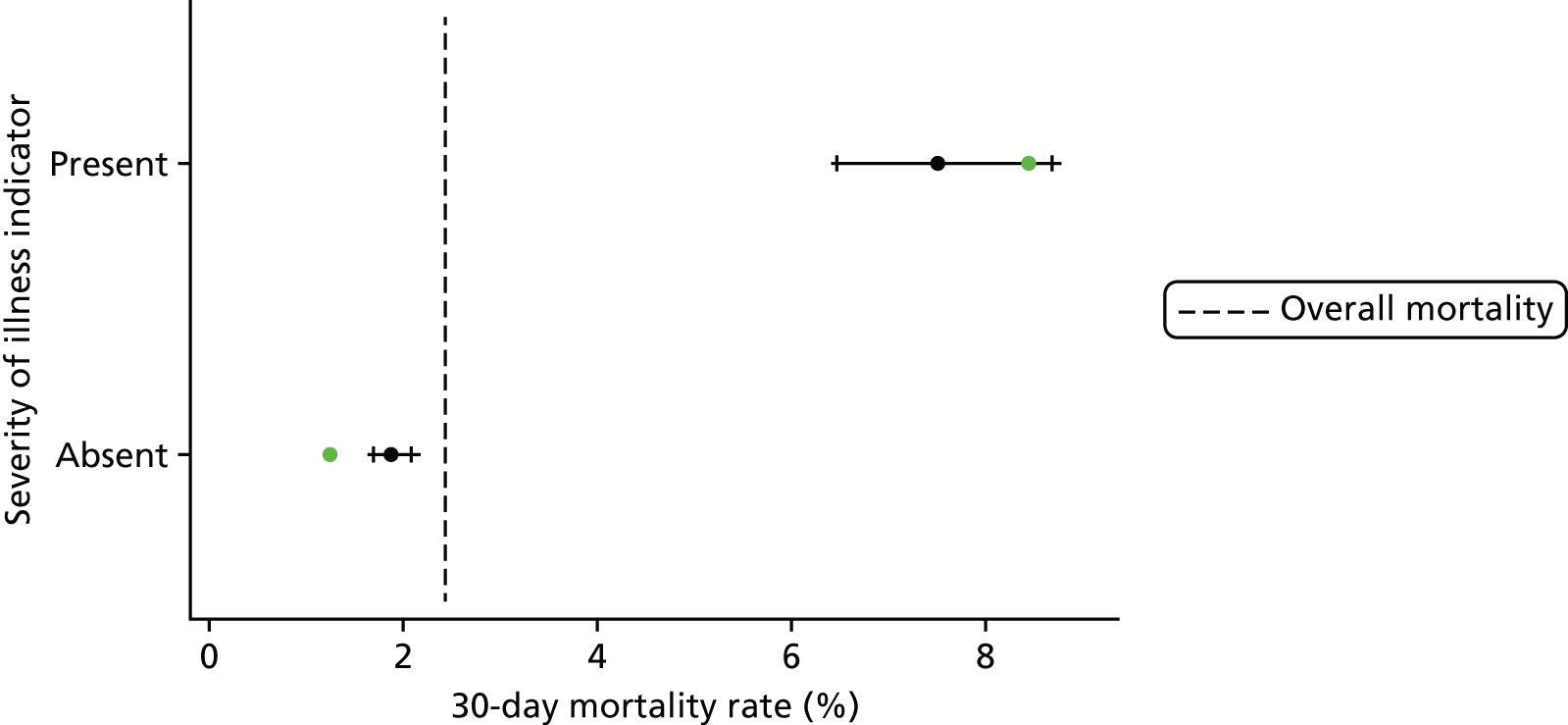

| Severity of illness indicator absent | 19,578 (89.7) | 1.9 |

| Severity of illness indicator present | 2260 (10.3) | 7.5 |

| Additional cardiac risk factor absent | 20,785 (95.2) | 2.3 |

| Additional cardiac risk factor present | 1053 (4.8) | 5.3 |

| Down syndrome absent | 19,635 (92.3) | 2.5 |

| Down syndrome present | 1664 (7.7) | 1.5 |

| Prematurity absent | 18,742 (87.8) | 2.3 |

| Prematurity present | 2557 (12.2) | 4.0 |

In almost all cross-validation runs using all six risk factors, neither prematurity nor Down syndrome was significant at the 5% level in multivariable regression.

Down syndrome had been excluded from the comorbidity risk factor in PRAiS 1 as its presence was not associated with an increased risk of mortality and a majority of Down syndrome patients have a single specific procedure. When this was discussed again by the expert panel, they believed that patients with Down syndrome did not have a risk from this procedure that was substantially different from that of non-Down syndrome patients.

Although prematurity was significantly associated with mortality in univariate regression, this association disappeared once other risk factors were included. It appeared that the better treatment of age and weight (using fractional polynomials) was adequately capturing the additional risk due to prematurity (as these patients are almost all of low weight and young).

Given these considerations, and on the advice of the analysts (LR and CP), the expert panel agreed to exclude Down syndrome and prematurity from further consideration as risk factors for PRAiS 2.

The other four comorbidity and additional risk factor groups devised by the expert panel (see Table 5) remained significantly associated with higher mortality in all cross-validation multivariable logistic regressions and were included in the final model.

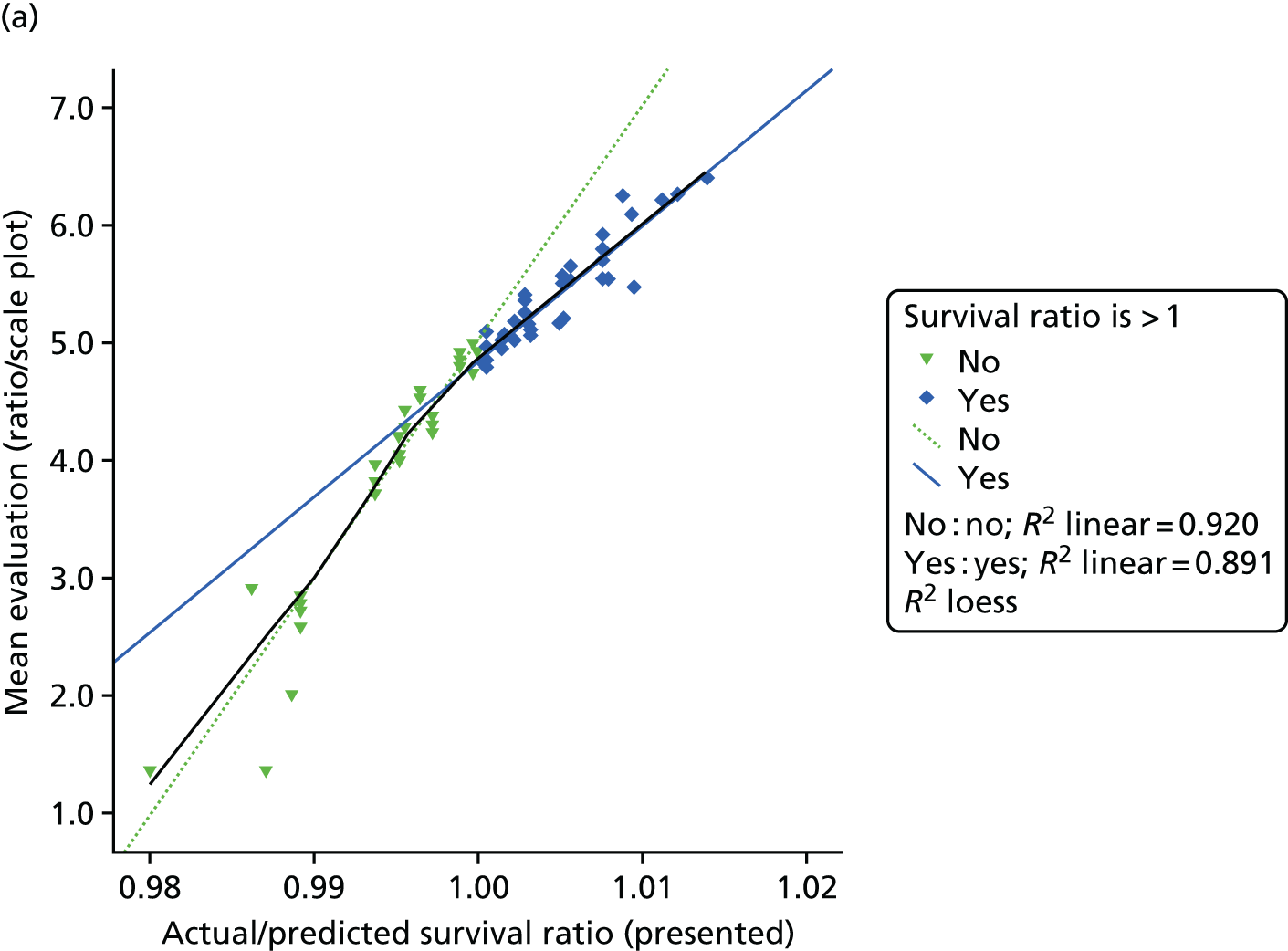

Year of surgery

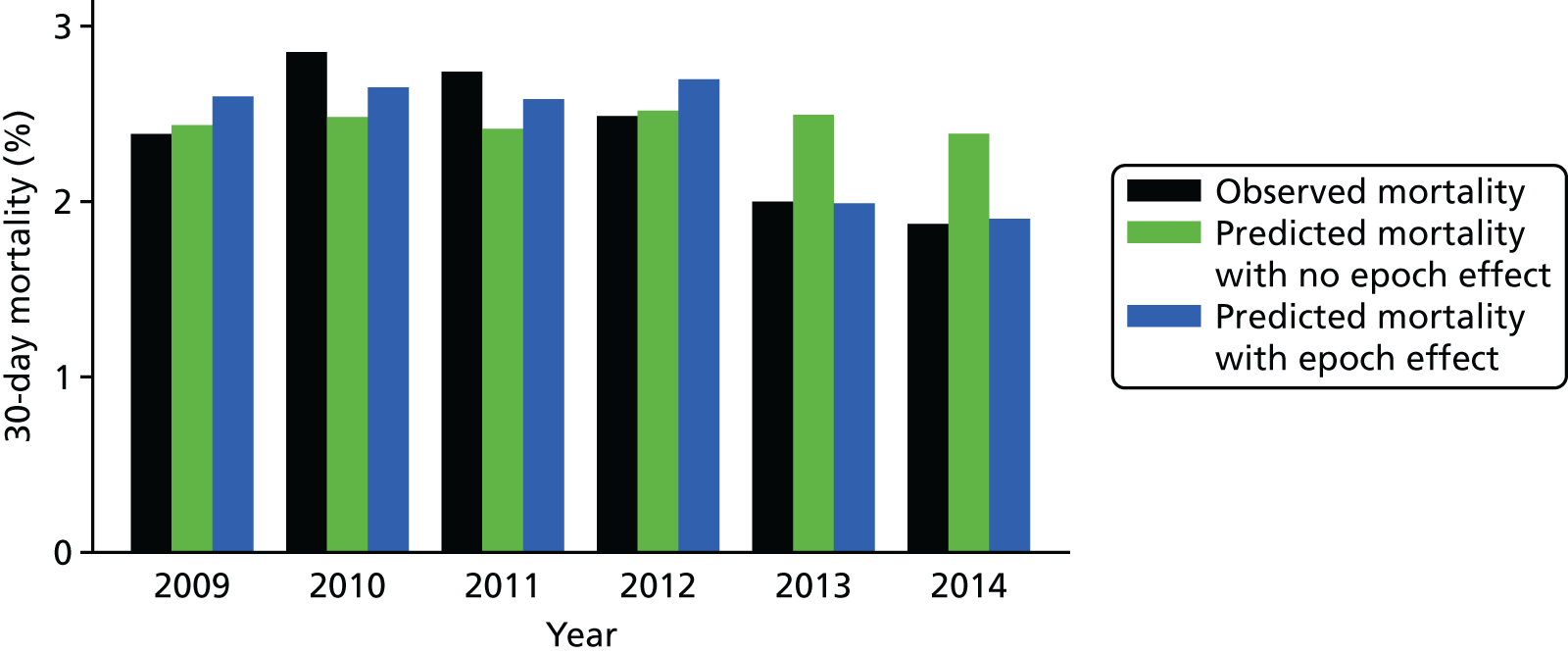

While exploring early versions of the PRAiS 2 risk model by calendar year from 2009 to 2014, we found a clear trend of overestimating risk in the later years, particularly after 2012. This was not unexpected, given the already observed decrease over time in mortality up to 2010.48 Raw mortality has continued to fall (albeit not evenly), with a particular reduction post 2012 (Figure 11). This trend suggested that any model calibrated on data from 2009 to 2014, without taking an epoch effect into account, would already be out of date when used prospectively by hospitals for local quality improvement and assurance and by national audit. To include year of surgery as a continuous or ordinal variable would, however, be to assume that improvement over time is inevitable, and to ignore ceiling and threshold effects. The epoch effect is likely to be caused by a combination of changes in outcomes and coding; however, the expert panel felt that there had been a real improvement in outcomes in recent years, which was reflected in an overall drop in the observed 30-day mortality rate. There was a step change in completeness of the comorbidity field in 2009, and, although there has been improvement since then, it has been more gradual. The need for accurate and currently applicable data had to be balanced against the benefit of using as much data as possible to fit an accurate model; using data from 2009 onwards with an epoch effect applied from 2013 provided an appropriate compromise. We therefore decided to explore including a binary epoch variable in the model indicating whether an episode occurred pre 2013 or from 2013 onwards.

FIGURE 11. Observed vs. predicted risk for the final model with and without the post 2012 flag.

After finalising the model, we reran it in the development set with and without the epoch variable. Figure 11 shows the difference between observed and predicted deaths from each model evaluated in the main data set, for each year, weighted by the number of episodes in that year. The model that includes the epoch effect provides a better fit to the mortality over time and the binary epoch flag is highly significant (p < 0.005; AIC with the flag of 4222 vs. AIC without the flag of 4229). We discussed the inclusion of this parameter with the expert panel, asking whether or not they thought that such an epoch effect was clinically plausible, that is, whether outcomes had genuinely improved over the past few years rather than the change being due to natural variation. The expert panel considered that there had been a real improvement in outcomes and recommended the inclusion of the epoch flag in the final model.
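The reported AIC values and p-value can be cross-checked with a short calculation. The sketch below assumes that the two models are nested, were fitted to the same episodes and differ only by the single epoch parameter. The report does not state how the p-value was obtained, so this likelihood ratio calculation is an illustration rather than a reconstruction of the authors' test, and the function name is hypothetical. Because AIC = 2k − 2 log L, one extra parameter gives a likelihood ratio statistic of (AIC without flag − AIC with flag) + 2.

```python
import math

def lr_test_one_extra_parameter(aic_without, aic_with):
    """Likelihood ratio statistic and p-value for a nested model pair that
    differs by exactly one parameter, recovered from the two AIC values.
    For one degree of freedom, P(chi-square > s) = erfc(sqrt(s / 2))."""
    statistic = (aic_without - aic_with) + 2
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value

# Rounded AIC values as quoted in the text.
statistic, p_value = lr_test_one_extra_parameter(aic_without=4229, aic_with=4222)
print("LR statistic:", statistic)
print("p-value:", round(p_value, 4))
```

Under these assumptions the statistic is 9 on one degree of freedom, which corresponds to a p-value below 0.005 and is therefore consistent with the significance level reported for the epoch flag.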

Specific procedure and diagnosis risk groups

As procedure and diagnosis are intrinsically linked, the expert panel considered options for adjusting the CART-generated diagnosis and specific procedure groups together.

Following the second expert panel meeting, the team of analysts considered a shortlist of candidate models and compared their performance using MADCAP charts and consideration of individual specific procedure and broader group frequency/mortality in the national data set.

The initial risk groups presented at the meeting are shown in Tables 6 and 7.

| Specific procedure group: model A | Frequency, n (%) | 30-day mortality (%) |
|---|---|---|
| Specific procedure risk group A | 1356 (6.9) | 9.5 |
| Norwood procedure (stage 1) | 589 (2.7) | 10.7 |
| HLHS hybrid approach | 44 (0.2) | 15.9 |
| TAPVC repair + arterial shunt | 10 (0.0) | 60.0 |
| Truncus and interruption repair | 15 (0.1) | 6.7 |
| Arterial switch + aortic arch obstruction repair (with/without VSD closure) | 81 (0.4) | 8.6 |
| Arterial shunt | 760 (3.5) | 7.8 |
| Specific procedure risk group B | 3364 (15.4) | 4.2 |
| Truncus arteriosus repair | 190 (0.9) | 5.3 |
| Interrupted aortic arch repair | 118 (0.5) | 5.1 |
| Repair of total anomalous pulmonary venous connection | 329 (1.5) | 5.2 |
| Arterial switch + VSD closure | 311 (1.4) | 2.6 |
| Isolated pulmonary artery band | 531 (2.4) | 4.0 |
| PDA ligation (surgical) | 1885 (8.6) | 4.1 |
| Specific procedure risk group C | 1996 (9.1) | 1.2 |
| Arterial switch (for isolated transposition) | 724 (3.3) | 1.5 |
| Isolated coarctation/hypoplastic aortic arch repair | 1236 (5.7) | 1.0 |
| Aortopulmonary window repair | 36 (0.2) | 0.0 |
| Specific procedure risk group D | 503 (2.3) | 5.0 |
| Mustard or Senning procedure | 16 (0.1) | 12.5 |
| Ross–Konno procedure | 69 (0.3) | 2.9 |
| Pulmonary vein stenosis procedure | 96 (0.4) | 6.3 |
| Pulmonary atresia VSD repair | 201 (0.9) | 4.5 |
| Tetralogy with absent pulmonary valve repair | 48 (0.2) | 4.2 |
| Unifocalisation procedure (with/without shunt) | 73 (0.3) | 5.5 |
| Specific procedure risk group E | 1672 (7.7) | 2.8 |
| Heart transplant | 152 (0.7) | 3.3 |
| Tricuspid valve replacement | 17 (0.1) | 5.9 |
| Mitral valve replacement | 164 (0.8) | 3.7 |
| Aortic valve repair | 292 (1.3) | 2.1 |
| Pulmonary valve replacement | 328 (1.5) | 1.8 |
| Aortic root replacement (not Ross) | 59 (0.3) | 3.4 |
| Cardiac conduit replacement | 167 (0.8) | 1.8 |
| Isolated RV–PA conduit construction | 400 (1.8) | 3.3 |
| Tricuspid valve repair | 93 (0.4) | 4.3 |
| Specific procedure risk group F | 2414 (11.1) | 1.7 |
| Bidirectional cavopulmonary shunt | 1156 (5.3) | 1.7 |
| Multiple VSD closure | 59 (0.3) | 1.7 |
| AVSD and tetralogy repair | 50 (0.2) | 2.0 |
| AVSD (complete) repair | 889 (4.1) | 1.0 |
| Cor triatriatum repair | 54 (0.2) | 5.6 |
| Supravalvar aortic stenosis repair | 102 (0.5) | 3.9 |
| Rastelli – REV procedure | 104 (0.5) | 3.8 |
| Specific procedure risk group G | 2253 (10.3) | 0.7 |
| Fontan procedure | 989 (4.5) | 1.0 |
| Aortic valve replacement – Ross | 151 (0.7) | 0.7 |
| Subvalvar aortic stenosis repair | 609 (2.8) | 0.3 |
| Mitral valve repair | 235 (1.1) | 0.4 |
| Sinus venosus ASD and/or PAPVC repair | 269 (1.2) | 0.4 |
| Specific procedure risk group H | 2152 (9.9) | 0.6 |
| AVSD (partial) repair | 396 (1.8) | 0.5 |
| Tetralogy of Fallot-type DORV repair | 1521 (7.0) | 0.7 |
| Vascular ring procedure | 235 (1.1) | 0.4 |
| Specific procedure risk group I | 2868 (13.1) | 0.1 |
| Anomalous coronary artery repair | 94 (0.4) | 0.0 |
| Aortic valve replacement – not Ross | 93 (0.4) | 0.0 |
| ASD repair | 941 (4.3) | 0.0 |