Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 14/19/19. The contractual start date was in March 2015. The final report began editorial review in March 2017 and was accepted for publication in July 2017. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Geoff Wong is a member of the National Institute for Health Research Health Technology Assessment programme Primary Care Panel, and is a panel member of the Health and Safety Executive External Peer Review Panel Evaluation Governance Group. During the course of the project Gill Westhorp worked as a consultant and consulting academic undertaking realist evaluations and reviews, and provided some capacity building and some PhD supervision on a commercial basis. These activities were not undertaken under the auspices of this project.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2017. This work was produced by Wong et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Background

Many of the problems confronting policy- and decision-makers, evaluators and researchers today are complex. For example, much health service demand results from the effects of smoking, suboptimal diets (including obesity), excessive alcohol, inactivity or adverse family circumstances (e.g. partner violence), all of which, in turn, have multiple causes operating at both individual and societal level. Interventions or programmes designed to tackle such problems are themselves complex, often having multiple, interconnected components delivered individually or targeted at communities or populations. Their success depends both on individuals’ responses and on the wider context in which people strive (or not) to live healthy lives. What works in one family, one organisation or one city may not work in another.

Similarly, the ‘wicked problems’ of contemporary health services research – how to improve quality and assure patient safety consistently across the service, how to meet rising need from a shrinking budget and how to realise the potential of information and communication technologies (which often promise more than they deliver) – require complex delivery programmes with multiple, interlocked components that engage with the particularities of context. What works in hospital A may not work in hospital B.

Designing and evaluating complex interventions is challenging. Randomised trials that compare ‘intervention on’ with ‘intervention off’, and their secondary research equivalent, meta-analyses of such trials, may produce statistically accurate statements (e.g. that the intervention works ‘on average’), but may leave us none the wiser about where to target resources or how to maximise impact.

Realist evaluation seeks to address these problems. It is a form of theory-driven evaluation, based on realist philosophy,1 that aims to advance understanding of why these complex interventions work, how, for whom, in what context and to what extent, as well as to explain the many situations in which a programme fails to achieve the anticipated benefit.

Realist evaluation assumes both that social systems and structures are ‘real’ (because they have real effects) and that human actors respond differently to interventions in different circumstances. To understand how an intervention might generate different outcomes in different circumstances, realism introduces the concept of mechanisms – which may be helpfully conceptualised as underlying changes in the reasoning of participants that are triggered in particular contexts.2 For example, a school-based feeding programme may work by relieving hunger in young children in a low-income rural setting where famine has produced overt nutritional deficiencies, but for teenagers in a troubled inner-city community where many young people are disaffected, it may work chiefly by making pupils feel valued and nurtured.3 What constitutes ‘working’ is also likely to be somewhat different in the two settings.

Realist evaluations have addressed numerous topics of central relevance in health services research, including what works and for whom when ‘modernising’ health services,4 introducing breastfeeding support groups,5 using communities of practice to drive change,6 involving patients and the public in research,7 how robotic surgery impacts on team-working and decision-making within the operating theatre8 and fines for delays in discharge from hospitals. 9 They have also been used in fields as diverse as international development, education, crime prevention and climate change.

What is realist evaluation?

Realist evaluation was developed by Pawson and Tilley in the 1990s,10 originally in the field of criminology, to address the question, ‘what works for whom in what circumstances and how?’ in criminal justice interventions. This early work highlighted the following points:

- Social programmes (closely akin to what health service researchers call complex interventions) are an attempt to address an existing social problem (i.e. to create some level of social change).
- Programmes ‘work’ by enabling participants to make different choices (although choice-making is always constrained by such things as participants’ previous experiences, beliefs and attitudes, opportunities and access to resources).
- Making and sustaining different choices may require a change in a participant’s reasoning (e.g. in their values, beliefs, attitudes or the logic they apply to a particular situation) and/or the resources (e.g. information, skills, material resources, support) they have available to them. Programmes provide opportunities and resources. The interaction between what the programme provides and the participant’s ‘reasoning’ is what enables the programme to ‘work’ and is known as a ‘mechanism’.
- Programmes work in different ways for different people (that is, the contexts within programmes can trigger different change mechanisms for different participants).
- The contexts in which programmes operate make a difference to the outcomes they achieve. Programme contexts include features such as social, economic and political structures, organisational context, programme participants, programme staffing, geographical and historical context, and so on. In realist terms, context does not simply denote spatial, geographical or institutional locations. Context refers, among other things, to the sets of ‘social rules, norms values and interrelationships’ that operate within these locations.10
- Some aspects of the context enable particular mechanisms to be triggered. Other aspects of the context may prevent particular mechanisms from being triggered. That is, there is always an interaction between context and mechanism, and that interaction is what creates the programme’s impacts or outcomes: context + mechanism = outcome.
- Because programmes work differently in different contexts and through different change mechanisms, they cannot simply be replicated from one context to another and automatically achieve the same outcomes. Theory-based understandings about ‘what works for whom, in what contexts, and how’ are, however, transferable.
- Therefore, one of the tasks of evaluation is to learn more about: ‘what works’, in what respects and to what extent, including intended and unintended outcomes; ‘for whom’, that is, for which subgroups of participants; ‘in which contexts’; and ‘what mechanisms are triggered by what programmes in what contexts’.

A realist evaluation approach assumes that programmes are ‘theories incarnate’. That is, whenever a programme is implemented, it rests on a theory about what ‘might cause change’, even though that theory may not be explicit. One of the tasks of a realist evaluation is, therefore, to make the theories underpinning a programme explicit, by developing clear hypotheses about how, and for whom, programmes might ‘work’. The implementation of the programme, and the evaluation of it, then tests those hypotheses. This means collecting data, not just about programme impacts or the processes of programme implementation, but about the specific aspects of context that might impact on programme intended and unintended outcomes, and about the specific mechanisms that might be creating change.

Pawson and Tilley10 also argue that a realist approach has particular implications for the methods required to evaluate a programme. For example, rather than comparing changes for participants who have undertaken a programme with a group of people who have not (as is done in randomised controlled or quasi-experimental designs), a realist evaluation compares context–mechanism–outcome configurations (CMOCs) within programmes. It may ask, for example, whether a programme works more or less well, and/or through different mechanisms, in different localities (and if so, how and why) or for different subgroups of the population. Furthermore, they argue that different stakeholders will have different information and understandings about how programmes are supposed to work and whether or not they in fact do so. Data collection processes (interviews, focus groups, questionnaires and so on) should be constructed to identify and collect the particular information that those stakeholder groups will have, and thereby to confirm, refute or refine theories about how and for whom the programme ‘works’.
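To make the idea of comparing CMOCs within a programme more concrete, the following is a minimal sketch in Python. It is an illustration only, not a method prescribed by Pawson and Tilley or used in this project. The class name `CMOC`, its fields, the helper `mechanisms_by_subgroup` and the outcome labels are all hypothetical; the contexts and mechanisms paraphrase the school-based feeding example given earlier, and the outcomes are placeholders rather than findings.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CMOC:
    """One context-mechanism-outcome configuration (hypothetical structure)."""
    subgroup: str
    context: str
    mechanism: str
    outcome: str


def mechanisms_by_subgroup(configs):
    """Group mechanisms by subgroup so they can be compared within one programme."""
    grouped = defaultdict(set)
    for config in configs:
        grouped[config.subgroup].add(config.mechanism)
    return dict(grouped)


# Illustrative configurations for a school-based feeding programme.
# Outcome labels are placeholders (assumptions), not reported results.
configs = [
    CMOC(
        subgroup="young children",
        context="low-income rural setting with overt nutritional deficiencies",
        mechanism="relieving hunger",
        outcome="placeholder outcome A",
    ),
    CMOC(
        subgroup="teenagers",
        context="troubled inner-city community with many disaffected young people",
        mechanism="feeling valued and nurtured",
        outcome="placeholder outcome B",
    ),
]

result = mechanisms_by_subgroup(configs)
for subgroup, mechanisms in result.items():
    print(subgroup, "->", sorted(mechanisms))
```

Holding each configuration as a single record keeps context, mechanism and outcome linked, which reflects the point that a realist explanation depends on the relationships between them rather than on separate lists of each.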

Realist evaluation is underpinned by a realist philosophy of science (‘realism’). 11 Philosophically speaking, realism can be thought of as sitting between positivism (‘there is a real external world which we can come to know directly through experiment and observation’) and constructivism (‘given that all we can know has been interpreted through human senses and the human brain, we cannot know for sure what the nature of reality is’). However, it is worth pointing out that this is not to suggest that ‘constructivism’ and ‘positivism’ represent opposite poles on the same continuum. Realism holds that there is a real social world but that our knowledge of it is amassed and interpreted (partially and/or imperfectly) via our senses and brains, and filtered through our language, culture and past experience. In other words, realism sees the human agent as operating in a wider social reality, encountering experiences, opportunities and resources, and interpreting and responding to the world within particular personal, social, historical and cultural frames. For this reason, different people respond differently to the same experiences, opportunities and resources. Hence, a programme (or, in the language of health services research, a complex intervention) aimed at improving health outcomes is likely to have different levels of success with participants in different contexts, and even in the same context at different times.

The need for standards and training materials in realist evaluation

Postings on the RAMESES JISCMail listserv (www.jiscmail.ac.uk/RAMESES; an e-mail list for discussing realist approaches) suggest that enthusiasm for realist evaluation and belief in its potential for application in many fields have outstripped the development and application of robust quality standards in the field. Two important prior publications have systematically shown that many so-called ‘realist evaluations’ were not applying the concepts appropriately and were, as a result, producing potentially misleading findings and recommendations.12,13

Pawson and Manzano-Santaella, in their paper, ‘A realist diagnostic workshop’, used case examples of flawed realist evaluations to highlight three common errors in such studies. 13 First, while it is possible to show associations and correlations in data from many types of evaluation, the focus of a realist evaluation should be to explore and explain why such associations occur. Second, they explain what may constitute valid data for use in realist evaluation. Producing a realist explanation is likely to require a mix of data types to provide explanations and support for the relationships within and between CMOCs. Third, realist explanations require CMOCs to be produced. Pawson and Manzano-Santaella note that some realist evaluations have presented finely detailed lists of contexts, mechanisms and outcomes, but have failed to produce a coherent explanation of how these contexts, mechanisms and outcomes were linked and related, or not related, to each other. Pawson and Manzano-Santaella called for greater emphasis on elucidating programme theory (the theory about what a programme or intervention is expected to do and, in some cases, how it is expected to work) expressed as CMO configurations.

Marchal et al.12 undertook a review of the realist evaluation literature in health systems research to quantify and analyse the field. They identified 18 realist evaluations and noted a range of challenges that arose for researchers. Absence of prior theoretical and methodological guidance appeared to have led to recurring problems in the realist evaluations they appraised. Marchal et al.12 noted that ‘[t]he philosophical principles that underlie realist evaluation are variably interpreted and applied to different degrees’. Researchers had interpreted concepts used in realist evaluation, such as ‘middle-range theory’, ‘mechanism’ and ‘context’, in different ways. This, they concluded, was often related to fundamental misunderstandings, and the rigour of the evaluation suffered as a result.

These two papers12,13 showed that, although realist evaluation had been embraced by parts of the health research community, it had also proven a challenging task for some who were unfamiliar with the practical application of realism. Both sets of authors called for methodological guidance to allay misunderstandings about the purpose, underlying philosophical assumptions, analytic concepts and methods of realist evaluation.

Chapter 2 Methods

Objectives

The project had both strategic and operational objectives, and, because it was funded through the health sector, the objectives were framed in relation to health. However, representatives from beyond the health sector were involved to ensure that the products were relevant to any realist evaluation.

Strategic objectives

- To develop quality standards, reporting guidance and resources and training materials for realist evaluation.
- To build capacity in health services research for supporting and assessing realist approaches to research.
- Acknowledging the unique potential of realist research to address the patient’s agenda (‘what will work for us in our circumstances?’), to produce resources and training materials for lay participants, and those seeking to involve them, in research.

Operational objectives

1. Recruit an interdisciplinary Delphi panel of, for example, researchers, support staff, policy-makers, patient advocates and practitioners with various types of experience relevant to realist evaluation.
2. Summarise the current literature and expert opinion on best practice in realist evaluation, to serve as a baseline/briefing document for the panel.
3. Run three rounds (and more if needed) of the online Delphi panel to generate and refine items for a set of quality standards and reporting guidance.
4. In parallel with the Delphi panel:
   a. provide ongoing advice and consultancy to up to 10 realist evaluations, including any funded by the National Institute for Health Research (NIHR), thereby capturing the ‘real-world’ problems and challenges of this methodology
   b. host the RAMESES JISCMail list on realist research, capturing relevant discussions about theoretical, methodological and practical issues
   c. feed problems and insights from 4a and 4b into the deliberations of the Delphi panel.
5. Write up the quality standards and guidance for reporting in an open-access journal.
6. Collate examples of learning/training needs for researchers, postgraduate students and peer reviewers in relation to realist evaluation.
7. Develop, deliver and refine resources and training materials for realist evaluation. Deliver three 2-day ‘realist evaluation’ workshops and three 2-day ‘training the trainers’ workshops for a range of audiences [including interested NIHR Research Design Service (RDS) staff].
8. Develop, deliver and refine information and resources for patients and other lay participants in realist evaluation. In particular, draft template information sheets and consent forms that could be adapted for ethics and governance activity.
9. Disseminate training materials and other resources, for example via public-access websites.

Overview of methods

We first provide a brief overview of the range of methods we used to meet the objectives set out above and of how they related to each other. The methods we used in this project closely resemble those we used in another project (the RAMESES project), which developed methodological guidance, reporting standards and training materials for realist and meta-narrative reviews. 14 We have previously published a protocol paper that outlined the methods we intended to use in this project. 15 The following methods sections outline, in more detail, specific aspects of the methods used.

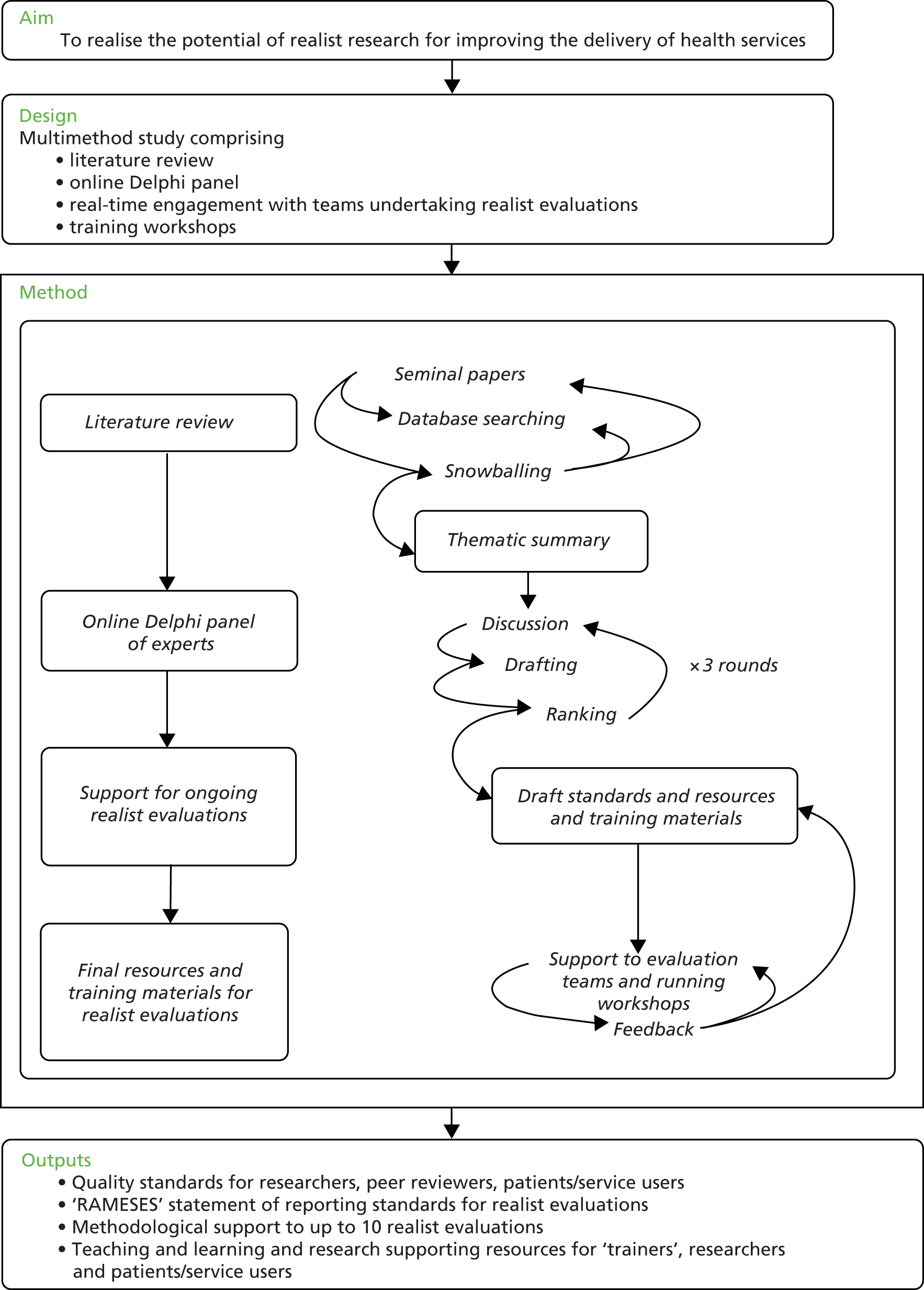

To fulfil operational objectives 1 and 2, we undertook a thematic review of the literature. Findings were supplemented by our content expertise and with feedback collated from presentations and workshops for researchers using or intending to use realist evaluation. We synthesised our findings into briefing materials on realist evaluation for the Delphi panel. We recruited members to the Delphi panel, which had wide representation from researchers, students, policy-makers, evaluators, theorists and research sponsors. We used the briefing materials to inform the Delphi panel in preparation for the task, so they could contribute to developing standards (objective 3). For the advice and consultancy to realist evaluations (objective 4a), we drew on our experience in conducting realist evaluations and developing and delivering education materials, but also on relevant feedback from the Delphi panel, an e-mail list on realist research approaches (www.jiscmail.ac.uk/RAMESES) and the evaluation teams we had supported in the past. To help us refine our reporting standards (objective 5), we captured methodological and other challenges that arose within the realist evaluation projects to which we provided methodological support. All of these sources fed into the reporting standards, quality standards and resources and training materials (objective 7). We did not set specific time points when we would refine the drafts of our project outputs. Instead, we iteratively and contemporaneously fed the data we captured into our draft reporting standards, quality standards and resources and training materials, making changes gradually. Only our Delphi panel ran within a specific time frame. The final guidance and standards were, therefore, the product of continuous refinements. To understand and develop information and resources for patients and other lay participants in realist evaluation (objective 8), we convened a group consisting of patients and the public. We addressed objective 9 through academic publications, online resources and delivery of presentations and workshops. The project was overseen by a Project Advisory Group, which comprised three independent members (see Acknowledgements). This group met with the project team on three occasions (May 2015, November 2015 and May 2016) and provided advice to the project team. Figure 1 provides a pictorial overview of how the different methods we used fed into each other.

FIGURE 1. Overview of study processes.

Details of literature search methods

With input from an expert librarian, we identified reviews, scholarly commentaries, models of good practice and examples of (alleged) misapplication of realist evaluation. To identify the relevant documents we refined and developed the search used by Marchal et al. 12 for a previous review on a similar topic, and also applied contemporary search methods designed to identify ‘richness’ when exploring complex interventions. 16,17

A search was conducted on 3 March 2015 across 10 databases. Free-text terms were selected to describe realist methods and thesaurus terms were used where available (see Appendix 1). The following databases were searched:

- Cumulative Index to Nursing and Allied Health Literature (CINAHL; via EBSCOhost)
- The Cochrane Library (Wiley Online Library)
- Dissertations & Theses database (ProQuest)
- EMBASE (via OvidSP)
- Education Resources Information Center (ERIC; via EBSCOhost)
- Global Health (via OvidSP)
- MEDLINE and MEDLINE In-Process & Other Non-Indexed Citations (via OvidSP)
- PsycINFO (via OvidSP)
- Scopus, Science Citation Index (SCI), Social Sciences Citation Index (SSCI) & Conference Proceedings Citation Index – Science (CPCI-S)
- Web of Science Core Collection (Thomson Reuters Corporation, New York, NY, USA).

A forward citation search was conducted via the Web of Science Core Collection for the following key text: Pawson R, Tilley N. Realistic Evaluation. London: Sage; 1997. 10

No language or study design filters were applied. We included any document that referred to or claimed to be a realist evaluation that used the approach as set out by Pawson and Tilley in their key publication, Realistic Evaluation.10 Documents were excluded if they were not realist evaluations, if they were published before 2000, or if they were book reviews, letters or comments. We set the cut-off point at 2000, as we assumed that evaluations based on Pawson and Tilley’s work would begin appearing in the literature from this point onwards. All citation screening was undertaken by Geoff Wong. The whole searching process, from start to the retrieval of all full-text documents, took approximately 1 month.

We decided that, because of the narrow purpose of our review and the number of relevant citations retrieved, we would stop analysing data when we had reached thematic saturation. As a strategy to manage the potential number of realist evaluations, we decided to start our analysis and synthesis from the most recent (i.e. from 2015) realist evaluations and work ‘backwards’. The decision on when thematic saturation had been reached was made in discussion with the whole project team. For both practical reasons (e.g. resource constraints) and academic ones (no new data), we stopped including new papers when there was agreement that saturation of themes had been reached. Thematic saturation was reached once the group agreed by consensus that the new realist evaluations identified contained no new themes or only subthemes that related to the three questions listed below in bullet points.

The thematic analysis was led by Geoff Wong, who undertook all stages of the review and shared findings with the rest of the project team so that discussion, debate and refinement of interpretations of the data could take place. Findings were shared by e-mail and, when necessary, face-to-face meetings were conducted to discuss interpretations of the data.

In undertaking our thematic analysis, we familiarised ourselves with the included evaluations to identify patterns in the data. Aware that the purpose of the review was to produce briefing documents for the Delphi panel, we considered the following questions:

- What is considered by experts in realist evaluation to be current best practice (and what is the range and diversity of such practice)?
- What do experts in realist evaluation, and other researchers who have undertaken a realist evaluation, believe counts as high quality and necessary to report?
- What issues do researchers struggle with (based on thematic analysis of postings on the RAMESES JISCMail list archive as well as the published literature)?

In the panels, we wanted to achieve a consensus on quality and reporting standards, and thus what we needed from our review of the literature were data to inform us on what might constitute quality in executing and reporting realist evaluations. We accepted that we might need to refine, discard or add additional questions and topic areas in order to better capture our analysis and understanding of the literature as these emerged from our reading of the evaluations.

Data were extracted to a Microsoft Excel® (Microsoft Corporation, Redmond, WA, USA) spreadsheet that we iteratively refined to capture the data needed to produce our briefing materials. This review was undertaken in a short time frame. The time taken from obtaining full-text documents to producing the final draft for circulation of the briefing documents was approximately 12 weeks. The output of this phase was a provisional summary that addressed the questions above and highlighted, for each question, the key areas of knowledge, ignorance, ambiguity and uncertainty. This was distributed to the Delphi panel (as our briefing document) as the starting point for its work.

Our purpose in identifying published reviews was not to complete a census of realist evaluations. We make no claims that the review we undertook was exhaustive; thus, we never intended that it should be published as a stand-alone piece of research. In other words, the purpose of our review was not to produce definitive summaries in response to the themes above but to prepare a baseline set of briefing materials for the Delphi panel, and to deliberate on and add to them in the next step. As such, the review we undertook would be best considered as being a rapid, accelerated or truncated thematic review. Such an approach will predictably produce limitations, and these are discussed in Chapter 4, Limitations.

Details of online Delphi process

We recruited Delphi panel members purposefully, to ensure that we had representation from evaluators, researchers, funders, journal editors and experts in realist evaluation. Individuals were recruited through relevant organisations and targeted e-mails, and also through personal contacts and recommendations. Those interested in participating were provided with an outline of the study, and individuals who indicated the greatest commitment and potential to balance the sample were selected.

The Delphi panel was run online using SurveyMonkey (SurveyMonkey, Palo Alto, CA, USA). Participants in round 1 were provided with the briefing materials we developed from the literature review and were invited to suggest what might be included in the reporting standards. Responses were analysed and fed into the design of questionnaire items for round 2.

In round 2 of the Delphi panel, participants were asked to rank each potential item twice on a 7-point Likert scale (1 = strongly disagree to 7 = strongly agree), once for relevance (i.e. ‘Should an item on this theme/topic be included at all in the guidance?’) and once for validity (i.e. ‘To what extent do you agree with this item as currently worded?’). Those who agreed that an item was relevant, but disagreed on its wording, were invited to suggest changes to the wording via a free-text comments box. In this second round, participants were again invited to suggest additional topic areas and items. We did not prespecify stop-points for establishing when consensus had been achieved. This was because we wanted to have the flexibility to return to the Delphi panel items that we judged might need further input. Although we accept that this may have enabled us to preferentially return some items and not others, we guarded against this by sending all Delphi panel members an end-of-round report detailing all the findings, changes made to the text and items to be returned to the next round. Panel members were invited to contact us should they have any concerns with the items that were not returned for re-rating, such as believing that the item should be returned to the panel, or disagreeing with wording changes.

Participants’ responses were collated and the numerical rankings were entered into an Excel spreadsheet. The response rate, average, mode, median and interquartile range (IQR) for each item were calculated. Items that scored low on relevance were omitted from subsequent rounds. We invited further online discussion on items that scored high on relevance but low on validity (indicating that a rephrased version of the item was needed) and on those for which there was wide disagreement about relevance or validity. The panel members’ free-text comments were also collated and analysed thematically.

Following analysis and discussion within the project team, we drew up a second list of statements that were circulated for ranking (round 3). Round 3 contained items for which consensus had not yet been reached. For items on which consensus had been reached, we did not return these to rounds 3, 4 or beyond for panel members to re-rate, even if we had made changes to the wording. This was because, when we undertook the RAMESES project, we had received informal feedback from the Delphi panel members indicating that round 2 of the online Delphi process had been very time-consuming. We were advised that to retain a high response rate for subsequent rounds, we should minimise the time commitment we asked of panel members. We planned that the process of collating responses, further e-mail discussion and re-ranking would be repeated until a maximum consensus was reached (rounds 4, 5, and so on). In practice, very few Delphi panels, online or face to face, go beyond three rounds because participants tend to ‘agree to differ’ rather than move towards further consensus. We used e-mail reminders to optimise our response rate from Delphi panel members. We considered consensus to be achieved when the median score was 6 or above.
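The per-item summary statistics and the consensus rule described above can be sketched as follows. This is a minimal illustration in Python using only the standard library, not the project's actual workflow (the analysis was carried out in an Excel spreadsheet). The function name `summarise_item`, the use of `None` to mark a missing response, the ‘inclusive’ quartile method and the example ratings are all assumptions made for illustration. The text does not state whether the median threshold was applied to relevance or to validity ratings, so the sketch applies it to whichever set of ratings is passed in.

```python
from statistics import mean, median, multimode, quantiles

# Threshold stated in the text: consensus when the median score is 6 or above.
CONSENSUS_MEDIAN = 6


def summarise_item(ratings, panel_size):
    """Summarise one item's 7-point Likert ratings.

    `ratings` holds one entry per panel member; None marks a non-response
    (an assumed convention). At least two responses are needed for the IQR.
    """
    scores = [r for r in ratings if r is not None]
    # Quartile method is an assumption; spreadsheets and packages differ.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    item_median = median(scores)
    return {
        "response_rate": len(scores) / panel_size,
        "mean": mean(scores),
        "mode": multimode(scores),  # list, because ratings can have ties
        "median": item_median,
        "iqr": q3 - q1,
        "consensus": item_median >= CONSENSUS_MEDIAN,
    }


# Hypothetical ratings for one item from an eight-member panel.
example = summarise_item([7, 6, 6, 5, 7, 6, None, 4], panel_size=8)
for name, value in example.items():
    print(f"{name}: {value}")
```

Reporting the mode as a list avoids silently picking one value when two ratings are equally common, which can matter when a panel is split.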

We planned to report residual non-consensus as such and to report the nature of the dissent described (if any). Making such dissent explicit tends to expose inherent ambiguities, which may be philosophical or practical, and acknowledges that not everything can be resolved; such findings may be of more use to those who use realist evaluation than a firm statement that implies that all tensions have been fixed. We used the findings from the Delphi panel to develop the reporting standards and methodological quality standards for realist evaluations.

Developing quality standards

The quality standards were designed to support professional development, to assist evaluators in assessing the quality of various aspects of the evaluation process and to assist reviewers with meta-evaluation (i.e. assessing the quality of evaluations).

To develop the quality standards, we drew on the following sources of data:

- free-text comments from participants and findings from the Delphi panels
- personal expertise as evaluators, researchers, peer reviewers and trainers in the field
- feedback from participants at workshops and training sessions run by members of the project team
- comments made on RAMESES JISCMail.

The data from the sources above were collated contemporaneously and discussed within the project team. Iterative cycles of discussion and revisions for content and clarity of the drafts were needed to develop the standards. Box 1 provides an illustration of how we drew on the data sources to produce the quality standards.

As evaluators, researchers and trainers in realist evaluation, we had noted that there was some confusion among researchers about the nature, need and role of realist programme theory (or theories) in realist evaluations. To develop the briefing materials and initial drafts of the reporting standards for realist evaluations, we searched for and analysed a number of published evaluations and noted that our impressions were well founded.

When providing methodological support for a realist evaluation, the importance of programme theory emerged again. One of the project team commented, ‘I felt the development of the initial “programme theory” pulled things together . . .’ In our Delphi process, we encouraged participants to provide free-text comments. These closely reflected the comments we received about the importance of programme theory.

Development of the quality criteria

We drew on our content expertise of the topic area and published methodological literature to develop the quality criteria. In addition, we found that some of our Delphi panel participants provided us with clear indications that supported the criteria we set. For example, we suggested that realist evaluations should develop a programme theory and that one that did not was ‘inadequate’. Delphi panel participants’ free-text comments echoed our suggestion:

Really important . . .

Initial programme theories will be clearly stated . . .

Many people’s efforts at realist evaluation fall at the programme theory stage . . .

We were also able to draw on the discussions that took place on JISCMail to support some of our criteria. For example, under ‘adequate’, we suggested that: ‘initial tentative programme theory (or theories) were identified and (as far as possible) described in realist terms (that is, in terms of the causal relationship between contexts, mechanisms and outcomes). These were refined as the evaluation progressed’.

As illustration, a comment from JISCMail that we drew upon to support this criterion was:

It’s good to read that you are planning to develop a programme theory. It may be that even before you start data collection that you may wish to develop an initial ‘best guess’ programme theory of the . . . intervention. Do not worry that it may be a best guess and has no CMOCs (i.e. is not particularly realist in nature) – it is a starting point. As the evaluation progresses your job is to gradually (iteratively) ‘convert’ it into a more detailed realist programme theory that has data to support any inferences you have made.

Developing, delivering and refining resources and training materials for realist evaluation

An important part of our project was to produce publicly accessible resources to support training in realist evaluations. We anticipated that these resources would need to be adapted, and perhaps supplemented, for different groups of learners, and that interactive learning activities would need to be added. We developed, and iteratively refined, draft learning objectives, example course materials and teaching and learning support methods. We drew on a range of sources to inform the content and format of our training materials as well as our experience as trainers and consultants on realist evaluations.

We sought out examples of the kinds of requests that are often made by evaluators for support on realist evaluation, for example using the rich archive of postings on the RAMESES JISCMail listserv from both novice and highly experienced practitioners, going back 3 years. We also proactively asked the list members for additional examples, and used our empirical data from the Delphi panel and our literature review to identify relevant examples. Finally, we sought input from UK RDS staff interested in realist evaluation to describe the kind of problems people bring to them, and where they feel that further guidance, support and resources are needed.

We used a thematic approach to classify examples into a list of problems and issues, each with a corresponding training need(s) and resources to address them. These were developed iteratively in regular discussions and meetings of the research team. Our goal was to develop a coherent and comprehensive curriculum for training realist researchers and for ‘training the trainers’.

Support and consultancy to realist evaluations

The support we offered to fellow evaluators and researchers using realist evaluations consisted of two overlapping and complementary levels:

- Online discussion and support via JISCMail for evaluators and researchers, at any level, interested in or undertaking a realist evaluation. When questions or issues were raised, either one of the project team or another list member would reply. Where necessary, summaries were made of discussions and clarification was provided by members of the project team.
- Direct requests for support and training. During the course of the study, members of the project team were frequently approached to provide methodological support to realist evaluation projects. The exact content, nature and duration of the support provided was discussed between the relevant team members to ensure that what was provided met the needs of those who requested the support.

Realist evaluation and ‘training the trainers’ workshops

Throughout the 24 months of the project, members of the project team offered training workshops to other evaluators, researchers and patient organisations on an as-requested basis. When asked to provide a workshop, the logistics and content of each workshop were discussed between the relevant project team member and the hosts.

For the ‘training the trainers’ workshops, we engaged with the NIHR’s RDS. We did this by e-mailing each regional service and also asking for expressions of interest via e-mail lists and personal contacts.

Developing, delivering and refining information and resources for patients and other lay participants in realist evaluation

To develop these resources, we convened a panel of lay participants with the help of the Patient and Public Involvement Co-ordinator from the Nuffield Department of Primary Care Health Sciences at the University of Oxford. We sought to invite lay participants who had been involved in research studies and came from a range of backgrounds and ages. During the panel, we sought to understand what lay participants might wish to know if they were to participate in a realist evaluation and provided examples of the potential materials for their consideration.

Chapter 3 Results

We produced four outputs related to realist evaluations for this project, namely:

- reporting standards
- methodological quality standards
- resources and training materials (for researchers, evaluators and lay participants)
- capacity building.

This chapter provides details of the results we obtained from the methods and approaches we used, and how they contributed to the content of our outputs.

Literature search

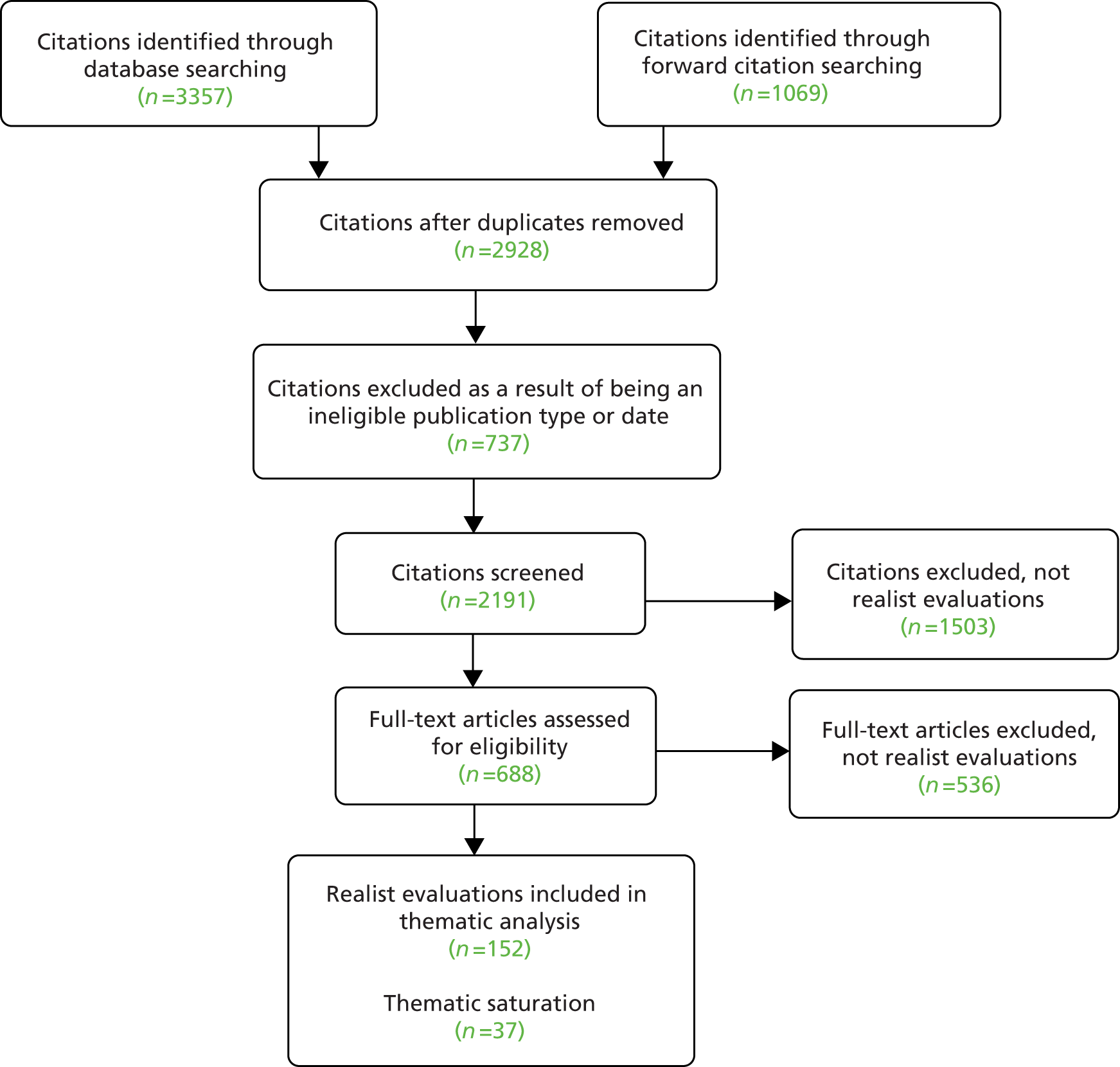

We searched 10 electronic databases from inception (where applicable) to March 2015 and, along with citation tracking, retrieved 4426 documents after removal of duplications. A total of 1498 duplicates were removed, along with a further 737 papers that did not meet our inclusion criteria. A total of 2191 papers were screened by title and abstract for inclusion with 1503 excluded at this stage. Figure 2 shows the disposition of the documents and Table 1 the number of citations returned for each database searched.

FIGURE 2.

Flow diagram outlining the disposition of documents.

| Database | Number of citations returned |
|---|---|
| CINAHL | 215 |
| The Cochrane Library | 26 |
| Dissertations & Theses | 147 |
| EMBASE | 484 |
| ERIC | 209 |
| Global Health | 94 |
| MEDLINE and MEDLINE In-Process & Other Non-Indexed Citations | 455 |
| PsycINFO | 533 |
| Scopus, SCI, SSCI and CPCI-S | 854 |
| Web of Science Core Collection | 340 |
| Citation tracking | |
| Pawson R, Tilley N. Realistic Evaluation. London: Sage; 1997 (reference 10) | 1069 |
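The counts reported for the literature search can be cross-checked with simple arithmetic. The sketch below is an illustrative check only, not part of the original analysis: the variable names are our own, and the figures are copied from the table of citations and from the accompanying text. It suggests that the per-source counts sum to the 4426 documents retrieved, and that removing the duplicates and the papers not meeting the inclusion criteria leaves the 2191 papers screened by title and abstract.

```python
# Citation counts per source, copied from the table of databases searched.
citations_by_source = {
    "CINAHL": 215,
    "The Cochrane Library": 26,
    "Dissertations & Theses": 147,
    "EMBASE": 484,
    "ERIC": 209,
    "Global Health": 94,
    "MEDLINE and MEDLINE In-Process & Other Non-Indexed Citations": 455,
    "PsycINFO": 533,
    "Scopus, SCI, SSCI and CPCI-S": 854,
    "Web of Science Core Collection": 340,
    "Citation tracking (Pawson and Tilley)": 1069,
}

# Figures quoted in the text of this section.
duplicates_removed = 1498
not_meeting_inclusion_criteria = 737

total_retrieved = sum(citations_by_source.values())
screened = total_retrieved - duplicates_removed - not_meeting_inclusion_criteria

print(total_retrieved, screened)  # prints "4426 2191"
```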

One of the project team (GWo) screened the abstracts and titles and included documents that claimed to be realist evaluations. In total, 152 documents were judged to be realist evaluations. Because of the narrow focus of our review of the literature on realist evaluations, as discussed in Chapter 2, Details of literature search methods, we worked ‘backward’ from 2015 to earlier years and sought to stop analysis at the point of thematic saturation. We achieved thematic saturation after analysis of 37 out of the 152 realist evaluations. Out of these realist evaluations, 32 (from years 2015 and 2014 inclusively) evaluated health-related topics, and five (from years 2015 to 2012 inclusively) evaluated non-health-related topics. We made this distinction to ensure that we analysed realist evaluations that covered a range of topic areas, as the approach is used in a broad range of topic areas beyond health research. Hence, Table 2 shows only the characteristics of the documents we analysed (evaluation title, type of document, year submitted for publication and topic area) and drew on to produce our briefing document for the Delphi panel.

| Study title (reference and reference number) | Year submitted | Topic area |
|---|---|---|
| Health-related realist evaluations | | |
| Grades in formative workplace-based assessment: a study of what works for whom and why (Lefroy et al.18) | 2015 | Education – medical (work-based assessment) |
| What works in ‘real life’ to facilitate home deaths and fewer hospital admissions for those at end of life?: results from a realist evaluation of new palliative care services in two English counties (Wye et al.19) | 2015 | Palliative care (home death and hospital admissions) |
| Faculty development for educators: a realist evaluation (Sorinola et al.20) | 2014 | Education – medical (faculty development) |
| Reducing emergency bed-days for older people? Network governance lessons from the ‘Improving the Future for Older People’ programme (Sheaff et al.21) | 2014 | Emergency bed-days for older people |
| Using interactive workshops to prompt knowledge exchange: a realist evaluation of a knowledge to action initiative (Rushmer et al.22) | 2014 | Interactive workshops for knowledge exchange |
| Can complex health interventions be evaluated using routine clinical and administrative data? – a realist evaluation approach (Riippa et al.23) | 2014 | Use of routinely collected data for evaluating complex interventions |
| Introducing Malaria Rapid Diagnostic Tests (MRDTs) at registered retail pharmacies in Ghana: practitioners’ perspective (Rauf et al.24) | 2014 | Implementation of malaria rapid diagnostic tests in retail pharmacies |
| Advancing the application of systems thinking in health: a realist evaluation of a capacity building programme for district managers in Tumkur, India (Prashanth et al.25) | 2014 | Capacity building programme for district health managers |
| Stroke patients’ utilisation of extrinsic feedback from computer-based technology in the home: a multiple case study realistic evaluation (Parker et al.26) | 2014 | Stroke rehabilitation using computer-based technology |
| Educational system factors that engage resident physicians in an integrated quality improvement curriculum at a VA hospital: a realist evaluation (Ogrinc et al.27) | 2014 | Quality improvement in resident physician training |
| Realistic nurse-led policy implementation, optimization and evaluation: novel methodological exemplar (Noyes et al.28) | 2014 | Policy implementation |
| Putting context into organizational intervention design: using tailored questionnaires to measure initiatives for worker well-being (Nielsen et al.29) | 2014 | Work well-being |
| Mechanisms that support the assessment of interpersonal skills: a realistic evaluation of the interpersonal skills profile in pre-registration nursing students (Meier et al.30) | 2014 | Interpersonal skills assessment |
| Factors affecting the successful implementation and sustainability of the Liverpool Care Pathway for dying patients: a realist evaluation (McConnell et al.31) | 2014 | Palliative care – Liverpool Care Pathway |
| Towards a programme theory for fidelity in the evaluation of complex interventions (Masterson-Algar et al.32) | 2014 | Implementation fidelity – complex rehabilitation intervention for patients with stroke |
| Action learning sets in a nursing and midwifery practice learning context: a realistic evaluation (Machin and Pearson33) | 2014 | Education – action learning sets in nursing |
| Advancing the application of systems thinking in health: realist evaluation of the Leadership Development Programme for district manager decision-making in Ghana (Kwamie et al.34) | 2014 | Leadership development programme |
| Adolescents developing life skills for managing type 1 diabetes: a qualitative, realistic evaluation of a guided self-determination-youth intervention (Husted et al.35) | 2014 | Chronic disease management – use of guided self-determination in diabetes |
| The management of long-term sickness absence in large public sector healthcare organisations: a realist evaluation using mixed methods (Higgins et al.36) | 2014 | Sickness absence – long-term sickness absence in health-care workers |
| General practitioners’ management of the long-term sick role (Higgins et al.37) | 2014 | Sickness absence – GPs’ management of long-term sickness absence |
| More than a checklist: a realist evaluation of supervision of mid-level health workers in rural Guatemala (Hernández et al.38) | 2014 | Supervision of mid-level health workers |
| Dialysis modality decision-making for older adults with chronic kidney disease (Harwood and Clark39) | 2014 | Treatment decision-making – kidney dialysis |
| Housing, health and master planning: rules of engagement (Harris et al.40) | 2014 | Housing regeneration |
| Public involvement in research: assessing impact through a realist evaluation (Evans et al.41) | 2014 | Public involvement in research |
| Academic practice–policy partnerships for health promotion research: experiences from three research programs (Eriksson et al.42) | 2014 | Health promotion – collaboration between academics, practitioners and policymakers |
| Schools’ capacity to absorb a Healthy School approach into their operations: insights from a realist evaluation (Deschesnes et al.43) | 2014 | Health in schools |
| A realist evaluation of a community-based addiction program for urban aboriginal people (Davey et al.44) | 2014 | Substance use – First Nations, Inuit and Métis populations |
| Community resistance to a peer education programme in Zimbabwe (Campbell et al.45) | 2014 | Health education – peer education of HIV |
| The transformative power of youth grants: sparks and ripples of change affecting marginalised youth and their communities (Blanchet-Cohen and Cook46) | 2014 | Youth empowerment |
| The SMART personalised self-management system for congestive heart failure: results of a realist evaluation (Bartlett et al.47) | 2014 | Chronic disease management – use of technology for self-management of heart failure |
| Levels of reflective thinking and patient safety: an investigation of the mechanisms that impact on student learning in a single cohort over a 5 year curriculum (Ambrose and Ker48) | 2014 | Education – teaching patient safety to medical students |
| People and teams matter in organizational change: professionals’ and managers’ experiences of changing governance and incentives in primary care (Allan et al.49) | 2014 | Health services management – organisational change |
| Non-health-related realist evaluations | | |
| Into the void: a realist evaluation of the eGovernment for You (EGOV4U) project (Horrocks and Budd50) | 2015 | E-services designed to tackle social exclusion and disadvantage |
| Evaluating Criminal Justice Interventions in the Field of Domestic Violence – A Realist Approach (Taylor51) | 2014 | Criminal justice – domestic violence interventions |
| How to use programme theory to evaluate the effectiveness of schemes designed to improve the work environment in small businesses (Olsen et al.52) | 2012 | Work environment in small businesses |
| Improving outcomes for a juvenile justice model court: a realist evaluation (Kazi et al.53) | 2012 | Criminal justice – juvenile justice model court |
| A model for design of tailored working environment intervention programmes for small enterprises (Hasle et al.54) | 2012 | Work environment in small enterprises |

Because many evaluation reports are not published and our search strategy focused on published materials, the great majority of documents we analysed were journal articles about evaluations rather than complete evaluation reports. We acknowledge that full evaluation reports may have provided greater detail. However, journal articles usually require a description of both methods and findings, our focus was methodological, and the literature review served only to identify issues to refer to the Delphi panel; we therefore remain confident that the sample was adequate for the task.

We conducted a thematic analysis guided, initially, by the three questions set out above (see Chapter 2, Details of literature search methods) to produce the briefing documents for the realist evaluation Delphi panel (see Appendix 2). All the data we extracted were either entered into an Excel spreadsheet or written up directly into a draft of our briefing document. Of the three questions set out above, two refer to what experts in realist evaluation and researchers who have undertaken a realist evaluation consider to be best practice and high quality. Much of this information was contained in the documents listed in Table 2, but we also had to supplement our understanding by drawing on more methodological documents. 1,10,12,13

Our first question [what is considered by experts to be current best practice (and what is the range and diversity of such practice)?] related to perceptions of methodological rigour in the execution of realist evaluations. Addressing this question required the most immersion and analysis. With this question, we wanted to understand expert opinions about best practice to produce a high-quality realist evaluation. As a project team, we had our own ideas, but wanted to explore whether or not these were reflected in the included evaluations. We first had to decide whether or not we could agree among ourselves on which of the evaluations we analysed were of high, mixed or low quality. To do this, each evaluation was read in detail (GWo) and selected characteristics were extracted into an Excel spreadsheet. The headings on this spreadsheet were study name, type of document, year submitted, country, topic area, purpose of evaluation, understand realism?, methodological comments, lessons for methods, methods for reporting and challenges reported by reviewers’ notes.

Once completed, the spreadsheet and the full-text documents were circulated to the rest of the project team. Through e-mail discussion and debate, a consensus was achieved on which studies were deemed high, mixed or low quality. The next step in the process was to re-read each of the included evaluations to determine which evaluation practices and processes were necessary to lead to a high-quality evaluation. Later on in the project, to develop reporting standards for realist evaluations, we used these findings to inform what needed to be reported to ensure that sufficient information was available to the reader, so that they were able to make judgments about methodological rigour. This addressed our second question (what do experts and other researchers believe count as high quality and necessary to report?). Again, this was led by Geoff Wong, and each issue that needed addressing was added to a draft of the briefing documents. To further strengthen the inferences we made on issues that needed to be addressed and, hence, included in our briefing materials, we looked back through the archives of the RAMESES JISCMail e-mail listserv to identify if the issues we had included had also been raised by other researchers. We also drew on the methodological issues raised in methods papers on realist evaluations in a similar way. 12,13

The drafts of briefing materials were circulated to the project team and a consensus was achieved through discussion and debate. The briefing materials were the result of four rounds of revisions.

The contents of our briefing materials were as follows:

- terminology
- philosophical basis of realist evaluation
- classification
- title
- rationale for using realist evaluation
- methods
- data collection methods
- programme theory
- findings
- conclusion
- recommendations.

The complete briefing document circulated to the Delphi panel for realist evaluations can be found in Appendix 2.

Delphi panel

We ran the Delphi panels between May 2015 and January 2016. We recruited 35 panel members from 27 organisations across six countries. The panel members comprised evaluators of health services (23), public policy (nine), nursing (six), criminal justice (six), international development (two), contract evaluators (three), policy- and decision-makers (two), funders of evaluations (two) and publishing (two) (note that some individuals had more than one role).

We started round 1 in June 2015 and circulated the briefing materials document to the panel. We sent two chasing e-mails to all panel members, and within 8 weeks all panel members who indicated that they wanted to provide comments had done so. In round 1 of the Delphi panel, 33 members provided suggestions for items that should be included in the reporting standards and/or comments on the nature of the standards themselves. We used the suggestions from the panel members and the briefing document as the basis of the online survey for round 2.

Round 2 started at the end of September 2015 and ran until early November 2015. Panel members were invited to complete our online survey and asked to rate each potential item for relevance and validity. A copy of this survey can be found in Appendix 3. Where needed, up to three reminder e-mails were sent to the panel members. For round 2, the panel was presented with 22 items to rank. The overall response rate across all items for this round was 76%. Once the panel had completed its survey, we analysed their ratings for relevance and validity. Full details of the round 2 results can be found in Table 3. We also produced a post-round briefing document from round 2, which detailed for each item:

- the response rate
- mode
- median
- IQR
- the action we took for each item based on the panel’s ratings
- an anonymised list of all the free-text comments made.
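As an illustration of the per-item summary listed above, the following minimal sketch computes the response rate, mode, median and IQR for one item and applies a median threshold of 6 for consensus. It is not the project's own analysis code. The function name and the ratings are hypothetical, and the quartile method (`method="inclusive"` in Python's `statistics.quantiles`) is an assumption, because the report does not state how the IQR was calculated.

```python
from statistics import median, multimode, quantiles


def summarise_item(ratings, panel_size, consensus_threshold=6):
    """Summarise one Delphi item from the ratings of the panel members who responded.

    ratings: list of integer scores (hypothetical data in the example below).
    panel_size: total number of panel members invited to rate the item.
    """
    # Quartiles by linear interpolation over the observed data;
    # the choice of the 'inclusive' method is an assumption.
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    item_median = median(ratings)
    return {
        "response_rate_pct": round(100 * len(ratings) / panel_size),
        "mode": multimode(ratings),  # list, because ties are possible
        "median": item_median,
        "iqr": q3 - q1,
        "consensus": item_median >= consensus_threshold,
    }


# Hypothetical ratings from 8 responders on a panel of 10.
summary = summarise_item([7, 7, 6, 5, 7, 6, 7, 4], panel_size=10)
print(summary)
```

With these made-up ratings, the median is 6.5, so the item would count as having reached consensus under the threshold used here.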

| Item | Relevance: response rate, n/N (%) | Relevance: mode | Relevance: median | Relevance: IQR | Validity: response rate, n/N (%) | Validity: mode | Validity: median | Validity: IQR |
|---|---|---|---|---|---|---|---|---|
| Title | 28/35 (80) | 7 | 6.5 | 2.25 | 28/35 (80) | 6 | 6 | 2 |
| Summary or abstract | 28/35 (80) | 7 | 6 | 1 | 28/35 (80) | 6 | 5.5 | 3 |
| Rationale for evaluation | 28/35 (80) | 7 | 6 | 1 | 28/35 (80) | 6 | 5 | 2.25 |
| Programme theory | 27/35 (77) | 7 | 7 | 0 | 27/35 (77) | 7 | 7 | 2 |
| Evaluation questions, objectives and focus | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 7 | 6 | 3 |
| Ethics | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 7 | 7 | 1 |
| Rationale for using realist evaluation | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 7 | 6 | 1.5 |
| Protocol or evaluation design | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 7 | 6 | 2.5 |
| Setting(s) of the evaluation | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 6 | 6 | 2 |
| Nature of the programme being evaluated | 27/35 (77) | 7 | 7 | 1 | 27/35 (77) | 7 | 6 | 3 |
| Recruitment process and sampling strategy | 26/35 (74) | 7 | 7 | 1 | 26/35 (74) | 7 | 6 | 2 |
| Data-gathering approachesa | 26/35 (74) | 7 | 7 | 0.75 | 26/35 (74) | 7 | 6 | 1.75 |
| Data documentationa | 26/35 (74) | 6 | 6 | 1.75 | 26/35 (74) | 5 | 5.5 | 1 |
| Data analysis | 26/35 (74) | 7 | 7 | 0.75 | 26/35 (74) | 7 | 6 | 1.75 |
| Processes used to ensure qualityb | 26/35 (74) | 7 | 6 | 3 | 26/35 (74) | 7 | 5 | 2.75 |
| Characteristics of participants | 26/35 (74) | 7 | 6.5 | 1 | 26/35 (74) | 7 | 6 | 2 |
| Main findings | 26/35 (74) | 7 | 7 | 0.75 | 26/35 (74) | 7 | 6 | 1 |
| Summary of findings | 26/35 (74) | 7 | 7 | 1 | 26/35 (74) | 6 | 6 | 1 |
| Strengths, limitations and future research directions | 26/35 (74) | 7 | 6.5 | 1 | 26/35 (74) | 6 | 6 | 1 |
| Comparison with existing literature | 26/35 (74) | 7 | 7 | 1 | 26/35 (74) | 7 | 6.5 | 1 |
| Conclusion and recommendations | 26/35 (74) | 7 | 7 | 1 | 26/35 (74) | 7 | 6 | 1.75 |
| Funding | 26/35 (74) | 7 | 7 | 1 | 26/35 (74) | 7 | 7 | 1 |
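The overall round 2 response rate of 76% can be related to the per-item figures in the table above. The report does not say how the overall figure was calculated, so the sketch below is only one plausible reading: it pools the responses across all 22 items and divides by the maximum possible number of responses. The grouping of items by number of responders is read off the table, and the variable names are our own.

```python
# (number of responders, number of items with that many responders),
# read off the round 2 table: 3 items at 28/35, 7 at 27/35 and 12 at 26/35.
responders_and_item_counts = [(28, 3), (27, 7), (26, 12)]
panel_size = 35

n_items = sum(count for _, count in responders_and_item_counts)
total_responses = sum(
    responders * count for responders, count in responders_and_item_counts
)

# Pooled response rate across all items, as a percentage.
overall_response_pct = 100 * total_responses / (n_items * panel_size)

print(n_items, round(overall_response_pct))  # prints "22 76"
```

Because every item has the same denominator of 35, pooling the responses in this way gives the same result as averaging the per-item response rates.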

Based on the rankings and free-text comments, our analysis indicated that two items needed to be merged and one item removed. Minor revisions were made to the text of the other items based on the rankings and free-text comments. After discussion within the project team, we judged that only one item (the newly created merged item) needed to be returned to round 3 of the Delphi panel. Prior to the start of round 3, the post-round briefing document from round 2 was circulated to panel members. We did not receive any communication indicating that the panel members disagreed with the actions we undertook in response to their ratings and free-text comments from round 2.

For round 3, we asked the panel to consider again only the single item for which a consensus had not been reached. We produced an online survey for round 3 and, again, asked them to rate the item for relevance and validity. To keep the panel updated, we provided it with our post-round briefing document from round 2 (available on request from authors). Round 3 ran from late November 2015 to early January 2016. A copy of this survey can be found in Appendix 4. Two reminder e-mails were sent to the panel members. Once the panel had completed its survey, we analysed its ratings for relevance and validity (Table 4). The response rate for the single item included in round 3 was 80%. We produced a post-round briefing document from round 3 and circulated this to all our panel members for the sake of completeness (available on request from authors). We did not receive any communication indicating that the panel members disagreed with the actions we undertook in response to their ratings and free-text comments from round 3. Overall, consensus was reached within three rounds on both the content and wording of a 20-item reporting standard.

| Item | Relevance: response rate, n/N (%) | Relevance: mode | Relevance: median | Relevance: IQR | Validity: response rate, n/N (%) | Validity: mode | Validity: median | Validity: IQR |
|---|---|---|---|---|---|---|---|---|
| Data collection methods | 28/35 (80) | 7 | 7 | 1 | 28/35 (80) | 7 | 6 | 2.25 |

Using the data we gathered from the three rounds of the Delphi panel, we produced a final set of items to be included in the reporting standards for realist evaluations. These were published in June 2016 in BMC Medicine, an open-access journal. 55 Within this publication, we provided an ‘example’ for each standard; that is, an example of good practice drawn from published evaluations. Our reporting standards have also been accepted and listed on the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) network, a resource centre for good reporting of health research studies (www.equator-network.org).

Developing quality standards

We developed quality standards for two user groups, which are set out using rubrics:

- evaluators and peer reviewers of realist evaluations
- funders or commissioners of realist evaluations.

Quality standards for evaluators and peer reviewers of realist evaluations

By peer reviewers, here, we specifically refer to individuals who have been asked to appraise the quality of completed evaluations. For each aspect of an evaluation that requires a judgement about quality, we have provided a brief description of why the process is important, as well as descriptors of criteria against which a decision about quality might be made. The quality standards for evaluators and peer reviewers of realist evaluations are set out in Table 5.

| Quality standards for realist evaluation (for evaluators and peer reviewers) | ||||

|---|---|---|---|---|

| 1. The evaluation purpose | ||||

| Realist evaluation is a theory-driven approach, rooted in a realist philosophy of science, which emphasises an understanding of causation and how causal mechanisms are shaped and constrained by context. This makes it particularly suitable for evaluations of certain topics and questions, for example complex interventions and programmes that involve human decisions and actions. A realist evaluation question contains some or all of the elements of ‘what works, how, why, for whom, to what extent and in what circumstances, in what respect and over what duration?’ and applies a realist logic to address the question(s). Above all, realist evaluation seeks to answer ‘how’ and ‘why?’ questions. Realist evaluation always seeks to explain. It assumes that programme effectiveness will always be conditional and is oriented towards improving understanding of the key contexts and mechanisms contributing to how and why programmes work | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| A realist approach is suitable for the purposes of the evaluation. That is, it seeks to improve understanding of the core questions for realist evaluation |

|

|

Adequate plus:

|

Good plus:

|

| The evaluation question(s) are framed to be suitable for a realist evaluation | The evaluation question(s) are not structured to reflect the elements of realist explanation. For example, the question(s):

|

The evaluation question(s) include a focus on how and why outcomes were generated in the evaluand, and contained at least some of the additional elements:for whom, in what contexts, in what respects, to what extent and over what durations | Adequate plus:

|

Good plus:

|

| 2. Understanding and applying a realist principle of generative causation in realist evaluations | ||||

| Realist evaluations are underpinned by a realist principle of generative causation – underlying mechanisms that operate (or not) in certain contexts to generate outcomes: Context + Mechanism = Outcome (CMO). Realist evaluations aim to understand how different mechanisms generate different outcomes in different contexts. This intent influences everything from the type of evaluation question(s) to an evaluation’s design (e.g. the construction of a realist programme theory, recruitment process and sampling strategy, data collection methods, data analysis, to recommendations) | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| A realist principle of generative causation is applied | Significant misunderstandings of realist generative causation are evident. Common examples include the following:

|

Some misunderstandings of realist generative causation are evident, but the overall approach is consistent enough that a recognisably realist analysis results from the process | Assumptions and methods used throughout the evaluation are consistent with a realist generative causation | Good plus:

|

| 3. Constructing and refining a realist programme theory or theories | ||||

|

At an early stage in the evaluation, the main ideas that went into the making of an intervention, programme or policy (the programme theory or theories, which may or may not be realist in nature) are surfaced and made explicit. An initial tentative programme theory (or theories) is constructed, which sets out how and why an intervention, programme or policy is thought to ‘work’ to generate the outcome(s) of interest. Where possible, this initial tentative theory (or theories) will be progressively refined over the course of the evaluation Over the course of the evaluation, if needed, programme theory (or theories) are ‘re-cast’ in realist terms (describing the contexts in which, populations for which, and main mechanisms by which, particular outcomes are, or are expected to be, achieved). Ideally, the programme theory is articulated in realist terms prior to data collection in order to guide the selection of data sources about context, mechanism and outcome. However, in some cases, this will not be possible and the product of the evaluation will be an initial realist programme theory |

||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| An initial tentative programme theory (or theories) is identified and developed. Programme theory is ‘re-cast’ and refined as realist programme theory | Programme theory (or theories) are:

|

|

Adequate plus:

|

Good plus:

|

| 4. Evaluation design | ||||

| Descriptions and justifications of what is planned in the evaluation design, in what order and why should be clearly articulated. Realist evaluations are ideally adaptive – that is, the evaluation question(s), scope and/or design may be adapted over the course of the evaluation to ‘test’ (confirm, refute or refine) aspects of the programme theory as it evolves. If changes are made to the evaluation design, these should be clearly described and justified. At the start of an evaluation, where possible, any changes that might be needed should be anticipated and contingencies planned | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| The evaluation design is described and justified |

|

|

Adequate plus:

|

Good plus:

|

| Ethical clearance is obtained if required |

|

|

|

Specific implications of realist methodology are explained in the proposal for ethics approval [e.g. the need to link data across context, mechanism and outcome; the role of the evaluator(s) in relation to other stakeholders and the programme] and specific strategies to address those implications are included |

| 5. Data collection methods | ||||

| In a realist evaluation, a broad range of data, and a range of methods used to collect them, increases the robustness of the theory ‘testing’ process. Data will be required for all of context, mechanism and outcome, and to inform the relationships between them. Data collection methods should be adequate to capture not only intended, but also (as far as possible) unintended, outcomes (both positive and negative) and the context–mechanism interactions that generated them. Realist evaluation is usually multimethod (i.e. it uses more than one method to gather data). Where possible, data about outcomes should be triangulated (at least using different sources, if not different types, of information) | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| Data collection methods are suitable for capturing the data needed in a realist evaluation | Within the realist evaluation project:

|

|

Adequate plus:

|

|

| 6. Sample recruitment strategy | ||||

| In a realist evaluation, data are required for contexts, mechanisms and outcomes. One key source is respondents or key informants. Data are used to develop and refine theory about how, for whom and in what circumstances programmes generate their outcomes. This implies that any processes used to invite or recruit individuals need to identify an adequate sample of individuals who are able to provide information about contexts, mechanisms, outcomes and/or programme theory | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| The respondents or key informants recruited are able to provide sufficient data needed for a realist evaluation |

|

Recruitment is:

|

Adequate plus:

|

|

| 7. Data analysis | ||||

|

Data analysis in realist evaluation is not a specific method but a way of interrogating programme theory (or theories) with data, and a way of using theory to understand patterns in data. In other words, data analysis is a way of teasing out what works, for whom, in what contexts, in what respects, over what duration and so on. In a realist evaluation, where possible, the analysis process should occur iteratively. The overall approach to data analysis is retroductive (i.e. it moves between inductive and deductive processes, includes and tests researcher ‘hunches’ and aims to provide the best possible explanation of acknowledged-to-be-incomplete data). The processes used to analyse the data and integrate them into one or more realist programme theories should be consistent with a central principle of realism – namely generative causation. How these data are then used to further develop, confirm, refute or refine one or more programme theories should be clearly described and justified |

||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| The overall approach to analysis is retroductive |

|

|

Adequate plus:

|

Good plus:

|

| Data analysis processes applied to gathered data are consistent with a realist principle of generative causation |

|

|

Adequate plus:

|

Good plus:

|

| Criterion | Inadequate | Adequate | Good | Excellent |

| A realist logic of analysis is applied to develop and refine theory | The analysis does not:

|

|

Adequate plus:

|

Data analysis is iterative over the course of the evaluation, with earlier stages of analysis being used to refine programme theory and/or refine evaluation design for subsequent stages |

| 8. Reporting | ||||

| Realist evaluations may be reported in multiple formats – detailed reports, summary reports, articles, websites and so on. Reports should be consistent with the RAMESES II reporting standards for realist evaluations (see https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-016-0643-1) | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| The evaluation is reported using the items listed in the RAMESES II reporting standard for realist evaluations | Key items are missing. For example:

|

Most items in the RAMESES II reporting standards for realist evaluations are reported. In particular:

|

|

Good plus:

|

| Findings and implications are clear and reported in formats that are consistent with realist assumptions |

|

|

Adequate plus:

|

Good plus:

|

As an illustrative example of how to use the layout of these quality standards, consider the quality standard for ‘4. Evaluation design’. This aspect of the evaluation could be judged as ‘adequate’ if ‘what was planned in the evaluation design, in what order and why was described and justified in detail’. For it to be judged as ‘good’, we recommend that, as well as fulfilling the criteria for adequate (hence our use of the term ‘adequate plus’), the evaluation would need to fulfil, among other things, the criterion that ‘the design “tested” multiple aspects of programme theory’.
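The cumulative reading of ‘adequate plus’ and ‘good plus’ can be illustrated with a short sketch. This is only one possible way of expressing the layout, not a scoring procedure prescribed by the quality standards: the names `Level` and `judge`, and the idea of recording each level's criteria as a single true/false value, are assumptions made for illustration.

```python
from enum import IntEnum


class Level(IntEnum):
    """Rating columns used in the quality standards tables."""
    INADEQUATE = 0
    ADEQUATE = 1
    GOOD = 2
    EXCELLENT = 3


def judge(met: dict[Level, bool]) -> Level:
    """Return the highest level whose criteria, and those of every
    lower level, have been judged as fulfilled (hypothetical helper).

    `met` maps a level to the assessor's judgement of whether the
    criteria listed in that column are fulfilled.
    """
    result = Level.INADEQUATE
    for level in (Level.ADEQUATE, Level.GOOD, Level.EXCELLENT):
        # 'Adequate plus' / 'good plus': stop at the first unmet level,
        # because each higher level builds on the ones below it.
        if not met.get(level, False):
            break
        result = level
    return result


# Example for '4. Evaluation design': the design is described and
# justified (adequate) and 'tested' multiple aspects of programme
# theory (good), but the criteria for excellent are not fulfilled.
design = judge({Level.ADEQUATE: True, Level.GOOD: True, Level.EXCELLENT: False})
print(design.name)
```

Under this reading, an evaluation that fulfils the ‘good’ criteria without fulfilling the ‘adequate’ ones would not be judged as ‘good’, because the higher columns are additions to, not substitutes for, the lower ones.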

Quality standards for funders or commissioners of realist evaluations

As more and more realist evaluations are being undertaken, those commissioning the evaluations need to make judgements in two broad areas: the proposed evaluation design and methodological expertise. We appreciate that many funding bodies and commissioners already have systems in place to guide their decision-making processes. However, a number of agencies have sought guidance about, or training in, how to assess the methodological aspects of the realist tenders and proposals they have to deal with. As such, we see the guidance we have produced not as a replacement for, but as a supplement to, existing organisational decision-making processes and guidance. We are also aware that funding bodies and commissioners differ in their degree of involvement with the evaluations they have funded or commissioned. In response to these differences, these quality standards have been designed and worded in such a way that they may be used when an evaluation is still ongoing. The quality standards for funders or commissioners of realist evaluations are set out in Table 6.

| Quality standards for realist evaluation (for funders or commissioners of realist evaluations) | ||||

|---|---|---|---|---|

| 1. The evaluation purpose | ||||

| Realist evaluation is a theory-driven approach, rooted in a realist philosophy of science, which emphasises an understanding of causation and how causal mechanisms are shaped and constrained by context. This makes it particularly suitable for evaluations of certain topics and questions, for example complex interventions and programmes that involve human decisions and actions. A realist evaluation question contains some or all of the elements of ‘what works, how, why, for whom, to what extent and in what circumstances, in what respect and over what duration?’ and applies a realist logic to address the question(s). Above all, realist evaluation seeks to answer ‘how?’ and ‘why?’ questions. Realist evaluation always seeks to explain. It assumes that programme effectiveness will always be conditional and is oriented towards improving understanding of the key contexts and mechanisms contributing to how and why programmes work | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| A realist approach is suitable for the purposes of the evaluation |

|

|

Adequate plus:

|

Good plus:

|

| The evaluation question(s) are framed in such a way as to be suitable for a realist evaluation | The evaluation question(s) are not structured to reflect the elements of realist explanation. For example, answering the questions:

|

The evaluation question(s) include a focus on how and why outcomes are likely to be generated, and contain at least some of the additional elements, ‘for whom, in what contexts, in what respects, to what extent and over what durations’ | Adequate plus:

|

Good plus:

|

| 2. Understanding and applying a realist principle of generative causation in realist evaluations | ||||

| Realist evaluations are underpinned by a realist principle of generative causation. That is, underlying causal processes (called ‘mechanisms’) operate (or not) in certain contexts to generate outcomes. The explanatory framework is Context + Mechanism = Outcome (CMO). Realist evaluations aim to understand how different mechanisms generate different outcomes in different contexts. This intent influences everything from the type of evaluation question(s) to an evaluation’s design (e.g. the construction of a realist programme theory, recruitment process and sampling strategy, data collection methods, data analysis, to recommendations) | ||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| A realist principle of generative causation is applied | Significant misunderstandings of realist generative causation are evident. Common misunderstandings include the following:

|

Some misunderstandings of realist generative causation exist, but the overall approach is consistent enough that a recognisably realist analysis results from the process | Assumptions and methods used throughout the evaluation are consistent with a realist generative causation | Good plus:

|

| 3. Constructing and refining a realist programme theory or theories | ||||

|

At an early stage in the evaluation, the main ideas that went into the making of an intervention, programme or policy (the programme theory or theories, which may or may not be realist in nature) are identified and described. An initial tentative programme theory (or theories) is constructed, which sets out how and why an intervention, programme or policy is thought to ‘work’ to generate the outcome(s) of interest. Where possible, this initial tentative theory (or theories) is progressively refined over the course of the evaluation. Over the course of the evaluation, if needed, programme theory (or theories) is ‘re-cast’ in realist terms (describing the contexts in which, populations for which and main mechanisms by which particular outcomes are expected to be achieved). Ideally, the programme theory is articulated in realist terms prior to data collection in order to guide the selection of data sources about context, mechanism and outcome. However, in some cases, this will not be possible and the product of the evaluation will be an initial realist programme theory |

||||

| Criterion | Inadequate | Adequate | Good | Excellent |

| An initial tentative programme theory (or theories) is, or will be, identified and developed. Programme theory is or will be ‘re-cast’ and refined as realist programme theory | Programme theory (or theories):

|

|

Adequate plus:

|

Good plus:

|

| 4. Evaluation design | ||||

| Descriptions and justifications of what is planned in the evaluation design, in what order and why should be clearly articulated. Realist evaluations are ideally adaptive; that is, the evaluation question(s), scope and/or design may be adapted over the course of the evaluation to ‘test’ (confirm, refute or refine) aspects of the programme theory as it evolves. If changes are made to the evaluation design, these should be clearly described and justified. At the start of an evaluation, where possible, any changes that might be needed should be anticipated and contingencies planned | ||||