Notes

Article history

The research reported in this issue of the journal was funded by the HS&DR programme or one of its preceding programmes as project number 14/156/15. The contractual start date was in November 2015. The final report began editorial review in July 2017 and was accepted for publication in December 2017. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HS&DR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

none

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2019. This work was produced by Rivas et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

2019 Queen’s Printer and Controller of HMSO

Chapter 1 Background and introduction

Patient experience surveys

Increasing attention is being paid by health-care providers to the patient-reported experience, which is at the core of all that the NHS does. 1 Patient feedback on their experiences has the potential to drive care-quality improvements, highlight system failures and improve safety, reduce patient harm and increase satisfaction with health-care provision. 2–4

Patient experience is often determined formally through surveys. These are considered to be important indicators of the quality of health service provision and service improvement priorities5–9 because they involve patients’ – or sometimes their carers’ – own evaluations. The NHS has led the way internationally in mandating a national patient survey programme in England since 2001. 10 There are currently hundreds of such surveys administered at various levels – locally, nationally and internationally – across a range of institutional settings and patient groups. 10 The Cancer Patient Experience Survey (CPES), the survey at the core of this study, is an example of a condition-specific patient experience survey (PES). There are many informal sources of patient experience that further add to the knowledge base on NHS websites and other websites dedicated to patient feedback, such as PatientOpinion (www.careopinion.org.uk), iWantGreatCare (www.iwantgreatcare.org), NHS Choices (www.nhs.uk/pages/home.aspx), blogs, social media and online fora.

It is important for patient feedback data to be properly used, and this requires appropriate tools for the collection, analysis, presentation, engagement with and understanding of the data if they are to improve health services effectively. Surveys mostly comprise closed questions, that is, questions with a fixed set of possible answers. This includes yes/no and multiple-choice responses, Likert scales, visual analogue scales and rating questions; the defining factor is that all of these are measures that can be easily quantified for meaning-making. Methods of analysing these have been well developed. 11 Using these, the CPES, which began in 2010, is widely acknowledged as the most successful national PES in enabling and embedding service improvement. 12 This has been achieved by providing trusts with tailored (trust-specific) statistical feedback of responses to the CPES closed questions, which benchmarks their performance against that of all other trusts. 13 More generally, there is evidence that PESs are also effective when their findings are linked to government-led campaigns, targets and incentives,6 such as Care Quality Commission (CQC) assessments.

Patient experience survey free-text comments

To add context to closed-question responses, it is common practice for open-ended questions to be provided within PESs for respondents to leave free-text comments. 7 CPES respondents, for example, are offered three such questions; one in three patients writes comments. 9,12 The ≥ 70,000 free-text comments produced by the CPES each year are anonymised by Quality Health, then selectively provided to NHS trusts, but in unstructured raw data form (unlike the closed-question responses), and so cannot be easily linked to the quantitative analysis or used in any systematic way nationally. The National Institute for Health Research (NIHR) commissioning brief [Health Services and Delivery Research (HSDR) programme 14/156] for this study noted that few organisations have sufficient analytical capacity to interpret large numbers of such complex data. The brief further noted that there was uncertainty as to how to present such data in a meaningful and granular way to stimulate local action, and this is where the study focus was placed.

Current limitations in the usefulness of free-text comments

Critically, there is currently no system to efficiently and usefully analyse and report free-text responses in PESs. 7 The conventional approach is manual thematic analysis (i.e. researchers reading through all of the text and assigning topic or theme codes to each comment). Such work requires considerable staff resource and can take months; thus, the number and costs of analysing these data each year using qualitative methods currently precludes its systematic analysis or reporting. 7 The usefulness of the data thus depends on individual willingness and capacity. 7 Even when analyses are undertaken, as with the 2013 London CPES free-text comments, there is a significant time lag; the London data were released in June 2013, the analysis was completed in December 2014 and was then published in June 2015. 14 There are several problems with such delays. First, the services the comments relate to may have changed considerably and most comments may no longer be relevant. Second, staff could become demoralised if they received misplaced negative feedback on services that they have improved in the meantime. Third, patients themselves worry that their data are not effectively used. Fourth, because the manual approach is resource-heavy in human labour, financial cost and time, it cannot practically be reapplied to new waves of data.

Overall then, because these data are not presented to trusts in a structured and easily accessed and assimilated form, they are unlikely to have much of an impact on service change and commissioning decisions. However, free-text comments potentially provide rich insights into patient experiences that underpin, illustrate and complement the closed-question responses. 7,12,15 The HSDR programme brief reflects the current need for better use of such data and highlights this as being critical in informing service provision in a challenged NHS. 16,17

Alternative approaches to the analysis of free-text comments

Despite the limitations of manual thematic analysis, there have been few previous attempts to analyse large-survey, unstructured, free-text responses in health care, including cancer services, to inform clinical and health-care practice. 7,12,15,18,19 There are notable exceptions,14,18–34 but these all represent one-off analyses (see Chapter 2).

NVivo version 9 (QSR International, Warrington, UK) and other qualitative data analysis (QDA) packages, including dedicated text-analysis software, such as QDA Miner (Provalis Research, Montreal, QC, Canada), are often used to organise manual thematic analyses. This might be considered particularly helpful with large data sets. However, in the experience of the study team, NVivo is unstable when handling a large amount of text; the software creates considerable metadata from relatively small text inputs, resulting in file sizes of several gigabytes. These restrictions became problematic when there were around 4600 comments in previous work. 19 Rapid automated QDA outputs that might be thought useful include automated coding, word clouds, concordance and word-frequency tables and associated statistics (against a comparator dictionary or data set). However, each of these uses basic word-search technology and ignores semantics and syntactics. Automated codes augment but cannot replace even basic manual coding. Word-cloud software generates ‘pictures’ of words from word-frequency lists, but only includes the more frequent words and does not take account of synonyms or closely related words. Depending on the ‘cloud’ shape chosen by the user, the words selected will vary considerably. Word-frequency tables enable the most used words and significant differences between data sets to be determined, but can be hard to interpret. Concordance software enables the user to click on a word to access its use in context, so that a more detailed understanding can be obtained. All of these approaches enable the big picture to be quickly obtained, but present an overwhelming number of irrelevant as well as relevant words, and more so the larger the sample size. Many of the words will be filler words (devoid of semantic content, but used in natural talk and text for flow or to hold attention) and many will be meaningless out of context. Text lists and word clouds will also contain many words with similar meanings that will appear to be of lower frequency because they have not been combined, when they may together represent something very significant. 35 If survey text responses are fairly simple – lists of symptoms, for instance – statistics-based solutions such as these may be useful. With even slightly more complex text responses, as with PESs, both specificity and sensitivity will be compromised.

A possible solution to the problem is to develop analyses of the data using more advanced computational approaches, which some people refer to as artificial intelligence, although the definition of this term is discipline dependent. 36 Text mining has been previously tried with the 2013 Wales CPES (WCPES) free-text comments. 19 The so-called template-based (otherwise known as memory- or instance-based) approach, a form of machine learning, was used; this has often been used for comparable classification tasks. 37 This approach might be expected to be an improvement over manual methods and basic QDA approaches. The work was certainly useful in showing the challenges to quality of life that are often faced by cancer survivors, and associated gaps in health care. 19 This led to a service model showing links between factors that negatively affected patient quality of life and potentially mediating factors.

However, a manual thematic analysis of 80% of the data was still needed to be undertaken using NVivo, meaning that the analysis process still took many months and, therefore, was only slightly faster than a fully manual approach. This is because memory- or instance-based learning approaches such as this classify new data by comparison against stored, ready-labelled instances. The version that was used, from R statistics software (The R Foundation for Statistical Computing, Vienna, Austria), relied on the k-nearest neighbour (KNN) algorithm, which is based on regression analysis and is one of the simplest machine learning approaches. In general, supervised learning methods take pairs of data points (X, Y) as input, in which X are the predictor variables (features) and Y is the target variable (label). The supervised learning method then uses these pairs as training sets and learns a model F, where F(X) is as close to Y as possible. This model F is then used to predict Ys for new data points X that are input into the system, using a statistical similarity measure such as Euclidean distance or cosine similarity. The KNN algorithm works out the distances between the new data point and all stored data points, determines from these which stored points are closest (the KNNs) and then labels the new data point using either a majority or a weighted vote based on this calculation. In other words, the closer the new data point is in its aggregate distance score to one that is already stored, the more likely it is to receive the same label as the stored data point. As this approach uses stored examples for these calculations (i.e. as templates to ‘learn’ or work out where new data will be classified), it cannot analyse data accurately if (1) the new data do not match these templates well or (2) the distances between the templates are too great. This means that for good sensitivity and specificity, this approach requires approximately two-thirds of the data to be manually analysed and labelled as templates,38 as was previously found. 32 The remaining one-third of the data will then be matched accurately.

Although the previous study32 ultimately achieved a sensitivity of 78%, a precision of 83.5% and an overall F-score of 80% (see Chapter 8 for a further explanation of these scores), this approach is of limited use for repeated survey analyses. First, as explained above, it is not sufficiently automated to be useful for repeated waves of data and still does not possess the benefits of immediacy. Second, the algorithms that were used were pre-set by the R software developer and could not be modified. The ‘black-box’ approach was hard to evaluate,39 domain non-specific (i.e. not developed for health-care data per se) and insensitive to unexpected data. Each time new data need analysing, such algorithms need more training with new terms and words that may have arisen in the interim, such as new treatment names, because the algorithms are limited to the ‘controlled vocabulary’ of the templates. 39

Information retrieval

Given these limitations, a memory-based template approach to text mining is not appropriate for repeated waves of data, such as those generated by the annual CPES. The solution is to customise a rule-based IR or knowledge-extraction approach. This approach has been used worldwide in biomedicine. Herland et al. 40 provided a detailed description of some of the recent uses in health care, whereas Ordenes et al. 41 explored the widespread use of this approach to analyse comments made about commercial products and provide customer feedback.

Despite the significant use of rule-based IR over many years, at the time that funding was applied for, it had not been tried for PES free-text comment data. Indeed, it is still believed that this is the first such application; however, since funding was obtained, some teams and commercial organisations have developed analyses for the Friends and Family Test on patient satisfaction (a different concept from experience) and social media and website patient feedback. 42,43

Instead of using templates, rule-based IR methods involve a stage of lexicosyntactic preprocessing and development of gazetteers (sets of lists containing words and phrases for specific entities or concepts). This is followed by cascades of pattern-matching syntactic (grammar and other language structure) applications designed to find the targets for IR – in the case of the study, comments that match particular themes – in combination with rules that look up terms in the gazetteers.

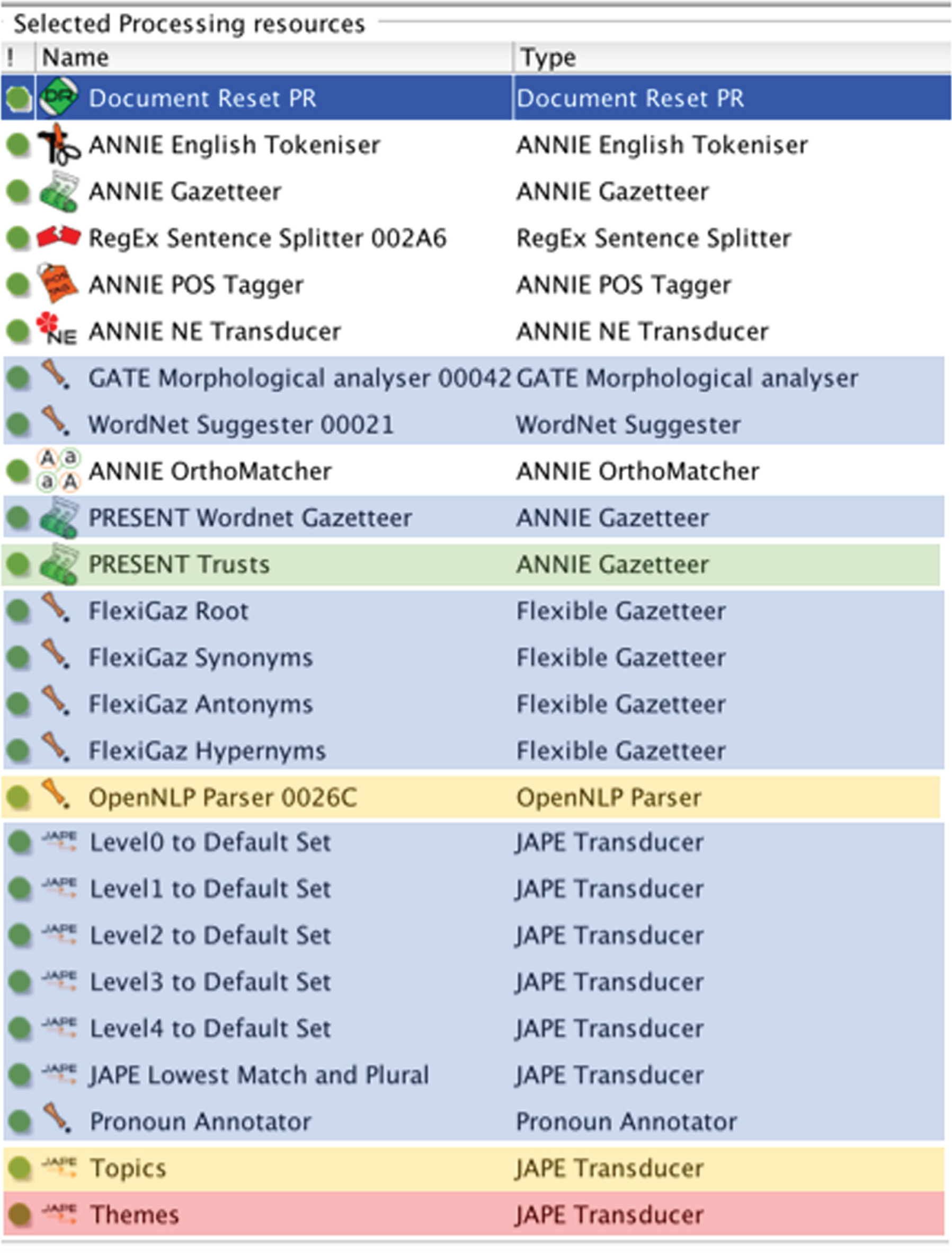

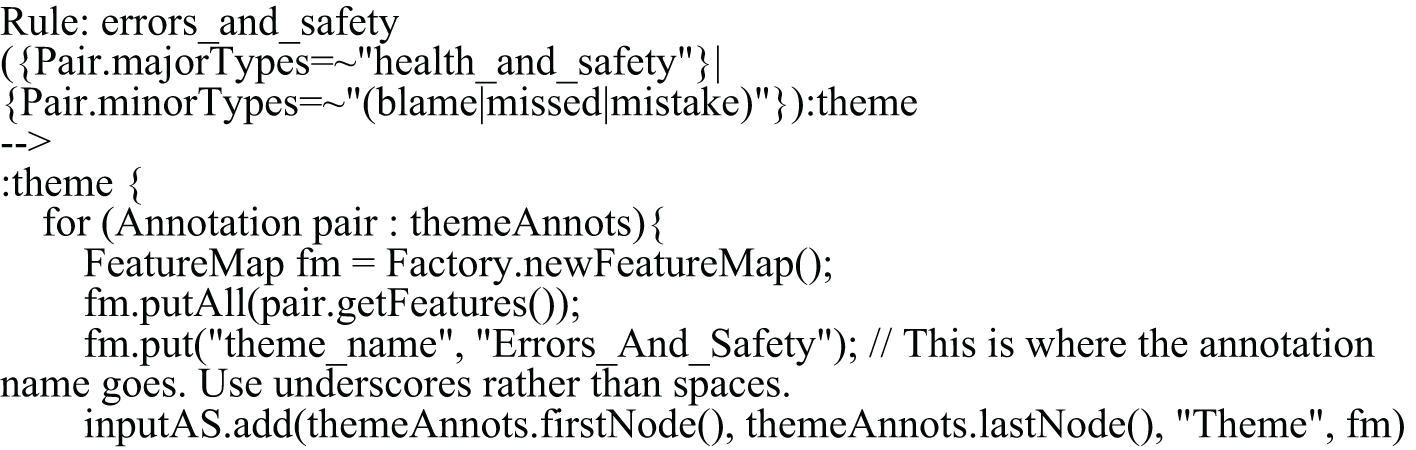

The approach was based on the GATE open-source system, the developers of which have labelled their process as text or knowledge engineering,44 whereas the term rule-based IR is used in this report to encompass the whole analysis process; the study’s ‘text engineers’ have added further applications and lookup rules or search queries to the basic GATE pipeline (for an explanation of these terms, see Chapter 4). To develop the gazetteers and rules, theme labels and data have been used from previous manual (human hand-coded) and template-based machine learning projects that the study team has been involved in.

The aim in relation to IR of text has been to explore how a highly transferable rule-based approach might perform; however, this performance could be further improved by using the rule-based outputs as templates for template machine learning.

Benefits of the approach

This approach has many benefits. Lookup rules can tag or annotate comments as belonging to quite nuanced literal themes, by combining gazetteers using Boolean logic. The functions of the syntactic applications include part-of-speech (POS) tagging, in which an individual word (X in the X–Y annotation used above) is tagged on the basis of the POS it represents (e.g. whether it is a noun, verb or adjective). This can, for example, distinguish between the different uses of the test in ‘the test came back negative’ and ‘I will test the new treatment’. Importantly, words (X) are not independent of each other, because if the previous word was an adjective, it is far more likely that the next word will be a noun than a verb. This enables new words to be more likely to be categorised correctly than not, given these various points of information. Such features of IR have been used to develop an analytical process that can cope with the fragmented phrases and sentences that typify survey free text. The approach also makes use of semantic (meaning-making) information, such as co-location. Thus, for example, if a comment says ‘The nurse was present. She did not speak’, the system will know that ‘She’ refers to the nurse because that is the relevant POS in the nearest sentence. Sentiment analysis has also been used, in which the label Y is used to reveal the sentiment (positive or negative) of each comment. These and other advantages of rule-based IR over template-based machine learning, and the rationale for choosing it, can be summarised as follows:

-

External knowledge repositories [such as WordNet®, version 3.1 (Princeton University, Princeton, NJ, USA)], can be used in categorisation, so that the system can cope with novel data linked in these repositories to existing labels.

-

New words can be added to gazetteers in the time that it takes to type them.

-

By writing rules that are not dependent on specific words, but that take into account the arrangements of words within syntactic and semantic contexts, the software can handle new data with good sensitivity and specificity.

-

It is not required to annotate a large number of data to start the process, in contrast to memory-based machine learning. Thus, once the rules are written, the system can be used in virtual real time for future iterations of PESs with no modification to minimal modification (a matter of hours rather than months). This gives it potential speed advantages in future years.

-

The system can be made domain specific, increasing the relevance of its outputs,45 as the rules are written by specific individuals.

-

New rules could be written very quickly and added to the system in the future and, hence, the approach makes for a potentially more accurate, more adaptable and more future-proofed system than template-based machine learning.

-

The system could be easily modified by others adapting the system in the future; transferability to other health-care surveys was an important element in the HSDR programme brief.

-

Rules could be specifically written to tackle some specific issues with free-text comments (see Chapter 4).

-

The approach is amenable to real-time data processing.

-

It does not require training examples (using resource-consuming manual data categorisation) or redevelopment of the whole system each time novel data are added.

Previous research has shown that linguistics-based IR models such as this can outperform manual (human) categorisation of customer feedback reviews,46 an area in which IR is particularly used. Therefore, it shows promise in the analysis of patient experience feedback.

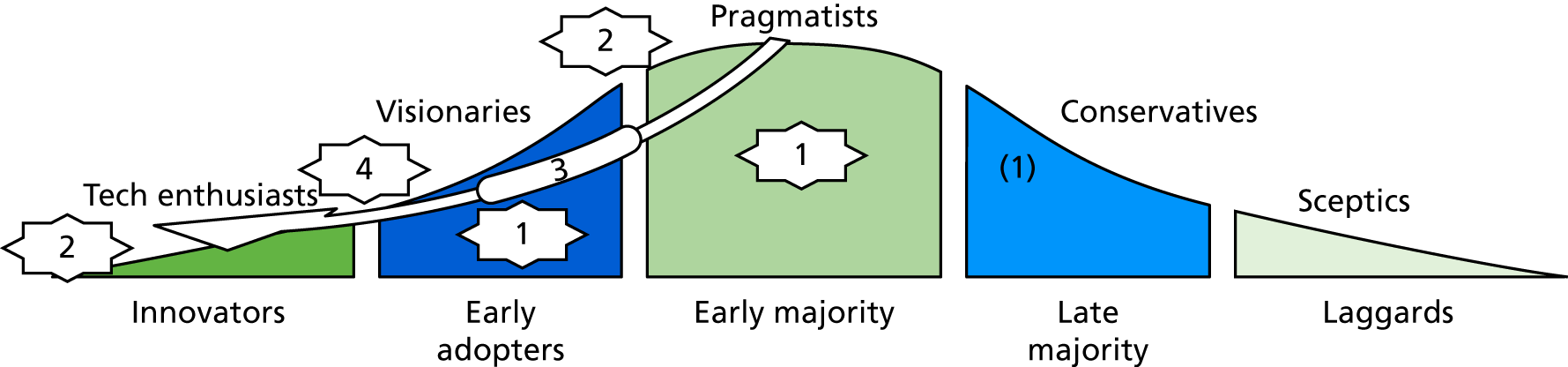

Engagement with the data: making it meaningful for all

The health sciences lag behind other disciplines in the use of computational approaches, such as IR. 47 It has been suggested that this owes much to the chasm between person-centred care and the depersonalised approach of computing. 47 In other words, it is unclear how it can be ensured that computational analyses benefit individual patients. There has been little work to bridge the chasm and use the outputs of computational approaches to directly improve health care for patients. Accordingly, when study design commenced, the pre-funding patient and public involvement (PPI) input included the concern that the results would be mechanistic and reductionist, with the survey respondents and the people and health-care processes they wrote about in their free-text comments being reduced to mere numbers and data points. 48 There was concern that automation, generalisations and breadth would be favoured over the insights the comment boxes were meant to provide.

The study design therefore prioritises PPI (see Chapter 10) and includes a number of co-design-type substudies with patients, carers and health-care professionals that feed into the main analysis and the design of a digital toolkit with a dashboard or visual summary of the data, as well as the presentation of the newly structured (i.e. thematically grouped) free-text comments themselves. This is what Halford and Savage49 call a symphonic approach, with small qualitative and quantitative studies being used in a complementary fashion with large data set (‘big data’) computational approaches. For this reason, the outputs should lead to improvements in the health care of people with cancer in ways that are meaningful to them and incorporate and represent their perspectives and language. 50,51

Aims and objectives

The primary aim was therefore to improve the use and usefulness of PES free-text comments in order to drive improvements in the patient experience, and to do so, it was considered important to use a mixture of rule-based IR and complementary smaller studies.

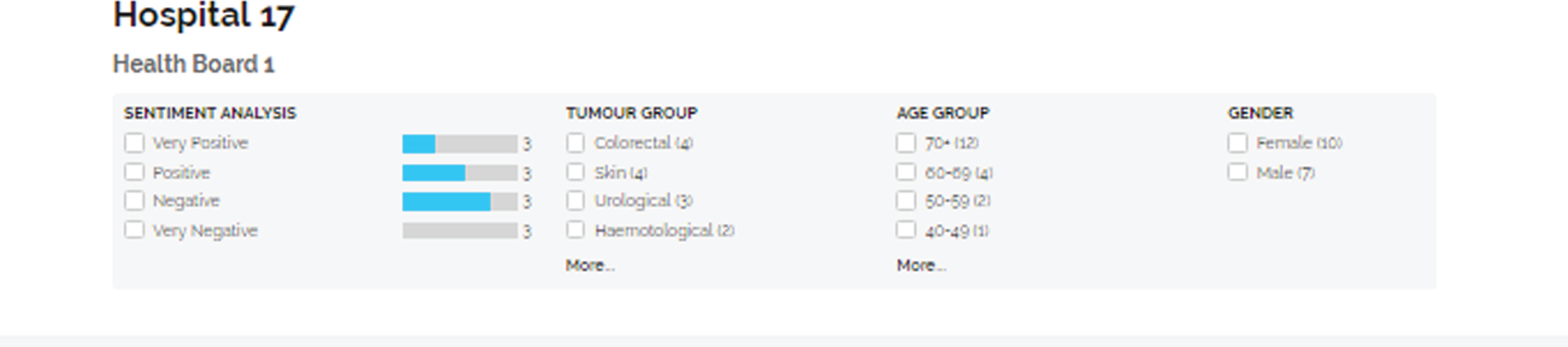

Therefore, it was intended to develop and validate a novel use of rule-based IR to provide rapid automated thematic analysis of large amounts of survey free text (using the CPES as the first case), and to develop and validate a linked ‘dashboard’ (which later became a toolkit) to display the results in a summary format that can be drilled down to the original free text and used by patients and staff alike.

The toolkit, and the use of co-design with stakeholders, gives the approach added value over a simple thematic analysis, in addition to the speed and automaticity that rule-based IR enables. The display is intended to illuminate service gaps and areas in which the patient experience can be practically improved at the team, NHS trust and national levels.

As one of the secondary aims, the transferability of the approach was explored. Manuals ensured transferability to free text in other health surveys more generally. The rule-based IR process (i.e. the topic-oriented automated free-text analysis) and the toolkit were designed to be quickly reproducible and easily modifiable across health-care topics.

The objectives were to integrate co-design and implementation science into the approach, output thematic analyses into a digital display, produce recommendations on toolkit design, validate the approach and ensure transferability. The work primarily asked:

-

Is the novel approach a valid, accurate way to analyse large-volume CPES free-text responses?

-

Can the approach be transferred to similar surveys on other health-care topics?

-

Is co-design (as defined here) with mixed UK stakeholders (patients, their partners/carers, NHS managers and clinicians) feasible and effective for the approach?

-

Is Normalisation Process Theory (NPT) useful for the approach?

Overview of the study

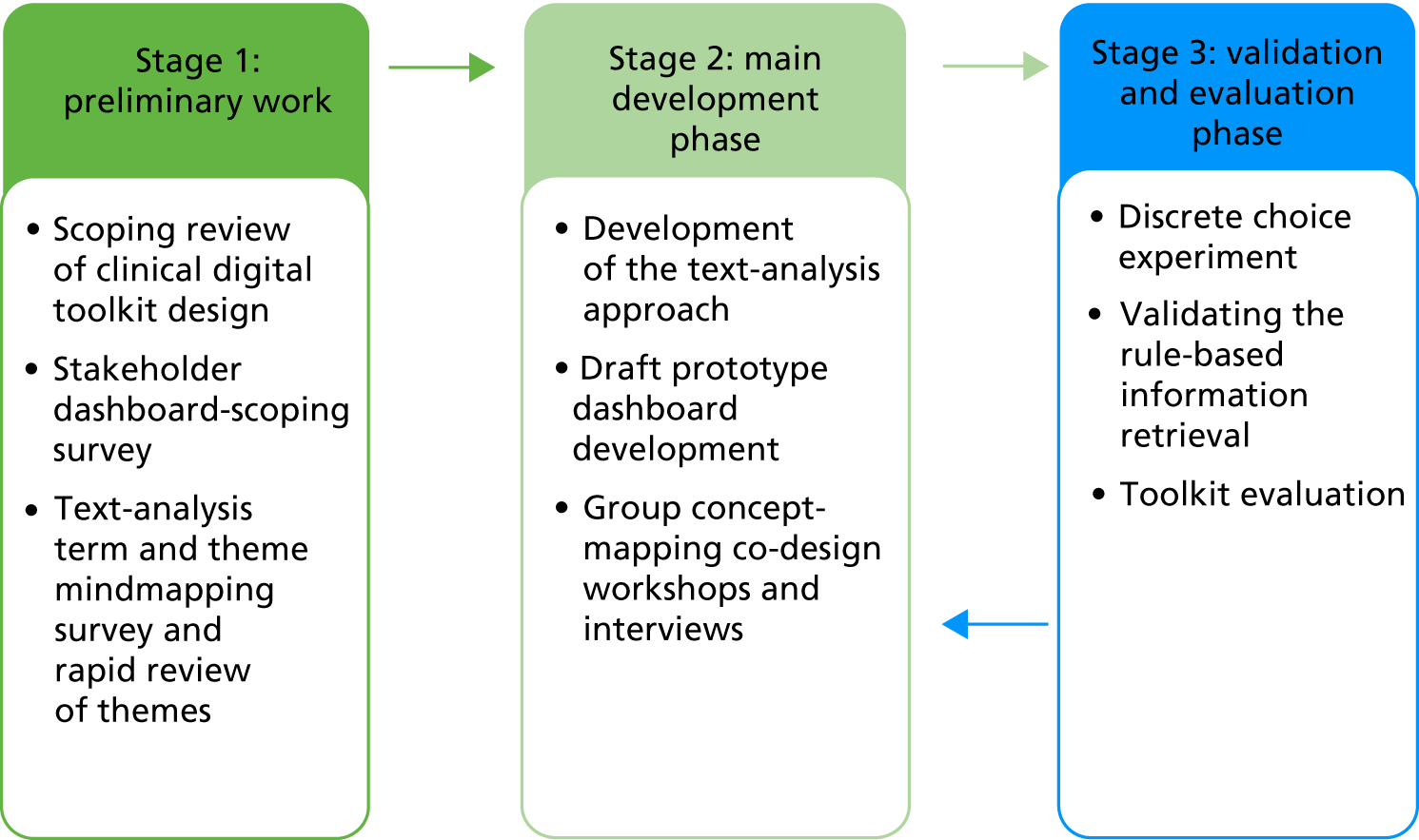

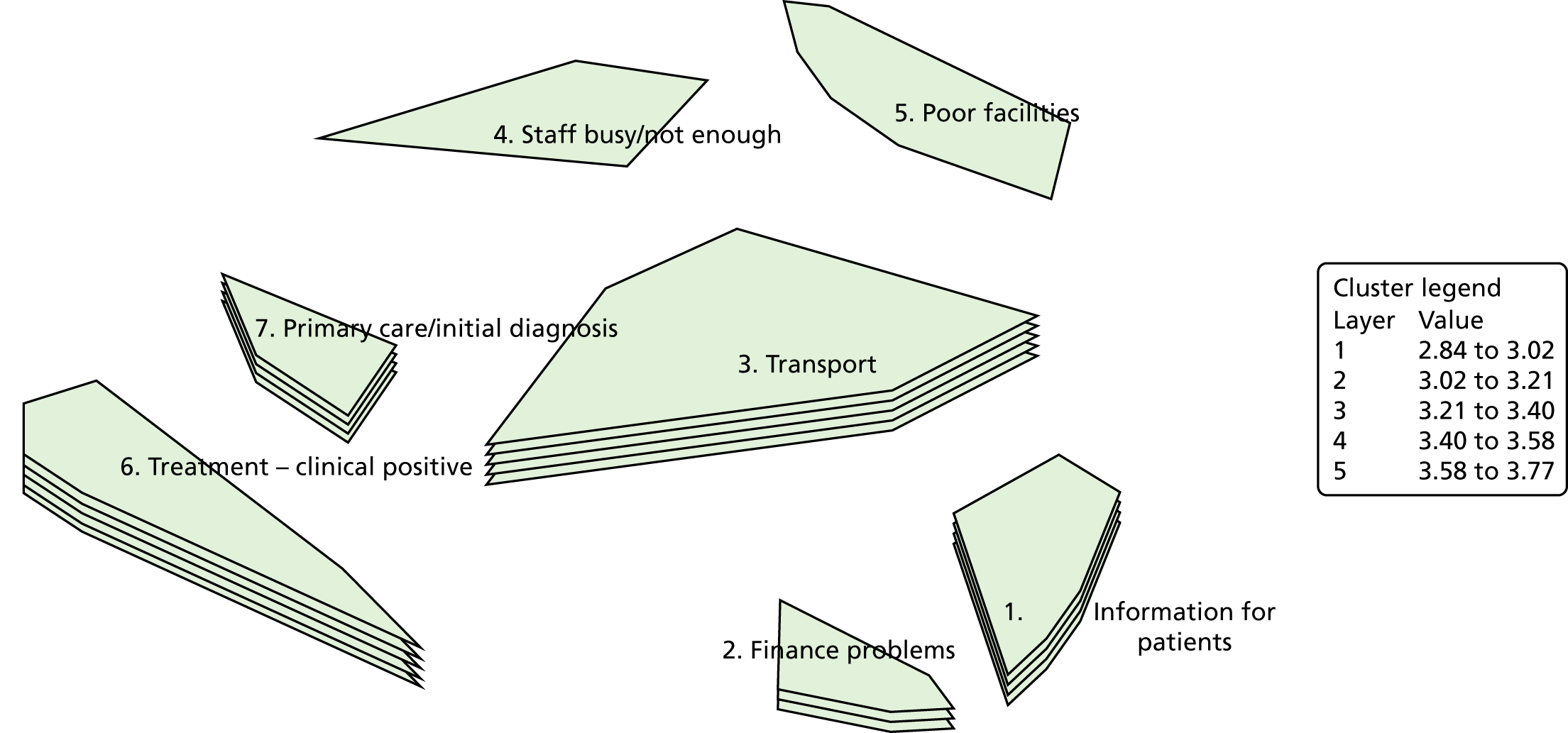

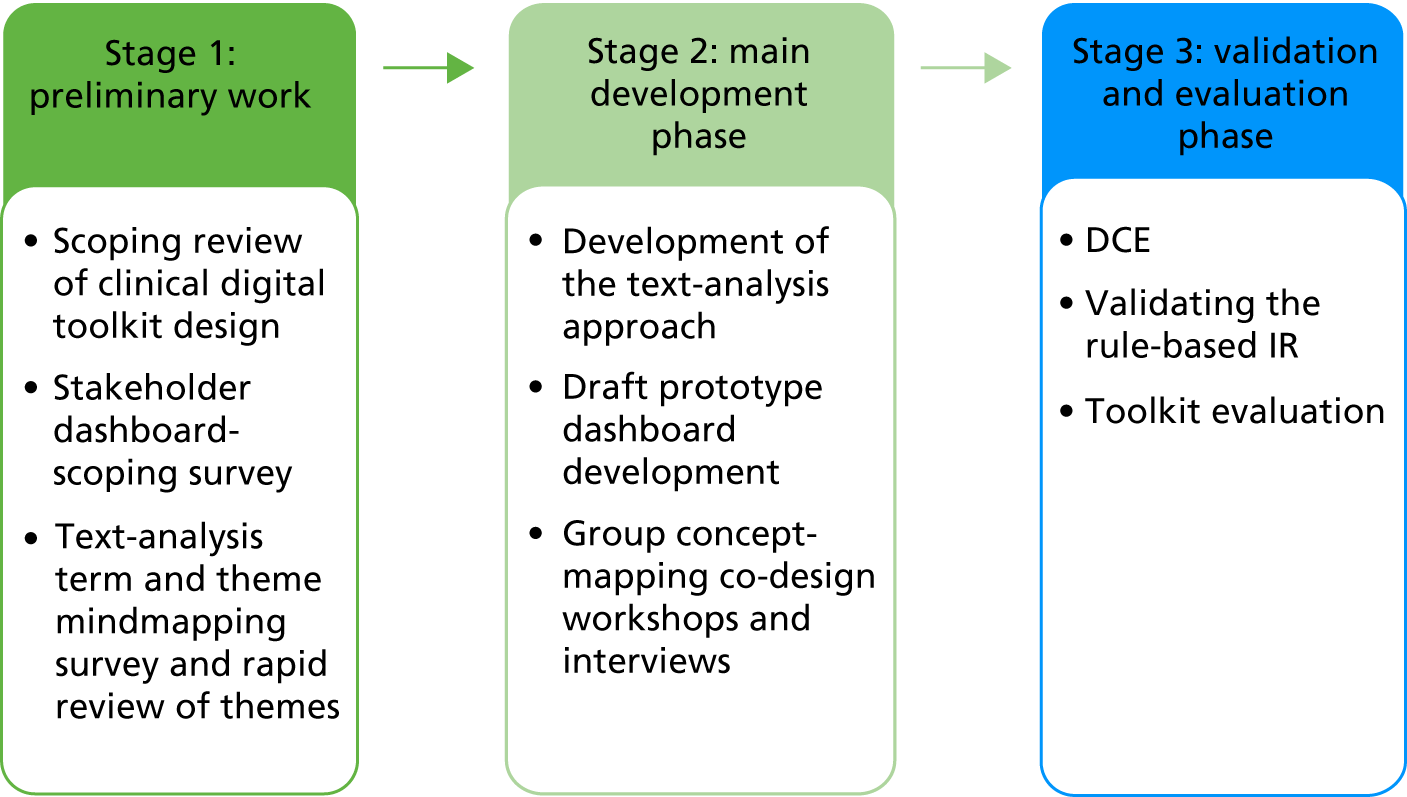

The study comprises three main stages with subcomponents in each, which are summarised in Figure 1. Parts of this figure are repeated throughout the report to orient the reader.

FIGURE 1.

Overview of the study. The flow back from stage 3 to stage 2 represents the final refinements that were made to outputs as a result of stage 3 work.

Stage 1: preliminary (scoping) work

Review: see Chapter 2

A scoping review determined the key health-care dashboard design principles.

Dashboard-scoping survey and term and theme mindmapping survey: see Chapter 3

Patients, carers and health-care professionals completed an online survey to input into the text-analytics work that asked them to mindmap relevant terms and themes. In addition, they completed a separate survey on dashboard design to find out what potential end-users considered to be the key features for a good dashboard.

Rapid review of themes: see Chapter 3

Through a rapid review of the literature and incorporation of themes from previous relevant research, a draft taxonomy of themes was constructed, which was further developed in stage 2.

Stage 2: main development phase

Rule-based information retrieval work: see Chapter 4

The rule-based IR work involved modifications to GATE and further programming to solve issues with the analysis of PES data.

Prototype toolkit development: see Chapter 5

Prototypes were developed from stage 1 findings and refined through stage 2 as new data were collected and analysed.

Group workshops and interviews: see Chapter 6

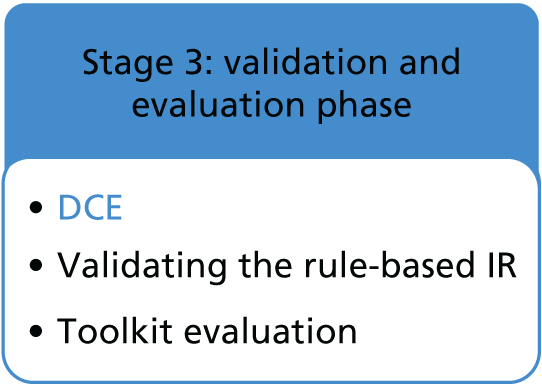

The workshops incorporated group consensus concept-mapping techniques for naming and validating themes, and design discussions around dashboard prototypes, as a result of which the single-screen dashboard developed into a fuller toolkit. The interviews explored the issues in more depth.

Stage 3: validation and evaluation phase

Discrete choice experiment: see Chapter 7

A discrete choice experiment (DCE) was used to objectively validate a set of core features required of the toolkit for it to be taken up within health care, and it included a cost–benefit analysis based on the findings.

Validating the rule-based information retrieval: see Chapter 8

The sensitivity, specificity and accuracy of the approach was tested, as well as its transferability.

Toolkit evaluation through structured walk-through techniques: see Chapter 9

Techniques were used that are standard in technology evaluations to consider the usability and usefulness of the analysis and the toolkit and, combined with NPT, the work that these would do within health-care settings.

Theory

Normalisation Process Theory was used throughout the study. NPT is used to understand actions related to the implementation, embedding and integration of new technology or complex interventions. 52,53 It comprises four core ideas or constructs: (1) coherence, (2) cognitive participation, (3) collective action and (4) reflexive monitoring. Each of these constructs is subdivided into four and, thus, there are 16 constructs in total. The NPT toolkit website [www.normalizationprocess.org/npt-toolkit.aspx (accessed 9 October 2018)] contains example questions that can be used to explore these, and represents the results graphically in radar plots.

Chapter 2 Scoping review of clinical digital toolkit design

Introduction

To ensure that the dashboard, as a core element in the study, was designed to meet standards of best practice, a scoping review was undertaken of the existing evidence on digital toolkit design for use within health or social care or for the dissemination of health or social care data. Scoping reviews are typically used when an area is comparatively poorly understood. 54 Good dashboard design principles have been developed by web designers and programmers,55–61 but there was a dearth of evidence concerning health care specifically. Informal conversations with relevant web developers revealed that they tended to design sites using their technology-focused explicit and tacit knowledge, from a brief developed with or by commissioners of the website, who tend not to be the ultimate users. Consultation with patients and the public was not routine, although was sometimes undertaken, particularly for research studies and – in the last few years – some health smartphone applications. 62,63

In 2009, the NHS Connecting for Health initiative began the Clinical Dashboards Programme. This was incorporated within what was then the Health and Social Care Information Centre [http://webarchive.nationalarchives.gov.uk/20130502102046/http://www.hscic.gov.uk/about-us (accessed 9 October 2018)] and is now called NHS Digital. This provided toolkits for local groups to develop tailored dashboards from local quantitative data,64 an attempt to make dashboard development more accessible to health-care professionals as end-users. However, its recommendations do not appear to have been based on domain-specific considerations that incorporated patient views or were informed by empirical work on health-care dashboard usability and design considerations. Moreover, this programme was so flexible that it led to very divergent design approaches that often breached gestalt design principles. 65 Such flexibility was considered to be useful by the professionals concerned. However, gestalt design principles were developed from basic cognitive processes (see Chapter 10), and good dashboards should tailor their designs to specific end-user requirements without breaking these principles. In other words, end-users need to work with, rather than instead of, designers. In a pre-study exercise, at a PRESENT study launch workshop, three mixed groups of health-care professionals and patients at a PRESENT study launch workshop were formed to rate clinical dashboard programme designs (the Bolton series66) for the information they imparted and for attractiveness. Participants criticised each one as being unsatisfactory. Moreover, the three groups diverged considerably when asked to rank the examples in order of preference. It was clear, therefore, that the field would benefit from some clear direction on the core principles in health-care dashboard design.

As La Grouw61 states, dashboard design ‘starts with understanding the business. Anyone can design a dashboard; however designing an effective dashboard is a combination of brain science and business process knowledge’ [p. 4 (our emphasis)]. This domain-specific scoping review was therefore important in filling an evidence gap in the ‘business process’, as well as being useful in developing the evidence-based dashboard.

Method

A scoping review methodology67–69 with six stages was used: (1) identifying the research question; (2) identifying relevant studies; (3) selecting studies; (4) charting the data; (5) collating, summarising and reporting the results; and (6) consulting knowledge users. The first five steps of the framework are detailed below; for step 6, see Chapter 6.

Identifying the research question

The research question was shaped by the larger study and was ‘How does existing empirical evidence suggest that a digital health-care dashboard or toolkit should be designed to optimise its usefulness and usability?’.

Definitions and scope

The PICOT (participants, intervention, comparison, outcomes, types of study) framework70 was followed to determine inclusion and exclusion criteria and develop the search strategy. Each PICOT element is described in the following sections.

Participants

The focus for the larger study was on digital dashboards or toolkits used to improve health care, but the scope was broadened for the review to include any digital dashboard or toolkit study in which the dashboard or toolkit is intended for use by patients and carers, or by health-care professionals, health service managers, social and community workers or other professionals involved in health services or public health. Data that may be transferable to health-care improvement dashboards were not excluded. The different groups were not defined more precisely than this in order to ensure that sufficient useful information was obtained. Thus, information relevant to the digital dashboard or toolkit design for any health-care or health-care-related service, condition or topic was included.

Intervention

There are many dashboard definitions in common use, but the one provided by La Grouw61 was taken as the working definition: ‘a means to provide “at a glance” visibility into key performance using simple visual elements displayed within a single digital screen’. The following text was added to extend the definition: ‘and that is dynamic, that is, it can be mined down to see the original data’.

From this, a health service or social care dashboard as a digital display of data was defined as:

-

giving health-care or social care providers and stakeholders relevant information on health service performance or social or patient care or satisfaction, or, as in this study (although the review’s scope was broader), the patient experience at a glance; and

-

including some element of data transparency or granularity, so that higher-level summaries can be mined down at areas of interest. 61

The development of dashboards with higher-level summaries and lower-level detail was a key recommendation for the NHS in Lord Darzi’s High Quality Care for All: NHS Next Stage Review Final Report,16 as well as in The Health Informatics Review: Report. 71

Dashboards often form a part of digital toolkits and digital health-care interventions, so the study aimed to be inclusive in the search terms to capture a comprehensive collection of evidence. Therefore, any dashboard or toolkit was considered, or any other form of predominantly graphic communication or information provision intended for digital – usually web-based – use. This was especially important because the terminology in the area is constantly shifting. 72 It proved to be useful when it was decided, on the basis of participant feedback, to expand the dashboard that was being developed into a toolkit (see Chapter 6). A toolkit, as defined by the study team, is a type of internet portal that provides a range of resources, such as advice, information and networking targeted at a specific community.

The main exclusions were non-digital interfaces and digital or non-digital infographics, as they follow different design principles. Infographics are designed to tell a story to disseminate knowledge rather than to result in actionable events. However, static dashboards were not excluded from the search, despite the fact that the working definition included dynamism. This is because the greatest importance should be attached to the summary data page, as many users may not go beyond this (see Chapter 9); thus, analyses of static pages could contribute useful knowledge. Studies of dashboards that did not provide metrics, measures or indicators, or other numeric data, qualitative data or health-care information on a single screen intended to summarise data for future action were excluded.

Comparison

Studies did not need to include comparators.

Outcomes

A broad, and inclusive, approach to outcomes was undertaken within the areas of usability and design, display, communication and engagement features, such as the type and number of infographics, use of colour and font size. Health-related outcomes or effectiveness were not considered.

Types of studies

Any study design was considered and no restrictions were imposed on date. The search was restricted to English-language studies.

Study inclusion and exclusion criteria

Table 1 shows the study inclusion and exclusion criteria, as a summary of the previous sections.

| Criteria | |

|---|---|

| Inclusion | Exclusion |

| Studies in which the intervention being evaluated was some form of digital visual representation of information designed to inform future action | Studies in which the evaluation of the design or usability digital visual intervention was not an objective (e.g. which considered only clinical outcomes resulting from use) |

| Studies in which the intervention was designed for use by any stakeholder within health or social care or related domains | Not used for health or social care data or related domains |

| Strategic, tactical, operational, local or public purpose of the intervention | |

| Any date | Non-English-language papers |

| All peer-reviewed empirical primary study designs | Narrative and review papers, and grey literature |

| Data for display could be qualitative or quantitative, although the dashboard is being designed to summarise qualitative data, but the intervention had to include a summary data page | |

Search strategy

To develop the search strategy, environmental scans were undertaken using the iterative process usually used tacitly in systematic reviews. The process began with the first 100 results in each database; these display results by relevance using a link-analysis system or algorithms. Specificity was considered (identifying keywords of the first 100 articles in each database that may be associated with their relevance or lack of relevance) and this was balanced against the total number of articles retrieved (sensitivity). This approach led to the refinement of the search several times, including or excluding particular terms or words. See Appendix 1 for the final search terms for PubMed, which combined synonyms and metonyms for (1) dashboard, health informatics or related terms; (2) words relating to the design or use of a dashboard or similar technology; or (3) the terms health or health care, and variants thereof.

The scoping review was conducted by a team of five researchers. After the search terms were selected and refined by the whole team, four of the researchers extracted the search results from the databases into EndNote X7.4 [Clarivate Analytics (formerly Thomson Reuters), Philadelphia, PA, USA] for group sharing. The databases were PubMed, Cumulative Index to Nursing and Allied Health Literature (CINAHL), PsycINFO, Web of Knowledge, the Association for Computing Machinery Digital Library, the Journal of Medical Internet Research and EMBASE. The final search was undertaken in March 2017.

Two researchers (Daria Tkacz, Esther Irving/Chun Borodzicz/Johanna Nayoan) independently screened the search results by title, then abstract, then full text, depending on the level of detail needed to reach a decision for inclusion or exclusion. If the reviewers disagreed, a third reviewer (Carol Rivas) would have arbitrated, but this was not necessary. The reviewers assessed all results for relevance using the inclusion criteria in Table 1. In total, 13,823 articles were excluded after reading the titles or abstracts. The remaining 418 articles were subjected to full-text checks. Further articles were identified by backward and forward citation tracking of included studies. Forty-three articles were identified as fitting the criteria and included in the final review. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram detailing the assessment process is shown in Appendix 2 (PRISMA is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses).

Assessment of methodological quality

A formal quality assessment of the included studies was not undertaken, as the main interest was in using the results to develop the health-care dashboard.

Charting the data and collating, summarising and reporting the results

A data extraction form was developed to ensure consistency in the review process across researchers. This included details of each study’s design, as well as the key constructs and other information needed to answer the research question. The focus was particularly on the format and audience of the dashboard, and results of the evaluation of its features. After two researchers extracted the data from all included studies (with cross-checks for quality control), one researcher (Daria Tkacz) undertook a narrative synthesis of the results. This was checked back against the original documents by Daria Tkacz and Carol Rivas for consistency and face validity. Results were also summarised in tabular form and discussed by the research team, steering committee and user advisory group (AG) members in relation to the PRESENT dashboard design. The DCE and structured walk-through results were mapped onto this table to inform both the final design and the general recommendations for health-care digital dashboard/toolkit design.

Results

Forty-three studies were included in the review. Study settings and intervention types included general practice,73 quality-of-life information systems,74,75 primary care,76,77 emergency departments,78,79 personal health dashboards,80 hospital care79,81–85 and sexual health. 86

The evaluation methods used in studies varied from focus groups and interviews74,87–89 to walk-throughs,74,76,78,82,90,91 eye-tracking82 and questionnaires. 79,82,91

The study findings covered access, flexibility and individualisation, usability, use of images and videos, chart types, data interrogation, print and export, community features, security and privacy and offering recommendations and solutions, which are considered in turn in the following sections.

Access

Access was considered by 9 of the 43 studies. Dashboards and toolkits were reportedly more effective if provided as part of a workflow, integrated into an already existing system92 or otherwise easy or effortless to access or constantly in sight. 76,93–95 Stand-alone systems requiring the user to initiate access via a separate login were less likely to be used regularly. Users may be reluctant to register for access, particularly if the process requires effort. 89,90,94 Any registration process should therefore be clear and short, preferably limited to one screen. 96 The value of the system and its benefits for the user need to be clearly defined before registration, for instance through an engaging homepage. 96

The URL should be simple, memorable and clearly associated with the focus of the dashboard. 89

Having real-time access to a dashboard during clinics with patients was seen as a valuable feature for clinicians. 77

Flexibility and individualisation

The theme of flexibility and individualisation was reported in 11 articles. Users valued the ability to tailor a system to individual and organisational needs. 94,97–100 This functionality could include personalised alerts and reminders,77,79,93,100–102 the option to set default filters75,87 and the ability to pin particular information to the screen to appear at all times, enabling comparisons. 77 Benefits were also reported from being able to dynamically add, remove and adjust elements of the system to fit the user’s focus and workflow. 74,82,103

The appropriate style to use when presenting the data is highly related to the nature of the data set and its audience, and, hence, it was recommended in four studies that the user is presented with a choice of graphs, dials or tables that can be adapted to individual preferences. 74,82,102–104

Usability and navigation

Usability was considered in 24 studies (as reported in 26 papers). Study authors considered a simple-to-follow, clear layout that anticipates the user’s workflow as key to usability. 75,87,90,102,103,105 Faster interpretation of the data is enabled by applying colour to emphasise differentiations and by adopting ‘most common’ lists. 79 Scrolling should be avoided or limited according to study authors,89,96,106 and information should always be accessible in as few mouse clicks as possible. 79,100

Using bright, distinct and highly contrasting colours for the interface is recommended. 84,86,96,104,107,108 Warm colours have been found to be inviting. 109 Black fonts on contrasting, light-coloured backgrounds are favoured. 109 The font must be large and clear in style to ensure accessibility. 76,89,100,106,108

To ensure comfortable navigation, the browsing options should always be clearly visible on all pages. 76,96,110 The navigation buttons should be large and clearly labelled. 90,108 A left-to-right menu has been found to be more successful than a top-down menu. 95 It is also important to consider different screen sizes and browsers to ensure that the layout resizes well and looks suitable on different devices;73,76,111 in other words, the system should be responsive.

Stakeholder preferences for layouts and ways of presenting the data depended on their role. Simple, static dashboard screens were reported to be helpful for ‘at-a-glance’ overviews during busy clinics, whereas interactive views were described as being more helpful for in-depth analytics. 74,103 Correspondingly, simple layouts were found to be appropriate for most users, whereas more complex, highly interactive dashboards were more suitable for ‘power users’ with analytical responsibilities. 74,82,103 Overall, to support the effective interpretation of information on the dashboard, it was recommended that the pages are not cluttered or overburdened with information. 90,96,104,106,109 The information at the bottom of the page tends not to be found easily, thus key points should appear at the top. 82,87 Limiting the immediately visible textual information and giving users an option to view more detail has been found to be effective. 111

The language must be kept simple and abbreviations and jargon should be avoided. 76,89,90,93,104,109,111 One study suggested that the complexity should be kept below a seventh grade (in the USA – equivalent to year 8 in England) reading level, and first-person narrative should be used in the text for a more engaging style. 106 Coyne et al. ,104 who designed and evaluated a dashboard for young people, emphasised that the language should be empowering, not patronising. 104 To avoid misinterpretation, it is important to provide definitions for any dashboard elements that may not be immediately obvious. 74,100,103,112

Use of images and videos

Although images are considered to be effective at fostering engagement with dashboards,87,105 one study emphasised the need to ensure that their purpose was informative, not solely decorative. 109 The choice of pictures should reflect users’ realities regarding factors such as age, gender or ethnicity. 105,106 Videos were found useful both for providing instructions on how to use the dashboard and for providing content, for instance, in the form of patient stories. 88,106,107

Chart types

Four studies reported that line and bar charts were the clearest and most appropriate charts for providers. 76,84,87,103 Line graphs were considered to be particularly suitable for comparisons over time, and they can be used with tool tips. 76 By contrast, one study found that speed dials were more effective than bar charts or radar plots. 77 This might have been linked to the nature of the displayed data, which focused on lifestyle. A sixth study compared the effectiveness of graphs and tables and found that the former were more appropriate for tasks that required analysing relationships and the latter were more appropriate for extracting specific values. 101 This study also showed that graphical formats were suitable for providers with a lower level of analytical skills, whereas tabular formats were more accurate for complex tasks.

Decision-making users with highly developed analytical skills may benefit from innovative graphs showing different data simultaneously82,84 (e.g. with multiple graphs on one screen). 80 Users often stated a desire for historical comparisons. 76,83,84,87,93,97,98,112 When reference data points, such as national averages, are included, interpretation is facilitated.

Regardless of the type, graphs need to be clearly labelled. 74,76,91 Displaying proportions and sample sizes is important for informed judgement74 and using highly contrasting colours to differentiate data points on the graphs helps to avoid misinterpretation. 82,84 The red/amber/green (RAG) traffic-light colour system has been found to be universally recognisable across health care. 76,78,79,81,83,95,112

Data interrogation

The ability to filter the data in real time and to sort these by any level and quality indicator was found to be valuable. 73,76,112 Importantly, the filter parameters need to be practical, clearly defined and aligned with the user’s work. 73,76,80,112 The design needs to make it clear that the page has changed after filtering. 76

Search boxes were also found to be useful for interrogating the data, and a drop-down list or dictionary of suggested search terms was recommended to meet the needs of users who may not be sure what to look for. 90

Hartzler et al. 99 considered the use of pictographs on a dashboard designed for patients and found that weather pictographs were more effective at illustrating patient-reported outcomes than the overly simplistic ones based on smiley and sad faces or charged batteries.

Print and export functions

A feature highlighted in four studies,74,98,104,109 as desired by users, was the option to print or download information and data, for patients to use or share with friends and family, and for professionals to use or share with colleagues.

Community features

A number of studies found that fora, chat rooms or similar community features were highly desired by users, to encourage an exchange of experience and information-swapping and to establish communication between patients and other patients or clinicians. 74,86,89,97,99,101,107,108,111

Discussion and impact on the project

The scoping review provided us with useful recommendations for the development and delivery of the dashboard (see Appendix 3). The evidence suggests that a clear definition of value gains on the homepage and a simple, short registration process are essential for initial user engagement. Ensuring convenience of access and integration with a user’s workflow are important for continued engagement. This can be achieved by offering users the ability to tailor the dashboard to their needs and preferences, making information available quickly and in a clear format, as well as offering a range of filters to enable quick analysis. Thus, the over-riding finding from studies, and the focus of any health-care dashboard designer, should be on ensuring minimum user effort and making role-specific tasks simple and quick to achieve.

Strengths and limitations

This was a scoping review, and for this reason, although it was rigorous and systematic, it had a broad research question. Most studies considered the health-care professional user, and few evaluated dashboards that were designed for patients. Many of the features elucidated are no different to more generalised good dashboard design features (see Chapter 10). For example, many common recommendations from the review studies accord with La Grouw’s61 assertion that a good dashboard requires appropriate high-level summary data, display features that are appropriate for the message and design features that are customised to the requirements of the specific users. Nonetheless, sufficient papers were located to provide a clear picture of health-care dashboards as needing to be easily integrated within existing workflows and simple and effortless to use, but flexible. It was clear that health-care dashboard users were pressed for time.

Implications for research and health care

This scoping review provides a valuable summary of evaluation-based recent evidence on desirable design features for health-care dashboards. The findings have been used to develop a list of recommendations for evidence-based health-care dashboard design, combined with other work. This list of recommendations can be checked by dashboard researchers, commissioners and developers within health care when developing new dashboards, for their optimisation.

How this informed later stages of the study

The findings of this scoping review were fed into a report shared among research team members. The immediate implications for the design of the dashboard were discussed with the programmer. Many of the suggestions raised in the articles discussed in this chapter were considered in the topic guide for the workshops and follow-up interviews, when the opinions were sought of the stakeholders to whom the dashboard is addressed. These were then cumulatively discussed by the research team and considered in relation to the scope of the project. This informed the design decisions for the beta version of the prototype, which was subsequently tested in the proof-of-concept stage.

The scoping review was also essential for designing the DCE. The key design features identified in this chapter informed the list of attributes for the DCE (see Chapter 7). The cove research team met with the economist working on the project, Emmanouil Mentzakis, on three separate occasions, during which the list and variations of the attributes were further discussed and adapted. The final list of attributes was shared with the AG for comments before the DCE survey went live.

Chapter 3 Scoping studies

In this chapter, consideration is given to the data and information that were collected and used to develop the initial plans are considered for the rule-based IR and dashboard/toolkit development, and the involvement of various stakeholders (patients, carers and health-care professionals). The results from the different data sources are synthesised towards the end of the chapter, with findings from the scoping review of the literature (see Chapter 2) also included. The chapter is concluded by considering the limitations of the approach, the implications of the findings for research and health care, and the impact on the project, including recommendations to inform the rule-based IR and toolkit design.

Introduction

The scoping stage enabled us to build on existing research and incorporate the stated needs of the different stakeholders in health care. The stage comprised the following elements:

-

development of preliminary themes from prior research on the patient experience, in particular, the prior machine learning work and a rapid review of thematic analysis of similar PES data (with themes summarised in Table 2)

-

two online surveys, important in giving the different stakeholders (patients, carers and health-care professionals) a voice in the shaping of the study from the start; although it was originally planned to use crowdsourcing for these, it was advised when applying for ethics approval that a more focused approach would need to be undertaken.

These elements are now considered in more detail.

Rapid review of prior research

A rapid review of the literature on patient experience themes was undertaken, using a focused search strategy that was not intended to be exhaustive, but rather to help us to develop the taxonomy of themes. Studies were included across health-care domains that might be relevant to the transferability of the approach. Searches were undertaken in May 2016 and updated on 31 May 2017.

The inclusion criteria were:

-

the study grouped into themes the free-text comments completed by patients as part of a patient health-care experience survey

-

in mixed-methods analyses, free-text themes had to be distinguishable from other themes

-

studies published since 2006

-

English-language studies

-

peer-reviewed studies.

The exclusion criteria were:

-

not satisfying the inclusion criteria

-

patient satisfaction surveys (such as the Friends and Family Test, which probes for a value judgement on whether or not expectations were met, rather than encouraging patients to be specific about how points of care were experienced)

-

patient experience surveys that focused on aspects of care that were not generalisable

-

statistical analyses.

The search was limited to the last 10 years to obtain an up-to-date perspective in the context of modern health care. Only peer-reviewed articles in English were considered because this was a rapid review and in order to avoid unpublished reports that may have been biased by survey or health-care provider interests, and three main databases were searched: Web of Science including PsycINFO, CINAHL and PubMed. See Appendix 1 for the search strategy. This combined synonyms and related terms for digital dashboards with potential outcome measures, such as usability, and with terms for health or health care.

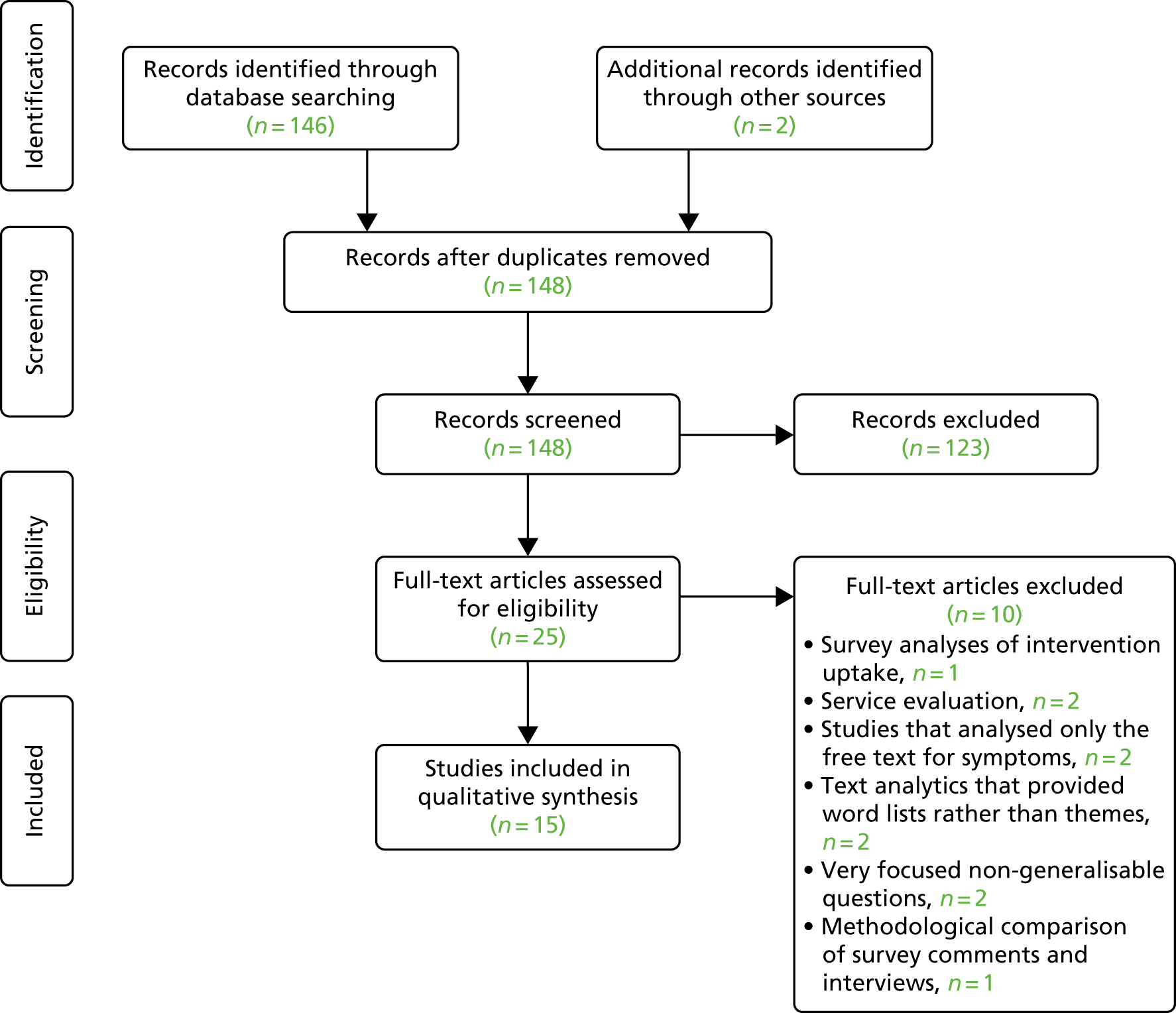

Altogether, 148 potentially relevant articles were identified from these three sources. Once articles were rejected that did not fit the inclusion criteria on the basis of their titles and abstracts, 25 full-text articles were considered. Survey analyses of intervention uptake (n = 1) or service evaluation (n = 2) were then excluded. Studies that analysed only the free text for symptoms (n = 2), text analytics that provided word lists rather than themes (n = 2), very focused non-generalisable questions (such as those on the lesbian, gay, bisexual and transgendered experience) (n = 2), and methodological comparisons of survey comments and interviews (n = 1) were excluded. This left 15 articles for inclusion (see Appendix 4 for the PRISMA flow diagram).

The 36 themes identified in this review are summarised in Table 2. This table shows that transport, hospital environment and food were the three most common structural themes across studies. Considering health-care systems, access was commonly mentioned and no mention was made of broader system constraints, such as NHS funding. Communication and information was the most common theme in the individual needs category and overall; other themes in this category were broken down into quite granular levels. Waiting times, co-ordinated care, and aftercare were the most commonly reported process themes. Studies by team members and an independent analysis of the Scottish CPES contributed the most themes, highlighting the importance of the study and the richness of CPES as a data source.

| Theme: presence or absence of | Study | Tippens et al.31 | McKinnon et al.34 | York et al.33 | Poole et al.30 | Lian et al.28 | Henrich et al.26 | Fradgley et al.24 | Cunningham et al.22,23 | Number of studies | Suggestions from the survey | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| CQC standards | Bracher et al.21 | Wiseman et al.14 | Wagland et al.32 | Iversen et al.27 | McLemore et al.29 | Hazzard et al.25 | Attanasio et al.20 | |||||||||||

| Transport- or travel-related issues | ✓ | ✓ | ✓ | ✓ | ✓ | 5 | ||||||||||||

| Hospital environment and equipment | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 7 | ✓ | |||||||||

| Resources | ✓ | 1 | ||||||||||||||||

| Financial concerns | ✓ | 1 | ||||||||||||||||

| Safety | ✓ | ✓ | 2 | |||||||||||||||

| Staff knowledge, skills and abilities | ✓ | ✓ | ✓ | ✓ | 4 | |||||||||||||

| Consent around treatment | ✓ | ✓ | 2 | |||||||||||||||

| Food | ✓ | ✓ | ✓ | ✓ | 4 | |||||||||||||

| Staffing levels | ✓ | ✓ | ✓ | ✓ | 4 | ✓ | ||||||||||||

| Out-of-hours and weekend care | ✓ | 1 | ||||||||||||||||

| Signposting to external sources of support, such as charities | ✓ | ✓ | 2 | |||||||||||||||

| Privacy | ✓ | 1 | ||||||||||||||||

| System | ||||||||||||||||||

| Accessing the care system | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 7 | ✓ | ||||||||||

| Feeling that the system had caused their health to worsen | ✓ | 1 | ||||||||||||||||

| Trust in staff or system/confidence in the system | ✓ | ✓ | 2 | ✓ | ||||||||||||||

| Individual needs | ||||||||||||||||||

| Clear information/communication between patients and staff/candour | ✓ | ✓ | ✓ | ✓ | . ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 12 | ✓ | |||||

| Involvement in decision-making | ✓ | ✓ | ✓ | 3 | ✓ | |||||||||||||

| Emotional, social and psychological support | ✓ | ✓ | ✓ | ✓ | 4 | ✓ | ||||||||||||

| Empowerment | ✓ | 1 | ||||||||||||||||

| Empathy and compassion | ✓ | ✓ | 3 | ✓ | ||||||||||||||

| Person-centred care, dignity and respect | ✓ | ✓ | ✓ | 3 | ✓ | |||||||||||||

| Involving the patient’s family | ✓ | ✓ | ✓ | 4 | ||||||||||||||

| Good support | ✓ | 1 | ||||||||||||||||

| Preparation for side effects | ✓ | ✓ | ✓ | ✓ | 4 | |||||||||||||

| Managing expectations | ✓ | 1 | ||||||||||||||||

| Good clinical care | ✓ | ✓ | 2 | |||||||||||||||

| Processes | ||||||||||||||||||

| Speedy and efficient processes | ✓ | 1 | ||||||||||||||||

| Administration problems | ✓ | 1 | ||||||||||||||||

| Long waits and delays/waiting for appointments/waiting on the day | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 9 | ✓ | ||||||||

| Co-ordinated vs. fragmented care/communication between staff and staff, staff and institutions and institutions and institutions | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 6 | |||||||||||

| Organisation of services | ✓ | 1 | ||||||||||||||||

| Diagnosis and referral | ✓ | ✓ | ✓ | 3 | ||||||||||||||

| Discharge | ✓ | ✓ | ✓ | 3 | ||||||||||||||

| Continuity of care | ✓ | ✓ | 2 | |||||||||||||||

| Follow-up and aftercare | ✓ | ✓ | ✓ | ✓ | ✓ | 5 | ||||||||||||

| Good clinical care | ✓ | ✓ | ✓ | ✓ | 4 | |||||||||||||

| Total = 36 themes | 11 | 13 | 8 | 9 | 3 | 8 | 6 | 2 | 4 | 4 | 4 | 2 | 4 | 6 | 26 | |||

To these themes, CQC fundamentals of care were added, as suggested by the AG.

Themes from prior research by the team in more detail

Prior research by the wider PRESENT team, and included in the rapid review, is considered in more detail in this report for two reasons. First because this shows the team’s natural progression in the thinking about text analysis and second, it includes a study commissioned by Macmillan Cancer Support,21 which involved the thematic analysis of free text from the 2013 WCPES. The same data set was used independently of this analysis to develop the prototype system.

The WCPES analysis, reported by Bracher et al. ,21 was manual and took several months, using a four-stage process of coding and analysis. This involved literal coding (i.e. concrete coding based on respondent’s own words113) for areas of cancer patient experience (i.e. concrete coding based on respondents’ own words;113 literal coding for specific categories within different areas of cancer patient experience; identification of more abstract themes; and comparisons between closed questions and free-text responses). This research highlighted the need for a more automated approach to analysis for these data. The results from the original analysis by Bracher et al. 21 were compared with the PRESENT text-analytics process outputs in the study’s sensitivity and specificity analysis (see Chapter 8).

The main themes from the study by Bracher et al. 21 were:

-

communication between patients and staff

-

waiting for appointments

-

waiting to be seen on the day

-

communication between staff and/or institutions

-

concerns about staffing levels

-

out-of-hours and weekend care

-

investigations and diagnostic services

-

aftercare

-

emotional, social and psychological support

-

hospital environments

-

travel-related issues

-

food and catering

-

financial concerns.

Wiseman et al. 14 undertook a framework analysis of free-text data from the 2012–13 National CPES subsample for two London Integrated Cancer Systems, covering 27 trusts. This comprised 15,403 comments from > 6500 patients. The dominant themes were poor care, poor communication and waiting times in outpatient departments, consistent with the analysis by Bracher et al. 21

Team members had also previously undertaken a memory-based machine learning analysis of the 1688 free-text comments in a population-based survey of colorectal cancer survivors. This necessitated the manual analysis of a large proportion of the data, to train the machine learning algorithms. The main themes from this study were similar to those for the WCPES analysis, relating to:

-

emotional support

-

diagnosis and referral

-

preparation for treatment side effects

-

signposting

-

aftercare

-

co-ordinated care.

New surveys

In spring 2016, patients, carers and health-care professionals were invited to complete an online survey, using the SoGo (Herndon, VA, USA) survey platform (www.sogosurvey.com), which prompted them to mindmap terms and phrases that they might use when writing about their experiences as a patient or potential patient. This is a modification of an approach used by the GATE developers; 15 respondents are considered to provide sufficient information. 114 Respondents were asked to mindmap words in four different categories, relating to their condition, treatments, health-care settings and health-care staff. Respondents were also asked to suggest topics or themes for inclusion in the PRESENT dashboard.

A second ‘dashboard-scoping’ survey was sent out at the same time, which asked a similar range of stakeholders to tell us what they thought a good health-care dashboard should be like functionally and visually, and what good existing examples they could recommend to us. The later DCE data (see Chapter 7) make for an interesting comparison with this study.

As inclusion criteria for the surveys, participants:

-

had to be NHS staff, patients and carers

-

should have a confirmed diagnosis of the cancer or other condition that had led to their being sent the survey, or care for such a person informally (e.g. partner) or professionally (e.g. nurse); non-cancer patients were included to make the approach robust and potentially transferable

-

must be aged ≥ 18 years

-

should have acknowledged that they read the information form (two consent boxes in the questionnaire must be ticked before the participant can proceed).

The surveys were piloted with six health-care professionals and two patients. The aim was to recruit up to 100 participants within 3 months, which was considerably more than the minimum needed, to ensure that diverse voices were heard. Participants were not contacted directly, but invited to fill in the surveys via the relevant networks of study collaborators, for a broad, UK-wide, but targeted, approach. This included internet-based and non-internet-based platforms.

Responses, in the form of ideas, concepts, words and phrases, were collated by Daria Tkacz and analysed by hand by Daria Tkacz and Peter West. The findings were shared with the rest of the team to inform both text analysis and dashboard design.

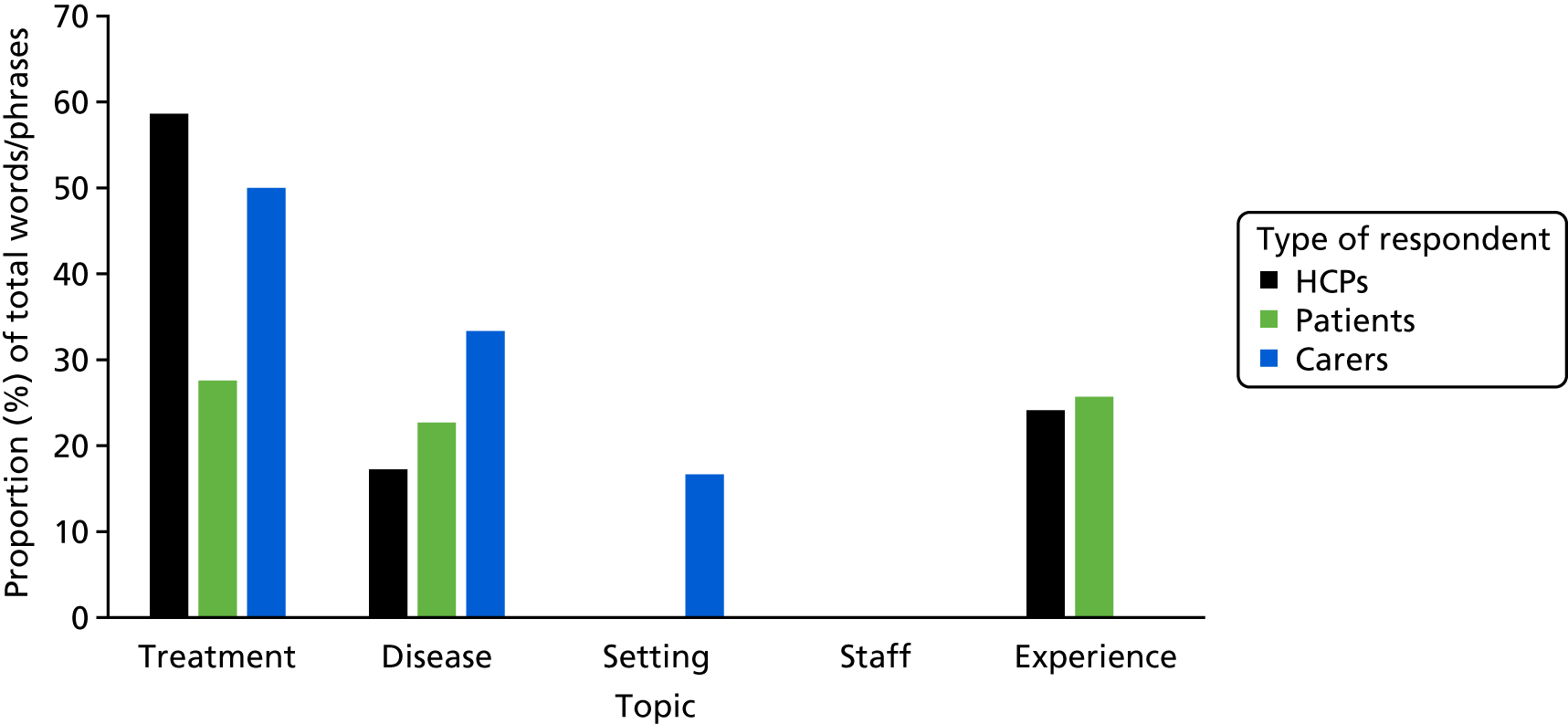

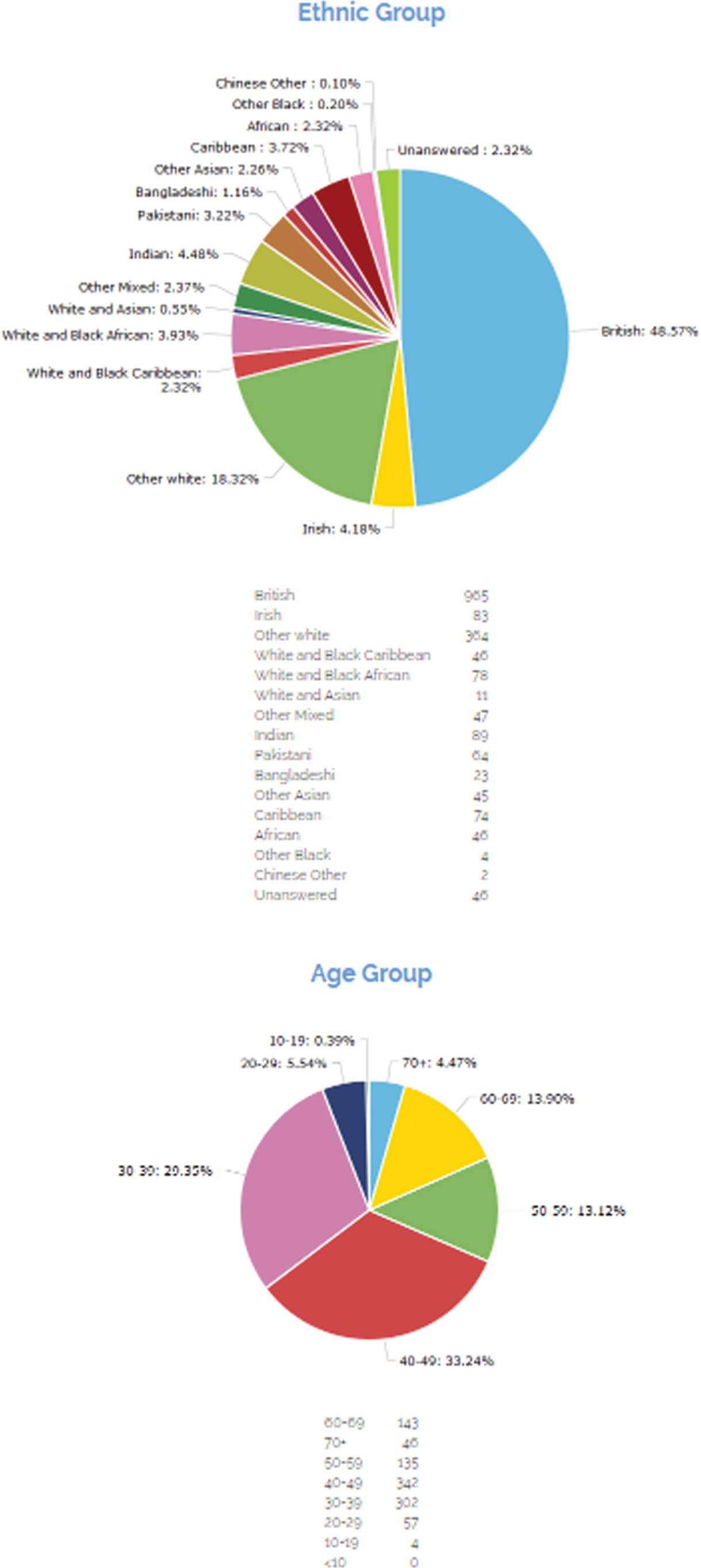

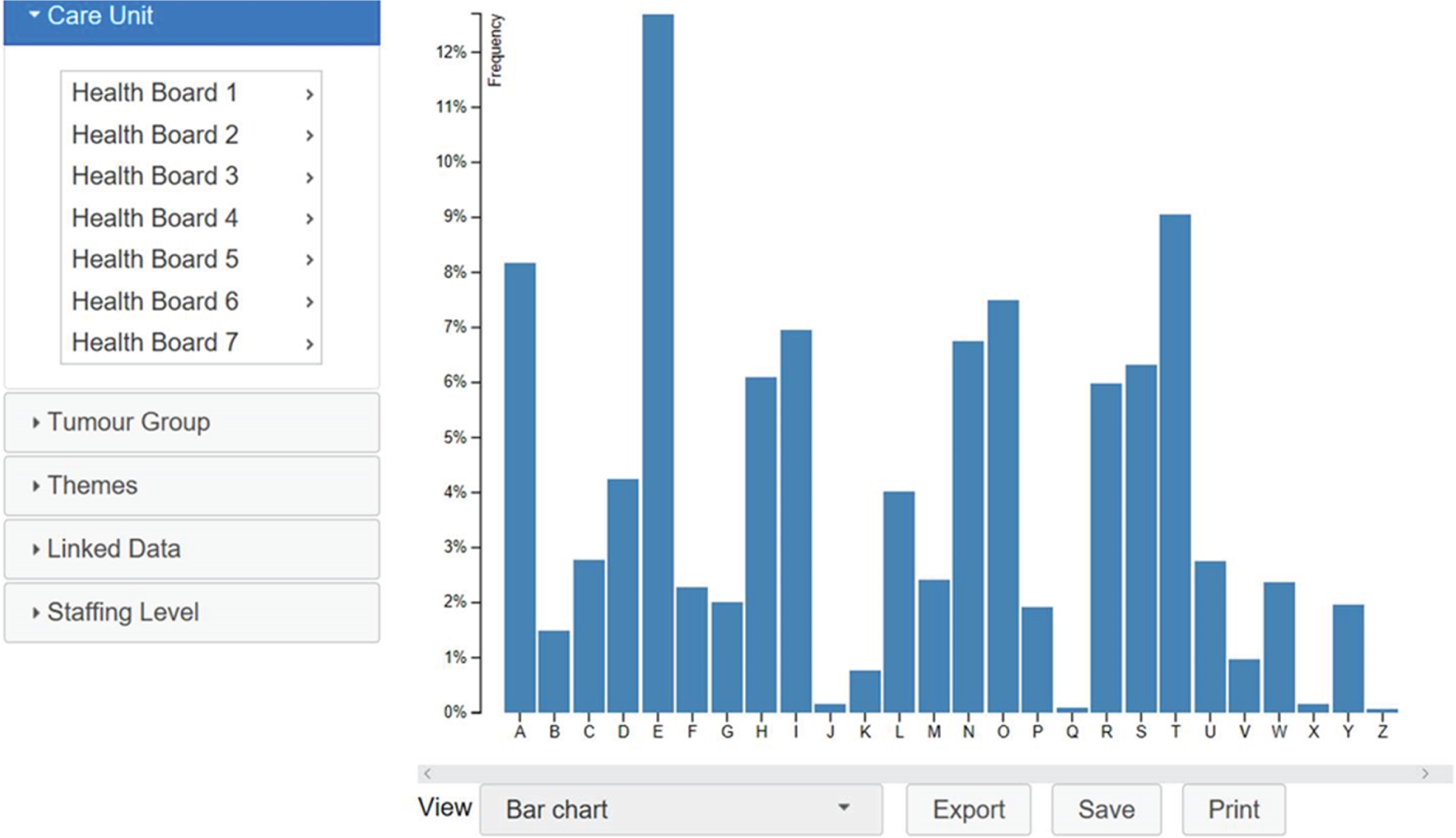

For the terms and themes mindmapping survey, there were 77 respondents, 70% of whom were female and 83% of whom identified as being of white ethnicity. Of these, the majority who specified their professional role self-identified as allied health professionals or nurses. Health-care professionals provided more names, phrases and figures of speech for treatments than for cancer or the patient experience, whereas patients provided a more even spread of terms (Figure 2). Carers also specified names for settings, but as there were only two carers, their data are not discussed further here. No participants specified alternative names for staff.

FIGURE 2.

Proportion (%) of total words suggested for each category by staff, patients and carers in the terms and themes mindmapping survey. Note that there were only two carers. HCP, health-care professional.

When health-care professionals provided treatment names, they were more likely than patients to use euphemisms (e.g. ‘servicing the cancer’), indicating that they considered that the topic might be sensitive to patients. When patients used euphemisms, they were more likely to convey emotion or dynamism (e.g. ‘whipping the prostate out’). Health-care professionals also tended to use acronyms or abbreviations, whereas patients tended to use synecdoche as abbreviations. Synecdoche is the naming of a part of something to refer to the whole, such as in the expression ‘the knife’, which stands for ‘the surgical knife’, or ‘the line’ instead of ‘the catheter line’.

When talking about the focal illness or condition, both patients and staff often used euphemisms, indicating the delicate nature of illness talk. Patients also tended to express emotions, use metaphors for expressiveness (e.g. ‘waterworks problems’) or use understatement, which may be a way of coping. 115 Health-care professionals tended to use abbreviations and metonymy, both being concise and efficient ways of referring to something rather than emotive terms. Metonymy refers to terms that are associated in meaning rather than being part of the original, such as saying ‘the Crown’ instead of ‘the Queen’.

When referring to the illness experience, both patients and staff used metaphors to suggest a fight or a journey (such as ‘I’m going down the tubes’ said by a patient). Patients also expressed their emotions and the uncertainties of their illness with phrases such as ‘living a game of snakes and ladders’, ‘living under a shadow’ and ‘going through hell’. Staff used idioms that focused on the work the patients did to fight their disease and conceal their emotions, such as ‘bottling it up’ and ‘the slog’.

Overall, patients and staff showed the greatest similarity in their talk of treatments and the greatest difference in their terms for the illness experience. Patients emphasised their emotional and uncertain journey, whereas staff believed that patients concealed their emotions. For all the categories of words, staff words and phrases tended to have a more efficient and practical orientation, although it was noted that the number of patients was relatively low, meaning that this research needs to be extended.

Responses were received from 35 respondents for the dashboard-scoping survey, 33 of whom were health-care professionals, but only 70% of whom used dashboards routinely. Respondents rated the quality of the data presented as the most important feature of dashboards, with relevant, focused, meaningful and structured displays. The second most important feature was the clarity and simplicity in the dashboard design, with an intuitive, easy-to-navigate interface. Users wanted visualisations that conveyed the meaning of the data through format choices, such as colour and graphic style. As a third most important feature, they felt that the dashboard should be designed to be used by executive board members as well as clinical staff, so that it had a real impact on the quality of care. Anonymity, flexibility, regular updates and visual appeal all received fewer votes from staff, although they were also considered to be important.

As special features and tools, staff suggested that the dashboard should include comparison features, the raw data, a ‘predictive intelligence capability’ to inform and help plan capacity and the power to differentiate between different areas of care and staff groups in the comments.

The suggested themes were as follows:

-

staff caring

-

involvement in decision-making

-

trust in staff

-

cleanliness

-

being asked about fears/concerns/feeling safe

-

waiting times

-

availability of specialist staff

-

patient satisfaction with access to information

-

availability of hospital staff

-

ease of access to services

-

time to listen to concerns

-

waiting times to be seen in clinic and day care.

These were compared with the themes determined from previous research (see Table 2) and it was found that they incorporated the most common themes and others that were moderately common within the literature. This is encouraging, given that the surveys were predominantly completed by health-care professionals and the themes reported in Table 2 were, with the exception of CQC themes, based on patient feedback.

Impact of the scoping stage on the initial prototype

A list of PES themes was collected from the different data sources to create a tentative taxonomy of themes, which was built on in further stages of the study. The final taxonomy has the potential for use in other research. This initial theme list was important in informing both the text-analytics rule-writing and the prototype dashboard display. Suggestions for priority themes influenced what was shown in the first prototype. Mindmapped terms and phrases were incorporated into the text-analytics gazetteers to enhance sensitivity. The differences were considered in perspective between staff and patients/carers were considered when facilitating stage 2 concept-mapping workshops (see Chapter 6) and these will be reported in the dashboard toolkit.

An attempt was made to accommodate all of the suggested features in the first prototype dashboard design. Theme icons were developed to be clicked on to drill down to more detail, including original comments, enabling the rationale for higher-level directives to be understood at the clinical ‘coalface’ through detailed example. High-level overviews were developed to highlight problem areas and benchmark regional and national performance on one computer screen. As well as providing text analyses, the facility was provided for users to be able to upload and incorporate quantitative data, so that their link to the rule-based IR-generated data could be explored. Importantly, for the health of patients and the NHS, this would maximise the usefulness of all of the data sets, not just the free text. A simple predictive tool and alert flags to indicate data reliability were also incorporated. It should be noted that as the study progressed, as a result of further research and input, some of these features were modified or disenabled. These early examples could, however, be quickly replaced.

The strengths of this phase of the work are that it draws on several different types of data and thus it is likely to have captured the most significant themes. It enables the different stakeholders in health care to contribute in a cheap, simple and efficient way. Thus, the approach could be adopted in complex interventions research as a way to engage the different stakeholders in early parts of a study. The limitations are that the rapid review of themes was undertaken by one researcher and was limited, the sample sizes for the surveys were small and there was considerable sampling bias.

As this stage relies partly on surveys, there was concern that this would immediately exclude some people from commenting. However, this research was intended only to inform the development of the digital dashboard; therefore, this was not the limitation that it might appear to be at first. The need for computer literacy and access to computers in order to be able to see such information in the age of big data is, however, something that needs to be considered within larger debates on the accessibility of big data as is also the need for opportunities to contribute to such data or its analysis and representation.

Chapter 4 Information extraction (rule-based information retrieval)

Having undertaken the groundwork for developing the free-text analysis and display approach, in this chapter the processes involved in rule-based IR are considered in more detail. This is followed by a detailed explanation of the solutions to problems with the analysis of survey free-text comments. In addition, the chapter describes problems that became apparent a posteriori; for example, it was realised that real-name redaction by the data suppliers [QualityWatch; URL: www.qualitywatch.org.uk (accessed 9 October 2018)] was incomplete, and a new secondary aim was to provide a solution to this.

Introduction

GATE (a General Architecture for Text Engineering) is a software framework and collection of resources that can be used for various natural language processing (NLP) tasks. 44,116 NLP is a field of computer science that involves getting computers to process and interpret human language. This covers a range of possibilities, such as:

-

parsing (i.e. sequentially ‘reading’ or analysing) natural language and ‘annotating’ it for grammatical features and other syntactic elements

-

extracting information from segments of natural language

-

actually ‘understanding’ natural language.

Each of these possibilities usually begins by ‘tokenising’ text, that is, breaking it up into words, punctuation marks, numbers and other discrete features. This is a very basic level of analysis, but provides a lot of information and packages the text into units for further processing. The next stage is usually to classify words into their grammatical categories or POSs and label them accordingly, which is known as POS tagging or tagging. Each token is associated with a POS. Tokens and tags are forms of linguistic annotation of the text.

One branch of NLP that is relevant and of interest to us is best described as information extraction (IE) from the text. 117 IE is concerned with the extraction of structured data from unstructured or semistructured data. Free-text answers on a survey are an example of unstructured text data. An example of semistructured text data is the ingredient lines in recipes for cooking food. Data that are grouped into themes provide an example of structured data.

Rules-based NLP approaches, such as the one used in the study, are based on an expert system of rules hand-coded by humans (see Alternative approaches to the analysis of free-text comments and Information retrieval for the rationale for choosing this approach). As the system becomes more complex, the interactions of these rules can also become more and more complex. Other non-rule-based approaches include statistical NLP approaches: NLP using statistics, probabilities, machine learning and similar techniques. Machine learning can be supervised (annotated corpora as learning sets), unsupervised or semisupervised. Different approaches can be combined.