Notes

Article history

The research reported in this issue of the journal was funded by the HSDR programme or one of its preceding programmes as project number 16/04/06. The contractual start date was in October 2017. The final report began editorial review in January 2020 and was accepted for publication in November 2021. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HSDR editors and production house have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the final report document. However, they do not accept liability for damages or losses arising from material published in this report.

Disclaimer

This report contains transcripts of interviews conducted in the course of the research and contains language that may offend some readers.

Permissions

Copyright statement

Copyright © 2022 Randell et al. This work was produced by Randell et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaption in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.


Chapter 1 Introduction

Overview

This study involved the design and evaluation of a quality dashboard (QualDash) for exploring national clinical audit (NCA) data. This chapter provides the background to the study, describing what NCAs are and explaining what is meant by a quality dashboard. We then define the research aim and objectives. We conclude the chapter by outlining the structure of the remainder of the report.

Background

National clinical audits

In the UK, the Healthcare Quality Improvement Partnership (HQIP) centrally develops and manages a programme of NCAs each year through the National Clinical Audit and Patient Outcomes Programme (NCAPOP). The number of NCAs included within the NCAPOP varies year to year. At the time of writing (November 2020), there were 61 NCAs included in the NCAPOP. The NHS standard contract requires all health-care organisations that provide NHS services to participate in the NCAPOP NCAs and gives commissioners the power to impose penalties on NHS trusts that fail to participate. 1 Since 2012, trusts have been responsible for funding a proportion of NCAPOP costs through subscription funding, whereby trusts pay an annual amount to HQIP (and this amount has remained at £10,000 since 2016). In addition, there are 82 independent NCAs, not part of the NCAPOP, funded either through subscription, by a charity or professional body or by NHS England (London, UK). Each year, the NHS England Quality Accounts list advises trusts which NCAs they should prioritise for participation.

Each NCA focuses on a particular clinical area or condition and aims to produce a publicly available report annually. The objective of NCAs is to systematically measure the quality of care delivered by clinical teams and health-care organisations and to stimulate quality improvement (QI). 2 Although there is evidence of positive impacts of NCAs,3–5 and previous research suggests that clinicians consider NCA annual reports important for identifying QI opportunities,6 there is variation within and between trusts in the extent to which they engage with NCA data. 6,7 A number of NCAs provide trusts with online access to more recent data and allow users to download data for local analysis. For example, the National Hip Fracture Database provides live data on how hospitals are meeting key performance indicators, plus interactive online charts, dashboards and benchmarking summaries. However, with a recognised shortage of data analysis skills within the NHS,8,9 the use of NCA data poses challenges for some trusts. 7 Consequently, NCA data are substantially underutilised and the potential for NCA data to inform QI is not being realised fully.

Dashboards

Dashboards use data visualisation techniques to provide information to individuals, services or organisations in either a paper or a computer-based format. 10 Use of such techniques is thought to improve data comprehension11 and reduce cognitive load,12 resulting in more efficient and effective decision-making. 13 A distinction can be made between clinical dashboards and quality dashboards. Clinical dashboards may provide data at the level of the patient, the clinician (showing all patients they are caring for and comparing them with their peers and national benchmarks) or allow the user to move between viewing information at both of these levels,14 with the aim of informing decisions about, and thereby improving, patient care. 15 By contrast, quality dashboards show performance at the ward or organisational level (and ideally will provide feedback to be used at both levels16,17) to inform operational decision-making and QI efforts. 18 Visualisations provided by quality dashboards can inform QI by supporting the identification of previously unnoticed patterns in the data. 19

Health-care providers, in the UK and internationally, are increasingly using dashboards to measure care quality and as the basis for QI initiatives. Lord Darzi’s next-stage review20 and the health informatics review,21 which were both published in 2008, recommended greater use of dashboards by NHS organisations. Two distinct strands of thinking permeated these reviews and subsequent policies and guidance on dashboards. One strand of thinking emphasised the need for summary real-time information for use by clinicians in their clinical work. The former NHS Connecting for Health agency encouraged these developments through its clinical dashboard project. 22–24 This was followed by an emphasis on the need for integrated real-time information on care quality, which could be interrogated by clinical teams for the purpose of learning. 25 The other strand of thinking focused on the need for trust boards and regulators to have summary performance information. NHS foundation trusts already published performance dashboards, required by Monitor, but otherwise trusts did not use dashboards for their own internal reporting, or to report to the Care Quality Commission (CQC) or (as they were then) primary care trusts. Developments in this area were prompted by two major reports in 2013, that is, the second Francis report on substandard care provided at Mid Staffordshire NHS Foundation Trust26 and the post-Francis Keogh review of 14 NHS trusts with persistently high mortality rates. 8

An empirical study of quality dashboard use in acute trusts in England was published in 2018. 16 A survey of 15 trusts found that most trusts had some form of quality dashboard in place for reporting measures, such as those included in the NHS Safety Thermometer. 27 However, there was significant variation in dashboard sophistication, the term being used to refer both to information technology (IT) systems and to the outputs from these IT systems, typically in the form of printed reports. The majority of dashboards still depended on resource-intensive manual collation of information from a number of systems by central performance management teams, with retrospective reports then being circulated to wards, directorates and trust boards. However, the study also revealed a clear ‘direction of travel’,16 with a desire for real-time quality dashboards.

Despite the enthusiasm for quality dashboards, most empirical literature has focused on clinical dashboards, with the consequence that little is known about how and in what contexts computer-based quality dashboards drive improvement. A review of studies of the use of computer-based quality and clinical dashboards published between 1996 and 2012 included 11 studies that evaluated their impact on care quality. 10 Only one dashboard met the definition of a quality dashboard,28 with the other 10 dashboards being clinical dashboards. Chapter 3 provides an update to this review, focusing on quality dashboards.

Aim and objectives

The study aim was to develop and evaluate QualDash, an interactive web-based quality dashboard that supports clinical teams, quality subcommittees and NHS trust boards to better understand and make use of NCA data, thereby leading to improved care quality and clinical outcomes. The study had the following research objectives:

- to develop a programme theory that explains how and in what contexts use of QualDash will lead to improvements in care quality
- to use the programme theory to co-design QualDash
- to use the programme theory to co-design an adoption strategy for QualDash
- to understand how and in what contexts QualDash leads to improvements in care quality
- to assess the feasibility of conducting a cluster randomised controlled trial (RCT) of QualDash.

Structure of the remainder of the report

Chapter 2 provides the details of the study design and research methods. (Note that details of the study management, including public and patient involvement, are provided in Appendix 1.) Chapter 3 provides an update to the review of computerised dashboards described above, focusing on quality dashboards, while also presenting a summary of substantive theories relevant to understanding how and in what contexts quality dashboards lead to improvements in care quality. Chapter 4 presents our biography of NCAs and the resulting programme theory of how and in what contexts NCA feedback influences QI, drawing primarily on interviews across five NHS trusts, in which we sought to understand what supports and constrains NCA data use. Chapter 5 addresses the first and second objectives. Chapter 5 begins our biography of QualDash, describing functional requirements elicited from the interviews, how these were translated into the design of QualDash through a co-design process with staff from one NHS trust, and the associated programme theory of how and in what contexts QualDash could lead to improvements in care quality. Chapter 6 addresses the third objective. Chapter 6 continues the QualDash biography, describing strategies for supporting adoption, developed with staff from five NHS trusts, additions to the QualDash programme theory resulting from this and the process of undertaking some of these activities prior to installation of QualDash. Chapters 7 and 8 address the fourth objective, reporting the introduction and use of QualDash within the five trusts, the subsequent impacts and the resulting revisions to the QualDash programme theory. Chapter 9 describes new requirements for QualDash that emerged in response to the COVID-19 pandemic. Chapter 10 concludes the report by reflecting on the implications of the study findings and outlining future research priorities.

Chapter 2 Study design and methods

Overview

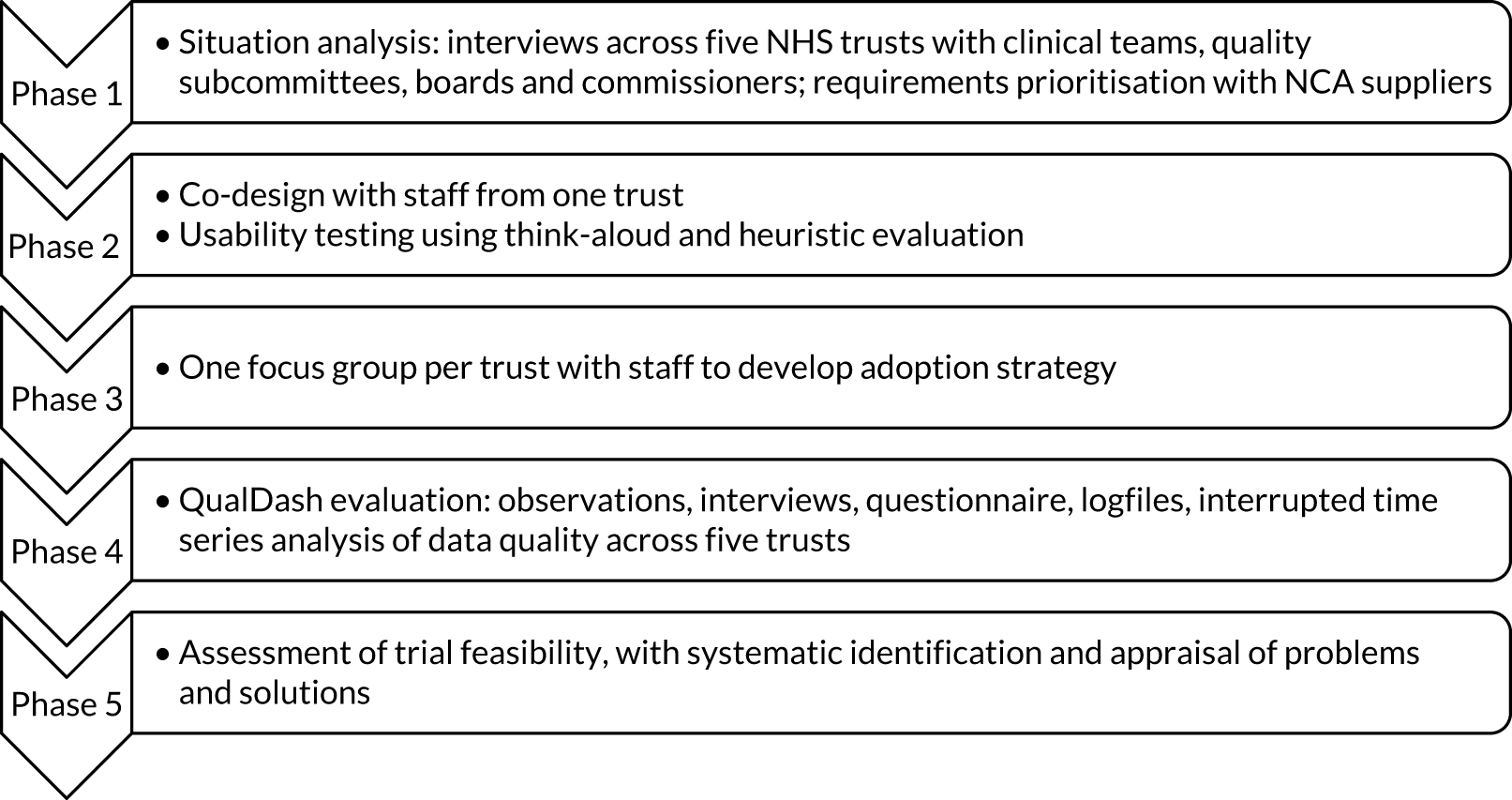

This chapter begins by describing the two approaches used (i.e. realist evaluation and the biography of artefacts). We then summarise deviations to the protocol and reasons for these deviations. The remainder of the chapter is organised according to the study phases (Figure 1).

FIGURE 1.

Overview of study phases.

Realist evaluation

The Medical Research Council guidance for the design and evaluation of complex interventions emphasises that new interventions should have a coherent theoretical basis, with theory being used systematically in their development. 29 In addition, in the field of audit and feedback,25,30,31 and QI more generally,32–34 the need for theory-informed research is well recognised. Therefore, we used realist evaluation35 as a framework for data collection and analysis, an approach that has been recommended for studying QI. 36

Realist evaluation involves building, testing and refining programme theories composed of one or more context–mechanism–outcome (CMO) configurations. These CMO configurations explain how and why an intervention is supposed to work, for whom and in what circumstances. This is because interventions in and of themselves are not seen as determining outcomes. Rather, interventions are considered to offer resources to recipients and outcomes depend on how those recipients make use (or not) of those resources, which will vary according to context. Although theories may sometimes be considered to be abstract, irrelevant and separate from the everyday experience of practitioners, the term can also be used to refer to practitioners’ ideas about how an intervention works,33 and this is how the term is used in realist evaluation. 33,35

Although realist evaluation has been used for studying the introduction and impact of a number of complex interventions in health care,37,38 including large-scale QI programmes,39 there is growing acknowledgement of the value of realist approaches for design. 40–42 In this study, we have used co-design to develop QualDash, as the principles of realist evaluation and co-design have been demonstrated to be complementary. 40

Biography of artefacts

The biography of artefacts approach43 is located within the tradition of science and technology studies. The biography of artefacts approach is concerned with capturing how particular contexts and appropriations of a technology lead to different processes and generate different outcomes, analogous to realist evaluation’s concern with contexts, mechanisms and outcomes. 44 It is an approach that has been used successfully in a number of studies of health information technology (HIT)45,46 and one that we found to be useful in a previous study of dashboards. 16 The biography of artefacts approach involves longitudinal ‘strategic ethnography’43 where data collection is guided by a provisional understanding of the moments and locales in which a technology and associated practices evolve. 44 Although, initially, we intended to use the biography of artefacts approach only for the evaluation of QualDash, it influenced our thinking throughout the study because it enabled us to capture the challenges experienced by both the research team and the intended QualDash users. This helped us to understand and explain why the study unfolded and evolved as it did, with users’ early experiences with QualDash being important contextual factors that shaped subsequent responses. We begin the presentation of our findings through a biography of NCAs before moving on to present the biography of the design, installation, adoption and use of QualDash.

Protocol deviations

This study was completed during the period of the COVID-19 pandemic, which meant that we were unable to undertake all of the activities as outlined in the study protocol. The changes are described in the relevant sections later in this chapter; for transparency, we also summarise them here.

Phase 4: multisite case study

In March 2020, installation of QualDash v2.1 was in progress, with the intention that observations would resume once it had been installed in all sites. However, at this time, all on-site research in hospitals was suspended in response to the COVID-19 pandemic and, therefore, installation of QualDash v2.1 and observations were discontinued. This meant that 148.5 hours of observations were undertaken, rather than the planned 384 hours (see Sampling for further details). It also meant that we were unable to undertake teacher–learner cycle interviews with staff (see Data collection). We notified the National Institute for Health and Care Research (NIHR) of these challenges in our progress report submitted in March 2020.

Phase 4: interrupted time series study

We had intended to consider a range of measures (see Analysis). However, by January 2020, limited QualDash use within our study sites meant that QualDash use could not be hypothesised to have had an impact on these measures. Therefore, we agreed with our Study Steering Committee (SSC) in January 2020 that, if use did not increase, it was not appropriate to use those measures, instead focusing on impact on data quality, for which there was some qualitative evidence. We had planned to undertake further adoption activities following installation of QualDash v2.1, but we were unable to do so because on-site research in hospitals was suspended. Consequently, use did not increase and analysis considered measures of data quality. We notified NIHR of this change in our progress report submitted in March 2020. An additional change was that we had intended to undertake a controlled interrupted time series (ITS) study. By the time that data were to be received for analysis, only three sites had issued research and development (R&D) approval, with delays as a result of prioritising COVID-19 studies and restarting studies that had stopped because of the COVID-19 pandemic. Of the three control sites that had issued R&D approval, only one submitted data, with the failure of the other sites to do so presumably because of service pressures at that time. Given that data for only one control site were received and the matching was not relevant to the measures used in the final analysis, control data were not used, with time providing the control instead (see Sampling). Service pressures meant that site D did not submit data and, therefore, analysis was limited to sites A, B, C and E. We notified NIHR of potential challenges of obtaining data during the COVID-19 pandemic in our progress report submitted in March 2020.

Phase 5: assessment of wider applicability

Part of our plan for phase 5 of the study had been to assess the extent to which QualDash is suitable for different NCAs and the extent to which QualDash meets the needs of commissioners, through three focus groups. However, given the international focus on COVID-19 at the time, and a request from a region-wide Gold Command, we sought, instead, to understand the requirements of such a body (which included commissioners) and how QualDash could be revised to support their work, using interviews for this purpose (see Assessing the wider applicability of QualDash). At this time, we also received a request from one of our sites for us to adapt QualDash to monitor cardiology activity during COVID-19, which we did (see Chapter 9, Adapting QualDash to support daily monitoring). We notified NIHR of this additional work in our progress report submitted in March 2020, which was positively received.

Phase 1: situation analysis

The aim of phase 1 was to undertake a situation analysis (i.e. to identify the nature and extent of the opportunities and problems to be addressed by an intervention and the context within which it will operate). 47 Interviews were used to identify critical elements of context that support and constrain mechanisms underpinning NCA data use for QI, enabling us to establish requirements for the design and introduction of QualDash, while also developing an understanding of the contextual factors that may support or hinder its use. 40 A workshop with suppliers of both NCAPOP and independent NCAs and representatives of HQIP was carried out to prioritise and assess the generalisability of the identified requirements.

Interview study

Settings and participants

Throughout the study, we focused on two NCAs: the Myocardial Ischaemia National Audit Project (MINAP) and the Paediatric Intensive Care Audit Network (PICANet). The decision to work with two audits was motivated by the desire to ensure that the study would generate findings generalisable beyond a single NCA. Both MINAP and PICANet are part of the NCAPOP; however, they are delivered by different suppliers and involve different clinical specialties working with different patient groups and multiple professional groups (e.g. medical and nursing), and include multiple types of metrics (e.g. structure, process and outcome). MINAP and PICANet also differ in data quality and completeness. Further information about these audits is provided in Chapter 4. To increase the generalisability of our findings, in phase 1, we also gathered data on independent audits that have different funding arrangements [i.e. the National Audit of Cardiac Rehabilitation, which is funded by the British Heart Foundation (London, UK), and the Elective Surgery National Patient Reported Outcome Measures Programme, which is funded by NHS England]. There are a number of NCAs for which participation is at individual clinician, rather than trust, level. To understand the impact of this difference, in phase 1, we also gathered data about British Cardiovascular Intervention Society (BCIS) (Lutterworth, UK) and British Association of Urological Surgeons (London, UK) audits.

Throughout the study, we worked with three NHS acute trusts (i.e. sites A–C), which participate in both MINAP and PICANet. These trusts were identified at the time of preparing the study proposal and were selected to ensure variation in key outcome measures [MINAP: 30-day mortality for ST-elevation myocardial infarction (STEMI) patients; PICANet: risk-adjusted standardised mortality ratio (SMR)], using data reported in the most recently published MINAP and PICANet reports (i.e. the MINAP 2014 report, using data from April 2011 to March 2014,48 and the PICANet 2015 annual report, using data from 201449). Given that trusts that participate in PICANet tend to be larger and to be teaching hospitals, they are not representative of the range of trusts that participate in MINAP. Therefore, MINAP use was also studied in two district general hospitals (DGHs) (i.e. sites D and E) without a paediatric intensive care unit (PICU). These were selected to ensure variation in the same key MINAP measure. To further ensure generalisability of our findings, we selected trusts with and without foundation status. Those trusts with PICUs also varied in the number of PICU patients treated per year. We selected one trust that was included in the Keogh8 review of 14 trusts with persistently high mortality.

To identify interviewees, a combination of purposive and snowball sampling was used. In each site, the clinical contact for the study (typically a MINAP or PICANet lead) was interviewed first. These contacts were then asked to identify others who were involved with NCAs, enabling us to map the networks through which NCA data were captured, accessed and analysed. We interviewed participants from a range of professional groups and with a variety of roles. Fifty-four interviews were conducted between November 2017 and June 2018. The number of interviews per site is provided in Table 1.

| Role | Teaching hospital site A | Teaching hospital site B | Teaching hospital site C | DGH site D | DGH site E | Total |
|---|---|---|---|---|---|---|
| Doctor | 5 | 2 | 2 | 4 | 1 | 14 |
| Nurse | 1 | 2 | 7 | 2 | 2 | 14 |
| Audit support staff | 1 | 1 | 2 | 0 | 0 | 4 |
| Trust board and committee member | 2 | 1 | 0 | 1 | 0 | 4 |
| Quality and safety staff | 2 | 1 | 2 | 1 | 1 | 7 |
| Information staff | 3 | 1 | 1 | 0 | 1 | 6 |
| Other | 3 | 1 | 0 | 0 | 1 | 5 |
| Total | 17 | 9 | 14 | 8 | 6 | 54 |

Data collection

Realist ‘theory gleaning’ interviews were undertaken, whereby interviewees are asked to articulate how their contextual circumstances influence their behaviour. 50 The research team developed a semistructured interview topic guide. Questions sought to understand NCA data use in the broader context of processes for monitoring and improving care quality, how and in what contexts NCA data were used for QI, and how and in what contexts interviewees considered a quality dashboard could provide benefit. The interview topic guide was reviewed by the study Lay Advisory Group (LAG) and revised based on their feedback to ensure that the interviews explored topics that matter to patients (see Report Supplementary Material 1 for the interview topic guide). Interviews were audio-recorded and transcribed verbatim. Interviews ranged from 33 minutes to 1 hour 29 minutes, with an average (mean) duration of 54 minutes.

Analysis

Interview transcripts were checked for accuracy against the audio-recordings and anonymised. Framework analysis was used to analyse the transcripts. 51 Informed by the phase 1 objectives (to identify requirements for QualDash and construct programme theories) and the interview topic guide, and through reading preliminary interview transcripts, codes for data indexing were identified and agreed by four members of the research team (NA, LM, RR and ME). Themes and subthemes were incorporated into the framework that reflected realist concepts of context (operationalised as characteristics of staff, culture or infrastructure that appeared to support or constrain NCA data use), mechanism (operationalised as the ways in which people responded to NCA data and why) and outcome (operationalised as the impact of these responses, with a focus on service changes intended to improve performance). Five transcripts were then indexed in NVivo 11 (QSR International, Warrington, UK) to test the applicability of the codes and assess agreement. Where there was variation in indexing, codes were refined and definitions clarified. Refined codes were applied to all transcripts.

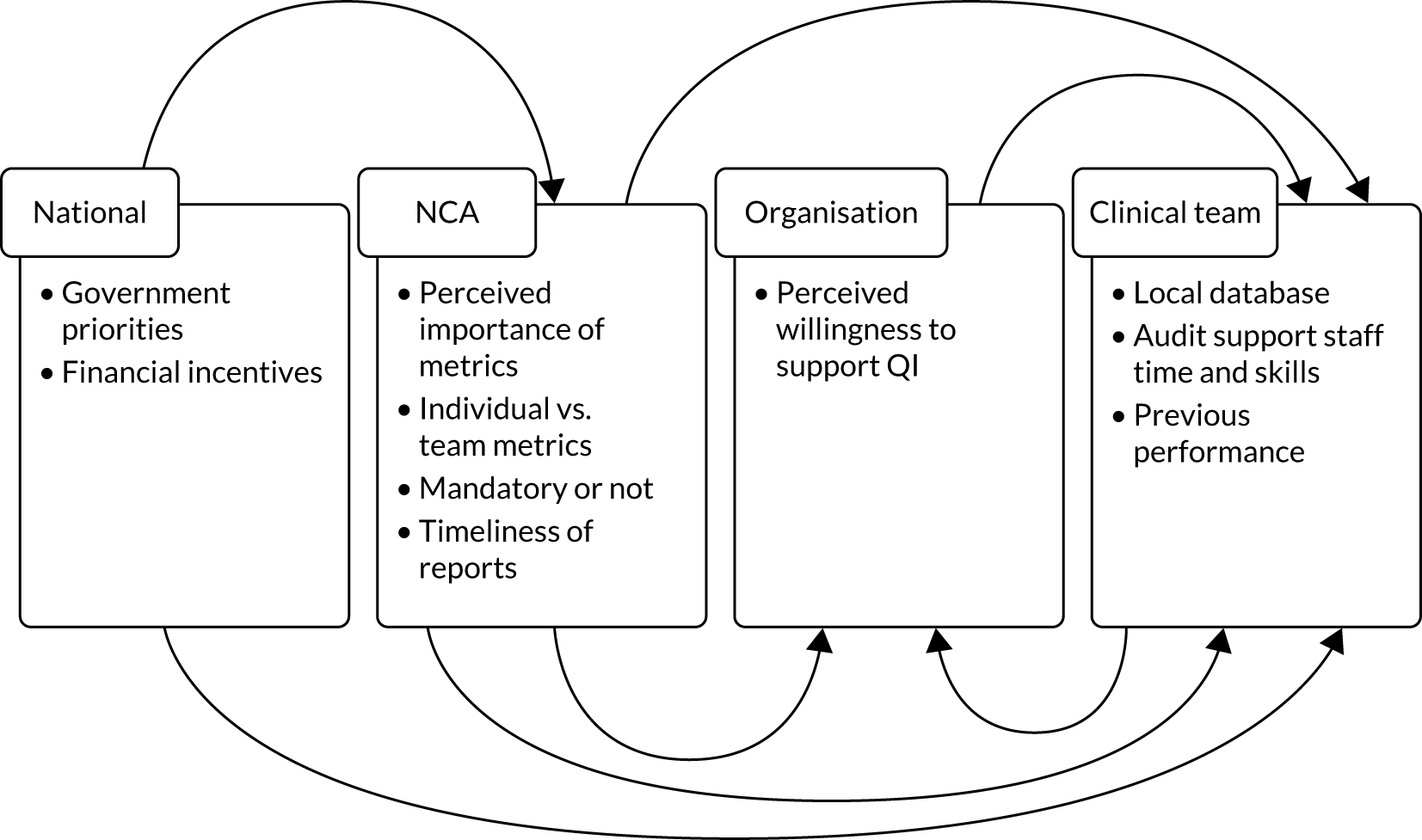

To construct CMOs, we explored the coded data to identify instances where NCA data were reported to have been used. Of primary interest was where use of NCA data had stimulated QI in the form of practice changes. However, we also sought to capture other end points, for example where it was used for assurance (i.e. confirming performance complies with certain standards). The data were then interrogated to understand how and why NCA data were used in these ways and what supported or constrained the use of NCA data across sites.

To elicit QualDash requirements, study team members (ME, RAR, NA, LM and RR) discussed and grouped data indexed under ‘quality dashboard’, which captured what participants reported they would want from a quality dashboard and what they thought would support its introduction and use. In addition, Mai Elshehaly analysed the interview transcripts to generate an initial list of tasks that users of QualDash might want to undertake based on questions they described asking of NCA data. The resulting requirements were brought together into a software requirements specification (SRS).

Workshop with national clinical audit suppliers

Participants

A purposive sample of participants was recruited, comprising suppliers representing NCAPOP and independent NCAs, including audits where participation is at the individual clinician level, and representatives of HQIP. At the time of organising the workshop, NCAPOP and independent NCAs were delivered by 37 suppliers, with some responsible for up to five audits. With HQIP’s permission, we used information about NCA suppliers listed on the HQIP website to contact potential participants, sending them an invitation by e-mail to register for the workshop. Where a supplier delivered more than one NCA, we approached the national clinical lead for each NCA. We also advertised the workshop in the HQIP newsletter. Twenty-nine participants registered and 21 attended on the day, with representation from 19 NCAs and HQIP (see Appendix 2 for a list of participating audits).

Data collection

Ahead of the workshop, participants were sent the requirements. During the workshop, participants worked in groups comprising three to five participants, facilitated by members of the QualDash team (NA, LM, ME, RAR and RR). All activities were audio-recorded.

To identify which requirements were generalisable across NCAs, a variation of the nominal group technique (NGT) was used based on methods used by Rebecca Randell for a similar purpose,52 and involving card-sorting activities recommended for co-design. 53 NGT is a highly structured group process that can be used for identifying requirements and establishing priorities. NGT enables a substantial amount of work to be achieved in a relatively short space of time, providing immediate results with no requirement for further work. 54 The technique has previously been used successfully in the development of other complex interventions. 55 The first activity focused on prioritising functional requirements, which were grouped into categories of visualisation, interaction, data quality, reporting and notification. Participants had 2 hours to complete this activity. To make efficient use of time, interaction requirements were considered jointly with visualisation requirements, and reporting requirements with notification requirements (data quality was considered individually because phase 1 interviews had highlighted its significance and complexity). Groups varied the order in which they considered the categories of requirements to ensure that information was gathered about all of them (in case any group was unable to complete the task for all categories).

The first step was ‘silent generation of ideas’ (a concept used in NGT). This step involved each participant reviewing a set of cards describing the functional requirements and selecting those that they considered to be essential. Participants were also asked to write down on blank cards any additional essential functional requirements that they could think of. Facilitators introduced the task with the question ‘If QualDash were available to the users of your audit, what does it need to be able to do in relation to [interaction and visualisation; reporting and notifications; data quality] to enable/encourage users to make better use of audit data for QI?’. Then, the group merged their requirements, creating an ‘agreed’ and ‘not agreed’ list on a flip chart. The ‘agreed’ list comprised functional requirements that were considered to be essential by all group members and the ‘not agreed’ list comprised requirements that were considered to be essential by some (or none) of the members. A weighting was given to each ‘not agreed’ item based on how many people (if any) considered this requirement to be essential. The lists also included any additional requirements identified by participants. Finally, participants were asked to select individually, and rank in order of priority, the three functional requirements that they considered to be the most important. To allow participants to select the most important requirements for their audits, they could select from both the ‘agreed’ and the ‘not agreed’ lists. Participants were then invited to feed back to the group their highest priority requirements and explain why this was so, in the context of their specific audits, for discussion in the group as a whole. This was repeated for each set of requirements.
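The merging step described above can be illustrated with a minimal Python sketch. It assumes that each participant's selection is recorded as a set of requirement labels; the function name `merge_selections`, the participant identifiers and the requirement labels are all hypothetical and are not taken from the workshop materials.

```python
from collections import Counter


def merge_selections(selections, all_requirements):
    """Split requirements into an 'agreed' list and a weighted 'not agreed' list.

    selections: dict mapping a participant ID to the set of requirements that
        participant marked as essential (including any written on blank cards).
    all_requirements: the requirement cards handed to the group.
    """
    group_size = len(selections)
    counts = Counter(req for chosen in selections.values() for req in set(chosen))
    # Include requirements added by participants on blank cards.
    candidates = set(all_requirements) | set(counts)
    # 'Agreed': considered essential by every group member.
    agreed = sorted(r for r in candidates if counts[r] == group_size)
    # 'Not agreed': essential to some or none; the weight is the number of supporters.
    not_agreed = {r: counts[r] for r in sorted(candidates) if counts[r] < group_size}
    return agreed, not_agreed


# Hypothetical group of three participants with made-up requirement labels.
cards = [
    "filter by time period",
    "export chart",
    "benchmark against national average",
    "email alerts",
]
selections = {
    "P1": {"filter by time period", "export chart", "benchmark against national average"},
    "P2": {"filter by time period", "benchmark against national average"},
    "P3": {"filter by time period", "export chart"},
}
agreed, not_agreed = merge_selections(selections, cards)
print(agreed)
print(not_agreed)
```

In this illustration, a requirement selected by nobody stays on the 'not agreed' list with a weight of zero, mirroring the 'some (or none)' wording above.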

The second activity sought to build context around the tasks identified from the interviews and map them to a taxonomic classification to ensure coverage of the space of possible tasks and, subsequently, the space of possible visualisation design alternatives. We designed a scenario-generation activity in which each group of participants was presented chronologically with three task taxonomic dimensions (i.e. task granularity, data type cardinality and task target) in the form of an example scenario. 56 To account for different QualDash contexts of use, two of the groups were assigned an exploratory analysis scenario, two groups were assigned a confirmatory analysis scenario and one group was given an information presentation scenario. These three types of scenario were inspired by the levels of the task ‘goal’ dimension of the task space defined by Schultz et al. 57 In each group, participants were given an activity sheet that described the example scenario in a stepwise fashion and asked them to write down similar details relevant to their audit(s). After developing their own individual scenarios, the group discussed them. Whatever the focus of the worksheet (e.g. explorer, confirmer or presenter), the worksheets asked participants to record:

- important metrics for their audits
- the granularity [i.e. level of detail for patient data (whether at individual patient, organisation, regional or global – for the entire audit – level) and time data (e.g. daily, weekly, monthly or annual)] needed to explore, confirm or present the data effectively
- the type cardinality, which defines the most useful types of information (e.g. specific values, trends over time or frequency) needed to explore, confirm or present the data effectively
- the number and combination of variables required (e.g. single, two, three or more).

Finally, the target information required in each scenario was elicited by presenting alternative possibilities (e.g. trends over time, distribution and association) and leading a discussion with participants to select the targets that they thought were the most critical.

In the third activity, for which 1.5 hours was allocated, adoption requirements were prioritised. Requirements were grouped into categories of awareness, training, monitoring and support, and access. Activity 3 followed largely the same format as activity 1, moving from silent generation of ideas (based this time on the question ‘If QualDash were available to the users of your audit, what would need to happen to enable/encourage users to use it for QI?’) to merging and ranking requirements. As the task involved reviewing fewer categories than in activity 1, more time was available at each step, giving participants the opportunity to rank the 10 – rather than three – requirements they considered most important.

Analysis

The lists created by the groups for activities 1 and 3 were analysed quantitatively to identify the functional and adoption requirements that were considered to be essential by all groups. Next, the final ranking of priorities was combined to produce a list of functional requirements and a list of adoption requirements ordered by priority.
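The report does not state how the individual rankings were combined, so the following is only a minimal sketch of one plausible approach. It assumes a simple points rule (first choice earns the most points) and uses hypothetical requirement names; the function `combine_rankings` is illustrative and not part of the study.

```python
from collections import defaultdict


def combine_rankings(rankings, top_n=3):
    """Combine individual top-N rankings into one priority-ordered list.

    rankings: list of lists; each inner list holds one participant's
    chosen requirements, most important first.
    Scoring rule (an assumption, not taken from the report): position 1
    earns top_n points, position 2 earns top_n - 1, and so on.
    """
    scores = defaultdict(int)
    for ranking in rankings:
        for position, requirement in enumerate(ranking[:top_n]):
            scores[requirement] += top_n - position
    # Highest score first; ties are broken alphabetically.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical requirement names, for illustration only.
example = [
    ["filter by time period", "export chart", "flag missing data"],
    ["flag missing data", "filter by time period", "compare sites"],
    ["filter by time period", "compare sites", "export chart"],
]
combined = combine_rankings(example)
for requirement, score in combined:
    print(f"{score:>2}  {requirement}")
```

For activity 3, in which participants ranked 10 requirements, the same function could be called with `top_n=10`.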

Participants’ responses to the visualisation worksheets for activity 2 were used to identify tasks QualDash users may want to undertake using NCA data and these were added to the tasks identified through analysis of the interview data. The tasks were analysed in terms of the structural characteristics of granularity, type cardinality and target. This allowed a characterisation of a subset of the task space that covered the most relevant combinations of granularity, type cardinality and target information levels included. This characterisation was used to inform a task sampling process to select a representative subset of tasks, which was taken forward to the co-design activities in phase 2.

To capture the richness of participants’ observations and to provide context to the ranked priorities, we had intended to transcribe the audio-recordings of the sessions and analyse them using framework analysis. However, we decided against this for two reasons: the recordings were of poor quality, owing to overall noise levels in the room, and the quantitative analysis had already produced clear results.

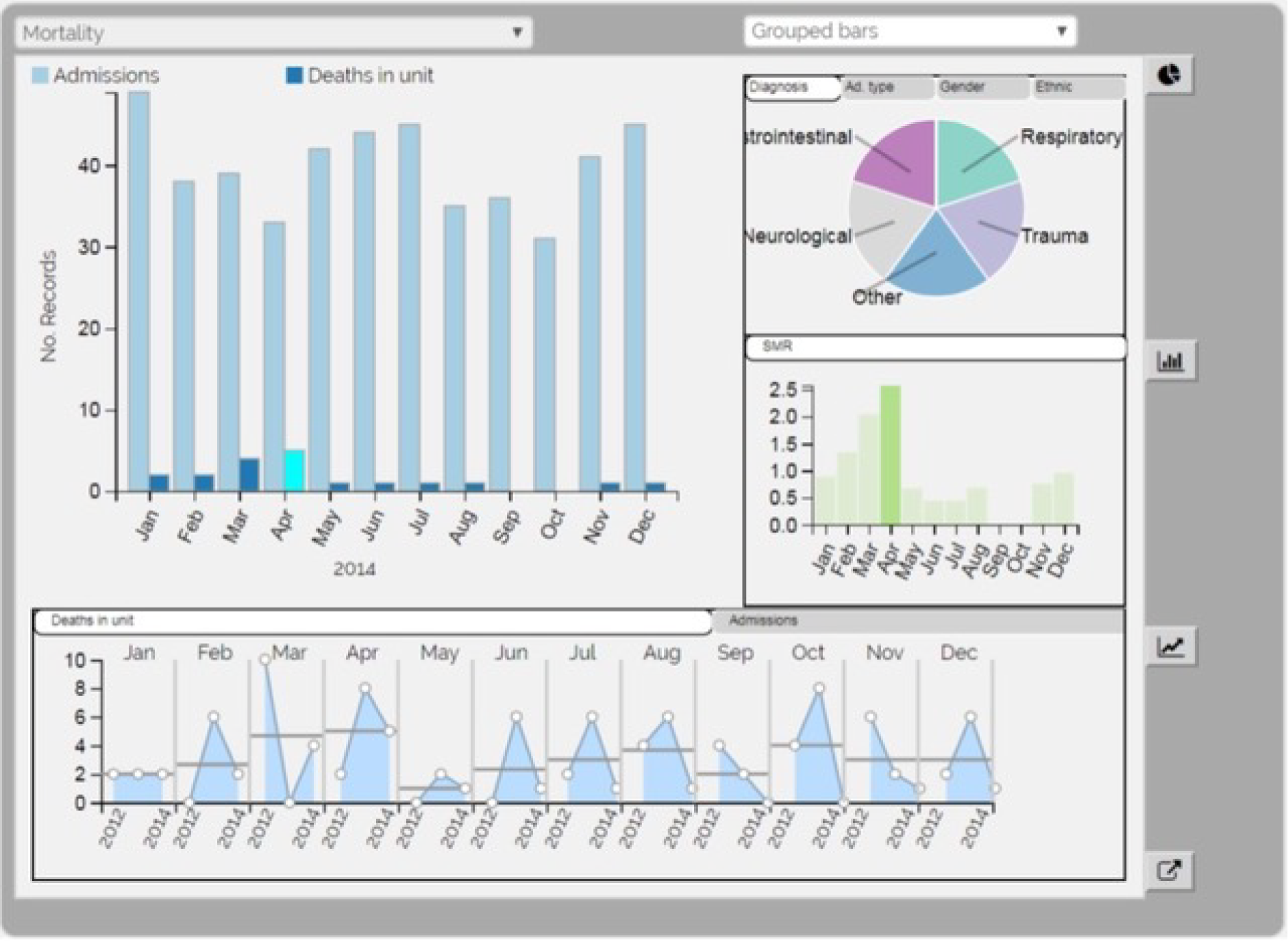

Phase 2a: design of QualDash

A co-design approach was used for design of QualDash, following Bate and Robert’s53 four-stage model for experience-based co-design: (1) reflection, analysis, diagnosis and description; (2) imagination and visualisation; (3) modelling, planning and prototyping; and (4) action and implementation. Phase 1 represented the reflection, analysis, diagnosis and description co-design stage. Phase 2 represented the imagination and visualisation and the modelling, planning and prototyping co-design stages. QualDash was co-designed with NHS staff through two co-design workshops. In addition, observation of meetings at which audit data were discussed was undertaken to provide further information to inform design. The QualDash prototype was then evaluated using heuristic evaluation and the think-aloud protocol (described in Phase 2b: usability evaluation of QualDash). Alongside the design process, team members regularly discussed the QualDash requirements and their understanding of how, for whom and in what contexts the associated features would support use of NCA data to develop the QualDash programme theory. In doing so, we drew on understandings gained from phase 1 and the phase 2 co-design workshops and observations, while Mai Elshehaly and Roy A Ruddle used their expertise in information visualisation to present their own literature-informed theories. 58,59

Co-design workshops

Settings and participants

We intentionally involved staff from one trust only (i.e. site A) in phase 2 to help us to assess, in phase 4, the extent to which the success of QualDash was dependent on this level of staff involvement in the design process. We held two co-design workshops and in both we included users of MINAP and PICANet data and representatives from other trust groups (e.g. information managers) on the basis that bringing together a diverse group would support identification of similarities and differences in the needs and preferences of different stakeholders. There were seven participants in each co-design workshop, including both clinical and non-clinical staff (Table 2). The first co-design workshop was held in September 2018 and the second in February 2019.

| Role | Workshop 1 | Workshop 2 |
|---|---|---|
| Doctor | 2 (paediatricians) | 1 (paediatrician) |
| Nurse | 1 (cardiology nurse specialist) | 1 (cardiology nurse specialist) |
| Audit co-ordinator | 1 (PICANet) | 1 (PICANet) |
| Information manager | 2 | 1 |
| Clinical outcomes and information manager | 1 | 1 |
| Clinical effectiveness and compliance manager | 0 | 2 |
| Total | 7 | 7 |

Data collection

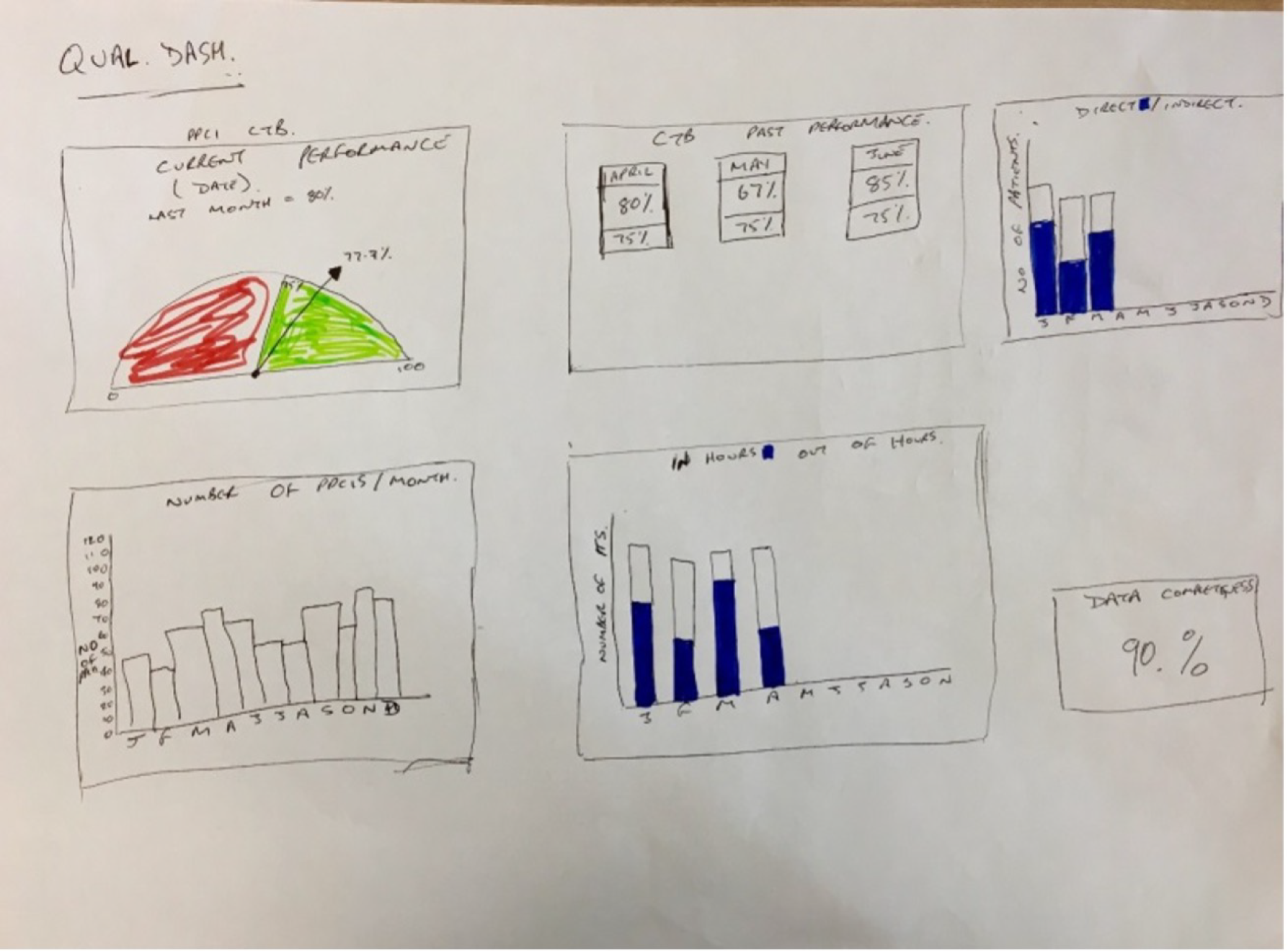

Each co-design workshop lasted 3 hours. In the first workshop, we divided participants into two groups, depending on which audit they were the most familiar with, and assigned each group a task set corresponding to one of the audits. Two study team members facilitated the discussion with each group over the course of two co-design activities.

The first activity was a ‘story generation’ activity that was inspired by approaches used in information visualisation design. 59 We asked participants to answer questions about why tasks are important, how tasks are currently performed, what information needs to be available to perform a task (i.e. input) and what information might arise after completing it (i.e. output). To understand the impact of context, we also asked who can benefit most from a task. To facilitate discussion around these four questions, co-design participants were presented with a set of ‘task cards’. Each card focused on a single task and was subdivided into four main sections. Three sections were designed to elicit information about how this task is performed in current practice: (1) the data elements used, (2) the time it takes and (3) any textual information that might be involved. The majority of space on the card was dedicated to the fourth section, labelled ‘sketches’. This section provided space for participants to sketch out the processes involved in performing the task and visualisations (if any) used in the same context. The header of a card contained an empty box for participants to assign a relevance score. Participants were presented with a set of task cards corresponding to the subset of tasks developed in phase 1. We also gave each participant a set of blank cards. Participants were given the freedom to select task cards that they deemed relevant to their current practice or to write their own on a blank card. For each task, participants were asked to solve the what and how questions individually on paper while thinking about the why and who questions for a later group discussion. During the discussion, we asked participants to justify the relevance scores that they assigned to each task and elaborate on the details that they had written on the cards. Finally, we asked them to sort the cards depending on who they believed this task was most relevant to.

The second activity was designed to identify ‘entry-point’ tasks and sequences of follow-up tasks. We returned the prioritised and grouped task cards from activity 1 to participants and asked them to (1) select the most pressing questions to be answered at a glance at a dashboard, (2) sketch the layout of a static dashboard that could provide the minimally sufficient information for answering these tasks and (3) select/add follow-up tasks that arise from these entry-point tasks.

Between the first and the second co-design workshops, the results of the first workshop were used to develop a QualDash prototype. To ensure that the prototype did not unintentionally move away from the needs of our intended users, we regularly discussed our ideas with participants from site A in a sequence of nine one-to-one meetings. Participants in these meetings were two cardiologists, a paediatrician and two members of audit support staff.

The second co-design workshop involved two activities. The first activity made use of a paper prototype to free participants from learning the software. 60 Participants were divided into three groups. Each group was handed paper prototypes for a set of metric cards. For each metric card, the paper prototype included a task believed to be of high relevance, screen printouts of metric card(s) that address the task and a list of audit measures used to generate the cards. A group facilitator led the discussion of each metric and encouraged participants to change or add more tasks, comment on the data granularity used and sketch ideas to improve the visualisations. The second activity involved interaction with the QualDash software and is described in Phase 2b: usability evaluation of QualDash.

Observation of meetings

The phase 1 interviews identified a range of meetings, at different levels of trusts, at which quality and safety are discussed and QualDash could potentially be used. The research team considered, and the SSC agreed, that observing these meetings would provide further insight into how data were used and so help to inform the design of QualDash. Therefore, meeting observations were added to phase 2.

Sampling

The intention was to observe at least one meeting at the ward level, quality subcommittee level and trust board level per site. However, the phase 1 analysis and adoption focus groups suggested that QualDash was most likely to be used within clinical team and directorate meetings, rather than at quality subcommittee or trust board meetings, and, therefore, we focused observations on those. In total, seven meetings were observed between October and December 2018 (Table 3), representing 13 hours of data collection. Having observed these meetings, the research team concluded that they had sufficient information about such contexts and that there was no need for further observations.

| Meeting type | Site A | Site B | Site C | Site D | Site E | Total |
|---|---|---|---|---|---|---|
| Directorate meeting | 0 | 1 | 0 | 0 | 0 | 1 |
| Clinical governance/morbidity and mortality meetings | 2 | 1 | 0 | 1 | 0 | 4 |
| Business meeting | 0 | 0 | 1 | 1 | 0 | 2 |
| Total | 2 | 2 | 1 | 2 | 0 | 7 |

Data collection and analysis

The researchers observed the meetings and recorded observations in fieldnotes. These notes were written up in detail as soon as possible post observation using a framework developed in collaboration with Mai Elshehaly and Roy A Ruddle (i.e. the team members responsible for software development). The framework was used to capture details that would allow us to better understand user requirements relating to, for example, which data were used in the meeting (variables and metrics), how they were visualised and for what purpose.

An iterative approach to data collection and analysis was taken. Observation notes were read closely by Natasha Alvarado and Lynn McVey (i.e. the qualitative researchers) and Mai Elshehaly, who then met to discuss the findings and identify areas in which further information was required. Summary sections in the observation notes were used to reflect on what the data added to the situation analysis, which, in turn, informed programme theory development, providing insight into motivations for data use within meeting contexts and potential supports and constraints on QualDash use.

Phase 2b: usability evaluation of QualDash

Originally, the study design included a controlled user experiment with a mixed factorial experimental design, designed to compare the visualisations offered through QualDash with how NCA data are currently visualised, on the assumption that QualDash would incorporate novel visualisation techniques. However, nearly all tasks identified in phase 1 contained only two variables, for which standard visualisations, such as bar graphs, are more appropriate, particularly as users would already be familiar with such visualisations. Therefore, it was decided that the design of QualDash would focus on providing benefit through its interactive capabilities and the ability to look at multiple visualisations, rather than through novel visualisations. Consequently, a different approach to user evaluation, which evaluated QualDash’s interactive capabilities, was needed. We decided to use two usability inspection methods: (1) the think-aloud technique and (2) heuristic evaluation. The think-aloud technique is an appropriate fit for the realist approach given that it provides access to participants’ reasoning. We anticipated identifying most usability issues through use of the think-aloud technique, to be conducted with the intended QualDash users, whereas heuristic evaluation was used as a check for any significant usability issues that may have been missed. The System Usability Scale (SUS), a quick and easy questionnaire designed to assess any technology, consisting of 10 statements scored on a five-point scale of strength of agreement, had been proposed for the controlled user experiment. 61 We retained this as a quantitative assessment of usability.
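The report does not describe how SUS responses were converted into a score. The sketch below follows the standard published SUS scoring procedure (odd-numbered statements contribute the response minus 1, even-numbered statements contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100). The function name and the example responses are illustrative assumptions, not study data.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for one respondent.

    responses: ten integers from 1 (strongly disagree) to 5 (strongly
    agree), in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered statements are positively worded.
            total += response - 1
        else:
            # Even-numbered statements are negatively worded.
            total += 5 - response
    return total * 2.5


# Illustrative responses only: neutral answers to every statement.
neutral = sus_score([3] * 10)
print(neutral)
```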

Think-aloud technique

Sample

Two rounds of the think-aloud technique were undertaken. The first round was undertaken during the second co-design workshop, whereas the second round was undertaken during one of the adoption focus groups (see Phase 3: QualDash adoption). To allow time for revisions to be made to the QualDash prototype on the basis of the first round, the second round was undertaken approximately 1 month later. This was undertaken with participants from one of the four trusts not involved in the co-design workshops. These participants were selected to ensure a different level of familiarity with, and confidence in, engaging with NCA data. Seven participants (see Table 2 for roles) took part in round 1 and five participants took part in round 2. Participants in round 2 included a PICANet audit clerk, a PICU matron, a PICU nurse, a senior cardiology information analyst and a data manager.

Data collection

Participants were asked to think aloud as they used the QualDash prototype to complete a series of tasks. Tasks were designed to enable them to explore key QualDash functionality. To have consistency in the tasks that users completed, all participants used the PICANet version of QualDash, which was populated with simulated data. For the first round, conducted at the University of Leeds, participants used QualDash concurrently and worked either alone or in pairs, with one researcher with each individual/pair. The researchers gave a short demonstration of the prototype. As an initial training exercise, the researchers asked participants to reproduce a screen that was printed on paper. This exercise was intended to familiarise participants with the dashboard and the think-aloud technique. Following this step, the researchers presented a sequence of tasks to participants and observed their interactions with the dashboard to perform the tasks. Either video- or audio-recording was used with each individual/pair to capture participants’ comments as they conducted the tasks, with the video providing additional data regarding participants’ interaction with QualDash.

For the second round, conducted at site B, QualDash was run on a laptop with Morae software (TechSmith, Okemos, MI, USA). Participants took it in turns to use QualDash, with Morae software used for recording participants’ interaction with QualDash and capturing audio, video and on-screen activity, and keyboard and mouse input. The researcher provided a short demonstration to the group as part of the adoption workshop, before asking individual participants to complete a sequence of tasks as part of the think-aloud exercise.

In both rounds, following completion of tasks on the prototype, participants were asked to complete the SUS questionnaire.

Analysis

Descriptive statistics were produced for the SUS score. We analysed recordings from all sessions and divided feedback into five categories:

- a task-related category [capturing (mis)matches between participants’ intended task sequences and view compositions supported in the dashboard]
- a data-related category (capturing comments relating to the variables and aggregation rules used to generate visualisations)
- a visualisation-related category (capturing feedback on the choice of visualisation in each view)
- a graphical user interface-related category (capturing comments on the interface usability)
- an ‘other’ category (capturing any further comments).

From this, a prioritised list of revisions to be made to QualDash prior to installation in the five sites was generated.

Heuristic evaluation

Sample

Heuristic evaluation is a form of expert review. Following recommendations for the conduct of heuristic evaluation,62 we aimed to involve three to five experts. The selection of experts sought to ensure that their collective expertise spanned the areas of human–computer interaction, visualisation and clinical audit. Five experts agreed to participate, four of whom undertook the task and returned the documentation. Two participants had expertise in human–computer interaction and health informatics (with one participant also having medical training), one participant had expertise in human–computer interaction and visualisation, and one participant was a clinician with expertise in clinical audit.

Data collection

The experts were provided with (1) a list of tasks designed to enable them to explore the functionality of QualDash, (2) a heuristic evaluation checklist and (3) a set of heuristics for determining the potential utility of visualisations (see Report Supplementary Material 2).

The heuristic evaluation checklist was developed and validated by a member of the study team (DD) as part of previous research to develop and evaluate a dashboard. 63 The checklist consists of seven general usability principles derived from Nielsen’s usability principles,64 consisting of 40 usability heuristics, and three visualisation-specific usability principles (i.e. spatial organisation, information coding and orientation), consisting of nine usability heuristics. Although some of the heuristics within the heuristic evaluation checklist were not appropriate for QualDash, we retained them because the questionnaire had already been validated and each item allowed an answer of ‘not applicable’. The experts were instructed to give a score of 1 (yes) if the heuristic was met and a score of 0 (no) if the heuristic was not met.

The set of heuristics to assess the potential utility of the dashboard comes from the visualisation literature. 65 The set is based on the visualisation value framework, which contains four components that relate to (1) time savings a visualisation provides, (2) insights and insightful questions it spurs, (3) the overall essence of the data it conveys and (4) the confidence it inspires in the data and the domain. There are 10 heuristics (two related to time, three related to insight, two related to essence and three related to confidence). Each heuristic is rated on a seven-point scale, ranging from 1 (strongly disagree) to 7 (strongly agree). The participant can also answer ‘not applicable’.

Analysis

Output from the heuristic evaluation checklist was a score for each usability principle, along with a list of usability problems identified by the experts. Scores were calculated by dividing the total number of points awarded (i.e. heuristics met) by the total number available. Where all participants selected ‘not applicable’, this heuristic was removed from the analysis. Where three of the four participants selected ‘not applicable’, we came to a decision as a team about whether or not that heuristic was applicable. If the heuristic was considered as not applicable, we reduced the total number of heuristics available for that principle by one. For all other cases where one or more participants selected ‘not applicable’, we gave a score of 1 on the basis that the participant had not identified any problems in relation to that heuristic. The higher the score (i.e. the percentage of points awarded compared with the total number available), the greater the system usability.
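The scoring rules for the checklist can be sketched in code. This is a minimal illustration rather than the study team's actual procedure: the function name, the use of `None` to represent ‘not applicable’, the pooling of points across experts and the example answers are all assumptions.

```python
def principle_score(responses, team_not_applicable=frozenset()):
    """Score one usability principle as points awarded / points available.

    responses: dict mapping a heuristic label to the experts' answers,
        each 1 (met), 0 (not met) or None ('not applicable').
    team_not_applicable: heuristics that the team judged not applicable
        after all but one expert (three of four in the report) answered
        'not applicable'.
    """
    points = 0
    available = 0
    for heuristic, answers in responses.items():
        na_count = sum(answer is None for answer in answers)
        if na_count == len(answers):
            # Every expert answered 'not applicable': drop the heuristic.
            continue
        if na_count == len(answers) - 1 and heuristic in team_not_applicable:
            # Team decision: drop the heuristic from the total available.
            continue
        # Remaining 'not applicable' answers count as 1 (no problem found).
        points += sum(1 if answer is None else answer for answer in answers)
        available += len(answers)
    return points / available if available else None


# Hypothetical answers from four experts for four heuristics.
example_responses = {
    "h1": [1, 1, 1, 1],
    "h2": [1, 0, None, 1],
    "h3": [None, None, None, None],
    "h4": [None, None, None, 0],
}
score = principle_score(example_responses, team_not_applicable={"h4"})
print(f"{score:.1%}")
```

In this example, `h3` is dropped automatically and `h4` is dropped by team decision, leaving 7 points awarded out of 8 available.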

For the visualisation value heuristics, we calculated the average score for each of the four components.
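As a minimal sketch, the component averages could be computed as follows. The report does not say how ‘not applicable’ answers were handled for these heuristics; the sketch assumes that they are simply excluded from the mean, and the ratings shown are invented for illustration.

```python
from statistics import mean


def component_means(ratings):
    """Average the 1-7 ratings for each visualisation value component.

    ratings: dict mapping a component name to the ratings pooled across
        experts and heuristics; None represents 'not applicable'.
    Excluding None from the mean is an assumption of this sketch.
    """
    result = {}
    for component, values in ratings.items():
        valid = [value for value in values if value is not None]
        result[component] = mean(valid) if valid else None
    return result


# Invented ratings, for illustration only.
example_ratings = {
    "time": [6, 7, None, 5],
    "insight": [4, 4, 5, 3],
    "essence": [None, None],
    "confidence": [7, 5],
}
averages = component_means(example_ratings)
print(averages)
```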

Phase 3: QualDash adoption

The aim of phase 3 was to agree a QualDash adoption strategy with each site. Within health services research, the term implementation is used to refer to putting an intervention into practice, using strategies to support and encourage the use of that intervention in ways that will lead to the desired impact,66 and it is a key element in the Medical Research Council guidance on development and evaluation of complex interventions. 29 However, the term has variable meanings within software engineering and is sometimes used to refer to the programming involved in translating a requirements specification into a piece of software. Therefore, being a multidisciplinary team, we have used the term adoption, which is familiar to software engineers, to avoid confusion. Although an understanding of adoption requirements was developed in phase 1 interviews and prioritised with NCA suppliers in the phase 1 workshop, it is important that adoption strategies are tailored to the local context. 36 Adoption strategies are also most likely to be successful if stakeholders are involved in their design. 67

Sample

One focus group was held at each site and sought to involve intended QualDash users. Potential participants were provided with an information sheet about the focus group following their participation in either the phase 1 interviews or, for those at site A, following their participation in the co-design workshops. Potential participants were contacted by e-mail to see if they were willing to participate and, if so, to identify a suitable date. In addition, where contacts were identified, IT staff were invited to attend. Details of participants in each focus group are provided in Table 4. Focus groups were conducted between February and April 2019.

| Role | Site A | Site B | Site C | Site D | Site E | Total |
|---|---|---|---|---|---|---|
| Cardiologist | 0 | 0 | 0 | 1 | 0 | 1 |
| Paediatrician | 1 | 0 | 1 | N/A | N/A | 2 |
| Nurse | 1 (MINAP) | 2 (clinical/quality, matron) | 1 (cardiology) | 2 (cardiology, n = 1; radiology, n = 1) | 1 (cardiology) | 7 |
| Audit clerk | 1 (PICANet) | 1 (PICANet) | 1 (MINAP) | 0 | 0 | 3 |
| Managers | 2 (clinical effectiveness) | 1 (data) | 2 (information, n = 1; clinical effectiveness, n = 1) | 1 (patient service) | 1 (clinical audit) | 7 |
| IT | 0 | 1 (information analyst) | 1 | 0 | 1 | 3 |
| Total | 5 | 5 | 6 | 4 | 3 | 23 |

Data collection

Each focus group was facilitated by two researchers, who presented a study summary and provided a demonstration of QualDash. Post demonstration, the adoption strategies and associated activities that were identified and prioritised in phase 1 were presented to the group. Participants were encouraged to discuss why they thought a particular activity would work (or not), for which groups of staff within their trust and how the activity should be delivered. Each focus group was audio-recorded and researchers also made notes during the discussion. Each session was scheduled to last for 90 minutes and the discussion lasted between 41 minutes and 1 hour 9 minutes, with an average (mean) duration of 50 minutes.

Analysis

Audio-recordings were transcribed verbatim, checked for accuracy, anonymised and uploaded into NVivo for indexing. Codes used for indexing transcripts were devised by three researchers (NA, LM and RR), with the intention of categorising data related to assumptions about adoption, dashboard functionality and current/future NCA data use. Close reading of transcripts, indexing and a review of researcher notes were used to identify consistencies and variations across sites regarding participants’ theories about activities that would support adoption. For each site, the researchers summarised discussion of each strategy, including how it should be delivered and why participants felt that it might work to support QualDash uptake and use. These documents became a blueprint for adoption within each site.

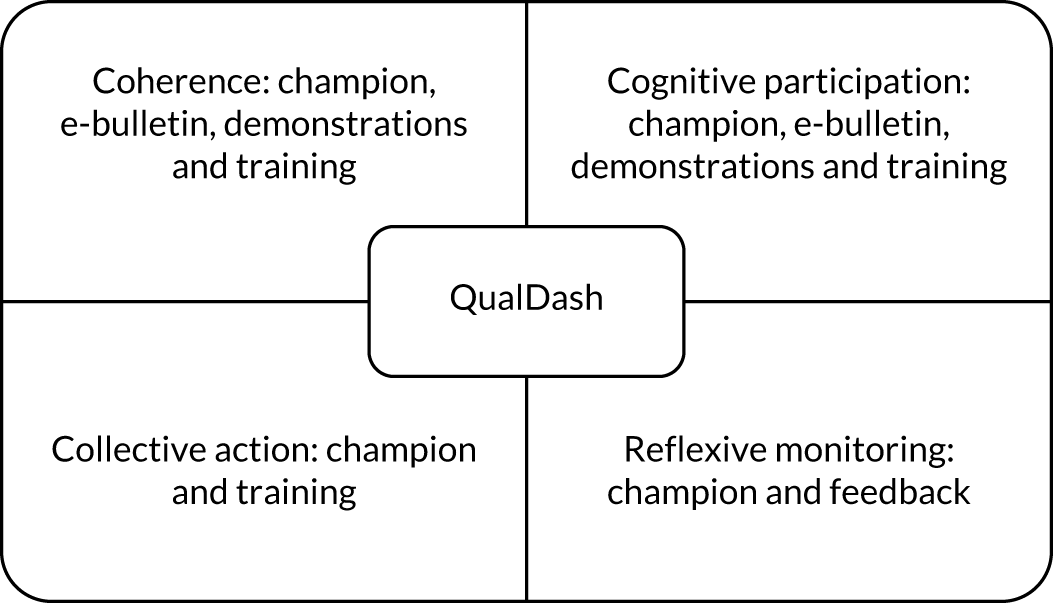

Focus group analysis was also used to further inform the QualDash programme theory. The programme theory developed in phase 2 hypothesised potential QualDash impacts. In their original form, these hypotheses assumed that QualDash would be adopted successfully. The adoption focus groups analysis enabled theorisation around how and why use and uptake would be successful, which was subsequently added to the QualDash programme theory.

Phase 4: QualDash evaluation

Phase 4 was designed to test and refine the QualDash programme theory following introduction of QualDash into the five sites. Realist evaluation does not employ particular methods of data collection, but is explicitly a mixed-methods approach. 68 Outcome data were collected and analysed in an ITS study, whereas a multisite case study69 provided insight into the contexts and mechanisms that led to those outcomes, as well as providing data on intermediate outcomes, such as increased use of NCA data. Data for the multisite case study were captured through a combination of ethnographic observations, informal interviews, log files and a questionnaire. Audit and feedback interventions, and QI interventions more generally, require longitudinal evaluation to allow sufficient time for staff to implement changes and incorporate them into practice. 70–72 Similarly, evaluation of HIT should allow time for staff to integrate the technology into their practices and evolve those practices to take advantage of the functionality offered by the technology. 45 Therefore, the intention was to collect data over a 12-month period.

Multisite case study

Sampling

The intention was that in sites A–C we would undertake a minimum of 24 4-hour periods of observation per trust and in sites D and E we would undertake a minimum of 12 4-hour periods of observation per trust (the difference reflecting that data were to be collected in two clinical areas in sites A–C, but in only one clinical area in sites D and E), totalling 384 hours. The researchers were to return to each trust monthly to understand how QualDash use changed over time, but spending more time in the first few months following the introduction of QualDash, as this is when users are most likely to engage with and explore affordances of QualDash and establish new practices around it, generating information with implications for system enhancement and future adoption strategies. 44 Although observations began in June 2019, they were paused in February 2020 while revisions to QualDash were made. The intention was to have a focused period of data analysis and resume observations once a revised version of QualDash, which addressed user feedback, had been installed. QualDash v2.0 was installed in three sites, but issues were raised when demonstrating QualDash v2.0 and, therefore, further revisions were required. Unfortunately, as installation of QualDash v2.1 was in progress, all on-site research in hospitals was suspended in response to the COVID-19 pandemic and, therefore, installation of QualDash v2.1 and observations were discontinued. In total, 148.5 hours of observations was undertaken (Table 5). Although attempts were made to organise ward observations in site C’s cardiology department, this did not prove possible, but other data collection provided the opportunity to learn about the department’s approaches to MINAP and wider data processes.

| Type of observation | Site A | Site B | Site C | Site D | Site E | Total |
|---|---|---|---|---|---|---|
| QualDash installation and customisation meetings | 9 | 8 | 16 | 7 | 6.5 | 46.5 |
| Ward and ‘back office’ observations, including data collection and validation | 25 | 24.5 | 17 | 2 | 13.5 | 82 |
| Meeting observations and informal interviews | 7.5 | 3 | 4 | 3 | 2.5 | 20 |
| Total | 41.5 | 35.5 | 37 | 12 | 22.5 | 148.5 |
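The planned and achieved observation hours can be checked with a short calculation. The figures are those reported above (planned periods per site and the hours in Table 5); the variable names are illustrative.

```python
PERIOD_HOURS = 4

# Planned minimum number of 4-hour observation periods per site.
planned_periods = {"A": 24, "B": 24, "C": 24, "D": 12, "E": 12}
planned_hours = sum(planned_periods.values()) * PERIOD_HOURS

# Hours actually observed, by type of observation, for sites A to E.
observed = {
    "installation and customisation meetings": [9, 8, 16, 7, 6.5],
    "ward and back office observations": [25, 24.5, 17, 2, 13.5],
    "meeting observations and informal interviews": [7.5, 3, 4, 3, 2.5],
}
observed_hours = sum(sum(hours) for hours in observed.values())

# Share of the planned minimum that was achieved before observations stopped.
share_achieved = observed_hours / planned_hours

print(planned_hours, observed_hours, f"{share_achieved:.0%}")
```

On these figures, the observations completed amount to roughly 39% of the planned minimum of 384 hours.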

The questionnaire was e-mailed to 35 participants who were known either to have used QualDash themselves or to have seen it demonstrated or used in meetings. Twenty-three participants completed the questionnaire.

Data collection

The primary method of data collection for the multisite case study was ethnographic observation. Ethnography is well suited to realist evaluation because it involves observing phenomena in context, supporting understanding of how context influences the response to an intervention. 73 An initial phase of general observation provided an opportunity for researchers to become familiar with the setting and for those in the setting to become familiar with the presence of the researchers. Following a previous study of dashboards,16 observations were undertaken in clinical areas to understand clinical teams’ working practices and to capture ‘corridor committees’ where issues of quality and safety are discussed more informally,74 as well as to record general details of the setting that may influence QualDash use, such as staffing levels and availability of computers. These initial observations were undertaken in cardiology wards, catheterisation laboratories, PICUs and paediatric high-dependency units (HDUs).

After this initial phase, observation was guided by the QualDash programme theory. In addition to observing clinical and directorate meetings at which NCA data tend to be discussed and used, observation involved shadowing staff members as they undertook particular activities, including collection and entry of NCA data to see if and how this changed over time; accessing and interrogating NCA data, whether using QualDash or another means; and preparation of reports and/or presentations using NCA data, again whether using QualDash or another means. Researchers also observed meetings between Mai Elshehaly and site staff to install and test QualDash and discuss how it could be customised to meet user needs more closely. These meetings provided opportunities to explore participants’ working practices and reasoning about QualDash. An observation schedule was developed to support the recording of fieldnotes (see Report Supplementary Material 3). Researchers kept this with them as an aide-memoire, with fieldnotes written in a notebook. Fieldnotes were written up in detail as soon after data collection as possible, using the observation schedule as a template.

Informal interviews were conducted with clinicians and audit staff while undertaking observations. Our intention had been to undertake longer semistructured interviews, using the teacher–learner cycle, with staff later in the evaluation to discuss revisions to our CMO configurations. In teacher–learner cycle interviews, the theories under investigation are made explicit to the interviewee so that the interviewee can use their experiences to refine the researcher’s understanding. 75 Being concerned with the reasoning of intervention recipients, mechanisms are often not observable76 and, therefore, these longer interviews would also have provided the opportunity to explore staff reasoning about QualDash. However, owing to the COVID-19 pandemic, data collection stopped before we were able to undertake such interviews.

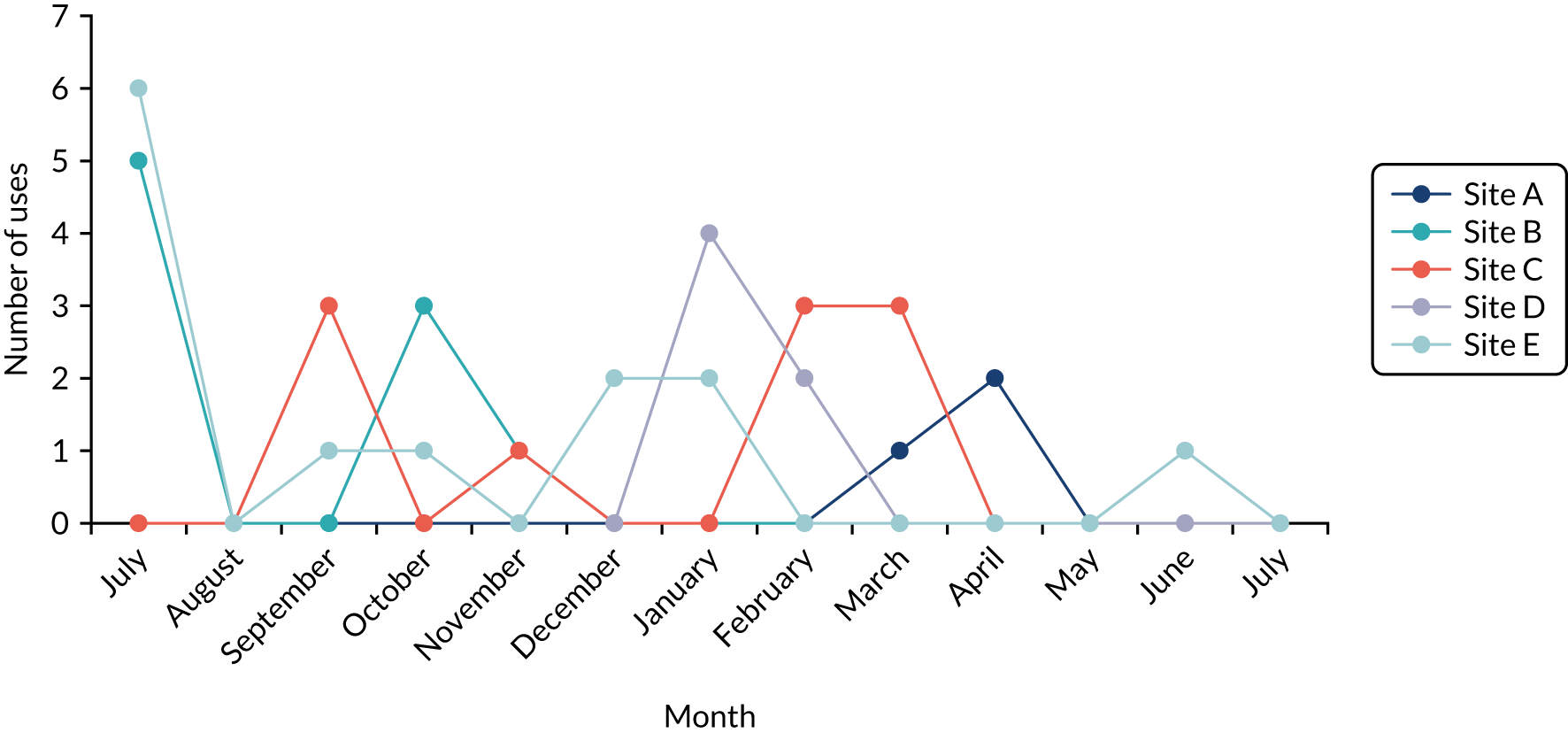

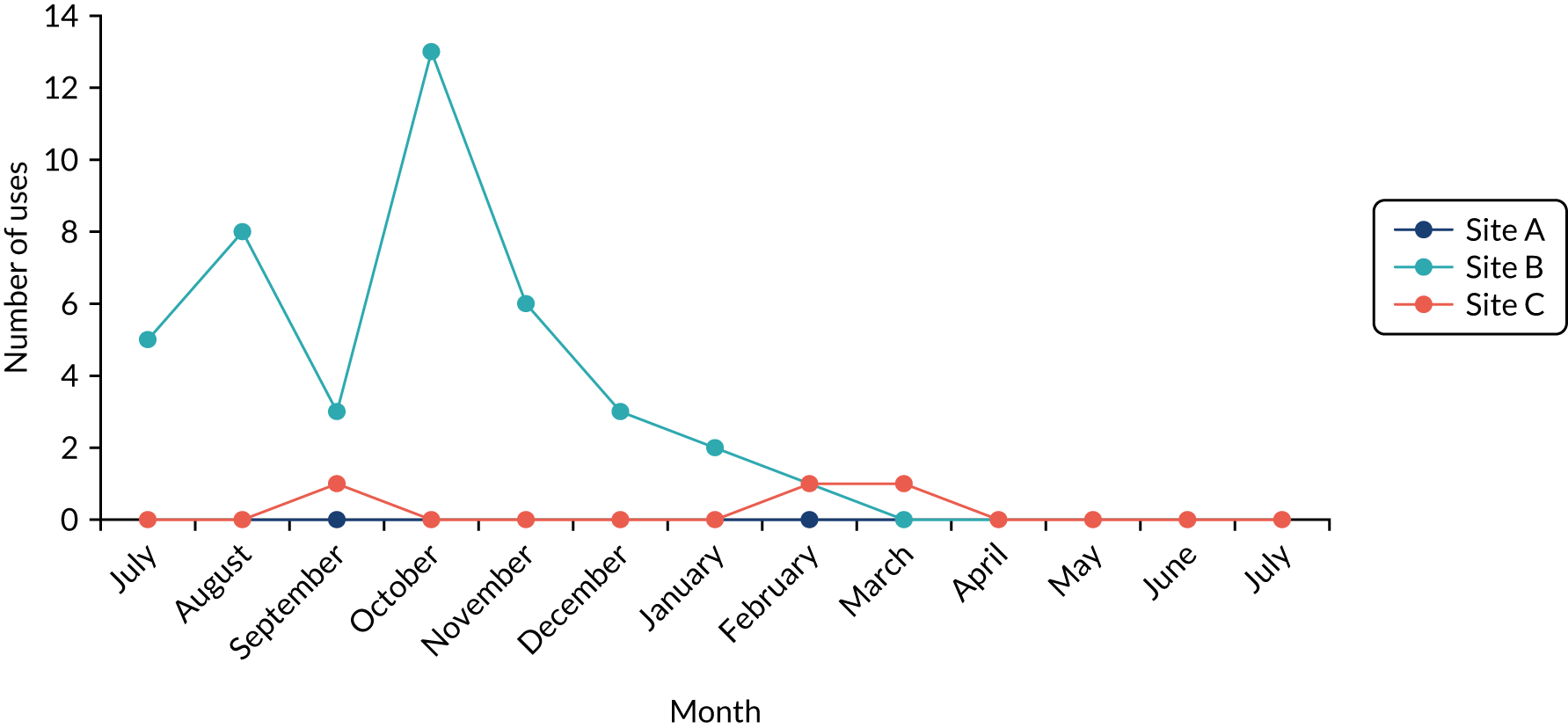

For the duration of the evaluation period, log files automatically recorded information about QualDash use at each site, including information about the user (e.g. job title), data used (e.g. audit and year), time spent interacting with different QualCards and functionality used.

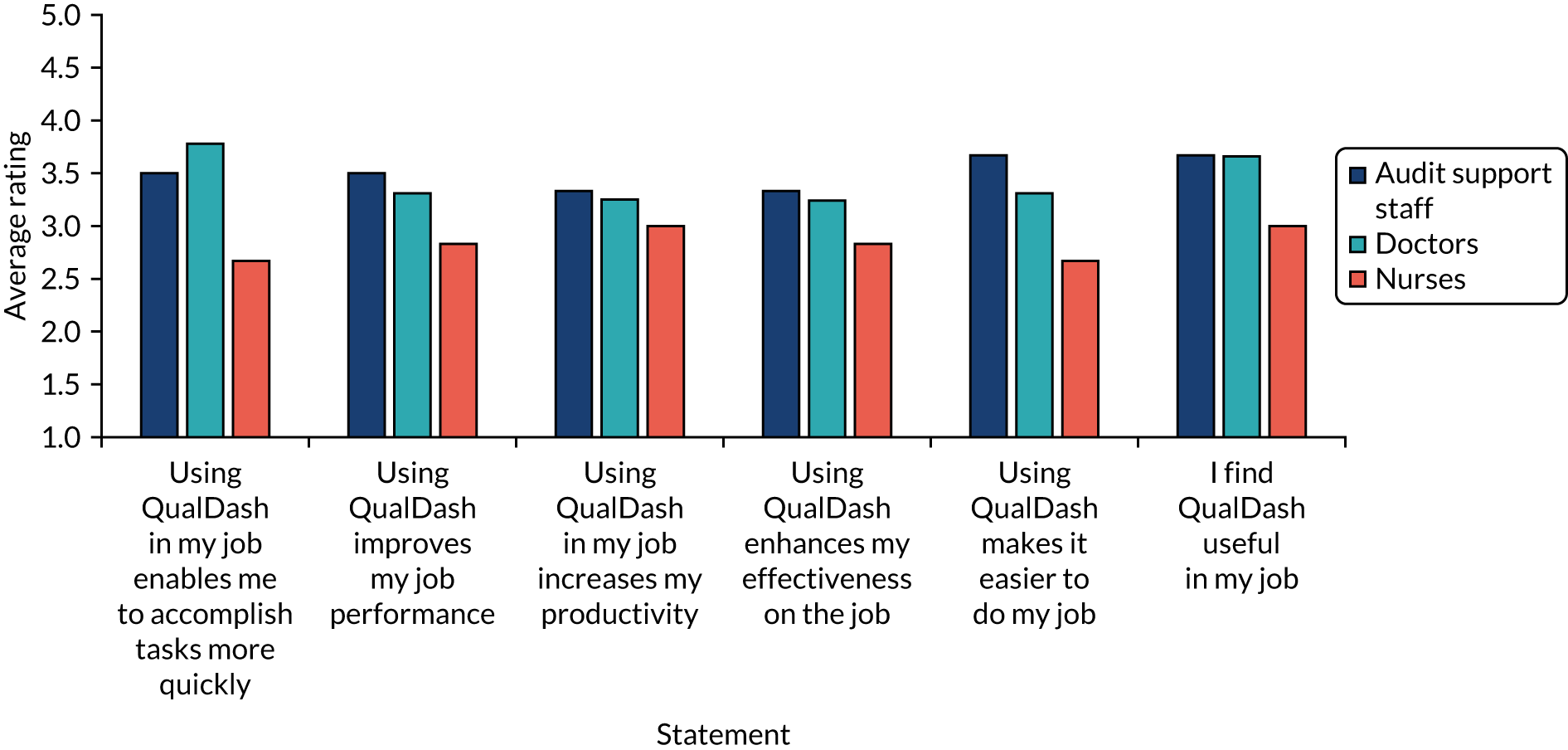

At the beginning of August 2020, a link to the questionnaire was e-mailed to participants. The questionnaire (see Report Supplementary Material 4) is based on the technology acceptance model (TAM), using well-validated items used in numerous previous studies of HIT77 and previous studies of dashboards.78 The TAM consists of 12 statements, with six statements concerned with usefulness and six statements concerned with ease of use, which respondents rate on a scale of 1 to 5, where 1 indicates strong disagreement and 5 indicates strong agreement. In addition to the TAM items, the questionnaire included questions regarding how frequently respondents used NCA data, how frequently respondents used QualDash during the evaluation period and how likely respondents would be to continue to use QualDash after the evaluation period. Following the initial e-mail, two reminder e-mails were sent. The survey closed at the beginning of September 2020.

Analysis

An iterative approach to data collection and analysis was taken to enable ongoing testing and refinement of the CMO configurations, gathering of further data in the light of such revisions and refinement of QualDash in response to participants’ feedback. Fieldnotes were entered into NVivo and were analysed thematically. Having developed the thematic framework, based on initial reading of the data, Natasha Alvarado and Lynn McVey analysed four sets of ethnographic fieldnotes to test the applicability of the codes and assess agreement. Codes were refined and definitions clarified where there was variation, and refined codes were applied to all fieldnotes, using NVivo. To begin to develop a ‘biography’ of QualDash, we developed narratives that linked cognate themes, enabling us to examine practices within and across cases, and to explore convergence and divergence in participants’ responses. In line with the biography of artefacts approach, we ensured that these narratives traced changes over time. The narratives were compared with the QualDash programme theory to determine whether the findings supported, refuted or suggested a revision or addition to the theory.

Analysis of fieldnotes was supplemented with analysis of the log files and questionnaire data, with the findings, again, being used to assess to what extent they supported or refuted the QualDash programme theory. Log file data were post-processed in Microsoft Excel® (Microsoft Corporation, Redmond, WA, USA), removing entries generated by members of the research team undertaking on-site testing of the software. Using timestamps for each login, the number of sessions per audit per month from installation to the end of July 2020 was determined for each site. Where a login occurred less than 20 minutes after the last timestamp and appeared to be the same user (based on the audit and year selected and the job title entered), this was treated as a continuation of the previous session. We also used the login information to determine the breakdown of users by role and audit for each site.
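The session rule described above can be illustrated with a minimal Python sketch. This is not the procedure carried out in Excel for the study; the function name `count_sessions`, the tuple layout of a log entry and the choice to compare each login with the previous login by the same apparent user (rather than with the previous entry in the log) are assumptions made for illustration only.

```python
from collections import Counter
from datetime import datetime, timedelta

# Gap below which a login is treated as continuing the previous session.
SESSION_GAP = timedelta(minutes=20)

def count_sessions(logins):
    """Count sessions per audit per month.

    `logins` is an iterable of (timestamp, audit, year, job_title) tuples
    (a hypothetical layout). A login by the same apparent user less than
    20 minutes after that user's previous login continues the session.
    """
    sessions = Counter()
    last_seen = {}  # apparent user -> timestamp of their most recent login
    for ts, audit, year, job_title in sorted(logins, key=lambda row: row[0]):
        user_key = (audit, year, job_title)  # proxy for "same user"
        previous = last_seen.get(user_key)
        if previous is None or ts - previous >= SESSION_GAP:
            sessions[(audit, ts.strftime("%Y-%m"))] += 1
        last_seen[user_key] = ts
    return sessions
```

Because the gap is measured from the most recent login, a run of logins each less than 20 minutes apart counts as one session, however long the run lasts.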

Questionnaire data were analysed in Excel to produce summary statistics for each TAM item, calculating mean and range, for both all respondents and just those who reported having used QualDash, broken down by audit and role. Following previous studies,78 ratings of ≥ 3 were taken to indicate a positive response.
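As an illustration of the summary statistics described above, the following Python sketch computes the mean and range for a single TAM item. The analysis itself was carried out in Excel; the function name `summarise_item` is hypothetical, and counting individual ratings of 3 or more as positive is one possible reading of the threshold, adopted here as an assumption.

```python
from statistics import mean

def summarise_item(ratings, positive_threshold=3):
    """Summarise the 1-5 ratings given to one TAM statement."""
    return {
        "n": len(ratings),
        "mean": mean(ratings),
        "range": (min(ratings), max(ratings)),
        # Assumption: each rating at or above the threshold counts as positive.
        "n_positive": sum(r >= positive_threshold for r in ratings),
    }
```

The same function could be applied to subsets of respondents, for example only those who reported having used QualDash, to produce the breakdowns by audit and role.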

Interrupted time series study

Sampling

The intention was to collect data across the five sites, receiving MINAP data for all sites and PICANet data for the three sites that participated in PICANet, with two control sites per intervention site. This required 10 control sites for MINAP and six control sites for PICANet. Matching of sites was based on the initial measures to be used (described in Analysis below). For MINAP, potential control sites were identified using the most recently published MINAP data (covering 2013–16) and matched according to size (i.e. number of non-STEMI patients per year), percentage of admissions where door to balloon was < 90 minutes and percentage of patients who received gold-standard drugs at discharge. For PICANet, potential control sites were identified using the most recently published PICANet data (covering 2013–17) and matched according to size (i.e. number of admissions per year and number of beds), number of emergency re-admissions within 48 hours per year and number of accidental extubations per year. A total of 20 potential control sites were approached. Nine control sites for MINAP agreed to participate and five control sites agreed to participate for PICANet. One site, however, subsequently withdrew. By the time that data were to be received for analysis, only three sites had issued R&D approval, with delays as a result of prioritising COVID-19 studies and restarting studies that had stopped because of the COVID-19 pandemic. Of the three control sites that had issued R&D approval, only one submitted data (the failure of the other sites to do so presumably owing to service pressures at that time). Similarly, service pressures meant that site D did not submit data, although data were received for sites A, B, C and E.

Given that data for only one control site were received and the matching was not relevant to the measures used in the final analysis (described in Analysis below), control data were not used, with time, instead, providing the control.

Data collection

An ITS study requires data for a minimum of three time points pre intervention and three time points post intervention for robust estimation of effects and, ideally, will also allow for estimation of seasonal effect on the outcomes.79 Sites were requested to provide MINAP and, where appropriate, PICANet data for 24 months pre intervention and 12 months post intervention. Consequently, for each site, there were 24 data points prior to introduction and 12 data points post intervention.

Analysis

Given the study intention to determine the feasibility of, and inform the design of, a trial, we intended to consider a range of measures. Our thoughts regarding which measures were appropriate evolved as the study progressed. Initially, we selected two process measures (i.e. one for MINAP and one for PICANet). For MINAP, we selected the composite process measure of cumulative missed opportunities for care. This measure has nine components [pre-hospital electrocardiography (ECG), acute use of aspirin, timely perfusion, referral for cardiac rehabilitation and prescription at hospital discharge of what are considered to be the gold-standard drugs (i.e. aspirin, thienopyridine inhibitor, angiotensin-converting enzyme inhibitor, β-hydroxy β-methylglutaryl coenzyme A reductase inhibitor and beta-blockers)] and is inversely associated with mortality.80 Given that some of these components, such as pre-hospital ECG, are outside direct control of the trust, we also intended to explore the impact of QualDash on the individual measures that make up cumulative missed opportunities for care. On the basis of the measures that cardiology clinicians described in the interviews as being important for measuring care quality, we intended to also look at the percentage of patients who undergo angiography within 72 hours from first admission to hospital, which is part of the Best Practice Tariff (BPT) financial incentive scheme, and, for those hospitals that provide percutaneous coronary intervention (PCI), the proportion of patients who have a door-to-balloon time (i.e. the time from arrival at the hospital to PCI) of < 60 minutes.

For PICANet, we selected use of non-invasive ventilation first for patients requiring ventilation, which has been shown to be associated with reduced mortality.81 However, this was not raised as an area of concern in interviews with PICU clinicians. On the basis of this, and two additional considerations, that is (1) it would require loading additional data into QualDash, which would reduce the performance of QualDash in terms of speed, and (2) it would require computation of the data, but the focus of QualDash is on visualising the data, a QualCard was not created for this metric. Although we still intended to include this measure in the ITS, we hypothesised that it would not change, unless other sources of information, such as the PICANet annual report, drew a PICU team’s attention to it. However, accidental extubation and unplanned readmission within 48 hours were identified in our interviews with PICU clinicians as being important indicators of care quality and, therefore, we intended to include these two measures in the ITS.

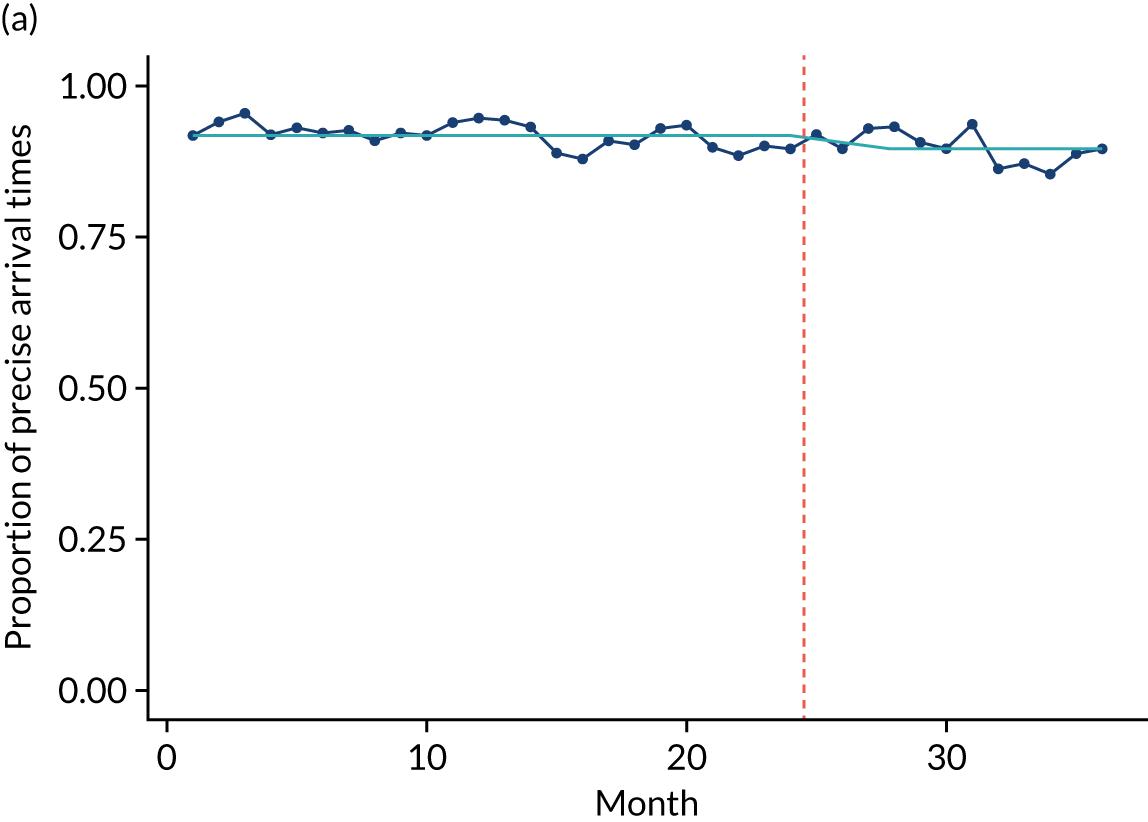

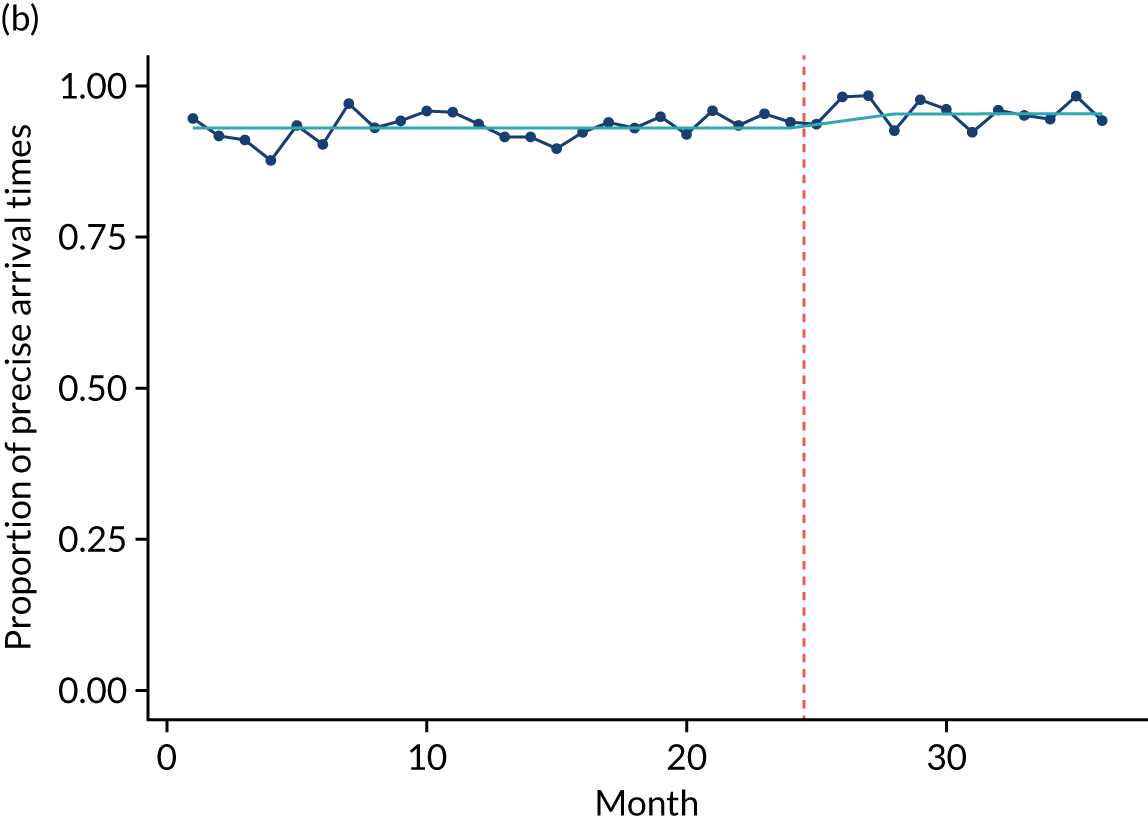

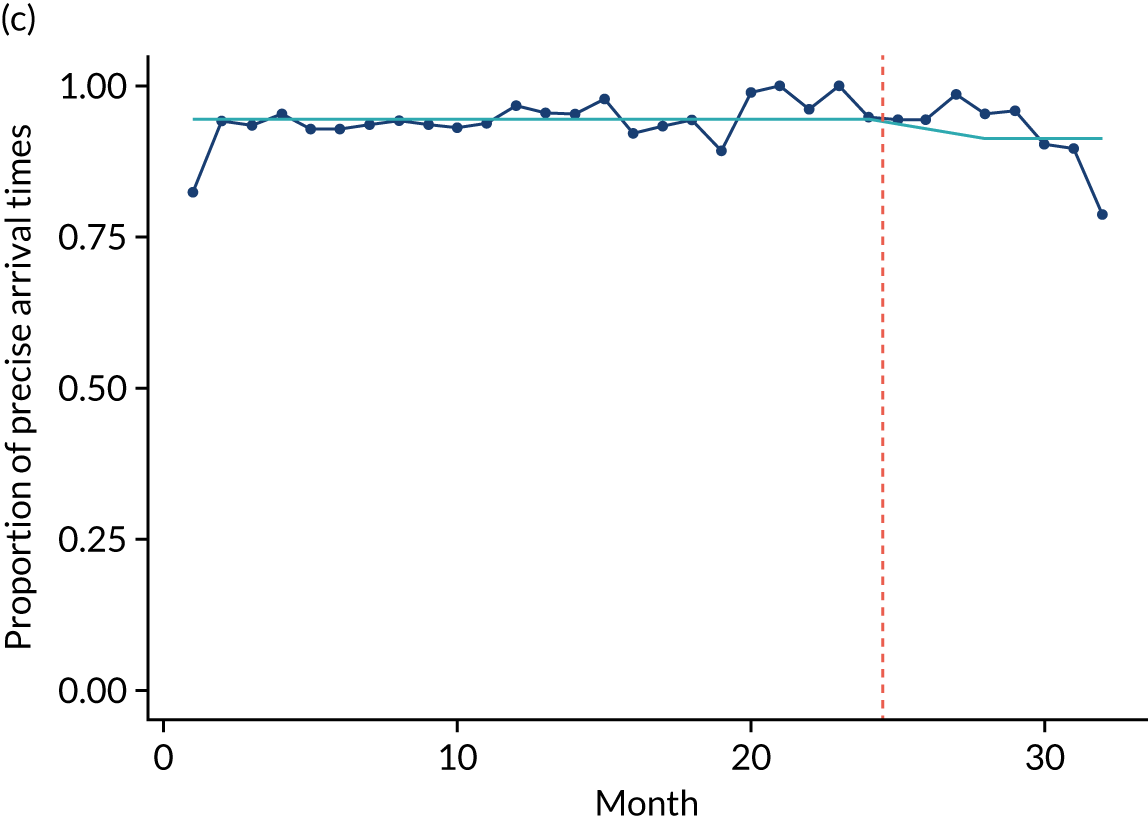

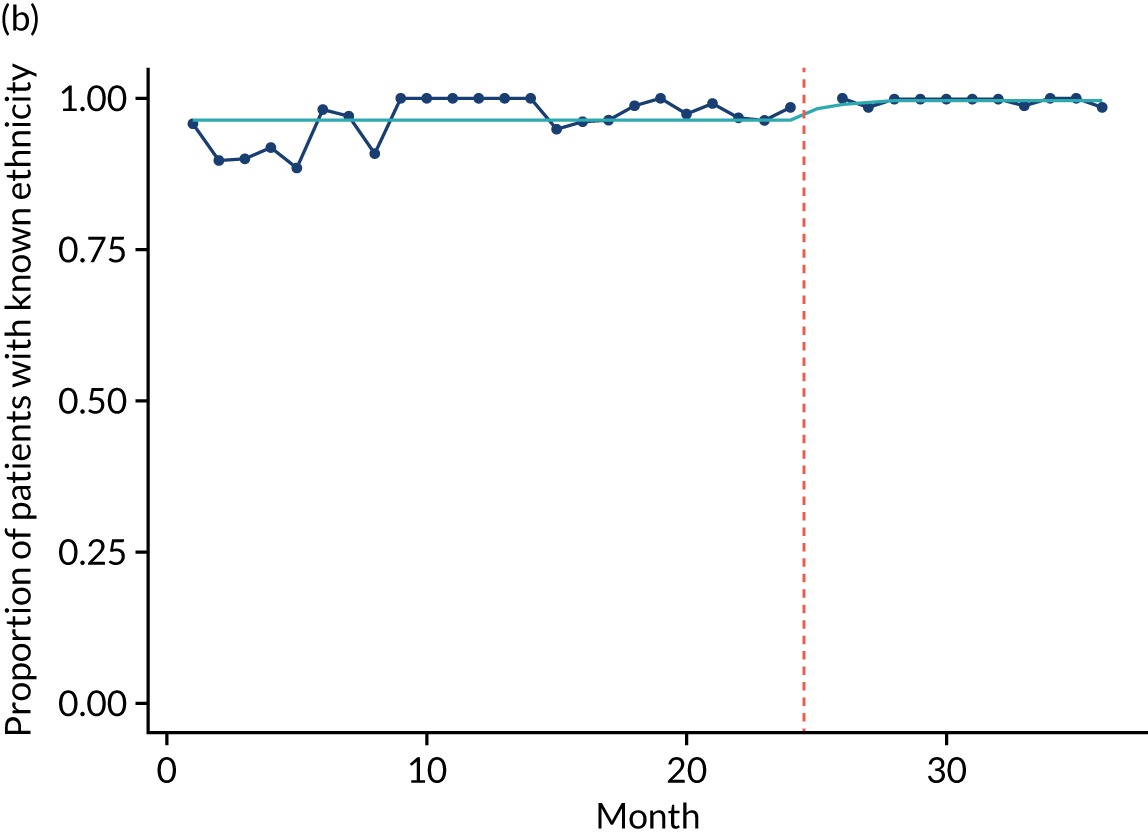

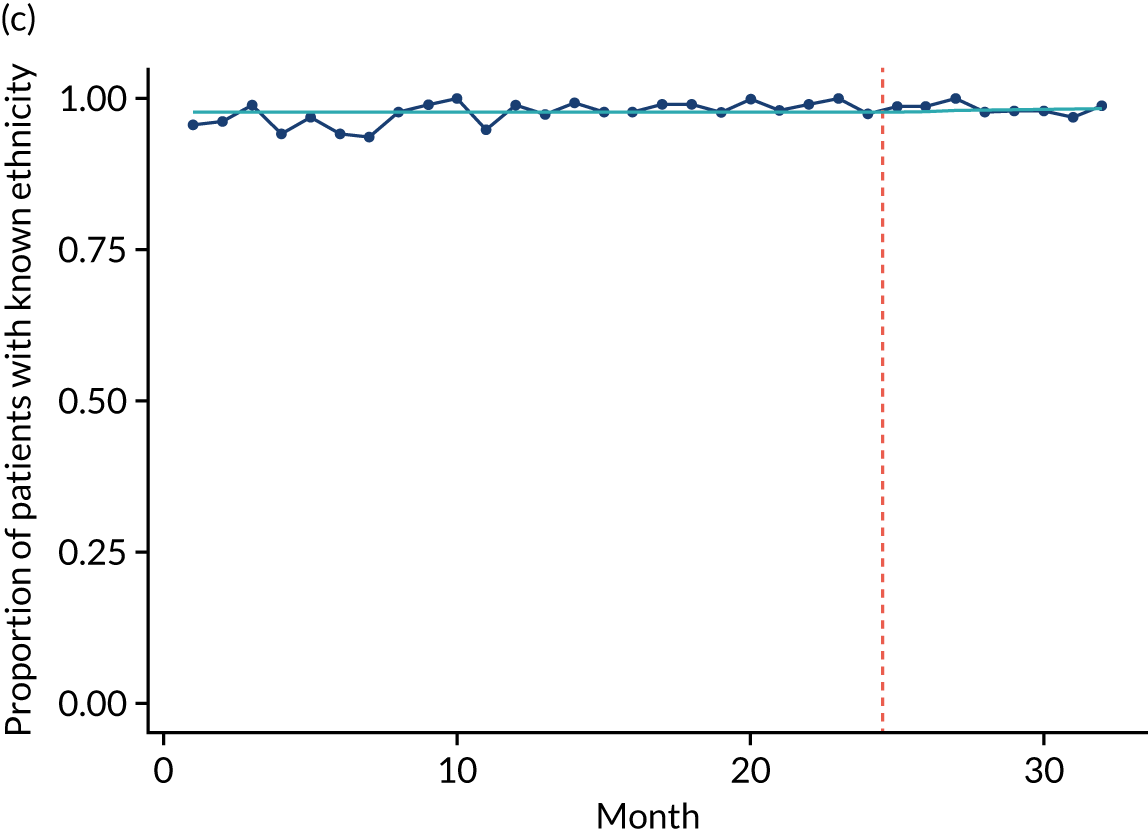

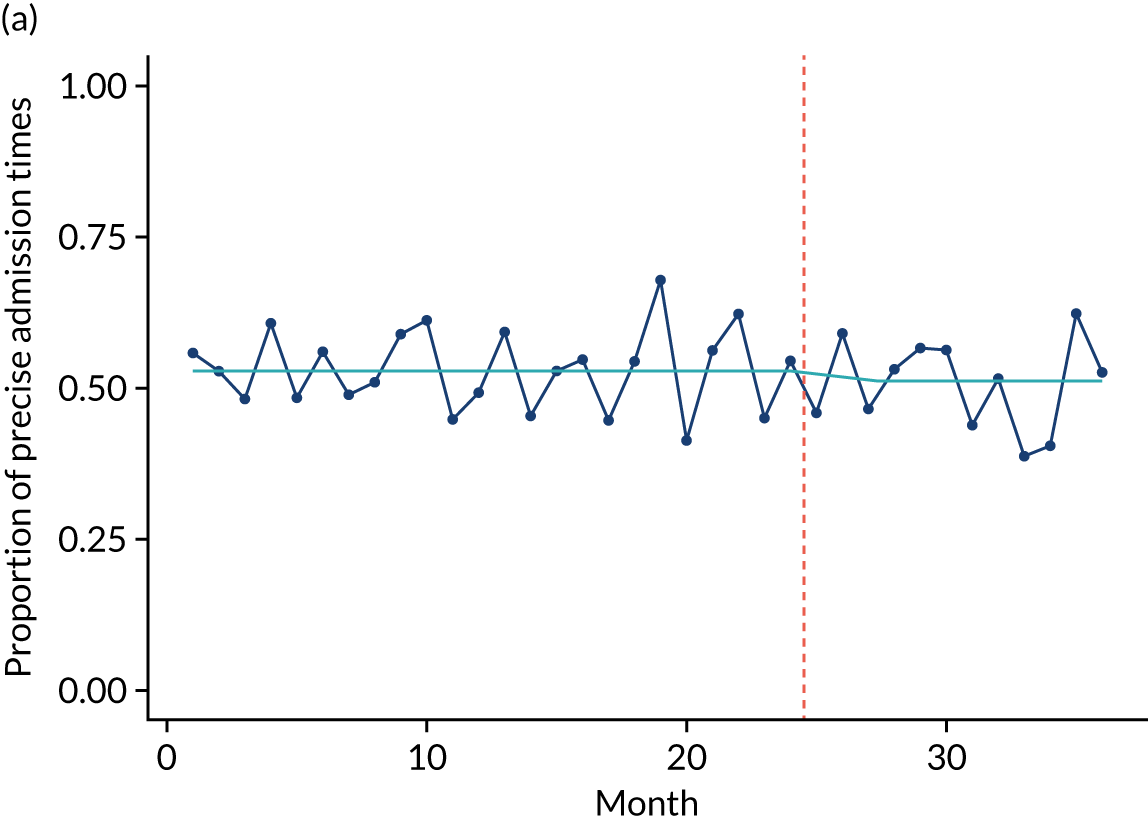

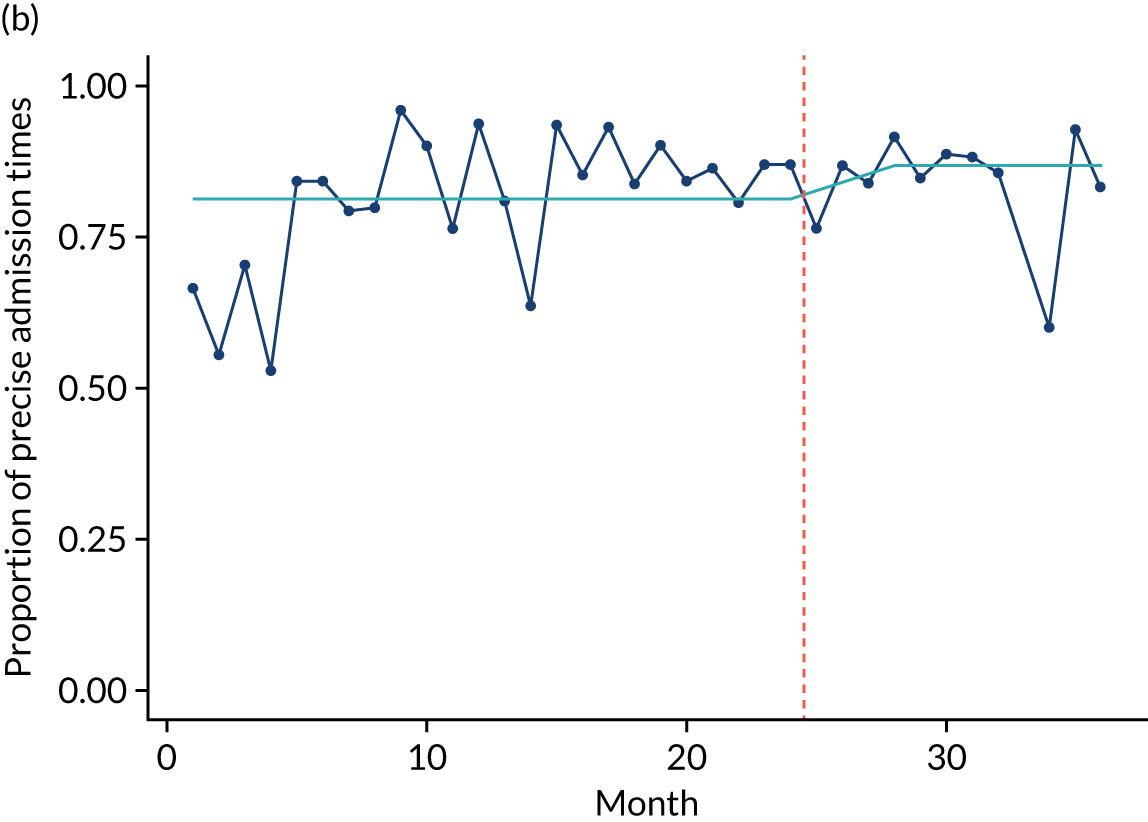

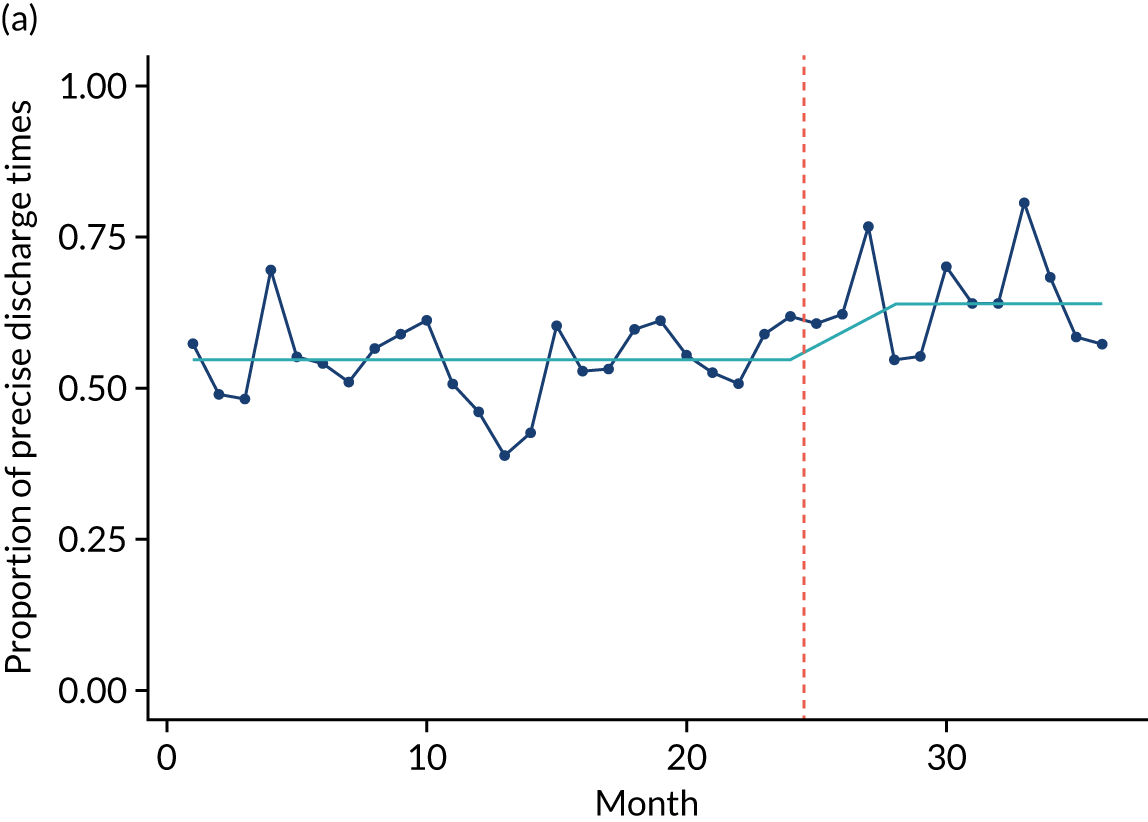

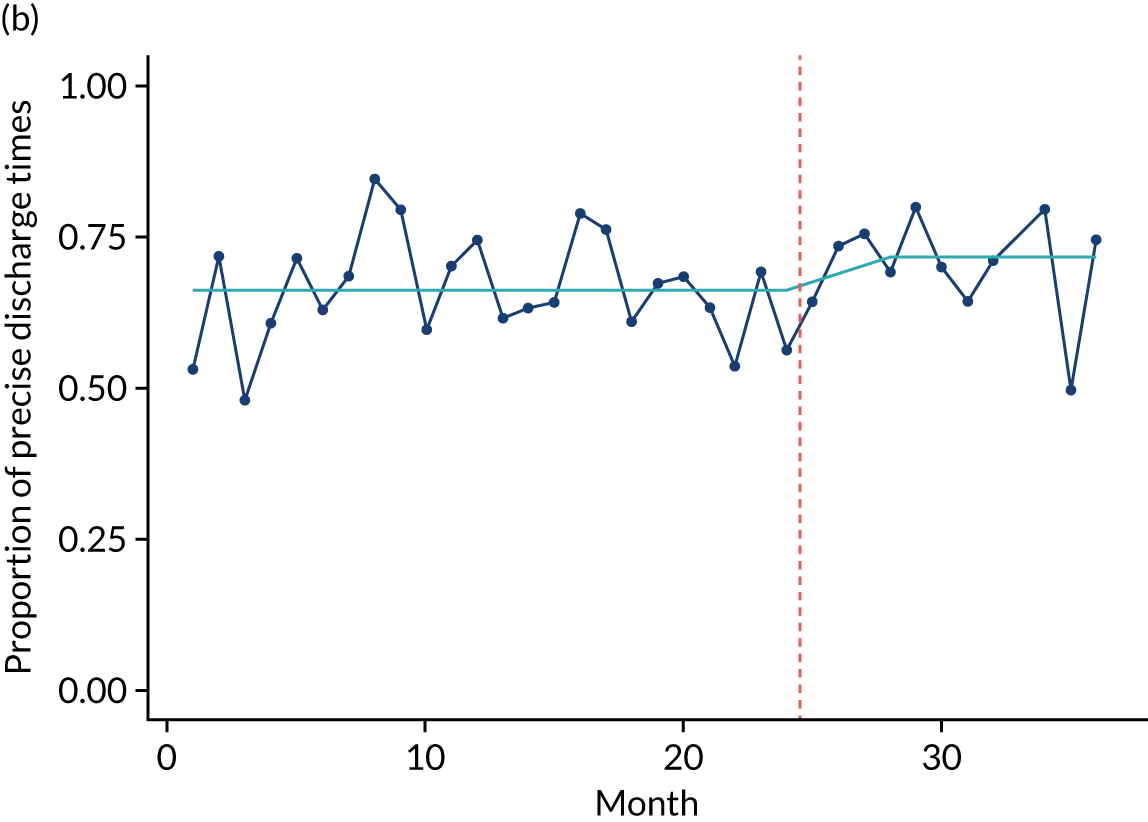

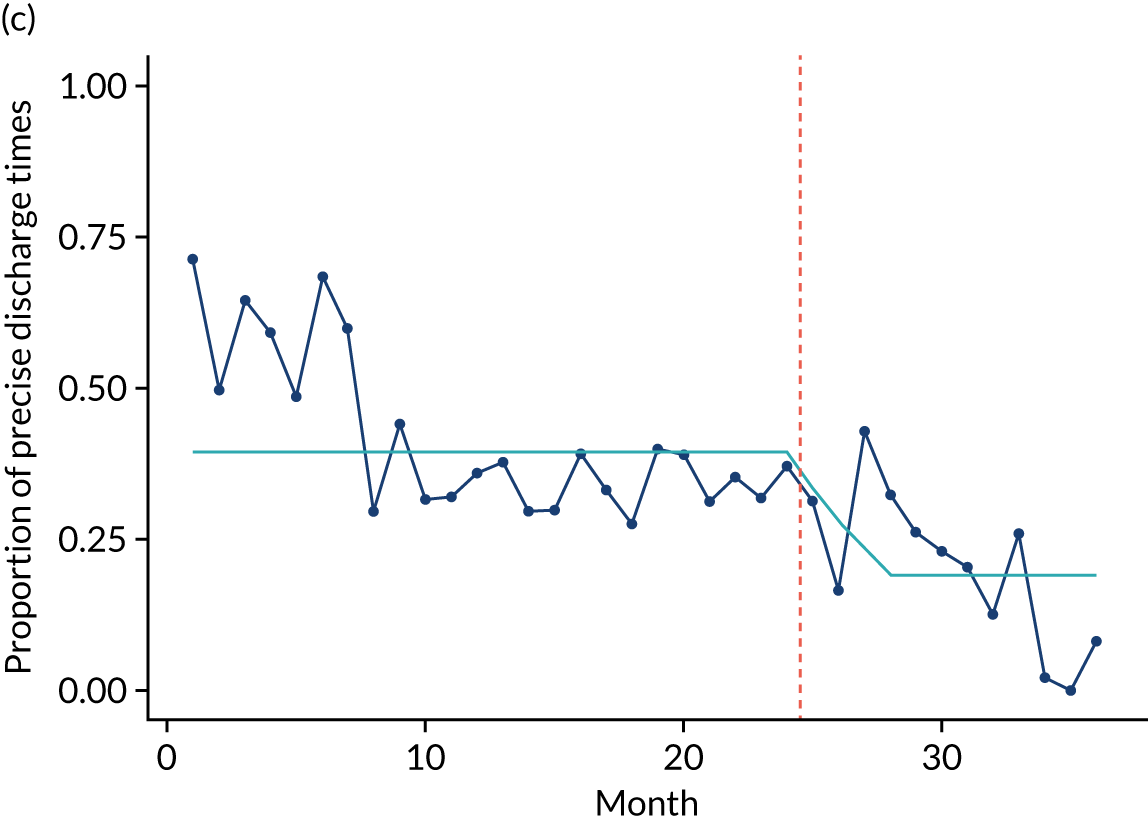

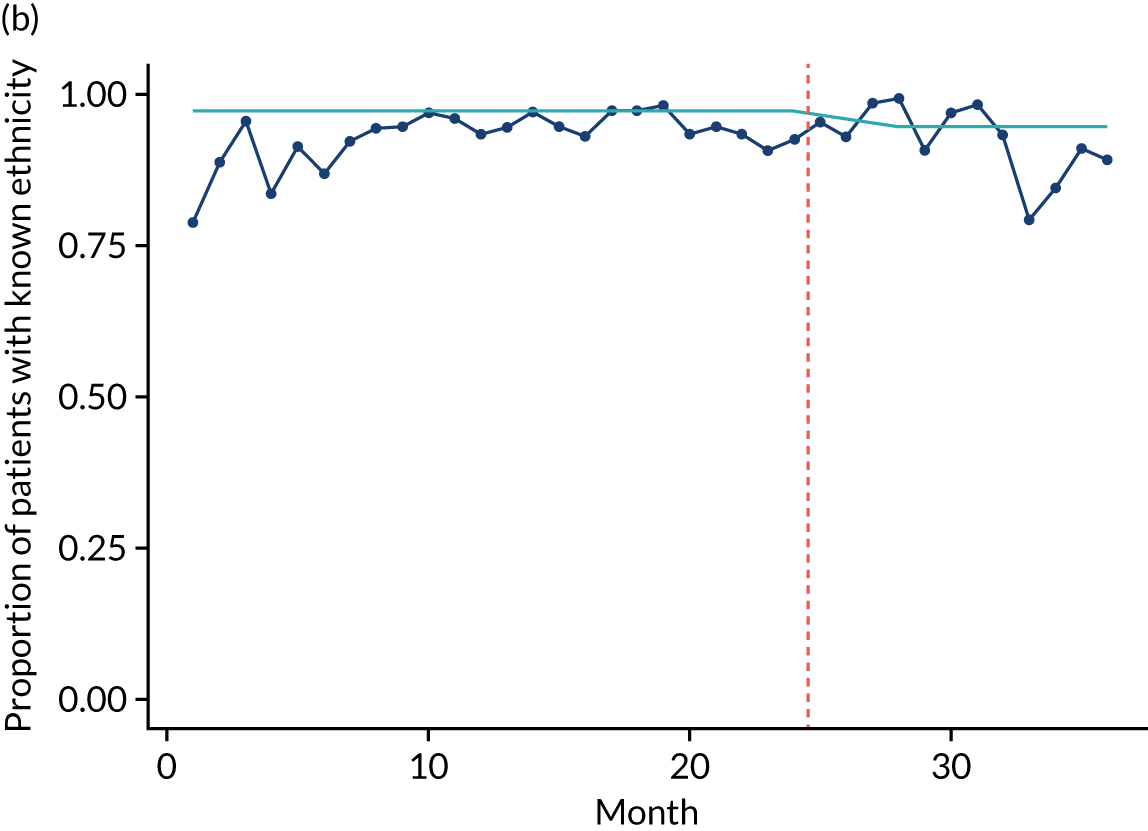

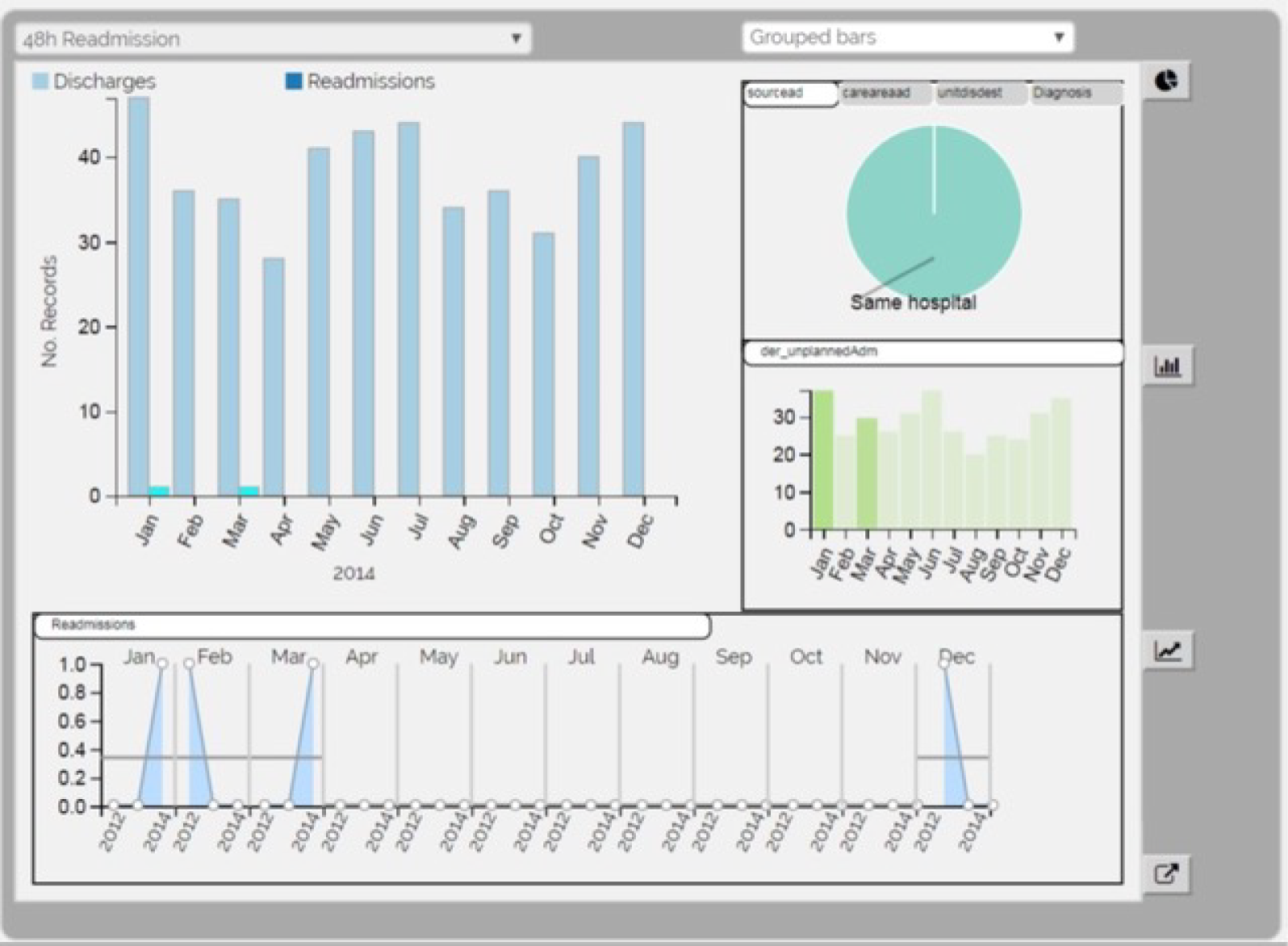

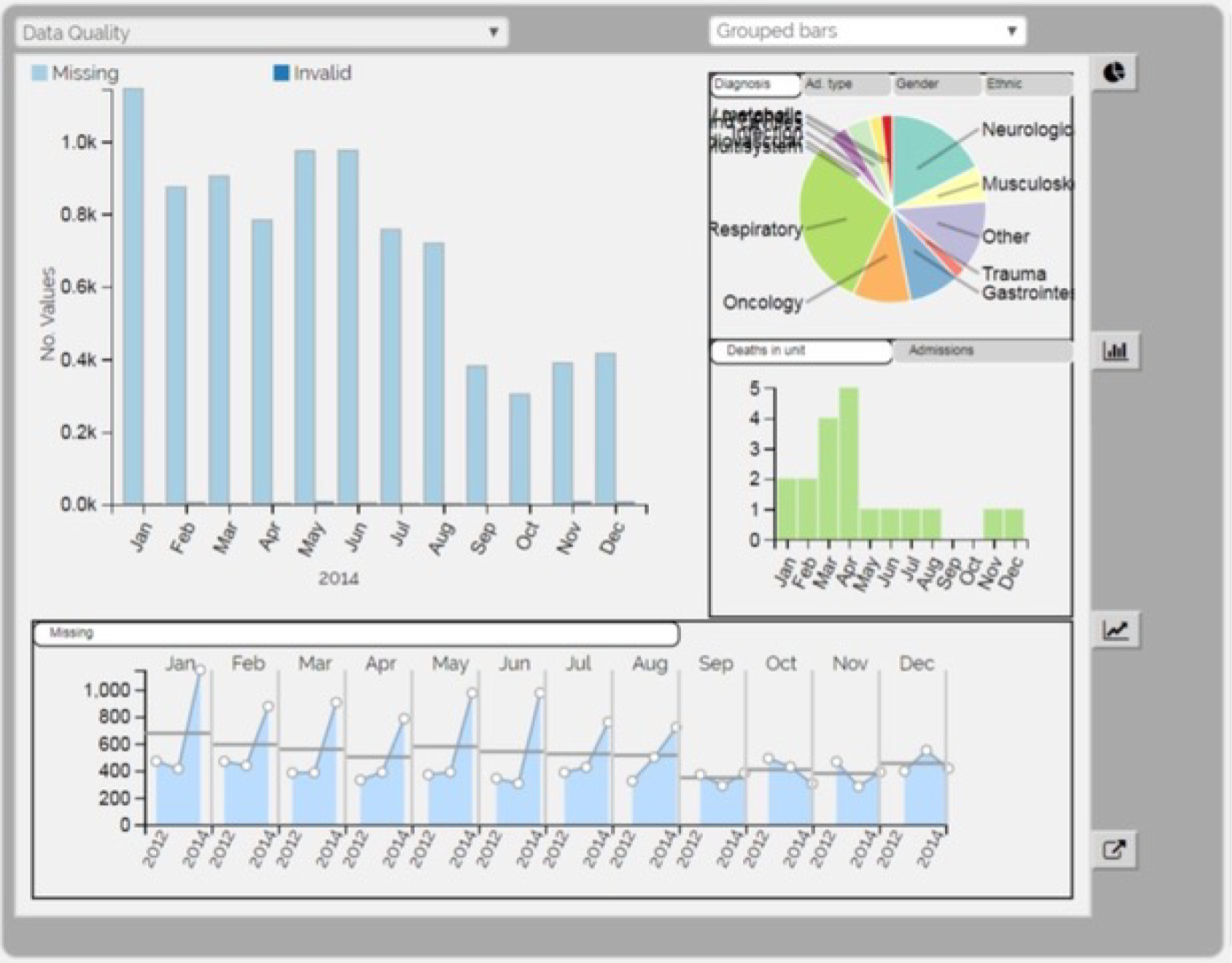

Limited QualDash use within our study sites meant that QualDash use could not be hypothesised to have had an impact on these measures and, therefore, we agreed with our SSC that it was inappropriate to use these measures. There was some qualitative evidence that QualDash positively impacted data quality and, therefore, it was agreed that analysis would focus on this. For MINAP, we looked at the precision of arrival time (as a measure of accuracy) and whether or not ethnicity was recorded (as a measure of completeness). For PICANet, we looked at the precision of admission time and discharge time and whether or not ethnicity was recorded. The decision to focus on the precision of arrival/admission and discharge times was based on discussions with PICANet about their own checks for data quality and we followed the methods that they used, where times are considered precise if they are not on the hour or at half past the hour. In addition, at the time of undertaking the analysis, we were in discussion with the Leeds Institute of Clinical Trials Research (Leeds, UK) regarding the design of a trial of QualDash in collaboration with PICANet (see Appendix 13), with emergency readmission within 48 hours as the primary outcome, for which the precise admission and discharge times would be needed, further motivating our focus on these measures. Ethnicity was chosen for assessing completeness, as this was known to be a field where there was variation in completeness.
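The two data quality measures can be expressed compactly. The Python sketch below is illustrative only: the record keys `arrival` and `ethnicity` are hypothetical, and treating a missing or blank value as 'not recorded' is an assumption, as the audits may use specific codes for unknown ethnicity.

```python
from collections import defaultdict
from datetime import datetime

def is_precise(timestamp):
    """A time is 'precise' if it is not on the hour or at half past the hour."""
    return timestamp.minute not in (0, 30)

def ethnicity_recorded(value):
    """Assumption: None or a blank string means ethnicity was not recorded."""
    return value is not None and value.strip() != ""

def monthly_quality(records):
    """Tally precise times and recorded ethnicity per calendar month.

    `records` is an iterable of dicts with hypothetical keys 'arrival'
    (a datetime) and 'ethnicity' (a string or None).
    """
    counts = defaultdict(lambda: {"n": 0, "precise": 0, "ethnicity_known": 0})
    for record in records:
        month = record["arrival"].strftime("%Y-%m")
        counts[month]["n"] += 1
        counts[month]["precise"] += is_precise(record["arrival"])
        counts[month]["ethnicity_known"] += ethnicity_recorded(record["ethnicity"])
    return dict(counts)
```

The monthly counts of precise times (or known ethnicity) out of all records are the kind of successes-out-of-trials data that a binomial model takes as its outcome.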

For both NCAs, the outcome was regressed on time and intervention (QualDash). Sites were analysed separately to allow for different effects of QualDash in different sites and variation of all other effects by site. Binomial regression was used, meaning that pooling would provide little advantage. Plotting the proportion of precise variables and known ethnicity showed no obvious seasonal effects or trends and, therefore, seasonal and trend effects were not added to the models. A simple fixed model with QualDash as the sole predictive variable was used across all sites and, therefore, variation is between sites and not between models. The effect of QualDash was anticipated to build up over the first few months of implementation and so a 25% impact is modelled in August 2019, 50% in September 2019, 75% in October 2019 and 100% thereafter. R software version 4.0.0 (The R Foundation for Statistical Computing, Vienna, Austria) was used to analyse the data (the R code is provided in Report Supplementary Material 5 and the data are provided in Report Supplementary Material 6). We report the effect of QualDash on a log-odds scale, with the standard error and p-value from the Wald test. Plots show the effect on a probability scale.
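To make the model concrete, the following Python sketch shows one way of building the phased-in QualDash variable and fitting a binomial regression with a logit link for a single site. It is not the study's R code (which is in Report Supplementary Material 5): the function names are hypothetical, an intercept is assumed alongside the QualDash term, and the model is fitted with a hand-written Newton-Raphson routine to keep the example self-contained.

```python
import math

def qualdash_exposure(year, month):
    """Phased-in intervention variable: 0 before August 2019, then 0.25,
    0.50 and 0.75 for August to October 2019, and 1 thereafter."""
    if (year, month) < (2019, 8):
        return 0.0
    ramp = {(2019, 8): 0.25, (2019, 9): 0.50, (2019, 10): 0.75}
    return ramp.get((year, month), 1.0)

def _score_and_information(b0, b1, successes, totals, exposure):
    """Score vector and Fisher information for logit(p) = b0 + b1 * x."""
    u0 = u1 = i00 = i01 = i11 = 0.0
    for y, n, x in zip(successes, totals, exposure):
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
        w = n * p * (1.0 - p)
        u0 += y - n * p
        u1 += x * (y - n * p)
        i00 += w
        i01 += w * x
        i11 += w * x * x
    return u0, u1, i00, i01, i11

def fit_binomial(successes, totals, exposure, iterations=50, tol=1e-10):
    """Return (QualDash effect on the log-odds scale, its standard error,
    two-sided Wald p-value) for monthly counts from one site."""
    b0 = b1 = 0.0
    for _ in range(iterations):
        u0, u1, i00, i01, i11 = _score_and_information(
            b0, b1, successes, totals, exposure)
        det = i00 * i11 - i01 * i01
        step0 = (i11 * u0 - i01 * u1) / det  # Newton step for the intercept
        step1 = (i00 * u1 - i01 * u0) / det  # Newton step for the effect
        b0, b1 = b0 + step0, b1 + step1
        if abs(step0) + abs(step1) < tol:
            break
    # Standard error from the inverse information at the final estimates.
    _, _, i00, i01, i11 = _score_and_information(
        b0, b1, successes, totals, exposure)
    se = math.sqrt(i00 / (i00 * i11 - i01 * i01))
    p_value = math.erfc(abs(b1 / se) / math.sqrt(2.0))
    return b1, se, p_value
```

Here `successes` would hold, for each month, the number of records with a precise time (or known ethnicity), `totals` the number of records and `exposure` the value of `qualdash_exposure` for that month. The routine assumes that the exposure varies across months; if it does not, the effect cannot be estimated.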

Phase 5: assessment of trial feasibility and wider applicability of QualDash

Trial feasibility and design

Phase 5 assessed the feasibility of a cluster RCT of QualDash, using the following three progression criteria: (1) QualDash used by ≥ 50% of intended users, (2) NCA data completeness improves or remains the same and (3) participants perceive QualDash to be useful and intend to continue using it after the study. Criteria 1 and 3 are concerned with the acceptability and uptake of the intervention and, therefore, have implications for recruitment to a trial. The second criterion is concerned with ensuring that the intervention does not have unintended negative consequences, which would affect both the success of the intervention (as QualDash will be less useful if data completeness is reduced) and the feasibility of outcome assessment. The third criterion is also concerned with participants’ perceptions of impact of QualDash on care.

As these progression criteria were met (although there were limitations in the data used for assessing whether or not the criteria were met), we drew on our findings from the multisite case study to determine which NCAs should be included in a trial. Having identified PICANet as a suitable NCA, we worked with the Leeds Institute of Clinical Trials Research to design an appropriate trial.

Assessing the wider applicability of QualDash

Part of our plan for phase 5 of the study had been to assess the extent to which QualDash is suitable for different NCAs and the extent to which QualDash meets the needs of commissioners, through three focus groups. However, given the international focus on COVID-19 at the time and a request from a region-wide Gold Command, we sought, instead, to understand the requirements for such a body (which included commissioners) and how QualDash could be revised to support their work, using interviews for this purpose.

Sample

Although it was not possible to interview those individuals who sit on Gold Command because of pressures on their time, access was provided to individuals who support the work of Gold Command members and would have an understanding of their requirements for a quality dashboard. Seven interviews were conducted. Four participants worked part time as general practitioners (GPs) in large community practices and also had more strategic roles, working within the Clinical Commissioning Group (CCG) or a primary care network, bringing together different general practices within the region. Of the remaining participants, one worked as a chief operating officer for the primary care network, one was head of business intelligence for the CCG and one was an associate clinical director for the CCG.

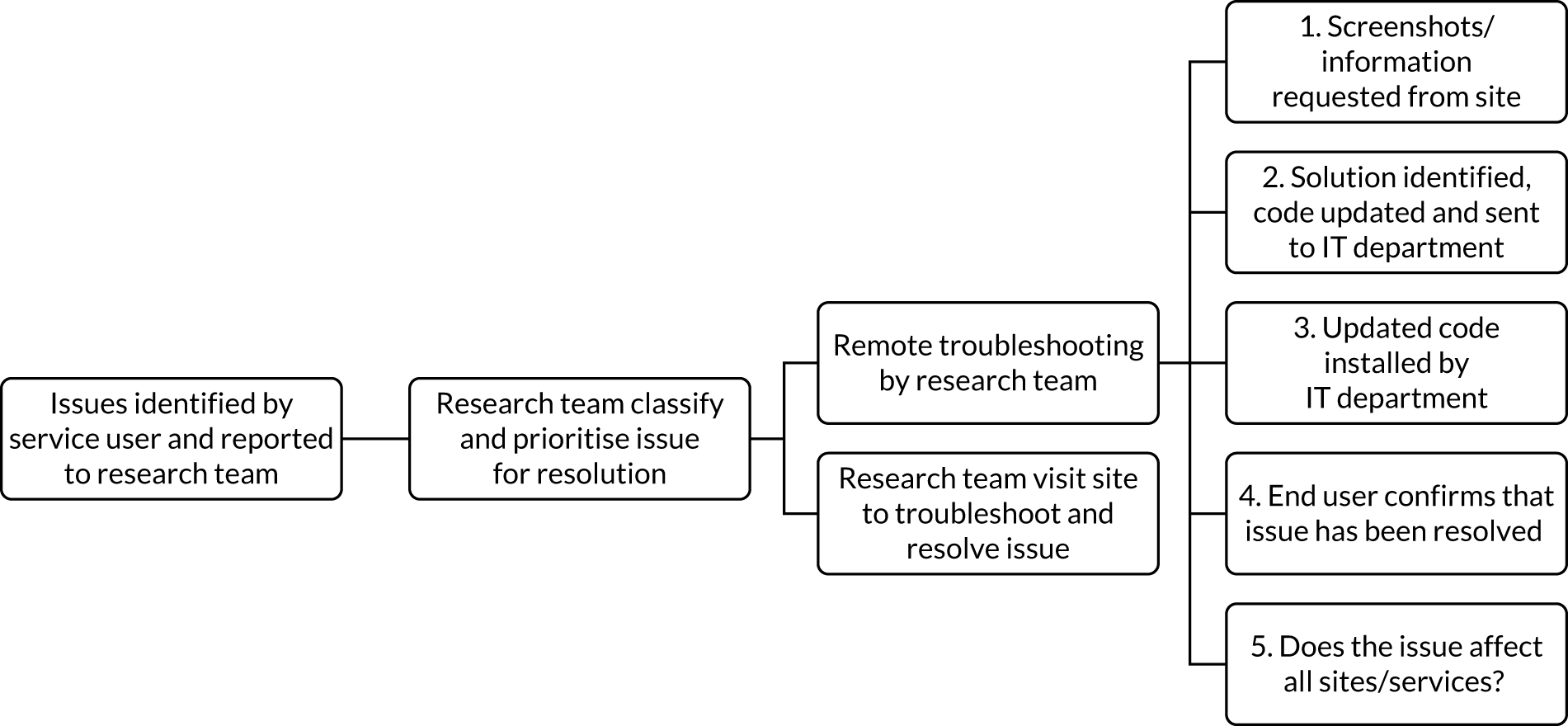

Data collection