Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/91/07. The contractual start date was in June 2005. The draft report began editorial review in September 2010 and was accepted for publication in March 2011. The commissioning brief was devised by the NCCRM who specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2012. This work was produced by Hood et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/). This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

Many studies in health sciences research rely on collecting participant-reported outcomes of some form or another. Although some of these are participant reports of factual information, such as adherence to drug regimes, that could be objectively validated, there is increasing recognition of the importance of subjective measures, such as attitude to, and perceptions of, health and services provision. In addition to this, measures relating to health status which are not objectively measurable, such as quality of life (QoL), are becoming key secondary or even primary outcomes in many studies. This has led to a rapid growth in the development and validation of such measures. Few clinical trials, even those of pharmacological or surgical interventions, would be run today without measuring the patients’ QoL and assessing the acceptability of the intervention being trialled. The US Food and Drug Administration has recognised the importance of the inclusion of such measures as QoL for registration purposes,1 and the National Institute for Health and Clinical Excellence incorporates quality-adjusted life-years (QALYs) as part of its decision-making process.

Alongside the exponential increase in health-related literature devoted to participant-reported outcomes (such as QoL), attention is being paid to the method or mode of data collection. Much of this has been driven by two main components: the rapid development of new technologies that can lead to increased ease, speed and efficiency of data capture, alongside an increasing drive for maximising response rates. This has led to a wide variety of options for mode of data collection being available to the health science researcher, with some studies adopting multiple approaches to follow up as many of the participants as possible. Although this approach may make sense pragmatically, it needs to be informed by an understanding of the participant’s ability to respond and statistical adjustment for biases introduced by multimode usage.

Theoretical approach

Survey methodologies (e.g. in the business, marketing, social and political sciences) have a literature base of their own covering theory to practice, much of which has been only slowly recognised in the health arena. Few health-related outcome development papers indicate a theoretical approach to eliciting survey response.

Although theoretical approaches are rarely considered, there has been a focus on maximising data capture by improving response rates. Reviews have been conducted which consider how features of the survey instrument (e.g. presentation, length, incentives) impact on response rates. 2,3 There has also been an increase in ways in which such data are collected – the mode of data collection. With increasing levels of technology, a wider variety of modes are in use. The main focus in choosing a mode for a study appears to be based predominantly on improving response rates and minimising cost. The impact on the validity of response is not generally a consideration. In addition to this, in order to gain as complete a dataset as possible, many studies are using mixed modes either to enhance participants’ choice (e.g. opting for web- or paper-based surveys) or to improve follow-up rates (i.e. non-responders getting telephone data collection). Although for practical reasons these choices are entirely justifiable, consideration needs to be given to the validity of response via different modes and the impact that choice of mode or modes might have on the conclusions from a study.

Psychological theories of survey response will be considered in depth in Chapter 2. However, survey non-response and increasing concerns about maintaining adequate levels of response have led researchers to seek to categorise different forms of non-response. For example, Groves and Couper4 distinguish non-response due to non-contact, refusal to co-operate and inability to participate. The use of incentives to maintain response has, in turn, fostered theoretical development about how such inducements work, ranging, for example, from economic theories of incentives to models describing a broader consideration of social exchange. Comprehensive theories of survey involvement have also been introduced and tested empirically. 5

More recently, a paradigm shift has been described within survey methodology from a statistical model focused upon the consequences of surveying error to social scientific models exploring the causes of error. 6 Attempts to develop such theories of (1) survey error, (2) decisions to participate and (3) response construction have been brought under the general banner of the Cognitive Aspects of Survey Methodology (CASM) movement. Understanding and reducing measurement error, rather than sampling error, is at the forefront of this endeavour. An impetus for recent theoretical developments is very much provided by technological innovation and diversity, and a requirement to understand the relative impact of different data collection modes upon survey response.

Several information-processing models describing how respondents answer questions have been proposed, which share a common core of four basic stages: (1) comprehension of the question; (2) retrieval of information from autobiographical memory; (3) use of heuristic and decision processes to estimate an answer; and (4) response formulation. 7 These models describe mostly sequential processing. A good example of a sequential information processing model is provided by Tourangeau et al. 8 For each stage, there are associated processes identified, which a respondent may or may not use when answering a question. Each stage and each process may be a source of response error.

As indicated above, there has been a substantial expansion in the modes of data elicitation and collection available to survey researchers over the last 30 years. In 1996, Tourangeau and Smith9 identified six methods that may be used. 9 The literature since then shows that this expansion has continued, with methods now including personal digital assistants (PDAs) and websites. Subsequently, Tourangeau et al. 8 delineated 13 different modes of survey data collection (including remote data collection methods such as telephone, mail, e-mail and the internet), which they considered to differ in terms of five characteristics: (1) how respondents were contacted; (2) the presentational medium (e.g. paper or electronic); (3) method of administration (via interviewer or self-administered); (4) sensory input channel used; and (5) response mode. 8

Variations even within the same mode of data collection further complicate comparison. For example, Honaker10 describes computer-administered versions of the Minnesota Multiphasic Personality Inventory (MMPI), which differ in the type of computer used and in their computer–user interfaces, with inconsistent item presentation and response formats. Therefore, findings for one computerised version of a test cannot be easily generalised to other versions. Other variables that could mediate the effect of different modes of data collection have also been considered, including the overall pace of the interview, the order of survey item processing and the role of different mental models used by respondents. Although the role of different mental models used by respondents, in particular, is rarely assessed, it has been considered a potentially significant mediator of response behaviour. 8

The challenge for health sciences research

As described above, the first characteristic underlying the different modes of data collection considered by Tourangeau et al. 8 was method of contact. Work assessing the impact of an integrated process of respondent approach, consent and data collection has addressed bias due to selective non-ascertainment (i.e. the exclusion of particular subgroups). These may be clearly identifiable subgroups, such as people without telephones or computers (for telephone or internet approaches), or less clearly identifiable subgroups, such as those with lower levels of literacy or the elderly (for paper-based approaches). There is also considerable work on improving response rates and the biases induced by certain subgroups being less likely to consent to take part in a survey.

Furthermore, an important question in health services research is the use of data collection methods within prospective studies, where patients have already been recruited via another approach. This could be within a clinic or other health service setting rather than the survey instrument being the method of approach as well as data collection. Edwards et al. 3 have recently updated a review of the literature (both health and non-health) to identify randomised trials of methods of improving response rates to postal questionnaires. Another review in health-related research has focused on the completeness of data collection and patterns of missing data, as well as response rates. 2

Guidance is needed not just about the ‘best’ method to use and most appropriate theoretical model of response, but also the consequence of combining data collected via different modes. For example, a common multimethod approach is when a second mode of data collection is used when the first has been unsuccessful (e.g. using telephone interview when there has been no response to a postal approach11). Criteria for judging equivalence of the two approaches are therefore required. Honaker10 uses the concepts of psychometric equivalence and experiential equivalence. The former describes when the two forms produce results with equal mean scores, identical distribution and ranking of scores and agreement in how scores correlate with other variables. The latter deals with how two forms may differ in how they affect the psychometric and non-psychometric components of the response task.
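The criteria for psychometric equivalence listed above can be illustrated with a short descriptive check. This is only a minimal sketch under stated assumptions: it assumes paired scores from the same respondents on two forms, uses the ratio of standard deviations as a crude stand-in for comparing distributions, and uses hypothetical data and function names (`equivalence_summary`, `ranks`, `pearson`). It is not Honaker's procedure and does not replace formal equivalence testing.

```python
from statistics import mean, stdev


def ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    numerator = sum((a - mx) * (b - my) for a, b in zip(x, y))
    denominator = (sum((a - mx) ** 2 for a in x)
                   * sum((b - my) ** 2 for b in y)) ** 0.5
    return numerator / denominator


def equivalence_summary(form_a, form_b, external):
    """Descriptive indicators for paired scores from two forms of one instrument."""
    return {
        # Equal mean scores: a difference near 0 is consistent with equivalence.
        "mean_difference": mean(form_a) - mean(form_b),
        # Crude distribution check (assumption): spread ratio near 1.
        "sd_ratio": stdev(form_a) / stdev(form_b),
        # Ranking of scores: Spearman's rho, computed as Pearson on ranks.
        "rank_agreement": pearson(ranks(form_a), ranks(form_b)),
        # Agreement in how scores correlate with another variable.
        "external_corr_gap": pearson(form_a, external) - pearson(form_b, external),
    }


# Hypothetical paired scores for six respondents (illustrative only).
paper = [12, 15, 9, 20, 17, 11]
computer = [13, 15, 10, 19, 18, 11]
age = [34, 51, 29, 62, 45, 38]
summary = equivalence_summary(paper, computer, age)
```

In this made-up example the two forms rank respondents identically while the computer form scores slightly higher on average, which shows why the criteria need to be examined separately rather than through a single correlation.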

In order to inform health services research, guidance is needed which quantifies the differences between modes of data collection and indicates which factors are associated with the magnitude of this difference. These could be context based in terms of where the participant is when the information is provided (e.g. health setting, own home, work), content based in terms of questionnaire topic (e.g. attitudes to sexual behaviour) or population based (e.g. elderly). The factors identified by Tourangeau et al. 8 also need to be tested across a wide range of modes and studies.

Aim

The aim of this project is to identify generalisable features affecting responses to the different modes of data collection relevant to health research from a systematic review of the literature.

Objectives

- To provide an overview of the theoretical models of survey response and how they relate to health research.
- To review all studies comparing two modes of administration for subjective outcomes and assess the impact of mode of administration on response quality.
- To explore the impact of findings for key identified health-related measures.
- To create an accessible resource for health science researchers, which will advise on the impact of the selection of different modes of data collection on response.
- To inform the analysis of multimode studies.

Chapter 2 Theoretical perspectives on data collection mode

Background

Understanding the unique experience of both users and providers of health services requires a broad range of suitably robust qualitative and quantitative methods. Both observational (e.g. epidemiological cohort) and interventional studies [e.g. randomised controlled trials (RCTs)] may collect data in a variety of ways, and often require self-report from study participants. Increasingly in clinical studies, clinical indicators and outcomes will form part of an assessment package in which patient lifestyle choices and behaviour, attitudes and satisfaction with health-care provision are a major focus. Health researchers need both to be reassured and to provide reassurance that the measurement tools available are fit for purpose across a wide range of contexts. This applies not only to the survey instrument itself, but also to the way it is delivered and responded to by the participant.

Options for collecting quantitative self-reported data have expanded substantially over the last 30 years, stimulated by technological advances in telephony and computing. The advent of remote data capture has led to the possibility of clinical trials being conducted over the internet. 12,13 Concerns about survey non-response rates have also led researchers to innovate – resulting in greater diversity in data collection. 14 Consequently, otherwise comparable studies may use different methods of data collection. Similarly, a single study using a sequential mixed-mode design may involve, for example, baseline data collection by self-completion questionnaire and follow-up by telephone interview. This has led to questions about the comparability of data collected by the different methods. 15

In this chapter we apply a conceptual framework to examine the differences generated by the use of different modes of data collection. Although there is considerable evidence about the effect of different data collection modes upon response rates, the chapter addresses the processes that may ultimately impact upon response quality. 16–19 The framework draws upon an existing cognitive model of survey response by Tourangeau et al.,8 which addresses how the impact of different data collection modes may be mediated by key variables. Furthermore, the chapter extends the focus of the model to highlight specific psychological response processes that may follow initial appraisal of survey stimulus. Although much of the empirical evidence for mode effects has been generated by research in other sectors, the relevance for health research will be explored. In doing so, other mediators of response will be highlighted.

It is important to clarify what lies outside the scope of the current review. Although mode of data collection can impact upon response rate as well as response content, that is not the focus of this report. Similarly, approaches that integrate modes of data collection within a study or synthesise data collected by varying modes across studies are addressed only in passing. Although these are important issues for health researchers, this review concentrates on how the mode of data collection affects the nature of the response provided by respondents, with a particular emphasis on research within the health sciences.

Variance attributable to measurement method rather than the intended construct being measured has been well recognised in the psychological literature and includes biases such as social desirability and acquiescence bias. 20 This narrative review has been developed alongside the systematic literature review of mode effects in self-reported subjective outcomes presented in the subsequent chapters. 21 The chapter highlights for researchers how different methods of collecting self-reported health data may introduce bias and how features of the context of data collection in a health setting such as patient role may modify such effects.

Modes and mode features

What are modes?

Early options for survey data collection were either face-to-face interview, mail or telephone. Evolution within each of these three modes led to developments such as computer-assisted personal interview (CAPI), web-delivered surveys and interactive voice response (IVR). Web-based and wireless technologies, such as mobile- and PDA-based telephony, have further stimulated the development of data collection methods and offer greater efficiency than traditional data collection methods, such as paper-based face-to-face interviews. 22 Within and across each mode a range of options are now available and are likely to continue expanding.

A recent example of technologically enabled mode development is computerised adaptive testing (CAT). Approaches such as item response theory (IRT) modelling allow for survey respondents to receive differing sets of calibrated question items when measuring a common underlying construct [such as health-related quality of life (HRQoL)]. 23 Combined with technological advances, this allows for efficient individualised patient surveys through the use of computerised adaptive testing. 24 In clinical populations, CAT may reduce response burden, increase sensitivity to clinically important changes and provide greater precision (reducing sample size requirements). 23 Although IRT-driven CAT may be less beneficial where symptoms are being assessed by single survey items, more general computer-aided testing that mimics the normal clinical interview may be successfully used in combination with IRT-based CAT. 25

What are the key features of different data collection modes?

The choice of mode has natural consequences for how questions are worded. Face-to-face interviews, for example, may use longer and more complex items, more adjectival scale descriptors and show cards. 26 In contrast, telephone interviews are more likely to have shorter scales, use only end-point descriptors and are less able to use visual prompts, such as show cards. However, even when consistent question wording is maintained across modes there will still be variation in how the survey approach is appraised psychologically by respondents.

The inherent complexity of any one data collection approach (e.g. the individual characteristics of a single face-to-face interview paper-based survey) and increasing technological innovation mean that trying to categorise all approaches as one or other mode may be too simplistic. Attention has therefore been focused upon survey design features that might influence response. Two recent models by Groves et al. 18 and Tourangeau et al. 8 illustrate this. Tourangeau et al. identified five features: (1) how respondents were contacted (e.g. by post, in person); (2) the presentational medium (e.g. paper or electronic); (3) method of administration (interviewer- or self-administered); (4) sensory input channel (e.g. visual or aural); and (5) response mode (e.g. handwritten, keyboard, telephone). 27 Groves et al. 18 also distinguished five features: degree of interviewer involvement, level of interaction with respondent, degree of privacy, channels of communication (i.e. sensory modalities) and degree of technology. 28 Although both models cover similar ground, Groves et al. 18 place a greater emphasis upon the nature of the relationship between the respondent and the interviewer. Both models attempt to isolate the active ingredients of survey mode. However, Groves et al. 18 note that in practice differing combinations of features make generalisation difficult – reflected in their emphasis upon each individual feature being represented as a continuum. Although research on data collection methods has traditionally referred to ‘mode’, given the complexity highlighted above, where appropriate we use the term ‘mode feature’ in this chapter.

How mode features influence response quality

Common to several information-processing models of how respondents answer survey questions are four basic stages: (1) comprehension of the question; (2) retrieval of information from autobiographical memory; (3) use of heuristic and decision processes to estimate an answer; and (4) response formulation. 7 At each stage, a respondent may use certain processes when answering a question, which may result in a response error.

Of the features that might vary across data collection method, Tourangeau et al. 8 proposed four features that may be particularly influential in affecting response: (1) whether a survey schedule is self-administered or interviewer administered; (2) the use of a telephone; (3) computerisation; and (4) whether survey items are read by (or to) the respondent. 8 Although this chapter focuses on differences between these broad mode features, there may still be considerable heterogeneity within each. For example, computerisation in the form of an individual web-delivered survey may apparently provide a standardised stimulus (i.e. overall package of features) to the respondent, but different hardware and software configurations for each user may violate this assumption. 22

Tourangeau et al. 8 considered three variables to mediate the impact of mode feature: degree of impersonality, the sense of legitimacy engendered by the survey approach and the level of cognitive burden imposed. Both impersonality and legitimacy represent the respondent’s perceptions of the survey approach and instrument. Cognitive burden, impersonality and legitimacy are a function of the interaction between the data collection method and the individual respondent (and subject to individual variation). Nevertheless, the level of cognitive burden experienced by individuals is less dependent upon the respondent’s psychological appraisal of the survey task than perceptions of either impersonality or legitimacy.

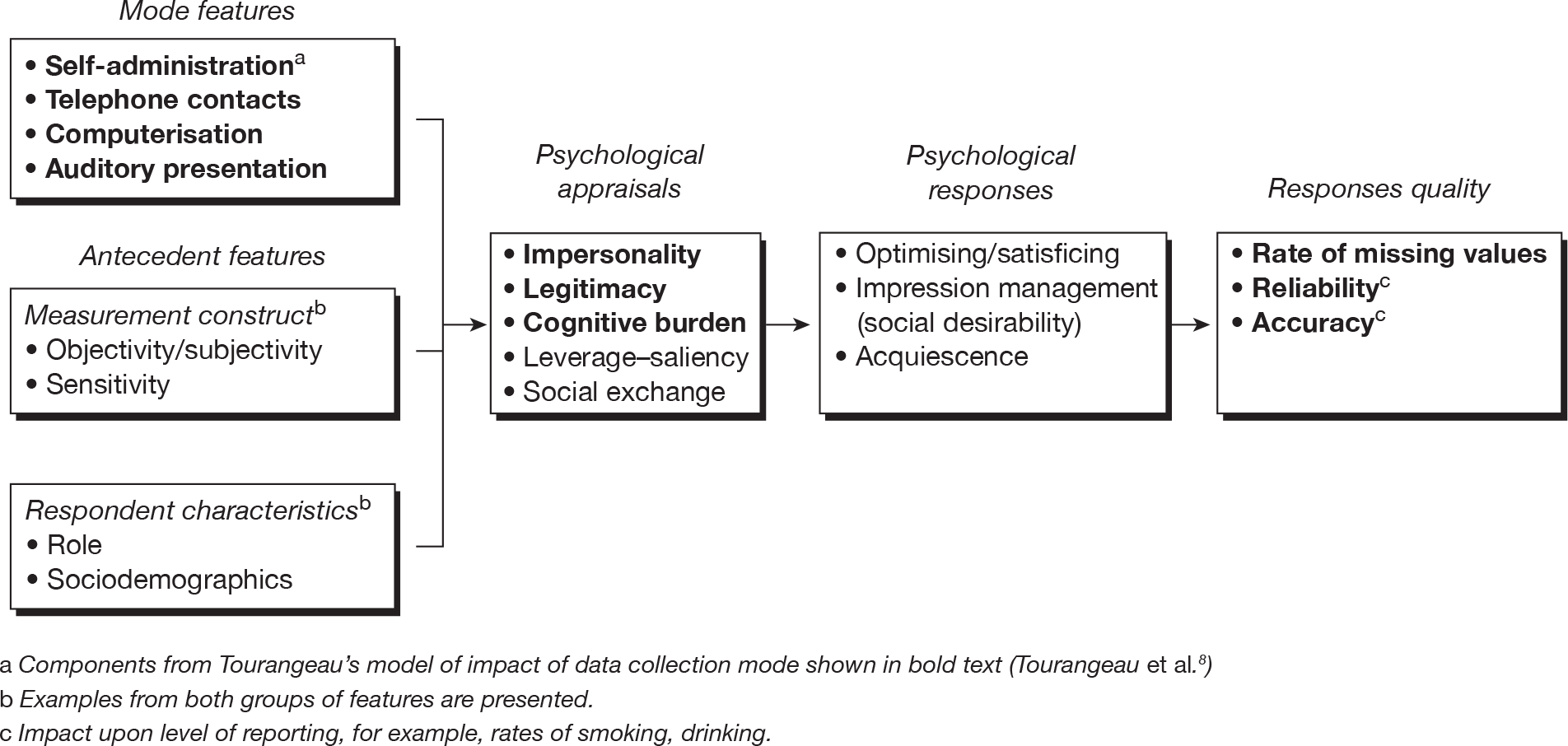

The relationships among these mode features, mediating variables and three response quality indicators (rate of missing values, reliability and accuracy) are shown in Figure 1 and have been previously described by Tourangeau et al. 8 In this chapter, we further distinguish between psychological appraisals and psychological responses. Psychological appraisals entail the initial processing of salient features by individual respondents and incorporate the mediators described by Tourangeau et al. Two additional appraisal processes are included (leverage–saliency and social exchange) and are described below. Initial appraisal then moves onto psychological response processes. In this amended model, these processes include optimising/satisficing, impression management and acquiescence. 29 Each of these processes is described below and together they represent differing theoretical explanations for an individual’s response. The extent to which they are distinct or related processes is also examined.

FIGURE 1. Mode features and other antecedent features influencing response quality. (a) Components from Tourangeau’s model of impact of data collection mode shown in bold text (Tourangeau et al. 8). (b) Examples from both groups of features are presented. (c) Impact upon level of reporting, for example, rates of smoking, drinking.

Other features may also modify response and are added to the chapter framework. They include features of the ‘respondent’ (the information provider) and ‘construct’ (what is being measured). These features are not directly related to the method of data collection. Some of these features are implied by the mediators described by Tourangeau et al. 8 (e.g. the sensitivity of the construct is implicit to the importance of ‘impersonality’). Nevertheless, we consider it important to separate out these features in this framework. Examples of both sets of features are provided, but are intended to be indicative rather than exhaustive listings. Finally, although there may be no unique feature to distinguish between data collection in health and other research contexts, we have used, where we can, examples of particular relevance to health.

How are data collection stimuli appraised by respondents?

Impersonality

The need for approval may restrict disclosure of certain information. Static or dynamic cues (derived from an interviewer’s physical appearance or behaviour) provide a social context that may affect interaction. 30 Self-administration provides privacy during data collection. Thus, Jones and Forrest31 found greater rates of reported abortion among women using self-administration methods than in personal interview. People may experience a greater degree of privacy when interacting with a computer and feel that computer-administered assessments are more anonymous. 32

The greater expected privacy for methods such as audio computer-assisted self-interview (ACASI) has been associated with increased reporting of sensitive and stigmatising behaviours. 33 It is therefore possible that humanising a computerised data collection interface (e.g. the use of visual images of researchers within computerised forms) could increase misreporting. 34 For example, Sproull et al. 35 found higher social desirability scores among respondents to a human-like computer interface compared with a text-based interface. However, others have found little support for this effect in social surveys. 34 Certain data collection methods may be introduced specifically to address privacy concerns – for example, IVR and telephone ACASI. However, there is also evidence that computers may reduce feelings of privacy. 36 The need for privacy will vary with the sensitivity of the survey topic. Although Smith37 found the impact of the presence of others in response to the US General Social Survey to be mostly negligible, some significant effects were found. For example, respondents rated their health less positively when reporting in the presence of others than when responding alone.

Legitimacy

Some methods restrict opportunities for establishing researcher credentials, for example when there is no interviewer physically present. A respondent’s perception of survey legitimacy could also be enhanced, albeit unintentionally, by the use of computers. Although this may be only a transient phenomenon, as computers become more familiar as data collection tools, other technological advances may produce similar effects (e.g. PDAs).

Cognitive burden

Burden may be influenced by self-administration, level of computerisation and the channel of presentation. Survey design that broadly accommodates the natural processes of responding to questions across these features is likely to be less prone to error.

Leverage–saliency theory

This general model of survey participation was proposed by Groves et al. 5 and evaluates the balance of various attributes contributing to a decision to participate in a survey. Each attribute (e.g. a financial incentive) varies in importance (leverage) and momentary salience to an individual. Both leverage and salience may vary with the method of data collection and interact with other attributes of the survey (e.g. item sensitivity). Thus, face-to-face interviewers may be able to convey greater salience to responders through tailoring their initial encounter. This common thread of the presence of an interviewer may enhance the perceived importance of the survey to a respondent, which, first, may increase their likelihood of participating (response rate) and, second, enhance perceived legitimacy (response quality). The former effect – ‘participation decisions alone’ – is not examined further in this review. It is possible that the latter effect of mode feature on response quality may be particularly important in clinical studies if data are being collected by face-to-face interview with a research nurse, for example, rather than by a postal questionnaire.
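The interplay of leverage and salience described above can be sketched as a simple weighted sum. The additive form, the numeric scales, the attribute values and the names `Attribute` and `participation_score` are all assumptions made for illustration; they are not taken from Groves et al. and should not be read as their formal specification.

```python
from dataclasses import dataclass


@dataclass
class Attribute:
    name: str
    leverage: float  # assumed signed importance to this person (+ encourages, - discourages)
    salience: float  # assumed 0..1 prominence of the attribute in the survey request


def participation_score(attributes):
    """Illustrative net pull towards participating: sum of leverage x salience."""
    return sum(a.leverage * a.salience for a in attributes)


# Hypothetical request delivered by post: sponsor credibility is hard to convey.
postal = [
    Attribute("financial incentive", 2.0, 0.5),
    Attribute("sensitive topic", -1.5, 0.8),
    Attribute("sponsor credibility", 1.0, 0.3),
]

# Same person and survey, but an interviewer tailors the introduction and
# makes sponsor credibility more salient (assumed values).
face_to_face = [
    Attribute("financial incentive", 2.0, 0.5),
    Attribute("sensitive topic", -1.5, 0.8),
    Attribute("sponsor credibility", 1.0, 0.9),
]

postal_score = participation_score(postal)
face_to_face_score = participation_score(face_to_face)
```

Under these assumed values only the salience of one attribute changes between the two requests, yet the overall score rises, which mirrors the point that the same attribute can carry different weight depending on the method of data collection.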

Social exchange theory

This theory views the probability of an action being completed as dependent upon an individual’s perception of the rewards gained and the costs incurred in complying, and his or her trust in the researcher. Dillman38 applied the theory to explaining response to survey requests – mostly in terms of response rate, rather than quality. However, he noted how switching between different modes within a single survey may allow greater opportunities for communicating greater rewards, lowering costs and increasing trust. This focus upon rewards may become increasingly important as response rates in general become more difficult to maintain. Furthermore, the use of a sequential mixed-mode design for non-respondent follow-up within a survey may enhance perceptions of the importance of the research itself by virtue of the researcher’s continued effort.

Unlike the first three appraisal processes described above, both leverage–saliency and social exchange address broader participation decisions. Features of different data collection modes may affect such decision-making, for example through perceived legitimacy. Other features in the framework considered to modify response may also influence participation decisions according to these theories (e.g. the sensitivity of the construct being measured).

Explaining mode feature effects: psychological responses following appraisal

Initial appraisal of survey stimulus will result in a response process, which further mediates response quality. Several explanatory psychological theories have been proposed. We focus upon three general theories of response formulation (optimising/satisficing, social desirability and acquiescence).

‘Taking the easy way out’ – optimising and satisficing

Krosnick29,39 described ‘optimising’ and ‘satisficing’ as two ends of a continuum of thoroughness of the response process. Full engagement in survey response represents the ideal response strategy (optimising), in contrast to incomplete engagement (satisficing). The theory acknowledges the cognitive complexity of survey responding. A respondent may proceed through each cognitive step less diligently when providing a survey response or may omit information retrieval and judgement completely (examples of weak and strong satisficing, respectively). In either situation, respondents may use a variety of decision heuristics when responding. Three factors are considered to influence the likelihood of satisficing: respondent ability, respondent motivation and task difficulty. 29,40 Krosnick39 defines respondent ability (or cognitive sophistication) as the ability to retrieve information from memory and integrate it into verbally expressed judgements. Optimising occurs when respondents have sufficient cognitive sophistication to process the request, when they are sufficiently motivated and when the task requirements are minimal. 42
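The direction of influence of the three factors can be summarised schematically. The ratio form below is an illustrative assumption intended only to show that satisficing becomes more likely as task difficulty rises and less likely as ability or motivation rises; it is not a fitted or validated model.

$$
\Pr(\text{satisficing}) \;\propto\; \frac{\text{task difficulty}}{\text{respondent ability} \times \text{respondent motivation}}
$$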

Mode feature effects may influence optimising through differences in non-verbal communication, interview pace (speed) and multitasking. First, the enthusiastic non-verbal behaviour of an interviewer may stimulate and maintain respondent motivation. Experienced interviewers react to non-verbal cues (e.g. expressions relating to lack of interest) and respond appropriately. Such advantages are lost in a telephone interview with interviewers relying on changes in verbal tones to judge respondent engagement. Although the role of an interviewer to enhance the legitimacy of the survey request was highlighted in Tourangeau et al.’s8 framework, this additional motivation and support function was not clarified. Second, interview pace may differ between telephone and face-to-face contact, in part because silent pauses are less comfortable on the telephone. A faster pace by the interviewer may increase the task difficulty (cognitive burden) and encourage respondents to take less effort when formulating their response. Pace can vary between self- and interviewer-administered methods. A postal questionnaire may be completed at respondents’ own pace, allowing them greater understanding of survey questions compared with interviewer-driven methods. Tourangeau et al. 8 omitted pace as a mediating variable from their model of mode effects because they considered that insufficient evidence had accrued to support its role. Interview pace has been suggested as an explanation for observed results, but the effects of pace have not necessarily been tested independently from other mode features (e.g. see Kelly et al. 43). Nevertheless, it is discussed here because of its hypothesised effect. 29 Finally, distraction due to respondent multitasking may be more likely in telephone interviews than in face-to-face interviews (e.g. telephone respondents continuing to interact with family members or conduct household tasks while on the telephone). Such distraction increases task difficulty and thus may promote satisficing. 29

Optimising/satisficing has been used to explain a variety of survey phenomena, for example response order effects (where changes in response distributions result from changes in the presentational order of response options). 44 Visual presentation of survey questions with categorical response options may allow greater time for processing initial options leading to primacy effects in those inclined to satisfice. Weak satisficing may also result from the termination of evaluative processing (of a list of response options) when a reasonable response option has been encountered. This may occur for response to items with adjectival response scales and also for ranking tasks. 29 In contrast, aural presentation of items may cause respondents to devote more effort to processing later response options (which remain in short-term memory after an interviewer pauses), leading to recency effects in satisficing respondents. 41 Telephone interviews can increase satisficing (and social desirability response bias) compared with face-to-face interviews. 42 An example of a theoretically driven experimental study that has applied this parsimonious model to studying mode feature effects is provided by Jäckle et al. 45 In the setting of an interviewer-delivered social survey, they evaluated the impact of question stimulus (with or without show cards) and the physical presence or absence of interviewer (face to face or telephone). In this instance, detected mode feature effects were attributable not to satisficing, but to social desirability bias instead.

Social desirability

The tendency for individuals to present themselves in a socially desirable manner in the face of sensitive questions has long been inferred from discrepancies between behavioural self-report and documentary evidence. Response effects due to self-presentation are more likely when respondents’ behaviour or attitudes differ from their perception of what is socially desirable. 46 This may result in over-reporting of some behaviours and under-reporting of others. Behavioural topics considered to induce over-reporting include being a good citizen and being well informed and cultured. 47 Under-reporting may occur with certain illnesses (e.g. cancer and mental ill-health), illegal and non-normative behaviours and financial status. An important distinction has been made between intentional impression management (a conscious attempt to deceive) and unintentional self-deception (where the respondent is unaware of his or her behaviour). 48 The former has been found to vary according to whether responses were public or anonymous, whereas the latter was invariant across conditions.

Most existing data syntheses of mode effects have related to social desirability bias (Table 1). Sudman and Bradburn46 indicated the importance of the method of administration for socially desirable responding. They found a large difference between telephone- or self-administered surveys and face-to-face interviews. Differences in social desirability between modes have been the subject of subsequent meta-analyses by de Leeuw,49 Richman et al. 50 and Dwight and Feigelson. 51 De Leeuw49 analysed 52 studies, conducted between 1947 and 1990, comparing telephone interviews, face-to-face interviews and postal questionnaires. There was no overall difference in socially desirable responding between face-to-face and telephone surveys among 14 comparisons. There was, however, more bias in telephone interviews in the nine studies published before 1980, but no difference in the later studies. There was less socially desirable responding in postal surveys than in both face-to-face surveys (13 comparisons, mean r = 0.09) and telephone surveys (five comparisons, mean r = 0.06). The presence of an interviewer (telephone or face to face), therefore, appears to determine socially desirable responding. The review included both subjective and objective outcomes, and health issues were the most prominent topic covered.
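Table 1 notes that the square of the correlation indicates the proportion of variance explained by mode. As a minimal sketch of that arithmetic, the mean correlations reported for de Leeuw's review can be squared directly. The function name and dictionary labels are illustrative only and do not come from the review.

```python
# Mean correlations reported for de Leeuw's review (text above and Table 1).
mean_r = {
    "postal vs face to face": 0.09,
    "postal vs telephone": 0.06,
    "telephone vs face to face": -0.01,
}


def variance_explained(r: float) -> float:
    """Proportion of variance attributable to mode, given a correlation r."""
    return r ** 2


for comparison, r in mean_r.items():
    share = variance_explained(r)
    print(f"{comparison}: r = {r:+.2f}, r squared = {share:.4f}")
```

On this reading, even the largest mean correlation (0.09) corresponds to less than 1% of variance being explained by mode, which is consistent with the small overall effects described in this chapter.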

| Review details | Modes compared | No. of comparisons | Primary result | Evidence of effect moderators |
|---|---|---|---|---|
| Sudman and Bradburn. Years: not reporteda. Effect estimate: relative RE. Studies: n = 305a | Face to face, self-administration | | | |
| | 1. Strong possibility of SD answer | | RE: face to face = 0.19, self-administration = 0.32 | |
| | 2. Some possibility of SD answer | | RE: face to face = 0.11, self-administration = 0.22 | |
| | 3. Little/no possibility of SD answer | | RE: face to face = 0.15, self-administration = 0.19 | |
| Commentary: The effect measure for attitudinal variables compares any one mode with the weighted mean of all responses (not a direct mode vs mode comparison). Differences in size of RE indicate that one mode has more/less bias than another, but not how much. Individual sample size not accounted for in analysis and may have created spurious results | | | | |
| De Leeuw. Years: 1947–1990. Effect estimate: mean weighted product moment correlation. Studies: n = 52a | 1. Telephone vs face to face | n = 14 | No overall difference (mean = –0.01) | Year of publication: ‘< 1980’ (mean = –0.03; less bias by face to face), ‘after 1980’ (mean = 0.00) |
| | 2. Mail q vs face to face | n = 13 | Less bias by mail (mean = +0.09) | |
| | 3. Mail q vs telephone | n = 5 | Less bias by mail (mean = +0.06) | |
| Commentary: The square of the correlation indicates proportion of variance explained by mode. The directional coefficient indicates which mode is best (less biased). ‘Social desirability’ assessed by authors of original papers, not review paper | | | | |
| Richman et al. Years: 1967–1997. Effect estimate: ES. Studies: n = 61 | 1. Computer vs PAPQ (studies – BS: n = 30; WS: n = 15) | n = 581 | No overall difference (ES = 0.05) | |
| | a. Direct measure of bias | | Less bias by computer (ES = –0.39) | Year of publication: ‘early: 1975’ (ES = –0.74); ‘recent: 1996’ (ES = –0.08). Alone: ‘alone’ (ES = –0.82); ‘not alone’ (ES = –0.25). Backtracking: available (ES = –0.65); not available (ES = –0.24) |
| No difference in effect between IM and SDE bias | | | | |
| | b. Inferred measure of bias | | Less bias by PAPQ (ES = 0.46) | Anonymity: ‘anonymous’ (ES = 0.25); ‘identified’ (ES = 0.62). Alone: ‘alone’ (0.12); ‘not alone’ (0.65). Backtracking: available (ES = 0.16); not available (ES = 0.87) |
| | 2. Computer vs face to face (studies – BS: n = 11; WS: n = 17) | n = 92 | Less bias by computer (ES = –0.19) | Measure: personality (ES = 0.73); other (ES = –0.51). Year of publication: ‘early: 1975’ (ES = 0.79); ‘recent: 1996’ (ES = –1.03) |
| Dwight and Feigelson. Years: 1969–1997. Effect estimate: ES. Studies: n = 30 | 1. Computer vs paper and pencil or face to face (studies – BS: n = 33; WS: n = 30) | IM: n = 45; SDE: n = 32 | Less IM bias by computer (ES = –0.08). No difference in SDE bias | Overall ESs for IM bias reduce over time (r = 0.44) |
| | 2. Computer vs paper and pencil | IM: n = 39; SDE: n = 6 | Less IM bias by computer (ES = –0.08). No difference in SDE bias | |
| | 3. Computer vs face to face | IM: n = 25; SDE: n = 7 | No difference in IM bias. No difference in SDE bias | |

The meta-analysis of Richman et al. 50 compared computer-administered questionnaires, paper-and-pencil questionnaires and face-to-face interviews in 61 studies. Controlling for moderating factors, there was less social desirability bias in computer administration than in paper-and-pencil administration [effect size (ES) for difference of 0.39]. This advantage over paper-and-pencil methods was greater in studies conducted before 1975 (ES = 0.74), when responses were provided when alone (ES = 0.82) and when backtracking (i.e. ability to move back to earlier section of questionnaire) was available (ES = 0.65). However, when social desirability was inferred from other measures (rather than measured directly) there was more bias using computer administration controlling for moderators (ES = 0.46). Compared with face-to-face interviews, computer administration was associated with less bias overall (ES = 0.19). However, the opposite was true when the construct assessed was personality (ES = 0.73) and in more recently published studies (ES = 0.79).
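The text reports effect sizes without defining how they are calculated. Assuming, for illustration only, that ES is a standardised mean difference (Cohen's d with a pooled standard deviation), the sketch below shows how such a value could be computed for two modes. The scores are hypothetical and are not data from any reviewed study. The sign convention follows Table 1, in which a negative ES indicates less bias with computer administration.

```python
from math import sqrt
from statistics import mean, stdev


def standardised_mean_difference(group_a, group_b):
    """Cohen's d with a pooled SD: (mean_a - mean_b) / pooled_sd."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical social desirability scale scores (higher = more distortion).
computer_scores = [10, 12, 11, 9, 13]
paper_scores = [12, 14, 13, 11, 15]

es = standardised_mean_difference(computer_scores, paper_scores)
print(f"ES (computer minus paper and pencil) = {es:.2f}")
```

A negative value here means lower mean distortion scores in the computer group than in the paper-and-pencil group.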

Dwight and Feigelson51 compared impression management/self-deceptive enhancement in computer-administered measures and either paper-and-pencil or face-to-face measures. Less impression management bias was found for computer administration than for non-computer formats, but the difference was small (ES = –0.08). Individual study ESs reduced significantly over time, indicating a diminishing impact of computerisation. Dwight and Feigelson51 pointed to the recent positive ESs, which they felt was consistent with a ‘Big Brother syndrome’ – respondents fear monitoring and controlling by computers. 52 There was no observed difference between data collection method on scores of self-deceptive enhancement.

It is worth commenting upon the methodological quality of these reviews. 53 None provided an explicit search strategy, although all, apart from Sudman and Bradburn,46 described keywords. Dwight and Feigelson’s51 search was based upon an initial citation search, whereas only Richman et al. ’s50 review provided explicit eligibility criteria for included studies. Sudman and Bradburn46 developed a comprehensive coding scheme that was later extended in de Leeuw’s review. 49 However, coding performance (inter-rater reliability) was reported only by de Leeuw49 and by Richman et al. 50 Difficulties in coding variables with their frameworks were noted by Sudman and Bradburn46 and by Richman et al. ,50 but are probably a ubiquitous problem. The intended coverage of the reviews varied where stated, but is probably generally reflected in the total number of included studies. The Richman et al. 50 review is notable for its attempt to test explicit a priori hypotheses, its operational definition of ‘sensitivity’ and its focus upon features rather than overarching modes. These reviews provide support for the importance of self-administration and consequently impersonality. Richman et al. 50 concluded that there was no overall difference between computer and paper-and-pencil scales. This is consistent with Tourangeau et al. ’s8 model, which directly links computerisation to legitimacy and cognitive burden but not to impersonality. From the first two reviews it is clear that other factors may significantly modify the relationship between mode and social desirability bias. For example, Whitener and Klein54 found a significant interaction between social environment (individual vs group) and mode of administration (computer:unrestricted scanning vs computer:restricted scanning vs paper-and-pencil).

Acquiescence

Asking respondents to agree or disagree with attitudinal statements may be associated with acquiescence – respondents agreeing with items regardless of their content. 55 Acquiescence may result from respondents taking shortcuts in the response process and paying only superficial attention to interview cues. 18 Knowles and Condon56 categorise meta-theoretical approaches to acquiescence as addressing either motivational or cognitive aspects of the response process. 3 Krosnick39 suggested that acquiescence may be explained by the notion of satisficing due to either cognitive or motivational factors. Thus, the role of mode features in varying impersonality and cognitive burden as described above would seem equally applicable here.

There is mixed evidence for a mode feature effect for acquiescence. De Leeuw49 reported no difference in acquiescence between postal, face-to-face and telephone interviews. However, Jordan et al. 57 found greater acquiescence bias in telephone interviews than in face-to-face interviews. Holbrook et al. 42 also found greater acquiescence among telephone respondents than among face-to-face respondents in two separate surveys.

What are the consequences of mode feature effects for response quality?

Several mode feature effects on response quality are listed in Figure 1 and include the amount of missing data. 9 Computerisation and using an interviewer will decrease the amount of missing data due to unintentional skipping. Intentional skipping may also occur and be affected by both the impersonality afforded the respondent and the legitimacy of the survey approach. The model of Tourangeau et al. 8 describes how the reliability of self-reported data may be affected by the cognitive burden placed upon the respondent. De Leeuw49 provides a good illustration of how the internal consistency (psychometric reliability) of summary scales may be varied by mode features through (1) differences in interview pace and (2) the opportunity for respondents to relate their responses to scale items to each other. The reliability of both multiple- and single-item measures across surveys (and across waves of data collection) may also be affected by any mode feature effects resulting from the psychological appraisal and response processes described above.

Tourangeau et al. 8 highlight how accuracy (validity) of the data may be affected by impersonality and legitimacy. Both unreliable and inaccurate reporting will be represented by variations in the level of an attribute being reported. For example, a consequence of socially desirable responding will be under- or over-reporting of attitudes and behaviour. This may vary depending upon the degree of impersonality and perceived legitimacy.

Additional antecedent features

Two further sets of variables are considered in the framework presented in Figure 1: ‘measurement construct’ and ‘respondent characteristics’. Both represent antecedent features that may further interact with the response process described. For the purposes of this chapter they will be described particularly in relation to health research.

Measurement construct

Objective/subjective constructs

Constructs being measured will vary according to whether they are subjective or objectively verifiable. HRQoL and health status are increasingly assessed using standardised self-report measures [increasingly referred to as patient-reported outcome measures (PROMs) in the health domain]. Although the construct being assessed by such measures may in some cases be externally verified (e.g. observation of physical function), for other constructs (e.g. pain) this may not be possible. Furthermore, the subjective perspective of the individual may be an intrinsic component of the construct being measured. 58,59 Cote and Buckley60 reviewed 64 construct validation studies from a range of disciplines (marketing, psychology/sociology, other business, education) and found that 40% of observed variance in attitudes (subjective variable) was due to method (i.e. the influence of measurement instrument) compared with 30% being due to the trait itself. For more objective constructs, variance due to method was lower indicating the particular challenge for assessing subjective constructs.

Sensitivity

Certain clinical topics are more likely to induce social desirability response bias, potentially accentuating mode feature effects. Such topics include sensitive clinical conditions (e.g. human immunodeficiency virus status) and health-related behaviours (e.g. smoking). An illustrative example is provided by Ghanem et al. 61 who found more frequent self-reports of sensitive sexual behaviours (e.g. number of sexual partners in preceding month) using ACASI than with face-to-face interview among attendees of a public sexually transmitted diseases clinic.

Respondent characteristics

Respondent role

In much of the research contributing to the meta-analyses of mode effects on social desirability, the outcome of the assessment was not personally important for study subjects (e.g. participants being undergraduate students). 50 Further methodological research in applied rather than laboratory settings will help determine whether or not mode feature effects are generalisable to wider populations of respondents. It is possible that the motivations of patients (e.g. perceived personal gain and perceived benefits) will reflect their clinical circumstances, as well as other personality characteristics. 62–64 It is therefore worth investigating whether or not self-perceived clinical need, for example, may be a more potent driver of biased responding than social desirability, and whether or not this modifies mode feature effects.

In a review of satisfaction with health care, the location of data collection was found to moderate the level of satisfaction reported, with on-site surveys generating less critical responses. 19 Crow et al. 19 noted how the likelihood of providing socially desirable responses was commonly linked by authors to the degree of impersonality afforded when collecting data either on- or off-site.

Another role consideration involves the relationship between respondent and researcher. The relationship between patient and health-care professional may be more influential than that between social survey respondent and researcher. A survey request may be viewed as particularly legitimate in the former case and less so in the latter. 63 Response bias due to satisficing may be less of a problem in such clinical populations than in non-clinical populations. Systematic evaluation of the consequence of respondent role in modifying mode feature effects warrants further research.

Respondent sociodemographics

There is some indication of differential mode feature effects across demographic characteristics. For example, Hewitt65 reports variation in sexual activity reporting between modes [audio-computer-assisted self-administered interview (ACASI) and personal interview] by age, ethnicity, educational attainment and income. The epidemiology of different clinical conditions will be reflected by patient populations that have certain characteristics, for example being older. This may have consequences for cognitive burden or perceptions of legitimacy in particular health studies.

Particular issues in health research

In considering modes and mode feature effects, we will focus on three issues that may be of particular relevance to those collecting data in a health context: antecedent features, constraints in choice of mode and the use to which the data are being put.

Particular antecedent features

Certain antecedent conditions and aspects of the construct being measured may be particularly relevant in health-related studies. Consider the example of QoL assessment in clinical trials of palliative care patients from the perspective of response optimising. Motivation to respond may be high, but may be compromised by an advanced state of illness. Using a skilled interviewer may increase the likelihood of optimising over an approach offering no such opportunity to motivate and assist the patient. Physical ability to respond (e.g. verbally or via a keyboard) may be substantially impaired. This may affect response completeness, but if the overall response burden (including cognitive burden) is increased it may also lead to satisficing. In practice, choice of data collection method will be driven as much by ethical considerations about what is acceptable for vulnerable respondents as by concerns about mode feature effects.

Are there features of self-reported data collection in health that are particularly different from other settings of relevance to mode feature effects?

Surveys will be applied in health research in a wide variety of ways, and some will be indistinguishable in method from some social surveys (e.g. epidemiological sample surveys). Some contexts for data collection in health research may be very different from elsewhere. Data collection in RCTs of therapeutic interventions may often include PROMs to assess differences in outcome. How antecedent features in the trial – in particular those associated with respondent role – may influence psychological appraisal and response is hypothesised in Table 2. These antecedent characteristics may potentially either promote or reduce the adverse impact of mode feature effects. The extent to which these effects may be present will need further research, and, at least, would require consideration in trial design.

| Antecedent features in trial | Appraisal and response: some research hypotheses |
|---|---|
| Respondent role: Participants approached for participation by their professional carer | Legitimacy: An established patient–carer relationship with high levels of regard for the researcher may enhance legitimacy of the survey request sufficiently to modify mode feature effects and therefore reduce satisficing |
| Respondent role: Participants are consented through a formally documented process | Legitimacy: The formality and detail of the consenting process may enhance the legitimacy of the survey request sufficiently to modify mode feature effects and therefore reduce satisficing |
| Respondent role: Participants provide self-reported data at the site of delivery for their health care | Impersonality: On-site data collection may increase the need for confidential and anonymous reporting sufficiently to promote adverse effects of mode feature effects and introduce social desirability bias |
| Respondent role/sensitivity: Participants are patients with an ongoing clinical need | Cognitive burden: The health status of respondent may increase the overall cognitive burden to modify mode feature effects and increase satisficing. Burden and, therefore, effects may vary with disease and treatment progression |
| | Impersonality: The nature of the condition may increase the need for confidential and anonymous reporting sufficiently to promote adverse mode feature effects and introduce social desirability bias |
| Respondent role: Participants are patients in receipt of therapeutic intervention | Legitimacy/leverage–saliency: The requirement for treatment and the opportunity for novel therapy enhance legitimacy and the perceived importance/salience of the research. This may minimise adverse mode feature effects to reduce satisficing |

Particular constraints on choice of mode

As in social surveys, mode feature effects will be one of several design considerations when collecting health survey data. Surveying patients introduces ethical and logistical considerations, which, in turn, may determine or limit the choice of data collection method. Quality criteria such as appropriateness and acceptability may be important design drivers. 66 For example, Dale and Hagen67 reviewed nine studies comparing PDAs with pen-and-paper methods and found higher levels of compliance and patient preference with PDAs. Electronic forms of data collection may offer advantages in terms of speed of completion, decreasing patient burden and enhancing acceptability. 68,69 The appropriateness of different data collection modes may vary by patient group – for example, with impaired response ability due to sensory loss. 70 Health researchers need to balance a consideration of mode feature effects with other possible mode constraints when making decisions about data collection methods.

Particular uses of data

Evaluating mode feature effects will be particularly important as survey instruments start to play a bigger role in the provision of clinical care, rather than solely in research. PROMs are increasingly being applied and evaluated in routine clinical practice. 71–73 Benefits have been found in improving process of care, but there is less consistent evidence for impact on health status. 71,74–76

Perceived benefits of using such patient-reported outcomes include assessing the impact on patients of health-care interventions, guiding resource allocation and enhancing clinical governance. 72 Computerised data collection may be especially important if results are to inform actual consultations, but would require suitably supported technology to permit this. 77,78 With only mixed evidence of clinical benefit, Guyatt et al. 76 highlight computer-based methods of collecting subjective data in clinical practice as a lower-cost approach.

In this clinical service context, psychological responses such as social desirability bias may vary according to whether patient data are being collected to inform treatment decision-making or clinical audit. Method of data collection may similarly play a role in varying the quality of response provided. However, routinely using subjective outcome measures in clinical practice will require a clear theoretical basis for their use and implementation, and may necessitate additional training and support for health professionals and investment in the technology to support their effective implementation, which is, preferably, cost neutral. 79–82 Overall, though, any biasing effect of mode features may be less salient in situations where information is being used as part of a consultation to guide management, and more salient where data are being collected routinely across organisational boundaries as part of clinical audit or governance.

Managing mode feature effects in health

Managing mode feature effects requires identification of their potential impact. This chapter has focused upon response quality as one source of error in data collection. Two other sources of error influenced by mode are ‘coverage error’ and ‘non-response error’. 83 In the former, bias may be introduced if some members of the target population are effectively excluded by features of the chosen mode of data collection. For example, epidemiological surveys using random digit dialling, which exclude people without landline telephones, may result in biased estimates as households shift to wireless-only telephones. 84 Response rates vary by mode of data collection and different population subgroups vary in the likelihood of responding to different modes. 83 For example, Chittleborough et al. 85 found differences by education, employment status and occupation among those responding to telephone and face-to-face health surveys in Australia.
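The random digit dialling example can be expressed as simple arithmetic. The sketch below is illustrative only: the household shares and prevalence figures are hypothetical and are not taken from the cited studies. It shows how an estimate based solely on the covered group departs from the population value when the excluded group differs on the outcome.

```python
def coverage_bias(share_covered, prev_covered, prev_not_covered):
    """Return (true prevalence, covered-only estimate, bias of the estimate)."""
    true_prev = (
        share_covered * prev_covered + (1 - share_covered) * prev_not_covered
    )
    estimate = prev_covered  # the survey reaches covered households only
    return true_prev, estimate, estimate - true_prev


# Hypothetical figures: 70% of households have a landline; the outcome has a
# prevalence of 20% in landline households and 30% in wireless-only households.
true_prev, estimate, bias = coverage_bias(0.70, 0.20, 0.30)
print(f"true = {true_prev:.3f}, estimate = {estimate:.3f}, bias = {bias:+.3f}")
```

The bias equals the excluded share multiplied by the difference in prevalence between the covered and excluded groups, so it grows as more households become wireless-only or as the two groups diverge.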

Social surveys commonly blend different modes of data collection to reduce cost (e.g. by a graduated approach moving from cheaper to more expensive methods18). Mixing modes can also maximise response rates by, for example, allowing respondents a choice about how they respond.

In the long term it may prove possible to correct statistically for mode feature effects if consistent patterns emerge from meta-analyses of empirical studies. Alternatively, approaches to reducing socially desirable responding have targeted both the question threat and confidentiality. An example of the latter is the randomised response technique, which guarantees privacy. 86,87 Another approach is the use of goal priming (i.e. the manipulation and activation of individuals’ own goals to subsequently motivate their behaviour), where respondents are influenced subconsciously to respond more honestly. 88
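The text does not specify which variant of the randomised response technique is meant. As a minimal sketch, assuming Warner's original design, each respondent privately uses a randomising device that selects the sensitive statement with probability p and its negation otherwise, and answers truthfully without revealing which statement was selected. Prevalence is then recovered from the overall proportion of 'yes' answers. All names and parameter values below are hypothetical.

```python
import random


def warner_estimate(yes_count, n, p):
    """Estimate prevalence under Warner's design.

    P(yes) = p * pi + (1 - p) * (1 - pi), so
    pi = (P(yes) - (1 - p)) / (2p - 1), which requires p != 0.5.
    """
    if p == 0.5:
        raise ValueError("p must differ from 0.5 for the estimator to exist")
    proportion_yes = yes_count / n
    return (proportion_yes - (1 - p)) / (2 * p - 1)


def simulate_yes_count(n, true_pi, p, seed=0):
    """Simulate truthful answers from n respondents under Warner's design."""
    rng = random.Random(seed)
    yes = 0
    for _ in range(n):
        has_trait = rng.random() < true_pi
        sensitive_statement = rng.random() < p  # else the negated statement
        yes += has_trait if sensitive_statement else not has_trait
    return yes


n, true_pi, p = 20000, 0.20, 0.70  # hypothetical design values
yes_count = simulate_yes_count(n, true_pi, p)
print(f"estimated prevalence = {warner_estimate(yes_count, n, p):.3f}")
```

Privacy comes from the interviewer never knowing which statement an individual answered; the cost is a larger sampling variance than direct questioning, which increases as p approaches 0.5.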

Evaluating and reporting mode feature effects

As described above, the evaluation of data collection method within individual studies is usually complicated by the package of features representing any one mode. Groves et al. 18 described two broad approaches to the evaluation of effects due to mode features. The first and more pragmatic strategy involves assessing a package of features between two or more modes. Such a strategy may not provide a clear explanation for resulting response differences, but may satisfy concerns about whether or not one broad modal approach may be replaced by another. The second approach attempts to determine the features underlying differences found between two modes. This theoretically driven strategy may become increasingly important as data collection methods continue to evolve and increase in complexity.

As global descriptions of data collection method can obscure underlying mode features, comparative studies should describe these features more fully. This would enable data synthesis, provide greater transparency of method and aid replication. 50

Summary

This chapter has considered how features of data collection mode may impact upon response quality, and key messages are summarised in Box 1. It has added to a model proposed by Tourangeau et al. 8 by drawing apart psychological appraisal and response processes in mediating the effect of mode features. It has also considered other antecedent features that might influence response quality. Mode feature response effects have been most thoroughly reviewed empirically in relation to social desirability bias. Overall effects have been small, although evidence of significant effect modifiers emphasises the need to evaluate mode features rather than simply overall mode. A consistent finding across the reviews is the important moderating effect of year of publication for comparisons involving both telephone and computers. Therefore, mode feature comparisons are likely to remain important as new technologies emerge for collecting data. Although much of the empirical research underpinning the reviewed model has been generated within other academic domains, the messages are nonetheless generally applicable to clinical and health research.

Choice of data collection mode can introduce measurement error, detrimentally affecting the accuracy and reliability of survey response

Surveys in health service and research possess similar features to surveys in other settings

Features of the clinical setting, the respondent role and the health survey content may emphasise psychological appraisal and psychological responses implicated in mode feature effects

The extent to which these features of health surveys result in consistent mode effects that are different from other survey contexts requires further evaluation

Evaluation of mode effects should identify and report key features of data collection method, not simply categorise by overall mode

Mode feature effects are primarily important when data collected via different modes are combined for analysis or interpretation. Evidence for consistent mode effects may nevertheless permit routine adjustment to help manage such effects

Implications for the MODE ARTS systematic review

The theory review provides the framework to structure the systematic review analysis

In doing so it emphasises mode features, rather than modes

Other antecedent features identified in the review may also be explored in the analysis, which, in themselves, may not be directly associated with mode feature

Mediators are clarified in the theoretical framework, but in practice clear measures of these are unlikely to be available in the papers obtained in the systematic review. Nevertheless, the theoretical framework provides a firm basis upon which to interpret emergent results

MODE ARTS, Mode of Data Elicitation, Acquisition & Response To Surveys.

Future evidence syntheses may confirm or amend the proposed model, but this requires as a precursor greater attention to theoretically driven data collection about mode features. The current theoretical review framework, therefore, provides the basic analytic structure for the analysis and a basis upon which emergent results may be interpreted (see Box 1). In particular, the emphasis upon mode features is a key contributor to this analytic model.

Chapter 3 Review methods

The methods used to evaluate the impact of features of mode of data collection on subjective outcome measures follow those of a systematic review. Owing to the large body of literature relating to survey methodology which is published outside the health research arena, all studies that incorporate a mode comparison will be included, regardless of setting. This leads to a broad search strategy covering a wide range of disciplines. In order to target methodological studies, some innovations in search strategy have been undertaken that separate out the process from the traditional reviews of the effectiveness of interventions.

Identifying the literature

Inclusion/exclusion criteria

To be included in the review, a study had to meet the following criteria:

- There is evidence of a comparison between two modes of data collection of either the same question, or set of questions, referring to the same theoretical construct.
- The construct compared is subjective and cannot be externally validated.
- The analysis of the study contains an explicit reference to a comparison.
- Data collection is quantitative, i.e. uses structured questions and answers.

This can include studies in which mode comparisons were made, even if not the main purpose of the study.

Studies were excluded from the review if they involved:

- a comparison between a quantitative measure and one or more qualitative data collection methods/analyses (e.g. unstructured interviews, focus groups)
- a comparator derived from routine clinical records – unless explicit reference to specific self-reported construct is made within those records
- a comparison between the response of two different judges, i.e. comparing a response from an individual with that made by someone other than the responder, for example a clinician providing a diagnosis.

Subjective measures are defined as those in which the perspective of the individual is an intrinsic component of the construct being measured. Comparisons between two different perspectives (even on the same construct) are therefore excluded.

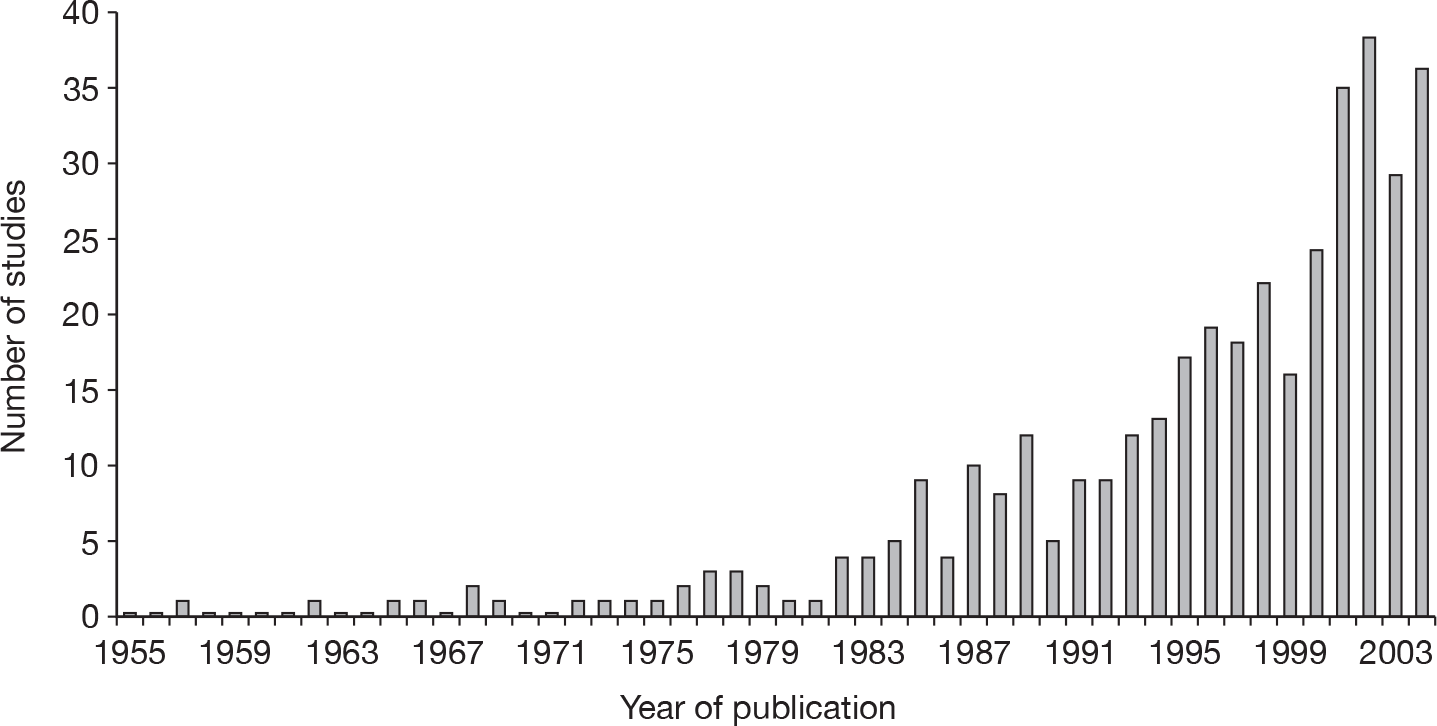

Year of publication

All databases were searched from the earliest point in time until the end of 2004. This was based on the last complete year available to the researchers at the point at which the search was undertaken.

Language and location

No studies were excluded owing to language or country of origin to allow inclusion of as much innovation in design and novel mode application as possible. It is known that the perceived ‘gold standard’ method of data collection will vary, especially in relation to approaching respondents in their homes. 89 In some cultures, a face-to-face interview is perceived as more acceptable than calling on the telephone. 90 In addition, matters of privacy and over-use of mass marketing schemes have changed the availability of telephone numbers and ability to contact. For example, the use of automated marketing technology in the UK has given rise to ‘preference’ services offered by telecommunications companies and the Post Office, whereby registered marketing companies cannot gain access to the recipient.

This chapter will document the development, piloting and optimisation of the search strategy, the three-phase selection process and the methods of synthesis for the data extracted.

Databases

Owing to the broad range of disciplines outside the health sector literature which cover survey methodology, a subject-free approach was required to collate evidence from all research. However, databases were chosen based on subject area to allow as comprehensive a selection as possible. A full list of databases used in the review can be found in Appendix 1 (see Table 21).

MEDLINE was used for the initial development and optimisation of the search strategy. It was decided that grey literature and grey databases would not be searched, as the effort required to retrieve such information usually outweighs the gains. 91 Therefore, only journal articles and conference abstracts cited within journal supplements were included in the review process.

Search strategy

Guides for the development and creation of search strategies used in systematic reviews in defined areas have been well described. 92 However, guides do not exist for searching such a diffuse and multidisciplinary literature base. Therefore, the search strategy for the present review was continually optimised using an iterative process. Initially, an extensive development phase was carried out, followed by the main search and retrieval phase.

Previous literature reviews in the area of survey research2,93 showed that it is possible to systematically identify a body of literature describing the effects of differences in modes of data collection. However, studies that use only a single mode of data collection are not of interest and, therefore, in order to focus the search strategy, a matrix approach was developed. The matrix was intended to facilitate the search for articles that had two or more modes of data collection. Each column and row of the matrix consisted of a collection of terms relating to a single mode (e.g. postal, survey, mail). Only the off-diagonal terms were considered for inclusion (highlighted cells in Table 3). In Boolean terms, one row of the matrix corresponds to: Group 1 AND (Group 2 OR 3 OR 4 OR 5 OR 6 OR 7 OR 8 OR 9 OR 10). For example, this would identify any paper that had terms relating to desktop computer use and any one of face to face, paper and pencil, etc.

| Mode of data collection | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | |
| 2 | | | | | | | | |
| 3 | | | | | | | | |
| 4 | | | | | | | | |
| 5 | | | | | | | | |
| 6 | | | | | | | | |
| 7 | | | | | | | | |
| 8 | | | | | | | | |
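The matrix approach described above can be sketched in a few lines of Python. This is only an illustration of the off-diagonal pairing idea: the group names and term lists below are abbreviated, hypothetical stand-ins (the review's actual terms are listed in its Appendix 1), and the helper names `or_block`, `off_diagonal_queries` and `row_query` are invented for this example.

```python
from itertools import combinations

# Hypothetical, abbreviated term lists for illustration only.
MODE_GROUPS = {
    "technology assisted": ["computer", "PDA"],
    "paper and pencil": ["postal", "mail", "paper"],
    "telephone": ["telephone", "phone"],
}


def or_block(terms):
    """Join the terms of one group into a parenthesised OR clause."""
    return "(" + " OR ".join(terms) + ")"


def off_diagonal_queries(groups):
    """Return one 'A AND B' query per unordered pair of distinct groups.

    Pairing a group with itself (the diagonal) is never generated, so a
    paper must mention terms from two different modes to be retrieved.
    """
    queries = {}
    for a, b in combinations(groups, 2):
        queries[(a, b)] = or_block(groups[a]) + " AND " + or_block(groups[b])
    return queries


def row_query(groups, row):
    """Return one matrix row: the chosen group AND (any other group)."""
    others = [or_block(terms) for name, terms in groups.items() if name != row]
    return or_block(groups[row]) + " AND (" + " OR ".join(others) + ")"


print(row_query(MODE_GROUPS, "telephone"))
```

With n groups, `off_diagonal_queries` yields n(n - 1)/2 pairings, whereas `row_query` mirrors the 'Group 1 AND (Group 2 OR ...)' form given in the text.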

Initially, 10 different types of data collection mode were identified, which were defined as ‘data collection groups’. A list of search terms was generated for each group. From these categorisations, one row and column (representing paper-and-pencil administration) was selected and all abstracts identified (759) were screened and the terms and categorisations tested to see if a more specific search strategy would have identified the same studies. On the basis of this, the data collection groups were revised from 10 to 8 as follows:

- technology assisted (computer and PDA combined)
- internet based
- antonym of technology
- paper-and-pencil administration (combined with mail)
- fax administration
- telephone administration
- in-person administration
- unspecified mode.

It became apparent that there was an ordered use of language in all articles, allowing a grammatical framework to be applied to the search terms within the data collection groups. Search terms relating to different modes of data collection could be described as a nominal phrase, consisting of a compound noun and one or more compound adjectives. New modes of data collection have evolved with the creation of new technologies, and, instead of developing new nouns, existing nouns have been modified by the development of compound nouns, qualified by compound adjectives, for example computer-assisted telephone interview (CATI). Search terms generated in the initial searches were allocated to the different data collection groups by linking the compound adjective to the group with which it was most associated. The final search terms for each data collection group are in Appendix 1.

Medical subject headings (MeSH) were utilised where available. The specific thesaurus terms used in each database and field codes used to implement the matrix section of the search strategy are detailed in Appendix 1 (see Table 21), concerning health and evidence-based medicine, social sciences, and economics and other, respectively. The use of MeSH can seriously influence the noise in the search strategy (the number and type of citations retrieved) owing to the branching hierarchical classification. For example, when locating articles related to methodological issues, the search term ‘method’ is prolific in ‘introduction, method, results and discussion’ (IMRaD)-constructed abstracts, whereas the more specific term ‘methodolog$’, searched in the title, abstract and keywords of the article, yielded more precise results.

The strategy was implemented in MEDLINE from the beginning of 1966 to the end of 2004, and all articles were subsequently screened for relevance. The screening accompanied an iterative process identifying new research-specific terms. The iterative process generated 24 new nominal phrases that were added to the appropriate groups, and one new group was identified pertaining to the use of video. No clear distinction was developed between online and offline computerised methods; therefore, the terms in the internet-based group were merged with the technology-assisted data collection methods. The strategy was then re-implemented to screen for new, previously unidentified articles.

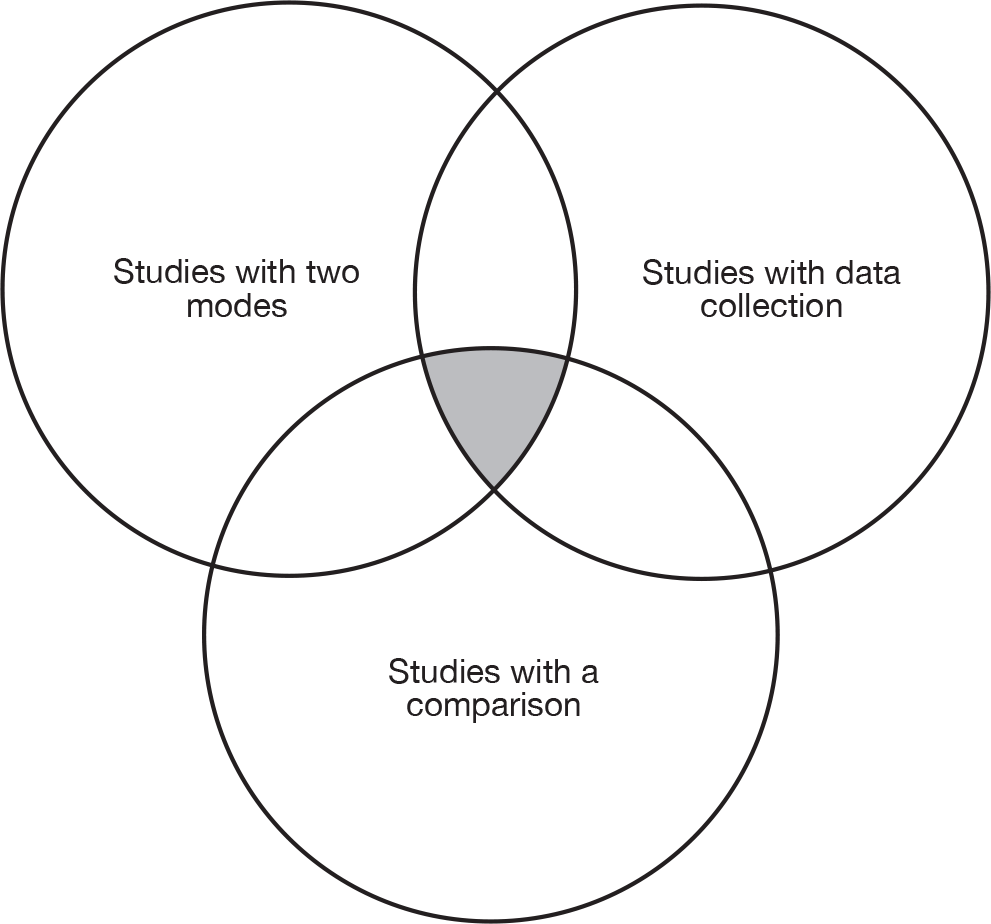

In order to focus the search on studies that were comparisons of modes, rather than just studies that happened to report two modes, the studies identified from the searches above were limited to those that used terms suggestive of a comparison (e.g. comparison, versus, trial, evaluation) and general terms relating to data collection (e.g. administration, survey, assessment). Therefore, only studies that combined all three domains were included for further consideration (Figure 2).

FIGURE 2. Conceptualisation of search strategy.
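The three-domain restriction can be illustrated with a small text filter. This is a sketch under stated assumptions rather than the search as run: the review applied database field codes and truncation operators, whereas the code below uses simple whole-word matching on a title string. The comparison and data collection terms are the examples quoted in the text; the mode term lists and the function names `mentions` and `in_all_three_domains` are hypothetical.

```python
import re

# Mode terms are illustrative only; not the review's actual search terms.
MODE_GROUPS = {
    "telephone": ["telephone"],
    "paper": ["paper", "mail", "postal"],
    "computer": ["computer"],
}
# Example terms quoted in the text for the other two domains.
COMPARISON_TERMS = ["comparison", "versus", "trial", "evaluation"]
COLLECTION_TERMS = ["administration", "survey", "assessment"]


def mentions(text, terms):
    """True if any term occurs in the text as a whole word (case-insensitive)."""
    return any(
        re.search(r"\b" + re.escape(term) + r"\b", text, re.IGNORECASE)
        for term in terms
    )


def in_all_three_domains(text):
    """Keep a record only if it mentions two or more mode groups AND a
    comparison term AND a general data collection term."""
    modes_hit = sum(mentions(text, terms) for terms in MODE_GROUPS.values())
    return (
        modes_hit >= 2
        and mentions(text, COMPARISON_TERMS)
        and mentions(text, COLLECTION_TERMS)
    )


print(in_all_three_domains("A comparison of telephone and mail survey responses"))
```

A record that reports two modes without any comparison language fails the filter, which is the narrowing effect the three-domain design is meant to achieve.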

Following the successful development of the search strategy within MEDLINE, the same strategy was implemented within all the specified databases, allowing for changes in field codes and thesaurus terms as described in Appendix 1.

Citation information and abstracts were downloaded from the selected databases and imported into an EndNote (Thomson Reuters, CA, USA) database. At each stage of the download, the number of articles requested for download and the number of articles actually downloaded were checked for consistency. Duplicate citations were removed using the EndNote Version 9 ‘Find Duplicate’ function. Citations were considered duplicates if either:

- the title field exactly matched another citation, or
- the author, year, journal, volume, issue and page numbers exactly matched.
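The two duplicate rules above can be expressed as a short routine. This is a minimal sketch of the stated rules only; it does not reproduce EndNote's internal behaviour. The field names and the example records are made up for illustration.

```python
# Hypothetical field names for the second rule (all must match exactly).
DETAIL_FIELDS = ("author", "year", "journal", "volume", "issue", "pages")


def find_duplicates(citations):
    """Return the indices of citations that duplicate an earlier record.

    A record is flagged if its title exactly matches an earlier title, or
    if all of its bibliographic detail fields exactly match an earlier record.
    """
    seen_titles = set()
    seen_details = set()
    duplicates = []
    for index, citation in enumerate(citations):
        details = tuple(citation[field] for field in DETAIL_FIELDS)
        if citation["title"] in seen_titles or details in seen_details:
            duplicates.append(index)
        seen_titles.add(citation["title"])
        seen_details.add(details)
    return duplicates


# Made-up records, for illustration only.
base = {"title": "Mode effects in surveys", "author": "Smith J", "year": 1999,
        "journal": "J Surv Res", "volume": "12", "issue": "3", "pages": "10-20"}
same_title = dict(base, year=2000, pages="30-40")
same_details = dict(base, title="Mode effects in surveys.")
distinct = dict(base, title="Interviewer effects", year=2001, pages="1-9")

print(find_duplicates([base, same_title, same_details, distinct]))
```

Exact matching is deliberately strict: the trailing full stop in `same_details` defeats the title rule, so that record is caught only by the second rule.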

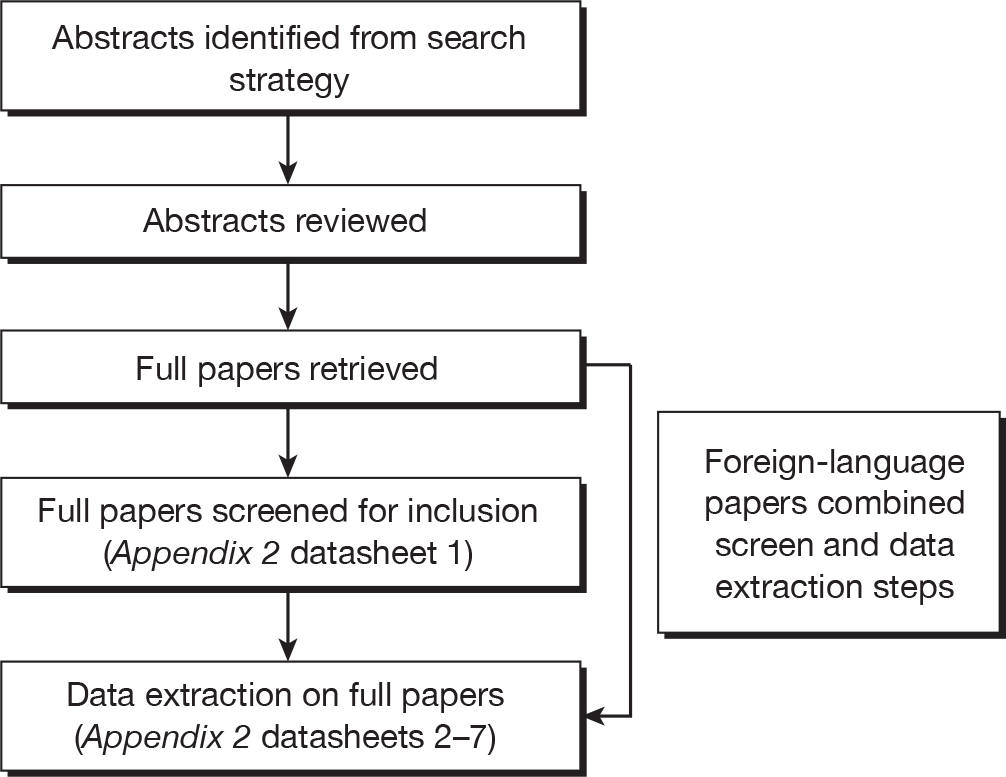

Review process

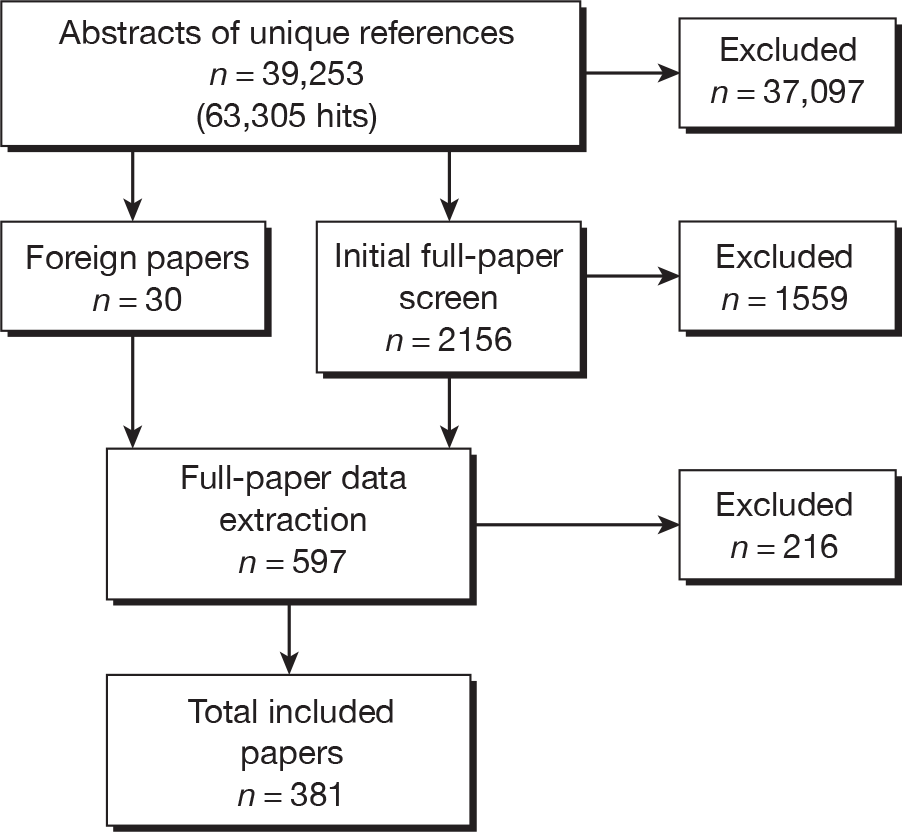

The abstracts (or titles only, for some foreign-language papers with no English abstract) were reviewed against the inclusion/exclusion criteria. No assessment was made at this stage as to the subjectivity of the measures presented. Full papers were retrieved for all selected abstracts and then screened again against more detailed inclusion criteria relating to the measures used. Papers that were still included were reviewed in full and detailed data were extracted (Figure 3). The datasheets used for full-paper screening and data extraction are given in Appendix 2. The screening and data extraction stages were combined for foreign-language papers.

FIGURE 3. Review process.

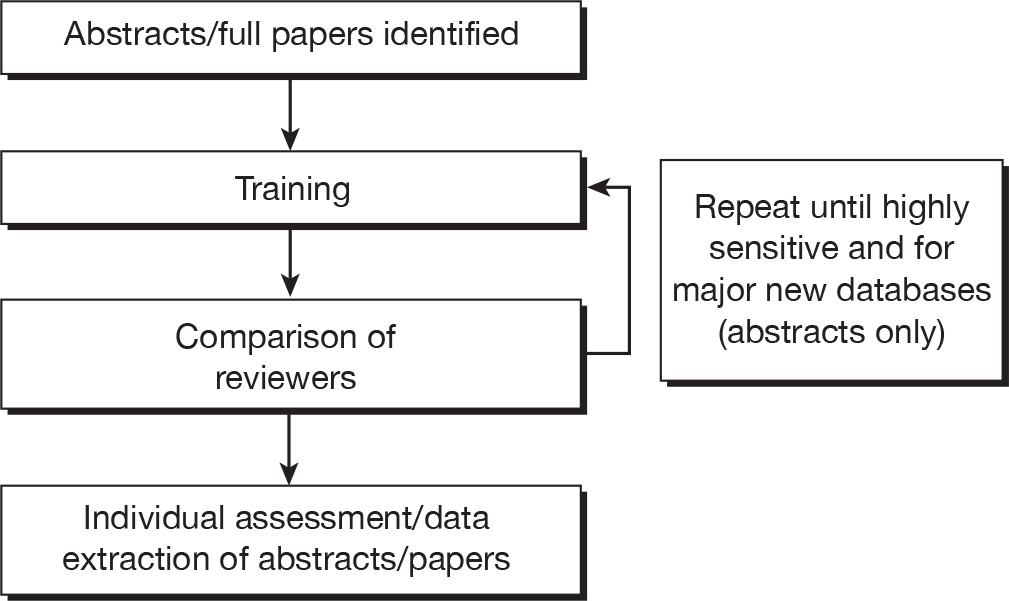

At each stage, abstracts or papers were reviewed by a single reviewer after a period of training (Figure 4). Training for each stage included an assessment of reliability and sensitivity. Training and testing sets of abstracts/papers were used. This was repeated for hits from different databases to allow for reassessment with different types of study and abstract layout.

FIGURE 4. Process of training.

Rigorous training ensured high reliability of the screening process. To quantify this, the efficacy of training was assessed by calculating the area under the curve (AUC) derived from the receiver operating characteristic (ROC) curve. The AUC was calculated against a ‘gold standard’ of exact matches arrived at by consensus. A sensitive process was considered more important than overall agreement: over-inclusion, where in doubt in the early stages, was encouraged in order to avoid missing key studies.
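As an illustration of how a reviewer's decisions might be scored against the consensus gold standard, the sketch below assumes that each decision is a simple include/exclude judgement. Under that assumption the ROC curve has a single operating point and the trapezoidal AUC reduces to the mean of sensitivity and specificity. The report does not state how decisions were coded, so this is an assumption, and the function name `screening_accuracy` and the example data are hypothetical.

```python
def screening_accuracy(reviewer, gold):
    """Sensitivity, specificity and single-point AUC for binary decisions.

    reviewer, gold: equal-length sequences of booleans (True = include).
    Assumes the gold standard contains at least one include and one exclude.
    """
    pairs = list(zip(reviewer, gold))
    true_pos = sum(r and g for r, g in pairs)
    false_neg = sum((not r) and g for r, g in pairs)
    true_neg = sum((not r) and (not g) for r, g in pairs)
    false_pos = sum(r and (not g) for r, g in pairs)

    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    # Area under the ROC curve through (0, 0), the single operating point, (1, 1).
    auc = (sensitivity + specificity) / 2
    return sensitivity, specificity, auc


# Made-up decisions: the reviewer over-includes one abstract but misses none.
gold = [True, True, False, False, False, False]
reviewer = [True, True, True, False, False, False]
print(screening_accuracy(reviewer, gold))
```

In this made-up example the reviewer has perfect sensitivity despite one false inclusion, which is the trade-off the text describes as preferable in the early screening stages.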

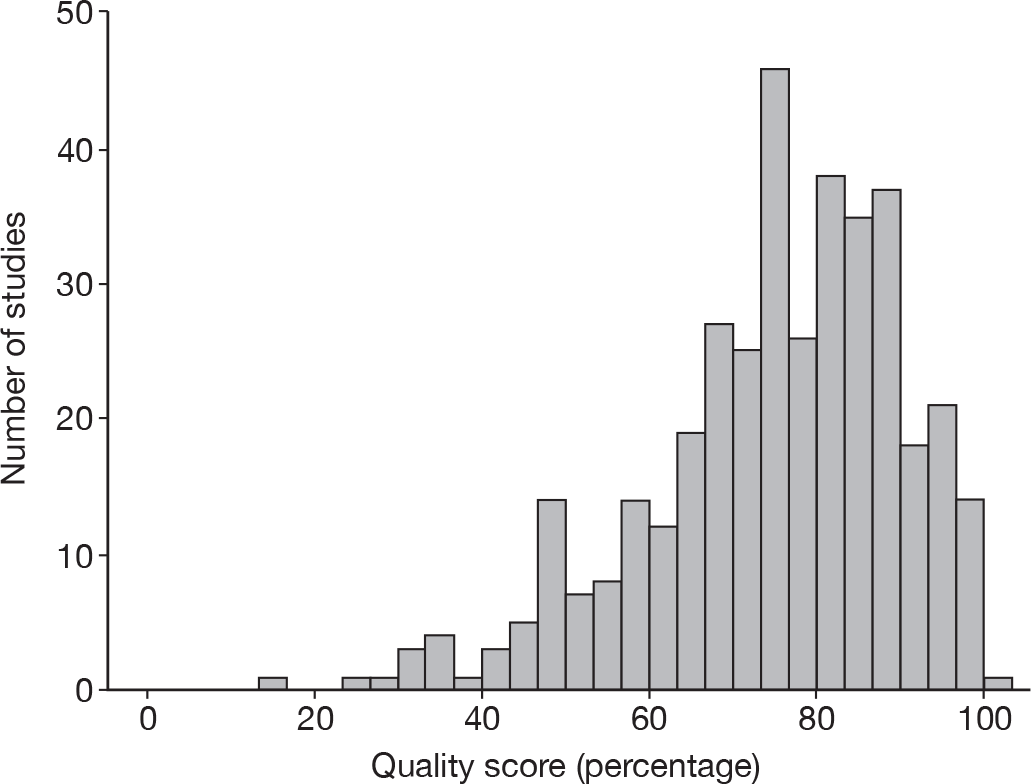

Three reviewers undertook abstract screening (AS, GG and KH). After the triplicate screening of 750 abstracts (three sets of 250) from MEDLINE, the ROCs were calculated, generating AUC scores: AS = 0.865, GG = 0.954, KH = 0.970. Training was repeated for PsycINFO, and 750 triplicate-screened abstracts generated AUC scores: AS = 0.88, GG = 0.92, KH = 0.90. Five reviewers undertook the initial screening of the full papers (AS, GG, KH, MR and CS). Training was carried out with 20 articles, which were reviewed independently. Consensus was achieved through discussion of included and excluded studies. A subsequent set of 20 studies was then reviewed independently, and the sensitivity of all reviewers was 100%. Data extraction and quality assessment were undertaken by three reviewers (GG, NC and RI). Training was carried out on two sets of 20 papers, giving AUC scores of GG = 0.823, NC = 0.802 and RI = 0.790.

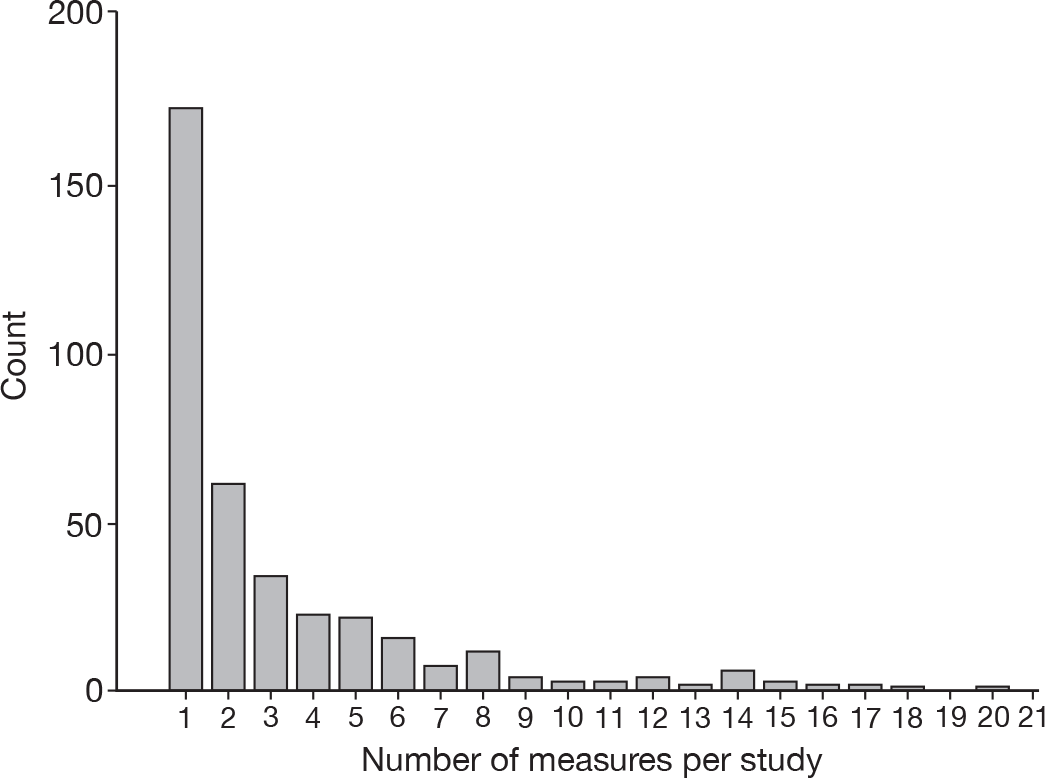

Data extraction

The final extraction stage was carried out using a series of forms (see Appendix 2). These forms were circulated to all members of the study management team for comment and changes were implemented accordingly. As with each stage of the reviewing process, a training phase was completed. The data extraction was comprehensive because of the wide-ranging and diverse nature of the articles selected. Items for data extraction were selected to be as inclusive as possible; the details of each included study were captured under the following headings:

-

population and design (data forms 2 and 3)

-