Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/97/04. The contractual start date was in October 2008. The draft report began editorial review in June 2011 and was accepted for publication in January 2012. The commissioning brief was devised by the NCCRM, who specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

None.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2012. This work was produced by Brazier et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/). This journal may be freely reproduced for the purposes of private research and study and may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction and background

This report is concerned with a range of issues around the development, testing and use of condition-specific preference-based measures (CSPBMs) of health for estimating quality-adjusted life-years (QALYs) for use in economic evaluation of health-care interventions. Although the focus is on CSPBMs, many of the issues raised are relevant to preference-based measures in general, including other types of population-specific measures and generic measures. We begin this chapter by providing the rationale for using CSPBMs for this purpose, and then we outline the key stages of their development and set out the key methodological issues addressed in the report.

Rationale

The last decade has seen the increasing use of economic evaluation around the world to inform the allocation of resources between competing health-care interventions, particularly cost-effectiveness analysis, in which interventions are assessed in terms of their cost per QALY gained. The QALY provides a way of measuring the benefits of health-care interventions in terms of improvements in health-related quality of life (HRQoL) and survival. QALYs are increasingly being calculated using health-state utility values provided by generic preference-based measures of health, such as the EQ-5D,1 SF-6D2,3 or HUI-3. 4 It has been claimed that ‘generic’ preference-based measures are applicable to all interventions and patient groups. This claim has support in many conditions, for which they have been shown to be reliable, valid and responsive. 5 Many reimbursement agencies request that QALYs are estimated using a generic preference-based measure for economic evaluation submissions on pharmaceuticals, and the National Institute for Health and Clinical Excellence (NICE) in England and Wales specifies a preference for the EQ-5D. 6

Clinical studies of pharmaceuticals and other health-care interventions often use condition-specific measures of health, which are typically not preference based, rather than generic preference-based measures. This is partly attributable to concerns about the appropriateness of generic measures in some conditions. Generic preference-based measures have been shown to perform poorly in terms of validity or responsiveness to change in some conditions, such as the EQ-5D in visual impairment in macular degeneration,7 hearing loss,8 leg ulcers9 and schizophrenia. 10,11 Whether or not there are genuine concerns with generic measures, researchers are keen to reduce patient burden and cost, meaning often that only a condition-specific measure is included in key studies. Furthermore, many pharmaceutical trials are designed for obtaining licensing approval from the US Food and Drug Administration (FDA), European Medicines Agency (EMA) and similar licensing authorities around the world that do not require generic or preference-based measures. Indeed, guidelines published by the FDA on the use of patient-reported outcome measures (PROMs) (in support of labelling claims) have increased the pressure to use condition-specific measures that have specifically been developed in the patient groups in which they are going to be used and are typically not preference based. 12 Condition-specific measures are going to continue to be an important potential source of evidence on effectiveness. To limit the evidence used to populate economic models to generic measures would in many cases exclude valuable evidence on the effectiveness of an intervention. However, the use of condition-specific measures in economic evaluation is severely limited because these measures were not designed for this purpose and, unless they are preference based, cannot be used to calculate QALYs. 13 Another challenge is the focus of condition-specific measures, which may cover HRQoL and symptoms, or only the HRQoL related to the symptoms of the condition. QALYs are typically assumed to reflect HRQoL rather than symptoms, although many preference-based measures used to produce QALYs combine the two; for example, the EQ-5D has one symptom dimension (pain), whereas its remaining dimensions (mobility, self-care, usual activities, anxiety/depression) arguably measure HRQoL.

One solution to this problem has been to try to ‘map’ from the condition-specific measure on to one of the generic preference-based measures using a data set containing the non-preference-based condition-specific measure and generic preference-based measures using regression techniques. The mapping algorithm is then applied to the clinical study data containing the non-preference-based condition-specific measure to predict utility values for the generic preference-based measures. A review of 28 mapping studies found that the performance of these mapping functions in terms of model fit and predictions varied considerably, with the root-mean-square error (RMSE) ranging between 0.084 and 0.2. 14 These errors are all large as, for example, an error of 0.2 could mean that an observed value of 0.5 could have a predicted value as low as 0.3 or as high as 0.7 on the 1–0 full health–dead scale. More concerning has been the tendency for some models to overpredict in more severe cases and underpredict in very mild cases. 15,16 Mapping methods are fundamentally limited by the degree of overlap between the classification systems of the two measures. Where there are important dimensions of one instrument not covered by the other, then this may well undermine the model. It has been found, for example, that attempts to map SF-36 dimension scores on to the EQ-5D preference-based index yield small and often non-significant coefficients for the vitality or energy dimension. 15 This is not surprising, as the EQ-5D classification system does not contain this dimension. Mapping does not overcome inadequacies in the classification system of the generic measure and is appropriate only if the measure is appropriate for that condition and patient population.
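The two performance concerns raised above, overall prediction error and bias that differs by severity, can be illustrated with a minimal sketch. All utility values below are hypothetical and the severity cut-offs (below 0.5 and at or above 0.9) are assumptions chosen only for illustration; they are not taken from the reviewed mapping studies.

```python
import math


def rmse(observed, predicted):
    """Root-mean-square error between observed and predicted utilities."""
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mean_error(observed, predicted):
    """Mean of (predicted - observed); a positive value indicates overprediction."""
    errors = [p - o for o, p in zip(observed, predicted)]
    return sum(errors) / len(errors)


# Hypothetical observed utilities and the values predicted by a mapping function.
observed = [0.20, 0.30, 0.50, 0.70, 0.90, 0.95]
predicted = [0.35, 0.40, 0.50, 0.70, 0.85, 0.88]

overall_rmse = rmse(observed, predicted)

# Split by severity using assumed cut-offs to look for systematic bias.
severe = [(o, p) for o, p in zip(observed, predicted) if o < 0.5]
mild = [(o, p) for o, p in zip(observed, predicted) if o >= 0.9]
severe_bias = mean_error(*zip(*severe))
mild_bias = mean_error(*zip(*mild))
```

In this made-up example the mapping overpredicts for the severe states and underpredicts for the mild ones, even though the overall RMSE looks moderate, which is why a summary statistic alone can hide the pattern described above.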

An alternative approach is to construct bespoke vignettes or scenarios to describe different states of health rather than to use standardised measures of health. 17 This approach was widely used in the 1970s and 1980s before the advent of generic preference-based measures, and it continues to be used, such as in submissions to NICE. 18 The vignettes are typically constructed from interviews with clinical ‘experts’ or sometimes patients. They provide an opportunity to be more flexible about the content of the health states and so make them more relevant to a condition and its treatment than a generic measure. The downside is that they have little or no quantitative linkage to clinical trial or other sources of evidence of effectiveness and do not reflect the variability in outcomes commonly found in clinical studies. The construction of these types of vignettes is highly subjective and is prone to manipulation.

For these reasons there has been interest in the development of CSPBMs. 19 CSPBMs can either be developed de novo, i.e. as an entirely new measure,20,21 or developed from existing condition-specific measures. 22,23 Development from existing measures has the advantage that utility scores can be generated for respondents completing the existing measure in existing and future data sets. This means that data collected on the existing measure can be used for a variety of purposes including producing QALY estimates, and respondent burden and cost can be reduced if a generic preference-based measure is not also required. This report examines the methodological issues in developing preference-based measures, and their testing and application. The rest of this chapter provides an overview of the key stages in developing a preference-based condition-specific measure and the methodological issues addressed in this report.

The problem

Condition-specific measures are standardised multi-item questionnaires used to assess patient health across different areas of self-perceived health. The areas covered may include symptoms, physical functioning, work and social activities, and mental well-being. They are designed for patient populations with a specific medical condition. They can be unidimensional or multidimensional. Responses to items are combined into a score using a range of possible methods. The most commonly used scoring system remains a simple summation of responses to produce dimension scores. For example, the Asthma Quality of Life Questionnaire (AQLQ) is designed to assess HRQoL in patients with asthma. 24,25 The AQLQ consists of 32 items that measure HRQoL across four dimensions: symptoms (12 items), activity limitations (11 items), emotional function (five items) and environmental stimuli (four items). For each item in the AQLQ, respondents are asked to choose from a series of seven responses ranging from extreme problems to no problems to obtain a score out of seven for each item. Item scores are then summed to obtain a dimension score and an overall score across all 32 items. The original measure has no preference weightings of the items or dimensions and so no basis for producing a health-state value for calculating QALYs.

One problem that is faced when deriving preference-based indices from existing condition-specific measures, such as the AQLQ, is that they are large and complex. With multiple dimensions and numerous items they define many millions of potential health states, and each of these states involves too much information for valuation by respondents. Researchers at the University of Sheffield have been developing methods for dealing with this problem by developing health-state classifications from the measures. A health-state classification system consists of multiple dimensions, each with a number of severity levels: for example, the EQ-5D has five dimensions, each containing three severity levels, and is able to define 243 states. The precise number of dimensions and the levels per dimension may vary. However, even with a classification such as the EQ-5D there are too many states to value in a survey and so only a sample is valued and the remainder are then estimated by econometric modelling. More importantly, the health states themselves contain a limited number of statements (five in the case of the EQ-5D) to describe the state of health. Evidence suggests that the most respondents can value is between five and nine statements, and health states of this size have been shown to be amenable to valuation by respondents from the general population. Researchers at the University of Sheffield have applied the approach of developing a health-state classification from a larger instrument to a number of non-preference-based measures over the last 10 years, including SF-36 and SF-12,2,3,26 menopausal health questionnaire,23 atopic dermatitis,27 AQLQ,28,29 OAB-q,30,31 King’s Health Questionnaire,32 Sexual Quality of Life Questionnaire33 and European Organisation for Research and Treatment of Cancer Quality of Life Questionnaire 30 (EORTC QLQ-C30). 34
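The arithmetic behind these state counts is simply the product of the number of levels across dimensions (or items). The short sketch below reproduces the EQ-5D figure quoted above and, treating each of the 32 AQLQ items with its seven response options as a separate descriptor, shows why the original questionnaire cannot be valued directly. The function name is hypothetical.

```python
from math import prod


def count_health_states(levels_per_dimension):
    """Number of distinct states defined by a classification system."""
    return prod(levels_per_dimension)


# EQ-5D: five dimensions, three severity levels each.
eq5d_states = count_health_states([3] * 5)

# AQLQ used item by item: 32 items, seven response options each.
aqlq_states = count_health_states([7] * 32)
```

Here `eq5d_states` evaluates to 243, whereas `aqlq_states` is a 28-digit number, far beyond anything that could be sampled for valuation.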

This ‘health-state classification approach’ aims to produce a new, reduced health-state classification that is amenable to valuation by respondents with a minimum loss of information and is subject to the constraint that responses to the original instrument can be unambiguously mapped on to it. This implies that the text of the items should be altered as little as possible. The task is therefore to determine the dimensions and select items and severity levels for the new classification and this is described next.

An overview of methods for deriving condition-specific preference-based measures from existing patient-reported outcome measures

Introduction

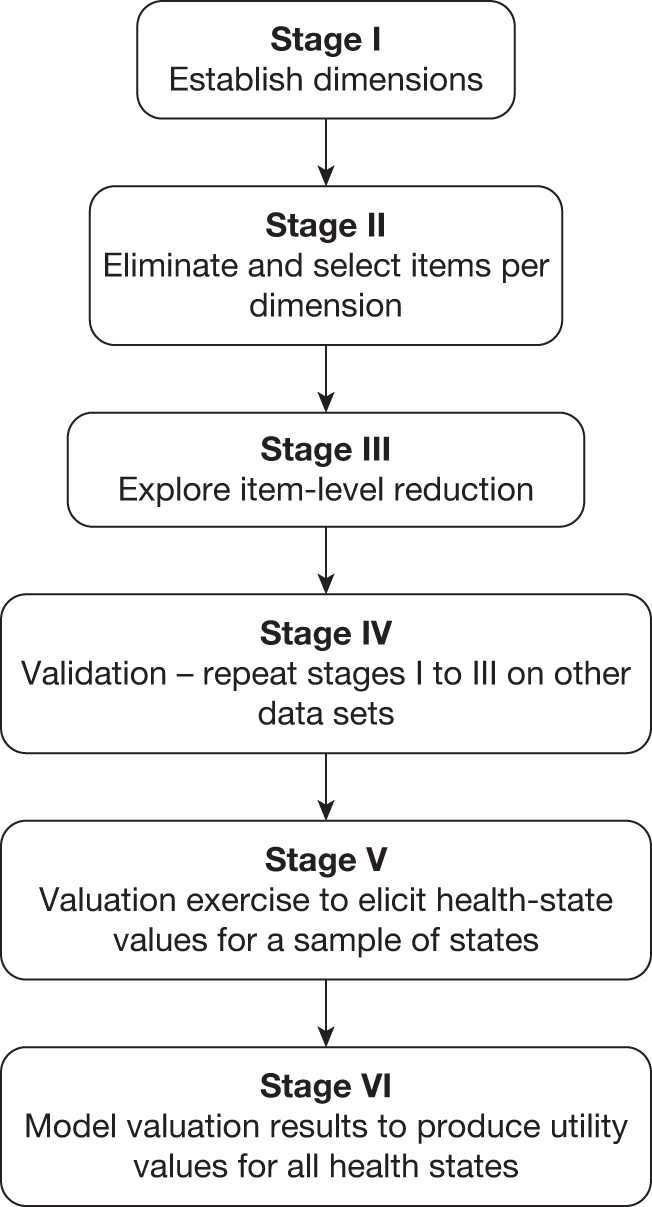

The methods described here cover the six stages (Figure 1) that can be used for deriving a CSPBM from an existing non-preference-based measure of HRQoL using traditional psychometric analysis and Rasch analysis. Item response theory can be used as an alternative to Rasch analysis. These stages are a guide to the key components in the development of a preference-based measure rather than a prescriptive methodology, as it is not always practical or possible to follow each stage separately or sequentially and the precise technique used may differ depending on the size and structure of the original instrument.

FIGURE 1. The six stages for deriving a preference-based HRQoL measure.

Stage I: establishing dimensions

Conventional preference-based measures of health use a health-state classification system in which the dimensions are structurally independent in order to avoid nonsensical health states generated by the statistical design or multi-attribute utility theory as described in stage VI. In other words, there must be a low correlation between the dimensions. One technique for identifying structurally independent dimensions is factor analysis (confirmatory or exploratory, using orthogonal or oblique solutions), although other techniques can be used. Factor analysis can be helpful in confirming the original dimensional structure of a measure or in showing where dimensions are not sufficiently independent, and it can suggest ways to reduce the number of dimensions. It can also be used to suggest a possible dimensional structure when none was proposed by the original instrument developer. 27,31 Alternatively, it can suggest modifications to the dimensional structure proposed by the instrument developer; for example, in a study deriving the AQL-5D from the AQLQ, factor analysis suggested that there were five dimensions, whereas the original instrument had four. 28 Factor analysis needs to be used with care, however, as the factors it suggests may not make conceptual sense. Another technique that has been used is cluster analysis. 35 The extent to which items belong to a single dimension can also be examined using Rasch analysis (see Stage II: selecting items, below).

Stage II: selecting items

A dimension of a health-state classification system of preference-based measures is usually represented by one item, or occasionally two items, from the original instrument. The selection of items must be undertaken with great care. This process has been assisted in past research by a combination of conventional psychometric analysis, Rasch analysis and preference data,29,31 although other techniques such as item response theory can be used. A technique that has proven helpful in the process of item selection is Rasch analysis. This is a mathematical technique that converts qualitative (categorical) responses to a continuous (unmeasured) latent scale using a logit model. 36,37 The intuition underlying this approach is that the probability of an affirmative response to each item (or each response to each item) depends on the degree of difficulty of the item (or severity in the case of health) and the ability of the respondent. In the development of a health-state classification system, Rasch analysis can be used to eliminate items that poorly represent the underlying latent scale (for a brief overview of the concept of Rasch analysis, see Appendix 1).
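The intuition described above can be sketched for the simplest case, the dichotomous Rasch model, in which the probability of an affirmative response depends only on the difference between the respondent's location and the item's location on the logit scale. This is a minimal illustration only: items with several response categories, such as those in the AQLQ, would need a polytomous extension, and the item names and locations below are hypothetical.

```python
import math


def rasch_probability(ability, difficulty):
    """Probability of an affirmative response under the dichotomous Rasch model.

    Both arguments are locations on the same latent (logit) scale.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Hypothetical item locations in logits (higher = harder to affirm).
item_locations = {"mild item": -1.0, "moderate item": 0.0, "severe item": 1.5}

# A respondent located at 0 logits on the latent scale.
respondent_location = 0.0
probabilities = {
    name: rasch_probability(respondent_location, location)
    for name, location in item_locations.items()
}
```

When the respondent and item locations coincide the probability is exactly 0.5, and it falls as the item location moves above the respondent's location.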

The process of selecting items in a number of studies has been broken down into two stages. The first has been the elimination of poorly performing items that do not meet key criteria. 31 However, for larger measures of HRQoL this will leave a number of items in some dimensions and so the second stage involves selecting the best item for each dimension.

Eliminating items per dimension

For multidimensional measures separate Rasch models should be fitted to each of the dimensions established in stage I. In deriving the AQL-5D from the AQLQ, five Rasch models were fitted and items were eliminated using three criteria:

- Items that are not suitable for item-level ordering should be eliminated from consideration in the classification system, as it is not possible to distinguish between response levels for these items.
- Differential item functioning (DIF) establishes whether respondents with different characteristics respond differently to items. Items that display DIF are of limited value in preference-based measures when using them across subgroups of patients defined by the characteristic (as often would be required in an economic evaluation) and are therefore usually excluded.
- Items that do not fit the underlying Rasch model should be eliminated, as they do not represent the underlying dimension; these items can be identified using Rasch model goodness-of-fit statistics.

Selecting the best item per dimension

Once items have been eliminated from the selection process, Rasch/item response theory and traditional psychometric methods are applied to select the ‘best’ items for the health-state classification. The item selection criteria typically include at least some of the following:

- item-level coverage across the latent space using the Rasch model
- item goodness of fit using the Rasch model
- feasibility (level of missing data)
- internal consistency (correlation between item and dimension scores)
- distribution of responses to identify floor and ceiling effects
- responsiveness between two time points (e.g. standardised response mean).
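Three of the psychometric criteria listed above (feasibility, floor and ceiling effects, and responsiveness) reduce to simple descriptive statistics. The sketch below shows one way they might be computed for a single item; the function names and the response data are hypothetical, and the standardised response mean is taken here as the mean change divided by the sample standard deviation of change.

```python
from statistics import mean, stdev


def missing_rate(responses):
    """Feasibility: proportion of missing (None) responses to an item."""
    return sum(r is None for r in responses) / len(responses)


def floor_ceiling(responses, lowest, highest):
    """Proportions of respondents at the lowest and highest response levels."""
    n = len(responses)
    at_floor = sum(r == lowest for r in responses) / n
    at_ceiling = sum(r == highest for r in responses) / n
    return at_floor, at_ceiling


def standardised_response_mean(baseline, follow_up):
    """Responsiveness: mean change divided by the standard deviation of change."""
    changes = [after - before for before, after in zip(baseline, follow_up)]
    return mean(changes) / stdev(changes)


# Hypothetical responses to one seven-level item at two time points.
baseline = [3, 4, 2, 5, 3]
follow_up = [4, 6, 3, 5, 5]
srm = standardised_response_mean(baseline, follow_up)

floor_share, ceiling_share = floor_ceiling([1, 1, 4, 7, 3], lowest=1, highest=7)
item_missing = missing_rate([3, None, 5, 2])
```

In practice these statistics would be compared across the candidate items within each dimension alongside the Rasch-based criteria, rather than applied as fixed pass or fail thresholds.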

Stage III: exploring item-level reduction

In practice, patients may not be able to distinguish between item response choices. 5 Therefore, we recommend including an exploratory stage that examines the possibility of reducing the number of levels of the items selected in stage II. Item threshold probability curves from the Rasch analysis and response frequencies can be examined when selecting potential item levels for collapsing. The item levels for the AQL-5D, for example, were collapsed from seven to five using evidence from the Rasch analysis. Cognitive debriefing of respondents by researchers may also help to inform this process.

Stage IV: validation

The application of stages I–III derives a health-state classification system that is small enough for valuation. However, before proceeding with the valuation process, we recommend validating the selected items by repeating the analysis on an alternative sample from the same data set that was used in stages I–III, an alternative time point from the data set used in stages I–III or an alternative data set.

Stage V: valuation survey

It is infeasible for members of the population to value all health states generated by most health-state classification systems, which typically define several thousands of health states. Therefore, a sample of health states is selected from the classification system for valuation. Two alternative approaches are used to sample health states and subsequently estimate utility values for all states (described below, see Stage VI: model health-state values): the decomposed approach and the composite approach. The decomposed approach uses multi-attribute utility theory to select states to determine the functional form (usually multiplicative or additive). This involves three stages: valuing each dimension separately to estimate single dimension utility functions; valuing ‘corner states’, where, for example, one dimension has extreme problems and all other dimensions have no problems; and valuing a selection of non-corner states. The composite approach involves the valuation of a sample of states chosen using a statistical design such as an orthogonal array and uses regression techniques to estimate values for all states defined by the classification. Both approaches generate states that contain combinations of levels across the dimensions, many of which will involve high levels on some dimensions and low levels for others. The problem this creates is discussed later and is one of the issues addressed in this research.
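To make the sampling problem concrete, the sketch below enumerates the full set of states for a hypothetical classification with five dimensions of five levels each, and then picks out the ‘corner states’ used in the decomposed approach, in which one dimension is at its worst level and all others are at their best. Coding level 1 as no problems and the highest level as extreme problems is an assumption of this sketch.

```python
from itertools import product


def all_states(levels_per_dimension):
    """Every state defined by the classification, as tuples of levels."""
    return list(product(*(range(1, n + 1) for n in levels_per_dimension)))


def corner_states(levels_per_dimension):
    """States with one dimension at its worst level and all others at level 1."""
    states = []
    for worst_dimension, n_levels in enumerate(levels_per_dimension):
        state = [1] * len(levels_per_dimension)
        state[worst_dimension] = n_levels
        states.append(tuple(state))
    return states


# Hypothetical classification: five dimensions, five levels each.
levels = [5, 5, 5, 5, 5]
full_set = all_states(levels)
corners = corner_states(levels)
```

The full set contains 3125 states, of which only five are corner states. A composite design would instead draw a sample from `full_set` using a statistical design such as an orthogonal array, which is not reproduced here.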

For use in economic evaluation, health states must be valued on a common scale with an upper anchor of one at full (or perfect) health and a lower anchor at zero that is assumed equivalent to being ‘dead’. Once the health states have been selected they can then be valued by a sample of the population of interest, usually the general population, using valuation techniques such as time trade-off (TTO), standard gamble, visual analogue scales (VASs)5 or combinations of these. These valuation techniques are individual valuations of health states that require the individual to imagine themselves in the health state of interest. Social valuations of health states can also be used, such as person trade-off, where individuals are asked to make a societal judgement of how many patients in one health state they would need to cure to be equivalent to curing a specified number of patients in another group. Individual valuation techniques have typically been used to elicit values for health states, although some researchers argue that societal valuation techniques may be more appropriate.

Stage VI: model health-state values

The decomposed approach produces utility values for all health states by solving a system of equations to generate preference weights for each dimension and any interactions specified in the model. 38 This approach was used to estimate utility values for the HUI-2 and HUI-34,39 and CSPBMs in rhinitis and asthma. 20,21

The composite approach uses regression techniques to estimate utility values for all health states valued. The standard model uses individual-level data, for which the value of a health state is defined as:

$$U_{ij} = \beta' X_{\partial\lambda} + \varepsilon_{ij}$$

where i = 1, 2 … n represents individual health-state values and j = 1, 2 … m represents respondents. The dependent variable, $U_{ij}$, is the value for health state i valued by respondent j and $X_{\partial\lambda}$ is a vector of dummy explanatory variables for each level λ of dimension ∂ of the health-state classification, where level λ = 1 acts as a baseline for each dimension. $\beta$ represents the coefficients on X. $\varepsilon_{ij}$ is the error term, which is subdivided as $\varepsilon_{ij} = u_j + e_{ij}$, where $u_j$ is respondent-specific variation and $e_{ij}$ is an error term for the ith health-state valuation of the jth individual, which is assumed to be random across observations.
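The dummy coding of X described above, with level 1 of each dimension acting as the baseline, can be sketched as follows. The three-dimension classification, the coefficient values and the intercept of one (full health valued at one) are all hypothetical choices made for illustration; they are not estimates from any valuation study.

```python
def dummy_code(state, levels_per_dimension):
    """One dummy per non-baseline level of each dimension (level 1 is the baseline)."""
    row = []
    for level, n_levels in zip(state, levels_per_dimension):
        for candidate in range(2, n_levels + 1):
            row.append(1 if level == candidate else 0)
    return row


def predict_value(state, levels_per_dimension, coefficients, intercept=1.0):
    """Predicted health-state value: intercept plus the coefficients that are switched on."""
    x = dummy_code(state, levels_per_dimension)
    return intercept + sum(b * xi for b, xi in zip(coefficients, x))


# Hypothetical classification: three dimensions with three levels each.
levels = [3, 3, 3]

# Hypothetical decrements for (dimension 1: levels 2, 3), (dimension 2: levels 2, 3), ...
coefficients = [-0.05, -0.12, -0.04, -0.10, -0.08, -0.20]

x_row = dummy_code((2, 1, 3), levels)
value = predict_value((2, 1, 3), levels, coefficients)
```

For state (2, 1, 3) the dummies for level 2 of the first dimension and level 3 of the third dimension are switched on, giving a predicted value of 1 − 0.05 − 0.20 = 0.75. The respondent-specific component of the error term is ignored here; estimating it would require a random-effects model fitted to individual-level data.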

A number of alternative models are usually fitted to the data and the preferred model is selected based on goodness-of-fit statistics, including mean absolute error (MAE), adjusted R², and the numbers of health states with absolute errors greater than 0.05 and greater than 0.1. The algorithm from the preferred model can then be used by others to obtain utility values for the preference-based measure.
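The state-level fit statistics mentioned above are straightforward to compute once each valued state has an observed mean value and a model prediction. The following is a minimal sketch with hypothetical values; adjusted R² is omitted because it depends on the fitted regression itself.

```python
def goodness_of_fit(observed_means, predicted_values):
    """MAE and counts of states whose absolute error exceeds 0.05 and 0.1."""
    abs_errors = [abs(o - p) for o, p in zip(observed_means, predicted_values)]
    return {
        "mae": sum(abs_errors) / len(abs_errors),
        "n_over_0.05": sum(e > 0.05 for e in abs_errors),
        "n_over_0.10": sum(e > 0.10 for e in abs_errors),
    }


# Hypothetical observed mean values and model predictions for five valued states.
observed = [0.90, 0.75, 0.60, 0.40, 0.20]
predicted = [0.88, 0.81, 0.58, 0.52, 0.23]
fit = goodness_of_fit(observed, predicted)
```

With these made-up numbers the MAE is 0.05, two states have errors above 0.05 and one of those also exceeds 0.1. Comparing such summaries across candidate specifications is one way of choosing the preferred model.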

Methodological problems in the development of condition-specific preference-based measures

Below is a set of methodological problems that this research project sought to address.

Lack of structural independence between dimensions

The approach of developing health-state classification systems has been successfully applied to a number of instruments, but one problem that has emerged is that some condition-specific measures do not have a clear multidimensional structure. This arises where items are found to be highly correlated. The items could be seen as unidimensional, although they may nonetheless tap important nuances in the impact of the condition. A condition may impact on a number of related areas of life, and a richer picture is provided by using more than one item. A lack of independence between items in a health-state classification system creates problems in the valuation and modelling stages. Many of the states that need to be valued for the modelling, using for example an orthogonal design, would involve combinations of dimension levels that would not be credible (e.g. feeling downhearted and low and happy most of the time). This problem is more likely to arise with condition-specific measures, as they tend to define a narrower range of domains.

One approach to this problem is to construct a sample of representative health states without using a health-state classification system. This involves defining health states that represent patients with particular severity levels of a health problem. This approach was pioneered by Sugar, Lenert and others using k-means cluster analysis to break up the data into states. In one study they identified patterns of the physical and mental health summary scores of the SF-12 into models with varying numbers of discrete states. 40 They selected six states from the SF-12 data in a sample of depressed patients (i.e. near normal, mild mental and physical health impairment, severe physical health impairment, severe mental health impairment, severe mental and moderate physical impairment, and severe mental and physical impairment). These were defined in terms of scores, so a process of turning the score distributions of each state into words taken from the original 12 items to define the states had to be developed based on expert judgement. This is an interesting approach but it suffers from three limitations. First, the derivation of the states uses essentially arbitrary cut-offs in the cluster analysis. Second, it uses dimension scores that then need to be related to the item descriptions to generate the states and this uses expert judgement. Although some expert judgement is always needed in this type of work, it should be minimised where possible. Finally, this method has only been used to value a small sample of states and it is likely that it is not possible to allocate all patients to these states (in contrast to the inclusive approach of the health-state classification).
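The clustering step can be illustrated with a bare-bones k-means routine applied to pairs of physical and mental summary scores. This is a generic sketch of the algorithm, not the procedure used in the cited study: the scores, the choice of three clusters and the starting centroids are all hypothetical, and the dependence of the result on those choices is exactly the arbitrariness noted above.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, initial_centroids, max_iterations=50):
    """Basic k-means: assign points to the nearest centroid, then update centroids."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(max_iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda c: squared_distance(point, centroids[c]),
            )
            clusters[nearest].append(point)
        # New centroid = mean of the cluster; an empty cluster keeps its old centroid.
        updated = [
            tuple(sum(values) / len(cluster) for values in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
        if updated == centroids:
            break  # assignments are stable
        centroids = updated
    return centroids, clusters


# Hypothetical (physical score, mental score) pairs for nine patients.
scores = [(52, 55), (50, 53), (54, 51), (30, 50), (28, 52), (32, 48),
          (48, 25), (50, 28), (46, 27)]
state_centroids, state_members = k_means(scores, [(50, 50), (30, 50), (50, 30)])
```

Each resulting centroid is a pair of scores, not a description in words, which is why a further judgement-based step is needed to turn the clusters into health-state descriptions that respondents can value.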

An alternative approach examined in this report avoiding these problems is to use the results of Rasch modelling to generate states to describe typical respondents at different points along the latent variable. The last decade has seen the increasing use of Rasch modelling in the development and testing of PROMs. As well as providing a method for assisting in the selection of items, we have pioneered its use in selecting health states for valuation that represent different levels of severity. This ‘Rasch vignette approach’ generates logical states based on the natural occurrence of states in the data set and avoids the infeasible combinations generated by statistical designs (e.g. an orthogonal array) from a health-state classification system.

A potential disadvantage with the Rasch vignette approach, as with clustering, is that it generates a small subset of potential states for valuation and so leaves a large number unvalued. A solution examined in this report is to estimate the relationship between the health-state utility values and the latent variable produced by the Rasch model using regression techniques. This permits the estimation of utility values for other points on the latent variable and hence other states generated by the items. This method is explored in Chapter 3.
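The idea of regressing health-state values on the latent variable can be sketched with a simple least-squares line. The linear form, the vignette locations and the mean values below are all assumptions made for illustration; the functional form actually used is a matter for the analysis reported in Chapter 3. Higher logit values are taken here to mean greater severity.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical Rasch locations (logits) of five valued vignettes and their mean values.
latent_locations = [-2.0, -1.0, 0.0, 1.0, 2.0]
mean_values = [0.90, 0.78, 0.65, 0.50, 0.37]

intercept, slope = fit_line(latent_locations, mean_values)


def predict_utility(location):
    """Predicted utility for any point on the latent variable."""
    return intercept + slope * location


# A state that was not valued directly but has a known latent location.
unvalued_state_utility = predict_utility(0.5)
```

Once the line is estimated, any respondent or state with a location on the latent variable can be assigned a utility value, which is how the approach extends beyond the small set of vignettes that were valued directly.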

Naming the condition

Many condition-specific measures state the cause of the health problems being assessed in the instrument (‘Your asthma interferes with getting a good night’s sleep all of the time’). There have been a number of studies looking at the effect of naming the condition on health-state values. 5 Evidence suggests that naming the condition has an impact in some, although not all, cases.

A condition-specific measure probably names the medical condition in order to improve its precision and to avoid picking up unrelated problems. On the other hand, by knowing the name of the medical condition, non-patient respondents may bring their prejudices to the valuation exercise (e.g. ‘being limited in pursuing hobbies or other leisure time activities due to cancer’). Furthermore, naming the condition relies on correct attribution by the patient. Patients may not be able to disentangle the impact of a given health condition from other possible conditions and non-health problems in their life. The usual practice in valuing generic measures, which by definition do not mention a disease label, is to avoid anything suggesting specific diseases. In the AQL-5D valuation, however, the disease labels were maintained because it was necessary to make sure that what is valued in the AQL-5D is the same as what patients report about their health using the AQLQ, and thus it was important to minimise changes to wording. However, because of the danger of respondents bringing their own ideas to bear, maintaining the same wording does not guarantee that what patients mean by a given statement and what non-patients understand by the same statement will be the same, and the impact this may have had on the valuation is not known. This report will examine the impact of naming the condition in valuation studies in Chapter 4.

Side effects

A well-known concern with condition-specific measures is that they do not cover potentially important side effects of treatment. When designing a study, one solution to this problem has been to include both generic measures and condition-specific measures in a trial. The problem for economic evaluation is that it is not possible to trade off two measures to assess overall effectiveness. Economic evaluation requires a preference-based single index measure of health that is decision specific rather than condition specific, and captures the impact on the condition and any side effects. 41 This could be achieved either by taking a condition-specific measure and adding additional dimensions to cover any known side effects or by taking a generic measure that is known to cover side effects and adding dimensions to cover aspects of the condition. In Chapter 5 we examine the former strategy, by adding an additional generic dimension to two CSPBMs and valuing the classification systems with and without the add-on dimension. For one CSPBM we then apply the preference weights for the CSPBMs with and without the add-on dimension to a patient data set and examine the impact.

Comorbidities

Even assuming there are no side effects, the achievement of comparability between specific instruments requires an additional assumption, namely that the impact of different dimensions on preferences is additive, whether or not they are included in the classification system. The impact of breathlessness on asthma health-state values, for example, must be the same whether or not the patient has other health problems not covered by the condition-specific measure, such as pain in joints. Provided the intervention only alters the dimensions covered in the specific instrument, the estimated change in health-state value would then be correct. However, this assumes preference independence between the dimensions included in the classification system of the specific measure and those dimensions not included (i.e. where the level of each dimension is indicated by a character, the difference between states XXX and YYY should be the same as the difference between XXXZ and YYYZ, where Z is the level of a dimension not included in the classification system).
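The additivity assumption can be checked numerically with a toy example. Below, an included dimension takes a mild or a severe level (utility decrements x and y) and an excluded dimension adds a further decrement z. Under an additive model the gain from moving between the two included-dimension levels is unaffected by z; under a simple multiplicative model, used here only as one hypothetical example of preference interaction, it is not. All decrements are made up.

```python
def additive_value(decrements):
    """Health-state value when decrements are simply summed."""
    return 1.0 - sum(decrements)


def multiplicative_value(decrements):
    """Health-state value when each problem scales the remaining value."""
    value = 1.0
    for decrement in decrements:
        value *= 1.0 - decrement
    return value


# Hypothetical decrements: x and y on an included dimension, z on an excluded one.
x, y, z = 0.10, 0.30, 0.20

additive_gap_without_z = additive_value([x]) - additive_value([y])
additive_gap_with_z = additive_value([x, z]) - additive_value([y, z])

multiplicative_gap_without_z = multiplicative_value([x]) - multiplicative_value([y])
multiplicative_gap_with_z = multiplicative_value([x, z]) - multiplicative_value([y, z])
```

The additive gaps are both 0.20, whereas the multiplicative gap shrinks from 0.20 to 0.16 once the excluded problem is present. A condition-specific measure that ignores the excluded dimension would therefore overstate the benefit in the second case.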

Some degree of preference interaction has been shown to exist,2,4,42 with the impact of a problem on one dimension of health being reduced or exacerbated by the existence of a problem on another dimension. This is likely to create a larger problem in condition-specific measures that focus on a narrow range of health dimensions compared with generic measures that cover a broader range of health dimensions (although the problem will also exist for generic health measures, as they too exclude many potentially important dimensions). This problem of preference interaction will also be examined in Chapter 5.
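The additivity assumption, and the way an interaction violates it, can be set out as a short derivation. This is a sketch in notation introduced here purely for illustration (it is not taken from the studies cited): x and y denote two profiles on the dimensions included in the condition-specific classification, z denotes the level of a dimension that is excluded from it, v is the health-state value, and f, g and h are unspecified component functions.

```latex
% Additive (preference-independent) value function
\begin{align}
v(x, z) &= f(x) + g(z) \\
% The excluded dimension cancels from any difference between states
v(x, z) - v(y, z) &= \bigl[f(x) + g(z)\bigr] - \bigl[f(y) + g(z)\bigr] = f(x) - f(y)
\end{align}

% With an interaction term h(x, z), the excluded dimension no longer cancels in general
\begin{align}
v(x, z) &= f(x) + g(z) + h(x, z) \\
v(x, z) - v(y, z) &= f(x) - f(y) + \bigl[h(x, z) - h(y, z)\bigr]
\end{align}
```

Under the additive form, the estimated change from x to y is the same whatever the level z of the excluded dimension. Under the second form, the bracketed term depends on z, so a value set estimated without the excluded dimension could misstate the change for patients with a comorbidity.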

One solution to the problem of preference interaction is to keep on adding extra dimensions to the condition-specific measure. However, this will reduce the usefulness of the new preference-based measure, as it cannot be applied to existing data sets containing the condition-specific measure because it requires additional information to be collected. Furthermore, respondents in valuation studies struggle if there are too many pieces of information at once and so there is a practical constraint on the size of classification systems designed for valuation. Any classification system that is to be amenable to valuation is likely to have a limited number of dimensions of health. For use in cross-programme comparison, it is ultimately a trade-off between having measures that are relevant and sensitive to those things that matter to patients with a particular condition (including side effects) and the potential size of the preference dependence between dimensions. The relative importance of the different arguments in this trade-off will vary between conditions. In Chapter 5, we examine the extent of this problem for two conditions (asthma and common mental health problems).

Information loss compared with original measure

The derivation of health states based on a small subset of items of the original measure inevitably involves a loss of information. The original measures had multiple items in order to improve reliability and so achieve better psychometric performance in terms of validity and responsiveness. Given that the original rationale for using a condition-specific measure is to use its greater relevance and sensitivity, it is important to ensure that it retains this informational advantage in the process of becoming an index.

The extent of information loss can be examined using conventional psychometric tests of validity and responsiveness. 43 In this report we compare the preference-based condition-specific measure with the original full measure in terms of construct validity by examining scores between severity groups and responsiveness to change over time. Any loss in information needs to be balanced against the ability of preference-based measures to generate quality adjustment weights for QALYs, but a substantial loss might suggest that the whole process of selecting a few items inevitably reduces the psychometric performance of the measure. Chapter 7 looks at this empirically for four CSPBMs.

Do preference-based measures derived from condition-specific measures really offer an improvement over generic measures?

An important question is whether preference-based measures derived from condition-specific measures really do offer an improvement over existing generic measures in terms of their psychometric properties, and whether they generate sufficiently different values for differences between states and for changes over time to be important for the results of economic evaluation. This is a further development of the previous section (see Information loss compared with original measure) and is addressed in Chapter 6 using similar methods on data sets that contain the condition-specific measure and one or more of the generic preference-based measures.

What are the policy implications of using condition-specific measures?

One of the reasons for health economists being reluctant to use condition-specific measures has been a view that they cannot be used to make cross-programme comparisons. In Chapter 7 of this report we review these arguments in the light of the findings of the research presented in this report.

Aims and objectives of the report

The overall aim is to critically review and test methods for deriving preference-based measures of health from condition-specific measures of health (and other non-preference-based measures of health) in order to provide guidance on when and how to produce CSPBMs and to identify areas for further research.

The specific objectives are as follows:

- to identify and review the existing literature on current methods for deriving a preference-based measure of health from non-preference-based measures of health in order to develop a framework
- to examine and test a new method for generating health states – the Rasch-based vignette approach – from non-preference-based measures using Rasch modelling
- to assess the impact of referring to the medical condition (or disease) in the descriptions on health-state values
- to assess the impact on health-state utility values of attempting to capture side effects using CSPBMs
- to assess the impact of comorbidities by testing the additivity assumption and the extent of any violation across two conditions (asthma and common mental health problems)
- to examine the degree of information loss of moving from the original instrument to the preference-based index
- to compare preference-based measures derived from the condition-specific measures with generic preference-based measures (EQ-5D and SF-6D) in order to examine the degree of agreement and the extent of any gain in psychometric performance
- to propose a set of conditions that should be satisfied in order to justify the development and valuation of a CSPBM for use in economic evaluation
- to examine whether CSPBMs can be used to inform resource allocation decisions.

There are other issues to address in the development of preference-based measures, such as the methods of valuation and response shift, but these are not specific to CSPBMs and are therefore not addressed in this project.

Chapter 2 A review of studies developing condition-specific preference-based measures of quality of life to produce quality-adjusted life-years

Introduction

Over the last two decades there has been interest in the development of CSPBMs from existing condition-specific measures as this has the advantage that QALYs can be directly calculated using the data originally collected in the trial or study. Alternatively, CSPBMs can be developed ‘de novo’ to produce an entirely new measure. Yet unlike non-preference-based patient-reported outcome measures, there are no guidelines on the derivation of CSPBMs developed either from an existing measure or ‘de novo’. Surprisingly little research to date has been conducted on the methodological development of CSPBMs to inform best practice. The aims of this chapter are first to describe the methods used in previous studies that produce CSPBMs, and second from these findings to identify the main methodological issues in the development of these measures, in particular any issues that are additional to those identified in Chapter 1.

This chapter presents a review of the existing literature reporting on the derivation of a CSPBM. A CSPBM is defined as consisting of both (1) a classification system that can be used to categorise all patients with the condition of interest and (2) a means of obtaining a utility score for all states defined by the system. In Chapter 1 we outlined a six-stage approach for deriving a CSPBM from an existing measure and use this to provide the structure for this review. We use this structure both for measures developed ‘de novo’ and measures developed from existing measures as arguably the same process applies to both types of measures. We describe the studies undertaken to date and critically review these methods. We then use these results to outline methodological challenges and areas requiring further research. To retain focus and application the review is concerned only with papers deriving CSPBMs from an existing condition-specific measure or ‘de novo’. To illustrate, different concerns may arise in the development of generic and population-specific preference-based measures, for example deriving a measure for children or for the elderly poses population-specific considerations, and generic preference-based measures will face different concerns regarding focus and content.

Methods

Search strategy

Current methods for developing preference-based measures of quality of life from condition-specific measures or ‘de novo’ that were published in English were identified using a literature search conducted in December 2010. The literature search was undertaken on MEDLINE, EMBASE, Health Technology Assessment (HTA) database, Cochrane Central Register of Controlled Trials (CENTRAL), Web of Science (including Science Citation Index, Social Sciences Citation Index and Conference Proceedings), Cochrane Database of Systematic Reviews (CDSR), Cochrane Methodology Register and Database of Abstracts of Reviews of Effects (DARE). The following search strategy was used:

- qol/hrqol/qaly/“Quality of Life”/quality of life/Quality Adjusted Life Year AND
- utility/utilities/preference with based/index/measure within 4 words of 1 AND
- transform*/translat*/transfer*/conver*/map*/deriv*.

The search identified 4093 papers; 104 papers remained after a title and abstract sift. The search strategy meant that the review included only journal papers published in or before December 2010.

Exclusion criteria

Of the remaining 104 papers, 78 papers were excluded at the full-paper stage for the following reasons: 30 reported the derivation and/or valuation of vignettes; 18 were either summaries or analyses of patient-valued utility data of own health, for example using standard gamble or TTO or existing utility measures in a patient group; eight reported the methodology used to develop a generic measure; five reported on measures that use patient-reported own health to produce utility scores for a selection of states, with no modelling of a tariff to produce values for all states; four were mapping studies (either mapping between measures, between valuation techniques or between a valuation technique and summary score); four were population-specific rather than condition-specific; three had no utility score (they either summarised values derived only from individuals, the scoring system was not preference based, or a tariff has not as yet been produced for the measure); two were treatment specific, not condition specific; one measured global quality of life rather than HRQoL and was not condition specific; one involved non-health aspects such as cost; and one measured relative improvement in HRQoL rather than HRQoL. This left 26 papers for inclusion in the final review.

Data extraction

The review examined the methodology used to produce the CSPBMs, focusing on the six-stage approach outlined in Chapter 1, where stages I–III produce the classification system, stage IV validates the classification system, stage V elicits health-state utility values and stage VI models the utility data to produce utilities for all health states defined by the classification system. The review was concerned with the motivation behind the study; the conditions examined; for stages I–III, the method of construction and the number and composition of items and dimensions in the classification system; for stage IV, whether and how the classification system was validated; for stage V, whose values were used, how values were obtained, and whether utilities were anchored on a 1–0 full health–dead scale; and for stage VI, how utilities were estimated for every state and the accuracy of this process. Data extraction was undertaken by one member of the research team and summarised in Microsoft Excel (Microsoft Corporation, Redmond, WA, USA) using items summarised in Table 1 that were previously agreed by the team.

| Category | Item |
|---|---|
| General | Author name |
| | Title of paper |
| | Journal |
| | Condition |
| | Condition-specific measure |
| | CSPBM |
| | How/why chose original instrument |
| | Reasons for deriving preference-based measures |
| Classification system | Dimensions and items in condition-specific measure |
| | Dimensions and items in CSPBMs classification |
| | No. of states defined by system |
| | Method for reducing condition-specific measure to CSPBMs classification/producing CSPBMs classification |
| | Data used |
| | Testing/validation of classification system |
| | How dealt with multiple versions |
| Valuation | Population, method of recruitment and setting |
| | Sample size and selection of sample size |
| | Preference elicitation technique |
| | Anchors |
| | No. of states valued |
| | Sampling technique for states |
| | Condition mentioned |
| Modelling preference data | Model type |
| | Dependent variable |
| | Main effects variables |
| | Interaction terms |
| | Sociodemographics |
| | Constant |
| | Other variables |
| | Transformations |
| | Preferred model |
| | Proportion of regression coefficients p < 0.05 |
| | Proportion of regression coefficients with unexpected sign, and p < 0.05 |
| | Proportion of inconsistent regression coefficients and p < 0.05 |
| | R2-value and adjusted R2-value |
| | Mean error |
| | MAE and 95% CI |
| | Proportion MAE > 0.05, > 0.10 |
| | MAE as a percentage of observed range of the dependent variable |
| | RMSE |
| | Maximum predicted score compared with observed |
| | Minimum predicted score compared with observed |
| | Correlation |
| | Plots |
| | Other goodness-of-fit measures |
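Several of the goodness-of-fit items in Table 1 are simple functions of the observed and predicted health-state values. The following Python sketch shows one way they might be computed. It rests on assumptions that are ours rather than those of the reviewed studies: the error is defined as predicted minus observed, ‘Proportion MAE > 0.05, > 0.10’ is read as the proportion of states whose absolute error exceeds each threshold, and the function name `fit_summary` and the input values are hypothetical and purely illustrative.

```python
import math


def fit_summary(observed, predicted):
    """Summarise prediction errors across a set of valued health states.

    Assumption: error = predicted - observed for each state.
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("observed and predicted must be non-empty and of equal length")
    errors = [p - o for o, p in zip(observed, predicted)]
    abs_errors = [abs(e) for e in errors]
    n = len(errors)
    return {
        "mean_error": sum(errors) / n,
        "mae": sum(abs_errors) / n,
        "rmse": math.sqrt(sum(e * e for e in errors) / n),
        # Share of states whose absolute error exceeds each threshold
        "prop_abs_error_gt_0.05": sum(a > 0.05 for a in abs_errors) / n,
        "prop_abs_error_gt_0.10": sum(a > 0.10 for a in abs_errors) / n,
    }


# Made-up values for illustration only; not taken from any reviewed study.
observed = [0.90, 0.70, 0.50, 0.30]
predicted = [0.88, 0.76, 0.50, 0.18]
summary = fit_summary(observed, predicted)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

Reporting the mean error alongside the MAE is useful because positive and negative errors can cancel in the former but not in the latter.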

Results

Included papers

A brief summary of the 26 papers included in the review is presented in Table 2. Out of these 26 papers, 17 were published in non-clinical journals, including Quality of Life Research (five papers), Health and Quality of Life Outcomes (3), Pharmacoeconomics (3), Health Economics (1), International Journal for Quality in Health Care (1), Journal of Clinical Epidemiology (1), Medical Decision Making (1), Quality in Health Care (1) and Value in Health (1). The remaining nine papers were each published in separate clinical journals: Amyotrophic Lateral Sclerosis and Other Motor Neuron Disorders, British Journal of Cancer, British Journal of Dermatology, British Journal of Obstetrics and Gynaecology, Chest, Investigative Ophthalmology and Visual Science, Journal of Oral & Maxillofacial Surgery, Ophthalmic Epidemiology and Optometry and Vision Science.

| First author | Year | Journal | Condition | Non-preference-based measure | Classification developed ‘de novo’ | Preference-based measure |

|---|---|---|---|---|---|---|

| Beusterien44 | 2005 | Amyotrophic Lateral Sclerosis and Other Motor Neuron Disorders | ALS | ALSFRS-R | No | ALS Utility Index |

| Brazier23 | 2005 | Health and Quality of Life Outcomes | Menopause | Menopause-specific quality-of-life questionnaire | | |

| Brazier32 | 2008 | Medical Decision Making | Urinary incontinence | The King’s Health Questionnaire (used for urinary incontinence and lower urinary tract symptoms) | No | |

| Burr45 | 2007 | Optometry and Vision Science | Glaucoma | Glaucoma Profile Instrument | Yes | GUI |

| Chiou46 | 2005 | International Journal for Quality in Health Care | Paediatric asthma | N/A | Yes | PAHOM |

| Goodey47 | 2000 | Journal of Oral & Maxillofacial Surgery | Minor oral surgery | N/A | Yes | |

| Harwood48 | 1994 | Quality in Health Care | Handicap | International Classification of Impairments, Disabilities, and Handicaps (ICIDH) | No | London Handicap Scale – designed for completion or self-completion in postal surveys |

| Hodder49 | 1997 | British Journal of Cancer | Head and neck cancer | N/A | Yes | |

| Kind50 | 2005 | Pharmacoeconomics | Lung cancer | FACT-L | No | |

| Lamers51 | 2007 | Pharmacoeconomics | Lung cancer | FACT-L | No | |

| McKenna52 | 2008 | Health and Quality of Life Outcomes | Pulmonary hypertension | Cambridge Pulmonary Hypertension Outcome Review (CAMPHOR) | No | |

| Misajon53 | 2005 | Investigative Ophthalmology & Visual Science | Vision/visual impairment | N/A | Yes | VisQoL/AQoL-7D |

| Peacock54 | 2008 | Ophthalmic Epidemiology | Vision/visual impairment | N/A | Yes | VisQoL/AQoL-7D |

| Palmer55 | 2000 | Quality of Life Research | Parkinson’s disease | N/A | Yes | |

| Poissant56 | 2003 | Health and Quality of Life Outcomes | Stroke | N/A | Yes | |

| Ratcliffe33 | 2009 | Health Economics | Sexual quality of life | SQoL | No | SQoL-3D |

| Revicki21 | 1998 | Chest | Asthma | N/A | Yes | ASUI |

| Revicki20 | 1998 | Quality of Life Research | Rhinitis | N/A | Yes | RSUI |

| Shaw57 | 1998 | British Journal of Obstetrics and Gynaecology | Menorrhagia | N/A | Yes | |

| Stevens27 | 2005 | British Journal of Dermatology | Paediatric atopic dermatitis | Un-named questionnaire on atopic dermatitis | No | |

| Stolk22 | 2003 | Quality of Life Research | Erectile (dys)functioning | IIEF | No | |

| Sundaram58 | 2009 | Journal of Clinical Epidemiology | Diabetes | ADDQoL plus additional items | No | Diabetes Utility Index |

| Sundaram59 | 2010 | Pharmacoeconomics | Diabetes | ADDQoL plus additional items | No | Diabetes Utility Index |

| Yang30 | 2009 | Value in Health | Overactive bladder | OAB-q | No | OAB-5D |

| Young31 | 2009 | Quality of Life Research | Overactive bladder | OAB-q | No | OAB-5D |

| Young60 | 2010 | Quality of Life Research | Flushing | FSQ | No | |

Conditions

The range of conditions is broad, covering amyotrophic lateral sclerosis (ALS), asthma and paediatric asthma, erectile (dys)functioning, diabetes, flushing, glaucoma, handicap, head and neck cancer, lung cancer, menopause, menorrhagia, minor oral surgery, overactive bladder, paediatric atopic dermatitis, Parkinson’s disease, pulmonary hypertension, rhinitis, sexual quality of life, stroke, urinary incontinence and visual impairment. However, several measures cover similar conditions: asthma (two measures), cancer (two measures), vision (three measures), bladder (two measures), and sexual functioning (two measures). The papers discuss the derivation of 22 measures (four measures are each described using two papers, one of which has two sets of preference weights derived in two different countries).

Stages of developing preference-based measure

Stages I–III: deriving the classification system

Three papers reported only the valuation component30,54,59 of deriving a measure and hence are not discussed in this section, as the derivation of the classification systems was undertaken in separate papers. 31,53,58

Derivation of classification system from existing measure

Fourteen papers reported on measures that derived the classification system for the preference-based measure from an existing measure of HRQoL. All but one of these studies derived the classification system using a subset of items from the existing measure as the existing measure was considered too large to be amenable to valuation. One study supplemented the existing measure using other condition-specific items. The one study that used the classification system of the non-preference-based measure without modification actually derived the non-preference-based measure in the same study, and is therefore discussed in the section below on deriving measures ‘de novo’. 45

Six papers provide a clear and detailed description of their chosen methodology, and all analysed the performance of items from the non-preference-based measure using existing data and used a selection of psychometric criteria to select a subset of items. 31,50–52,58,60 Two papers also used qualitative analysis alongside the psychometric analysis. 50,51 The methods varied considerably between studies but all relied on the judgement of the researchers and/or experts, with varying degrees of usage of psychometric methods including factor analysis, Rasch analysis and classical psychometric methods of validity and responsiveness to determine both dimensionality and item selection.

Kind and Macran50 and Lamers et al. 51 reported on the same classification system. The method involved the use of factor analysis to determine the underlying structure and principal items in each dimension. The analysis was conducted on data from two clinical trials (n = 363) conducted for the condition of interest. Members of the research team independently qualitatively reviewed items and subscales from the existing measure for suitability and importance, and interviewed specialists, finding that these results were largely in agreement with the psychometric analysis.

McKenna et al. 52 selected items using the following criteria: percentage affirmation of item (not very small or very large); reasonable spread of item severity using the logit location in the Rasch model; significant coefficient in a model regressing items on to the general health question (‘very good/good/fair/poor health’); and content of items to ensure coverage of a range of issues. The analysis was conducted on responses of 201 patients.

Young et al. 31 selected items using the following process: first, factor analysis was used to establish instrument dimensionality; second, Rasch analysis was used to exclude items on the basis of item-level ordering, differential item functioning (DIF) and goodness of fit; third, Rasch and other psychometric analyses (e.g. low ceiling effects, low floor effects, low missing data, standardised response mean, correlation with domain score) were used to select items; fourth, Rasch analysis was used to collapse levels; and finally results were validated using other data. The analysis was conducted on data from a trial (n = 391) and validated on remaining patients in the trial (n = 746) and a separate trial (n = 793). Young et al. 60 also followed this approach using responses of patients suffering from the condition (n = 1270). Sundaram et al. 58 followed a similar approach using factor and Rasch analysis (but with different exclusion and selection criteria focusing on unidimensionality, interval-level measurement, additivity and sample-free measurement) on several data sets (n = 385, 52, 65, 111) and selected items for inclusion using both psychometric analysis and expert opinion.

The remaining seven papers used similar methods to those outlined above but, although they referred to some of the criteria used to select items, they did not fully outline the process used to derive the classification system. 22,23,27,32,33,44,48 For example, one paper23 stated that the most robust item per domain was selected, but the process used to determine robustness was not detailed. A further paper stated that the items selected were those with the best coverage and responsiveness to change, while ensuring that the selected items represented different types of impact and were related to disease severity, but the methods and results were not provided. 27 Brazier et al. 32 selected items using the following criteria: relevance to quality of life; percentage completed; avoidance of redundancy; face validity; distribution of scores (avoidance of floor/ceiling effects); construct validity; and responsiveness. Despite the detailed criteria, the exact methods and data used to conduct the analysis were not reported. Another paper22 chose two items as they were considered primary end points, but this was not justified or explained.

Derivation of classification system ‘de novo’

Ten papers generated a classification system from scratch or ‘de novo’. Three papers used qualitative research,47,49,57 two papers used a literature review,46,55 three papers used a combination of literature review, patient interviews and expert opinion,20,21,45 one paper used a combination of qualitative research and a variety of psychometric analyses53 and one paper used psychometric analysis of a battery of existing items from the literature. 56

All three papers that used qualitative research to determine the classification system used a similar approach and conducted the process using recommendations by Babbie. 61 Goodey et al. 47 conducted semistructured interviews on 77 patients to identify domains patients believed were affected by surgery. Results were classified into ‘areas of concern’ by a panel of experts including patients and then categorised into domains taking into account frequency. The panel then constructed levels for these domains. Hodder et al. 49 conducted semistructured interviews on 25 patients and five experts. A Delphi panel including experts and a researcher was used to produce domains and the panel constructed levels for these domains. Shaw et al. 57 conducted unstructured interviews on 40 patients to identify the effects of the condition on different areas of their life. A panel consisting of experts and researchers derived domains and the panel constructed levels for the domains.

Chiou46 conducted a literature review of existing non-preference-based measures and a team including a psychologist and two specialists chose attributes based on patterns observed across the measures. Palmer et al. 55 identified dimensions using data from clinical trials, literature review and review of existing measures. Health states were reviewed by clinicians and researchers, piloted and subsequently revised.

The three papers that used a combination of literature review and qualitative research using patients provide little detail in their papers. Burr et al. 45 conducted focus groups with patients to explore their views of the effects of the condition and treatment on quality of life (guided by results from the literature and expert opinion) and the results were analysed using framework methodology to identify key domains for inclusion in the measure. Two papers used a combination of literature review, patient interviews and expert opinion,20,21 where the qualitative data were collected using 10 patient interviews to identify troublesome or distressing symptoms and problems and their relative importance.

Misajon et al. 53 conducted focus groups of patients to elicit attributes, guided by their previously validated questionnaire. Items and dimensions were generated using the focus groups and previous research, and items were administered to 70 patients and 86 respondents without the condition. The number of items was reduced using psychometric criteria, factor analysis and reliability analysis, item response theory and structural equation modelling. The classification was confirmed by administering it to a second sample of 218 participants, 35% of whom were patients.

Poissant et al. 56 conducted telephone interviews with 493 patients and 442 members of the general population, matched on the basis of age and city district, using a battery of existing measures and some additional items. Items were retained for consideration in the classification on the basis of prevalence and ability to capture effects of the condition. A subset of patients was subsequently asked to rate each item in terms of difficulty (i.e. severity) and importance and the results were used to select items. Three levels were selected per item; VAS ratings from a convenience sample of 29 students were used to examine ordinality, and levels were reworded if necessary. The final classification was tested in a pilot study.

Content of health-state classifications

Table 3 outlines the number of dimensions and severity levels in each classification system, the number of health states defined by the classification and the dimensions for each measure. The number of dimensions varied: two measures had two dimensions; two measures had three dimensions; three measures had four dimensions; seven measures had five dimensions; five measures had six dimensions; and three single measures had seven, eight and 10 dimensions, respectively. The number of severity levels varied from 2 to 10 and often varied for different dimensions within a measure. The number of health states varied greatly from 10 to 390,625. It is worth noting that the focus of the dimensions differed by measure. Some measures had attributes that capture only symptoms or quality of life related only to the symptoms of the condition,22,31,60 whereas others incorporated dimensions that are likely to capture side effects and comorbidities covering both symptoms and HRQoL (e.g. Brazier et al.,23 Kind and Macran50 and Lamers et al. 51). The measures suggest that there is not a single coherent underlying concept of HRQoL that is common to these measures, even for measures within a condition or International Classification of Diseases (ICD) classification.

| First author | Condition | No. of dimensions | Severity levels | No. of states defined by system | Dimensions |

|---|---|---|---|---|---|

| Beusterien44 | ALS | 4 | 5–6 | 750 | Speech and swallowing; eating, dressing and bathing; leg function; respiratory function |

| Brazier23 | Menopause | 7 | 3–5 | 6075 | Hot flushes; aching joints/muscles; anxious/frightened feelings; breast tenderness; bleeding; vaginal dryness; undesirable androgenic signs |

| Brazier32 | Urinary incontinence | 5 | 4 | 1024 | Role limitations; physical limitations; social limitations/family life; emotions; sleep/energy |

| Burr45 | Glaucoma | 6 | 4 | 4096 | Central and near vision; lighting and glare; mobility; activities of daily living; eye discomfort; other effects |

| Chiou46 | Paediatric asthma | 3 | 2–3 | 12 – but only 10 are valid | Symptoms; emotion; activity |

| Goodey47 | Minor oral surgery | 5 | 4 | 1024 | General health and well-being; health and comfort of mouth, teeth, and gums; impact on home/social life; impact on job/studies; appearance |

| Harwood48 | Handicap | 6 | 6 | 46,656 | Handicap mobility; occupation; physical independence; social integration; orientation; economic self sufficiency |

| Hodder49 | Head and neck cancer | 8 | 5 | 390,625 | Social function; pain; physical appearance; eating problems; speech problems; nausea; donor site problems; shoulder function |

| Kind50 and Lamers51 | Lung cancer | 6 | 2 | 64 | Physical; social/family; emotional; functional; symptoms – general; symptoms – specific |

| McKenna52 | Pulmonary hypertension | 4 | 2–3 | 36 | Social activities; travelling; dependence; communication |

| Misajon53 | Vision/visual impairment | 6 | 5–7 | 45,360 | Physical well-being; independence; social well-being; emotional well-being; self-actualisation; planning and organisation |

| Palmer55 | Parkinson’s disease | 2 | 2–5 | 10 | Disease severity; proportion of the day with ‘off-time’ (impact on quality of life due to condition covering domains: social function, ability to carry out daily activities, psychological function) |

| Poissant56 | Stroke | 10 | 3 | 59,049 | Walking; climbing stairs; physical activities/sports; recreational activities; work; driving; speech; memory; coping; self-esteem |

| Ratcliffe33 | Sexual quality of life | 3 | 4 | 64 | Sexual performance; sexual relationship; sexual anxiety |

| Revicki21 | Asthma | 5 | 10 | 100,000 | Cough; wheeze; shortness of breath; awakening at night; side effects of asthma treatment |

| Revicki20 | Rhinitis | 5 | 10 | 100,000 | Stuffy or blocked nose; runny nose; sneezing; itchy watery eyes; itchy nose or throat |

| Shaw57 | Menorrhagia | 6 | 4 | 4096 | Practical difficulties; social life; psychological health; physical health; working life; family life |

| Stevens27 | Paediatric atopic dermatitis | 4 | 2 | 16 | Activities; mood; settled; sleep |

| Stolk22 | Erectile (dys)functioning | 2 | 5 | 25 | Ability to attain an erection sufficient for satisfactory sexual performance; ability to maintain an erection sufficient for satisfactory sexual performance |

| Sundaram58 | Diabetes | 5 | 3–4 | 768 | Physical ability and energy level; relationships; mood and feelings; enjoyment of diet; satisfaction with managing diabetes |

| Young31 | Overactive bladder | 5 | 5 | 3125 | Urge to urinate; urine loss; sleep; coping; concern |

| Young60 | Flushing | 5 | 4–5 | 2500 | Redness of skin; warmth of skin; tingling of skin; itching of skin; difficulty sleeping |
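The number of states reported for each classification system in the table above follows from its size: it is the product of the number of levels across the dimensions. A minimal Python sketch of that arithmetic is given here. The function name is illustrative, and the per-dimension split used for the diabetes measure (one three-level and four four-level dimensions) is inferred from the reported total of 768 rather than stated in the table.

```python
from math import prod


def count_states(levels_per_dimension):
    """Number of health states defined by a classification system.

    levels_per_dimension: one entry per dimension giving its number of levels.
    """
    return prod(levels_per_dimension)


# Systems with the same number of levels on every dimension (from the table).
head_and_neck = count_states([5] * 8)   # 8 dimensions, 5 levels each
stroke = count_states([3] * 10)         # 10 dimensions, 3 levels each
menorrhagia = count_states([4] * 6)     # 6 dimensions, 4 levels each

# Mixed levels: this split is an assumption inferred from the total of 768.
diabetes = count_states([3, 4, 4, 4, 4])

print(head_and_neck, stroke, menorrhagia, diabetes)
```

The rapid growth in the number of states with each added dimension or level is why most of the larger systems can only be valued for a sample of states.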

Stage IV: validation of classification system

Few papers mention whether and how the classification system has been validated,20,21,31,45,56 and where validation is mentioned its meaning differs between studies. One paper31 replicates the analysis used to construct the classification system using a different data set and different time-points of the data set used in the initial analysis, which can be interpreted as validation of the classification system. The remaining four papers20,21,45,56 do not validate the classification system itself but validate their measure using discriminative validity, that is, by examining how the measure performs across subgroups of patients with different severity levels of the condition. These papers (with the exception of Burr et al.45) also examine the agreement of their measure with a generic utility measure and a non-preference-based measure (Poissant et al.56 used a generic measure, whereas Revicki et al.20,21 used a condition-specific measure).

Stage V: valuation to elicit health-state values

The valuation component of each of the studies is outlined in Table 4. Three papers31,53,58 report only the classification component of deriving a measure and hence are not discussed in this section.

| First author | Preference elicitation technique | Population | Administration | Sample size | No. of states | States valued per respondent | Sampling technique for states | Condition mentioned |

|---|---|---|---|---|---|---|---|---|

| Beusterien44 | VAS (for both each level of each dimension alone and health states) and standard gamble | Random US population sample | Self-administered via internet | 1108 for modelling, 1374 for descriptive analysis | 9 | 5 | Unclear | No |

| Brazier23 | TTO | Women aged 45–60 years, randomly selected from GP lists in UK | Self-complete questionnaire undertaken in groups with two interviewers | 229 | 96 | 8 plus own health | Orthogonal array plus additional states | Yes |

| Brazier32 | Standard gamble | UK patients with condition | Interview | 110 | 49 | 9 | Orthogonal array | Referred to as ‘bladder problem’ |

| Burr45 | DCE | UK people with glaucoma | Postal survey | 286 used in analysis | 64 (32 pair-wise comparisons) | 32 | Fractional factorial design | Yes |

| Chiou46 | VAS, standard gamble (asked to respond for children) | Random US population sample | Unclear | 94 for VAS, 101 for standard gamble | 10 in VAS, 5 in standard gamble | Unclear | VAS – all valid states, standard gamble – unclear | Unclear |

| Goodey47 | Two tasks: distribute 100 counters across the dimensions in proportion to their importance; VAS of the levels per dimension | UK patients | Interview | 100 | N/A | N/A | N/A | Yes |

| Harwood48 | VAS | UK patients aged 55–74 years | Interview | 79 | 30 | 30 | Conjoint analysis, but reduced number of levels for valuation | Unclear |

| Hodder49 | VAS for dimensions relative to each other and VAS for the levels per dimension | UK surgeons | Unclear | 10 | N/A | N/A | N/A | Yes |

| Kind50 | VAS | UK general population sample | Postal survey | 433 | Items for classification split into two sets, 10 states for each set | 10 plus own health | Orthogonal array | Yes |

| Lamers51 | VAS | Dutch general population sample | Internet survey | 961 | Items for classification split into two sets, 10 states for each set | 10 plus own health | Orthogonal array | Yes |

| McKenna52 | TTO | UK general population | Interview | 249 | 36 (all states) | 9 | All | Unclear |

| Peacock54 | TTO and VAS for the levels per dimension | Unclear | Interview | Not included | 7 | 7 for TTO | Corner states (item worst responses with all other items at best level, e.g. 511111 and worst state) | Unclear |

| Palmer55 | VAS and standard gamble | US patients | Interview | 59 for VAS, 58 for standard gamble | 10 (all) | 10 | All | Yes |

| Poissant56 | VAS | Canadian patients and caregivers | Interview | 32 stroke patients, 28 caregivers | 11 | 11 | All best, all worst, corner states (item worst responses with all other items at best level) | Yes |

| Ratcliffe33 | TTO, ranking, DCE | UK general population | TTO and ranking interview, DCE postal survey | TTO and ranking 207, DCE 102 | 64 (all) for TTO and ranking, 24 states across 12 pair-wise choices for DCE | TTO and ranking – 9, DCE – 12 (six choices) | TTO and rank used all states, DCE used optimal statistical design | Yes |

| Revicki21 | Standard gamble and VAS both for states and for the levels per dimension | US patients | Interview | 161 | 10 | 10 | Corner and multisymptom states | Yes |

| Revicki20 | Standard gamble and VAS both for states and for the levels per dimension | US patients | Interview | 100 | 10 | 10 | Corner and multisymptom states | Yes |

| Shaw57 | Two tasks: distribute 21 counters across the dimensions in proportion to their importance; VAS of the levels per dimension | UK patients | Interview | 100 | N/A | N/A | N/A | Yes |

| Stevens27 | Standard gamble (asked to respond for children) | UK general population | Interview | 137 | 16 (all) | 10 | All | No |

| Stolk22 | TTO | Dutch general population and students | Group interviews for general population, interviews in groups and individually for students | 265 | 24 (all, excluding best state) | 24 | All (excluding best state) | Yes |

| Sundaram59 | Standard gamble and VAS | US patients | Interview | 100 | 19 | VAS – 19, standard gamble – 4 | Corner, multisymptom and anchor states | Yes |

| Yang30 | TTO | UK general population | Interview | 311 | 99 | 8 | Balanced design | Yes |

| Young60 | TTO | UK general population | Interview | 147 | 16 | 8 | Rasch analysis to select plausible health states | Yes |

Elicitation technique

Of the remaining 23 papers covering 23 valuation studies, five elicited values using VAS alone, five used only the TTO technique, six used both VAS and standard gamble, two used both VAS and the distribution of counters to indicate importance, two used standard gamble alone, one used a discrete choice experiment (DCE) alone, one used TTO and VAS, and one used TTO, DCE and ranking. In total, VAS was used in 14 studies, standard gamble in eight, TTO in seven, DCE in two and ranking in one. Although VAS was the most commonly used technique, its usage differs across studies. VAS was used to value health states in 10 studies, but six of these used the VAS values to predict standard gamble values through a mapping function. Furthermore, VAS was used to value severity levels within a dimension in seven studies and, in two of these, also to value the different dimensions.

Health-state selection

Nineteen studies elicited values for health states. The proportion of states included in the valuation studies varied from 0.01% of states to all states. The sampling technique used to select states varied by study and was affected by the valuation technique, sample size and the size of the classification system. Six papers valued all health states (one of these also used a statistical design to produce states for DCE), four used an orthogonal array, two used a fractional factorial design, five used corner states and multisymptom states (although the exact selection varied), one used a balanced design, one used Rasch analysis, and one paper selected a small number of states alongside levels of each dimension but the selection process is unclear. Three papers elicited values for levels and dimensions of the classification system directly and therefore did not value any health states. Only one study examined the issue of lack of independence between items, where some health states defined by the classification system may be infeasible,60 and this approach is reported in further detail in Chapter 3.
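As an illustration of one of the simpler selection rules, the corner states described in Table 4 (each item at its worst level with all other items at the best level, plus the worst state) can be enumerated directly. The Python sketch below is illustrative only: the function name is hypothetical, and the convention that level 1 is best and the highest-numbered level is worst is assumed from the example state 511111 given in Table 4. It also assumes single-digit levels so that a state can be written as a string of digits.

```python
def corner_states(n_dimensions, worst_level, best_level=1):
    """List corner states plus the worst state for a classification system.

    A corner state has one dimension at its worst level and every other
    dimension at the best level. Levels are assumed to be single digits.
    """
    states = []
    for i in range(n_dimensions):
        state = [best_level] * n_dimensions
        state[i] = worst_level          # only dimension i is at its worst
        states.append("".join(str(level) for level in state))
    # Add the state with every dimension at its worst level.
    states.append(str(worst_level) * n_dimensions)
    return states


# Hypothetical six-dimension, five-level system.
for state in corner_states(6, 5):
    print(state)
```

For a six-dimension system this yields seven states, which is far fewer than an orthogonal array or balanced design would typically require, but it provides no direct information on interactions between dimensions.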

Population

The majority of valuation studies (13) were conducted in the UK. In addition, six studies were conducted in the USA, two in the Netherlands and one in Canada. Ten valuation studies elicited values from patients, nine from the general population, one from both the general population and students, one from patients and caregivers and one from surgeons. For one study the population is unclear.54 Sample size varies by study from 10 to 1374. The majority of valuation studies mentioned the condition. Two valuation studies did not mention the condition in their survey, and whether a condition was mentioned was unclear for four studies.

Mode of administration

Interviews were the most common mode of administration, used in 17 studies. Of these, 15 involved interviews undertaken individually, one involved interviews undertaken in groups and one involved both individual and group interviews. Three studies used postal surveys (one of which also used interviews) and two studies used internet surveys. For two studies the mode of administration is unclear. Mode of administration can affect response rates and the demographic composition of the sample. Historically, most valuation surveys were undertaken by interview, but in recent years internet surveys have gained popularity because they are cheaper and quicker. However, an internet sample may not be representative of the population of interest, for example because it includes fewer elderly respondents. There is little published evidence examining the impact of the different modes of administration on survey results.

Stage VI: modelling health-state utility data

The methods used to obtain health-state values for all states defined by the classification system are shown in Table 5. Three papers valued all health states defined by the classification system and therefore did not undertake any form of modelling of these values. One study valued all states using VAS and converted these values to standard gamble values using a power function. Nine studies applied a composite approach, in which regression analyses are used to estimate an additive function; the utility value of a health state is then calculated as the sum of the coefficients of the appropriate levels of each dimension. Eight papers used a decomposed approach, which uses multi-attribute utility theory as the basis for the modelling, with five papers estimating a multiplicative function and three papers estimating an additive function. The exact process differs across these studies. Another study mapped Rasch logit scores generated for health states on to mean observed health-state values using regression analysis, producing a mapping function that enables utility values for all states to be estimated from the Rasch logit score of each health state.60 The methodology for one study was unclear.56
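To make the distinction between the additive and multiplicative functions concrete, the Python sketch below gives one possible implementation of each. It is a sketch under stated assumptions, not the method of any reviewed paper: all coefficients and weights are hypothetical, the optional constant term in the additive model is an assumption, and the multiplicative form shown is the standard one from multi-attribute utility theory, u = [∏(1 + k·k_j·u_j) − 1] / k, with the scaling constant k solving 1 + k = ∏(1 + k·k_j). The solver assumes at least two dimensions and weights strictly between 0 and 1.

```python
from math import prod


def additive_utility(state, coefficients, constant=0.0):
    """Additive model: sum the coefficient of the level occupied on each
    dimension. The constant term is an assumption of this sketch."""
    return constant + sum(coefficients[d][level] for d, level in enumerate(state))


def solve_scaling_constant(weights, iterations=200):
    """Find the non-zero k with 1 + k = prod(1 + k * w_j) by bisection."""
    total = sum(weights)
    if abs(total - 1.0) < 1e-9:
        return 0.0  # weights sum to 1: the model reduces to the additive form

    def f(k):
        return prod(1 + k * w for w in weights) - (1 + k)

    if total > 1:
        lo, hi = -1.0, -1e-6      # the root lies between -1 and 0
    else:
        lo, hi = 1e-6, 1.0        # the root is positive; widen until bracketed
        while f(hi) < 0:
            hi *= 2
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if (f(lo) < 0) == (f(mid) < 0):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def multiplicative_utility(dimension_utilities, weights):
    """Multiplicative multi-attribute utility on a 0 (worst) to 1 (best) scale."""
    k = solve_scaling_constant(weights)
    if k == 0.0:
        return sum(w * u for w, u in zip(weights, dimension_utilities))
    return (prod(1 + k * w * u for w, u in zip(weights, dimension_utilities)) - 1) / k


# Hypothetical two-dimension system, coefficients as decrements from 1.
coefficients = [
    {1: 0.0, 2: -0.05, 3: -0.20},
    {1: 0.0, 2: -0.10, 3: -0.30},
]
print(round(additive_utility((2, 3), coefficients, constant=1.0), 2))  # 0.65

# Hypothetical dimension weights and single-dimension utilities.
weights = [0.5, 0.4, 0.3]
print(multiplicative_utility([1.0, 0.5, 0.0], weights))
```

In the multiplicative form the state with every dimension at its best level scores 1 and the state with every dimension at its worst scores 0, so a further step is needed to anchor values on the dead = 0, full health = 1 scale.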

| First author | Preference elicitation technique | Method of extrapolation | Anchors | Anchored at dead = 0 |

|---|---|---|---|---|

| Beusterien44 | VAS (for both each level of each dimension alone and health states) and standard gamble | Decomposed – multiplicative | 0 = dead, 1 = full health | Yes |

| Brazier23 | TTO | Composite – additive | 0 = dead, 1 = full health | Yes |

| Brazier32 | Standard gamble | Composite – additive | 0 = dead, 1 = full health | Yes |

| Burr45 | DCE | Composite – additive | 0 = worst state, 1 = best state | No |

| Chiou46 | VAS and standard gamble (asked to respond for children) | Power function used to convert VAS to standard gamble, all states valued using VAS | 0 = death, 1 = full health | Yes |

| Goodey47 | Two tasks: distribute 100 counters across the dimensions in proportion to their importance; VAS of the levels per dimension | Decomposed – additive | 0 = worst state, 100 = best state | No |

| Harwood48 | VAS | Composite – additive | 0 = worst state, 1 = best state | No |

| Hodder49 | VAS for dimensions relative to each other and VAS for the levels per dimension | Decomposed – additive | 0 = worst state, 100 = best state | No |

| Kind50 | VAS | Composite, one model for each classification system, merged to obtain overall weights | 0 = dead, 1 = full health | Yes |

| Lamers51 | VAS | Composite, one model for each classification system, merged to obtain overall weights | 0 = dead, 1 = full health | Yes |

| McKenna52 | TTO | Composite – additive | 0 = dead, 1 = full health | Yes |

| Peacock54 | TTO and VAS for the levels per dimension | Decomposed – multiplicative | 0 = dead, 1 = full health | Yes |

| Palmer55 | VAS and standard gamble | All states valued | 0 = dead, 1 = full health | Yes |

| Poissant56 | VAS | Unclear | 0 = worst state, 1 = best state | No |

| Ratcliffe33 | TTO, ranking and DCE | Composite – additive | 0 = dead, 1 = full health | Yes |

| Revicki21 | Standard gamble and VAS both for states and for the levels per dimension | Decomposed – multiplicative | 0 = worst state, 1 = best state | No |

| Revicki20 | Standard gamble and VAS both for states and for the levels per dimension | Decomposed – multiplicative | 0 = worst state, 1 = best state | No |

| Shaw57 | Two tasks: distribute 21 counters across the dimensions in proportion to their importance; VAS of the levels per dimension | Decomposed – additive | 0 = worst state, 100 = best state | No |

| Stevens27 | Standard gamble (asked to respond for children) | All states valued | 0 = dead, 1 = full health | Yes |

| Stolk22 | TTO | All states valued | 0 = dead, 1 = absence of condition | Yes |

| Sundaram59 | VAS and standard gamble | Decomposed – multiplicative | 0 = dead, 1 = full health | Yes |