Notes

Article history

The research reported in this issue of the journal was commissioned by the National Coordinating Centre for Research Methodology (NCCRM), and was formally transferred to the HTA programme in April 2007 under the newly established NIHR Methodology Panel. The HTA programme project number is 06/91/10. The contractual start date was in October 2005. The draft report began editorial review in March 2011 and was accepted for publication in August 2011. The commissioning brief was devised by the NCCRM who specified the research question and study design. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the referees for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Nicky Welton has received honoraria for teaching and consultancy relating to indirect and mixed treatment comparison meta-analyses from Abacus International (application areas unknown to Dr Welton), Pfizer (teaching only), United Biosource (rheumatoid arthritis) and the Canadian Agency for Drugs and Technology in Health (lung cancer and type 2 diabetes). In all cases, Dr Welton was blind to the included treatments. Hayley Jones has received honoraria for consultancy relating to mixed-treatment comparison meta-analysis from Novartis Pharma AG, but worked only with simulated data and was blind to any specific applications.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2012. This work was produced by Savović et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NETSCC, Health Technology Assessment, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK. This journal is a member of and subscribes to the principles of the Committee on Publication Ethics (COPE) (http://www.publicationethics.org/).


Chapter 1 Introduction

Although meta-analyses of randomised trials offer the best evidence for the evaluation of clinical interventions, they are not immune from bias.1 Bias may arise because of selective reporting of whole trials or of outcomes within trials,2–5 or because of study design characteristics that compromise internal validity.6 The design of randomised controlled trials (RCTs) should incorporate characteristics that avoid biases resulting from lack of comparability of the intervention and control groups. For example, concealment of randomised allocation avoids selection bias (differences in the probability of recruitment to the intervention and control groups based on participant characteristics at the time of recruitment), blinding of participants and trial personnel avoids performance bias (differences in aspects of patient management between intervention groups) and blinding of outcome assessors avoids detection bias (differences in outcome measurement).

Empirical evidence about the magnitude and relative importance of the influence of reported study design characteristics on trial results comes from ‘meta-epidemiological’ studies, in which collections of meta-analyses are used to study associations between trial characteristics and intervention effect estimates.7,8 There is evidence from such studies that inadequate allocation concealment and lack of blinding lead, on average, to exaggeration of intervention effect estimates.9–11 However, the evidence is not consistent: some studies did not find evidence of effects of these characteristics,12–14 whereas others suggested that other study design characteristics were important.9–11,15 Possible reasons for this lack of consistency include differential effects of study design characteristics across different clinical interventions or between objectively and subjectively assessed outcome measures,16 differences in definitions of characteristics and methods of assessment (some studies relied on assessments reported in contributing systematic reviews9), and chance.8 The extent of overlap between meta-analyses contributing to the different studies is unclear.

As the effects of study design characteristics tend to be estimated imprecisely within individual meta-analyses,8 large collections of meta-analyses are needed to estimate effects of these characteristics with precision, and to examine variability in these effects according to clinical area or type of outcome measure. It is, therefore, desirable to combine the collections of meta-analyses that were assembled in previous studies so that unified analyses can be conducted. However, these studies assembled their collections of meta-analyses independently, and so the extent of overlap between the meta-analyses and trials included in the different studies is unknown. Identification and resolution of such overlaps is difficult because of the multiplicities inherent in the data structure: trials report results on multiple outcomes and may be included in multiple meta-analyses, while systematic reviews may contain many different meta-analyses. Data are extracted from multiple publications that may describe trials, systematic reviews or both.

By combining data from 10 meta-epidemiological studies into a single database we identified and removed overlaps between trials and meta-analyses, and investigated the consistency of assessments of reported study design characteristics between the different contributing studies.17 Based on seven contributing studies in which both study design characteristics and results were recorded, we investigated the influence of different study design characteristics on both average intervention effects and between-trial heterogeneity, according to type of intervention and type of outcome measure.

Chapter 2 Development of a combined database for meta-epidemiological research

Methods

Data

Data were combined as part of the BRANDO (Bias in Randomized and Observational studies) project. The authors of 10 meta-epidemiological studies9,11–15,18–21 (Table 1) agreed to contribute their original data to a combined database that would be used to conduct combined analyses and to investigate reasons for differences between the results of the original studies. The combined database was created using Microsoft Access™ software (Microsoft Corporation, Redmond, WA, USA). Most authors supplied separate tables containing data on included trials and meta-analyses, allowing linkage between these two levels of data. When such information was not supplied, information from publications of relevant systematic reviews was used to link trials and meta-analyses.15 Citations (of publications from which the data were extracted) were linked to the data sets by matching on source systematic review, author name and publication year.12–15 The studies by Sampson et al.20 and McAuley et al.18, and the part of the study by Egger et al.9 that was based on published journal articles [referred to hereafter as Egger (journal)], did not include information on study design characteristics in the included trials, whereas the study by Royle and Milne19 did not include outcome data. These studies could not contribute to meta-epidemiological analyses, but were retained to contribute to other, descriptive analyses.

| Contributing meta-epidemiological study | Number of contributed meta-analyses (trials) | Clinical areas/types of interventions | Number of meta-analyses (trial results) in final database |
|---|---|---|---|
| Als-Nielsen et al.12,22 | 48 (523) | Various | 46 (506) |
| Balk et al.13 | 26 (276) | Circulatory, paediatrics, infection, surgery | 23 (251) |
| Contopoulos-Ioannidis et al.21 | 16 (133) | Mental health | 11 (94) |
| Egger et al.9 | 165 (1776)a | Various | 121 (1115)a |
| Kjaergard et al.15 | 14 (190) | Various | 8 (72) |
| McAuley et al.18 | 31 (454) | Various | 18 (205)b |
| Pildal et al.14 | 68 (474) | Various | 67 (460) |
| Royle and Milne19 | 29 (541) | Various | 28 (452)c |
| Sampson et al.20 | 24 (257) | Circulatory, digestive, mental health, pregnancy and childbirth | 14 (112)b |
| Schulz et al.11 | 33 (250) | Pregnancy and childbirth | 27 (210) |

Assigning identification numbers and creating a unified data set

To enable automated identification of meta-analyses and trials that occurred in more than one meta-epidemiological study, we labelled each trial and review entry in contributing data sets with a MEDLINE, EMBASE or ISI Web of Science unique publication identifier (ID), in that order of preference. We first searched MEDLINE (through PubMed™) using bibliographic information for every trial and review included in contributing data sets [e.g. full title, author(s), publication year, journal, volume, pagination] and retrieved its unique MEDLINE identifier (PMID). For references that were not located in MEDLINE, EMBASE was searched (through Ovid™) in the same way and EMBASE unique identifiers were retrieved. For references that were not located in EMBASE, the search was carried out in the ISI Web of Science database, but only a small number of the remaining non-indexed citations were identified in this way. Trial references not indexed in MEDLINE, EMBASE or ISI Web of Science were cross-checked for duplication with other references using the duplicate search facilities in Reference Manager™ bibliographic database software (Thomson Reuters, New York, NY, USA), followed by manual checking. All non-indexed, unique references were assigned a unique identification number derived from their ID number in the Reference Manager database.
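The identifier cascade amounts to a simple fallback. The Python sketch below assumes the database lookups have already been done and each reference carries whichever IDs were found. The `P_` (PubMed) and `R_` (Reference Manager) prefixes mirror the trial IDs shown in Table 4; the `E_` and `W_` prefixes and the function name `assign_uid` are hypothetical.

```python
from typing import Optional


def assign_uid(pmid: Optional[str], embase_id: Optional[str],
               wos_id: Optional[str], refman_id: Optional[int]) -> str:
    """Return one unique ID, preferring MEDLINE > EMBASE > Web of Science.

    Non-indexed references fall back to their Reference Manager record
    number (assigned only after duplicate checking). The E_ and W_
    prefixes are hypothetical; P_ and R_ follow the IDs in Table 4.
    """
    if pmid:
        return f"P_{pmid}"
    if embase_id:
        return f"E_{embase_id}"
    if wos_id:
        return f"W_{wos_id}"
    if refman_id is not None:
        return f"R_{refman_id}"
    raise ValueError("reference has no identifier")
```

For example, `assign_uid(None, None, None, 17134)` yields `R_17134`, the form used for non-indexed trials in Table 4.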

We defined in the master database the variables that we wished to combine from the contributing studies. An initial combined data set was then created by mapping each variable in each contributed data set to the predefined variable in the master database that it most closely matched. New variables were then added to the master database to capture contributed data that did not fit the a priori variable definitions.

Identification and removal of duplicate meta-analyses and trials

The unique identifiers of trials included in each meta-analysis in the combined data set were compared with those in every other meta-analysis (regardless of whether or not the meta-analyses assessed the same outcome). Using Intercooled Stata™ version 9 (StataCorp LP, College Station, TX, USA), meta-analyses that contained any trials in common with any other meta-analysis were grouped together. Not all meta-analyses within these sets contained overlapping trials: for example, two meta-analyses with no trials in common might each contain trials in common with a third meta-analysis and these three meta-analyses formed one set.
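Grouping meta-analyses that share trials, either directly or through a third meta-analysis, is a connected-components problem. The Python sketch below uses union-find over trial IDs. It is an illustration under our own assumptions (meta-analyses supplied as a hypothetical mapping from meta-analysis ID to a set of trial IDs), not the Stata code used in the study.

```python
from collections import defaultdict


def overlap_sets(meta_analyses: dict[str, set[str]]) -> list[set[str]]:
    """Group meta-analyses linked, directly or indirectly, by shared trials."""
    parent = {ma: ma for ma in meta_analyses}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Link every meta-analysis to the first one seen containing the same trial.
    first_seen: dict[str, str] = {}
    for ma, trials in meta_analyses.items():
        for trial in trials:
            if trial in first_seen:
                union(first_seen[trial], ma)
            else:
                first_seen[trial] = ma

    groups: dict[str, set[str]] = defaultdict(set)
    for ma in meta_analyses:
        groups[find(ma)].add(ma)
    # Singletons have no overlap and go straight to the final data set.
    return [g for g in groups.values() if len(g) > 1]
```

With meta-analyses A = {t1, t2}, B = {t3}, C = {t2, t3} and D = {t9}, A and B share no trials but each overlaps C, so the function returns the single set {A, B, C}, while D passes through unchanged.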

Meta-analyses that had no overlap with any other meta-analysis in the combined data set were transferred to the final data set. We then considered each set of overlapping meta-analyses in turn. Meta-analyses were excluded from each set until there was minimal overlap between the remaining meta-analyses, according to the following rules:

- Exclude meta-analyses from the Royle and Milne19 study, which did not include outcome data (entire meta-analysis removed regardless of the extent of overlap).
- Exclude meta-analyses for which information on study design characteristics was not available in preference to those for which such information was available. If neither meta-analysis in a duplicate pair contains information on study design characteristics, exclude meta-analyses from earlier studies first, in the order Egger (journal),9 Sampson et al.,20 McAuley et al.18 (entire meta-analysis removed regardless of the extent of overlap).
- Exclude meta-analyses from the portion of the study by Egger et al.9 that was based on assessment of study design characteristics in Cochrane reviews in preference to meta-analyses from studies that directly assessed these characteristics.
- Exclude meta-analyses with fewer assessed study design characteristics in preference to those with more assessed characteristics.
- Exclude meta-analyses from less recently published systematic reviews in preference to more recently published reviews.
- Exclude meta-analyses including fewer trials in preference to meta-analyses including more trials.
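Because the rules are ordered, choosing which of two overlapping meta-analyses to exclude is a lexicographic comparison: each rule either decides or hands over to the next. The Python sketch below assumes a hypothetical `MetaAnalysis` record whose fields (`study`, `n_design_chars`, `review_year`, `n_trials`) stand in for the database variables. The study labels are illustrative shorthand.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Studies without design data, excluded earliest-first (rule 2).
NO_DESIGN_DATA_ORDER = ["Egger (journal)", "Sampson", "McAuley"]


@dataclass
class MetaAnalysis:
    ma_id: str
    study: str           # contributing meta-epidemiological study (hypothetical labels)
    n_design_chars: int  # number of study design characteristics assessed
    review_year: int     # publication year of the source systematic review
    n_trials: int


# Each rule gives an "exclusion score": the higher score is excluded,
# and equal scores pass the decision to the next rule.
RULES: list[Callable[[MetaAnalysis], int]] = [
    lambda m: 1 if m.study == "Royle and Milne" else 0,  # 1: no outcome data
    lambda m: (len(NO_DESIGN_DATA_ORDER) - NO_DESIGN_DATA_ORDER.index(m.study)
               if m.study in NO_DESIGN_DATA_ORDER else 0),  # 2: no design data
    lambda m: 1 if m.study == "Egger (CDSR)" else 0,  # 3: review-based assessment
    lambda m: -m.n_design_chars,  # 4: fewer characteristics assessed
    lambda m: -m.review_year,     # 5: older review
    lambda m: -m.n_trials,        # 6: fewer trials
]


def choose_exclusion(a: MetaAnalysis, b: MetaAnalysis) -> Optional[MetaAnalysis]:
    """Apply the rules in priority order; None means no rule separates a and b."""
    for rule in RULES:
        score_a, score_b = rule(a), rule(b)
        if score_a != score_b:
            return a if score_a > score_b else b
    return None
```

With illustrative values in which the Contopoulos-Ioannidis meta-analysis 1005 assesses one more characteristic than the Kjaergard meta-analysis 2531, rules 1 to 3 tie and rule 4 selects 2531 for exclusion, which agrees with the worked example in the Results.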

These rules were applied in order of priority; that is, the next rule was applied only if the previous one could not yield a decision. We recorded reasons for all decisions to exclude meta-analyses. Pairs of meta-analyses with recorded study design characteristics and results that had only minimal overlap were retained at this stage. Overlap was considered minimal if the number of overlapping trials was no more than 10% of the sum of the numbers of overlapping trials and unique trials from both meta-analyses. We then removed the overlapping trials from one meta-analysis in each such pair: the one that (1) contained more trials or (2) was selected according to rules 3 to 5 above. If these rules did not yield a clear decision, overlapping trials were removed from one of the meta-analyses at random. At both stages, the choice of meta-analyses or trials to be removed was independent of the assessment of the study design characteristics, and disagreements in these assessments between studies were not considered. Similarly, the decision on removal was independent of the types of outcome measure assessed in the overlapping meta-analyses.
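The minimal-overlap criterion is a simple proportion. The Python sketch below encodes it with integer arithmetic, so that a pair at exactly 10% is not misclassified by floating-point rounding. The function name is hypothetical.

```python
def is_minimal_overlap(n_overlap: int, n_unique_1: int, n_unique_2: int) -> bool:
    """True if overlapping trials are at most 10% of all distinct trials in the pair.

    The denominator is overlapping trials plus the unique trials from both
    meta-analyses. Comparing 10 * overlap with the total avoids float rounding.
    """
    total = n_overlap + n_unique_1 + n_unique_2
    return total > 0 and 10 * n_overlap <= total
```

For meta-analyses 1005 and 2531 in Table 3 (eight overlapping trials and two unique trials in each), 8 of 12 trials (67%) overlap, so the overlap is far from minimal. Two overlapping trials among 20 in total would sit exactly on the 10% boundary and count as minimal.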

Some meta-analyses contained multiple results from the same trial, usually because they included multiarm trials in which the same control/comparison group was compared with two different treatment groups. Where appropriate, the two treatment groups were combined. In other cases one of the results was removed at random. We then checked the 2 × 2 results tables from trials in the deduplicated data set against the data in the source review publications. Inconsistencies were clarified with the contributors and corrected where necessary. We recoded the direction of outcome events where necessary so that the coded outcome for each trial corresponded to an adverse (undesirable) event.
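The two data-handling steps described here are sketched below in Python: pooling two treatment arms that share a control group, and recoding the event direction so that the coded event is adverse. The `Arm` type and function names are hypothetical. Events and non-events are denoted d and h, as in Table 4, so that n = d + h.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arm:
    d: int  # participants with the coded event
    h: int  # participants without the event

    @property
    def n(self) -> int:
        return self.d + self.h


def combine_arms(a: Arm, b: Arm) -> Arm:
    """Pool two treatment arms compared with the same control group."""
    return Arm(a.d + b.d, a.h + b.h)


def recode_to_adverse(arm: Arm, outcome_is_beneficial: bool) -> Arm:
    """Swap events and non-events when the recorded event was a desirable one."""
    return Arm(arm.h, arm.d) if outcome_is_beneficial else arm
```

Swapping d and h leaves n unchanged, so recoding never alters group totals, and the 2 × 2 checks against source publications remain valid after recoding.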

Assessing consistency of assessment of reported study design characteristics in different contributing studies

We used trials included in more than one meta-epidemiological study to assess the reliability of the assessment of methodological characteristics between studies. For this analysis we defined such trials as those with the same bibliographic reference occurring in more than one meta-epidemiological study, irrespective of numbers of participants or events, or the type of outcome measure. To assess inter-rater reliability we compared contributors’ assessments of three methodological characteristics: adequacy of the method for generating the random sequence used to allocate participants to treatment groups (sequence generation), adequacy of concealment of treatment allocation from participants and investigators at the point of enrolment into the trial (allocation concealment), and whether or not the trial was double blind (blinding). Kappa statistics were calculated for the assessment of sequence generation (inadequate/unclear compared with adequate), allocation concealment (inadequate/unclear compared with adequate) and blinding (not double blind/unclear compared with double blind) of duplicated trials for each pairwise comparison between contributing meta-epidemiological studies. For each of the three characteristics, only pairs of meta-epidemiological studies with at least 10 overlapping trials were analysed.
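Cohen’s kappa can be computed directly from the four cell counts reported in Table 5. The minimal Python sketch below assumes that n_ij counts trials rated i by study 1 and j by study 2, with 1 = adequate (or double blind); this reading is consistent with the Egger/Pildal comparison described in the Results. Kappa is undefined when expected agreement equals 1, that is, when both studies place every trial in the same category. This corresponds to the NA entries.

```python
from typing import Optional


def kappa_2x2(n00: int, n01: int, n10: int, n11: int) -> tuple[Optional[float], float]:
    """Return (Cohen's kappa, percentage agreement) for two binary assessments.

    n_ij = trials rated i by study 1 and j by study 2
    (0 = inadequate/unclear or not double blind/unclear, 1 = adequate or double blind).
    """
    n = n00 + n01 + n10 + n11
    p_observed = (n00 + n11) / n
    row0, row1 = n00 + n01, n10 + n11  # study 1 marginal totals
    col0, col1 = n00 + n10, n01 + n11  # study 2 marginal totals
    p_expected = (row0 * col0 + row1 * col1) / n ** 2
    kappa = None if p_expected == 1 else (p_observed - p_expected) / (1 - p_expected)
    return kappa, 100 * p_observed
```

For example, the Als-Nielsen/Pildal sequence generation counts (11, 0, 4, 7) give an expected agreement of 0.5, kappa ≈ 0.64 and 82% observed agreement, matching Table 5.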

Classification of interventions and outcome measures

We classified the type of experimental intervention, type of comparison intervention and type of outcome measure for each meta-analysis in the final data set. Interventions were classified using an expanded and modified version of the classification by Moja et al.23 Comparison interventions were further classified as inactive (e.g. placebo, standard care) or active. When it was not clear which intervention group should be considered experimental and which the comparison, at least two study collaborators made a consensus decision. When no decision could be reached, the meta-analysis was excluded from analyses of associations between study design characteristics and intervention effect estimates.

We classified outcome measures according to an expanded and modified version of the classification developed by Wood et al.16 Outcome measures were further grouped as all-cause mortality, other objectively assessed (including pregnancy outcomes and laboratory outcomes), objectively measured but potentially influenced by clinician/patient judgement (e.g. hospital admissions, total dropouts/withdrawals, caesarean section, operative/assisted delivery) and subjectively assessed. When a review reported that different methods of outcome assessment were used in different trials within the same meta-analysis, the meta-analysis was classified according to the most subjective method of outcome assessment. This was the case in 16 meta-analyses. For example, some trials of smoking cessation assessed outcomes using exhaled carbon monoxide or salivary cotinine (classified as objectively assessed), whereas others relied on patient self-report (classified as subjectively assessed). Classifications of interventions and outcome measures were checked by at least two of the collaborators who were clinicians by training (CG, LLG, BA-N or JP). Classifications of both outcome measures and interventions were based solely on the information provided in the review from which the meta-analysis was extracted; we did not retrieve further information on individual outcome measures from publications of included trials.
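The "most subjective method" rule is a maximum over an ordinal scale. The minimal Python sketch below assumes that the four groups are ranked in the order listed above, which matches the numbering in the Outcome group column of Table 6. The labels and function name are our own shorthand.

```python
# Ordinal ranking of the four outcome groups, least to most subjective.
# Labels are hypothetical shorthand; numbers follow the Outcome group column of Table 6.
OUTCOME_GROUP_RANK = {
    "all-cause mortality": 1,
    "other objective": 2,
    "objective but judgement-influenced": 3,
    "subjective": 4,
}


def classify_meta_analysis(trial_assessment_groups: list[str]) -> str:
    """Return the most subjective outcome group used by any trial in the meta-analysis."""
    if not trial_assessment_groups:
        raise ValueError("no outcome assessment methods recorded")
    return max(trial_assessment_groups, key=OUTCOME_GROUP_RANK.__getitem__)
```

In the smoking cessation example, a meta-analysis mixing biochemically verified trials ("other objective") with self-reported trials ("subjective") is classified as "subjective".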

Results

Database structure

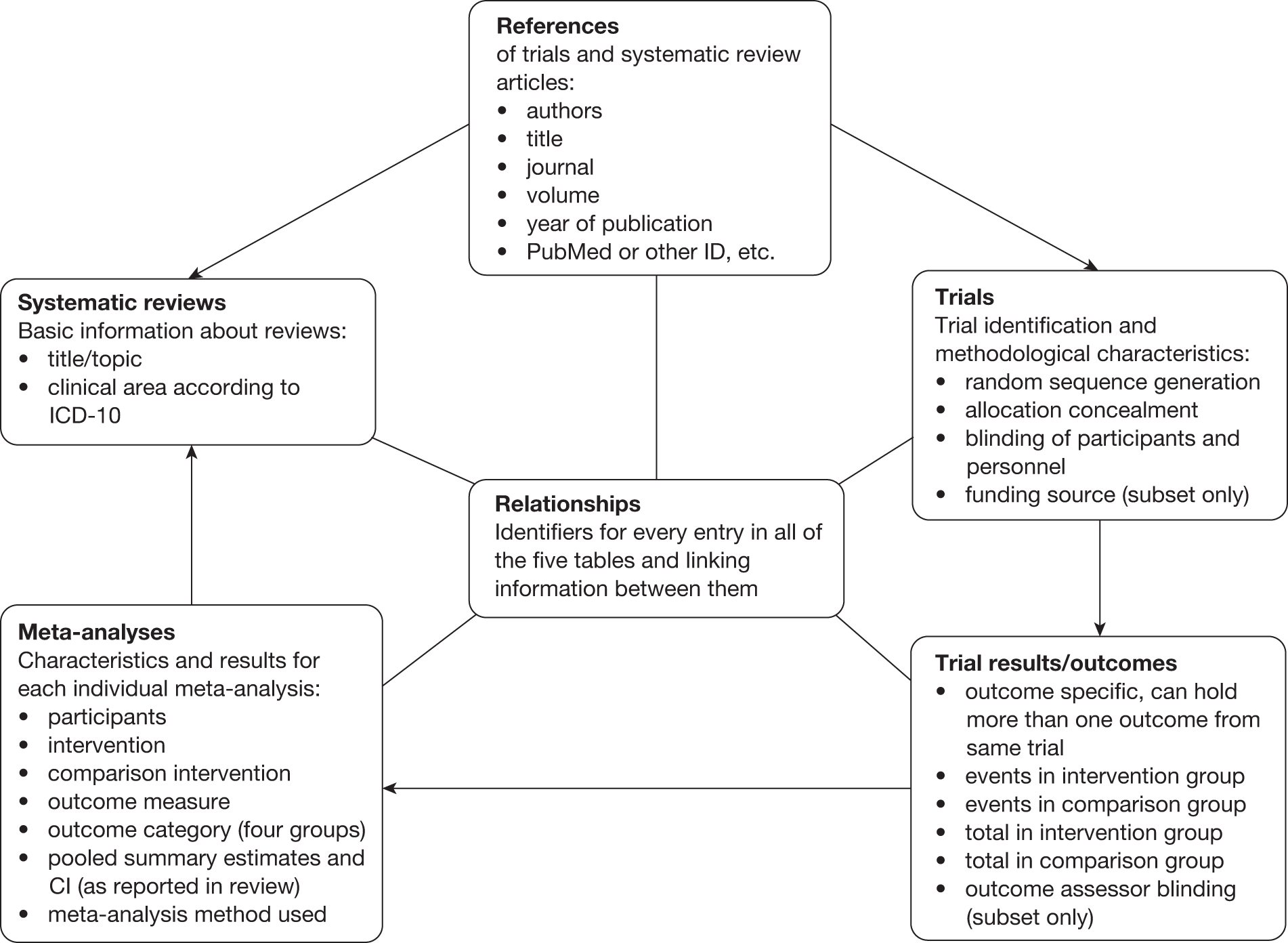

The database design allowed for multiple results from the same trial to be contained in different meta-analyses, multiple meta-analyses in systematic reviews, overlapping meta-analyses between systematic reviews and multiple references to the same trial or review. The final database structure consisted of six tables (Figure 1). The first five tables, References, Trials, Trial Results, Meta-analyses and Systematic Reviews, contained relevant study characteristics. The sixth table, Relationships, contained the identifiers necessary to link information between all the other tables. Each reference entry was linked to its related trial or systematic review, and further links were established between trials, trial results, meta-analyses and systematic reviews in the corresponding database tables. Figure 1 lists core variables included in each table.
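The Python sketch below shows one plausible way to lay out the six tables, using the built-in `sqlite3` module. Column names are hypothetical stand-ins for the core variables listed in Figure 1. The single link table is also an assumption about how Relationships was organised; the study itself used Microsoft Access.

```python
import sqlite3

# Hypothetical columns standing in for the core variables of Figure 1.
# "References" is quoted because REFERENCES is an SQL keyword.
SCHEMA = """
CREATE TABLE "References" (ref_id TEXT PRIMARY KEY, citation TEXT);
CREATE TABLE SystematicReviews (review_id TEXT PRIMARY KEY, title TEXT, year INTEGER);
CREATE TABLE MetaAnalyses (ma_id TEXT PRIMARY KEY, outcome TEXT, source_study TEXT);
CREATE TABLE Trials (trial_id TEXT PRIMARY KEY, sequence_generation TEXT,
                     allocation_concealment TEXT, double_blind TEXT);
CREATE TABLE TrialResults (result_id INTEGER PRIMARY KEY,
                           d1 INTEGER, h1 INTEGER, d0 INTEGER, h0 INTEGER);
-- Each row links one trial result to its trial, meta-analysis, review and reference.
CREATE TABLE Relationships (
    ref_id    TEXT REFERENCES "References"(ref_id),
    trial_id  TEXT REFERENCES Trials(trial_id),
    result_id INTEGER REFERENCES TrialResults(result_id),
    ma_id     TEXT REFERENCES MetaAnalyses(ma_id),
    review_id TEXT REFERENCES SystematicReviews(review_id)
);
"""


def build_db() -> sqlite3.Connection:
    """Create an in-memory database containing the six tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def trials_in_meta_analysis(conn: sqlite3.Connection, ma_id: str) -> list[str]:
    """Follow the Relationships table to list the trials in one meta-analysis."""
    rows = conn.execute(
        "SELECT DISTINCT trial_id FROM Relationships WHERE ma_id = ? ORDER BY trial_id",
        (ma_id,),
    )
    return [row[0] for row in rows]
```

Keeping all cross-links in one table means that a trial contributing results to several meta-analyses needs one row per link rather than duplicated trial records, which is the property the deduplication steps rely on.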

FIGURE 1.

Schematic representation of the final database structure. ICD-10, International Classification of Diseases, 10th revision.30

Removing overlaps

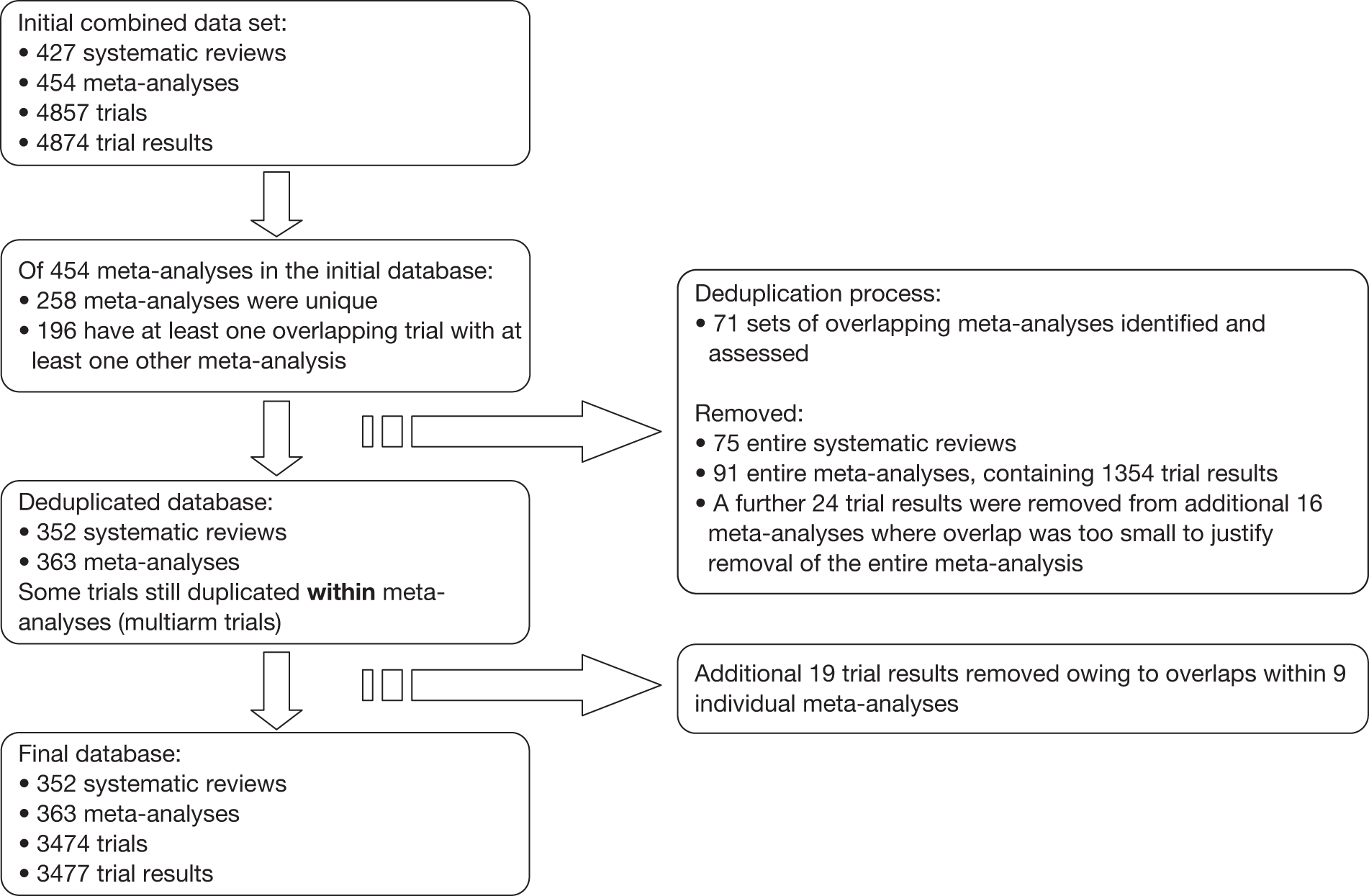

Figure 2 depicts the derivation of the final database through removal of overlaps between meta-analyses. The initial combined data set contained 427 systematic reviews with 454 meta-analyses, 4857 trials and 4874 trial results. Of the 454 meta-analyses, 196 contained at least one trial that overlapped with at least one other meta-analysis; these formed 71 sets of meta-analyses containing overlapping trials. The size of these sets varied from 2 to 17 meta-analyses (median 2). We removed 91 entire meta-analyses containing 1354 trial results during the deduplication process. Of the 1354 results removed, 844 were duplicates of trials retained in the database, whereas 510 were unique. Of those that were unique, 340 (67%) were from studies that did not record either methodological characteristics or results; thus, 170 potentially informative trials were removed during this process. A further 24 individual trial results were removed from 16 additional meta-analyses for which overlap was minimal (see Methods): one trial result from each of 10 meta-analyses, two from each of four meta-analyses and three from each of two meta-analyses. An additional 19 trial results were removed because of overlaps within nine of the meta-analyses (e.g. where there were two comparisons with a common control group). The final database contained 352 systematic reviews contributing 363 meta-analyses, 3474 trials and 3477 trial results.
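The accounting in this paragraph can be checked in a few lines. The Python snippet below uses only the counts stated above and confirms that they reconcile with the final totals.

```python
# Counts stated in the text.
initial_mas, initial_results = 454, 4874
whole_mas_removed, results_in_removed_mas = 91, 1354
duplicates_of_retained = 844
unique_from_uninformative_studies = 340

# Trial results dropped from 16 meta-analyses with minimal overlap:
# one from each of 10, two from each of 4, three from each of 2.
minimal_overlap_removed = 10 * 1 + 4 * 2 + 2 * 3
within_ma_removed = 19  # multiple results from one trial within a meta-analysis

final_mas = initial_mas - whole_mas_removed
final_results = (initial_results - results_in_removed_mas
                 - minimal_overlap_removed - within_ma_removed)
unique_removed = results_in_removed_mas - duplicates_of_retained
informative_removed = unique_removed - unique_from_uninformative_studies

print(final_mas, final_results, unique_removed, informative_removed)
# 363 3477 510 170
```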

FIGURE 2.

Flow diagram depicting the deduplication process.

Table 2 shows an example of a set of four meta-analyses containing overlapping trials. Three meta-analyses (1005, 2456 and 2531) were taken from the same Cochrane review,24 whereas 2097 was from a journal review25 on a similar topic. Table 3 summarises the overlap between the trials in each pair of meta-analyses. To remove overlaps, meta-analyses were dropped in the following order: (1) meta-analysis 2097, because it was contributed by the Egger (journal) study,9 which did not include study design characteristics; (2) meta-analysis 2456, because study design characteristics in this study were extracted from a Cochrane review rather than from primary publications. The overlap between the remaining two meta-analyses (1005, from the study by Contopoulos-Ioannidis et al.,21 and 2531, from the study by Kjaergard et al.15) was then examined in detail (Table 4). Each meta-analysis contained 10 trials, of which eight overlapped. Of the overlapping trials, seven had the same totals per group (but different event numbers), and one trial had different data for the control group. The two meta-analyses assessed different outcomes: meta-analysis 1005 assessed ‘No change in positive and negative syndrome scale (data greater than 20%)’, whereas meta-analysis 2531 assessed ‘dropouts’. This explains why there was no exact correspondence in the 2 × 2 data for any trial. Meta-analysis 1005 was retained because the contributing study provided information on one additional study design characteristic (sequence generation).

| Meta-analysis ID | PMID of review | Title of systematic review | Assessed outcome | Meta-epidemiological study |
|---|---|---|---|---|
| 1005 | 10796543 | Risperidone versus typical antipsychotic medication for schizophrenia (Cochrane review–CD000440)24 | No change in positive and negative syndrome scale | Contopoulos-Ioannidis et al.21 |
| 2097 | 9097896 | Risperidone in the treatment of schizophrenia: a meta-analysis of randomized controlled trials (journal review)25 | Clinical improvement | Egger (journal)9 |
| 2456 | 10796543 | Risperidone versus typical antipsychotic medication for schizophrenia (Cochrane review–CD000440)24 | Withdrawals/dropouts | Egger (CDSR)9 |
| 2531 | 10796543 | Risperidone versus typical antipsychotic medication for schizophrenia (Cochrane review–CD000440)24 | Withdrawals/dropouts | Kjaergard et al.15 |

| Meta-analysis 1: ID | Meta-analysis 1: contributing study | Meta-analysis 1: unique trials | Meta-analysis 2: ID | Meta-analysis 2: contributing study | Meta-analysis 2: unique trials | Overlaps: exact | Overlaps: same totals | Overlaps: different | Overlaps: within | Comment |
|---|---|---|---|---|---|---|---|---|---|---|
| 1005 | Contopoulos-Ioannidis et al.21 | 3 | 2097 | Egger (journal)9 | 4 | 3 | 2 | 2 | 0 | 2097 removed, no methodology data |
| 1005 | Contopoulos-Ioannidis et al.21 | 3 | 2456 | Egger (CDSR)9 | 5 | 0 | 7 | 0 | 0 | 2456 removed, data from review only |
| 1005 | Contopoulos-Ioannidis et al.21 | 2 | 2531 | Kjaergard et al.15 | 2 | 0 | 7 | 1 | 0 | Detailed assessment needed (see Table 4) |
| 2097 | Egger (journal)9 | 4 | 2456 | Egger (CDSR)9 | 5 | 0 | 5 | 2 | 0 | Both already removed |
| 2097 | Egger (journal)9 | 3 | 2531 | Kjaergard et al.15 | 2 | 0 | 6 | 2 | 0 | 2097 already removed |
| 2456 | Egger (CDSR)9 | 3 | 2531 | Kjaergard et al.15 | 1 | 8 | 0 | 1 | 0 | 2456 already removed |
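The overlap categories in Table 3 compare the 2 × 2 data recorded for the same trial in two meta-analyses. The minimal Python sketch below uses the d/h notation of Table 4 and assumes that "same totals" means equal group sizes (n1, n0) with differing event counts. The "within" category (duplicate results inside a single meta-analysis) is not covered, and the names are hypothetical.

```python
from typing import NamedTuple


class Result2x2(NamedTuple):
    """2 × 2 data for one trial result."""
    d1: int  # experimental arm, events
    h1: int  # experimental arm, non-events
    d0: int  # control arm, events
    h0: int  # control arm, non-events

    def totals(self) -> tuple[int, int]:
        """Group sizes (n1, n0)."""
        return self.d1 + self.h1, self.d0 + self.h0


def overlap_type(a: Result2x2, b: Result2x2) -> str:
    """Classify two records of the same trial from different meta-analyses."""
    if a == b:
        return "exact"
    if a.totals() == b.totals():
        return "same totals"
    return "different"
```

Applied to Table 4, trial P_1375801 (15/22 vs 17/22 in meta-analysis 1005; 1/22 vs 5/22 in 2531) is classified as "same totals", whereas P_8834417, whose control group has 20 participants in 1005 but 10 in 2531, is classified as "different".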

| Trial IDa | Meta-analysis 1005 (Contopoulos-Ioannidis21) – kept | Meta-analysis 2531 (Kjaergard15) – removed | Trial unique or overlaps? | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| d1 | h1 | n1 | d0 | h0 | n0 | d1 | h1 | n1 | d0 | h0 | n0 | ||

| R_17134 | 65 | 28 | 93 | 77 | 14 | 91 | Unique | ||||||

| R_17135 | 126 | 223 | 349 | 170 | 156 | 326 | Unique | ||||||

| P_1375801 | 15 | 7 | 22 | 17 | 5 | 22 | 1 | 21 | 22 | 5 | 17 | 22 | Overlap (equal totals) |

| P_1381102 | 26 | 27 | 53 | 31 | 22 | 53 | Unique | ||||||

| P_7508675 | 18 | 37 | 55 | 22 | 30 | 52 | 14 | 41 | 55 | 15 | 37 | 52 | Overlap (equal totals) |

| P_7514366 | 139 | 117 | 256 | 46 | 20 | 66 | 122 | 134 | 256 | 38 | 28 | 66 | Overlap (equal totals) |

| P_7542829 | 20 | 28 | 48 | 29 | 21 | 50 | 17 | 31 | 48 | 23 | 27 | 50 | Overlap (equal totals) |

| P_7545060 | 457 | 679 | 1136 | 97 | 129 | 226 | 280 | 856 | 1136 | 63 | 163 | 226 | Overlap (equal totals) |

| P_7683702 | 44 | 48 | 92 | 11 | 10 | 21 | 36 | 56 | 92 | 13 | 8 | 21 | Overlap (equal totals) |

| P_7691017 | 6 | 10 | 16 | 5 | 14 | 19 | 3 | 13 | 16 | 0 | 19 | 19 | Overlap (equal totals) |

| P_7694306 | 0 | 31 | 31 | 3 | 28 | 31 | Unique | ||||||

| P_8834417 | 4 | 17 | 21 | 8 | 12 | 20 | 4 | 17 | 21 | 6b | 4b | 10b | Overlap (different)b |

Reliability of assessment of reported study design characteristics

Eight of the participating meta-epidemiological studies had sufficient trials (at least 10) in common with another study to contribute to analyses of the reliability of assessment of reported study design characteristics (Table 5). Overall, there was good agreement between assessments carried out in the different contributing studies. For sequence generation (two comparisons), the percentages of trials for which the assessments agreed were 81% and 82%, and kappa statistics were 0.56 and 0.64. For allocation concealment (12 comparisons), percentage agreement varied between 52% and 100% and kappa statistics between 0.19 and 1.00 (median 0.58). The lowest kappa (0.19) was observed in the comparison between the Egger et al.9 and Pildal et al.14 studies: 10 of the 21 overlapping trials (48%) were assessed as having adequate allocation concealment in the Egger study (which used assessments reported by Cochrane review authors) but inadequate or unclear concealment in the Pildal study. Assessments were most reliable for blinding (nine comparisons): percentage agreement ranged from 80% to 100% (100% in four comparisons), and kappa statistics ranged from 0.55 to 1.00 (median 0.87).

| Study 1 | Study 2 | Kappa | Agreement (%) | No. of trials | n00a | n01a | n10a | n11a |
|---|---|---|---|---|---|---|---|---|
| Sequence generation (inadequate/unclear vs adequate) | | | | | | | | |
| Als-Nielsen et al.12,22 | Pildal et al.14 | 0.64 | 82 | 22 | 11 | 0 | 4 | 7 |
| Als-Nielsen et al.12,22 | Schulz et al.11 | 0.56 | 81 | 16 | 10 | 0 | 3 | 3 |
| Allocation concealment (inadequate/unclear vs adequate) | | | | | | | | |
| Als-Nielsen et al.12,22 | Balk et al.13 | 0.63 | 93 | 14 | 12 | 1 | 0 | 1 |
| Als-Nielsen et al.12,22 | Egger et al.9 | 0.78 | 96 | 94 | 82 | 2 | 2 | 8 |
| Als-Nielsen et al.12,22 | Kjaergard et al.15 | 0.48 | 86 | 56 | 43 | 6 | 2 | 5 |
| Als-Nielsen et al.12,22 | Pildal et al.14 | 0.58 | 86 | 22 | 16 | 2 | 1 | 3 |
| Als-Nielsen et al.12,22 | Royle and Milne19 | 0.63 | 85 | 54 | 36 | 8 | 0 | 10 |
| Als-Nielsen et al.12,22 | Schulz et al.11 | 0.76 | 94 | 16 | 13 | 1 | 0 | 2 |
| Egger et al.9 | Contopoulos-Ioannidis et al.21 | NA | 100 | 14 | 14 | 0 | 0 | 0 |
| Egger et al.9 | Pildal et al.14 | 0.19 | 52 | 21 | 8 | 0 | 10 | 3 |
| Kjaergard et al.15 | Balk et al.13 | 0.39 | 67 | 15 | 6 | 0 | 5 | 4 |
| Kjaergard et al.15 | Egger et al.9 | 0.58 | 90 | 63 | 52 | 0 | 6 | 5 |
| Kjaergard et al.15 | Contopoulos-Ioannidis et al.21 | 0.38 | 80 | 10 | 7 | 1 | 1 | 1 |
| Schulz et al.11 | Egger et al.9 | 1.00 | 100 | 20 | 14 | 0 | 0 | 6 |
| Blinding (not double blind/unclear vs double blind) | | | | | | | | |
| Als-Nielsen et al.12,22 | Egger et al.9 | 0.89 | 95 | 112 | 63 | 4 | 2 | 43 |
| Als-Nielsen et al.12,22 | Kjaergard et al.15 | 0.74 | 88 | 56 | 20 | 5 | 2 | 29 |
| Als-Nielsen et al.12,22 | Pildal et al.14 | 1.00 | 100 | 22 | 10 | 0 | 0 | 12 |
| Als-Nielsen et al.12,22 | Schulz et al.11 | NA | 100 | 15 | 15 | 0 | 0 | 0 |
| Egger et al.9 | Contopoulos-Ioannidis et al.21 | 1.00 | 100 | 17 | 10 | 0 | 0 | 7 |
| Egger et al.9 | Pildal et al.14 | 0.87 | 95 | 19 | 5 | 0 | 1 | 13 |
| Kjaergard et al.15 | Egger et al.9 | 0.69 | 84 | 77 | 32 | 6 | 6 | 33 |
| Kjaergard et al.15 | Contopoulos-Ioannidis et al.21 | 0.55 | 80 | 10 | 2 | 2 | 0 | 6 |
| Schulz et al.11 | Egger et al.9 | NA | 100 | 15 | 15 | 0 | 0 | 0 |

Interventions and outcome measures

Outcome measures were initially classified into one of 23 categories. Table 6 shows the numbers of meta-analyses with outcomes in each of these categories, together with the final category grouping. The most common outcome measure was all-cause mortality (64 meta-analyses, 18%), followed by clinician-assessed outcomes (51 meta-analyses, 14%).

| Type of outcome measure | No. of meta-analyses | Outcome groupa |
|---|---|---|
| Adverse events (as adverse effects of the treatment) | 6 | 4 |
| All-cause mortality | 64 | 1 |
| Cause-specific mortality | 2 | 4 |
| Clinician-assessed outcomes (e.g. body mass index, blood pressure, lung function, infant weight) | 51 | Mostly 4 |
| Composite end point including end points other than mortality/major morbidity | 0 | NA |
| Composite end point including mortality and/or major morbidity | 9 | 2, 3 or 4 |
| Global improvement | 4 | 4 |
| Health perceptions (person’s own view of general health) | 0 | 4 |
| Laboratory-reported outcomes (e.g. blood components, tissue analysis, urinalysis) | 29 | Mostly 2 (two 4) |
| Lifestyle outcomes (including diet, exercise, smoking) | 12 | Mostly 4 (one 2) |
| Major morbidity event (including myocardial infarction, stroke, haemorrhage) | 6 | 4 |
| Mental health outcomes (including cognitive function, depression and anxiety scores) | 16 | 4 |
| Other outcomes (not classified elsewhere) | 7 | 2, 3 or 4 |
| Pain (extent of pain a patient is experiencing) | 13 | 4 |
| Perinatal outcomes | 32 | 2 or 3 |
| Pregnancy outcomes | 11 | 2 |
| Quality of life (including ability to perform physical, daily and social activities) | 0 | 4 |
| Radiological outcomes (including radiograph abnormalities, ultrasound, magnetic resonance imaging results) | 12 | 4 |
| Resource use (including cost, hospital stay duration, number of procedures) | 4 | 3 |
| Satisfaction with care (including patient views and clinician assessments) | 0 | 4 |
| Surgical and device-related outcomes | 16 | Mostly 4 (two 3) |
| Symptoms or signs of illness or condition | 35 | 4 |
| Withdrawals/dropouts/compliance | 16 | 3 |

Experimental and comparison interventions were classified as shown in Table 7. Most included meta-analyses (242 meta-analyses, 67%) assessed a pharmacological experimental intervention. The most common comparison intervention was placebo or no treatment (225 meta-analyses, 62%), followed by a pharmacological comparison (54 meta-analyses, 15%). Forty-eight meta-analyses (397 trial results) in which it was unclear which intervention was experimental were excluded from meta-epidemiological analyses.

| Intervention categories | Meta-analyses per categorya: experimental | Meta-analyses per categorya: comparison |
|---|---|---|
| Experimental and comparison interventions | | |
| Diagnostics and screening | 7 | 4 |
| Interventions applying energy source for therapeutic purposes (e.g. ECT/radiotherapy, light therapy, etc.) | 5 | 1 |
| Lifestyle interventions (diet change, exercise, smoking cessation, etc.) | 5 | 1 |
| Medical devices | 15 | 11 |
| Pharmacological | 242 | 54 |
| Physical and manipulative therapy (physiotherapy, chiropractics, etc.) | 2 | 0 |
| Psychosocial (including psychotherapy, counselling, behavioural, advice, guidelines, self-help, etc.) | 16 | 2 |
| Resources and infrastructure/provision of care | 9 | 0 |
| Specialist nutritional interventions and fluid delivery (e.g. parenteral nutrition) | 8 | 6 |
| Surgical interventions or procedures | 26 | 19 |
| Therapies of biological origin (excluding vaccines, including in vitro fertilisation) | 2 | 1 |
| Vaccines | 4 | 1 |
| Other | 7 | 3 |
| Dual intervention (a complex intervention with components that fall in two different categories) | 12 | 0 |
| Multiple intervention (a complex intervention with components that fall in more than two different categories) | 3 | 2 |
| Comparison interventions only | | |
| No treatment | | 58 |
| Placebo | | 99 |
| Placebo or no treatment combined at meta-analysis level | | 68 |
| Standard/usual care | | 11 |
| Standard care and/or placebo and/or no treatment combined at meta-analysis level | | 8 |

Description of the final database

The final data set contained information from 363 meta-analyses containing 3474 trials. Of these, 282 meta-analyses (2572 trials) had 2 × 2 results data available. A total of 186 meta-analyses (1236 trials) had information on randomisation sequence generation, 228 (1840) on allocation concealment and 234 (1970) on blinding. In total, 175 meta-analyses (1171 trials) had both 2 × 2 results data and information on all three of these study design characteristics.

Chapter 3 Influence of reported study design characteristics on average intervention effects and between-trial heterogeneity

Introduction

In this chapter we report the results of analyses of the influence of three reported study design characteristics on both average intervention effects and between-trial heterogeneity: inadequate or unclear (compared with adequate) random sequence generation; inadequate or unclear (compared with adequate) allocation concealment; and absent or unclear double blinding (compared with double blinding). We examine whether these influences vary with clinical area, type of intervention, type of comparison and type of outcome measure; examine the effects of combinations of study design characteristics; estimate adjusted effects using multivariable models; compare results with those derived using the (meta-meta-analytic) methods of previous studies; and explore the implications of these findings for downweighting, in future meta-analyses, trials whose study design characteristics are associated with bias.

Methods

For this part of the study we removed, from the database described in Chapter 2, data from three meta-epidemiological studies18–20 and one part of the Egger et al. study9 that did not collect data on study design characteristics (87 meta-analyses with 1093 trials). We also removed 36 meta-analyses (300 trials) in which it was not possible to classify one intervention as experimental and the other as control, one meta-analysis (four trials) that had a continuous outcome, 45 trials in which outcome data were missing and 50 trials in which either no or all participants experienced the outcome event.

Categories of intervention (see Table 7) containing fewer than 10 meta-analyses or 50 trials were combined into four types of intervention: pharmacological, surgical, psychosocial and behavioural, and all other interventions. Comparison interventions were classified as inactive (e.g. placebo, no intervention, standard care) or active. Outcome measures were grouped as all-cause mortality, other objectively assessed (including pregnancy outcomes and laboratory outcomes), objectively measured but potentially influenced by clinician/patient judgement (e.g. hospital admissions, total dropouts/withdrawals, caesarean section, operative/assisted delivery) and subjectively assessed (e.g. clinician-assessed outcomes, symptoms and symptom scores, pain, mental health outcomes, cause-specific mortality). Too few meta-analyses had outcome measures categorised as ‘other objectively assessed’ or ‘objectively measured but potentially influenced by clinician/patient judgement’ to allow separate analyses, so these two categories were grouped together.
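The outcome grouping just described can be sketched as a simple lookup. In this minimal Python illustration, each outcome type is mapped to one of the three analysis groups, and the meta-analysis counts from Table 10 are used as input. The label strings and the function name `tally_groups` are hypothetical. Skipping meta-analyses with a mixture of objective and subjective outcomes is also an assumption: the text does not say how those were grouped.

```python
from collections import Counter

# Hypothetical outcome-type labels mapped to the three analysis groups in the text.
OUTCOME_GROUP = {
    "all-cause mortality": "mortality",
    "other objectively assessed": "objective",
    "objectively measured, influenced by judgement": "objective",  # merged: too few alone
    "subjectively assessed": "subjective",
}


def tally_groups(counts_by_outcome):
    """Sum meta-analysis counts per analysis group; labels without a group are skipped."""
    totals = Counter()
    for label, n in counts_by_outcome.items():
        group = OUTCOME_GROUP.get(label)
        if group is not None:
            totals[group] += n
    return dict(totals)


# Meta-analyses by type of outcome measure, as reported in Table 10.
table10 = {
    "all-cause mortality": 44,
    "other objectively assessed": 36,
    "objectively measured, influenced by judgement": 42,
    "subjectively assessed": 98,
    "mixture of objective and subjective": 14,  # assumption: not assigned to a group here
}
print(tally_groups(table10))  # {'mortality': 44, 'objective': 78, 'subjective': 98}
```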

Statistical methods

Intervention effects were modelled as log-odds ratios, and outcomes were recoded where necessary so that odds ratios (ORs) < 1 corresponded to beneficial intervention effects. We fitted Bayesian hierarchical bias models using the formulation previously described as ‘Model 3’ by Welton et al.26 We assumed that the observed number of events in each arm of each trial has a binomial distribution, with the underlying log-odds ratio in trial $i$ of meta-analysis $m$ ($\mathrm{LOR}_{im}$) equal to

$$\mathrm{LOR}_{im} = \delta_{im} + \beta_{im} X_{im},$$

where $X_{im} = 1$ for trials with, and $X_{im} = 0$ for trials without, the reported characteristic. The parameter $\delta_{im}$ represents the intervention effect in trial $i$ of meta-analysis $m$. These effects are assumed to be randomly distributed around a meta-analysis-specific mean $d_m$ with variance $\tau_m^2$ within each meta-analysis:

$$\delta_{im} \sim N(d_m, \tau_m^2).$$

The parameter $\beta_{im}$ quantifies the potential bias associated with the study design characteristic of interest. We assumed the following model structure:

$$\beta_{im} \sim N(b_m, \kappa^2)$$

for trials $i$ with the reported characteristic in each meta-analysis $m$, and

$$b_m \sim N(b_0, \varphi^2)$$

across meta-analyses.
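To make the structure of this bias model concrete, the following sketch draws the true log-odds ratios for one meta-analysis under the three distributional assumptions above. It is an illustration only. It omits the binomial event counts and baseline risks. The function name, its argument names and the per-trial probability of having the characteristic (`p_biased`) are hypothetical and are not part of the fitted WinBUGS model.

```python
import random


def simulate_true_lors(n_trials, d_m, tau_m, b0, kappa, phi, p_biased, rng):
    """Draw true log-odds ratios for one meta-analysis under the hierarchical bias model.

    LOR_im = delta_im + beta_im * X_im, with delta_im ~ N(d_m, tau_m^2),
    beta_im ~ N(b_m, kappa^2) and b_m ~ N(b0, phi^2). Event counts are not simulated.
    """
    b_m = rng.gauss(b0, phi)  # mean bias shared by trials in this meta-analysis
    trials = []
    for _ in range(n_trials):
        x = 1 if rng.random() < p_biased else 0  # X_im: trial has the reported characteristic
        delta = rng.gauss(d_m, tau_m)  # trial-specific intervention effect
        beta = rng.gauss(b_m, kappa)  # trial-specific bias (contributes only when x == 1)
        trials.append({"x": x, "delta": delta, "lor": delta + beta * x})
    return trials


rng = random.Random(2012)
for trial in simulate_true_lors(5, d_m=-0.2, tau_m=0.1, b0=-0.12, kappa=0.15,
                                phi=0.05, p_biased=0.6, rng=rng):
    print(trial)
```

Setting `kappa` and `phi` to zero gives every trial with the characteristic exactly the same bias `b0`. This is a quick way to see what the two variance components add.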

This allows for three effects of bias. First, mean intervention effects may differ between trials with and without the reported study design characteristic. Estimated mean differences ($b_0$) were exponentiated and are thus reported as ratios of odds ratios (RORs). Second, variation in bias between trials within meta-analyses is quantified by the standard deviation κ; $\kappa^2$ corresponds to the average increase in between-trial heterogeneity in trials with a specified study design characteristic. Third, variation in mean bias between meta-analyses is quantified by the between-meta-analysis standard deviation φ. We derived 95% credible intervals (CrIs) for each parameter. In presenting results from our primary analyses, we also display the posterior variance of the parameter $b_0$, denoted by $V_0$. Use of this value in downweighting results from trials at high risk of bias in future meta-analyses is discussed below. For the results of our secondary analyses we do not present $V_0$ explicitly, but the posterior uncertainty about $b_0$ is reflected in the CrI for the ROR.

Data management and cleaning prior to analysis, and graphical displays of results, were carried out using Stata Version 11. Bias models were then fitted using WinBUGS Version 1.4 (MRC Biostatistics Unit, Cambridge, UK),27 assuming vague prior distributions for unknown parameters. Preliminary results indicated that the estimated variance components κ and φ were sensitive to the prior distributions assumed for these parameters. Sensitivity to priors for variance parameters is a well-known problem in Bayesian hierarchical modelling.28,29 This motivated a simulation study in which the performance of a range of prior distributions for variance components was compared, assuming typical values from the BRANDO database (Harris et al., Health Protection Agency, 2010, personal communication). The prior found to give the best overall performance was a modified Inverse Gamma(0.001, 0.001) prior with increased weight on small values. This prior was therefore assumed for each variance parameter in all analyses. For location parameters (overall mean bias, baseline response rates, treatment effects), Normal(0, 1000) priors were assumed.

Meta-analyses can inform estimates of the effect of a study design characteristic only if they contain at least one trial with, and one trial without, the characteristic. We refer to such meta-analyses, and to the trials they contain, as informative. Both $\tau_m^2$ and κ cannot be estimated in a meta-analysis that has fewer than two trials with, or fewer than two trials without, the study design characteristic of interest. Such meta-analyses were therefore prevented from contributing to the estimation of κ by use of the ‘cut’ function in WinBUGS.
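The two eligibility rules above can be stated as a short check on the trial-level indicators $X_{im}$ of one meta-analysis. This is a minimal sketch. The function name and the dictionary keys are hypothetical, and in the actual analysis the second rule was applied through the WinBUGS ‘cut’ function rather than by a separate filter.

```python
def informativeness(flags):
    """flags: one 0/1 value of X_im per trial in a meta-analysis (1 = has the characteristic)."""
    n_with = sum(flags)
    n_without = len(flags) - n_with
    return {
        # At least one trial with and one without: can inform the mean bias.
        "informative": n_with >= 1 and n_without >= 1,
        # At least two of each: tau_m^2 and kappa can be separated, so the
        # meta-analysis may contribute to the estimation of kappa.
        "contributes_to_kappa": n_with >= 2 and n_without >= 2,
    }


print(informativeness([1, 0, 0, 0]))  # {'informative': True, 'contributes_to_kappa': False}
```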

We first conducted univariable analyses for each study design characteristic separately, using all informative meta-analyses for that characteristic. The primary analysis used dichotomised variables for each characteristic: inadequate/unclear compared with adequate for sequence generation and allocation concealment, and not double blind/unclear compared with double blind. All such analyses were repeated separately for different types of outcome measure (all-cause mortality, other objectively assessed and subjectively assessed). Evidence that the effects of bias differed according to the type of outcome was quantified using posterior probabilities that effects for subjective or other objective outcomes were larger than those for mortality outcomes, for example $\Pr(\kappa_{\text{subjective}} > \kappa_{\text{mortality}})$. The main univariable analyses were repeated using the meta-meta-analytic approach of previous analyses,8,16 allowing for random effects both within and between meta-analyses.
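Posterior probabilities such as $\Pr(\kappa_{\text{subjective}} > \kappa_{\text{mortality}})$ are typically estimated from MCMC output as the proportion of draws in which the first quantity exceeds the second. The sketch below shows this calculation. It assumes equally long lists of draws: either paired by iteration from a joint fit, or taken from two independent fits, where pairing by index is harmless. The draws shown are toy numbers, not BRANDO results, and the function name is hypothetical.

```python
def posterior_prob_greater(draws_a, draws_b):
    """Monte Carlo estimate of Pr(a > b) from equally long lists of posterior draws."""
    if len(draws_a) != len(draws_b) or not draws_a:
        raise ValueError("need two non-empty lists of draws of equal length")
    return sum(a > b for a, b in zip(draws_a, draws_b)) / len(draws_a)


# Toy draws of kappa for subjective and mortality outcomes (illustrative only).
kappa_subjective = [0.30, 0.10, 0.25, 0.40]
kappa_mortality = [0.20, 0.20, 0.20, 0.20]
print(posterior_prob_greater(kappa_subjective, kappa_mortality))  # 0.75
```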

Further univariable and multivariable analyses were conducted using two data subsets: (1) meta-analyses of trials with information on all three study design characteristics and (2) meta-analyses of trials with information on both allocation concealment and blinding. Subset 2 was used because many trials did not have a recorded bias judgement on sequence generation (see Table 11). We conducted univariable analyses of three composite dichotomous variables: risk of bias due to inadequate/unclear allocation concealment or lack of blinding (using subset 2); any risk of selection bias (inadequate or unclear sequence generation or allocation concealment); and any risk of bias (inadequate or unclear sequence generation or allocation concealment, or not double blind). Analyses of the latter two composite variables used subset 1.
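The three composite variables are simple logical combinations of the individual judgements. The following sketch encodes them and, as a check, applies the two subset-1 composites to the combinations and counts in Table 13 (all outcomes). The function names are hypothetical. The concealment-or-blinding composite was analysed in subset 2 (1793 trials), so its count on these 1171 trials is not computed here.

```python
def composite_flags(seq_adequate, conceal_adequate, double_blind):
    """Return True for each composite that flags the trial.

    Inputs are True for adequate (or double blind) and False for inadequate,
    unclear or not double blind.
    """
    return {
        "concealment_or_blinding": not conceal_adequate or not double_blind,
        "any_selection_bias": not seq_adequate or not conceal_adequate,
        "any_bias": not seq_adequate or not conceal_adequate or not double_blind,
    }


# (sequence adequate, concealment adequate, double blind, number of trials) from Table 13
TABLE13 = [
    (True, True, True, 60), (True, True, False, 31),
    (True, False, True, 67), (True, False, False, 115),
    (False, True, True, 95), (False, True, False, 33),
    (False, False, True, 317), (False, False, False, 453),
]


def count_flagged(key):
    """Number of Table 13 trials flagged by the named composite."""
    return sum(n for s, c, b, n in TABLE13 if composite_flags(s, c, b)[key])


print(count_flagged("any_selection_bias"), count_flagged("any_bias"))  # 1080 1111
```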

Multivariable analyses were based on an extension of ‘Model 3’ of Welton et al.26 We assumed distinct variance components associated with each study design characteristic. In the main multivariable analyses, we assumed no interactions between the different characteristics. In an additional analysis on subset 2 we allowed for interactions between inadequate allocation concealment and lack of double blinding. Interaction terms were assumed to have the same hierarchical structure as the main effects, again with distinct variance components. The implied average bias in studies with both characteristics was estimated (on the log-odds scale) as the sum of the fitted coefficients representing the average effect of each of the two characteristics and the fitted coefficient representing the average interaction term. A 95% CrI for this sum, accounting for correlations between the three coefficients, was calculated using WinBUGS. These measures were exponentiated to express the implied average bias as an ROR. For comparison, we also calculated the corresponding implied average bias for the model without interaction terms for subset 2. We repeated all univariable analyses on subsets 1 and 2 to allow comparisons with the results of multivariable analyses.
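Correlations between coefficients can be accounted for by summing them within each MCMC draw before summarising, instead of combining separate marginal intervals. The sketch below shows this approach. It is an assumption about how such a calculation can be done, not a transcript of the WinBUGS code. The function name and argument names are hypothetical, and the usage example uses toy draws.

```python
import math
import statistics


def implied_ror(draws_ac, draws_blind, draws_int=None):
    """Posterior median and 95% CrI of exp(b_AC + b_blind + b_int), computed draw by draw.

    Summing within each iteration keeps the posterior correlation between the
    coefficients. Pass draws_int=None for the model without interaction terms.
    """
    if draws_int is None:
        draws_int = [0.0] * len(draws_ac)
    rors = [math.exp(a + b + c) for a, b, c in zip(draws_ac, draws_blind, draws_int)]
    cuts = statistics.quantiles(rors, n=40, method="inclusive")  # 2.5%, 5%, ..., 97.5%
    return statistics.median(rors), cuts[0], cuts[-1]


# Toy, perfectly negatively correlated draws: every per-draw sum is -0.2,
# so the interval collapses to exp(-0.2) even though each coefficient varies.
print([round(v, 3) for v in implied_ror([-0.2, 0.0], [0.0, -0.2])])  # [0.819, 0.819, 0.819]
```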

In additional analyses we estimated separate effects of ‘inadequate’ and ‘unclear’ for each study design characteristic, by fitting models in which these two categories had different average bias (compared with ‘adequate’ trials) and distinct variance components (κ and φ). It was necessary to exclude some of the contributing meta-epidemiological studies from these analyses because their original data coding had not separated unclear from inadequate (see Tables 9 and 11). We conducted separate analyses according to clinical area and type of intervention, and repeated analyses in meta-analyses derived from the subset of contributing studies not included in the study of Wood et al.16 For meta-analyses comparing two active interventions it is not possible to estimate RORs quantifying average bias, or between-meta-analysis heterogeneity in average bias, because there is no clear direction in which bias operates. Instead, we fitted restricted models that estimate only the increase in between-trial (within-meta-analysis) heterogeneity in trials with, compared with those without, specified study design characteristics, among meta-analyses containing at least two trials with and two trials without the characteristic of interest.

Welton et al.26 showed how results from hierarchical bias models can be used to formulate a prior distribution for the bias associated with a study design characteristic in a new fixed-effect meta-analysis that is assumed to be statistically exchangeable with the meta-analyses used to estimate the model parameters. This approach was based on a normal approximation to the distribution of the observed intervention effect $y_i$ in each new trial $i$, which is assumed to have known sampling variance $\sigma_i^2$. If $b_0$, κ, φ and $V_0$ are treated as known, applying this empirically based prior distribution has two effects on results from trials with the reported characteristic. They are corrected for the estimated average bias across meta-analyses (the estimate of $b_0$), and their variance is increased from $\sigma_i^2$ to $\sigma_i^2 + \kappa^2 + \varphi^2 + V_0$. The minimum variance of the estimated intervention effect that can in theory be achieved by an infinitely large trial with the reported characteristic is therefore $\kappa^2 + \varphi^2 + V_0$.

It is of interest to quantify the likely magnitude of increases in variance that would result from applying this bias adjustment to future trial results. To do so, for each trial in the BRANDO data set we calculated the observed log-odds ratio $y_i$ and the Woolf estimate of its sampling variance, $\sigma_i^2$. We assumed that these represented a typical range of sampling variances that may be observed in future trials. For each high/unclear-risk trial result in turn, we calculated the percentage increase in variance that would result from bias adjustment at the trial level, that is:

$$100 \times \frac{\kappa^2 + \varphi^2 + V_0}{\sigma_i^2}.$$

The calculations used the posterior median values of κ and φ. We summarised these percentage increases in variance by the median and interquartile range (IQR) across trials for each study design characteristic.
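The trial-level calculations in the preceding paragraphs can be written out directly. The sketch below follows them: the Woolf variance of a log-odds ratio, the bias correction and variance inflation for a high/unclear-risk trial, the percentage increase in variance, and a median/IQR summary. The function names are hypothetical. The Woolf formula assumes no zero cells, so trials with a zero cell would need a continuity correction, which is not shown. Parameter values in the usage lines are purely illustrative, not estimates from this report.

```python
import statistics


def woolf_variance(events_exp, nonevents_exp, events_ctl, nonevents_ctl):
    """Woolf estimate of the variance of a log odds ratio (requires no zero cells)."""
    return 1 / events_exp + 1 / nonevents_exp + 1 / events_ctl + 1 / nonevents_ctl


def bias_adjust_trial(y, var, b0, kappa, phi, v0):
    """Correct a high/unclear-risk trial's log-OR for average bias and inflate its variance."""
    return y - b0, var + kappa**2 + phi**2 + v0


def pct_variance_increase(var, kappa, phi, v0):
    """Percentage increase in a trial's variance caused by the bias adjustment."""
    return 100 * (kappa**2 + phi**2 + v0) / var


def median_iqr(values):
    """Median, lower quartile and upper quartile of a list of values."""
    q1, med, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return med, q1, q3


# Illustrative 2x2 tables (events/non-events, experimental then control) and parameters.
variances = [woolf_variance(*t) for t in [(10, 40, 20, 30), (5, 45, 9, 41), (50, 150, 70, 130)]]
increases = [pct_variance_increase(v, kappa=0.1, phi=0.05, v0=0.0025) for v in variances]
print(median_iqr(increases))
```

As the formula implies, the percentage increase is largest for the most precise trials (smallest $\sigma_i^2$). This is why a typical range of trial variances matters for the summary.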

Formulae from Welton et al.26 also allow us to calculate a bias-adjusted summary mean and variance of the intervention effect in a new fixed-effect meta-analysis. Using this approach, results from trials at low risk of bias are assigned the usual inverse-variance weight ($1/\sigma_i^2$). For trials at high/unclear risk of bias, the bias-corrected effect estimate is used and downweighted according to a function of $\sigma_i^2$, $\kappa^2$, $\varphi^2$ and $V_0$ (interested readers are referred to page 123 of Welton et al.26). The resulting bias-adjusted estimate of the summary effect size will therefore have a larger variance than the standard unadjusted meta-analytic summary. The magnitude of this increase depends on three features of the new meta-analysis: the number of trials, the variances of their intervention effect estimates and the number of trials classified as at high/unclear risk of bias. We assumed that the meta-analyses in the BRANDO database are typical in these respects, and calculated the percentage increase in variance of the summary log-odds ratio due to bias adjustment for each meta-analysis in turn. These increases were summarised by the median and IQR across meta-analyses for each study design characteristic.
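A bias-adjusted fixed-effect summary can be sketched from the model assumptions alone. The mean bias of the new meta-analysis is shared by all of its high/unclear-risk trials. In generalised least squares terms, this is equivalent to pooling the bias-corrected high-risk results with weights $1/(\sigma_i^2 + \kappa^2)$, then adding a common variance $\varphi^2 + V_0$ to that pooled result before combining it with the low-risk trials. This derivation is presented here as an assumption-based illustration. It is not reproduced from page 123 of Welton et al.26 and should be checked against their formulae before use. The function names are hypothetical.

```python
def bias_adjusted_fixed_effect(trials, b0, kappa, phi, v0):
    """Bias-adjusted fixed-effect summary of log-ORs under the normal approximation.

    trials: list of (y, var, high_risk). Low-risk trials get weight 1/var. High-risk
    trials are corrected by b0 and pooled with weights 1/(var + kappa**2). The pooled
    high-risk result carries an extra shared variance phi**2 + v0 that does not shrink
    as more high-risk trials are added. Returns (summary log-OR, its variance).
    """
    weight = sum(1 / v for _, v, high in trials if not high)
    weighted_sum = sum(y / v for y, v, high in trials if not high)
    high_risk = [(y - b0, v + kappa**2) for y, v, high in trials if high]
    if high_risk:
        s = sum(1 / v for _, v in high_risk)
        pooled = sum(y / v for y, v in high_risk) / s
        w_high = 1 / (1 / s + phi**2 + v0)
        weight += w_high
        weighted_sum += w_high * pooled
    return weighted_sum / weight, 1 / weight


def pct_summary_variance_increase(trials, b0, kappa, phi, v0):
    """Percentage increase in summary variance relative to an unadjusted analysis."""
    unadjusted_var = 1 / sum(1 / v for _, v, _ in trials)
    _, adjusted_var = bias_adjusted_fixed_effect(trials, b0, kappa, phi, v0)
    return 100 * (adjusted_var / unadjusted_var - 1)


# Illustrative meta-analysis: one low-risk and two high/unclear-risk trials.
example = [(-0.4, 0.1, False), (-0.6, 0.2, True), (-0.2, 0.05, True)]
print(pct_summary_variance_increase(example, b0=-0.12, kappa=0.1, phi=0.05, v0=0.0025))
```

With a single high-risk trial, this reduces to the trial-level variance $\sigma_i^2 + \kappa^2 + \varphi^2 + V_0$. Setting all bias parameters to zero recovers the ordinary inverse-variance summary.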

Results

Table 8 shows the included meta-epidemiological studies, the sources of their collections of meta-analyses and the study design characteristics that they assessed. For five studies,12–15,21 data from each trial report were extracted by two researchers independently; in the study by Pildal et al.14 the assessors were also blinded to trial results. In the study by Schulz et al.,11 one researcher, who was blinded to the trial outcome, assessed the reported methodological characteristics of included trials using a detailed classification scheme. The study of Egger et al.9 was based on quality assessments by the authors of the included Cochrane reviews, which were generally carried out in duplicate by two observers.

| Contributing study | Source of systematic reviews/meta-analyses | Choice of meta-analyses | Study design characteristics examined | No. of meta-analyses (trials) |
|---|---|---|---|---|
| Als-Nielsen et al.12,22 | Randomly selected from The Cochrane Library, Issue 2, 2001 | Binary outcome and ≥ 5 full-paper trials of which at least one had adequate and one inadequate allocation concealment | Sequence generation, allocation concealment, blinding, intention-to-treat analysis, power calculation | 38 (401) |
| Balk et al.13 | From four clinical areas (cardiovascular disease, infectious disease, paediatrics, surgery) identified from previous research database, MEDLINE (1966–2000) and The Cochrane Library, Issue 4, 2000 | Binary outcome, ≥ 6 trials, significant between-study heterogeneity (OR scale) | 27 characteristics including allocation concealment, blinding, intention-to-treat analysis, power calculation, stopping rules, baseline comparability | 20 (229) |
| Contopoulos-Ioannidis et al.21 | Mental health-related interventions identified from the Mental Health Library, 2002 (Issue 1) | At least one large and one small trial | Trial size, method of randomisation, allocation concealment, blinding | 9 (66) |
| Egger et al.9 | Meta-analyses from the Cochrane Database of Systematic Reviews that had performed comprehensive literature searches | Outcome measure reported by the largest number of trials | Publication status, language of publication, publication in MEDLINE-indexed journals, allocation sequence generation (subset), allocation concealment, blinding | 79 (643) |
| Kjaergard et al.15 | In The Cochrane Library, MEDLINE or PubMed with at least one trial with ≥ 1000 patients | Outcome measure described as primary by the review authors or reported by the largest number of trials | Sequence generation, allocation concealment, blinding, description of dropouts and withdrawals | 6 (59) |
| Pildal et al.14 | Random sample of 38 reviews from The Cochrane Library, Issue 2, 2003, and 32 other reviews from PubMed accessed in 2002 | Binary outcome from a meta-analysis presented as the first statistically significant result that supported a conclusion in favour of one of the interventions | Language of publication, sequence generation, allocation concealment, blinding | 56 (370) |
| Schulz et al.11 | Cochrane Pregnancy and Childbirth Group, ≥ 5 trials containing ≥ 25 events in the control group, at least one trial with and without adequate allocation concealment | The most homogeneous group of interventions | Allocation sequence generation, allocation concealment, blinding, reporting of exclusions | 26 (205) |

Table 9 shows the definitions of adequate sequence generation, allocation concealment and blinding used in the seven studies. Definitions of adequate sequence generation and adequate allocation concealment were similar in all seven studies and were based on the definitions originally proposed by Schulz et al.11 Sequence generation was assessed as adequate, unclear or inadequate in five studies. The study by Kjaergard et al.15 provided only dichotomised assessments of adequate compared with inadequate or unclear sequence generation, and Balk et al.13 did not assess adequacy of sequence generation. Allocation concealment was assessed as adequate, unclear or inadequate in all seven studies. Definitions of ‘double blind’ varied between studies and were somewhat stricter in the studies by Schulz et al.11 and Pildal et al.14 Trials were categorised as double blind, unclear or not double blind in three studies,9,12,14 with the remaining four11,13,15,21 categorising trials as either double blind or unclear/not double blind.

| Study | Sequence generation: adequate | Sequence generation: unclear | Sequence generation: inadequate | Allocation concealment: adequate | Allocation concealment: unclear | Allocation concealment: inadequate | Blinding: double blind | Blinding: unclear | Blinding: not double blind |
|---|---|---|---|---|---|---|---|---|---|
| Als-Nielsen et al.12,22 | Computer-generated, random number tables, flip of coin, drawing cards or lots, comparable stochastic method | ND | Quasi-randomised (dates, alternation or similar) | Central randomisation (incl. pharmacy controlled), coded identical drug boxes, sealed envelopes, on-site locked computer system or comparable | ND | Open allocation sequence | Described as double blind or at least two key groups (patient/doctor/assessor/analyst) blinded | ND | Single blind or not blinded |
| Balk et al.13 | Did not assess adequacy of sequence generation | NA | NA | Central randomisation; blinded code, coded drug containers; drugs prepared by pharmacy; serially numbered, opaque, sealed envelopes | ND | Random tables, cards, methods using year of birth or registration numbers | Patients and either caregivers or outcome assessors blinded | NA | Any other descriptions not classified as adequate or unclear |
| Contopoulos-Ioannidis et al.21 | Computer-generated, random number tables, coin or dice toss, other methods ensuring random order | ND or unclear | Alternation, case records, dates or similar non-random method | Central facility, central pharmacy, with sealed and opaque envelopes | ND or unclear | Any other methods that could not be classified as adequate | Described as double blind, or patients and either outcome assessor or caregiver blinded | NA | Not blinded, single blind, blinding not feasible, ND unclear |
| Egger et al.9^b | Computer-generated, random number tables or other methods that ensure random order | ND | Alternation, case record numbers, date of birth, etc. | Central randomisation; numbered or coded bottles or containers; drugs prepared by pharmacy; serially numbered, opaque, sealed envelopes; other convincing description implying concealment | ND | Alternation, open random number tables, etc. | Described as double blind | ND | Described as open or similar |
| Kjaergard et al.15 | Computer-generated or similar | NA | ND or non-random | Central independent unit, sealed envelopes or similar | NA | ND, open random number table or similar | Described as double blind and used identical placebo or similar | NA | Open (not blind), or described as single blind |
| Pildal et al.14 | Computer-generated sequence, random number tables, drawing lots/envelopes, coin toss | ND or unclear | Alternation, case record numbers, date of birth, etc. | Central randomisation; coded drug containers; drugs prepared by central pharmacy, serially numbered, opaque, sealed envelopes; other convincing description implying concealment | ND or approach not falling into other categories | Obvious which treatment the next patient would be allocated (alternation, case record numbers, dates of birth, etc.) | Described as double blind or patients and caregivers reported as blinded, placebo controlled without indication that treatments distinguishable or investigators unblinded^a | ND or unclear | Not blinded, single blind, did not fit the definition of double blind^a |
| Schulz et al.11 | Computer random number generator, random number tables, coin tossing, shuffling, other random process, minimisation | ND | Non-random | Central randomisation; numbered or coded bottles or containers; drugs prepared by pharmacy; serially numbered, opaque, sealed envelopes; other convincing description implying concealment | ND or approach not falling into other categories | Alternation or allocation by case record number or date of birth | Participants, caregivers and outcome assessors all described as blinded | NA | Descriptions not consistent with definition of double blind, blinding not feasible, ND or unclear |

Table 10 shows characteristics of the 234 meta-analyses and 1973 trials included in the database analysed. The median year of publication was 2000 for meta-analyses and 1989 for trials, and the median sample size was 1264 for meta-analyses and 112 for trials. The most common clinical area was conditions related to pregnancy and childbirth (57 meta-analyses, 24.4%), followed by circulatory system conditions (31, 13.3%) and mental health (26, 11.1%). The majority of experimental interventions were pharmacological (162 meta-analyses, 69.2%), and placebo or no treatment was the most common comparison intervention (172, 73.5%). A total of 98 meta-analyses (41.9%) analysed a subjectively assessed outcome, followed by all-cause mortality (44, 18.8%), outcomes that are objectively measured but potentially influenced by patient/clinician judgement (42, 18.0%) and other objectively assessed outcomes (36, 15.4%); 14 meta-analyses (6.0%) contained trials with both objective and subjective outcome measures (e.g. validated and self-reported smoking cessation).

| Characteristics of meta-analyses and trials | Meta-analyses (n = 234): n | Meta-analyses: % | Trials (n = 1973): n | Trials: % |
|---|---|---|---|---|
| Contributing meta-epidemiological study | | | | |
| Als-Nielsen et al.12,22 | 38 | 16.2 | 401 | 20.3 |
| Balk et al.13 | 20 | 8.6 | 229 | 11.6 |
| Contopoulos-Ioannidis et al.21 | 9 | 3.9 | 66 | 3.4 |
| Egger et al.9 | 79 | 33.8 | 643 | 32.6 |
| Kjaergard et al.15 | 6 | 2.6 | 59 | 3.0 |
| Pildal et al.14 | 56 | 23.9 | 370 | 18.8 |
| Schulz et al.11 | 26 | 11.1 | 205 | 10.4 |
| Clinical area according to ICD-10 chapters30 | | | | |
| Pregnancy and childbirth (chapter XV, blocks O) | 57 | 24.4 | 447 | 22.7 |
| Mental and behavioural (chapter V, F) | 26 | 11.1 | 302 | 15.3 |
| Circulatory system (chapter IX, I) | 31 | 13.3 | 277 | 14.0 |
| Digestive system (chapter XI, K) | 17 | 7.3 | 152 | 7.7 |
| Other factors^a (chapter XXI, Z) | 18 | 7.7 | 128 | 6.5 |
| Respiratory system (chapter X, J) | 14 | 6.0 | 125 | 6.3 |
| Other ICD-10 chapters | 70 | 29.9 | 539 | 27.3 |
| Unclassified | 1 | 0.4 | 3 | 0.2 |
| Type of experimental intervention | | | | |
| Pharmacological | 162 | 69.2 | 1418 | 71.9 |
| Surgical | 14 | 6.0 | 122 | 6.2 |
| Psychosocial/behavioural/educational | 13 | 5.6 | 121 | 6.1 |
| Other | 45 | 19.2 | 312 | 15.8 |
| Type of comparison intervention | | | | |
| Placebo or no treatment | 172 | 73.5 | 1438 | 72.9 |
| Other inactive (‘standard care’) | 16 | 6.8 | 152 | 7.7 |
| Active comparison | 44 | 18.8 | 368 | 18.7 |
| Mixture of active and inactive within meta-analysis | 2 | 0.9 | 15 | 0.8 |
| Type of outcome measure | | | | |
| All-cause mortality | 44 | 18.8 | 364 | 18.5 |
| Other objective | 36 | 15.4 | 213 | 10.8 |
| Objectively measured but influenced by judgement | 42 | 18.0 | 407 | 20.6 |
| Subjective | 98 | 41.9 | 809 | 41.0 |
| Mixture of objective and subjective | 14 | 6.0 | 180 | 9.1 |
| Year of publication of review^b/trial | | | | |
| Median (range) | 2000 (1983–2005) | | 1989 (1948–2002) | |
| IQR | 2000 to 2001 | | 1983 to 1994 | |
| Sample size of meta-analysis/trial | | | | |
| Median (range) | 1264 (72–176,733) | | 112 (2–82,892) | |
| IQR | 533 to 2582 | | 58 to 267 | |

Table 11 summarises the characteristics of trials included in analyses. Information on sequence generation was available for 1207 (61.2%) trials included in 186 meta-analyses, of which 112 meta-analyses containing 944 trials were informative. Sequence generation was assessed as unclear in 769 (63.7%) of these trials, whereas 306 (25.4%) were assessed as having adequate sequence generation. Percentages were similar for trials included in informative meta-analyses.

| Study design characteristic | No. (%) of meta-analyses with information (n = 234) | No. (%) of trials with information (n = 1973) | No. (%) of informative meta-analyses with information | No. (%) of trials with information included in informative meta-analyses |
|---|---|---|---|---|
| Adequate sequence generation | 186 (79.5) | 1207 (61.2) | 112 (47.9) | 944 (47.8) |
| Yes | | 306 (25.4) | | 248 (26.3) |
| Unclear | | 769 (63.7) | | 598 (63.3) |
| No | | 101 (8.4) | | 67 (7.1) |
| No/unclear^a | | 31 (2.6) | | 31 (3.3) |
| Adequate allocation concealment | 228 (97.4) | 1796 (91.0) | 146 (62.4) | 1292 (65.5) |
| Yes | | 416 (23.2) | | 376 (29.1) |
| Unclear | | 1244 (69.3) | | 828 (64.1) |
| No | | 136 (7.6) | | 88 (6.8) |
| Double blind | 234 (100.0) | 1970 (99.8) | 104 (44.4) | 1057 (53.6) |
| Yes | | 929 (47.2) | | 590 (55.8) |
| Unclear | | 109 (5.5) | | 63 (6.0) |
| No | | 683 (34.7) | | 249 (23.6) |
| No/unclear^a | | 249 (12.6) | | 155 (14.7) |
| Information on both allocation concealment and blinding | 228 (97.4) | 1793 (90.9) | | |
| Information on all three characteristics | 175 (74.8) | 1171 (59.4) | | |

Information on allocation concealment was available for most meta-analyses (228, 97.4%) and trials (1796, 91.0%), of which 146 meta-analyses containing 1292 trials were informative. In 1244 (69.3%) trials, allocation concealment was assessed as unclear; 416 (23.2%) trials reported sufficient information to be classed as having adequate allocation concealment. The percentage of trials assessed as having adequate allocation concealment was somewhat higher (29.1%) among those included in informative meta-analyses.

Information on double blinding was available for all except three trials. However, only 104 meta-analyses (1057 trials) were informative; 77 meta-analyses contained no trials that were double blind, whereas 53 contained only double-blind trials. A total of 929 (47.2%) trials were classified as double blind, compared with 590 (55.8%) trials in informative meta-analyses.

Information on both allocation concealment and blinding was available for 1793 (90.9%) trials contained in 228 (97.4%) meta-analyses, whereas information on all three study design characteristics was available for 1171 (59.4%) trials contained in 175 (74.8%) meta-analyses.

Table 12 shows associations between the reported study design characteristics, for all trials combined and separately according to the nature of the outcome measure. Trials reporting adequate sequence generation were more likely to report adequate allocation concealment [OR 3.01, 95% confidence interval (CI) 2.20 to 4.12], but there was little association between adequate sequence generation and double blinding (OR 1.03, 95% CI 0.78 to 1.35). However, adequately concealed trials were more likely to be double blind (OR 3.14, 95% CI 2.49 to 3.96).

| Study characteristic 1 | Study characteristic 2 | All trials, no. (%) | Mortality outcome, no. (%) | Objective outcome, no. (%) | Subjective outcome, no. (%) |
|---|---|---|---|---|---|
| Sequence generation | Allocation concealment | 1171 | 157 | 368 | 646 |
| Adequate | Adequate | 91 (7.8) | 16 (10.2) | 32 (8.7) | 43 (6.7) |
| Adequate | Inadequate/unclear | 182 (15.5) | 25 (15.9) | 64 (17.4) | 93 (14.4) |
| Inadequate/unclear | Adequate | 128 (10.9) | 15 (9.6) | 45 (12.2) | 68 (10.5) |
| Inadequate/unclear | Inadequate/unclear | 770 (65.8) | 101 (64.3) | 227 (61.7) | 442 (68.4) |
| OR (95% CI) | | 3.01 (2.20 to 4.12) | 4.31 (1.88 to 9.88) | 2.52 (1.48 to 4.29) | 3.01 (1.93 to 4.68) |
| Sequence generation | Blinding | 1171 | 157 | 368 | 646 |
| Adequate | Double blind | 127 (10.8) | 21 (13.4) | 44 (12.0) | 62 (9.6) |
| Adequate | Not double blind/unclear | 146 (12.5) | 20 (12.7) | 52 (14.1) | 74 (11.5) |
| Inadequate/unclear | Double blind | 412 (35.2) | 53 (33.8) | 127 (34.5) | 232 (35.9) |
| Inadequate/unclear | Not double blind/unclear | 486 (41.5) | 63 (40.1) | 145 (39.4) | 278 (43.0) |
| OR (95% CI) | | 1.03 (0.78 to 1.35) | 1.25 (0.61 to 2.55) | 0.97 (0.61 to 1.54) | 1.00 (0.69 to 1.47) |
| Allocation concealment | Blinding | 1793 | 328 | 550 | 915 |
| Adequate | Double blind | 283 (15.8) | 65 (19.8) | 93 (16.9) | 125 (13.7) |
| Adequate | Not double blind/unclear | 133 (7.4) | 30 (9.1) | 45 (8.2) | 58 (6.3) |
| Inadequate/unclear | Double blind | 556 (31.0) | 108 (32.9) | 159 (28.9) | 289 (31.6) |
| Inadequate/unclear | Not double blind/unclear | 821 (45.8) | 125 (38.1) | 253 (46.0) | 443 (48.4) |
| OR (95% CI) | | 3.14 (2.49 to 3.96) | 2.51 (1.52 to 4.15) | 3.29 (2.19 to 4.94) | 3.30 (2.34 to 4.66) |
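
Each OR in the table above compares the odds that the second characteristic is adequate (or double blind) in trials where the first characteristic is adequate with the same odds in trials where it is inadequate or unclear, i.e. OR = (a × d)/(b × c) for the four cells in row order. A minimal sketch of that calculation follows. It assumes the 95% CIs were obtained with Woolf's logit method; the report does not state the method here, although this assumption does reproduce the tabulated values for the rows checked. The function name `odds_ratio_woolf` is hypothetical.

```python
import math


def odds_ratio_woolf(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio and Woolf (logit) confidence interval for a 2 x 2 table.

    a: characteristic 1 adequate,            characteristic 2 adequate
    b: characteristic 1 adequate,            characteristic 2 inadequate/unclear
    c: characteristic 1 inadequate/unclear,  characteristic 2 adequate
    d: characteristic 1 inadequate/unclear,  characteristic 2 inadequate/unclear
    """
    or_ = (a * d) / (b * c)
    # Woolf's approximation to the standard error of log(OR)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(or_) - z * se)
    upper = math.exp(math.log(or_) + z * se)
    return or_, lower, upper


# Sequence generation vs allocation concealment, all trials
print([round(x, 2) for x in odds_ratio_woolf(91, 182, 128, 770)])  # [3.01, 2.2, 4.12]
```

The same call with the mortality counts (16, 25, 15, 101) gives 4.31 (1.88 to 9.88), matching the table.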

Associations between reported study design characteristics were similar across the types of outcome measure. Table 13 shows the number of trials assessed as having each of the eight possible combinations of the three study design characteristics, overall and by type of outcome measure. Only 60 (5.1%) trials were assessed as at low risk of bias for all three characteristics, whereas 453 (38.7%) were assessed as at high risk of bias for all three.

| Sequence generation | Allocation concealment | Blinding | No. of trials: all | Mortality | Objective | Subjective |
|---|---|---|---|---|---|---|
| Adequate | Adequate | Double blind | 60 | 12 | 22 | 26 |
| Adequate | Adequate | Not double blind/unclear | 31 | 4 | 10 | 17 |
| Adequate | Inadequate/unclear | Double blind | 67 | 9 | 22 | 36 |
| Adequate | Inadequate/unclear | Not double blind/unclear | 115 | 16 | 42 | 57 |
| Inadequate/unclear | Adequate | Double blind | 95 | 9 | 35 | 51 |
| Inadequate/unclear | Adequate | Not double blind/unclear | 33 | 6 | 10 | 17 |
| Inadequate/unclear | Inadequate/unclear | Double blind | 317 | 44 | 92 | 181 |
| Inadequate/unclear | Inadequate/unclear | Not double blind/unclear | 453 | 57 | 135 | 261 |
| Total | | | 1171 | 157 | 368 | 646 |
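
Table 13 is the full three-way cross-classification of the 1171 trials with information on all three characteristics, so collapsing it over one characteristic should reproduce the first two blocks of Table 12 (the allocation concealment × blinding block of Table 12 uses a larger set of 1793 trials and cannot be checked this way). The sketch below performs that check for the "all trials" column and recomputes the two percentages quoted in the text; the dictionary layout is simply an illustrative choice.

```python
from collections import Counter

# Table 13, all trials. Key: (sequence generation adequate, allocation concealment
# adequate, double blind); False means inadequate/unclear or not double blind/unclear.
table13_all = {
    (True, True, True): 60,
    (True, True, False): 31,
    (True, False, True): 67,
    (True, False, False): 115,
    (False, True, True): 95,
    (False, True, False): 33,
    (False, False, True): 317,
    (False, False, False): 453,
}
total = sum(table13_all.values())

seq_by_alloc = Counter()  # collapse over blinding
seq_by_blind = Counter()  # collapse over allocation concealment
for (seq, alloc, blind), n in table13_all.items():
    seq_by_alloc[(seq, alloc)] += n
    seq_by_blind[(seq, blind)] += n

print(total)  # 1171
print(dict(seq_by_alloc))  # {(True, True): 91, (True, False): 182, (False, True): 128, (False, False): 770}
print(dict(seq_by_blind))  # {(True, True): 127, (True, False): 146, (False, True): 412, (False, False): 486}
print(f"{100 * table13_all[(True, True, True)] / total:.1f}%")     # 5.1%
print(f"{100 * table13_all[(False, False, False)] / total:.1f}%")  # 38.7%
```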

Influence of reported study design characteristics on intervention effect estimates: univariable analyses of individual characteristics

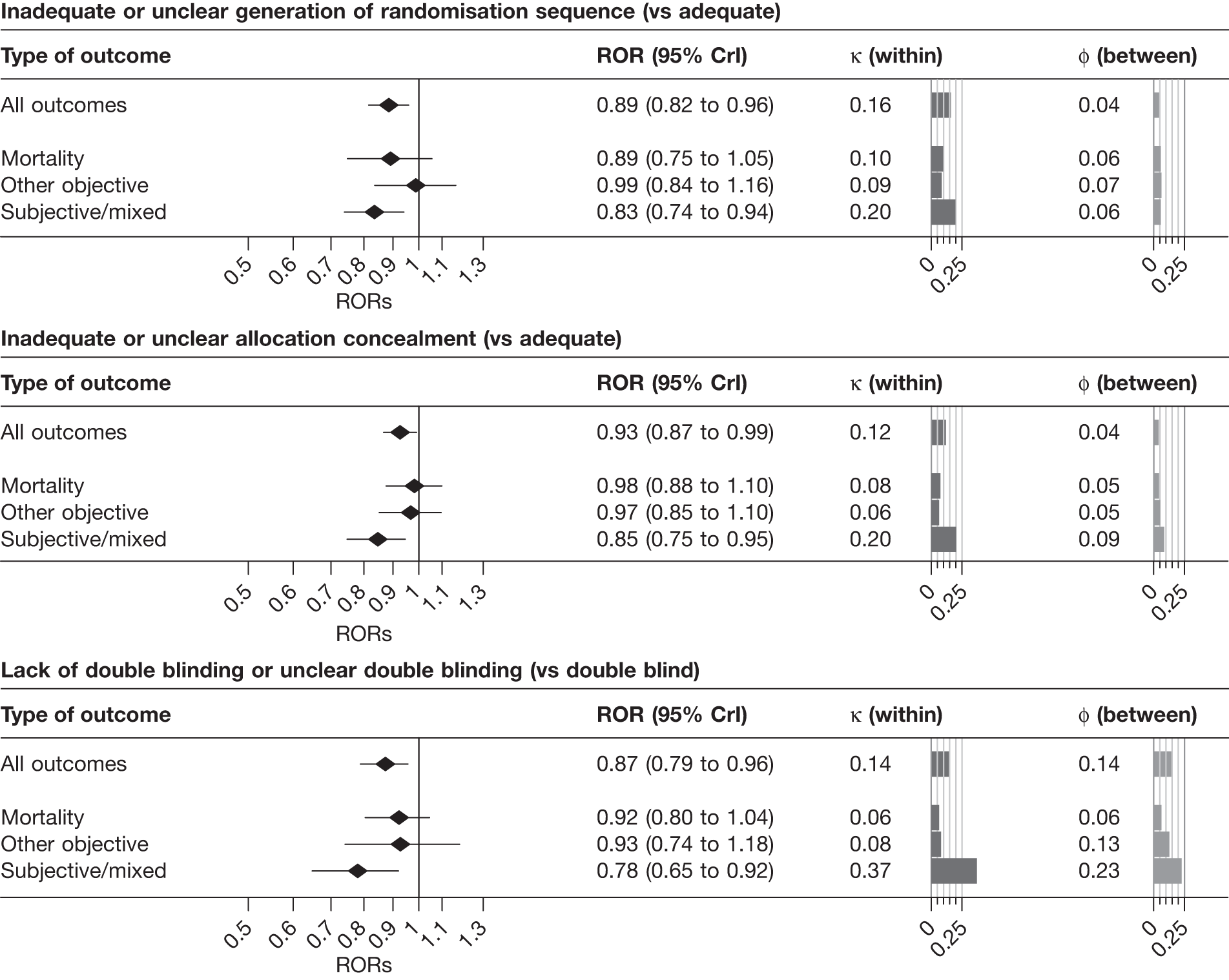

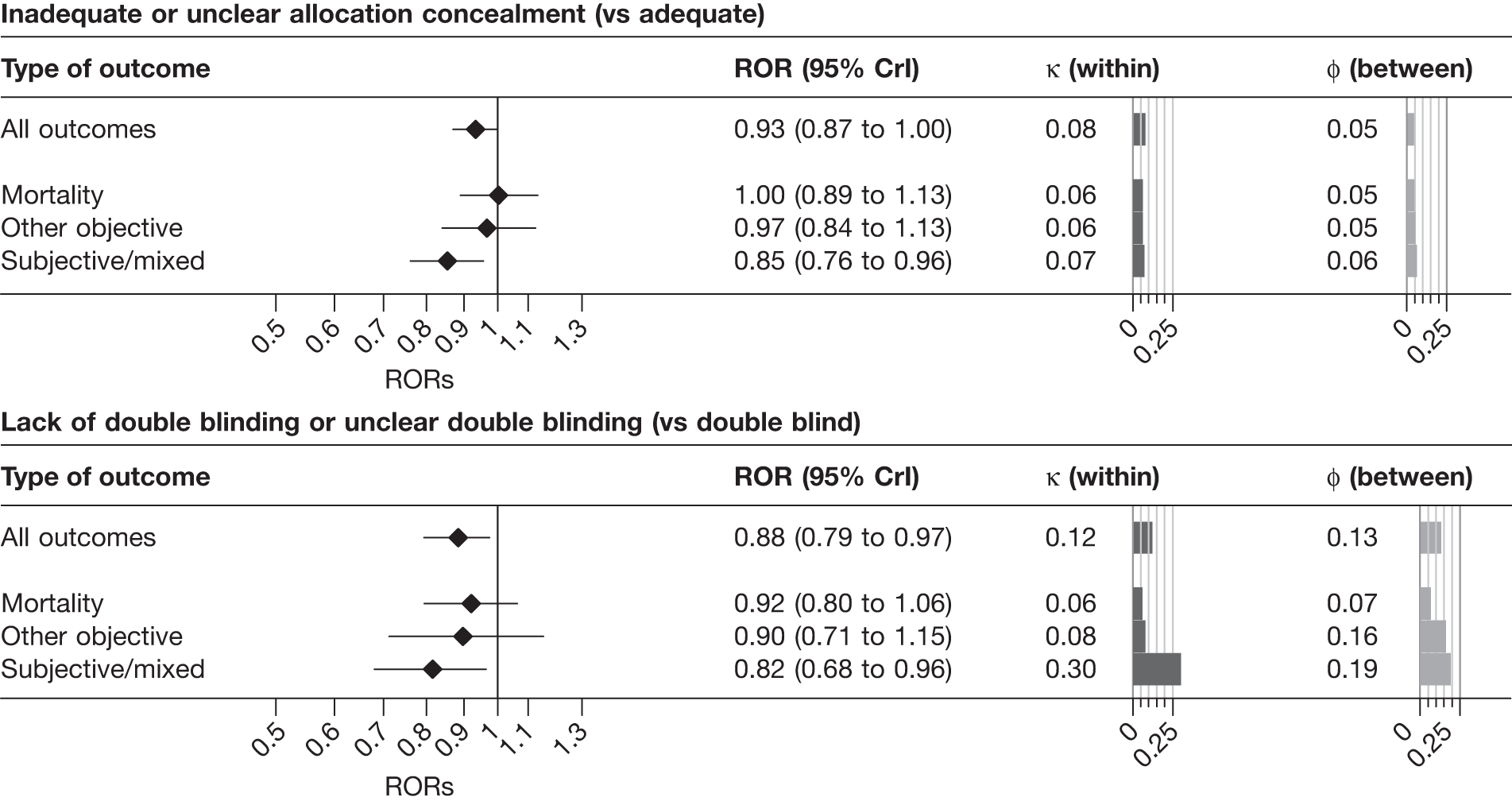

Figure 3 and Table 14 present results from univariable analyses of the influence of reported study design characteristics on intervention effect estimates, both overall and separately by type of outcome measure. In addition to the information in Figure 3, Table 14 gives 95% CrIs for the variance parameters κ and φ and the numbers of trials, and of trials at high risk of bias, included in each analysis. Overall, intervention effect estimates were exaggerated by an average of 11% in trials with inadequate or unclear sequence generation (ROR 0.89, 95% CrI 0.82 to 0.96), and between-trial heterogeneity was higher among such trials (κ = 0.16, 95% CrI 0.03 to 0.27). When analyses were stratified by type of outcome measure, the average effect of inadequate or unclear sequence generation appeared greatest for subjective outcomes [ROR 0.83, 95% CrI 0.74 to 0.94; posterior probability (PPr) that $\text{ROR}_\text{subjective} < \text{ROR}_\text{mortality}$ is 0.73], and the increase in between-trial heterogeneity was also greatest for these outcomes (κ = 0.20, 95% CrI 0.03 to 0.32; PPr that $\kappa_\text{subjective} > \kappa_\text{mortality}$ is 0.78). In contrast, there was little evidence that inadequate or unclear sequence generation was associated with exaggerated intervention effects for all-cause mortality (ROR 0.89, 95% CrI 0.75 to 1.05) or for other objective outcomes (ROR 0.99, 95% CrI 0.84 to 1.16). For all types of outcome measure, between-meta-analysis heterogeneity in mean bias was limited (estimated φ between 0.04 and 0.07).
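
The percentages quoted in this section are read directly from the ROR: an ROR below 1 means that odds ratios from trials at high risk of bias are, on average, further below 1 than those from trials at low risk, so the average exaggeration is (1 − ROR) × 100%. A minimal sketch of this conversion, assuming (as the reading of ROR < 1 as exaggeration implies) that outcomes are coded so that odds ratios below 1 favour the intervention. The helper names and the trial odds ratio of 0.75 are hypothetical.

```python
def percent_exaggeration(ror: float) -> float:
    """Average exaggeration (%) implied by a ratio of odds ratios (ROR).

    Assumes odds ratios below 1 favour the intervention, so that an ROR below 1
    means a larger apparent benefit in trials at high risk of bias.
    """
    return (1 - ror) * 100


def exaggeration_interval(lower: float, upper: float) -> tuple[float, float]:
    """Convert an ROR interval into an interval for the % exaggeration."""
    # The upper ROR limit gives the smallest exaggeration, the lower limit the largest
    return percent_exaggeration(upper), percent_exaggeration(lower)


# Inadequate or unclear sequence generation, all outcomes: ROR 0.89 (95% CrI 0.82 to 0.96)
print(round(percent_exaggeration(0.89)))                      # 11
print([round(x) for x in exaggeration_interval(0.82, 0.96)])  # [4, 18]

# Hypothetical: if trials at low risk of bias estimate OR 0.75, an average ROR of 0.89
# corresponds to an expected OR of about 0.75 * 0.89 in trials at high risk of bias
print(f"{0.75 * 0.89:.2f}")                                   # 0.67
```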

| Study design characteristic and outcome | No. of meta-analyses | No. of trials | Contributing meta-analyses$^a$ | Contributing trials | No. (%) of trials at high risk of bias$^b$ | ROR | 95% CrI | κ | 95% CrI | φ | 95% CrI | $V_0$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Inadequate or unclear sequence generation (vs adequate) | ||||||||||||
| All | 186 | 1207 | 112 (57) | 944 | 696 (73.7) | 0.89 | 0.82 to 0.96 | 0.16 | 0.03 to 0.27 | 0.04 | 0.01 to 0.14 | 0.002 |
| Mortality | 30 | 163 | 16 (12) | 129 | 92 (71.3) | 0.89 | 0.75 to 1.05 | 0.10 | 0.01 to 0.29 | 0.06 | 0.01 to 0.25 | 0.008 |
| Objective | 66 | 387 | 47 (19) | 328 | 234 (71.3) | 0.99 | 0.84 to 1.16 | 0.09 | 0.01 to 0.34 | 0.07 | 0.01 to 0.25 | 0.007 |
| Subjective | 90 | 657 | 49 (26) | 487 | 370 (76.0) | 0.83 | 0.74 to 0.94 | 0.20 | 0.03 to 0.32 | 0.06 | 0.01 to 0.22 | 0.004 |
| Inadequate or unclear allocation concealment (vs adequate) | ||||||||||||
| All | 228 | 1796 | 146 (80) | 1292 | 916 (70.9) | 0.93 | 0.87 to 0.99 | 0.12 | 0.02 to 0.23 | 0.04 | 0.01 to 0.13 | 0.001 |
| Mortality | 44 | 328 | 32 (15) | 268 | 183 (68.3) | 0.98 | 0.88 to 1.10 | 0.08 | 0.01 to 0.21 | 0.05 | 0.01 to 0.18 | 0.003 |
| Objective | 76 | 551 | 45 (21) | 372 | 253 (68.0) | 0.97 | 0.85 to 1.10 | 0.06 | 0.01 to 0.26 | 0.05 | 0.01 to 0.24 | 0.004 |
| Subjective | 108 | 917 | 69 (44) | 652 | 480 (73.6) | 0.85 | 0.75 to 0.95 | 0.20 | 0.02 to 0.33 | 0.09 | 0.01 to 0.29 | 0.003 |
| Lack of double blinding or unclear double blinding (vs double blind) | ||||||||||||
| All | 234 | 1970 | 104 (66) | 1057 | 467 (44.2) | 0.87 | 0.79 to 0.96 | 0.14 | 0.02 to 0.30 | 0.14 | 0.03 to 0.28 | 0.002 |
| Mortality | 44 | 364 | 25 (14) | 245 | 109 (44.5) | 0.92 | 0.80 to 1.04 | 0.06 | 0.01 to 0.20 | 0.06 | 0.01 to 0.22 | 0.004 |
| Objective | 78 | 619 | 28 (17) | 282 | 120 (42.6) | 0.93 | 0.74 to 1.18 | 0.08 | 0.01 to 0.38 | 0.13 | 0.01 to 0.50 | 0.014 |
| Subjective | 112 | 987 | 51 (35) | 530 | 238 (44.9) | 0.78 | 0.65 to 0.92 | 0.37 | 0.19 to 0.53 | 0.23 | 0.04 to 0.44 | 0.008 |

FIGURE 3.

Estimated RORs and effects on heterogeneity associated with reported study design characteristics, according to the type of outcome measure: univariable analyses based on all available data. Random sequence generation: 944 trials from 112 informative meta-analyses; allocation concealment: 1292 trials from 146 informative meta-analyses; blinding: 1057 trials from 104 informative meta-analyses.

Overall, intervention effect estimates were exaggerated by an average of 7% in trials with inadequate or unclear allocation concealment (ROR 0.93, 95% CrI 0.87 to 0.99), and there was evidence that between-trial heterogeneity was increased in such trials (κ = 0.12, 95% CrI 0.02 to 0.23). The influence of inadequate or unclear allocation concealment appeared greatest among meta-analyses with a subjectively assessed outcome measure (ROR 0.85, 95% CrI 0.75 to 0.95; PPr that $\text{ROR}_\text{subjective} < \text{ROR}_\text{mortality}$ is 0.97; κ = 0.20, 95% CrI 0.02 to 0.33; PPr that $\kappa_\text{subjective} > \kappa_\text{mortality}$ is 0.85). In contrast, the average effect of inadequate or unclear allocation concealment was close to the null for meta-analyses with mortality (ROR 0.98, 95% CrI 0.88 to 1.10) or other objective outcomes (ROR 0.97, 95% CrI 0.85 to 1.10). Estimates of both between-trial heterogeneity (κ) and between-meta-analysis heterogeneity in bias (φ) were lower for these outcomes than for subjectively assessed outcomes.
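
The posterior probabilities (PPrs) quoted above compare two parameters within the same joint posterior distribution. A minimal sketch of how such a probability is typically estimated from Markov chain Monte Carlo output, assuming paired draws from a single sampler run: it is the proportion of iterations in which the inequality holds. The draw values below are made up for illustration and are not samples from this study's posterior; `posterior_probability` is a hypothetical helper.

```python
from typing import Sequence


def posterior_probability(draws_a: Sequence[float], draws_b: Sequence[float]) -> float:
    """Estimate Pr(a < b) as the share of paired MCMC iterations in which a < b.

    Draws must come from the same iterations of one run, so that any posterior
    correlation between the two parameters is preserved.
    """
    if len(draws_a) != len(draws_b) or not draws_a:
        raise ValueError("need two non-empty, equally long sequences of paired draws")
    return sum(a < b for a, b in zip(draws_a, draws_b)) / len(draws_a)


# Hypothetical paired draws of the ROR for subjective and mortality outcomes
ror_subjective = [0.80, 0.86, 0.83, 0.91, 0.84]
ror_mortality = [0.97, 0.95, 0.99, 0.89, 1.02]
print(posterior_probability(ror_subjective, ror_mortality))  # 0.8
```

For a statement such as κ(subjective) > κ(mortality), the same helper applies with the arguments swapped.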

Lack of, or unclear, double blinding was associated with an average 13% exaggeration of intervention effects (ROR 0.87, 95% CrI 0.79 to 0.96). There was evidence that between-trial heterogeneity was increased in such trials (κ = 0.14, 95% CrI 0.02 to 0.30) and that average bias varied between meta-analyses (φ = 0.14, 95% CrI 0.03 to 0.28). Average bias (ROR 0.78, 95% CrI 0.65 to 0.92), the increase in between-trial heterogeneity (κ = 0.37, 95% CrI 0.19 to 0.53) and between-meta-analysis heterogeneity in average bias (φ = 0.23, 95% CrI 0.04 to 0.44) all appeared greatest for meta-analyses assessing subjective outcomes (PPrs that $\text{ROR}_\text{subjective} < \text{ROR}_\text{mortality}$, $\kappa_\text{subjective} > \kappa_\text{mortality}$ and $\varphi_\text{subjective} > \varphi_\text{mortality}$ were 0.94, 0.99 and 0.90, respectively). Among meta-analyses with subjectively assessed outcomes, the influence of lack of blinding appeared greater than that of inadequate or unclear sequence generation or allocation concealment.
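
κ and φ describe how bias varies, rather than its average size. The sketch below simulates biases under an assumed two-level model, which is our reading of how these parameters are described in the text rather than a transcription of the report's model specification: on the log odds ratio scale, the mean bias in each meta-analysis is drawn from a normal distribution with mean log(ROR) and standard deviation φ, and the bias in each trial at high risk of bias is drawn around that mean with standard deviation κ. It uses the posterior point estimates for lack of double blinding with subjective outcomes from Table 14; `simulate_biases` and the simulation sizes are hypothetical.

```python
import math
import random


def simulate_biases(log_ror, kappa, phi, n_meta, trials_per_meta, seed=1):
    """Simulate biases (log odds ratio scale) for trials at high risk of bias.

    Assumed two-level structure, not taken verbatim from the report:
      mean bias in meta-analysis m:   b_m  ~ Normal(log_ror, phi**2)
      bias in high-risk trial i of m: d_mi ~ Normal(b_m, kappa**2)
    kappa and phi are treated as standard deviations.
    """
    rng = random.Random(seed)
    biases = []
    for _ in range(n_meta):
        b_m = rng.gauss(log_ror, phi)  # meta-analysis-level mean bias
        biases.extend(rng.gauss(b_m, kappa) for _ in range(trials_per_meta))
    return biases


# Point estimates for lack of or unclear double blinding, subjective outcomes
biases = simulate_biases(math.log(0.78), kappa=0.37, phi=0.23,
                         n_meta=2000, trials_per_meta=10)
mean_bias = sum(biases) / len(biases)
sd_bias = math.sqrt(sum((x - mean_bias) ** 2 for x in biases) / (len(biases) - 1))
print(f"exp(mean bias) = {math.exp(mean_bias):.2f}")
print(f"SD of bias = {sd_bias:.2f}; sqrt(kappa^2 + phi^2) = {math.hypot(0.37, 0.23):.2f}")
```

Under this assumed model κ and φ add in quadrature, so the overall spread of trial-level bias for subjective outcomes (about 0.44 on the log scale) is considerably larger than the average bias (log 0.78 ≈ −0.25): individual trials at high risk of bias may show little or substantial exaggeration.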

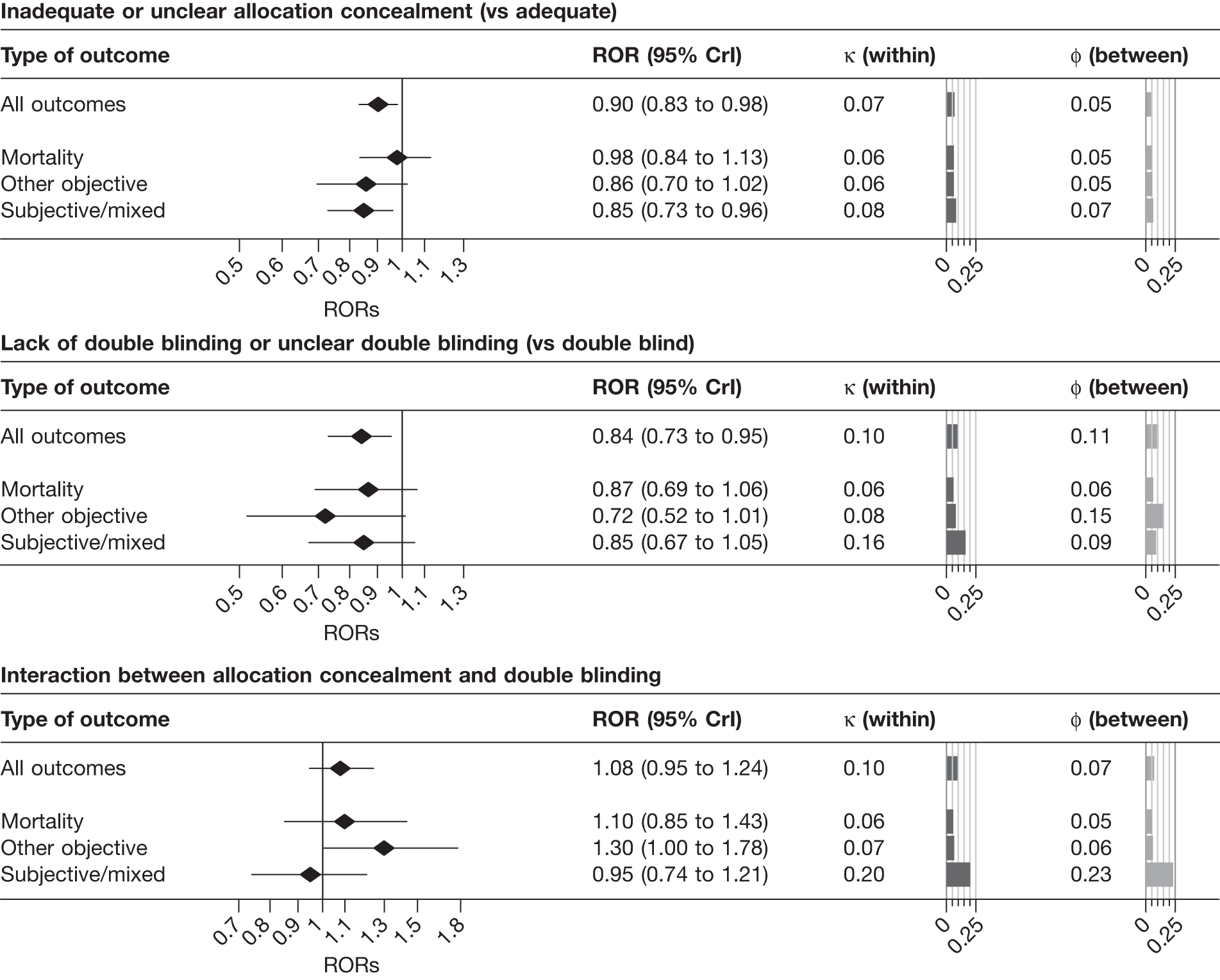

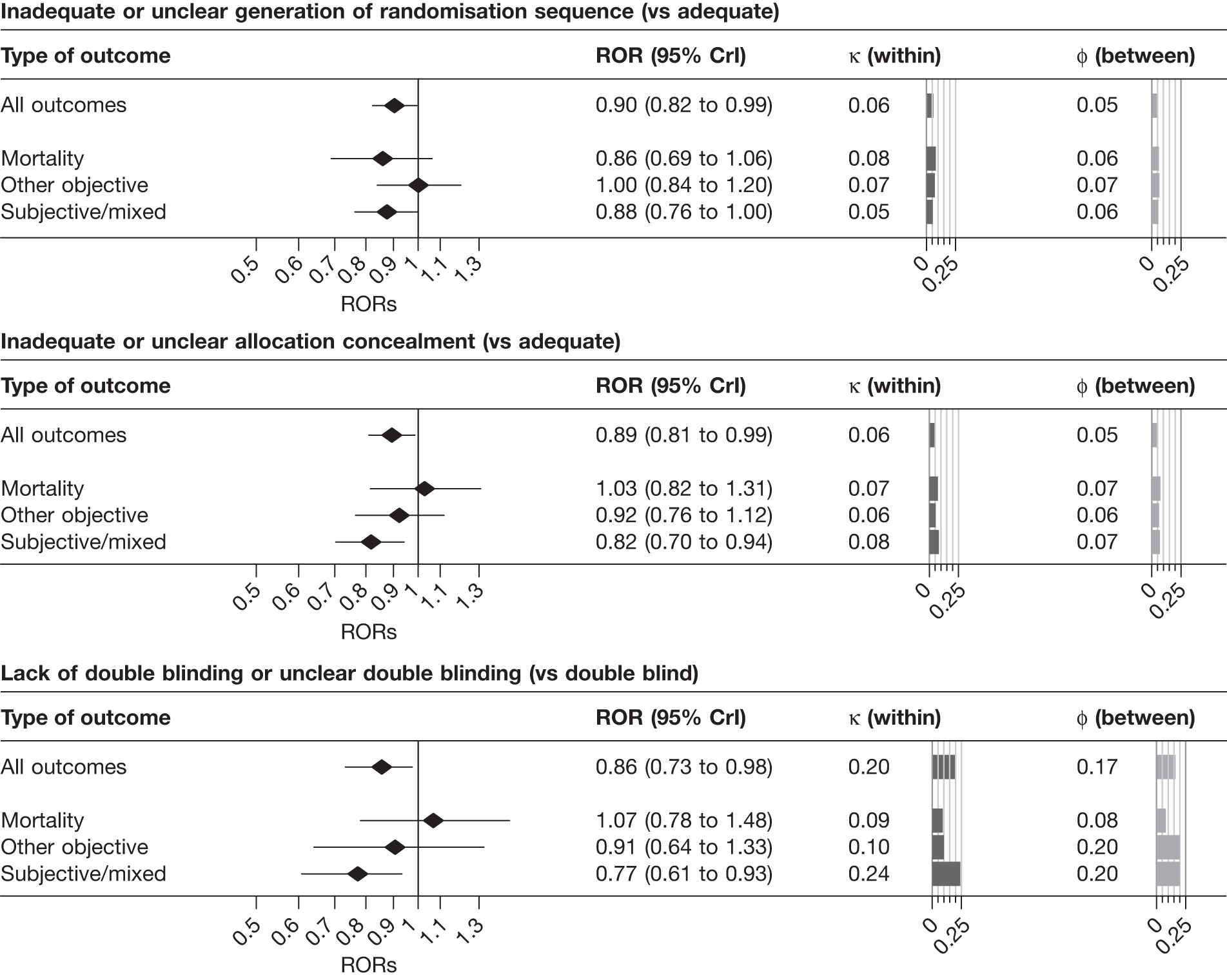

Univariable analyses of the influence of inadequate or unclear allocation concealment and of lack of double blinding, restricted to the 228 meta-analyses (1793 trials) that contained information on both characteristics, gave results (Table 15) similar to those presented in Figure 3 and Table 14. We also repeated the univariable analyses in a data set restricted to the 175 meta-analyses (1171 trials) that had information on all three study design characteristics (sequence generation, allocation concealment and blinding). The results were again similar (Table 16), although the influence of inadequate or unclear allocation concealment appeared somewhat greater (ROR 0.88 vs 0.93) in analyses restricted to the 88 informative meta-analyses (811 trials) that had information on all three characteristics.

| Study design characteristic and outcome | No. of meta-analyses | No. of trials | Contributing meta-analyses$^a$ | Contributing trials | No. (%) of trials at high risk of bias$^b$ | ROR | 95% CrI | κ | 95% CrI | φ | 95% CrI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Inadequate or unclear allocation concealment (vs adequate) | |||||||||||
| All | 228 | 1793 | 146 (80) | 1291 | 915 (70.9) | 0.93 | 0.87 to 0.99 | 0.11 | 0.02 to 0.22 | 0.04 | 0.01 to 0.13 |
| Mortality | 44 | 328 | 32 (15) | 268 | 183 (68.3) | 0.98 | 0.88 to 1.10 | 0.08 | 0.01 to 0.21 | 0.05 | 0.01 to 0.18 |
| Objective | 76 | 550 | 45 (21) | 372 | 253 (68.0) | 0.97 | 0.85 to 1.10 | 0.06 | 0.01 to 0.26 | 0.05 | 0.01 to 0.21 |
| Subjective | 108 | 915 | 69 (44) | 651 | 479 (73.6) | 0.84 | 0.75 to 0.94 | 0.20 | 0.03 to 0.33 | 0.09 | 0.01 to 0.30 |
| Lack of double blinding or unclear double blinding (vs double blind) | |||||||||||
| All | 228 | 1793 | 101 (62) | 977 | 432 (44.2) | 0.87 | 0.78 to 0.96 | 0.13 | 0.02 to 0.29 | 0.13 | 0.02 to 0.26 |
| Mortality | 44 | 328 | 25 (13) | 215 | 99 (46.0) | 0.92 | 0.80 to 1.05 | 0.06 | 0.01 to 0.20 | 0.07 | 0.01 to 0.24 |
| Objective | 76 | 550 | 27 (17) | 259 | 108 (41.7) | 0.88 | 0.71 to 1.11 | 0.07 | 0.01 to 0.38 | 0.13 | 0.01 to 0.49 |
| Subjective | 108 | 915 | 49 (32) | 503 | 225 (44.7) | 0.79 | 0.65 to 0.93 | 0.33 | 0.08 to 0.49 | 0.21 | 0.04 to 0.41 |
| Inadequate or unclear allocation concealment or not double blind (vs adequate allocation concealment and double blind) | |||||||||||
| All | 228 | 1793 | 104 (55) | 990 | 731 (73.8) | 0.88 | 0.81 to 0.95 | 0.12 | 0.02 to 0.22 | 0.05 | 0.01 to 0.14 |
| Mortality | 44 | 328 | 25 (13) | 220 | 157 (71.4) | 0.95 | 0.84 to 1.06 | 0.08 | 0.01 to 0.22 | 0.05 | 0.01 to 0.19 |
| Objective | 76 | 550 | 30 (16) | 268 | 191 (71.3) | 0.84 | 0.69 to 1.00 | 0.07 | 0.01 to 0.33 | 0.07 | 0.01 to 0.33 |
| Subjective | 108 | 915 | 49 (26) | 502 | 383 (76.3) | 0.83 | 0.73 to 0.93 | 0.17 | 0.02 to 0.31 | 0.06 | 0.01 to 0.23 |

| Study design characteristic and outcome | No. of meta-analyses | No. of trials | Contributing meta-analyses$^a$ | Contributing trials | No. (%) of trials at high risk of bias$^b$ | ROR | 95% CrI | κ | 95% CrI | φ | 95% CrI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Inadequate or unclear sequence generation (vs adequate) | |||||||||||
| All | 175 | 1171 | 104 (54) | 911 | 676 (74.2) | 0.88 | 0.81 to 0.96 | 0.17 | 0.03 to 0.27 | 0.04 | 0.01 to 0.15 |
| Mortality | 27 | 157 | 15 (11) | 122 | 88 (72.1) | 0.88 | 0.73 to 1.05 | 0.11 | 0.01 to 0.32 | 0.06 | 0.01 to 0.26 |
| Objective | 62 | 368 | 42 (18) | 310 | 223 (71.9) | 0.99 | 0.84 to 1.18 | 0.08 | 0.01 to 0.32 | 0.07 | 0.01 to 0.27 |
| Subjective | 86 | 646 | 47 (25) | 479 | 365 (76.2) | 0.83 | 0.73 to 0.93 | 0.20 | 0.03 to 0.32 | 0.06 | 0.01 to 0.22 |
| Inadequate or unclear allocation concealment (vs adequate) | |||||||||||
| All | 175 | 1171 | 88 (45) | 811 | 605 (74.6) | 0.88 | 0.80 to 0.96 | 0.13 | 0.02 to 0.26 | 0.05 | 0.01 to 0.18 |
| Mortality | 27 | 157 | 15 (5) | 118 | 89 (75.4) | 0.97 | 0.80 to 1.18 | 0.09 | 0.01 to 0.31 | 0.07 | 0.01 to 0.32 |
| Objective | 62 | 368 | 31 (15) | 257 | 180 (70.0) | 0.93 | 0.80 to 1.09 | 0.06 | 0.01 to 0.24 | 0.06 | 0.01 to 0.26 |
| Subjective | 86 | 646 | 42 (25) | 436 | 336 (77.1) | 0.79 | 0.67 to 0.90 | 0.22 | 0.03 to 0.36 | 0.10 | 0.01 to 0.36 |
| Lack of double blinding or unclear double blinding (vs double blind) | |||||||||||
| All | 175 | 1171 | 60 (36) | 592 | 251 (42.4) | 0.83 | 0.72 to 0.95 | 0.26 | 0.05 to 0.42 | 0.16 | 0.03 to 0.33 |
| Mortality | 27 | 157 | 9 (3) | 74 | 29 (39.2) | 1.06 | 0.82 to 1.44 | 0.11 | 0.01 to 0.47 | 0.08 | 0.01 to 0.41 |
| Objective | 62 | 368 | 17 (9) | 165 | 73 (44.2) | 0.89 | 0.64 to 1.26 | 0.10 | 0.01 to 0.49 | 0.22 | 0.02 to 0.79 |
| Subjective | 86 | 646 | 34 (24) | 353 | 149 (42.2) | 0.73 | 0.57 to 0.89 | 0.33 | 0.09 to 0.50 | 0.22 | 0.05 to 0.42 |
| Inadequate or unclear sequence generation or allocation concealment (vs adequate sequence generation and allocation concealment) | |||||||||||
| All | 175 | 1171 | 53 (22) | 534 | 445 (83.3) | 0.89 | 0.78 to 1.00 | 0.12 | 0.02 to 0.27 | 0.06 | 0.01 to 0.22 |
| Mortality | 27 | 157 | 10 (4) | 79 | 65 (82.3) | 0.94 | 0.74 to 1.15 | 0.08 | 0.01 to 0.29 | 0.08 | 0.01 to 0.38 |
| Objective | 62 | 368 | 19 (10) | 176 | 144 (81.8) | 0.82 | 0.57 to 1.10 | 0.15 | 0.01 to 0.61 | 0.20 | 0.02 to 0.68 |
| Subjective | 86 | 646 | 24 (8) | 279 | 236 (84.6) | 0.85 | 0.70 to 1.01 | 0.15 | 0.01 to 0.32 | 0.08 | 0.01 to 0.34 |
| Inadequate or unclear sequence generation or allocation concealment or not double blind (vs adequate sequence generation and allocation concealment and double blind) | |||||||||||
| All | 175 | 1171 | 37 (14) | 409 | 351 (85.8) | 0.79 | 0.64 to 0.92 | 0.12 | 0.01 to 0.28 | 0.12 | 0.02 to 0.40 |
| Mortality | 27 | 157 | 7 (3) | 65 | 55 (84.6) | 0.94 | 0.72 to 1.19 | 0.09 | 0.01 to 0.32 | 0.08 | 0.01 to 0.38 |
| Objective | 62 | 368 | 14 (6) | 139 | 117 (84.2) | 0.63 | 0.42 to 0.98 | 0.24 | 0.02 to 0.77 | 0.23 | 0.02 to 0.82 |
| Subjective | 86 | 646 | 16 (5) | 205 | 179 (87.3) | 0.71 | 0.52 to 0.89 | 0.12 | 0.01 to 0.31 | 0.16 | 0.02 to 0.55 |
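
The arithmetic indicates that the "No. (%) of trials at high risk of bias" column uses the number of contributing trials as its denominator, not the total number of trials in the data set: in the analyses restricted to 175 meta-analyses, 676 of 911 contributing trials is 74.2%, whereas 676 of all 1171 trials would be 57.7%. A short check on the four "All" rows of that table; the helper name `percent_high_risk` is illustrative.

```python
def percent_high_risk(n_high_risk: int, n_contributing: int) -> float:
    """Percentage of contributing trials at high risk of bias, to one decimal place."""
    return round(100 * n_high_risk / n_contributing, 1)


# "All" rows of the analyses restricted to 175 meta-analyses (1171 trials):
# (characteristic, contributing trials, trials at high risk of bias, reported %)
rows = [
    ("sequence generation", 911, 676, 74.2),
    ("allocation concealment", 811, 605, 74.6),
    ("double blinding", 592, 251, 42.4),
    ("any of the three", 409, 351, 85.8),
]
for label, contributing, high_risk, reported in rows:
    print(label, percent_high_risk(high_risk, contributing), reported)

# Using all 1171 trials as the denominator does not reproduce the table
print(percent_high_risk(676, 1171))  # 57.7
```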

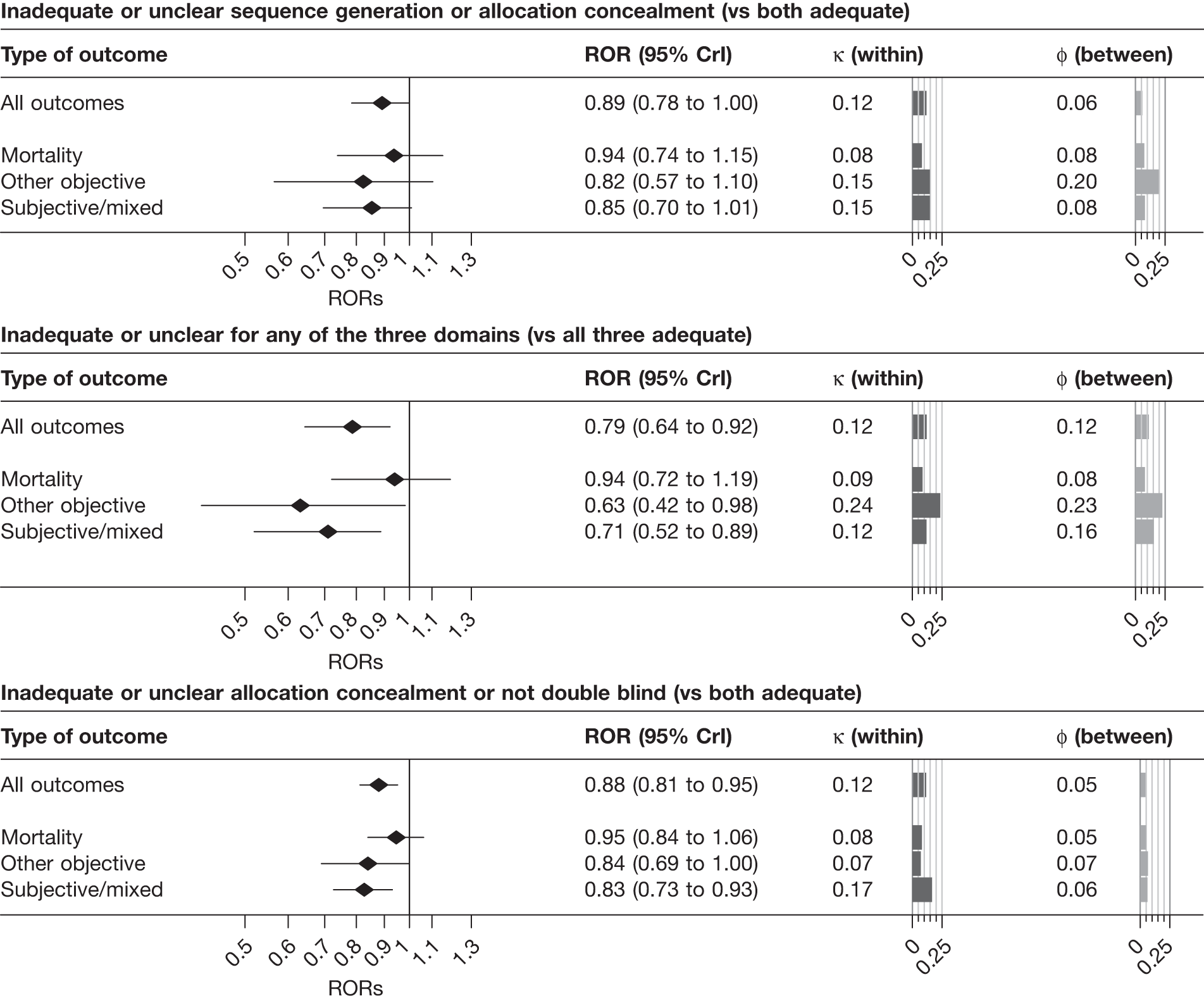

Influence of reported study design characteristics: univariable analyses of combinations of characteristics