Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned/managed by the Methodology research programme (MRP). The Medical Research Council (MRC) is working with NIHR to deliver the single joint health strategy and the MRP was launched in 2008 as part of the delivery model. MRC is lead funding partner for MRP and part of this programme is the joint MRC–NIHR funding panel ‘The Methodology Research Programme Panel’.

To strengthen the evidence base for health research, the MRP oversees and implements the evolving strategy for high-quality methodological research. In addition to the MRC and NIHR funding partners, the MRP takes into account the needs of other stakeholders including the devolved administrations, industry R&D, and regulatory/advisory agencies and other public bodies. The MRP funds investigator-led and needs-led research proposals from across the UK. In addition to the standard MRC and RCUK terms and conditions, projects commissioned/managed by the MRP are expected to provide a detailed report on the research findings and may publish the findings in the HTA journal, if supported by NIHR funds.

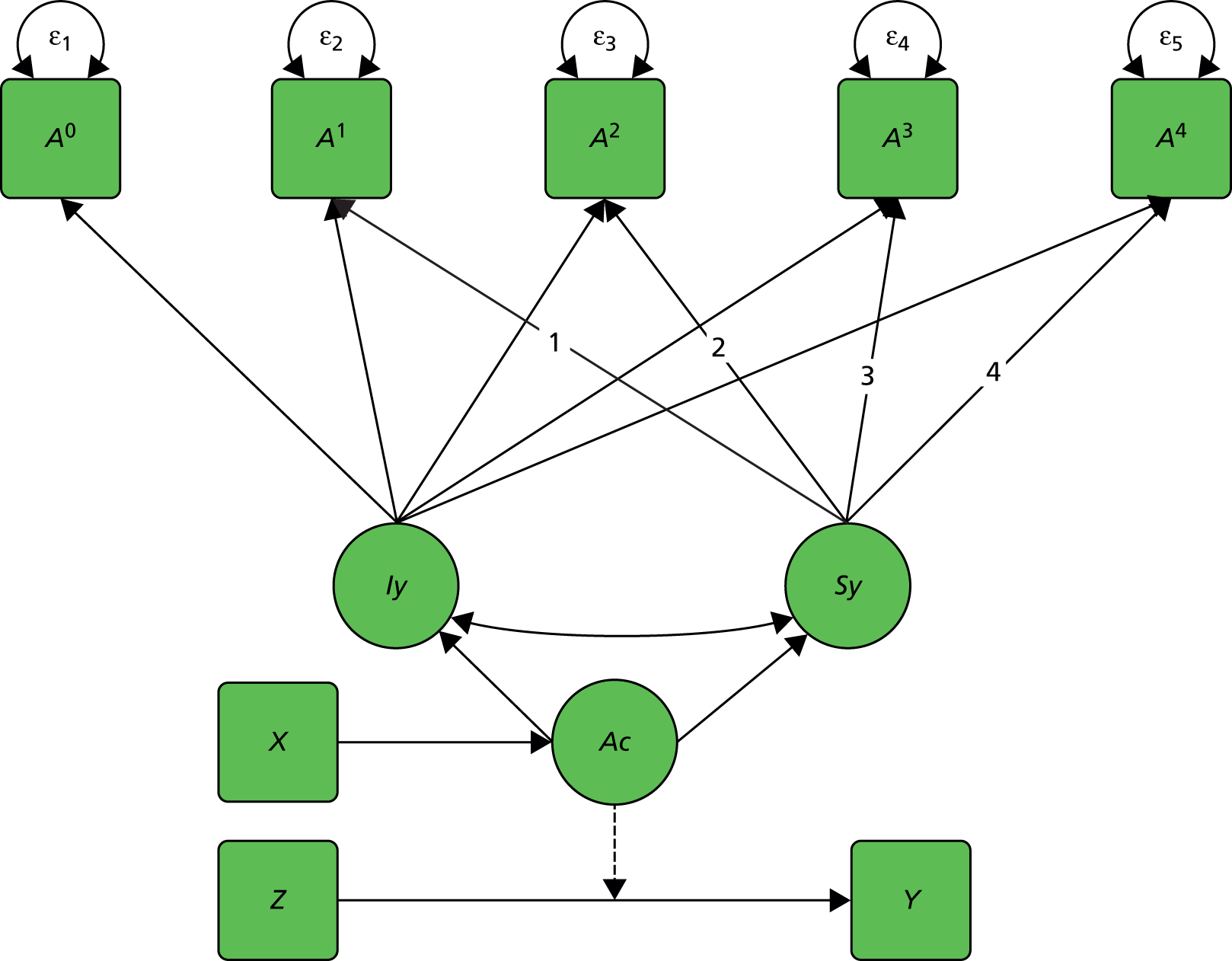

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Dr Emsley reports grants from the UK Medical Research Council (MRC) during the conduct of the study. Professor Landau reports grants from the National Institute for Health Research (NIHR) during the conduct of the study. Professor Pickles reports grants from the MRC and from the NIHR during the conduct of the study and royalties from Western Psychological Services outside the submitted work.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2015. This work was produced by Dunn et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Efficacy and mechanism evaluation

Background

The development of the capability and capacity to implement high-quality clinical research, including the evaluation of complex interventions through randomised trials, is a key priority of the NHS, the National Institute for Health Research (NIHR) and the Medical Research Council (MRC). 1 The evaluation of complex treatment programmes for mental illness [e.g. cognitive–behavioural therapy (CBT) for depression or psychosis] not only is a vital component of this research in its own right but also provides a well-established model for the evaluation of complex interventions in other clinical areas. It is recognised, however, that randomised trials of psychological treatments need to be implemented on a larger scale than has typically been the case hitherto, and the NIHR Mental Health Research Network (MHRN) was established to foster and support developments in this area. The parallel development of research methodology for the optimal design, implementation and interpretation of the results of such trials is an essential component of these developments. In particular, there is a need for robust methods to make valid causal inferences for explanatory analyses of the mechanisms of treatment-induced change in clinical and economic outcomes in randomised clinical trials. This has been recognised by the MHRN in the formation of a MHRN Methodology Research Group, of which all investigators are members and which is led by the principal investigator in the current project. The MHRN Methodology Research Group is an initiative to bring key scientists in the field together, to develop resources and training programmes, and to foster the development and evaluation of relevant methodologies.

Broadly speaking, the research presented in this report aims to answer four questions about complex interventions/treatments:

-

Does it work?

-

How does it work?

-

Whom does it work for?

-

What factors make it work better?

In particular, the present project was aimed at strengthening the methodological underpinnings of psychological treatment trials: to develop, evaluate and disseminate statistical and econometric methods for the explanatory analysis of trials of psychological treatment programmes involving complex interventions and multivariate responses.

By explanatory analysis, we mean a secondary analysis in which one tries to explain how a given therapeutic effect has been achieved or, alternatively, why the therapy is apparently ineffective. This is scientifically useful because it can allow investigators to tailor treatments more effectively or to identify different mechanisms. An explanatory trial is one that is designed to answer these questions.

We use psychological treatment trials as an exemplar of complex interventions, but the methodology and associated problems are more generic and can be readily applied to other clinical areas (although this is beyond the scope of this report).

Hand in hand with the development of the methods of analysis there was consideration of more effective designs for these trials, particularly in the choice of social and psychological markers as potential prognostic factors, predictors (moderators) and mediators or candidate surrogate outcomes of clinical treatment effects. We will define these formally later in the chapter; however, in summary, a prognostic variable indicates the long-term treatment-free outcome for patients and a predictive variable interacts with treatment to identify if the treatment effect varies depending on the level of the predictive variable. All of these have direct relevance to the development and evaluation of personalised therapies (stratified medicine).

Much of the methodological work in this area is mirrored by wider interests in statistical methods for biomarker validation2 and the evaluation of their role as putative surrogate outcomes. 3 The aim is to add significantly to our understanding of biological and behavioural processes [see the NIHR Efficacy and Mechanism Evaluation (EME) programme – www.eme.ac.uk]. Part of the rationale for the present project was to integrate statistical work on surrogate outcome and other biomarker validation with that on the evaluation of mediation in the social and behavioural sciences. We refer to the term ‘marker’ to emphasise this common ground.

The present project was focused on the use of social and psychological markers to assess both treatment effect mediation and treatment effect modification by therapeutic process measures (‘therapeutic mechanisms evaluation’) in the presence of measurement errors, hidden confounding (selection effects) and missing data. The proposed programme of work had three integrated components: (1) the extension of instrumental variable (IV) methods to latent growth curve models and growth mixture models (GMMs) for repeated-measures data; (2) the development of designs and meta-analytic/metaregression methods for parallel trials (and/or strata within trials); and (3) the evaluation of the sensitivity/robustness of findings to the assumptions necessary for model identifiability. A core feature of the programme was the development of trial designs, involving alternative randomisations to different interventions, specifically aimed at solving the identifiability problems. Incidentally, the programme also led to the development of easy-to-use software commands for the evaluation of mediational mechanisms.

The role of the present report is not simply to summarise our research findings (although it will do this) but primarily to disseminate them in a relatively non-technical way in which the philosophy and technical approaches described in the modern causal inference literature can be applied to the design and analysis of rigorous randomised clinical trials for the evaluation of both treatment efficacy and treatment effect mechanisms. These are known as EME trials. This type of trial usually tests if an intervention works in a well-defined group of patients, and also tests the underlying treatment mechanisms, which may lead to improvements in future iterations of the intervention. One particularly promising area of application of this methodological work is in the development of EME trials for personalised therapies (or, more generally, the whole field of personalised or stratified medicine). Our aim here is to promote the full integration of marker information in EME trials in personalised (stratified) therapy.

Treatment efficacy

Let us start with the question ‘Does it work?’, which underpins the concept of treatment efficacy. We begin by describing some of the fundamental ideas of causal inference: the role of potential outcomes (counterfactuals) in the evaluation of treatment effects; average treatment effects (ATEs) and the challenges of confounding and treatment effect heterogeneity; and the challenges and pitfalls of mechanisms evaluation.

What is the effect of therapy?

Alice has suffered from depressive episodes, on and off, for several years. Six months ago a family friend advised her to ask for a course of CBT. She accepted the advice, asked her doctor for a referral to a clinical psychology service and has had several of what she believes to be helpful sessions with the therapist. She is now feeling considerably less miserable. Let us assume that her Beck Depression Inventory (BDI)4 score is now 10, having been 20 6 months ago. What proportion of the drop in the BDI score from 20 to 10 points might be attributed to the receipt of therapy? Has the treatment worked? We ask whether Alice’s depression has improved ‘because of the treatment, despite the treatment, or regardless of the treatment’. 5 What would the outcome have been if she had not received a course of CBT? The effect of the therapy is a comparison of what is and what might have been. It is counterfactual. We wish to estimate the difference between Alice’s observed outcome (i.e. after the sessions of CBT) and the outcome that would have been observed if, contrary to fact, she had carried on with treatment (if any) as usual. 6 Without the possibility of comparison, the treatment effect is not defined. Prior to the decision to treat (treatment allocation in the context of an RCT), we can think of two potential outcomes:

BDI following 6 months of therapy: BDI(T).

BDI following 6 months in the control condition: BDI(C).

The effect of therapy is the difference (Δ): Δ = BDI(T) – BDI(C).

This is called the individual treatment effect, which, since the BDI is a continuous score, is the difference between BDI(T) and BDI(C). The problem, however, is that this effect can never be observed. Any given individual receives treatment and we observe BDI(T), or the person receives the control condition and we observe BDI(C). We never observe both: we know the outcome of psychotherapy for Alice but the outcome that we might have seen had she not received therapy remains an unobserved counterfactual.

Efficacy: the average treatment effect

For a given individual, the effect of therapy is the difference Δ = BDI(T) – BDI(C) and, over a relevant population of individuals, the ATE is Ave[BDI(T) – BDI(C)]. Here we use ‘Ave[]’ instead of the mathematical statisticians’ customary expectation operator ‘E[]’ in order to make the discussion a little easier for the non-mathematically trained reader to follow (but later in the report we will use ‘E[]’ because of the need for both clarity and precision). Therefore, the efficacy of the therapy is the average of the individual treatment effects. How do we estimate efficacy? The ideal is through a well-designed and well-implemented randomised controlled trial (RCT).

Confounding and the role of randomisation

Note the simple mathematical equality:

If the selection of treatment options is purely random (as in a perfect RCT in which all participants are exposed to the treatment to which they have been allocated) then immediately it follows from the random allocation of treatment that:

Here ‘Ave[BDI|Treatment]’ means ‘the average of the BDI scores in the treated group’.

If treatment is randomly allocated, then efficacy is the difference between the average of the outcomes after treatment and the average of the outcomes under the control condition. It is estimated by simply comparing the corresponding averages resulting from the implemented trial. This straightforward and simple approach to the data analysis arises from the fact that treatment allocation and outcome do not have any common causes (the only influence on treatment allocation is the randomisation procedure) and therefore the effect of treatment receipt on clinical outcome is not subject to confounding.

Readers should note at this stage that this simple situation applies only if there is perfect adherence to (or compliance with) the randomly allocated treatments. If there are departures from the allocated treatments then the familiar intention-to-treat (ITT) estimator (i.e. compare outcomes as randomised) does not provide us with an unbiased estimator of efficacy. It provides an estimate of the effect of offer of treatment (effectiveness) and not the effect of actually receiving it (but is still not subject to confounding, as it is just estimating something subtly different from treatment efficacy). Common alternatives are the so-called per-protocol analysis (restricting the analyses to the outcomes for only those participants who have complied with their treatment application) and as-treated analysis (ignoring randomisation altogether). Both of these are potentially flawed. Both are likely to be subject to confounding by treatment-free prognosis: patients withdrawing from treatment, or being withdrawn by their clinician, may have quite a different prognosis from those who remain on therapy. In general, using a more general notation of Y for an outcome variable rather than BDI, we introduce potential outcomes Y(T) and Y(C). It is important to remember that generally:

Accordingly, how do we approach the problem of estimating efficacy (rather than effectiveness) in the presence of non-compliance? We will describe this below; however, first we introduce the problem (challenge) of treatment effect heterogeneity.

Treatment effect heterogeneity

Returning to our therapeutic intervention to improve levels of depression, there is no reason to believe that the individual treatment effect, Δ = BDI(T) – BDI(C), is constant from one individual to another. It is very likely to be variable, and we would like to evaluate how it might depend on potential moderators (‘predictive markers’ in the jargon of stratified or personalised medicine) and process measures such as the strength of the therapeutic alliance. Indeed, it is an article of faith among the personalised therapy community that there will be high levels of treatment effect heterogeneity among the general population and, given our ability to find markers that will be good predictors of treatment effect differences, these markers should then be very useful in the selection of therapies that might be optimal for patients with a given set of characteristics. This will form the basis of later discussions, but here we will illustrate the implications of treatment effect heterogeneity for efficacy estimation in RCTs for which there is a substantial amount of non-compliance with allocated treatment (compliance assumed for simplicity to be either all or none).

Staying with our relatively simple RCT in which we allocate participants to a treatment or a control condition, we can envisage situations in which those allocated to treatment fail to turn up for any of their therapy. There may also be participants who were allocated to the control condition but who, for whatever reason, actually received a course of therapy. Here the decision concerning the actual receipt of treatment is not determined by the trial investigators and, in particular, it is certainly not solely determined by the randomisation (although we would hope that, compared with the control participants, a considerably higher proportion of those allocated to the treatment condition would actually receive the therapy). An obvious question now is ‘What is the effect of treatment in the treated participants?’. Similarly, we might ask what the effect of treatment might have been in those who did not receive it. These two treatment effects are the average effect of treatment in the treated and the average effect of treatment in the untreated. If treatment effects were homogeneous (i.e. the same for everyone in the trial or equivalent target population) then these two ATEs would be identical and therefore the same as the ATE. If there is treatment effect heterogeneity, however, and actual receipt of treatment is in some way associated with treatment efficacy, then life becomes considerably more complicated. Frequently, we cannot estimate without bias the average effect of treatment in the people treated under these circumstances, but we can still define a group of participants for which we might be able to infer a treatment effect progress using randomisation (together with some additional assumptions). We refer to this group as the compliers and the average effect of treatment in the compliers as the complier-average causal effect (CACE). 7

The complier-average causal effect

Barnard et al. 8 have described a RCT in which there is non-compliance with allocated treatment together with subsequent loss to follow-up as ‘broken’. Our aim is to make sense of the outcome data from a broken trial. Can the broken trial be ‘mended’? Yes, but subject to the validity of a few assumptions. Before proceeding with this topic, however, we stress that, in a randomised trial, non-adherence or non-compliance with an allocated therapy or other intervention is neither an indicator of a trial’s failure (or lack of quality) nor a judgement on the trial participants. Especially in mental health, non-compliance can arise from patients making the wisest choice as they gather more information; for example, there may have been an adverse event which appeared to be linked to the therapy and, in this case, the patient’s doctor may have been involved in the decision to withdraw from treatment. The analysis of data from trials with a significant amount of non-compliance does need careful thought, however, particularly if non-compliance increases the risk of there being no follow-up data on outcome. Returning to our hypothetical trial with two types of non-compliance with allocated treatment (failure to turn up if you are in the therapy group, obtaining therapy if you are a control), we start by following Angrist et al. 7 and postulate that a trial comprises up to four types or classes of patient:

-

those who will always receive therapy irrespective of their allocation (always treated)

-

those who will never receive therapy irrespective of their allocation (never treated)

-

those who receive therapy if and only if they are allocated to the treatment arm (compliers)

-

those who receive therapy if and only if they are allocated to the control arm (defiers).

It is reasonable to assume that under most circumstances there are no defiers7 (the so-called monotonicity assumption), leaving us with three classes (the always treated, the never treated and compliers). However, we cannot always identify which class a particular participant should belong to; a patient who is allocated to the treatment group who then receives therapy is either always treated or a complier, and a participant who is allocated to the control group and who actually experiences the control condition is either never treated or a complier. However, a participant who is allocated to treatment and fails to receive therapy must be a member of the never treated. Similarly, a participant who is allocated to the control group and in fact receives therapy must be a member of the always treated.

The CACE is defined as the average effect of treatment in the compliers. This is the average effect that we hope we can estimate. However, first we have to make two additional assumptions:

-

As a direct result of randomisation the proportions of the three classes are (on average) the same in the two arms of the trial.

-

The effects of random allocation (i.e. the ITT effects) on outcome in the always treated and the never treated are both zero (the so-called exclusion restrictions). Note that this is not the same as saying that the ATEs (if they could be estimated) would be zero.

Following assumption 1 we can immediately estimate the proportion of the always treated from the proportion receiving therapy in the control group. Similarly, the proportion of the never treated follows from the proportion in the treatment group who fail to turn up for their therapy. The proportion of compliers is then what is left. Representing these three proportions as PAT, PNT and PC, respectively, and the associated ITT effects in the three classes as ITTAT, ITTNT and ITTC, then it should be clear that the overall ITT effect is the weighted sum of these class-specific effects:

The CACE is estimated by ITTC, and the other two ITT effects on the right-hand side of the equation (ITTAT and ITTNT) have both been assumed to be zero. It follows immediately that the CACE can be estimated by dividing the overall ITT effect by the estimated proportion of compliers (which, itself, is actually the ITT effect on the receipt of therapy: the arithmetic difference between the proportion receiving therapy in the treatment arm and the proportion receiving therapy in the control arm).

In the absence of treatment effect heterogeneity, the CACE estimate provides us with an estimate of the ATE. If this is actually the case, then it is clear that the overall ITT effect is a biased estimator of the ATE (it is attenuated, shrunk towards the null hypothesis of a zero treatment effect, the shrinkage being the proportion of compliers, PC). If, however, we are convinced that there is a possibility of treatment effect heterogeneity, then all we can say is that the CACE estimate is simply the estimated treatment effects for the compliers in this particular trial. It tells us nothing about the ATE in the always treated and never treated, and it follows that we have only limited information about the ATE (bounds can be determined for the ATE9,10 but this is beyond the scope of the present report). If, in a subsequent trial (or trials), the conditions are such that different participants are induced to be compliers, then the CACE will shift accordingly. It is a challenge to use one particular CACE estimate to generalise or predict what the ATE in the compliers will be under different circumstances.

Here, we have introduced the CACE to illustrate how treatment effect heterogeneity can complicate and threaten the validity of apparently straightforward estimators of ATEs (efficacy). Although treatment effect heterogeneity holds out great promise for the development of personalised therapies, it is also a potential nuisance and trap for the unwary. Exposure to the concept of the CACE, however, is also motivated by other considerations. Treatment receipt provides a simple introduction to mediation. Random allocation encourages participants to take part in therapy (or not, if they are in the control arm), which in turn influences clinical outcomes. The exclusion restrictions (random allocation has no effect on outcome in the always treated and the never treated) are equivalent to the assumptions that there is no direct effect of randomisation on outcome but that the effect of randomisation is completely mediated by treatment received. Randomisation, here, is an example of an IV (see Chapter 3) and the above expression for the CACE estimate is an example of what is known as an IV estimator. 7 Finally, the four latent classes of Angrist et al. 7 (always treated, never treated, compliers and defiers) also provide a relatively simple and straightforward example of principal stratification,11 an idea which will be described in some detail in Chapters 2 and 3.

Therapeutic mechanisms

We have discussed the rationale of efficacy estimation in some detail. What about the second component of EME: the challenge of evaluating mechanisms? ‘How does the treatment/complex intervention work?’. We will illustrate this with a description of a trial currently funded by the NIHR EME programme, the Worry Intervention Trial (WIT). 12 Here, we summarise the trial protocol.

The approach taken by the WIT was to improve the treatment by focusing on key individual symptoms and to develop interventions that are designed to target the mechanisms that are thought to maintain them. In the investigators’ earlier work, worry had been found to be an important factor in the development and maintenance of persecutory delusions. Worry brings implausible ideas to mind, keeps them in mind and makes the ideas distressing. The aim of the trial was to test the effect of a cognitive–behavioural intervention to reduce worry in patients with persecutory delusions and, very relevant to the context of the present report, determine how the worry treatment might reduce delusions. WIT involved randomising 150 patients with persecutory delusions either to the worry intervention in addition to standard care or to standard care alone. The principal hypotheses to be evaluated by the trial results are that a worry intervention will reduce levels of worry and that it will also reduce the persecutory delusions.

The key features of WIT are to establish that (1) the worry intervention reduces levels of worry, (2) the worry intervention reduces the severity of persecutory delusions and (3) the reduction in levels of worry leads to a reduction in persecutory delusions [i.e. that worry is a mediator of the effect of the intervention on the important clinical outcome (persecutory delusions)]. It is reasonably straightforward to establish the efficacy of the intervention in terms of its influence on worry (the intermediate outcome) and on persecutory delusions (the ‘final’ outcome). It is also straightforward to show whether or not levels of worry are associated or correlated with levels of persecutory delusions. However, this association could arise from three sources (not necessarily mutually exclusive): worry may have a causal influence on delusions; delusions may have a causal influence on worry; and there may be common causes of both (some or all of them neither measured nor even suspected to exist). As the randomised intervention is very clearly targeting worry, it seems reasonable to assume that worry is the intermediate outcome (mediator) leading to persecutory delusions, and not vice versa. Ruling out or making adjustments for common causes (confounding) is much more of a challenge. Another challenge is measurement error in the intermediate outcome (mediator). Finally, we have to deal with missing data (missing values for the mediator, missing values for the final outcome or both). Methods for tackling these problems will be discussed in detail in Chapter 2.

The evaluation of mediation is a key component of mechanisms evaluation for complex interventions. A second important aspect of mechanisms evaluation is the role of psychotherapeutic processes as a possible explanation of treatment effect heterogeneity. This answers the question ‘What factors make the treatment work better?’. For example, how might the treatment effect be influenced by characteristics of the therapeutic process such as the amount of therapy received (sessions attended), adherence to treatment protocols (the fidelity/validity of the treatment received13) or the strength of the therapeutic alliance between therapist and patient?14 Although they are modifiers of the effects of treatment, such process measures are integral to the therapy (they do not precede the therapy) and cannot be regarded as predictive markers or treatment moderators. One major hurdle in the evaluation of the role of these process measures is that they are not measured (or even defined) in the absence of therapy; they cannot be measured in the control participants. One cannot measure the strength of the therapeutic alliance in the absence of therapy. Second, the potential effect modifiers are likely to be measured with a considerable amount of measurement error (number of sessions attended, for example, is only a proxy for the ‘dose’ of therapy; rating scales for strength of the therapeutic alliance will have only modest reliability). Third, there are also likely to be hidden selection effects (hidden confounding). A participant may, for example, have a good prognosis under the control condition (no treatment). If that same person were to receive treatment, however, the factors that predict good outcome in the absence of treatment would also be likely to predict good compliance with the therapy (e.g. number of sessions attended or strength of the therapeutic alliance). Severity of symptoms or level of insight, measured at the time of randomisation, for example, is likely to be a predictor of both treatment compliance and treatment outcome. They are potential confounders. If we were to take a naive look at the associations between measures of treatment compliance and outcomes in the treated group, then we would most likely be misled. These associations would reflect an inseparable mix of selection and treatment effects (i.e. the inferred treatment effects would be confounded). We can allow for confounders in our analyses, if they have been measured, but there will always be some residual confounding that we cannot account for. The fourth and final challenge to be considered here arises from missing outcome data. Data are unlikely to be missing purely by chance. Prognosis, compliance with allocated treatment and the treatment outcome itself are all potentially related to loss to follow-up, which, in turn, leads to potentially biased estimates of treatment effects, their mediated parts and estimates of the influences of treatment effect modifiers. These methodological issues will be discussed in detail in Chapter 3.

Personalised therapy

The explicit notion of the heterogeneity of the causal effect of treatment on outcome, and the search for patient characteristics (i.e. markers) that will explain this heterogeneity and will be useful in subsequent treatment choice, is at the very core of what we label as personalised therapy. This answers our question ‘Whom does the treatment work for?’.

Other names that have been used for this activity in the wider context of medical and health-care research are ‘personalised medicine’, ‘stratified medicine’ (stratification implying classifying patients in terms of their probable response to treatment), ‘predictive medicine’, ‘genomic medicine’ and ‘pharmacogenomics’. None of these names, on its own, is fully satisfactory, but taken together they convey most of the essential information. We start by assuming that there is treatment effect heterogeneity: a given treatment will be more beneficial for some patients than for others. If we have a second competing treatment available for the same condition, then we also assume that it too will display varying efficacy but, with luck, it will be (most) beneficial for the patients for whom the first treatment seems to offer little promise (a distinct possibility if the second treatment has a completely different mechanism of action). A related approach might be motivated by reduction in the incidence of unpleasant or life-threatening side effects (possibly more relevant to drug treatment than psychotherapies but they should always be borne in mind). None of this knowledge is of any practical value, however, unless we can identify (in advance of treatment allocation) which patients might gain most benefit from each of the treatment options. We need access to pre-treatment characteristics (markers, often biological or biomarkers, but also including social, demographic and clinical indicators) that singly or jointly predict (i.e. are correlated or associated with) treatment effect heterogeneity. These so-called predictive markers (more familiarly known as treatment moderators in the psychological literature) can be identified through prior biological or psychological (cognitive) theory concerning treatment mechanisms or through statistical searches but, before they can be incorporated into a large clinical trial to validate their use, the preliminary evidence for their predictive role needs to be pretty convincing. If the predictive biomarker passes this preliminary hurdle, our contention is that a large trial of efficacy, designed to evaluate both treatment effect heterogeneity and corresponding mediational mechanisms, will provide a richer and more robust foundation for personalised or stratified therapy. We will return to these thoughts in detail in Chapter 5.

Markers and their potential roles

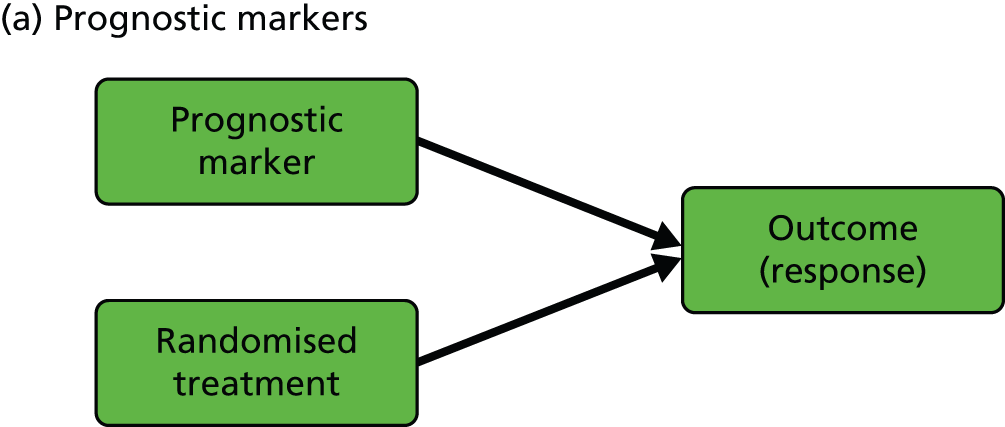

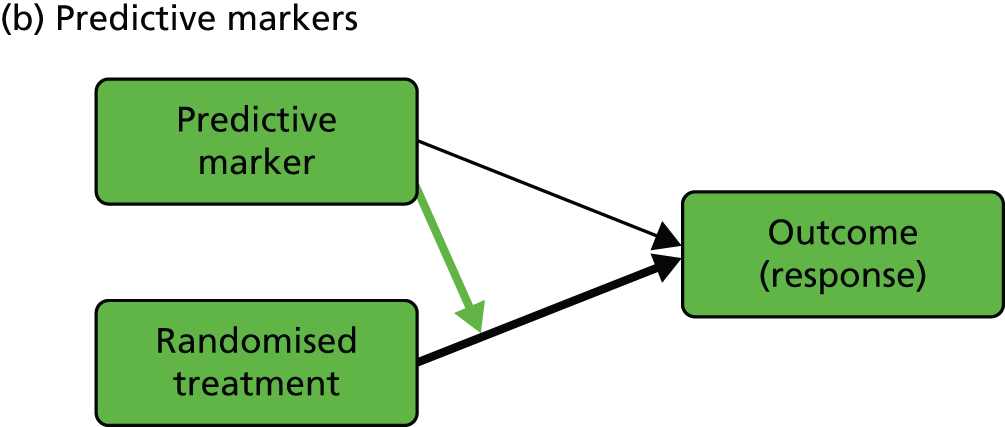

We start with the rather confusing terminology and with definitions provided by Simon:15 ‘a “prognostic biomarker” is a biological measurement made before treatment to indicate long-term outcome for patients either untreated or receiving standard treatment’ and ‘a “predictive biomarker” is a biological measurement made before treatment to identify which patient is likely or unlikely to benefit from a particular treatment’. In our view, both definitions need to be clarified and expanded in the context of a given evaluative RCT (we will interpret ‘biological marker’ here as meaning any type of useful biological, psychological, social or clinical information). Let us assume that we are planning to run a controlled psychotherapy trial: supposedly active therapy [plus treatment as usual (TAU)] versus TAU alone. Here, a purely prognostic marker would be a marker for which the effect on patient outcome is identical in the two arms of the trial (i.e. we would need to include no interactions between marker and treatment but only the independent effects of marker and randomised treatment in, for example, a generalised linear model to describe the treatment outcomes). Equivalently, the treatment’s effect on outcome does not vary with (is independent of) the value of a prognostic marker. On the other hand, the treatment’s effect is dependent on (predicted by) the value of a predictive marker (i.e. there would be a need to include, and estimate, the size of interactions between marker and treatment in the model to describe the treatment outcomes). In the extensive literature in the behavioural and social sciences (and mental health trials) a predictive marker would be called a treatment moderator;16,17 the baseline marker moderates or modifies the effect of the subsequent treatment. Our prognostic marker is usually referred to as a predictor or predictive variable. To add to the confusion, these definitions imply not that a predictive marker has no prognostic value but simply that its prognostic value is different in the two arms of the trial (another interpretation of the marker by treatment interaction). Graphical representations of the effects of prognostic and predictive biomarkers are illustrated in Figure 1.

FIGURE 1.

Graphical representations of the effects of (a) prognostic and (b) predictive markers. Black arrows indicate causal effects (the heavy lines being the ones of particular interest); the green arrow indicates moderation of the effect of treatment on outcome.

In the present report, we are primarily concerned with the distinction between prognostic and predictive markers, but of course we also discuss markers of mediational mechanisms and therapeutic processes. We use the terms ‘prognostic marker’ and ‘predictive marker’ to indicate measurements made prior to treatment allocation (i.e. a subset of the more general profile of potential baseline covariates in a conventional randomised trial, genetic markers being particularly prominent). Returning to measurements made after the onset of treatment, the third type of biomarker that would be potentially very useful is a marker of the function targeted by the treatment (i.e. the putative mediator). In some situations, investigators might wish to evaluate and promote the putative treatment effect mediator as a surrogate outcome; however, the evaluation of surrogate outcomes is not a topic that we wish to pursue here.

The rest of the report: where do we go from here?

In the next chapter we discuss the statistical evaluation of treatment effect mediation in some detail, starting with long-established strategies from the psychological literature,17–19 with the possibility of using prognostic markers for confounder adjustment, introducing definitions of direct and indirect effects based on potential outcomes (counterfactuals) (together with appropriate methods for their estimation), and then moving on to methods allowing for the possibility of hidden confounding between mediator and final outcome. In Chapter 3 we start by criticising the usual naive approach to evaluating the modifying effects of process measures (correlating their values with clinical outcomes in the treated group, with no reference to the controls) and then describe modern methods developed from the use of IVs14 and principal stratification. 7,11 Chapter 4 extends the ideas from these two chapters to cover trials involving longitudinal data structures (repeated measures of the putative mediators and/or process variables, as well as of clinical outcomes). Chapter 5 considers the challenge of trial design in the context of the use of IV methods and principal stratification. A considerable proportion of the discussion within this chapter will focus on EME trials for personalised therapy. Many of the statistical methods for mechanisms evaluation in EME trials (in fact all of them) require assumptions that are not testable using the data at hand. We will discuss sensible strategies for the reporting and interpretation of a given set of trial results (Appendices 5 and 6 summarise the results of a series of Monte Carlo simulations to assess the sensitivity of the results to departures from these assumptions). In Chapter 6, we finish with a general overview of our results and discussion of possibilities for future research but, perhaps more importantly, we provide a practical guideline for the design and analysis of EME trials with accompanying software scripts to help readers implement their own analysis strategies.

Chapter 2 Treatment effect mediation

Putative mediators

We have already briefly described the rationale for the MRC/NIHR WIT. Here, the mediational hypothesis is that the therapeutic intervention will lower the levels of worry, which, in turn, will lead to lowering of the levels of persecutory delusions. Worry is the proposed mediator: the treatment effect mechanism.

The MRC COMMAND trial was a multicentre RCT of cognitive therapy to prevent harmful compliance with command hallucinations. 20 Here, the cognitive therapy was aimed at reducing the perceived power of the auditory hallucinations (voices), which, in turn, would lead to lowering of the risk of the patient complying with voices telling him or her to harm him- or herself or others. The putative mediator is the perceived power of the voices.

Another example comes from the MRC Motivational Interviewing for Drug and Alcohol misuse in Schizophrenia (MIDAS) trial. 21 The intervention being evaluated was a combination of motivational interviewing and cognitive therapy to improve psychotic symptoms in dual-diagnosis patients (a combination of psychosis and substance abuse). In cannabis users, for example, the aim was to test whether or not the intervention reduced cannabis use, which, in turn, would lead to reduction in psychotic symptoms. The level of substance misuse was the putative mediator.

The MRC Pre-school Autism Communication Trial (PACT) was a two-arm RCT of about 150 children with core autism aged 2 years to 4 years 11 months. 22 Its aim was to evaluate a parent-mediated communication-focused treatment in in these children. After an initial orientation meeting, families attended twice-weekly clinic sessions for 6 months followed by a 6-month period of monthly booster sessions. Families were asked to undertake 30 minutes’ daily home practice between sessions. The primary outcome of the trial was the Autism Diagnostic Observation Schedule-Generic social communication algorithm score,23 which is a measure of the severity of the autism symptoms. Secondary outcomes included a measure of parent–child interaction, which was assessed through video ratings. Previous analysis of PACT data has shown that children and parents assigned to the PACT intervention showed some reduction of a modified Autism Diagnostic Observation Schedule-Generic algorithm score compared with those assigned to TAU, although the effect size was not statistically significant. 22 However, the between-group effect size for the secondary outcomes of parental synchronous acts (as a proportion of total parent communication acts) and child initiations (as a proportion of total child acts) were substantial and statistically significant. In the evaluation of treatment effect mechanisms the goal is to understand the two-step mechanism by which the intervention influences the child behavioural outcome with the parent and then, in turn, generalises to behaviour with the external Autism Diagnostic Observation Schedule-Generic assessor.

In summary, in the above trials the aim was to evaluate and estimate the size of the indirect effect of the intervention (i.e. that explained by changes in the proposed mediator). The direct effect (that explained by a mechanism or mechanisms not involving the proposed mediator), although important, was not the main focus of interest.

In some cases, however, the reverse scenario is true. For example, Prevention of Suicide in Primary Care Elderly: Collaborative Trial (PROSPECT) was a prospective, randomised trial designed to evaluate the impact of a primary care-based intervention on the reduction of major risk factors (including depression) for suicide in later life. 24 The trial was intended to evaluate an intervention based on treatment guidelines tailored for the elderly with care management compared with TAU. Bruce et al. 24 reported an ITT analysis for a cohort of participants recognised as being depressed at the time of randomisation. Data from this trial have also been analysed in detail in a series of papers25–28 developing and illustrating the estimation of direct and indirect treatment effects in RCTs in the presence of possible hidden confounding between the intermediate and the final outcome. An intermediate outcome (putative mediator) in PROSPECT was whether or not the trial participant adhered to antidepressant medication during the period following allocation of the intervention. The question here is whether or not changes in medication adherence following the intervention might explain some or all of the observed (ITT) effects on clinical outcome. The focus is on the estimation of direct effects of the intervention, that is the effects of CBT that are not explained by changes in medication. We call these intermediate variables ‘nuisance mediators’, as the intervention is not hypothesised to work directly through them, and we seek to estimate direct effects as our primary focus, the indirect effect being considered of secondary importance.

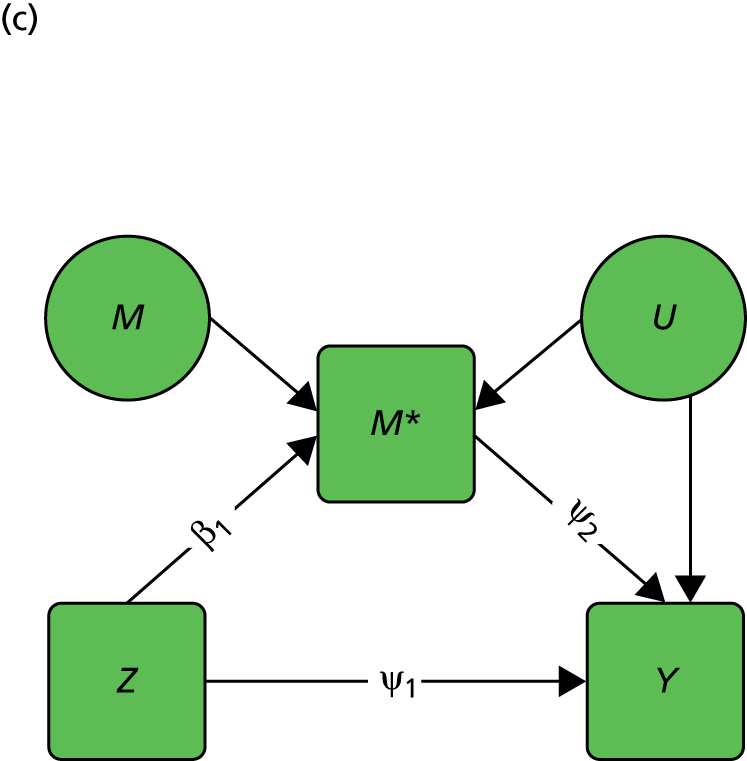

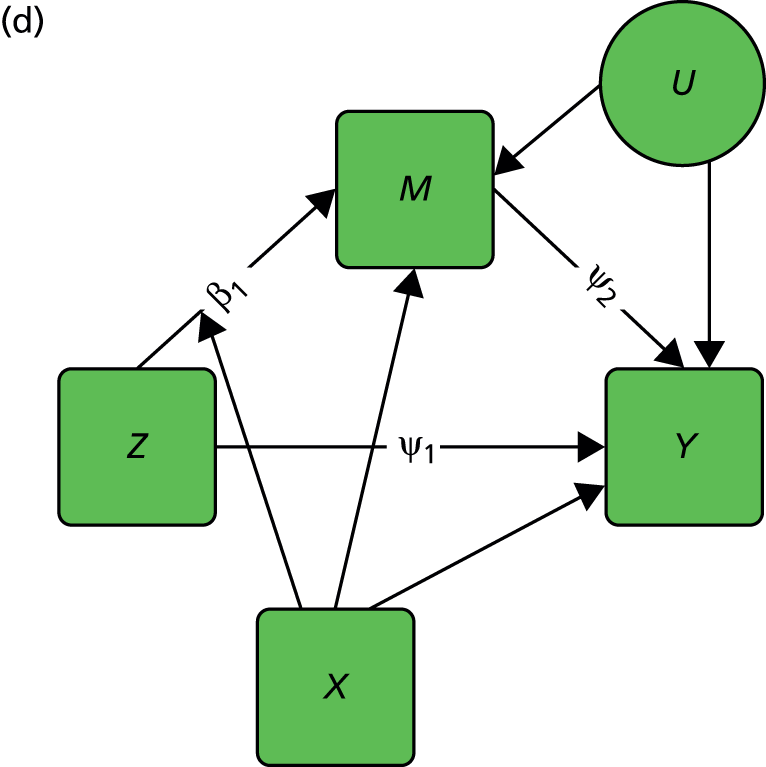

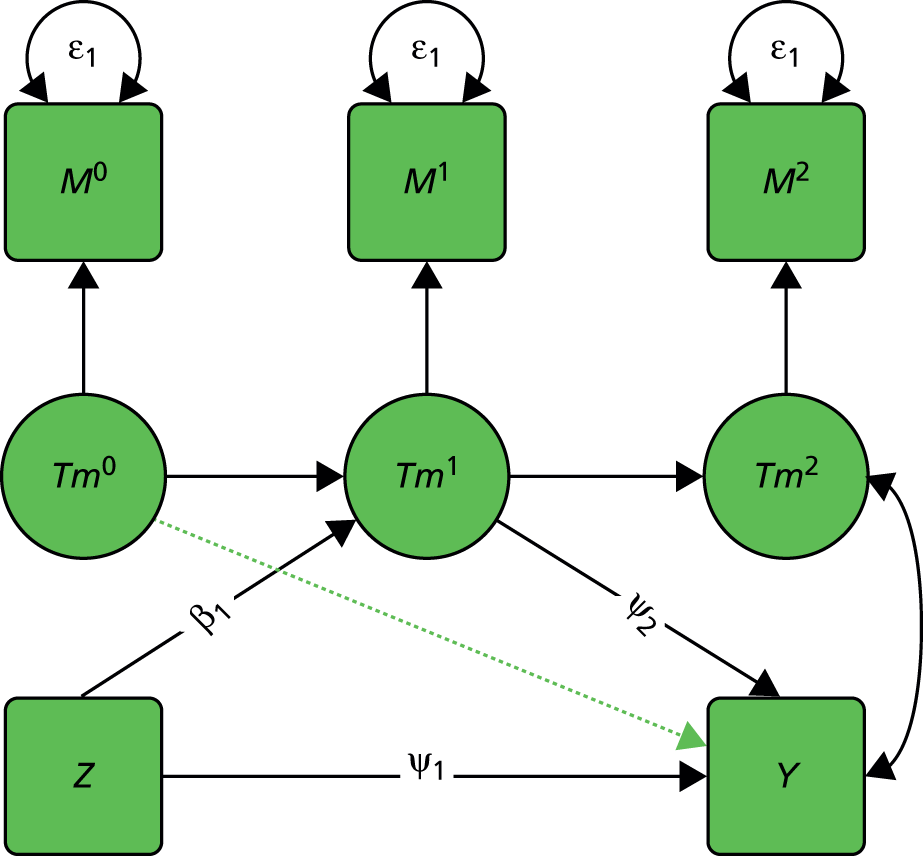

A brief description of mediation and moderation

We start with a trial in which there are no measured baseline covariates. Consider the simple directed or causal inference graph (causal graph) given in Figure 2a. In such a graph, each arrow represents an assumed causal influence of one variable on another. Randomised treatment allocation (Z) has an effect on an intermediate outcome (M), which, in turn, has an effect on the final outcome, Y. There is also a direct effect of Z on Y. The part of the influence of Z on Y that is explained by the effect of Z on M is an indirect or mediated effect. The intermediate variable, M, is a treatment effect mediator. The key thing to remember is that Figure 2a is representing structural or causal relationships, not merely patterns of association. The effect of Z on M is the effect of manipulating Z, that is, setting Z to equal a particular value z. Similarly, the effect of M on Y is the effect of manipulating M on the outcome Y. It is not necessarily the same as the observed association between M and Y given an observed value of the mediator that has not been manipulated by the investigator.

FIGURE 2.

Causal path diagrams relating randomised treatment allocation (Z) to an intermediate outcome (M) and a final outcome (Y). (a) Diagram showing mediation of Z on Y through M; (b) mediation with unmeasured confounding U between mediator and outcome; (c) mediation with measurement error in M, where M* is the error-prone measure; (d) mediation with the interaction between X and Z as a valid instrumental variable; and (e) mediation with moderation of all effects by X.

The important skill that an investigator needs in interpreting directed graphs such as Figure 2a, is to think automatically ‘What vital component might be missing?’ or ‘What’s not in the graph?’. In our experimental set-up (the RCT), we are able to manipulate Z through random allocation (so we can assume that there are no confounders of the effects of Z on either M or Y). However, typically, we have no control over either M or Y (they are both, in fact, outcomes of randomisation). Therefore, there may be unobserved variables, other than treatment (Z), that influence both M and Y. Let these unobserved influences be represented by the variable U. The directed graph for this situation is shown in Figure 2b. Let us further suppose that we cannot measure M directly (i.e. without error), but we have an error-prone proxy, M*. The corresponding graph is now Figure 2c.

Let us assume that a data analyst uses simple (multiple) linear regression or structural equation modelling (SEM) approaches to estimate the size of the effects illustrated by Figure 2a. Can one interpret the resulting regression coefficients as causal effects? Yes, if and only if the model represented by Figure 2a is the correct one. However, if either Figure 2b or c, or a more complex model, is correct then a naive analysis based on Figure 2a will lead to invalid results.

Let us finally assume that we have measured an important baseline covariate, X. Suppose the effect of Z on M is influenced by the value of X. The covariate X is said to be a moderator of the effect of Z on M. In addition, X itself is assumed to influence the values of M and to directly influence the values of Y, but we assume here that there are no interactions between covariate and treatment for these components of the model. The resulting graph (assuming M is measured without error) is given in Figure 2d. By convention, a causal graph or diagram with multiple arrows pointing at a single variable implicitly allows for interactions between the causal variables. 5 To explicitly reflect the absence/presence of interactions in our models, we depart from this convention and indicate the interaction between X and Z on M (i.e. the moderation of the effect of Z on M by X) by a single-headed arrow from X to the causal pathway from Z to M. This approach is the one commonly taken in the path analysis/SEM literature in the psychological and social sciences. Again, when interpreting Figure 2d we should be carefully considering what components are missing (the two additional arrows for the interactions that may have incorrectly been assumed to be absent, for example the green arrows in Figure 2e, as well as the ones that are drawn). The missing paths are indicative of some of the vital assumptions on which any valid analysis might be made.

Brief historical survey

At present the field comprises two distinct traditions. The older and more popular approach, particularly in the social and behavioural sciences, is concerned with the estimation of direct and indirect effects through the use of path analysis (and associated regression models) and SEM. More recently, the ‘causal inference’ approach is being developed by statisticians, econometricians and others interested in explicitly defining the assumptions needed for valid inferences concerning the causal effects of treatments/interventions.

One key difference between the two approaches is in the degree of care taken in the specification of the statistical models so that parameter estimates can be legitimately interpreted as causal effects. In the former approach, the assumptions are sometimes implicit (and users are frequently unaware of what they are or of their implications). In the newer approach, every attempt is made to make the assumptions explicit (and open to challenge). We will refer to the latter as ‘causal mediation analysis’, keeping in mind that no mediation analysis makes sense in the absence of any attempt to infer causality.

As we shall discuss in more detail in the following sections, we summarise that the traditional approach has four main differences from the casual mediation analysis methods:

-

It assumes no unmeasured confounding between mediator and outcome.

-

It assumes that there are no interactions between exposure and mediator on outcome.

-

It does not easily extend to non-linear models.

-

It assumes that all the statistical models are correctly specified.

Traditional methods: the Baron and Kenny approach

The traditional methods of statistical mediation analysis are discussed in detail by the psychologist David MacKinnon,19 whose methodological work has been very influential in this area (e.g. MacKinnon and Dwyer29). Papers by Judd and Kenny,18 and particularly by Baron and Kenny (B&K),16 have also been extremely influential. We introduce some notation whereby the subscript i denotes an individual i, Yi represents the observed outcome, Mi represents the observed value for the mediator, Zi represents treatment – for example, for simplicity, an intervention (Zi = 1) or control (Zi = 0) – and Xi represents a vector of baseline covariates.

In their 1986 paper, B&K16 set out three steps in the evaluation of mediation through the use of appropriate linear regression models: (1) demonstrate that treatment, Z, has an effect on the outcome, Y; (2) demonstrate that treatment, Z, has an effect on the putative mediator, M; and (3) demonstrate that the mediator, M, has an effect on the outcome, Y, after controlling for treatment, Z.

Many authors, including MacKinnon,19 have argued that the first step is not necessary. It implies that the evaluation of mediation is of value only when we have a statistically significant total treatment effect on the final clinical outcome. However, analysis of mediation might also tell us why a trial result is negative. Is it because the intervention has failed to shift the mediator or could it be that the mediator failed to influence the outcome? Or is there a harmful direct effect of the intervention that counterbalances the benefits attained via the mediator?

The B&K procedure for statistical mediation analysis16 uses two regression models:

The first is a model for the mediator conditional on treatment and the set of covariates (note that the original B&K procedure did not explicitly include covariates). The second model is the expectation of the outcome conditional on the mediator, covariates and treatment. From these, the direct effect of treatment on outcome would be ψ1 and the indirect effect of treatment acting through the mediator would be β1 × ψ2.

The validity of this approach is dependent on three key assumptions, which were introduced in Figure 2:

-

BK1: there are no other common causes of M and Y, that is there is no unmeasured confounding between mediator and the outcomes.

-

BK2: there is no interaction between M and Z on Y.

-

BK3: there is no error in the measurements of M.

Assumption BK3 also applies to Z, of course, but, in the case of a RCT, we assume that random allocation is known and that we are working with ITT effects; however, non-compliance with randomised treatment would complicate the issue if we were concentrating on the effects of actually receiving treatment.

It is clear that, if Figure 2a is the true underlying data-generating model, then we can obtain unbiased estimates of ψ1, β1 and ψ2. However, if Figure 2b or c is the true model, then using the regression model for the outcome outlined previously we can no longer obtain an unbiased estimate for ψ2 or ψ1, so the resulting estimates of the direct and indirect effect are biased.

Assumption BK1 can be made more plausible by the inclusion of measured confounders (such as X in the models above), but the presence of unmeasured variables cannot be unequivocally ruled out, so the results of the mediation analysis should be considered with this caveat in mind. Alternative IV methods have been proposed (see Structural mean models), which allow for valid estimation in the presence of unmeasured confounding by proposing alternative assumptions. 14,30,31

Assumption BK2 rules out the presence of treatment–mediator interactions in the outcome model. If such an interaction were to be included, the second model would become:

This model has an additional parameter, ψ3, and it becomes unclear how this parameter should be incorporated into the definition of direct and indirect effects proposed by the B&K procedure, as, for example, the direct effect ψ1 now also depends on the value of m.

Causal mediation analysis: formal definitions of direct and indirect effects

Alternative parameters for mediation analysis have been proposed in the causal inference literature, which uses the counterfactual framework for defining causal effects. The first rigorous description of the problems arising in the estimation of direct and indirect effects appears to be that provided by Robins and Greenland. 32 A thorough exposition has also been provided by Pearl and his colleagues. 5,33,34 We now review the essence of these proposals, and demonstrate when they are equivalent to the definitions provided previously.

We define the following counterfactual outcomes:

-

Mi(z) represents the mediator with treatment level Zi = z.

-

Yi(z,m) represents the outcome with treatment level Zi = z and mediator level Mi = m.

-

Yi(0) = Yi(0,Mi(0)) represents the outcome if randomised to usual care with mediator Mi(0).

-

Yi(1) = Yi(1,Mi(1)) represents the outcome if randomised to treatment with mediator Mi(1).

Note that these counterfactual outcomes differ from the observed outcomes. In the usual-care arm where Z = 0, Yi = Yi(0) and Mi = Mi(0), so that Mi(0) and Yi(0) are the observed values and Mi(1) and Yi(1) are unobserved. Similarly in the treatment arm where Z = 1, Mi(0) and Yi(0) are unobserved and Yi = Yi(1) and Mi = Mi(1) are observed.

Using the counterfactual definitions, we can define the following causal parameters:5,33

Natural direct effect:

Natural indirect effect:

Controlled direct effect at mediator level m:

The natural direct effect is the direct effect of treatment on outcome, given the ‘natural’ level of the mediator Mi (0), that is the level that the mediator would be at under the usual care condition. The natural indirect effect is the effect of the change in mediator on outcome if receiving treatment (i.e. Z = 1), that is the direct effect of treatment on outcome remains constant but alters the mediator. The controlled direct effect is the direct effect of treatment on outcome at mediator level m. It can easily be shown, as below, that the total effect is the sum of the natural direct effect and the natural indirect effect. 5 When there is no interaction between treatment and mediator, the controlled direct effect is constant at all levels of M and is equal to the natural direct effect. In many of the analyses presented in the present report, we will be assuming the absence of this interaction.

The total effect of randomisation (Z) on outcome (Y) for the ith subject is:

-

Yi(1,Mi(1))−Yi(0,Mi(0)).

Similarly, the effect of Z on the intermediate outcome or mediator (M) is:

-

Mi(1) − Mi(0).

Taking expectations (averaging) over i, and reverting to the usual mathematical notation of E[], we define the ATE on the outcome as:

and the ATE on the mediator as:

Here, the first component of this decomposition is the direct effect of randomisation given M(0) and the second component is the effect of the change in mediator if randomised to receive treatment (i.e. Z = 1). The first of these is the natural direct effect, and the second is the natural indirect effect, as we defined previously.

We define the direct effect of treatment assignment on outcome at mediator level m as Yi (1, m) − Yi (0, m) (i.e. the controlled direct effect, as defined above) and if we are prepared to assume that this does not depend on m then for any m and m′:

This enables us to define the mean direct effect as:

Now, if we define the effect of M on Y via:

where we acknowledge lack of homogeneity of treatment effects (σε2 > 0; E(εi) = 0) but we assume that Cov(εi, (Mi(1) − Mi(0))) = 0 (i.e. that there is no essential heterogeneity as defined in the econometrics literature35). It follows from equations 13 and 15 that:

This is the decomposition from a traditional path analysis model as we observed previously.

It is no coincidence that the decomposition of the causal mediation parameters leads to the same decomposition as from the traditional model. When there is no interaction between treatment and mediator, and the outcome and mediator are continuous so that we have linear models for the expectations, this result will hold. The coefficient for randomisation ψ1 in the model for the outcome Y estimates the controlled direct effect and (equivalently) the natural direct effect, and the β1 × *ψ2 term estimates the natural indirect effect. However, this result only holds under linear models without interaction terms, as we discuss in Estimation and assumptions.

Estimation and assumptions

No hidden confounding or measurement error in the putative mediator

When we have no hidden confounding or any measurement error in the mediator, then the B&K approach yields results that can safely be interpreted as causal. Work on identification and estimation of the natural direct and indirect effects, and controlled direct effects using parametric regression models has been developed by VanderWeele and Vansteelandt. 36,37 Informally this extends the B&K procedure to allow for interactions between mediator and treatment on outcome (we will not pursue the details here). It allows for confounding by observed covariates. It also extends the models to include binary mediators and/or binary or count outcomes; this requires the parameters from the parametric regression models to be combined through the mediation formula to generate the causally defined mediation parameters. In the context of the present project, Emsley et al. 38 have produced the paramed command to implement these estimation procedures in Stata (StataCorp LP, College Station, TX, USA) (see Appendix 1).

Problems arising through omitted common causes (hidden confounding)

Let us briefly consider complete mediation, where there is no direct effect of treatment on outcome. In the absence of any hidden confounding (i.e. no unmeasured common causes of M and Y) we have conditional independence between treatment and outcome: Z╨Y |M, X (here we use the symbol ‘╨’ to mean ‘is statistically independent of’). Now, if we have a source of hidden confounding, U, complete mediation implies Z╨Y |M, X, U. Note that in the presence of U it is not true that Z╨Y |M, X. Examination of partial correlations or the equivalent partial regression coefficients, ignoring U, will lead us astray. Similarly, hidden confounding caused by U will lead investigators astray in using regression or SEM to assess incomplete mediation. Their estimated regression coefficients will be biased. The probable presence of hidden confounding, U, is the reason why the standard SEM approach has doubtful validity.

In a RCT, the mediator and the final clinical outcome are both outcomes of randomisation. The standard regression/SEM approach involves controlling for the mediator (the intermediate outcome) when evaluating the direct effects of randomisation on the final outcome. The potential pitfalls of controlling for post-randomisation variables have been recognised for many years (e.g. Herting39). In the context of the estimation and testing of direct and indirect effects, there are several powerful critiques of the standard methods. 32,40–44 But it is worth noting at this point that it is not SEM as a general technique that is necessarily at fault but that the users of the methodology are frequently fitting the wrong (i.e. mispecified) models. 45 Subject to solving the problems of identification, it is possible to use SEM methodology in an appropriate way (see Coping with hidden confounding).

Coping with hidden confounding

One way around the hidden confounding problem is to assume a priori that there is no direct effect of treatment (i.e. complete mediation). This leads to the use of IV methods with randomisation as the instrument. Briefly, in a standard regression model, if an explanatory variable is correlated with the error term (known as endogeneity) its coefficient cannot be estimated unbiasedly. An IV is a variable that does not appear in the model, is uncorrelated with the model’s error term and is correlated with the endogenous explanatory variable; randomisation, where available, often satisfies these criteria. A two-stage least squares (2SLS) procedure can then be applied to estimate the coefficient. At its simplest, the first stage involves using a simple linear regression of the endogenous variable on the instrument and saving the predicted values. In the second stage the outcome is then regressed on the predicted values, with the latter regression coefficient being the required estimate of the coefficient. This procedure is routinely used by econometricians and further details including the derivation of the standard errors (SEs) are found in standard econometric texts such as Wooldridge. 46

The main concern of this report is evaluating both direct and indirect effects in the presence of hidden confounding between mediator and outcome. IVs provide one method of estimating these effects, although the identification of valid instruments is a major challenge. Of related interest is the pioneering work of Gennetian et al. 47,48 on the use of IV methods to look at the joint effects of two or more putative mediators, where, again, identification of the causal parameters is the major challenge. 49 Here the authors propose to use as instruments baseline covariates that are good predictors of an assumed heterogeneous effect of treatment allocation (i.e. randomisation) on levels of the mediator (i.e. moderators of the effect of treatment on the putative mediator).

Ten Have et al. 25 have recently used G-estimation methods to solve the problem of valid estimation of direct and indirect effects (see also Bellamy et al. 26 and Lynch et al. 27). In this approach we observe treatment-free outcomes in those randomised to the control group and, if we can deduct the effect of treatment from each of the participants allocated to the treatment group to obtain their treatment-free outcomes, then we would expect treatment-free outcome to be independent of randomisation. In essence, G-estimation is a means of finding a treatment effect estimate that makes the treatment-free outcome independent of randomisation. Methods based on 2SLS and extensions of the G-estimation algorithms of Fischer-Lapp and Goetghebeur50 have been described by Dunn and Bentall14 (and been shown, in the case of the appropriate linear models, to be exactly equivalent). Albert51 also used 2SLS estimation. Recent reviews of these methods are provided by Emsley et al. 30 and by Ten Have and Joffe. 52

Measurement errors

In the context of epidemiological and psychological/sociological modelling, measurement and misclassification errors in explanatory variables (putative risk factors as well as confounders) are a well-known threat to the validity of causal inference,53–55 although the problems are not so well appreciated among the applied clinical research community as one might hope. An implicit assumption in our models of mediation has been that the mediator is measured without error. It is highly likely that this assumption is invalid (or not even approximately true in the case of many psychological markers). Although it is difficult, if not impossible, to verify, measurement and misclassification errors might be a greater problem in this area than unmeasured confounders. How might one cope with this threat? One possibility is replication (either independent repeated measurements of the mediator in question or the use of a panel of distinct instruments to measure it) and to explicitly use latent variable models. 55 We will not pursue this option here but instead will discuss a similar approach using PACT later in this chapter. Another possibility is to use IV (2SLS) procedures, even when there is no hidden confounding suspected. The use of IV methods is a well-known tool for coping with biases caused by random measurement errors in explanatory variables,55–57 although their explicit use in allowing for measurement errors in putative mediators and process variables seems to be quite rare. 14,58,59 So some of the changes in inference about mediation that might arise from a comparison of naive B&K analyses with IV-based analyses might arise from this source rather than from unobserved confounders. Moreover, in a typical trial we commonly have additional information about the reliability of measurement of the mediator, and further information about other possible mediators. In simple single-mediator problems, failure to account for measurement error in the mediator results in systematic underestimation of the mediated path. 14,60 In the multiple mediators case, biases in either direction are possible. This suggests that sources of information on measurement error can be exploited to provide adjusted estimates of mediation without the cost of the much increased uncertainty of the IV method.

Structural mean models

A structural mean model is a model relating the potential outcomes Yi (z, m) to one another or to Yi (0, 0). If we assume a linear model for the potential outcome Yi (0, 0) in terms of a set of measured baseline covariates, Xi (including a vector of 1s), then as Lynch et al. ,27 we could write:

for all values of z and m, with ε being independent of Z but not of X and M, that is E[εi|Z = z] = 0.

However, it is unnecessary to model counterfactuals that would not have been observed under either randomisation. Instead, we can write this as follows, where the aim is to estimate the causal parameters ψ1 and ψ2:

where E[ei] = 0 and σe2 > 0. This term allows for treatment effect heterogeneity, but assuming that Cov(ei, (Mi(1) – Mi (0))) = 0 (no essential heterogeneity as previously35).

At the same time we have a model for M(z), which for quantitative M(z) might be:

Again, there is a random departure term, ωi (z), with zero expectation. The valid estimation of β1 and the effects of the baseline covariates on M (i.e. the θ) is very straightforward by linear regression. Unfortunately, the estimation of ψ1 and ψ2 is much more problematic. In the presence of hidden confounding between M and Y, these two parameters are not identified. Often, however, we can improve our ability to identify and estimate ψ1 and ψ2 by finding a moderator of the effect of Z on M (i.e. a predictive marker) and introducing the moderator by randomisation interaction into the model for M(z).

Model identification and parameter estimation: utilisation of baseline covariate (moderator) by randomisation interactions

Consider a binary pre-randomisation covariate, X. This covariate is assumed (or has been shown) to be a moderator of the effect of treatment (i.e. it is a predictive marker) on outcome (Y). We make the crucial assumption that X influences the total treatment effect (τ) through its effect on the level of mediation (β1) but that X does not moderate (modify) either the direct effect of the intervention (ψ1) or the effect of the mediator on the outcome (ψ2), as illustrated in Figure 2d.

For level 1 of the moderator (X = 1), we have a level specific total effect of Z on Y:

Similarly, for level 2 of the moderator (X = 2):

(being careful to distinguish the meaning of the new parameter, ψ3, from the earlier use of ψ3 to describe a randomisation by mediator interaction on the outcome).

Clearly, τ1 – τ2 = (ψ2 – ψ3)β1, and it immediately follows that β1 = (τ1 – τ2)/(ψ2 – ψ3). Recall that τ1, τ2 (the level-specific effects of randomisation on outcome), ψ2 and ψ3 (the level-specific effects of randomisation on the mediator) may all be estimated by regressing Y and M on Z separately at each level X = 1,2 (possibly adjusting for other covariates). Therefore, β1 is now identified, as is ψ1 (by substitution back into either of the above equations).

Thus, we have managed to allow for hidden confounding. Note, too, that, apart from possible lack of precision, the effects of the treatment on the mediator (ψ2 and ψ3) will not be affected by random measurement error in the mediator (they will be consistent). So, we have also dealt with imprecision in the mediator measurements. But the Achilles heel of this solution is finding convincing treatment moderators, particularly when their influence on the effects of treatment on outcomes is expected to be fully explained by the moderation of the treatment effects on the putative mediator (see Chapter 5).

If instead the baseline covariate, X, has many levels then, in general, τx = ψ1 + ψ(x + 1)β1 and so β1 and ψ1 are, respectively, the slope and intercept of the straight line relating the ATE (τ) at each level of X to the average effect of treatment on the mediator (ψx+ 1) at that level of X. This approach has much in common with the meta-analytic regression techniques for the evaluation of surrogate outcomes. 3,61–63 We note, again, that if a proxy for the mediator (M*) is simply the true mediator subject to random measurement error, then using M* rather than M itself will still yield valid causal effect estimates, as will the IV methods. 59,61

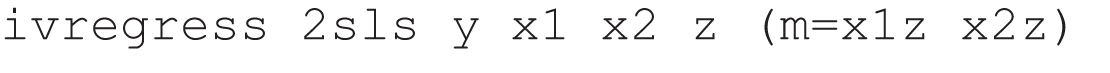

As the baseline covariate is influencing the size of the effect of treatment on the mediator, we have an X by Z interaction in the structural model for M(z). There is no interaction in the model for Y(z,m), and therefore the interaction is an IV (its only influence on outcome is through the mediator, as stated in our previous definition). So, if we have baseline covariates (e.g. X1 and X2), then at an individual level we can fit an equivalent IV model through the use of 2SLS. 14,51 In Stata, for example, we could use the following ivregress command:

where x1z and x2z are the products of x1 or of x2 and randomisation, respectively. For a binary mediator, we might wish to use a control function approach; these are an additional function which when added to the standard regression equation removes the endogeneity because they account for the correlation between the error term and the unobserved part of the outcome. 64 A typical Stata command would be:

Binary mediators: an alternative two-stage least-squares estimation procedure

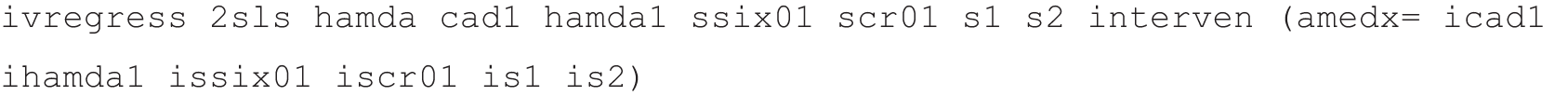

Elsewhere (Emsley et al. 65), we demonstrate both mathematically and through Monte Carlo simulation studies that the G-estimation procedure as described by Ten Have et al. 25 is identical to an IVs 2SLS estimation procedure using a function of the subject’s compliance score as an IV for M. Assuming that a set of covariates, X, contain the required moderators of the treatment effect on the mediator, this procedure can by fitted using standard statistical software, for example using the following procedure:

-

Fit a model for the probability that Mi = 1 given the covariates, Xi, for those in the intervention group (i.e. Pr[Mi = 1|Xi, Zi = 1]) using a logistic regression, and predict this probability for everyone in the trial.

-

Fit a model for the probability that Mi = 1 given the covariates, Xi, for those in the control group (i.e. Pr[Mi = 1|Xi, Zi = 0]) using a logistic regression, and, again, predict this probability for everyone in the trial.

-

Calculate cscore = (Zi – q){Pr[Mi = 1|Xi, Zi = 1] – Pr[Mi = 1|Xi, Zi = 0]} for each subject in the whole sample (where q is the proportion of the subjects randomised to receive treatment and the difference between the probabilities predicted by steps 1 and 2, Pr[Mi = 1|Xi, Zi = 1] – Pr[Mi = 1|Xi, Zi = 0], is the subject’s compliance score, denoted cscore).

-

Use a 2SLS procedure with cscore as the instrument for Mi (allowing for covariates, Xi, in both stages of the estimation).

Application of the alternative two-stage least squares algorithm

Although we do not believe that the present report is the right place to formally describe the mathematical and statistical equivalence of our 2SLS procedure and the G-estimation algorithm described by Ten Have et al. ,25 readers may find it useful to see an empirical demonstration of their equivalence. We do this by analysing the data from PROSPECT.

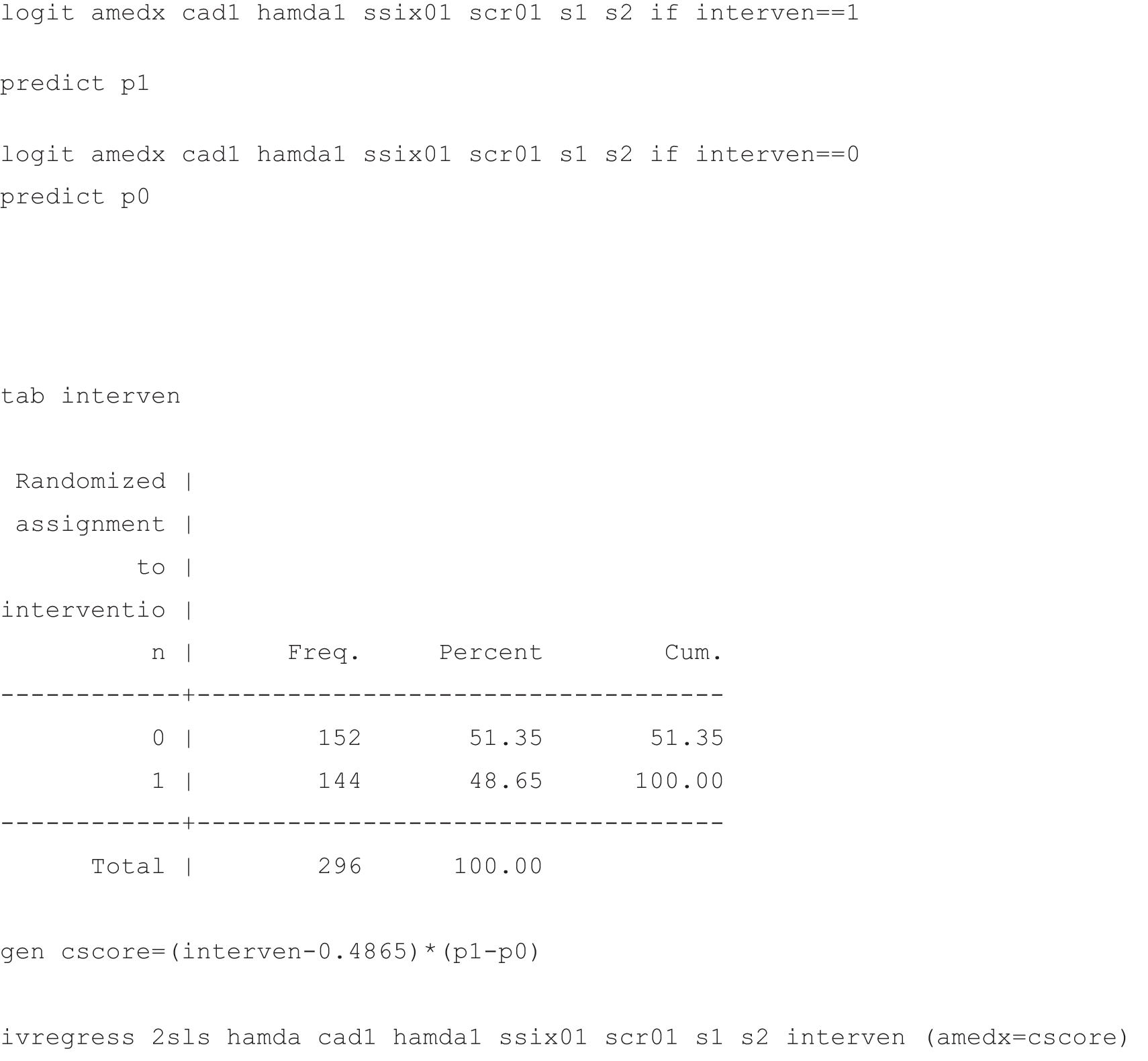

Data from this trial are available on the Biometrics website (www.biometrics.tibs.org/datasets/060225CF_biomweb.zip) as supplementary material to the paper by Ten Have et al. 25 Table 1 summarises these data and comprises information on the 297 depressed elderly trial participants with complete outcome data (here the Hamilton Depression Rating Scale66 score at 4 months after randomisation, the variable hamda). Here we use variable labels as provided in the Biometrics file. The baseline covariates are site (used in our analyses as the categorical factor site or as two dummy variables s1 and s2), previous use of medication (scr01), use of antidepressants at the time of the baseline assessment (cad1, scored from 0 to 5), a dichotomised measure of suicidal ideation at baseline (ssix01), based on the Scale for Suicidal Ideation67 and the Hamilton Depression Rating Scale total at baseline (hamda1). Medication adherence following treatment allocation (interven) is recorded by the binary variable amedx.

| Variable | Site 1 | Site 2 | Site 3 | |||

|---|---|---|---|---|---|---|

| Control (N = 53) | Intervention (N = 53) | Control (N = 57) | Intervention (N = 54) | Control (N = 4) | Intervention (N = 38) | |

| Baseline characteristics: n (%) | ||||||

| Antidepressant use, cad1 | 22 (41.5) | 18 (34.0) | 25 (43.9) | 25 (46.3) | 25 (59.5) | 21 (55.3) |

| Previous medication, scr01a | 27 (50.9) | 24 (45.3) | 25 (43.9) | 28 (51.9) | 29 (69.1) | 20 (52.6) |

| Suicidal ideation, ssix01 | 9 (17.0) | 13 (24.5) | 12 (21.1) | 18 (33.3) | 13 (31.0) | 16 (42.1) |

| Post-randomisation adherence to antidepressant medication: n (%) | ||||||

| Amedx | 20 (37.7) | 44 (83.0) | 19 (33.3) | 45 (83.3) | 30 (71.4) | 34 (89.5) |

| Hamilton depression scores: mean (SD) | ||||||

| At baseline, hamda1 | 16.48 (5.33) | 18.11 (6.15) | 17.25 (5.26) | 19.87 (6.40) | 18.62 (6.32) | 18.74 (5.85) |

| At 4 months, hamda | 13.42 (8.12) | 11.98 (7.75) | 14.10 (8.55) | 12.12 (7.29) | 12.98 (8.53) | 9.97 (6.92) |

There appears to be a beneficial effect of the intervention on the 4-month Hamilton Depression Rating Scale score, but there is also a clear effect of intervention on adherence to antidepressant medication. Could this be explaining the observed ITT effect on outcome? In our analyses, reported in Table 2, similar to previous authors, we make no attempt to allow for the clustering of the data within primary care practices.

| Estimation method | Estimate | SE | 95% CI |

|---|---|---|---|

| ITT effect | –3.15 | 0.82 | –4.76 to –1.53 |

| Effect of mediator on outcome (ψ2) | |||

| G-estimation | –1.975 | 2.313 | –6.509 to 2.560 |

| 2SLS using function of compliance score as IV | –1.975 | 2.401 | –6.680 to 2.730 |

| 2SLS using interactions as IVs | –1.953 | 2.714 | –7.294 to 3.388 |

| Regression as in B&K | –1.244 | 1.092 | –3.394 to 0.906 |

| Direct effect of the intervention on outcome (ψ1) | |||

| G-estimation | –2.367 | 1.274 | –4.864 to 0.130 |

| 2SLS using function of compliance score as IV | –2.367 | 1.316 | –4.946 to 0.212 |

| 2SLS using interactions as IVs | –2.376 | 1.350 | –5.032 to 0.281 |

| Regression as in B&K | –2.656 | 0.926 | –4.479 to –0.832 |

Ten Have et al. ’s original analysis split the baseline covariates into two sets. 25 One set is used in the logistic model to calculate the compliance score. The other set is used in the linear outcome model. We used all the baseline covariates in both steps (analogous to the standard 2SLS procedures). We compared the results of using this G-estimation procedure with those using the standard B&K regression, those from a conventional 2SLS run using baseline covariate by intervention interactions as instruments and, finally, using our modified 2SLS procedure using the compliance score as the IV.

First, we translated the Ten Have et al. 25 SAS G-estimation programs (available in the ZIP file from the Biometrics website) into Stata do files. We do not provide any details here (these available from the authors on request). We simply present the results.

The B&K regression is as follows:

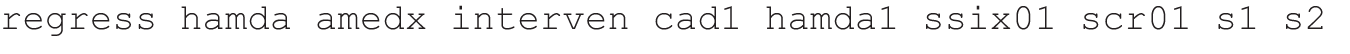

The standard 2SLS regression, using intervention by covariate interactions (the products icad1 ihamda1 issix01 iscr01 is1 is2) as instruments, is:

The modified 2SLS algorithm, using the compliance score as instrument, was operationalised as follows: