Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number 11/129/195. The contractual start date was in March 2013. The draft report began editorial review in February 2015 and was accepted for publication in April 2015. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Barnaby C Reeves reports receiving grants from the National Institute for Health Research Health Technology Assessment programme during the conduct of the study; the National Institute for Health Research grants (paying for his time through his academic employer) for various ophthalmological studies, including ones investigating wet age-related macular degeneration; personal fees from Janssen-Cilag outside the submitted work; and membership of the Health Technology Assessment Commissioning Board and Systematic Reviews Programme Advisory Group. In particular, he is a coinvestigator on the National Institute for Health Research-funded IVAN trial (a randomised controlled trial to assess the effectiveness and cost-effectiveness of alternative treatments to Inhibit VEGF in Age-related choroidal Neovascularisation; ISRCTN92166560) and is continuing follow-up of the IVAN trial cohort. Ruth Hogg reports she received grants and personal fees from Novartis Pharmaceuticals UK, outside the submitted work. Chris A Rogers reports she received a fee from Novartis Pharmaceuticals UK for a lecture unrelated to this work. Simon P Harding reports grants from the National Institute for Health Research during the conduct of the study. Usha Chakravarthy reports membership of the Health Technology Assessment Interventional Procedures Panel.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2016. This work was produced by Reeves et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Introduction

Background

Wet, or neovascular, age-related macular degeneration (nAMD) is a common condition which can cause severe sight loss and blindness. It occurs as a result of a pathological process in which new blood vessels arising from the choroid breach the normal tissue barriers and come to lie within the subpigment epithelial and subretinal spaces. These new blood vessels leak fluid and, because they are fragile, they can bleed easily. The collection of fluid or blood between the tissue layers and within the neural retina is incompatible with normal eyesight. Other variants of nAMD which are usually treated as nAMD include (1) an abnormal vascular complex arising de novo from the retinal circulation, known as retinal angiomatous proliferation, and (2) intrachoroidal aneurysmal dilatation(s) of the vasculature, known as polypoidal choroidopathy.

Currently, patients with nAMD (or nAMD variants) are treated with intravitreal injections of anti-vascular endothelial growth factor (VEGF) drugs1 (drugs that inhibit VEGF). The most commonly used drugs are ranibizumab (Lucentis®, Novartis Pharmaceuticals UK), bevacizumab (Avastin®, Roche Products Limited) and aflibercept (Eylea®, Bayer). Ranibizumab prevents sight loss in over 90% of eyes with nAMD when given as monthly intravitreal injections for up to 2 years. 2,3 Bevacizumab (unlicensed for nAMD) and aflibercept are non-inferior to ranibizumab in maintaining visual acuity after 1 year of treatment,4–6 and bevacizumab is also non-inferior to ranibizumab after 2 years of treatment. 7–9

Anti-VEGF drugs render the nAMD lesion quiescent by making the leaky vessels competent. However, adequate concentrations of the drug need to be present in order to maintain the neovascular complexes in a quiescent non-leaky state and to ameliorate the exudative manifestations. Once the macula has been rendered fluid free, cessation of treatment is the norm and patients are monitored for relapse at regular clinic visits, which are usually monthly. Monitoring involves visual acuity checks, clinical examination and optical coherence tomography (OCT), with treatment being restarted if required. There is now evidence that intensive regular monthly review to detect recurrence, restarting treatment when necessary, can result in functional outcomes similar to those observed in industry-sponsored trials of ranibizumab in which patients received monthly treatment over 2 years. 1,3,8 However, regular monthly review in the Hospital Eye Services (HESs), even without treatment, blocks clinic space, uses valuable resources, is expensive and is also burdensome to the patients and their carers. Ophthalmologists have also investigated giving ‘prophylactic’ treatment to quiescent eyes, extending the interval between clinic visits providing the disease remains quiescent, in order to lessen the burden of regular visits to patients and to the NHS. A disadvantage of this method of treatment is that it can lead to unnecessary overtreatment.

Existing evidence

There is currently no evidence about the effectiveness of community follow-up by optometrists for nAMD. On the other hand, there is evidence about the effectiveness of optometrists providing ‘shared care’ with the HESs for glaucoma and diabetic eye disease and the training programmes that have been used to achieve them. Evaluations comparing management by optometrists and ophthalmologists have shown acceptable levels of agreement between the decisions made in the context of glaucoma and accident and emergency services. 10,11 Thus, there are existing models of shared care management which are well established through formal evaluation. A recent review has outlined different approaches used to increase the capacity in nAMD services across the UK. 12 The case studies in this review show a variety of scenarios, with many involving extended roles for optometrists and nurse practitioners, but these occur within the HES. Some studies have also evaluated the potential of remote care, but these approaches involve assessments by an ophthalmologist specialising in medical retina working in the HES of OCTs captured by outreach services. 13,14

Taking and interpreting retinal images are skills that can be easily taught (the former is usually carried out by technicians in the HES) and, therefore, the final evaluation in a telemedicine scenario need not always involve an ophthalmologist. Many of the hospital-based scenarios involve specialist optometrists and nurse practitioners making clinical decisions, although the effectiveness of these management pathways has not yet been formally evaluated. The transfer of the care of patients who are not receiving active treatment for nAMD requires the ability to interpret signs in the fundus of the eye (through either clinical examination or fundus photography) combined with an examination of OCT images of the macula, as well as the facility for patients to be returned seamlessly and expediently to the HESs when there is reactivation of disease. Optometrists represent a highly skilled and motivated workforce in the UK and the vast majority of optometric practitioners are based in the community. A number of UK community optometric practices have already invested in the technology for performing digital fundus photography and OCT and use these technologies to make decisions about diagnosis and the need to refer a patient to the HES. However, the skill and ability of optometrists to differentiate quiescent nAMD from active nAMD have not been evaluated. In addition, to the best of our knowledge, no shared care management scheme for nAMD has been formally evaluated.

Relevance to the NHS/health policy

Even when nAMD has been successfully controlled by treatment with an anti-VEGF drug, clinicians continue to review patients regularly because there is a very high risk of relapse, evidenced by the proportion of patients who remain in follow-up for many years after initiation of therapy. 15 One of two strategies is typically used: (1) monthly review until active disease recurs, termed the ‘pro re nata regimen’, or (2) the ‘treat and extend regimen’. The latter method requires that treatment is administered even if there is no fluid at the macula, but the subsequent review interval is extended by approximately 2 weeks. The pro re nata regimen is very burdensome for patients and for the NHS, and the treat and extend regimen leads to overtreatment, with its attendant risks and additional expense.

If monitoring of the need for retreatment by community optometrists could be shown to have similar accuracy to the monitoring of the need for retreatment by ophthalmologists in the HES, there would be a strong impetus to devolve monitoring of patients whose disease is quiescent to the community setting. Community optometrists have the necessary training to recognise nAMD (they are responsible for the majority of referrals to the HES) but would need to be trained to acquire OCT images and to interpret them in order to assess the need for retreatment. If optometrists can be trained to perform these tasks and make the correct clinical decision, they could manage patients with quiescent disease effectively in the community until reactivation occurs, at which point rapid referral to the HES could be initiated.

The advantages of devolving monitoring to community optometrists include having clinic capacity freed up for the overstretched NHS and less travel time for patients.

Aims and objectives

The aim of the ECHoES (Effectiveness, cost-effectiveness and acceptability of Community versus Hospital Eye Service for follow-up) trial was to test the hypothesis that, compared with conventional HES follow-up, community follow-up by optometrists (after appropriate training) is not inferior for patients with nAMD with stable vision.

This hypothesis was tested by comparing decisions about the need for retreatment made by samples of ophthalmologists working in the HES and optometrists working in the community, using clinical vignettes and images generated in the IVAN (a randomised controlled trial to assess the effectiveness and cost-effectiveness of alternative treatments to Inhibit VEGF in Age-related choroidal Neovascularisation) clinical trial [Health Technology Assessment (HTA) programme reference: 07/36/01; International Standard Randomised Controlled Trial Number (ISRCTN) 92166560]. 9 Retreatment decisions made by participants in both groups were validated against a reference standard (see Chapter 2, Reference standard).

The trial had five specific objectives:

- to compare the proportion of retreatment decisions classified as ‘correct’ (against the reference standard, ‘active’ vs. ‘suspicious’ or ‘inactive lesion’) made by optometrists and ophthalmologists
- to estimate the agreement, and nature of disagreements, between retreatment decisions made by optometrists and ophthalmologists
- to estimate the influence of vignette clinical and demographic information on retreatment decisions
- to estimate the cost-effectiveness of follow-up in the community by optometrists compared with follow-up by ophthalmologists in the HES
- to ascertain the views of patient representatives, optometrists, ophthalmologists and clinical commissioners on the proposed shared care model.

Chapter 2 Methods

The results of the cost-effectiveness analysis have been published open access in BMJ Open. 16

Study design

The ECHoES study is a non-inferiority trial designed to emulate a parallel-group design (Table 1). However, as all vignettes were reviewed by both optometrists and ophthalmologists in a randomised, balanced, incomplete block design,17,18 the ECHoES trial is more analogous to a crossover trial than to a parallel-group trial. This trial is registered as ISRCTN07479761.

| Research question component | ECHoES (crossover) trial | Conventional (parallel-group) trial |
|---|---|---|
| Population | Vignettes representing the clinical features of patients with quiescent nAMD being monitored for reactivation of disease | Patients with quiescent nAMD being monitored for nAMD reactivation |
| Intervention | Assessment of vignettes by a trainedᵃ optometrist to identify nAMD reactivation | Monthly review by a community optometrist, after training,ᵇ to detect nAMD reactivation |
| Comparator | Assessment of vignettes by a trainedᵃ ophthalmologist to identify nAMD reactivation | Monthly review by an ophthalmologist in the HESᵇ to detect nAMD reactivation |
| Outcome | Correct identification of reactivated nAMD (presumed to lead to appropriate treatment to preserve visual acuity) | Visual acuity |

The trial aimed to quantify and compare the diagnostic accuracy of ophthalmologists and optometrists in assessing reactivation of quiescent nAMD lesions, compared with the reference standard (see Reference standard). This type of design was possible only with limited permutations of the total number of vignettes, participants and number of vignettes per participant. For the ECHoES trial, a total of 288 vignettes were created. Forty-eight ophthalmologists and 48 optometrists each assessed a sample of 42 vignettes. Each vignette was assessed by seven ophthalmologists and seven optometrists. Each sample of 42 vignettes was assessed in the same order by one optometrist and one ophthalmologist, both selected randomly from their cohorts.
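The arithmetic behind these numbers can be checked with a short sketch. The cyclic allocation below is purely hypothetical (the function name `cyclic_allocation` and the allocation scheme are assumptions for illustration, not the randomised design used in the trial); it only shows that 48 assessors each viewing 42 of 288 vignettes can be arranged so that every vignette is viewed exactly seven times within one professional group.

```python
from collections import Counter


def cyclic_allocation(n_vignettes=288, n_participants=48, per_participant=42):
    """Hypothetical cyclic allocation: participant i starts at vignette i * step.

    This is an illustration only, not the trial's actual randomised design.
    """
    step = n_vignettes // n_participants  # 288 // 48 = 6
    return [
        [(i * step + j) % n_vignettes for j in range(per_participant)]
        for i in range(n_participants)
    ]


samples = cyclic_allocation()

# Count how many participants in one group view each vignette.
views = Counter(v for sample in samples for v in sample)

# Total assessments per professional group: 48 * 42 = 288 * 7 = 2016.
total_assessments = sum(len(sample) for sample in samples)
views_per_vignette = set(views.values())

print(total_assessments, views_per_vignette)
```

The same allocation applied to both groups gives each vignette seven assessments by optometrists and seven by ophthalmologists, matching the counts stated above.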

Vignettes

A database of vignettes was created for the ECHoES trial using images collected in the IVAN trial (HTA reference: 07/36/01; ISRCTN92166560),9 which included a large repository of fundus images and OCT images from eyes with varying levels of lesion activity. In the IVAN trial, OCT and fundus images were captured from 610 participants every 3 months for up to 2 years, generating a repository of almost 5000 sets of images, with associated clinical data. However, only a subset (estimated to be about 25% of all of the available OCT images) was captured using the newer-generation Fourier domain technology (now the clinical standard), which provides optimal images of the posterior ocular findings. The vignettes in the ECHoES trial were populated only with OCT images captured on spectral/Fourier domain systems.

Each vignette consisted of sets of retinal images (colour and OCT) at two time points (baseline and index), with accompanying clinical information (gender, age, smoking status and cardiac history) and best corrected visual acuity (BCVA) measurements obtained at both time points. The ‘baseline’ set were images from a study visit when the nAMD was deemed quiescent (i.e. all macular tissue compartments were fluid free) and the ‘index’ set consisted of images from another study visit. Considering both baseline and index images, and taking into account the available clinical and BCVA information, participants reviewed these vignettes and made classification decisions about whether the index lesion was believed to be reactivated, suspicious or quiescent. Further details are published elsewhere. 19 A reference standard lesion classification was assigned to each vignette on the basis of independent assessment by three retinal experts (see Reference standard).

Participants

Recruitment

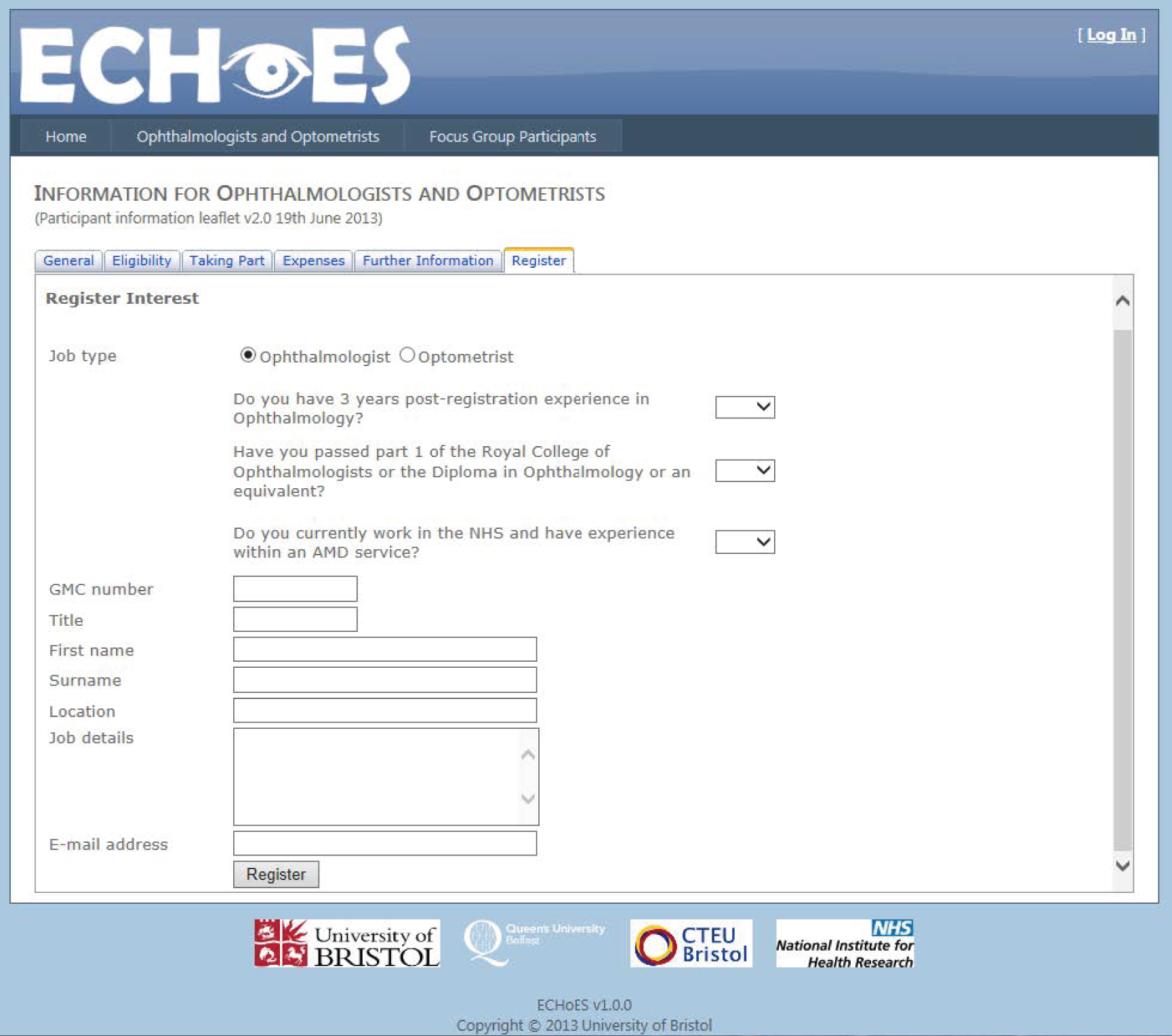

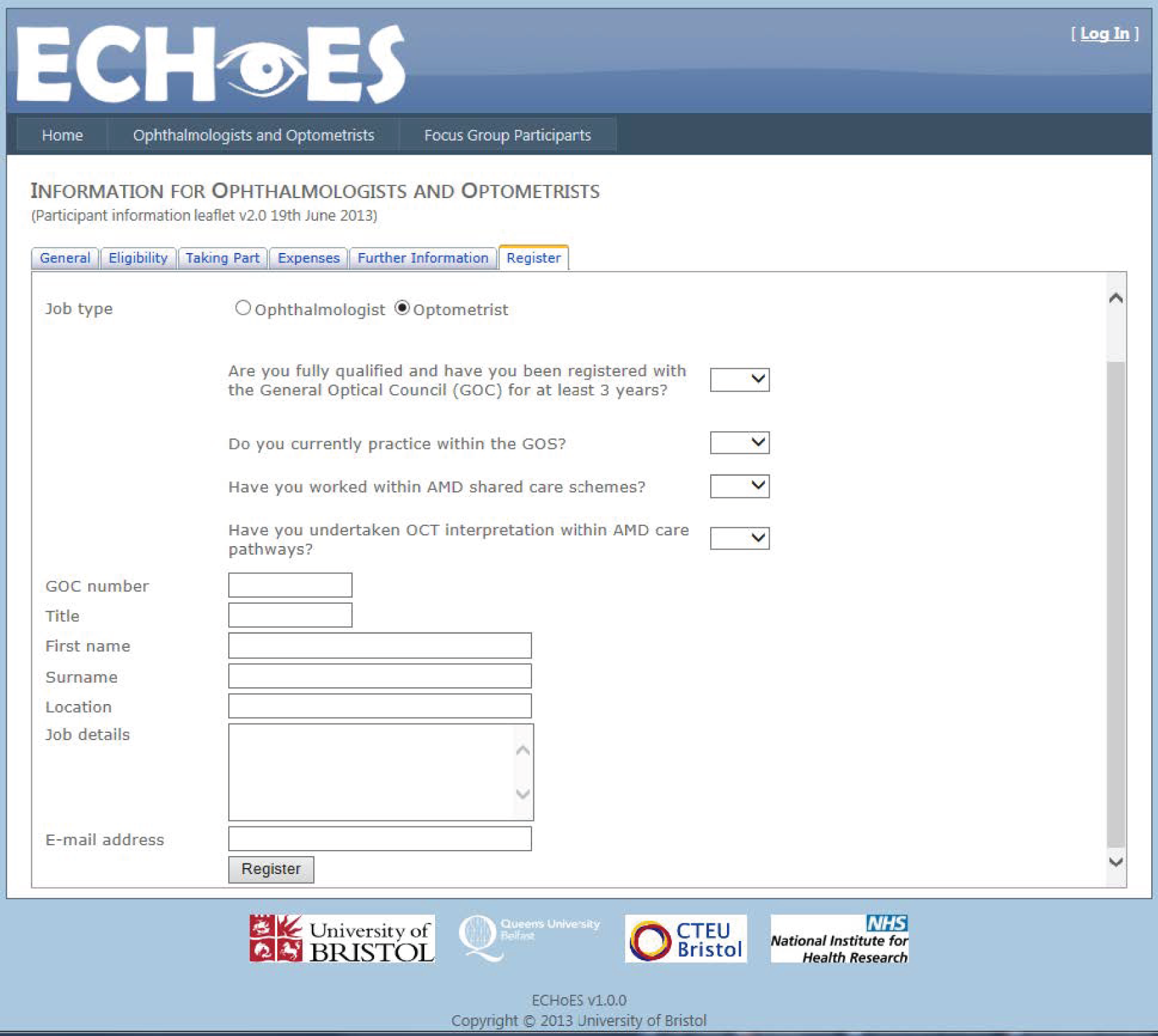

The ECHoES trial was publicised in optometry journals and forums to attract optometrists, and circulated to ophthalmologists who were members of the UK and Welsh medical retinal groups. Potential participants were directed to the ECHoES trial website, where they could read the information sheet and register their interest in the trial.

Eligibility criteria

Participants had to meet the following inclusion/exclusion criteria.

Ophthalmologists:

- have 3 years’ post-registration experience in ophthalmology
- have passed part 1 of the Royal College of Ophthalmologists examination or hold the Diploma in Ophthalmology or equivalent qualification
- be working in the NHS at the time of participation in the ECHoES trial
- have experience within an age-related macular degeneration (AMD) service.

Optometrists:

- be fully qualified and registered with the General Optical Council for at least 3 years
- be practising within the General Optical Service at the time of participation in the ECHoES trial
- not be working within AMD shared care schemes or undertaking OCT interpretation within AMD care pathways.

There were also some practical circumstances in which a potential participant was not accepted to assess the main study vignette set:

- unable to attend any of the webinar training sessions
- unable to achieve an adequate standard (75%) with respect to the assessment of lesion activity status (i.e. reactivated, suspicious or quiescent) on the training set of vignettes.

Training participants

Both ophthalmologists and optometrists are qualified to detect retinal pathology, but optometrists (and some ophthalmologists) may not have the skills to assess fundus and OCT images for reactivation of nAMD. Therefore, the training was designed to provide the key information necessary to perform this task successfully, so that all participants had a similar level of background knowledge when starting their main trial vignette assessments. The training included two parts.

Webinar lectures

All participants were required to attend two mandatory webinar lectures. The first webinar covered the objectives of the ECHoES trial, its design, eligibility criteria for participation, outcomes of interest, and the background to detection and management of nAMD. The second webinar covered the detailed clinical features of active and inactive nAMD, the imaging modalities used to determine activity of the lesion and interpretation of the images. Each webinar lecture lasted approximately 1 hour, with an additional 15 minutes for questions.

Test of competence

After confirmation of attendance at the webinars, participants were allocated 24 training vignettes. In order to qualify for the main trial, participants had to assign the ‘correct’ activity status to at least 75% (18 of 24) of their allocated training vignettes, according to expert assessments (see Reference standard). If participants failed to reach this threshold, they were allocated a further 24 vignettes (second training set) to complete. If participants failed to reach the performance threshold for progressing to the main trial on their second set of training vignettes, they were withdrawn from the trial. Participants who successfully passed the training phase (after either one or two attempts) were allocated 42 vignettes for assessment in the main phase of the trial.

Training vignettes were randomly sampled from the same pool of 288 vignettes as those used in the main study. However, the sampling method ensured that participants assessed different vignettes in their main study phase from those assessed during their training phase; the samples for assessment in the main trial were allocated to participant IDs in advance (as part of the trial design) and training sets of vignettes were sampled randomly from the 246 remaining vignettes.
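The training allocation and the pass threshold can be sketched as follows. This is a minimal illustration under stated assumptions: vignettes are represented by integer IDs 0 to 287, the function names `sample_training_set` and `passes_training` are hypothetical, and the placeholder main sample and random seed are invented for the example rather than taken from the trial.

```python
import random

N_VIGNETTES = 288        # total pool of vignettes
TRAINING_SET_SIZE = 24   # vignettes per training set
PASS_THRESHOLD = 0.75    # at least 18 of 24 correct


def sample_training_set(main_sample, rng, size=TRAINING_SET_SIZE):
    """Draw a training set from the vignettes NOT in the participant's main sample."""
    remaining = sorted(set(range(N_VIGNETTES)) - set(main_sample))  # 246 vignettes
    return rng.sample(remaining, size)


def passes_training(n_correct, n_assessed=TRAINING_SET_SIZE):
    """Return True if the proportion correct meets the 75% threshold."""
    return n_correct / n_assessed >= PASS_THRESHOLD


rng = random.Random(2013)        # arbitrary seed, for reproducibility only
main_sample = list(range(42))    # placeholder main-study sample (assumption)
training_set = sample_training_set(main_sample, rng)

print(len(training_set), passes_training(18), passes_training(17))
```

Sampling from the complement of the main sample is what guarantees that no participant meets a main-study vignette during training.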

Reference standard

The reference standard was established based on the judgements of three medical retina experts. Using the web-based application (see Implementation/management/data collection), these experts independently assessed the vignette features and made lesion classification decisions for all 288 index images. As the judgements of experts did not always agree, a consensus meeting was held to review the subset of vignettes for which experts’ classifications of lesion status disagreed. The experts reviewed these vignettes together without reference to their previous assessments and reached a consensus agreement. This consensus decision (‘reactivated’, ‘suspicious’ or ‘quiescent’ lesion) for all 288 vignettes made up the reference standard and was used to determine ‘correct’ participant lesion classification decisions.

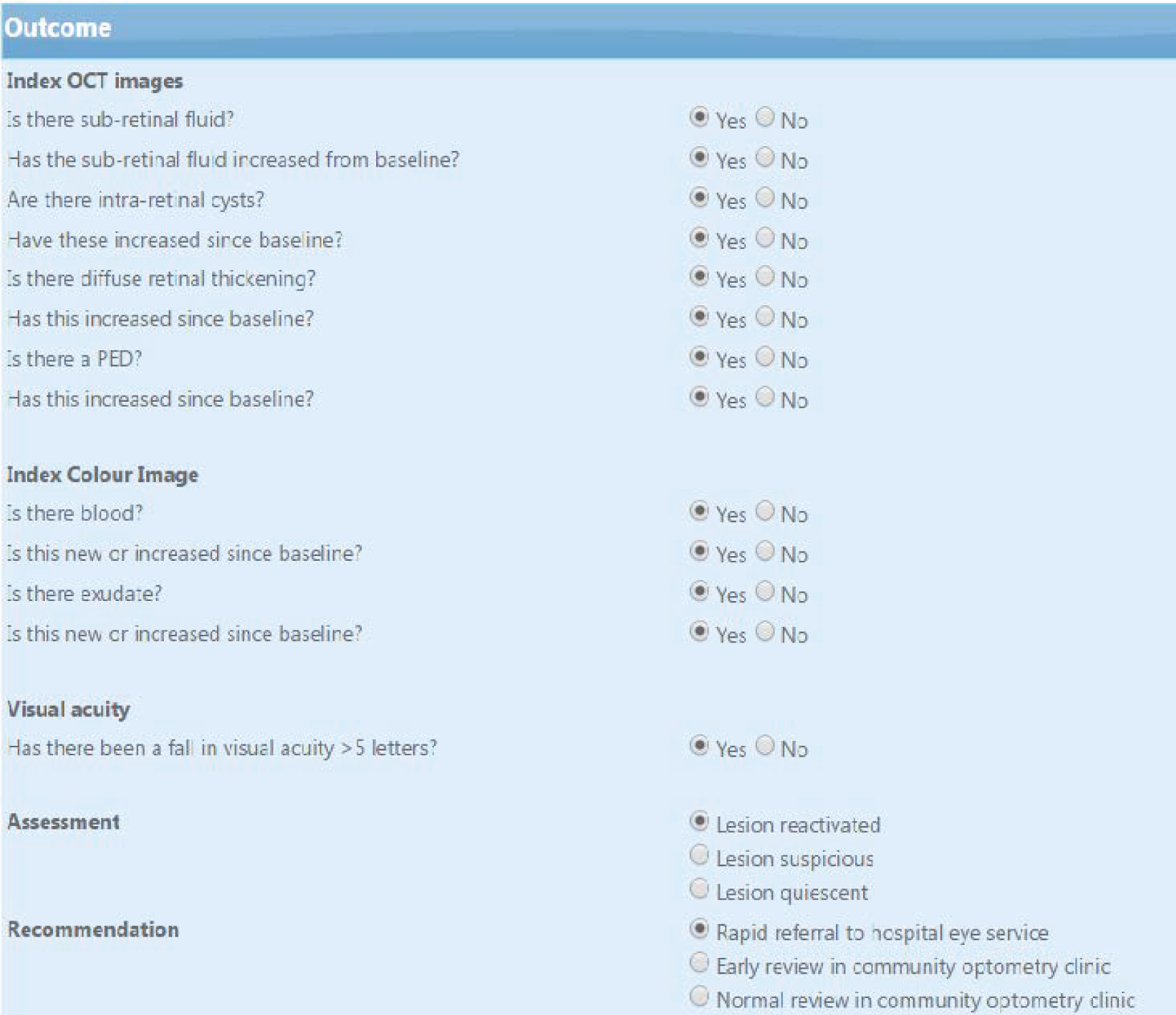

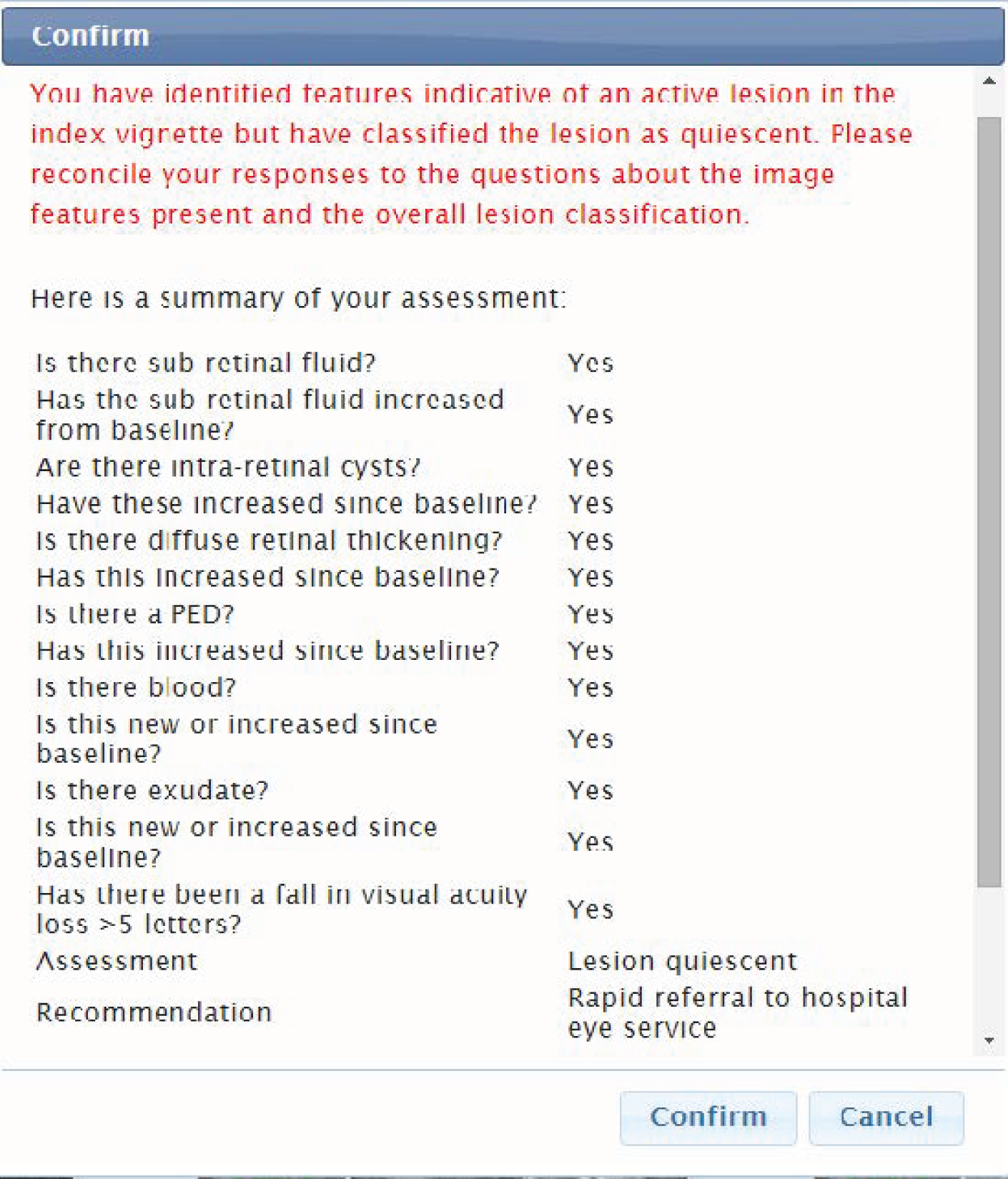

As described in the protocol,20 the classification of a lesion as reactivated or quiescent depended on the presence or absence of predefined lesion components and whether or not the lesion component had increased from baseline. Two imaging modalities were used. These were colour fundus (CF) photographs and OCT images. Lesion components in colour photographs that were prespecified comprised haemorrhage and exudate. Tomographic components comprised subretinal fluid (SRF), diffuse retinal thickening (DRT), localised intraretinal cysts (IRCs) and pigment epithelial detachment (PED). Experts indicated whether each lesion component was present or absent. Rules for the classification of a lesion as reactivated or quiescent from the assessment of lesion components were prespecified (Table 2). Experts could disagree about the presence or absence of a specific lesion component and whether or not these components had increased from the baseline; however, they had to follow the rules when classifying a lesion as reactivated or quiescent (note that the PED lesion component did not inform lesion classification as active or quiescent). Validation prompts reflecting these rules were added to the web application after the training phase to prevent data entry or keystroke errors; the validation rules did not assist participants in making the overall assessment because they had to enter their assessments of the relevant image features present in each vignette before classifying the lesion status.

| Feature | Lesion reactivated | Lesion quiescent |
|---|---|---|
| SRF on OCT | Yes | No |
| | Or | And |
| IRC on OCT | Yes, and increased from baseline | No/not increased from baseline |
| | Or | And |
| DRT on OCT | Yes, and increased from baseline | No/not increased from baseline |
| | Or | And |
| Blood on CF | Yes, and increased from baseline | No/not increased from baseline |
| | Or | And |
| Exudates on CF | Yes, and increased from baseline | No/not increased from baseline |
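The rules in Table 2 can be expressed as a small decision function. The sketch below is an illustration, not the validation logic of the trial's web application: the `Component` type and `classify_lesion` function are hypothetical names, PED is omitted because it did not inform the classification, and the ‘suspicious’ category is not covered because Table 2 specifies rules only for reactivated and quiescent lesions.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """Assessment of one lesion component on the index images."""
    present: bool
    increased: bool = False  # increased from baseline (ignored for SRF)


def classify_lesion(srf, irc, drt, blood, exudates):
    """Apply the Table 2 rules: any one criterion is enough for 'reactivated'."""
    # SRF on OCT: presence alone indicates reactivation.
    if srf.present:
        return "reactivated"
    # IRC, DRT, blood and exudates count only if present AND increased.
    for component in (irc, drt, blood, exudates):
        if component.present and component.increased:
            return "reactivated"
    # All 'quiescent' conditions hold simultaneously.
    return "quiescent"


absent = Component(present=False)
stable_cysts = Component(present=True, increased=False)
new_blood = Component(present=True, increased=True)

print(classify_lesion(absent, stable_cysts, absent, absent, absent))
print(classify_lesion(absent, stable_cysts, absent, new_blood, absent))
```

The ‘Or’ and ‘And’ rows of the table correspond to the early returns and the final fall-through: reactivation needs one criterion, whereas quiescence needs every criterion to be negative.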

Owing to the short duration of the trial, participant training and assessments were undertaken concurrently with the independent experts’ assessments of the vignettes. Therefore, training sets of vignettes were scored against experts’ assessments that were complete at the time. Subsequently, checks were instituted to ensure that no participant was excluded from the main trial who might have passed the threshold performance score had the consensus reference standard been available at the time.

Outcomes

Primary outcome

The primary outcome was correct classification of the activity status of the lesion by a participant, based on assessing the index images in a vignette, compared with the reference standard (see Reference standard). Activity status could be classified as ‘reactivated’, ‘suspicious’ or ‘quiescent’. For the primary outcome, a participant’s classification was scored as ‘correct’ if:

- both the participant and the reference standard lesion classification were reactivated
- both the participant and the reference standard lesion classification were quiescent
- both the participant and the reference standard lesion classification were suspicious
- either the participant or the reference standard lesion classification was suspicious, and the other classification (reference standard or participant) was quiescent.

In effect, for the primary outcome, suspicious and quiescent classifications were grouped, making the primary outcome binary (Table 3).

| Participant classification | Reference standard: reactivated | Reference standard: suspicious | Reference standard: quiescent |
|---|---|---|---|
| Reactivated | ✓ | ✗ | ✗ |
| Suspicious | ✗ | ✓ | ✓ |
| Quiescent | ✗ | ✓ | ✓ |
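The scoring in Table 3 amounts to collapsing the three categories into two before comparing them. A minimal sketch, with hypothetical function names (`collapse`, `is_correct`) chosen for illustration:

```python
CATEGORIES = ("reactivated", "suspicious", "quiescent")


def collapse(classification):
    """Group 'suspicious' with 'quiescent', making the outcome binary."""
    if classification not in CATEGORIES:
        raise ValueError(f"unknown classification: {classification!r}")
    return "reactivated" if classification == "reactivated" else "not reactivated"


def is_correct(participant, reference):
    """Primary outcome: correct if both fall in the same collapsed group."""
    return collapse(participant) == collapse(reference)


# Reproduce Table 3 as a dictionary keyed by (participant, reference).
table3 = {(p, r): is_correct(p, r) for p in CATEGORIES for r in CATEGORIES}

for (p, r), ok in table3.items():
    print(f"{p:12s} vs {r:12s} -> {'correct' if ok else 'incorrect'}")
```

Five of the nine cells are scored as correct, which is why a participant who answers ‘suspicious’ for a quiescent reference lesion is not penalised in the primary analysis.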

Secondary outcomes

- The frequency of potentially sight-threatening ‘serious’ errors. An error of this kind was considered to have occurred when a participant’s classification of a vignette was ‘lesion quiescent’ and the reference standard classification was ‘lesion reactivated’; that is, a definitive false-negative classification by the participant. Definitive false positives were not considered sight-threatening, but were tabulated. Misclassifications involving classifications of ‘lesion suspicious’ were also not considered sight-threatening.
- Judgements about the presence or absence of specific lesion components, for example blood and exudates in the fundus colour images, SRF, IRC, DRT and PED in the OCT images and, if present, whether or not these features had increased since baseline.
- Participant-rated confidence in their decisions about the primary outcome, on a 5-point scale.

Adverse events

This study did not involve any risks to the participants; therefore, no clinical adverse events could be attributed to study-specific procedures.

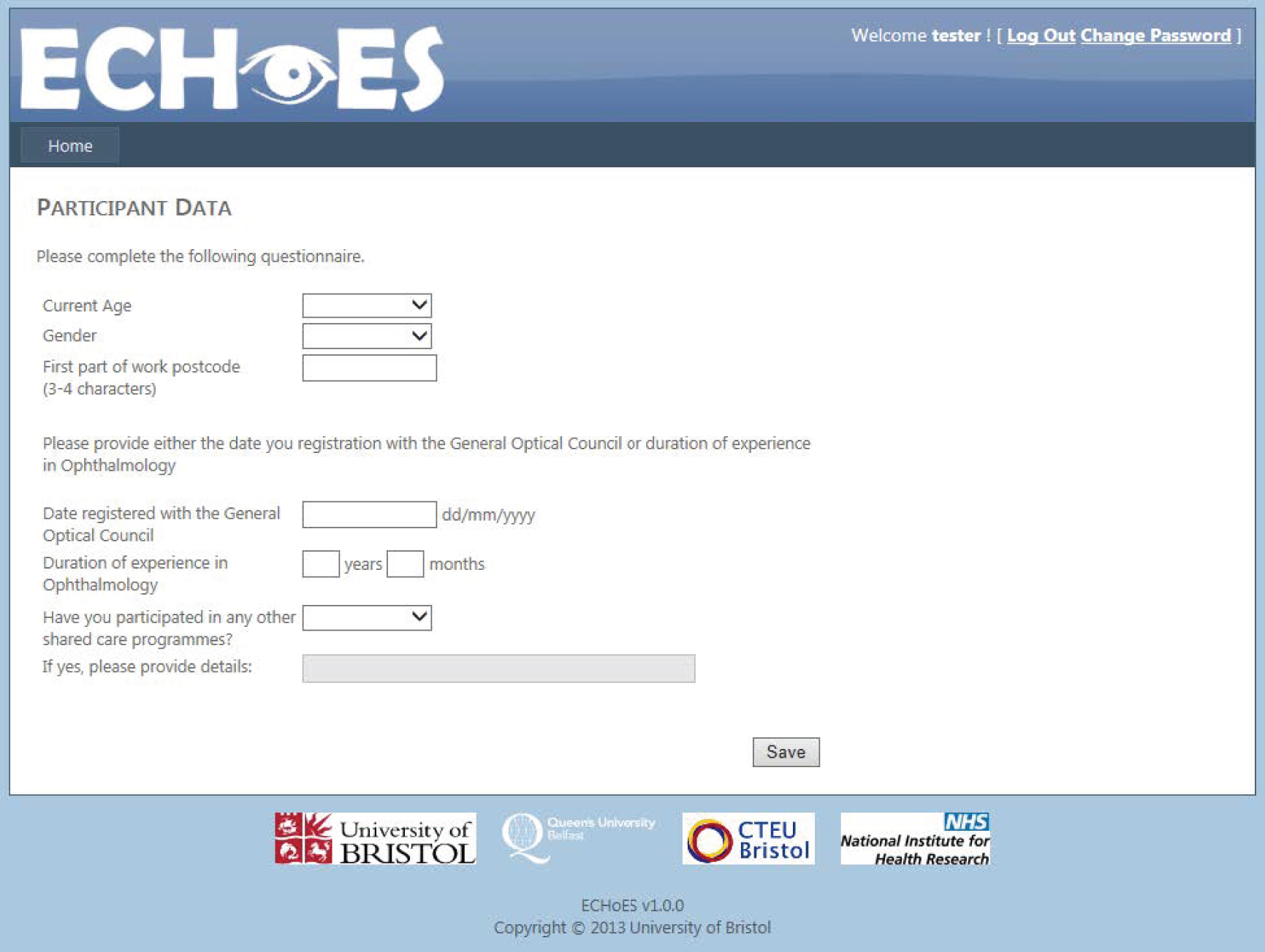

Implementation/management/data collection

A secure web-based application was developed to allow participants to take part in the trial remotely (see example screenshots in Appendix 2). The website www.echoestrial.org/demo shows how assessors carried out assessments in the trial. Participants registered interest, entered their details, completed questionnaires (regarding opinions on ECHoES trial training and shared care), and assessed their training and main study vignettes through this application. The webinar material was also available in the web application for participants to consult if they needed to revisit it.

Additionally, the web application had tools accessible only to the trial team to help manage and monitor the conduct and progression of the trial. Further details are published elsewhere. 19

Sample size

With respect to the primary outcome, the trial was designed to answer the non-inferiority question ‘Is the performance of optometrists as good as that of ophthalmologists?’. A sample of 288 vignettes was chosen to have at least 90% power to test the hypothesis that the proportion of vignettes for which lesion status was correctly classified by the optometrist group was no more than 10% lower than the proportion correctly classified by the ophthalmologist group. We assumed that the proportion of vignettes for which lesion status was correctly classified by the ophthalmologist group was at least 95% and that each vignette would be assessed by only one ophthalmologist and one optometrist. However, as each vignette was assessed seven times by each group, the trial in fact had 90% power to detect non-inferiority for lower proportions of vignettes correctly classified by the ophthalmologist group.

Statistical methods

The analysis population consisted of the 96 participants who completed the assessments of their training and main study samples of vignettes. Continuous variables were summarised by means and standard deviations (SDs), or by medians and interquartile ranges (IQRs) if distributions were skewed. Categorical data were summarised as a number and percentage. Baseline participant characteristics were described and groups formally compared using t-tests, Mann–Whitney U-tests, chi-squared tests or Fisher’s exact tests as appropriate.

Group comparisons

All primary and secondary outcomes were analysed using mixed-effects regression models, adjusting for the order in which the vignettes were viewed as a fixed effect (tertiles: 1–14, 15–28, 29–42) and including participant and vignette as random effects. All outcomes were binary and as such were analysed using logistic regression, with group estimates presented as odds ratios (ORs) with 95% confidence intervals (CIs).

In addition to the group comparisons, the influence of key vignette features [age, gender, smoking status, cardiac history (including angina, myocardial infarction and/or heart disease), and baseline and index BCVA (modelled as the sum and difference of BCVA at the two time points)] on the number of incorrect vignette classifications was investigated, adjusting for the reference standard classification (reactivated vs. quiescent/suspicious). This additional analysis was carried out using fixed-effects Poisson regression with the number of incorrect classifications as the outcome. The prior hypothesis was that this information would not influence the number of correct (or incorrect) classifications.

The sensitivity and specificity of the primary outcome are also presented. For these performance measures, the sensitivity is the proportion of lesions for which the reference standard is ‘reactivated’ and participants correctly classified the lesion. The specificity is the proportion of lesions for which the reference standard is either ‘suspicious’ or ‘quiescent’ and the participant’s classification is also ‘suspicious’ or ‘quiescent’.
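These two definitions can be illustrated with a short sketch. The function name `sensitivity_specificity` and the example classifications are invented for illustration and are not trial data.

```python
def sensitivity_specificity(pairs):
    """Compute (sensitivity, specificity) from (participant, reference) pairs.

    'Positive' means 'reactivated'; 'suspicious' and 'quiescent' are both negative.
    Returns None for a measure whose denominator is zero.
    """
    tp = fn = tn = fp = 0
    for participant, reference in pairs:
        participant_positive = participant == "reactivated"
        if reference == "reactivated":
            if participant_positive:
                tp += 1
            else:
                fn += 1
        else:
            if participant_positive:
                fp += 1
            else:
                tn += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


# Invented example classifications (participant, reference); not trial data.
example = [
    ("reactivated", "reactivated"),
    ("quiescent", "reactivated"),
    ("suspicious", "quiescent"),
    ("reactivated", "suspicious"),
    ("quiescent", "suspicious"),
    ("suspicious", "suspicious"),
]
sens, spec = sensitivity_specificity(example)
print(sens, spec)
```

In this toy example one of two reactivated lesions is detected and three of four non-reactivated lesions are classified as such.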

Non-inferiority limit

For the sample size calculation, it was agreed that an absolute difference of 10% would be the maximum acceptable difference between the two groups, assuming that ophthalmologists would correctly assess 95% of their vignettes. As the group comparison of the primary outcome was analysed using logistic regression and presented as an OR, this non-inferiority margin was converted to the odds scale. Therefore, this limit was expressed as an OR of 0.298 [i.e. the odds correct for the worst acceptable performance by optometrists (85%) divided by the odds correct for worst assumed performance of ophthalmologists (95%): (0.85/0.15)/(0.95/0.05)].
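The conversion of the 10% absolute margin to the odds scale can be reproduced directly. The function names `odds` and `noninferiority_or` are hypothetical; the inputs (95% assumed ophthalmologist performance, 10% absolute margin) are those stated above.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)


def noninferiority_or(p_reference=0.95, margin=0.10):
    """Odds ratio corresponding to an absolute margin below the reference proportion."""
    worst_acceptable = p_reference - margin  # 0.85 for the optometrist group
    return odds(worst_acceptable) / odds(p_reference)


limit = noninferiority_or()
print(round(limit, 3))
```

Because the conversion depends on the assumed reference proportion, the same 10% absolute difference would map to a different OR if ophthalmologists' performance were assumed to be lower than 95%.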

Sensitivity analysis

A sensitivity analysis of the primary outcome, regrouping vignettes graded as suspicious into the ‘lesion reactivated’ group rather than ‘quiescent lesion’ group, was undertaken to assess the sensitivity of the conclusions to the classification of the vignettes graded as suspicious. This analysis was prespecified in the analysis plan but not in the trial protocol.

Post-hoc analysis

The following post-hoc analyses were prespecified in the analysis plan, but not in the trial protocol.

- Lesion classification decisions were tabulated against referral decisions for both groups.
- A descriptive analysis of the time taken to complete each vignette and how the duration of this time changed with experience in the trial (learning curve) was performed. The relationship between the duration of this time and participants’ ‘success’ in correctly classifying vignettes was also explored.
- Cross-tabulations and kappa statistics were used to compare experts’ initial classifications with the final reference standard. Similarly, cross-tabulations and kappa statistics were used to compare lesion component classifications between the three experts.
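As an illustration of the kappa statistic mentioned in the last item, the sketch below computes an unweighted Cohen's kappa for two sets of classifications. This is an assumption made for the example: the text does not state which form of kappa was used, and the function name `cohens_kappa` and the ratings are invented, not trial data.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two equal-length lists of categorical ratings."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed proportion of agreement.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if expected == 1:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Invented ratings: an expert's initial classification vs. the consensus.
initial = ["reactivated", "reactivated", "quiescent", "quiescent"]
consensus = ["reactivated", "quiescent", "quiescent", "quiescent"]
kappa = cohens_kappa(initial, consensus)
print(kappa)
```

Here observed agreement is 0.75 and chance agreement is 0.5, so kappa is 0.5; a value of 1 would indicate perfect agreement.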

In addition, a descriptive analysis of the participants’ opinions about the training provided in the ECHoES trial, as well as their perceptions of shared care, was carried out (see ECHoES participants’ perspectives of training and shared care).

Missing data

By design, there were no missing data for the primary and secondary outcomes. However, the time taken to complete a vignette was calculated as the interval between the time at which that vignette was saved on the database and the time at which the previous vignette was saved. It was, therefore, not possible to calculate this for the first vignette of each session. Additionally, it was assumed that times longer than 20 minutes (the database timeout time) were due to interruptions, so these were set to missing. As the analysis using these times was descriptive and was not specified in the protocol, complete case analysis was performed and missing/available data described.
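The derivation of completion times can be sketched as follows. This is an illustration under stated assumptions: the function name `vignette_durations` is hypothetical, a session is represented as a chronologically ordered list of save timestamps, and the timestamps are invented.

```python
from datetime import datetime, timedelta

TIMEOUT = timedelta(minutes=20)  # database timeout; longer gaps are treated as interruptions


def vignette_durations(save_times):
    """Return one duration per vignette in a session, with None marking missing values.

    save_times: chronologically ordered datetimes at which vignettes were saved.
    """
    if not save_times:
        return []
    durations = [None]  # first vignette of a session has no preceding save
    for previous, current in zip(save_times, save_times[1:]):
        gap = current - previous
        durations.append(gap if gap <= TIMEOUT else None)
    return durations


# Invented session: the 27-minute gap is set to missing.
session = [
    datetime(2014, 1, 1, 9, 0),
    datetime(2014, 1, 1, 9, 3),
    datetime(2014, 1, 1, 9, 30),
    datetime(2014, 1, 1, 9, 34),
]
durations = vignette_durations(session)
print(durations)
```

A complete case analysis then uses only the entries that are not `None`.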

Statistical significance

For hypothesis tests, two-tailed p-values of < 0.05 were considered statistically significant. Likelihood ratio tests were used in preference to Wald tests for hypothesis testing.

All data management and all analyses carried out by the Clinical Trials and Evaluation Unit, Bristol, were performed in SAS version 9.3 (SAS Institute Inc., Cary, NC, USA) or Stata version 13.1 (StataCorp LP, College Station, TX, USA).

Changes since commencement of study

At the end of their participation in the trial, all participants were asked to complete a questionnaire regarding their opinions about the training provided in the ECHoES trial and their attitudes towards a shared care model. This was made available to all ECHoES trial participants.

In addition, the reference standard was originally planned to include only two categories, namely lesion reactivated or lesion quiescent. However, after a concordance exercise was carried out by the three retinal experts on the ECHoES trial team, a third category of suspicious lesion was added. This change occurred before any experts’ or participants’ lesion classifications were made.

Finally, after much consideration, it was agreed the primary analysis would be carried out using logistic regression rather than Poisson regression in order to fully account for the incomplete block design. Fitting a Poisson model would not have allowed us to include both vignette and participant as random effects in the model.

Health economics

Aims and research questions

The economic evaluation component of the ECHoES trial aimed to estimate the incremental cost and incremental cost-effectiveness of community optometrists compared with hospital-based ophthalmologists performing retreatment assessments for patients with quiescent nAMD. This would enable us to determine which professional group represents the best use of scarce NHS resources in this context. The main outcome measure was a cost per correct retreatment decision, with correct meaning that both experts and trial participants judge the vignettes to be lesion reactivated, lesion quiescent or lesion suspicious.

Analysis perspective

The economic evaluation took the cost perspective of the UK NHS, personal social services and private practice optometrists, and was performed in accordance with established guidelines and recent publications. 21–23 Although it is possible that any incorrect retreatment decisions (false positives) could lead to costs being incurred by patients, their families or employers because of time away from usual activities, these wider societal costs were judged to be small compared with the implications for the NHS. Therefore, in line with the IVAN trial,9 we decided not to adopt a societal perspective in the ECHoES trial.

Economic evaluation methods

The methods used in the economic evaluation are summarised in Table 4. Data on resource use and costs were collected using bespoke costing questionnaires for community optometrists. With the help of the ECHoES trial team (clinicians and optometrists), we first identified the typical components/tasks which are included in a monitoring review for nAMD and then subcategorized these tasks into resource groups such as staffing, equipment and building space. As some optometrist practices are likely to incur set-up costs associated with assessing the need for retreatment, we compiled a list of items of equipment necessary for performing each task within a monitoring review. The resource-use and cost questionnaire asked each participant which items of equipment from this list their practice currently owned and how much they had paid for the items. The questionnaire was piloted with a handful of optometrists and ophthalmologists who were able to advise on whether or not our questionnaire was straightforward to comprehend and complete. For any items which would be necessary for the review, but which the practice did not already own, we inferred that these items would have to be purchased ‘ex novo’. Once the total costs of equipment were identified, along with their predicted life span as suggested by study clinicians, we created an equivalent annual cost for all of the equipment using a 3.5% discount rate,24 and divided this cost by the number of potential patients who could undergo the monitoring reviews in the community practices. See Appendix 3 for a copy of the bespoke costing questionnaires for the optometrists in the ECHoES trial.
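The annuitisation step can be illustrated with the standard annuity formula, in which the equivalent annual cost is the purchase price divided by an annuity factor of (1 − (1 + r)^−n)/r. The sketch below assumes payments at the end of each year, which the text does not specify; the equipment items, prices, life spans and patient numbers are hypothetical, and only the 3.5% discount rate comes from the text.

```python
def equivalent_annual_cost(purchase_price, lifespan_years, discount_rate=0.035):
    """Annuitise a one-off purchase over its life span (payments in arrears)."""
    if lifespan_years <= 0:
        raise ValueError("lifespan_years must be positive")
    if discount_rate == 0:
        return purchase_price / lifespan_years
    annuity_factor = (1 - (1 + discount_rate) ** -lifespan_years) / discount_rate
    return purchase_price / annuity_factor


# Hypothetical equipment list: (label, price in GBP, life span in years).
equipment = [("item A", 40000.0, 7), ("item B", 12000.0, 10)]
annual_equipment_cost = sum(
    equivalent_annual_cost(price, years) for _, price, years in equipment
)

# Hypothetical capacity: 50 monitoring reviews per month.
reviews_per_year = 12 * 50
equipment_cost_per_review = annual_equipment_cost / reviews_per_year
```

A useful check on any implementation is that the discounted stream of annual costs over the life span sums back to the original purchase price.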

| Aspect of method | Strategy used in base-case analysis |
|---|---|
| Form of economic evaluation | Cost-effectiveness analysis for comparison between optometrists and ophthalmologists |
| Perspective | NHS, Personal Social Services and private practice optometrists’ costs |
| Data set | Optometrists and ophthalmologists enrolled in the ECHoES trial |
| Missing data | Imputation |
| Costs included in analysis | Equipment, staff, building, training for webinars |
| Effectiveness measurement | Correct retreatment decisions |
| Sensitivity analysis | Three injections of Lucentis and consultations instead of one, using Eylea instead of Lucentis and using Avastin instead of Lucentis |
| Time horizon | A within-trial analysis, taking an average of 2 weeks (maximum of 4 weeks) |

We estimated the costs associated with training optometrists to perform the assessments. The costs were estimated for the 2-hour duration webinar, plus time spent to revisit the webinar and time spent in checking other resources. Finally, the amount of time the ECHoES study clinicians spent preparing and delivering the webinars was also estimated.

For information on the costs of ophthalmologists performing the monitoring assessments, we used cost data from the IVAN trial, in which we had undertaken a very detailed micro-costing study. 25

In the economic evaluation, it is advised that value-added tax (VAT) should be excluded from the analysis. 21 Where possible, we used costs without VAT for our base-case analysis.

Missing data

Only 40 of the 61 optometrists who were initially invited to complete the health economics questionnaire (those who completed webinar training) actually submitted the questionnaire; 34 of the 40 completed their main assessments for the trial. Fifty-five of the 61 optometrists who were invited to complete the health economics questionnaire replied to the feedback questionnaire, which also contained information on the time spent on training by the optometrist. This training time was costed and contributes to the calculation of the cost per monitoring review for optometrists. Therefore, in order to maximise the use of questionnaire replies, a cost per monitoring review was estimated using all available information as reported by all optometrists rather than focusing only on the 48 that subsequently completed the main assessments.

For consistency with the procedure adopted in the IVAN trial costing, mean values of the relevant variables were imputed for the 21 optometrists who did not submit the cost questionnaire and for the six who did not complete the feedback questionnaire. The estimated costs from the 61 practices were randomly assigned (see Cost model: random allocation) to each of the 2016 vignettes assessed by the 48 optometrists who completed the main assignment.

Cost model: random allocation

From the IVAN trial, we had estimates of the cost of clinician-led monitoring reviews from 28 eye hospitals. Using a random allocation procedure similar to the one used in the IVAN trial,9 these estimates were allocated to each of the 2016 vignette assessments carried out by the 48 ophthalmologists in the ECHoES trial. From the data collected for the ECHoES trial, we had estimates of the cost of the monitoring review from 61 (after imputation) optometric practices, which varied across practices. Although it would have been possible to link each vignette to the cost derived for the optometry practice in which the optometrist assessing the vignette worked, this would have ignored the heterogeneity between practices and the uncertainty around the estimates of the mean cost of the monitoring review. Therefore, we adopted the same approach as used for the IVAN trial, which was to randomly allocate costs.

We randomly sampled from the distribution of costs from different optometrist practices using the procedure described below. Each of the 61 practices (for which the cost of a monitoring review had been estimated) reported the monthly number of nAMD patients that they could accommodate after all required changes in the practice (purchase of necessary equipment, changes to the structure/size of the practice, new staff, etc.) had been implemented. These numbers were used to calculate weights; that is, each weight was the number of nAMD patients that the practice could accommodate per month divided by the total number of patients with quiescent nAMD who could theoretically be accommodated by all of the practices per month after implementing changes. Cumulative weights were then calculated and assigned to each optometric practice. For each vignette (potential patient) a random number was generated. This random number determined which of the monitoring review costs was assigned to each vignette.

For each vignette assessed by a community optometrist, we randomly drew a value for the cost of the community optometry review from the distribution of the ECHoES trial monitoring review costs; for each vignette assessed by an ophthalmologist, we randomly drew a value for the cost of a hospital review from the cost distribution used in IVAN. For those vignettes where an incorrect treatment decision resulted in an additional hospital monitoring consultation or an unnecessary injection, we drew additional random numbers to sample those consultation costs from the distribution of costs as reported for the IVAN trial.
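The capacity-weighted allocation described above can be sketched as follows. This is a minimal illustration, assuming that a uniform random number on [0, 1) is compared with the cumulative weights; the function names, the three practice costs and the capacities are hypothetical, and the actual procedure may have differed in detail.

```python
import bisect
import random
from itertools import accumulate


def cumulative_weights(capacities):
    """Cumulative share of total monthly capacity, one value per practice."""
    total = sum(capacities)
    return list(accumulate(c / total for c in capacities))


def allocate_costs(review_costs, capacities, n_vignettes, seed=0):
    """Draw one practice-level review cost per vignette, weighted by capacity."""
    rng = random.Random(seed)
    cum = cumulative_weights(capacities)
    cum[-1] = 1.0  # guard against floating-point shortfall in the final weight
    allocated = []
    for _ in range(n_vignettes):
        u = rng.random()  # uniform on [0, 1)
        # First practice whose cumulative weight exceeds the random number.
        idx = bisect.bisect_right(cum, u)
        allocated.append(review_costs[idx])
    return allocated


# Hypothetical inputs: three practices rather than the 61 in the trial.
costs = [50.0, 60.0, 75.0]      # cost per monitoring review (GBP)
capacities = [20, 30, 50]       # nAMD patients accommodated per month
allocated = allocate_costs(costs, capacities, n_vignettes=2016, seed=42)
```

Practices that could accommodate more patients are sampled more often, so the allocated costs reflect where reviews would be likely to take place rather than giving every practice equal weight.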

Care cost pathway decision tree

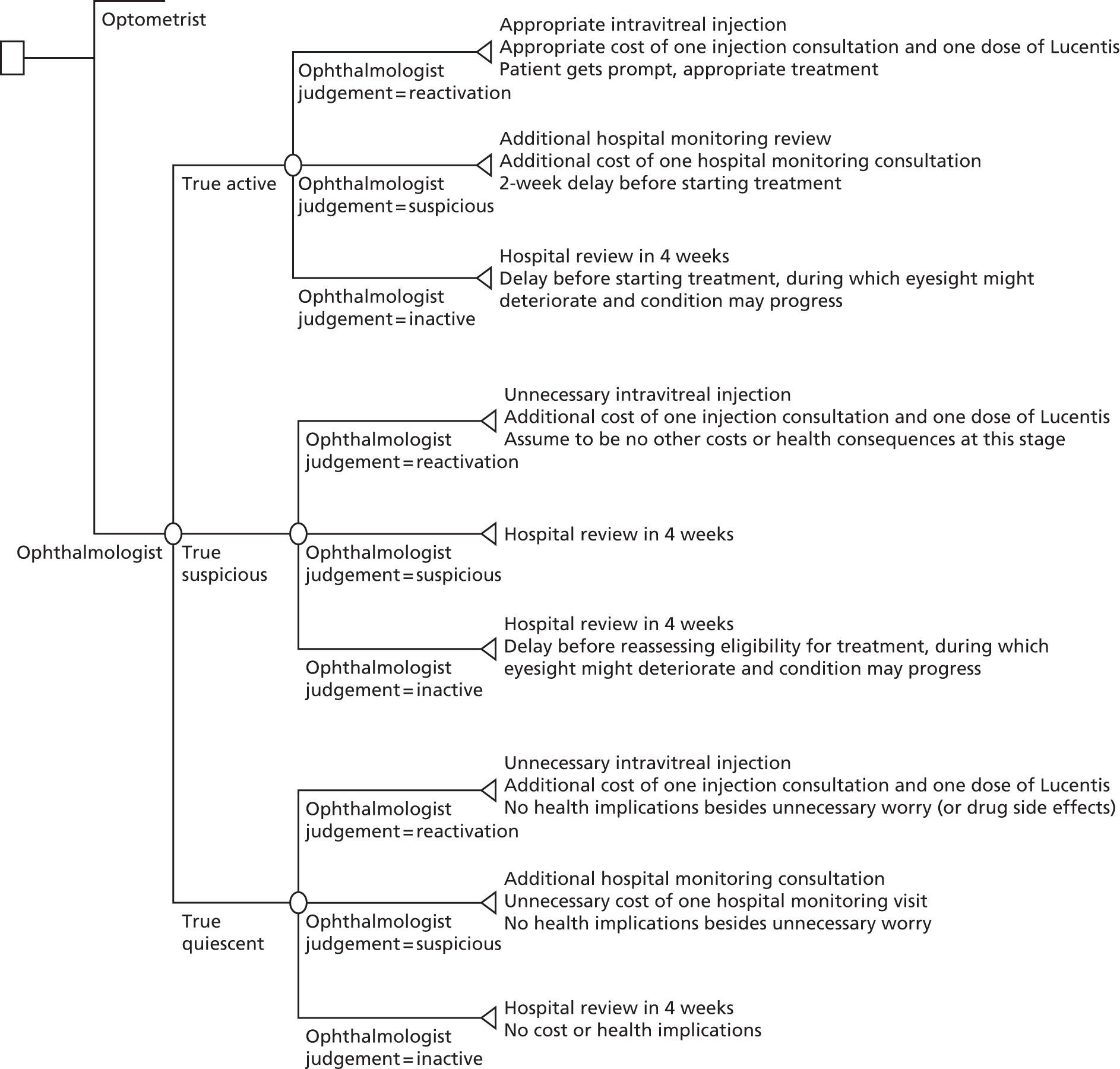

In order to generate an estimate of the cost per correct retreatment decision, we first mapped the various care pathways that could arise from the treatment assessments in the study, by comparing the reference lesion classification for each vignette to the actual classification made by the study participants (48 ophthalmologists and 48 optometrists). This gave rise to two versions of a simple decision tree: one for ophthalmologists (Figure 1) and one for optometrists (Figure 2). The starting point for the decision trees was the ‘actual’ truth. The three main branches of the trees were true active, true quiescent and true suspicious. Participants’ main trial assessments provided information about the number of participants who made correct and incorrect decisions on treatment compared with the reference standard decisions.

FIGURE 1.

Decision tree for hospital ophthalmologist review. Reproduced open access from Violato et al. 16 under the terms of the Creative Commons Attribution (CC BY 4.0) licence.

FIGURE 2.

Decision tree for community optometrist review. Reproduced open access from Violato et al. 16 under the terms of the Creative Commons Attribution (CC BY 4.0) licence.

The decisions that both groups made about the vignettes were then placed into the decision trees and the associated costs for the different pathways were calculated. This process generated an average cost for each of the alternative care pathways. Any ‘incorrect’ decisions implied that patients would have had unnecessary repeat monitoring visits at the optometrist practice or at the hospital, and possibly unnecessary anti-VEGF injections if participants misclassified them as requiring treatment. All costs are reported in 2013/14 prices unless specified otherwise.

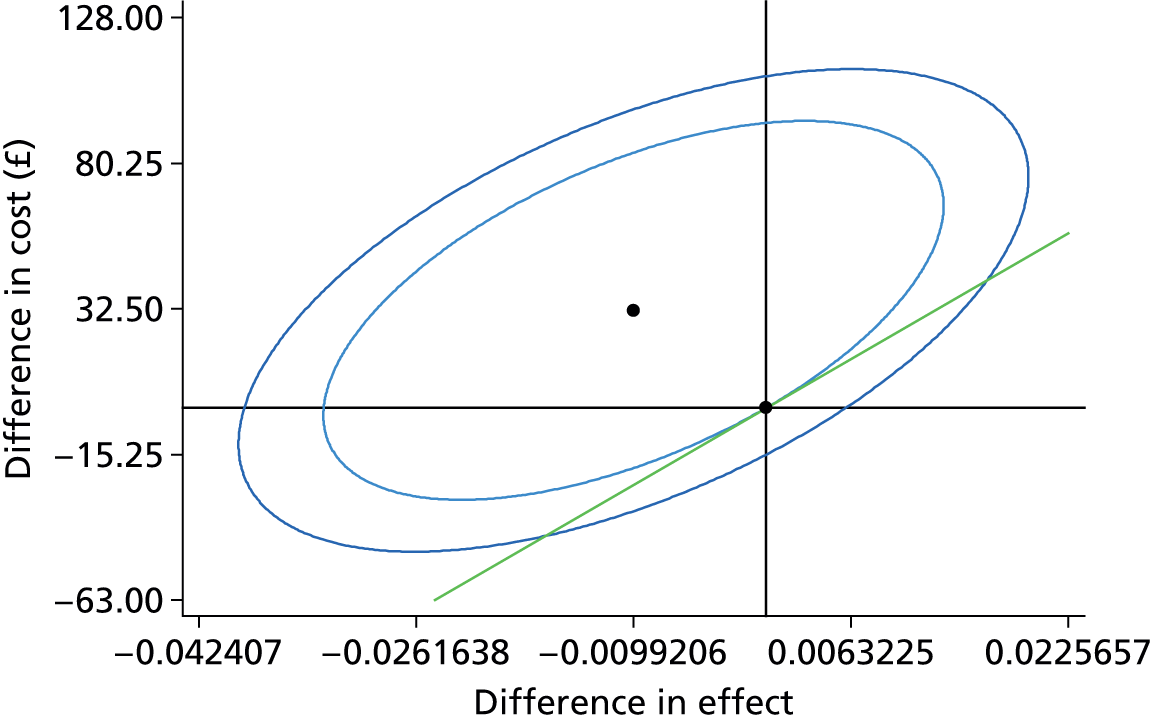

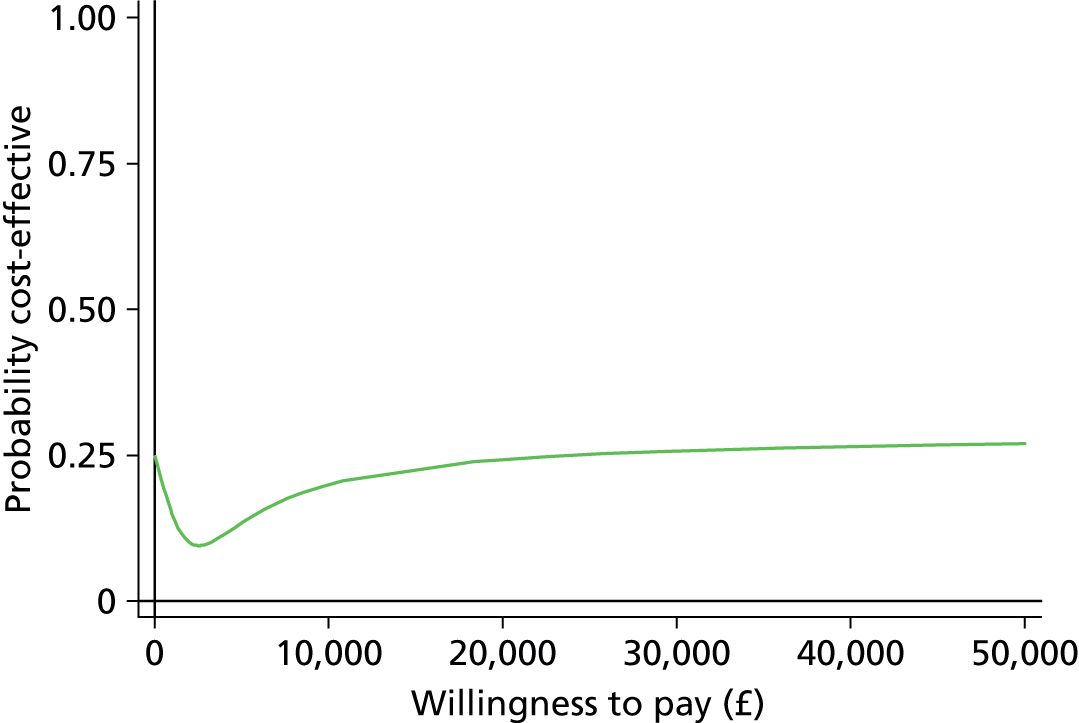

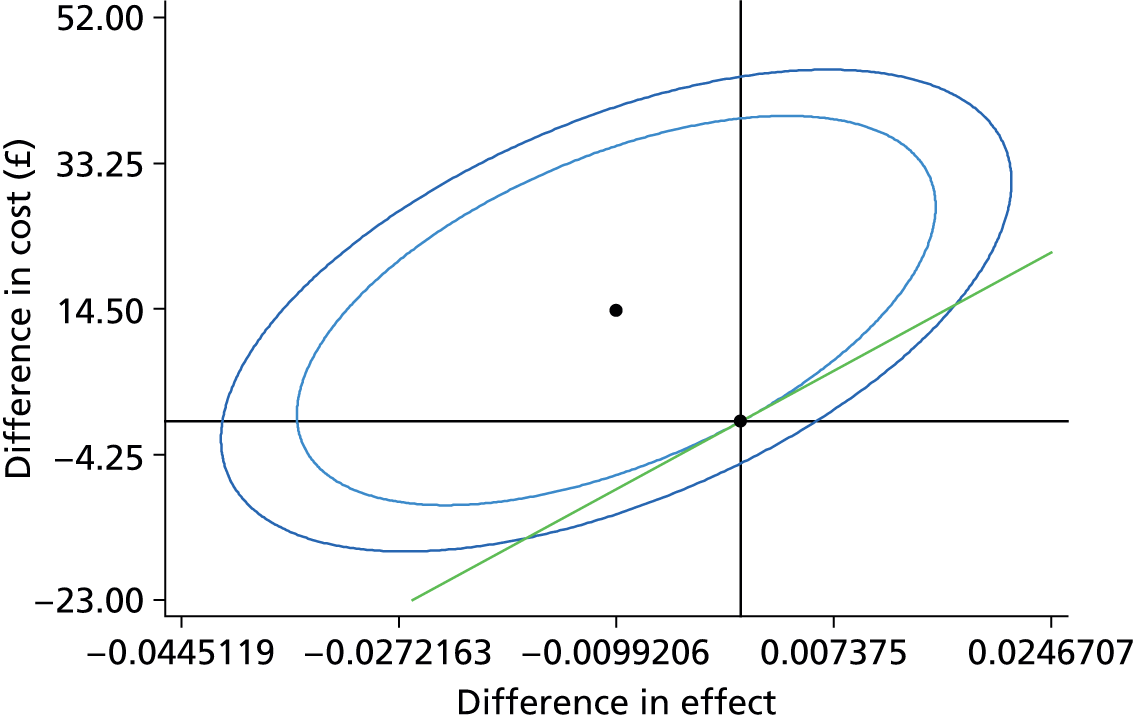

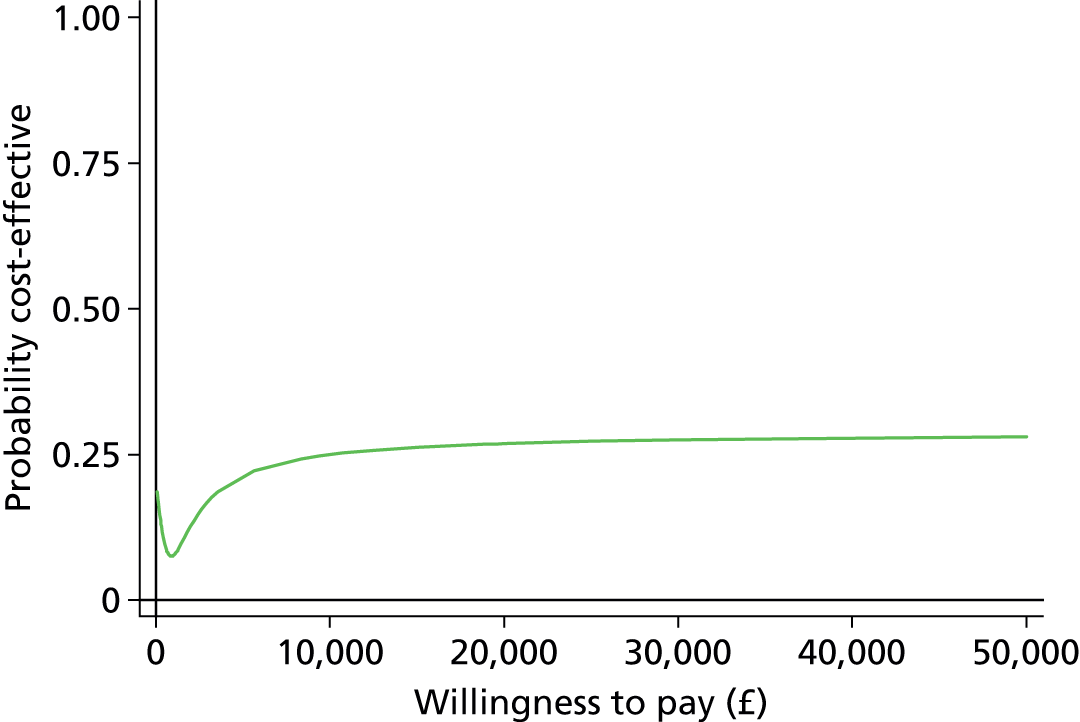

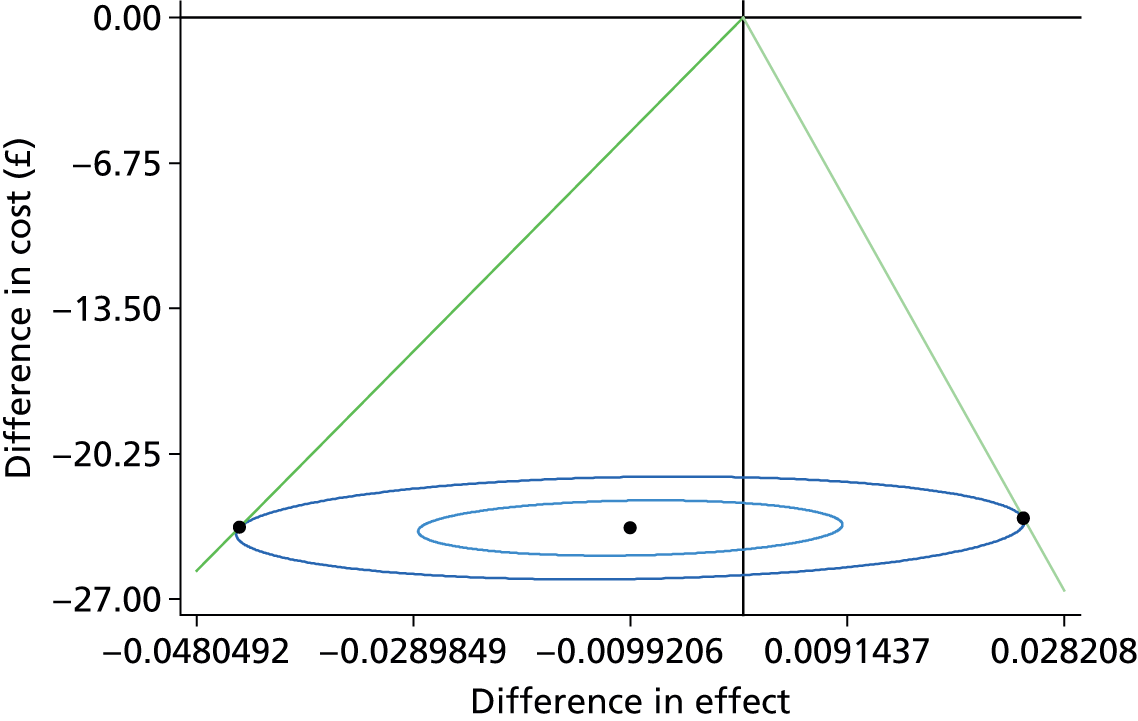

Our baseline analysis calculated the average cost and outcome on a per patient basis. From these estimates, the incremental cost-effectiveness ratios (ICERs) for the different assessment options were derived, producing an incremental cost per accurate retreatment decision. Sensitivity analyses were carried out to demonstrate the impact of variation around the key parameters in the analysis on the baseline cost-effectiveness results. The four sensitivity analyses which we conducted were:

- Sensitivity analysis 1: all patients initiating treatment were assumed to have a course of three ranibizumab injections given at three subsequent injection consultations, with no additional monitoring reviews. This matched the way in which discontinuous treatment was administered in the IVAN trial.

- Sensitivity analysis 2: treatment was assumed to be one aflibercept injection given during an injection consultation.

- Sensitivity analysis 3: treatment consisted of one bevacizumab injection given during an injection consultation.

- Sensitivity analysis 4: only the cost of a monitoring review was considered, rather than the cost of the whole pathway.
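For the base case and for each sensitivity analysis, the ICER is the difference in mean cost divided by the difference in the proportion of correct retreatment decisions. The sketch below shows the arithmetic only; the costs and proportions are hypothetical round numbers chosen for illustration and are not trial results.

```python
def icer(cost_new, cost_comparator, effect_new, effect_comparator):
    """Incremental cost per additional unit of effect (new vs. comparator)."""
    delta_cost = cost_new - cost_comparator
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        raise ValueError("ICER is undefined when effects are identical")
    return delta_cost / delta_effect


# Hypothetical values (not trial results).
optometrist_cost = 50.0          # mean pathway cost per patient (GBP)
ophthalmologist_cost = 75.0
optometrist_correct = 0.84       # proportion of correct retreatment decisions
ophthalmologist_correct = 0.85

ratio = icer(optometrist_cost, ophthalmologist_cost,
             optometrist_correct, ophthalmologist_correct)
```

With these illustrative inputs both increments are negative, so the ratio of about 2500 is read as the saving per correct decision forgone rather than as a cost per decision gained; the sign of each increment should always be reported alongside the ratio.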

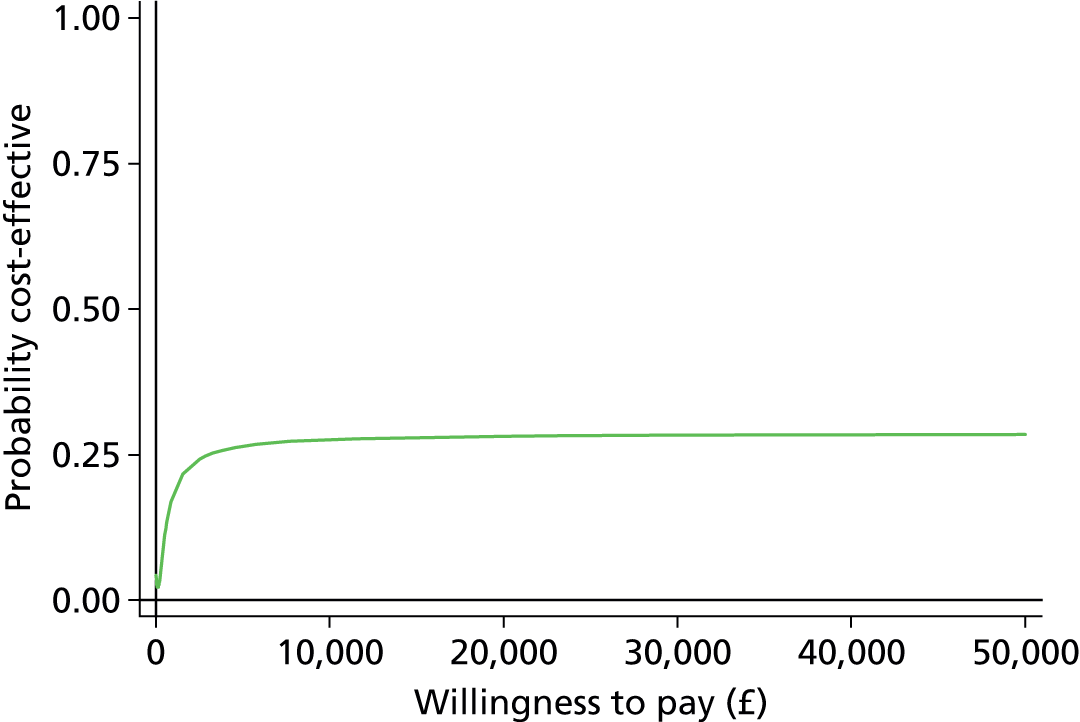

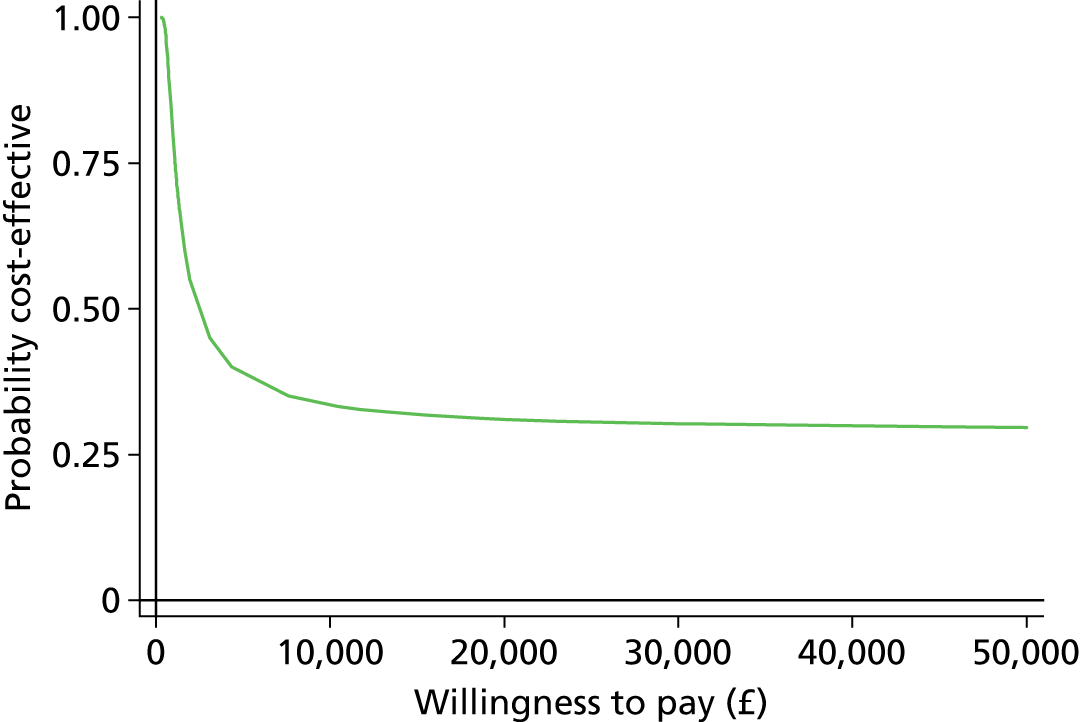

The economic evaluation results were expressed in terms of a cost-effectiveness acceptability curve (CEAC). This indicates the likelihood that the results fall below a given cost-effectiveness ceiling. It could help decision makers to assess whether optometrists are likely to represent value for money for the NHS, both when compared with ophthalmologists in making decisions about retreatment in nAMD patients and when compared with completely disparate health-care interventions. All health economics analyses were conducted in Stata version 12.1 (StataCorp LP, College Station, TX, USA), except the budget impact analysis.
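A CEAC can be built from replicate estimates of incremental cost and incremental effect, for example from bootstrap resampling (this section does not state how the replicates were generated, so that is an assumption). For each ceiling value, the curve gives the proportion of replicates with a positive net monetary benefit. The function name and the four replicates below are hypothetical.

```python
def ceac(delta_costs, delta_effects, ceilings):
    """Proportion of replicates that are cost-effective at each ceiling value."""
    if len(delta_costs) != len(delta_effects) or not delta_costs:
        raise ValueError("replicates must be non-empty and of equal length")
    n = len(delta_costs)
    points = []
    for ceiling in ceilings:
        # Net monetary benefit: ceiling * incremental effect - incremental cost.
        favourable = sum(
            ceiling * d_effect - d_cost > 0
            for d_cost, d_effect in zip(delta_costs, delta_effects)
        )
        points.append((ceiling, favourable / n))
    return points


# Hypothetical replicates (optometrists minus ophthalmologists).
delta_costs = [-30.0, -20.0, -25.0, -10.0]
delta_effects = [-0.02, 0.01, -0.01, 0.00]
curve = ceac(delta_costs, delta_effects, ceilings=[0, 2000, 5000])
```

In this illustration the new strategy is cheaper in every replicate, so the curve starts at 1 and falls as the ceiling rises and any loss of correct decisions is valued more highly.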

Budget impact

Freeing up HES clinic time could lead to an increase in the overall capacity of the NHS both to manage the nAMD population more effectively and to manage non-nAMD eye patients (more time for ophthalmologists to spend with non-nAMD patients if they are seeing fewer nAMD patients for monitoring). Therefore, we attempted to estimate the potential time and costs that could be saved by HES clinics if some of the management of nAMD patients could be undertaken in the community. This was done by using the resource-use information collected during the IVAN trial as a basis on which to consider what proportion of an ophthalmologist’s time is spent on retreatment decisions for this group of patients relative to other aspects of their care. We brought together data from the IVAN trial (average number of patients attending a clinic) and information from the literature, and used expert opinion from the ECHoES study ophthalmologists and optometrists to try to estimate the total number of patients with quiescent or no lesion in both eyes who would be eligible for monitoring by a community optometrist in a given month and the total number of monitoring visits that could be transferred from a hospital to the community per year. We then replaced the costs of the ophthalmologist’s time with the cost of the optometrist’s time and examined the difference. The Microsoft Excel® spreadsheet (Microsoft Corporation, Redmond, WA, USA) calculations for the budget impact are reported in Appendix 3.

Qualitative research

Focus groups were conducted with ophthalmologists, optometrists and eye service users separately, and one-to-one interviews were conducted with other health professionals involved in the care and services of those with eye conditions.

Recruitment

Focus groups with optometrists and ophthalmologists

Two focus groups were conducted with ophthalmologists and optometrists separately, held at specialist conferences (the National Optical Conference for Optometrists in Birmingham, November 2013, and the Elizabeth Thomas Seminar for Ophthalmologists in Nottingham, December 2013). For the optometrist focus groups, information about the study was placed in specialist press and the ECHoES study website. Focus group participants were also recruited by the snowball technique, with health-care professionals informing other potentially interested colleagues. For the ophthalmologist focus groups, conference organisers emailed information about the study to delegates. Interested participants were asked to contact the qualitative researcher for more information.

Interviews with commissioners, clinical advisors to clinical commissioning groups and public health representatives

Clinical commissioning groups (CCGs) in England were contacted by an e-mail which contained information about the study, asking for it to be forwarded on to general practitioners (GPs)/commissioners in each CCG who were responsible for commissioning ophthalmology care. Those who were interested were asked to contact the qualitative researcher for more information. Subsequent selection of clinical advisors and public health representatives was guided via the snowball technique. Interviews were mostly conducted in person (n = 6) although, when it was not practicable to do so, a telephone interview was conducted (n = 4). Interviews were conducted between March and June 2014.

Focus groups with service users

Participants with nAMD were recruited from local support groups organised by the Macular Society, formerly known as the Macular Disease Society, a UK-based charity for anyone affected by central vision loss with over 15,000 members and 270 local support groups around the UK (www.macularsociety.org). Three support groups in the south-west of England were initially selected, one based in a major city, one in a large town and one in a rural village. Service users with any history of nAMD were invited to join the study (regardless of whether they had nAMD in one eye or both, dry AMD in their other eye, were currently receiving or had had any treatment in the past). The researcher attended the local support meetings to explain about the research and provide attendees with a participant information leaflet to take home. Contact details of those potentially interested at this stage were obtained. Those who expressed an interest were telephoned by the researcher a week later to discuss the study further and to answer any questions to help them decide whether or not to take part.

Sampling

Basic demographic, health and professional information was collected from those who agreed to be contacted for sampling purposes. A purposeful sampling strategy was used to ensure that the feasibility and acceptability of the proposed shared model of care for nAMD was captured from a range of perspectives. Within this sampling approach, maximum variation was sought in relation to profession, age, gender and geographic location (for health professionals) and gender, age, type of AMD and time since diagnosis (for service users). Participant characteristics were assessed as the study progressed, and individuals or groups that were under-represented were targeted (i.e. commissioners or clinical advisors). Where it was felt that variation had been achieved, potential participants were thanked for their interest and informed that sufficient numbers had been recruited (i.e. optometrists).

Data collection

A favourable ethical approval for this study was granted by a UK NHS Research Ethics Committee. Written consent was obtained from participants at the start of each focus group or interview. Separate topic guides were developed for service users, optometrists/ophthalmologists (with additional questions for each professional group) and all other health professionals, to ensure that discussions within each group covered the same basic issues but with sufficient flexibility to allow new issues of importance to the informants to emerge. These were based on the study aims, relevant literature and feedback from eye-health professionals in the ECHoES study team, and consisted of open-ended questions about the current model of care for nAMD and perspectives of stable patients being monitored in the community by optometrists. They were adapted as analysis progressed to enable exploration of emerging themes.

All of the focus groups and interviews were conducted by DT; these were predominantly led by the participants themselves, with DT flexibly guiding the discussion by occasionally probing for more information, clarifying any ambiguous statements, encouraging the discussion to stay on track, and, in the focus groups, providing an opportunity for all participants to contribute to the discussion. All participants were offered a £20 gift voucher to thank them for their time (two commissioners declined the voucher).

Data analysis

Focus groups and interviews were transcribed verbatim and checked against the audio-recording for accuracy. Transcripts were imported into NVivo version 10 (QSR International, Warrington, UK), where data were systematically assigned codes and analysed thematically using constant comparison methods derived from grounded theory methodology. 26 Transcripts were repeatedly read and ongoing potential ideas and coding schemes were noted at every stage of analysis. To ensure each transcript was given equal attention in the coding process, data were analysed sentence by sentence and interesting features were coded. Clusters of related codes were then organised into potential themes, and emerging themes and codes within transcripts and across the data set were then compared to explore shared or disparate views among participants. The transcripts were reread to ensure the proposed themes adequately covered the coded extracts, and the themes were refined accordingly. Emerging themes were discussed with a second experienced social scientist (NM) with reference to the raw data. Data collection and analysis proceeded in parallel, with emerging findings informing further sampling and data collection. Data collection and analysis continued until the point of data saturation, that is, the point at which no new themes emerged.

The ECHoES trial participants’ perspectives of training and shared care

In addition to the information collected during focus groups and interviews, the opinions of all optometrists and ophthalmologists who had taken part in the ECHoES trial were sought in a short online questionnaire. The survey related to participants’ experiences of the training and their attitudes towards shared care for nAMD. Questions required a binary, a Likert scale or a free-text response.

Quantitative data were analysed in Microsoft Excel and presented as proportions. Free-text responses were imported into NVivo and coded into categories, which were derived from the main survey questions, relating to feedback on the training programme (experiences of using the web application, ease of training and additional resources used) and attitudes towards shared care (general perspectives of the proposed model, and perceived facilitators and barriers to implementation). Data were analysed thematically using the constant comparative techniques of grounded theory, whereby codes within and across the data set were compared to look for shared or disparate views among optometrists and ophthalmologists.

Chapter 3 Results: classification of lesion and lesion components (objectives 1 to 3)

Registered participants

A total of 155 health-care professionals (72 ophthalmologists and 83 optometrists) registered their interest in the ECHoES trial. Of these, 62 ophthalmologists and 67 optometrists consented to take part. Everyone who registered an interest was eligible for the trial; those who did not consent either did not return their consent forms or were no longer required for the trial. See Figure 3 for details.

FIGURE 3.

Flow of participants.

Recruitment

Participants were initially recruited between 1 June and 9 October 2013. However, as participants progressed through the training stages, it became apparent that the withdrawal rate of optometrists was higher than expected and that more would be required if the planned sample size were to be met. Recruitment was therefore reopened between 13 February and 6 March 2014, when a further seven optometrists consented to take part. A number of participants withdrew or were withdrawn by co-ordinating staff throughout the trial, mostly because they missed webinars or failed their assessment of training vignettes (see Figure 3 and Withdrawals for details). The final participant completed the main study vignettes on 21 April 2014. As planned, 48 ophthalmologists and 48 optometrists completed the full trial.

At the start of the trial we were unsure how many participants we would need to recruit in order to meet our target of 48 participants in each group. Therefore, we over-recruited at the consent stage and asked a number of participants to complete the webinar and then ‘wait and see’ whether or not we needed them to participate in the main trial. We also slightly over-recruited at each stage of the trial to account for dropouts. This resulted in a small number of participants being withdrawn at various stages of the trial because they were no longer required.

Withdrawals

During the ECHoES trial, withdrawals could occur for a number of reasons. First, participants could withdraw or be withdrawn between consenting and receiving their training vignettes if the mandatory webinar training was not completed, they no longer wanted to take part or they were no longer needed for the trial. This occurred for six ophthalmologists and six optometrists (see Figure 3 for details). Second, participants could be withdrawn if the threshold performance score for the assessments of their training vignettes was not attained. Of the 54 ophthalmologists who completed their vignette training, 48 (88.9%) passed first time, two (3.7%) passed on their second attempt and four (7.4%) failed their second attempt so were withdrawn. Of the 57 optometrists who completed their vignette training, 38 (66.7%) passed first time, 11 (19.3%) passed on their second attempt and eight (14.0%) failed their second attempt and were withdrawn from the trial. Two ophthalmologists and one optometrist who passed their training vignettes were withdrawn from the trial as the target sample size (48 in each group completing main assessments) had already been reached.

Numbers analysed

Ninety-six participants, 48 ophthalmologists and 48 optometrists, were included in the analysis population. For the primary and secondary outcomes, all participants were included as by design there were no missing data.

Reference standard classifications

The reference standard classified 142 (49.3%) of the 288 vignettes as reactivated, 5 (1.7%) as suspicious and 141 (49.0%) as quiescent.

Participant characteristics

The characteristics of participants collected for the trial included only age, gender and date of qualification for the participant’s profession. As participants were not randomised to the two comparison groups, baseline characteristics were presented descriptively and formally compared. Table 5 shows that the gender balance and average ages were similar among optometrists and ophthalmologists [mean 43.1 years (SD 10.1 years) and 42.2 years (SD 8.0 years), respectively; 50.0% vs. 43.8% women]. Optometrists had on average significantly more years of qualified experience than ophthalmologists [median 17.4 years (IQR 10.1–28.4 years) and 11.4 years (IQR 4.8–16.9 years), respectively].

| Characteristic | Ophthalmologists (n = 48) | Optometrists (n = 48) | p-value |
|---|---|---|---|
| Age (years), mean (SD) | 42.2 (8.0) | 43.1 (10.1) | 0.616 |
| Gender (female), n/N (%) | 21/48 (43.8) | 24/48 (50.0) | 0.539 |
| Years since qualification, median (IQR) | 11.4 (4.8–16.9) | 17.4 (10.1–28.4) | < 0.001 |

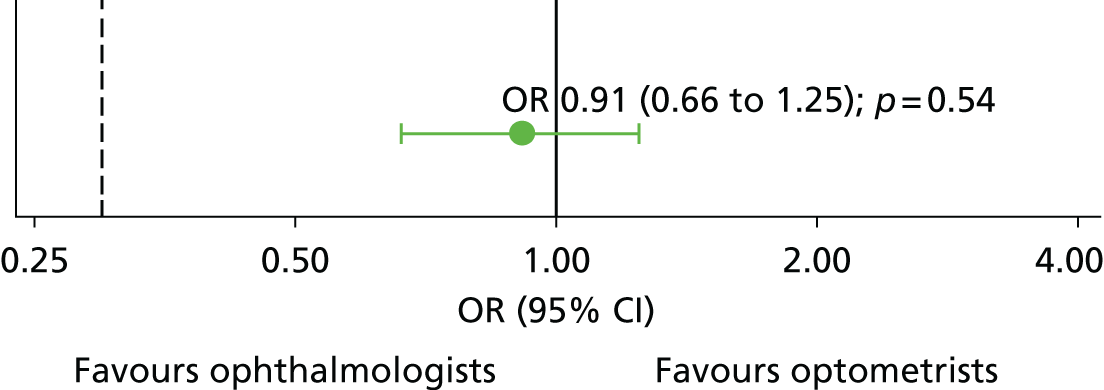

Primary outcome

The primary outcome (correct lesion classification by a participant compared with the reference standard: 2.5.1) was achieved by the ophthalmologists for 1722 out of 2016 (85.4%) vignettes and by the optometrists for 1702 out of 2016 (84.4%) vignettes (Table 6). The odds of an optometrist being correct were not statistically different from the odds of an ophthalmologist being correct (OR 0.91, 95% CI 0.66 to 1.25; p = 0.543). The ability of optometrists to assess vignettes is non-inferior (both clinically and statistically) to the ability of ophthalmologists, according to the prespecified limit of 10% absolute difference (0.298 on the odds scale; illustrated by the dashed black line on Figure 4). In this primary outcome model, the variance attributed to the participant random effect was far smaller than that of the vignette random effect (0.360 and 2.062, respectively).

| Primary outcome | Ophthalmologists (n = 48) | Optometrists (n = 48) | OR (95% CI) | p-value |
|---|---|---|---|---|
| Lesions correctly classified (overall), n/N (%) | 1722/2016 (85.4) | 1702/2016 (84.4) | 0.91 (0.66 to 1.25) | 0.543 |
| Sensitivity (overall), n/N (%) | 736/994 (74.0) | 795/994 (80.0) | 1.52 (1.08 to 2.15) | 0.018 |
| Specificity (overall), n/N (%) | 986/1022 (96.5) | 907/1022 (88.7) | 0.27 (0.17 to 0.44) | < 0.001 |
| Median correct participant score (IQR) | 37.0 (35.0–38.5) | 36.0 (33.0–38.0) | – | – |
| Median sensitivity, participant level (IQR) | 76.5 (64.9–87.1) | 83.7 (71.6–94.1) | – | – |
| Median specificity, participant level (IQR) | 100.0 (94.6–100.0) | 94.7 (79.2–100.0) | – | – |

FIGURE 4.

Comparison between optometrists and ophthalmologists for the primary outcome. Black dashed line represents the non-inferiority limit of 0.298. Effect size point estimate is shown as the OR and the error bar represents the 95% CI. The solid vertical line illustrates line of no difference at 1.
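The non-inferiority limit of 0.298 on the odds scale can be reproduced by converting a 10% absolute difference into an odds ratio if a reference accuracy of 95% is assumed for ophthalmologists. That reference value is an assumption made here for illustration and is not stated in this section; the function names are also hypothetical. The sketch then checks the reported confidence interval against the limit.

```python
def odds(probability):
    """Convert a probability to odds."""
    return probability / (1.0 - probability)


def margin_as_odds_ratio(reference_accuracy, absolute_margin):
    """Odds ratio equivalent to an absolute drop from the reference accuracy."""
    return odds(reference_accuracy - absolute_margin) / odds(reference_accuracy)


# ASSUMPTION: 95% reference accuracy (not stated in this section).
margin = margin_as_odds_ratio(reference_accuracy=0.95, absolute_margin=0.10)

# Reported primary outcome result: OR 0.91, 95% CI 0.66 to 1.25.
ci_lower = 0.66
# Non-inferiority is supported when the whole interval lies above the margin.
non_inferior = ci_lower > margin
```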

The median number of correct lesion classifications (compared with the reference standard) by individual participants was 37 (IQR 35.0–38.5) in the ophthalmologist group compared with 36 (IQR 33.0–38.0) in the optometrist group. The lowest number of correct lesion classifications was 26 out of 42 in the ophthalmologist group and 24 out of 42 in the optometrist group; the highest number of correct lesion reactivation decisions was 41 out of 42, achieved by one ophthalmologist and three optometrists. It was recommended by a reviewer that the relationship between years of experience and the number of correct responses of ophthalmologists should be explored. Appendix 4, Figure 27 suggested no clear relationship. Appendix 4, Figure 28 shows a plot of individual optometrist participant scores against their paired ophthalmologist counterparts for the 48 different sets of vignettes. The sensitivity and specificity (see Table 6; described in Chapter 2, Group comparisons) and detailed breakdown of the participants’ classifications (Table 7 and Figure 5) show the agreement between participants and the reference standard in more detail. Figure 5 shows that optometrists were more likely than ophthalmologists to correctly classify a vignette as reactivated (80.0% vs. 74.0%), but were less likely to correctly classify a vignette as quiescent (65.7% vs. 81.7%).

| Participants’ lesion classification, by reference standard classification | Ophthalmologists, n/N (observations) | Ophthalmologists, % | Optometrists, n/N (observations) | Optometrists, % | Overall, n/N (observations) | Overall, % |
|---|---|---|---|---|---|---|
| Reference standard: reactivated (n = 142) | | | | | | |
| Reactivated | 736/994 | 74.0 | 795/994 | 80.0 | 1531/1988 | 77.0 |
| Suspicious | 196/994 | 19.7 | 142/994 | 14.3 | 338/1988 | 17.0 |
| Quiescent | 62/994 | 6.2 | 57/994 | 5.7 | 119/1988 | 6.0 |
| Reference standard: suspicious (n = 5) | | | | | | |
| Reactivated | 1/35 | 2.9 | 10/35 | 28.6 | 11/70 | 15.7 |
| Suspicious | 17/35 | 48.6 | 11/35 | 31.4 | 28/70 | 40.0 |
| Quiescent | 17/35 | 48.6 | 14/35 | 40.0 | 31/70 | 44.3 |
| Reference standard: quiescent (n = 141) | | | | | | |
| Reactivated | 35/987 | 3.5 | 105/987 | 10.6 | 140/1974 | 7.1 |
| Suspicious | 146/987 | 14.8 | 234/987 | 23.7 | 380/1974 | 19.3 |
| Quiescent | 806/987 | 81.7 | 648/987 | 65.7 | 1454/1974 | 73.7 |

FIGURE 5.

Participants’ lesion classifications for vignettes classified as reactivated or quiescent by the reference standard. The 35 observations in each group for the five vignettes classified by the reference standard as suspicious are not shown in this graph. [The data are ophthalmologists: 1, reactivated (2.9%); 17, suspicious (48.6%); 17, quiescent (48.6%); optometrists: 10, reactivated (28.6%); 11, suspicious (31.4%); and 14, quiescent (40.0%). These data are consistent with a more cautious approach to decision-making about retreatment by optometrists than ophthalmologists.]

A post-hoc analysis to look at this subgroup effect was undertaken. The interaction between participant group and reference standard vignette classification (reactivated vs. quiescent/suspicious) was statistically significant (interaction p < 0.001); the odds of an optometrist being correct were about 50% higher than those of an ophthalmologist if the reference standard classification was reactivated (OR 1.52, 95% CI 1.08 to 2.15; p = 0.018) but about 70% lower if the reference standard classification was quiescent/suspicious (OR 0.27, 95% CI 0.17 to 0.44; p < 0.001).
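The descriptions ‘about 50% higher’ and ‘about 70% lower’ follow directly from the reported ORs. A minimal Python sketch of the conversion is given here for readers who wish to check it; the function name is illustrative and is not part of the study’s analysis code.

```python
def percent_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in odds implied by an odds ratio (negative means lower odds)."""
    return (odds_ratio - 1.0) * 100.0


# ORs (optometrists vs. ophthalmologists) reported for the post-hoc subgroup analysis
reported_ors = {"reactivated": 1.52, "quiescent/suspicious": 0.27}

for reference_class, odds_ratio in reported_ors.items():
    change = percent_change_in_odds(odds_ratio)
    print(f"{reference_class}: {change:+.0f}% change in odds of a correct classification")
```

An OR of 1.52 therefore corresponds to odds roughly 52% higher, and an OR of 0.27 to odds roughly 73% lower.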

Secondary outcomes

Serious sight-threatening errors

Serious sight-threatening errors could occur only for the vignettes which were classified as ‘reactivated’ by the reference standard. These errors occurred in 62 out of 994 (6.2%) of ophthalmologists’ classifications and 57 out of 994 (5.7%) of optometrists’ classifications; this difference was not statistically significant (OR 0.93, 95% CI 0.55 to 1.57; p = 0.789).
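As a rough check on the direction and size of this comparison, an unadjusted OR can be calculated from the raw counts. The sketch below is illustrative only: it treats every classification as an independent observation, whereas the reported OR of 0.93 presumably reflects the modelling described in Chapter 2, so the two values are not expected to coincide exactly.

```python
def crude_odds_ratio(events_a: int, total_a: int, events_b: int, total_b: int) -> float:
    """Unadjusted odds ratio of group A versus group B from event counts and totals."""
    odds_a = events_a / (total_a - events_a)
    odds_b = events_b / (total_b - events_b)
    return odds_a / odds_b


# Sight-threatening errors: optometrists (57/994) versus ophthalmologists (62/994)
crude_or = crude_odds_ratio(57, 994, 62, 994)
print(f"Crude OR: {crude_or:.2f}")
```

The crude value is close to, but slightly lower than, the reported estimate, which is consistent with there being no material difference between the groups.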

Each participant viewed between 15 and 27 ‘reactivated’ vignettes. The largest number of sight-threatening errors made by a single participant was eight (out of 25) in the ophthalmologist group and five (out of 19) in the optometrist group (Table 8). Table 8 also shows the number of non-sight-threatening errors, that is, classifications of vignettes as reactivated when the reference standard was quiescent. This type of error occurred more frequently in the optometrist group (105 out of 987; 10.6%) than in the ophthalmologist group (35 out of 987; 3.5%); this difference was not formally compared.

| Type of serious error | Ophthalmologists (n = 48): n/N | Ophthalmologists: % | Optometrists (n = 48): n/N | Optometrists: % | OR (95% CI) | p-value |
|---|---|---|---|---|---|---|
| Sight-threatening | 62/994 | 6.2 | 57/994 | 5.7 | 0.93 (0.55 to 1.57) | 0.789 |
| Non-sight-threatening | 35/987 | 3.5 | 105/987 | 10.6 | – | – |
| Number of participants making different numbers of sight-threatening serious errors<sup>a</sup> | | | | | | |
| 0 errors | 17/48 | 35.4 | 19/48 | 39.6 | – | – |
| 1 error | 13/48 | 27.1 | 13/48 | 27.1 | – | – |
| 2 errors | 11/48 | 22.9 | 8/48 | 16.7 | – | – |
| 3 errors | 5/48 | 10.4 | 5/48 | 10.4 | – | – |
| 4 errors | 1/48 | 2.1 | 2/48 | 4.2 | – | – |
| 5 errors | 0/48 | 0.0 | 1/48 | 2.1 | – | – |
| 8 errors | 1/48 | 2.1 | 0/48 | 0.0 | – | – |
| Number of participants making different numbers of non-sight-threatening serious errors<sup>a</sup> | | | | | | |
| 0 errors | 27/48 | 56.3 | 19/48 | 39.6 | – | – |
| 1 error | 11/48 | 22.9 | 6/48 | 12.5 | – | – |
| 2 errors | 7/48 | 14.6 | 7/48 | 14.6 | – | – |
| 3 errors | 2/48 | 4.2 | 4/48 | 8.3 | – | – |
| 4 errors | 1/48 | 2.1 | 3/48 | 6.3 | – | – |
| 5 errors | 0/48 | 0.0 | 5/48 | 10.4 | – | – |
| 7 errors | 0/48 | 0.0 | 3/48 | 6.3 | – | – |
| 15 errors | 0/48 | 0.0 | 1/48 | 2.1 | – | – |
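The per-participant distributions in Table 8 can be reconciled with the overall error counts by weighting each number of errors by the number of participants who made it. The following Python sketch does this using the counts transcribed from the table; the variable and function names are illustrative.

```python
# Number of errors per participant -> number of participants (transcribed from Table 8)
sight_threatening = {
    "ophthalmologists": {0: 17, 1: 13, 2: 11, 3: 5, 4: 1, 5: 0, 8: 1},
    "optometrists": {0: 19, 1: 13, 2: 8, 3: 5, 4: 2, 5: 1, 8: 0},
}
non_sight_threatening = {
    "ophthalmologists": {0: 27, 1: 11, 2: 7, 3: 2, 4: 1, 5: 0, 7: 0, 15: 0},
    "optometrists": {0: 19, 1: 6, 2: 7, 3: 4, 4: 3, 5: 5, 7: 3, 15: 1},
}


def totals(distribution: dict[int, int]) -> tuple[int, int]:
    """Return (number of participants, total number of errors) for one distribution."""
    participants = sum(distribution.values())
    errors = sum(n_errors * n_people for n_errors, n_people in distribution.items())
    return participants, errors


for label, table in [("sight-threatening", sight_threatening),
                     ("non-sight-threatening", non_sight_threatening)]:
    for group, distribution in table.items():
        participants, errors = totals(distribution)
        print(f"{label}, {group}: {participants} participants, {errors} errors")
```

Each distribution sums to 48 participants, and the weighted totals reproduce the 62 and 57 sight-threatening errors and the 35 and 105 non-sight-threatening errors reported in the first two rows of the table.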

Lesion components

We did not attempt to achieve a consensus among the three experts for the individual features of the vignettes. Therefore, responses given by the professional groups for these individual features were formally compared with each other rather than with any reference standard. Optometrists judged lesion components to be present more often than ophthalmologists for all components except PED (Table 9 and Figure 6). This difference was particularly evident for DRT and exudates; the odds of identifying these components as present were more than three times higher in the optometrist group than in the ophthalmologist group [OR 3.46, 95% CI 2.09 to 5.71 (p < 0.001), and OR 3.10, 95% CI 1.58 to 6.08 (p < 0.001), respectively]. SRF was also reported significantly more often by optometrists than ophthalmologists (OR 1.73, 95% CI 1.21 to 2.48; p = 0.002). The difference between the groups was of borderline statistical significance for blood (OR 1.56, 95% CI 1.00 to 2.44; p = 0.048). The groups did not differ significantly for IRC or PED [OR 1.00, 95% CI 0.61 to 1.65 (p = 0.985) and OR 0.91, 95% CI 0.47 to 1.79 (p = 0.786), respectively].

| Secondary outcomes | Ophthalmologists (n = 48): n/N | Ophthalmologists: % | Optometrists (n = 48): n/N | Optometrists: % | OR (95% CI) | p-value |
|---|---|---|---|---|---|---|
| Is there SRF? | 515/2016 | 25.5 | 627/2016 | 31.1 | 1.73 (1.21 to 2.48) | 0.002 |
| Has it increased since baseline? | 498/515 | 96.7 | 541/627 | 86.3 | – | – |
| Are there IRC? | 799/2016 | 39.6 | 808/2016 | 40.1 | 1.00 (0.61 to 1.65) | 0.985 |
| Has it increased since baseline? | 667/799 | 83.5 | 683/808 | 84.5 | – | – |
| Is there DRT? | 482/2016 | 23.9 | 826/2016 | 41.0 | 3.46 (2.09 to 5.71) | < 0.001 |
| Has it increased since baseline? | 381/482 | 79.0 | 597/826 | 72.3 | – | – |
| Is there any PED? | 845/2016 | 41.9 | 842/2016 | 41.8 | 0.91 (0.47 to 1.79) | 0.786 |
| Has it increased since baseline? | 311/845 | 36.8 | 392/842 | 46.6 | – | – |
| Is there blood? | 150/2016 | 7.4 | 194/2016 | 9.6 | 1.56 (1.00 to 2.44) | 0.048 |
| New or increased since baseline? | 126/150 | 84.0 | 146/194 | 75.3 | – | – |
| Are there exudates? | 152/2016 | 7.5 | 380/2016 | 18.8 | 3.10 (1.58 to 6.08) | < 0.001 |
| New or increased since baseline? | 38/152 | 25.0 | 87/380 | 22.9 | – | – |

FIGURE 6.

Lesion components. Point estimates for different lesion components are ORs and error bars are 95% CIs. The vertical dashed line illustrates line of no difference at 1.

Confidence ratings

The confidence ratings displayed in Table 10 show that ophthalmologists were clearly more confident in their decisions than optometrists. Ophthalmologists stated that they were very confident (5 on the rating scale) in their judgements for 1175 out of 2016 (58.3%) vignettes, whereas optometrists reported the same level of confidence in their judgements for only 575 out of 2016 (28.5%) vignettes (OR 0.15, 95% CI 0.07 to 0.32; p < 0.001). For both groups, a confidence rating of 5 was associated with a correct answer over 90% of the time, but there did not appear to be any clear relationship between confidence and correctness for lower confidence ratings, especially for optometrists (see Table 10).

| Secondary outcomes | Ophthalmologists (n = 48): n/N (observations) | Ophthalmologists: % | Optometrists (n = 48): n/N (observations) | Optometrists: % | OR (95% CI) | p-value |
|---|---|---|---|---|---|---|
| Confidence rating | | | | | | |
| 1 | 7/2016 | 0.3 | 52/2016 | 2.6 | 0.15 (0.07 to 0.32)<sup>a</sup> | < 0.001 |
| 2 | 26/2016 | 1.3 | 140/2016 | 6.9 | | |
| 3 | 220/2016 | 10.9 | 496/2016 | 24.6 | | |
| 4 | 588/2016 | 29.2 | 753/2016 | 37.4 | | |
| 5 | 1175/2016 | 58.3 | 575/2016 | 28.5 | | |
| Correct lesion classifications for each confidence rating<sup>b</sup> | | | | | | |
| 1 | 3/7 | 42.9 | 42/52 | 80.8 | – | – |
| 2 | 21/26 | 80.8 | 114/140 | 81.4 | – | – |
| 3 | 147/220 | 66.8 | 362/496 | 73.0 | – | – |
| 4 | 474/588 | 80.6 | 634/753 | 84.2 | – | – |
| 5 | 1077/1175 | 91.7 | 550/575 | 95.7 | – | – |

Key vignette information

It was stated in the protocol that the effect of key vignette features on correct reactivation decisions would be assessed. For interpretability we modelled the effect of these features on the number of incorrect classifications.

The influence of vignette features was investigated using Poisson regression, adjusting for the reference standard classification. Interactions between these vignette characteristics and professional group were tested but were not retained as they were not statistically significant at the 5% level. The outcome of this analysis can be seen in Figure 7: professional group, gender, cardiac history and age did not significantly influence the number of incorrect classifications. In contrast, the reference standard classification, smoking status and BCVA sum and difference were all statistically significant (p < 0.001, p = 0.005, p = 0.001 and p = 0.004, respectively). Vignettes of current smokers were more likely to be incorrectly classified than non-smokers, but differences between ex-smoker and non-smoker vignettes were not found [incidence rate ratio (IRR) 1.33, 95% CI 1.05 to 1.70, and IRR 0.91, 95% CI 0.76 to 1.10, respectively]. Vignettes with better BCVA (larger average BCVA over the two visits) were less likely to be incorrectly classified (IRR 0.996, 95% CI 0.993 to 0.998), while vignettes with a greater increase in BCVA from baseline to index visit were more likely to be incorrectly classified (IRR 1.017, 95% CI 1.005 to 1.028). Vignettes classified as reactivated by the reference standard were more likely to be incorrectly classified (IRR 3.16, 95% CI 2.62 to 3.81); this finding was in agreement with the raw data displayed in Figure 5 (noting that, in the two right-hand columns, classifications of quiescent and suspicious should be pooled for the total percentage correct).

FIGURE 7.

Influence of key vignette information. Cardiac history included angina, myocardial infarction and/or heart failure. Point estimates show IRRs and error bars are 95% CIs. Line of no difference is illustrated by the vertical dashed line at 1. For items of information with multiple categories, p-values are for tests across all categories. RS, reference standard.
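The IRRs for the BCVA terms are close to 1 because they describe the change per single unit of the covariate. Assuming that these terms entered the Poisson model as linear covariates (so that effects multiply on the rate scale), the IRR for a difference of k units is the per-unit IRR raised to the power k. The Python sketch below applies this to a hypothetical 10-unit difference; the choice of 10 units is for illustration only and is not taken from the report.

```python
def scaled_irr(irr_per_unit: float, units: float) -> float:
    """IRR for a difference of `units` in a covariate, given the per-unit IRR.

    Assumes a log-linear (Poisson) model with a linear covariate term.
    """
    return irr_per_unit ** units


# Per-unit IRRs reported in the text; the 10-unit difference is a hypothetical example
HYPOTHETICAL_UNITS = 10
bcva_average_irr = scaled_irr(0.996, HYPOTHETICAL_UNITS)
bcva_change_irr = scaled_irr(1.017, HYPOTHETICAL_UNITS)

print(f"Average BCVA, {HYPOTHETICAL_UNITS} units higher: IRR {bcva_average_irr:.3f}")
print(f"Change in BCVA, {HYPOTHETICAL_UNITS} units greater: IRR {bcva_change_irr:.3f}")
```

Under these assumptions, a 10-unit higher average BCVA would correspond to about 4% fewer incorrect classifications, and a 10-unit greater increase in BCVA to about 18% more.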

Sensitivity analysis

For the primary outcome, a vignette classification was defined as ‘correct’ if both the reference standard and the participant classified the vignette as ‘reactivated’ or if both classified a vignette as ‘suspicious’/‘quiescent’. A sensitivity analysis of the primary outcome was performed in which suspicious classifications were grouped with reactivated classifications instead of quiescent classifications. In this analysis, ophthalmologists correctly classified 1756 out of 2016 (87.1%) vignettes and optometrists correctly classified 1606 out of 2016 (79.7%). This difference was statistically significant (OR 0.51, 95% CI 0.38 to 0.67; p < 0.001), but the lower end of the CI did not cross the non-inferiority margin (0.298). Therefore, when correct classifications were redefined in this way, optometrists were statistically inferior but clinically non-inferior to ophthalmologists.
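The two definitions of ‘correct’ can be reproduced from the cross-classification in Table 7. The Python sketch below is an illustration using the counts transcribed from that table; the data structure and function names are not part of the study’s analysis code.

```python
# Reference standard class -> participant class -> observations (transcribed from Table 7)
TABLE_7 = {
    "ophthalmologists": {
        "reactivated": {"reactivated": 736, "suspicious": 196, "quiescent": 62},
        "suspicious": {"reactivated": 1, "suspicious": 17, "quiescent": 17},
        "quiescent": {"reactivated": 35, "suspicious": 146, "quiescent": 806},
    },
    "optometrists": {
        "reactivated": {"reactivated": 795, "suspicious": 142, "quiescent": 57},
        "suspicious": {"reactivated": 10, "suspicious": 11, "quiescent": 14},
        "quiescent": {"reactivated": 105, "suspicious": 234, "quiescent": 648},
    },
}

# Classes grouped on the 'reactivated' side under each definition
PRIMARY = {"reactivated"}
SENSITIVITY = {"reactivated", "suspicious"}


def count_correct(counts: dict, reactivated_side: set) -> tuple[int, int]:
    """Return (correct, total) when classes are dichotomised by `reactivated_side`."""
    correct = total = 0
    for reference, row in counts.items():
        for response, n in row.items():
            total += n
            # Correct when reference and response fall on the same side of the split
            if (reference in reactivated_side) == (response in reactivated_side):
                correct += n
    return correct, total


for group, counts in TABLE_7.items():
    for name, definition in [("primary", PRIMARY), ("sensitivity", SENSITIVITY)]:
        correct, total = count_correct(counts, definition)
        print(f"{group}, {name}: {correct}/{total} ({100 * correct / total:.1f}%)")
```

With suspicious grouped with reactivated, the function returns 1756/2016 for ophthalmologists and 1606/2016 for optometrists, as reported above. With the primary definition it returns 1722/2016 and 1702/2016, which match the column totals of correct classifications in Table 10.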

Additional (post-hoc) analyses

Vignette classifications compared with referral recommendations

It was of interest to see how lesion classification decisions related to referral decisions. These should be congruent (as referral decisions should be made based on classification decisions) but no such rules were imposed in this trial. Table 11 illustrates that, as expected, lesions classified as reactivated were usually paired with a recommendation for rapid referral to hospital (1679/1682, 99.8%) and lesions classified as quiescent were usually paired with a recommendation for review in 4 weeks (1526/1604, 95.1%). There was very little difference between the two professional groups in the extent to which these lesion classifications and referral decisions were paired. Lesions classified as suspicious were often paired with a recommendation for review in 2 weeks (605/746, 81.1%), but rapid referral to hospital was not uncommon (131/746, 17.6%). Optometrists tended to pair suspicious classifications with review in 2 weeks more often than ophthalmologists (87.3% vs. 74.4%), whereas ophthalmologists tended to pair suspicious classifications with rapid referral to hospital more often than optometrists (24.8% vs. 10.9%).

| Referral decisions by lesion classification | Ophthalmologists (n = 48): n/N (observations) | Ophthalmologists: % | Optometrists (n = 48): n/N (observations) | Optometrists: % | Overall (n = 96): n/N (observations) | Overall: % |
|---|---|---|---|---|---|---|
| Classified as reactivated | | | | | | |
| Refer to hospital | 771/772 | 99.9 | 908/910 | 99.8 | 1679/1682 | 99.8 |
| Review in 2 weeks | 0/772 | 0.0 | 2/910 | 0.2 | 2/1682 | 0.1 |
| Review in 4 weeks | 1/772 | 0.1 | 0/910 | 0.0 | 1/1682 | 0.1 |
| Classified as suspicious | | | | | | |
| Refer to hospital | 89/359 | 24.8 | 42/387 | 10.9 | 131/746 | 17.6 |
| Review in 2 weeks | 267/359 | 74.4 | 338/387 | 87.3 | 605/746 | 81.1 |
| Review in 4 weeks | 3/359 | 0.8 | 7/387 | 1.8 | 10/746 | 1.3 |
| Classified as quiescent | | | | | | |
| Refer to hospital | 1/885 | 0.1 | 1/719 | 0.1 | 2/1604 | 0.1 |
| Review in 2 weeks | 44/885 | 5.0 | 32/719 | 4.5 | 76/1604 | 4.7 |
| Review in 4 weeks | 840/885 | 94.9 | 686/719 | 95.4 | 1526/1604 | 95.1 |

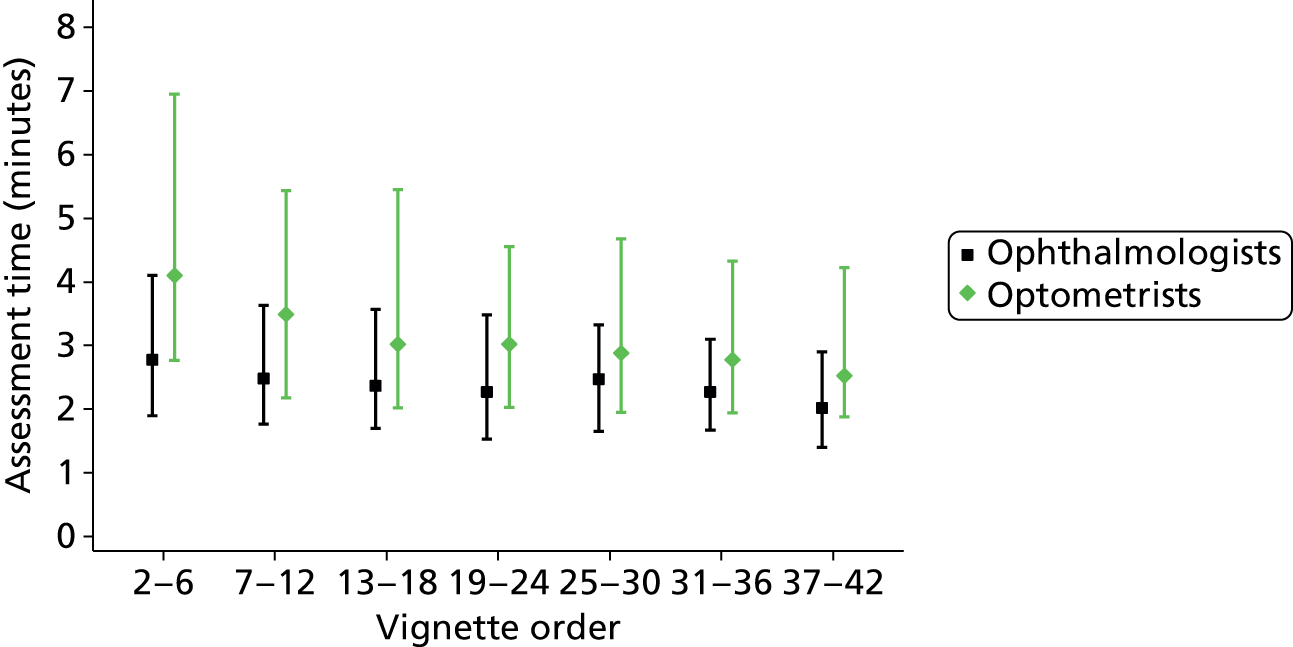

Duration of vignette assessment

The duration of each assessment was calculated for each participant as the difference between the time the assessment was saved and the time the previous assessment was saved. This information was therefore not available for all 4032 assessments, because many participants took breaks between assessments (see Chapter 2, Missing data). The median durations of vignette assessment for all main study assessments were 2 minutes 21 seconds (IQR 1 minute 39 seconds to 3 minutes 27 seconds; n = 1835) for ophthalmologists and 3 minutes 2 seconds (IQR 2 minutes 5 seconds to 4 minutes 57 seconds; n = 1758) for optometrists. Assessment time, on average, reduced as experience increased, especially in the optometrist group. For ophthalmologists and optometrists respectively, the median assessment durations were 2 minutes 44 seconds (IQR 2 minutes 3 seconds to 4 minutes 40 seconds; n = 45) and 5 minutes 23 seconds (IQR 3 minutes 18 seconds to 10 minutes 3 seconds; n = 41) for the second main study vignette, and 1 minute 55 seconds (IQR 1 minute 19 seconds to 2 minutes 49 seconds; n = 48) and 2 minutes 31 seconds (IQR 1 minute 52 seconds to 4 minutes 26 seconds; n = 48) for the 42nd (final) main study vignette. Figure 8 shows this relationship.

FIGURE 8.

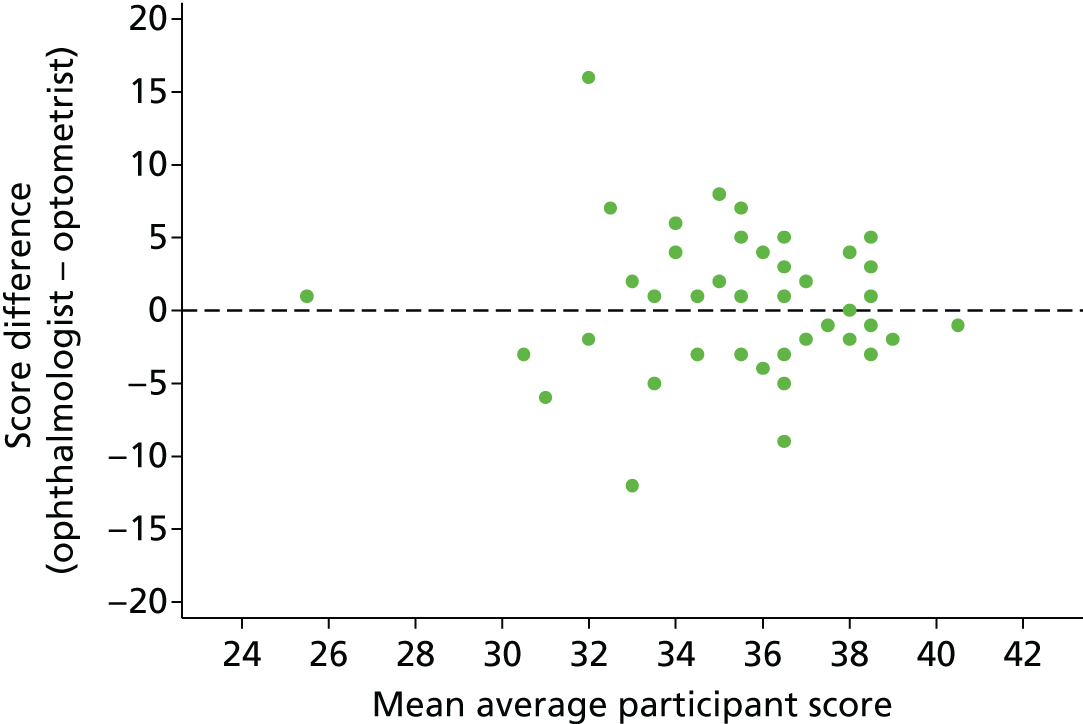

Duration of vignette assessment. Point estimates show median assessment durations and error bars show IQRs.
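The way durations were derived from successive save times, with some assessments left without a duration, can be sketched as follows. This is a minimal Python illustration, not the study’s code: the rule used to identify breaks is described in Chapter 2 and is not reproduced here, so the 30-minute cut-off (`MAX_GAP`) and the save times are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical cut-off: longer gaps are treated as breaks (the actual rule is in Chapter 2)
MAX_GAP = timedelta(minutes=30)


def assessment_durations(save_times, max_gap=MAX_GAP):
    """Return one duration in seconds per assessment, or None where it is unavailable."""
    durations = [None]  # the first assessment has no previous save time
    for previous, current in zip(save_times, save_times[1:]):
        gap = current - previous
        durations.append(gap.total_seconds() if gap <= max_gap else None)
    return durations


# Made-up save times for a single participant, including one long break
saves = [
    datetime(2014, 1, 1, 10, 0, 0),
    datetime(2014, 1, 1, 10, 2, 21),
    datetime(2014, 1, 1, 10, 5, 23),
    datetime(2014, 1, 1, 11, 30, 0),
    datetime(2014, 1, 1, 11, 32, 0),
]

durations = assessment_durations(saves)
available = [d for d in durations if d is not None]
median_seconds = median(available)
print(durations)
print(f"Median of {len(available)} available durations: {median_seconds:.0f} seconds")
```

In this made-up example, the first assessment and the assessment following the break have no duration, which mirrors why fewer than 2016 durations per group were available for the summary statistics.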