Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number 11/21/02. The contractual start date was in December 2012. The draft report began editorial review in November 2015 and was accepted for publication in July 2016. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

SriniVas Sadda received grants and personal fees from Optos and Carl Zeiss Meditec during the study.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2016. This work was produced by Tufail et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Introduction

Background

Diabetes mellitus, particularly type 2 diabetes mellitus, is a major public health problem both in the UK1 and globally. 2 Diabetic retinopathy is a common complication of diabetes mellitus and is one of the major causes of vision loss in the working-age population. 3 However, in the UK, diabetic retinopathy is no longer the leading cause of vision loss in this age group. 3 This has been attributed to the effectiveness of national screening programmes, including that of the NHS diabetic eye screening programme (DESP),4 in identifying those in need of treatment, particularly early treatment, which is highly effective in preventing visual loss. 3

The Airlie House Symposium on the Treatment of Diabetic Retinopathy established the basis for the current photographic method of quantifying the presence and severity of diabetic retinopathy. 5 The characteristic lesions of diabetic retinopathy are presently estimated to affect nearly half of those diagnosed with diabetes mellitus at any given time. 6–9 Advances in the medical management of diabetes mellitus have substantially increased patient survival and life expectancy. However, longer survival places people with diabetes mellitus at increased risk of developing diabetes mellitus-related microvascular complications, the most common of which is diabetic retinopathy. 7,8

The current treatment recommendations for diabetic retinopathy are highly effective in preventing visual loss. 10–12 Early detection and accurate evaluation of diabetic retinopathy severity, co-ordinated medical care and prompt appropriate treatment represent an effective approach for diabetic eye care.

A total of 2.04 million people were offered diabetic eye screening in 2014/15 in England, among whom the rate of uptake was 83%. 13 This represents a major challenge for the NHS, as current retinal screening programmes are labour intensive (requiring one or more trained human graders), and future numbers will escalate given that both prevalence and incidence of diabetes mellitus are increasing markedly. 14

The number of individuals with diabetes mellitus is projected to reach 552 million globally by 2030, so technical enhancements addressing image acquisition, automated image analysis algorithms and predictive biomarkers are needed if current diabetic retinopathy screening programmes are to manage this burden successfully. Automated retinal image analysis systems (ARIASs) have been developed. Although such an approach has been implemented in Scotland, little independent evaluation of commercially available or Conformité Européenne (CE)-marked software has been undertaken for the NHS DESP using its image acquisition protocol.

Automated diabetic retinopathy screening

There is increasing interest in systems for the automated detection of diabetic retinopathy. In Scotland, one automated system reported a very high detection rate (100% for proliferative retinopathy and over 97% for maculopathy) in a large, unselected population attending two regional screening programmes. 15 The system differentiates those who have sight-threatening diabetic retinopathy or other retinal abnormalities from those at low risk of progression to sight-threatening retinopathy. However, other systems have since become commercially available and, although the diagnostic accuracy of these computer detection systems has been reported to be comparable with that of experts,16–22 the independent validity of these systems and their applicability to ‘real-life’ screening remain unclear. The external validity of such studies to date is limited because, commonly, images are not available for comparison, non-validated reading protocols were used and detection programs were developed on images and populations highly similar to those on which they were tested, including in the distribution of retinopathy severity, camera type, field of view and number of images per eye. The Scottish programme uses one field per eye, whereas two fields per eye are used in the NHS DESP. The limited external validity of studies to date may be in part because the majority of studies so far have been designed, run and analysed by individuals linked to the development of the software being tested. Independent expert groups without links to the commercial development of the software are uncommon and the alternative approach of using an independent, internationally recognised fundus photography reading centre is prohibitively expensive. Moreover, these image analysis systems are not currently authorised for use in the NHS DESP.

Recent work has also shown that optical coherence tomography (OCT) imaging is a useful adjunct to colour fundus photography in screening for referable diabetic maculopathy and has the potential to significantly reduce over-referral of cases of macular oedema compared with human assessment of colour fundus images. 23 Some hospital eye services are already incorporating OCT assessment within their DESP. 24

Development and process of automated retinal image analysis systems

The introduction of high-resolution digital retinal imaging systems in the 1990s, combined with growth in computing power, permitted the development of computer algorithms capable of computer-aided detection and diagnosis. Computer-aided detection is the identification of pathological lesions. Computer-aided diagnosis provides a classification that incorporates additional lesion or clinical information to stratify risk or estimate the probability of disease. These recent advances have allowed ARIASs to have potential clinical utility.

Broadly, the approach to ARIASs can be categorised into two components: (1) image quality assessment and (2) image analysis.

Image quality assessment

The NHS DESP currently specifies two-field colour digital photography as the only acceptable method of systematic screening for diabetic eye disease in the NHS and defines specific camera systems and minimum resolutions. 25 Retinal digital photography has now progressed to a stage at which colour retinal photographs can be obtained using low levels of illumination through an undilated pupil. 26 However, human factors such as movement and positioning, and ocular factors such as a cataract and reflections from retinal tissues, can produce artefacts. Without pupillary dilatation, artefacts severe enough to impede human grading are observed in 3–30% of retinal images. 27 Thus, the importance of good image quality prior to automated image analysis has been recognised and much ancillary research has been conducted in the field of image pre-processing. 28

Image analysis

The main challenge encountered with processing of colour digital images is the presence of numerous ‘distractors’ within the retinal image (retinal capillaries, underlying choroidal vessels and reflection artefacts), all of which may be confused with diabetic retinopathy lesions. As a result, much research has been focused on the selective identification of diabetic retinopathy features, including microaneurysms, haemorrhages, hard or soft exudates, cotton wool spots and venous beading. These clinical features have been described in great detail in two landmark clinical trials, the Diabetic Retinopathy Study and the Early Treatment Diabetic Retinopathy Study. 10,29

The main approaches to image analysis of ARIASs can be found in a recent review by Sim et al. 30

Diagnostic accuracy of automated retinal image analysis systems reported to date

Commercial systems that were available at the initiation of this study and invited to participate in the study are described below. ARIASs not available at initiation of the study or that did not meet entry criteria, and that are therefore not evaluated, are summarised in a recent review. 30

Commercial systems that were available within 6 months of the start of the study include iGradingM (version 1.1; originally Medalytix Group Ltd, Manchester, UK, but purchased by Digital Healthcare, Cambridge, UK, at the initiation of the study, purchased in turn by EMIS UK, Leeds, UK, after conclusion of the study), IDx-DR (IDx, LLC, Iowa City, IA, USA), Retmarker (version 0.8.2, Retmarker Ltd, Coimbra, Portugal) and EyeArt (Eyenuk Inc., Woodland Hills, CA, USA). A comparison of the published sensitivity and specificity of each ARIAS, as well as a brief description of the published studies supporting each, is given in a paper by Sim et al. 30 Direct head-to-head comparisons between systems have so far proven difficult, mainly because of the different photographic protocols, algorithms and patient populations used for validation. A common aim among these automated systems is to identify referable retinopathy, defined as diabetic retinopathy that requires the attention of an ophthalmologist. To date, we have not progressed to a stage at which human intervention can be fully removed from screening programmes. All of the systems described below are semi-automated at some point in the workflow pathway and require the assistance of a human reader/grader.

iGradingM

The iGradingM ARIAS has a class I CE mark and performs ‘disease/no disease’ grading for diabetic retinopathy. 19 It was developed at the University of Aberdeen, uses previously published algorithms to assess both image quality and disease and has been described in detail elsewhere. 19 An automated classifier previously trained on a set of 35 images containing 198 individually annotated microaneurysms or dot haemorrhages was used in its development. It was first deployed on a large-scale population in the Scottish diabetic retinopathy screening programme in 2010, after being validated using several large screening populations in Scotland,15,18–21 the largest being 78,601 single-field 45° colour fundus images from 33,535 consecutive patients. 15 In this retrospective study, 6.6% of the cohort had referable retinopathy and iGradingM attained a sensitivity of 97.3% for referable retinopathy. The specificity was not reported in the paper. 15 iGradingM has been further validated on 8271 screening episodes from a south London population in the UK. 31 Here, there was a higher percentage (7.1%) of referable disease, and sensitivity of 97.4–99.1% and specificity of 98.3–99.3% were attained depending on the configuration used.

IDx-DR

The IDx-DR has CE approval as a class IIa medical device for sale in the European Economic Area. 32 It utilises the Iowa Detection Program, which consists of previously published algorithms, including features such as image quality assessment, detection of microaneurysms, haemorrhage, cotton wool spots and a fusion algorithm that combines these analyses to produce the diabetic retinopathy index. 33–35 This index represents a dimensionless number from 0 to 1 and represents the likelihood that the image contains referable disease.

The IDx-DR has been validated in several large screening populations. 16,36 Most recently, the IDx-DR was validated on 1748 eyes with single-field 45° colour fundus images acquired in French primary care clinics. 36 In this study, the proportion of referable diabetic retinopathy was high, at 21.7%, and sensitivity and specificity were reported as 96.8% and 59.4%, respectively.

Retmarker

Retmarker was developed at Coimbra University, Portugal, and has been used in screening programmes in Portugal since 2011. It was launched commercially in 2011 by ‘Critical Health’, which has since been renamed Retmarker Ltd, and attained CE approval as a class IIa medical device in April 2010. Retmarker includes an image quality assessment algorithm which has been validated on publicly available data sets,37 and a co-registration algorithm, which allows comparisons of the same location in the retina to be made between visits. 38

One study showed that Retmarker can detect referable retinopathy with a sensitivity of 95.8% and a specificity of 63.2%. 39 Retmarker can also perform predictive longitudinal analysis using microaneurysm turnover. Two independent prospective longitudinal studies using the Retmarker on single-field images have demonstrated the relationship of increased microaneurysm turnover rates identified by the Retmarker program and an increased rate for developing clinically significant macular oedema. 40,41

EyeArt

EyeArt is a class IIa CE-marked ARIAS for sale in the European Economic Area. The screening system is engineered to work on a cloud cluster (Amazon Elastic Cloud; Amazon EC2, Amazon, Seattle, WA, USA).

EyeArt has been validated on diabetic data sets and a recent abstract showed that EyeArt produced a refer/no refer screening recommendation for each patient in the Messidor 2 data set, a large, real-world public data set. 42 This data set comprises images from 874 patients (1748 eyes). EyeArt screening sensitivity was 93.8% [95% confidence interval (CI) 91.0% to 96.6%] and specificity was 72.2% (95% CI 68.6% to 75.8%).
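As an illustration of how sensitivity and specificity estimates of this kind, with 95% CIs, can be derived from a two-by-two table, a minimal Python sketch follows. The counts are hypothetical, and the normal (Wald) approximation is an assumption made for illustration; the cited abstract's interval method is not stated here.

```python
import math


def proportion_with_ci(successes, total, z=1.96):
    """Return (estimate, lower, upper) for a proportion.

    Uses the normal (Wald) approximation; this is an assumed method,
    chosen for simplicity, not one confirmed by the cited studies.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def screening_performance(tp, fn, tn, fp):
    """Sensitivity and specificity, each with an approximate 95% CI."""
    return {
        "sensitivity": proportion_with_ci(tp, tp + fn),
        "specificity": proportion_with_ci(tn, tn + fp),
    }


# Hypothetical counts, for illustration only.
perf = screening_performance(tp=90, fn=10, tn=160, fp=40)
for name, (est, low, high) in perf.items():
    print(f"{name}: {est:.1%} (95% CI {low:.1%} to {high:.1%})")
```

For small samples or proportions close to 0% or 100%, an exact or Wilson interval would usually be preferred to the Wald approximation.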

Human grader performance and current screening

The effectiveness of different screening modalities has been widely investigated. UK studies show sensitivity levels for the detection of sight-threatening diabetic retinopathy of 48–82% for optometrists and 65% for ophthalmologists. Sensitivities for the detection of referable retinopathy by optometrists have been found in the range of 77–100%, with specificities between 94% and 100%. 43 Photographic methods currently use digital images with subsequent grading by trained individuals. Sensitivities for the detection of sight-threatening diabetic retinopathy have been found to range from 87% to 100% for a variety of trained personnel reading mydriatic 45° retinal photographs, with specificities of 83–96%. Compared with examination by an ophthalmologist, two 45° field photographs taken with pupil dilatation have been reported to have a sensitivity of 95% in identifying diabetic patients with sight-threatening retinopathy (specificity 99%) and 83% sensitivity for detecting any retinopathy (specificity 88%). 44 The British Diabetic Association (Diabetes UK) established standard values for diabetic retinopathy screening programmes of at least 80% sensitivity and 95% specificity45 based on recommendations from a first National Workshop on Mobile Retinal Screening summarised in a paper by Taylor et al. 46
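The comparison of reported performance with the 80% sensitivity and 95% specificity standard can be expressed as a simple check. The sketch below is illustrative only; the function name is hypothetical and the two worked inputs are the figures quoted above for two 45° field photographs with pupil dilatation. The standard relates to sight-threatening retinopathy, so the second call (any retinopathy) is shown purely as arithmetic.

```python
# Standards quoted in the text: at least 80% sensitivity and 95% specificity.
MIN_SENSITIVITY = 0.80
MIN_SPECIFICITY = 0.95


def meets_screening_standard(sensitivity, specificity):
    """Return True if both proportions (0 to 1) reach the quoted minimums."""
    return sensitivity >= MIN_SENSITIVITY and specificity >= MIN_SPECIFICITY


# Sight-threatening retinopathy: sensitivity 95%, specificity 99%.
sight_threatening = meets_screening_standard(0.95, 0.99)

# Any retinopathy: sensitivity 83%, specificity 88% (below the 95% minimum).
any_retinopathy = meets_screening_standard(0.83, 0.88)

print(sight_threatening, any_retinopathy)
```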

The standard method of grading digital colour photographs in the UK uses trained human graders who meet specific quality standards, with multiple possible levels of grading and quality control checks. One key challenge with all studies that measure diagnostic accuracy is the determination of an appropriate gold standard. It is debatable whether or not a gold standard exists in the detection of diabetic retinopathy, but various methods are thought to have better properties than others. The two methods that might possibly be considered as gold standard are seven-field stereoscopic photography and biomicroscopy carried out by a skilled ophthalmologist through dilated pupils. However, these methods have been compared in a number of studies47–50 and do not show perfect agreement. 51 Hence, it is clear that one or both allow fairly frequent errors in detecting retinopathy. It is not possible to decide objectively which is in error, but Kinyoun et al. 47 did subject disagreements to an expert review, which tended to favour the seven-field photography, with two errors, over the indirect ophthalmoscopy, with 12 errors. Moss et al. 49 also examined the disagreement closely and concluded that many involved detection of microaneurysms from photographs that were not detected by ophthalmoscopy. No matter which method was correct, this might suggest that disagreements tend to happen in milder disease states. The direct comparisons of indirect ophthalmoscopy (used by an ophthalmologist) with seven-field photography also raise questions about the standards of 80% sensitivity and 95% specificity45 seen as desirable by the British Diabetic Association (Diabetes UK) from The National Workshop on Mobile Retinal Screening in 1994 summarised in a paper by Taylor et al. 46 As these standards were not invariably met in comparisons of these two ‘gold standard’ methods, they may represent an unrealistic target for other methods.

Rationale for the study

Current retinal screening programmes are labour intensive (requiring one or more trained human graders) and with the growing burden of screening there has been increasing interest in systems that allow automated detection of diabetic retinopathy. In Scotland, one automated system is deployed in routine screening. The Scottish Screening Programme utilises one image per eye (field) taken for screening, as opposed to the two-field images used in the NHS DESP. A number of commercially available CE-marked systems can potentially differentiate those who have sight-threatening diabetic retinopathy or other retinal abnormalities from those at low risk of progression to sight-threatening retinopathy. The diagnostic accuracy of these computer detection systems has been reported to be comparable with that of experts; however, the independent validity of these systems and their clinical applicability to ‘real-life’ screening remain unclear. Studies to date have tended to have a low rate of severe levels of diabetic retinopathy in the test population. This means that most studies are inadequately powered to show efficacy of the automated software in identification of severe diabetic retinopathy. For example, if proliferative diabetic retinopathy is rare or absent in the test population, a detection program unable to detect proliferative diabetic retinopathy may seem to perform well. However, in a different population with a higher number of people with proliferative disease or with a different ethnicity or age profile, it may miss patients who require immediate treatment. Moreover, these image analysis systems are not currently authorised for use in the NHS DESP.
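The point about rare proliferative disease can be made concrete with a small worked example. The Python sketch below uses entirely hypothetical counts: a program that detects no proliferative (R3) cases still shows a high overall sensitivity when R3 makes up only 1% of referable cases.

```python
def sensitivity(detected, total):
    """Proportion of true cases that were detected."""
    if total <= 0:
        raise ValueError("total must be positive")
    return detected / total


# Hypothetical test population of 1000 referable cases (illustration only).
cases = {"M1_or_R2": 990, "R3": 10}
# Hypothetical program output: it misses every proliferative (R3) case.
detected = {"M1_or_R2": 960, "R3": 0}

overall = sensitivity(sum(detected.values()), sum(cases.values()))
by_grade = {grade: sensitivity(detected[grade], cases[grade]) for grade in cases}

print(f"overall sensitivity: {overall:.1%}")
for grade, value in by_grade.items():
    print(f"{grade}: {value:.1%}")
```

Reporting sensitivity by grade, rather than only overall, exposes the failure that the pooled figure conceals.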

There is a need for an independent evaluation of the clinical effectiveness and cost-effectiveness of available ARIASs with CE marking, to inform potential deployment in the NHS DESP. This study addresses this important clinical need in a relevant setting of the NHS DESP. It is based on a large population with sufficient numbers of patients with severe levels of diabetic retinopathy in the test population using the multi-image per eye photographic standard and a spectrum of different ethnicities, age groups and sexes, and a range of cameras typically approved for NHS use. The cost-effectiveness of the intervention specifically for incorporation in different parts of the screening pathway will also be addressed.

Research aim

This report presents the research methods, analysis plan and findings of work commissioned by the National Institute for Health Research (NIHR) Health Technology Assessment (HTA) programme (project number 11/21/02) to evaluate three commercially available automated grading systems against the NHS DESP manual grading in a large population of patients diagnosed with diabetes mellitus attending a real-life diabetic retinopathy screening programme. The health economics of automated versus manual grading provides a key decision-making tool to determine whether or not automated screening should take place, and how it should take place, for diabetic retinopathy in the future.

Research objectives

Primary objectives

This is a measurement comparison study to quantify the screening performance and diagnostic accuracy of commercially available automated grading systems compared with the NHS DESP manual grading. Screening performance was re-evaluated after a subset of images underwent arbitration by an approved Reading Centre to resolve discrepancies between the manual grading and the automated system. Health economics examined the cost-effectiveness of (1) replacing level 1 manual graders with automated grading and (2) using the automated software prior to manual grading.

Secondary objectives

Alternative reference standards were considered based on a consensus between the ARIASs and the NHS DESP manual grades if performance of ARIASs was sufficiently high for the detection of any retinopathy. Exploratory analyses examined the influence of patients’ ethnicity (Asian, black African Caribbean and white European), age, sex and camera on screening performance of ARIASs. Issues related to the implementation of ARIASs in the hospital eye screening service at the Homerton University Hospital Foundation Trust were evaluated prospectively.

Chapter 2 Methods

Study design, population and setting

The study design was an observational retrospective study based on data from consecutive diabetes mellitus patients aged ≥ 12 years who attended the annual Diabetes Eye Screening programme of the Homerton University Hospital Foundation Trust in London between 1 June 2012 and 4 November 2013, which adhered to the NHS DESP guidelines. 52,53 The aim was to obtain around 20,000 unique screening episodes that had been graded manually.

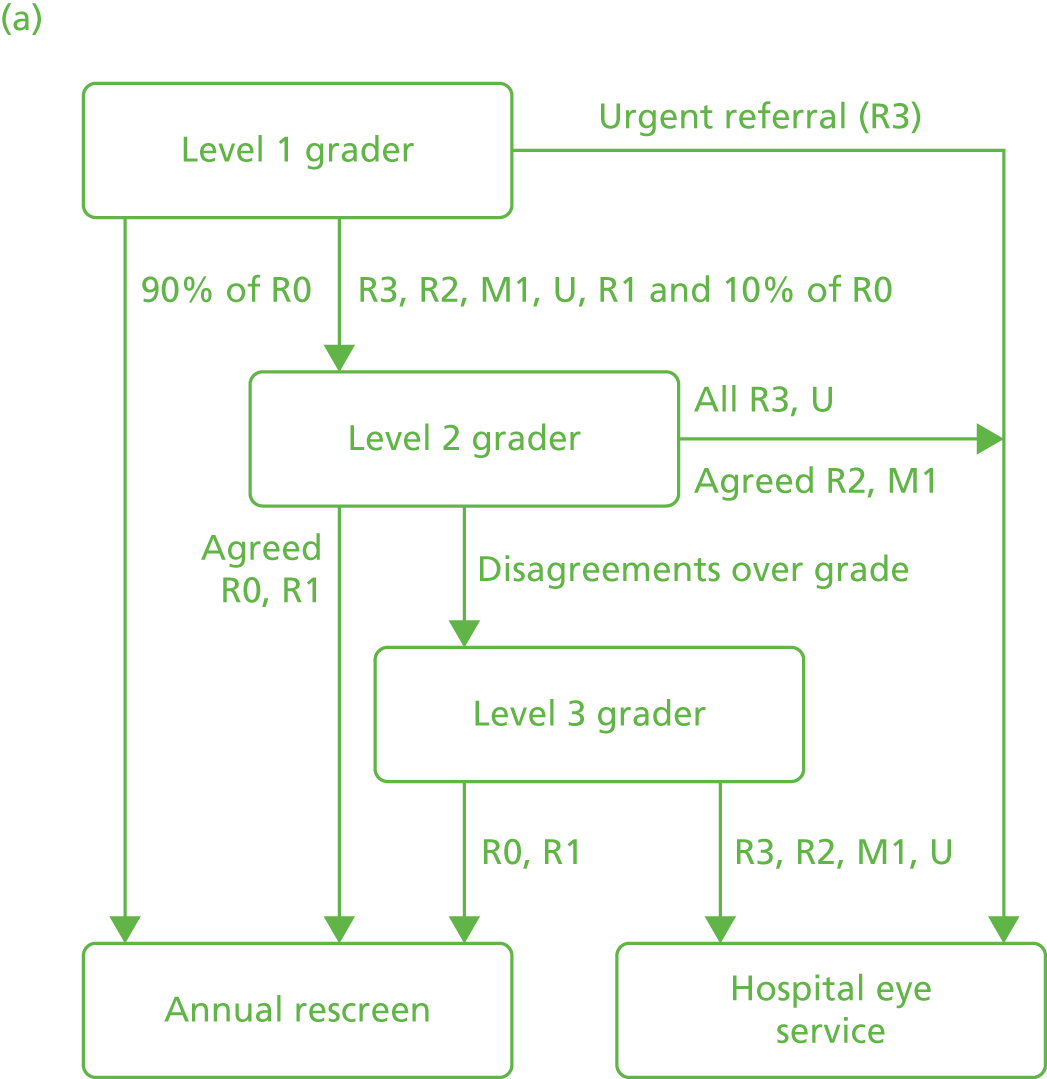

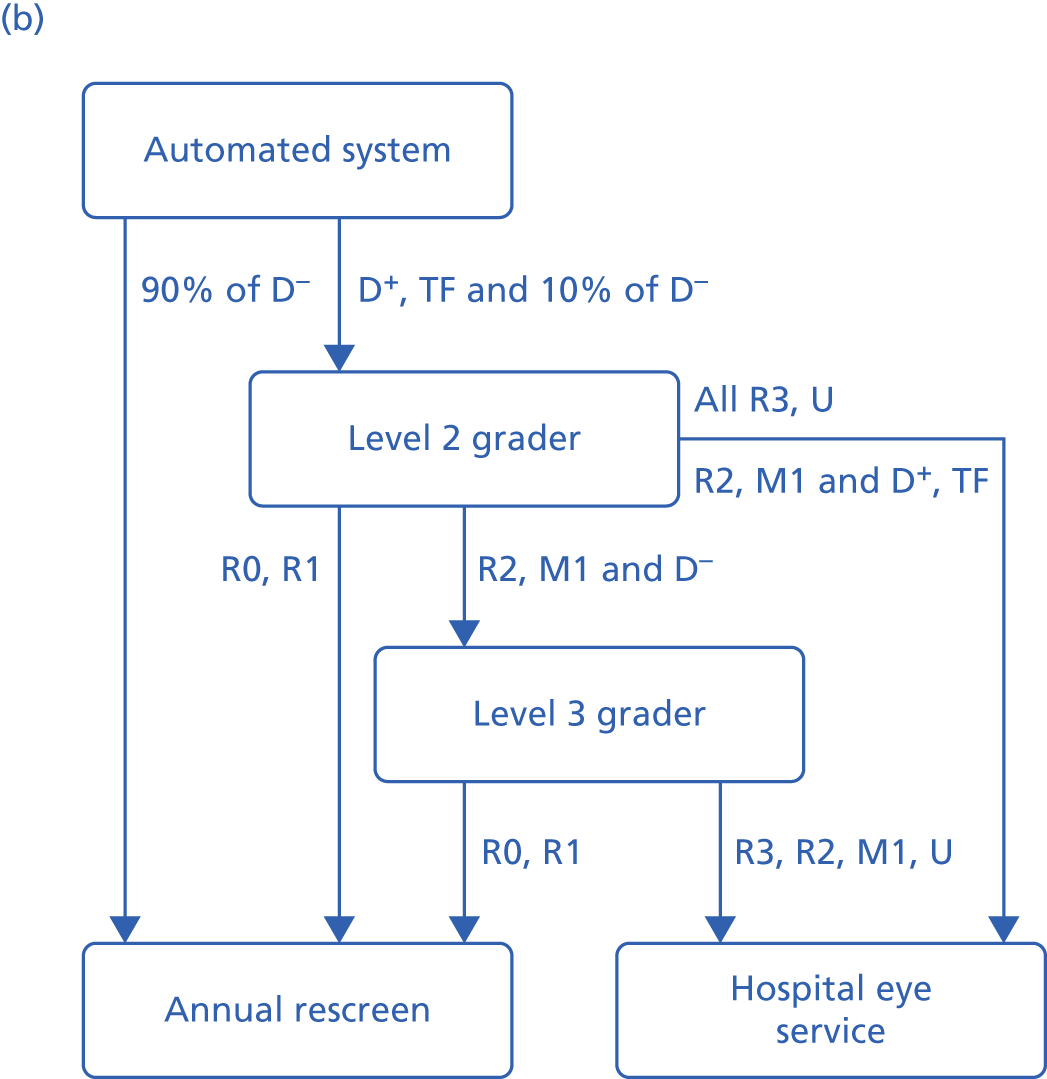

Image acquisition and manual grading

The screening protocols used in the NHS DESP at the time of this study have been published and an updated version of that used is available online. 54 In brief, the protocol requires retinal photography imaging through a dilated pupil to capture four images per patient; for each eye, one image centred on the optic disc and one image centred on the macula. In routine screening practice, additional images are often taken and stored on the screening software to ensure that enough images of sufficient quality for retinal grading are obtained and to document other pathology (e.g. a cataract). Up to three human graders who meet the NHS DESP quality assurance (QA) standards assess the images to determine a disease severity grade and produce a ‘final grade’ for each eye according to the highest level of severity observed. The grading classifications for the ‘eye for which action is most urgently required’ in order of increasing severity are no retinopathy (R0), background retinopathy (R1), no maculopathy (M0), ungradable (U), (classification) maculopathy (M1), pre-proliferative retinopathy (R2) and proliferative retinopathy (R3). 52,53 Level 2 grading of images is carried out by more senior graders. Disagreements between level 1 and level 2 graders for episodes that are potentially M1 or R2 are sent to a third grader for arbitration, whose assessment is final. Patients with grades U, M1, R2 and R3 are referred to hospital eye service ophthalmologists, whereas patients with grades R0 and R1 are invited for rescreening in 12 months.
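The referral rule described above can be sketched in Python as follows. This is a minimal illustration, not the screening software's logic: the function name is hypothetical, and treating M0 as non-referable alongside R0 and R1 is an assumption, as the text states outcomes only for U, M1, R2, R3, R0 and R1.

```python
# Grades that lead to hospital eye service referral, as stated in the text.
REFER_GRADES = {"U", "M1", "R2", "R3"}
# Grades that lead to rescreening in 12 months; M0 is included by assumption.
RESCREEN_GRADES = {"R0", "R1", "M0"}


def screening_outcome(final_grades):
    """Map the final grades for a patient to a screening outcome.

    final_grades: iterable of grade labels, e.g. ["R1", "M0"].
    """
    grades = set(final_grades)
    if not grades:
        raise ValueError("at least one grade is required")
    unknown = grades - REFER_GRADES - RESCREEN_GRADES
    if unknown:
        raise ValueError(f"unrecognised grades: {sorted(unknown)}")
    if grades & REFER_GRADES:
        return "refer to hospital eye service"
    return "rescreen in 12 months"


print(screening_outcome(["R1", "M0"]))
print(screening_outcome(["R1", "M1"]))
```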

In the screening service for much of the period during which images were retrieved, patients whose photographs were ungradable at their previous screening episodes, for example because of a known cataract that degraded the quality of retinal photography, were ‘technically failed’ and underwent slit-lamp biomicroscopy by optometrists in a clinic adjacent to the photographic screening clinic. There were 1243 such patients, and they have been omitted from the data set (as they have no gradable images). This reduced the percentage of ungradable images in the set analysed. All manual grades were stored and accessed using an electronic data storage system (Digital Healthcare OptoMize). Patients with no perception of light were excluded as per the standard national guidance.

Reader experience

The community diabetic retinopathy screening programme that was studied had a stable grading team of 18 full-time and part-time optometrists and non-optometrist graders performing well against the national standards and with City and Guilds accreditation relevant to their designation within the grading programme. Performance against national standards is reviewed and reported quarterly at programme board meetings. In addition, the programme was externally quality assured by the national team in 2008, and the 2012 programme performance for the grading team was equivalent to the national average on the six external QA tests reported for that year at the programme board. There was a good level of intergrader agreement within the programme and for arbitration of images and agreement at primary and secondary grader level. All non-optometrist graders had assessed between 1000 and 2000 image sets in the 1-year period (2012–13) and the optometrist graders had assessed > 500 image sets. Hence, the study team is confident that this programme is representative of a diabetic retinal screening service that is performing to the standard expected within the English NHS.

Image management

Images in the main study were extracted using a custom-written extraction tool: Data Export Module for OptoMize version 3 (Digital Healthcare, Cambridge, UK). This software generates a data file containing a number of variables (see Appendix 1) including a patient pseudoanonymised identification (ID) code generated by the software, retinopathy grading by each grader at a patient level, visual acuity, camera type, outcome recommended by each grader and final manual grade. A unique image ID was used to link to the images exported by OptoMize Data Export Module as Joint Photographic Experts Group (JPEG) images. The patient pseudoanonymised ID was used to link the images at a patient level. A separate export was undertaken from OptoMize to extract data on patient demographic characteristics (age, sex, ethnicity and camera type) linked back to the patient using the pseudoanonymised image ID code.

A test set of 2500 patient images from the same screening programme (Homerton University Hospital Foundation Trust, London), but not including any images from the main study, was provided to all four ARIAS vendors for the same length of time to develop the importation of the image files and output the final ARIAS classification. Although the NHS DESP stipulated two images per eye at the time of the study image acquisition in 2012–13, in reality additional images were often taken by the screening photographer and additional lens shots were often taken to document the presence of lens opacity. The four ARIAS vendors were advised of these issues to allow them to develop a system to handle non-retinal images and a variable number of images per eye. The vendors were notified that the images were not annotated as to whether or not the image was a retinal image.

The image test sets were exported onto encrypted drives and the corresponding data file, which was stripped of all grading results, was placed on four identical servers to allow linkage at a patient level. Three of the vendors installed their software on the servers [Intel® Xeon® (Intel Corporation, Santa Clara, CA, USA) six core processors, 8 GB random-access memory and Windows Server 2008 R2 (Microsoft Corporation, Redmond, WA, USA)]; the fourth vendor (IDx, LLC) supplied a hardware unit to link to the server that processed the test images. Each of the vendors had remote access with administrative privileges to install, test and verify their software. After a predetermined date, remote access ceased and the vendors were not allowed to access their software installations. In addition, the larger data set for the main study described below was loaded on to each of the servers after this date and processed following the instructions received from each of the companies.

To assess implementation of ARIASs in a real-life screening clinic, the ARIASs on each individual server were connected to the Homerton University Hospital Foundation Trust network, which was connected to the Digital Healthcare system used to store diabetic screening images and associated data. Images were exported on a daily basis using the OptoMize Data Export Module. This attempt to process images in a live, busy screening programme was made in order to understand the technical issues that need to be addressed to allow introduction into the NHS.

Anonymisation of images

Digital Healthcare OptoMize was used to store and extract data from the manual grading process. Data were extracted for 20,258 patients and anonymised to exclude personal identifying data. A second unique identifier was created for patient-screening episodes (and images within an episode) so that data sent for arbitration grading could not be linked with the original anonymised patient episode code.

Automated grading systems

Automated systems for diabetic retinopathy detection with a CE mark obtained or applied for up to 6 months after July 2013 were eligible for evaluation. Three software systems were selected from a literature search and discussion with experts and all three agreed to participate in the study: iGradingM,31 Retmarker and IDx-DR. 36 For commercial reasons, IDx, LLC withdrew from the study before the analysis, and in 2013 another software system (EyeArt) asked to join the study, confirming that it would meet CE-mark eligibility criteria. Three systems (iGradingM, Retmarker and EyeArt) then processed all images from all screening episodes. Permission to extract pseudoanonymised images and process all images by the automated systems was obtained from the Caldicott Guardian. No formal ethics approval was required as all extracted images were anonymised and no change in clinical pathway occurred.

All automated systems are designed to identify cases of diabetic retinopathy of R1 or above. EyeArt is additionally designed to identify cases requiring referral to ophthalmology (diabetic retinopathy of U, M1, R2 or R3). Retmarker and EyeArt process all the images associated with a screening episode and provide a classification per episode. The iGradingM system provides an outcome classification for each image.

Outcome classification from the automated software

To compare screening performance across the automated systems with the manual reference standard, episodes were classified as (1) ‘disease present’ or ‘technical failure’ or (2) ‘disease absent’. Retmarker automatically classifies screening episodes in this way. For EyeArt, episodes were classified as (1) ‘disease’, ‘definite disease’ and ‘ungradable’ or (2) as ‘definite no disease’ and ‘no disease’. For iGradingM, episodes were classified as ‘disease present’, ‘ungradable’ or ‘disease absent’ using the following approach:

- If the outcome of at least one image in a screening episode is classified as ‘disease’, then the outcome classification for the episode will be ‘disease present’.
- If the outcome of at least one image in a screening episode is classified as ‘ungradable’ and ‘no disease’ is detected in all the other images of the screening episode, then the outcome classification for the episode will be ‘ungradable’.
- If the outcome of all images in a screening episode is ‘no disease’, then the outcome classification for the episode will be ‘disease absent’.
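The three rules above amount to a simple precedence order (disease, then ungradable, then no disease). The following is a minimal sketch of that hierarchy, not the study's own code; the function name and the lower-case label strings are assumptions made for illustration.

```python
from typing import Iterable

# Hypothetical per-image labels; the actual iGradingM output format is not given in the text.
IMAGE_LABELS = {"disease", "ungradable", "no disease"}


def classify_episode(image_outcomes: Iterable[str]) -> str:
    """Collapse per-image outcomes into one episode-level outcome."""
    outcomes = list(image_outcomes)
    if not outcomes:
        raise ValueError("an episode needs at least one image")
    unknown = set(outcomes) - IMAGE_LABELS
    if unknown:
        raise ValueError(f"unexpected image outcome(s): {sorted(unknown)}")
    # Rule 1: any image with disease makes the whole episode 'disease present'.
    if "disease" in outcomes:
        return "disease present"
    # Rule 2: no disease anywhere, but at least one ungradable image.
    if "ungradable" in outcomes:
        return "ungradable"
    # Rule 3: every image was 'no disease'.
    return "disease absent"
```

Under this ordering an episode with one ‘disease’ image is ‘disease present’ even when its other images are ungradable.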

All automated systems also report a numerical value for a decision statistic; values above a certain threshold imply that diabetic retinopathy is present. EyeArt additionally provides a classification for referable versus non-referable disease that is intended to distinguish U, M1, R2 and R3 from R0M0 and R1M0. Screening performance using this outcome classification was also assessed.

Reference standards

Final human grade

Screening performance of each automated system was assessed using the final human manual grade as the reference standard, as well as that modified by arbitration.

Final human grade modified by arbitration

An internationally recognised fundus photographic reading centre (Doheny Image Reading Centre, Los Angeles, CA, USA),55 masked to the final human grading and ARIAS grading, carried out arbitration on disagreements between the final human grade and the grades assigned by the automated systems. Arbitration was limited to 1700 patient episodes. Emphasis was placed on arbitration of all discrepancies with final manual grades R3, R2 and M1. A random sample of screening episodes in which two or more systems disagreed with a final human grade of R1 or R0 was also sent for arbitration. Table 1 summarises the process of arbitration and how the final reference grade was modified. The percentage of screening episodes falling into cases 3 and 4 (see Table 1) that were sent for arbitration was determined by the sensitivity and false-positive rate of the automated systems. The British Diabetic Association (Diabetes UK) sets standards for diabetic retinopathy screening of at least 80% sensitivity for sight-threatening retinopathy45 and any automated system that did not meet this operating standard was not considered in the process of identifying episodes for arbitration. Anonymised images were sent to the reading centre and diabetic retinopathy feature-based grading was entered into a database provided by the study team (see Appendix 2). The SABRE software application (Netsima Ltd, Port Talbot, Wales) was used to convert feature-based grading at an image level to a retinopathy grade at eye and patient level.

| Scenario | Human grade | Software classification | Arbitration | Final human grade modified by arbitration |
|---|---|---|---|---|
| 1 | M1, R2 or R3 | All software: disease present | No arbitration | Final human grade |
| 2 | M1, R2 or R3 | One or more software: disease not present | All images sent to reading centre | Grade from reading centre |
| 3 | R0 | Two or more software: disease present | For each software a proportion of all screening episodes were sent to the reading centre (random selection)<sup>a</sup> | Grade from reading centre |
| 4 | R1 | Two or more software: disease not present | For each software a proportion of all screening episodes were sent to the reading centre (random selection)<sup>a</sup> | Grade from reading centre |
| 5 | R0 | Only one software: disease present | No arbitration | Final human grade |
| 6 | R1 | Only one software: disease not present | No arbitration | Final human grade |
| 7 | R0 | All software: disease not present | No arbitration | Final human grade |
| 8 | R1 | All software: disease present | No arbitration | Final human grade |
| 9 | R0, R1, M1, R2 or R3 | More than one software: technical failure | No arbitration | Final human grade |
| 10 | R0, R1, M1, R2 or R3 | Only one software: technical failure | Proceed as in cases 1–8 considering the grades of the remaining software | As determined in cases 1–8 |
| 11 | U | – | No arbitration | Final human grade |
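The scenario logic in Table 1 can be sketched as a small lookup function. This is an illustrative reading of the table rather than the study's implementation: the function name, the outcome strings (`"present"`, `"absent"`, `"failure"`) and the handling of scenario 10 (dropping the single failed system and re-applying cases 1–8 to the rest) are assumptions.

```python
from typing import Sequence


def arbitration_scenario(human_grade: str, software: Sequence[str]) -> int:
    """Return the Table 1 scenario (1-9 or 11) that applies to one episode.

    `software` holds one outcome per automated system:
    "present", "absent" or "failure" (hypothetical labels).
    """
    if len(software) < 2:
        raise ValueError("expected outcomes from at least two systems")
    if human_grade == "U":
        return 11
    if list(software).count("failure") > 1:
        return 9
    # Scenario 10: a single technical failure is ignored and the
    # remaining systems are assessed as in scenarios 1-8.
    remaining = [s for s in software if s != "failure"]
    present = remaining.count("present")
    absent = remaining.count("absent")
    if human_grade in {"M1", "R2", "R3"}:
        return 1 if absent == 0 else 2
    if human_grade == "R0":
        if present == 0:
            return 7
        return 3 if present >= 2 else 5
    if human_grade == "R1":
        if absent == 0:
            return 8
        return 4 if absent >= 2 else 6
    raise ValueError(f"unexpected human grade: {human_grade!r}")
```

Scenario 2 episodes would all go to the reading centre, whereas scenarios 3 and 4 only define the pool from which a random sample was drawn.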

Consensus grade

If the automated systems achieved at least 80% sensitivity in identifying cases of diabetic retinopathy of R1 or above and 100% sensitivity for R3, two ‘consensus grades’ were considered:

- a majority classification based on data from the final human grade and the automated system classifications (disease absent/disease present or technical failure)
- a majority classification based on the automated system classification only (disease absent/disease present or technical failure).
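A minimal sketch of the two majority classifications is given below. Several details are assumptions, because the text does not state them: the human grade is treated as positive for any grade other than R0, and a tied vote is returned as `None` rather than resolved either way.

```python
from typing import Optional, Sequence, Tuple


def majority_positive(votes: Sequence[bool]) -> Optional[bool]:
    """True if most votes are positive, False if most are negative, None on a tie."""
    positives = sum(votes)
    negatives = len(votes) - positives
    if positives == negatives:
        return None  # tie handling is not described in the text (assumption)
    return positives > negatives


def consensus_grades(
    human_grade: str, system_positive: Sequence[bool]
) -> Tuple[Optional[bool], Optional[bool]]:
    """Return (consensus including the human grade, consensus from systems only).

    `system_positive` is True where a system reported 'disease present'
    or a technical failure, and False for 'disease absent'.
    """
    human_positive = human_grade != "R0"  # assumption: U and R1+ count as positive
    with_human = majority_positive([human_positive, *system_positive])
    systems_only = majority_positive(list(system_positive))
    return with_human, systems_only
```

With an even number of voters a tie is possible, so any real analysis would need an explicit tie-breaking rule.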

The change in screening performance for each system was compared with the consensus grade.

Statistical methods

Primary analyses

The primary analysis assessed the screening performance of the automated systems using the final manual grade (with and without reading centre arbitration) as the reference standard. The sensitivity, false-positive rate (1 − specificity) and likelihood ratios of each automated grading system were determined for (1) any retinopathy or ungradable episodes (final human grades R1, U, M1, R2 or R3 vs. R0), (2) episodes with final human grades U, M1, R2 or R3 versus final human grades R1 or R0 and (3) each grade of retinopathy separately (R0, R1, M1, R2, R3 and U) using data from all 20,258 first screening episodes. The precision of the estimated sensitivity, false-positive rate and likelihood ratios was described by 95% CIs obtained using bootstrapping. Clinical interpretation of the lower limit of the 95% CI for the detection of R3, R2 and M1 grades provides an indication of the potential number of screening episodes requiring clinical intervention that could be missed by the automated systems. The upper confidence limit for false-positive rates (and lower limit for specificity) for retinopathy grades R1 and R0 allowed the additional potential number of screening episodes requiring further investigation to be quantified. The level of uncertainty around these estimated lower and upper limits was obtained using bootstrapping.
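As an illustration of the bootstrapping step, the sketch below computes a percentile bootstrap 95% CI for a sensitivity. It is a generic example, not the resampling scheme used in the study (which is not specified here); the function name, the number of resamples and the seed are assumptions. The counts are those reported for Retmarker at grade R2 (604 of 626 episodes detected).

```python
import random
from typing import List, Tuple


def bootstrap_ci(
    outcomes: List[int], n_boot: int = 2000, alpha: float = 0.05, seed: int = 0
) -> Tuple[float, float]:
    """Percentile bootstrap CI for the mean of 0/1 outcomes (e.g. a sensitivity)."""
    rng = random.Random(seed)
    n = len(outcomes)
    # Resample episodes with replacement and recompute the proportion each time.
    stats = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_boot))
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# 1 = classified as 'disease present', 0 = missed, among R2 episodes.
r2_outcomes = [1] * 604 + [0] * 22
low, high = bootstrap_ci(r2_outcomes)
print(f"sensitivity {604 / 626:.3f}, bootstrap 95% CI {low:.3f} to {high:.3f}")
```

The same resampling loop could be extended to record the lower and upper confidence limits within each resample, which is one way to quantify uncertainty around the limits themselves.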

Secondary analyses

The consensus grade approach outlined above as an alternative reference standard was considered for the evaluation of sensitivity and false-positive rates for the automated systems. Exploratory analyses examined the influence of patient demographic factors on the performance of the automated systems. Sensitivity and false-positive rates were summarised for each automated system for the three main ethnic groups (Asian, black African-Caribbean and white European), tertiles of age, sex and camera type, using the final manual grade as modified by arbitration as the reference standard. Multivariable logistic regression models were used to statistically assess whether or not the odds of the ‘disease’ versus ‘no disease’ outcome with the automated system were modified by age, sex, ethnicity or camera type. Patients with the highest manual grade in the worst eye modified by arbitration of R1, M1, U, R2 and R3 were classified as ‘cases’ and patients with grades R0M0 were classified as non-cases. These case versus non-case definitions were chosen as the ARIASs are designed to detect retinopathy of R1 or worse. Logistic regression against age (categorised in tertiles), sex, ethnicity and camera type as explanatory variables and interaction terms for each demographic variable with the ARIAS outcome (disease vs. no disease), simultaneously adjusting for the other factors, was explored. An interaction with age was examined first, followed by sex, ethnicity and, finally, camera type. Although the study was not specifically powered to examine interactions, they were examined to identify potential hypotheses requiring further investigation. In view of the number of statistical significance tests for interaction terms, the threshold for statistical significance was set a priori at p < 0.01.

Sample size

A pilot study of 1340 patient screening episodes at St George’s Hospital NHS Trust, London (three of the applicants were part of this pilot study), revealed that the prevalence of R0, R1, M1, R2 and R3 is 68%, 24%, 6.1%, 1.2% and 0.5%, respectively. In addition, in this pilot study one software (iGradingM) was compared with manual grading as the reference standard. The sensitivities for R1, M1, R2 and R3 were 82%, 91%, 100% and 100%, respectively, and 44% of R0 were graded as ‘disease present’. The prevalence of different grades of retinopathy and the likely sensitivity of the software guided the sample size required. The sample size calculation was based on the number of screening episodes to ensure that the lower limit of the 95% CI for sensitivity of automated grading did not fall below 97% for retinopathy classified as R3 by human graders. R3 is the rarest and most serious outcome (0.5%) and governed the sample size. Under these assumptions 24,000 screening episodes would be needed. However, should the sensitivity fall to 90%, the associated binomial exact 95% CI for the detection of R3 would range from 83% to 95%. If the prevalence of R3 were instead 1%, only 20,000 episodes would be needed to achieve a similar degree of accuracy for the 95% CI. The number of unique patient screening episodes (not repeat screens) undertaken in a 12-month period at the Homerton University Hospital Foundation Trust was 20,258 and, based on pilot data, was expected to provide sufficient R3 events (a more detailed participant flow diagram is given in Chapter 3).
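The exact binomial interval mentioned above can be sketched with the standard library alone. The example below is a generic Clopper–Pearson calculation solved by bisection, assuming 120 R3 episodes (0.5% of 24,000) of which 108 (90%) are detected; the function names are illustrative and this is not the study's own sample size code.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _root(func, target: float, tol: float = 1e-10) -> float:
    """Find p in [0, 1] with func(p) = target, for func increasing in p."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if func(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_ci(k: int, n: int, alpha: float = 0.05):
    """Clopper-Pearson (exact binomial) confidence interval for k successes in n."""
    # Lower limit: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _root(lambda p: 1.0 - binom_cdf(k - 1, n, p), alpha / 2)
    # Upper limit: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _root(lambda p: 1.0 - binom_cdf(k, n, p), 1 - alpha / 2)
    return lower, upper


n_r3 = round(24_000 * 0.005)   # expected number of R3 episodes
detected = round(0.90 * n_r3)  # episodes detected at 90% sensitivity
low, high = exact_ci(detected, n_r3)
print(f"{detected}/{n_r3}: exact 95% CI {100 * low:.1f}% to {100 * high:.1f}%")
```

The printed interval can be compared with the range of 83% to 95% quoted in the text for a sensitivity of 90%.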

Table 2 outlines the prevalence of retinopathy grades reported in this screening programme and the precision with which sensitivity would be estimated at four potential levels of sensitivity between 80% and 95%. For example, if the automated system detects 85% of R3 episodes, the 95% CI around this estimate of 85% sensitivity will be from 81% to 89%.

| Final human grade | Screening episodes, n (prevalence, %) | 95% CI<sup>a</sup> (%) at 80% sensitivity | 95% CI<sup>a</sup> (%) at 85% sensitivity | 95% CI<sup>a</sup> (%) at 90% sensitivity | 95% CI<sup>a</sup> (%) at 95% sensitivity |
|---|---|---|---|---|---|
| Retinopathy grades | | | | | |
| R0 | 12,727 (63) | 79 to 81 | 84 to 86 | 89 to 91 | 94.6 to 95.4 |
| R1 | 4749 (23) | 79 to 81 | 84 to 86 | 89 to 91 | 94 to 96 |
| M1 | 1609 (7.9) | 78 to 82 | 83 to 87 | 89 to 91 | 94 to 96 |
| R2 | 637 (3.1) | 77 to 83 | 82 to 88 | 88 to 92 | 93 to 97 |
| R3 | 236 (1.2) | 75 to 85 | 81 to 89 | 86 to 94 | 92 to 97 |
| U | 300 (1.5) | 75 to 84 | 81 to 89 | 86 to 93 | 92 to 97 |
| Combination of grades | | | | | |
| R1, U, M1, R2 or R3 | 7531 (37) | 79 to 81 | 84 to 86 | 89 to 91 | 94.5 to 95.4 |
| U, M1, R2 or R3 | 2782 (13.7) | 79 to 81 | 84 to 86 | 89 to 91 | 94 to 96 |
| Total | 20,258 (100) | – | – | – | – |

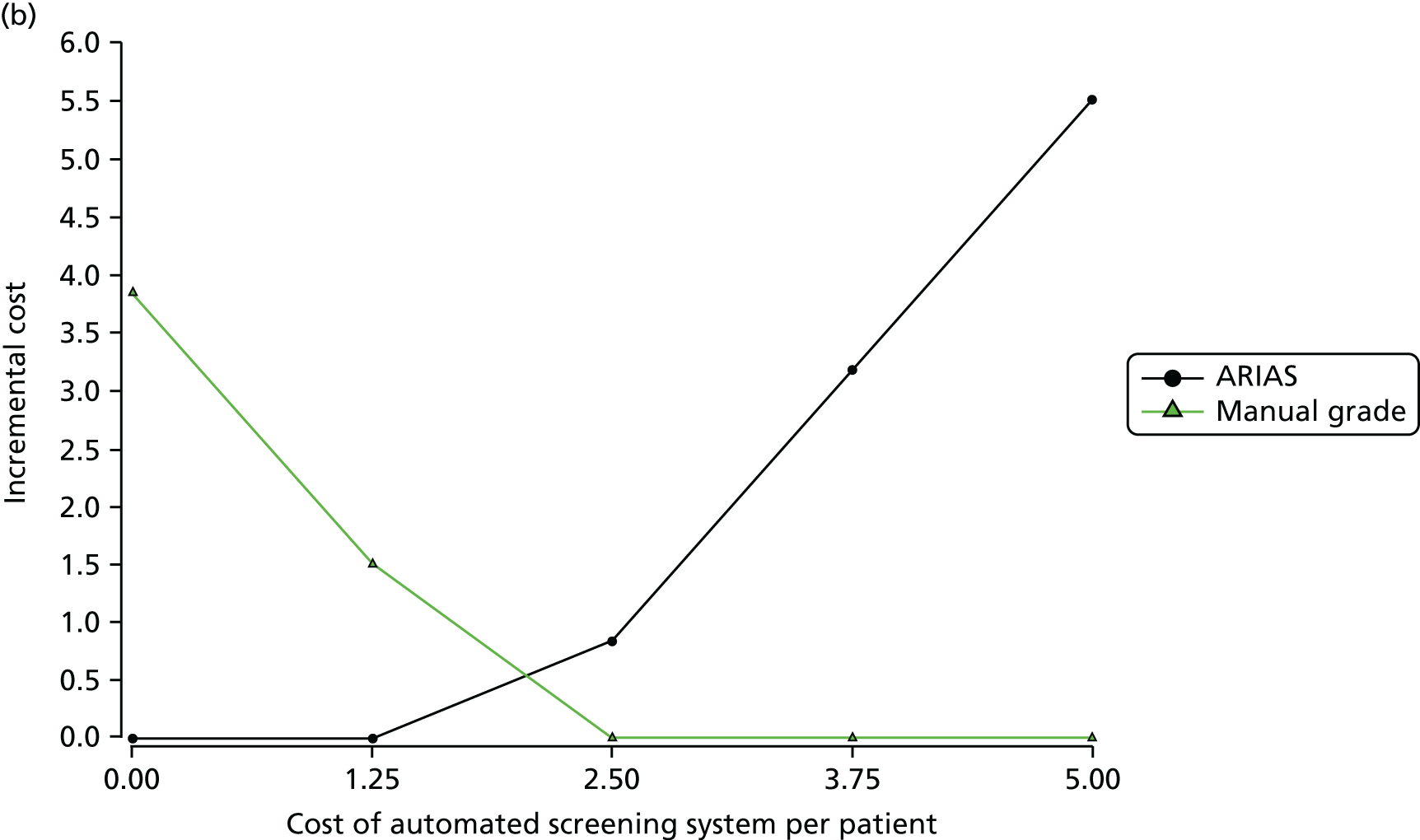

Health economic analyses

Details of the methods used in the health economic analysis, together with the outcomes, are given in Chapter 4.

In brief, a health economic analysis was carried out to investigate the economic implications involved if (1) an automated system were to replace level 1 human graders and (2) the automated system were to be used as a filter prior to level 1 grading.

Altered thresholds

The question is whether or not the automated screening of retinal images will identify a sufficiently large proportion of images as not requiring human intervention to justify (1) the cost of the software and (2) the risk of harm that necessarily follows from automation. In any such calculation there is a trade-off between sensitivity and specificity: the larger the proportion of images identified as not requiring human reading, the greater the risk that abnormality will be missed. Manufacturers of ARIASs fix the ‘operating point’ to provide a trade-off for regulatory approval and a commercial case made for the system’s effectiveness. However, the chosen operating point may not be optimal for all screening programmes. We explored the consequences of different operating points.
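The trade-off described above can be illustrated by sweeping a threshold over a decision statistic. The sketch below uses a small, entirely hypothetical set of scores and disease labels (not study data) to show how sensitivity and specificity move in opposite directions as the operating point changes; the function name is an assumption.

```python
from typing import Sequence, Tuple


def operating_point(
    scores: Sequence[float], has_disease: Sequence[bool], threshold: float
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) when scores above `threshold` are flagged."""
    flagged = [s > threshold for s in scores]
    n_pos = sum(has_disease)
    n_neg = len(has_disease) - n_pos
    true_pos = sum(f and d for f, d in zip(flagged, has_disease))
    true_neg = sum((not f) and (not d) for f, d in zip(flagged, has_disease))
    return true_pos / n_pos, true_neg / n_neg


# Hypothetical decision statistics and reference labels, for illustration only.
scores = [0.10, 0.20, 0.35, 0.40, 0.60, 0.65, 0.80, 0.90]
has_disease = [False, False, False, True, False, True, True, True]

for threshold in (0.3, 0.5, 0.7):
    sens, spec = operating_point(scores, has_disease, threshold)
    print(f"threshold {threshold:.1f}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Raising the threshold removes more episodes from human reading (higher specificity) at the cost of missed disease (lower sensitivity), which is the choice each screening programme would need to weigh.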

Chapter 3 Results

Data extraction from the Homerton diabetic eye screening programme

Box 1 shows the number of screening episodes extracted from the Homerton DESP for screening visits between 1 June 2012 and 4 November 2013, together with the degree of data completeness for manual grades. Data from 28,079 screening episodes were obtained (142,018 images) and 20,258 patients were entered into the study. Data from first screening episodes (20,258 patients and 102,856 images) were included in the analysis. The data available for each episode included a unique anonymised patient ID code, episode screening date, retinal image filenames associated with each screening episode, camera type used and manual grades for retinopathy, maculopathy and associated assessment of image quality for each eye from each grader that processed the images. The final manual grade of each episode was obtained by combining information on the quality of the retinal image with retinopathy and maculopathy grades for each eye from each human grader using the highest manual grade, in accordance with the NHS DESP guidelines. 52,53 Individuals with one eye were identifiable in the data set and image quality and manual grades from one eye only were included.

A total of 2298 episodes were excluded as there were no data on manual grades owing to a data extraction error in Digital Healthcare OptoMize.

A total of 28,231 screening episodes with data on manual grading.

A total of 152 episodes excluded:

- 147 episodes with incomplete grading pathways as the screening process was incomplete
- five episodes in which the patient’s vision was perception of light.

Number of patients with at least one episode: 20,258 (102,856 images).

Number of patients with repeat episodes: 7821 (39,162 images).

Data on age, sex and ethnicity were available for 20,212 patients (Table 3). The median age of patients was 60 years (range 10–98 years), with 0.5% aged < 18 years and 37% aged > 65 years; 41% of patients were white European, 35% were Asian and 20% were black African-Caribbean. White European patients were more likely than Asian or black African-Caribbean patients to have retinopathy graded R0M0, despite being, on average, 7 years older than Asian and black African-Caribbean patients. Conversely, white European patients were less likely to be graded U, R1M1, R2 or R3 (11.2%) than Asian patients (15.0%) or black African-Caribbean patients (16.0%). Black African-Caribbean patients were marginally more likely than white European and Asian patients to have ungradable images (2.0% vs. 1.3% and 1.3%, respectively).

| Characteristic | White European | Asian<sup>a</sup> | Black<sup>b</sup> | Mixed<sup>c</sup> | Other<sup>d</sup> | Total |
|---|---|---|---|---|---|---|
| Number of patients, n (%) | 8358 (41.4) | 7018 (34.7) | 3957 (19.6) | 136 (0.7) | 743 (3.7) | 20,212 (100) |
| Median age (years) (IQR) | 64.2 (53.9–74.0) | 57.5 (48.7–66.4) | 56.8 (48.6–69.4) | 52.9 (44.7–63.8) | 58.5 (50.0–67.2) | 60.0 (50.4–70.4) |
| Percentage male | 56 | 55 | 49 | 55 | 55 | 54 |
| Manual grade (worst eye), prevalence n (%) | | | | | | |
| R0M0 | 5418 (64.8) | 4320 (61.6) | 2451 (61.9) | 81 (59.6) | 437 (58.8) | 12,707 |
| R1M0 | 2002 (24.0) | 1648 (23.5) | 873 (22.1) | 25 (18.4) | 190 (25.6) | 4738 |
| U | 105 (1.3) | 90 (1.3) | 79 (2.0) | 3 (2.2) | 16 (2.2) | 293 |
| R1M1 | 504 (6.0) | 621 (8.8) | 394 (10.0) | 17 (12.5) | 70 (9.4) | 1606 |
| R2 | 250 (3.0) | 247 (3.5) | 107 (2.7) | 7 (5.1) | 25 (3.4) | 636 |
| R2M0 | 94 (1.1) | 73 (1.0) | 30 (0.8) | 3 (2.2) | 9 (1.2) | 209 |
| R2M1 | 156 (1.9) | 174 (2.5) | 77 (1.9) | 4 (2.9) | 16 (2.2) | 427 |
| R3 | 79 (0.9) | 92 (1.3) | 53 (1.3) | 3 (2.2) | 5 (0.7) | 232 |
| R3M0 | 30 (0.4) | 25 (0.4) | 16 (0.4) | 1 (0.7) | 1 (0.1) | 73 |
| R3M1 | 49 (0.6) | 67 (1.0) | 37 (0.9) | 2 (1.5) | 4 (0.5) | 159 |
| Combination of grades | | | | | | |
| R0M0, R1M0 | 7420 (88.8) | 5968 (85.0) | 3324 (84.0) | 106 (77.9) | 627 (84.4) | 17,445 |
| U, R1M1, R2, R3 | 938 (11.2) | 1050 (15.0) | 633 (16.0) | 30 (22.1) | 116 (15.6) | 2767 |
| R1M0, U, R1M1, R2, R3 | 2940 (35.2) | 2698 (38.4) | 1506 (38.1) | 55 (40.4) | 306 (41.2) | 7505 |
| Total | 8358 (100) | 7018 (100.0) | 3957 (100.0) | 136 (100.0) | 743 (100.0) | 20,212 |

Table 31 in Appendix 3 summarises the screening pathways for all first screening episodes for 20,258 patients; 10,788 episodes (53%) were graded R0M0 by a level 1 grader and did not go on to the level 2 grader. The second largest group comprised 4534 episodes (22%) that were coded as R1M0 by the level 1 grader and were then passed to the level 2 grader, who confirmed the R1M0 grade. Overall, the level 1 grader classified 295 episodes as U (≈1%); this is a relatively low level because patients known to be photographically ungradable were technically failed before image capture and underwent slit-lamp biomicroscopy in the clinic. The Homerton DESP graded M1 as either maculopathy with exudates within 1 disc diameter of the centre of the fovea (M1a) or as maculopathy with exudates within the macula (M1b). M1a was graded when visual acuity was 6/6 or better, or 6/9 in the past with no deterioration when the new problem emerged and there were no new symptoms, and 6-month recall was needed. M1b was graded when the level of exudation was more than modest or if the visual acuity was 6/9 or worse, and referral to the hospital eye services was needed.

Screening performance of EyeArt software

Table 4 gives the sensitivity (detection rate) and false-positive rates for the EyeArt software by the highest (worst eye) manual retinopathy grade per episode as modified by the Reading Centre arbitration as the reference standard. The specificity for episodes graded R0M0 (i.e. no retinopathy) was 20%, equivalent to a false-positive rate of 80% (see Table 4). For episodes graded manually as R3 the sensitivity was 100%. For any retinopathy (manual grades of R1M0 or higher) the sensitivity was 95% and for grades R1M1 or higher (including ungradable images) the sensitivity was 94%. Table 5 presents the diagnostic accuracy for the point estimates in Table 4 and likelihood ratios. For example, the 95% CI for the estimated sensitivity for R3 was 97.0% to 99.9% and the bootstrapped 95% CI around the lower limit was 85.5% to 97.4% and around the upper limit was 99.5% to 99.9%. The likelihood ratios for the software show that patients with manual grades of R1M1, R2 and R3 were all approximately 1.2 times more likely to be classified as ‘disease present’ than patients with manual grades R0M0. Patients graded U, R1M1, R2, R3 were 1.12 times more likely than patients with manual grades R0M0 and R1M0 to be classified as ‘disease present’. The comparable measures of screening performance, diagnostic accuracy and likelihood ratios as obtained prior to arbitration of manual grades by the Reading Centre are presented in Tables 32 and 33 in Appendix 3. Measures of screening performance are very similar when comparing results before and after arbitration of manual grades.

| Manual grade (worst eye) | EyeArt outcome: no disease, n (%<sup>a</sup>) | EyeArt outcome: disease, n (%<sup>a</sup>) | Total, n (%<sup>b</sup>) |
|---|---|---|---|
| Retinopathy grades | | | |
| R0M0 | 2542 (20) | 10,254 (80) | 12,796 (63) |
| R1M0 | 217 (5) | 4401 (95) | 4618 (23) |
| U | 98 (23) | 329 (77) | 427 (2) |
| R1M1 | 73 (5) | 1485 (95) | 1558 (8) |
| R2 | 4 (1) | 622 (99) | 626 (3) |
| R2M0 | 3 (2) | 190 (98) | 193 (1) |
| R2M1 | 1 (0) | 432 (100) | 433 (2) |
| R3 | 1 (0) | 232 (100) | 233 (1) |
| R3M0 | 0 (0) | 71 (100) | 71 (0) |
| R3M1 | 1 (1) | 161 (99) | 162 (1) |
| Combination of grades | | | |
| R0M0, R1M0 | 2759 (16) | 14,655 (84) | 17,414 (86) |
| U, R1M1, R2, R3 | 176 (6) | 2668 (94) | 2844 (14) |
| R1M0, U, R1M1, R2, R3 | 393 (5) | 7069 (95) | 7462 (37) |
| Total | 2935 (100) | 17,323 (100) | 20,258 (100) |

| Manual grade (worst eye) | Proportion classified as disease present or technical failure: estimate (95% CI) | Lower limit<sup>a</sup> (95% CI) | Upper limit<sup>b</sup> (95% CI) | Likelihood ratio vs. R0 (95% CI) |
|---|---|---|---|---|
| Retinopathy grades | | | | |
| R0M0<sup>c</sup> | 0.199 (0.192 to 0.206) | 0.192 (0.185 to 0.198) | 0.206 (0.199 to 0.212) | – |
| R1M0 | 0.953 (0.947 to 0.959) | 0.947 (0.941 to 0.953) | 0.959 (0.953 to 0.964) | 1.189 (1.179 to 1.201) |
| U | 0.770 (0.728 to 0.808) | 0.728 (0.691 to 0.773) | 0.808 (0.773 to 0.847) | 0.961 (0.915 to 1.016) |
| R1M1 | 0.953 (0.941 to 0.963) | 0.941 (0.930 to 0.951) | 0.963 (0.954 to 0.971) | 1.189 (1.173 to 1.204) |
| R2 | 0.994 (0.983 to 0.998) | 0.983 (0.972 to 0.989) | 0.998 (0.992 to 1.000) | 1.240 (1.227 to 1.251) |
| R2M0 | 0.984 (0.953 to 0.995) | 0.953 (0.850 to 0.967) | 0.995 (0.859 to 0.999) | 1.229 (1.000 to 1.247) |
| R2M1 | 0.998 (0.984 to 1.000) | 0.984 (0.979 to 0.986) | 1.000 (0.998 to 1.000) | 1.245 (1.000 to 1.255) |
| R3 | 0.996 (0.970 to 0.999) | 0.970 (0.855 to 0.974) | 0.999 (0.995 to 0.999) | 1.243 (1.000 to 1.256) |
| R3M0 | 1.000 | – | – | 1.248 (1.237 to 1.258) |
| R3M1 | 0.994 (0.958 to 0.999) | 0.958 (0.851 to 0.963) | 0.999 (0.862 to 0.999) | 1.240 (1.000 to 1.254) |
| Combination of grades | | | | |
| R0M0, R1M0<sup>c</sup> | 0.158 (0.153 to 0.164) | 0.153 (0.148 to 0.159) | 0.164 (0.159 to 0.170) | – |
| U, R1M1, R2, R3 | 0.938 (0.929 to 0.946) | 0.929 (0.920 to 0.941) | 0.946 (0.939 to 0.957) | 1.115 (1.102 to 1.128)<sup>d</sup> |
| R1M0, U, R1M1, R2, R3 | 0.947 (0.942 to 0.952) | 0.942 (0.937 to 0.949) | 0.952 (0.948 to 0.958) | 1.182 (1.172 to 1.194) |
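The likelihood ratios in Table 5 appear to be the ratio of the proportion classified as ‘disease’ in a given grade to the proportion classified as ‘disease’ in the comparison group. The short sketch below applies that reading to the EyeArt counts in Table 4; the function name is illustrative, and the interpretation of the ratio is an assumption based on the tabulated values.

```python
def likelihood_ratio(
    pos_cases: int, n_cases: int, pos_noncases: int, n_noncases: int
) -> float:
    """Sensitivity in the case group divided by the false-positive rate in non-cases."""
    sensitivity = pos_cases / n_cases
    false_positive_rate = pos_noncases / n_noncases
    return sensitivity / false_positive_rate


# EyeArt counts from Table 4 ('disease' outcome / total episodes in the grade).
lr_r3 = likelihood_ratio(232, 233, 10_254, 12_796)       # R3 vs. R0M0
lr_refer = likelihood_ratio(2668, 2844, 14_655, 17_414)  # U, R1M1, R2, R3 vs. R0M0, R1M0
print(f"R3 vs. R0M0: {lr_r3:.3f}")
print(f"U, R1M1, R2, R3 vs. R0M0, R1M0: {lr_refer:.3f}")
```

Because the false-positive rate for R0M0 is about 80%, even near-perfect sensitivity cannot produce a likelihood ratio much above 1.25, which is why the ratios for EyeArt are all close to 1.2.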

EyeArt provides an alternative classification that attempts to identify cases requiring referral to ophthalmology (grades U, M1, R2 and R3); Tables 6 and 7 present the findings using this alternative output from EyeArt. This showed a marked effect for patients graded R0M0. In Table 4, 20% were classified as ‘no disease’, compared with 41% classified as ‘no refer’. The impact on the other retinopathy grades was less marked, with a reduction in sensitivity for R1M1 from 95% to 91%, and smaller changes in sensitivity for R2 and R3. Table 7 shows that the precision of detection was not affected but the likelihood ratios were marginally increased. Very similar results were observed prior to arbitration (see Appendix 3, Tables 34 and 35).

| Manual grade (worst eye) | EyeArt outcome: no refer, n (%<sup>a</sup>) | EyeArt outcome: refer, n (%<sup>a</sup>) | Total, n (%<sup>b</sup>) |
|---|---|---|---|
| Retinopathy grades | | | |
| R0M0<sup>c</sup> | 5212 (41) | 7584 (59) | 12,796 (63) |
| R1M0 | 837 (18) | 3781 (82) | 4618 (23) |
| U | 145 (34) | 282 (66) | 427 (2) |
| R1M1 | 137 (9) | 1421 (91) | 1558 (8) |
| R2 | 7 (1) | 619 (99) | 626 (3) |
| R2M0 | 5 (3) | 188 (97) | 193 (1) |
| R2M1 | 2 (0) | 431 (100) | 433 (2) |
| R3 | 2 (1) | 231 (99) | 233 (1) |
| R3M0 | 1 (1) | 70 (99) | 71 (0) |
| R3M1 | 1 (1) | 161 (99) | 162 (1) |
| Combination of grades | | | |
| R0M0, R1M0<sup>c</sup> | 6049 (35) | 11,365 (65) | 17,414 (86) |
| U, R1M1, R2, R3 | 291 (10) | 2553 (90) | 2844 (14) |
| Total | 6340 (100) | 13,918 (100) | 20,258 (100) |

| Manual grade (worst eye) | Proportion classified as refer or technical failure: estimate (95% CI) | Lower limit<sup>a</sup> (95% CI) | Upper limit<sup>b</sup> (95% CI) | Likelihood ratio vs. R0 + R1M0 (95% CI) |
|---|---|---|---|---|
| Retinopathy grades | | | | |
| R0M0<sup>c</sup> | 0.407 (0.399 to 0.416) | 0.399 (0.390 to 0.406) | 0.416 (0.407 to 0.423) | – |
| R1M0 | 0.819 (0.807 to 0.830) | 0.807 (0.797 to 0.819) | 0.830 (0.820 to 0.841) | – |
| U | 0.660 (0.614 to 0.704) | 0.614 (0.572 to 0.675) | 0.704 (0.664 to 0.762) | 1.012 (0.950 to 1.102) |
| R1M1 | 0.912 (0.897 to 0.925) | 0.897 (0.885 to 0.911) | 0.925 (0.914 to 0.937) | 1.398 (1.375 to 1.421) |
| R2 | 0.989 (0.977 to 0.995) | 0.977 (0.965 to 0.986) | 0.995 (0.988 to 0.999) | 1.515 (1.493 to 1.534) |
| R2M0 | 0.974 (0.939 to 0.989) | 0.939 (0.901 to 0.961) | 0.989 (0.969 to 0.998) | 1.493 (1.447 to 1.522) |
| R2M1 | 0.995 (0.982 to 0.999) | 0.982 (0.675 to 0.985) | 0.999 (0.688 to 1.000) | 1.525 (1.000 to 1.544) |
| R3 | 0.991 (0.966 to 0.998) | 0.966 (0.677 to 0.973) | 0.998 (0.690 to 0.999) | 1.519 (1.000 to 1.542) |
| R3M0 | 0.986 (0.907 to 0.998) | 0.907 (0.868 to 0.928) | 0.998 (0.992 to 0.999) | 1.511 (1.485 to 1.536) |
| R3M1 | 0.994 (0.958 to 0.999) | 0.958 (0.681 to 0.963) | 0.999 (0.694 to 0.999) | 1.523 (1.000 to 1.542) |
| Combination of grades | | | | |
| R0M0, R1M0<sup>c</sup> | 0.347 (0.340 to 0.354) | 0.340 (0.332 to 0.346) | 0.354 (0.346 to 0.360) | – |
| U, R1M1, R2, R3 | 0.898 (0.886 to 0.908) | 0.886 (0.876 to 0.899) | 0.908 (0.899 to 0.920) | 1.375 (1.354 to 1.396)<sup>d</sup> |

Screening performance of Retmarker software

Tables 8 and 9 provide the corresponding results for Retmarker software. The specificity for episodes graded manually as R0M0 was higher for Retmarker than for EyeArt (53% vs. 20%). However, the detection of R2 or R3 retinopathies was slightly lower than that for EyeArt, with sensitivities of 96.5% versus 99.4% for R2 and 97.9% versus 99.6% for R3. Table 9 gives the measures of diagnostic accuracy around estimates of screening performance in Table 8. For example, for R2 the estimated sensitivity was 96.5% with a 95% CI of 94.7% to 97.7%. The bootstrapped 95% CI on the lower bound of this confidence limit ranged from 92.5% to 96.6% for Retmarker compared with 97.2% to 98.9% for EyeArt (see Table 5). The precision on the lower bound of the 95% confidence limit for sensitivity for R3 ranged from 92.1% to 96.8% for Retmarker and from 85.5% to 97.4% for EyeArt (see Table 5). Focusing on the lower bound for the detection of R3 suggests that the sensitivity was potentially better for Retmarker than for EyeArt, whereas for R2 EyeArt had higher sensitivity. However, when examining combined manual grades of U, R1M1, R2 and R3, the 95% CI on the lower bound for sensitivity ranged from 81.9% to 84.9% for Retmarker and from 92.0% to 94.1% for EyeArt (see Table 5). For these combined grades EyeArt had a higher sensitivity than Retmarker when examining the diagnostic accuracy around the lower bound of the 95% CI. The likelihood ratios for Retmarker were generally higher than those for EyeArt, but this appeared to be driven by the higher specificity of Retmarker for grades R0M0 rather than improved sensitivity for manual grades of R1M1 and above. Tables 36 and 37 in Appendix 3 give the corresponding results for Retmarker prior to arbitration, which are remarkably similar to Tables 8 and 9.

| Manual grade (worst eye) | Retmarker outcome: no disease, n (%<sup>a</sup>) | Retmarker outcome: disease, n (%<sup>a</sup>) | Total, n (%<sup>b</sup>) |
|---|---|---|---|
| Retinopathy grades | | | |
| R0M0 | 6730 (53) | 6066 (47) | 12,796 (63) |
| R1M0 | 1585 (34) | 3033 (66) | 4618 (23) |
| U | 194 (45) | 233 (55) | 427 (2) |
| R1M1 | 207 (13) | 1351 (87) | 1558 (8) |
| R2 | 22 (4) | 604 (96) | 626 (3) |
| R2M0 | 5 (3) | 188 (97) | 193 (1) |
| R2M1 | 17 (4) | 416 (96) | 433 (2) |
| R3 | 5 (2) | 228 (98) | 233 (1) |
| R3M0 | 1 (1) | 70 (99) | 71 (0) |
| R3M1 | 4 (2) | 158 (98) | 162 (1) |
| Combination of grades | | | |
| R0M0, R1M0 | 8315 (48) | 9099 (52) | 17,414 (86) |
| U, R1M1, R2, R3 | 428 (15) | 2416 (85) | 2844 (14) |
| R1M0, U, R1M1, R2, R3 | 2013 (27) | 5449 (73) | 7462 (37) |
| Total | 8743 (100) | 11,515 (100) | 20,258 (100) |

| Manual grade (worst eye) | Proportion classified as disease present or technical failure: estimate (95% CI) | Lower limit<sup>a</sup> (95% CI) | Upper limit<sup>b</sup> (95% CI) | Likelihood ratio vs. R0 (95% CI) |
|---|---|---|---|---|
| Retinopathy grades | | | | |
| R0M0<sup>c</sup> | 0.526 (0.517 to 0.535) | 0.517 (0.510 to 0.527) | 0.535 (0.527 to 0.544) | – |
| R1M0 | 0.657 (0.643 to 0.670) | 0.643 (0.632 to 0.657) | 0.670 (0.659 to 0.684) | 1.385 (1.353 to 1.431) |
| U | 0.546 (0.498 to 0.592) | 0.498 (0.457 to 0.554) | 0.592 (0.552 to 0.647) | 1.151 (1.069 to 1.277) |
| R1M1 | 0.867 (0.849 to 0.883) | 0.849 (0.832 to 0.863) | 0.883 (0.868 to 0.896) | 1.829 (1.789 to 1.876) |
| R2 | 0.965 (0.947 to 0.977) | 0.947 (0.925 to 0.966) | 0.977 (0.962 to 0.988) | 2.035 (1.993 to 2.088) |
| R2M0 | 0.974 (0.939 to 0.989) | 0.939 (0.898 to 0.964) | 0.989 (0.967 to 0.999) | 2.055 (1.988 to 2.118) |
| R2M1 | 0.961 (0.938 to 0.975) | 0.938 (0.913 to 0.961) | 0.975 (0.959 to 0.989) | 2.027 (1.976 to 2.080) |
| R3 | 0.979 (0.949 to 0.991) | 0.949 (0.921 to 0.968) | 0.991 (0.978 to 0.998) | 2.064 (2.015 to 2.109) |
| R3M0 | 0.986 (0.907 to 0.998) | 0.907 (0.882 to 0.929) | 0.998 (0.993 to 0.999) | 2.080 (2.037 to 2.125) |
| R3M1 | 0.975 (0.936 to 0.991) | 0.936 (0.559 to 0.957) | 0.991 (0.573 to 0.999) | 2.057 (1.990 to 2.106) |
| Combination of grades | | | | |
| R0M0, R1M0<sup>c</sup> | 0.477 (0.470 to 0.485) | 0.470 (0.464 to 0.478) | 0.485 (0.478 to 0.493) | – |
| U, R1M1, R2, R3 | 0.850 (0.836 to 0.862) | 0.836 (0.819 to 0.849) | 0.862 (0.846 to 0.874) | 1.626 (1.593 to 1.659)<sup>d</sup> |
| R1M0, U, R1M1, R2, R3 | 0.730 (0.720 to 0.740) | 0.720 (0.709 to 0.731) | 0.740 (0.728 to 0.750) | 1.540 (1.507 to 1.578) |

Screening performance of iGradingM software

Table 10 shows results for iGradingM using the outcome classification described in Chapter 2 prior to arbitration by the Reading Centre. None of the screening episodes was classified as ‘no disease’. This raised concerns about the iGradingM software processing both disc- and macular-centred images. We examined this directly on the subset of screening episodes that underwent arbitration and we were able to identify that 99% of disc-centred images were classified as ‘ungradable’ by iGradingM compared with 36% of macular-centred images. Note that Table 10 is based on the hierarchy for grading outcome at episode level for iGradingM as defined a priori in Chapter 2. 56 If at least one image is classified as ‘disease’ the outcome at a patient level will be ‘disease’ even if the disc-centred images are ungradable. However, if at least one image is ‘ungradable’ and all others are ‘no disease’ then ‘ungradable’ is the worst outcome at a patient level.

| Manual grade (worst eye) | iGradingM outcome: no disease, n (%<sup>a</sup>) | iGradingM outcome: disease, n (%<sup>a</sup>) | iGradingM outcome: ungradable, n (%<sup>a</sup>) | Total, n (%<sup>b</sup>) |
|---|---|---|---|---|
| Retinopathy grades | | | | |
| R0M0 | 0 (0) | 4871 (38) | 7856 (62) | 12,727 (63) |
| R1M0 | 0 (0) | 2913 (61) | 1836 (39) | 4749 (23) |
| U | 0 (0) | 118 (39) | 182 (61) | 300 (1) |
| R1M1 | 0 (0) | 1136 (71) | 473 (29) | 1609 (8) |
| R2 | 0 (0) | 504 (79) | 133 (21) | 637 (3) |
| R2M0 | 0 (0) | 169 (80) | 41 (20) | 210 (1) |
| R2M1 | 0 (0) | 335 (78) | 92 (22) | 427 (2) |
| R3 | 0 (0) | 181 (77) | 55 (23) | 236 (1) |
| R3M0 | 0 (0) | 61 (81) | 14 (19) | 75 (0) |
| R3M1 | 0 (0) | 120 (75) | 41 (25) | 161 (1) |
| Combination of grades | | | | |
| R0M0, R1M0 | 0 (0) | 7784 (45) | 9692 (55) | 17,476 (86) |
| U, R1M1, R2, R3 | 0 (0) | 1939 (70) | 843 (30) | 2782 (14) |
| R1M0, U, R1M1, R2, R3 | 0 (0) | 4852 (64) | 2679 (36) | 7531 (37) |
| Total | 0 (100) | 9723 (100) | 10,535 (100) | 20,258 (100) |

Arbitration on subset of screening episodes

Table 11 shows the agreement between the manual grade from the Homerton DESP and the Doheny Image Reading Centre grade for 1700 screening episodes (8373 images) that underwent arbitration. Some disagreement exists for each grade, but for combinations of grades the agreement was reasonable. For example, the manual grade from the Homerton DESP was R0M0 (n = 862) or R1M0 (n = 362) in 1224 episodes. The Doheny Image Reading Centre confirmed a grade of R0M0 or R1M0 in 81% [(734 + 22 + 149 + 91)/1224] and an additional 9.9%, although graded by the Homerton DESP, were classified as U [(89 + 32)/1224] and 8.7% were graded R1M1, R2M0, R2M1, R3M0 or R3M1 by the Doheny Image Reading Centre. Episodes with manual grades R1M1, R2M0, R2M1, R3M0 or R3M1 from the Homerton DESP (413 + 32 + 18 + 10 + 3 = 476 episodes) were assigned a grade of at least R1M1 by the Reading Centre in 59% of episodes (279/476 episodes) and an additional 8.4% were classified as U.

| Manual grade from Homerton DESP | Arbitration grade from Doheny Image Reading Centre, n | Total, n | |||||||

|---|---|---|---|---|---|---|---|---|---|

| R0M0 | R1M0 | U | R1M1 | R2M0 | R2M1 | R3M0 | R3M1 | ||

| R0M0 | 734 | 22 | 89 | 15 | 1 | 0 | 1 | 0 | 862 |

| R1M0 | 149 | 91 | 32 | 85 | 3 | 0 | 0 | 2 | 362 |

| R1M1 | 82 | 60 | 29 | 222 | 0 | 16 | 1 | 3 | 413 |

| R2M0 | 7 | 7 | 3 | 9 | 1 | 4 | 0 | 1 | 32 |

| R2M1 | 0 | 0 | 4 | 9 | 2 | 2 | 1 | 0 | 18 |

| R3M0 | 0 | 1 | 2 | 2 | 0 | 2 | 3 | 0 | 10 |

| R3M1 | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 1 | 3 |

| Total | 972 | 181 | 161 | 342 | 7 | 24 | 6 | 7 | 1700 |
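The grouped agreement figures quoted for Table 11 can be recomputed directly from the cell counts. The following is a minimal sketch rather than part of the study's analysis; the names `TABLE_11`, `count_episodes` and `summarise` are illustrative assumptions, and 'confirmed' here means that the arbitration grade fell in the same group of grades as the manual grade.

```python
# Table 11 counts. Rows: Homerton DESP manual grade; columns: Doheny arbitration grade.
ARBITRATION_GRADES = ["R0M0", "R1M0", "U", "R1M1", "R2M0", "R2M1", "R3M0", "R3M1"]
TABLE_11 = {
    "R0M0": [734, 22, 89, 15, 1, 0, 1, 0],
    "R1M0": [149, 91, 32, 85, 3, 0, 0, 2],
    "R1M1": [82, 60, 29, 222, 0, 16, 1, 3],
    "R2M0": [7, 7, 3, 9, 1, 4, 0, 1],
    "R2M1": [0, 0, 4, 9, 2, 2, 1, 0],
    "R3M0": [0, 1, 2, 2, 0, 2, 3, 0],
    "R3M1": [0, 0, 2, 0, 0, 0, 0, 1],
}

LOW_GRADES = ["R0M0", "R1M0"]
HIGH_GRADES = ["R1M1", "R2M0", "R2M1", "R3M0", "R3M1"]


def count_episodes(manual_grades, arbitration_grades):
    """Sum the cells for the given manual (row) and arbitration (column) grades."""
    columns = [ARBITRATION_GRADES.index(g) for g in arbitration_grades]
    return sum(TABLE_11[m][c] for m in manual_grades for c in columns)


def summarise(manual_grades, label):
    """Print how arbitration redistributed one group of manual grades."""
    total = count_episodes(manual_grades, ARBITRATION_GRADES)
    confirmed = count_episodes(manual_grades, manual_grades)
    ungradable = count_episodes(manual_grades, ["U"])
    print(f"{label}: confirmed {confirmed}/{total} ({confirmed / total:.1%}), "
          f"U {ungradable}/{total} ({ungradable / total:.1%})")
    return confirmed, ungradable, total


low = summarise(LOW_GRADES, "Manual R0M0 or R1M0")
high = summarise(HIGH_GRADES, "Manual R1M1 or worse")
```

Grouping the grades before comparing them is what makes the agreement look reasonable: a disagreement between, say, R0M0 and R1M0 does not count as a mismatch at the grouped level.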

Exploratory analyses of demographic factors on screening performance

Tables 12–17 summarise the screening performance of EyeArt and Retmarker for all manual retinopathy grades as modified by arbitration, for three ethnic groups (white European, Asian and black African-Caribbean), three age groups and by sex. There was no evidence to suggest that ethnicity (see Tables 12 and 13) or sex (see Tables 16 and 17) influenced the sensitivity of EyeArt or Retmarker. However, for both ARIASs, false-positive rates for combined grades R0M0 + R1M0 appeared lower in white Europeans than in Asians or black African-Caribbeans. There was some evidence that detection of combined grades U, R1M1, R2 and R3 declined with increasing age for both ARIASs (see Tables 14 and 15): sensitivity fell from 96% in the youngest age group to 92% in the oldest age group for EyeArt and from 93.5% to 78.5% for Retmarker. For Retmarker (but not EyeArt), the false-positive rate was lower in the older age groups, falling from 64% in the youngest age group to 51% in the oldest age group.
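The age-group sensitivities and false-positive rates quoted above can be reproduced from the combined-grade rows of the age-group tables. The sketch below is illustrative only: it assumes that sensitivity is the percentage of episodes graded U, R1M1, R2 or R3 that the software classified as 'disease', and that the false-positive rate is the percentage of episodes graded R0M0 or R1M0 classified as 'disease'. The names `COUNTS`, `percent_flagged` and `rates_by_age` are hypothetical.

```python
AGE_GROUPS = ["< 50 years", "50 to < 65 years", "65 to 98 years"]

# (no disease, disease) counts per age group, taken from the combined-grade rows.
COUNTS = {
    "EyeArt": {
        "U, R1M1, R2, R3": [(25, 632), (68, 1134), (82, 889)],
        "R0M0, R1M0": [(710, 3522), (1030, 5605), (1011, 5504)],
    },
    "Retmarker": {
        "U, R1M1, R2, R3": [(43, 614), (175, 1027), (209, 762)],
        "R0M0, R1M0": [(1508, 2724), (3602, 3033), (3191, 3324)],
    },
}


def percent_flagged(no_disease, disease):
    """Percentage of episodes that the software classified as 'disease'."""
    return 100 * disease / (no_disease + disease)


def rates_by_age(system):
    """Return [(sensitivity %, false-positive %), ...], one pair per age group."""
    sens = [percent_flagged(*c) for c in COUNTS[system]["U, R1M1, R2, R3"]]
    fpr = [percent_flagged(*c) for c in COUNTS[system]["R0M0, R1M0"]]
    return list(zip(sens, fpr))


for system in COUNTS:
    for group, (sens, fpr) in zip(AGE_GROUPS, rates_by_age(system)):
        print(f"{system:9s} {group:17s} "
              f"sensitivity {sens:5.1f}%  false-positive rate {fpr:5.1f}%")
```

Because both quantities are simple proportions of the same 'disease' column, the same helper serves for each; only the choice of manual-grade rows differs.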

| Manual grade (worst eye) | EyeArt outcome by ethnic group, n (%a) | |||||

|---|---|---|---|---|---|---|

| White European | Asian | Black African-Caribbean | ||||

| No disease | Disease | No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||||

| R0M0 | 1241 (22.7) | 4232 (77.3) | 707 (16.3) | 3632 (83.7) | 480 (19.6) | 1963 (80.4) |

| R1M0 | 96 (5.0) | 1839 (95.0) | 60 (3.7) | 1551 (96.3) | 43 (5.1) | 806 (94.9) |

| U | 40 (30.5) | 91 (69.5) | 23 (18.1) | 104 (81.9) | 27 (19.4) | 112 (80.6) |

| R1M1 | 33 (6.7) | 460 (93.3) | 28 (4.6) | 580 (95.4) | 9 (2.4) | 359 (97.6) |

| R2 | 0 (0.0) | 246 (100.0) | 1 (0.4) | 241 (99.6) | 3 (2.8) | 105 (97.2) |

| R2M0 | 0 (0.0) | 85 (100.0) | 1 (1.4) | 68 (98.6) | 2 (6.9) | 27 (93.1) |

| R2M1 | 0 (0.0) | 161 (100.0) | 0 (0.0) | 173 (100.0) | 1 (1.3) | 78 (98.7) |

| R3 | 0 (0.0) | 80 (100.0) | 0 (0.0) | 91 (100.0) | 1 (2.0) | 49 (98.0) |

| R3M0 | 0 (0.0) | 29 (100.0) | 0 (0.0) | 25 (100.0) | 0 (0.0) | 13 (100.0) |

| R3M1 | 0 (0.0) | 51 (100.0) | 0 (0.0) | 66 (100.0) | 1 (2.7) | 36 (97.3) |

| Combination of grades | ||||||

| R0M0, R1M0 | 1337 (18.0) | 6071 (82.0) | 767 (12.9) | 5183 (87.1) | 523 (15.9) | 2769 (84.1) |

| U, R1M1, R2, R3 | 73 (7.7) | 877 (92.3) | 52 (4.9) | 1016 (95.1) | 40 (6.0) | 625 (94.0) |

| R1M0, U, R1M1, R2, R3 | 169 (5.9) | 2716 (94.1) | 112 (4.2) | 2567 (95.8) | 83 (5.5) | 1431 (94.5) |

| Total | 1410 (16.9) | 6948 (83.1) | 819 (11.7) | 6199 (88.3) | 563 (14.2) | 3394 (85.8) |

| Manual grade (worst eye) | Retmarker outcome by ethnic group, n (%a) | |||||

|---|---|---|---|---|---|---|

| White European | Asian | Black African-Caribbean | ||||

| No disease | Disease | No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||||

| R0M0 | 3299 (60.3) | 2174 (39.7) | 2098 (48.4) | 2241 (51.6) | 1087 (44.5) | 1356 (55.5) |

| R1M0 | 701 (36.2) | 1234 (63.8) | 540 (33.5) | 1071 (66.5) | 267 (31.4) | 582 (68.6) |

| U | 69 (52.7) | 62 (47.3) | 50 (39.4) | 77 (60.6) | 65 (46.8) | 74 (53.2) |

| R1M1 | 77 (15.6) | 416 (84.4) | 83 (13.7) | 525 (86.3) | 36 (9.8) | 332 (90.2) |

| R2 | 7 (2.8) | 239 (97.2) | 5 (2.1) | 237 (97.9) | 10 (9.3) | 98 (90.7) |

| R2M0 | 0 (0.0) | 85 (100.0) | 1 (1.4) | 68 (98.6) | 4 (13.8) | 25 (86.2) |

| R2M1 | 7 (4.3) | 154 (95.7) | 4 (2.3) | 169 (97.7) | 6 (7.6) | 73 (92.4) |

| R3 | 2 (2.5) | 78 (97.5) | 1 (1.1) | 90 (98.9) | 2 (4.0) | 48 (96.0) |

| R3M0 | 0 (0.0) | 29 (100.0) | 1 (4.0) | 24 (96.0) | 0 (0.0) | 13 (100.0) |

| R3M1 | 2 (3.9) | 49 (96.1) | 0 (0.0) | 66 (100.0) | 2 (5.4) | 35 (94.6) |

| Combination of grades | ||||||

| R0M0, R1M0 | 4000 (54.0) | 3408 (46.0) | 2638 (44.3) | 3312 (55.7) | 1354 (41.1) | 1938 (58.9) |

| U, R1M1, R2, R3 | 155 (16.3) | 795 (83.7) | 139 (13.0) | 929 (87.0) | 113 (17.0) | 552 (83.0) |

| R1M0, U, R1M1, R2, R3 | 856 (29.7) | 2029 (70.3) | 679 (25.3) | 2000 (74.7) | 380 (25.1) | 1134 (74.9) |

| Total | 4155 (49.7) | 4203 (50.3) | 2777 (39.6) | 4241 (60.4) | 1467 (37.1) | 2490 (62.9) |

| Manual grade (worst eye) | EyeArt outcome by age group, n (%a) | |||||

|---|---|---|---|---|---|---|

| < 50 years | 50 to < 65 years | 65 to 98 years | ||||

| No disease | Disease | No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||||

| R0M0 | 637 (20.7) | 2444 (79.3) | 962 (19.4) | 3997 (80.6) | 938 (19.8) | 3798 (80.2) |

| R1M0 | 73 (6.3) | 1078 (93.7) | 68 (4.1) | 1608 (95.9) | 73 (4.1) | 1706 (95.9) |

| U | 9 (24.3) | 28 (75.7) | 26 (23.0) | 87 (77.0) | 62 (23.0) | 208 (77.0) |

| R1M1 | 16 (3.9) | 391 (96.1) | 39 (5.5) | 669 (94.5) | 18 (4.1) | 422 (95.9) |

| R2 | 0 (0.0) | 162 (100.0) | 3 (1.1) | 263 (98.9) | 1 (0.5) | 197 (99.5) |

| R2M0 | 0 (0.0) | 51 (100.0) | 2 (2.6) | 75 (97.4) | 1 (1.5) | 64 (98.5) |

| R2M1 | 0 (0.0) | 111 (100.0) | 1 (0.5) | 188 (99.5) | 0 (0.0) | 133 (100.0) |

| R3 | 0 (0.0) | 51 (100.0) | 0 (0.0) | 115 (100.0) | 1 (1.6) | 62 (98.4) |

| R3M0 | 0 (0.0) | 16 (100.0) | 0 (0.0) | 35 (100.0) | 0 (0.0) | 18 (100.0) |

| R3M1 | 0 (0.0) | 35 (100.0) | 0 (0.0) | 80 (100.0) | 1 (2.2) | 44 (97.8) |

| Combination of grades | ||||||

| R0M0, R1M0 | 710 (16.8) | 3522 (83.2) | 1030 (15.5) | 5605 (84.5) | 1011 (15.5) | 5504 (84.5) |

| U, R1M1, R2, R3 | 25 (3.8) | 632 (96.2) | 68 (5.7) | 1134 (94.3) | 82 (8.4) | 889 (91.6) |

| R1M0, U, R1M1, R2, R3 | 98 (5.4) | 1710 (94.6) | 136 (4.7) | 2742 (95.3) | 155 (5.6) | 2595 (94.4) |

| Total | 735 (15.0) | 4154 (85.0) | 1098 (14.0) | 6739 (86.0) | 1093 (14.6) | 6393 (85.4) |

| Manual grade (worst eye) | Retmarker outcome by age group, n (%a) | |||||

|---|---|---|---|---|---|---|

| < 50 years | 50 to < 65 years | 65 to 98 years | ||||

| No disease | Disease | No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||||

| R0M0 | 1231 (40.0) | 1850 (60.0) | 2924 (59.0) | 2035 (41.0) | 2567 (54.2) | 2169 (45.8) |

| R1M0 | 277 (24.1) | 874 (75.9) | 678 (40.5) | 998 (59.5) | 624 (35.1) | 1155 (64.9) |

| U | 9 (24.3) | 28 (75.7) | 51 (45.1) | 62 (54.9) | 133 (49.3) | 137 (50.7) |

| R1M1 | 33 (8.1) | 374 (91.9) | 112 (15.8) | 596 (84.2) | 62 (14.1) | 378 (85.9) |

| R2 | 1 (0.6) | 161 (99.4) | 10 (3.8) | 256 (96.2) | 11 (5.6) | 187 (94.4) |

| R2M0 | 0 (0.0) | 51 (100.0) | 3 (3.9) | 74 (96.1) | 2 (3.1) | 63 (96.9) |

| R2M1 | 1 (0.9) | 110 (99.1) | 7 (3.7) | 182 (96.3) | 9 (6.8) | 124 (93.2) |

| R3 | 0 (0.0) | 51 (100.0) | 2 (1.7) | 113 (98.3) | 3 (4.8) | 60 (95.2) |

| R3M0 | 0 (0.0) | 16 (100.0) | 1 (2.9) | 34 (97.1) | 0 (0.0) | 18 (100.0) |

| R3M1 | 0 (0.0) | 35 (100.0) | 1 (1.3) | 79 (98.8) | 3 (6.7) | 42 (93.3) |

| Combination of grades | ||||||

| R0M0, R1M0 | 1508 (35.6) | 2724 (64.4) | 3602 (54.3) | 3033 (45.7) | 3191 (49.0) | 3324 (51.0) |

| U, R1M1, R2, R3 | 43 (6.5) | 614 (93.5) | 175 (14.6) | 1027 (85.4) | 209 (21.5) | 762 (78.5) |

| R1M0, U, R1M1, R2, R3 | 320 (17.7) | 1488 (82.3) | 853 (29.6) | 2025 (70.4) | 833 (30.3) | 1917 (69.7) |

| Total | 1551 (31.7) | 3338 (68.3) | 3777 (48.2) | 4060 (51.8) | 3400 (45.4) | 4086 (54.6) |

| Manual grade (worst eye) | EyeArt outcome by sex, n (%a) | |||

|---|---|---|---|---|

| Male | Female | |||

| No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||

| R0M0 | 1305 (19.5) | 5390 (80.5) | 1232 (20.3) | 4849 (79.7) |

| R1M0 | 121 (4.6) | 2521 (95.4) | 93 (4.7) | 1871 (95.3) |

| U | 46 (22.2) | 161 (77.8) | 51 (23.9) | 162 (76.1) |

| R1M1 | 37 (4.1) | 864 (95.9) | 36 (5.5) | 618 (94.5) |

| R2 | 2 (0.5) | 378 (99.5) | 2 (0.8) | 244 (99.2) |

| R2M0 | 1 (0.8) | 117 (99.2) | 2 (2.7) | 73 (97.3) |

| R2M1 | 1 (0.4) | 261 (99.6) | 0 (0.0) | 171 (100.0) |

| R3 | 0 (0.0) | 152 (100.0) | 1 (1.3) | 76 (98.7) |

| R3M0 | 0 (0.0) | 42 (100.0) | 0 (0.0) | 27 (100.0) |

| R3M1 | 0 (0.0) | 110 (100.0) | 1 (2.0) | 49 (98.0) |

| Combination of grades | ||||

| R0M0, R1M0 | 1426 (15.3) | 7911 (84.7) | 1325 (16.5) | 6720 (83.5) |

| U, R1M1, R2, R3 | 85 (5.2) | 1555 (94.8) | 90 (7.6) | 1100 (92.4) |

| R1M0, U, R1M1, R2, R3 | 206 (4.8) | 4076 (95.2) | 183 (5.8) | 2971 (94.2) |

| Total | 1511 (13.8) | 9466 (86.2) | 1415 (15.3) | 7820 (84.7) |

| Manual grade (worst eye) | Retmarker outcome by sex, n (%a) | |||

|---|---|---|---|---|

| Male | Female | |||

| No disease | Disease | No disease | Disease | |

| Retinopathy grades | ||||

| R0M0 | 3597 (53.7) | 3098 (46.3) | 3125 (51.4) | 2956 (48.6) |

| R1M0 | 912 (34.5) | 1730 (65.5) | 667 (34.0) | 1297 (66.0) |

| U | 92 (44.4) | 115 (55.6) | 101 (47.4) | 112 (52.6) |

| R1M1 | 116 (12.9) | 785 (87.1) | 91 (13.9) | 563 (86.1) |

| R2 | 9 (2.4) | 371 (97.6) | 13 (5.3) | 233 (94.7) |

| R2M0 | 3 (2.5) | 115 (97.5) | 2 (2.7) | 73 (97.3) |

| R2M1 | 6 (2.3) | 256 (97.7) | 11 (6.4) | 160 (93.6) |

| R3 | 2 (1.3) | 150 (98.7) | 3 (3.9) | 74 (96.1) |

| R3M0 | 1 (2.4) | 41 (97.6) | 0 (0.0) | 27 (100.0) |

| R3M1 | 1 (0.9) | 109 (99.1) | 3 (6.0) | 47 (94.0) |

| Combination of grades | ||||

| R0M0, R1M0 | 4509 (48.3) | 4828 (51.7) | 3792 (47.1) | 4253 (52.9) |

| U, R1M1, R2, R3 | 219 (13.4) | 1421 (86.6) | 208 (17.5) | 982 (82.5) |

| R1M0, U, R1M1, R2, R3 | 1131 (26.4) | 3151 (73.6) | 875 (27.7) | 2279 (72.3) |

| Total | 4728 (43.1) | 6249 (56.9) | 4000 (43.3) | 5235 (56.7) |

Five different cameras were in use at the Homerton DESP: 415 episodes were photographed with the Canon 2CR (Canon, Tokyo, Japan), 7569 episodes with the Canon 2CR-DGi (Canon), 1230 episodes with the Canon EOS (Canon), 4246 episodes with the Canon EOS2 (Canon) and 3432 episodes with the TOPCONnect (Topcon, Tokyo, Japan). In 3395 episodes the camera type was not recorded, mainly because of a network interruption or ‘processor timeout’; in such situations, images were saved to a separate location for later upload and the camera type code was not retained. Screening performance by camera type is summarised in Tables 38 and 39 in Appendix 3. Of all the cameras, both ARIASs appeared to perform least well with the Canon EOS2.

Multiple variable logistic regression models were used to formally test for an interaction between the ARIAS outcome classification of ‘disease’ versus ‘no disease’ and age group, sex, ethnicity and camera type as categorical variables. Cases were defined as patients with a manual grade, refined by arbitration, of R1M0, U, R1M1, R2 or R3 in the worst eye, and non-cases as patients with a worst-eye grade of R0M0.

A multiple variable logistic regression model with adjustment for age, sex, ethnicity and camera type showed that EyeArt cases were 4.42 (95% CI 3.95 to 4.96) times more likely than non-cases to be classified as ‘disease’ rather than ‘no disease’. The interaction terms added to the model were not statistically significant at the 1% level, suggesting that the performance of EyeArt was not affected by age (p = 0.51), sex (p = 0.20), ethnicity (p = 0.58) or camera type (p = 0.03). The corresponding models for Retmarker showed that cases were 3.19 (95% CI 2.99 to 3.41) times more likely than non-cases to be classified as ‘disease’ rather than ‘no disease’. However, for Retmarker there were statistically significant interactions at the 1% level for all covariates except sex, suggesting that Retmarker performance was influenced by age, ethnicity and camera type. Odds ratios from the multiple variable logistic regression model including all two-way interactions for Retmarker outcome with age, sex, ethnicity and camera type are given in Table 18. The reference category is white European men aged < 50 years photographed with the Canon 2CR-DGi camera; in this group, cases were 3.73 (95% CI 3.06 to 4.54) times more likely than non-cases to be classified as ‘disease’ rather than ‘no disease’, once all main effects and two-way interactions are taken into account. This odds ratio increased to 3.98 for the 50 to < 65 years age group but reduced to 2.97 for the oldest age group (65–98 years), suggesting that the discrimination of Retmarker was marginally poorer in the oldest age group. If the man was Asian instead of white European, the odds ratio decreased to 2.63 (95% CI 2.17 to 3.19). A similar odds ratio was observed in black African-Caribbean individuals, implying slightly poorer discrimination of Retmarker in Asian and black African-Caribbean individuals than in white European individuals. The results also suggest that the software performs differentially by camera type. For example, cases were much more likely than non-cases to be classified as ‘disease’ rather than ‘no disease’ with the Canon EOS than with the Canon 2CR-DGi (odds ratios of 7.93 vs. 3.73). The interaction with patient sex, although not significant at the 1% level, has been included for completeness.
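To make the interpretation of these odds ratios concrete, a crude (unadjusted) odds ratio can be computed from a single 2 × 2 table of case status against ARIAS outcome. The sketch below uses the Retmarker counts by sex reported above, with cases taken as worst-eye grades R1M0, U, R1M1, R2 or R3 and non-cases as R0M0. It is an illustration only: it uses a Woolf (log-scale) confidence interval, makes no adjustment for the other covariates or for interactions, and so is not expected to reproduce the model-based estimates in Table 18. The function name `odds_ratio` and the dictionary `RETMARKER_BY_SEX` are hypothetical.

```python
import math


def odds_ratio(case_disease, case_no_disease,
               noncase_disease, noncase_no_disease, z=1.96):
    """Unadjusted odds ratio with a Woolf (log-scale) confidence interval."""
    estimate = (case_disease * noncase_no_disease) / (case_no_disease * noncase_disease)
    se_log = math.sqrt(1 / case_disease + 1 / case_no_disease
                       + 1 / noncase_disease + 1 / noncase_no_disease)
    lower = math.exp(math.log(estimate) - z * se_log)
    upper = math.exp(math.log(estimate) + z * se_log)
    return estimate, lower, upper


# Retmarker counts by sex. Cases: R1M0, U, R1M1, R2 or R3; non-cases: R0M0.
RETMARKER_BY_SEX = {
    "Male": dict(case_disease=3151, case_no_disease=1131,
                 noncase_disease=3098, noncase_no_disease=3597),
    "Female": dict(case_disease=2279, case_no_disease=875,
                   noncase_disease=2956, noncase_no_disease=3125),
}

results = {sex: odds_ratio(**counts) for sex, counts in RETMARKER_BY_SEX.items()}
for sex, (estimate, lower, upper) in results.items():
    print(f"{sex}: crude OR {estimate:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

An odds ratio well above 1 indicates that the software separates cases from non-cases; comparing the ratio across subgroups is the informal counterpart of the interaction tests described in the text.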

| Covariate | Odds ratio for Retmarker outcome (disease vs. no disease) (95% CI) | p-value for interactiona |

|---|---|---|

| Age | ||

| < 50 years | 3.73 (3.06 to 4.54) | 0.006 |

| 50 to < 65 years | 3.98 (3.38 to 4.67) | |

| 65 to 98 years | 2.97 (2.55 to 3.47) | |

| Sex | ||

| Males | 3.73 (3.06 to 4.54) | 0.02 |

| Females | 3.24 (2.64 to 3.98) | |

| Ethnic group | ||

| White European | 3.73 (3.06 to 4.54) | < 0.0001 |

| Asian | 2.63 (2.17 to 3.19) | |

| Black African-Caribbean | 2.52 (2.00 to 3.18) | |

| Camera | ||

| Canon 2CR-DGi | 3.73 (3.06 to 4.54) | < 0.0001 |