Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number 11/46/03. The contractual start date was in October 2012. The draft report began editorial review in September 2016 and was accepted for publication in January 2017. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

none

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2017. This work was produced by Birrell et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

Chapter 1 Background

Each year, the UK government publishes a document entitled the ‘National Risk Register of Civil Emergencies’. The latest edition of the register lists the outbreak of a pandemic influenza virus to be the highest priority non-terrorism risk faced by the UK population. 1 This highlights the importance of the country being in a high state of preparedness for such an outbreak. A key component of any protocol governing the public health response to an outbreak is a plan to monitor and predict the progress of a pandemic in real time.

During the 2009 outbreak of pandemic A/H1N1 influenza, much attention was devoted to the problem of capturing the epidemic dynamics through real-time modelling. The aim of such modelling was to provide up-to-the-moment assessments of the state of the epidemic, as well as to make predictions about its future course, all based on continually updating streams of information. The models used are mathematical constructs, that is, systems of equations designed to approximate epidemic dynamics, describing the changes over time in the number of people within a population who are susceptible to infection, the number currently infected and the number who are presently immune. These equations are governed by a few key (hitherto unknown) quantities known as parameters, which usually represent a physical characteristic of the epidemic (e.g. the average duration of infection or the relative rates of contact between members of relevant population groups). To enable assessment of the current state of the epidemic and its future evolution, values for these parameters need to be identified that are consistent with epidemic data. In addition, the uncertainty in the parameter values needs to be properly reflected in such assessments. To make formal, statistical estimations of model parameters, models can often be simplified to ensure that estimates can be derived from the available data, computational resources and expertise. More generally, as seen in research focused on the evaluation of in-pandemic mitigation strategies,2,3 parameter estimates have been obtained on a more ad hoc basis by using the models and assumed ranges of parameter values to simulate epidemic scenarios. A selection of these parameter values is then retained based on some informal comparison between the corresponding simulated epidemics and the observed data. This type of approach is common to the literature on real-time modelling prior to 2009, in which the proposed methodologies are heavily reliant on an idealised set of circumstances and/or on ad hoc estimation methods. 4

Bayesian statistical epidemic models provide a natural, rigorous framework for the incorporation of relevant contemporaneous surveillance data into the modelling process, alongside collateral information that may be available from other sources. Such models have been used in the context of real-time monitoring for other infectious diseases. For severe acute respiratory syndrome, models have been proposed and applied for real-time estimation where the focus is on the reproductive number,5–7 a key epidemic characteristic often denoted R0. A more complex Bayesian approach is utilised in an application to data stemming from the avian influenza epidemic in the UK poultry industry. 8 Here, the availability of individual-level data and the use of computationally intensive Bayesian techniques make it possible to carry out inference on the transmission dynamics, rather than merely the reproductive number. A similar model has been formulated within the Bayesian statistical paradigm to provide real-time estimates of the time-evolving effective reproductive number R0(t) for a generic emerging disease,9 an approach that has since been applied to an A/H7N9 outbreak in China10 and subsequently extended to a more complex version of the model. 11

However, the modelling approaches above have typically used a single data stream providing direct data on the number of new cases of an infectious disease over time. This is also the case in the context of the 2009 outbreak in Singapore,12 where a real-time reporting system for influenza-like illness (ILI) in sentinel general practices (GPs) was established, and the resulting data were used to predict the epidemic in real time. In practice, as illustrated by the 2009 outbreak in the UK, direct data are seldom available and it is more likely that multiple sources of data exist, each indirectly informing the epidemic development and each subject to possible sources of bias. This calls for more involved complex epidemic modelling that can synthesise the information held within a range of data sources to compensate for the lack of direct observation of the infection process. As a result of this, real-time modelling in the UK in the face of the 2009 A/H1N1pdm outbreak proved to be more demanding and more intricate than had been anticipated. 13

In response to the 2009 pandemic in England, two approaches to real-time modelling were developed. 14,15 In the first,14 the authors present a framework for the real-time assessment of the effectiveness and cost-effectiveness of vaccination strategies, considering the whole of England. Embedded inside a cost-effectiveness model is an age and risk group structured deterministic mass-action susceptible, exposed, infectious, recovered (SEIR) transmission model, parameterised in terms of an age-specific force of infection, that is, the rate at which susceptible individuals acquire infection. Model parameters are estimated by a hybrid of ad hoc approaches using the estimated weekly numbers of symptomatic cases routinely produced by the Health Protection Agency (HPA) [from 2011, Public Health England (PHE)] as data during the outbreak. 16 Uncertainty in key parameters (e.g. R0) is generated by sampling values of each parameter from a range or distribution to form a ‘scenario’ from each combination of parameter values. Results from each scenario are compared with the scaled estimates, and only the best-fitting 1% of the 60,000 realisations are retained to simulate future incidence and evaluate, with epidemiological uncertainty, the impact of different vaccination strategies on severe outcomes.

In the second approach,15 data were more directly utilised within a Bayesian statistical framework. The basic modelling features resemble those used to measure the effects of school closure as a strategy for epidemic mitigation in Hong Kong. 17 The primary difference is in the data used. Instead of using counts of case confirmations alone, an array of different data sets were combined: age- and region-stratified data on GP consultations for ILI;18 virological positivity data from individuals reporting symptoms of ILI available through the Royal College of General Practitioners (RCGP) surveillance network and the Regional Microbiology Network (RMN) of the HPA; virological case confirmations from the early part of the epidemic; and data on the seropositivity of sera samples taken before and during the 2009 pandemic and held by the Weekly Returns Service of the RCGP. The modelling details of this second approach are detailed in Modelling methodology: single region model and Appendix 1 for more details. 19 This work, however, considered only the London region.

After the 2009 experience, two main issues were left unresolved. The first is the development of a spatial characterisation of the epidemic. This would need to be carried out at a geographical level fine enough to ensure homogeneous epidemic activity within each geographical unit, yet coarse enough to guarantee enough available data to ensure that each unit has a sufficiently informative sample size. The second issue is the need to accommodate the greater wealth of epidemic surveillance data supposedly available in future pandemics. 20

Both developments pose a challenge to existing modelling approaches. With regard to the Bayesian approach,15 the challenge is to extend the model structure and increase the number of pandemic data sets to be assimilated into an already complex model in a sufficiently timely fashion for analysis to be feasible in real time. This requires the development of more computationally efficient methods for Bayesian inference.

Computational methods

The Bayesian approach is based on a computational technique known as Markov chain Monte Carlo (MCMC). 21 MCMC can be computationally burdensome when estimation and prediction of an evolving epidemic are needed in real time. Every time new data become available, MCMC reanalyses the data in their entirety, requiring possibly millions of evaluations of the model. This is computationally costly and will, consequently, limit the speed at which results can be obtained.

There are existing methods to approximate the estimation procedure, either by replacing the model with a more-readily evaluated proxy22 or by approximating the Bayesian approach. 23 However, sequential Monte Carlo (SMC) methods are a more appropriate alternative to MCMC. 24–26 The use of such methods to analyse epidemic data is relatively common,11,27 yet analyses with a real-time focus are rare,12 and those using a synthesis of numerous types of data do not exist.

A further complication, which is not considered in the existing literature, is the need to accommodate the impact that public health interventions might have on the surveillance data underpinning the real-time analysis. As infection becomes more widespread, health-care facilities become harder to access, with those in need of health care channelled elsewhere. Any effective real-time computational approach has to cope with the sudden shocks, unforeseen in some cases, that interventions might generate on the time course of the surveillance data.

Outline

The work reported here will expand on an existing framework for statistical epidemic modelling,15 increasing its complexity to allow for spatial heterogeneity in transmission. Two competing spatial modelling approaches are examined (see Chapter 3, Modelling methodology: multi-region models and Chapter 4, Spatial modelling) to investigate how epidemic activity in different regions can be most efficiently and accurately estimated. This increased complexity and the extra dimension added to each of the epidemic data sets add to the computational burden. A general algorithm for Bayesian statistical inference in such a scenario is developed and tested on a suite of synthetic pandemic data, incorporating the presence of ‘shocks’ in surveillance data arising from public health interventions (see Chapter 3, Monte Carlo methods and Chapter 4, Comparison of the real-time performance of the Monte Carlo methods). The report concludes with a discussion (see Chapter 5) and recommendations for future research (see Chapter 6).

Chapter 2 Study objectives

The central objective of this study is to advance the state of the art of real-time modelling of influenza epidemics and to provide a useful tool that can be used to monitor and predict the development of an ongoing pandemic outbreak. This advancement involves:

-

accounting for spatial heterogeneity in transmission – this may be done through the modelling of separate, non-interacting but parametrically linked epidemics in spatially disjoint regions of a country, or through further stratification of the population according to location

-

building capacity in terms of the different types and increasing the number of data that can be used for real-time modelling

-

improving the efficiency with which real-time statistical inference can be made

-

developing a real-time inferential system that is robust to likely pandemic mitigation or treatment interventions.

A suite of software has been produced to achieve the above objectives and provide support to national public health bodies in the event of a pandemic. This software has been tailored to the specific requirements of PHE, the responsible public health body in England.

Initially, there was also a component of this research promising support to the HPA (now PHE) in the event of a pandemic in their real-time production of estimates and projections of the health-care burden attributable to the pandemic. Such an outbreak did not occur over the duration of the study, and so this component of the project has been disregarded in the report.

Chapter 3 Methods

We shall begin by describing the modelling approaches used in this work (see Modelling methodology: single region model and Modelling methodology: multi-region models). In Data we will examine the data types that PHE currently envisage being available at some stage during a pandemic and discuss how the structure of the available data from 2009 helped to determine the precise parameterisation of the real-time model. Together, the modelling approaches and data types will inform the spatial modelling study.

Bayesian inference provides an introduction to Bayesian inference and Monte Carlo methods discusses the MCMC and SMC methods. Monte Carlo methods, in particular, contains a significant amount of technical detail, including the tuning of a number of algorithmic components necessary to achieve timely inference, and may be omitted by the reader not interested in such detail.

Modelling methodology: single-region model

The starting point for the investigation in this study is the model and analysis of Birrell et al. 15 Here, information from multiple sources is integrated into a composite model, including:

-

an age-structured dynamic transmission component

-

a disease component

-

a component describing the mechanism of symptom reporting to health-care facilities.

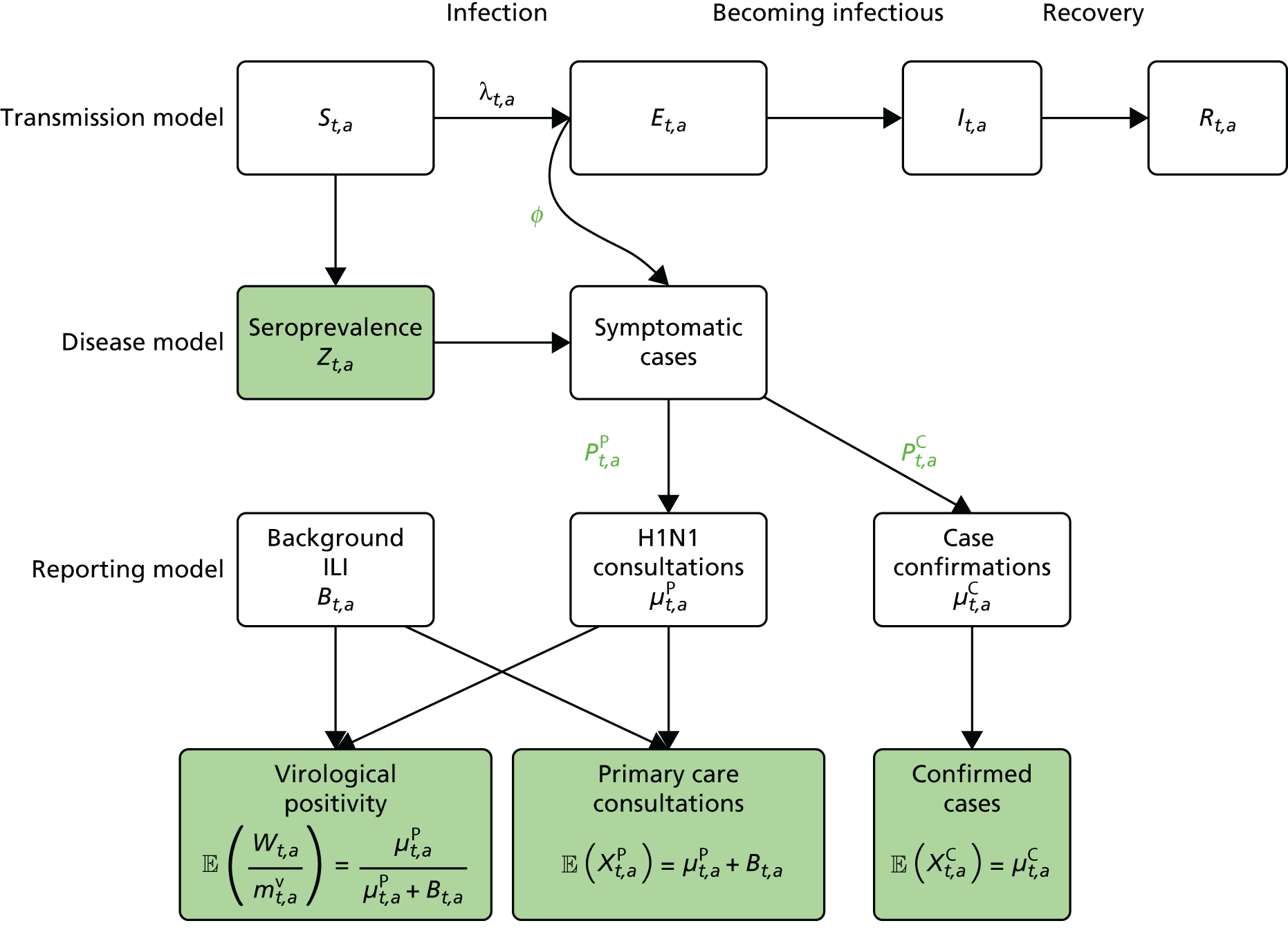

A schematic model representation is given in Figure 1. Transmission in the SEIR model is governed by a time- and age-varying force of infection that is dependent on the population structure, the transmissibility of the virus, the mixing patterns between population strata and the expected time spent in the E (exposed) and I (infectious) states. In the disease model layer, a proportion, ϕ, of the newly exposed individuals develop febrile symptoms. In the reporting model layer, further proportions of these symptomatic individuals consult their GP and/or have their symptoms officially confirmed through a virologically positive swab result.

FIGURE 1.

A schematic diagram of the model. Adapted from Birrell et al. 15 © 2011 National Academy of Sciences.

Ideally, direct data on the number of new infections would be available, and in studies of modelling methodology these types of data are often assumed. 11 However, more realistically, surveillance data sets are noisy and record events (such as GP consultations; see section entitled Pandemic data) that occur some time later than the infection. Specifically for influenza, disease reporting is frequently through syndromic surveillance, whereby non-disease specific symptoms are reported. Instead of reporting influenza infections, the reporting is of patients suffering with ILI. Therefore, data from such sources include contamination from patients carrying infections other than the pathogen of interest. This adds greater complexity to the task of disentangling the underlying disease incidence from the available information, particularly as this contamination is likely to vary substantially during the pandemic. To identify the disease incidence, these noisy consultation data are combined with information on virological positivity from complimentary surveillance systems (see Figure 1 and Data). When multiple time series data sets are available, data on events occurring as close as possible to the time of infection should be preferred, as they will be more informative. Alternatively, data arising as a result of severe symptoms are also valuable; severity is a property of the virus, and so the proportion of cases that appear in data will become more stable over time.

The equations governing the epidemic dynamics are included in Appendix 1.

Modelling methodology: multi-region models

The transmission model shown in Figure 1 and Appendix 1 is extended to accommodate spatial heterogeneity in the 2009 A/H1N1 pandemic data in two ways: (1) by using a parallel-region (PR) approach and (2) by using a meta-region (MR) approach. 28 These approaches will be introduced in the two following subsections, respectively.

The parallel-region model

In the PR modelling approach, the spatially heterogeneous epidemic is assumed to be composed of a number of smaller epidemics occurring in parallel within each spatial unit, with no direct interaction (specifically, no transmission) between regions. The rationale here is that the purpose of the real-time model is to monitor the pandemic once infection is widespread. By such a time, it is reasonable to assume that long-range inter-region transmission will be negligible in comparison with that occurring within each region.

The parallel epidemics are still jointly modelled; however, there is a sharing of information through a number of model parameters that either have a common value or a common temporal trend in each region. These parameters are typically those representing biological characteristics of the virus (mean infectious period, proportion symptomatic, etc.). Additionally, the mixing patterns are assumed to exhibit no regional variation. Appendix 1 presents a system of equations governing single-region dynamics. This system is driven by two key quantities: the reproductive number, R0, and the initial state of the system, defined by a parameter giving the initial number of infective individuals, I0. These are region specific parameters (R0,r, and I0,r, r = 1, . . . ,R) as they are functions of both the regional population and the virus. Together, these parameters account for the different timing of the pandemic activity in each region.

The system of dynamic equations given in Equation 18, Appendix 1 applies within each spatial unit, and so needs little modification.

The meta-region model

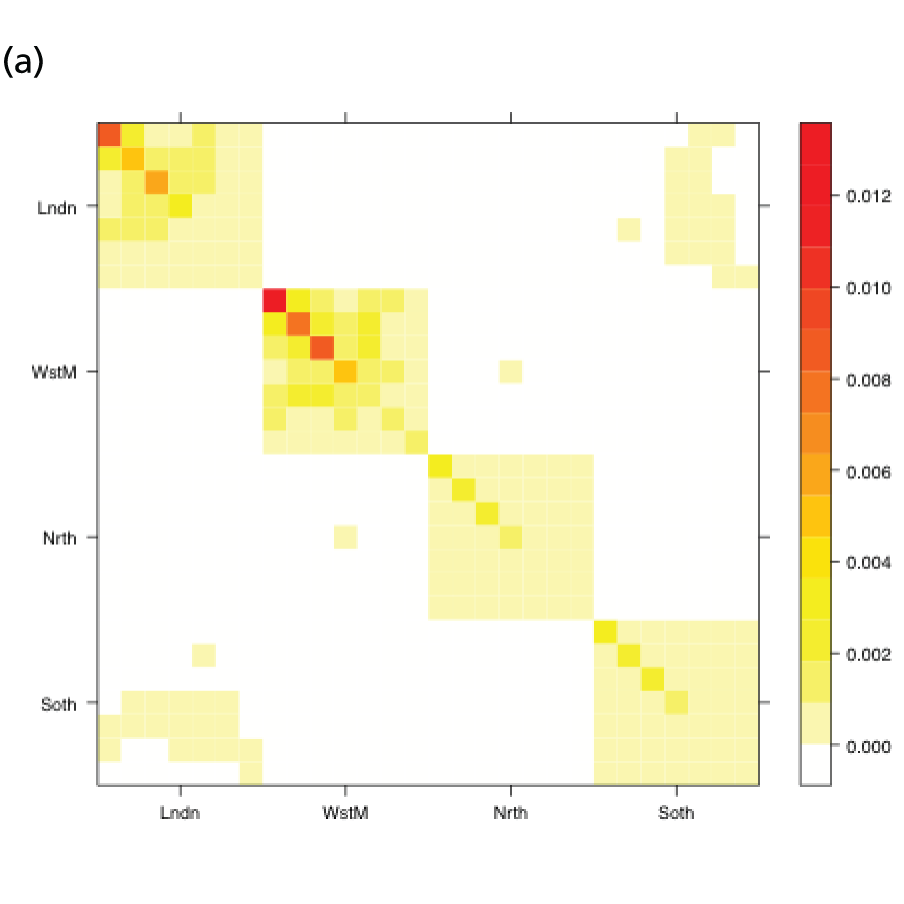

In the MR modelling approach, regions are assumed connected such that transmission is possible between individuals resident in different regions. Here, we look at the country as a whole and treat it as a metapopulation of R sub-regions. Therefore, we can generalise the notation in the system of equations (see Equation 18) in Appendix 1 so that the index a now takes values over the range 1, . . . ,RA. It is therefore necessary to define (RA × RA) contact matrices, Π(tk), k = 1, . . . ,K, that describe the rates at which individuals of the various (region- and age-defined) strata come into contact. In the single-region and PR models, this matrix describes the rates at which individuals of the various age groups interact, and was informed by UK data collected as part of the Improving Public Health Policy in Europe through the Modelling and Economic Evaluation of Interventions for the Control of Infectious Diseases (POLYMOD) study (see Equation 20 and relevant text). 29 In this expanded matrix, the entries that correspond to within-region contacts resemble the POLYMOD-based matrices. However, entries corresponding to inter-region interactions are typically of a lower order of magnitude, as people interact less frequently with others living in a different geographic region. These rates of contact are derived from census data on daily commuter movements between regions. The details of how, at the kth time point, tk, the POLYMOD matrices and the commuter data combine to produce contact matrices Π(tk) are given in Appendix 1. Figure 2 shows a heat map of the elements of Π(t1) as contact intensities on the absolute and log-scales. The strata are organised within regions, giving the matrix the appearance of an array of submatrix blocks within which the POLYMOD patterns of contact are repeated. The blocks on the diagonal give rates of within-region contact and, therefore, show much higher contact rates.

FIGURE 2.

Heat maps for the contact matrices used in the MR model (with regional density dependence) based on (a) contact rates; and (b) log-contact rates. The matrices show a strong, block-diagonal structure, with red areas indicating higher rates of contact. The blocks are ordered such that contacts involving residents of London are in the top row and in the first column, with the ordering of London, West Midlands, the North and, in the final row and column, the South. Within blocks, age is increasing from top to bottom and from left to right. Red squares indicate interactions of high frequency, white squares interactions of zero frequency. Adapted from Birrell et al. 28 © 2016, Macmillan Publishers Limited. This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

The MR model has only one system of dynamic equations of the type in shown in Equation 18 of Appendix 1, removing the flexibility of having region-specific values for R0 and the initial seeding of infectives, I0. However, there are a number of modelling considerations to be made when using the MR model, considerations that are not relevant to the PR modelling approach.

Density dependence

In a single-region model, the POLYMOD-based contact matrices give the relative frequency of contact between pairs of individuals of the different age groups. When these matrices are inserted into the block diagonal of the MR contact matrix (see Figure 2), an assumption of frequency-dependent contact is made. This implies that individuals are equally likely to have contact with any other individual in the region irrespective of the region in which they live. Therefore, individuals who live in regions with a higher population will make proportionately more contacts. This may not seem to be a reasonable assumption, as the total population of a region does not necessarily indicate a high population density. The alternative considered here is density-dependent contacts. In this case, the likelihood of a contact is scaled down by the population size, either of the regional population or of the strata (region and age) population. Both types of density dependence are considered.

Initial seeding of infectives

At the beginning of an epidemic, the transmission process is kick-started by a number of initial infectives. The POLYMOD-based contact matrices used for a single-region model (and in the PR model) will lead to rapid convergence towards a stable pattern of infection. In other words, for most reasonable choices of initial seeding, it takes only a short time for this seeding to be ‘forgotten’ by the transmission dynamics.

This is not the case in the MR model, with its block-structured matrix. Infection spreads very slowly between the regions, and so the initial seeding is not quickly forgotten. Epidemics seeded with infectives in different regions can lead to very different outcomes. Therefore, although the choice of the seeding in the PR model is not important, it is a significant modelling choice for the MR approach and a number of different seedings are considered: the single-region equilibrium distribution (i.e. the stable pattern of infection observed in the PR model) derived from the next generation matrix; the initial empirical age- and region-specific distribution of the initial confirmed cases; and a hybrid approach using a within-region equilibrium distribution scaled by the empirical distribution of the initial confirmed cases over regions.

Commuting at random

Each model makes the assumption of homogeneous mixing within each stratum. This means that all individuals within a population stratum are as likely to acquire or spread infection as any other individual of the same infection status. In the MR model, where infection is transmitted between regions through the routine movements of commuters, this assumption of homogeneity implies that on any day each individual is equally likely to commute. This is an unrealistic representation, however, as only a subset of people are likely to commute on a regular basis, and a (larger) subset are likely to stay within their home region. To account for this, adult age groups in each region are further subdivided into commuters and non-commuters, so that the commuters are a fixed group of people who move each day. This results in the effects of commuting on transmission being more transient, as there is a smaller, more rapidly exhaustible supply of susceptible individuals available to transfer infection from one region to another.

The downside to this further level of stratification is that it places an increased computational burden on the model, greatly slowing down the estimation process.

Data

During the 2009 pandemic, the HPA provided A/H1N1pdm incidence estimates for each of the then ten Strategic Health Authorities (SHAs) across England. Two of the SHAs, Greater London and the West Midlands, were believed to have experienced a significant, pre-summer wave of infection. Ideally, we would adopt the same geographical partition; however, the volume of data available from each of the SHAs is insufficient to do so. A reasonable compromise solution is to divide the country into four spatial units: London, the West Midlands (the two regions that had significant first waves of infection), the North and the South. The North and South regions each comprise four SHAs; in the North these are North East, North West, Yorkshire and Humberside, and East Midlands, and in the South they are East of England, South Central, South East Coast and South West.

The age categorisation favoured by the HPA, now PHE, is to break up the population into the age groups < 1 year, 1–4 years, 5–14 years, 15–24 years, 25–44 years, 45–64 years and ≥ 65 years. For the rest of this section, a will denote a general population stratum, whether defined by age alone or by both region and age.

The section, Pandemic data below, itemises the pandemic data streams that the real-time modelling framework is set up to work with, detailing, when applicable, how these data were used in modelling the 2009 pandemic and discussing how the various surveillance schemes have evolved over the intervening period. Distributional assumptions introduces some technical statistical details, showing how these data streams link into the SEIR transmission model, covering the distributional assumptions that are required to allow formal statistical inference to be made.

Pandemic data

General practice consultation data

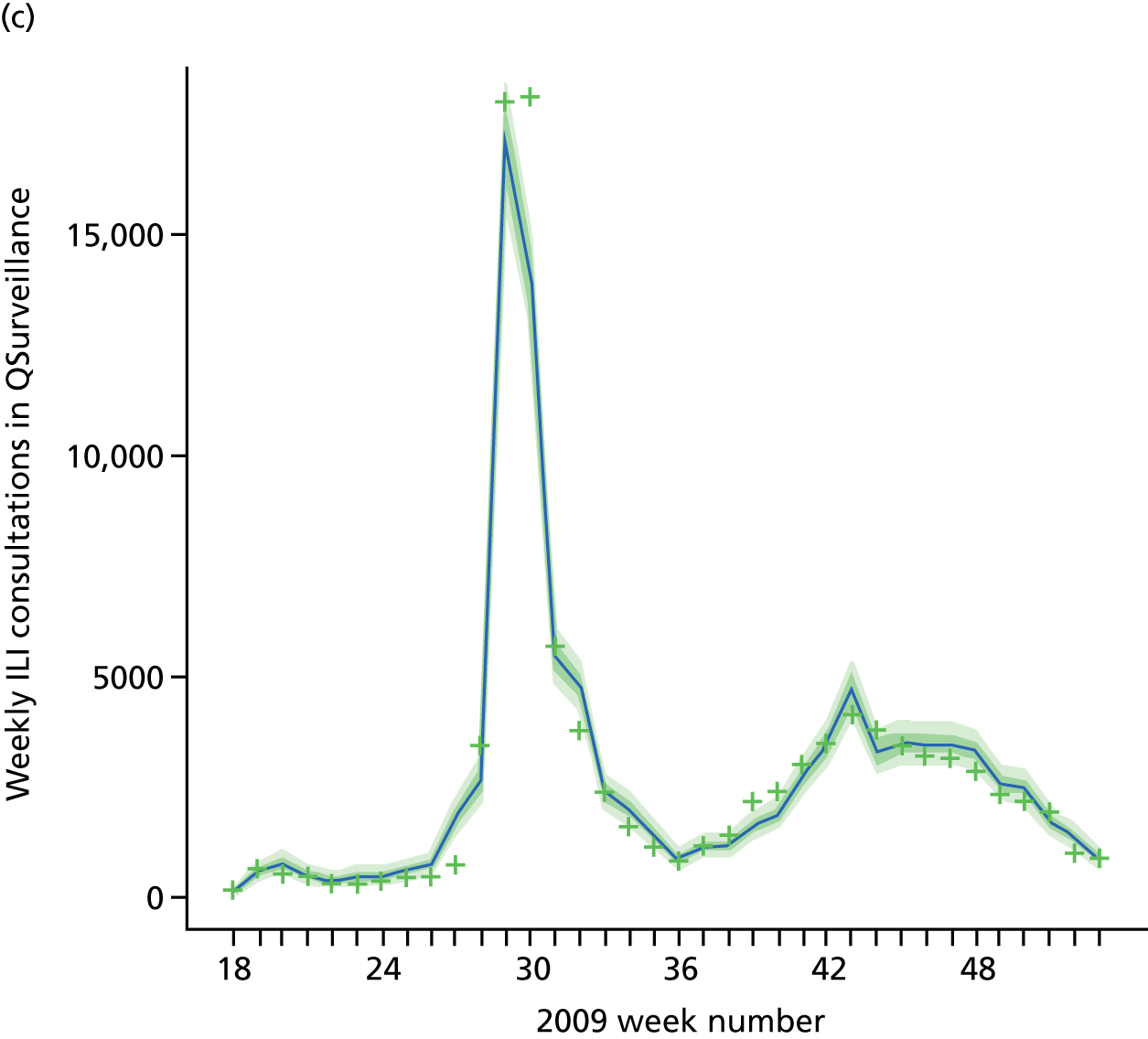

Public Health England carry out syndromic surveillance to monitor influenza activity in the population by routinely collecting data on individuals presenting with an ILI at GPs. In 2009, such data were provided from two sources. The first source was the Weekly Returns Service of the RCGP, a sentinel GP network covering a weekly population of approximately 900,000. 30 The second source was the HPA/QSurveillance national surveillance system, which covers a much larger population of ≈23 million people. 31 ILI data from both schemes were available stratified by both age group and SHA. In the end, daily ILI reports from the QSurveillance system were used to guide the public health response to the pandemic. 31

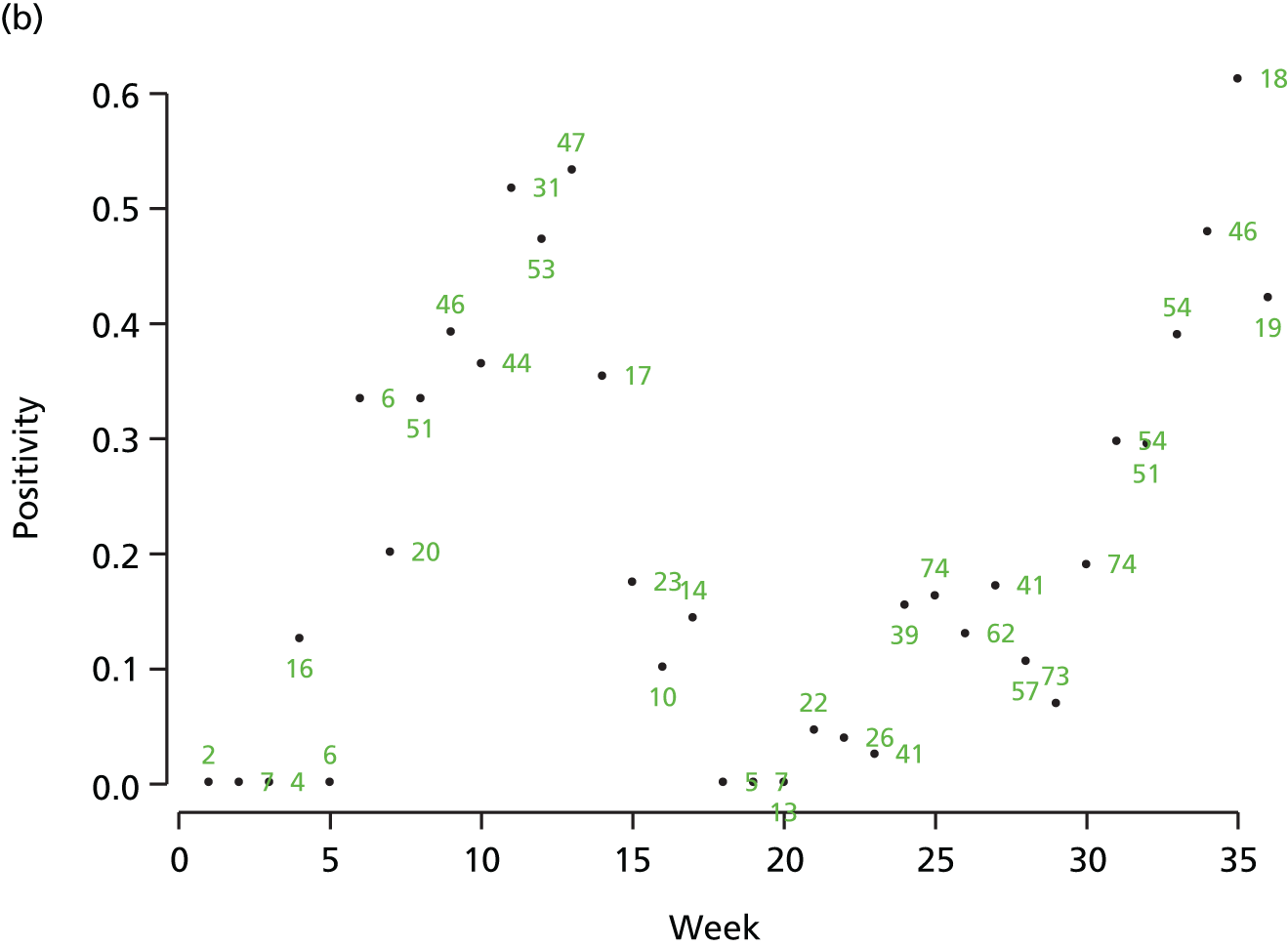

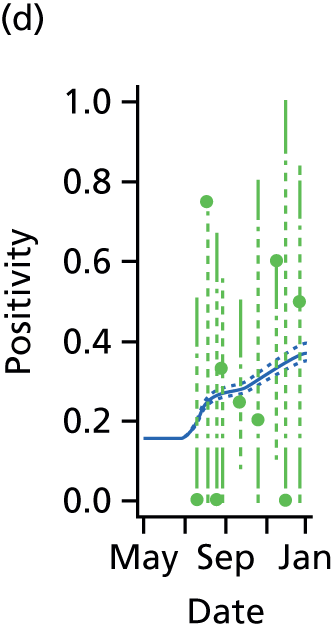

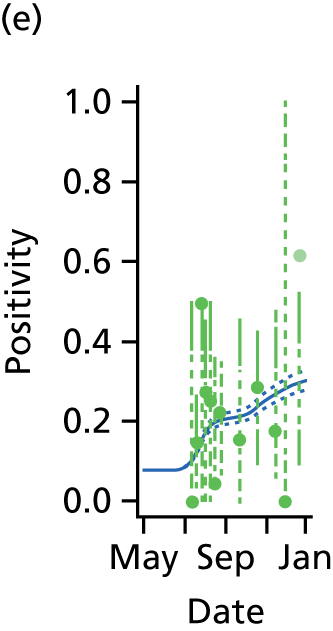

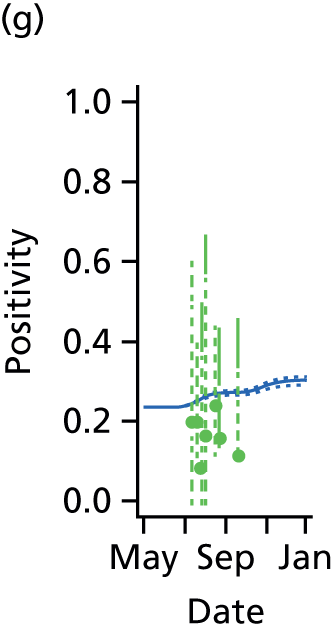

The GP data are reported counts of consultations for non-pandemic specific symptoms, and include cases not infected with the pandemic pathogen. Therefore, information is required on the proportion of the reported counts that are truly of interest when tracking the levels of transmission of the pandemic infection. The RCGP augment their primary care surveillance with virological monitoring. 32 This monitoring involves taking respiratory swabs from a subset of patients (chosen at random) consulting for ILI at participating GPs. A polymerase chain reaction (PCR) assay is then employed to test the swabs for the presence of influenza strains as well as for other respiratory virus infections. Similar data are obtained and made available by PHE’s RMN, covering an additional 400,000 patients in England. 33 A complete account of the virological monitoring undertaken by the two schemes throughout the 2009 pandemic can be found elsewhere,34 but together they provide data on the positivity of the swabs taken in GPs together with the epidemiological information attached to each sample. To ensure high sensitivity of the testing process, swabs were only included in any analysis presented here if the time between symptom onset and the swab being taken was ≤ 5 days. Combining this swabbing information with the GP consultation data, the number of consultations that are actually directly due to the pandemic can be estimated.

Since 2009, PHE has expanded its primary care surveillance portfolio, now additionally working with The Phoenix Partnership to access anonymous GP records through its SystmOne™ computer system (The Phoenix Partnership, Leeds, UK). 35 These data could either be combined with the QSurveillance data or provide an additional sample of data used for model validation. When infection becomes widespread, the National Pandemic Flu Service (NPFS) will be activated. The NPFS is an internet and telephone service designed to expedite the administration of antiviral drugs, alleviating the burden placed on GP surgeries. This service was launched in 2009, and, after a short bedding-in period, was observed to be subject to the same trends as the GP-based data. Those using the service were also swabbed, to understand the underlying pandemic incidence. Owing to an anticipated fall in the consultation numbers that would arise as a result of a NPFS launch, these data could easily be added (if the degree of overlap between the two data sets is understood) or used to replace the GP consultation data in order to build a picture of the numbers accessing primary care services for ILI.

Virologically continued cases

Management strategies over the initial stages of the 2009 pandemic were primarily concerned with containing the spread of the epidemic, before moving into a treatment phase. The initial containment phase was a period of enhanced surveillance during which contacts of known infected individuals were traced and laboratory confirmations of the infection were obtained whenever possible. This work resulted in the generation of the first few hundred (FF100) and the FluZone databases. 34 Routine laboratory confirmations were discontinued on 25 June 2009, but we use here the data only up to 19 June 2009 to allow for the gradual cessation in the collection of this type of data. In practice, any real-time modelling is likely not to start within the first 5 weeks of the outbreak, given the anticipated difficulty in detecting any signal from the epidemic data at such early stages. Instead, it is anticipated that the information on confirmed cases in this period will inform the model construction and provide some prior information (see Model parameterisation) for various model parameters. In the analysis of the 2009 pandemic data, these data contributed to the analysis in the same way it is proposed that hospitalisation data will in future pandemics (see Hospitalisation data – UK Severe Influenza Surveillance System and Distributional assumptions).

Hospitalisation data – UK Severe Influenza Surveillance System

Prior to the 2009 pandemic, there was a gap in the surveillance of severe respiratory infections in the UK with regard to hospitalised cases of influenza. During the pandemic, a web-based hospital reporting system was established to meet this need. The data were available relatively late in the pandemic and, even now, the biases and weaknesses of the data derived from this reporting system are not well understood. This motivated the development of a more robust, well-tested surveillance scheme for the reporting and handling of such important data. As a result, the UK Severe Influenza Surveillance System (USISS) was initiated during the 2010/11 influenza season, becoming routine for each subsequent season. 36 Data collected during influenza seasons prior to any pandemic outbreak are anticipated to provide baseline information that may prove useful in identifying a pandemic ‘signal’.

Outside a pandemic, USISS is a two-stream surveillance system. All NHS hospital trusts carry out mandatory weekly reporting of admissions of severe influenza cases (i.e. patients admitted to a high-dependency unit or an intensive care unit (ICU) with laboratory confirmation of infection). USISS also provides sentinel influenza surveillance through an annually selected random sample of trusts, where testing for the presence of influenza in all patients presenting with ILI is mandatory, and results are reported together with an array of epidemiological information. In the event of a pandemic being declared, all trusts will switch to this level of reporting.

In a pandemic, therefore, USISS should provide a time series of reported cases that have two distinct advantages over the GP consultation data:

-

They are counts of laboratory confirmed cases, so there is no contamination from non-pandemic ILI.

-

The proportion of cases that are reported to USISS is a function of the severity of the virus and access to hospital services, and should therefore be less volatile over time.

However, hospital resources are finite, and, in a rapidly developing pandemic, may quickly become exhausted, so that patients may be turned away where previously they would have been hospitalised. In such a situation, the proportion of patients who are hospitalised will decrease as a function of the increasing incidence. This decrease may be difficult to characterise, potentially limiting the period of time for which the hospitalisation data can be reliably informative.

Serological data

Serological data are the only surveillance data source directly informing the transmission component of the real-time model. As the prevalence of immunity-conferring antibodies increases, the number of susceptibles decreases. In modelling the 2009 pandemic, the inclusion of serological data has been shown to be crucial to the reconstruction of the underlying epidemic curve. 15

Initially, the serological data used in the analysis of the 2009 data came from the HPA’s annual collection of residual blood serum samples submitted to microbiological laboratories for the purpose of carrying out cross-sectional antibody prevalence studies. 37 Later in the pandemic, it became clear that a more rapid, more representative approach to the collection of serum samples was required. Chemical pathology laboratories were therefore approached at hospitals in each of the RMN regions. This ensured a regular supply of age-stratified serum samples, obtained in a timely fashion and with good geographical coverage. 38 In all samples, a haemagglutin-inhibiting antibody titre of 32 was assumed to be sufficient to indicate protection against A/H1N1pdm influenza. 39–41 It is further assumed that there is a 2-week delay between infection and seroconversion. Each sample was, therefore, treated as representative of the level of cumulative infection among the population 14 days prior to the sampling date. Testing of some residual sera samples collected in 2008 also took place to provide age-specific estimates of baseline antibody prevalence.

In recent years, ahead of each winter influenza season, researchers at PHE have carried out stratified sampling from the population to select potential participants for a telephone survey regarding the public’s attitudes towards influenza vaccination. 36 At the end of the survey, respondents were asked if they would be willing to submit a blood serum sample. Those who agreed to take part submitted two samples, one at the start of the season and one at the end of the season. In the event of a pandemic outbreak that does not overlap with the winter flu season, the telephone surveys will be reactivated as rapidly as possible.

There is some uncertainty inherent in these data as to precisely what titre value will confer immunity. It is also possible that, to indicate long-standing immunity, different titre levels may be required from levels that indicate recent infection. The real-time modelling system does allow for this potential difference in the titre thresholds, but it does not yet account for any uncertainty in these values.

Commuting data

Commuting data were extracted from the UK 2001 census. 42 For individuals aged ≥ 16 years, these data are in the form of counts of the number of surveyed individuals in each age group and within each Government Office Region (GOR) who, on the day of the census, travelled into another GOR and the number who stayed within their home region. Data were then aggregated so that they conformed to the regional split chosen for modelling the 2009 pandemic: London, West Midlands, the North and the South. The number of people in age group a who moved on the day of the census from region r to region s are denoted by Cr,s*(a), and these numbers were standardised to give:

Equation 27 in Appendix 1 illustrates how these data are combined with information from the UK component of the POLYMOD study to generate contact matrices suitable for use in the MR approach to handling spatially heterogeneous epidemics.

Population totals stratified by agegroup and GOR were also derived from UK Office for National Statistics (ONS) data, using the 2008 mid-year estimates. 43

Distributional assumptions

Count data

General practice consultation data, virological confirmations and hospital admissions are all examples of count data that the real-time modelling framework has been designed to accommodate. We assume that these are realisations of either Poisson or Negative Binomial distributions. The expectations of these distributions have derivations that share some common features, accounting for:

-

A delay from infection to the health-care event being recorded.

-

The fact that these are a proportion of the symptomatic cases: those with a sufficiently severe illness or who make a particular health-care choice. For data on hospitalisations, this proportion is the case-hospitalisation or case-ICU risk, and, for GP consultations, it is a time-evolving propensity for individuals to seek consultation in the presence of symptoms.

Therefore, the expected number of daily reports of hospitalisations, denoted µah(tk) for day tk within stratum a is linked to the daily number of new infections through an expression of the type:

where pah(tk) is the relevant case-severity risk, qlh is the probability that the time taken from infection to being reported in data as having been hospitalised spans l time intervals, and Δa(infec)(tk) is the number of new infections at time tk as found from Equation 21 in Appendix 1.

For GP surveillance data, the expected number of consultations arising from the pandemic, µag(tk) is calculated via a similar expression to Equation 2:

Before this quantity can be related to data, however, there are three additional considerations to make:

-

The non-pandemic consultations need to be added. These consultations are denoted by Ba(tk), the expected values of these at time tk.

-

The within-week pattern of consultations has to be accounted for. Typically, no data are reported at weekends and on bank holidays. This leads to a strong artefactual peak in the number of consultations each week on Mondays.

-

Although the population coverage of PHE’s combined surveillance schemes in England is very high, it is still incomplete, and this needs to be accounted for. The expected number of consultations needs to be scaled to allow for this incomplete coverage. Surveillance schemes will report daily coverage figures as a proportion of the total population in each stratum, which we denote as Da(tk).

The expected daily counts of consultations in a general stratum a on day tk are E[Xk,ag] such that:

where d(tk) indicates the day of the week on which time tk falls and κd(tk) is the adjustment factor accounting for the within-week effects on reporting. These factors should be estimated subject to the constraint that ∏d=17κd=1.

In particular, the GP data are likely to be highly volatile owing to the sensitivity of the population’s health-care-seeking behaviour to governmental advice and media reporting. If it is decided that, as in 2009, the most appropriate distribution for the consultation data is the negative binomial, then the real-time model will include dispersion parameters, ηk,ag, such that the variance is given by:

Sampling data

Both the virological and serological data represent a number of positive readings in a sample of fixed size.

We denote the virological data as (mk,av,Wk,a) where mk,av gives the number of swabs tested within 5 days of symptom onset and Wk,a is the number of those swabs that test positive for the presence of the pandemic pathogen. If we assume that the PCR test has test sensitivity ksens and test specificity kspec, then these data are binomially distributed with the expected value:

In all of the analyses presented in this report, the virological testing procedure is assumed to be perfect, with

Similarly, we denote the number of blood sera samples that test positive for the presence of antibodies as Zk,a among a total of mk,as samples. The expected number of positive samples is linked to the level of susceptibility in the population, Sa(tk), via:

where k0 is a time lag representing the number of time-steps required for the development of antibodies. In 2009, this was taken to correspond to 14 days. If pre-season sampling occurs prior to the chosen t1, for modelling purposes these samples can be assumed to be informative about the population prevalence of antibodies on the first day of the outbreak, and can be added as data at this time.

Model parameterisation

Apart from parameters that describe some initial condition of the transmission model, parameters are permitted to vary over time, region and age. Appendix 2 details all the model parameters that can, in principle, be estimated within the real-time model framework. In reality, depending on the availability of relevant data, a subset of parameters is pragmatically chosen for estimation. Table 1 presents a list of the parameters estimated in the spatial analysis of the 2009 pandemic data, indicating whether each parameter varies across regions (denoted as ‘spatial’) or not (denoted as ‘global’).

| Parameter | Description | Model | |

|---|---|---|---|

| PR | MR | ||

| η | Dispersion parameters for GP consultation | Spatial | Spatial |

| d l | Average duration of infectious period | Global | Global |

| ɸ | Proportion of infections that lead to ILI symptoms | Global | Global |

| mk,k = 1, . . . ,5 | Parameters of the contact matricesa | Global | Global |

| ψ | Exponential growth rates | Spatial | Global |

| ν | Initial number of infectives, log-transformed | Spatial | Global |

| p g | Propensity of ILI patients to consult with their GP | Spatial | Spatial |

| p h | Propensity of ILI patients to receive case confirmation | Spatial | Spatial |

| β B | Regression parameters determining the rates of background ILI consultation | Spatial | Spatial |

| κ d | Day-of-the-week effects on the reporting of GP consultations | Global | Global |

Parameters dl and ɸ are deemed to be properties of the virus and therefore are treated as constant over region, time and age. Parameters ψr and vr describe initial conditions (see Appendix 2 for their interpretation) and therefore they have a region-specific value in the PR model and a global value in the MR model. As virological case confirmation data were used in the absence of consistent data on hospitalisations, the proportion of cases that received virological confirmation of their infection, here set to be ph, is an observation model parameter, relevant only for the first 50 days of the epidemic while this type of data were still being collected. Therefore, no temporal or age-specific variation is considered, although variation over regions is included on account of the very different levels of pandemic activity in each region over the early period. The specification of the parameter κd has already been discussed in the text following Equation 4.

Parameter vector m = (m1 , . . . , m5) consists of mulitpliers to specified elements of the contact matrices. These parameters are used to measure the impact of school holidays on contacts among 1- to 4-year-olds and 5- to 14-year-olds, and to down-weight the contribution of all contacts involving at least one adult.

Both parameter vectors η and pg are properties of the reporting model for the GP consultations. Therefore, they both have a temporal change point at time tk = 83 days, the time of the NPFS launch. Additionally, pg differs across ages (different values for children and adults), as well as changing value at two points later in the epidemic to account for the gradual reversion in the public’s health-care-seeking behaviour to pre-NPFS habits. Thus, η is an eight-dimensional parameter component and the pg parameters are 32-dimensional.

The parameters describing the rates of non-pandemic ILI consultations, known as the background rates of consultation, have the most complex specifications. Regional variation in these rates is specified through a log-linear regression model, allowing information on trends and age effects to be shared across regions. As the background rates of consultation are quite likely to be volatile over time, approximately fortnightly breakpoints are chosen, dividing the 245 days under study into 17 distinct time segments.

The modelling process begins with a first-phase of model choice within the PR modelling framework to specify the precise form of this regression. Letting τ(tk) denote the fortnightly interval into which time tk falls, and explicitly denoting the strata (r,a), a saturated model for the consultation rates, Br,a(tk), takes the form:

Parameters in Equations 9 and 10 represent the main effects of region (r), time period (τ) and age group (a) and their interactions; TX indicates the fortnightly time interval that concludes at the same time as the launch of the NPFS, and the regions {L, W, N, S} correspond to London, West Midlands, the North and the South, respectively. This specification implies that there are separate and non-interacting models for the periods pre- and post-NPFS launch. In a preliminary phase of modelling, regression terms from Equations 9 and 10 are sequentially removed until there is an appreciable loss of fit to the data, to obtain simplified versions of the regression equations (see Chapter 4, section Finding an optimal parameterisation).

Bayesian inference

In the Bayesian framework, statistical inference about an unknown parameter of interest, θ, proceeds by combining a priori information about θ with data from a current study. The initial information on θ is expressed in terms of a probability distribution, p(θ), known as a prior. This distribution encapsulates all that is known (or not known) about the parameter (e.g. from expert opinion or historical data) before the current study is carried out. After carrying out the study and observing data y, the knowledge about parameter θ is updated to give a probability distribution, known as the posterior, p(θ|y). This posterior distribution is found through Bayes’ formula:

where L(y; θ) is the likelihood function, expressing the likelihood of observing data y conditional on the parameter taking value θ. The likelihood for the spatial study and a summary of the chosen prior distributions for the parameters are given in Likelihood and Priors, below.

Likelihood

Denoting the number of days over which we have epidemic data as K, and using bold to denote (possible) vector quantities, we write that the epidemic data set is y1:K = (y1,. . .,yK). Each data vector yk contains components (wk,xkg,xkh,zk), consisting of the data types discussed in Distributional assumptions, with each data component containing stratum-specific data reported at time tk.

These data contribute to inference through the likelihood function. Conditional on all the model parameters, using θ to denote the parameters in Table 2, it is assumed that all observations can be considered independent. The likelihood is then expressed as:

| Transmission model parameter | Symbol | Prior/fixed value |

|---|---|---|

| Exponential growth rates | ψ r | ∼Γ (6.3 to 57) |

| Initial log-hazards of GP consultation | υ r | ∼N (-19.15, 16.44) |

| Mean infectious period | d I | 2 + Z, Z∼Γ (518, 357) |

| Mean latent period | d L | 2 |

| Contact matrix parameters | m i | ∼U[0,1],∀i |

| Initial proportion susceptible in age groupa | ρ a | 1 (< 1 year), 0.980 (1–4 years), 0.969 (5–14 years), 0.845 (15–24 years), 0.920 (25–44 years), 0.865 (45–64 years), 0.762 (≥ 65 years) |

| Disease and reporting model parameters | ||

| Mean (SD) of gamma-distributed incubation times | 1.6 (1.8) | |

| Proportion of infections symptomatic | ϕ | ∼β (32.5, 18.5) |

| Proportion of patients who consult a GP, varying by age, time and regiona | prag(tk) | prag(tk)=log(pr,i/(1−pr,i))pr,i∼{N(−0.187,0.166)i=1,2 (tk≤83)N(0.426,0.929)i=3,4 (tk≤130)N(−0.319,0.263)i=5,6 (tk≤178)N(−0.284,0.264)i=7,8 (tk>178) |

| Proportion of cases laboratory confirmed | prh | ∼β (1.03, 2.69) |

| Mean (SD) of gamma-distributed waiting time from symptoms to GP consultation | 2.0 (1.2) | |

| Mean (SD) of gamma-distributed waiting time from symptoms to laboratory confirmation | 6.6 (3.7) | |

| Mean (SD) of gamma-distributed reporting delay of GP consultations | 0.5 (0.5) | |

| Reporting delay of laboratory confirmations | 0 | |

| GP consultation data dispersion parameters | η r,i | ∼Γ (0.01, 0.01) |

| Regression parameters for the background consultation rates | β B | N61 (0,VB) |

| Day of the week effects on the reporting of ILI cases, log-transformed | log κd | N6 (0,Vκ) |

The terms inside the product correspond to the likelihood of virological swabbing data, GP consultation data, USISS hospitalisation data and serological data, all reported at time tk and for each stratum a (assuming here that this encompasses both age group and region).

Priors

Table 2 provides a summary list of model parameters and, in the spatial analysis, their assumed prior distributions, or fixed (known) values as applicable. In some rows of the table, dependence on region has been made explicit through the use of a subscript r.

The justifications for the majority of the choices in the table have been given elsewhere15 and Model parameterisation outlines which parameter components have been considered for the additional spatial variation. When regional variation exists, parameters are identically distributed in each region.

In short, parameters that are hard to estimate from this type of model and surveillance data, such as dI and ϕ, have informative prior distributions based on historical studies and analyses of early epidemic data. 15 The prior for pg uses information from FluSurvey,44 whereas the priors on the parameters ψr and νr can be considered to be relatively uninformative, and the priors for the components of η are particularly diffuse. The bottom two rows of Table 2 are for parameters used to calculate the background consultation rates and the day-of-the-week effects. These are given zero-mean multivariate normal distributions with covariance matrices VB and VK. The covariance matrices are designed so that the background quantities Br,a(tk) and κd, d = 1, . . . ,7, are uncorrelated and identically distributed wherever possible.

Monte Carlo methods

Typically, the posterior distribution of Equation 11 is known only up to a constant of proportionality and, as a result, is seldom possible to derive analytically, particularly when working with a model as complex as that of Figure 1. However, it is possible to obtain a sample from such a distribution. The class of methods used to produce such a sample are called Monte Carlo methods, and two of the most common such methods are discussed below.

Markov chain Monte Carlo

Markov chain Monte Carlo (MCMC), a widespread and popular algorithm for Bayesian computation, is used to derive estimates of the posterior distribution of the model parameters in the spatial analysis of 2009 pandemic data. More detailed introductions to MCMC can be found elsewhere;21 however, in short, MCMC techniques are used when it is necessary to sample from a distribution when this sampling cannot be done directly. In any complex modelling scenario, the posterior distribution in Equation 11 represents such a distribution. MCMC works by generating a sequence of values known as a Markov chain. If allowed to run for a long time, this chain will eventually constitute a dependent sample from the desired distribution. Typically, one would run a small number of such chains (approximately between two and five), starting each chain at dispersed values. The chains run for a burn-in period until samples derived from each are statistically similar. At this point, it can be said that the chains have converged, and then the chains run for a sufficient length of time to derive a sample of the desired quality. This can often require many iterations of the chain (in applications of dimension comparable with the dimension of the parameter vector in our example, often 104−106 iterations may be required) and can often be a time-consuming process as a result.

To see how to generate a sample from a posterior distribution, we introduced some technical detail outlining the Metropolis–Hastings (MH) algorithm, the most commonly used MCMC method. Formally suppose that, at time tk we are trying to derive a sample from the posterior p(θ|y1:k), where y1:k denotes all of the data observed up to the present time. Suppose the parameter value at the nth iteration of the chain is θn. From a carefully chosen probability distribution known as the proposal distribution, a new state for the chain is proposed, θ*∼qk(·|θn). This value is then accepted as the next state of the chain with the probability:

If the proposed parameter value is not accepted, then the chain stays where it is and θn+1 = θn.

The performance of a MCMC algorithm crucially rests on the choice of the proposal distributionzs qk(·|·). However, regardless of this choice, the algorithm remains highly linear, with minimal scope for taking advantage of the benefits offered by parallel computing. Therefore, this algorithm will struggle to reap any of the benefits of cluster computing. More importantly, in an iteration of the algorithm, the suitability of proposed values is evaluated using knowledge of the full data likelihood (from time t0 to time tk). This will require the evaluation of the system of equations in Equation 18 in Appendix 1 and of Equations 2 and 3. When repeated 105 (orders of magnitude) or more times, this can compromise the capacity for timely, real-time inference.

This motivates a more readily parallelisable algorithm, and one that is sequential in nature, demanding only the evaluation of the likelihood of the incoming batch of data, rather than the full data history.

Sequential Monte Carlo

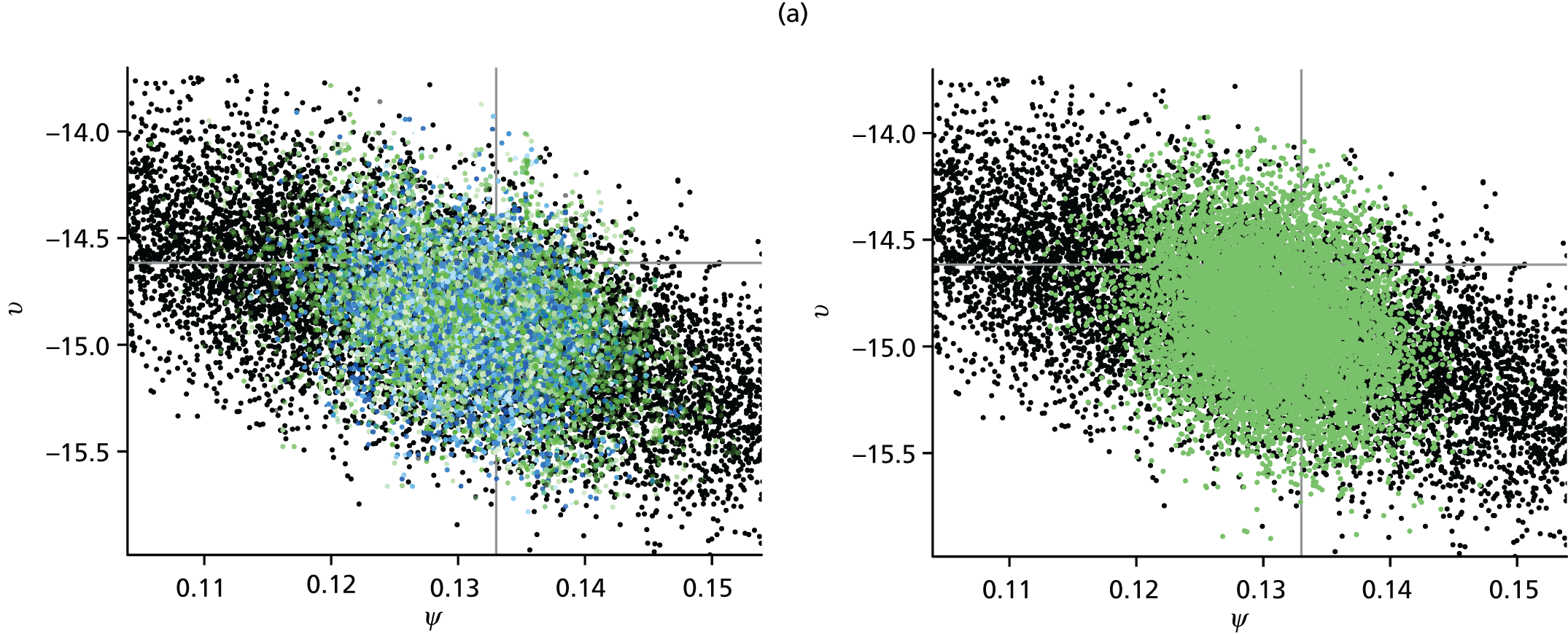

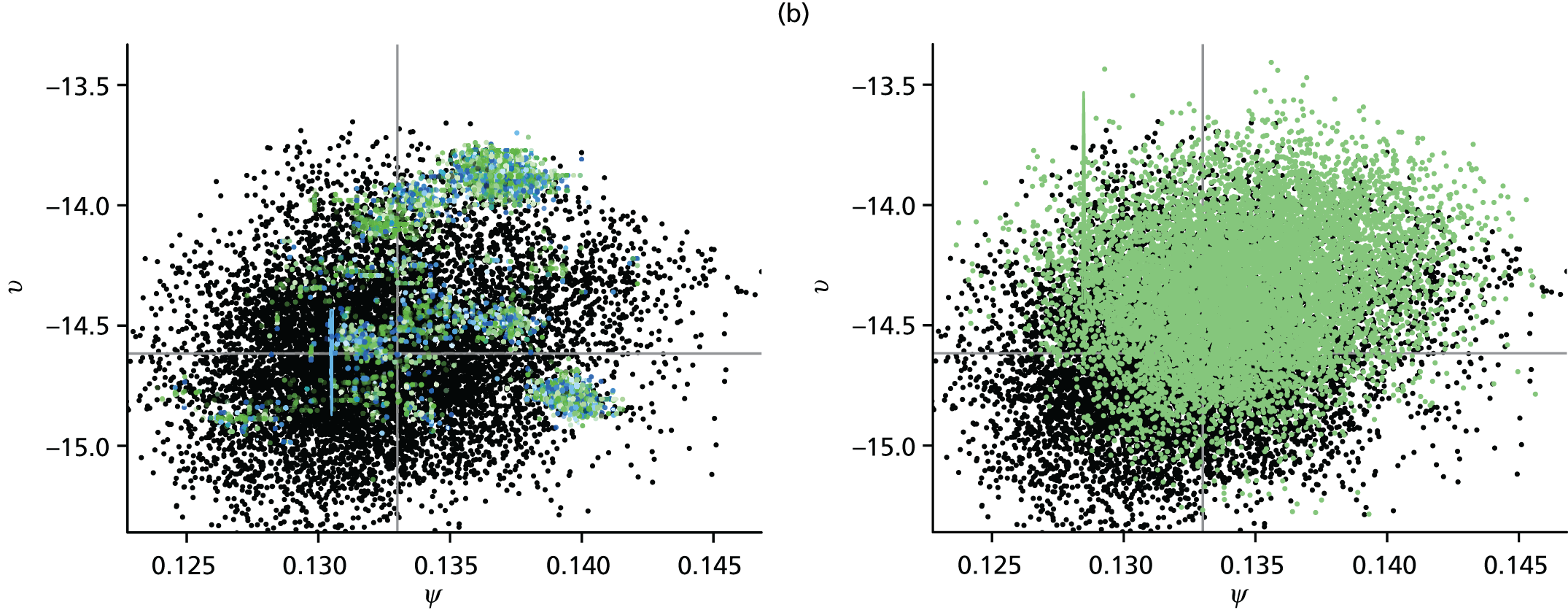

In general terms, SMC provides a prescription to sample from a target probability distribution, denoted π(.), by sequentially moving through a number of, say, L intermediate distributions π0(·), . . . , πL(·) = π(·). By setting K = L and πL(·) = p(·|y1:L), it can be seen how this algorithm may lend itself to the problem of online inference. At the kth stage of the sequence of target distributions, a weighted sample of size nk from p(·|y1:k) is obtained, denoted:

Here, the weight ωk(j) attached to a parameter value θk(j), known in this context as a particle, indicates the relative importance of the jth particle to the sample (known as the particle set). This means that if we have a function of the parameter, such as the epidemic trajectory, which we denote as ƒ(θ), then we would estimate it by its weighted mean:

The basic idea is that the SMC algorithm proceeds, on observing a (k + 1)th batch of data, by reweighting the sample according to the likelihood of the new data. This reweighted sample is theoretically representative of the next target distribution πk + 1(·)=p(·|y1:(k + 1)). Therefore, we can base inferences at time tk + 1 on the previous sample of parameter values and the new set of weights, which require only the likelihood of the new data to be calculated. This represents a significantly reduced computational burden, and, as the reweighting for each of the particles can be calculated in parallel, it is also a highly parallelisable computation.

Unfortunately, such a process swiftly suffers from a phenomenon called particle degeneracy. This happens gradually over time as the particle weights scale in such a way that only a very small handful of particles have non-negligible weight. When this degeneracy occurs, although the weighted sample is of size nk, the low weight attached to the majority effectively removes them from the sample, and estimation and projections are made based on only a handful of particles and are, therefore, subject to significant error.

To prevent this degeneracy, the sample requires some rejuvenation. 45 The first step of this rejuvenation involves the removal of all those particles of too low weight. This is done through a process of resampling, in which a new sample is drawn from the old set of particles according to their weights. The consequence of doing this is that the sample is composed of multiple copies of a much smaller number of identical particles. It is therefore necessary to jitter this sample somehow. To do this, short MCMC implementations are run for each particle, using the current value of the particle as the starting state for the chain.

The sequential Monte Carlo algorithm

A brief overview of the SMC algorithm is shown below, based on the resample-move algorithm. 46

-

Set k = 0. At time t0, draw a sample {θ0(1),. . . ,θ0(n0)} from the prior distribution, π0(θ), set the weights ω0(j)=1/n0 for all j={1,. . . n0}.

-

Set k = k+1. Observe a new batch of data yk. The particles are reweighted according to the likelihood of the incoming data. ω˜k(j) ∝ ωk−1(j)L(yk;θk−1(j)).

-

Has the particle set become degenerate? If not, set θk(j)=θk−1(j),ωk(j)=ω˜k(j),nk=nk−1 and return to step 2.

-

Resample. Choose nk and sample {θ˜k(1), . . . ,θ˜k(nk)}from the set of particles {θk−1(1), . . . ,θk−1(nk−1)} with probabilities proportional to {ω˜k(1),. . . ,ω˜k(nk−1)}. Re-set ωk(j)=1/nk.

-

Move. For all j, move θ˜k(j) to θk(j) via a short MCMC chain. If k < K return to step 2, otherwise end.

Despite the presence of the short MCMC runs in step 5, this still presents a significant improvement over the plain original MCMC algorithm because:

-

The computationally intensive steps of the algorithm (steps 2 and 5) both allow for calculations on each particle to be made in parallel.

-

At most times the particle set will not be degenerate, and hence only the likelihood of the new data needs to be calculated to reweight the sample.

-

Each of the many parallel MCMC chains can be assumed to start from a point that is sampled from the target distribution. There is, therefore, no need to allow the chain time to reach convergence, and only very low numbers of iterations will be required.

-

Before the MCMC phase starts, we already have an estimated sample from the target distribution by using the weighted sample achieved in step 2. This estimate can be used to construct good proposal distributions for the MCMC, improving its efficiency.

A number of algorithmic tweaks have been required to make the algorithm robust to the vagaries of epidemic data and characteristics of the real-time model. The technical detail involved has been presented elsewhere,47 and only a brief overview is given here.

For how long should the Markov chain Monte Carlo run?

The MCMC chains should be run for long enough to have a rich sample of parameter values, but for no longer than is strictly necessary to maintain real-time efficiency. At the start of the MCMC phase, there may be many particles with the same parameter value, which can define a cluster. The MCMC should be run for long enough that particles from different clusters have fully intermingled and the original clusters are no longer identifiable.

To formally measure the dispersal of the particles, an estimate of the intraclass correlation coefficient (ICC) is used. 48 This measures the clustering in the value of a summary quantity calculated for each particle. The chosen summary was the projected epidemic ‘attack rate’ (the total cumulative incidence measured as a proportion of the total population). 47 At the start of the MCMC phase, the ICC = 1. As the iterations progress, this value will gradually fall and the MCMC iterations will stop once the ICC falls below a pre-defined limit. It has been shown elsewhere that values in the range of 0.1–0.2 should be adequate. 47

Choosing good Markov chain Monte Carlo proposals

Equation 13 gives the acceptance probability for a proposed value for the next state of the chain. Within SMC, the aim is to rapidly diversify the set of particles, without running the chain for too long. To do this, we want to propose values for θ* that are not too close to the current values, and that are likely to be accepted. By setting qk(θ|θk(n))=pk(θ|y1:k), the acceptance ratio would always be 1 (so the proposal will definitely be accepted). This has the added advantage that the proposal is independent of the current state of the chain, so the set of particles would be immediately intermingled.

Unfortunately, we cannot sample directly from pk(θ|y1:k) so easily. However, after step 2 of the algorithm, we have a weighted sample that should approximate a sample from this distribution. Therefore, by choosing qk(·|·) to be a multivariate normal distribution centred on the weighted mean and weighted covariance of the particle set calculated at the end of step 2, we have a distribution that approximates the target density and should ensure reasonable rates of acceptance while rapidly replenishing the particle set. This works well, provided that the particle set at the end of step 2 has not become impoverished to a degree that it cannot provide a reasonable approximation to the target distribution. 47

When to rejuvenate? When are the particles degenerate?

The standard approach is to rejuvenate the particle set when the effective sample size (ESS) falls below a specific level. 49 The ESS is a measure of the number of independent, equally weighted, observations from the target distribution that are as informative as the weighted particle set. At the end of step 2 of the algorithm, the ESS is calculated as:

Values of the ESS that are close to nk – 1 indicate a sample that contains plenty of information about the posterior distribution. A typical level above which the ESS is deemed to be acceptable (and, thus, there is no need to rejuvenate the sample) is ESS ≥ nk – 1/2.

In some of the examples, such as those considered in section Comparison of the real-time performance of the Monte Carlo methods (see Chapter 4), there are times when the addition of the next batch of data in the sequence can lead to a sudden drop in the ESS to very low values. In such cases the MCMC algorithms have too much work to do to adjust the sample and timely inference would not be possible. Therefore, to limit the depletion in the ESS, we introduce rejuvenation steps at intermediate times, between tk and tk + 1, by adding in the data fractionally. If we add a fraction of the data, α, such that 0 < α ≤ 1, then a value α0 can be identified such that the ESS falls to only approximately nk/2. The next batch of data to arrive is either the remaining portion of the time tk + 1 data, or a further portion of it, sufficient to once again bring the ESS down to the threshold value. This is the ‘real-time’ algorithm presented in the technical publication reporting this work. 47

Chapter 4 Results

Spatial modelling

This section presents the results obtained when applying the PR and MR models to reconstruct the 2009 A/H1N1pdm outbreak in England and, in particular, to characterise the impact of inter-region transmission. As discussed in Chapter 3, The meta-region model, there are a number of competing hypotheses regarding the precise formulation of the MR model, and initially we shall present results that assumed the ‘best fitting’ MR model, before discussing the exact composition of this model in Goodness of fit.

Reconstructing the epidemic

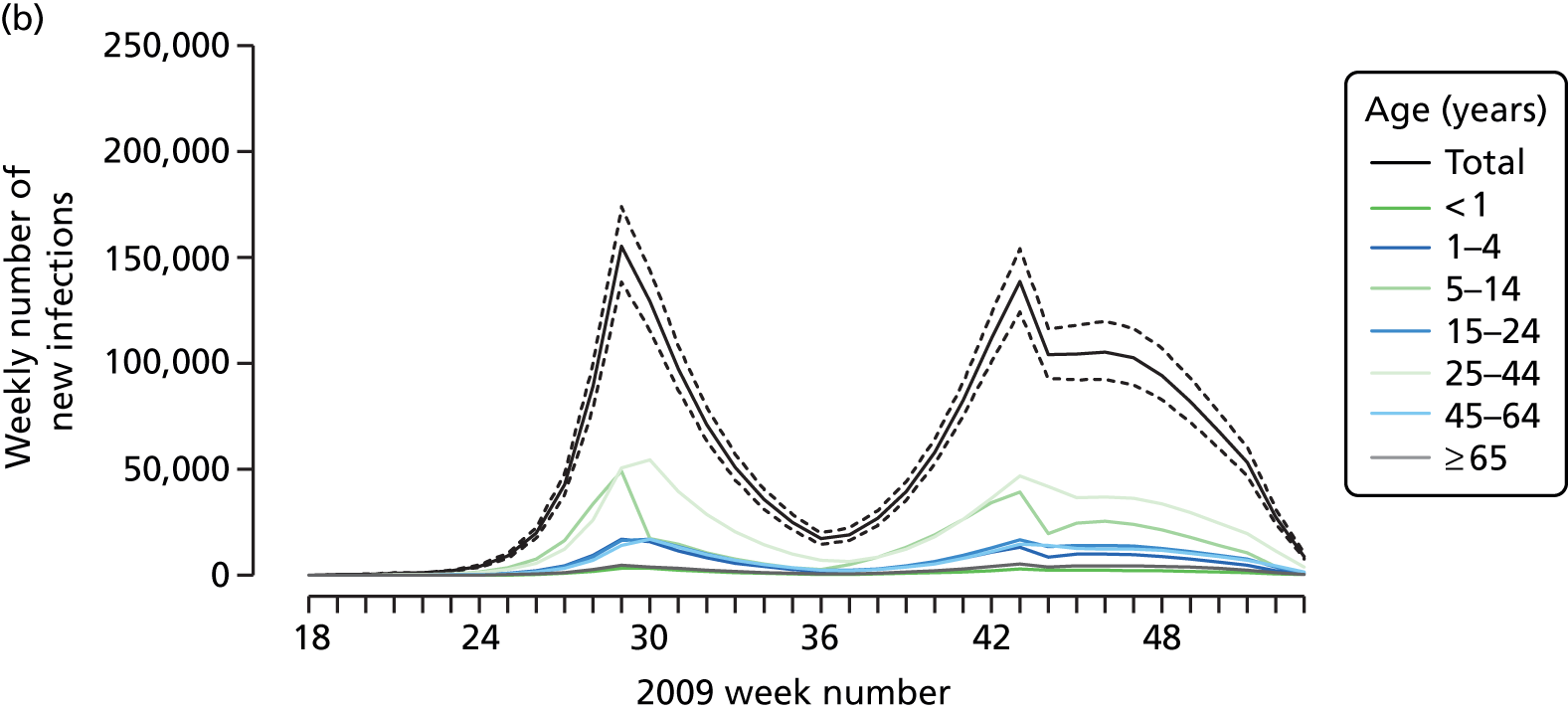

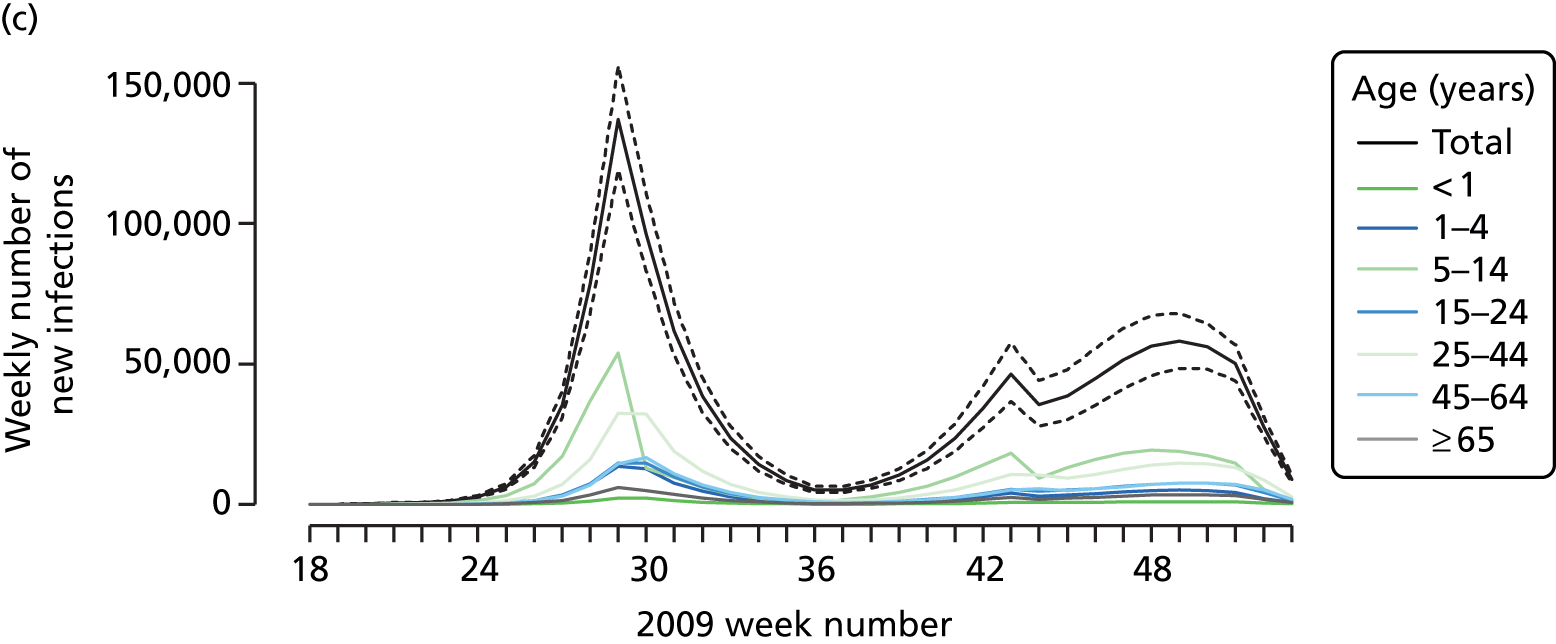

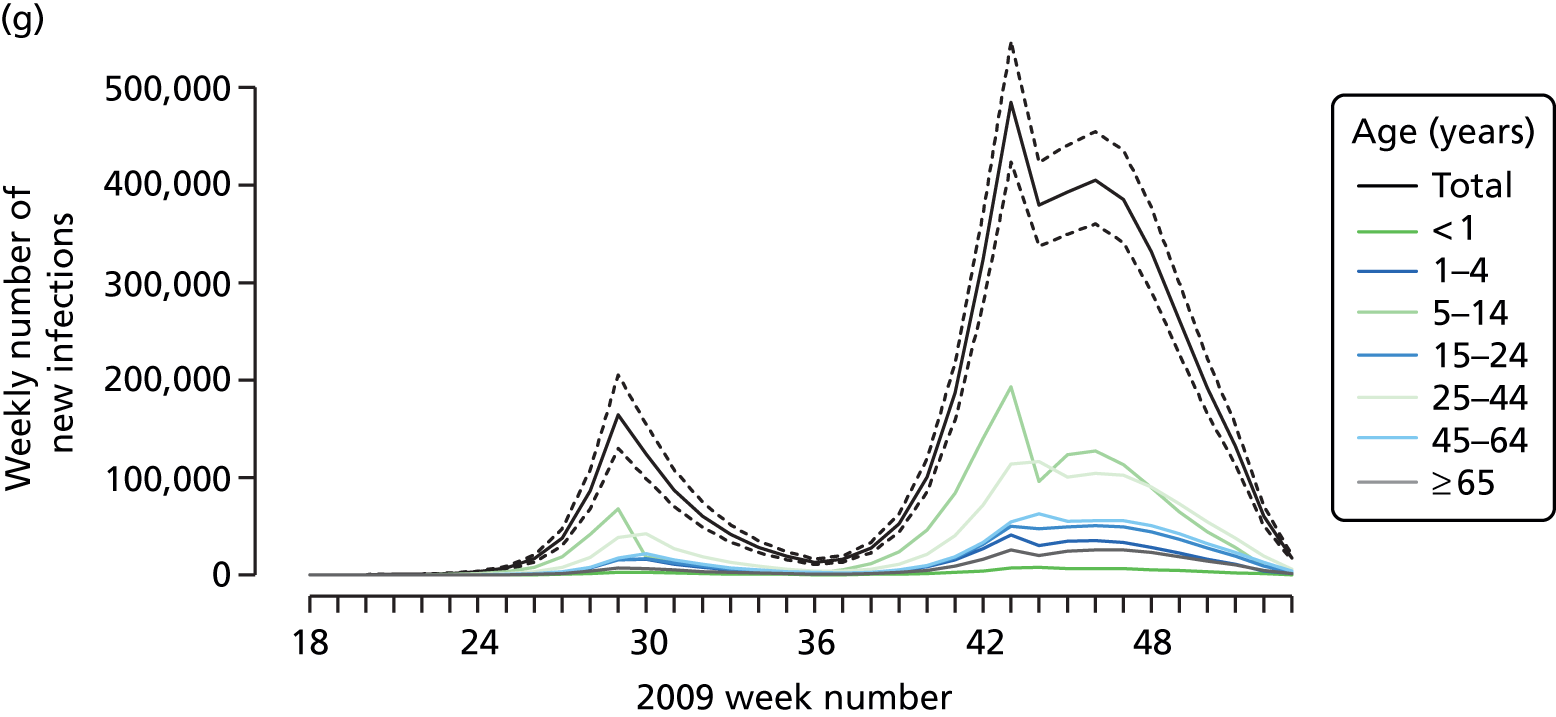

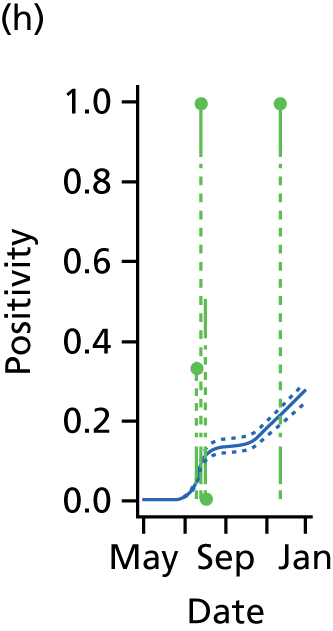

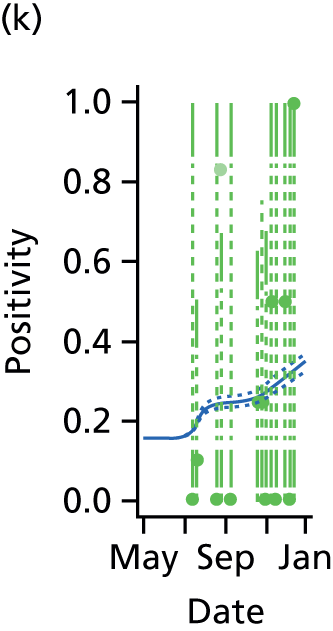

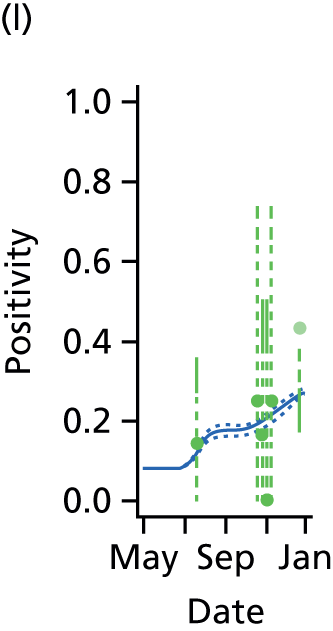

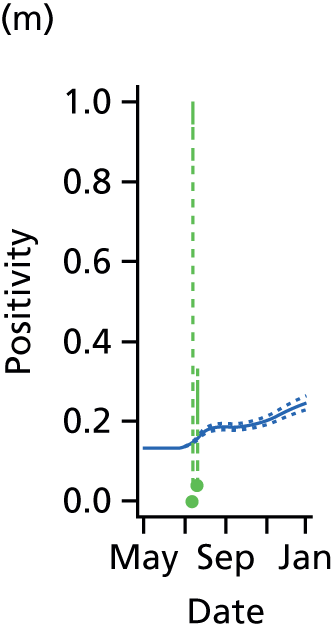

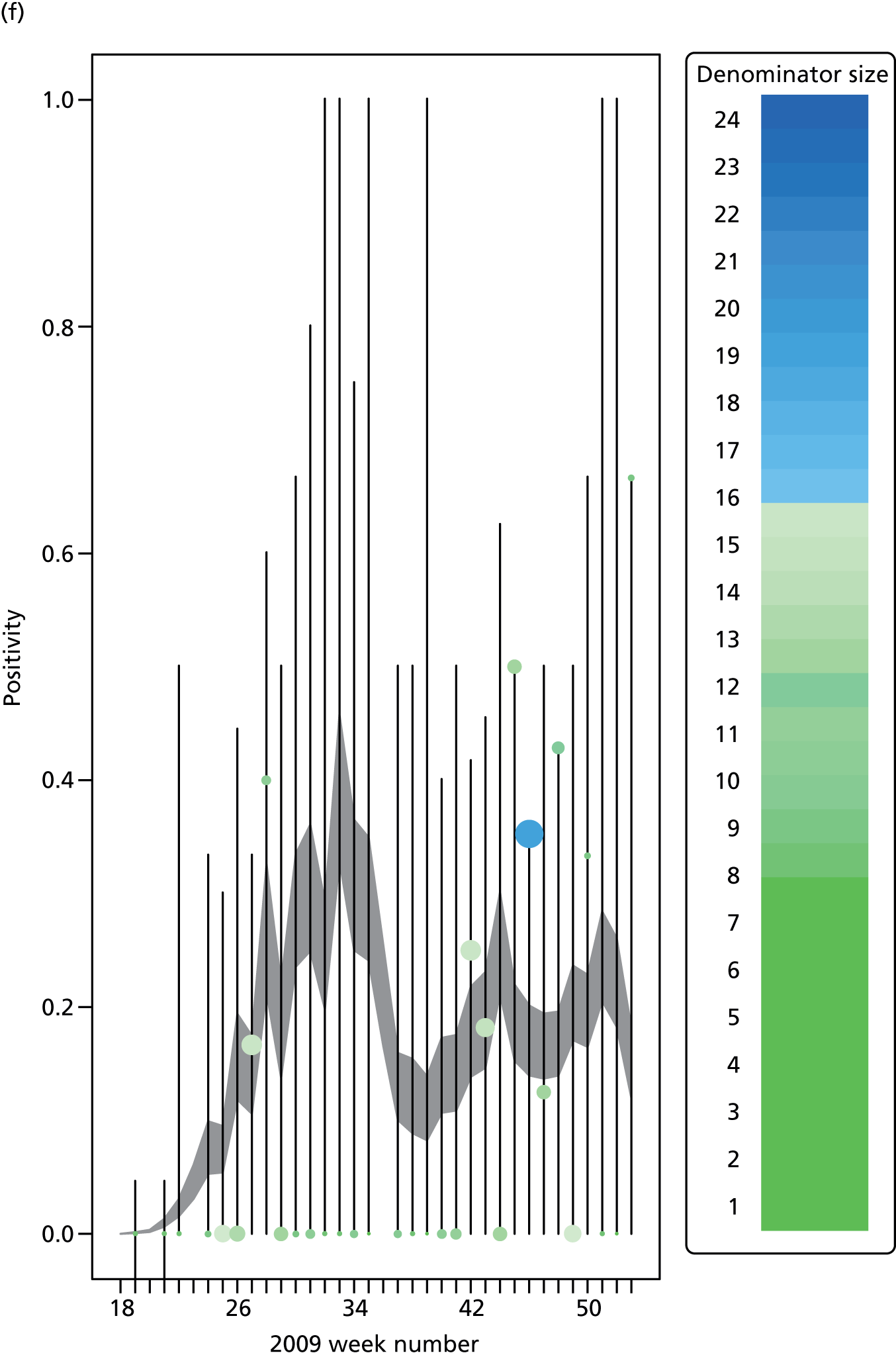

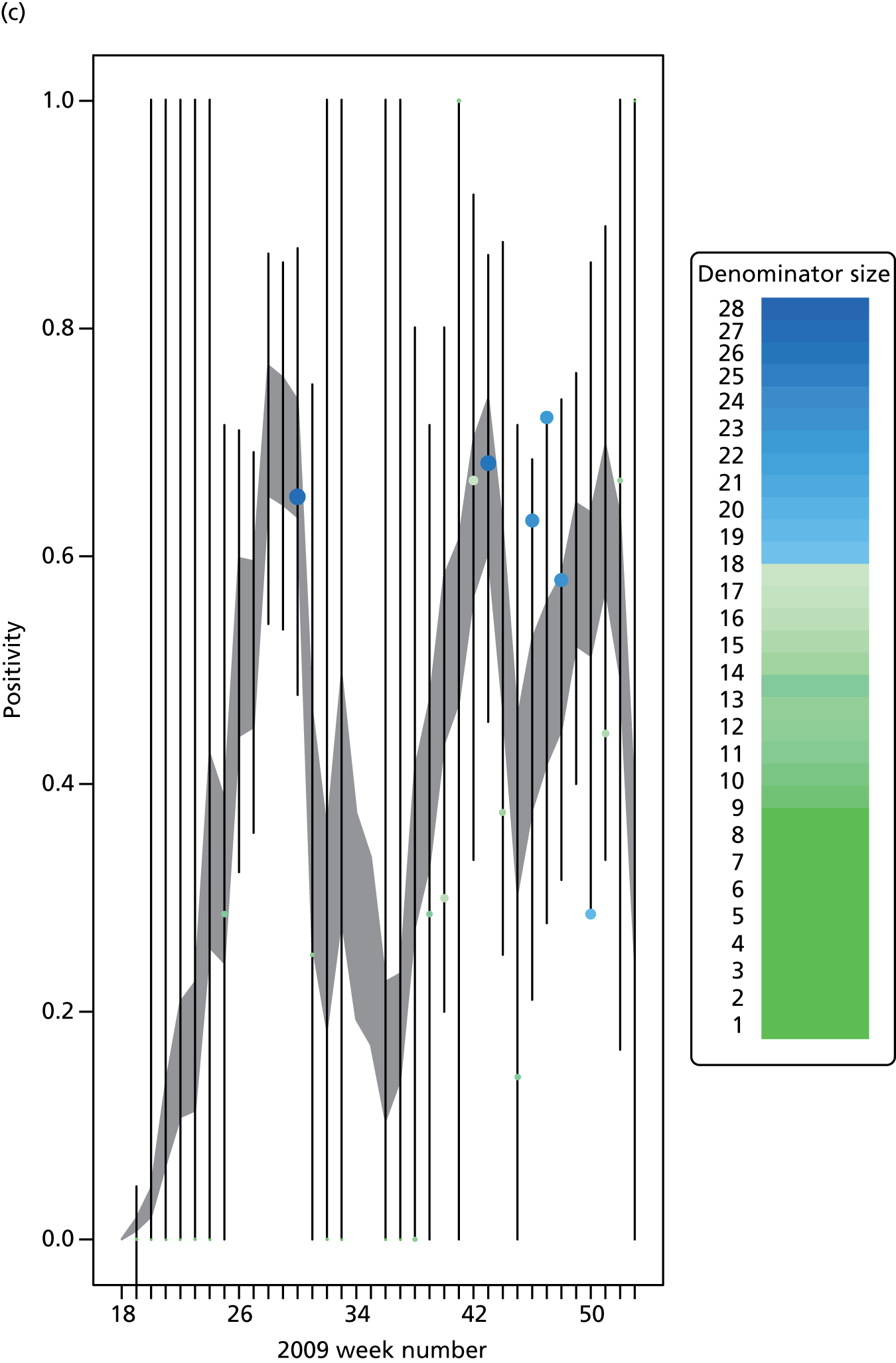

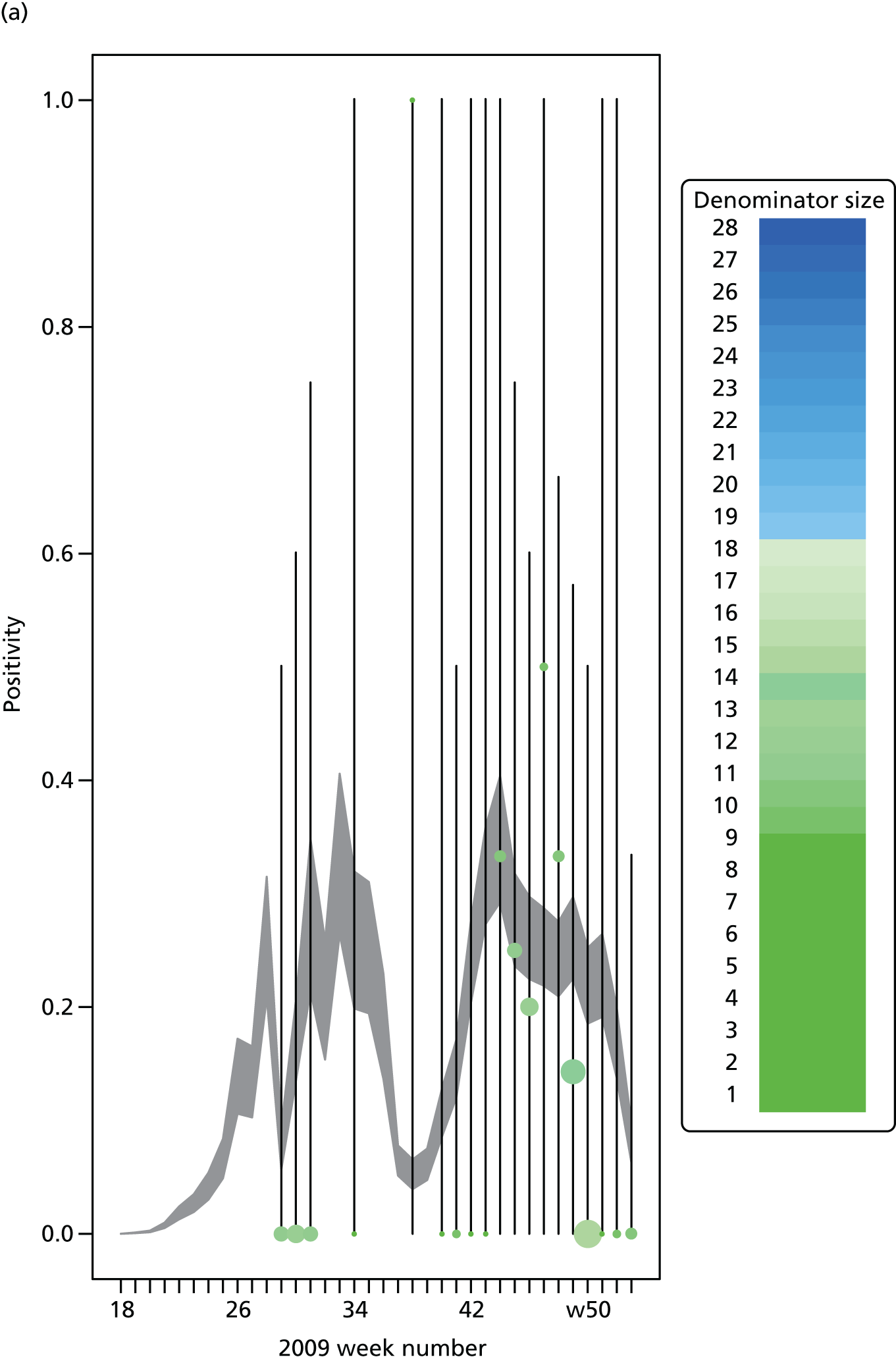

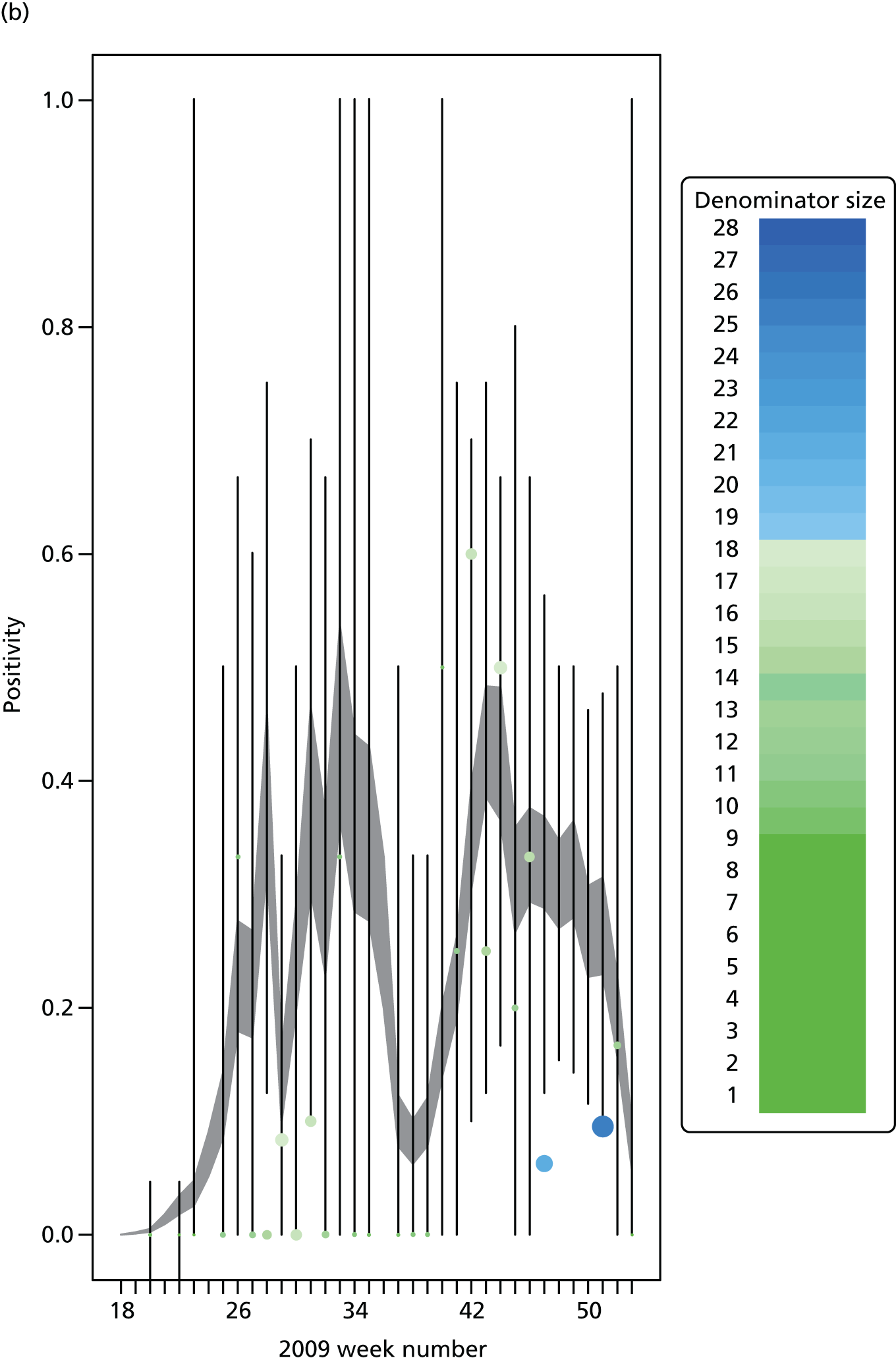

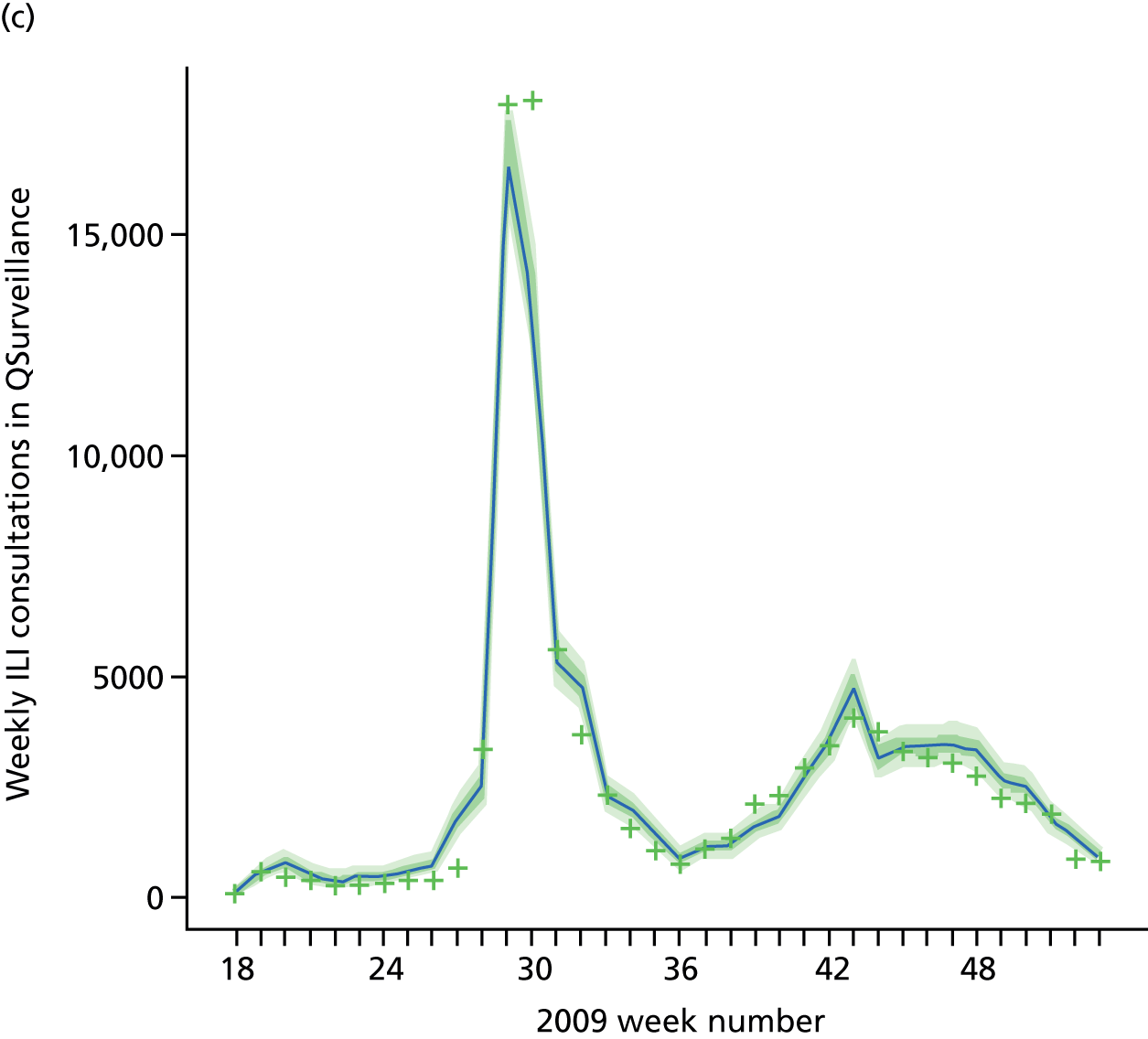

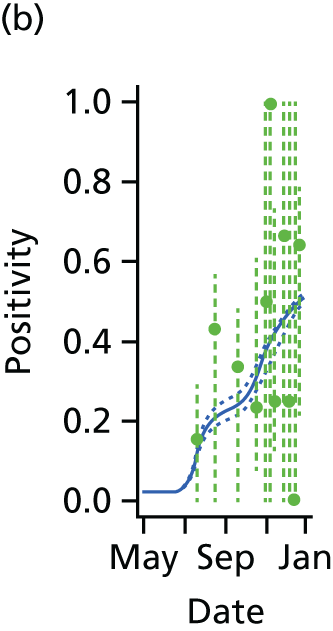

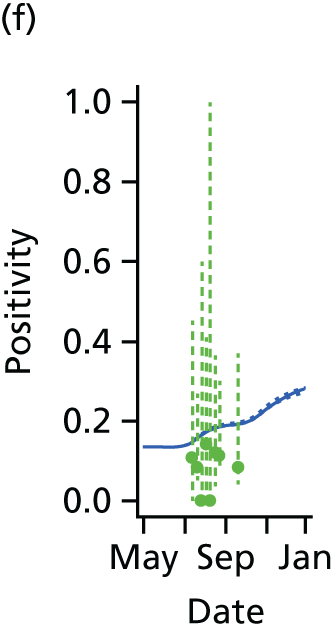

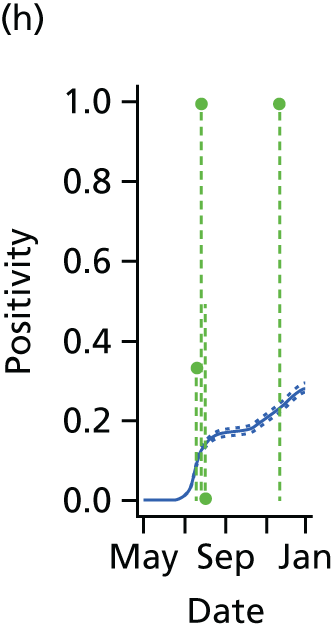

The PR and MR models are both sufficiently flexible to be able to reproduce the two epidemic waves of the 2009 pandemic. The estimated incidence curves are reproduced in Figure 3. The estimated epidemic in the North is consistent across both models. London and the West Midlands are characterised by bigger first waves of infection (and subsequently smaller second waves) under the PR model, the opposite being true for the South. This is apparent from the peaks in Figure 3 and the given population-level attack rates in Table 3 (age-specific attack rates can be found in Appendix 3). Peak timings in both waves of infection are the same under both modelling approaches, and coincide with the start of school holidays, with the exception of the second wave in the West Midlands. Here, a sufficient supply of susceptible individuals remains in the population after the holiday to allow transmission to increase once more (albeit briefly). This may well, however, be a phenomenon of different school term dates in this region to those that predominate elsewhere in the country.

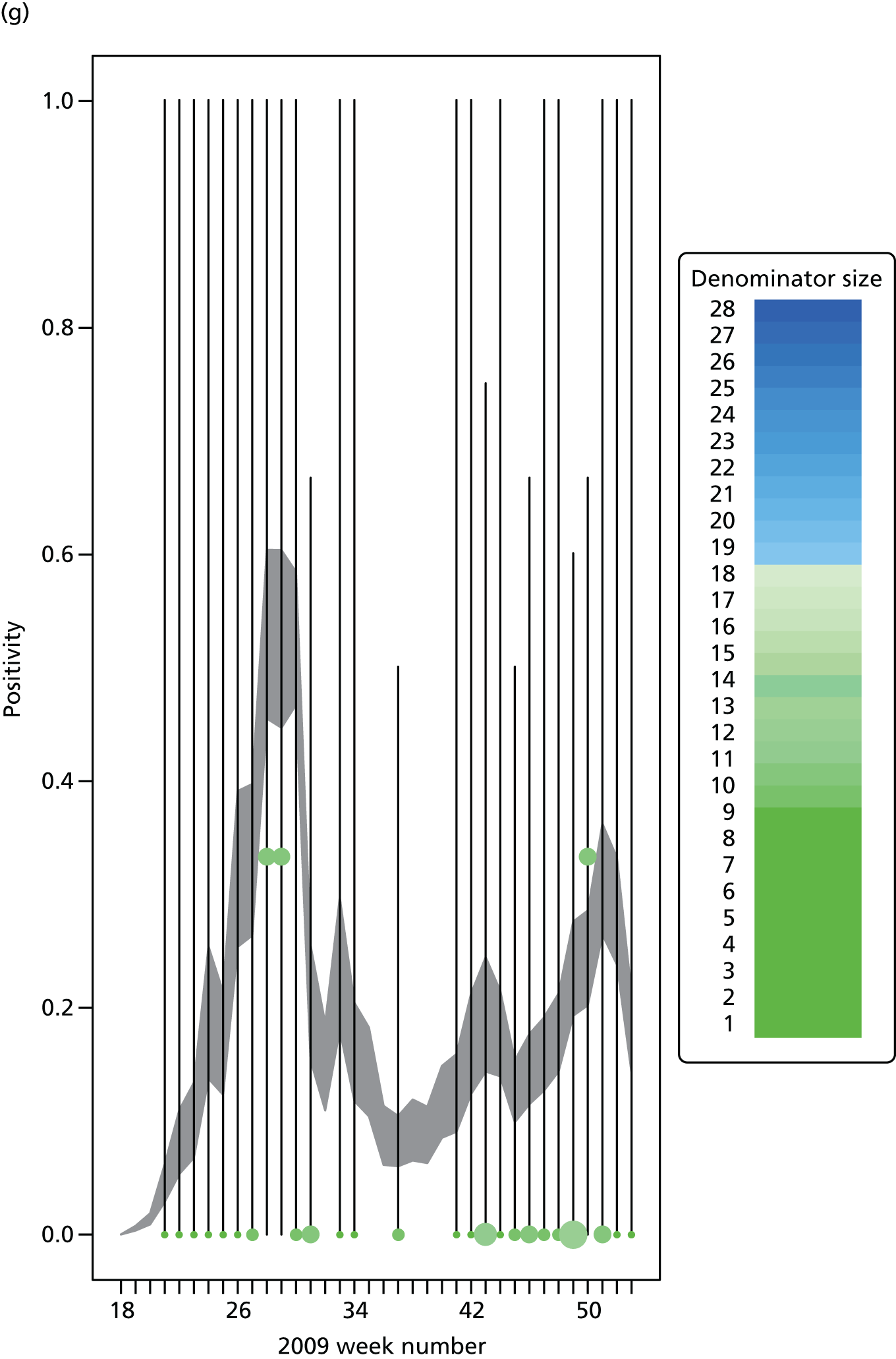

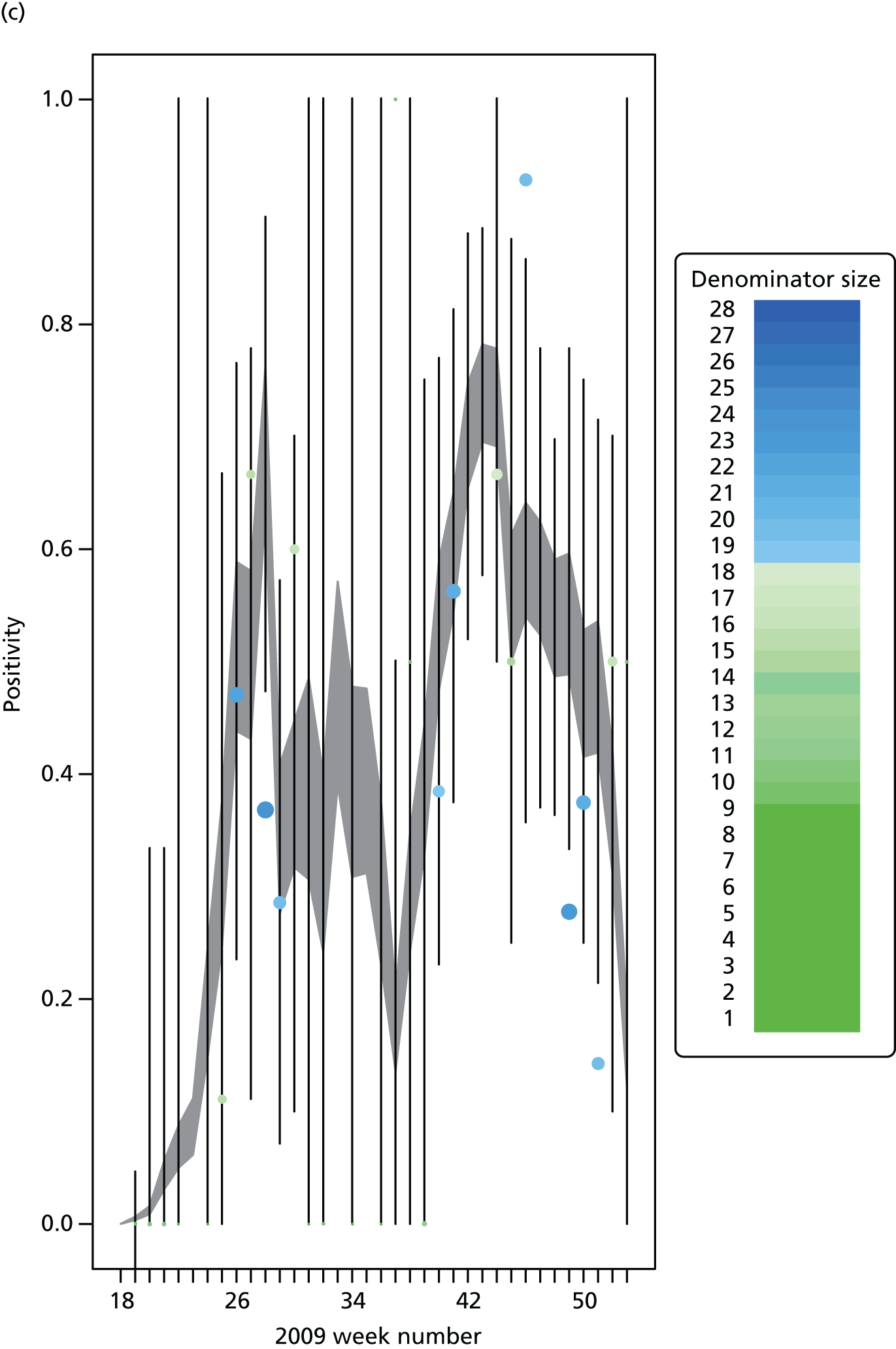

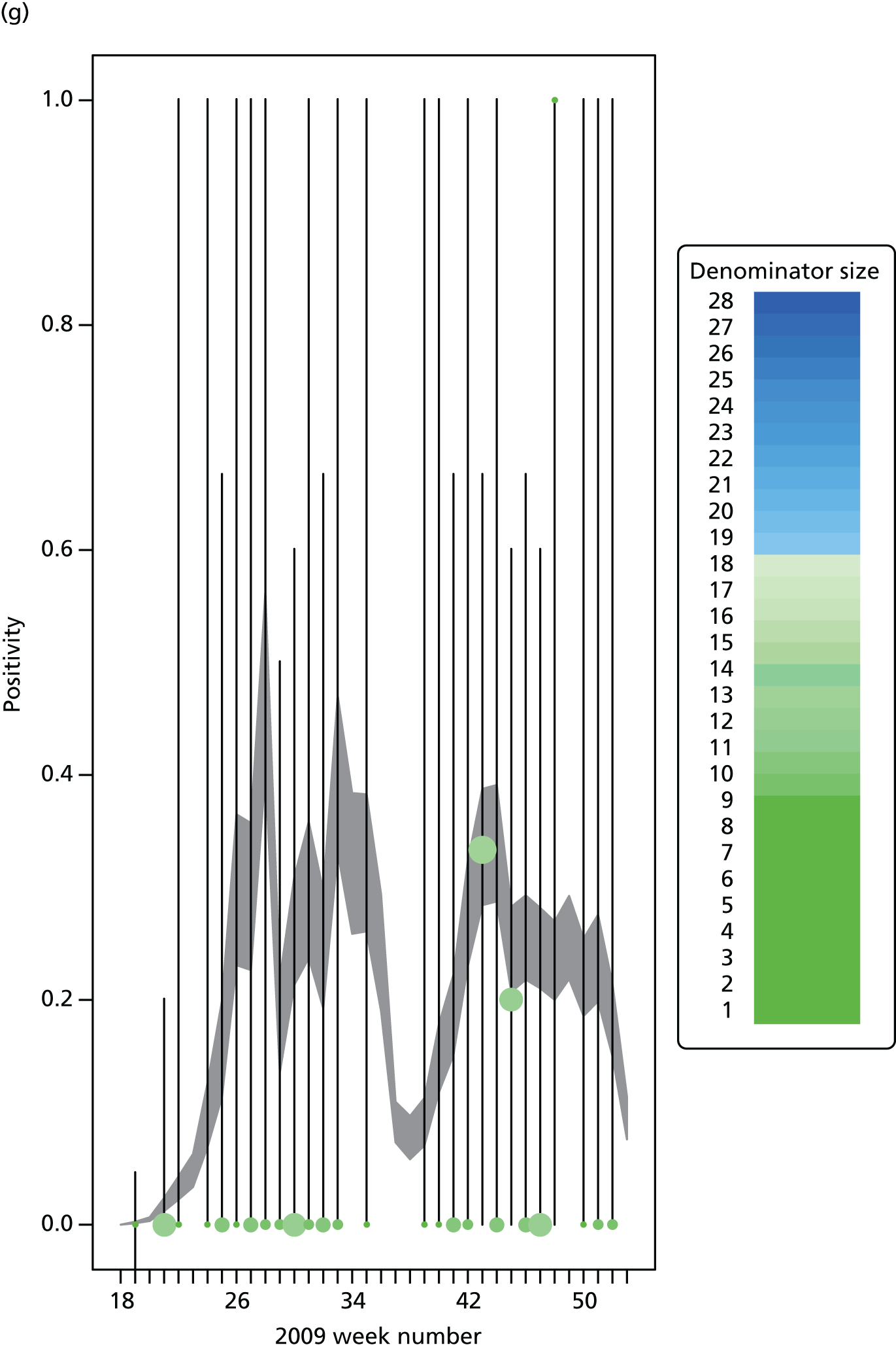

FIGURE 3.

Estimated weekly number of new A/H1N1pdm infections for (a) London under the PR model; (b) London under the MR model; (c) the West Midlands under the PR model; (d) the West Midlands under the MR model; (e) the North under the PR model; (f) the North under the MR model; (g) the South under the PR model; and (h) the South under the MR model. Solid black lines represent incidence aggregated over age groups with associated 95% (CrIs) (dashed lines). Reproduced from Birrell et al. 28 © 2016, Macmillan Publishers Limited. This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

| London | West Midlands | North | South | |

|---|---|---|---|---|

| Parallel-region model | ||||

| May–August | ||||

| Infections | 988 (958 to 1124) | 525 (456 to 600) | 1058 (839 to 1316) | 692 (554 to 854) |

| Cases | 152 (123 to 184) | 80 (65 to 98) | 161 (121 to 215) | 105 (80 to 139) |

| Attack rate (%) | 13.2 (11.4 to 14.9) | 9.8 (8.5 to 11.2) | 5.6 (4.4 to 6.9) | 3.6 (2.9 to 4.5) |

| September–December | ||||

| Infections | 764 (641 to 901) | 571 (483 to 656) | 3671 (3379 to 3987) | 3750 (3508 to 4021) |

| Cases | 117 (91 to 153) | 87 (64 to 115) | 563 (462 to 689) | 576 (471 to 697) |

| Attack rate (%) | 10.1 (8.5 to 11.9) | 10.6 (9.0 to 12.2) | 19.3 (17.8 to 21.0) | 19.6 (18.3 to 21.0) |

| MR model | ||||

| May–August | ||||

| Infections | 751 (674 to 832) | 669 (621 to 718) | 886 (792 to 986) | 1150 (1036 to 1270) |

| Cases | 85 (74 to 98) | 76 (66 to 88) | 100 (87 to 117) | 130 (113 to 151) |

| Attack rate (%) | 9.9 (8.9 to 11.0) | 12.4 (11.5 to 13.3) | 4.7 (4.2 to 5.2) | 6.0 (5.4 to 6.6) |

| September–December | ||||

| Infections | 1228 (1129 to 1331) | 477 (405 to 559) | 3923 (3721 to 4129) | 3450 (3256 to 3658) |

| Cases | 140 (114 to 172) | 54 (42 to 69) | 447 (377 to 532) | 393 (329 to 472) |

| Attack rate (%) | 16.2 (14.9 to 17.6) | 8.9 (7.5 to 10.4) | 20.6 (19.6 to 21.7) | 18.0 (17.0 to 19.1) |

Estimated epidemic characteristics

Table 4 presents estimates of some key transmission parameters under both models. There is a pleasing consistency across the modelling approaches in the parameter estimates. For example, estimates for the (initial) reproductive number R0init, derived from the exponential growth rates, are centred on 1.8, with the region-specific estimates of the PR model being tightly distributed around this value. This is in broad agreement with other estimates for R0 obtained from a review of 2009 pandemic transmission parameters,50 and a slight increase on what had been estimated for the single region version of the model. 15 In a similar (single-region) modelling study, much higher estimates for the R0 associated with the A/H1N1pdm virus have been derived, although this was over the course of a later third wave of pandemic infection occurring in the winter season 2010–11. 51 Similarly, the estimates for the other transmission parameters are robust to the model specification [note the overlapping nature of the credible intervals (CrIs) in Table 4]. In particular, parameter m1, which gives the down-weighting applied to all contacts involving adults, is estimated consistently to be in the range 0.57–0.62. Estimates for m3 indicate that the summer school holiday period reduced the rate of effective infectious contacts among the 5–14 years age group to below 3% of the school term-time figure. However, when averaged over all age groups, this represents a drop in R0init of between 43% (in London) and 50% (in the South). To compare, a Canadian study recorded a 28% drop in transmissibility during a similar school holiday period. 52 The reduction in the effective contact rates in the other school holidays, as measured by parameters m4 and m5 were neither as well estimated (note the width of the CrI attached to the estimates for parameter m4) nor did they indicate a similar reduction in the contact rates, the shorter duration of these holidays evidently causing a milder disruption to routine contact patterns. Estimates for the proportion symptomatic, ϕ, do appear to be rather low, although consistent across approaches and with an estimate of 11% based on a closely observed outbreak. 53

| Parameter | PR model, posterior median (95% CrI) | MR model, posterior median (95% CrI) |

|---|---|---|

| R 0 | – | 1.810 (1.770 to 1.840) |

| d l | 3.470 (3.350 to 3.590) | 3.460 (3.340 to 3.580) |

| ɸ | 0.154 (0.126 to 0.186) | 0.114 (0.098 to 0.134) |

| m 1 | 0.569 (0.536 to 0.605) | 0.618 (0.584 to 0.651) |

| m 2 | 0.901 (0.610 to 0.996) | 0.666 (0.265 to 0.740) |

| m 3 | 0.007 (0.000 to 0.032) | 0.006 (0.000 to 0.032) |

| m 4 | 0.167 (0.008 to 0.669) | 0.214 (0.004 to 0.909) |

| m 5 | 0.446 (0.341 to 0.557) | 0.411 (0.291 to 0.528) |

Comparison between meta-region and parallel-region modelling

In Table 5, the posterior mean deviance is used to discriminate between different formulations of the MR model (to be discussed further in Goodness of fit), comparing each formulation relative to the comparable PR model. In fitting these models, MCMC provides a sample of parameter values {θ(1), . . . , θ(n)}, from which the posterior mean deviance, Dm=−(2/n)∑j=1nlog(Lm(y1:K;θ(j))) can be derived, where Lm(·;·) indicates the likelihood under a specific model, m. Note that lower values of Dm are preferred. The discrepancy between the PR model and the best-performing MR model is 57.89. Owing to the regional variation permitted by the PR model in the estimation of R0init and I0, the PR model has six more parameters than the MR model. This improvement in deviance for such a small number of parameters suggests that the PR represents a significantly better fit to the data. This compounds the practical benefit of the PR model being markedly faster to implement; it is more suited to parallel computation and the calculation of R0* in Equation 20 of Appendix 1, requires the calculation of eigenvalues of (7 × 7) matrices rather than the (28 × 28) or (44 × 44) matrices required by the MR model.

| α | Density type | Seed type | Commuting | ΔD(θ) (SD) |

|---|---|---|---|---|

| 0.0 | By stratum | Nextgen | Random | 3890 (34.23) |

| 0.0 | By stratum | Nextgen | Fixed | 4376 (33.83) |

| 0.0 | By stratum | Empirical | Random | 4548 (31.53) |

| 0.0 | By stratum | Empirical | Fixed | 4949 (32.21) |

| 0.5 | By stratum | Nextgen | Random | 3025 (34.21) |

| 0.5 | By stratum | Nextgen | Fixed | 2241 (32.13) |

| 0.5 | By stratum | Empirical | Random | 3191 (36.65) |

| 0.5 | By stratum | Empirical | Fixed | 2269 (31.90) |

| 1.0 | By stratum | Nextgen | Random | 2770 (30.23) |

| 1.0 | By stratum | Nextgen | Fixed | 2466 (30.05) |

| 1.0 | By stratum | Empirical | Random | 2578 (29.43) |

| 1.0 | By stratum | Empirical | Fixed | 2359 (29.39) |

| 1.0 | By region | Nextgen | Random | 449.2 (27.60) |

| 1.0 | By region | Nextgen | Fixed | 437.9 (27.21) |

| 1.0 | By region | Empirical | Random | 170.1 (28.47) |

| 1.0 | By region | Empirical | Fixed | 166.4 (29.76) |

| 1.0 | By region | Hybrid | Random | 57.89 (27.20) |

| PR model | 0 | |||

Finding an optimal parameterisation

Inferences drawn from either the PR or the MR modelling approach are found to be sensitive to the precise form of the regression for the background rates of GP consultation. Because of this, it was important to implement submodels of Equations 9 and 10 in order to most appropriately characterise the changes in consultation behaviour over the pandemic period. Again, the posterior mean deviance was used to identify a preferred model. The real-time PR model was repeatedly implemented with the higher-order interactions systematically removed from Equations 9 and 10 in the hope of finding simplified regression models without incurring any significant loss of fit to the data. Additionally, some age groups and regions were paired together to cover gaps where data were too sparse to warrant the additional age/region effects. Under the PR model, the seemingly optimal choice for the regression model, and the one that has been used in the generation of all the results presented in this section, is:

with the rates in the North found to be equal to those in the South, Br,a(tk) = Bs,a(tk). This is unsurprising given the sparsity of virological data in the North to accurately estimate the non-pandemic consultation rates. Also, the rates in the two youngest age groups have been set to be equal (note the sum over the a index omits a = 1), Br,1(tk) = Br,2(tk), which, again, is not an unreasonable finding given that only the virological swabbing and not the QSurveillance GP data sets provide data with sufficient granularity to distinguish between the first two age groups (< 1 year and 1–4 years).

When the same model refinement process was undertaken using the MR model, the same regression equations were again preferred.

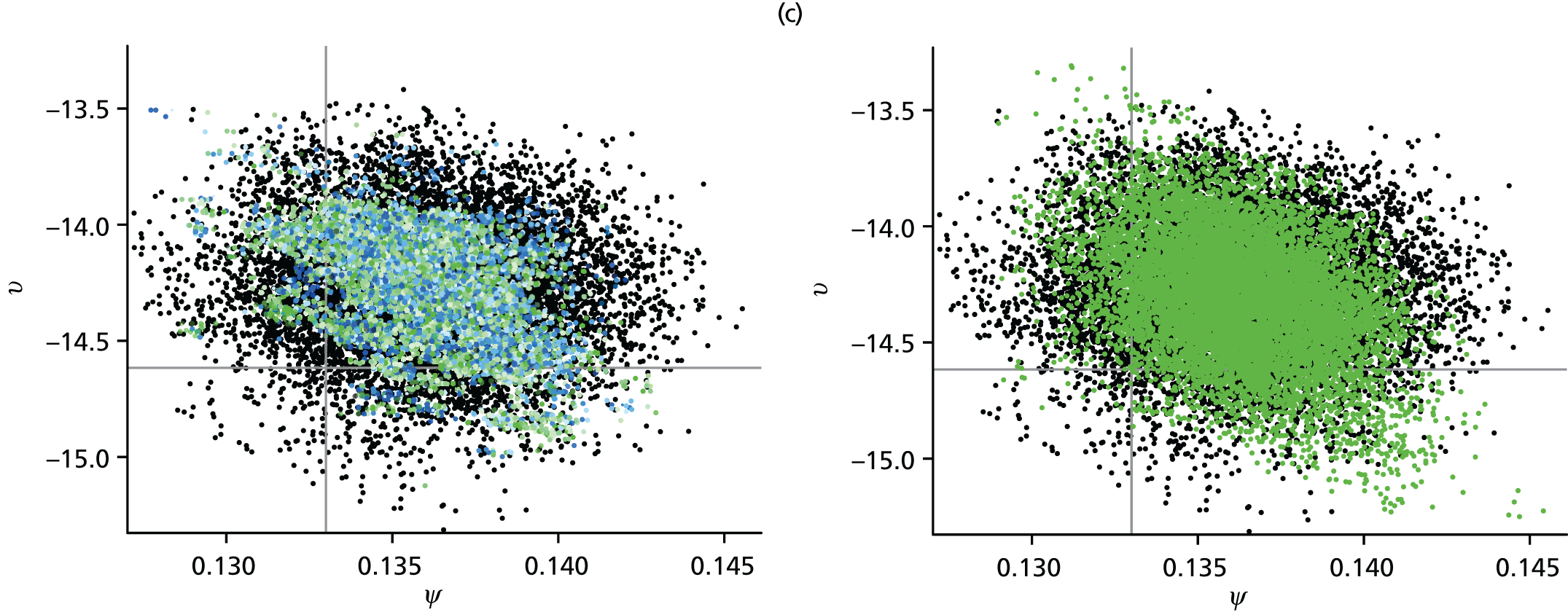

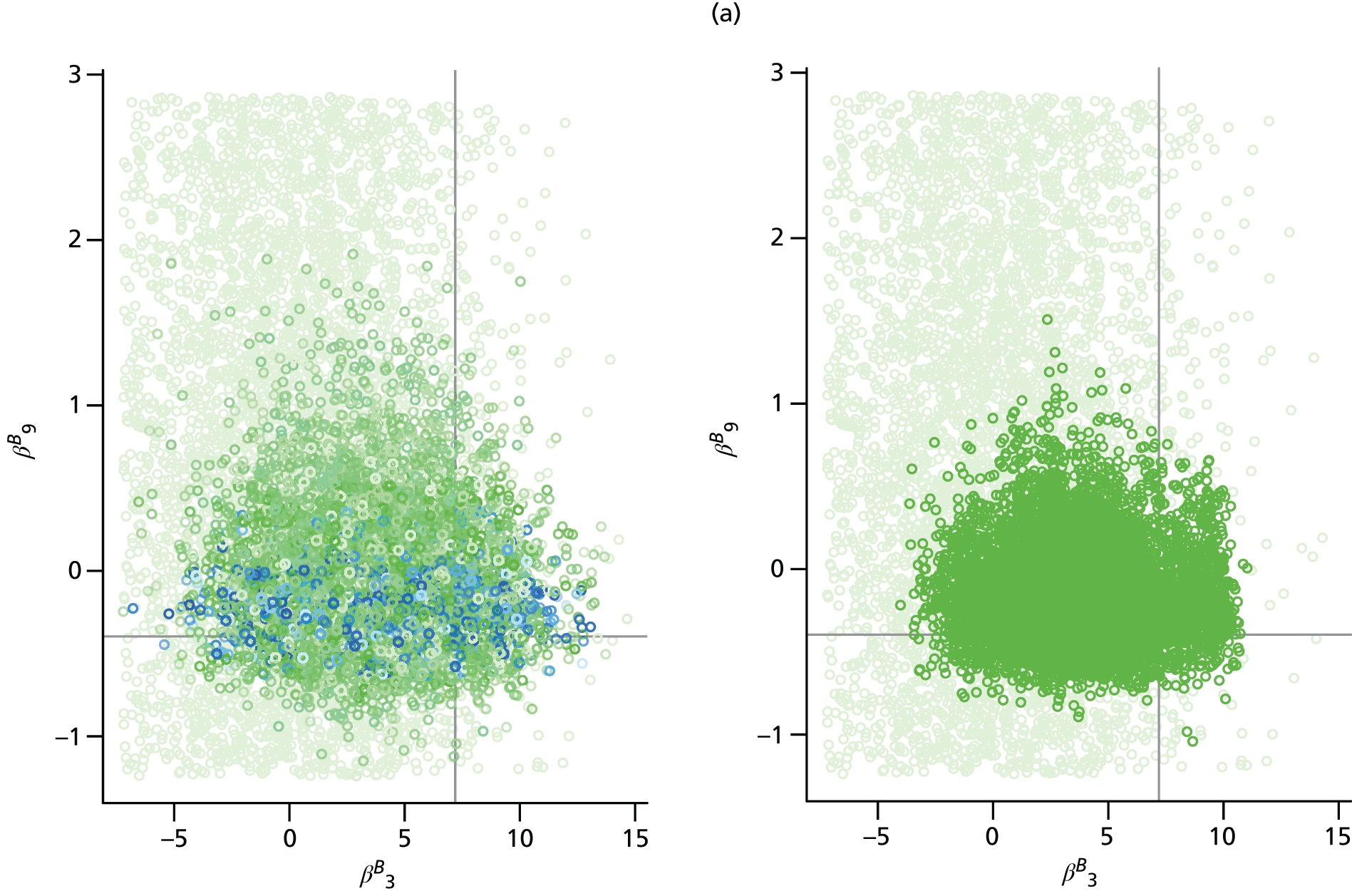

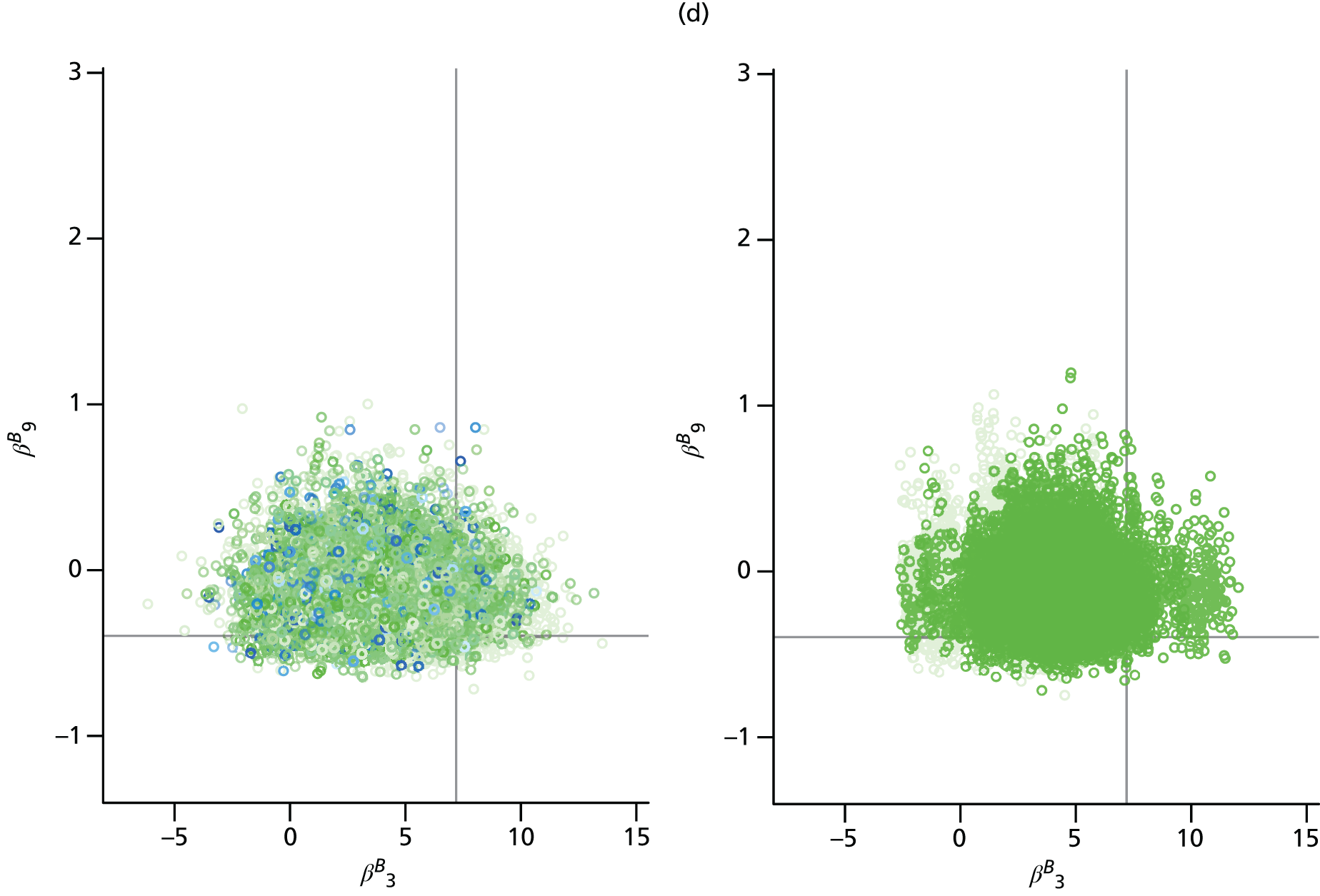

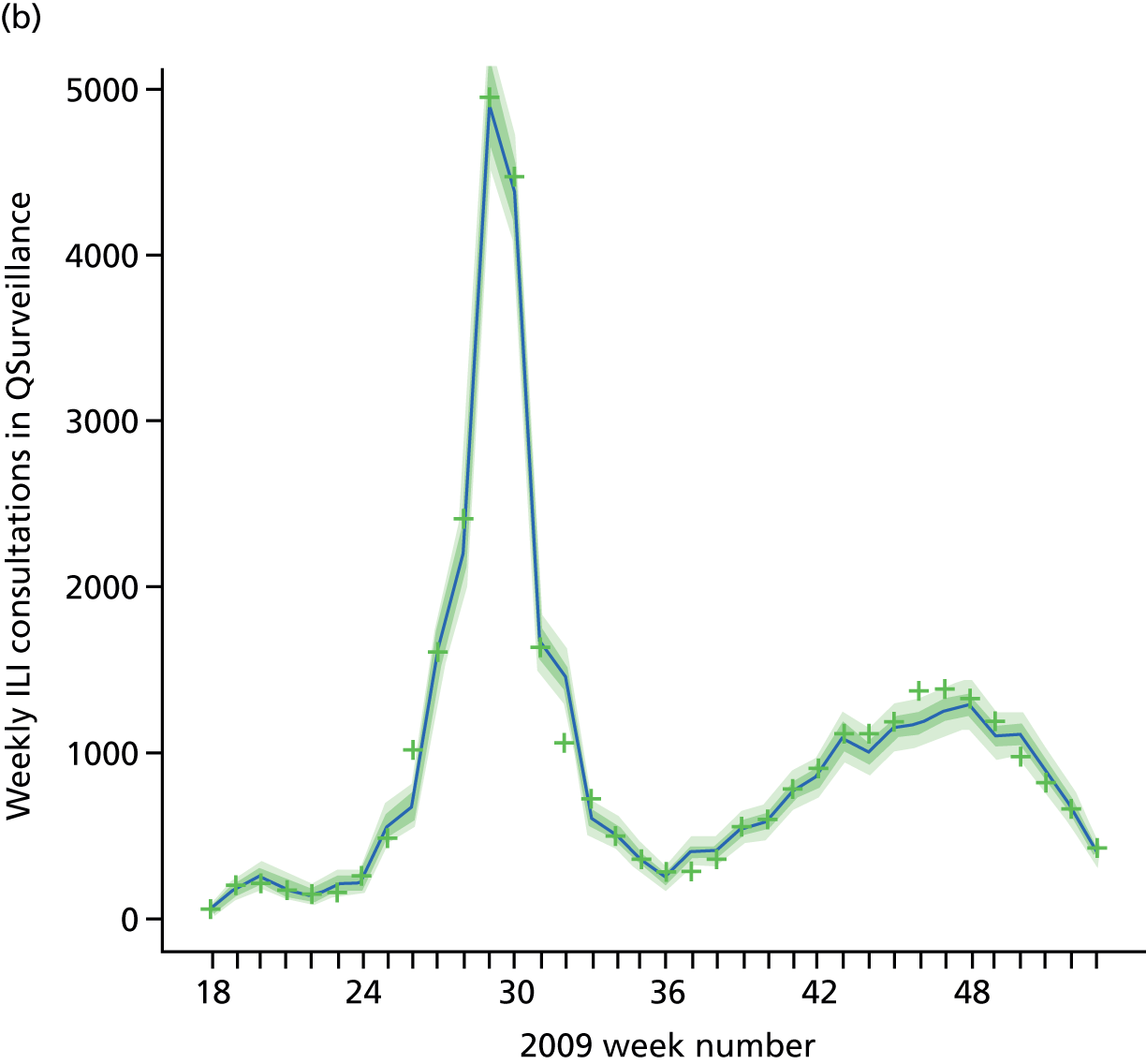

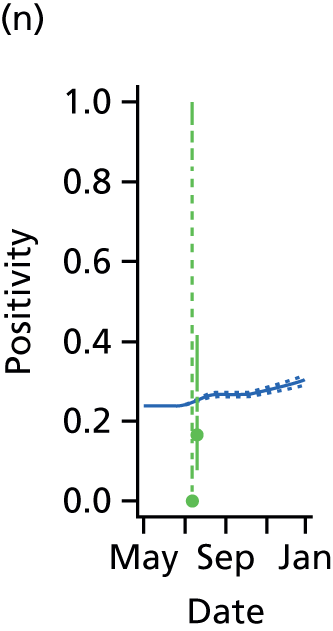

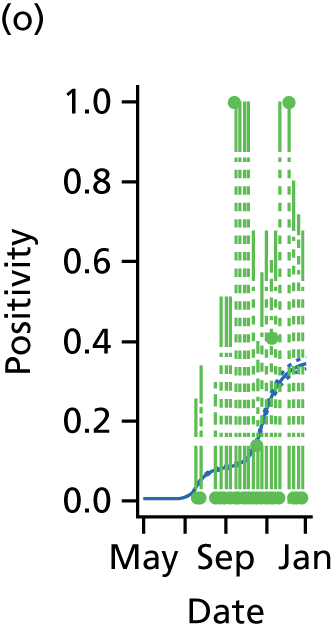

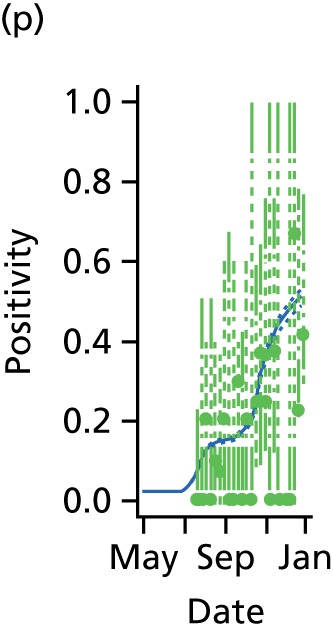

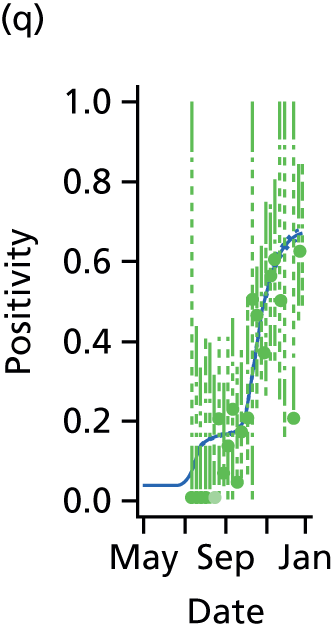

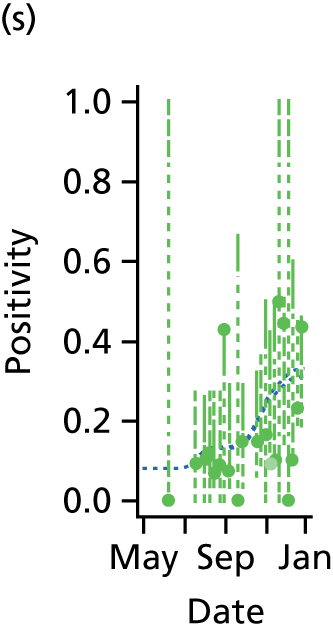

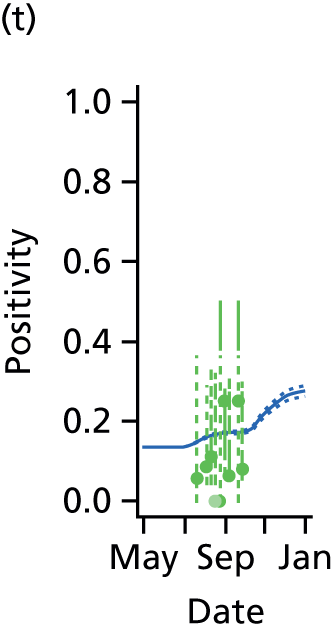

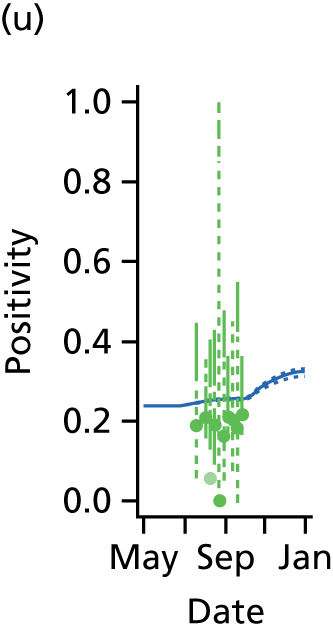

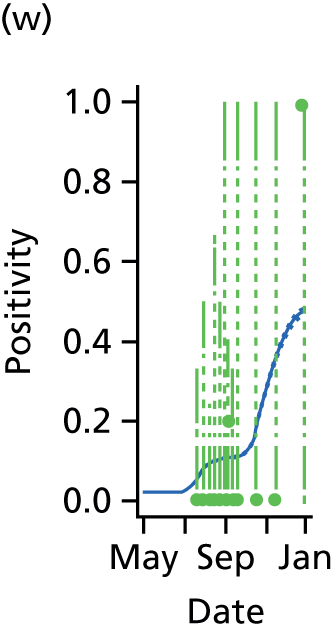

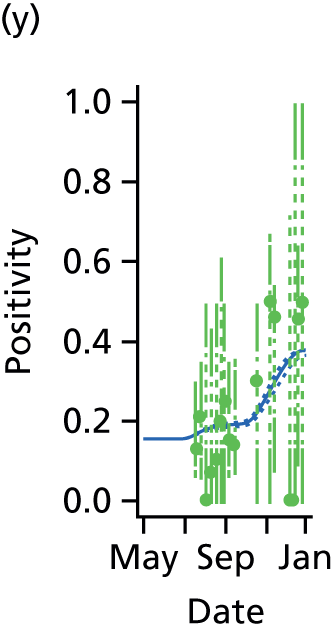

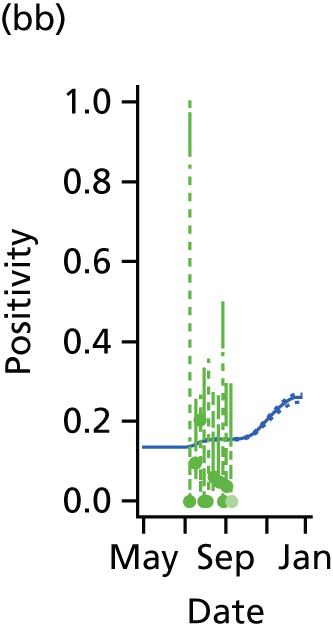

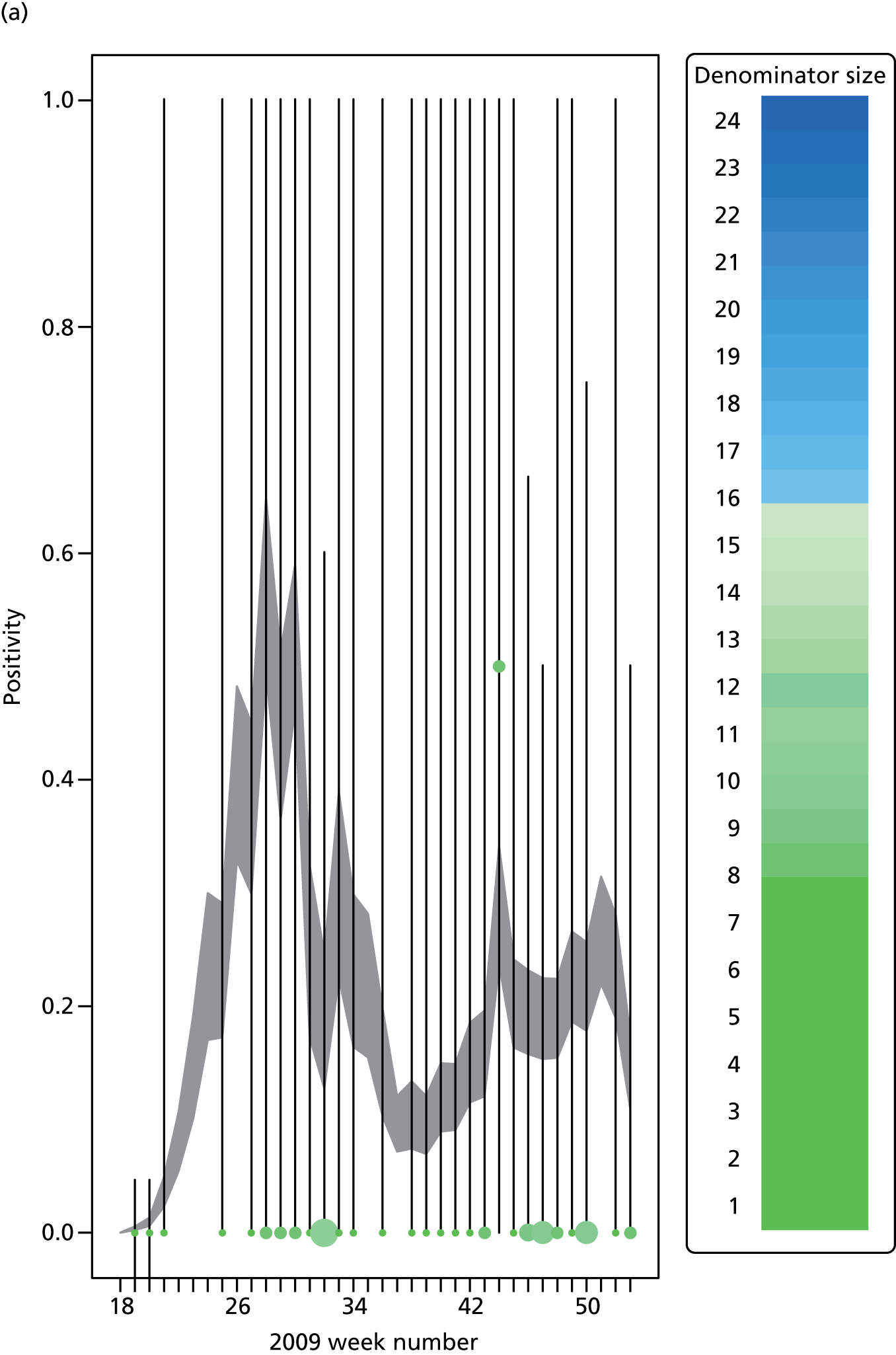

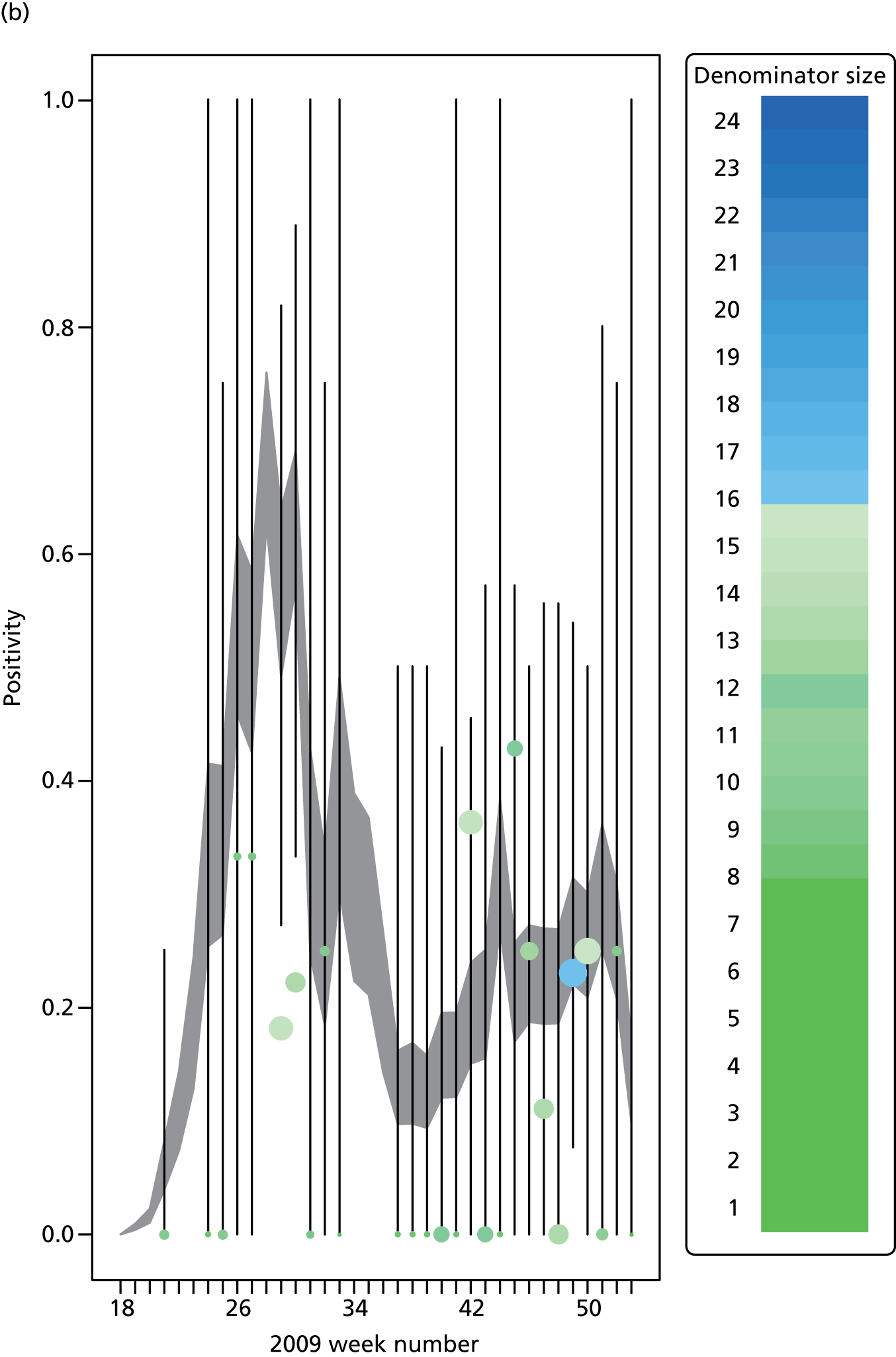

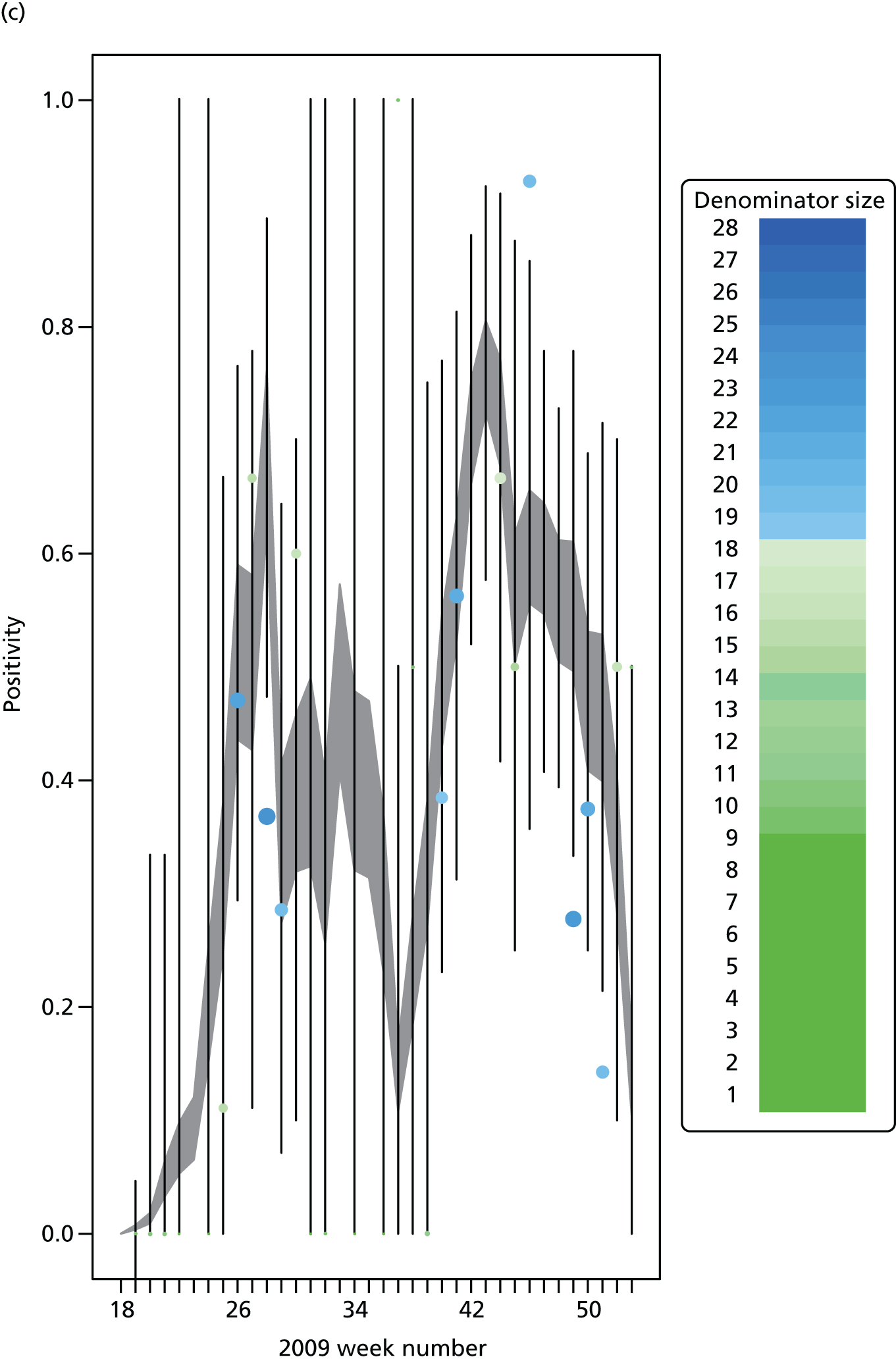

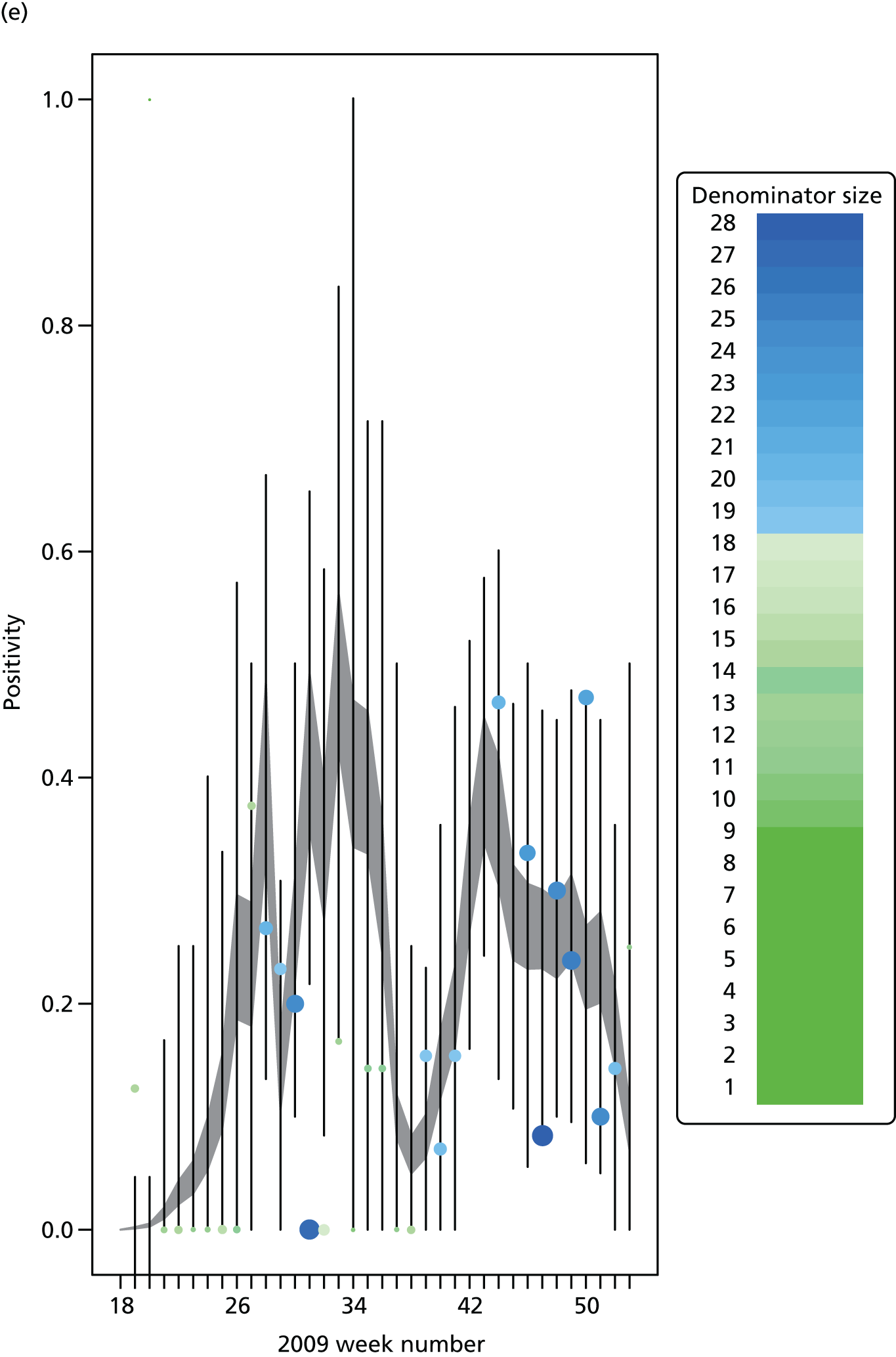

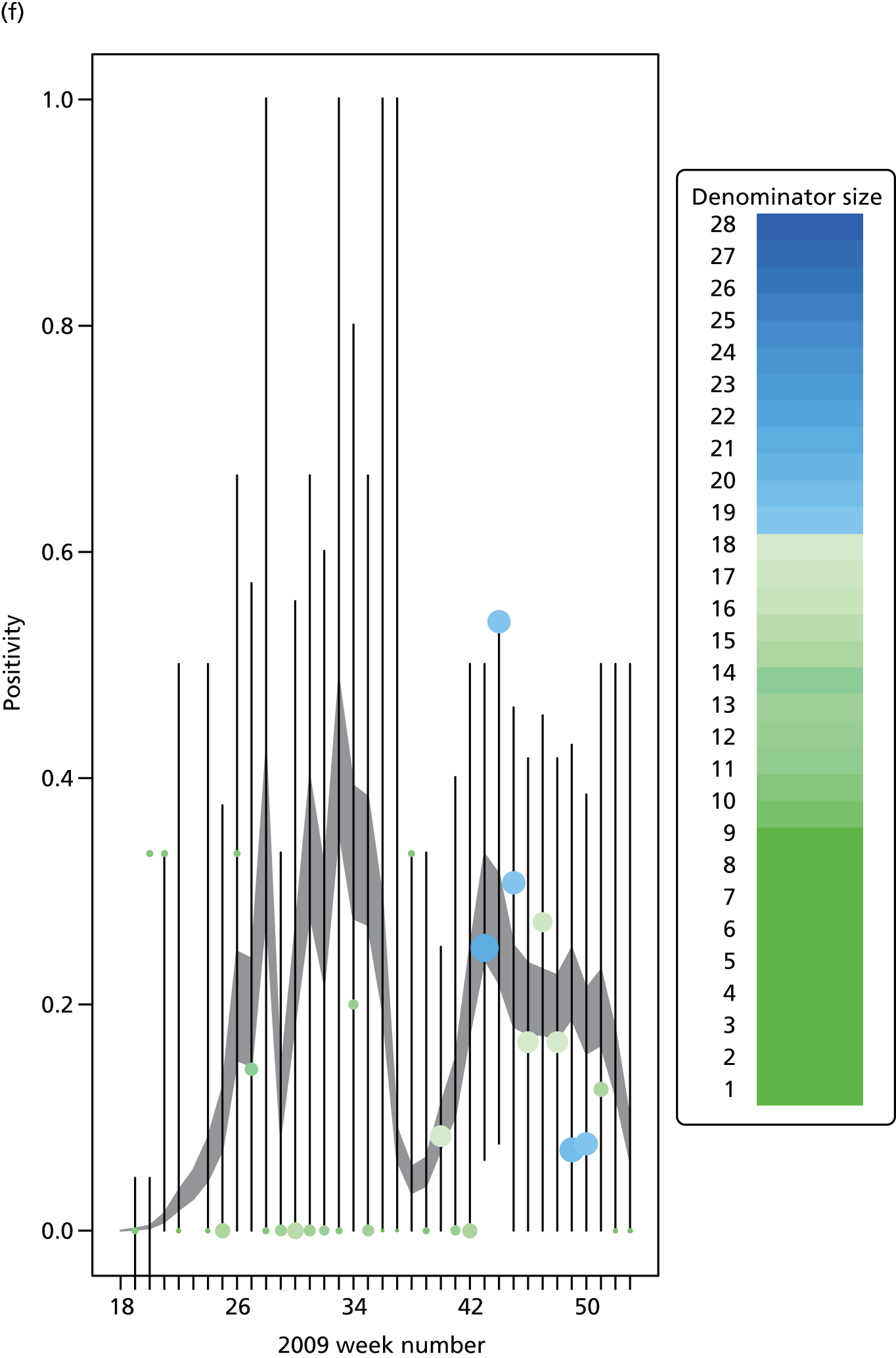

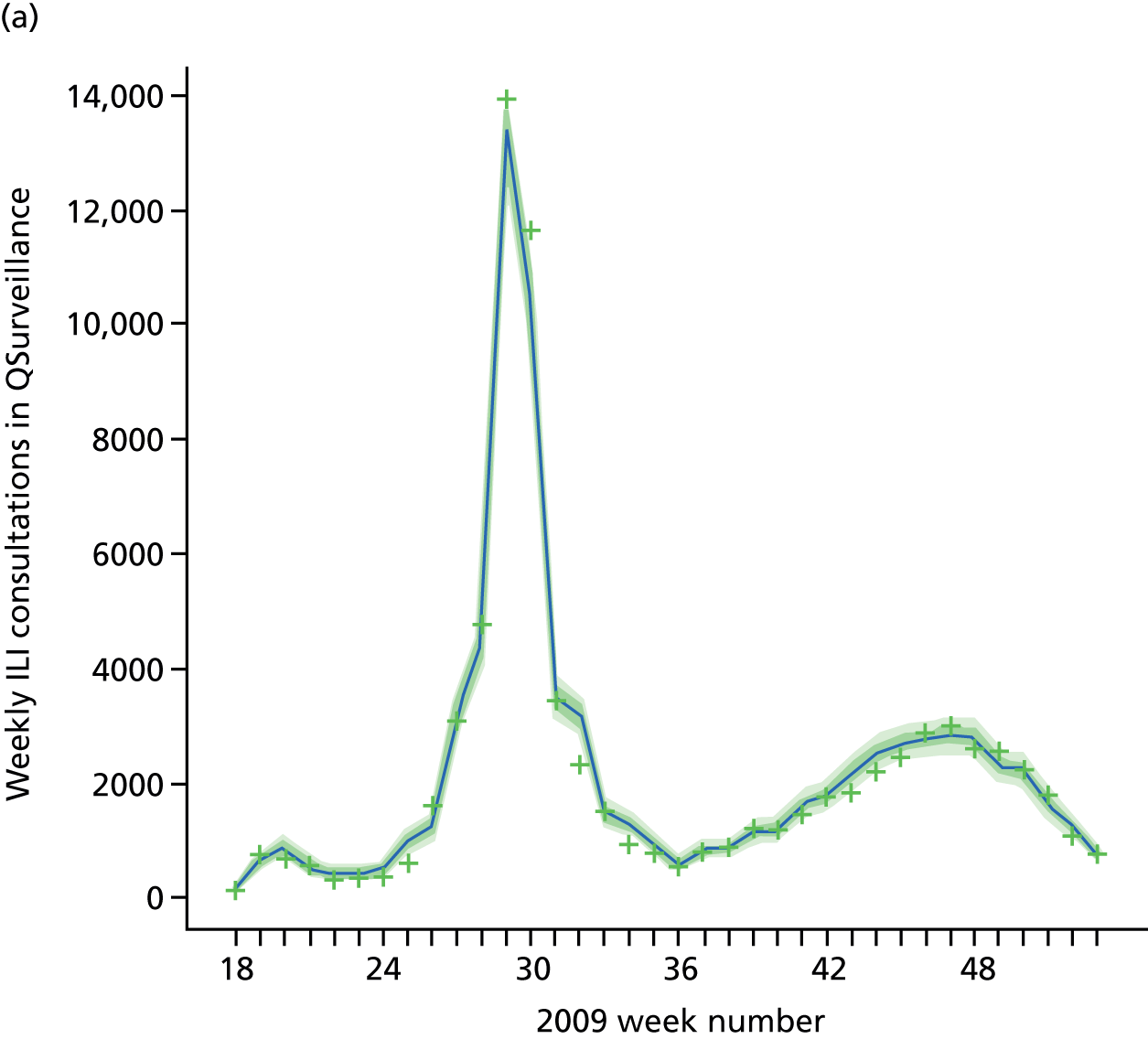

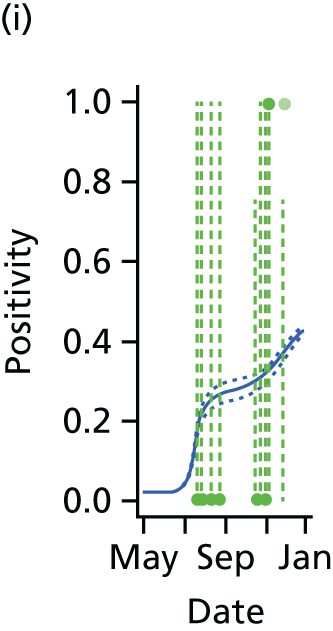

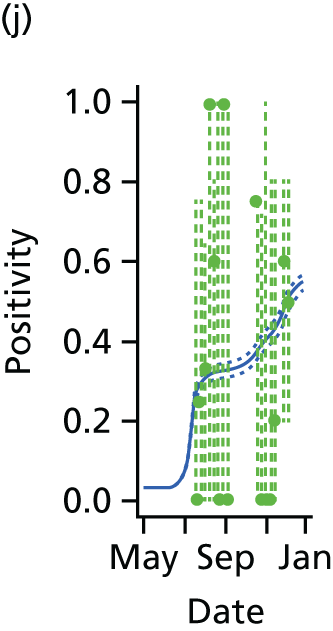

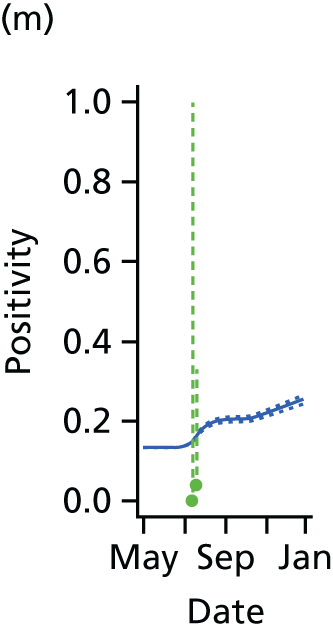

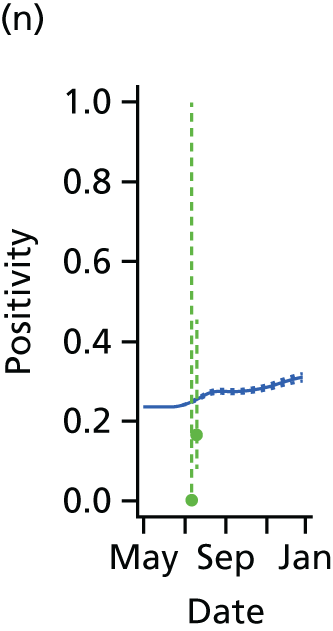

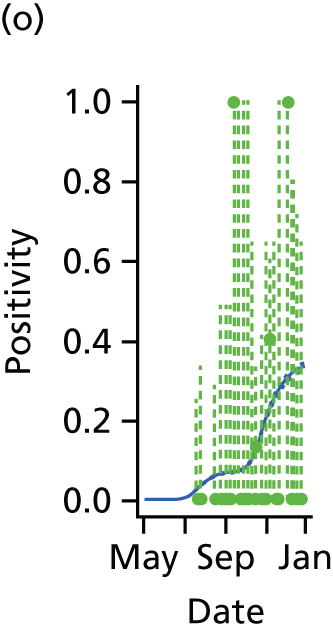

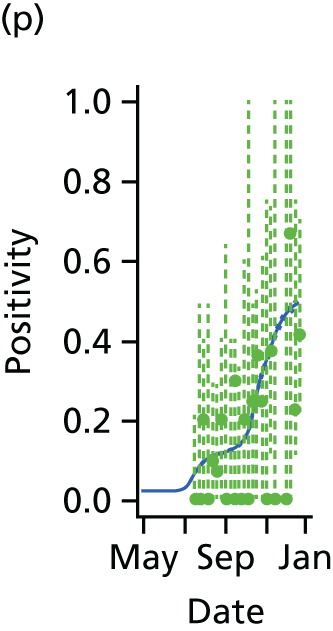

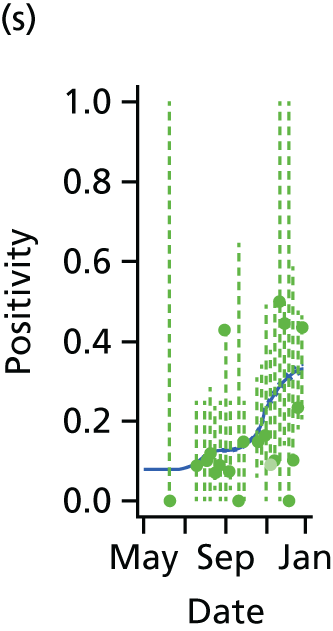

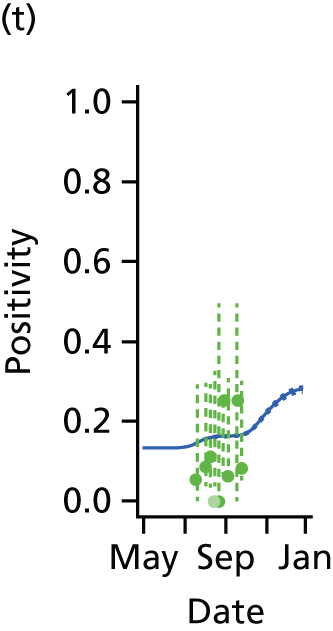

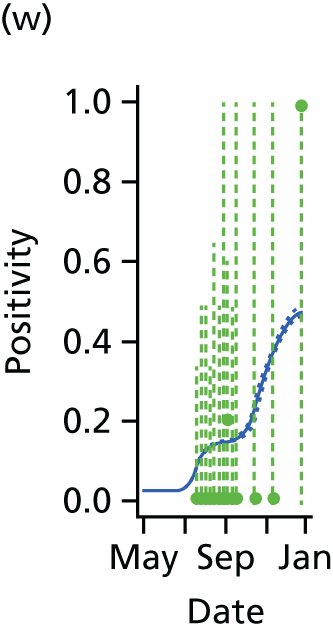

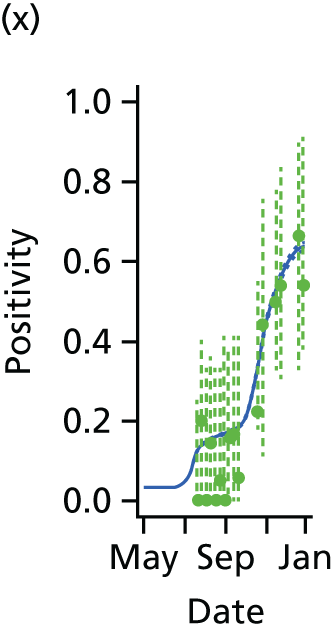

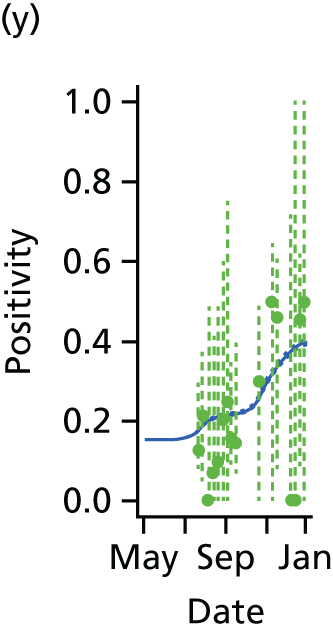

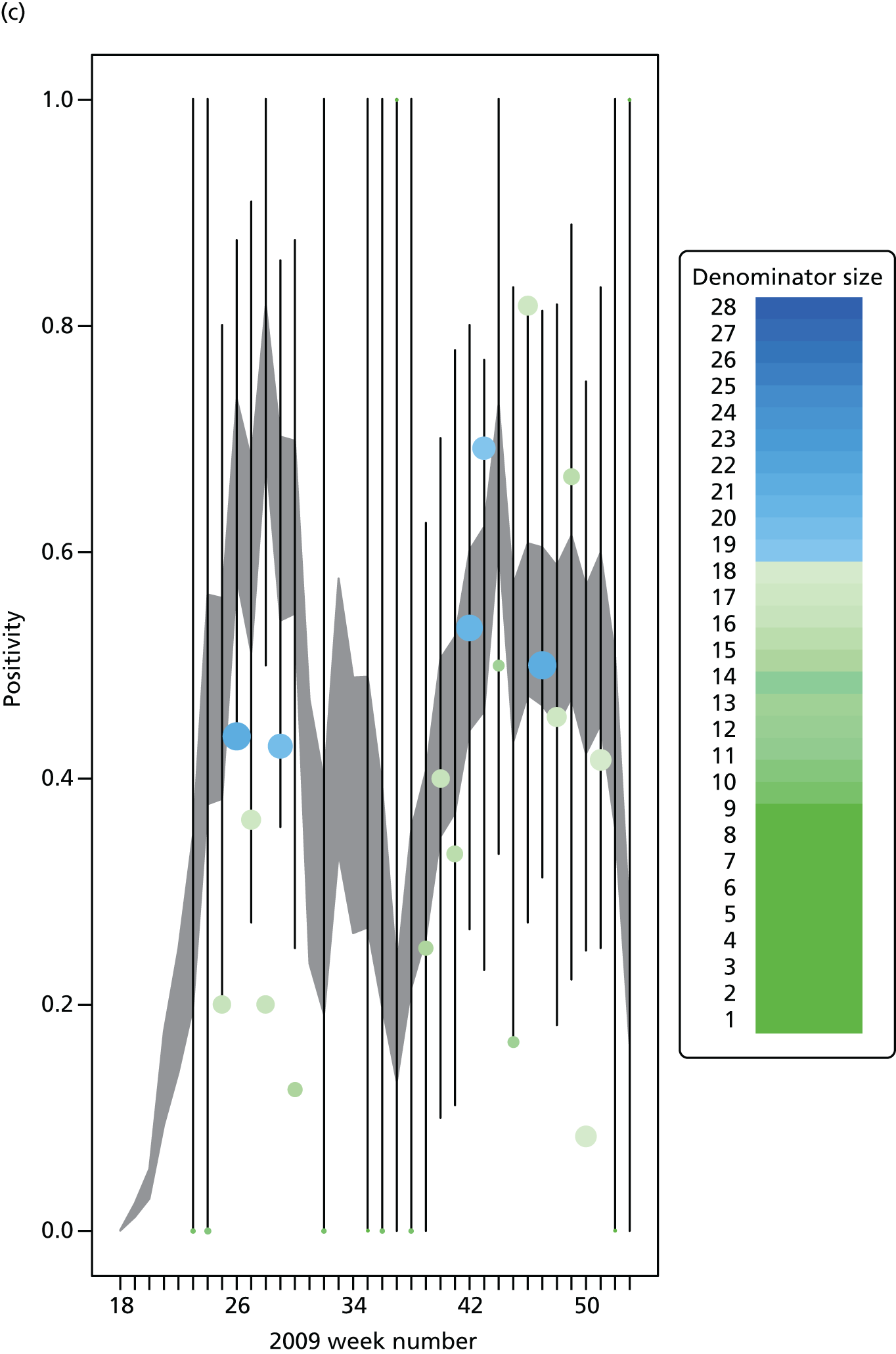

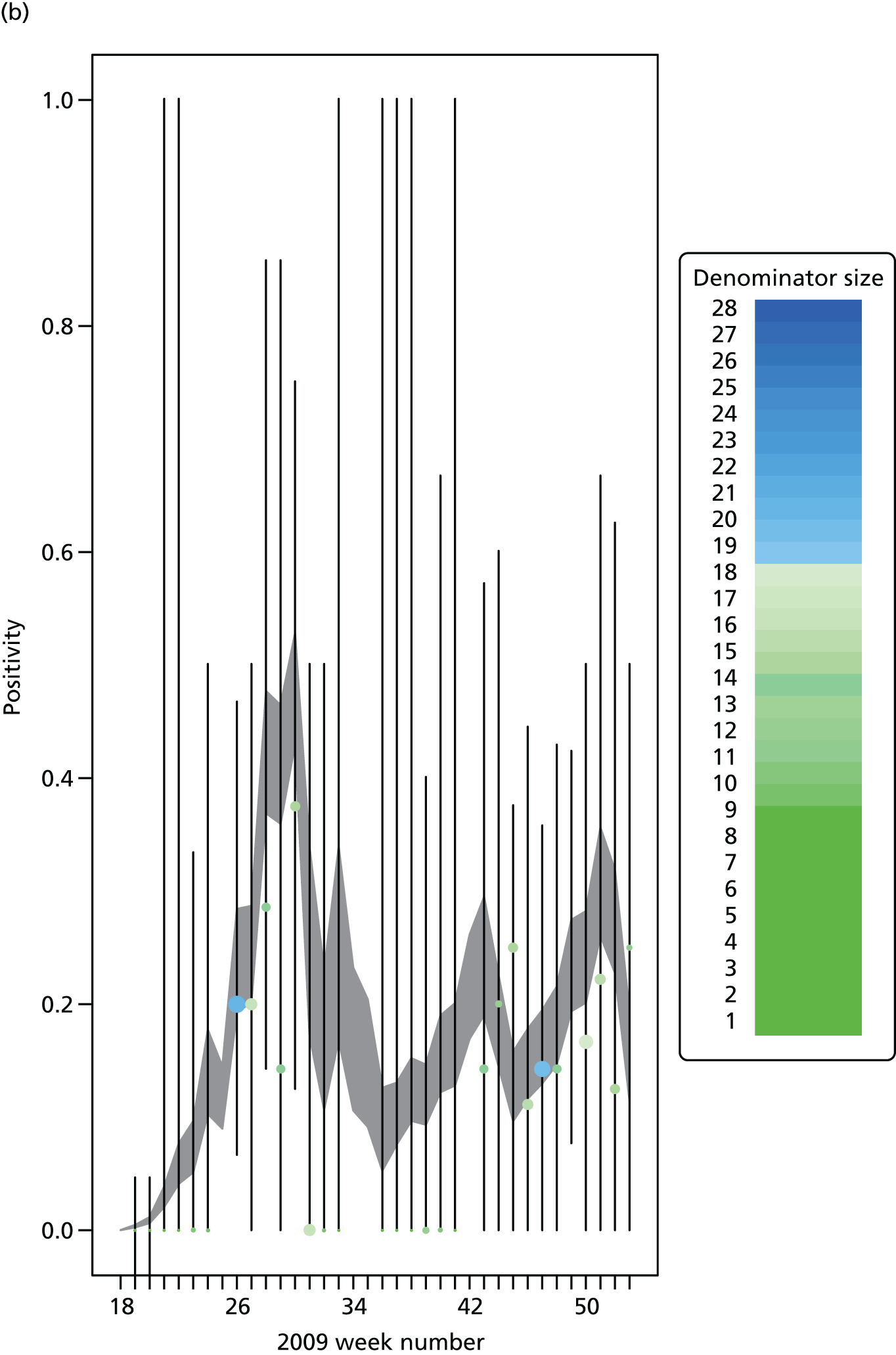

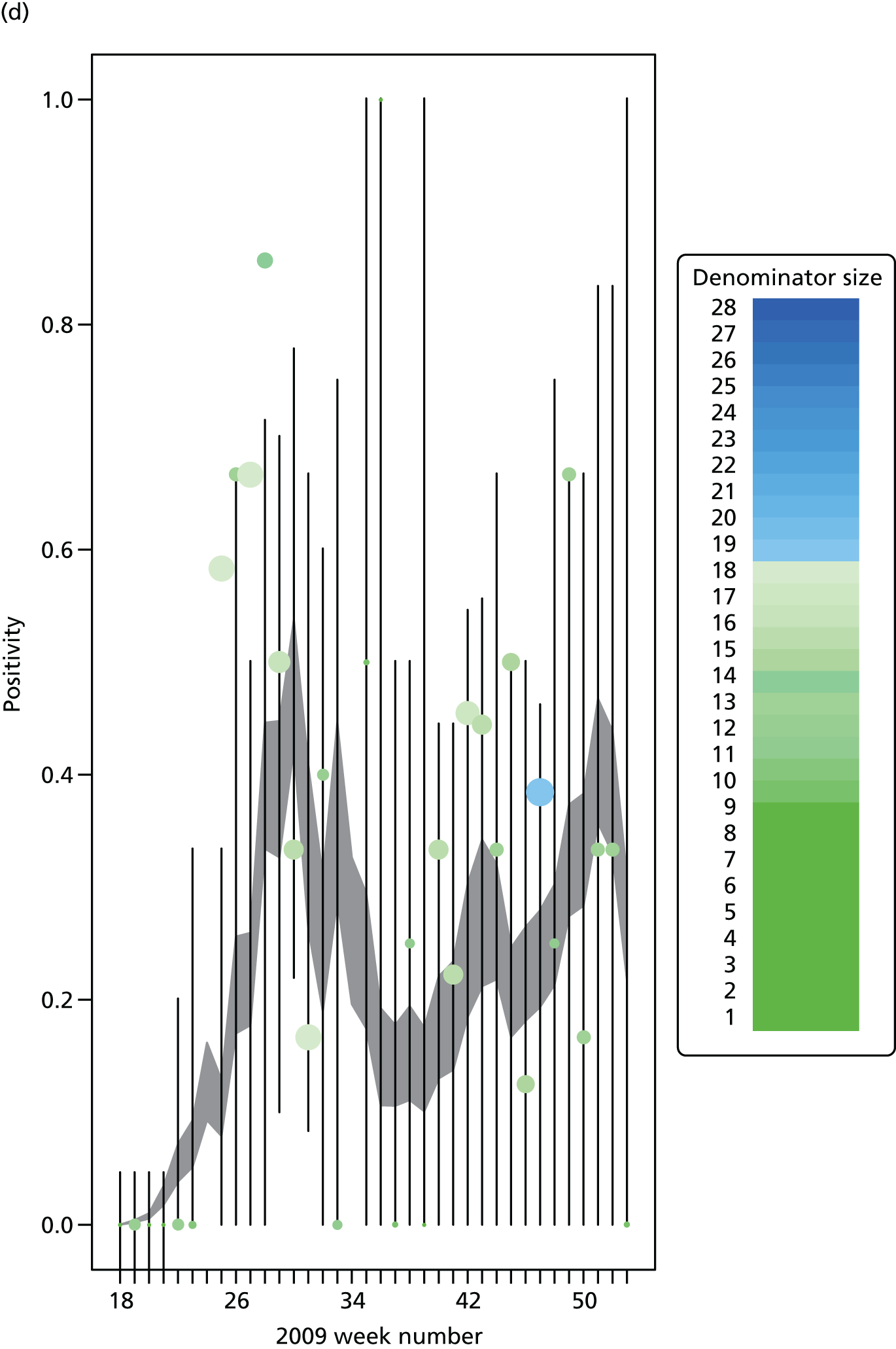

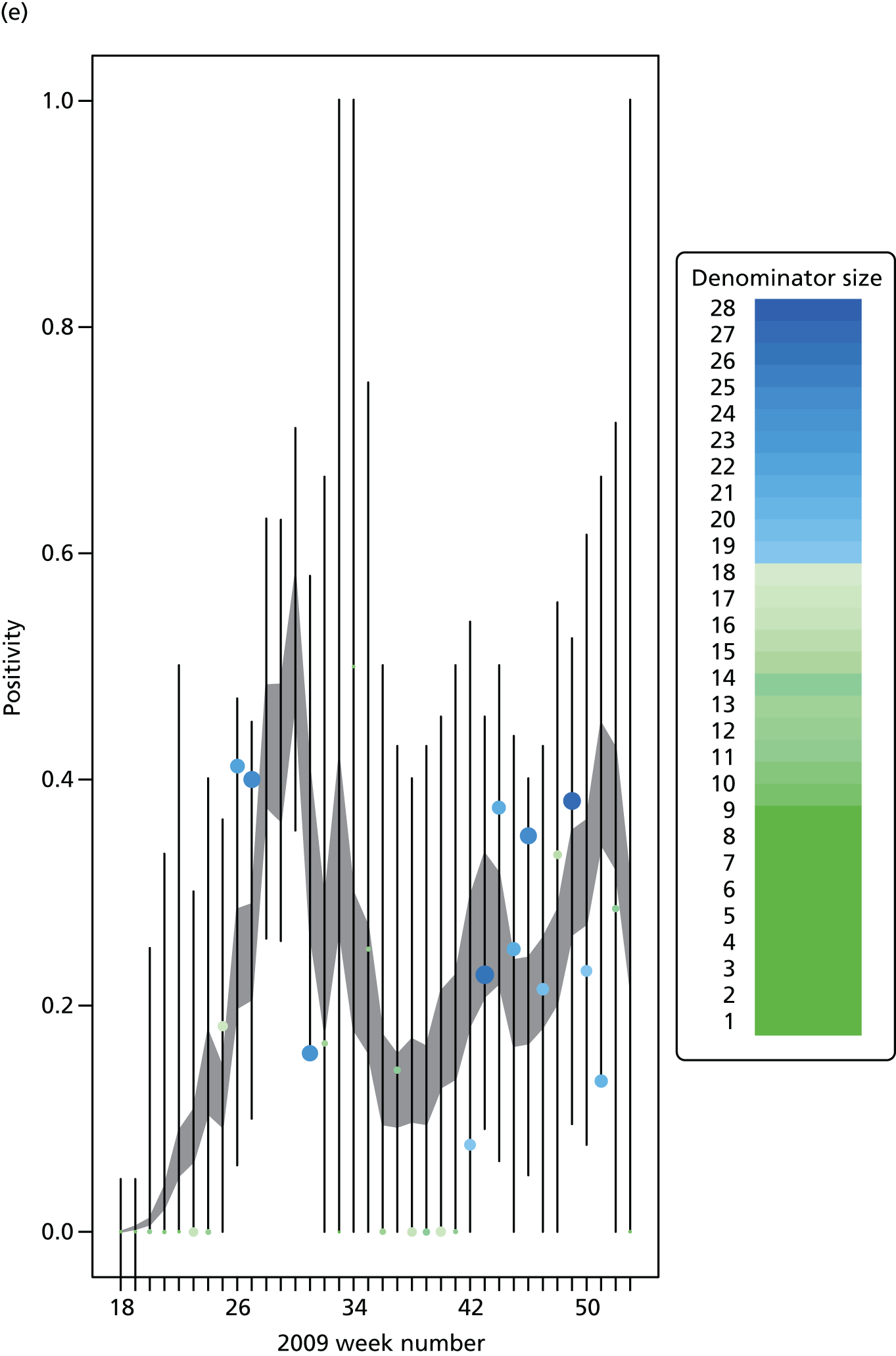

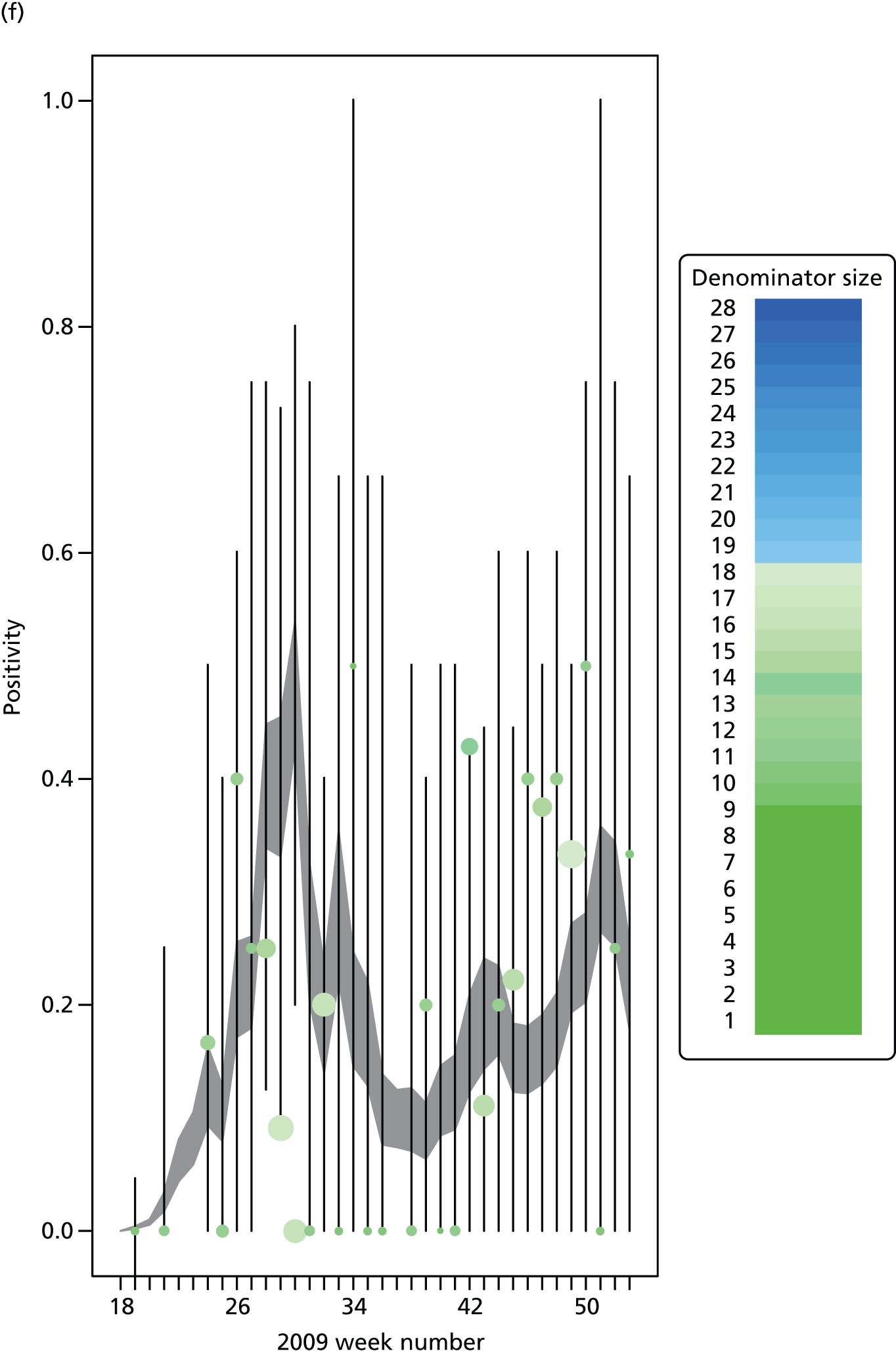

Having established the form of the regression equation for the background consultation rates, the next stage of model building in the MR approach was to consider the alternative model formulations of Chapter 3, The meta-region model, governing how the model handles density dependence, random commuting and the choice of the initial seeding. Examination of the posterior mean deviances presented in Table 5 shows that density dependence is best accounted for by scaling entries of the contact matrices by the population of the region, not the population of the relevant stratum, that is, by replacing Nr,a and Nv,a in Appendix 1, Equation 27 with Nr and Nv, the sum of the regional populations over age groups. Furthermore, it was found that within-region transmission that is density dependent (corresponding to the case α = 1 in Equation 27 of Appendix 1) gave better model fit than either frequency-dependent transmission (α = 0) or a mixture of the two (α = 0.5).