Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned/managed by the Methodology research programme (MRP). The Medical Research Council (MRC) is working with NIHR to deliver the single joint health strategy and the MRP was launched in 2008 as part of the delivery model. MRC is lead funding partner for MRP and part of this programme is the joint MRC–NIHR funding panel ‘The Methodology Research Programme Panel’.

To strengthen the evidence base for health research, the MRP oversees and implements the evolving strategy for high-quality methodological research. In addition to the MRC and NIHR funding partners, the MRP takes into account the needs of other stakeholders including the devolved administrations, industry R&D, and regulatory/advisory agencies and other public bodies. The MRP funds investigator-led and needs-led research proposals from across the UK. In addition to the standard MRC and RCUK terms and conditions, projects commissioned/managed by the MRP are expected to provide a detailed report on the research findings and may publish the findings in the HTA journal, if supported by NIHR funds.

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

none

Note to reader

it is acknowledged that there has been a regrettable delay between carrying out the project, including the searches, and the publication of this report, because of serious illness of the principal investigator. The searches were carried out in 2010/11.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2017. This work was produced by Lefebvre et al. under the terms of a commissioning contract issued by the Secretary of State for Health. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

2017 Queen’s Printer and Controller of HMSO

Chapter 1 Introduction

Background

The effective retrieval of relevant evidence is essential in the development of clinical guidance or health policy, the conduct of health research and the support of health-care decision-making. Whether the purpose of the evidence retrieval is to find a representative set of results to inform the development of an economic model or to find extensive evidence on the clinical effectiveness or cost-effectiveness of a health-care intervention, retrieval methods need to be appropriate, efficient within the time and cost restraints that exist, consistent and reliable.

One tool that can be useful for effective retrieval is the search filter. Search filters are a combination of search terms designed to retrieve records about a specific concept, which may be a study design, such as randomised controlled trials (RCTs), outcomes such as adverse events, a population such as women or a disease or condition such as cardiovascular disease. A methodological search filter is designed to capture the records of studies that have used a specific study design. Effective search filters may seek to maximise sensitivity (the proportion of relevant records retrieved), maximise precision (the proportion of retrieved records that are relevant) or optimise retrieval using a balance between maximising sensitivity and achieving adequate precision. Search filters can offer a standard approach to study retrieval and release searcher time to focus on developing other sections of the search strategy such as the disease concept.

Aims and objectives

This project was funded to inform National Institute for Health and Care Excellence (NICE) methods development by investigating the methods used to develop and assess the performance of methodological search filters, exploring what searchers require of search filters during the life of various types of projects and exploring what information searchers value to help them choose a search filter. We also explored systems and approaches for providing better access to relevant and useful performance data on methodological search filters, including developing suggested approaches to reliable and efficient search filter performance measurement.

Our objectives were to:

-

identify and summarise the performance measures for search filters (single studies or performance reviews of a range of filters) that are reported

-

identify and summarise other performance measures reported in diagnostic test accuracy (DTA) studies and DTA reviews

-

identify and summarise ways to present filter/test performance data (e.g. graphs or tables) to assist users (searchers or clinicians) in choosing which filters or tests to use

-

identify and summarise evidence on how searchers choose search filters

-

identify and summarise evidence on how clinicians choose diagnostic tests

-

understand better how searchers choose search filters and what information they would like to receive to inform their choices

-

explore different ways to present search filter performance data for searchers and provide suggested approaches to presenting the performance data that searchers require

-

develop suggested approaches for reliable and efficient measurement for search filter performance.

We acknowledge that there has been a regrettable delay between carrying out the project, including the searches, and the publication of this report, because of serious illness of the principal investigator. The searches were carried out in 2010/11.

Chapter 2 Methods

The research plan had several stages. It began with a series of five literature reviews into various aspects of search filter reporting and use. The reviews informed the development of an interview schedule and a web-based questionnaire (see Appendix 1). The reviews, interviews and questionnaire informed the development of suggested approaches to gathering and reporting search filter performance and a test website, on which we invite further feedback [see https://sites.google.com/a/york.ac.uk/search-filter-performance/ (accessed 22 August 2017)].

Reviews

The research was grounded in a series of five reviews. We conducted two reviews on how the performance of methodological search filters has been measured, in single studies and also in studies comparing the performance of search filters. In a third review we sought to find inspiration and synergies in the DTA literature by reviewing the literature on diagnostic test reporting and included an exploration of the potential relevance of performance measures used in DTA studies. Search filters are analogous to diagnostic tests, being designed to distinguish relevant records from irrelevant records, and the performance of search filters and diagnostic tests is reported using similar measures, such as sensitivity and specificity. A fourth review sought reports on how searchers make choices about filters based on the information presented to them and a fifth review sought to identify any information on how clinicians make choices about diagnostic tests to gain insights into how searchers do or might in the future be encouraged to make choices about search filters.

The reviews were informed by literature searches conducted in databases in a number of disciplines including information science. Further information about the searches can be found within each of the reviews described later in this chapter and the search strategies are all included in the relevant appendices. The sources searched were:

-

The Cochrane Library

-

EMBASE

-

European network for Health Technology Assessment (EUnetHTA)

-

health technology assessment (HTA) organisation websites

-

Health Technology Assessment international (HTAi) Vortal

-

Inter Technology Appraisal Support Collaboration (InterTASC) Information Specialists’ Sub-Group (ISSG) Search Filters Resource

-

Library and Information Science Abstracts (LISA)

-

MEDLINE

-

PsycINFO.

The reviews were conducted to reflect the project objectives, which were to determine:

-

what performance measures are reported for single studies of search filters and how they are presented (review A)

-

what performance measures are reported when comparing a range of search filters and how the performance measures are synthesised (review B)

-

what performance measures are reported in DTA studies and DTA reviews (review C)

-

how searchers choose search filters (review D)

-

how filter/test performance data are presented (e.g. text, graphs, tables, graphics) to assist users (searchers or clinicians) in choosing which filters or tests to use (reviews A, B and C)

-

how clinicians or organisations choose diagnostic tests (review E).

Interviews and questionnaire

The objective of the reviews was to identify information about:

-

performance measures in use

-

the presentation of performance measures

-

how searchers and clinicians choose search filters or diagnostic tests.

The next stage, consisting of two phases (semistructured interviews and a questionnaire survey), was to ascertain which search filter performance measures were deemed to be the most important by searchers for informed decision-making. We sought to gain information on how search filter performance information could most usefully be presented to assist decisions and whether or not there is scope for performance information to be obtained as part of routine project work.

Phase 1: semistructured interviews

As this project was funded to inform NICE methods development, the involvement of NICE staff was central to it. We contacted NICE information specialists and project managers and offered them the opportunity to participate in the project. Each interview, which was recorded, lasted for no more than 45 minutes. Once the interview time and date were agreed, confirmation details (date, time, length of interview and interviewer details), along with a topic guide and assurance of anonymity, were sent to each interviewee. After each interview, an e-mail containing a summary of the key points raised during the interview was sent to each interviewee, who was offered the opportunity to check the notes for accuracy and add any additional points that may have occurred to him or her after the interview had ended.

Phase 2: questionnaire survey

Information from the literature reviews and the interviews was used to inform the design and content of a web-based questionnaire. NICE information specialists and project managers were invited to complete the questionnaire but it was also used to collect the views of the wider (national and international) systematic review, HTA and guidelines information community. This information community is well networked and was reached via e-mail lists, as described in Chapter 4 (see Questionnaire methods).

Presentation of filter information

Information from the reviews and interview and questionnaire responses was used to develop suggested approaches to measuring search filter performance.

We also developed a series of pilot formats for presenting search filter performance information. With the approval of the authors, some of the data from the Cochrane methodology review of the performance of search filters in identifying DTA studies,1,2 which at the time of the project was not yet published, was used to populate the pilot formats.

Performance tests, reports and performance resource

We developed a prototype web resource (using content management systems available at the University of York) to present performance data and to facilitate feedback and comments from NICE staff and others from within the evidence synthesis information community. Without prejudging users’ requirements or the results of the research, the performance resource presented a matrix of information showing how well published search filters perform for specific study designs in different clinical specialties and with different user preferences for measures such as sensitivity or precision.

Based on the suggested approaches, we developed performance tests and performance reports, which were uploaded onto the project website. We also developed detailed procedures with the intention of assisting researchers to conduct and report future performance tests. We considered that if we could ascertain that users valued information in a specific format then we could try to develop suggested approaches to promoting these methods. The intention was to develop user-friendly tools for the future and to explore options to make these tools widely available.

Performance measures for methodological search filters (review A)

Introduction

Although there are a large number of search filters in existence, many have been developed pragmatically and have not undergone validation. Even for those search filters that have been validated, few have been validated beyond the data in the original publication. This method is described as internal validation and is a less rigorous approach than external validation, in which a filter is tested using a different gold standard from the one used to develop the filter. External validation provides an independent assessment of filter performance and gives a better indication of how a filter is likely to perform in the real world.

Selection of a search filter will depend on the particular searching task and on the performance of the search filter. Thus, it is important to report performance measures for search filters. There are a few tools available that can be used to assess or appraise search filters and these can help in the selection of search filters for specific tasks. 3–5

The aim of this review was to look at the performance measures that are reported for search filters (single studies) and how they are presented. Single studies were defined as those in which a new search filter (or series of filters) was developed, or a search filter was revised, and in which performance measures of the search filter(s) were also reported.

The objectives of the review were to:

-

identify and summarise the methods used to develop and validate search filters

-

identify and summarise the performance measures used in single studies of search filters

-

describe how these performance measures are presented.

Methods

Identification of studies

Studies were identified from the ISSG Search Filters Resource. 6 The ISSG Search Filters Resource is a collaborative venture to identify, assess and test search filters designed to retrieve health-care research by study design. It includes published filters and ongoing research on filter design, research evaluating the performance of filters and articles providing a general overview of search filters. At the time of this project, regular searches were being carried out in a number of databases and websites, and tables of contents of key journals and conference proceedings were being scanned to populate the site. Researchers working on search filter design are encouraged to submit details of their work. The 2010 update search carried out by the UK Cochrane Centre to support the ISSG Search Filters Resource website was also scanned to identify any relevant studies that were not included on the website at that time.

We acknowledge that there has been a regrettable delay between carrying out the project, including the searches, and the publication of this report, because of serious illness of the principal investigator. The searches were carried out in 2010/11.

Inclusion criteria

The review included studies that reported the development and evaluated the performance of methodological search filters for health-care bibliographic databases. For pragmatic reasons, the review specifically focused on studies that developed and evaluated methodological search filters for economic evaluations, DTA studies, systematic reviews and RCTs. These study types are the ones most commonly used by organisations such as NICE to underpin their decision-making when producing technology appraisals and economic evaluations of health-care technologies and subsequent clinical guidelines. Publications prior to 2001 were excluded partly for pragmatic reasons but also because during this period search filters tended to be derived by subjective methods and because some of the filters had subsequently been updated or were now out-of-date because of changes in database indexing.

Exclusion criteria

Studies were excluded from the review if they:

-

were available only in abstract form (e.g. conference abstracts)

-

did not develop or revise a search filter

-

did not report details of the methods used in developing the search filter

-

did not evaluate search filter performance

-

were published before 2001.

Data extraction

Data were extracted from selected studies using a standardised data extraction form to identify information regarding gold/reference standards, filter development/validation and performance measures reported.

Results

Fifty-eight studies were identified from the ISSG Search Filters Resource. After applying the outlined inclusion and exclusion criteria, 23 studies were identified for inclusion in the review. 7–29 Details from the included studies, grouped according to type of methodological search filter (economic, diagnostic, systematic review and RCT), are provided in Tables 1–4.

| Reference | Database/platform | Gold standard to derive/report filter performance (internal) | Filter development | Filters tested | Performance measures reported (presentation) | Gold standard to report external validation | External validation measures |

|---|---|---|---|---|---|---|---|

| aMcKinlay 20067 | EMBASE (Ovid) | Hand-search of 55 journals for publication year 2000 (n = 183 for costs; n = 31 for economics). Articles were assessed by six research assistants; inter-rater agreement previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity of > 75% were incorporated into the development of the filters. Terms were combined with Boolean OR | Six single terms and six combinations of terms were reported (three each for costs and economics):

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| aWilczynski 20048 | MEDLINE (Ovid) | Hand-search of 68 journals for publication year 2000 (n = 199 for costs, n = 23 for economics). Articles were independently assessed by two research assistants and disagreements were resolved by a third independent assessment | Subjective – index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity of > 75% were incorporated into development of the filters. Terms were combined with Boolean OR | Nine combinations of terms were reported (five for costs and four for economics):

|

Sensitivity, specificity, precision (tables) | None | No |

| Reference | Database/platform | Gold standard to derive/report filter performance (internal) | Filter development | Filters tested | Performance measures reported (presentation) | Gold standard to report external validation | External validation measures |

|---|---|---|---|---|---|---|---|

| Astin 20089 | MEDLINE (Ovid) | Derivation set: hand-search of six journals for publication years 1985, 1995 and 1988 (n = 333). Articles were assessed independently by three researchers and discrepancies were resolved by discussion | Candidate terms from previously published strategies and MeSH and text words from derivation set MEDLINE records. Terms were added sequentially beginning with terms with the highest PPV and at each step adding the term that retrieved the largest proportion of additional derivation set records. The steps were repeated until the highest sensitivity was achieved | One filter tested. Separate filter for retrieving imaging studies developed | Sensitivity, specificity, PPV, confidence intervals reported (tables) | Validation set: hand-search of six journals for the publication year 2000 (n = 186) | Sensitivity, specificity, PPV, confidence intervals reported (tables) |

| Bachmann 200210 | MEDLINE (DataStar) | Hand-search of four journals for publication year 1989 (n = 83). Articles were assessed independently by two researchers | Word frequency analysis of all words in MEDLINE records, excluding those not semantically associated with diagnosis. The 20 terms with the highest individual sensitivity × precision score plus MeSH exp “sensitivity and specificity” were combined with OR in a stepwise fashion into a series of strategies and were performance tested | Two filters tested | Sensitivity, precision, NNR, confidence intervals reported (tables) | Hand-search of same four journals for publication year 1994 (n = 53) and four different journals for publication year 1999 (n = 61) | Sensitivity, precision, NNR, confidence intervals reported (for 1994 data) (tables) |

| Bachmann 200311 | EMBASE (DataStar) | Hand-search of four journals for publication year 1999 by one researcher, 10% independently assessed by second researcher (n = 61) | Word frequency analysis of all words in EMBASE records, excluding those not semantically associated with diagnosis. The 10 terms with the highest individual sensitivity × precision score were combined with OR into a series of strategies and were performance tested | Eight filters tested, three filters recommended:

|

Sensitivity, precision, NNR, confidence intervals reported (tables) | None | No |

| Berg 200512 | MEDLINE (PubMed) | PubMed search carried out on 25 November 2002 of cancer-related fatigue using NLINKS-EBN matrix search strategies (n = 238). Articles were assessed by two reviewers. Inter-rater reliability 0.71 | Terms from the PubMed clinical queries diagnosis filter. Additional terms from MeSH and text terms from gold standard records and additional search filters. Terms were tested to see if they fulfilled one inclusion criterion including having individual sensitivity of > 5% and specificity of > 95%. Terms were combined with OR until sensitivity was maximised | Two filters tested:

|

Sensitivity, specificity, NNR, LR+ values (tables) | None | No |

| aHaynes 200413 | MEDLINE (Ovid) | Hand-search of 161 journals for publication year 2000 (n = 147). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity of > 75% were incorporated into development of the filters. Tested combining terms with OR | Three single terms and nine combinations of terms reported:

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| Vincent 200314 | MEDLINE (Ovid) | Reference set: studies included in 16 systematic reviews of diagnostic tests for deep-vein thrombosis and indexed in MEDLINE (n = 126 published from 1969 to 2000). Authors note that the reference set excluded many high-quality articles |

|

Three filters tested. One filter was recommended as ‘more balanced’ with high sensitivity and improved precision | Sensitivity (table) (data available to calculate precision) | None | No |

| aWilczynski 200515 | EMBASE (Ovid) | Hand-search of 55 journals for publication year 2000 for methodologically sound diagnostic studies (n = 97). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with an individual sensitivity of > 25% and specificity of > 75% were incorporated into the development of the filters. Tested out combining terms with OR | In total, 6574 strategies were tested. Three single terms and five combinations of terms were reported:

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| Reference | Database/platform | Gold standard to derive/report filter performance (internal) | Filter development | Filters tested | Performance measures reported (presentation) | Gold standard to report external validation | External validation measures |

|---|---|---|---|---|---|---|---|

| Berg 200512 | MEDLINE (PubMed) | PubMed search carried out 25 November 2002 on cancer-related fatigue using NLINKS-EBN matrix search strategies (n = 238). Articles were assessed by two reviewers. Inter-rater reliability 0.55 | Terms from the PubMed clinical queries systematic review filter. Additional terms from MeSH and text terms from gold standard records and additional search filters. Terms were tested to see if they fulfilled one inclusion criterion including having an individual sensitivity of > 5% and specificity of > 95%. Terms were combined with OR until sensitivity was maximised | Numerous filters tested – results reported only for the best filter, which had high sensitivity and high specificity. Separate filters to identify diagnostic tests were also developed | Sensitivity, specificity, NNR, LR+ values (tables) | None | No |

| aEady 200816 | PsycINFO (Ovid) | Hand-search of 64 journals for publication year 2000 (n = 58). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 10% and specificity of > 10% were incorporated into the development of the filters. Tested out combining terms with OR | One single term and four combinations of terms reported:

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| aMontori 200517 | MEDLINE (Ovid) | Derivation set: hand-search of 10 journals for publication year 2000 (n = 133 used to test strategies). Internal validation set: validation data set excluding CDSR (n = 332 used to validate strategies). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 50% and specificity of > 75% were incorporated into the development of the filters. Tested out combining terms with OR | Five single terms reported: best sensitivity (with specificity ≥ 50%), best specificity (with sensitivity of ≥ 50%), best precision (based on sensitivity of ≥ 25% and specificity of ≥ 50%). Two combination strategies maximising sensitivity and minimising the difference between sensitivity and specificity. Four combination strategies maximising precision | Sensitivity, specificity, precision, confidence intervals reported (tables) | Validation dataset: hand-search of 161 journals for publication year 2000 (n = 753) | Sensitivity, specificity, precision, confidence intervals reported (tables) |

| Shojania 200118 | MEDLINE (PubMed) | None | Relevant publication types (‘meta-analysis’, ‘review’, ‘guideline’) plus title and text words typically found in systematic reviews | One filter tested against two external gold standards and also applied to three clinical topics (screening for colorectal cancer, thrombolytic therapy for venous thromboembolism and treatment of dementia) | No | Sensitivity:

|

Sensitivity, confidence intervals reported (tables); PPV, confidence intervals reported (tables) |

| White 200119 | MEDLINE (Ovid) | Hand-search of five journals for publication years 1995 and 1997 (quasi-gold standard, n = 110). Articles were assessed independently by two experienced researchers. Two sets for comparison:

|

Textual analysis of quasi-gold standard test set records. MeSH and publication type analysed for each of three test sets. A total of 38 terms were analysed by discriminant analysis to determine which best distinguished between the three sets of records | Five models (filters) were tested on the full test set | Sensitivity, specificity, precision (tables) | One model was tested on the validation set. All models were tested in a ‘real-world’ scenario using Ovid MEDLINE on CD-ROM from 1995 to 1998 (and compared with two previously published strategies) | Sensitivity, precision (discussed in text), sensitivity, precision (table) |

| aWilczynski 200720 | EMBASE (Ovid) | Hand-search of 55 journals for publication year 2000 (n = 220). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity of > 75% were incorporated into the development of filters. Tested out combining terms with OR | Two single terms and four combinations of terms reported:

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported, (tables) | None | No |

| aWong 200621 | CINAHL (Ovid) | Hand-search of 75 journals for publication year 2000 (n = 127). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of at least 10% and specificity of at least 10% were incorporated into development of the filters. Tested out combining terms with OR | Three single terms and four combinations of terms were reported:

|

Sensitivity, specificity, precision, accuracy confidence intervals reported, (tables) | None | No |

| Reference | Database/platform | Gold standard to derive/report filter performance (internal) | Filter development | Filters tested | Performance measures reported (presentation) | Gold standard to report external validation | External validation measures |

|---|---|---|---|---|---|---|---|

| aEady 200816 | PsycINFO (Ovid) | Hand-search of 64 journals for publication year 2000 (n = 233). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of ≥ 10% and specificity of ≥ 10% were incorporated into development of the filters. Tested out combining terms with OR and used stepwise logistic regression | One single term and five combinations of terms were reported

|

Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| Glanville 200622 | MEDLINE (Ovid) | Database searches of MEDLINE and CENTRAL. Gold standard: randomly selected RCT records (1970, 1980, 1990, 2000) (n = 1347). Comparison group of randomly selected records of non-trials for the same years (n = 2400) | Frequency analysis of gold standard records to identify terms. Logistic regression analysis used to identify best-discriminating sets of terms in 50% of gold standard and comparison group records. Terms tested on remaining 50% of gold standard/comparison group records. Six search strategies were derived: two single-term and four multiterm strategies | Search strategies derived from 50% of the gold standard/comparison group records were tested on the remaining 50% of the records. No details given of performance measures | None | External gold standard:

|

External gold standard:

|

| aHaynes 200523 | MEDLINE (Ovid) | Hand-search of 161 journals for publication year 2000 (n = 1587); internal development set (60%) (n = 930); validation set (40%) (n = 657). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity of > 75% were incorporated into the development of the filters. Tested out combining terms with OR and used stepwise logistic regression | Three single terms for high sensitivity, high specificity or optimised balance between sensitivity and specificity. Three combination strategies for highest sensitivity (specificity > 50%), three combination strategies for highest specificity (sensitivity > 50%), three combination strategies for highest accuracy (sensitivity > 50%), three combination strategies for optimising sensitivity and specificity (based on an absolute difference of < 1%). Best strategy for optimising trade-off between sensitivity and specificity when adding Boolean AND NOT. Best three combination strategies derived using logistic regression techniques | Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| Lefebvre 200824 | EMBASE | Hand-search of two journals for publication years 1990 and 1994 (n = 384) were used to assess the performance of individual terms and select terms for further analysis. EMBASE records 1974–2005 (excluding those with corresponding MEDLINE record indexed as a RCT) and assessed as trials or not trials. This data set was used to combine and reject terms | MeSH terms from the MEDLINE Highly Sensitive Search Strategy were converted to Emtree where possible; additional Emtree terms and free text terms were also identified. Experts were consulted for further suggestions. Terms were tested against the internal gold standard records and those with an individual precision of > 40% and sensitivity of > 1% were selected and added sequentially to develop the filter. Terms with low cumulative precision were rejected | One filter | Cumulative sensitivity for each term, cumulative precision for each term and total (table) | ||

| Manríquez 200825 | LILACS (internet) | Hand-search of 44 Chilean journals for the publication years 1981–2004 indexed in LILACS (n = 267) | A total of 120 terms were identified from internal gold standard records. Terms with individual sensitivity of > 20% and specificity and accuracy of > 60% were included in two-term strategies. Terms in two-term strategies with sensitivity, specificity and accuracy of > 60% were combined to give three- or four-term strategies. All terms in three- to four-term strategies were combined to give a maximum sensitivity strategy. The final strategy excluded terms with 0% sensitivity and high specificity | The sensitivity, specificity and accuracy are given for 16 single terms, 23 two-term strategies and 13 three- or four-term strategies. Sensitivity and specificity are given for a 10-term strategy (A) and a final strategy (B) (B is derived from strategy A by excluding terms with a sensitivity of 0% and high specificity) | Sensitivity, specificity (figure). The figure contains the full search strategy and values for sensitivity and specificity | None | No |

| Robinson 200226 | MEDLINE (PubMed) | None | Adapted from a previous search filter (Cochrane Highly Sensitive Search Strategy). Three revisions to the original Cochrane RCT strategy. Strategies also translated for PubMed | Comparison of results retrieved by the original and revised strategies for both MEDLINE Ovid and PubMed | Number of additional relevant and non-relevant records retrieved by revisions | Cochrane CENTRAL records from 11 journals for 1998 (n = 308) | Sensitivity (discussed in text of article) |

| bTaljaard 201027 | MEDLINE (Ovid) | Hand-search of 78 journals for one randomly assigned year from 2000 to 2007 (n = 162). Subset initially examined independently by two reviewers – inter-rater reliability of 0.81 | Frequency analysis of text from internal gold standard records was used to create a search strategy for identifying CRTs | Three filters were tested: simple – RCT.pt; sensitive – identified CRT terms combined using OR and then combined with RCT.pt using OR; precise: identified CRT terms combined using OR and then combined with RCT.pt using AND | Sensitivity, precision, 1 – specificity (fallout) (tables), NNR (discussed in text of article) | Seven systematic reviews of CRTs covering 1979–2005 (n = 363) | Sensitivity (table) (referred to as RR in the text) |

| aWong 200621 | CINAHL (Ovid) | Hand-search of 75 journals for publication year 2000 (n = 506). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with an individual sensitivity of at least 10% and specificity of at least 10% were incorporated into the development of the filters. Tested out combining terms with OR and used stepwise logistic regression | Three single terms and five combinations of terms were reported: (1) best sensitivity (with specificity of ≥ 50%), (2) best specificity (with sensitivity of ≥ 50%), (3) best optimised (based on the smallest absolute difference between sensitivity and specificity) | Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| aWong 200628 | EMBASE (Ovid) | Hand-search of 55 journals for publication year 2000 (n = 1256). Articles were assessed by six research assistants. Inter-rater agreement was previously established as > 80% | Index terms and text words from clinical studies and advice sought from clinicians and librarians. Terms with individual sensitivity of > 25% and specificity > 75% were incorporated into development of the filters. Tested out combining terms with OR | Three single terms and four combinations of terms were reported: (1) best sensitivity (with specificity of ≥ 50%), (2) best specificity (with sensitivity of ≥ 50%), (3) best optimised (based on the smallest absolute difference between sensitivity and specificity) | Sensitivity, specificity, precision, accuracy, confidence intervals reported (tables) | None | No |

| Zhang 200629 | MEDLINE (Ovid) | None | Used existing filters and revisions of existing filters | Evaluated six filters: the top two phases of the Cochrane Highly Sensitive Search Strategy (SS123, SS12) and four revisions of this strategy (SS-crossover, SS-crossover studies, SS-volunteer, SS-versus) | No | A total of 61 reviews identified from the CDSR in 2003 that had used the Highly Sensitive Search Strategy to identify RCTs and provided details of the subject search | Sensitivity, precision, article read ratio, interquartile ranges reported (tables) |

Of the 35 studies excluded, 19 were rejected because they were published before 2001. The reasons why the remaining 16 studies were excluded are presented in Table 5.

| Study identifier | Reason for exclusion | Type of filter |

|---|---|---|

| Abhijnhan 200730 | Did not develop and test a filter. Focus is on a comparison of database content/coverage | RCT |

| Almerie 200731 | Did not develop and test a filter. Focus is on a comparison of database content/coverage | RCT |

| Chow 200432 | Did not develop or revise a filter | RCT |

| Methods used to develop filter not reported | ||

| Corrao 200633 | Filter not evaluated against either internal or external gold standards | RCT |

| No internal or external validation standards | ||

| Day 200534 | Did not develop or test a RCT search filter. The search strategies derived were based on the condition and intervention of interest | RCT |

| de Freitas 200535 | Did not develop and test a filter | RCT |

| Devillé 200236 | This was a guideline for conducting diagnostic systematic reviews | Diagnostic |

| No filter development or evaluation | ||

| Eisinga 200737 | Did not develop or revise a filter | RCT |

| Kele 200538 | Did not develop and test a filter. Focus is on a comparison of database content/coverage | RCT |

| Kumar 200539 | Did not develop and test a filter. Focus is on a comparison of database content/coverage | RCT |

| McDonald 200240 | Did not develop and test a filter | RCT |

| Royle 200341 | Did not develop or revise a search filter | Economic |

| Did not evaluate a search filter | ||

| Focus is on sources used for searching for economic studies | ||

| Royle 200542 | Did not develop and test a filter | RCT |

| Royle 200743 | Methods used to develop filter not reported | RCT |

| Sassi 200244 | Methods used to develop search filter not reported | Economic |

| No gold standard – comparator is an ‘extensive search’ | ||

| Wilczynski 200945 | Focus is on the quality of indexing of systematic reviews and meta-analyses in MEDLINE | Systematic review |

Study details

Three studies included analyses of more than one search filter type: one study12 included details of a diagnostic filter and a secondary (systematic review) filter and two studies16,21 included details of both systematic review and RCT search filters. Thus, there were two studies examining economic search filters, seven studies examining diagnostic search filters, seven studies examining systematic review search filters and 10 studies examining RCT search filters.

The majority of the studies (n = 14)8–10,12–14,17–19,22,23,26,27,29 addressed the development of search filters for use with MEDLINE, 10 for the Ovid platform,8,9,13,14,17,19,22,23,27,29 three for PubMed12,18,26 and one for DataStar. 10 Six studies developed search filters for the EMBASE database,7,11,15,20,24,28 four for the Ovid platform,7,15,20,28 one for DataStar11 and one that used three different platforms (DataStar, Dialog and Ovid). 24 The remaining three studies developed search filters for the Cumulative Index to Nursing and Allied Health Literature (CINAHL),21 PsycINFO16 and the Latin American and Caribbean Health Sciences Literature (LILACS) database25 respectively. The CINAHL and PsycINFO search filters used the Ovid platform whereas the LILACS database was searched using an internet interface.

Internal gold standards

A reference standard is a set of relevant records against which a search filter’s performance can be measured. In some studies the reference standard is used both to derive and to test a search filter. In these cases the standard is described as an internal standard.

Almost all of the studies used an internal standard to derive and/or validate the search filters. Only three of the 23 studies did not include an internal standard. 18,26,29 These studies tested the search filters against external standards (see External standards). Seventeen7–11,13,15–17,19–21,23–25,27,28 of the 20 studies that included an internal standard had derived this standard by hand-searching journals. The number of journals searched ranged from 2 to 161. In the other three studies12,14,22 the internal standards were generated by a PubMed subject-specific search or from studies included in a number of systematic reviews, or from a database search [MEDLINE and the Cochrane Central Register of Controlled Trials (CENTRAL)]. One other study24 used a search of EMBASE as well as hand-searching of journals to derive an internal standard. The size of the gold or reference standards varied from 58 to 1587 records. In three studies, the reference standard was initially split into two, with one set used to derive the filter and the second set used to internally validate the performance. 17,19,23

Inter-rater reliability in selecting studies for inclusion in the reference standard was assessed for almost all of the studies produced by the McMaster Hedges team7,8,13,15–17,20,21,23,28 and exceeded 80% in every case. In one McMaster Hedges team study,8 articles were independently assessed by two reviewers with disagreement being resolved by a third independent reviewer. Two studies quoted inter-rater reliabilities of 71%12 and 81%27 after articles were assessed by two reviewers. Two further studies10,19 reported that articles were assessed by two reviewers, whereas one study11 reported that articles were assessed by one reviewer with 10% of articles assessed by a second reviewer and one study9 reported that articles were assessed by three researchers with discrepancies resolved through discussion. None of these studies reported values for inter-rater reliability. The remaining four studies that derived internal standards14,22,24,25 did not describe how the studies were selected.

Identifying candidate terms and combining them to create filters

In the 20 studies with internal standards, the internal standard records were used as a source for the identification of candidate search terms. Ten of these studies7,8,13,15–17,20,21,23,28 were carried out by the McMaster Hedges team and used essentially the same methodology for deriving search filters. This method involved the identification of index terms and text words from an internal standard of records as well as consultation with clinicians, librarians and other experts to add any other relevant terms. The individual terms identified were analysed for sensitivity and specificity and then terms with specified values of sensitivity and specificity were combined to create multiple-term search filters using the Boolean OR operator. The specified values for term inclusion varied for sensitivity and specificity from > 10% to > 75%. In one of the 10 studies23 stepwise logistic regression was also used to try to optimise search filter performance. The use of logistic regression, however, did not result in better-performing search filters than those developed simply using the Boolean OR operator and therefore this approach was not used in any of the subsequent studies.

Another study25 also identified terms from an internal standard and then combined terms with particular values for sensitivity, specificity and accuracy to derive multiple-term strategies to produce a maximally sensitivity strategy. Single terms with an individual sensitivity of > 20% and specificity and accuracy of > 60% were combined to give two-term strategies. Terms in the two-term strategies with sensitivity, specificity and accuracy of > 60% were then combined to give three- or four-term strategies. All terms in the three- and four-term strategies were then combined to give a maximally sensitivity strategy consisting of 10 terms. This final strategy was refined further by using the Boolean AND NOT operator to exclude single terms with zero sensitivity and high specificity. This increased the specificity of the final strategy while maintaining high sensitivity.

Five studies10,11,19,22,27 used bibliographic software to undertake a more formal frequency analysis of the terms in the internal standard. Two of these studies10,11 carried out word frequency analysis for all of the records in the internal standard and then created search strategies by combining those terms that had the highest scores as determined by multiplying the sensitivity and precision scores. Two studies19,22 used textual analysis of the internal standard records followed by discriminant analysis using logistic regression to determine the best terms to be included in the search strategy. The fifth study27 also used frequency analysis to identify candidate terms for building a search strategy.

Previously published filters were used as a source of terms for four studies. 9,12,14,24 These strategies were then further developed by adding extra medical subject heading (MeSH) and text terms identified from the internal standard records. In one of these studies24 the MeSH terms were first translated from a MEDLINE strategy into Emtree terms before adding additional Emtree terms and free-text terms identified from the internal standard records. This study also consulted experts for further suggestions. Individual terms were tested against the internal standard and those with a precision of > 40% and sensitivity of > 1% were added sequentially to develop the filter. Astin et al. 9 also used the sequential addition of search terms to develop the search filter.

Internal validation performance measures

The performance of the search filters was tested against the gold or reference standard in 19 studies7–17,19–21,23–25,27,28 to test internal validity. Nine studies7,13,15–17,20,21,23,28 carried out by the McMaster Hedges team reported the results for single-term and combined-term search strategies, whereas the remaining study8 from this team reported only the performance of combination-term strategies. Studies reporting single-term strategies included between one and six single-term strategies whereas the number of combination strategies reported varied between four and 14. The performance of strategies was usually reported in terms of high sensitivity, high specificity or optimised balance between sensitivity and specificity. The other nine studies9–12,14,19,24,25,27 tested between one and eight filters, with some single-term strategies but mostly combination strategies. The focus of these search filters was to produce highly sensitive, highly specific or highly precise outcomes.

The performance measures reported for internal validation are presented in Table 6. Sensitivity was reported by all 19 studies, precision was reported by 16 studies and specificity was reported by 14 studies. Accuracy was reported by seven studies and the number needed to read (NNR) by four studies. Positive likelihood ratio (LR+) values and fall-out were each only reported in a single study. All of the performance measures were presented in tables with the exception of one study,25 for which the results were presented in a figure that contained the full search strategy and values for sensitivity and specificity.

| Performance measure | Number of studies reporting the performance measure | Reference numbers of articles reporting the studies | Percentage of studies reporting the performance measure |

|---|---|---|---|

| Sensitivity | 19 | 7–17,19–21,23–25,27,28 | 100 |

| Specificity | 14 | 7–9,12,13,15–17,19–21,23,25,28 | 74 |

| Precision (or PPV) | 16 | 7–11,13,15–17,19–21,23,24,27,28 | 84 |

| Accuracy | 8 | 7,13,15,16,20,21,23,28 | 42 |

| NNR | 4 | 10–12,27 | 21 |

| LR+ | 1 | 12 | 5 |

| Fall-out | 1 | 27 | 5 |

External standards

Nine of the 23 studies used external standards to test or validate the search filters that had been developed or revised. 9,10,17–19,22,26,27,29 For these studies, a reference standard that was different from the one used to derive the search filter was used. These studies included studies of diagnostic test, systematic review and RCT filters. Four studies9,10,17,18 used hand-searching of journals to generate the external standard. The number of journals searched ranged from 1 to 161, resulting in between 53 and 332 records in the external standards. Two of these four studies17,18 increased the numbers in the external standard by adding records from a search of either the Cochrane Database of Systematic Reviews (CDSR) or the Database of Abstracts of Reviews of Effects (DARE).

Four22,26,27,29 of the other five studies that used external standards were of RCT search filters and one19 was of a systematic review search filter. Two of these studies27,29 identified records for their standards by searching systemic reviews (one searched 61 reviews from the CDSR29 and one27 searched seven systematic reviews of cluster RCTs). Another study26 searched for records in 11 journals in the CENTRAL database, generating 308 references. In the remaining RCT search filter study22 MEDLINE was searched to identify records that were assessed as being trials. In the study that examined a systematic review search filter19 models were tested using a validation data set and against a ‘real-world’ scenario using Ovid MEDLINE on compact disc, read-only memory (CD-ROM). The validation data set had been created from a hand-search of five journals. The results of this hand-search had been split into an internal test set (n = 256, 75%) and an external validation set (n = 89, 25%).

External validation performance measures

The performance of the search filters was tested against external standards in nine studies. 9,10,17–19,22,26,27,29 The performance measures reported for external validation are presented in Table 7. All nine studies reported sensitivity and seven of the nine studies reported precision. Two studies9,17 reported specificity and two10,29 reported the NNR (described as ‘article read ratio’ in one article). Two studies26,27 reported a single performance measure, that is, sensitivity only, three studies18,19,22 reported two performance measures and four studies9,10,17,29 reported three performance measures. The performance measures were again presented almost exclusively in tables, with one exception,26 in which the performance measures were simply discussed in the text of the article.

| Performance measure | Number of studies reporting the performance measure | Reference numbers of articles reporting the studies | Percentage of studies reporting the performance measure |

|---|---|---|---|

| Sensitivity | 9 | 9,10,17–19,22,26,27,29 | 100 |

| Specificity | 2 | 9,17 | 22 |

| Precision (or PPV) | 7 | 9,10,17–19,22,29 | 78 |

| NNR (article read ratio) | 2 | 10,29 | 22 |

Discussion

Methods used to develop and validate search filters

A total of 23 studies were included in this review. In the majority of these studies an internal gold or reference standard was used to develop the search filter by identifying candidate terms and assessing performance. The way in which terms were chosen for inclusion, however, and how the combinations were determined varied. The internal gold standards were mainly derived from journal hand-searches although a few were derived by other methods (from a database search or studies identified from systematic reviews). Ten of the studies were produced by the McMaster Hedges team and these all used the same method of search filter development, for example through consultation with experts and use of their internal gold or reference standard. Five other studies made use of statistical methods for filter development. The use of statistical methods helps to make the process more objective rather than depending on human expertise. In a few cases, the search filter was not developed using a gold standard or reference standard but was adapted from a previous search filter. Only nine studies undertook external validation, that is, validation against a standard that was different from the one used to develop the filter. As this provides an independent assessment of filter performance, it provides a more rigorous assessment and gives a better indication of how a filter is likely to perform in the real world.

Reported performance measures

Across the 23 studies included in the review, eight different performance measures were reported; however, as precision and positive predictive value (PPV) are equivalent, there were actually seven different performance measures. The performance measures used for internal and external validation and their frequency of use are listed in Tables 6 and 7 respectively. The most frequently reported performance measures were sensitivity, precision and specificity respectively.

All studies reported sensitivity, reflecting the importance of this measure when determining the usefulness of a search filter. As the filters are used to identify relevant articles, it is important to measure the number of relevant articles retrieved by the filter compared with the total possible number of relevant articles. When carrying out a systematic review, in which it is important to identify as many relevant studies as possible, it makes sense to use a search filter with a high sensitivity value.

The performance measures of specificity and precision were the next most reported measures. It is important that a search filter rejects non-relevant articles and thus a high specificity is desirable. In a well-performing search filter a high specificity value would be desirable as well as a high sensitivity value, as there would not be much point in using a filter that retrieves lots of non-relevant articles as well as all of the relevant articles. The articles in the review often included search filters that were optimised for the best balance of sensitivity and specificity.

As precision measures the number of relevant articles as a proportion of all articles retrieved, the aim is to maximise the precision of a search filter. As sensitivity and precision are, however, inversely related, it is difficult to achieve both high sensitivity and high precision. The NNR is another way of reporting precision as it is calculated by dividing 1 by the precision value. This measure gives the number of articles that need to be read to find one relevant article and may, therefore, be more easily understood than precision, which is usually quoted as a percentage value.

The accuracy performance measure was used only in articles produced by the McMaster Hedges team. It provides a measure of the number of articles that are classified correctly as either relevant or non-relevant. The usefulness of this measure on its own, however, is unclear as a high accuracy value may be obtained when the specificity value is high but the sensitivity value is medium or low. In most cases the accuracy value is close to the specificity value and does not give an indication of the sensitivity value.

The other two performance measures that were found (LR+ and fall-out) each appeared in one article. These performance measures were reported in addition to sensitivity and either specificity or precision.

Presentation of performance measures

The most commonly used format for the presentation of performance measures used for single studies of search filters was tables. Only two studies of RCT filters did not present the performance measures in tables. One of these studies presented the search strategy and its performance measures in a figure whereas the other study simply discussed the performance measures in the text of the article. Thus, tables seem to be a popular and useful way of presenting performance measures. Often the results are ordered in tables according to one of the performance measures, for example sensitivity, thus making it easy to identify the most sensitive and the least sensitive search filter. The studies often presented the performance measures in a number of tables to allow ordering by different performance measures, for example tables ordered by sensitivity or specificity or precision. This makes it easier to select a search filter for a specific need, for example researchers involved in performing systematic reviews requiring very sensitive search filters could select the most sensitive search filters whereas busy clinicians who are simply looking for some relevant articles could select a filter with the highest precision.

Key findings

-

Internal gold or reference standards were mostly derived by hand-searching of journals.

-

Validation of filters was mostly carried out using internal validation.

-

The most commonly used performance measures were sensitivity, precision and specificity.

-

The majority of the studies presented performance measures in tables.

Measures for comparing the performance of methodological search filters (review B)

Reproduced with permission from Harbour et al. 46 © 2014 The authors. Health Information and Libraries Journal © 2014 Health Libraries Journal. Health Information & Libraries Journal, 31, pp. 176–194.

Introduction

A variety of methodological search filters are already available to find RCTs, economic evaluations, systematic reviews and many other study designs. In principle, these filters can offer efficient, validated and consistent approaches to study identification within large bibliographic databases. Search filters, however, are an under-researched tool. Although there are many published search filters, few have been extensively validated beyond the data offered in the original publications. 47–49 This means that their performance in the real-world setting of day-to-day information retrieval across a range of search topics is unknown. 50 Furthermore, search filters are seldom assessed against common data sets, which makes a comparison of performance across filters problematic. Consequently, the use of search filters as a standard tool within technology assessment, guideline development and other evidence syntheses may be pragmatic rather than evidence based. 50,51

As search filters proliferate, the key question becomes how to choose between them. The most useful information to assist search filter choice is likely to be performance data derived from well-conducted and well-reported performance tests or comparisons. Methods exist to test search filter performance and to build the performance picture, including reviews of search filter performance. 48,49,52–54 There is no formal guidance, however, on the best methods for testing filter performance, on which performance measures are valued by searchers and on which measures should ideally be reported to assist searchers in choosing between filters. The performance picture for filters across different disciplines, questions and databases is therefore largely unknown. Different performance measures are reported in studies describing search filters and the process whereby searchers choose a filter remains unclear.

The purpose of this review was to consider the measures and methods used in reporting the comparative performance of multiple methodological search filters.

Objectives

This review addressed the following questions:

-

What performance measures are reported in studies comparing the performance of one or more methodological search filters in one or more sets of records?

-

How are the results presented in studies comparing the performance of one or more methodological search filters in one or more sets of records?

-

How reliable are the methods used in studies comparing the performance of methodological search filters?

-

Are there any published methods for synthesising the results of several filter performance studies?

-

Are there any published methods for reviewing the results of several syntheses?

Methods

Identification of studies

Studies were identified from the ISSG Search Filters Resource. 6 The ISSG Search Filters Resource is a collaborative venture to identify, assess and test search filters designed to retrieve health-care research by study design. It includes published filters and ongoing research on filter design, research evaluating the performance of filters and articles providing a general overview of search filters. At the time of this project, regular searches were being carried out in a number of databases and websites, and tables of contents of key journals and conference proceedings were being scanned to populate the site. Researchers working on search filter design are encouraged to submit details of their work. The 2010 update search carried out by the UK Cochrane Centre to support the ISSG Search Filters Resource website was also scanned to identify any relevant studies not at that time included on the website. We acknowledge that there has been a regrettable delay between carrying out the project, including the searches, and the publication of this report, due to serious illness of the principal investigator. The searches were carried out in 2010/2011.

Inclusion criteria

For the purpose of this review, methodological search filters were defined as any search filter or strategy used to identify database records of studies that use a particular clinical research method. A pragmatic decision was taken to include only studies comparing the performance of filters for RCTs, DTA studies, systematic reviews or economic evaluation studies. These study types are the ones most commonly used by organisations such as NICE to underpin their decision-making when producing technology appraisals and economic evaluations of health-care technologies and subsequent clinical guidelines.

Studies were selected for inclusion in the review if they compared the performance of two or more methodological search filters in one or more sets of records. Studies reporting the development of new methodological filters whose performance was compared with that of previously published filters were also included.

Exclusion criteria

Studies were excluded from the review if they:

-

reported the development and initial testing of a single search filter that did not include any formal comparison with the performance of other search filters

-

compared methodological search filters that had not been designed to retrieve RCTs, DTA studies, systematic reviews or economic evaluation studies

-

compared the performance of a single filter in multiple databases or interfaces

-

were not available as a full report, for example conference abstracts

-

were protocols for studies or reviews

-

lacked sufficient methodological detail to undertake the data extraction process.

Data extraction and synthesis

A data extraction form was developed by two reviewers (JH, CF) to standardise the extraction of data from the selected studies and allow cross-comparisons between studies. Details extracted included the methods used to identify published filters for comparison, the methods used to test filter performance and the performance measures reported. Data extraction for each study was carried out by one reviewer (JH) and verified by a second reviewer (CF). A narrative synthesis was used to summarise the results from the review.

Results

Twenty-one studies were identified as potentially meeting the inclusion criteria for this review based on titles and abstracts2,10,14,15,17,19,22,23,25,33,48,49,55–63 Of these studies, 10 reported the development of one or more search filters, whose performance was then compared against the performance of existing filters10,14,15,17,19,22,23,25,56,57 and 11 reported the comparative performance of existing filters. 2,33,48,49,55,58–63 On receipt of the full articles, three studies55,60,62 were excluded from the review based on the criteria outlined in the methods section. The 18 included studies are listed in Tables 8 and 9 and the excluded studies are listed in Table 10. No studies were identified that synthesised the results of several performance reports or reviewed the results of several syntheses.

| Study | How were filters identified for comparison? | What study type was the filter designed to retrieve? | Total number of included filters (number of included filters developed by the author) | Database in which filters were tested |

|---|---|---|---|---|

| Bachmann 200210 | Published filters | DTA studies | 2 (1) | MEDLINE |

| Boynton 199856 | Published filters | Systematic reviews | 15 (11) | MEDLINE |

| Corrao 200633 | Published filters, author-modified strategy | RCTs | 2 | MEDLINE |

| Devillé 200057 | Published filters | DTA studies | 5 (4) | MEDLINE |

| Doust 200558 | Published filters | DTA studies | 5 | MEDLINE |

| Glanville 200622 | Published filters | RCTs | 12 (6) | MEDLINE |

| Glanville 200959 | Websites, contact with experts | Economic evaluations | 22 | MEDLINE and EMBASE |

| Haynes 200523 | Websites, published filters | RCTs | 21 (2) | MEDLINE |

| Leeflang 200648 | Database search | DTA studies | 12 | MEDLINE |

| Manríquez 200825 | Published filters | RCTs | 2 (1) | LILACS |

| McKibbon 200961 | Database search, websites, published filters | RCTs | 38 | MEDLINE |

| Montori 200517 | Published filters | Systematic reviews | 10 (4) | MEDLINE |

| Ritchie 200749 | Database search, contact with experts, published filters | DTA studies | 23 | MEDLINE |

| Vincent 200314 | Database search, websites | DTA studies | 8 (3) | MEDLINE |

| White 200119 | Published filters | Systematic reviews | 7 (5) | MEDLINE |

| Whiting 2011 (online 2010)2 | Contact with experts, database search, published filters | DTA studies | 22 | MEDLINE |

| Wilczynski 200515 | Published filters | DTA studies | 4 (2) | EMBASE |

| Wong 200663 | Published filters | RCTs and systematic reviews | 13 | MEDLINE and EMBASE |

| Study | Filters included | Tested in | Identification of filters | Filter translation | Gold standard | Method of testing | Measures reported |

|---|---|---|---|---|---|---|---|

| Studies reporting on the comparative performance of published filters | |||||||

| Corrao 200633 | Two RCT filters | PubMed | PubMed Clinical Queries specific therapy filter and authors’ modified version: addition of term “randomised [Title/Abstract]” | Not required | None | Retrieved citations ‘formally checked’ to confirm RCT study design | Number retrieved that were confirmed RCTs, precision, retrieval gain (absolute and percentage) |

| Doust 200558 | Five DTA study filters | MEDLINE (WebSpirs) | Published strategies for diagnostic systematic reviews (no further details given) | Reports conversion from PubMed to MEDLINE (WebSpirs) for one filter. Reproduced terms used for all filters but did not discuss translation | Included studies from two systematic reviews. Studies identified from MEDLINE search using Clinical Queries diagnostic filter and reference check – 53 records | Filter terms, complete filter and filter plus original subject searches for reviews. Did not report date searched | Sensitivity/recall, precision |

| Glanville 200959 | 14 MEDLINE economic evaluation study filters; eight EMBASE economic evaluation study filters | MEDLINE and EMBASE (Ovid) | Consulted websites and experts | Strategies adapted for Ovid ‘as necessary’ and reported in supplementary table | Records coded as economic evaluations in NHS EED (2000, 2003, 2006) and indexed in MEDLINE or EMBASE – MEDLINE 1955 records, EMBASE 1873 records | Filters run in MEDLINE and EMBASE for the same years as the gold standard with and without exclusions (animal studies and publication types unlikely to yield economic evaluations) | Sensitivity, precision |

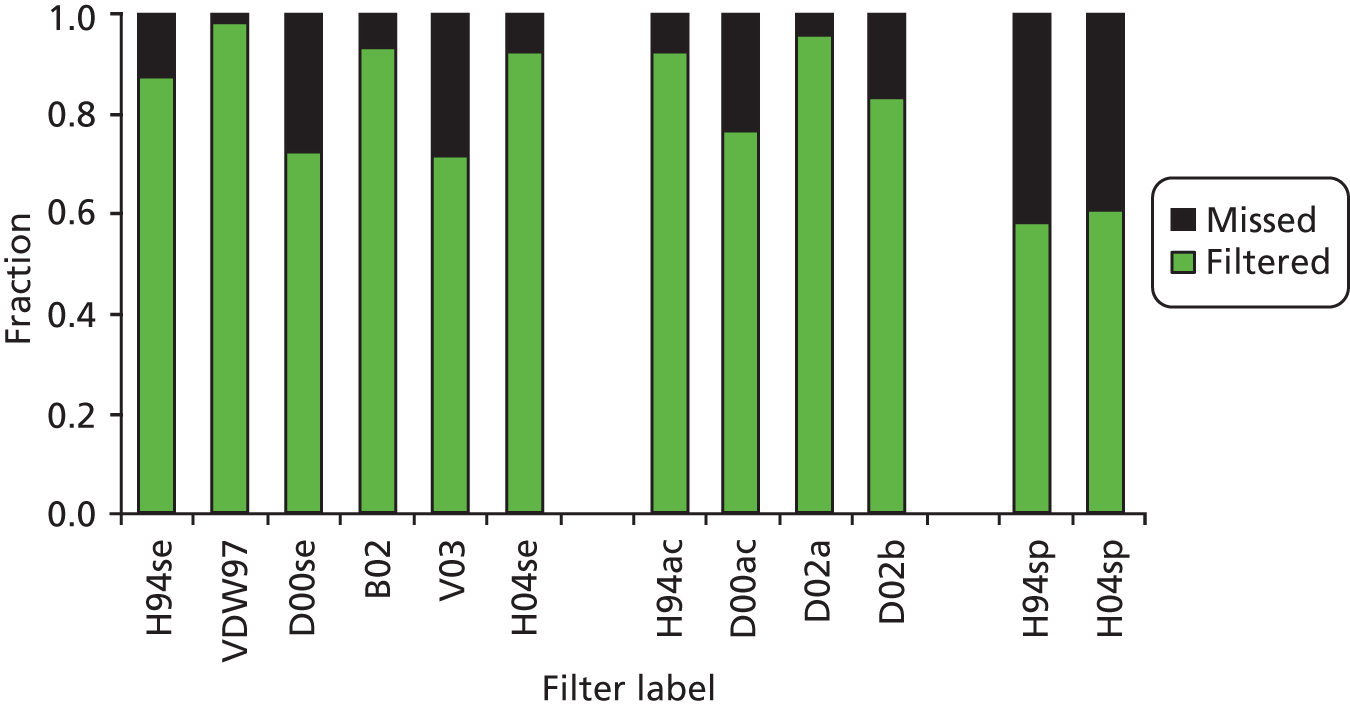

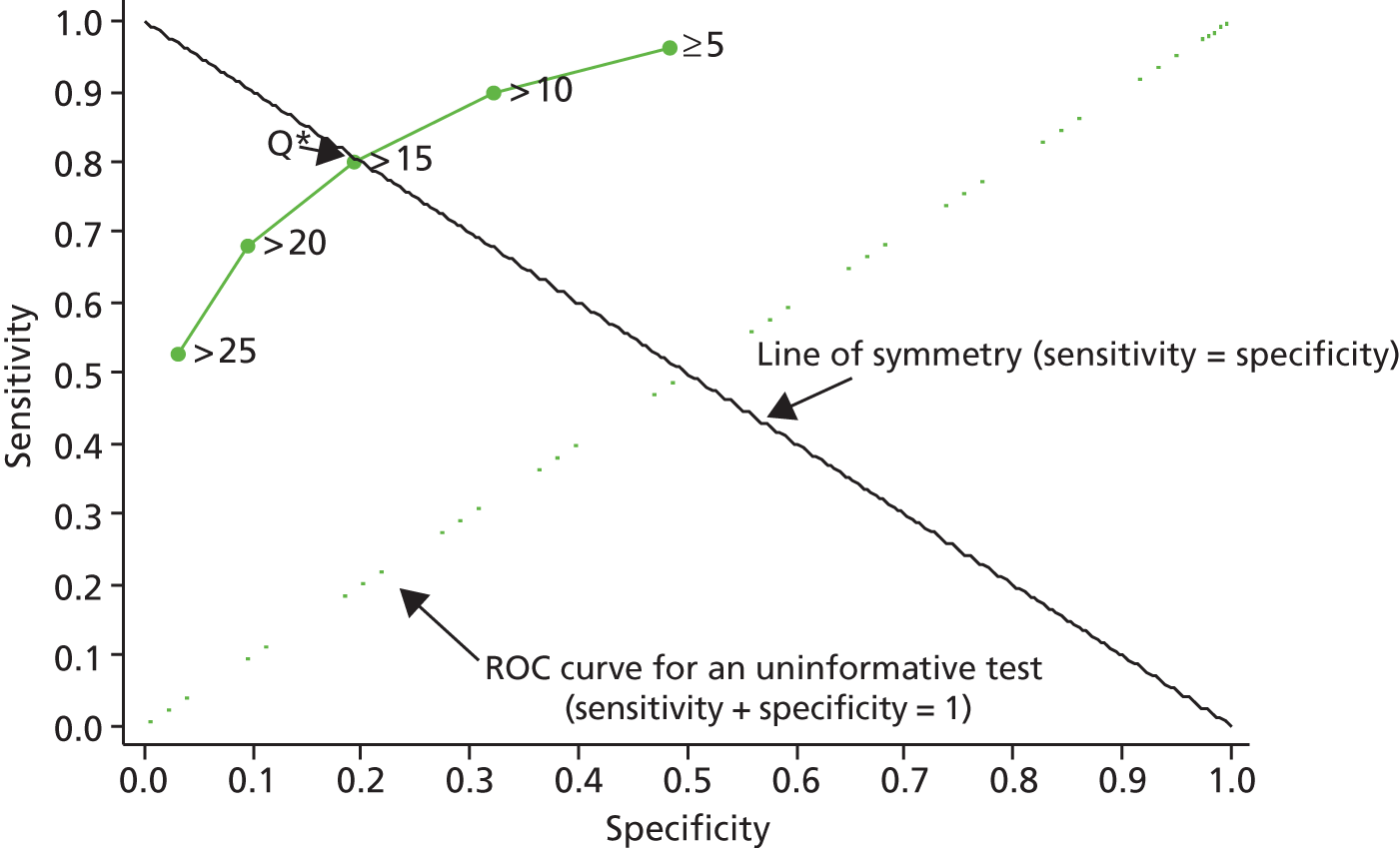

| Leeflang 200648 | 12 DTA study filters | PubMed | MEDLINE, EMBASE and Cochrane Methodology Register searches. When multiple filters were reported selected highest sensitivity, highest specificity and highest accuracy filters according to the original author(s) | Strategies adapted for PubMed. Translations reported in full | Included studies from 27 systematic reviews – 820 records | Filters run against PubMed records. Replicated original searches for six reviews with the addition of filters and using the same time frame | NNR, proportion of original articles missed, average proportion of retrieved and missed gold standard records per filter (bar chart), proportion of articles not identified per year (graph) |

| McKibbon 200961 | 38 RCT filters | MEDLINE (Ovid) | Database (PubMed) searches, web searches, consulted websites, reviewed bibliographies, personal files | Strategies translated for Ovid. Translated filters reported in appendix | Hand-searching of 161 journals in 2000 – 1587 records of RCTs | Filters run in Clinical Queries Hedges database (49,028 MEDLINE records from hand-searched journals) | Sensitivity/recall, precision, specificity, confidence intervals reported |

| Ritchie 200749 | 23 DTA study filters | MEDLINE (Ovid) | MEDLINE search, personal files, contacted experts | Reports one strategy translated from SilverPlatter to Ovid | Included studies from one review indexed in MEDLINE – 160 records | Replicated original review search (noted small discrepancy in results) with addition of filters | Sensitivity/recall, precision, number of records retrieved |

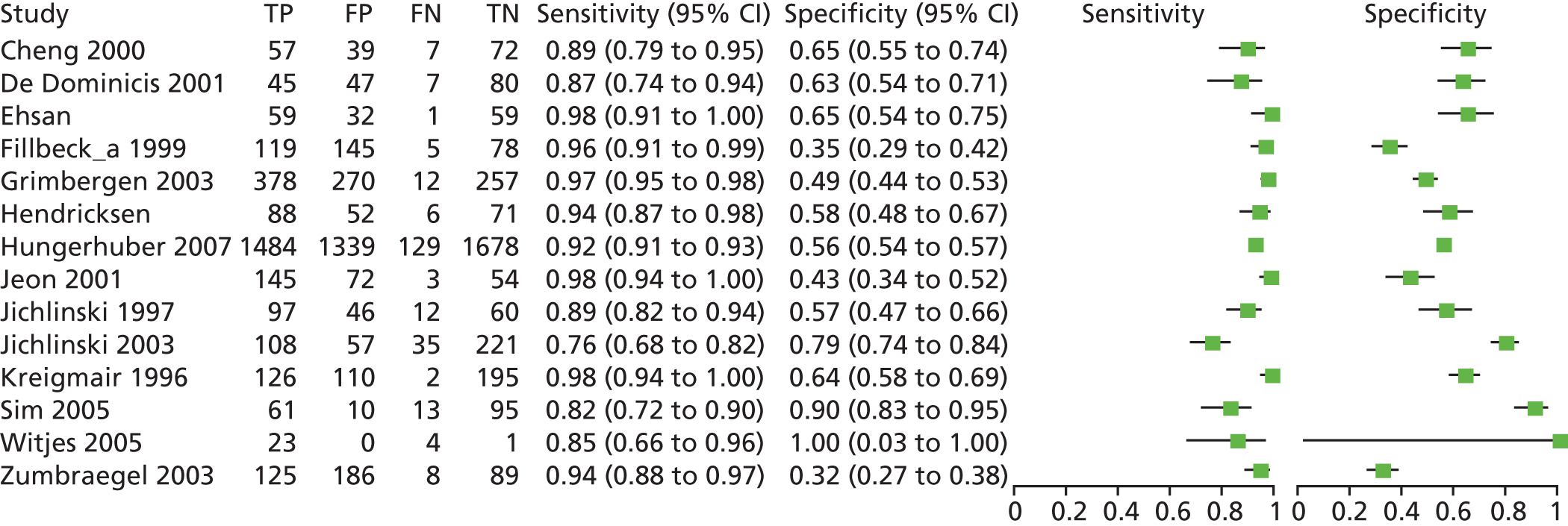

| Whiting 2011 (2010 online)2 | 22 DTA study filters | MEDLINE (Ovid) | MEDLINE (Ovid) search, consulted experts | Details of translations to MEDLINE (Ovid) syntax reported as an appendix | 506 references from seven systematic reviews of test accuracy studies that had not used methodological filters in the original search strategy | Compared performance of subject searches with that of filtered searches | Sensitivity/recall, precision, NNR, number of missed records, confidence intervals reported |

| Wong 200663 | Three MEDLINE RCT filters; three EMBASE RCT filters; three MEDLINE systematic review filters; four EMBASE systematic review filters | MEDLINE and EMBASE (Ovid) | Strategies developed by the authors and previously published | Not required | Hand-searching of 161 journals for MEDLINE and 55 for EMBASE. Not an external gold standard. RCT records: MEDLINE 930, EMBASE 1256; systematic review records: MEDLINE 753, EMBASE 220 | None – reanalysis comparing results of previous publications | Sensitivity/recall, precision, specificity, confidence intervals reported |

| Studies reporting on the development of one or more filters and their performance in comparison to the performance of previously published filters | |||||||

| Bachmann 200210 | Two DTA study filters, one developed (highest sensitivity × precision) and one published (Haynes 199464) | MEDLINE (DataStar) | PubMed Clinical Queries (Haynes 199464) | Did not discuss translation or reproduce Haynes64 strategy used | Hand-search of four journals from 1994 (53 records) and four different journals from 1999 (61 records) | External validation: direct comparison of developed filter and current PubMed filter | Sensitivity/recall, precision, NNR (for developed filter only), confidence intervals reported |

| Boynton 199856 | 15 systematic review filters, 11 developed and four published | MEDLINE (Ovid) | Not specified other than published strategies using Ovid Interface | Translation not required | Hand-searching of six journals from 1992 and 1995 – 288 records | Internal validation: compared filter performance against a ‘quasi-gold standard’ | Sensitivity/recall (described as cumulative), precision (described as cumulative), total articles retrieved, number of relevant articles retrieved |

| Devillé 200057 | DTA study filters – internal validation: four developed and one published (Haynes 199464 sensitive strategy); external validation: one developed (most sensitive) and one published (Haynes 199464 sensitive strategy) | MEDLINE (interface unspecified) | Only extensive article on diagnostic filters (Haynes 199464) | Not specified but Haynes64 filter reproduced | Internal validation set: hand-search of nine family medicine journals indexed in MEDLINE (1992–5); database search of MEDLINE (1992–5) to create the ‘control set’ – 75 records in the gold standard, 137 records in the ‘control set’. External validation set: 33 articles on physical diagnostic tests for meniscal lesions; no further details supplied | Internal and external validation: compared retrieval of published and developed strategies | Internal validation: sensitivity/recall, specificity, DOR, confidence intervals reported. External validation: sensitivity/recall, predictive value |

| Glanville 200622 | 12 RCT filters, six developed and six published | MEDLINE (Ovid) | Published strategies reporting > 90% sensitivity and with > 100 records in the gold standard used for development | Not specified and filters not reproduced | Database search of MEDLINE (Ovid) (2003) using four clinical MeSH terms. Results assessed to identify indexed and non-indexed trials – 424 records | External validation: compared retrieval in MEDLINE of four clinical MeSH terms with retrieval for each comparator filter | Sensitivity/recall, precision |

| Haynes 200523 | 21 RCT filters, two developed (best sensitivity, best specificity) and 19 published | MEDLINE (Ovid) | University filters website and known published articles. Selected strategies that had been tested against gold standards based on a hand-search of published literature and for which MEDLINE records were available from 1990 onwards | Not specified and filters not reproduced | Hand-searching of 161 journals from 2000 – 657 records | External validation: compared performance but full results not presented | Sensitivity/recall, specificity |

| Manríquez 200825 | Two RCT filters, one developed and one published (Castro 199965) | LILACS | Not specified | Not required (both developed and published filters designed for LILACS) | Hand-searching of 44 journals published between 1981 and 2004 and indexed in LILACS – 267 records | Internal validation: compared ability to retrieve clinical trials included in the gold standard from the LILACS interface | Sensitivity/recall, specificity, precision, confidence intervals reported |

| Montori 200517 | 10 systematic review filters, four developed and six published | MEDLINE (Ovid) | ‘Most popular’ published filters | Not specified and filters used not reproduced | Hand-searching of 161 journals indexed in MEDLINE in 2000 – 735 records | External validation: compared filters against validation standard | Sensitivity/recall, precision, specificity, confidence intervals reported |

| Vincent 200314 | Eight DTA study filters, three developed and five published | MEDLINE (Ovid) | Consulted websites, database search of MEDLINE | Not discussed but filters reproduced | References from 16 systematic reviews – 126 records | Internal validation: compared sensitivity of developed and published strategies using reference set of MEDLINE records | Sensitivity/recall |

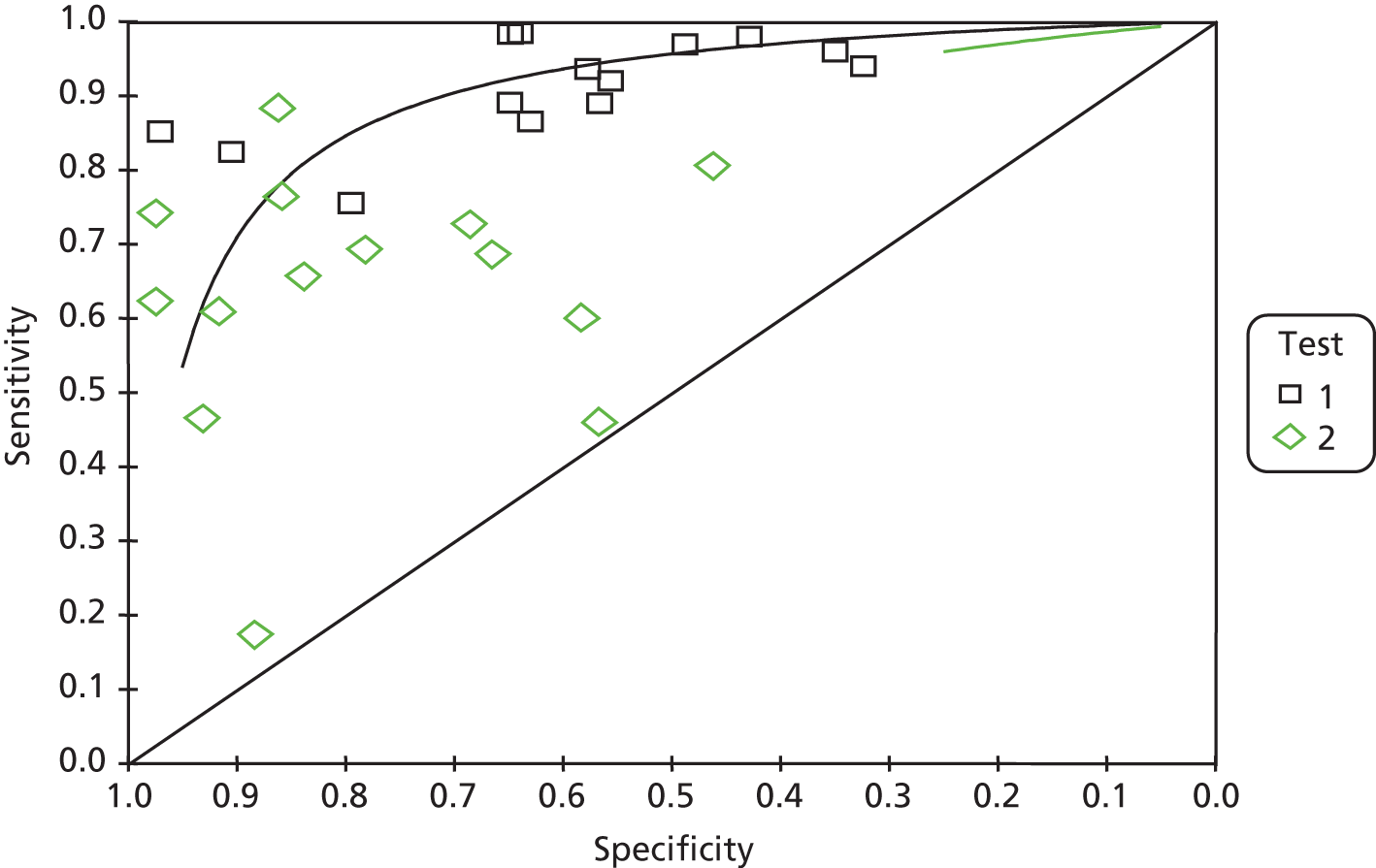

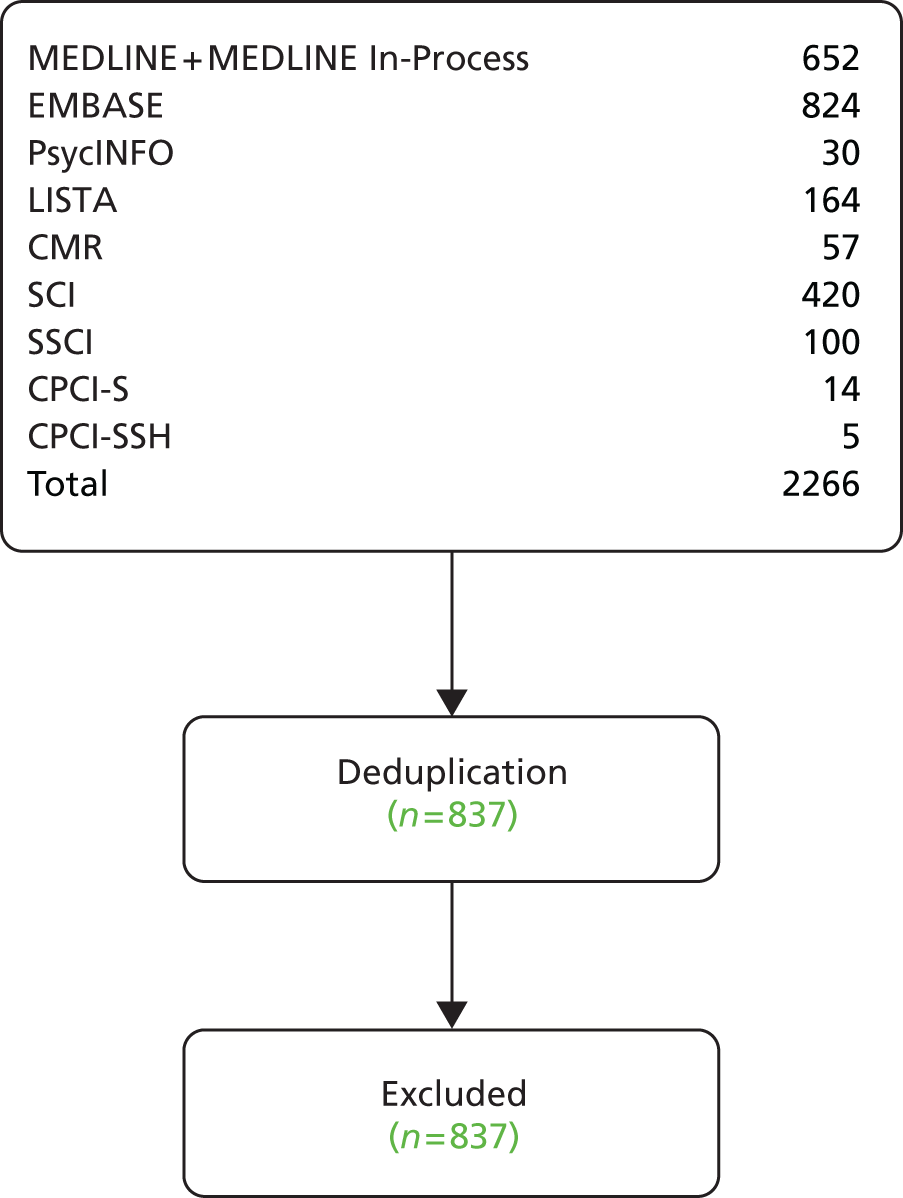

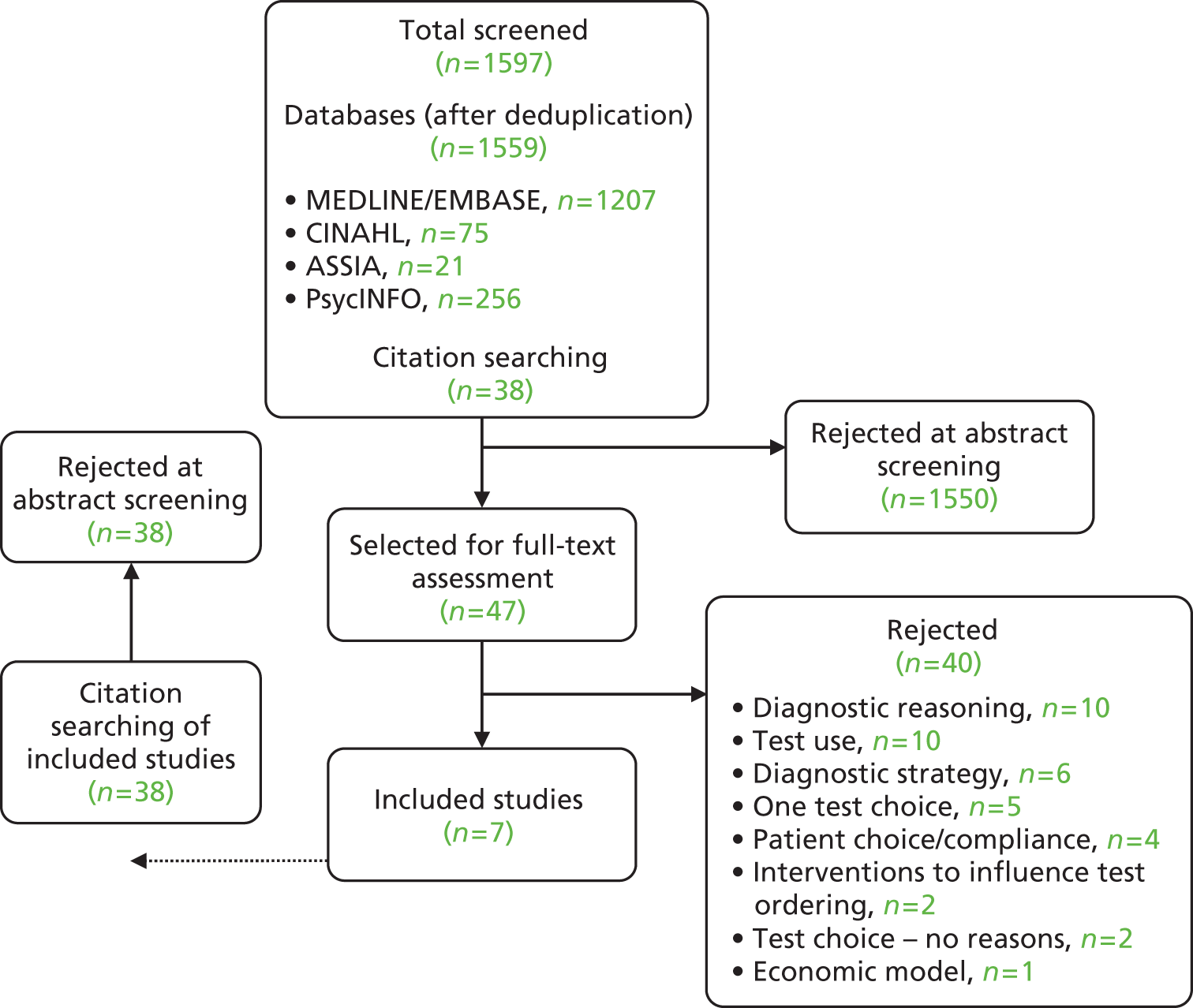

| White 200119 | Seven systematic review filters, five developed and two published | MEDLINE (Ovid CD-ROM 1995–September 1998) | Not specified | Translated some filters from MEDLINE (Dialog) to MEDLINE (Ovid) syntax | Hand-searching of five journals from 1995 and 1997; quasi-gold standard of systematic reviews – 110 records | Internal validation: compared performance in the ‘real-world’ search interface using quasi-gold standard | Sensitivity/recall, precision |