Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number NIHR127666. The contractual start date was in November 2018. The draft report began editorial review in June 2019 and was accepted for publication in November 2019. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Declared competing interests of authors

Sian Taylor-Phillips, Chris Stinton and Hannah Fraser are partly supported by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care West Midlands at University Hospitals Birmingham NHS Foundation Trust. Sian Taylor-Phillips is funded by a NIHR Career Development fellowship (reference CDF-2016-09-018).

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2020. This work was produced by Fraser et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.


Chapter 1 Introduction

Description of the health problem

Sore throat is a common condition;1,2 clinical descriptions of acute sore throat include acute pharyngitis and tonsillitis, which are both infections of the upper respiratory airway affecting the mucosa. 3,4 In a Scottish survey, 31% of respondents reported having experienced a severe sore throat in the previous 12 months. 1 Symptoms of sore throat include pain in the throat and may also include fever or headache; however, not all patients will require or seek medical advice and/or treatment for these symptoms. An analysis of UK primary care use data identified a reduction of diagnosed episodes of sore throat in the UK between 1993 and 2001. 2 This finding may suggest changes in patient behaviour regarding self-care, changes in general practitioner (GP) diagnosis and recording of sore throat or an actual change in the prevalence of sore throat, although there is no evidence to support these theories. Despite this reduction, sore throat and other respiratory tract infections (RTIs) remain a common reason for primary care use; one-quarter of the population will visit their GP because of a RTI each year. 5

In the UK, diagnosis of sore throat is currently based mainly on clinical assessment and it is recommended by the National Institute for Health and Care Excellence (NICE) that the FeverPAIN6 or Centor7 criteria are also used. The FeverPAIN and Centor tools were designed to predict group A Streptococcus (strep A) (Centor, FeverPAIN), group C Streptococcus (strep C) (FeverPAIN) and group G Streptococcus (strep G) (FeverPAIN),6,7 and have been proposed as methods by which clinicians can identify which patients are most likely to benefit from antibiotic use for sore throat. 8 This is because sore throat is often a self-limiting illness; most cases have a viral aetiology and, therefore, antibiotics would not be an effective treatment in these instances. In addition, as antibiotics reduce the duration of symptoms by only a very short period, this must be traded off against the side effects. Around 5–17% of sore throats are due to a bacterial infection, typically group A beta-haemolytic Streptococcus, also known as ‘Streptococcus pyogenes’, ‘group A Streptococcus’, ‘GAS’ or ‘strep A’. 5,8 Expert advice suggests that bacterial sore throat can also be caused by group C and group G streptococci; however, strep A is thought to account for around 80% of bacterial throat infections, and groups C and G streptococci are thought to account for around 20%. Most cases of strep A infection resolve without complications and, in fact, many people carry the bacterium without experiencing illness. Despite these factors, most patients presenting with sore throat in the UK will be given antibiotics in primary care. 9,10 Although rates of antibiotic prescribing for sore throat declined between 1993 and 2001, more recent prescribing data, for 2011, remain close to the 2001 figure, with median practice-prescribed antibiotics for sore throat at 60%. 8 RTIs, which include sore throats, account for a large proportion of antibiotic use in general practice in the UK (approximately 60%). 8

There are clinical and epidemiological reasons why clinicians may prescribe antibiotics for sore throat in the absence of microbiological confirmation. The first is practical. The current reference standard, culture of the bacteria grown from a throat swab, takes > 18 hours for a result to be obtained. 11 Where clinicians suspect strep A infection based on clinical judgement and use of the FeverPAIN or Centor criteria, there is an opportunity to reduce the risk or harm caused by complications such as tonsillitis, pharyngitis, scarlet fever, impetigo, erysipelas (an infection in the upper layer of the skin), glomerular nephritis, rheumatic fever, cellulitis and pneumonia. Some vulnerable patient groups, such as those who are immunocompromised, are at a higher risk of developing invasive strep A infection. To prevent onward transmission, current Public Health England (PHE) guidance on invasive strep A infection management12 indicates use of antibiotics in close contacts of people who have invasive strep A infection if they have symptoms of strep A infection, such as sore throat, themselves or are in a particular risk group or setting. 11 Although these factors must be considered in understanding the reasons for use of antibiotics to treat sore throat in the absence of more accurate diagnosis, another factor that has an impact on use is patient demand. Although patient attendances for minor ailments at GP surgeries have reduced, when patients do visit their GP there is an expectation of intervention, and this is increasingly the case. 11 Furthermore, RTIs account for a high proportion of working days lost in the UK – in 2016, almost one-quarter (24.8%, 34 million days) – so ensuring that patients receive appropriate and timely treatment also has an economic impact on the UK economy and on patients. 13 However, this rationale and the demands need to be balanced with the aforementioned statistics regarding the low prevalence of bacterial infection in sore throat and the risk of antimicrobial resistance (AMR).

Overuse or inappropriate use of antibiotics can lead to bacteria developing resistance, leading to an emergence of multidrug-resistant pathogens, which are increasingly difficult to treat. AMR could contribute to an estimated 10 million deaths every year globally by 2050 and a global productivity cost of £66T. 11 In response to this threat, ‘antimicrobial stewardship’ has been a central strategy adopted by the Chief Medical Officer and NICE. 11,14 Point-of-care testing in primary care has been recognised as an emerging technology for aiding targeted antibiotic prescribing in cases of sore throat, by supporting clinicians with diagnosis and communicating appropriate use of antibiotics to patients. 15 Several technologies have been developed for point-of-care testing in primary care for appropriate administration of antibiotics to those who would benefit and to prevent delay and associated complications.

The NICE Diagnostics Advisory Committee is tasked with providing guidance to the NHS about the use of point-of-care tests for the detection of strep A in sore throat infections. To inform the Diagnostics Advisory Committee, the External Assessment Group (EAG) has provided this assessment of the clinical accuracy and cost-effectiveness of point-of-care tests for the detection of strep A as a replacement or adjunct for standard assessment procedures. The potential value of the point-of-care tests is in rapidly determining the presence and nature of a bacterial infection.

Aetiology and pathology

Most sore throats are caused by an infection, mainly viral, and so are typically spread from person to person via respiratory droplets; non-infectious causes are uncommon. 3 In the case of infectious causes, viruses, bacteria or fungi invade the upper respiratory mucosa, causing a local inflammatory response. 4 Complications associated with sore throat caused by infection are rare; however, strep A infection has a small risk of the following complications:3

- otitis media
- acute sinusitis
- peritonsillar abscess
- rheumatic fever and post-streptococcal glomerulonephritis are also complications associated with strep A throat infection; however, these are extremely rare in developed countries
- invasive strep A, if the bacteria move from the throat into a sterile body site (which can lead to severe infections, sepsis and streptococcal toxic shock syndrome).

Children are most likely to carry or be infected by strep A; however, people aged > 65 years or those whose immune system is compromised (e.g. people living with a human immunodeficiency virus infection, diabetes mellitus, heart disease or cancer and people using high-dose steroids or intravenous drugs) are at higher risk of developing an invasive strep A infection. 16

A Fusobacterium necrophorum infection affecting the pharynx or tonsils can (very rarely) lead to Lemierre’s syndrome (sepsis and jugular vein thrombosis). 3

Diagnosis and care pathway

Figure 1 depicts the care pathway for assessing and treating a sore throat as outlined in the NICE antimicrobial prescribing guidance on sore throat [NICE Guidance (NG) number 84 (NG84)]. 8 Most uncomplicated sore throats are managed without seeking medical advice and tend to resolve within 1 week. 10 Suggested conservative measures include simple analgesia, maintaining hydration, salt gargling and throat lozenges. In selected cases in which a GP, or a pharmacist or a health-care practitioner in the secondary care setting, such as in accident and emergency, feels that the patient may benefit from antibiotics, the prescriber should apply either the FeverPAIN or the Centor scores to guide their decision-making. The NICE antimicrobial prescribing guideline on acute sore throat does not make any recommendations about using point-of-care tests or throat cultures to confirm strep A infection. 8

FIGURE 1. Diagnostic and care pathway for managing acute sore throat in patients who are not at high risk of complications.

Significance to the NHS and current service cost

The significance of sore throat and inappropriate use of antibiotics to the NHS broadly falls into two categories: the first is associated with health-care use directly owing to sore throat and the second is the impact of inappropriate use of antibiotics contributing to AMR.

Respiratory tract infections, including sore throat, account for a large proportion of primary care use and antibiotic prescribing. 10 However, there is already evidence that the majority of patients prefer to self-medicate minor ailments, such as sore throat, where they feel able to do so. 1,2,14 When patients do attend, a visit to a general practice for diagnosis and treatment of sore throat incurs the cost of the visit and of any treatment prescribed. In addition, in the current system, in which GPs can use the FeverPAIN or Centor criteria to inform antibiotic prescribing, there is the potential cost of additional health-care use for patients whose condition does not improve or who develop complications owing to ineffective or no treatment being prescribed. The risk of complications, however, is low and current prescribing activity suggests overuse rather than underuse of antibiotics for sore throat. Another cost associated with the current system is the laboratory cost incurred where the reference standard for diagnosis is used, namely throat swabs sent for culture.

Although these costs and the impact of minor ailment use on the NHS are key considerations, the primary aim of the intervention being considered is to reduce inappropriate antibiotic prescribing. Doing so could support a reduction in promoters of AMR. The main antibiotic prescribed by general practice is penicillin, and this is the first-line treatment currently recommended by NICE for suspected strep A throat infection. 8,15 Across Europe, an estimated 25,000 people die each year as a result of hospital infections caused by the five most common resistant bacteria, and a parliamentary report estimated the cost to the NHS to be £180M per year. 17 Although it is often possible at present to use alternative treatments to treat resistant infection, the costs of treatment and risk of mortality for a resistant infection are likely to be approximately double those for a non-resistant infection. 17 One study investigating the cost of a 10-month outbreak of a type of antibiotic-resistant bacteria (carbapenemase-producing enterobacteria) found the total cost to be close to £1M. The main cost was missed revenue from cancellation of planned surgical procedures owing to ward closures and lack of bed space. Other costs were associated with additional staff time, increased length of patient stay in hospital, screening, bed and ward closure, contact precautions, anti-infective costs, hydrogen peroxide vapour decontamination and ward-based monitors. 17 In addition to health-care costs and risk of litigation associated with AMR-related harm, there is a wider societal cost of lost productivity and reduced quality of life for patients suffering the effects of AMR infections.

Clear definition of interventions

There are rapid tests for the strep A bacterium, which are intended to be used in addition to clinical scoring systems, such as FeverPAIN and Centor. The purpose is to increase diagnostic confidence in a suspected strep A infection, to guide antimicrobial prescribing decisions in people presenting with an acute sore throat and to contribute to improving antimicrobial stewardship. The tests may be suitable for use in all settings where patients may present with an acute sore throat; these include both primary and secondary care, and community pharmacies. 11

Twenty-one rapid tests for strep A detection are available. The tests use either immunoassay detection methods [rapid antigen detection tests (RADTs)] or molecular methods [polymerase chain reaction (PCR) or isothermal nucleic acid amplification]. The tests listed in the following section were identified from the NICE scope on point-of-care testing in primary care for strep A infection in sore throat.

Comparative technical overview of the point-of-care tests for group A Streptococcus

Seventeen RADTs were identified, and their product properties are summarised in Table 1. Of these, 16 tests use lateral flow techniques (immunochromatographic or immunofluorescent assays) and one test is a turbidimetric immunoassay. The type of information provided by each of the manufacturers is summarised in Appendix 14. For each test, the limit of detection is defined as the lowest concentration of strep A in a sample that can be distinguished from negative samples.

| Product | Test format and supply | Method | Limit of detection | Description of results | Time to result (minutes)ᵃ |
|---|---|---|---|---|---|
| Clearview® Exact Strep A cassetteᵇ (Abbott Laboratories, Lake Bluff, IL, USA) | 25 individually pouched test cassettes | Lateral flow (immunochromatography) | 5 × 10⁴ organisms/test | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| Clearview Exact Strep A dipstick – test stripᵇ (Abbott Laboratories, Lake Bluff, IL, USA) | 25 test kits; dipstick | Lateral flow (immunochromatography) | 5 × 10⁴ organisms/test | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| BD Veritor Plus system group A strep assay – cassette (Becton Dickinson and Company, Sparks, MD, USA) | 30 test kits; test cassette | Lateral flow (immunochromatography) | Strain 12384: 1 × 10⁵ CFU/ml; strain 19615: 5 × 10⁴ CFU/ml; strain 25663: 2 × 10⁵ CFU/ml | Analysed by a BD Veritor system analyser module. Results are displayed visually | 5 |
| Strep A Rapid Test – cassette (Biopanda Reagents, Belfast, UK) | 20 test cassettes | Lateral flow (immunochromatography) | 1 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| Strep A Rapid Test – test strip (Biopanda Reagents, Belfast, UK) | No information provided | Lateral flow (immunochromatography) | 1 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| NADAL® Strep A – test strip (nal von minden GmbH, Regensburg, Germany) | 40 test strips including controls, 50 test strips (tube) including controls, as well as positive and negative control vials | Lateral flow (immunochromatography) | 1.5 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| NADAL Strep A – cassette (nal von minden GmbH, Regensburg, Germany) | 20 test cassettes including controls as well as positive and negative control vials | Lateral flow (immunochromatography) | 1.5 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| NADAL Strep A plus – cassette (nal von minden GmbH, Regensburg, Germany) | 20-pack cassettes including controls and five-pack cassettes including controls | Lateral flow (immunochromatography) | 1.5 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| NADAL Strep A plus – test strip (nal von minden GmbH, Regensburg, Germany) | 40 test strips | Lateral flow (immunochromatography) | 1.5 × 10⁵ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| NADAL Strep A scan test – cassette (nal von minden GmbH, Regensburg, Germany) | 20-pack cassettes including controls | Lateral flow (immunochromatography) | 1.5 × 10⁵ organisms/swab | Extracted solution is placed into the test cassette, with the Colibri placed on top. Analysed using a Colibri reader and Colibri USB and software (nal von minden GmbH, Regensburg, Germany) | 5 |
| OSOM Strep A test – test strip (Sekisui Diagnostics, Burlington, MA, USA) | 50-test pack | Lateral flow (immunochromatography) | Not known | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| QuikRead Go Strep A test kit (Orion Diagnostica, Espoo, Finland) | 50 tests including controls | Turbidimetric immunoassay | 7 × 10⁴ CFU/swab | Analysed using the QuikRead Go instrument | < 7ᵃ |
| Alere TestPack +Plus Strep A – cassette (Abbott Laboratories, Lake Bluff, IL, USA) | 20 or 40 tests | Lateral flow (immunochromatography) | Not known | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| bioNexia® Strep A plus – cassette (bioMérieux, Marcy-l’Étoile, France) | 25 test cassettes | Lateral flow (immunochromatography) | 1 × 10⁴ organisms/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| bioNexia Strep A dipstick – test strip (bioMérieux, Marcy-l’Étoile, France) | 25 test strips | Lateral flow (immunochromatography) | Not known | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| Biosynex Strep A – cassette (Biosynex, Illkirch-Graffenstaden, France) | Not reported | Lateral flow (immunochromatography) | 1 × 10⁵ bacteria/swab | Positive results are indicated by two lines: one in the control region and the other in the test region. Read by visual inspection | 5 |
| Sofia Strep A FIA (Quidel, San Diego, CA, USA) | 25 cassettes, including positive and negative control vials | Lateral flow (immunofluorescence) | Strain Bruno [CIP 104226]: 1.86 × 10⁴ CFU/test; strain CDC-SS-1402: 9.24 × 10³ CFU/test; strain CDC-SS-1460: 2.34 × 10⁴ CFU/test | Analysed using the Sofia analyser, which interprets the immunofluorescent signal using on-board method-specific algorithms. Results are displayed on screen as positive, negative or invalid | 5–6 |

| Product | Test supply and format | Method | Analyser | Limit of detection | Description of results | Time to result (minutes)ᵃ |
|---|---|---|---|---|---|---|
| Alere i Strep A (Abbott Laboratories, Lake Bluff, IL, USA) | 24 test kits | Isothermal nucleic acid amplification | Alere i instrument | Strain: | Alere i instrument heats, mixes and detects, then presents results automatically on the digital display | < 8 |
| Alere i Strep A 2 (ID NOW™ Strep A 2)ᵇ (Abbott Laboratories, Lake Bluff, IL, USA) | Information not available | Isothermal nucleic acid amplification | Alere i instrument | Not provided by manufacturer | Alere i instrument heats, mixes and detects, then presents results automatically on the digital display | < 6 |
| cobas® Strep A Assay (Roche Diagnostics, Basel, Switzerland) | Strep A assay box of 20 | PCR | cobas Liat® analyser (Roche Diagnostics, Basel, Switzerland) | Strain: | Results displayed digitally | < 15 |
| Xpert® Xpress Strep A (Cepheid, Sunnyvale, CA, USA) | Each kit contains sufficient reagents to process 10 specimens or quality control samples | PCR | GeneXpert® system (Cepheid, Sunnyvale, CA, USA) | Strain: | Results displayed digitally | ≥ 18 |

Four molecular tests were identified which use nucleic acid amplification techniques, either PCR or isothermal nucleic acid amplification, to amplify and detect a specific fragment of the GAS genome (Table 2). In each test, any strep A deoxyribonucleic acid present in the sample is labelled during the reaction, producing fluorescent light, which is monitored by a reader. If fluorescence reaches a specific threshold, the test is considered positive. If the threshold is not reached during the set time (usually up to 15 minutes), the test is negative.
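The threshold rule described above can be illustrated with a minimal Python sketch. It is not any manufacturer's algorithm: the function name, the reading format (elapsed minutes paired with a fluorescence value) and the example numbers are all assumptions made for illustration, and the 15-minute default simply mirrors the "usually up to 15 minutes" stated in the text.

```python
from typing import Sequence, Tuple


def call_molecular_result(
    readings: Sequence[Tuple[float, float]],
    threshold: float,
    max_minutes: float = 15.0,
) -> str:
    """Classify a run as 'positive' or 'negative'.

    readings: hypothetical (minutes_elapsed, fluorescence) pairs.
    threshold: hypothetical fluorescence level that counts as detection.
    max_minutes: the set run time; 15 is taken from the text's 'usually up to 15 minutes'.
    """
    for minutes, fluorescence in readings:
        # Positive as soon as the signal reaches the threshold within the set time.
        if minutes <= max_minutes and fluorescence >= threshold:
            return "positive"
    # Threshold never reached during the set time.
    return "negative"


# Illustrative (made-up) readings.
example_run = [(2.0, 0.1), (5.0, 0.4), (8.0, 1.3)]
print(call_molecular_result(example_run, threshold=1.0))
```

Real instruments apply their own proprietary signal processing; the sketch captures only the positive/negative decision logic described in the paragraph.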

The lateral flow (immunochromatographic and immunofluorescence) tests require a throat swab, which is typically placed into a specimen extraction tube and mixed with reagents to extract the sample from the swab. The swab is discarded and then either a test strip is immersed in the extracted solution or drops of the extracted solution are added to the sample well of a test cassette. The sample then migrates along the test strip or cassette, with any strep A antigens present in the sample binding to immobilised strep A antibodies in the test strip or cassette. When strep A is present at levels above the detection limit of the test, a line appears in the test line region of the strip or cassette. A control line shows technical success of the test. Results should be discarded when the control line indicates that the test has failed (i.e. no line appears in the control line region). Depending on the technology, the results are read either by visual inspection or by using an automated test reader device.
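The reading rule for a lateral flow strip or cassette can be written as a small decision function. The following Python sketch is illustrative only; the function and argument names are hypothetical, and the label "invalid" is used here for a run that should be discarded because no control line appeared.

```python
def read_lateral_flow(control_line_visible: bool, test_line_visible: bool) -> str:
    """Interpret a lateral flow result from the two line regions.

    A missing control line means the test has failed and the result is discarded,
    regardless of what appears in the test line region.
    """
    if not control_line_visible:
        return "invalid"
    return "positive" if test_line_visible else "negative"


print(read_lateral_flow(control_line_visible=True, test_line_visible=True))
print(read_lateral_flow(control_line_visible=True, test_line_visible=False))
print(read_lateral_flow(control_line_visible=False, test_line_visible=True))
```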

The turbidimetric immunoassay has similar sample collection and extraction steps to the lateral flow tests, but the extracted solution is placed into a cuvette that is prefilled with reagents. This contains rabbit anti-strep A antibodies, which bind to strep A antigens present in the sample. The QuikRead Go (Orion Diagnostica, Espoo, Finland) instrument measures the absorbance of each cuvette and converts the absorbance value into a positive or negative result.

Several of the companies recommend that negative RADT results are confirmed by microbiological culture of a throat swab.

Target population

The population of interest is people aged ≥ 5 years presenting to health-care providers in a primary care (GP surgeries and walk-in centres), secondary care (urgent care/walk-in centres and emergency departments) or community pharmacy setting with symptoms of an acute sore throat. These patients are identified as being more likely (FeverPAIN score of 2 or 3 points) or most likely (FeverPAIN score of 4 or 5 points, or a Centor score of 3 or 4 points) to benefit from an antibiotic by a clinical scoring tool. Relevant subgroups to be evaluated may include children (aged 5–14 years), adults (aged 15–75 years) and the elderly (adults aged > 75 years). In elderly patients, the infection is more likely to be invasive and have a higher associated mortality rate.
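The score bands and age subgroups in this definition can be expressed as a short Python sketch. The function names and return labels are hypothetical; scores outside the bands named above return `None` because the text does not assign them a label, and ages under 5 years are rejected because they fall outside the population of interest.

```python
from typing import Optional


def benefit_band(tool: str, score: int) -> Optional[str]:
    """Map a clinical score to the likelihood-of-benefit band named in the text."""
    if tool == "FeverPAIN":
        if score in (4, 5):
            return "most likely"
        if score in (2, 3):
            return "more likely"
        return None  # band not described in this section
    if tool == "Centor":
        return "most likely" if score in (3, 4) else None
    raise ValueError(f"unknown scoring tool: {tool}")


def age_subgroup(age_years: int) -> str:
    """Assign the subgroup used in the text: children, adults or the elderly."""
    if age_years < 5:
        raise ValueError("population of interest is people aged >= 5 years")
    if age_years <= 14:
        return "children"
    if age_years <= 75:
        return "adults"
    return "elderly"


print(benefit_band("FeverPAIN", 3), benefit_band("Centor", 3), age_subgroup(80))
```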

Comparator

The comparator is antibiotic prescribing based on clinical judgement and clinical scoring tools alone for strep A. However, the literature search for the comparator arm may also result in evidence referring to clinical scoring for group C and group G streptococci. The clinical scoring tools that may be used in NHS practice are FeverPAIN and Centor/modified Centor (McIsaac). These criteria are based on research evidence that assessed the individual and combination of sore throat symptoms most likely to be present in patients with clinically confirmed streptococcal infection (whether strep A or non-strep A).

FeverPAIN

The FeverPAIN clinical scoring tool includes the following variables:

- clinical history
  - sore throat (none, mild, moderate or severe)
  - cough or cold symptoms (none, mild, moderate or severe)
  - muscle aches (none, mild, moderate or severe)
  - fever in last 24 hours (yes or no)
  - onset of illness (0–3, 4–7 or > 7 days)
- clinical examination
  - cervical glands (none, 1–2 or > 2 cm)
  - inflamed tonsils (none, mild, moderate or severe)
  - pus on tonsils (yes or no).

The result of FeverPAIN is presented as a score ranging from 0 to 5 points, with 1 point assigned for each symptom present.
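As an illustration of how a 0–5 score can be derived, the Python sketch below assumes the five scored items implied by the published FeverPAIN acronym: fever in the last 24 hours, purulence (pus on tonsils), attending rapidly (onset within 3 days), severely inflamed tonsils, and no cough or cold symptoms. This mapping from the recorded variables to the scored items is an assumption drawn from the acronym, not something stated in this section, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FeverPainInputs:
    fever_last_24h: bool   # fever in last 24 hours (yes or no)
    pus_on_tonsils: bool   # pus on tonsils (yes or no)
    onset_days: int        # days since onset of illness
    inflamed_tonsils: str  # "none", "mild", "moderate" or "severe"
    cough_or_cold: str     # "none", "mild", "moderate" or "severe"


def feverpain_score(x: FeverPainInputs) -> int:
    """Return a score from 0 to 5, 1 point per item present (assumed item set)."""
    items = [
        x.fever_last_24h,                # Fever
        x.pus_on_tonsils,                # Purulence
        x.onset_days <= 3,               # Attend rapidly (onset 0-3 days)
        x.inflamed_tonsils == "severe",  # severely Inflamed tonsils
        x.cough_or_cold == "none",       # No cough or cold symptoms
    ]
    return sum(items)


patient = FeverPainInputs(True, True, 2, "severe", "none")
print(feverpain_score(patient))
```

The other recorded variables (sore throat severity, muscle aches and cervical glands) are not used in this sketch because they are not part of the assumed five-item score.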

Centor

The Centor clinical scoring tool includes the following variables:

- cough (yes or no)
- exudate or swelling on tonsils (yes or no)
- tender/swollen anterior cervical lymph nodes (yes or no)
- temperature > 38 °C (yes or no).

Expert advice suggests that the McIsaac (modified Centor) clinical scoring tool may also be used. The McIsaac score adjusts the Centor score to account for the higher incidence of strep A in children and the reduced incidence of strep A in older adults. It adds age criteria (3–14 years, 15–44 years and ≥ 45 years), adding 1 point for those aged < 15 years and subtracting 1 point for those aged ≥ 45 years. The Centor result is presented as a score ranging from 0 to 4 points (0–5 points for the modified Centor), with 1 point assigned for each symptom present. 18
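A minimal Python sketch of the two scores follows. It assumes, in line with the standard Centor criteria, that the cough item scores a point when cough is absent; the function and parameter names are hypothetical. The age adjustment follows the bands given above (3–14, 15–44 and ≥ 45 years). Note that the raw arithmetic gives −1 for a person aged ≥ 45 years with no Centor items present; how such a value is reported is not covered here.

```python
def centor_score(
    cough: bool,
    tonsillar_exudate_or_swelling: bool,
    tender_anterior_cervical_nodes: bool,
    temperature_c: float,
) -> int:
    """Return the Centor score (0-4), 1 point per criterion met."""
    return sum(
        [
            not cough,  # assumption: the point is given for ABSENCE of cough
            tonsillar_exudate_or_swelling,
            tender_anterior_cervical_nodes,
            temperature_c > 38.0,
        ]
    )


def mcisaac_score(centor: int, age_years: int) -> int:
    """Apply the McIsaac age adjustment to a Centor score."""
    if age_years < 15:
        return centor + 1  # 3-14 years
    if age_years >= 45:
        return centor - 1  # >= 45 years
    return centor          # 15-44 years


base = centor_score(False, True, True, 38.5)
print(base, mcisaac_score(base, age_years=10), mcisaac_score(base, age_years=60))
```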

Reference standard

The reference standard for assessing the test accuracy of point-of-care tests for strep A infections is microbiological culture of throat swabs using standard blood agar or streptococcal selective agar as the culture medium. In the latter, antibiotics can be added to the standard blood agar to suppress the normal pharyngeal microflora, thus improving the yield of the strep A bacteria. However, there is no consensus on the preferred medium. 19

Throat swab culture remains the best reference standard for diagnosing streptococcal pharyngitis. However, several studies have identified discordance between throat swab culture and PCR or other measures. 20–22

In recent studies, PCR techniques were used as arbitrators of discordant results between throat culture and point-of-care tests. 20,23,24 For culture to be positive, a threshold quantity of viable organisms must be exceeded, whereas PCR-based tests are able to detect the genome of organisms irrespective of their viability. However, PCR cannot distinguish between acute strep A pharyngitis and asymptomatic pharyngeal carriage and, therefore, may detect carriage in the absence of a streptococcal infection. Therefore, our reference standard does not include PCR. Furthermore, some of the index tests are PCR based, and so a PCR-based reference standard would be biased in favour of these index tests. Where such arbitration using PCR is reported, we have included these data in this report, but the main analysis uses culture as the reference standard.
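To make the role of the reference standard concrete, the Python sketch below computes sensitivity and specificity of an index test from a 2 × 2 table in which culture defines the true disease status. The function name is hypothetical and the counts are invented purely for illustration; they are not data from this report.

```python
from typing import Tuple


def accuracy_against_culture(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float]:
    """Return (sensitivity, specificity) with culture as the reference standard.

    tp: index test positive, culture positive
    fp: index test positive, culture negative
    fn: index test negative, culture positive
    tn: index test negative, culture negative
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one culture-positive and one culture-negative sample")
    sensitivity = tp / (tp + fn)  # proportion of culture-positives detected
    specificity = tn / (tn + fp)  # proportion of culture-negatives correctly ruled out
    return sensitivity, specificity


# Invented counts for illustration only.
sens, spec = accuracy_against_culture(tp=45, fp=5, fn=5, tn=45)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Because culture defines the columns of the table, a PCR-positive but culture-negative sample counts as a false positive in this calculation, which is one reason why discordant results are reported separately.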

Chapter 2 Definition of the decision problem

Decision question

This report undertaken for the NICE Diagnostics Assessment Programme examines the clinical effectiveness and cost-effectiveness of point-of-care tests for diagnosing group A streptococcal infections in people who present with an acute sore throat in primary care, secondary care or community pharmacy settings. The report will help NICE to make recommendations about how well the tests work and whether or not the benefits are worth the cost of the tests, when used in the NHS in England and Wales. The assessment also considers other outcomes, including antibiotic prescription behaviour, clinical improvement in patients’ symptoms and costs associated with treatment, based on evidence identified through systematic literature searches.

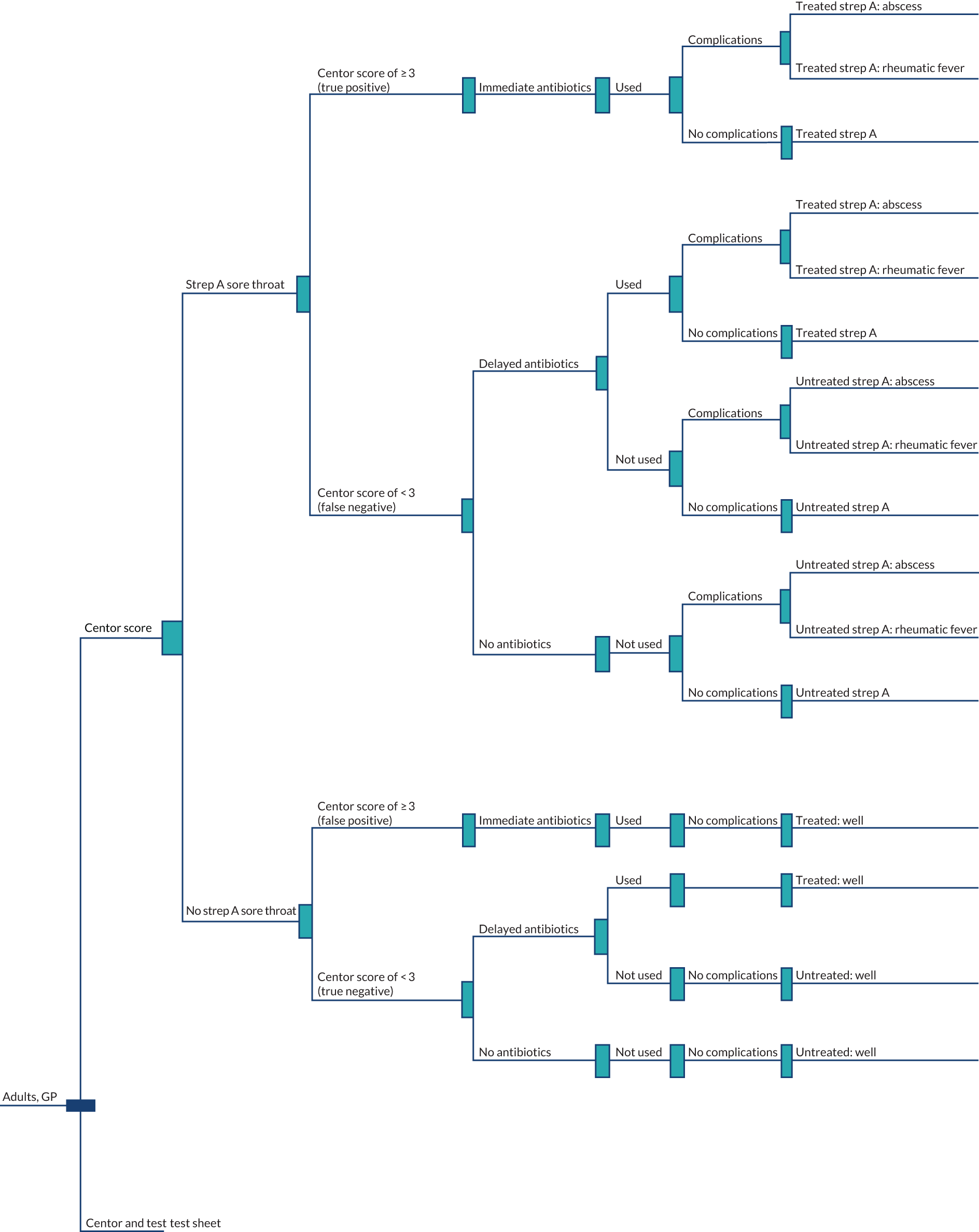

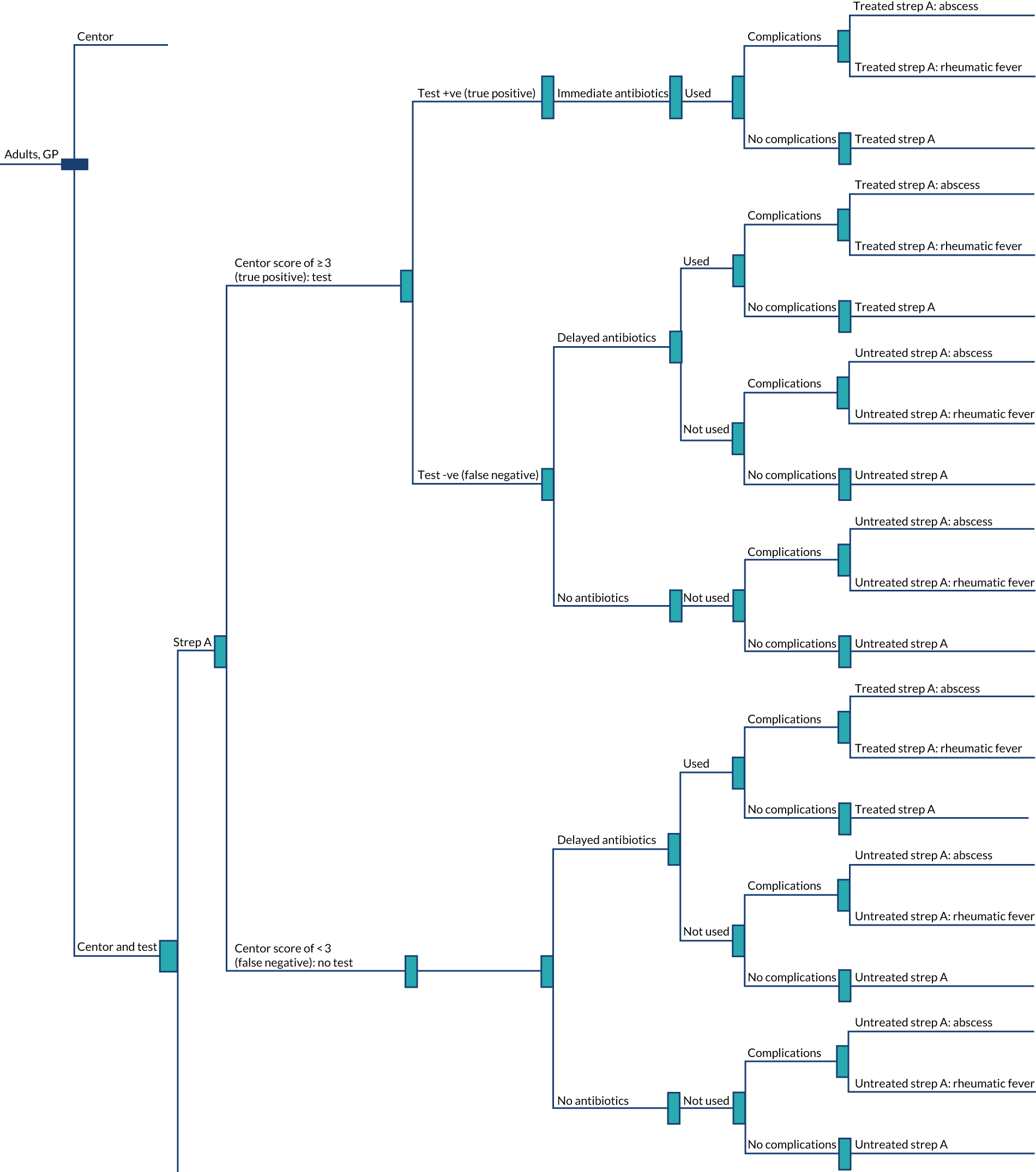

The decision question for this project is: what is the clinical effectiveness and cost-effectiveness of rapid antigen detection and molecular tests in patients with high clinical scores (i.e. Centor scores of ≥ 3 points, FeverPAIN scores of ≥ 4 points), compared with the use of clinical scoring tools alone, for increasing the diagnostic confidence of suspected group A streptococcal infection in people who present with an acute sore throat in primary, secondary or pharmacy care?

Overall aim of the assessment

The overall aim of this report was to present evidence on the clinical effectiveness and cost-effectiveness of rapid antigen detection and molecular tests in those with high clinical scores (i.e. Centor scores of ≥ 3 points, FeverPAIN scores of ≥ 4 points), compared with the use of clinical scoring tools alone, for increasing the diagnostic confidence of suspected group A streptococcal infection in people aged ≥ 5 years who present with an acute sore throat in primary, secondary or pharmacy care.

Objectives

- To systematically review the evidence for the clinical effectiveness of selected rapid tests for group A streptococcal infections in people aged ≥ 5 years with a sore throat presenting in a primary, secondary or pharmacy setting.
- To systematically review existing economic evaluations and develop a de novo economic model to assess the cost-effectiveness of rapid tests in conjunction with clinical scoring tools for group A streptococcal infections compared with clinical scoring tools alone.

Chapter 3 Clinical effectiveness review

Methods

Search strategies for clinical effectiveness

The search strategy for the clinical effectiveness review is detailed in Appendix 1. An iterative procedure was used to develop the database search strategies, building on the scoping searches undertaken by NICE for this assessment and the searches underpinning the related MedTech innovation briefing published by NICE in 2018. 16 Database searches were run in November and December 2018 and were updated in March 2019. No date or language limits were applied. Grey literature searches were undertaken in February and March 2019.

Briefly, the search strategy included:

-

databases – MEDLINE [via OvidSP (Health First, Rockledge, FL, USA)], MEDLINE In-Process & Other Non-Indexed Citations (via OvidSP), MEDLINE Epub Ahead of Print (via OvidSP), MEDLINE Daily Update (via OvidSP), EMBASE (via OvidSP), Cochrane Database of Systematic Reviews [via Wiley Online Library (John Wiley & Sons, Inc., Hoboken, NJ, USA)], Cochrane Central Register of Controlled Trials (CENTRAL) (via Wiley Online Library), Database of Abstracts of Reviews of Effects (DARE) [via the Centre for Reviews and Dissemination (CRD)], Health Technology Assessment (HTA) database (via CRD), Science Citation Index and Conference Proceedings [via the Web of Science™ (Clarivate Analytics, Philadelphia, PA, USA)] and the PROSPERO International Prospective Register of Systematic Reviews (via CRD)

-

trial database – ClinicalTrials.gov

-

reference lists of relevant reviews and included studies

-

online resources of health services research organisations and regulatory bodies – International Network of Agencies for Health Technology Assessment (INAHTA), the US Food and Drug Administration (FDA) medical devices, FDA Clinical Laboratory Improvement Amendments (CLIA) database and European Commission medical devices

-

online resources of selected professional societies and conferences – British Society for Antimicrobial Chemotherapy, British Infection Association, PHE, Royal College of Pathologists, streptococcal biology conference, Lancefield International Symposium on Streptococci and Streptococcal Diseases, Federation of Infection Societies Conference, The European Congress of Clinical Microbiology and Infectious Diseases (ECCMID), Microbiology Society Conference, American Society of Microbiology, and Association of Clinical Biochemistry and Laboratory Medicine

-

online resources of manufacturers of the included rapid tests.

Inclusion and exclusion of relevant studies (Boxes 1 and 2)

-

People aged ≥ 5 years presenting with symptoms of an acute sore throat. Where possible, relevant subgroups evaluated included children (aged 5–14 years), adults (aged 15–75 years) and the elderly (adults aged > 75 years); however, mixed populations were acceptable. Studies of children aged < 5 years could be included providing ≥ 90% were above this age.

-

Point-of-care tests for strep A (including RADTs and molecular tests as described in Tables 1 and 2).

-

Clinical scoring tools (such as FeverPAIN, Centor or McIsaac).

-

Microbiological culture of throat swabs.

-

Outcomes of test performance:

-

test accuracy – sensitivity, specificity, PPV and NPV. Where possible, evaluated by relevant clinical scores (Centor/McIsaac ≥ 3 points and FeverPAIN ≥ 4 points)

-

discordant results with throat culture

-

test failure rates

-

time to antimicrobial prescribing decision

-

changes to antimicrobial prescribing decision

-

number of appointments required per episode

-

number of delayed or immediate antibiotic prescriptions issued.

-

Clinical outcomes:

-

morbidity, including post-strep A infection complications, such as rheumatic fever and side effects from antibiotic therapy

-

mortality

-

contribution to antimicrobial stewardship and onward transmission of infection.

-

Patient-reported outcomes:

-

health-related quality of life

-

patient satisfaction with test and antimicrobial prescribing decision

-

health-care professional satisfaction with test and antimicrobial prescribing decision.

-

Costs.

For test accuracy data:

-

clinical test accuracy studies that compare the index tests (point-of-care tests for strep A) with throat swab culture

-

studies of head-to-head comparisons of rapid tests were eligible for inclusion if test accuracy statistics were reported for each test.

For data on other clinical outcomes:

-

any study design comparing the index tests (point-of-care tests for strep A) and/or clinical scoring tools (Centor, McIsaac or FeverPAIN) with biological culture as a reference standard.

-

Primary care (GP clinics and walk-in centres), secondary care (urgent care/walk-in centres and emergency departments) or community pharmacy settings.

NPV, negative predictive value; PPV, positive predictive value.

-

Patients without acute sore throat.

-

Patients with existing comorbidities.

-

Patients with known invasive strep A infection.

-

Other point-of-care tests that are not listed in the NICE scope.

-

For test accuracy data: no comparison of index test vs. throat culture reported.

-

For other outcomes: no comparison of index test vs. throat culture or clinical scoring tools (Centor, McIsaac or FeverPAIN).

-

Reviews, biological studies, case reports, editorials and opinions, poster presentations without supporting abstracts, non-English-language reports, meeting abstracts without sufficient information to produce 2 × 2 contingency tables for test performance.

-

Studies published before 1998 (keeping in line with the 1998 directive of the European parliament requiring all in vitro diagnostic devices to have a CE marking). 25

-

Hospital inpatient.

CE, Conformité Européenne.

Study selection strategy

All publications that were identified in searches from all sources were collated in EndNote (Clarivate Analytics) and deduplicated. Two reviewers independently screened the titles and abstracts of all records identified by the searches (Cohen’s kappa = 0.997) and discrepancies were resolved through discussion. Full copies of all studies deemed potentially relevant were obtained and two reviewers independently assessed these for inclusion; any disagreements were resolved by consensus or discussion with a third reviewer. Records excluded at full-text stage and reasons for exclusion were documented.
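As an illustration of the agreement statistic quoted above, the following minimal sketch computes Cohen's kappa for two reviewers' screening decisions. The decisions shown are invented for illustration only (they are not the review's screening data), and the function name `cohens_kappa` is hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of records")
    n = len(rater_a)
    # Observed agreement: proportion of records given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical title/abstract decisions for 10 records (illustrative only).
reviewer_1 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "exclude", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
```

Kappa corrects raw agreement for the agreement expected by chance, which matters in screening because most records are excluded by both reviewers.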

Data extraction strategy

All data were extracted by one reviewer, using a piloted data extraction form. A second reviewer checked the extracted data on test accuracy [2 × 2 table, sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV)], and a third reviewer checked other extracted data. Any disagreements were resolved by consensus. A sample data extraction form used in this review is available in Appendix 2. Test accuracy statistics for rapid/index tests were derived from data extracted onto 2 × 2 contingency tables in the format shown in Table 3. As shown, A represents the number of patients positive for strep A by rapid test and throat culture (true positives); B represents the number of patients positive for strep A by rapid test but not throat culture (false positives); C represents the number of patients negative for strep A by rapid test but positive by throat culture (false negatives); and D represents the number of patients negative for strep A by rapid test and throat culture (true negatives). Sensitivity was calculated as A/(A + C), specificity as D/(B + D), PPV as A/(A + B) and NPV as D/(C + D). Similarly, using data extracted in the formats shown in Tables 4 and 5, we calculated accuracy statistics for the current pathway (Centor/McIsaac/FeverPAIN scores) based on NICE thresholds. Where PCR techniques were employed to arbitrate discordant results between microbiological culture and rapid tests, we report the PCR results for the discordant cases. We also extracted test accuracy data for each index test with culture as the reference standard in studies of head-to-head (direct) comparisons of index tests. Data on other outcomes of test performance, morbidity, antibiotic-prescribing behaviour, population characteristics and settings were also extracted using the extraction form.

| | Culture + | Culture – | Total |
|---|---|---|---|
| Index test + | A | B | A + B |
| Index test – | C | D | C + D |
| Total | A + C | B + D | A + B + C + D |

| | Culture + | Culture – | Total |
|---|---|---|---|
| Centor/McIsaac score of ≥ 3 points | A | B | A + B |
| Centor/McIsaac score of < 3 points | C | D | C + D |
| Total | A + C | B + D | A + B + C + D |

| | Culture + | Culture – | Total |
|---|---|---|---|
| FeverPAIN score of ≥ 4 points | A | B | A + B |
| FeverPAIN score of < 4 points | C | D | C + D |
| Total | A + C | B + D | A + B + C + D |
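The accuracy statistics defined for the 2 × 2 layout above can be sketched as follows. The class name `TwoByTwo` and the cell counts are hypothetical and chosen only to illustrate the arithmetic; they are not data from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Cells of a 2 x 2 table with throat culture as the reference standard."""
    a: int  # index test +, culture + (true positives)
    b: int  # index test +, culture - (false positives)
    c: int  # index test -, culture + (false negatives)
    d: int  # index test -, culture - (true negatives)

    @staticmethod
    def _ratio(numerator, denominator):
        # Return None rather than raising when a margin of the table is empty.
        return numerator / denominator if denominator else None

    def sensitivity(self):
        return self._ratio(self.a, self.a + self.c)

    def specificity(self):
        return self._ratio(self.d, self.b + self.d)

    def ppv(self):
        return self._ratio(self.a, self.a + self.b)

    def npv(self):
        return self._ratio(self.d, self.c + self.d)


# Hypothetical counts (illustrative only).
table = TwoByTwo(a=45, b=5, c=5, d=145)
summary = {
    "sensitivity": table.sensitivity(),
    "specificity": table.specificity(),
    "PPV": table.ppv(),
    "NPV": table.npv(),
}
```

The same calculation applies whether the rows hold a rapid test result or a dichotomised clinical score (Centor/McIsaac ≥ 3 points or FeverPAIN ≥ 4 points), because only the row definition changes.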

Quality assessment strategy for test accuracy studies

Quality assessment of eligible test accuracy studies was undertaken with a tailored Quality Assessment of Diagnostic Accuracy Studies – 2 (QUADAS-2) tool. 26 Methodological quality was assessed by a single reviewer and findings were checked by a second reviewer. Disagreements were resolved by consensus or use of a third reviewer.

Quality assessment aimed to assess the risk of bias and applicability concerns of included studies where one (or more) of our 21 scoped tests was the index test(s), and with biological throat culture as the reference standard. Additional tests outside the scope were not quality appraised.

Modifications to tailor the QUADAS-2 form to the research question in terms of the risk-of-bias assessment were as follows (see Appendix 4 for the tailored QUADAS-2 form and guidance notes).

Patient selection domain

Two further signalling questions were added to this domain. The first was ‘were selection criteria clearly described?’. It is important that the correct patient groups were included in the studies. Patients aged < 5 years follow a different NICE clinical pathway27 because they are more likely to present with a sore throat and less likely to be able to articulate their symptoms, and it is less likely that a throat swab can be obtained. Likewise, a clinical score (such as Centor or FeverPAIN) should be reported, with patients included only if they have a score of ≥ 3 points on Centor or ≥ 4 points on FeverPAIN. Those with lower scores may be systematically different and, therefore, test accuracy may also differ, introducing bias. Including patients aged < 5 years or those with a low clinical score also raises applicability concerns.

The second signalling question that was added was ‘were patients seen in an ambulatory care setting?’. Patients seen as inpatients may vary in severity and have comorbidities affecting their diagnosis.

Index test domain

Two questions were added within this domain. The first was ‘was a separate swab undertaken for the index test?’. This question was added as manufacturers’ specifications require separate swabs to be taken for index and reference standard tests. Using one swab for multiple purposes may reduce the quantity of the sample for testing and, thus, affect the accuracy of the test. The second question was ‘is the test reading objective?’. Some of the tests require a subjective reading of whether or not a line, indicating a positive result, has appeared. Owing to this, there is a high risk of bias in any rapid test that requires the result to be determined by a human reader. Tests with automated readings have been shown to have improved specificity and reduced operator errors, especially for unclear results. 28

Comparator domain

One additional signalling question was added in this domain: ‘was a separate swab taken for throat culture testing?’. Using one swab for multiple purposes may reduce the quantity of the sample for testing and, thus, affect the accuracy of the test. Under this domain, the directions for taking a throat culture specimen were clarified based on the PHE guidelines on UK Standards for Microbiology Investigations. 29

Flow and timing

Two further signalling questions were added to the flow and timing domain. The first was ‘were both index test(s) and reference standard (and comparator where included) all carried out at the same appointment?’. The swabs for a rapid test and culture should be obtained at the same appointment. The levels of strep A are likely to vary by day, so taking a later sample could introduce systematic bias.

The second signalling question was ‘were both index test(s) and reference standard (and comparator where included) all carried out prior to commencement of antibiotics?’. Patients should not have been treated with antibiotics prior to testing, as antibiotics are likely to have reduced the amount of strep A present.

Quality appraisal strategy for studies of prescribing behaviour and clinical outcomes

Quality appraisal for studies of prescribing behaviour and clinical outcomes used two different tools: the Cochrane risk-of-bias tool for randomised controlled trials (RCTs)30 and the Joanna Briggs Institute (JBI) Critical Appraisal Checklist for analytical cross-sectional studies. 31 Methodological quality was assessed by a single reviewer and findings were checked by a second reviewer. Disagreements were resolved by consensus or use of a third reviewer.

Assessment of test accuracy

To assess the accuracy of the point-of-care tests, we planned to conduct a series of meta-analyses on the available data. Data from studies that either presented 2 × 2 tables for one of the index tests compared with culture or provided information that allowed calculation of the 2 × 2 table were included in the meta-analyses.

The median age of participants was used to categorise each study into one of the three age groups of interest, with two reviewers discussing cases in which the categorisation was not straightforward. Setting was also considered to inform the age categorisation where necessary (e.g. if the study was conducted within a paediatric department). The setting of each study was treated as a categorical variable with four levels: primary care (health-care centre, GP clinic or primary care clinic), secondary care (emergency department, private paediatric clinic, outpatient clinic, urgent care clinic or walk-in centre), pharmacy and mixed.

For the purpose of the meta-analysis, the throat score of the population was dichotomised to 0, if the study population included patients who had scores below the threshold set in the scope, and 1, if the study population matched the scope (Centor/McIsaac score of ≥ 3 points or FeverPAIN score of ≥ 4 points). Alternative throat score classification of study populations was also considered, using the categories of a population matching the scope (see Chapter 1, Target population), a population restricted by throat score but still including patients not in the scope (e.g. Centor score of 2 points) and a population without any restriction by throat score.
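The two classifications described above can be sketched as follows. This is an illustrative reading of the rule, not the authors' code: the function names are hypothetical, and a study with no score restriction is assumed to be represented by `None`.

```python
# Scope thresholds stated in the text: Centor/McIsaac >= 3 points, FeverPAIN >= 4 points.
SCOPE_THRESHOLDS = {"Centor": 3, "McIsaac": 3, "FeverPAIN": 4}


def classify_population(tool, minimum_score):
    """Three-category classification of a study population by throat score.

    tool: "Centor", "McIsaac" or "FeverPAIN", or None if entry was not restricted by score.
    minimum_score: lowest score eligible for the study, or None if unrestricted.
    """
    if tool is None or minimum_score is None:
        return "unrestricted"
    if minimum_score >= SCOPE_THRESHOLDS[tool]:
        return "matches scope"
    return "restricted"  # restricted by score but still includes patients outside the scope


def dichotomise(tool, minimum_score):
    """Binary covariate: 1 if the population matches the scope, otherwise 0."""
    return 1 if classify_population(tool, minimum_score) == "matches scope" else 0


# Hypothetical study entry criteria (illustrative only).
examples = [("Centor", 2), ("FeverPAIN", 4), (None, None)]
labels = [classify_population(tool, score) for tool, score in examples]
flags = [dichotomise(tool, score) for tool, score in examples]
```

Keeping the three-category label alongside the binary flag allows the alternative classification to be used in sensitivity analyses without recoding the studies.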

Methods of analysis/synthesis

We planned to use bivariate models to conduct each meta-analysis, as they allow simultaneous estimation of both sensitivity and specificity, accounting for correlation between the two measures. Where at least two studies existed for a test, we used a random-effects model to allow for variation in test performance between studies. If bivariate or random-effects models failed to converge or produced results with unexpectedly wide confidence intervals (CIs) around key parameters, then simpler models (e.g. fixed-effect or univariate models) were used instead. 32,33 Where bivariate models were used, a comparison with the equivalent univariate models was made, and any difference noted. It was not anticipated that any meaningful difference between the two model types would be observed given the small amount of data available.

For index tests that had just one study, a meta-analysis was not conducted. The impacts of age, setting and prevalence on test performance were all assessed through the meta-analysis of relevant subgroups. NICE advised the EAG against meta-analysis across rapid tests from different manufacturers.
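To make the idea of a simpler univariate model concrete, the sketch below pools a single accuracy measure (e.g. sensitivity) across studies on the logit scale using a DerSimonian-Laird random-effects estimator. This is not the bivariate model described above and is not presented as the authors' implementation; the estimator choice, the function name `pool_logit_proportions` and the study counts are all assumptions made for illustration.

```python
import math


def pool_logit_proportions(events, totals):
    """Univariate DerSimonian-Laird random-effects pooling of proportions (logit scale).

    events[i] / totals[i] is, for example, true positives / culture-positives in study i.
    Returns (pooled proportion, lower 95% limit, upper 95% limit, tau squared).
    """
    if len(events) != len(totals) or len(events) < 2:
        raise ValueError("need matching counts for at least two studies")
    y, v = [], []
    for e, n in zip(events, totals):
        if e == 0 or e == n:
            # Continuity correction, applied only when a cell is zero, keeps the logit finite.
            e, n = e + 0.5, n + 1.0
        y.append(math.log(e / (n - e)))    # logit of the study proportion
        v.append(1.0 / e + 1.0 / (n - e))  # approximate within-study variance
    w = [1.0 / vi for vi in v]
    sum_w = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum_w
    # Cochran's Q and the method-of-moments estimate of between-study variance.
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (len(y) - 1)) / c)
    w_re = [1.0 / (vi + tau2) for vi in v]
    y_re = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))

    def expit(x):
        return 1.0 / (1.0 + math.exp(-x))

    return expit(y_re), expit(y_re - 1.96 * se), expit(y_re + 1.96 * se), tau2


# Hypothetical true-positive and culture-positive counts from three studies of one test.
pooled, lower, upper, tau2 = pool_logit_proportions([45, 88, 30], [50, 100, 40])
```

Unlike a bivariate model, this approach pools sensitivity and specificity separately and so ignores any correlation between them, which is why it serves only as a fallback or comparison.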

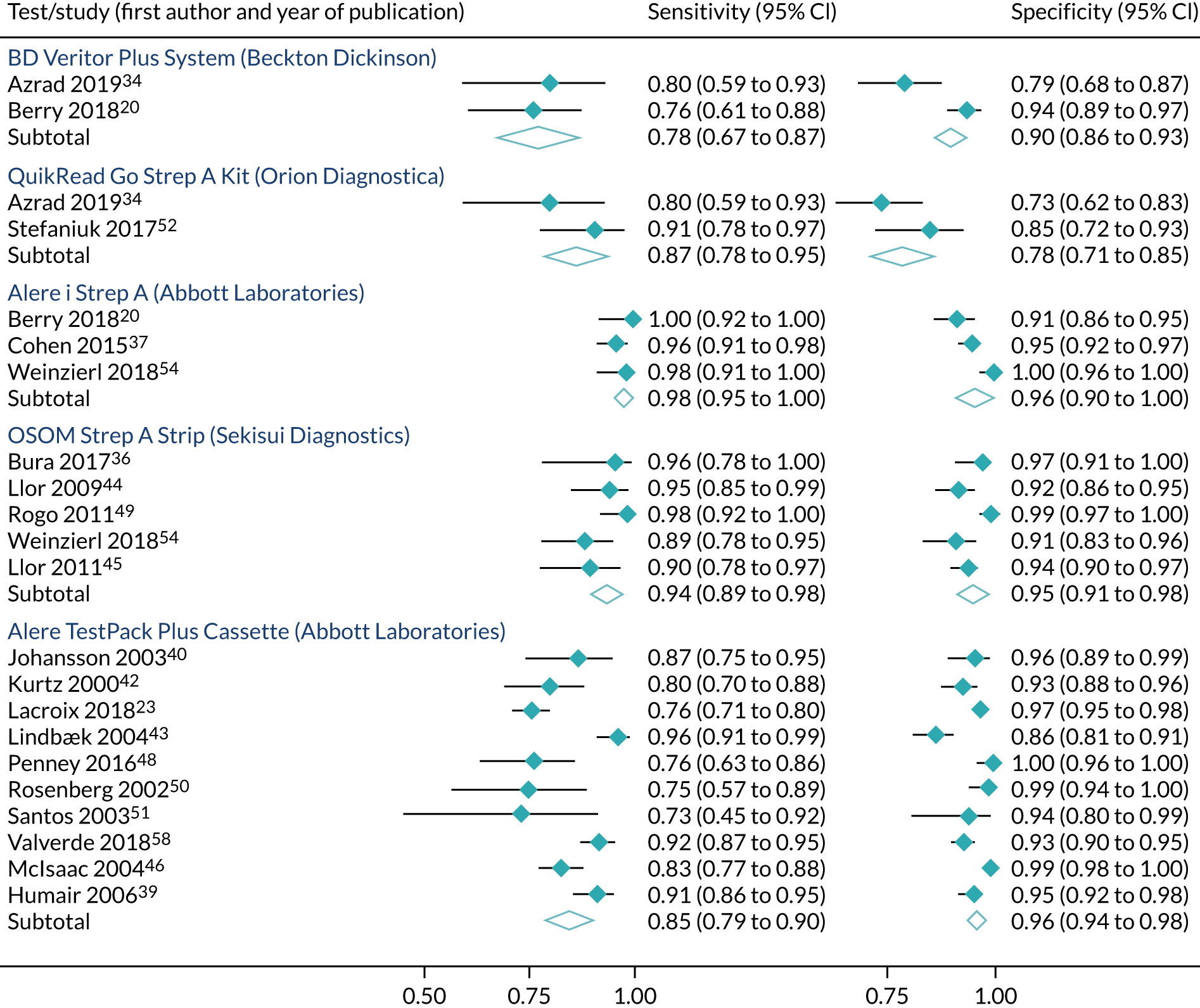

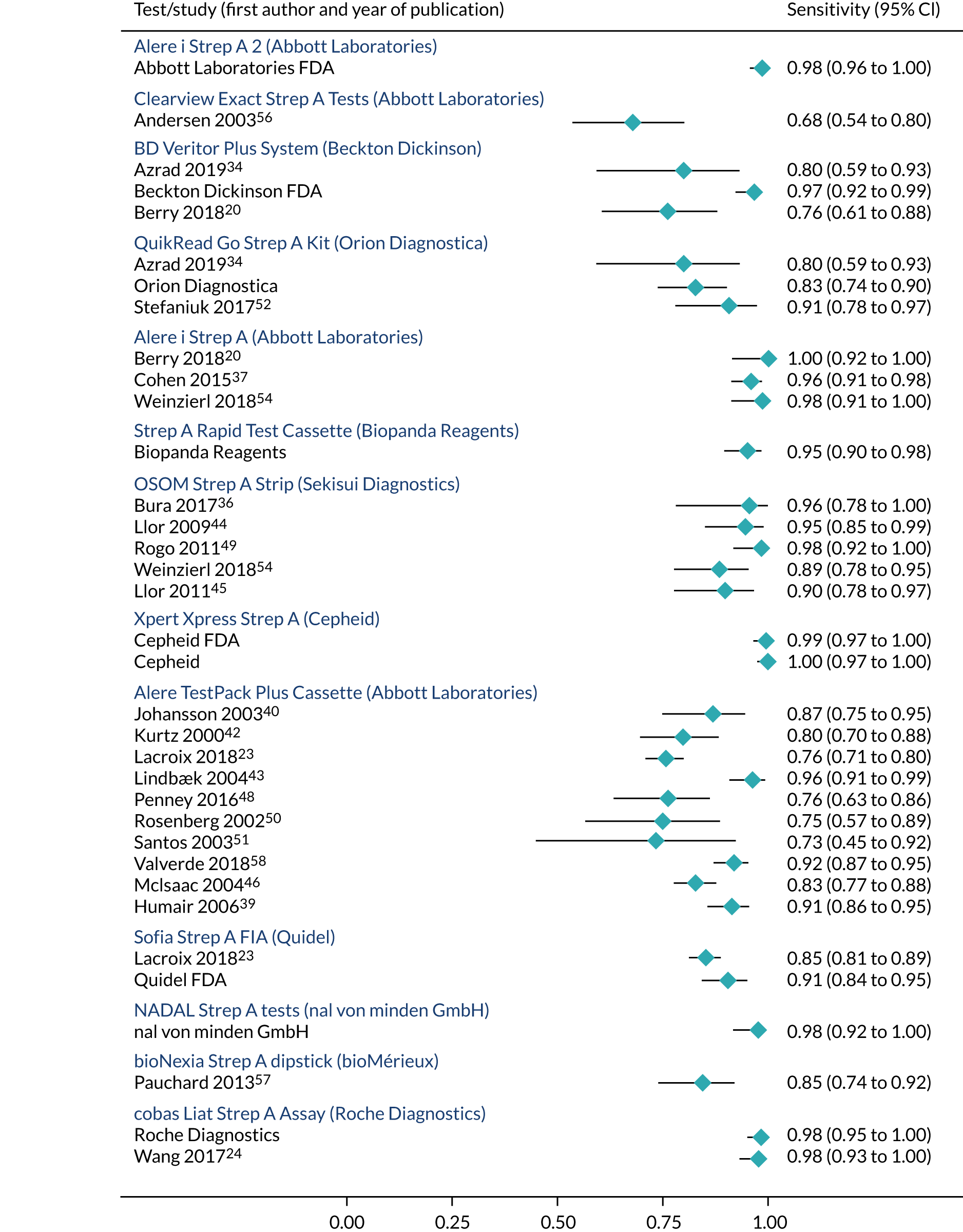

Clinical effectiveness results

Search results

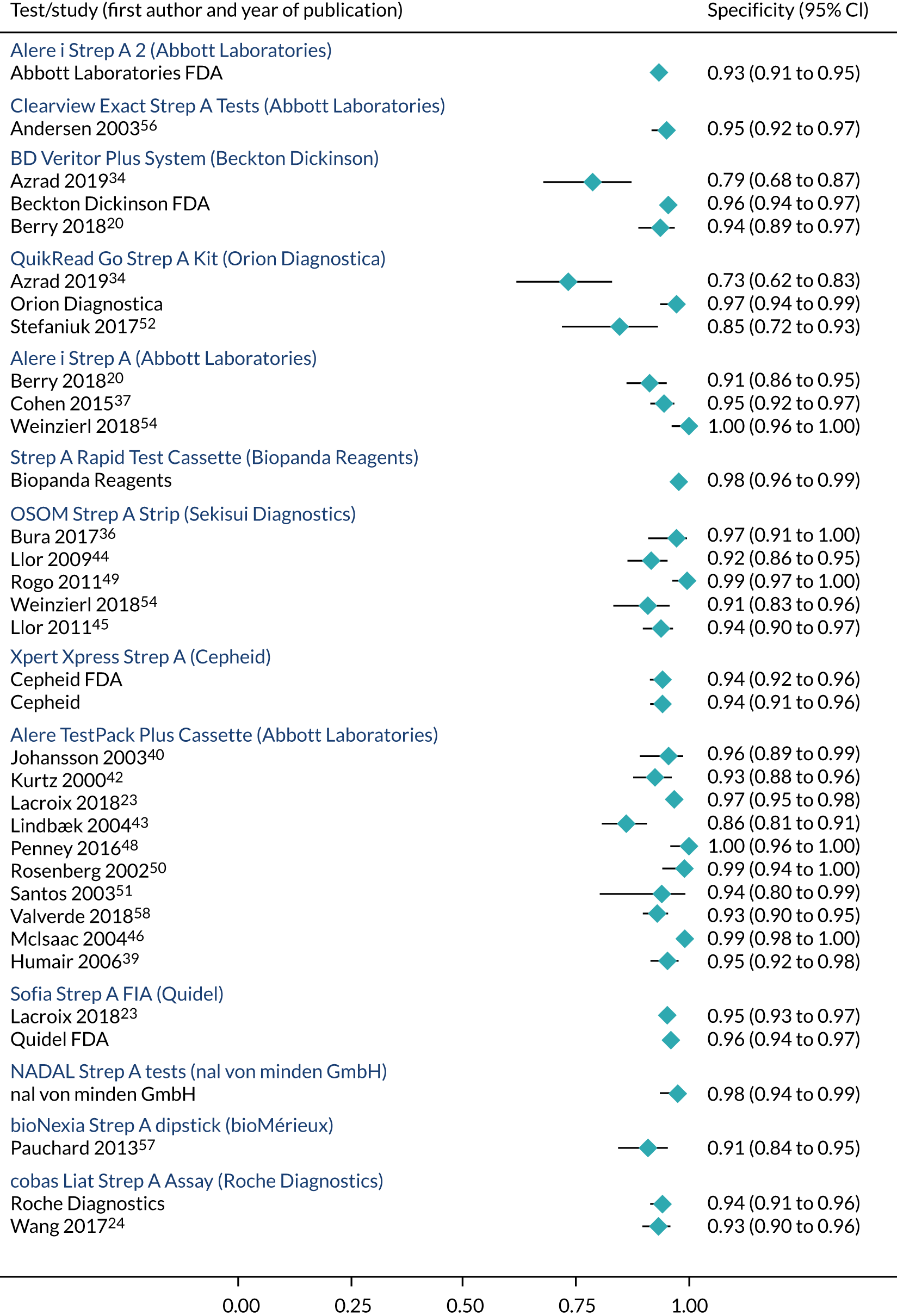

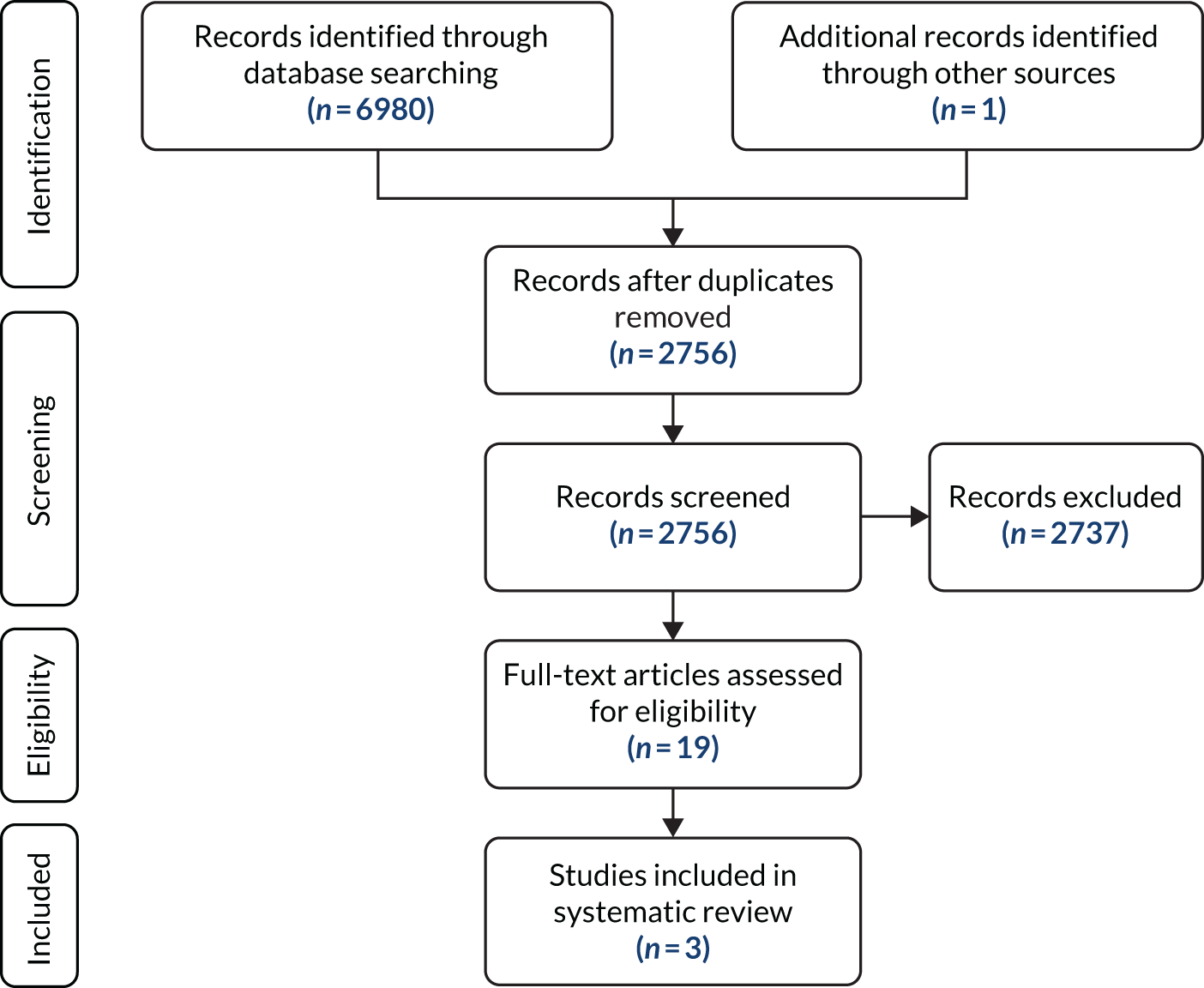

Figure 2 is a Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram that illustrates the study selection process for the clinical effectiveness review. The search identified 5919 records through database and other searches. Following duplicate removal, we screened 3309 records, of which 3072 were excluded by their titles and abstracts, leaving 237 assessed for their eligibility to be included in the review. A total of 199 studies were subsequently excluded with reasons, leaving 38 studies [26 full texts,6,20,23,24,34–55 three abstracts,56–58 five manufacturers’ studies (submitted directly to NICE in response to a request for information) and four FDA documents59–62]. The most common reason for exclusion at this stage was not reporting any of the rapid tests listed in the scope. The full list of excluded studies with reasons for exclusion can be found in Appendix 3.

FIGURE 2.

The PRISMA flow diagram showing study selection for the clinical effectiveness review.
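The counts reported for the study selection process can be checked for internal consistency with a few lines of arithmetic. The variable names are hypothetical, and the number of duplicates is derived on the assumption that the difference between records identified and records screened consists solely of duplicates.

```python
# Counts as stated in the text.
records_identified = 5919
records_screened = 3309
excluded_on_title_abstract = 3072
excluded_on_full_text = 199
included_by_type = {
    "full texts": 26,
    "abstracts": 3,
    "manufacturers' studies": 5,
    "FDA documents": 4,
}

# Derived quantities.
duplicates_removed = records_identified - records_screened  # assumption: duplicates only
full_texts_assessed = records_screened - excluded_on_title_abstract
studies_included = full_texts_assessed - excluded_on_full_text

# Each stage should reconcile with the next.
consistent = (
    full_texts_assessed == 237
    and studies_included == 38
    and studies_included == sum(included_by_type.values())
)
```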

Study characteristics

Characteristics of the 38 studies included in the clinical effectiveness review are described in Figures 3 and 4 and Table 6. Of the 29 studies (full texts and abstracts)6,20,23,24,34–58 identified by the search, 26 of the studies reported test accuracy data. 20,23,24,34–52,54,57,58,63 Three of the identified studies6,53,55 reported only other outcomes (such as antibiotic-prescribing rates) and did not report test accuracy. In addition, five studies were sent by manufacturers in response to a request for information by NICE and four FDA documents were retrieved. 59–62

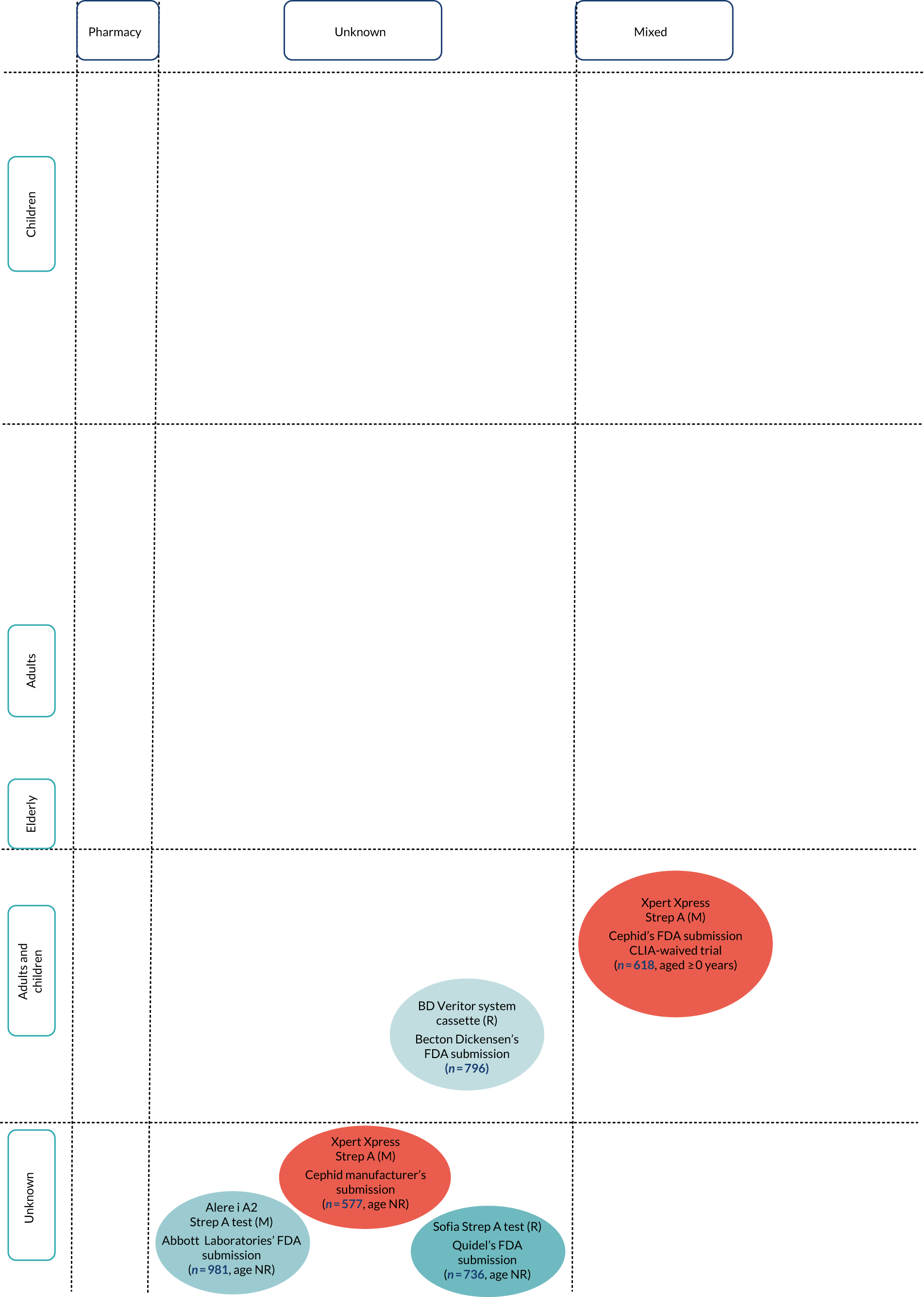

FIGURE 3.

Diagram of studies by test type, setting and population for included test accuracy studies with extractable 2 × 2 data. Note that lines between tests indicate head-to-head (direct) comparisons. M, molecular test; NR, not reported; R, rapid test.

FIGURE 4.

Diagram of studies by test type, setting and population for included test accuracy studies with extractable 2 × 2 data. M, molecular test; NR, not reported; R, rapid test.

| Study (first author and year of publication) | Data source | Setting | Study population: n | Study population: age group as reported | Study population: sore throat clinical score criteria | Study population: strep A prevalence (%) | Index test | Comparison with Centor/McIsaac/FeverPAIN scores? | Throat swab culture medium | Outcomes | Test accuracy in high-risk subpopulations with Centor/McIsaac scores of ≥ 3 points or FeverPAIN score of ≥ 4 points |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Published articles and abstracts | | | | | | | | | | | |
| Anderson 200356 | Abstract | Secondary | 353 | Children (0–14 years) | No criteria reported; used clinical symptoms | 15 | Clearview Strep A | No | NR | Test accuracy | NR |
| Azrad 201934 | Published article | Secondary | 100 | NR | No criteria reported; used clinical symptoms | 25 | BD Veritor system | No | Streptococcal selective agar | Test accuracy | NR |
| | | | | | | | QuikRead Go Strep A test kit (Orion Diagnostica) | | | | |
| Berry 201820 | Published article | Secondary | 215 | Children (age range not reported) | NR | 19.5 | Alere i Strep A test | No | Blood agar | Test accuracy; antibiotic-prescribing behaviour | NR |
| | | | | | | | BD Veritor system | | | | NR |
| Bird 201835 | Published article | Secondary | 395 | Children | McIsaac ≥ 3 points | NR or not calculable | bioNexia Strep A | Yes/Centor | NA | Test accuracy; antibiotic-prescribing behaviour | NR |
| Bura 201736 | Published article | Primary | 101 | Adults (18–44 years) | Centor ≥ 2 points | 22.7 | OSOM Strep A test (Sekisui Diagnostics) | Yes/Centor | Blood agar | Test accuracy; antibiotic-prescribing behaviour | No |
| Cohen 201537 | Published article | Secondary | 481 | Children (median age 11 years) | McIsaac all scores | 30.3 | Alere i Strep A test | Yes/McIsaac | Blood agar | Test accuracy | No |
| Dimatteo 200138 | Published article | Secondary | 383 | Adults (18–86 years) | Centor ≥ 1 point | NR or not calculable | Alere™ TestPack +Plus Strep A (Abbott Laboratories) | Yes/McIsaac | Streptococcal selective agar | Test accuracy | NR |
| Humair 200639 | Published article | Primary | 224 | Adults (15–65 years) | Centor = 2 points; Centor > 2 points | 46.9 | Alere TestPack +Plus Strep A (Abbott Laboratories) | Yes/Centor | Blood agar | Test accuracy; antibiotic-prescribing behaviour | Yes |
| Johansson 200340 | Published article | Primary | 144 | Mixed (children and adults) | NR | 31.4 | Alere TestPack +Plus Strep A (Abbott Laboratories) | No | NR | Test accuracy; antibiotic-prescribing behaviour | NR |
| Johnson 200141 | Published article | Primary | 522 | Adults (median age 26 years) | No criteria reported; used clinical symptoms | NR or not calculable | Alere TestPack +Plus Strep A (Abbott Laboratories) | No | Blood agar | Test accuracy | NR |
| Kurtz 200042 | Published article | Secondary | 257 | Children (4–15 years) | No criteria reported; used clinical symptoms | 31.1 | Alere TestPack +Plus Strep A (Abbott Laboratories) | No | Blood agar | Test accuracy | NR |
| Lacroix 201823 | Published article | Secondary | 1002 | Children | McIsaac ≥ 2 points | 38 | Sofia Strep A FIA (Quidel) | No | Blood agar | Test accuracy | No |
| | | | | | | | Alere TestPack +Plus Strep A test (Abbott Laboratories) | | | | |
| Lindbæk 200443 | Published article | Primary | 306 | Adults (median age 23.9 years) | NR | 35.9 | Alere TestPack +Plus Strep A (Abbott Laboratories) | No | Streptococcal selective agar | Test accuracy | NR |
| Little 20136 | Published article | Primary | 1760 | Mixed (aged ≥ 3 years) | FeverPAIN ≥ 1 point | NR or not calculable | Alere TestPack +Plus Strep A (Abbott Laboratories) | Yes | None | Antibiotic-prescribing behaviour | No |
| Llor 200944 | Published article | Primary | 222 | Adults (median age 30.6 years) | Centor ≥ 2 points | 21.2 | OSOM Strep A | Yes/Centor | Blood agar | Test accuracy | No |
| Llor 201145 | Published article | Primary | 276 | Adults (median age 31.7 years) | Centor ≥ 1 point | 16.7 | OSOM Strep A test | Yes/Centor | Blood agar | Test accuracy; antibiotic-prescribing behaviour | Yes |
| McIsaac 200446 | Published article | Primary | 787 | Children (3–17 years) and adults (≥ 18 years); results reported separately by group | McIsaac all scores | 29 | TestPack Plus Strep A test (Abbott Laboratories) | Yes/McIsaac | Blood agar | Test accuracy; antibiotic-prescribing behaviour | No |
| Nerbrand 200247 | Published article | Primary | 615 | Mixed (children and adults) | No criteria reported; used clinical symptoms | 21.1 | TestPack Plus Strep A test (Abbott Laboratories) | No | Blood agar | Test accuracy | NR |
| Pauchard 201357 | Abstract | Secondary | 193 | Children (3–18 years) | McIsaac > 2 points | 37 | Strep A Rapid Test (Biopanda Reagents) | Yes/McIsaac | NR | Test accuracy | NR |
| Penney 201648 | Published article | Secondary | 147 | Children (mean age 8.8 years) | No criteria reported; used clinical symptoms | 40.1 | Alere TestPack +Plus Strep A test (Abbott Laboratories) | No | Streptococcal selective agar | Test accuracy | NR |
| Rogo 201149 | Published article | Secondary | 228 | Children | No criteria reported; used clinical symptoms | 28.9 | OSOM Strep A test | No | Blood agar | Test accuracy | NR |
| Rosenberg 200250 | Published article | Secondary | 126 | Mixed (children and adults) | Centor all scores | 25.4 | TestPack Plus Strep A test (Abbott Laboratories) | Yes/Centor | Blood agar | Test accuracy; antibiotic-prescribing behaviour | NR |
| Santos 200351 | Published article | Secondary | 49 | Children (1–12 years) | No criteria reported; used clinical symptoms | 30 | Alere TestPack +Plus Strep A (Abbott Laboratories) | No | Blood agar | Test accuracy | NR |
| Stefaniuk 201752 | Published article | Primary | 44 | Children | McIsaac/Centor all scores | 26.3 | QuikRead Go Strep A test kit (Orion Diagnostica) | Yes/Centor | Blood agar | Test accuracy; antibiotic-prescribing behaviour | No |
| | | | 96 | Adults and children | McIsaac/Centor all scores | 22.4 | | | | | |
| Thornley 201653 | Published article | Pharmacy | 149 | NR | Centor > 2 points | 24.2 | OSOM Strep A test (Sekisui Diagnostics) | Yes/Centor | None | Antibiotic-prescribing behaviour | NA |
| Valverde 201858 | Abstract | Secondary | 580 | Mixed (aged ≥ 0 years) | NR | NR or not calculable | TestPack Plus Strep A test | No | Blood agar | Test accuracy | NR |
| Wang 201724 | Published article | Primary | 427 | Children | Centor ≥ 1 point | 30.2 | cobas Liat Strep A Assay (Roche Diagnostics) | No | NR | Test accuracy | No |
| Weinzierl 201854 | Published article | Secondary | 160 | Children (median age 6.5 years) | NR | 38 | OSOM Strep A test | No | Blood agar | Test accuracy | NR |
| | | | | | | | Alere i Strep A test | | | | |
| Worrall 200755 | Published article | Primary | 533 | NR | Centor all scores | NR or not calculable | Clearview Exact Strep A (Abbott Laboratories) | Yes/Centor | NA | Antibiotic-prescribing behaviour | NA |
| Manufacturer’s studies provided in responses to request by NICE | | | | | | | | | | | |
| Biopanda Reagents | Manufacturer’s information | Secondary | 160 | Median age 6.5 years | NA | 23.2 | Alere i Strep A test | No | Blood agar | Test accuracy | NR |
| Cepheid | Manufacturer’s information | Primary | 577 | NR | NA | 25.6 | Xpert Xpress | Yes/Centor | NA | Test accuracy | NR |
| nal von minden GmbH | Manufacturer’s information | Unknown | 244 | Mixed (adults and children) | NA | 34.4 | NADAL Strep A test | No | Blood agar | Test accuracy | NR |
| Orion Diagnostica | Manufacturer’s information | Primary | 271 | NR | NA | 32.8 | QuikRead Go Strep A test kit (Orion Diagnostica) | No | Streptococcal selective agar | Test accuracy | NR |
| Roche Diagnostics | Manufacturer’s information | Mixed | 570 | Mixed (aged ≥ 3 years) | NA | 30.4 | cobas Liat Strep A Assay (Roche Diagnostics) | No | Blood agar | Test accuracy | NR |
| FDA documents | | | | | | | | | | | |
| Abbott Laboratories61 | FDA document | Mixed | 981 | NR | NA | 20.2 | Alere i Strep A 2 test | No | Blood agar | Test accuracy | NR |
| Becton Dickinson59 | FDA document | Mixed | 796 | Mixed (aged ≥ 0 years) | NA | 18.7 | BD Veritor system | No | Blood agar | Test accuracy | NR |
| Cepheid62 | FDA document | Mixed | 618 | NR | NA | 25.6 | Xpert Xpress Strep A (Cepheid) | No | NR | Test accuracy | NR |
| Quidel60 | FDA document | Mixed | 736 | NR | NA | 17.4 | Sofia Strep A FIA (Quidel) | No | Blood agar | Test accuracy | NR |

The tests, their settings, the populations they cover and the head-to-head studies are illustrated in Figures 3 and 4.

Population

The 38 included studies comprised ≈ 14,000 symptomatic participants. Prevalence of strep A ranged from 15% to 49%, with no clear demographic or clinical patterns accounting for this variation. 39,56 Similarly, prevalence estimates were not systematically higher in either primary or secondary care settings. The study population comprised adults and children; however, the exact proportions are unknown as they were not reported in about half of the included studies. In most of the included studies, participants aged < 18 years were identified as children. Only two studies met the age criterion for children (aged 5–14 years) as defined in the protocol and scope. 50,51 Hence, studies that included children aged < 5 years as well as ≥ 5 years were included in the present review. Moreover, only two studies met the age criterion for adults (aged ≥ 15 years) as defined in the protocol and scope and, therefore, the findings of the review may be applicable to only a mixed population. 39,52

All 38 studies included patients with a sore throat; however, other clinical characteristics were insufficiently reported across most of the included studies. For instance, sore throat clinical scores (e.g. Centor/McIsaac/FeverPAIN scores) were reported in 16 studies,6,23,35–39,44–46,50,52,53,55,57 of which two exclusively included patients with high clinical scores (i.e. Centor ≥ 3 points, FeverPAIN ≥ 4 points). 6,53 Both of these studies were on prescribing behaviours. However, there were two test accuracy studies that included patients with lower clinical scores (i.e. Centor scores of < 3 points) but reported test accuracy results separately by Centor score. 39,45

Recent antibiotic use prior to enrolment was considered in eight included studies, and patients without any recent use of antibiotics prior to recruitment were eligible for inclusion in these studies. 24,35,36,42,45,47,48,50

Index tests

There were more studies evaluating RADTs (76%, 29/38) than were evaluating molecular tests (18%, 7/38) or studies comparing both rapid tests and molecular tests (5%, 2/38). For instance, the Alere™ TestPack +Plus Strep A (Abbott Laboratories) was the most common antigen detection test, which was evaluated in 13 studies (excluding unpublished studies conducted by the manufacturers). 6,23,38–43,46–48,51,58 Conversely, the only molecular test evaluated in a peer-reviewed journal article was the PCR-based cobas Liat Strep A Assay (Roche Diagnostics). 24

As shown in Table 6, there were four studies providing head-to-head comparisons of index tests: BD Veritor System (Becton Dickinson) and QuikRead Go (Orion Diagnostica);34 Alere i Strep A (Abbott Laboratories) and BD Veritor System (Becton Dickinson);20 Alere i Strep A (Abbott Laboratories) and Sofia Strep A fluorescent immunoassay (FIA) (Quidel);23 and Alere i Strep A (Abbott Laboratories) and OSOM Strep A. 54 Essentially, each index test was compared with throat culture as the reference standard in order to obtain test accuracy.

The searches identified test accuracy studies of OSOM Ultra Strep A (Sanofi Genzyme and Sekisui Diagnostics). 64,65 However, these studies were subsequently excluded because the EAG could not confirm whether this test is the same as the OSOM Strep A test (Genzyme and Sekisui Diagnostics), which is listed among the scoped rapid tests. Similarly, it was unclear if Sofia Strep A+ Plus FIA (Quidel)66 and OSOM Strep A (Sekisui Diagnostics)67 were identical to Sofia Strep A FIA (Quidel) and OSOM Strep A (Sekisui Diagnostics), respectively; hence, studies of the former were excluded.

Comparator and reference standard

Index tests were compared with Centor, McIsaac or FeverPAIN scoring tools in 12 studies. 6,35,36,38,45,46,50,52,53,55,57,68 However, only six of these studies directly compared test accuracy between clinical scoring tools and point-of-care tests. 39,44–46,52 Only two reported test accuracy in patients with high clinical scores (Centor ≥ 3 points, FeverPAIN ≥ 4 points). 39,45

The culture medium used for the reference standard (blood agar or streptococcal selective agar) was reported in all but five studies. 24,40,56,57 Neither the manufacturers’ submissions (submitted directly to NICE in response to a request for information) nor the FDA studies provided information on index tests compared with clinical scoring tools.

Outcomes

Thirty-eight studies were included across all outcomes. Twenty-six published articles (full texts and abstracts) reported test accuracy data (of which seven also reported on antibiotic-prescribing rates and five reported on test failure rate); there were an additional five submissions from manufacturers and four FDA documents.

Five studies had insufficient data to construct 2 × 2 contingency tables to ascertain the accuracy of index tests with microbiological throat culture as the reference standard. 6,35,47,53,55 These studies were therefore excluded from the assessment of test accuracy (see Point-of-care/index tests). An attempt to verify at least some of the discrepant results between rapid tests and microbiological culture was undertaken in only five studies. 20,23,24,37,43 Antibiotic-prescribing behaviour was reported in 12 studies. 6,20,35,36,39,40,45,46,50,52,53,55 None of the other outcomes in the scope or protocol was reported in any of the included studies.

Setting

Participants in the included studies were recruited from GP/primary care clinics/family practices,6,40,41,43–47 community pharmacies,53 paediatric clinics,42,49,54,56 paediatric emergency departments,23,48,57 hospital outpatient departments20,51 and emergency departments. 35,50 There were two multicentre studies with mixed populations from primary and secondary care settings: Cohen et al. 37 sampled patients from the emergency department (secondary care) and urgent care clinics (primary care); and Wang et al. 24 sampled patients from paediatric clinics (secondary care) and family practices (primary care).

Only one unpublished study supplied by the manufacturers confirmed the study setting (Orion Diagnostica, primary care). The remaining unpublished manufacturer studies did not report their settings, so they may have included mixed populations from primary and secondary care; this is purely speculative. Nothing in these studies suggests that inpatients were recruited.

Study design

The 26 published studies on test accuracy comprised one RCT45 and 25 cohort studies. 20,23,24,34–44,46,48–52,55–58,69 It was unclear what study design had been undertaken in any of the unpublished studies provided by the manufacturers or the FDA.

The 12 studies that provided data on antibiotic-prescribing rates comprised three RCTs,6,45,55 one before-and-after cohort study20 and eight one-armed cohort studies. 35,36,39,40,46,50,52,53

Quality considerations of included studies

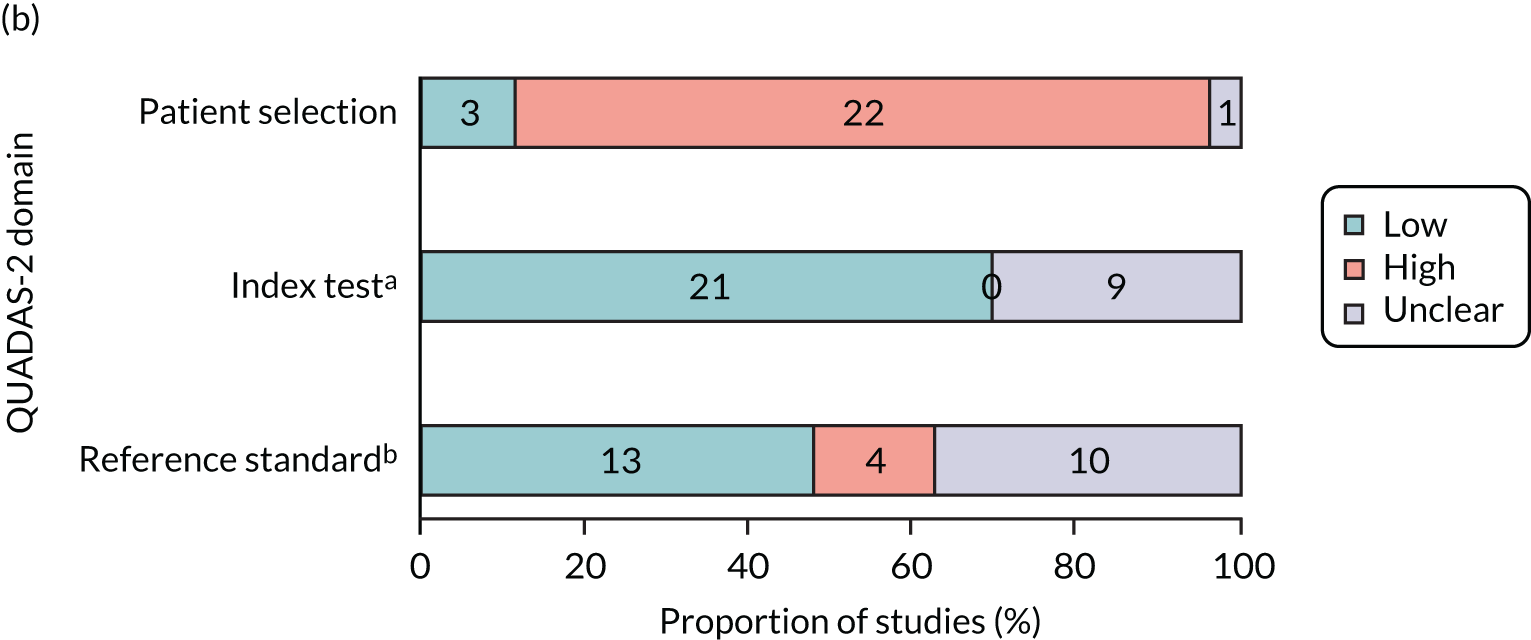

The assessments of risk of bias and applicability for the 26 included test accuracy studies20,23,24,34–46,48–52,55–58,69 using the QUADAS-2 tool are summarised in Table 7 and Figure 5. Four of the included studies compared two index tests that are relevant to this review, so there are 30 quality assessment ratings for the index test domains. Likewise, one study included two different culture media as its reference standard, so there are 27 quality assessment ratings across the reference standard domains.

| Study (first author and year of publication) | Risk of bias: patient selection | Risk of bias: index test | Risk of bias: additional index test | Risk of bias: reference standard | Risk of bias: additional reference standard | Risk of bias: flow and timing | Applicability concerns: patient selection | Applicability concerns: index test | Applicability concerns: additional index test | Applicability concerns: reference standard | Applicability concerns: additional reference standard |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Andersen 200356 | Unclear | High | NA | Unclear | NA | Unclear | High | Unclear | NA | Unclear | NA |
| Azrad 201934 | High | Low | Low | Unclear | NA | High | High | Low | Low | Unclear | NA |
| Berry 201820 | Unclear | High | Low | High | NA | Unclear | High | Low | Low | Unclear | NA |
| Bird 201835 | Unclear | High | NA | Unclear | NA | High | High | Unclear | NA | Unclear | NA |
| Bura 201736 | High | High | NA | Low | NA | High | High | Low | NA | Low | NA |
| Cohen 201537 | Unclear | Low | NA | Low | NA | Unclear | High | Low | NA | Low | NA |
| Dimatteo 200138 | High | High | NA | High | NA | High | Low | Unclear | NA | High | NA |
| Humair 200639 | Low | High | NA | Unclear | NA | Low | Low | Low | NA | Low | NA |
| Johansson 200340 | Unclear | High | NA | Unclear | NA | High | High | Unclear | NA | Unclear | NA |
| Johnson 200141 | High | High | NA | High | NA | High | High | Low | NA | High | NA |
| Kurtz 200042 | Unclear | High | NA | High | Low | Unclear | High | Low | NA | High | Low |
| Lacroix 201823 | Unclear | Low | High | Low | NA | Low | High | Low | Low | Low | NA |
| Lindbæk 200443 | Unclear | High | NA | High | NA | Low | High | Low | NA | High | NA |
| Llor 200944 | Low | High | NA | Unclear | NA | Low | High | Low | NA | Unclear | NA |
| Llor 201145 | Low | High | NA | Unclear | NA | Unclear | Low | Low | NA | Low | NA |
| McIsaac 200446 | Unclear | High | NA | Low | NA | High | Unclear | Unclear | NA | Low | NA |
| Nerbrand 200247 | Unclear | High | NA | Unclear | NA | Low | High | Low | NA | Low | NA |
| Pauchard 201357 | Unclear | High | NA | Unclear | NA | Unclear | High | Unclear | NA | Unclear | NA |
| Penney 201648 | Low | High | NA | Unclear | NA | Low | High | Low | NA | Unclear | NA |
| Rogo 201149 | Unclear | High | NA | High | NA | Unclear | High | Low | NA | Unclear | NA |
| Rosenberg 200250 | High | High | NA | Low | NA | Low | High | Low | NA | Low | NA |
| Santos 200351 | Unclear | High | NA | Unclear | NA | High | High | Low | NA | Low | NA |
| Stefaniuk 201752 | Unclear | Low | NA | Unclear | NA | Unclear | High | Low | NA | Low | NA |
| Valverde 201858 | Unclear | High | NA | Low | NA | Unclear | High | Unclear | NA | Low | NA |
| Wang 201724 | Unclear | Low | NA | Unclear | NA | Low | High | Low | NA | Unclear | NA |
| Weinzierl 201854 | Unclear | Low | High | Low | NA | Unclear | High | Unclear | Unclear | Low | NA |

FIGURE 5.

Concerns regarding bias and applicability in included studies. (a) Risk of bias; and (b) concerns regarding applicability. a, Four studies included two index tests relevant to this review; b, one study included two reference standards (culture methods) relevant to this review.

Risk of bias for test accuracy studies

In general, the methodological and reporting quality of the included studies was poor, with risk of bias considered to be high in two or more domains for 13 studies (50%). 20,34–36,38,40–43,46,49–51 No study was considered to be at a low risk of bias in all four domains.

In 65.4% of studies (17/26),20,23,24,35,37,42,43,46,47,49,51,52,54,56–58,70 it was not clear whether patients were consecutively included or a convenience sample had been chosen, and only 15.4% (4/26 studies)39,44,45,48 were rated as having a low risk of bias in the patient selection domain (domain 1: patient selection). The selection process in the remaining 19.2% of studies (5/26)34,36,41,50 was rated as being at a high risk of bias, with studies clearly reporting convenience samples, having case–control designs or having made inappropriate exclusions from the eligible screening population.

The key risks of bias concerned how the index test was undertaken (domain 2: index test; 22/30 domain ratings were at high risk, 73.3%, across 22/26 studies). 20,23,35,36,38–46,48–51,55–58,69 Although all of the included studies evaluated predeveloped tests with in-built thresholds, in many cases use of the index test required a subjective reading by a clinician. There were further concerns that studies often used the swab intended for the index test to first streak the agar for biological culture, rather than taking an additional swab sample. Using one swab for multiple purposes may reduce the amount of sample available to the index test and lead to its accuracy being underestimated.
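The 22/30 and 22/26 figures can be reproduced by tallying the two risk-of-bias index test columns of the QUADAS-2 table in this section. The sketch below transcribes those two columns (reference numbers dropped from the study labels; `None` stands for NA) and counts the ratings; the variable names are illustrative assumptions rather than part of the review's methods.

```python
# (study, index test rating, additional index test rating or None for NA),
# transcribed from the risk-of-bias columns of the QUADAS-2 table above
ratings = [
    ("Andersen 2003", "High", None), ("Azrad 2019", "Low", "Low"),
    ("Berry 2018", "High", "Low"), ("Bird 2018", "High", None),
    ("Bura 2017", "High", None), ("Cohen 2015", "Low", None),
    ("Dimatteo 2001", "High", None), ("Humair 2006", "High", None),
    ("Johansson 2003", "High", None), ("Johnson 2001", "High", None),
    ("Kurtz 2000", "High", None), ("Lacroix 2018", "Low", "High"),
    ("Lindbaek 2004", "High", None), ("Llor 2009", "High", None),
    ("Llor 2011", "High", None), ("McIsaac 2004", "High", None),
    ("Nerbrand 2002", "High", None), ("Pauchard 2013", "High", None),
    ("Penney 2016", "High", None), ("Rogo 2011", "High", None),
    ("Rosenberg 2002", "High", None), ("Santos 2003", "High", None),
    ("Stefaniuk 2017", "Low", None), ("Valverde 2018", "High", None),
    ("Wang 2017", "Low", None), ("Weinzierl 2018", "Low", "High"),
]

# One rating per index test: 26 studies plus 4 additional index tests
all_ratings = [r for _, first, second in ratings
               for r in (first, second) if r is not None]
high_ratings = sum(r == "High" for r in all_ratings)

# Studies with at least one index test rated as high risk
studies_with_high = sum("High" in (first, second) for _, first, second in ratings)

pct = 100 * high_ratings / len(all_ratings)
print(f"{high_ratings}/{len(all_ratings)} ratings at high risk ({pct:.1f}%)")
# -> 22/30 ratings at high risk (73.3%)
print(f"{studies_with_high}/{len(ratings)} studies with a high-risk index test rating")
# -> 22/26 studies with a high-risk index test rating
```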

Unclear or incomplete reporting was common in the reference standard domain (domain 3: reference standard). In all studies, time taken to process the biological culture exceeded that of the rapid test, with biological cultures generally reported 48 hours following sample collection. However, many studies did not state that laboratory staff were blinded to the results of the index test or reference standard (domain 3: reference standard, 13/27 studies, 48.1%). 24,34,35,39,40,44,45,47,48,51,52,56,57 There was a high risk of bias in 22.2% of the studies (6/27)20,38,41–43,49 because the methods of biological culture testing did not match current UK guidelines. 29

The flow of patients through the studies was rated as being at a high risk of bias in 31% of studies (8/26, domain 4: flow and timing). 34–36,38,40,41,46,51 The majority of these (62.5%, 5/8 studies)34–36,41,46,51 had incomplete testing and made exclusions from the analysis. In two of the eight studies, only some patients received the reference standard (partial verification bias): in one study,38 only patients with negative rapid test results received the reference standard; in the other,40 only those with positive rapid test results did. The use of antibiotics was a further concern: one study directly reported 61 patients taking antibiotics at the time of testing,34 and 90% (9/10) of unclear ratings were linked to prior/current antibiotic use not being reported. 20,37,42,45,49,52,54,56–58
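To illustrate why partial verification is a concern, the sketch below compares accuracy estimates from a fully verified 2 × 2 table with those obtained when only index test-positive or only index test-negative patients receive culture. All counts are hypothetical and are not drawn from the two studies described above; the function name `accuracy` is an assumption of this sketch.

```python
def accuracy(tp, fp, fn, tn):
    """Return sensitivity, specificity, PPV and NPV (None if the denominator is zero)."""
    def ratio(num, den):
        return num / den if den else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical cohort in which every patient receives culture
full = accuracy(tp=45, fp=5, fn=5, tn=145)

# Only index test-positive patients cultured: fn and tn are never observed
positives_only = accuracy(tp=45, fp=5, fn=0, tn=0)

# Only index test-negative patients cultured: tp and fp are never observed
negatives_only = accuracy(tp=0, fp=0, fn=5, tn=145)

for label, result in [("full verification", full),
                      ("positives only", positives_only),
                      ("negatives only", negatives_only)]:
    print(label, result)
```

In this hypothetical example, verifying only positives leaves the positive predictive value unchanged but gives a naive sensitivity of 1.0 and a specificity of 0.0; verifying only negatives preserves the negative predictive value but gives a naive sensitivity of 0.0 and a specificity of 1.0. In neither case can sensitivity and specificity be estimated from the verified patients alone.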

Applicability of study findings for test accuracy studies

The applicability of study findings was assessed with regard to three domains: patient selection, index test (rapid or molecular test) and reference standard (biological culture). There were significant concerns regarding the applicability of the studies to UK practice for patient selection in 22 of the 26 studies (85%, domain 1: patient selection). 20,23,24,34–37,40–44,48–52,55–58,69 In the UK, the test would be given only following an assessment using a clinical scoring tool, such as Centor or FeverPAIN. The rapid test would be given only to people with Centor scores of ≥ 3 points and FeverPAIN scores of ≥ 4 points. In all 22 studies, either a clinical scoring tool was not used or, if used, patients were included with scores lower than UK cut-off points and test accuracy data were not reported separately by score. In addition, 17 of the 22 studies (77%)20,23,24,35,37,40,42,48–52,55–58,69 included children aged < 5 years. Children aged < 5 years follow a different clinical pathway owing to differences in the presentation of symptoms and difficulties around communication and sample collection. 27

Concerns regarding the applicability of the index test were rated as being low for the majority of the studies (21/30 domains, 70%, 18 studies),20,23,24,34,36,37,39,41–45,48–52,69 with studies reporting that the tests were carried out in accordance with manufacturer’s guidelines. The eight remaining studies35,38,40,46,54,56–58 were rated as being unclear as this was not specified (domain 2: index test).

Only four studies (4/27, 14.8%)38,40,42,43 were rated as having high concern for the applicability with respect to the reference standard (owing to deviations from UK guidelines on the undertaking of appropriate culture methods with respect to agar type, incubation period or atmosphere; domain 3: reference standard).

Assessment of studies of prescribing behaviour and clinical outcomes

There were 12 studies that reported on antibiotic-prescribing behaviour. 6,20,35,36,39,40,45,46,50,52,53,55 Of these, three studies were RCTs6,45,55 and were quality appraised using the Cochrane risk-of-bias tool for RCTs. 30 There were six studies (including one before-and-after study) that were single-arm cohorts and have been appraised using the JBI critical appraisal checklist31 for analytical cross-sectional studies. 20,36,40,50,52,71 The remaining three studies were one-armed cohort studies using predetermined guidelines to hypothetically estimate prescribing behaviour and offer no information on what happened in the real world or on what clinicians would do. 39,46,53 These studies were not quality appraised and have been briefly summarised later in the results (see Antibiotic-prescribing behaviours: other study designs).

Randomised controlled trials

Risk of bias of the included trials is shown in Figure 6 and Table 8. The domains regarding blinding were removed because we were interested in test–treat trials measuring prescribing decisions with and without rapid tests: clinicians could not be blinded to test results, and we considered blinding to which exact test was used to be unnecessary in this context. In general, the methodological quality of the RCTs was rated as being fair, with all studies having at least one domain rated as unclear. There was unclear risk of bias in four domains across the three studies (random sequence generation, allocation concealment, incomplete outcome data and selective outcome reporting) because the information presented was insufficient to make an assessment. The remaining applicable domains were judged to be at a low risk of bias.

FIGURE 6.

Concerns regarding the risk of bias of included RCTs.

Cohort studies

Risk of bias in the included cohort studies is shown in Figure 7 and Table 9. No study was rated as having had high methodological quality across all areas. There was low methodological quality regarding criteria for inclusion in 83% of studies (five out of six) and details regarding the study subjects in 33% of studies (two out of six). 20,35,40,50,52 These studies reported the details of the patients, but provided no information on those making the prescribing decisions. The outcome of interest in these studies was prescribing behaviour. Recording in medical records was considered to be a valid and reliable measurement of prescribing behaviour; only 33% of the studies clearly reported this. 20,36 A confounder in the studies was current antibiotic use; 33% (two out of six) of studies did not clearly specify current or recent antibiotic use as an excluding factor.

FIGURE 7.

Methodological quality of included analytical cross-sectional studies. NA, not applicable.

| Study (first author and year of publication) | Were the criteria for inclusion in the sample clearly defined? | Were the study subjects and the setting described in detail? | Was the exposure measured in a valid and reliable way? | Were objective, standard criteria used for measurement of the condition? | Were confounding factors identified? | Were strategies to deal with confounding factors stated? | Were the outcomes measured in a valid and reliable way? | Was statistical analysis appropriate? |
|---|---|---|---|---|---|---|---|---|
| Berry 201820 | No | Yes | Yes | No | Unclear | Unclear | Yes | Yes |
| Bird 201835 | No | Yes | Yes | Yes | Yes | Yes | No | Yes |
| Bura 201736 | Unclear | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Johansson 200340 | No | No | Yes | Unclear | Yes | Yes | No | Yes |
| Rosenberg 200250 | No | Yes | Yes | Yes | Yes | Yes | Unclear | Yes |