Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned/managed by the Methodology research programme (MRP). The Medical Research Council (MRC) is working with NIHR to deliver the single joint health strategy and the MRP was launched in 2008 as part of the delivery model. MRC is lead funding partner for MRP and part of this programme is the joint MRC–NIHR funding panel ‘The Methodology Research Programme Panel’.

To strengthen the evidence base for health research, the MRP oversees and implements the evolving strategy for high-quality methodological research. In addition to the MRC and NIHR funding partners, the MRP takes into account the needs of other stakeholders including the devolved administrations, industry R&D, and regulatory/advisory agencies and other public bodies. The MRP funds investigator-led and needs-led research proposals from across the UK. In addition to the standard MRC and RCUK terms and conditions, projects commissioned/managed by the MRP are expected to provide a detailed report on the research findings and may publish the findings in the HTA journal, if supported by NIHR funds.

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

© Queen’s Printer and Controller of HMSO 2021. This work was produced by Bojke et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This issue may be freely reproduced for the purposes of private research and study and extracts (or indeed, the full report) may be included in professional journals provided that suitable acknowledgement is made and the reproduction is not associated with any form of advertising. Applications for commercial reproduction should be addressed to: NIHR Journals Library, National Institute for Health Research, Evaluation, Trials and Studies Coordinating Centre, Alpha House, University of Southampton Science Park, Southampton SO16 7NS, UK.

2021 Queen’s Printer and Controller of HMSO

Chapter 1 Background

In the UK, decisions about the use of health-care interventions are made by various NHS organisations, as well as the immediate beneficiaries, namely patients. In England, these NHS organisations include the National Institute for Health and Care Excellence (NICE), NHS England and Public Health England (PHE). At the forefront of these decisions is the aim of maximising health, calling for judgements about the interventions that are expected to lead to higher health effects. When resources are limited, additional costs incurred will affect the access to care for other patients, and health foregone in this way should also be taken into account (a cost-effectiveness framework). 1

Although randomised controlled trials (RCTs) have been described as the principle source of evidence for such decision-making, these have considerable limitations including a lack of external validity, short study periods to assess long-term treatment effect and invalid generalisations of findings outside the study group. 2–4 In addition, RCTs are not possible or ethical in some situations.

These limitations also impact the use of RCTs for urgent health issues for which decisions need to be made promptly on the basis of limited, and often imperfect, available data. 5 Health technology assessments (HTAs) traditionally use decision-modelling methods that gather different forms of evidence, by defining mathematical relationships between a varied set of input parameters, in a way that describes aspects of the history of the disease of interest and the impact of the intervention.

Uncertainty in the evidence is pervasive in cost-effectiveness modelling and the analysis may be biased if uncertainty in the model inputs is not reflected. Uncertainty can be distinguished as epistemic or aleatory. 6,7 Aleatory uncertainty arises as a result of randomness (i.e. unpredictable variation in a process) and expert knowledge cannot reduce this type of uncertainty. 6 Therefore, it is sometimes referred to as irreducible uncertainty. Epistemic uncertainty is due to imperfect knowledge and it can be reduced with sufficient study and, therefore, expert judgement may be useful in its reduction. 6 Additional evidence can reduce uncertainty and provide a more precise estimate of cost-effectiveness. By quantifying uncertainty, it is possible to assess the potential value of additional evidence, inform the types of evidence that might be needed and consider restricted use until the additional evidence becomes available. 8

In some situations, several input parameters in the decision model may have only limited empirical data. For example, the evidence may not be on ‘final’ outcomes (e.g. cancer products licensed on evidence of progression-free survival), or the evidence base may not be well developed (e.g. in the areas of diagnostics, medical devices, early access to medicines scheme, or public health). In these situations, judgements are required for a decision to be reached regarding that parameter. To ensure accountability in the decision, these judgements should be made explicit and incorporated transparently into the decision-making process, an inherently Bayesian view on decision-making. Formal methods to quantify prior beliefs in the form of experts judgements exist, and are termed structured expert elicitation (SEE) methods. 7

Structural expert elicitation is a process that allows experts to express their beliefs in a statistical, quantitative form. If conducted in an appropriate manner, SEE is the best approach to characterise uncertainties associated with the cost-effectiveness of competing interventions and to assess the value of further evidence. SEE methods have been used in disciplines including weather forecasting and reliability analysis within engineering,9 but the research findings in these disciplines are often interpreted as contradictory, in particular the appropriateness of generating consensus among experts. 10 In terms of SEE in health care, NICE uses expert judgement across all guidance-making programmes, but expert elicitation (vs. expert opinion) is used less frequently. 11 Existing timelines and consequent time constraints are reported as the common obstacles when conducting expert elicitation in health care. 11

There is an increasing interest in SEE, as HTAs are conducted progressively closer to the launch of the intervention of interest. 12 SEE is also essential for ‘early modelling’ of new interventions or unknown diseases for which little or no evidence is available.

No standard guidelines exist to conduct expert elicitation in HTA, but there are a number of generic guidances, some of which have been used in HTA. 13,14 The most notable of these is the Sheffield Elicitation Framework (SHELF). 14 This is a package of documents, templates and software for eliciting probability distributions. The method begins by eliciting judgements from each expert individually and then elicits a single probability distribution from the group of experts. Cooke’s classical method is another generic technique that has been applied in HTA. This method primarily focuses on the synthesis of multiple experts beliefs. Patients are scored based on their performance on calibration questions (questions for which experts do not know true values) and their assessments are weighted according to their scores. 15 The third generic guidance applied in HTA is the Delphi method. This is an iterative survey that provides feedback from the experts over successive rounds, providing an opportunity for consensus as experts review their opinions based on new information from their peers. 16

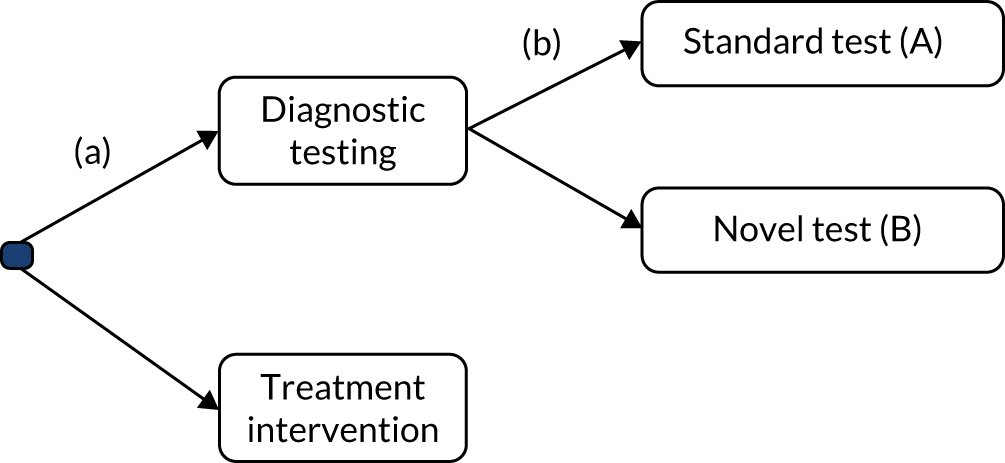

Although generic processes have been applied in HTA (Figure 1), there is an absence of a published guidance that is specific to HTA. Certain elements of the generic guidance may not be appropriate in a HTA context owing to resource and time constraints that are inherent in HTA.

FIGURE 1.

General schematic for SEE.

At present, an analyst needs to be aware of a number of key issues to consider when designing, conducting and analysing an elicitation exercise. In terms of the design, the analyst must decide what quantities to elicit. This will largely be informed by the requirements of the decision model. As a rule, experts should be asked to express their beliefs about observable quantities, such as probabilities, rather than unobservable quantities (i.e. moments of a distribution or covariates). Once the quantities have been chosen, the next choice will be based on which method(s) will be employed to express the parameters. Possible methods include fixed interval methods (FIMs) or variable interval methods (VIMs). The analyst must then choose which experts should be recruited to elicit these judgements. Once the beliefs have been elicited, a decision must be made on how to synthesise the beliefs.

There is heterogeneity in the existing methodology used in HTA. Given the lack of guidance, there is a need to develop a standard set of principles to guide the design and conduct of expert elicitation in HTA. It is essential that the elicited information represents how uncertain experts are about the current state of knowledge regarding a parameter of interest. There is a need to reflect the range of reasonable judgements that may be expressed across experts (between-expert variation) and determine how decision-makers use these elicited judgements in the decision-making process.

The overall aim of this report was to establish a reference protocol or guideline for the elicitation of experts’ judgements to inform health-care decision-making (HCDM). To achieve this overall aim, the report will focus on the following objectives:

-

providing clarity on the methods for collecting and using experts’ judgements within an assessment of cost-effectiveness

-

demonstrating when alternative methodology may be required in a particular context/constraints (e.g. time)

-

establishing preferred approaches for elicitation for a range of parameters and a range of decision-making contexts

-

determining which elicitation methods allow experts to express parameter uncertainty, as opposed to variability

-

determining the applicability and usefulness of the reference protocol developed within a case study application.

The initial research protocol outlined two additional objectives: (1) establish the accuracy of consensus-based methods in generating representations of uncertainty and (2) establish the accuracy of alternative methods of mathematically pooling the individual judgements of experts. The objectives were subsequently refined to explore individual factors that can affect the accuracy of consensus-based methods, in particular to explore individuals’ ability to extrapolate from their knowledge base, and to explore how individuals revise their answers when presented with group summaries. Further details on the reason for these deviations is provided in Chapter 8.

To achieve these objectives, the activities of this project were split into three work packages and an evaluation. The activities of the project are summarised in Figure 2.

FIGURE 2.

Summary of project activities. WP, work package.

Specifically the remaining chapters in this report provide the following.

Chapter 2 reviews existing guidelines for formal elicitation (SEE). This review identifies the approaches used in existing guidelines and aims to identify whether or not dominant approaches evolve in terms of the choices that need to be made in the elicitation process.

In the light of this review, Chapter 3 considers contexts for structured elicitation in HCDM. Different contexts may influence the requirements and feasibilities of expert elicitation. Chapter 3 discusses this in detail, and identifies the potential constraints in decision-making in health care and discusses the implications for expert elicitation methodology.

Chapter 4 is a review of SEE applications in cost-effectiveness modelling. The chapter summarises the basis for the methodological choices made in each application and details the challenges that were reported by the authors.

Chapter 5 reviews the evidence on the potential choices that are available for different components of the elicitation process. This focuses on the following elements: selection of experts, level of elicitation, fitting and aggregation, and adjusting judgements. This chapter discusses the advantages and disadvantages of each available choice and identifies any potential constraints to their application in cost-effectiveness analyses.

Heuristics and biases are concerns that are predominant across all elements in SEE; therefore, SEE should be conducted in such a way that minimises these errors. Chapter 6 reviews the existing evidence on heuristics, biases and de-biasing techniques that are of most relevance to HCDM.

Chapter 7 discusses what quantities to elicit. This chapter provides a list of alternative quantities that can be elicited to inform certain types of parameters that are commonly used in health care. This is particularly relevant in cost-effectiveness analyses, as parameters are often complex constructs, such as relative treatment effects or time to events, which experts will not directly observe in practice. Chapter 7 compiles a list of alternative quantities that may be elicited to inform specific parameters.

Chapter 8 provides the experimental plan for experiments that were conducted as part of this research. The aim of these experiments was threefold, to: (1) evaluate alternative methods of elicitation and how they perform in representing parameter uncertainty; (2) explore individuals’ ability to extrapolate from their knowledge base; and (3) explore how individuals revise their answers when presented with group summaries. The results and interpretation of these experiments are then presented.

Chapter 9 discusses the methodological choices for each of the different components of SEE: design, conduct and analysis. Managing biases and validity assessment are then considered as overarching concerns for throughout the SEE process. In order to conclude on their suitability for HCDM, Chapter 9 first presents a set of principles that underpin the use of expert elicitation in HCDM. Available choices are considered in the light of these principles and any empirical evidence available to support the choices.

Chapters 2–9 are then used to generate a reference protocol for HCDM (see Chapter 10). This presents the choices that are supported by the principles for HCDM and/or empirical evidence in this domain. Given the paucity of empirical evidence relating to HCDM, it was necessary to define this for a specific type of HCDM, HTA. Considerations when using the reference protocol outside this context are also presented.

Chapter 11 describes the applied evaluation of the developed reference protocol. This uses an existing cost-effectiveness model, in which SEE was used to generate initial estimates of uncertain parameters. In addition to demonstrating the usefulness of the reference protocol in navigating the SEE process, the practicality of SEE is determined using narrative feedback form experts and by generating estimates of resources required to design and conduct the SEE.

The report closes with discussion and conclusions based on the findings of this research (see Chapter 12). The feedback from a dissemination workshop exploring the usefulness and challenges in using SEE in HCDM is reported. The limitations of the research and areas of further research are also discussed here.

Chapter 2 Good practice in structured expert elicitation: learning from the available guidance

Introduction

Over the last few decades, SEE has been used in areas such as natural hazards, environmental management, food safety, health care, security and counterterrorism, economic and geopolitical forecasting, and risk and reliability analysis. All of these areas require consequential decisions be taken in the face of significant uncertainty about future events or scientific knowledge.

How judgements are elicited is critical to the quality of the resulting judgements and, hence, the ultimate decisions and policies. Methods for SEE should be suitable for specific contexts and understood by content experts to be useful to decision-makers. Example applications and recommended practices do exist in certain fields, but the specifics vary.

In developing a reference protocol for SEE specific to the needs of HCDM, the methodological recommendations and choices that exist in other fields need to be understood. This chapter surveyed the existing best practices for SEE, as reflected in published elicitation guidance, to identify areas of consensus, places where no consensus exists and other gaps. Identifying areas of commonality across current guidance can support elicitation practice in areas that lack context-specific guidance, such as HCDM. The recommendations and choices for the SEE process identified in this chapter are further explored in Chapters 5–8 and their suitability for HCDM is considered in Chapter 9.

Methods

To identify areas of agreement and disagreement in elicitation practice, both domain-specific and generic elicitation guidelines were systematically reviewed according to the search strategy and screening process detailed in Report Supplementary Material 1. A SEE guideline is defined as a document, either peer reviewed or in the grey literature, that advises on the design, preparation, conduct and analysis of a structured elicitation exercise. The review focused on SEE guidelines rather than applications to determine a full list of the possible methodological options, rather than relying on the partial reporting available in applications.

To constrain the scope of this review, guidelines needed to concern explicitly probabilistic judgements and offer guidance on more than one stage of the elicitation process. Literature relating to only one element of elicitation is considered in the targeted searches discussed in Chapters 5 and 6. When the same or a similar author lists published multiple guidance documents making similar recommendations, only one version was included. An extraction template was used to collect information from each guideline. The extracted data were analysed to create an overview of all of the stages, elements and choices involved in an elicitation, and to understand where current advice across guidelines conflicts or agrees. When the guidelines agreed, we assumed that this represented best practice that could be be taken forward within the HCDM context, as applicable. When the guidelines disagreed, we sought additional evidence to support the development of a reference protocol for HCDM (see Chapters 3–8).

Included structured expert elicitation guidelines

The searches identified 16 unique SEE guidelines (see Report Supplementary Material 1, Table 2). Five of the guidelines are generic and aim to inform practice across disciplines, and 11 focus on specific domains. Six of the domain-specific guidelines are agency white papers or agency-sponsored peer-reviewed articles and are tailored to the specific decision-making processes the agencies govern. Agencies issuing guidelines include the European Food Safety Authority (EFSA), the US Environmental Protection Agency (EPA), the Institute and Faculty of Actuaries and the US Nuclear Regulatory Commission. Both the Institute and Faculty of Actuaries and the Nuclear Regulatory Commission have published two distinct guidelines. The 10 guidelines not connected to agencies are based on reviews of existing evidence and practice about elicitation methods (two guidelines), reflections on personal experience and practice (three guidelines), or combinations of review and reflection (five guidelines) (see Report Supplementary Material 1 for details).

Two of the agency SEE guidelines were included with caveats. First, the EFSA guideline covers three distinct elicitation methods, but the classical model and SHELF are presented in other guidelines, so only the portions of the EFSA document related to the EFSA Delphi method are included in this review. 16 Second, the EPA guideline is a white paper released for public review that was not intended to be the final agency report on the subject. 17 However, a final version was never released and, thus, the document is widely cited in elicitation literature and has served as a de facto guideline as nothing has superseded it.

Analysis of the elicitation process

Although the characterisation of the process, including the number and categorisation of steps, differed among the 16 guidelines, the underlying elicitation process described, depicted in Figure 3, was remarkably similar.

FIGURE 3.

The elicitation process. a, These steps are described as post elicitation in some guidelines.

At each step of the elicitation process, analysts are faced with a variety of methodological choices. Table 1 provides the full list of choices described in the 16 guidelines and Table 2 summarises the level of agreement in the recommendations and choices discussed for each element. The following sections discuss the variety of methodological recommendations for each stage made across the guidelines (see Report Supplementary Material 1, Tables 4–15, for further detail).

| Element | Component | Choice |

|---|---|---|

| Identifying elicitation variables | ||

| What quantities to elicit | Type of parameter |

|

| Type of quantity |

|

|

| Selection criteria |

|

|

| Principles for describing quantities |

|

|

| Decomposition/disaggregation |

|

|

| Handling dependence |

|

|

| Encoding judgements | General approach |

|

| Use of visual aids |

|

|

| Identifying and selecting experts | ||

| Number of experts | Number of experts |

|

| Selecting experts | Roles within SEE |

|

| Desired characteristics for those providing judgements |

|

|

| Identification procedure |

|

|

| Selection procedure |

|

|

| Possible selection criteria |

|

|

| Training and preparation | ||

| Pilot the protocol | Pilot exercise |

|

| Training and preparation for experts | What to cover in training |

|

| Conducting the elicitation | ||

| Mode of administration | Location |

|

| Level of elicitation | Level of elicitation |

|

| Feedback and revision | Type of feedback |

|

| What to feed back |

|

|

| Opportunity for revision |

|

|

| Interaction | Opportunity for interaction |

|

| Rationales | Rationales |

|

| Aggregation | Aggregation |

|

| Aggregation approach |

|

|

| Fit to distribution | Fit |

|

| Distribution |

|

|

| Fitting method |

|

|

| Post elicitation | ||

| Feedback on process | Feedback from experts on process |

|

| Adjusting judgements | Methods for adjusting judgements |

|

| Documentation | What to include |

|

| Managing heuristics and biases | ||

| Managing heuristics and biases | Biases relevant for SEE |

|

| Bias elimination or reduction strategies |

|

|

| Considering the validity of the process and results | ||

| Validation | Characteristics of validity and supporting actions |

|

| Element | Component | Agreement level | Explanation |

|---|---|---|---|

| Identifying elicitation variables | |||

| What quantities to elicit | Type of parameter | Some disagreement | Guidelines agree that observable quantities are preferred, but disagree on whether or not directly eliciting model parameters is an acceptable choice |

| Type of quantity | Disagreement | Guidelines offer conflicting recommendations on whether or not eliciting probabilities (compared with other uncertain quantities) is an acceptable choice | |

| Selection criteria | Some agreement | Fewer than five guidelines discuss this, but they agree selection criteria should be defined | |

| Principles for describing quantities | Some agreement | Some guidelines describe slightly different principles (e.g. asking clear questions, ensuring that uncertainty on elicited parameters affects the final decision or model), but they do not conflict | |

| Decomposition | Agreement | The guidelines that discuss decomposing the variables of interest all agree it should be a choice | |

| Handling dependence | Some agreement | The guidelines that discuss dependence agree it should be avoided if possible or addressed separately, but they discuss a range of methods for considering dependence | |

| Encoding judgements | General approach | Disagreement | Guidelines recommend and discuss different conflicting methods for encoding judgements |

| Use of visual aids | Some agreement | Fewer than five guidelines discuss this, but they agree visual aids can be a useful choice | |

| Identifying and selecting experts | |||

| Number of experts | Number of experts | Agreement | The experts agree that multiple experts are important, with most guidelines recommending around 5–10 experts |

| Selecting experts | Roles within SEE | Agreement | The guidelines are very consistent in their description of the roles involved with elicitation |

| Desired characteristics for those provide judgements | Some agreement | Characteristics discussed in the guidelines are largely consistent, aside from differing views on if normative expertise is a requirement or just desired | |

| Identification procedure | Some agreement | Recommendations differ but do not conflict across the guidelines. Agency guidelines tend to offer more detail | |

| Selection procedure | Some agreement | Recommendations differ but do not conflict across the guidelines. Agency guidelines tend to offer more detail | |

| Possible selection criteria | Some agreement | Recommendations differ but do not conflict across the guidelines | |

| Training and preparation | |||

| Pilot the protocol | Pilot exercise | Agreement | Almost all guidelines recommend conducting a pilot exercise |

| Training and preparation for experts | What to cover in training | Some agreement | The lists of what should be included in training vary across guidelines, but do not conflict |

| Conducting the elicitation | |||

| Mode of administration | Location | Some agreement | Most guidelines agree that face-to-face administration is preferred, although remote options may be pragmatically useful alternatives in some situations |

| Level of elicitation | Level of elicitation | Disagreement | Guidelines recommend and discuss conflicting levels of elicitation |

| Feedback and revision | Type of feedback | Some agreement | Recommendations differ but do not conflict across the guidelines |

| What to feed back | Some agreement | Recommendations differ but do not conflict across the guidelines | |

| Opportunity for revision | Some agreement | Guidelines recommend revision takes place either following an elicitation (as part of an iterative process or immediately following the elicitation) or further in the future, following a draft report or additional data collection | |

| Interaction | Opportunity for interaction | Disagreement | Guidelines offer conflicting recommendations about when and how to facilitate interaction between the experts |

| Rationales | Rationales | Agreement | Almost all guidelines recommend collecting expert rationales in some form |

| Post elicitation | |||

| Aggregation | Aggregation | Agreement | All guidelines discuss aggregation as a recommendation or valid choice |

| Aggregation approach | Disagreement | Guidelines offer conflicting recommendations on the approach and method to aggregate judgements | |

| Fit to distribution | Fit | Some disagreement | The guidelines make few recommendations, but their choices differ |

| Distribution | Some agreement | Fewer than five guidelines discuss this, but they generally agree that many parametric distributions could be chosen | |

| Fitting method | Some agreement | Fewer than five guidelines discuss this, but they generally agree that choices include minimum least squares and method of moments | |

| Feedback on process | Feedback from experts on process | Some agreement | Fewer than five guidelines discuss this, and they recommend complementary approaches |

| Adjusting judgements | Methods for adjusting judgements | Some disagreement | Fewer than five guidelines discuss this, but they offer different perspectives |

| Documentation | What to include | Some agreement | The lists of what should be included in final documentation vary across guidelines but do not conflict |

| Managing heuristics and biases | |||

| Managing heuristics and biases | Biases relevant for SEE | Some agreement | The lists of potential biases vary across guidelines but do not conflict |

| Bias elimination or reduction strategies | Some agreement | The list of possible strategies vary across guidelines but do not conflict | |

| Considering the validity of the process and results | |||

| Validation | Characteristics/measures | Disagreement | The guidelines differ in their definitions of validity and discussion of how the concept can be operationalised in an elicitation |

Identifying elicitation variables

What quantities to elicit

Structured expert elicitation is often undertaken in areas with many relevant uncertainties and a decision has to be made about what will be elicited. Only one18 of the 16 guidelines does not provide advice on selecting what quantities to elicit. Recommendations and choices from the other guidelines are summarised in Report Supplementary Material 1, Table 3.

Five guidelines recommend that elicited variables should be limited to quantities that are, at least in principle, observable. 16,20–23 This includes probabilities that can be conceptualised as frequencies of an event in a sample of data (even if such data may in practice not be directly available to the expert). However, three guidelines20,24,25 argue that elicited quantities can be ‘unobservable’ model parameters, such as odds ratios, provided that they are well defined and understood by the participating experts.

Parameters are here described as ‘unobservable’ if they are complex functions of observable data, such as odds ratios. The guidelines list many types of quantities or parameters that can be elicited, including physical quantities, proportions, frequencies, probabilities and odds ratios. These guidelines give few recommendations; however, aside from Cooke and Goossens,21 they recommend that experts should not be asked about uncertainty regarding probabilities, but that questions should be reframed as uncertainty about frequencies in a large population. Choy et al. 22 also recommend against eliciting probabilities directly, but two other guidelines25,26 list it as a possible choice. Chapter 7 further considers the possible types of quantities relevant for HCDM.

Three of the guidelines16,24,27 recommend formal processes for selecting what to elicit, and several guidelines16,17,21–24,27–31 describe principles the elicited quantities should adhere to. Principles discussed include that questions should be clear and well defined, have neutral wording, be asked in a manner consistent with how experts express their knowledge, and be elicited only when the uncertainty affects the final model and/or decision.

Some SEE guidelines describe two issues related to the quantities to elicit: disaggregation and dependence. Five guidelines16,17,23,25,26 suggest that disaggregating or decomposing a variable makes the questions clearer and the elicitation easier for experts. Five guidelines20,21,23,28,30 also discuss the importance of considering dependence between variables. When dependence is discussed, guidelines recommend reframing dependent items in terms of independent variables wherever possible. If dependence cannot be avoided, the elicitation task will be more complicated, but they recommend assessing conditional scenarios or using other elicitation framing and related techniques to estimate dependence.

Encoding judgements

In addition to choosing what questions to put to experts in an elicitation, analysts must also choose how questions will be put to experts. That is, how will experts be asked to assess their uncertainty about the unknown quantities?

Three guidelines24,26,28 – all agency documents – either do not discuss methods for encoding judgements at all26,28 or do not offer advice (i.e. neither recommendations nor a list of choices) on the matter. 24 Report Supplementary Material 1, Table 4, summarises the recommendations and choices described by the other 13 guidelines.

Most approaches can be classified as either fixed interval or variable interval. Fixed interval techniques (discussed in six16,17,20,22,25,30 of the 16 guidelines) present experts with a specific set of ranges, and the experts provide the probability the quantify falls within that range. A popular fixed interval technique is the roulette or ‘chips and bins’ method, in which experts construct histograms that represent their beliefs. In contrast, VIMs (recommended by five guidelines16,21,23,27,31 and discussed in another five17,20,22,25,30) give the experts set probabilities and ask for the corresponding values. Popular VIMs include the bisection and other quantile techniques. These methods are described further in Chapter 8.

Two guidelines recommend methods that cannot be classified as either fixed interval or variable interval. The Investigate, Discuss, Estimate, Aggregate (IDEA) protocol utilises a combination approach, asking experts to provide a minimum, maximum and best guess for each quantity, as well as a ‘degree of belief’ that reflects the probability that the true value falls between the minimum and the maximum. Experts may all provide assessments for different credible ranges, and the analyst standardises them to an 80% or 90% credible interval (CrI) using linear extrapolation. 32

Kaplan’s method takes a very different approach. 18 Rather than asking experts to encode their beliefs in a way that can be transformed or interpreted as a probability distribution, the method requires that experts only discuss evidence related to the quantity of interest before a facilitator creates a probability distribution that reflects the existing evidence and uncertainty.

In addition to the core encoding method, three guidelines17,20,29 also discuss that physical or visual aids can be used by the elicitor(s) to assist with the encoding process.

Despite the variety of encoding methods discussed, none of the guidelines present empirical or anecdotal evidence or other justification for their recommendations or choices. Chapter 8 provides new evidence relating to the choice of encoding method.

Identifying and selecting experts

Recommendations and choices related to identifying and selecting experts are summarised in Report Supplementary Material 1, Tables 5 and 6. Only one guideline28 does not discuss the number of experts to include in an elicitation. The others either explicitly recommend or imply that judgements will be elicited from multiple experts. The range of how many experts should be included spans from four experts21 to 20 experts. 32 The EPA white paper17 is the only guideline that gives considerations beyond practical concerns for how many experts to include in an exercise. It observes that, if opinions vary widely among experts, more experts may be needed. On the other hand, if the experts in a field are highly dependent (e.g. based on similar training or experiences), adding more experts has limited value. The risk of dependence between experts is discussed in only three other guidelines. 20,23,26

Most guidelines do not address how many facilitators or analysts should be involved in an elicitation. The few that do so state that two or three facilitators is ideal, with the facilitators having different backgrounds or managing different tasks during the elicitation. 17,21,24,27,30

Identifying and selecting experts is discussed in all but three guidelines. 18,22,23 Recommendations from the other 13 guidelines overlap considerably. Common criteria relate to reputation in the field, relevant experience, the number and quality of publications, and the expert’s willingness and availability to participate. Normative expertise is listed as desired by five guidelines,16,24–26,30 but three16,24,30 specify that it is not a requirement.

Five guidelines17,20,26,28,30 recommend that all potential experts disclose a list of their personal and financial interests, often noting that interests should be recorded but will not automatically disqualify an expert from participating, as that may impose too extreme a limit on the pool of possible experts. Eight guidelines recommend that the group of experts included in an elicitation reflects the diversity of opinions and range of fields relevant to the elicitation topic. The agency guidelines tend to provide more details on identifying and selecting experts, with four describing optional procedures producing a longlist of possible experts that is then winnowed down based on agreed on selection criteria. Although many guidelines suggest identifying experts through peer nomination, Meyer and Booker25 caution that this process can, if not well managed, lead to issues related to experts nominating only other people with similar views. Chapter 5 considers the broader literature on selecting and identifying experts.

Training and preparation

Recommendations and choices related to identifying and selecting experts are summarised in Report Supplementary Material 1, Table 7. Eight guidelines16,17,20–22,25,26,29 either explicitly recommend piloting the elicitation protocol with a subject matter expert not participating in the exercise or imply32 that piloting will be done. The remaining seven guidelines do not discuss piloting. 18,23,24,27,28,30,31

Only one guideline18 offers training as a choice; the other 15 guidelines all require at least some form of training. Recommendations and suggestions for what should be included in expert training are largely consistent across the guidelines and cover issues related to elicitation generally and the subject matter at hand specifically. Commonly recommended aspects of training include an introduction to probability and uncertainty, an overview of the elicitation process, an introduction to heuristics and biases, the aim and motivation for the elicitation, information on how elicitation will be used, relevant background information, and details of any assumptions or definitions used in the elicitation. Five guidelines25–27,29,30 recommend using practice questions to ensure that experts understand the elicitation process.

Most guidelines do not discuss what, if any, training should be provided to the elicitation facilitator(s) or other roles involved in conduction an elicitation. Five guidelines, including four generic guidelines, provide material that is meant to assist the facilitator, including sample text and forms. 16,21,25,30,32

Conducting the elicitation

Mode and level of elicitation

Recommendations and choices about the mode of administration and the level of elicitation (group or individual) are summarised in Report Supplementary Material 1, Table 8.

Elicitations can be conducted in person, in either individual interviews or group workshops, or remotely via the internet, e-mail, mail, telephone, video conferencing or other means. Nine guidelines17,18,21,23,24,26,27,29,30 recommend in-person elicitation and only one guideline16 recommends remote elicitation. Eight guidelines17,22,23,25,28,29,31,32 list remote elicitation as a choice, recognising that it may be logistically easier to arrange than an in-person elicitation.

The mode of administration may be governed by whether or not a method elicits judgements from individual experts (i.e. each expert provides an individual assessment) or groups (i.e. a group of experts provides a single assessment). Of the 16 guidelines, only that by Choy et al. 22 does not discuss the level of elicitation. Group-level elicitation is only recommended by Kaplan,18 who recommends a process in which experts discuss the evidence relevant to an elicitation variable and then the facilitator proposes a probability distribution that matches the input provided by all of the experts. Individual-level elicitation is recommended by five guidelines,16,21,26,27,32 and two guidelines24,30 recommend a combination approach wherein individual assessments are elicited first followed by the group works to provide a communal assessment that reflects the diversity of opinion in the group. Chapter 5 provides more detail on individual-level compared with group-level elicitation.

Feedback and revision

All but one guideline25 discusses the importance of feedback and revision, but three guidelines20,28,29 do not provide information on how it should be done. The other guidelines discuss a range of possible feedback methods, which can provide information on an individual’s judgements, the aggregated group judgements or a summary of what the other experts provided. Recommendations and choices about the mode of administration and the level of elicitation are summarised in Report Supplementary Material 1, Table 9.

Only the guideline by Knol et al. 29 warns of a possible negative impact of feedback and revision, cautioning that it can cause unwanted regression to the mean in the experts’ revised assessments. None of the guidelines recommends against providing feedback and opportunities for revision in any form. The feedback of group summary judgements is investigated in Chapter 8.

Interaction

Recommendations and choices regarding interaction and rationales are summarised in Report Supplementary Material 1, Table 10. Three guidelines did not explicitly discuss interaction between the experts. 21,22,31 Although no guidelines recommended avoiding interaction, seven guidelines,17,20,23,25,27–29 say that no interaction is a possible choice. Interaction is closely related to level of elicitation, with guidelines recommending group discussion prior to individual elicitation, group discussion prior and during a group elicitation, and group discussion following an individual elicitation. One guideline16 recommended that interaction should be limited to a remote, anonymous, facilitated process. Other guidelines also described these options as choices. 17,20,25,32

Although the guidelines disagreed about if and how interaction should be managed in an elicitation, many do present more justification for the recommendations or choices around interaction than they do for other methodological choices. The benefits of interaction between experts is that it minimises the differences in assessments that are due to different information or interpretation29 and allows analysts to explore correlation between experts. 23 The drawbacks, however, are that it can allow strong personalities to carry too much weight,20,23,29 the experts may feel pressure to reach a consensus,20 there may be risk of confrontation23 and interaction can encourage groupthink, resulting in the experts being overconfident. 28 Practical considerations can also guide the choice of if and how to include interaction, as individual interviews may take more time, but a group workshop may be more expensive. 29 These issues are further discussed in Chapter 5.

Rationales

Only one guideline25 presented collecting the experts’ rationales during an elicitation as a choice rather than a recommendation. The other 15 guidelines all recommend collecting rationales because they help analysts and decision-makers understand what an answer is based on,20,23,28 provide a check of the internal consistency of an expert’s responses,20 record any assumptions27 and may help limit biases. 22 The information collected in rationales can also be useful for peer review or for future updating of the judgements. 28

One guideline31 also recommended collecting rationales from the decision-maker about how they use the expert judgement results.

Post elicitation

Aggregation

Even when eliciting judgements from multiple experts, it can be important to have a single distribution that reflects the beliefs of the experts that can be used in modelling. Recommendations and choices on aggregation methods are summarised in Report Supplementary Material 1, Table 11. Five guidelines17,22,26,28,29 presented aggregation as a choice, but the remaining 11 recommended aggregation always be done. 16,18,20,21,23–25,27,30–32

Aggregation can be behavioural or mathematical. In behavioural aggregation, experts interact with the goal of producing a single, consensus distribution. Mathematical aggregation involves the facilitator(s) eliciting individual assessments from the experts and then combining them into a single distribution through a mathematical process. Two guidelines recommend behavioural aggregation. Kaplan18 recommends a process that includes group-level elicitation and behavioural aggregation: the experts discuss the evidence relevant to an elicitation variable, the facilitator suggests a probability distribution that reflects the diversity of evidence on the subject and then the process concludes when there is consensus from the experts about the proposed distribution. The SHELF method recommends an initial round of individual-level elicitations followed by expert discussion designed to produce a single distribution that represents how a ‘rational independent observer’ would summarise the range of expert opinions. 30

Four16,21,26,32 of the guidelines recommended variations on mathematical aggregation. Three guidelines16,26,32 recommended combining expert judgements in a linear opinion pool that equally weights all of the experts. The guidelines by Cooke and Goossens21 is the only one to recommend mathematical aggregation with differential weights for the experts. Cooke and Goossens21 suggested a method whereby the experts are scored and weighted according to their performance in assessing a set of seed questions, which are items that are unknown to the expert but known to the facilitator.

Budnitz et al. 24 recommend a unique approach wherein the analysts determine the aggregation method during an elicitation, based on an evaluation of how the process is unfolding and determining what is most appropriate. They recommend that a behavioural aggregation-based consensus is the best choice, but believe it is not appropriate in all situations. The analysts can also decide to use mathematical aggregation with equal weights or analyst-determined weights or a process similar to that recommended by Kaplan,18 in which the analysts supply a distribution that they believe captures the discussion and evidence presented by the experts.

Like interaction, several of the guidelines give more background to help guide an analyst in his or her choice of method. The main drawback of aggregation, according to Tredger et al. ,28 is that it can lead to a result that no one believes. Two guidelines20,24 warn that the expert selection is of increased importance if an elicitation will use mathematical aggregation with an opinion pool, particularly equal weights, as increasing the number of experts with similar beliefs will result in those beliefs having more influence in the final, aggregated distribution. Garthwaite et al. 20 also suggest that opinion pools may be problematic as the result does not represent any one person or group’s opinion, but Bayesian weighting requires a lot of information on the decision-maker’s views of the experts’ opinions. Finally, several guidelines16,20,23,25,26,28,30,31 discuss that the possible issues around behavioural aggregation are linked to the challenge of properly managing group interactions, the topic discussed next. The broader literature on aggregation is discussed in Chapter 5.

Fit to distribution

Recommendations and choices on fitting to distribution are summarised in Report Supplementary Material 1, Table 12. Analysts can fit the elicited data to a probability distribution either as part of the elicitation or during post-elicitation analysis of the data. Possible choices, discussed in about half of the guidelines, include fitting to a parametric distribution, using non-parametric approaches or just using the information directly elicited from the experts.

None of the guidelines recommended specific distributions to be used in fitting, but they say that the analysts should choose based on the nature of the elicited quantity and the information provided by the experts. Cooke and Goossens21 describe probabilistic inversion, a method that can be done if the observable elicited variable needs to be transformed into a distribution on an unobservable model parameter. Chapter 5 explores issues of fitting judgements to distributions in more detail.

Other post-elicitation components

Recommendations and choices related to the other post-elicitation components are summarised in Report Supplementary Material 1, Table 13. Only two guidelines discussed obtaining feedback from the experts on the elicitation process. Walls and Quigley23 recommended that analysts ask experts what could have been done differently if new data are later collected that differ from the experts’ judgements. The EFSA Delphi16 recommended that analysts give experts a questionnaire with the opportunity to provide general comments on the elicitation questions and process.

None of the guidelines recommended that analysts should adjust experts’ assessments, but five describe related choices, such as manually adjusting assessments,16,20 dropping an expert from the panel23,24 or adjusting assessments to be more accurate, which is recommended against by two guidelines. 20,25

Documenting the elicitation process and results is the only elicitation element discussed by all 16 guidelines. Although the specific recommendations regarding what to include in the final documentation varies across the guidelines, they do not conflict. The guidelines typically recommend that documentation includes the elicitation questions, experts’ individual (if elicited) and aggregated responses, experts’ rationales and a detailed description of the procedures and design of the elicitation, including the reasoning behind any methodological decision. Many of the agency guidelines are more prescriptive about what documentation should entail, and some provide detailed templates. 16,17,31

Managing heuristics and biases

Expert judgements are affected by a variety of heuristics and biases. 33,34 Morgan35 argues that these biases cannot be completely eliminated, but that the elicitation process is designed to minimise their influence on the results. The 16 reviewed guidelines discussed 11 different cognitive biases and eight motivational biases that can affect an elicitation. A list of the biases discussed and possible actions to minimise them can be found in Report Supplementary Material 1, Table 14.

Most of the bias-reducing actions mentioned by SEE guidelines are discussed in only one or two guidelines, but the actions do not conflict with one another. The most frequently recommended actions are to frame questions in a way that minimises biases (discussed in five guidelines16,22,23,28,32) and to ask for the upper and lower bound first, to avoid anchoring (discussed in three guidelines26,30,32). Although most guidelines offer some recommendations for mitigating and managing biases, they present little to no empirical evidence to support that their recommended actions have the intended effect. The broader literature on heuristics and biases is reviewed in Chapter 6.

Considering the validity of the process and results

Four guidelines16,18,25,30 do not discuss how to ensure the validity of elicited results and the other 12 guidelines present a range of perspectives on what is meant by validity, summarised in Report Supplementary Material 1, Table 15. Validity can mean that the exercise captured what the experts believe (even if that is later proven false). 20 It can also refer to whether the expressed quantities correspond to reality,20,21,23,32 are consistent with the laws of probability20,23 or are internally consistent. 26,29 Some guidelines – all agency documents – also view validity as mostly concerned with the process, rather than the results, and suggest that an elicitation is valid if it has been subjected to peer review. 17,24,31 Recommendations and choices for handling validity differ across the guidelines and can involve actions at any stage of the elicitation process, depending on what definition of validity the guideline seeks to achieve.

Conclusions

The SEE guideline review reveals a developing body of work designed to guide elicitation practice. Although the guidelines evolved separately in different fields, they largely agree on issues around what quantities to elicit, expert selection, the importance of piloting the exercise and training experts, face-to-face elicitation being preferable to remote modes, the importance of collecting rationales from the experts alongside the quantitative assessments, fitting assessments to distributions, the key role documentation plays in supporting and communicating an elicitation exercise, and how to manage heuristics and biases. The guidelines recommend different approaches for encoding judgements, using individual- or group-level elicitation, aggregating judgements and managing interaction between the experts. Although the guidelines agree that validation is important, they disagree on what actions an analyst can take to encourage or demonstrate validity. Finally, some areas seem underdiscussed. Dependence between questions, for example, is a complicated issue that could be critically important when interpreting elicitation results, but little guidance exists on the topic.

The elicitation choices identified in this review are further considered in Chapters 5–8, and their suitability for use in the HCDM context is evaluated in Chapter 9.

Chapter 3 Expert elicitation in different decision-making contexts

Introduction

Challenges in the conduct of SEE in HCDM are discussed in Chapter 4. The challenges that were identified in the applied examples were largely practical and related to the design of the SEE for that particular task. There are, however, much broader challenges and opportunities that relate to the decision-making context in which SEE is applied. These issues are discussed in this chapter.

The specificities of the context in which expert elicitation is conducted should be distinct from the principles and methods employed. That is, best practice should always be regarded as an appropriate starting point, regardless of the context. Doing so, however, may ignore many important factors that influence the choice of method employed for an elicitation. A reference protocol that does not at least consider context-specific constraints is unlikely to be widely used or may be restricted to a subset of decision-makers only, such as those operating at a national level.

When considering how a reference protocol for expert elicitation in HCDM might be utilised in practice, it is important to understand how different decision-making contexts may influence the requirements for and practicalities of expert elicitation. In particular, there may be practical constraints in certain contexts that imply the use of a second-best methodology. Some of these issues are explored in the evaluation (see Chapter 11); however, this chapter considers the range of decision-making contexts more generally, and highlights the potential constraints and the implications for SEE methodology. Given the lack of experience with SEE in formal decision-making processes, a formal review of the challenges and constraints faced by different HCDM’s is unlikely to be informative. Instead, this chapter is intended as a discussion, rather than a formal review. It draws on observations and experiences of the project team and the wider advisory group.

Levels of decision-making

In England, reimbursement decision-making bodies can be described at three levels, implying the population they serve and the jurisdiction for their decision-making activities. 36 These are:

-

individual practitioners [such as general practitioners (GPs)], secondary care clinicians and local decision-makers [such as Clinical Commissioning Groups (CCGs)], local authorities (LAs) and hospital trusts

-

national decision-makers [such as NICE, the Department of Health and Social Care (DHSC), NHS England and PHE]

-

research commissioners, including organisations such as the National Institute for Health Research (NIHR) and the Medical Research Council (MRC), and also industry sponsors of research.

Reimbursement bodies range from local practitioners and commissioners to national decision-makers (level 2). Here, individual and local decision-makers (level 1) are grouped together, as many of the constraints are relevant in both contexts. In addition, there are multiple organisations that commission research (level 3), potentially including SEE; these organisations can also be regarded as decision-makers.

Individual practitioners and local ‘population-level’ decision-makers

Features

There are a number of decisions that are made on an individual practitioner–patient level in the NHS and other health-care systems. These usually concern a patient’s course of treatment and the most effective, and sometimes cost-effective, choice given the particular circumstances. Such decisions are made in both a primary care setting, usually involving a GP, and a secondary care setting, usually involving a consultant or other medical specialist. Such decision-makers may also make choices for groups of patients, for example in deciding which device to purchase within a hospital or in organising surgical lists.

Health-care decision-making occurs at a population level in several forms. In England, within primary care, a CCG (see below) supports decision-making between GPs and their patients through local guidance, such as the referral support system (see Kershaw37 for an example). This may also extend to services offered within secondary care, for example referrals for further testing or investigations. For both primary care and secondary care there may be relevant guidance produced by NICE to support decision-making. Individual practitioners and secondary care clinicians are also influenced by their professional bodies and councils. As well as commissioning for primary care, NHS England also produces key strategic guidance for CCGs to support them to fulfil their duties to their respective populations. Therefore, although individual health-care professionals in the NHS make decisions about individual patients, this is very much governed by the organisations that are intended to harmonise provision of services across England, and encourage best and most cost-effective provision of services.

The NHS also works closely with social services, and individual practitioners in this respect include social workers, residential care homes and carers. Within public health, services are delivered across the NHS, social care and LAs, and many are supported by the work of PHE. Public health practitioners, including nutritionists, smoking cessation co-ordinators and teenage pregnancy co-ordinators, are again employed by the NHS, PHE, NHS England and individual LAs and CCGs, and as such work within their codes of practice and adhere to appropriate guidance regarding provision of services. LA public health is also accountable to PHE. 36

Constraints

The particular constraints in these context relate to the degree of autonomy that individual practitioners and CCGs have in making decisions regarding individual patients or groups of patients within their jurisdiction. Since the abolition of GP fund holding (in 1997/98)38 and subsequent changes to commissioning before the 2014 Care Act,39 individual practitioners are more constrained with regards to patient-level decision-making.

In both CCGs and LAs there are significant budget constraints. Although the average CCG’s budget grew by 3.4% in 2016/17,40 there are a number of new pressures on CCGs that require them to cut back or reorganise local services. These include cutbacks to public health and social care funding. Post the first 2 years of the move of public health to LAs, there are services which LAs may be forced to reduce investment in, some of which have implications for public health. 41

Clinical Commissioning Groups and LAs are also constrained by budget cycles, which are typically 1–3 years. There may be an incentive to replace activities that cannot prove ‘value’ within these time frames with those that have a higher immediate payoff, for example less investment in prevention.

Both CCGs and LAs face multiple competing demands for money and resources. There are big differences across regions with regards to commissioning of and participation in research. Some CCGs and LAs work with health economists and are therefore directly involved in commissioning, participating in or understanding the results of cost-effectiveness evidence and the implications for their population. Others do not have access to such resources.

Implications for expert elicitation in this context

We are not aware of any examples in which formal SEE has been used to support decision-making of the system, although, of course, judgement is used routinely in the clinical and management settings every day. (This does not preclude that such elicitations have not been done of course, but, if they have, to the best of our knowledge they have not been documented.) Indeed, it might initially seem practically unfeasible to use SEE to support decision-making at the individual practitioner level, particularly given that many decisions are made on a national level with implementation at a local level. However, there are still a number of decisions that can be made by individual practitioners or groups of practitioners and local commissioners, many of whom may rely on assumptions and opinion rather than experimental data. For example, when considering individual cases and episodes of care, such as for procedures and services not routinely funded by the NHS, an individual funding request panel will consider specific cases for reimbursement (e.g. cosmetic services). 42 Those conducting SEE to inform other decision-making processes may also reply on individual practitioners to act as experts. In some circumstances the SEE may be required to consider parameters for a specific patient, rather than at a population level (e.g. in the individual funding request process). This can have implications for how a SEE is designed, specifically elicitation of uncertainty and communication with experts about how to express their uncertainty.

Structured expert elicitation undertaken in this context must also adapt to the practical constraints; in particular, it may not be possible to invest significant amounts of time and resources into SEE, and the availability of experts to inform often practice-level decision-making may be limited. Such experts are unlikely to possess any normative skills or have any experience with SEE. Group-based SEE may be a challenge in this context, as may individual SEE, which requires face-to-face interaction. It may be necessary to trade off recruiting large numbers of experts for face-to-face SEE with obtaining larger numbers through remote SEE.

National decision-makers

Features

In England, the DHSC governs health and social care matters and has responsibility for some elements that are not covered separately by the Scottish, Welsh or Northern Irish governments. 36 The DHSC itself takes responsibility for a number of services and activities provided by the NHS, and is also supported by a number of agencies and public bodies. The DHSC provides a mandate to NHS England to help guide its decisions regarding the allocation of resources, commissioning specialist services and its strategic direction. NHS England oversees commissioning and is aided by four regional offices. It has responsibility for commissioning contracts for GPs, pharmacists and dentists, and supporting CCGs in their commissioning roles.

There are a number of special health authorities and other bodies that are either part of the NHS or are closely associated with it. They include NICE and the Prescription Pricing Authority. These organisations are either accountable to the Secretary of State or have formal agreements with the DHSC. In general, they provide national services. NICE was set up in 1999 as a special health authority. 43 Officially, NICE has jurisdiction only in England and is supported in considering its guidance in Scotland and Wales by the Scottish Medicine Consortium and the All Wales Medicines Strategy Group, respectively. NICE provides guidance on a range of health-care products and services, including pharmaceuticals, diagnostics, medical devices and public health interventions. In compiling evidence to generate this guidance, it often relies on the use of an expert opinion in some form. A review of practices relating to the use of evidence elicited from experts across NICE guidance-making programmes was recently published. 44 The review concluded that ‘NICE uses expert judgement across all its guidance-making programmes, but its uses vary considerably’. 44 In addition, it agreed that ‘there is no currently available tool for expert elicitation suitable for use by NICE’. 44

Working alongside NICE on public health issues is PHE, which was formed in 2013 and took over the role of a number of other health bodies, including the Health Protection Agency. 45 PHE generates and interprets evidence; therefore, there is potential for it to utilise SEE. Like the Public Health Programme at NICE, the evidence base it considers is more likely to be low quality and/or sparse and, therefore, the opportunities for SEE may be significant.

Constraints

The likes of the DHSC, NICE and PHE are required to make decisions about reimbursement, best practice and access across the whole of their population. Therefore, decisions have to be relevant across different, perhaps heterogeneous, populations.

The separation of research commissioning and reimbursement can also generate complexities. Decisions may be reached on the basis that further data collection may be required; however, some national decision-makers do not commission their own research and therefore cannot ensure that data collection takes place and/or addresses the uncertainties identified.

As with more regional decision-making, national decision-making is also subject to the constraints of time and resources. Although not necessarily as constrained as local commissioning cycles dictate, national decision-makers do still have to generate guidance within acceptable timescales. The process of generating guidance through the NICE single technology appraisal process45 can take around 6 months, including committee meetings. Despite the fairly rapid timescales, formal decision-making processes, particularly those which imply mandatory implementation of guidance, such as the NICE technology appraisals process, require full accountability for the decisions reached. The need to make decisions in a timely manner therefore cannot compromise the quality of the deliberations used to make these decisions, including any evidence generation that contributes towards this.

Implications for expert elicitation in this context

Historically, SEE has been commissioned to support policy challenges. For example, policy on surgical equipment sterilisation to protect against the risk of new variant Creutzfeldt–Jakob disease prion transfer has been informed by SEE in the wake of the bovine spongiform encephalopathy crisis in the UK. 46 More recently, the European Commission commissioned SEE studies of the future antibiotic resistance rates in four European countries to inform policy, and the UK DHSC is currently commissioning additional UK-focused work in this area. 47 Across national decision-makers, the quality of evidence to inform decisions is quite heterogeneous. This can be at various stages of maturity and in some areas, for example public health, evidence may not be particularly robust. SEE could be useful to help inform decisions in these situations, although it is likely that some of the parameters required may also be difficult for experts to make judgements about, for example population uptake of a screening programme.

Indeed, many examples of SEE conducted in the area of HCDM have been undertaken to inform national decision-making organisations, such as NICE (see Chapter 4). As a result, there is a degree of familiarity with the approaches used and an acceptance of its limitations. NICE only makes brief reference to the use of expert opinion to generate evidence in its guide to the methods of technology appraisal. 45 NICE do not suggest a preferred methodology for this and they have not used any consistent criteria to judge SEE submitted as part of any appraisal process.

It is true that decision-makers have differing capacities to undertake SEE, specifically in reference to resourcing of SEE. Evidence generation does not constitute a significant proportion of the remit for some decision-makers. Therefore, similar to the use of SEE in local decision-making, SEE undertaken in this context must adapt to the practical constraints. Timescales for evaluation are often tight and there are implications for any delay in approving a technology or service. Although SEE takes significantly less time than many other forms of empirical evidence to collect, if conducted appropriately the time resource can still be unachievable in some instances. Political cycles can generate promises around improving efficiency and accesses to NHS services. Tight turnaround for evidence to support these promises can negate the ability to undertake SEE, and in this instance less formal approaches to filling data gaps may be employed.

In terms of specifics, as discussed above, decisions may have to be relevant for potentially heterogeneous populations. Eliciting uncertainty around a measure of central tendency across a heterogeneous population can be a challenge for experts. Rather than eliciting across the entire population, it may be advantageous to express quantities for multiple patient types, which will increase the size of the SEE task.

Research commissioners

Features

In addition to those discussed above, there are also other decision-makers not concerned with reimbursement, such as HTA, the NIHR (more generally) and the MRC. These bodies commission research and use expert opinion in cost-effectiveness analyses and, therefore, any guidance on appropriate design and conduct of SEE would have implications for their practices. Industry can also commission research as part of the licence and reimbursement processes.

Such decision-makers typically do not fund interventions per se but instead commission effectiveness and cost-effectiveness research across their areas of interest. Many of these could potentially use SEE to help inform their decisions regarding which research to fund and the specific form that this research might take. One example is the use of SEE in determining sample size calculations for clinical studies. 48 Here, the SHELF14 has been used to generate prior beliefs to aid clinical study design, specifically on the probability of success (assurance parameter).

Constraints

The scale of the commissioning of research varies across funders and within their programmes of work. Some funders are constrained to commission research with a specific area, for example clinical specialty, whereas others, such as the NIHR and the MRC, commission across a range of topic areas. SEE used outside the context of a decision-making (reimbursement) process may not be subject to the same constraints in terms of time or resources; however, Chapter 4 does not identify any applied examples where SEE has been the sole purpose of the research, instead SEE is likely to account for only a small proportion of the research funding.

Implications for expert elicitation in this context

As the rationale for the research is to reduce uncertainty and because research priorities are inevitably contentious, there seems to be a very strong case for using SEE in this context to focus research. For example, Dallow et al. 48 discuss several examples of the use of expert elicitation at GlaxoSmithKline (Brentford, UK) to inform trial design and the management of the company’s research portfolio. In a similar vein, Walley et al. 49 describe a case study of Pfizer (New York, NY, USA) in which elicitation was used. Given that research commissioners tend to focus on particular specialties, for example clinical areas, it may also be possible to generate a level of expertise to undertake SEE, in terms of both the analyst and the experts. When it has been used in the clinical trial setting to inform sample size calculations, an expert panel has been established to speed up the generation of experts priors.

The lack of consistency between research commissioners presents a challenge for the application of SEE in this context. Not all commission cost-effectiveness studies and there is diversity in topics, which may have implications for the way in which SEE is conducted. Public health and complex interventions, for example vaccination programmes or other non-pharmacological interventions (e.g. as service changes), may imply different methods for SEE compared with medicines (see Chapter 10).

Conclusions

Structured expert elicitation can, in principle, be applied in many different settings and across a range of types of decision-makers. In practice, to date, its application has largely been restricted to informing national-level HTA decisions and for the purposes of generating evidence as part of larger research projects (see Chapter 4). The lack of SEE at an individual practitioner and local population level is likely to be driven by resource and time constraints, and the fact that constant changes to policy-making at local and national levels can also shift the focus on a frequent basis. One solution is to move away from the use of SEE as an ‘addition to the analysts’ toolkit’ and instead as a substitute for other forms of evidence, for example a systematic review or modelling exercise. This is likely to be a challenge in systems that have relied heavily on such forms of evidence to inform decision-making, but may be more feasible in local decision-making settings.

Guidance on appropriate conduct of SEE in HCDM is likely to be useful in all the contexts discussed; however, time constraints and lack of capacity to conduct such exercises are likely to remain challenges, when SEE is forced to fit into existing processes. For this reason, SEE is most likely to gain traction in national and multinational settings, in which a capacity for such activities can be generated simply through economies of scale.

Even within national and multinational decision-making processes, there are likely to be different challenges in conducting SEE, and some of these may imply that methodological choices need to be adapted to suit that particular application. Such issues are discussed in Chapter 10.

Chapter 4 Challenges in structured elicitation in health-care decision-making

Parts of this chapter have been reproduced from Soares et al. 50 Copyright © 2018, International Society for Pharmacoeconomics and Outcomes Research (ISPOR). Published by Elsevier Inc. This is an open access article under the CC BY license (http://creativecommons.org/licenses/by/4.0/).

Introduction

Reimbursement decisions in health are often supported by model-based economic evaluation (MBEE). 51 There may be circumstances in which SEE is required to address data limitations in MBEEs, such as a short-time horizon or missing entirely.

A review of applications in this area, published in 2013,44 identified only a small number (n = 14) of studies reporting the use of SEE. This review did not seek to determine the reasons for heterogeneity of approach, nor did it look at the challenges faced when conducting SEE to support MBEE in health and inform directions for future research. In pursuit of further clarity, the review instead focuses on summarising the basis for methodological choices made in each application (design, conduct and analysis), and the difficulties and challenges reported by the authors. Further details of this review are reported elsewhere50 and so only a summary has been presented in this chapter.

Methods

To identify applications of SEE, the 2013 review44 was updated (identifying studies up to 11 April 2017). Further details on the methods of the search are given elsewhere. 50 Studies were included only if they contained a SEE to elicit uncertain parameters (in the form of a distribution) to inform MBEE in health.

The methods used in each application were extracted, along with the criteria used to support methodological and practical choices and any issues or challenges discussed in the text. Issues and challenges were extracted using an open field and then categorised and grouped for reporting.

Aspects related to the design of the structured expert elicitation