Notes

Article history

This issue of the Health Technology Assessment journal series contains a project commissioned by the Medical Research Council’s (MRC) Population Health Sciences Group (PHSG). Jointly funded by the MRC and NIHR, the work refreshed the previous version of the Medical Research Council framework for development and evaluation of complex interventions: A comprehensive guidance (2006).

PHSG is responsible for developing the MRC’s strategy for research to improve population health. NIHR’s mission is to improve the health and wealth of the nation through research. As population level interventions in community and clinical settings become more important, and as science advances and innovates, both funding partners agreed that updating the existing framework was timely and needed.

The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. This report has been published following a shortened production process and, therefore, did not undergo the usual number of proof stages and opportunities for correction. The Health Technology Assessment (HTA) programme editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

Copyright © 2021 Skivington et al. This work was produced by Skivington et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaption in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.


Chapter 1 Development of the framework

Introduction

In 2006, the Medical Research Council (MRC) published guidance for developing and evaluating complex interventions,1 building on a framework that was published in 2000.2 The aim was to help researchers and research funders recognise and adopt appropriate methods to improve the quality of research to develop and evaluate complex interventions and, thereby, maximise its impact. The guidance documents have been highly influential, and the accompanying papers published in the British Medical Journal are widely cited.3,4

Since the 2006 edition of the guidance was published, there have been considerable developments in the field of complex intervention research. In some areas, the accumulation of experience and knowledge in the application of approaches and methods that were novel or undeveloped at the time of writing the previous guidance has led to the publication of detailed, focused guidance on the conduct and/or reporting of research, including the MRC guidance on ‘process evaluation’.5 In other areas, new challenges in complex intervention research have been identified and the reliance on traditional approaches and methods promoted in previous guidance has been challenged.6–8 The guidance has also been complemented by MRC guidance on ‘natural experiments’,9 an important area of development in methods and practice. Given that complex intervention research is now a broader and more active field, this new framework provides a less prescriptive and more flexible guide. The framework aims to improve the design and conduct of complex intervention research to increase its utility, efficiency and impact. Consistent with the principles of increasing the value of research and minimising research waste, the framework (1) emphasises the use of diverse research perspectives and the inclusion of research users, clinicians, patients and the public in research teams; and (2) aims to help research teams prioritise research questions and choose and implement appropriate methods. The framework offers pragmatic recommendations for an audience from multiple disciplines, and we have taken a pluralist approach.

Updating the framework was a pragmatic, staged process, in which the findings from one stage fed into the next. The next section, therefore, provides the methods followed by the results for each stage (gap analysis, expert workshop, open consultation and writing the new framework). We then provide concluding remarks and suggestions for moving forward. The resulting framework is presented in Chapter 2.

This project was overseen by a Scientific Advisory Group (SAG) that comprised representatives of each of the National Institute for Health Research (NIHR) programmes, the MRC–NIHR Methodology Research Panel, key MRC population health research investments and authors of the 2006 guidance (see Appendix 1). A protocol outlining the work plan was agreed prospectively with the MRC and NIHR and signed off by the SAG (see Appendix 2). At various points throughout the writing process, we consulted with other researchers, evidence users, journal editors and funders (see Appendix 3).

As terminology can be ambiguous and terms are often used interchangeably, we have provided a Glossary of key terms.

Methods and results

The framework was updated using multiple methods over several stages:

- stage 1 – a gap analysis of the evidence base for complex interventions
- stage 2 – a workshop that collated insight from current experts in the field
- stage 3 – an open consultation
- stage 4 – drafting the updated framework and a final feedback exercise.

Various stakeholders, for example researchers, research users (patients, public, policy-influencers and NHS), funders and journal editors, were engaged at different stages of the drafting process. The methods and findings from each of the stages are described in the following sections.

Stage 1: gap analysis

Methods for stage 1: gap analysis

The aim of the gap analysis was to identify and summarise aspects of the previous guidance that required updating. We used these gaps as a starting point for discussion within the project team, SAG (for a list of members, see Appendix 3) and identified experts. It was, therefore, a method of agenda setting and, thus, did not aim to be comprehensive. The intention was that issues could be added as the work progressed.

Our first step was a brief horizon scanning review that focused on new approaches/progress since the previous guidance, criticisms of existing guidance and other gaps. Based on initial reading of the literature and the experience of the project team, the SAG were provided with a list of topics for update. This was discussed at the initial SAG meeting (24 November 2017) and the list of topics was updated for more in-depth exploration of the literature.

Separate literature searches were conducted for each of the identified topics using keywords (the topic of interest plus variations of ‘complex intervention’) in Web of Science, restricted to English language and to documents published since 2008. Where there were existing guidance documents relevant to the development, implementation or evaluation of complex interventions, we used these as our starting point and limited our literature review to documents published after these existing guidance documents. For example, guidance existed for natural experiments,9 process evaluation5 and context,10 on which we drew heavily. We also discussed this new framework with those involved in developing other guidance at the time, for example for intervention development,11 exploratory studies12 and systems thinking.13,14 We excluded guidance that did not provide substantive information on methodological issues. Criteria for including other publications were broadly that they provided relevant thinking that could be used to progress the work. A summary of the findings from each topic search was created and used to identify focal points for the expert workshop.
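As a rough illustration of the search approach described above, the following Python sketch assembles a boolean query for a single topic and records the two stated limits (English language, published since 2008). The variant phrases, the example topic and the helper names `build_query` and `describe_search` are assumptions made for illustration only. They are not the search strings used by the project team, and the output is a generic boolean expression rather than Web of Science syntax.

```python
# Hypothetical variations of 'complex intervention'; the variants actually
# used in the searches are not reported in the text.
COMPLEX_INTERVENTION_VARIANTS = [
    "complex intervention",
    "complex interventions",
    "complex health intervention",
]

EARLIEST_YEAR = 2008  # searches were limited to documents published since 2008
LANGUAGE = "English"  # searches were restricted to English language


def build_query(topic: str) -> str:
    """Combine one topic with the variants in a generic boolean string."""
    variants = " OR ".join(f'"{v}"' for v in COMPLEX_INTERVENTION_VARIANTS)
    return f'"{topic}" AND ({variants})'


def describe_search(topic: str) -> dict:
    """Bundle the query with the two limits stated in the text."""
    return {
        "query": build_query(topic),
        "language": LANGUAGE,
        "published_from": EARLIEST_YEAR,
    }


if __name__ == "__main__":
    # One search per identified topic; 'process evaluation' is an example topic.
    print(describe_search("process evaluation"))
```

Keeping the variants in a single list means that every topic search would share the same ‘complex intervention’ terms, which mirrors the one-search-per-topic structure described in the text.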

Findings from stage 1: gap analysis

Several limitations of the 2006 guidance were highlighted. These mainly related to (1) the focus on effectiveness; (2) considering randomised controlled trials (RCTs) as the gold standard research design; (3) the lack of detail on economic evaluation; (4) the lack of attention to mechanisms that deliver change; (5) the lack of acknowledgement of complex organisational systems or complexity theory; and (6) omission of the importance of policy context, including the impossibility of standardising context. In addition to these issues, there were several areas that had progressed since 2006, which were considered to be important to explore in more detail. Here we provide a brief summary of the gaps that were identified in 2017 and what we initially proposed to be discussed with experts at the workshop:

- Complex intervention definition –
  - Key issue for the update – definition is too narrow.
  - There are different dimensions of complexity. How can we improve the definition of complex intervention to better acknowledge contextual complexity and the system-level properties that add to this complexity?
  - Does the term ‘complex intervention’ make sense when complexity arises from the intervention context and the interplay between intervention and context as well as the intervention itself?

- Intervention development –
  - Key issue for update – little practical guidance on this phase and subsequent literature provides more detail on certain aspects, for example using a theory-driven approach;15,16 identifying and delivering the mechanism of change;17 co-production and prototyping of public health interventions;18 and optimisation of the intervention.19
  - There is guidance under way on intervention development (INDEX study11) that is about identifying and assessing different approaches to developing complex interventions. Given that this is current and there are clear overlaps, are there any issues that are not covered in the INDEX guidelines that we should consider adding in this document?

- Pre-evaluation phase: appraisal of evaluation options or exploratory work –
  - Key issue for update – previous complex intervention guidance highlighted the importance of preparatory work, with the focus being on conducting a pilot study; however, detail on feasibility issues and how to develop the feasibility stage is required.
  - Similar to the development phase, there is work in progress to create guidance for Exploratory Studies of Complex Public Health Interventions (GuESt study12), which includes a lot of relevant information. Should the current update include anything further and how can we make it relevant beyond public health?

- Context –
  - Key issue to update – although the previous complex intervention guidance states the importance of taking account of context, this is mostly about how context affects outcomes at the evaluation phase and how interventions may require adaptation for implementation in different contexts. There is little attention to the consideration of context throughout the research phases or guidance on how to take it into account.
  - How do ‘context’ and ‘system’ differ/overlap?
  - Context is a critical construct; how do we ensure that we refer to it throughout the research process?
  - Do we want to go further than the recently published guidance on taking account of context in population health intervention research?10 What are the key points for considering context in complex intervention research more broadly?

- Ideas from complex systems science –
  - Key issue to update – this is an area that has received increasing attention in the last decade, and for this reason the previous complex intervention guidance did not draw on it.
  - Examples of using complex systems thinking in public health research have been limited to describing and modelling systems; this has not yet been taken further and been used to develop and evaluate interventions.7
  - When is it critical to embrace a complexity perspective (and when is it not necessary: simple and complicated questions and approaches have their merits) and how can such a perspective be implemented methodologically?
  - How can a complex systems approach guide each phase of complex intervention research?

- Programme theory –
  - Key issue to update – the previous complex intervention guidance provided brief information on causal mechanisms and on developing a theoretical understanding of the process of change; however, this lacks the required level of information to guide researchers in developing programme theory from the outset.
  - Further detail is needed to illustrate the steps required to undertake a robust planning phase, including (1) identifying appropriate theories of change, (2) considering potential mechanisms of change, (3) anticipating important contextual factors that could influence the change mechanism and outcomes and (4) mapping appropriate methods to operationalise the chosen theory into practice.

- Implementation research –
  - Key issue to update – the previous complex intervention guidance has limited information on the practical implementation process and on the need to understand and account for dynamic contextual factors.
  - Successful implementation is critical to the scaling up of interventions and the new framework should reflect this by emphasising implementation throughout the research process.
  - When do you stop doing effectiveness studies and start doing implementation studies?
  - How can we include the wider aspects of implementation that may enable or constrain desired change? For example, how much guidance do we provide on addressing intervention context and addressing future implementation on a greater scale?
  - How do we make the information palatable for decision-makers?

- Economic evaluation and priority setting –
  - Key issue to update – the previous complex intervention guidance did not go into any detail on how standard economic evaluation methods need to be adapted to deal with particularly complex interventions.
  - Issues around timeline – outcomes are likely to extend beyond the lifetime of an evaluation – can economists work with proxies to system change?
  - How do we best guide on issues for existing economic evaluation methods where interventions aim to change the properties of complex systems? That is, it is not appropriate to evaluate health outcomes only at the individual level if a component of the intervention is to effect change to the system; outcomes are broader than individual health and costs (is a societal rather than a health-care perspective required?).
  - (How) should we include equity issues and economic evaluation analytical approaches, which are growing and complicated methodological areas?
  - How can we guide on economic evaluation for priority setting? That is, what is the most efficient use of resources (to determine whether or not the additional cost of a research project or particular study design is justified)? Are decision-modelling and value-of-information analysis (VOI) practical propositions?

- Systematic reviews of complex interventions –
  - Key issue to update – the previous complex intervention guidance did not address issues related to the inclusion of complex intervention studies in systematic reviews, much beyond acknowledging that they can be problematic. Should we add more?
  - Systematic review methods may differ from standard methods and extra consideration is necessary where the systematic review includes complex interventions (if the review is about complexity), for example in defining the research questions, developing the protocol, the use of theory, searching for relevant evidence, and assessing complexity and quality of evidence (how to identify key components of complex interventions; how to assess study quality).
  - What should be the end point of a systematic review of complex interventions? For example, effect size, decision model, improved theory or supporting policy decisions?

- Patient and public involvement (PPI) and co-production –
  - Key issue to update – previous complex intervention guidance mentioned that stakeholders should be consulted at various points, but did not emphasise the need to engage relevant stakeholders throughout the research process or provide any guidance on how to do this.
  - How do we guide on effective engagement of stakeholders throughout?

- Evaluation –
  - Key issue to update – the previous complex intervention guidance focused on designing evaluations to minimise bias (i.e. with high internal validity) and, in doing so, did not consider how to maximise the usefulness of evidence for decision-making. These are not mutually exclusive concerns: could both be considered?
  - Should we take an approach that promotes ‘usefulness of evidence’ rather than hierarchy of evaluation study design?
  - Should we present evaluation options that go beyond individual-level primary health outcomes? For example, taking account of system change.
  - Evaluation study designs – what should be added to reflect development in this area? For example, n-of-1, adaptive trials. How much information should we present on individual study design?

These topic areas and questions were intended to be a foundation for discussion and further consideration, rather than an exhaustive or definitive list.

Stage 2: expert workshop

Methods for stage 2: expert workshop

A 1-day expert workshop was convened in London in May 2018. A list of those who attended the workshop is given in Appendix 3. The aim of the workshop was to obtain views and record discussions on topics that should be newly covered or updated. Participants were identified by the project team and SAG. We aimed to have at least two experts for each of the identified topics and include a range of people from across the UK, plus international representation as far as budget allowed.

In advance of the workshop, the participants were asked to provide two key points, each with one sentence of explanation, that they felt should be taken into account in the update. These key points, alongside findings from the stage 1 gap analysis and discussion with the SAG, were used to inform the agenda and develop content for an interactive, multidisciplinary expert workshop.

After an introductory presentation by the project team, participants were split into five groups (of seven or eight) for the morning session round-table discussion.

The topics covered for all groups (presented in a different order) were:

- the definition of complexity
- the overall framing and scope
- potential impact of the new framework
- the main diagram of the framework (key elements of the development and evaluation process)
- complex systems thinking.

For each of the two afternoon sessions, participants were split into five ‘expert groups’ aligned with their topic areas of expertise. Topics covered in these smaller specialised groups included:

- options for study design
- the previous guidance’s emphasis on ‘effectiveness’
- choice of outcomes
- considerations for economic evaluation
- pre-evaluation and development phases
- considerations for implementation
- key focus areas to improve applications for funding
- evidence synthesis of complex interventions
- considerations for digital health
- programme theory.

Each session was facilitated by a member of the project team and was supported by a colleague from the MRC/Chief Scientist Office Social and Public Health Sciences Unit, University of Glasgow. Colleagues assisted the facilitators by taking notes of key points during each discussion, clarifying main points with attendees and producing a written summary of each discussion after the workshop. SAG members were also present in each discussion. Round-table discussions were audio-recorded. Throughout the day, participants were asked to provide their thoughts on key points, case study examples and key references on Post-it® notes (3M, Saint Paul, MN, USA) on dedicated noticeboards.

Data from each of the 15 workshop discussions and the Post-it note points were thematically coded, and summaries drawing on all of the data were created for each theme. These workshop summaries were sent to workshop participants by e-mail as a follow-up consultation to ensure that the thematic summaries that we created from the workshops were accurate overviews of the discussions in which they were involved. Final summaries were discussed in detail with the SAG to support the decision-making on the content of the document.

Findings from stage 2: expert workshop

Seventy experts were invited to the workshop (with the aim of facilitating a workshop of around 40 participants). In total, 37 experts confirmed their attendance; one who accepted the invitation did not attend (owing to sickness), three people did not respond to our invitation and 30 people could not attend for various reasons, some of whom recommended others who did attend. In total, 36 participants attended the workshop. Key issues that were identified are summarised in Table 1.

| Topic | Key points |
|---|---|
| Definition of complex intervention | |
| Framing and scope | |
| Intervention development | |
| Study design | |
| Systems thinking | |
| Implementation science | |
| Programme theory | |
| Economic evaluation | |
| Effectiveness | |
| Stakeholders | |
| Evidence synthesis | |

Decisions taken following the expert workshop

There was considerable agreement across the workshop discussions; however, as seen in Table 1, there were some issues for which consensus was not reached or for which competing points were made in different break-out discussions. The main example of controversy was the purpose of evaluation (theory as an end point, the need for primary outcome). In addition, some of the points that were made were very specialised, for example related to particular methods or specialties. Along with the SAG, the project team determined which focus areas to incorporate in the document, keeping them high level rather than getting into specific detail. With respect to the issues for which views diverged (primarily related to effectiveness and the purpose of evaluation), we consider the document as a ‘thinking tool’ to provide recommendations to arrive at the most appropriate approach for each piece of complex intervention research (with no ‘one size fits all’ approach, instead determined by the problem that is being addressed and taking a ‘usefulness of evidence’ approach).

Some examples of the decisions taken are as follows:

-

Clarity in terminology – include a comprehensive glossary.

-

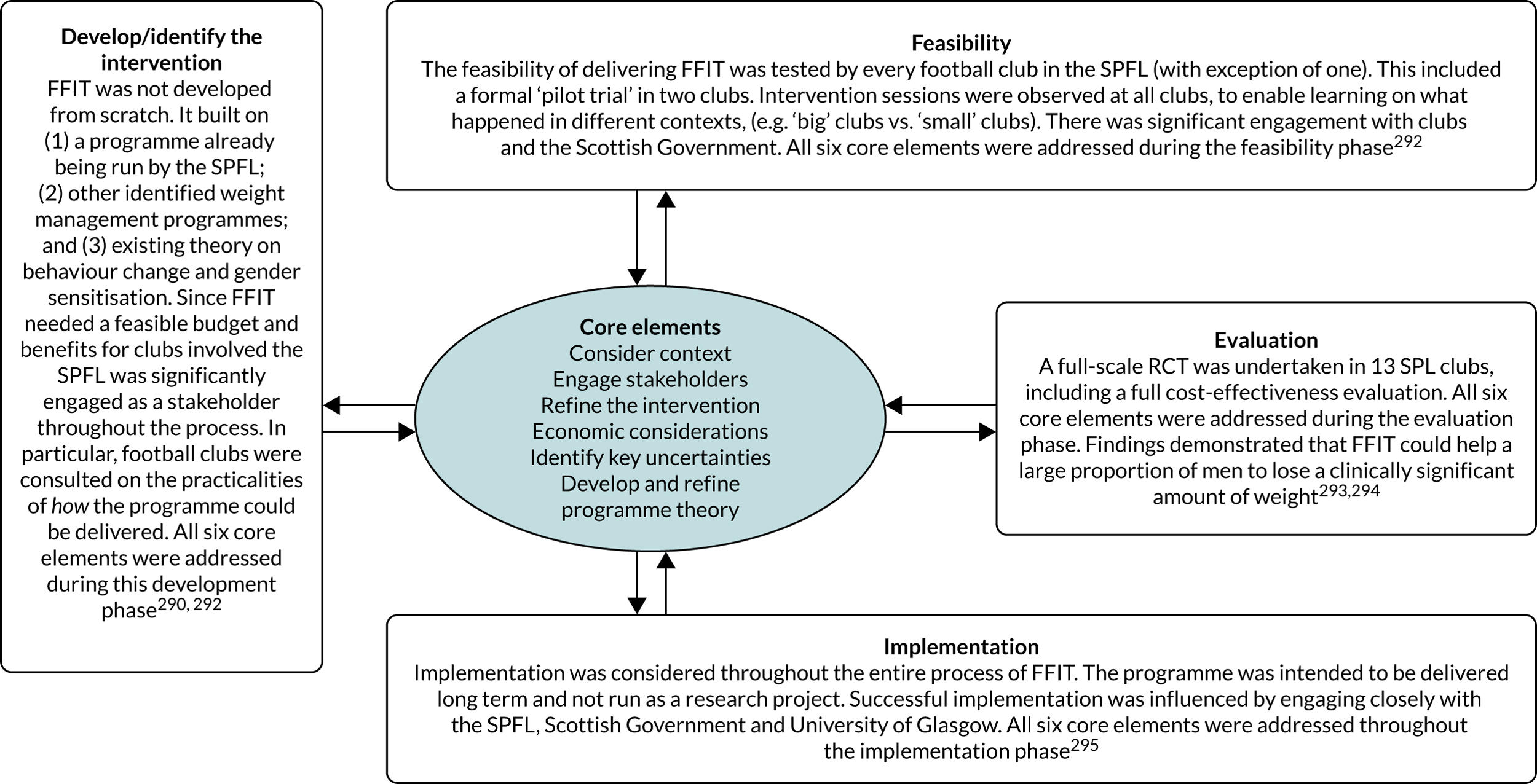

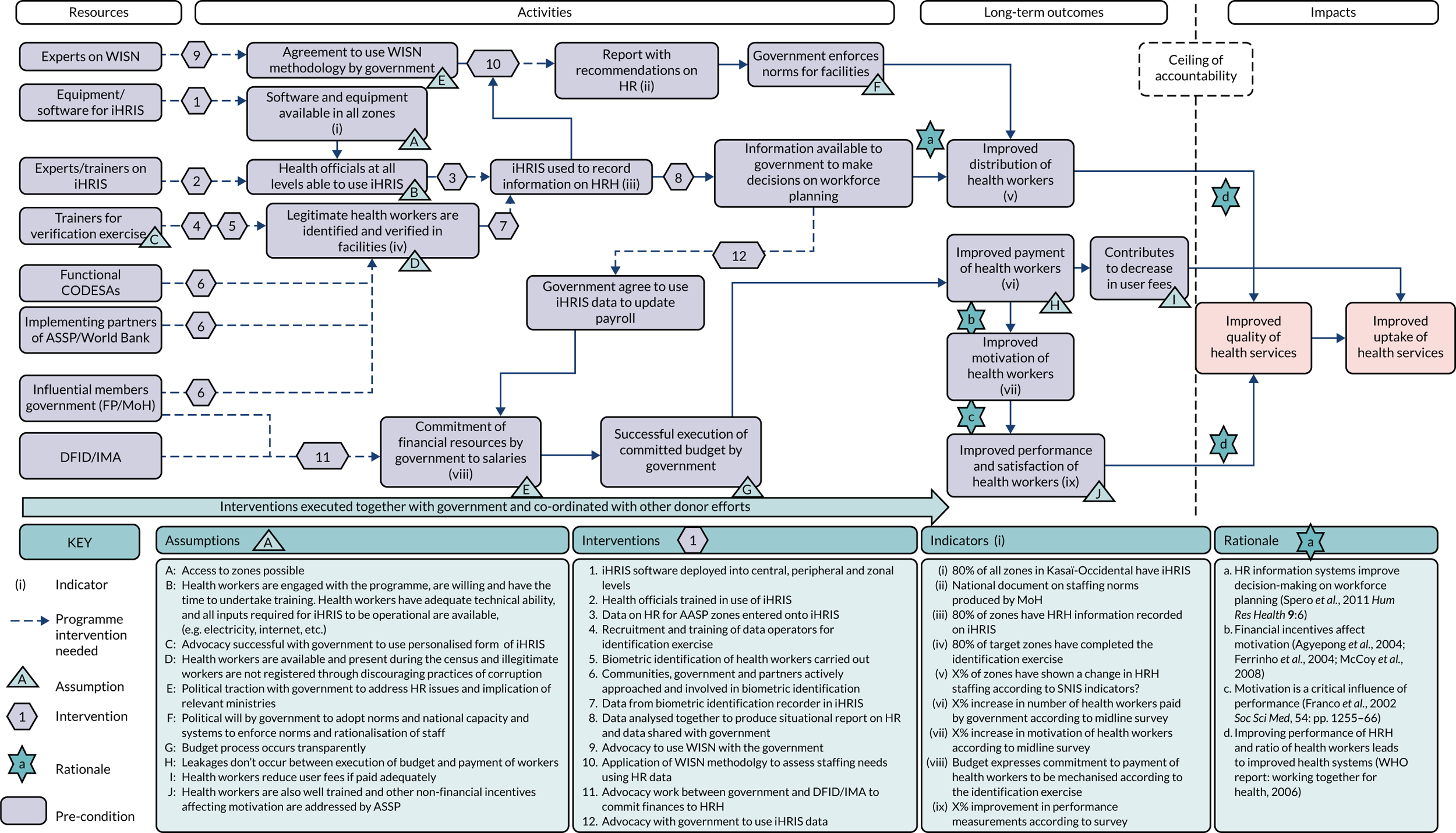

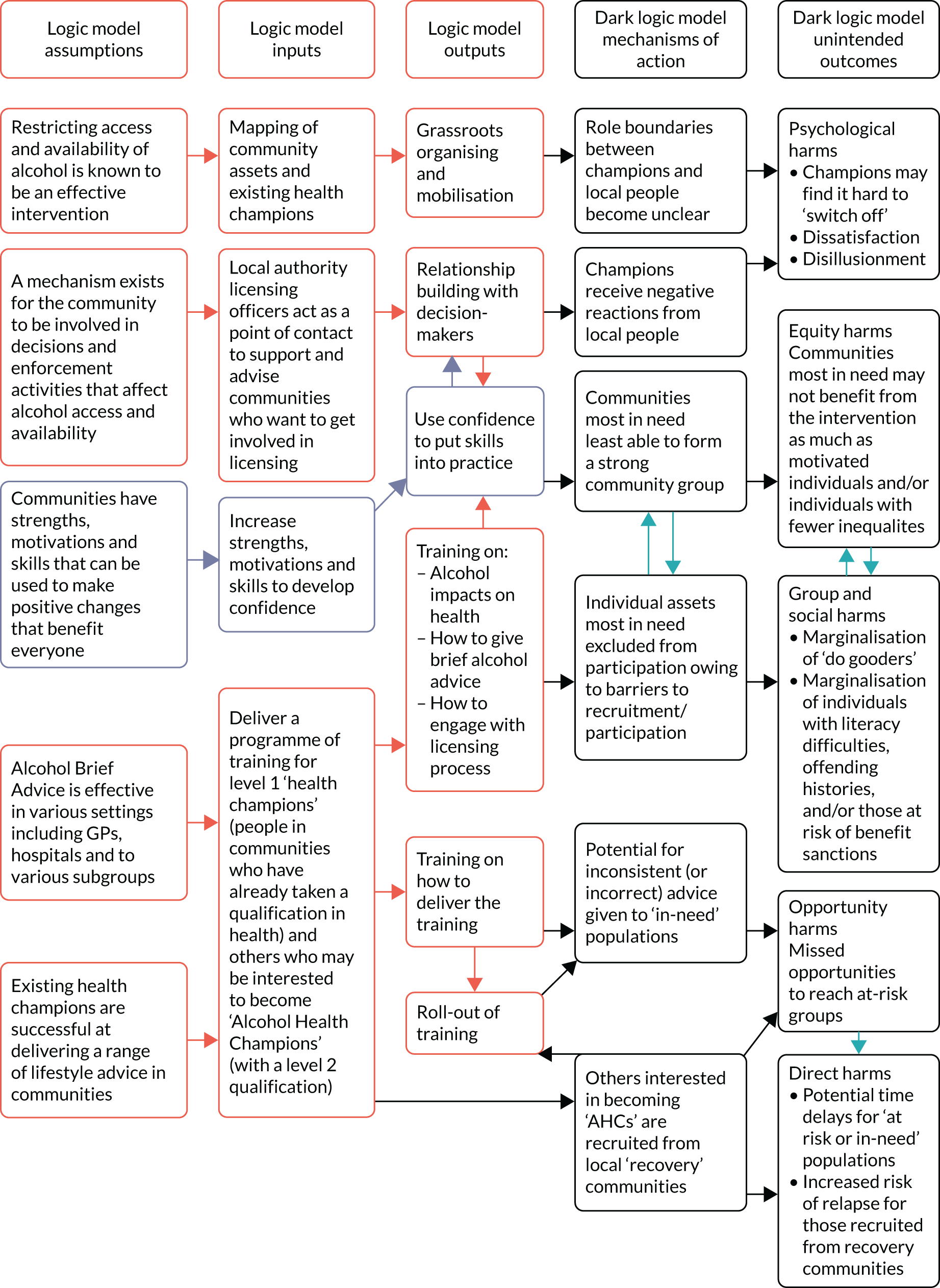

Include a series of case studies as an appendix, highlighting particular aspects of each phase and core elements of the research process.

-

Highlight the distinctive methods of evidence generation, emphasising that the research can begin at any stage of the intervention and that there may be different approaches for researchers not involved in intervention development.

-

Not to be prescriptive but rather provide options for approaching the research, which should be chosen by taking the problem as the starting point and working out what is most useful in terms of decision-making for policy and practice going forward.

-

Update the diagram included in the 2006 guidance that showed ‘Key elements of the development and evaluation process’,1 particularly to include context.

-

Include a greater focus on programme theory, but one that encourages its consideration and refining throughout.

-

Systems thinking – not to provide detailed guidance on systems thinking and methods because this is beyond the scope; rather it will be a starting point for encouraging people to consider how a systems perspective could help develop and evaluate complex interventions, with methodological development to follow.

-

Evidence synthesis – following the expert workshop, information that others were developing guidance in this area and discussion with the SAG we took the decision to focus on primary studies; therefore, we did not include a section in the main document on evidence synthesis. It is hoped that an improvement in primary studies, brought about by this new framework, would in time have a positive impact on evidence synthesis. We added an appendix to highlight some of the main considerations for evidence synthesis (see Appendix 5).

Further decisions were taken regarding the need to obtain further expertise in drafting the document. We approached three health economists for a follow-up meeting to discuss further issues related to economic considerations for complex intervention research; following this, they agreed to take on the responsibility of drafting sections that related to economic considerations and became co-authors. We also approached experts in systems thinking to discuss some of the emerging ideas on taking a systems perspective to complex intervention research. We convened a meeting in December 2018 in London with a group of researchers with such expertise (individually acknowledged in this monograph). Similarly, we convened a meeting in January 2019 with researchers who were creating guidelines on intervention development (individually acknowledged in this monograph) to discuss the overlap and the use of the INDEX guidance within the current document.

Stage 3: open consultation

Methods for stage 3: open consultation

The first draft of the updated document was made available for open consultation from Friday 22 March to Friday 5 April 2019.

Potential respondents were targeted, as follows:

- those invited to the expert workshop
- other experts identified from the suggestions of workshop participants, with greater focus on international experts
- early and mid-career researchers (identified via e-mail groups)
- journal editors
- funders
- service users/public
- policy-influencers/-makers.

We e-mailed potential respondents with advance notice of the consultation dates and a link to register their interest in participating, and sent a further message when the consultation opened. Two reminder e-mails were also sent. As well as targeted promotion, we used social media to publicise the consultation and encouraged others to pass on the link.

Consultees were informed that they were responding about an early draft of the revised framework and that their involvement was an important part of the process for its final development. We asked them to relate topics in the draft to a project that they had recently worked on and to provide feedback on its usability.

The online consultation was guided by a questionnaire that was developed by the project team (the questions that all consultees were asked to complete are presented in Appendix 4). Responses were anonymous.

Findings from stage 3: open consultation

We received 52 individual responses, plus some follow-up e-mail comments. This amounted to 25,000 words of response. The majority of responses were from researchers, but some identified as funders (n = 3), journal editors (n = 7), NHS (n = 7), policy-influencers (n = 3) and service users (patient or public, n = 5). Most of the respondents said that their main field of expertise was public health (n = 21) or health services research (n = 20), with others stating clinical medicine (n = 6), implementing policy (n = 3), systems-based research (n = 4), patient or public involvement (n = 4) and other (n = 7: statistics, sociology, health economics and triallist) as their main field of expertise. A summary of the consultation suggestions is provided below; however, it is important to note that there were conflicting views on some aspects, which we have noted.

Overall

-

Overall layout: extra sections are required – an executive summary and a preface chapter that details how this is related to previous guidance and that this document is a standalone framework that does not require reference back to the 2006 version. Consider placing more emphasis on development in the earlier sections of the document rather than delve straight into evaluation.

-

Definition of complexity: the distinction made between complicated and complex interventions was said to be unclear. Respondents stressed that a clear definition of complex intervention and a more accessible account of how complexity affects the research process are required.

-

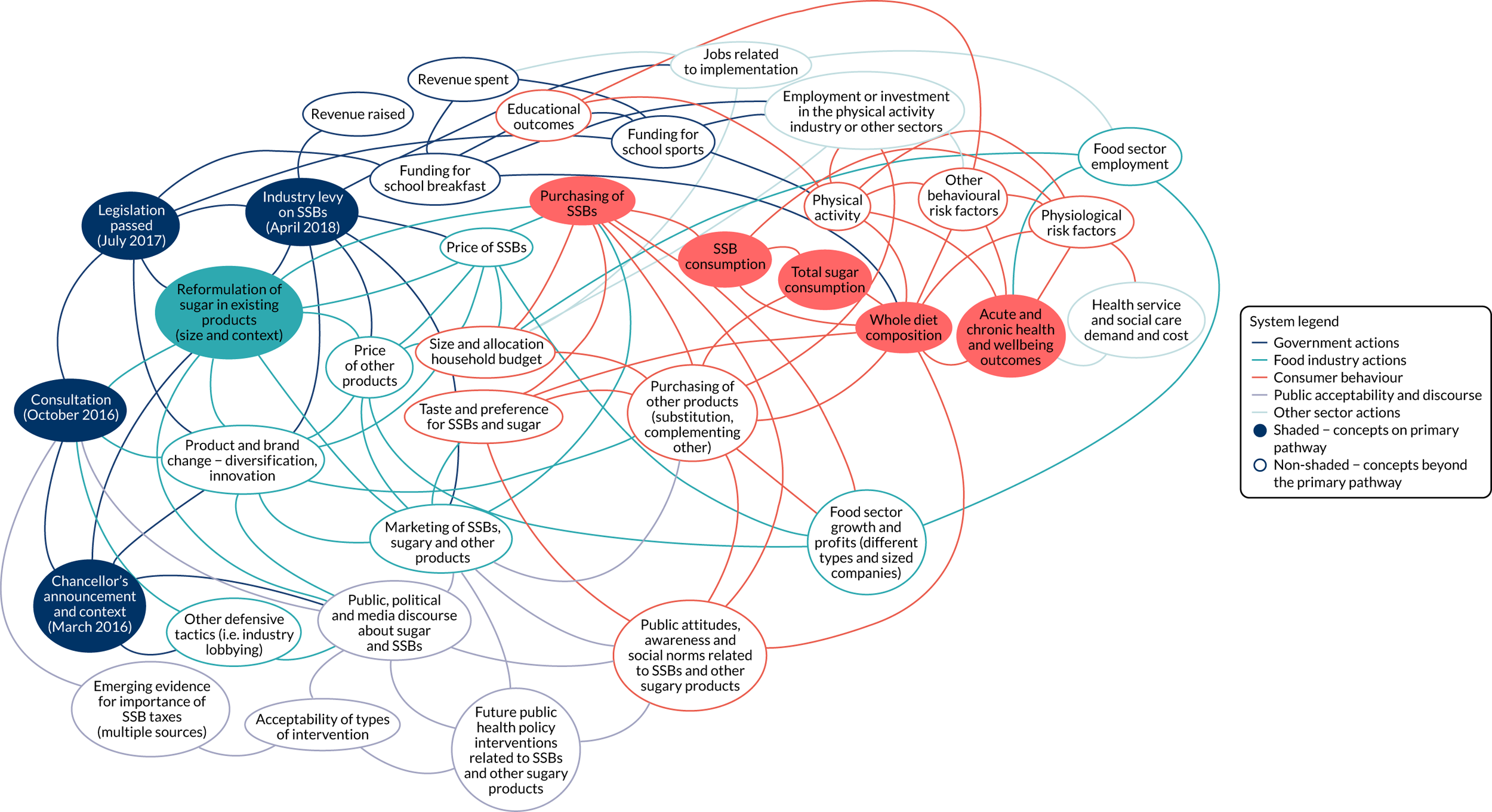

Key elements for developing and evaluating complex interventions (Figure 1): respondents felt that the ‘overarching considerations’ should all be highlighted as central to the research process and that some text detail should be added to each phase box to provide more information on what each means.

-

Evaluation perspectives (shown in the x-axis of Figure 2): many respondents felt that the perspectives that we presented were shown to be mutually exclusive and hierarchical (which was not the intention). There was significant pushback on using the term ‘realist’ as an evaluation perspective. Respondents questioned whether or not we were advocating for evaluations that do not measure effectiveness, with some conflicting views on whether or not this was a positive change. It was felt that there was not enough focus on how the perspectives relate to intervention development or to the development of research questions.

-

Framework for addressing complexity within an evaluation (see Figure 2): although some liked this framework, on balance respondents did not feel that this figure complemented the text or was very clear. Complexity does not increase in a linear fashion based on intervention components and perspective taken. Context and system were missing from the diagram despite being a large focus of the text.

FIGURE 1. Key elements for developing and evaluating complex interventions (consultation version).

FIGURE 2. Framework for addressing complexity within evaluation (consultation version).

Research phases (shown in Figure 1; a section of text was also dedicated to each phase)

- Developing and identifying complex interventions: suggestions included that we consider minimising detail in this section and signpost to the MRC-funded INDEX guidance;11 clarify the different circumstances in which development versus identification of interventions is appropriate; and consider including something specific on digital interventions.
- Feasibility: make sure that the definition of feasibility is clear, for example in line with other standard definitions. There was a call for additional detail on the role of context in determining uncertainties for feasibility testing.
- Evaluation: as in the expert workshop, there was conflicting feedback from respondents on how to provide guidance on evaluation. Suggestions included highlighting that evaluations must focus on effectiveness, with additions (not replacements) relating to theory and systems perspectives, but also to include better examples of evaluations focusing on systemic questions. Many respondents felt that there was too much focus on realist evaluation and little mention of theory-based evaluation approaches. The section on study design needs to be clearer, particularly on why some designs are included but others are not.
- Implementation: suggestions were made to differentiate between clinical and implementation interventions; add EPOC (Effective Practice and Organisation of Care) criteria20 and diagnostic approaches to implementation; and clarify the time and stage of modification in relation to implementation.

Overarching considerations (see Figure 1)

- Programme theory: it was suggested that we provide greater detail on theory-led research, with a balance of signposting to appropriate resources; address how theory-based content is written and presented for readers who come from non-theory-based disciplines, to avoid alienating people; and clarify the terminology relating to ‘mechanism’, ‘programme theory’ and ‘logic models’.
- ‘Modification’ (changed to refinement in the final version): there were conflicting opinions on the use of terminology, particularly with the (MRC-/NIHR-funded) adaptation guidance (in preparation21). Respondents called for guidance on where/when to perform modifications and how to agree acceptable boundaries, as well as examples to help readers understand the different approaches to modification.
- Stakeholders: respondents encouraged a greater focus on PPI, more consideration of the challenges of ‘stakeholder engagement’, as well as practical examples of how to engage stakeholders.
- Economic considerations: respondents suggested that we make sure that sensitivity analysis is discussed in relation to statistical models as well as economic models; that we give mention to the tensions between equity and efficiency in evaluating complex interventions; and that we provide more detail on generalisability and context dependency of cost-effectiveness as well as effectiveness, and the possibility of using programme theory to achieve this.

The majority of respondents were positive about the document overall, albeit with constructive criticism that required the project team to reconsider various aspects. Examples of changes that were made following consultation (note that this is not an exhaustive list of changes) were:

- Figures –

  - Evaluation perspectives (see Figure 2) – a rewrite of this section was required (now termed ‘research perspectives’). We changed ‘realist perspective’ to ‘theory-based perspective’ to take account of other approaches to evaluation that aim to explore how and why interventions bring about change.

- Terminology –

  - the definition of ‘complex intervention’ was updated

  - ‘modification’ was changed to ‘refinement’

  - programme theory/logic model – a decision was taken to use ‘logic model’ for the visualisation of the ‘programme theory’, with the programme theory itself detailed in text.

- Programme theory: we have clarified terminology in the text and Glossary.

- Modification/refinement: we changed the term from ‘intervention modification’ to ‘intervention refinement’, and differentiated from ‘adaptation’. We have added detail to this section on when you would expect interventions to be refined and why, including a separate section on rapid refinement of digital interventions.

- Stakeholders: we separated the section on stakeholders into PPI and professional stakeholders, and added text to highlight the challenges in engaging stakeholders.

- Economic considerations: we have edited and moved some of this section to other parts of the document to avoid repetition. We have added detail on the potential trade-off between equity and efficiency.

- Developing and identifying interventions: we removed text and used the INDEX guidance as the basis for this section, adding three more points that were not highlighted in that guidance but were felt to be important throughout the process of developing this document.

- Feasibility: we have further clarified what we mean by ‘feasibility’. We have re-ordered this section to improve readability. We have added a section on ‘efficacy signals’ to further show the potential of feasibility studies.

- Evaluation: we have added detail on how the research perspectives are related to evaluation, as well as more case studies to illustrate the main points. We have emphasised the need for qualitative study in an evaluation and have added detail on process evaluation. We have added detail on the strengths and limitations of each type of economic evaluation.

- Implementation: we have considered separately in this section (1) implementation science research, which focuses specifically on the development and evaluation of interventions to maximise effective implementation; and (2) the need to emphasise implementation considerations in earlier phases, including hybrid effectiveness/implementation designs. In the earlier phases and in the core elements, we have highlighted context, stakeholder input and the need for a broader programme theory, all of which contribute to increased consideration of implementation factors.

Stage 4: writing the updated framework

Methods for stage 4: writing the updated framework

The writing of the framework was led by the project team and was supported by co-authors in the writing group and the SAG. Feedback was received at various stages throughout the writing process from members of the MRC’s Population Health Sciences Group (PHSG) and the MRC–NIHR Methodology Research Programme (MRP) Advisory Group.

Given that the document had changed substantially from the open consultation draft, we asked a further set of external individuals to provide comments on the near-final draft. We received feedback from eight people in May/June 2020. The final draft was then sent to all co-authors for approval.

Findings from stage 4: final approval and sign-off

The final draft was approved by the MRC’s PHSG in March 2020.

Patient and public involvement

This project was methodological; views of patients and the public were included at the open consultation stage of the update. The open consultation, involving access to an initial draft, was promoted to our networks via e-mail and via digital channels, such as our unit Twitter account (Twitter, Inc., San Francisco, CA, USA; www.twitter.com). We received five responses from people who identified as ‘service users’ (rather than researchers or professionals in a relevant capacity). Their input included helpful feedback on the main complexity diagram, the different research perspectives, the challenge of moving interventions between different contexts and overall readability and accessibility of the document. Several respondents also highlighted useful signposts to include for readers.

In relation to broader PPI, the resulting updated framework (see Chapter 2) highlights the need to include PPI at every phase of developing and evaluating complex interventions. We have drawn on and referred to numerous sources that provide further detail or guidance on how to do so.

Limitations

There was a huge amount to cover in developing this document. We have not provided detailed methodological guidance where that is covered elsewhere because we have tried to focus on the main areas of change and novelty. In many of these areas of novelty, methods and experience are still quite limited. In addition, we have foregrounded the very important concept of ‘uncertainties’. Although there are methods for determining uncertainties, for example decision-modelling and more qualitative soft systems methodologies, this area is underdeveloped, and specific guidance on how to determine uncertainties in a formal way may seem unclear. We recommend that due consideration is given to this concept and call for further work to develop methods and provide examples in practice. Inevitably, we may have missed something in our writing and, furthermore, the fields will move on at pace following publication of this document.

Conclusion

Parts of this text have been reproduced with permission from Skivington et al. 26 This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: https://creativecommons.org/licenses/by/4.0/. The text below includes minor additions and formatting changes to the original text.

In this document, we have incorporated developments in complex intervention research that were published since the previous edition was written in 2006. We have retained the basic structure of the research process as comprising four phases – development, feasibility, evaluation and implementation – but we emphasise that a programme of research may begin at any of these points, depending on what is already known. We have emphasised that complex intervention research will not always involve the development of new researcher-led interventions, but will often involve the evaluation of interventions that are not in the control of the researcher, but instead led by policy-makers or service managers, or are the adaptation of interventions from another context. We have highlighted the importance of engaging stakeholders throughout the research process, including patients, the public, practitioners and decision-makers. We emphasise the value of working with them as partners in research teams to jointly identify or prioritise research questions; develop, identify or prioritise interventions; and agree programme theories, research perspectives, key uncertainties and research questions.

As with earlier editions, we stress the importance of thorough development and feasibility testing prior to large-scale evaluation studies. As well as taking account of established practice and recent refinements in the methodology of intervention development, feasibility and pilot studies, we draw attention to new approaches, such as evaluability assessment, that can be used to engage stakeholders in collaborative ways of planning and conducting research. We place greater emphasis than in the previous edition on economic considerations in complex intervention research. We see these as vital to all phases of a research project, rather than simply a set of methods for assessing cost-effectiveness.

We have introduced a new emphasis on the importance of context and the value of understanding interventions as ‘events in systems’ that produce effects through interactions with features of the contexts in which they are implemented. We adopt a pluralist approach and encourage consideration and use of diverse research perspectives, namely efficacy, effectiveness, theory-based and systems perspectives, and the pragmatic choice of research questions and methods that are selected to optimally address the key uncertainties that remain. We acknowledge that to generate the most useful evidence for decision-making will often require a trade-off between precise, unbiased answers to narrowly defined questions and less certain answers to broader, more complex questions.

Although we have not explicitly discussed epistemology, we have challenged the position established in earlier editions that unbiased estimates of effectiveness are the cardinal goal of evaluation, and we have emphasised that improving theories and understanding of how and in what circumstances interventions contribute to change is also an important goal for complex intervention research. For many complex intervention research problems, an efficacy or effectiveness perspective will be the optimal approach, for which an RCT will probably provide the best design to achieve an unbiased estimate. For other problems this will not be the case, and alternative perspectives and designs will be more likely to generate useful new knowledge to help reduce decision-maker uncertainty. What is important for the future is that the scope of intervention research commissioned by funders and undertaken by researchers is not constrained to a limited set of perspectives and approaches that may be less risky to commission and more likely to produce a clear and unbiased answer to a specific question. What is needed is a bolder approach, including some methods and perspectives for which experience is still quite limited, where we (supported by our workshop participants and respondents to our consultations) believe that there is an urgent need to make progress by mainstreaming new methods that are not yet widely used, as well as undertaking methodological innovation.

We have emphasised the importance of continued deliberation by the research team about which key uncertainties are relevant at each stage of research, followed by defining research questions and selecting research perspectives and methods that will reduce those uncertainties. We reiterate that our recommendation is not to undervalue research principally designed to minimise bias in the estimation of effects; rather, we encourage the use of a wider range of perspectives and methods, augmenting the available toolbox and, thus, increasing the scope of complex intervention research and maximising its utility for decision-makers. This more deliberative, flexible approach is intended to reduce research waste and increase the efficiency with which complex intervention research generates knowledge that contributes to health improvement.

We acknowledge that some readers may prefer more detailed guidance on the design and conduct of any specific complex intervention research project. The approach taken is to help researchers identify the key issues that ideally need to be considered at each stage of the research process, to help research teams choose research perspectives and prioritise research questions, and to design and conduct research with an appropriate choice of methods. We have not provided detailed methodological guidance, primarily because that is well covered elsewhere. We have been fortunate to be able to draw on and refer to many other guidance documents that address specific and vitally important aspects of the complex intervention research process and specific aspects of research design, conduct and reporting. We encourage researchers to consult these sources, which provide more detail than we were able to here. We have provided more emphasis and detail in areas of change and novelty introduced in this edition. However, in many of these areas there is an urgent need for further methods development and guidance for their application and reporting in complex health intervention research. These include more formal methods to quantify or consider uncertainty, for example decision-modelling approaches, Bayesian approaches, uncertainty quantification or more qualitative soft systems methodologies, and methods suited to a systems perspective including simulation approaches and qualitative comparative analysis methods.

Recommendations

The recommendations of this work are given in Chapter 2. At the end of each research phase section (see Chapter 2, Phases of research) we include a table of elements that we recommend should be considered at that phase. The overall recommendation, therefore, is that people use the tables at the end of each phase when developing research questions and use the checklist in Appendix 6 as a tool to record where/how the recommendations have been followed.

Monitoring the use of this framework and evaluating its acceptability and impact is warranted: this has been lacking in the past. We encourage research funders and journal editors to support the diversity of research perspectives and methods that are advocated and to seek evidence that the key considerations are attended to in research design and conduct. The use of the checklist that we provide to support the preparation of funding applications, research protocols and journal publications (see Appendix 6) offers one way to monitor impact of the framework on researchers, funders and journal editors. Further refinement of the checklist is likely to be helpful.

We recommend that future updates of this framework continue to adopt a broad, pluralist perspective. Given the widening scope and the rich, diverse and constantly evolving body of detailed guidance that is now available on specific methods and topics, the framework will be most useful as a high-level document with signposting, published in a fluid, web-based format, which will ideally be frequently updated to incorporate new material, both through updates to the text and case studies and through the addition of new links to updated and emerging key resources.

Chapter 2 The new framework

Introduction

Aims of the framework

The framework aims to improve the design and conduct of complex intervention research to increase its utility, efficiency and impact. Consistent with the principles of increasing the value of research and minimising research waste,22 the framework (1) emphasises the use of diverse research perspectives and the inclusion of research users, clinicians, patients and the public in research teams, and (2) aims to help research teams prioritise research questions and choose and implement appropriate methods.

Structure of the framework

The framework is presented as follows:

- In What is a ‘complex intervention’?, we provide an updated definition of ‘complex intervention’ informed by a broader understanding of complexity, and introduce how different research perspectives can be employed in complex intervention research.

- In Framework for the main phases and core elements of complex intervention research, we set out the revised framework for developing and evaluating complex interventions and provide an overview of the key core elements that we recommend are considered repeatedly throughout the phases of complex intervention research.

- In Phases of research, we go into further detail about each of the key phases in complex intervention research.

- In Case studies, we present illustrative case studies to help exemplify aspects of the framework using a variety of study designs, from a range of disciplines, undertaken in a range of settings.

How to use the framework

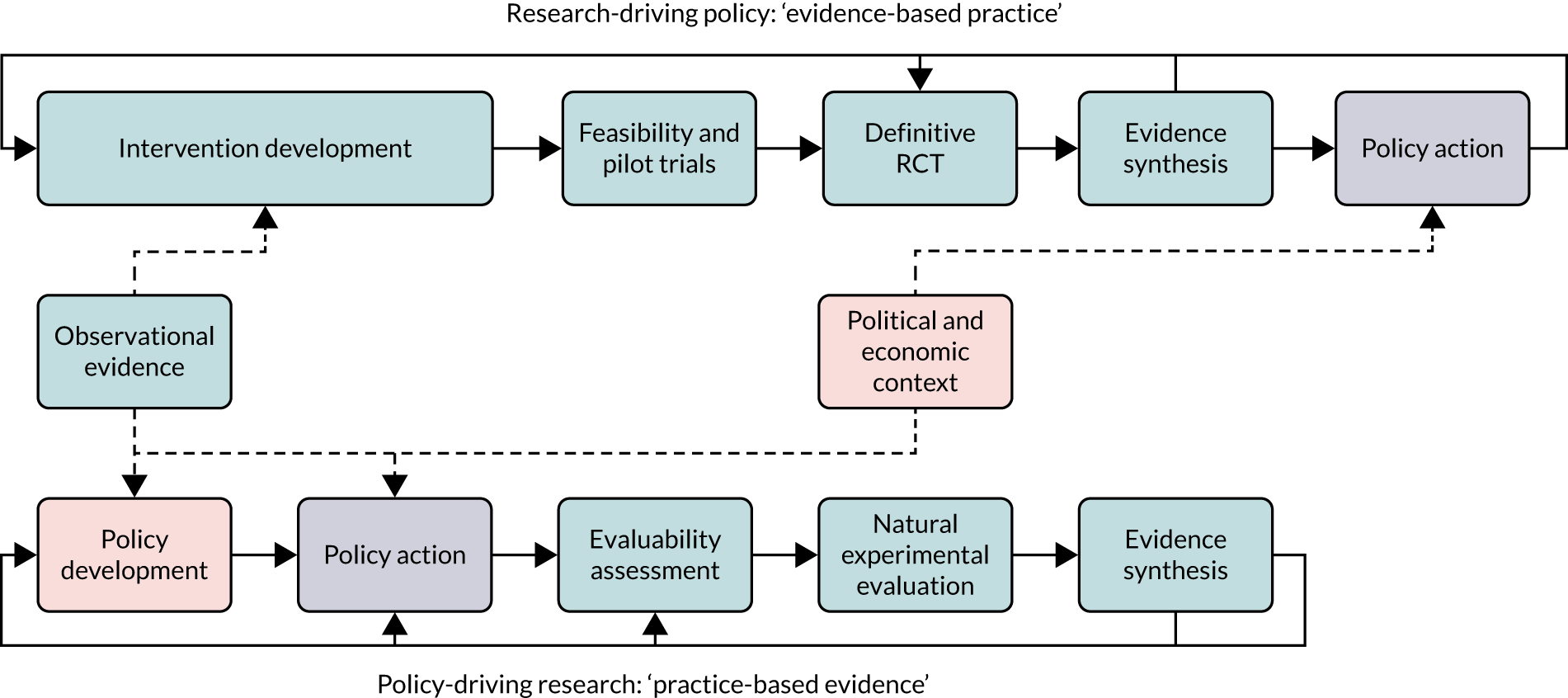

In the 2000² and 2006¹ versions of this document, there was a key explanatory diagram in which complex intervention research was divided into a number of key phases. In this edition, we have developed a revised explanatory diagram (see Figure 3). This does not offer a linear or even cyclical pathway through the phases of development and evaluation of interventions, but emphasises that at any phase key core elements should be considered to guide decisions as to whether or not the research should proceed to the next phase, return to a previous phase, repeat a phase or be aborted. The framework encourages teams to consider the research perspective(s) most suited to the research challenge that they are aiming to address and to use the six core elements to guide the choices that they make at each research phase. A programme of intervention research may begin at any one of the four phases, depending on the key uncertainties that are associated with the intervention and taking into account existing evidence and/or experience. Users can, therefore, choose which sections of the recommendations are most appropriate to their own research plans. To aid navigation through the document, we provide extensive hyperlinks to cross-references between sections. Throughout the document, we refer to existing detailed guidance and provide key points and signposts to further reading. At the end of the sections on each phase of research (see Tables 4–8) we include a table that lists the core considerations relevant to that phase, which we recommend using as a tool in research planning.

Given that this is a pragmatic framework aimed at an audience from multiple disciplines, we have taken a pluralist approach. Terminology that is related to the study of complex interventions is not used consistently across disciplines; our use of terms is detailed in the Glossary. Note that we focus on evaluation rather than ex ante appraisal,23 and specifically on evaluations that contribute to the scientific evidence base, rather than those that are conducted primarily for monitoring and accountability purposes.

Previous guidance has focused on statistical and qualitative considerations; we refer to that guidance and highlight the importance of these considerations throughout the research process. Economic issues in the context of developing and evaluating complex interventions have been given less attention; however, they are also important from the early phases of research in relation to intervention development, evaluation and future implementation. We, therefore, provide more detail in this section of the framework.

We focus on primary research but note that other types of research, in particular evidence synthesis, must consider how to approach complex interventions as well. In Appendix 5, we provide some brief points and signposts to further reading on approaching complexity for producers and users of evidence syntheses. In Appendix 6, we provide a checklist to support and document the use of this framework in the preparation of funding applications and journal articles.

What is a ‘complex intervention’?

Few interventions are truly simple. Complexity arises from the properties of the intervention itself, the context in which an intervention is delivered and the interaction between the two. 8,24,25

Sources of complexity

Complexity owing to characteristics of the intervention

Parts of this text have been reproduced with permission from Skivington et al. 26 This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: https://creativecommons.org/licenses/by/4.0/. The text below includes minor additions and formatting changes to the original text.

Interventions become more complex as the following increase:1

- number of intervention components and the interactions between them

- range of behaviours, expertise and skills (e.g. particular techniques and communication) required by those delivering or receiving the intervention

- number of groups, organisational levels or settings that are targeted by the intervention

- level of flexibility or tailoring of the intervention or its components that is permitted (i.e. how dynamic or adaptive the intervention is).

For example, the Links Worker Programme27 was an intervention in primary care in Glasgow, Scotland, that aimed to link people with community resources that could help them to ‘live well’ in their communities. It targeted individual, primary care [general practitioner (GP) surgery] and community levels; the intervention was flexible in that it could differ between primary care GP surgeries. In addition, there was no single health or well-being issue that the Link Workers specifically supported: bereavement, substance use, employment and learning difficulties were all things that could be included. 27 The inherent complexity of this intervention had implications for many aspects of its evaluation, such as the choice of appropriate outcomes.

Complexity arising from context

. . . any feature of the circumstances in which an intervention is conceived, developed, implemented and evaluated.

Examples of features include social, political, economic and geographical contexts. Whether or not and how an intervention generates outcomes can be dependent on a wide range of contextual factors. These contextual factors will be intervention specific and may be difficult to anticipate. An example of an intervention that may seem simple, until context is considered, is the ‘Lucky Iron Fish’ (Box 1). 10

A small fish-shaped iron ingot placed in a pot while cooking or boiling drinking water. The ingots have been shown to be an effective way of reducing iron deficiency anaemia in women in some communities in rural Cambodia. 28 The intervention was carefully developed to be effective and sustainable in this setting and population. The ingots could be produced locally, at lower cost than conventional nutritional supplements, and making them fish-shaped encouraged uptake because fish are considered to be lucky by Cambodian villagers. Even so, an earlier trial found that short-term improvements in iron status were not sustained, because seasonal changes in water supply reduced the dietary availability of iron from the cooking water. 29 A subsequent trial in a different region of Cambodia also found little benefit, because anaemia in this region was primarily due to inherited problems with haemoglobin production, rather than dietary iron deficiency. 30

Example taken from Taking Account of Context in Population Health Intervention Research: Guidance for Producers, Users and Funders of Research. 10

Reproduced with permission from Craig et al. 10 Contains information licensed under the Non-Commercial Government Licence v2.0.

It is important to consider what features of context may be important in determining how an intervention achieves outcomes and under what circumstances an intervention may be more or less effective. This is important for all phases, that is for developing a new intervention, adapting or translating an existing intervention to a new context, evaluation and implementation.

Interventions as ‘events in systems’

a set of things that are interconnected in such a way that they produce their own pattern of behaviour over time.

Meadows and Wright31

Systems thinking can help us to understand the interaction between an intervention and the context in which the intervention is implemented in a more dynamic way. Systems can be thought of as complex and adaptive,32 in that they are defined by system-level properties, such as feedback, emergence, adaptation and self-organisation (Table 2). We can theorise interventions as ‘events in systems’24 and can conceive an intervention’s outcomes as being generated through the interdependence of the intervention and a dynamic system context.

| Properties of complex adaptive systems | Example |
|---|---|
| Emergence | |
| Complex systems have emergent properties that are a feature of the system as a whole. Emergent properties are often unanticipated, arising without intention | Group-based interventions that target at-risk young people may be undermined by the emergence of new social relationships among the group that increase exposure to and reinforce risk behaviours, while reducing their contact with mainstream youth culture where risk-taking is less tolerated33 |
| | Enhanced recovery pathways are introduced to optimise early discharge and improve patient outcomes. They involve changes across pre-operative care where patient expectations are managed; changes in theatre by minimising the length of surgical incisions and the use of surgical drains; and changes in postoperative care on surgical wards (e.g. the use of physiotherapy). As enhanced recovery pathways are introduced for specific patients it influences management of other patients; thus, the whole culture of surgical practice within a hospital changes towards ‘enhanced recovery’ |
| Feedback | |
| Where one change reinforces, promotes, balances or diminishes another | A smoking ban in public places reduces the visibility and convenience of smoking. Fewer young people start smoking because of its reduced appeal, thus further reducing its visibility and so on in a reinforcing loop7 |
| Adaptation | |
| A change of system behaviour in response to an intervention | Retailers adapted to the ban on multibuy discounts for alcohol by placing discounts on individual alcohol products, offering them at the same price individually as they would have been if part of a multibuy offer34 |
| Self-organisation | |
| Organisation and order achieved as the product of spontaneous local interaction rather than of a preconceived plan or external control | Individually focused treatment for people who misuse alcohol did not address some social aspects of alcohol dependency; as a result, recovery groups were self-organised in a collective effort and Alcoholics Anonymous (New York, NY, USA) was formed |

Change in complex systems may be unpredictable. In a social system, people interact with each other and other parts of the system in non-linear and interconnected ways so that the actions of one person alter the context for others. 35 For example, removing hospital car-parking charges has clear beneficiaries. However, by encouraging people to drive, the policy may reduce demand for public transport, leading to a reduction or withdrawal of services. The net effect may be to reduce accessibility to those without a car. Demand for car parking will increase, possibly beyond capacity, and alternative options will have diminished. What might appear at first sight to be a positive intervention may have adverse effects on health and serve to widen inequalities.

- For a more detailed accessible introduction to applying systems thinking to public health evaluation, see Egan et al. 13,14

- The Health Foundation’s overarching description and evidence scan. 32

- Understanding complexity in health systems: international perspectives. A series of academic papers on the topic. 8

- The Magenta Book36 is from the UK Government and provides guidance on the evaluation of government interventions, with recommendations for the planning, conduct and management of the evaluation. It provides relevant guidance on complex systems-informed evaluation and a supplementary guide that specifically focuses on handling complexity in policy evaluation. This highlights the challenges of complexity to policy evaluation and the importance of its consideration in commissioning and managing interventions, including guidance on the approaches available to support such projects. 36

- A report published by the Centre for Complexity Across the Nexus and commissioned by the Department for Environment, Food and Rural Affairs provides a framework for evaluation, specifically to support evaluations of government policy to consider the implications of complexity theory. 37

Complexity and the research perspective

We aim to encourage wider awareness, understanding and use of ‘complexity-informed’ research,8 by which we mean research that gives sufficient and appropriate consideration to all of the sources of complexity outlined in the previous section. There are several overlapping perspectives that can be employed in complex intervention research (Box 2), each associated with different types of research questions. Examples of complex intervention studies taking different research perspectives are given at the end of this subsection (see Box 4).

Research perspectives that are used in the development and evaluation of interventions are best not thought of as mutually exclusive. The types of questions that these perspectives can be used to answer include:

- Efficacy perspective: to what extent does the intervention produce the intended outcome(s) in experimental or ideal settings?

- Effectiveness perspective: to what extent does the intervention produce the intended outcome(s) in real-world settings?

- Theory-based perspectives: what works in which circumstances and how?

- Systems perspective: how do the system and intervention adapt to one another?

Adapted with permission from Skivington et al. 26 This is an Open Access article distributed in accordance with the terms of the Creative Commons Attribution (CC BY 4.0) license, which permits others to distribute, remix, adapt and build upon this work, for commercial use, provided the original work is properly cited. See: https://creativecommons.org/licenses/by/4.0/. The box includes minor additions and formatting changes to the original box.

Efficacy perspective

Research taking an efficacy or effectiveness perspective is principally concerned with obtaining unbiased estimates of the average effect of interventions on predetermined outcomes. Studies from an efficacy perspective aim to test hypotheses about the mechanisms of action of interventions. 38 Such research aims for high internal validity, taking an explanatory approach to test causal hypotheses about the outcome(s) generated by the intervention. This contrasts with effectiveness studies, which take a more pragmatic approach. The PRagmatic Explanatory Continuum Indicator Summary (PRECIS)-2 tool39 identifies nine domains on which study design decisions may vary according to the perspective or approach. Few studies exhibit all of the characteristics of a purely efficacy or a purely effectiveness perspective; there is not necessarily a dichotomy between efficacy and effectiveness studies, rather a continuum.

Studies from an efficacy perspective typically evaluate interventions in idealised, controlled conditions, among a homogeneous group of highly selected participants and using a proximal outcome such as disease activity. The intervention is delivered in a standardised manner by highly trained practitioners, with high fidelity to a protocol or manual and without the flexibility and variability that are likely to occur in real-world practice. Evidence from an efficacy study indicates whether or not an intervention can work in idealised conditions. Typically, efficacy studies control for contextual variation, so do not usually help to illuminate context dependence. An efficacy perspective could be taken in the development, feasibility and evaluation phases of intervention research. However, a finding that an intervention is efficacious would not in itself provide sufficient evidence for implementation. For example, testing whether or not a vaccine is efficacious in preventing infection under optimal conditions would be undertaken prior to developing a delivery programme, which would then need to be tested for effectiveness in practice. Another example is testing a psychosocial intervention for efficacy in optimal conditions, using experienced practitioners and carefully selected patients, as a precursor to a further research cycle to refine and test the intervention for effectiveness in real-world settings.

Effectiveness perspective

Research from an effectiveness perspective seeks to answer pragmatic questions about the effects that are produced by interventions in routine practice to directly inform the choice between intervention options. 38 Effectiveness studies aim to test an intervention in samples and settings representative of those in which the intervention would be implemented in everyday practice, usually with a health or health service outcome. Flexibility in intervention delivery and adherence may be permitted to allow for variation in how, where and by whom interventions are delivered and received. Standardisation of interventions may relate more to the underlying process and functions of the intervention than to the specific form of components delivered. 40 For example, the INCLUSIVE trial 41 assessed the effectiveness of the Learning Together programme of restorative justice to reduce bullying and aggression in schools. Although key intervention inputs were provided to all intervention schools with the aim that key functions were delivered to trigger the theorised mechanisms of change, each school was encouraged to ensure that the form of local implementation was appropriate for its students and context, with scope for locally decided actions. 41

Theory-based perspective

The primary aim of adopting a theory-based perspective is to provide evidence on the processes through which interventions lead to change in outcomes and what prerequisites may be required for this change to take place, thus exploring how and why they bring about change. This differs from developing or evaluating interventions using an effectiveness perspective, which focuses on identifying whether or not they ‘work’ based primarily on average estimates of effect. It also differs from an efficacy perspective, as theory-based perspectives explore interventions in practice, taking account of context, and often explore more than one theoretical account of how the intervention may work. Such approaches to evaluation aim to broaden the scope of the evaluation to understand how an intervention works and how this may vary across different contexts or for different individuals. 42 In research taking a theory-based perspective, interventions are developed and evaluated through a continuous process of developing, testing and updating programme theory. Research from this perspective can generate an understanding of how mechanisms and context interact, providing evidence that can be applied in other contexts. For example, there are numerous mechanisms by which group-based weight loss interventions may bring about behaviour change. Change may be motivated by participants’ relationship with the facilitator or by interaction and sharing of experiences among members of the group, as well as by the specific content of the intervention. Whether and how such mechanisms generate outcomes will depend on the context in which the intervention is being applied. 43 Thus, the impacts of interventions cannot confidently be determined in the absence of knowledge of the context in which they have been implemented. 44

There are several approaches that take a theory-based perspective (Box 3 shows a selection of examples). They are ‘methods neutral’ in the sense that they draw on both quantitative and qualitative study designs to test and refine programme theories.

This approach to evaluation was developed in the 1980s; it aims to move beyond ‘just getting the facts—to include the myriad human, political, social, cultural and contextual elements that are involved’. 45 To do this, the involvement of stakeholders and their ‘claims, concerns and issues’45 are essential.

Theory of change approach 46,47: ‘A systematic and cumulative study of the links between activities, outcomes, and contexts of the initiative’. 46 This involves developing ‘plausible, doable, and testable’ 46 programme theories in collaboration with stakeholders to determine the intended outcomes of the intervention, the activities required to achieve those outcomes and the potential influence of contextual factors. The programme theory determines which outcome and interim measures should be collected in evaluation and which contextual factors should be considered. If activities, context and outcomes occur as expected in the prespecified theory of change, then the outcomes can be attributed to the intervention.

Realist evaluation 48: Realist evaluation sets out to answer ‘what works in which circumstances and for whom?’. 48 The important aspect of realist evaluation is that the intervention’s explanatory components – context(s), mechanisms and outcomes – are identified, articulated, tested and refined. The development of context–mechanism–outcome configurations provides plausible explanations for the observed patterns of outcomes, and a key purpose is to test and refine programme theory based on the evaluation findings.

Systems perspective

A systems perspective suggests that interventions can be better understood with an examination of the system(s) in which they are embedded or the systems that they set out to change. A systems perspective treats interventions as events within, or disruptions to, systems. 24 The properties of a system cannot be fully explained by understanding only each of the system’s individual parts. 49 This perspective is concerned with an awareness and understanding of the whole system:

The essential point is that the theory driving the intervention is about the dynamics of the context or system, not the psyche or attributes of the individuals within it.

Hawe et al. 24

Key to a systems perspective is considering the relationships between intervention and context, engaging with multiple perspectives, and identifying and reflecting on system boundaries. 50 A systems perspective encourages researchers to consider how the intervention may be influenced by and impact on many elements of the system, and over an extended period of time. Rather than focusing on a narrow and predetermined set of individuals and outcomes within a fixed time period, a systems perspective will aim to consider multiple ways by which an intervention may contribute to system change. These may occur through multiple, often indirect, routes over an extended time period and through spillover and diffusion processes, some of which may be unintended. It is not usually possible to take account of a whole, often open, system; therefore, to make an evaluation tractable it will be necessary to limit its scope by determining a system boundary and restricting the range of potential mechanisms or explanations that are investigated.

Summary

Interventions rarely achieve effects evenly across individuals, populations and contexts. For complex intervention research to be useful to decision-makers, it needs to take into account the complexity that arises both from the intervention’s components and from the intervention’s interaction with context. Intervention effects can depend on, influence or change contexts, and this interdependence can vary over time. Complex intervention research should be conducted with an awareness of these multiple potential sources of complexity, with the design of any specific research study adopting the research perspective that is most suited to the research question that it aims to address (see Box 4 for examples). The preponderance of complex health intervention research to date has taken an efficacy or effectiveness perspective, and there will continue to be many situations in which the research questions best suited to these perspectives are prioritised. However, for many interventions, the most critical research questions and the needs of decision-makers who use research evidence are not met by research that is restricted to questions of efficacy and effectiveness; therefore, a wider range of research perspectives and methods needs to be considered and used by researchers, and supported by funders. This may particularly be the case in health service delivery and public health research, in which organisational-level and population-level interventions and outcomes are research priorities that are not well served by traditional methods. 6,7 Key questions for evaluation include identifying generalisable determinants of beneficial outcomes and assessing how an intervention contributes to reshaping a system in favourable ways, rather than the dominant focus on the binary question of effectiveness in terms of individual-level outcomes.
In the next section, we set out the revised framework that aims to help research teams consider the research perspective(s) most suited to the research challenge that they are aiming to address, to prioritise research questions, and choose and implement appropriate methods.

Efficacy perspective: Initial trials of nicotine replacement therapy (NRT) for smoking cessation focused on establishing the efficacy of different forms of NRT. One example is nasal nicotine spray, for which the efficacy study included a highly selected group of participants (patients attending a smokers’ clinic). The study suggested that the intervention was efficacious but that it would require further testing for generalisability to other smokers and settings, and comparison with other forms of NRT. 51

Effectiveness perspective: Two school smoking education programmes were evaluated and found to be efficacious in terms of delaying the onset and reducing the uptake of smoking in young people, and were subsequently evaluated for effectiveness under normal classroom conditions using a cluster RCT. 52 The research found no significant differences in uptake of smoking between intervention and control groups. The authors suggest that the experimental conditions in which the interventions were originally tested may be associated with success, in contrast to this study where the programmes were taught under typical classroom conditions, by usual classroom teachers. It was recommended that further work be carried out to develop interventions that are effective in practice and that it is important to formally ‘field test’ under usual conditions before widespread dissemination.

Theory-based perspective: Although there has been promising evidence about smoking cessation programmes, they do not necessarily work for everyone in every context. Further investigation using a theory-based approach is, therefore, appropriate to provide a better understanding of how such interventions work, for whom and why. 53 An example is research that explores the perspectives of smoking and non-smoking pregnant women with regard to smoking in pregnancy and relates these to anti-smoking interventions, identifying why standard cessation efforts may not be successful for some women. For example, rather than pregnant smokers being ignorant of the facts of smoking in pregnancy (the key issue that mass-media interventions target), they may be aware of the facts but do not place credibility in them in the same way as non-smoking pregnant women do, instead favouring information from family and friends. 54