Notes

Article history

The research reported in this issue of the journal was funded by the HTA programme as project number 17/93/06. The contractual start date was in September 2018. The draft report began editorial review in June 2020 and was accepted for publication in January 2021. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ report and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this report.

Permissions

Copyright statement

Copyright © 2022 Gega et al. This work was produced by Gega et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaption in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.


Chapter 1 Background

Mental health

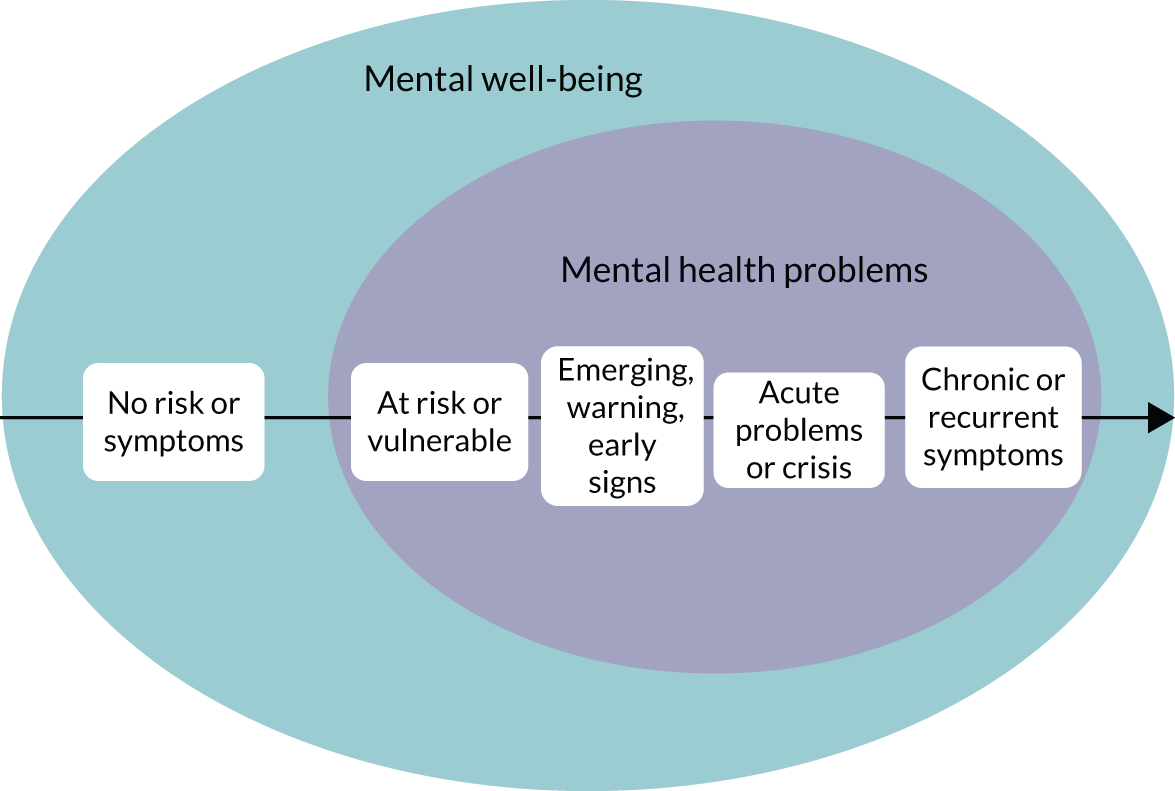

We can begin to understand mental health with two related, yet independent, concepts. Mental well-being (Figure 1, blue circle) refers to our sense of self, our ability to meet our potential and develop relationships, and our ability to do things that we consider important and worthwhile. Mental health problems (see Figure 1, purple circle) refer to the presence of specific signs and symptoms that indicate a diagnosable condition that affects our emotional state, physical function, behaviour and thinking.

FIGURE 1.

Mental well-being vs. mental health problems.

The diagnosis of mental health problems is based on specific signs and symptoms that follow the World Health Organization (WHO)’s classification criteria,1 which help clinicians group different types of mental health problems into specific diagnostic categories (Table 1). Clinical guidelines also help to group different problems when they follow similar care pathways, for example the National Institute for Health and Care Excellence (NICE)’s clinical guidelines on common mental health problems.2 Different mental health problems often coexist as comorbidities. In addition, the severity of mental health problems varies along a continuum, not only between individuals but also at different times in the same person; some people are more vulnerable than others, and the same person is more vulnerable at certain times in their life, because of an accumulation of risk factors (e.g. family history of mental illness, abuse or bullying, and poverty). At any particular time, some individuals will be at one end of the continuum, with no symptoms and no risk of any mental health problems, while others will be further along the continuum, experiencing some degree of vulnerability to a specific mental health problem or exhibiting emerging or warning symptoms (see Figure 1, black arrow). Some people will move along the continuum to experience acute symptoms or a crisis, and, of those, some will recover and others will experience lifelong and recurrent problems. This continuum gives useful context to our work, as we explore research studies in mental health not only across different clinical populations, but also at different stages of the continuum within a specific clinical population, represented by subthreshold, mild, moderate or severe states.

| Classification group | Examples of conditions |
|---|---|
| Schizophrenia/psychosis | Schizophrenia, schizoaffective disorder, delusional disorder |
| Mood disorders | Bipolar affective disorder, depressive episodes |
| Anxiety or fear-related disorders | Generalised anxiety disorder, panic disorder, agoraphobia, specific phobia, social anxiety, separation anxiety, selective mutism |
| Obsessive–compulsive or related disorders | Obsessive–compulsive disorder, body dysmorphic disorder, health anxiety, body-focused repetitive behaviour |
| Disorders associated with stress | Post-traumatic stress disorder, prolonged grief disorder, adjustment disorder |
| Feeding or eating disorders | Anorexia, bulimia, binge eating disorder, avoidant–restrictive food intake, pica |
| Disorders of bodily distress or bodily experience | Bodily distress disorder, body integrity dysphoria |
| Disorders due to substance use or addictive behaviours | Disorders due to substance use: alcohol, drugs, sedatives, hypnotics or anxiolytics, caffeine, nicotine, other psychoactive and non-psychoactive substances; disorders due to addictive behaviours: gambling disorder, gaming disorder |
| Impulse control disorders | Pyromania, kleptomania, compulsive sexual behaviour |
| Personality disorders and related traits | Personality disorder, prominent personality traits or patterns |
| Mental or behavioural disorders associated with pregnancy, childbirth and the puerperium | Post-natal depression, post-natal psychosis |

So, what is the difference, and the relationship, between mental well-being and mental health problems? Although diagnosable mental health problems are a risk factor for poor mental well-being, a diagnosis does not necessarily lead to poor mental well-being; many people with diagnosable mental health problems flourish and maintain strong mental well-being. Conversely, the absence of a diagnosable mental health problem does not guarantee strong mental well-being, as people without a diagnosis can report poor mental well-being. This distinction delineates the scope of this work, which concerns emerging or existing diagnosable mental health problems rather than general mental well-being.

Interventions in mental health

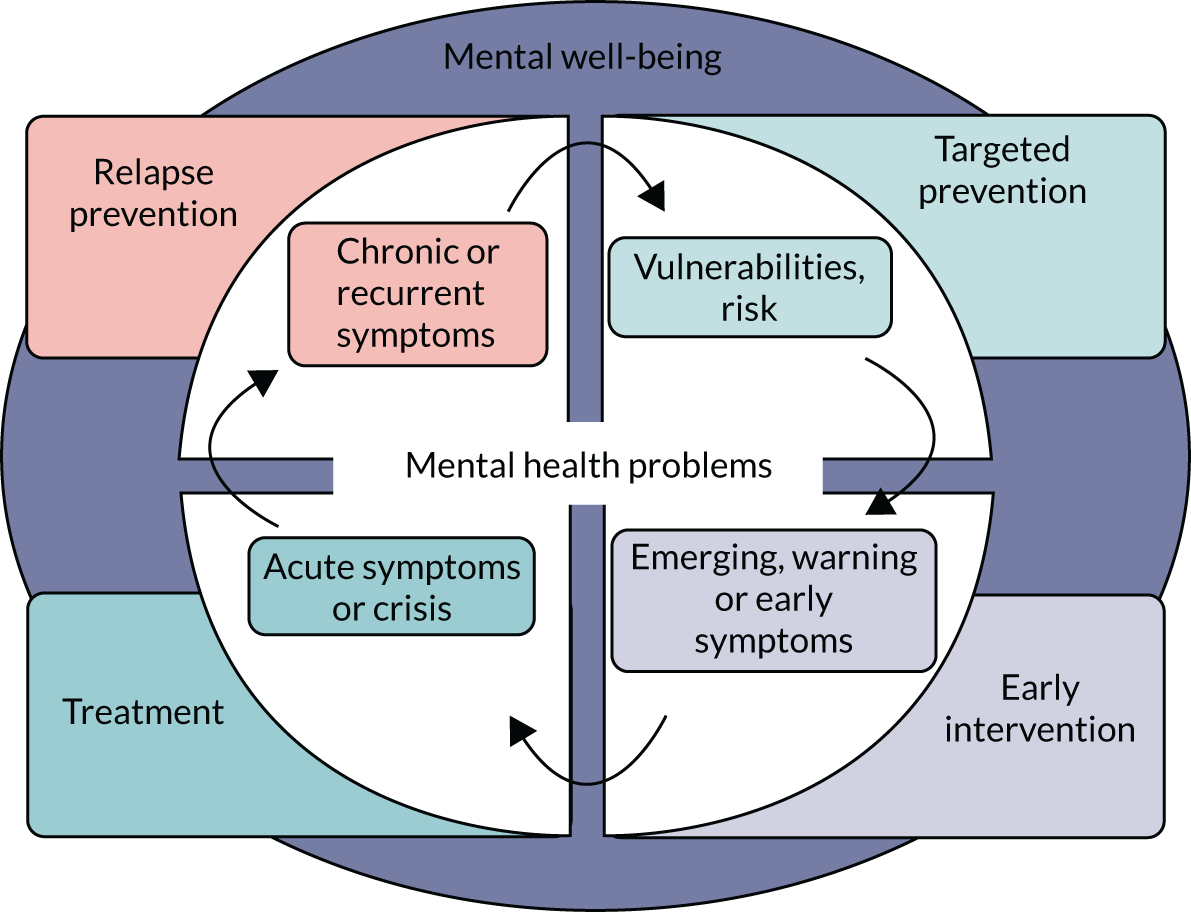

Interventions to promote or improve mental health can be mapped onto the two concepts of mental health – mental well-being and mental health problems – and across the four key points of the continuum in Figure 1 (at risk, emerging, acute problems, chronic problems). Interventions to support and improve mental well-being (Figure 2, the purple circle that sits in the background) are the foundation of good mental health for any population. These include having an active lifestyle, eating and sleeping well, safeguarding people from abuse and bullying, providing good education, reducing poverty, ensuring equality and justice, and having meaningful relationships. Such activities are, however, beyond the scope of this work, unless they are specifically developed, implemented and evaluated in the context of preventing or improving emerging or existing mental health problems.

FIGURE 2.

Interventions to prevent or improve mental health problems.

Typically, interventions within the scope of our work, that is, interventions that aim to prevent or improve emerging or existing mental health problems, are either pharmacological (i.e. prescribed psychiatric medication indicated for a specific diagnosis) or non-pharmacological (i.e. psychological therapies, social interventions, physical and occupational therapies, behavioural interventions). The aims of these interventions, as shown in Figure 1, are to:

- reduce the likelihood of occurrence of future mental illness among those at risk (targeted prevention)
- reduce emerging and early symptoms before these manifest as a diagnosable illness (early intervention)
- improve acute symptoms and manage crisis (treatment)
- improve and manage chronic symptoms to minimise the likelihood of recurrence (relapse prevention).

Our project focused on the mental health outcomes associated with an intervention, namely reductions in the incidence of mental health problems and in the severity of their clinical symptoms. Mental health interventions may also instigate behaviour change, improve physical health outcomes and have a positive impact on proxy indicators and factors associated with mental well-being (e.g. employment and poverty). These are important outcomes but beyond the scope of this project.

Digital interventions

Digital interventions (DIs) use software programs that are accessed via computers, tablets, smartphones, audio-visual and virtual reality (VR) equipment, gaming consoles, robots and other devices to deliver interventions that aim to prevent or improve mental health problems, including depression, anxiety disorders, addictive behaviours and eating disorders.3 DIs collect, store and retrieve clinical information; deliver standardised instructions via text, voice files or video clips; and guide users in the application of therapeutic activities. DIs vary in their levels of standardisation, self-help and clinician involvement; some are entirely self-administered by service users, whereas others are completely reliant on a clinician/therapist.

Digital interventions are often standardised, automated, user-directed psychological therapies that use technology to help users work through a therapeutic activity either independently of, or alongside, a clinician or therapist. One common therapy that features heavily in DIs, because of its structured approach, is cognitive–behavioural therapy (CBT). CBT treats a physical or mental health problem by identifying and changing certain beliefs and behaviours that maintain the problem. CBT places emphasis on activities completed by users outside therapy sessions, commonly referred to as ‘homework’, which fits well with the self-directed nature of DIs.

An example of a DI is an internet-based self-help programme evaluated by Christensen et al.4 The intervention was a 10-week structured therapy consisting of psychoeducation (weeks 1 and 2), CBT (weeks 3–7), relaxation (weeks 8 and 9) and physical activity (week 10). The psychoeducation section provided information on worry, stress, fear and anxiety; how to differentiate between types of anxiety disorders; risk factors for anxiety; comorbidity; consequences of anxiety; and available treatments. The CBT toolkit addressed typical anxious thoughts and included sections on the purpose and meaning of worry, the act of worrying and the content of worry. The relaxation modules taught participants how to progressively tense and relax different muscle groups to induce relaxation, and how to become aware of their breathing and body, acknowledging thoughts and external distractions while remaining focused on the present. The physical activity module gave tailored advice depending on the participant’s level of motivation and ability.

Another example of a DI is a mobile app (application) in a study by Pham et al.,5 which engaged users in a series of minigames to learn and practise diaphragmatic breathing to alleviate symptoms of anxiety, in line with NHS protocols and evidence-based literature. The minigames had various themes, from sailing a boat down a river to flying balloons into the sky. Users controlled the game mechanics by touching the screen with a finger as they inhaled and lifting it from the screen as they exhaled. A breathing indicator visually represented a full breath: users saw a circle expanding as they inhaled and contracting as they exhaled, which provided a visual guide to a breathing retraining exercise. The goal of each minigame was to follow the breathing indicator correctly to progress in the game narrative; users progressed through levels and achieved goals by breathing correctly and staying calm.

Economic evaluations

Digital interventions are particularly important for mental health care in locations where access to services is limited and face-to-face contact with psychiatrists and psychologists is at a premium. The decision to adopt DIs into a health-care system is, at least in part, informed by an assessment of value for money. There is an assumption that DIs offer ‘good value for money’ because they have the potential to save clinician time and make clinical work more efficient by encouraging patient self-management, allowing remote delivery of interventions, enabling a less specialised workforce to deliver complex interventions, enhancing outcomes for the same level of therapeutic input and reducing waiting lists (WLs).

Economic evaluations can provide evidence to support or refute the assumption that DIs are good value for money, by comparing the costs and outcomes of DIs with the costs and outcomes of relevant alternatives. Economic evaluations are often built into clinical evaluations or trials, which compare the outcomes of a new intervention/service with the outcomes of a control (e.g. usual or standard care) over a specific period of time. Randomised controlled trials (RCTs) are considered the ‘gold standard’ of clinical evaluations because differences in the selected outcome measures between groups are likely to be due to the effect of the intervention itself, rather than to chance or other confounding variables (e.g. spontaneous remission of symptoms over time, attention or measurement effects).

Outcomes in economic evaluations are often expressed in terms of quality-adjusted life-years (QALYs), which are generated by multiplying years of life by the utility scores associated with the specific health states experienced by the person. Costs are calculated by multiplying the resources used (resource utilisation) over an appropriate time horizon by the price attached to each unit of that resource (unit cost). The costs can include direct costs (e.g. for medication, therapies, social services and transportation), indirect costs (e.g. productivity loss due to time off work and criminal justice expenditure) and intangible costs (e.g. impaired quality of life and the distress of living with pain).
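The two calculations above can be illustrated with a minimal Python sketch: QALYs are summed as years spent in each health state multiplied by that state's utility, and costs as units of each resource used multiplied by its unit cost. The health states, utilities, resources and prices are hypothetical placeholders rather than figures from this report, and discounting is ignored for simplicity.

```python
from dataclasses import dataclass


@dataclass
class HealthStatePeriod:
    """Time spent in one health state and its utility (0 = dead, 1 = full health)."""
    years: float
    utility: float


@dataclass
class ResourceUse:
    """Quantity of a resource used and the price of one unit of it."""
    units: float
    unit_cost: float


def qalys(periods: list[HealthStatePeriod]) -> float:
    # QALYs = sum over health states of (years in state x utility of state)
    return sum(p.years * p.utility for p in periods)


def total_cost(resources: list[ResourceUse]) -> float:
    # Cost = sum over resources of (resource utilisation x unit cost)
    return sum(r.units * r.unit_cost for r in resources)


# Hypothetical: 0.5 years with moderate anxiety, then 1.5 years after recovery
periods = [HealthStatePeriod(years=0.5, utility=0.6), HealthStatePeriod(years=1.5, utility=0.8)]
# Hypothetical: 8 therapist contacts at GBP 40 each and one GBP 100 software licence
resources = [ResourceUse(units=8, unit_cost=40.0), ResourceUse(units=1, unit_cost=100.0)]

print(f"QALYs: {qalys(periods):.2f}")
print(f"Cost: GBP {total_cost(resources):.2f}")
```

Here the person accrues 0.5 x 0.6 + 1.5 x 0.8 = 1.5 QALYs at a cost of 8 x 40 + 100 = GBP 420.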

The type of resources included in the final cost calculation depends on the perspective of the economic evaluation, that is, whose costs and savings we are interested in, such as those of society in general or of the health service in particular. This is important when the intervention is expected to have different impacts on different sectors and stakeholders (e.g. one sector incurs most of the costs and another reaps the benefits of an intervention). The perspective of an economic evaluation can be as narrow as a particular agency or government department (e.g. a ministry of health) or broader, including the statutory/public sector as a whole (e.g. all health and social care services).
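As a minimal sketch of how the perspective changes the cost total, the Python below tags each cost with the sector that bears it and sums only the sectors that a given perspective includes. The sector names, cost items and amounts are hypothetical and are chosen only to show how a narrow perspective can leave out costs borne elsewhere.

```python
# Hypothetical costs per participant (GBP), tagged by the sector that bears them
COSTS = [
    ("health service", "DI licence and therapist support", 300.0),
    ("health service", "GP visits", 120.0),
    ("social care", "social worker contacts", 80.0),
    ("employer", "productivity loss from time off work", 500.0),
]

# Each perspective is defined by the set of sectors whose costs it counts
PERSPECTIVES = {
    "health service": {"health service"},
    "health and social care": {"health service", "social care"},
    "societal": {"health service", "social care", "employer"},
}


def cost_under_perspective(perspective: str) -> float:
    """Sum only the costs borne by sectors included in the given perspective."""
    sectors = PERSPECTIVES[perspective]
    return sum(amount for sector, _item, amount in COSTS if sector in sectors)


for name in PERSPECTIVES:
    print(f"{name}: GBP {cost_under_perspective(name):.2f}")
```

The same intervention costs GBP 420 from the health service perspective but GBP 1000 from the (hypothetical) societal perspective, because the productivity loss is only counted in the broader view.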

There are five common types of economic evaluations that compare costs and outcomes between different interventions: cost minimisation analysis (CMA), cost–consequences analysis (CCA), cost-effectiveness analysis (CEA), cost–utility analysis (CUA) and cost–benefit analysis (CBA). All five types are similar in the way they measure costs, but they differ in the ways they measure health outcomes and combine these with costs to reach decisions about value for money.

Cost-minimisation analysis starts from the premise that two interventions have similar outcomes (in terms of effectiveness and safety) but different costs; however, the lack of a statistically significant difference in outcomes does not mean that the interventions are equivalent.6 A CCA considers all the health and non-health impacts and costs of different interventions across different sectors; it then lists or tabulates these in a disaggregated form for each intervention, without attempting to synthesise the costs and outcomes within and between interventions.

Cost-effectiveness analysis, CUA and CBA are three types of economic evaluation that compare the costs and outcomes of an intervention with the costs and outcomes of its alternatives. Outcomes are measured in their natural units (e.g. symptom-free days, depression score) in a CEA; in units of utility or preference, often as QALYs, in a CUA; and in monetary units in a CBA. In a CEA or CUA, the relative costs and outcomes of an intervention and an alternative are then summarised into one number, known as an incremental cost-effectiveness ratio (ICER), by dividing the difference in costs (incremental cost) by the difference in outcomes (incremental effect); in a CBA, where outcomes are already in monetary units, they are summarised as a net monetary benefit or cost.
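Written out, for a DI compared with an alternative, ICER = (C_DI − C_alt) / (E_DI − E_alt), where C is mean cost and E is mean effect. The Python sketch below computes this from hypothetical mean costs and QALYs per participant (the numbers are illustrative only, not results from this report). It returns None when the incremental effect is zero, because the ratio is then undefined.

```python
from typing import Optional


def icer(cost_new: float, effect_new: float, cost_old: float, effect_old: float) -> Optional[float]:
    """Incremental cost-effectiveness ratio: incremental cost divided by incremental effect."""
    incremental_cost = cost_new - cost_old
    incremental_effect = effect_new - effect_old
    if incremental_effect == 0:
        # The ratio is undefined when both options have identical outcomes
        return None
    return incremental_cost / incremental_effect


# Hypothetical means per participant: a DI vs. usual care, with outcomes in QALYs
ratio = icer(cost_new=900.0, effect_new=1.52, cost_old=600.0, effect_old=1.50)
print(f"ICER: GBP {ratio:,.0f} per QALY gained")
```

An extra GBP 300 for an extra 0.02 QALYs gives an ICER of about GBP 15,000 per QALY gained. With outcomes in natural units (e.g. points on a depression scale) the same function gives a CEA-style ratio instead.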

Table 2 provides an overview of how the five types of economic evaluations differ in terms of outcomes and their synthesis with costs.

| Analysis | Expression of outcomes | Synthesis of costs and outcomes |
|---|---|---|
| CMA | Outcomes are shown to be similar | Only costs are compared |
| CCA | A group of different outcomes expressed in their natural units | Not applicable – costs and outcomes are not combined but presented in separate tables for qualitative comparison |
| CEA | A single condition-specific outcome expressed in its natural units (e.g. points on a depression scale) | ICER: cost per natural unit |
| CUA | Utilities: QALYs or disability-adjusted life-years | ICER: cost per unit of utility (e.g. per QALY gained) |
| CBA | Money | Net monetary benefit or cost |

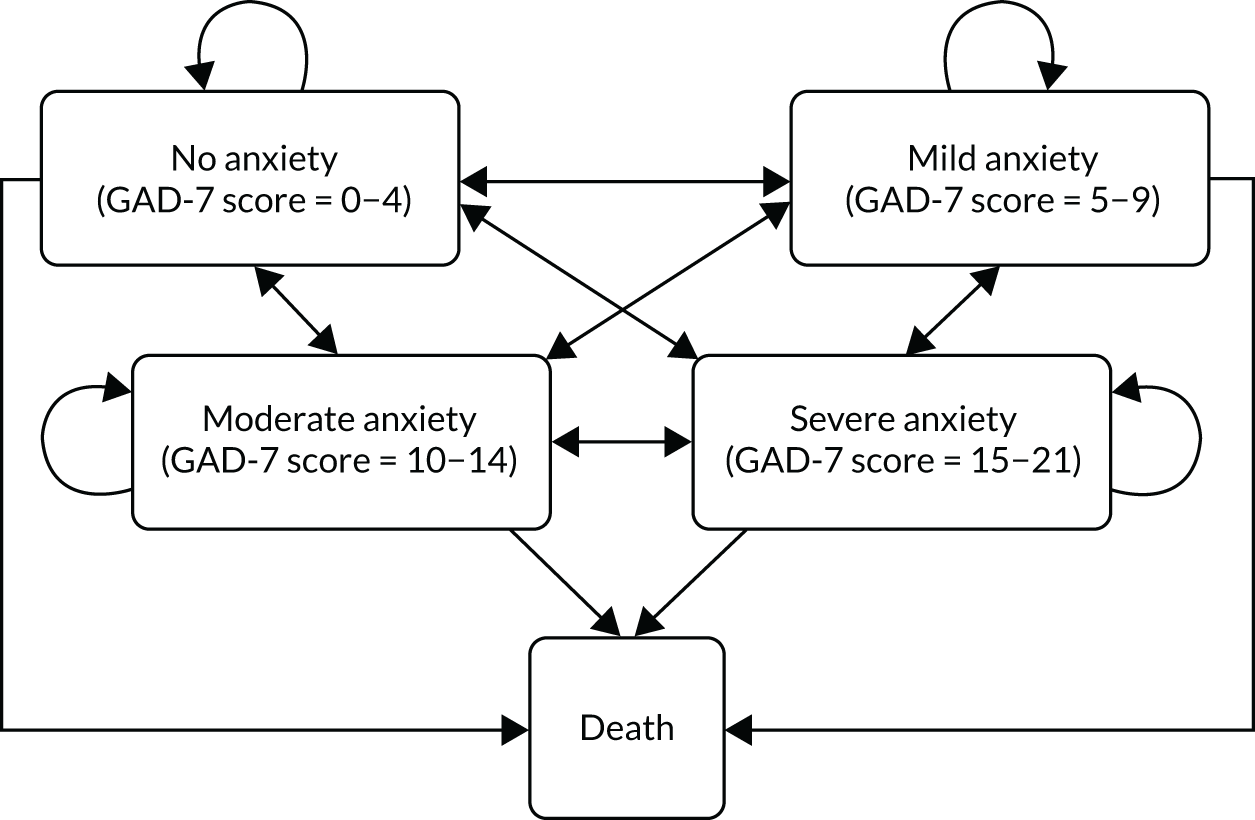

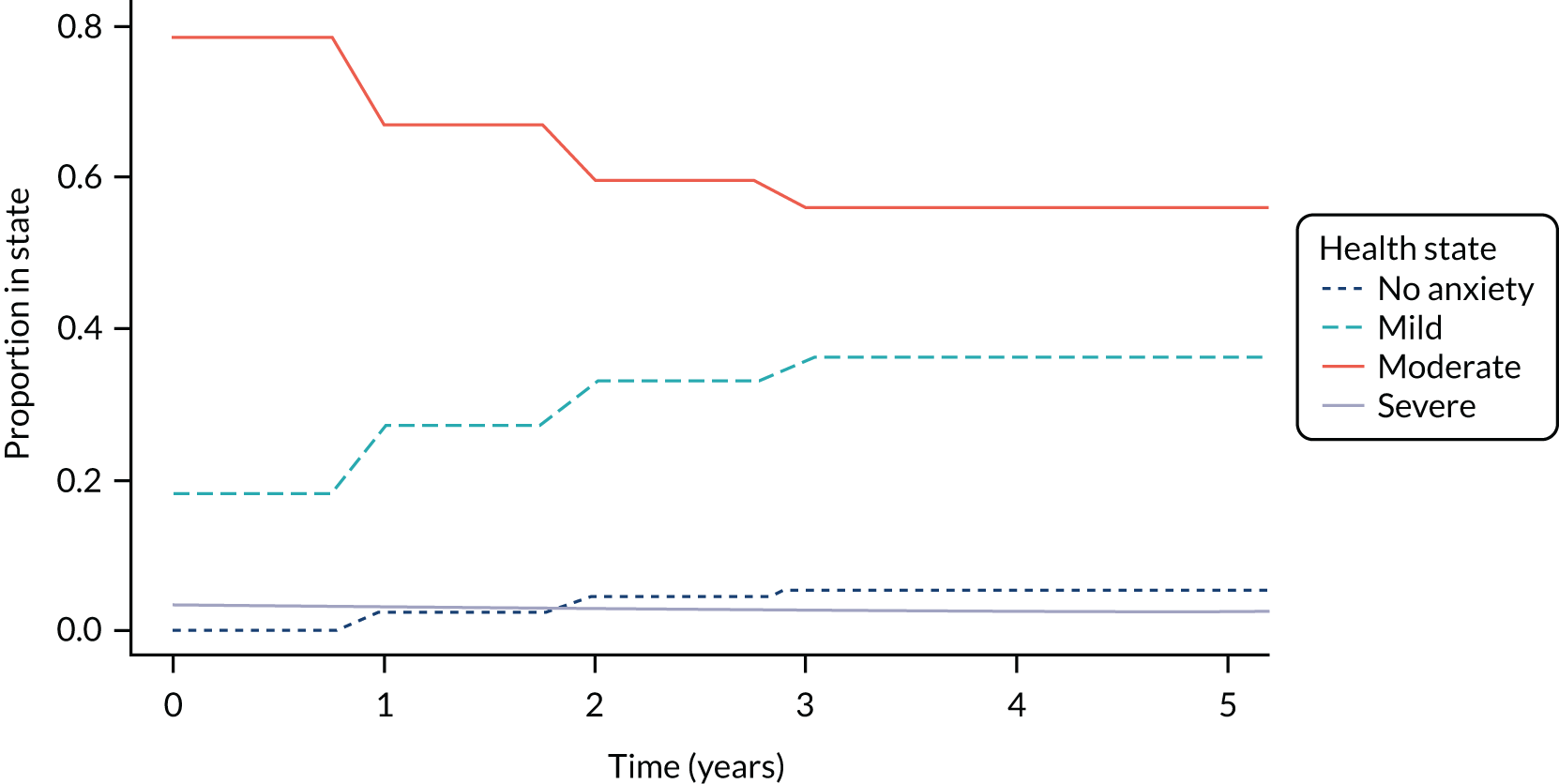

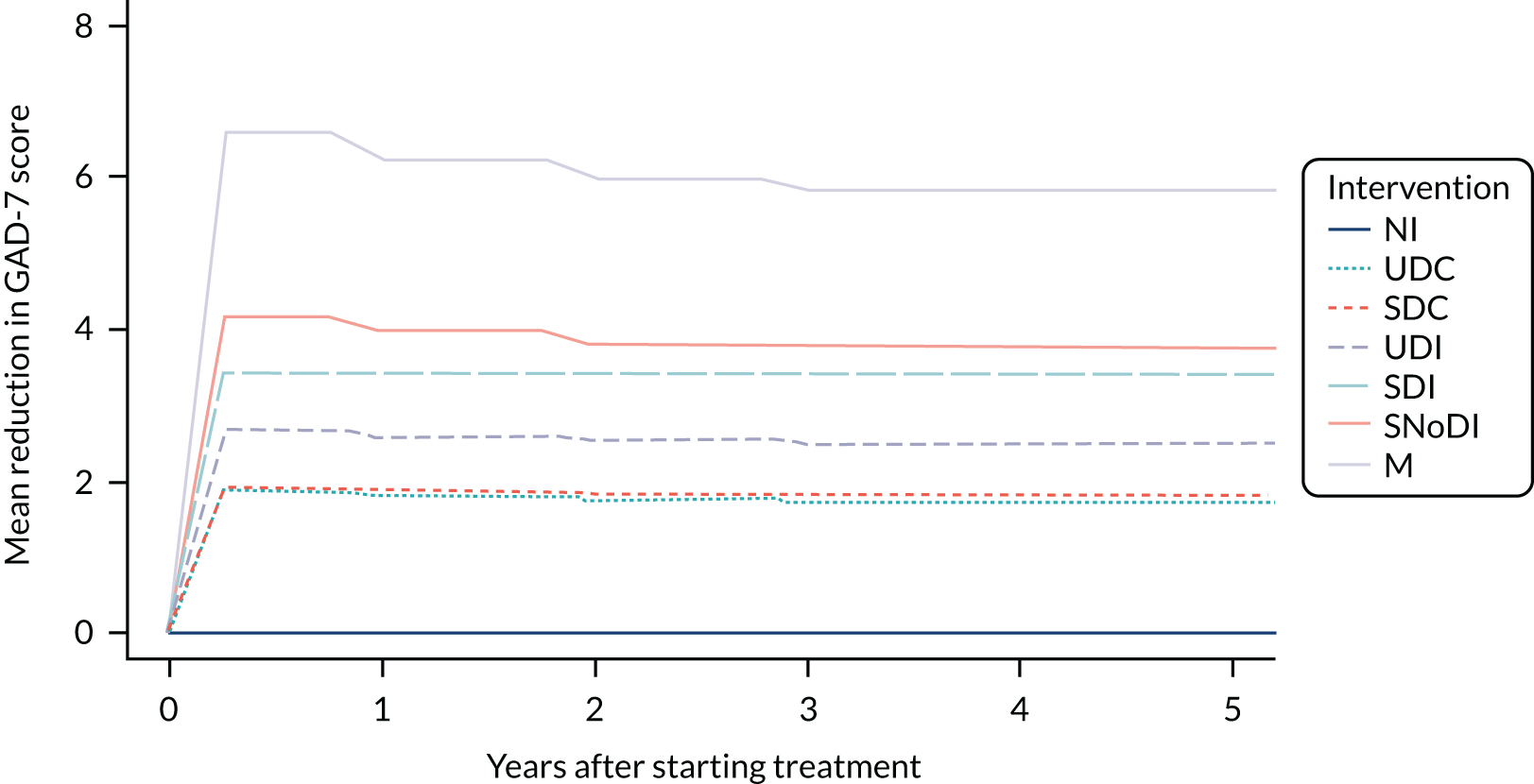

These five types of economic evaluation are informed by short- or medium-term clinical outcomes (in mental health, usually up to 2 years) when they are based on a within-trial analysis, depending on how long participants in an RCT are followed up. Within-trial CEAs, known as ‘piggy-back’ economic evaluations, have limitations, for example the atypical nature of the trial setting, inappropriate clinical alternatives, inadequate length of follow-up, inadequate sample size for economic analysis, protocol-driven costs and benefits, and an inappropriate range of end points (for both costs and outcomes). Health economic decision models are used to guide the choice of interventions for a clinical population on the basis of expected benefits and costs, commonly over a lifetime.7 Decision models are often implemented using either decision trees or Markov models. In Markov models, patients move between clinical states of interest in discrete time periods, and each state is associated with certain costs and outcomes. Decision models are defined by parameters that include probabilities of transition between clinical states, costs and outcomes associated with each state, treatment effects and other covariates (e.g. comorbidities and age). All available relevant evidence, which may include RCTs and population observational studies, should be used to inform these parameters.
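To make the Markov structure concrete, here is a minimal cohort simulation in Python: the cohort is distributed across clinical states, moved between them each cycle by a transition-probability matrix, and accumulates the costs and QALYs attached to each state. The three states, transition probabilities, costs and utilities are hypothetical; cycles are assumed to last 1 year, costs and QALYs are counted at the start of each cycle, and discounting and half-cycle correction are omitted for brevity.

```python
STATES = ["anxious", "recovered", "dead"]

# Hypothetical annual transition probabilities; row = from state, column = to state
TRANSITIONS = [
    [0.60, 0.38, 0.02],  # from anxious
    [0.15, 0.84, 0.01],  # from recovered (relapse back to anxious is possible)
    [0.00, 0.00, 1.00],  # dead is an absorbing state
]
ANNUAL_COST = [800.0, 100.0, 0.0]  # hypothetical cost per year in each state (GBP)
UTILITY = [0.65, 0.85, 0.0]        # hypothetical utility of each state


def run_cohort(start: list[float], cycles: int) -> tuple[float, float]:
    """Return total cost and QALYs per person over `cycles` one-year cycles."""
    dist = list(start)  # share of the cohort in each state
    n = len(STATES)
    total_cost = 0.0
    total_qalys = 0.0
    for _ in range(cycles):
        # Count costs and QALYs for the cohort's state occupancy at the start of this cycle
        total_cost += sum(p * c for p, c in zip(dist, ANNUAL_COST))
        total_qalys += sum(p * u for p, u in zip(dist, UTILITY))
        # New share in state j = sum over states i of (share in i x P(i -> j))
        dist = [sum(dist[i] * TRANSITIONS[i][j] for i in range(n)) for j in range(n)]
    return total_cost, total_qalys


cost, qaly_total = run_cohort(start=[1.0, 0.0, 0.0], cycles=10)
print(f"10-year cost per person: GBP {cost:.0f}; QALYs per person: {qaly_total:.2f}")
```

To compare a DI with its alternative, the model would be run once per option, with the treatment effect applied to the relevant parameters (e.g. a higher anxious-to-recovered probability for the DI, plus its own costs), and the resulting costs and QALYs compared.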

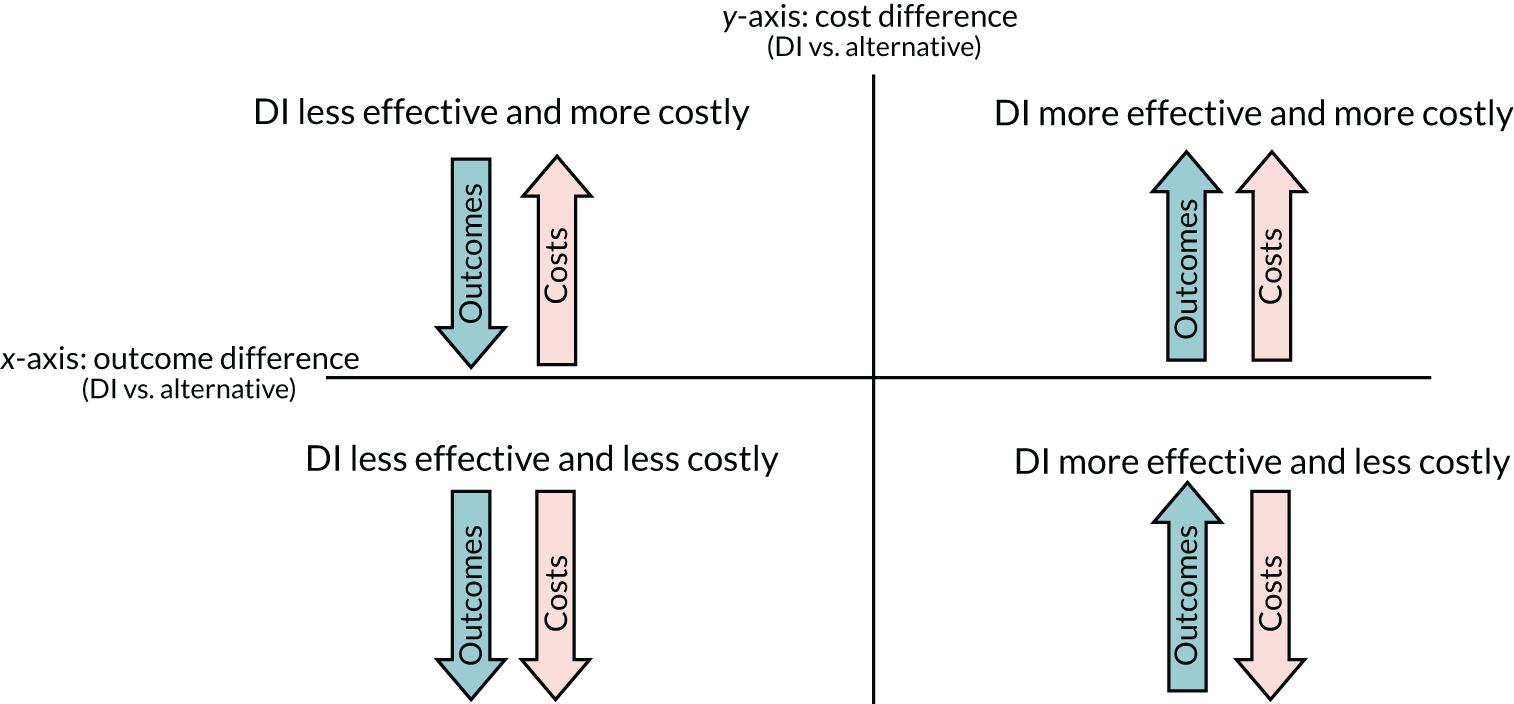

Once the costs and outcomes of competing alternatives have been estimated, either through a within-trial economic evaluation or through modelling, standard decision rules can be used to conclude whether or not a DI should be adopted.8 If CEAs demonstrate that DIs are likely to be both more effective and less costly than the alternatives, then DIs are the preferred option in terms of ‘value for money’. Decision-making is more complex when DIs yield better outcomes at a greater cost than their alternatives, or poorer outcomes at a lower cost. In these cases, the incremental gain for a DI (QALYs gained or costs saved) must be assessed against the marginal productivity of the health-care system (i.e. how much health is gained with an increase in expenditure at the margin, or lost with a decrease in expenditure at the margin). An acceptable cost per QALY is specific to each health system and has been estimated at £15,000 in the UK,9 although alternative figures, including £50,000 per QALY10 and £20,000–30,000 per QALY,11 have been used in decision-making.
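The decision rules described above can be sketched in Python, assuming that the incremental cost (ΔC) and incremental QALYs (ΔE) of a DI versus its alternative have already been estimated. Dominant and dominated cases are labelled directly; the trade-off cases use the net monetary benefit, NMB = λ × ΔE − ΔC, where λ is the cost-effectiveness threshold. When ΔE > 0, a positive NMB is equivalent to an ICER below λ. The £20,000 threshold and the incremental results in the example are illustrative only.

```python
def classify(delta_cost: float, delta_qaly: float, threshold: float) -> str:
    """Apply standard decision rules to a DI's incremental cost and QALYs vs. an alternative.

    threshold is the cost-effectiveness threshold (willingness to pay per QALY gained).
    """
    if delta_cost < 0 and delta_qaly > 0:
        # Cheaper and more effective: the DI dominates its alternative
        return "dominant: adopt"
    if delta_cost > 0 and delta_qaly < 0:
        # Costlier and less effective: the DI is dominated
        return "dominated: do not adopt"
    # Remaining cases involve a trade-off, so value health at the threshold
    # and weigh it against the cost difference via the net monetary benefit
    nmb = threshold * delta_qaly - delta_cost
    return "adopt (NMB > 0)" if nmb > 0 else "do not adopt (NMB <= 0)"


# Hypothetical incremental results, judged at GBP 20,000 per QALY
for d_cost, d_qaly in [(-50.0, 0.01), (100.0, -0.01), (300.0, 0.02), (300.0, 0.01)]:
    print(d_cost, d_qaly, classify(d_cost, d_qaly, threshold=20_000))
```

Expressing the rule through NMB avoids having to interpret a negative ICER, which can mean either a dominant or a dominated DI.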

Making a choice in favour of DIs (even when they are likely to be cost-effective) may imply sacrificing alternative options, which may not always be possible for ethical, clinical or feasibility reasons (e.g. we cannot replace the family doctor with digital self-management, but we can use the latter in addition to visits to the family doctor). Moreover, the cost of software and hardware for DIs is often front-loaded, whereas savings (or improved outcomes) accrue in the long run, so those paying for DIs need to have the money to invest up front. Finally, costs incurred for DIs and benefits accrued from DIs may relate to different budgets (e.g. DIs are paid for by the health service that looks after the employees of a company, but savings accrue in the employment sector through reduced absenteeism among these employees).12 Health-care providers and users may not adopt DIs even when they are shown to be cost-effective, because adoption requires either disinvesting from existing care options that cannot be forgone or generating ‘new monies’ to add DIs to existing care options.
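The timing problem with front-loaded costs can be illustrated by tracking cumulative net cost over time: a one-off investment in software and hardware is recovered only gradually through annual savings. The Python sketch below finds the first year in which cumulative savings cover cumulative costs. The investment, running costs and savings are hypothetical, and discounting of future costs and savings is ignored for simplicity.

```python
from typing import Optional


def break_even_year(upfront: float, annual_running: float,
                    annual_savings: float, horizon: int) -> Optional[int]:
    """Return the first year in which cumulative savings cover cumulative costs, or None."""
    cumulative = -upfront  # year 0: the investment is paid before any savings accrue
    for year in range(1, horizon + 1):
        cumulative += annual_savings - annual_running
        if cumulative >= 0:
            return year
    return None  # the investment is not recovered within the horizon


# Hypothetical: GBP 250,000 up front, GBP 20,000/year to run, GBP 80,000/year saved
print(break_even_year(upfront=250_000, annual_running=20_000, annual_savings=80_000, horizon=10))
```

With these figures the investment is recovered only in year 5, so a payer with a shorter budget horizon (e.g. 3 years) would see no return at all.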

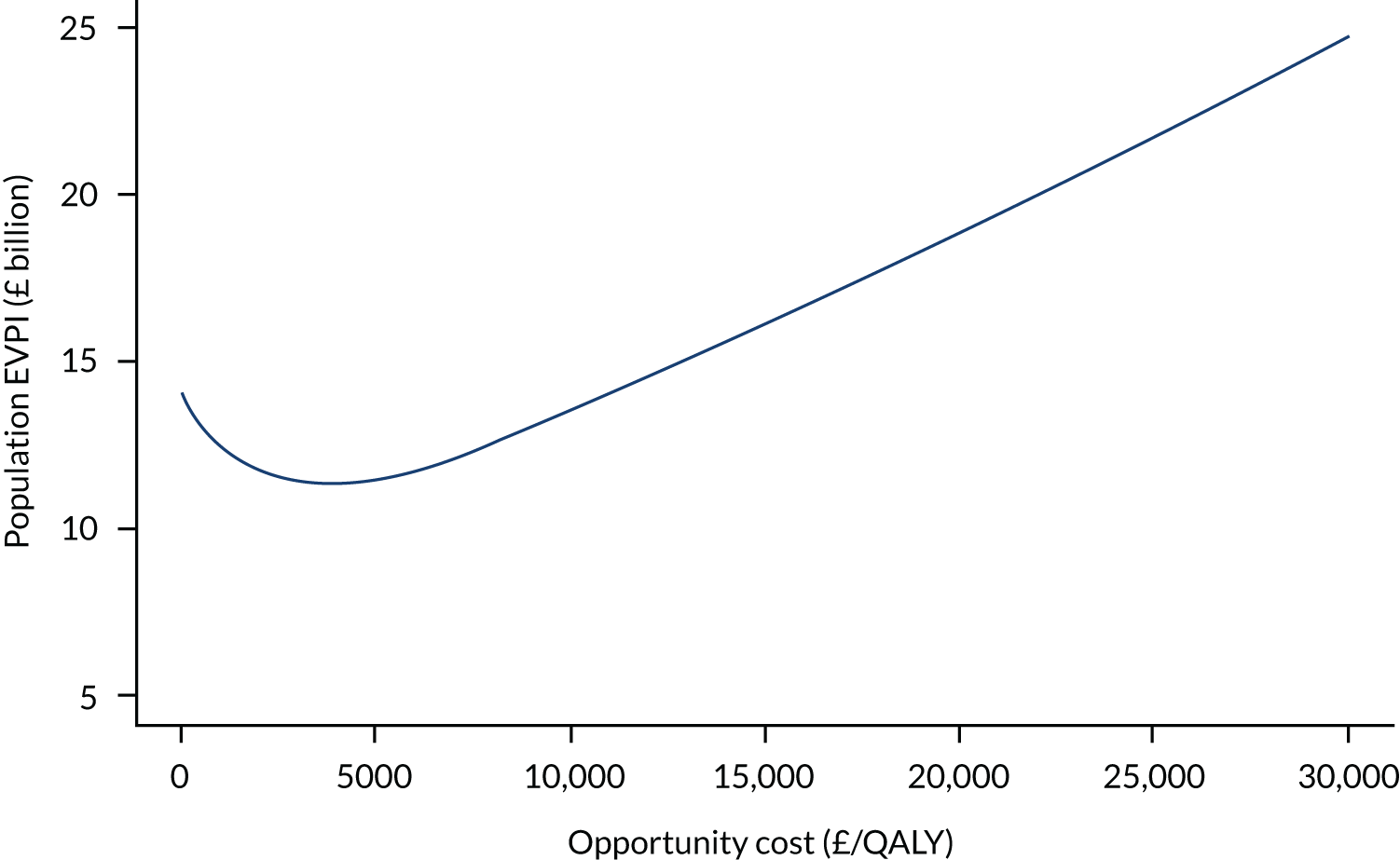

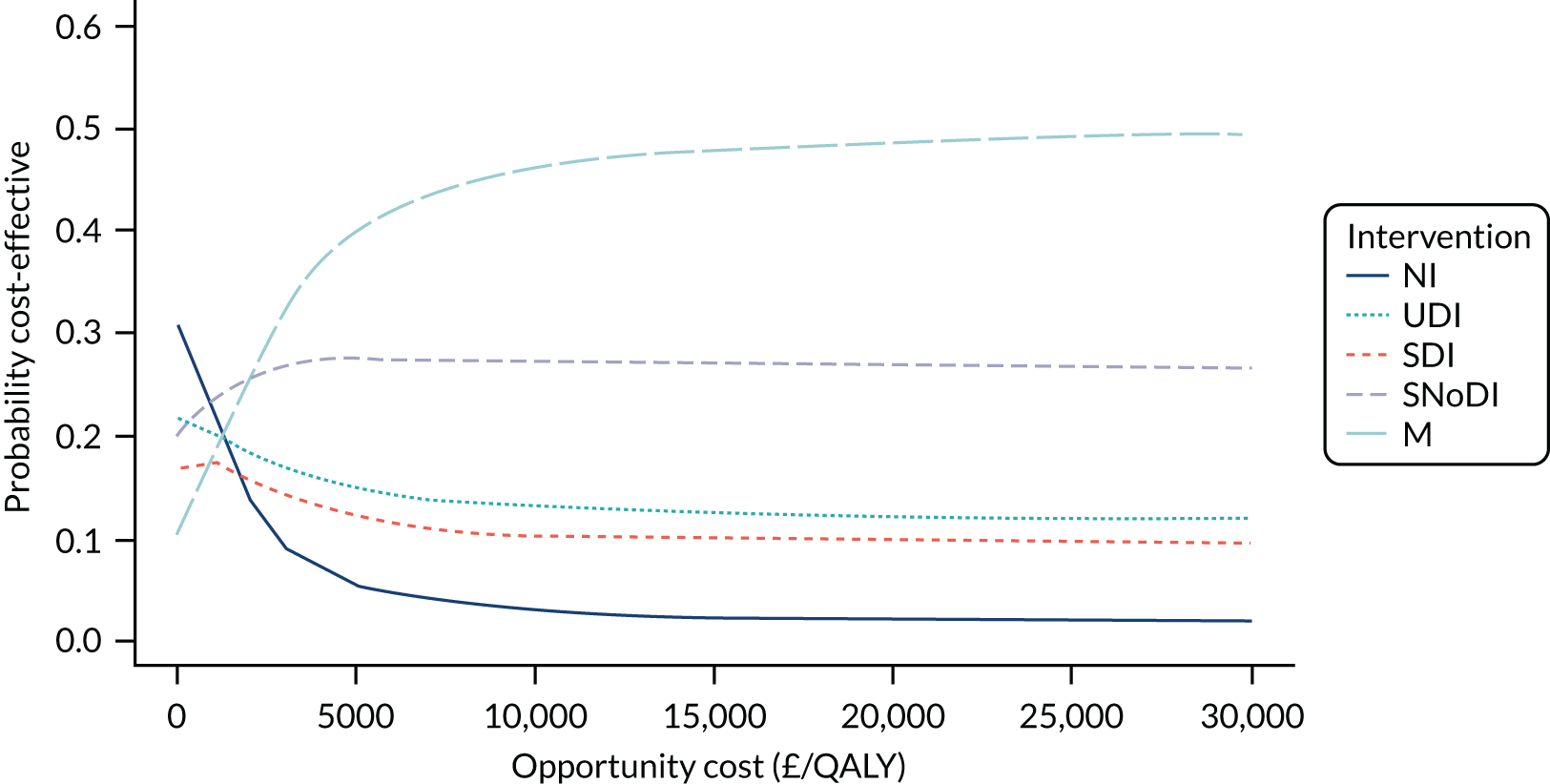

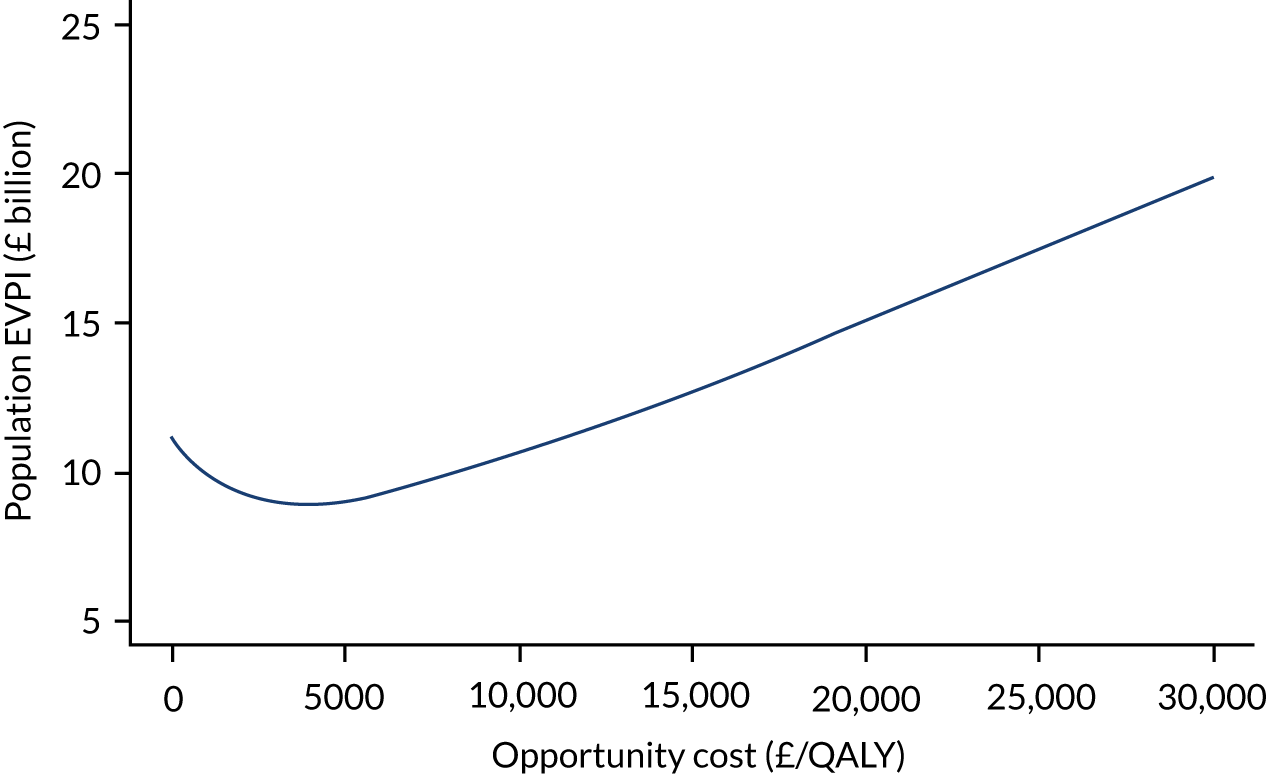

An additional consideration for decision-making that uses cost-effectiveness evidence is uncertainty. This uncertainty pertains both to the evidence base used to generate estimates of cost-effectiveness and to the assumptions required in compiling this evidence. To inform decision-making, we need to characterise this uncertainty appropriately, for example using probabilistic sensitivity analysis and/or scenario analyses, and to explore its implications for adoption decisions and recommendations for further research.13 Although adoption decisions are binary (yes/no), the evidence underpinning them may be uncertain, and so there is a probability of making the ‘wrong decision’. The evidence base to support assessments of the cost-effectiveness of DIs is likely to be less developed than that for pharmaceuticals, for example, because the regulatory requirements associated with adopting digital health interventions differ from those for pharmaceuticals. An assessment of the cost-effectiveness of DIs should therefore reflect this uncertainty and communicate it appropriately to decision-makers.
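Probabilistic sensitivity analysis and the value of further research can be sketched with a Monte Carlo simulation in Python. Uncertain incremental costs and QALYs are drawn from assumed distributions; the share of draws with positive net monetary benefit estimates the probability that the DI is cost-effective; and the per-person expected value of perfect information (EVPI) is the expected loss from deciding under current uncertainty. The normal distributions, their parameters, the assumption that cost and effect are independent, and the £20,000 threshold are all hypothetical. A full model would instead sample each input parameter (transition probabilities, costs, utilities) and propagate it through the model.

```python
import random
import statistics

THRESHOLD = 20_000  # hypothetical willingness to pay per QALY (GBP)


def simulate(n_draws: int, seed: int = 1) -> tuple[float, float]:
    """Return (probability the DI is cost-effective, per-person EVPI) from Monte Carlo draws."""
    rng = random.Random(seed)  # fixed seed so the results are reproducible
    inmb = []  # incremental net monetary benefit of the DI vs. its alternative, per draw
    for _ in range(n_draws):
        delta_cost = rng.gauss(300.0, 150.0)  # hypothetical: mean GBP 300, SD GBP 150
        delta_qaly = rng.gauss(0.02, 0.015)   # hypothetical: mean 0.02 QALYs, SD 0.015
        inmb.append(THRESHOLD * delta_qaly - delta_cost)

    # Share of draws in which the DI has positive net benefit at the threshold
    prob_cost_effective = sum(1 for x in inmb if x > 0) / n_draws

    # EVPI = E[max over options of NMB] - max over options of E[NMB].
    # Using incremental NMB, the alternative's value is 0 in every draw.
    with_perfect_info = statistics.fmean([max(0.0, x) for x in inmb])
    with_current_info = max(0.0, statistics.fmean(inmb))
    return prob_cost_effective, with_perfect_info - with_current_info


prob, evpi = simulate(n_draws=20_000)
print(f"Probability cost-effective at GBP {THRESHOLD:,} per QALY: {prob:.2f}")
print(f"Per-person EVPI: GBP {evpi:.2f}")
```

Multiplying the per-person EVPI by the number of people expected to receive the intervention gives an upper bound on what further research to resolve this uncertainty could be worth.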

Gaps and limitations

The first systematic review of economic evidence for DIs was published by NICE more than 10 years ago14 and included only one CEA available at the time, which was on computerised CBT (cCBT).15 Recent syntheses of economic evidence relating to DIs have focused on a specific technology (e.g. the internet),16,17 a specific intervention (e.g. CBT)18 or a combination of both (e.g. internet CBT).18–21 Some reviews22,23 include a wider range of interventions, such as online problem-solving therapy and positive psychology interventions. Most reviews cover studies of the most common mental health problems, namely depression and anxiety, but reviews of economic evidence relevant to psychological and behavioural interventions increasingly include addictive behaviours (e.g. smoking24) and physical health/somatic problems.25,26

The number of economic evaluations is a fraction of the number of clinical trials of DIs. Reviews of economic evidence for the use of digital technologies to support mental health care (irrespective of the targeted population or the type of technology and intervention used) are useful, not least because the potential investment in digital technologies is large and irreversible. Because the economic evidence base for DIs is uncertain, we need to understand under what circumstances these technologies are conducive to efficient delivery of care, and how certain the conclusions regarding their cost-effectiveness are. There may also be particular core assumptions that are key to determining the cost-effectiveness of DIs, such as patients’ engagement with DIs (which can considerably change outcomes) and variable provision of personal support as an adjunct to DIs (which can considerably change the cost, e.g. depending on whether support is given by specialist clinicians or laypeople).

Previous work27 has concluded that economic evaluations of DIs (not specific to, but including, mental health) may require more flexible approaches to reflect the complexity of the interventions and their outcomes. Data used to inform CEAs may not capture all the information required to assess cost-effectiveness. In most CEAs of DIs, time horizons are short, and the full opportunity costs of DIs, such as development costs, are not usually captured. Wider social costs, including productivity losses, presenteeism and intangible costs, which carry weight in mental health, are also inconsistently measured. In addition, CEAs rarely estimate the investment needed to implement DIs or the budgetary impact of their implementation against existing alternatives.

To our knowledge, the appropriateness of existing CEA methods for assessing the value of DIs has not been considered. Doing so requires a comprehensive overview and critique of the cost-effectiveness evidence relating to the use of digital technologies to promote or improve mental health outcomes. Such a review will help to highlight the key conditions that make DIs cost-effective based on current evidence, and to identify key issues for consideration in establishing their cost-effectiveness. The results of such a review and critique can be used to generate guidance and a checklist for future CEAs of DIs.

Aims and objectives

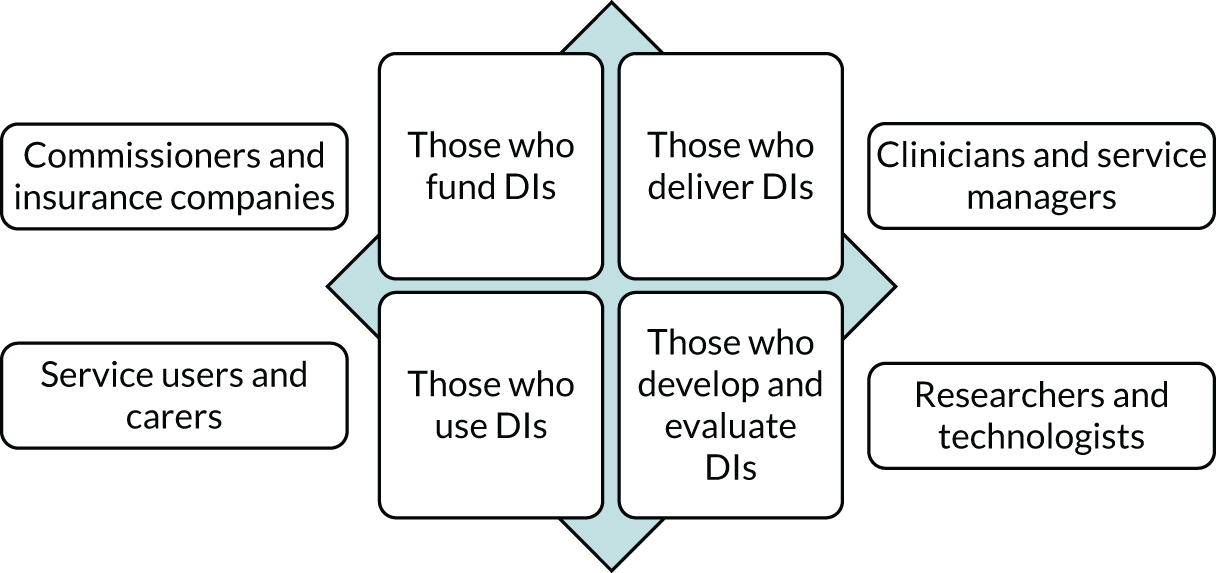

Our main aim was to make best use of existing evidence so that we could (1) inform practice and future research about which DIs are likely to represent a good use of health-care resources, (2) evaluate how uncertain the evidence regarding their cost-effectiveness is and (3) determine what drives variation in their value for money. Our secondary aim was to explore how current economic and clinical evidence is understood and used by key stakeholders in making decisions about the future development, evaluation and adoption of DIs.

Our objectives were to:

-

identify and summarise all published and unpublished CEAs comparing the costs and outcomes of DIs for the prevention and treatment of any mental health condition to the costs and outcomes of relevant alternatives [e.g. interventions that do not involve digital technologies or no intervention (NI)]

-

identify key drivers of variation in the effects and costs of DIs (e.g. for different population subgroups, delivery methods, economic perspectives or outcome measures)

-

develop classification criteria to inform the categorisation of DIs and their comparators

-

critically evaluate the quality and appropriateness of the methods used by existing CEAs to establish the cost-effectiveness of DIs

-

determine what cost-effectiveness judgements can be made for DIs given current evidence from economic evaluations

-

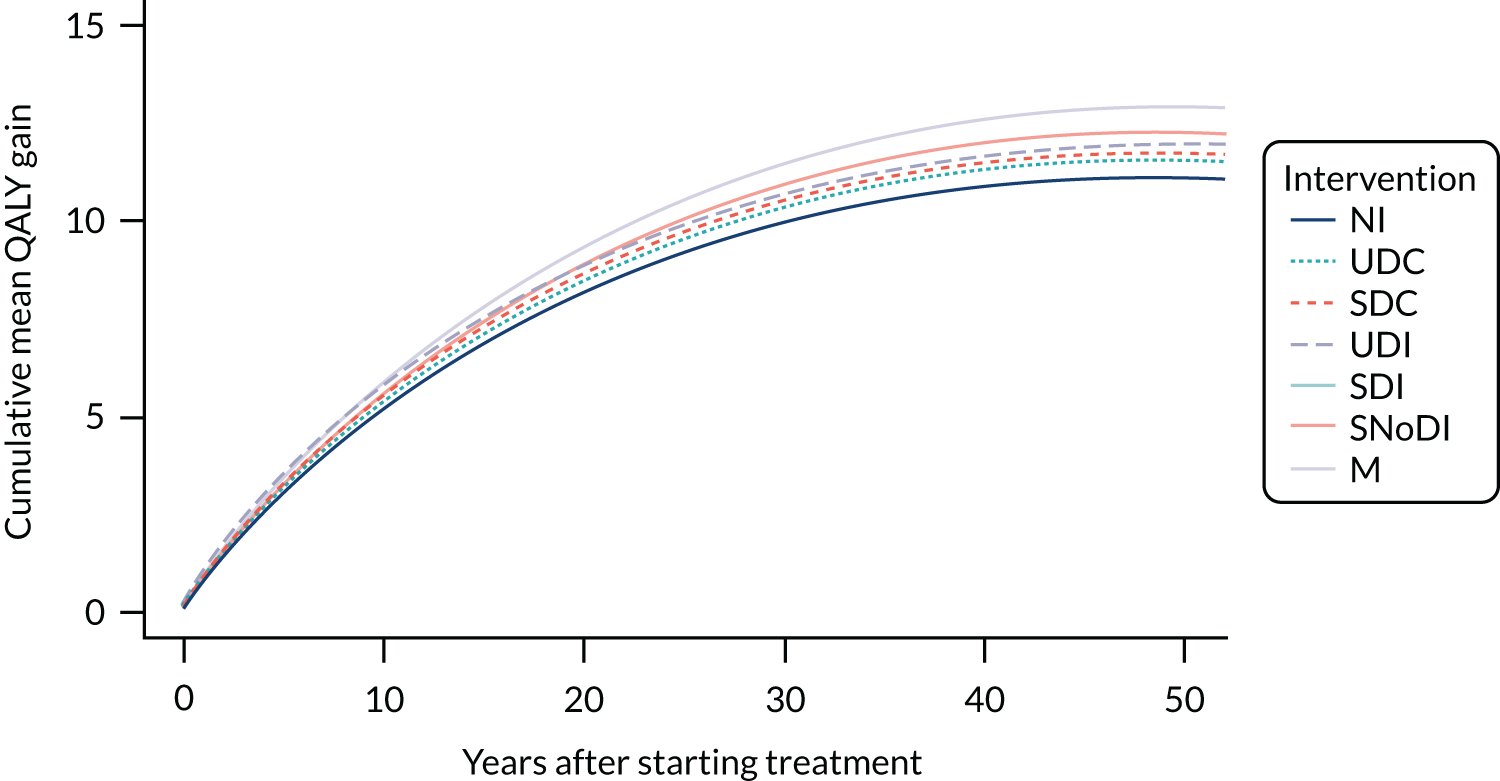

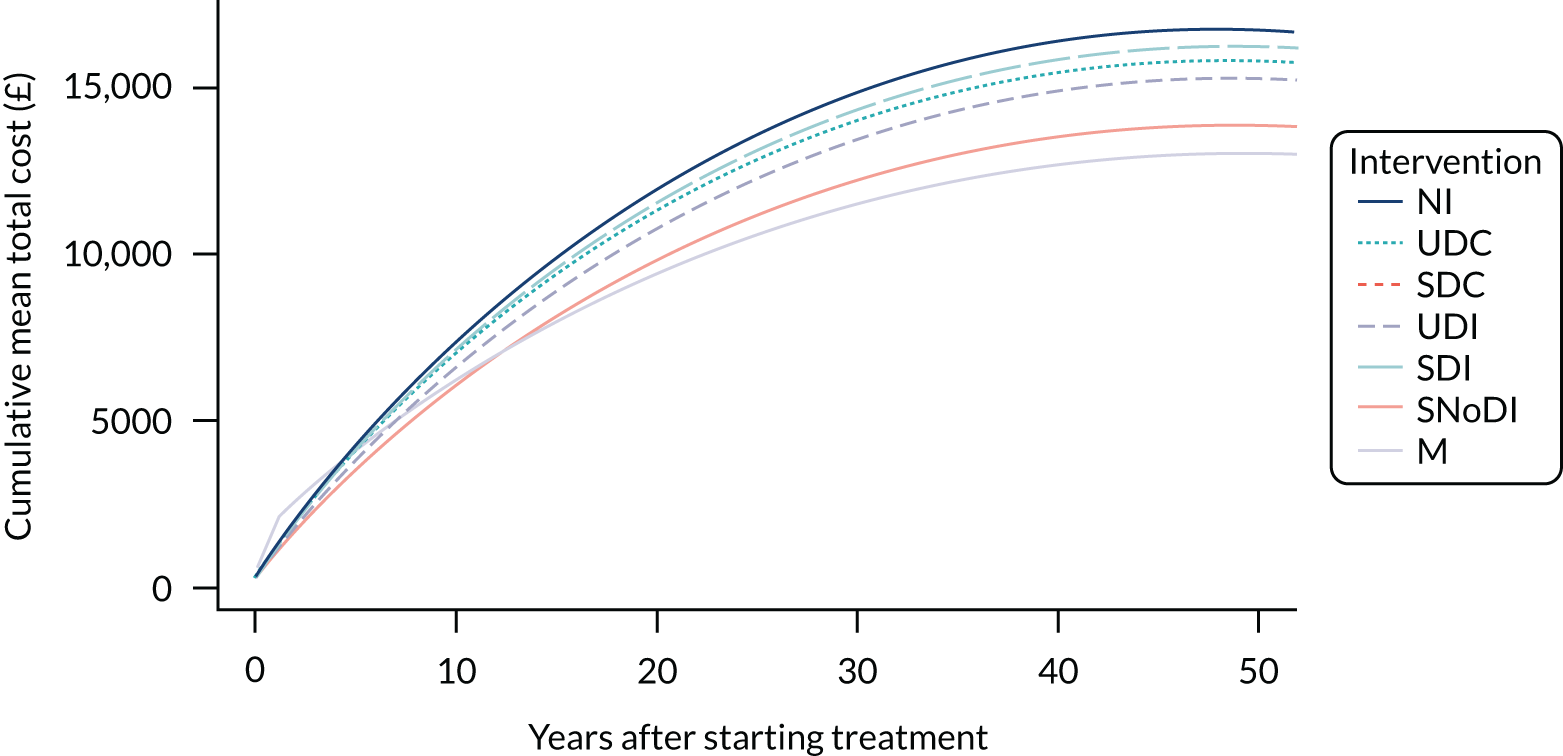

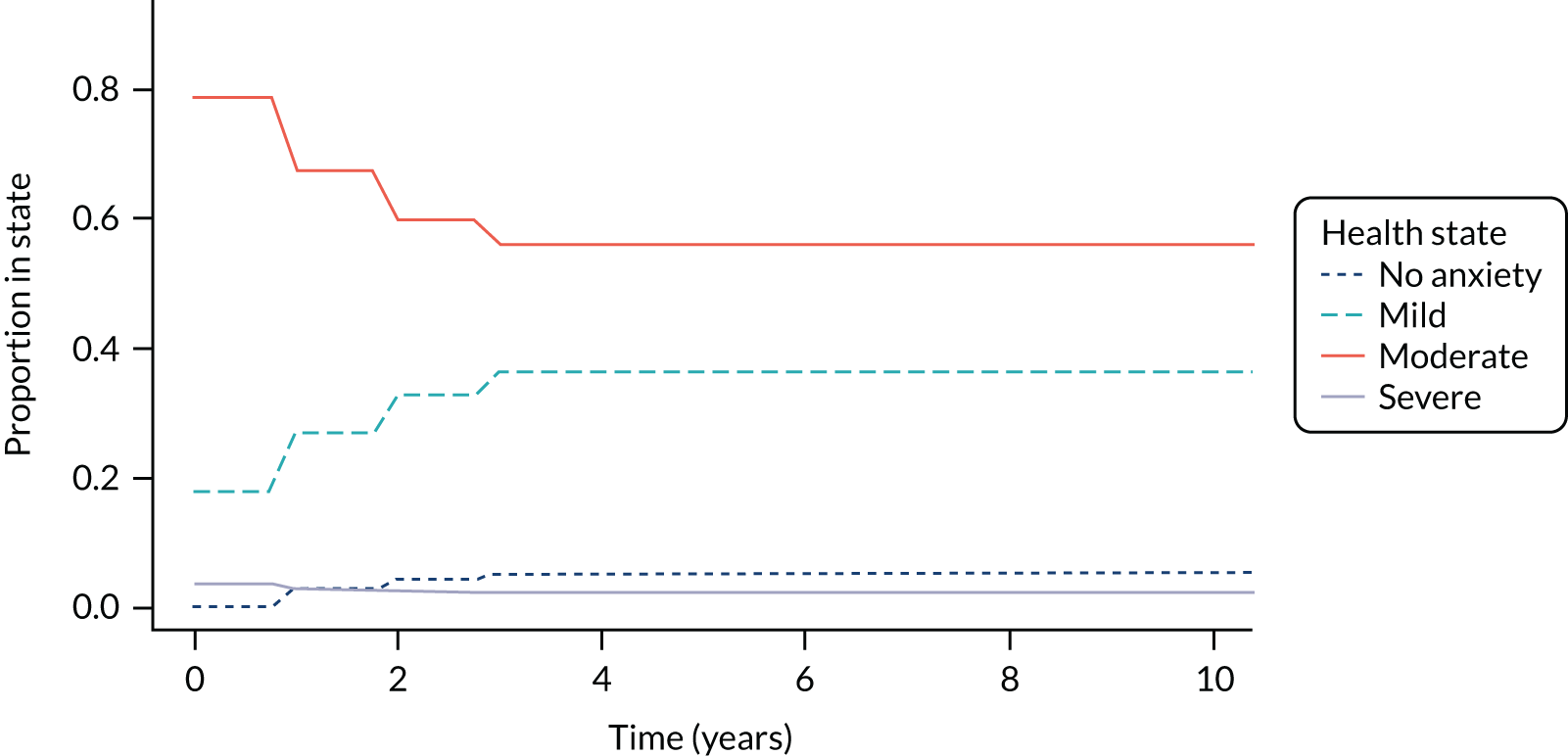

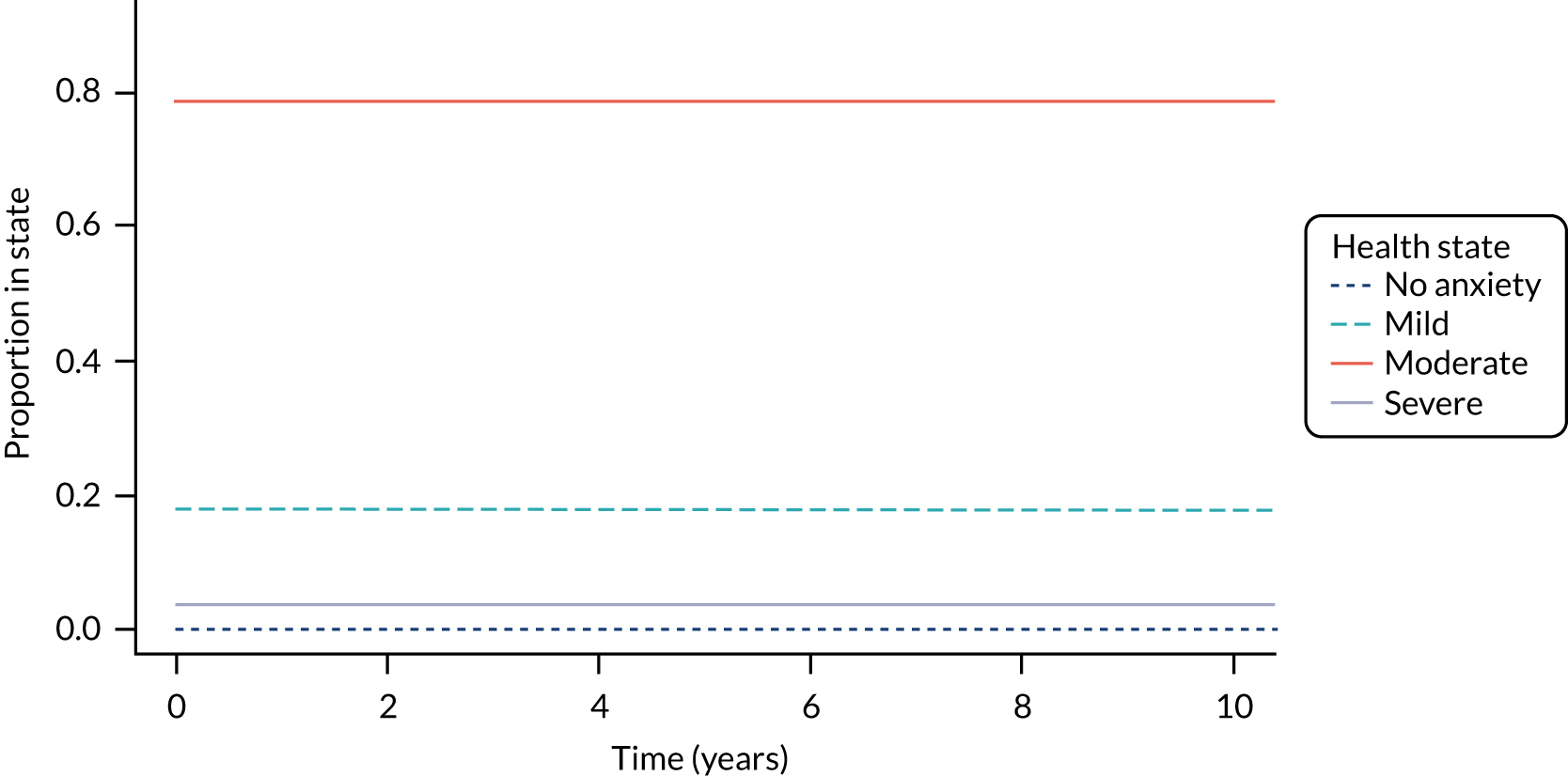

conduct an exploratory analysis to quantify the short- and long-term cost-effectiveness of DIs using a de novo decision-analytic model informed by a systematic review and quantitative data synthesis of clinical trials on common mental health problems

-

conduct a value-of-information (VOI) analysis based on the decision model findings and make recommendations as to what further research is necessary to inform future decisions

-

suggest how the methods of future CEAs for DIs can be improved by producing a step-by-step guide and a quality assessment checklist

-

investigate how the results on CEAs of DIs can be most effectively communicated to and inform decision-making by:

-

commissioners to fund services that use DIs

-

practitioners and service managers to provide DIs in routine care

-

service users to engage with DIs to improve or promote their mental health

-

technologists and researchers to further develop and optimise DIs.

-

Project design

The project had four work packages (WPs):

- WP1 was a systematic review, critical appraisal and narrative synthesis of economic evaluations of DIs across all mental health conditions.
- WP2 was a systematic review and network meta-analysis (NMA) of RCTs on DIs for a selected mental health condition.
- WP3 was the economic modelling and VOI analysis of DIs for the selected mental health condition.
- WP4 was a series of knowledge exchange seminars with stakeholders focusing on costs and outcomes of DIs.

We have reported our methods and findings of the systematic reviews in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statements.28,29 We have reported our economic modelling in accordance with the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) statement.30

Changes to protocol

The protocol initially stated that the systematic review in WP2 and the model in WP3 would focus on depression and anxiety under the umbrella term ‘common mental health problems’. These conditions were chosen because most of the available and best-quality clinical evidence relates to common mental health problems, as opposed to psychosis, eating disorders or autism.31–35 During the course of the project, we concluded that the disease and treatment pathways across the group of conditions included under the original umbrella term were too disparate to analyse in a single model. Related to this point, the international classification manuals moved some of the conditions that were previously considered ‘anxiety disorders’, such as obsessive–compulsive disorder (OCD) and post-traumatic stress disorder, into their own diagnostic groups, making the group even more disparate. Consequently, we refined the scope of the review in WP2 and the model in WP3 to a single exemplar mental health condition, and the changes were approved by the independent members of the project steering group. The decision to focus on one exemplar condition, and for this condition to be generalised anxiety disorder (GAD) rather than a group of common mental health problems or a single condition other than GAD (e.g. depression), was based on the following factors:

- Different common mental health problems have different illness trajectories and different treatment pathways, so they cannot reasonably be analysed in one model.
- GAD is the most prevalent and least studied of the common mental health problems; its point prevalence is nearly double that of depression, and it is often confused with panic disorder or depression when self-reported by survey participants. It is also commonly comorbid with other physical and mental health problems.
- A substantial proportion of the papers identified in WP1 targeted GAD and related conditions, such as worry and stress, so there was value in synthesising the findings from multiple studies and using this synthesis to inform the economic model.
- We did not identify any anxiety models with an analysis time horizon longer than 18 months, so a long-term model would make a useful contribution to the body of evidence. In contrast, several long-term economic models for depression already exist, some of which have been used as a basis to assess the cost-effectiveness of DIs.

In the original protocol we thought that WP4 would require ethics approval by the University of York and the Health Research Authority. In the end, ethics approval was not necessary because our WP4 seminars were conducted as consultations and educational seminars, and we did not collect or report any information from individual participants. We did not record the sessions and we did not use any quotations or individual contributions, but reported only on general discussion themes across seminar groups. This was because our engagement with the stakeholders was an iterative process and it became apparent that it had to be embedded within the routine professional development activities of the stakeholders, such as clinical seminars and advisory group meetings, rather than as separate ‘research focus groups’.

Patient and public involvement

The patient and public involvement/service user member of our steering group attended all steering group meetings and gave feedback during and after the meetings about how the project could lead to clear messages about the value for money of digital mental health interventions, especially their 'long-term' value. He suggested that 6 months is a meaningful period over which to measure benefits and costs from a service user's perspective, as a way of distinguishing short-term from medium-term outcomes. We liaised with him regularly outside the steering group meetings to discuss decisions about literature search terms, inclusion/exclusion criteria and ways of organising information for the dissemination of our findings as part of WP4.

We have also had patient and public representation through our partners at the Mental Health Foundation (MHF; London, UK), whom we met regularly. Josefien Breedvelt, the Research Manager at the MHF at the time, acted as a conduit between the MHF's regular patient and public consultations and this project. The MHF is a public champion of mental health promotion and illness prevention, which was a 'grey area' in our literature review on which we sought the MHF's steer. DIs for mental health promotion and prevention were represented in a large and often overinclusive body of literature, in which these terms could mean anything from universal emotional well-being initiatives to targeted or indicated interventions for populations with established symptoms or risk factors. The MHF participated in discussions about the inclusion/exclusion criteria for our review in terms of appropriate interventions and outcomes around mental health promotion and prevention. One conclusion was that 'prevention of mental health problems', rather than 'mental health promotion', better described the focus of our review.

We have also had public involvement activities through the Closing the Gap network (University of York); one of the network's themes, led by this project's chief investigator, focused on improving physical outcomes in people with mental illness through digital technologies. Our project included only mental health outcomes, not physical health outcomes, but we had to settle this point early in the project so that we could agree on inclusion/exclusion criteria for the retrieved literature. The patient and public involvement members of the Closing the Gap network suggested that certain outcomes, such as smoking cessation and alcohol detoxification ('addiction outcomes'), are directly related to physical health but can also be considered mental health outcomes. This was suggested as a good basis for our review, which could in future be extended to include physical health outcomes separate from mental health outcomes.

Conclusion

Digital interventions use software programs, accessed via computers, smartphones, audio-visual equipment and other devices, to deliver therapeutic activities that aim to make a difference to the symptoms and functioning of people with mental health and addiction problems. Economic evaluations can provide evidence as to whether or not DIs offer value for money, based on their costs and outcomes relative to the costs and outcomes of alternative care options. Our first aim was to review all published economic studies on DIs for mental health and addiction problems. Our second aim was to use an exemplar clinical condition to conduct a synthesis of clinical evidence, which would then inform our third aim of constructing an economic model that demonstrates how we can bring together evidence from different sources to assess the cost-effectiveness of DIs compared with all possible alternatives. To this end, we also aimed to develop classification criteria for categorising DIs and their alternatives so that they could be reasonably pooled together. Finally, we aimed to explore how evidence on costs and outcomes, as well as other factors, may influence stakeholder decisions to adopt DIs in mental health.

Chapter 2 Classification of digital interventions in mental health

Introduction

Systematic reviews can provide a comprehensive picture of the currently available evidence on DIs, but the way they often lump or split this evidence does not always lead to meaningful or useful conclusions. For example, technology-mediated interventions in mental health can include internet consultations [clinician–patient telecommunication by e-mail or via Skype™ (Microsoft Corporation, Redmond, WA, USA)] and internet therapy (self-help with no support, or with some brief support from a clinician or lay person). These two types of intervention are 'apples and oranges' when it comes to funding and delivering services: internet consultations need a clinician to deliver them but do not require sophisticated software (so costs are mostly for human resources), whereas internet therapy needs software but can be delivered without clinician input (so costs are mostly for technology development and maintenance).

Using a classification system can help an evidence synthesis make best use of the currently available evidence by grouping together DIs that share key characteristics. Different stakeholders may have different views about how DIs should be lumped or split based on their key characteristics. For developers, the type of technology used [e.g. web based, mobile apps (applications) or artificial intelligence] may be the most important characteristic, whereas for clinicians the type of therapeutic approach may have an over-riding significance. For managers, DIs that increase service capacity are different from DIs that enhance usual care, whereas service users may consider it important to differentiate between DIs that enable them to stay in contact with clinicians and peers and DIs that are entirely automated self-help.

The WHO1 has produced a classification system for digital health interventions (not specific to mental health) according to four stakeholder groups (i.e. clients, health-care providers, health system managers and data services). The WHO classification groups reflect the different functions of DIs for each stakeholder group (e.g. self-monitoring for clients, training for health-care providers, management of budget and expenditures for managers, and data storage and aggregation for data services). The WHO system uses the term ‘intervention’ in a broad sense to include administrative activities and training that are important for health care but are not designed as patient-facing therapeutic activities to directly prevent or improve clinical symptoms.

For this project, we developed classification criteria that we could apply to the comparators used across the economic evaluations reviewed in WP1 and the RCTs reviewed in WP2. Using these criteria, we aimed to allocate each comparator (e.g. internet therapy, face-to-face therapy, control website and usual care) to a classification group, so that many complex and diverse comparators could be aggregated into a manageable number of classification groups. Our evidence synthesis pooled the costs and outcomes of comparators within the same classification group and compared the costs and outcomes of comparators across different classification groups. The granularity and number of classification groups aimed to strike a balance between the number of studies informing each group and the number of distinctive comparisons between groups: too many groups would have limited the number of studies that could be combined within each group, whereas too few would have diluted the distinctiveness of comparisons between groups.

Classification of digital interventions and their comparators

We followed a five-step process to allocate comparators to classification groups. First, we extracted the key characteristics of the comparison groups as reported in the reviewed studies; in each study, these comparison groups included at least one DI. We used existing frameworks for reporting complex interventions36 to ensure that we captured all the necessary components of each comparison group, as reported by the studies. Second, we identified common and differentiating features of the comparison groups between and within studies. Third, we consulted the literature and an advisory group of researchers, clinicians and service users about which features of the available comparison groups could be important for the relative costs and outcomes of DIs and their comparators. Fourth, we classified each comparison group in the reviewed studies against specific criteria. Finally, we used combinations of these criteria to arrive at a list of classification groups.

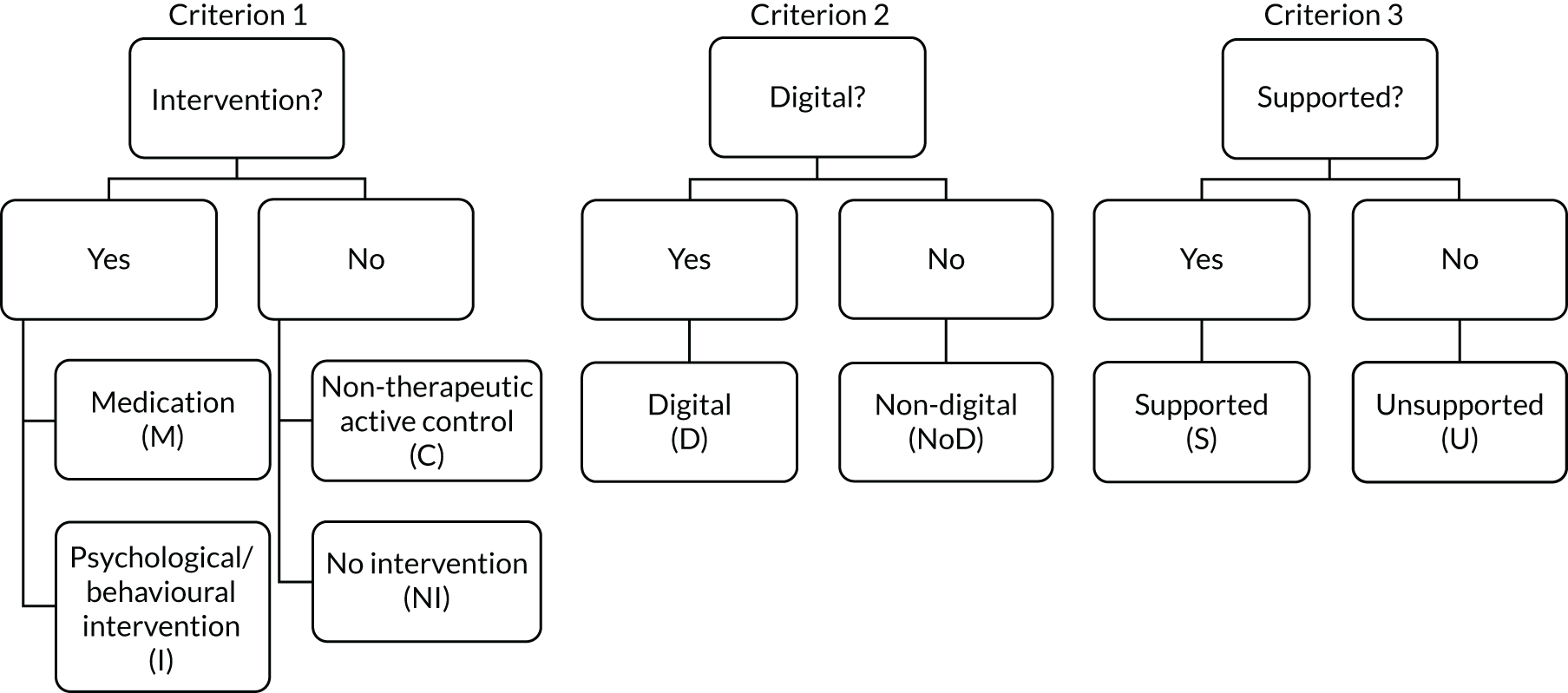

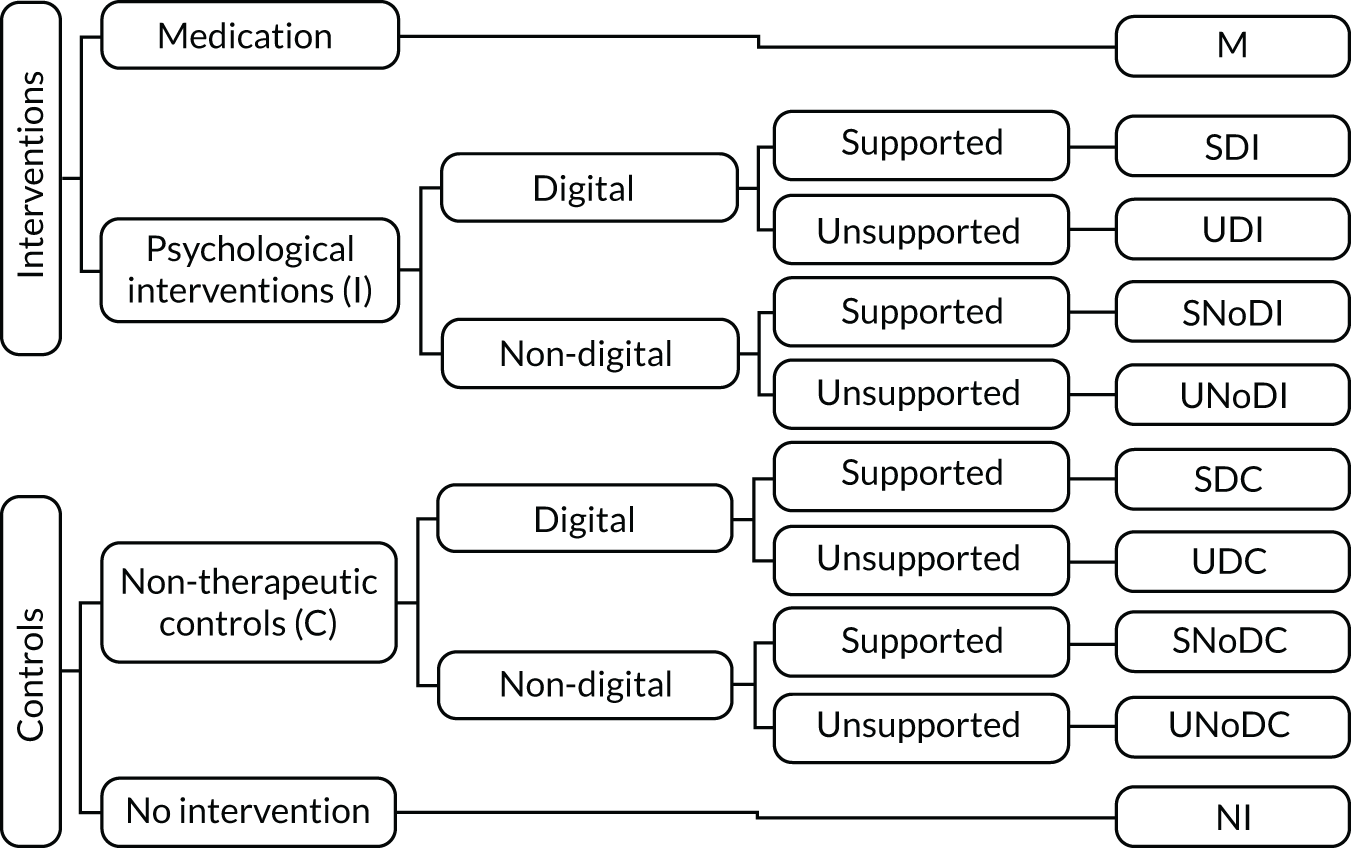

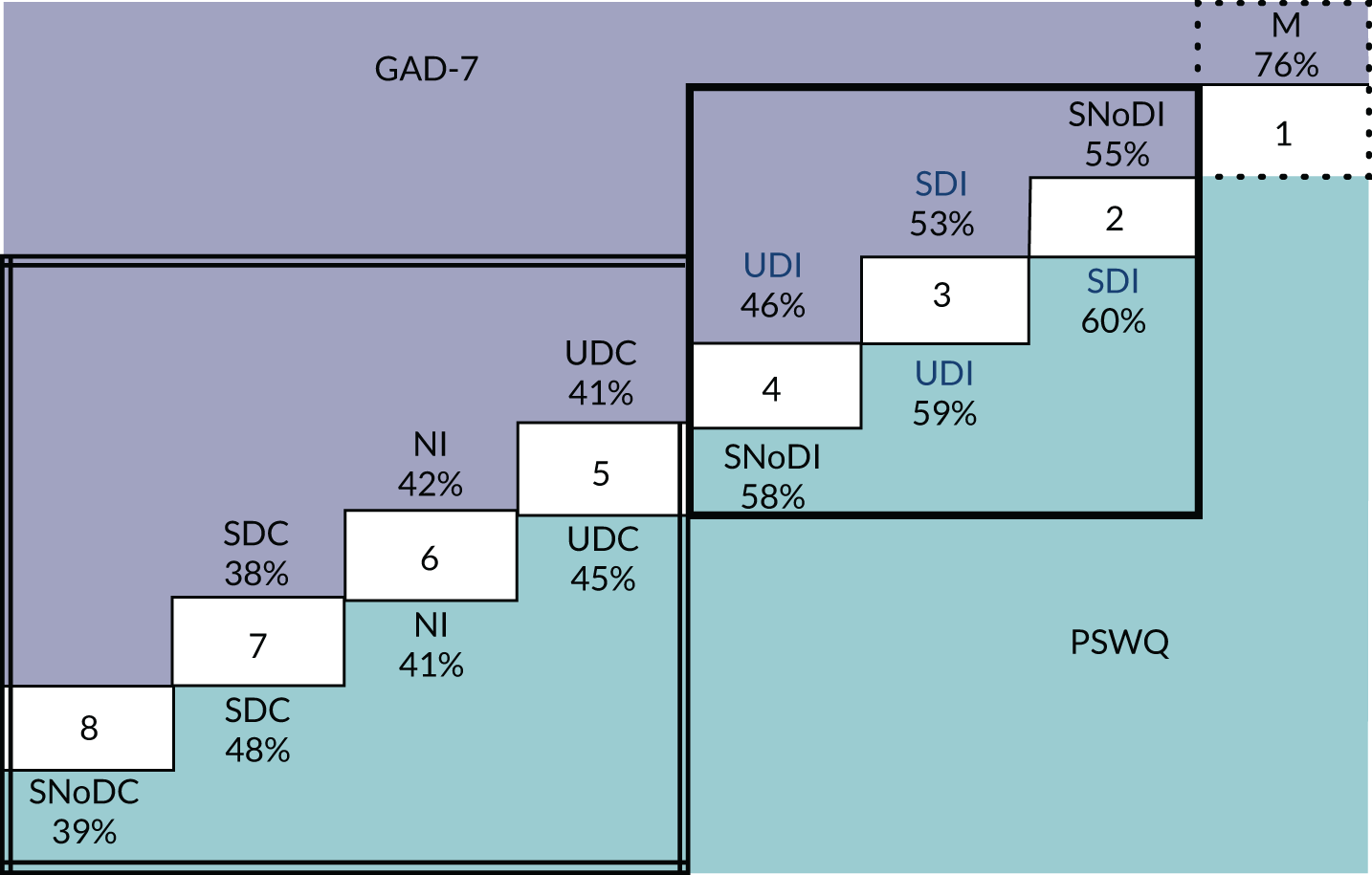

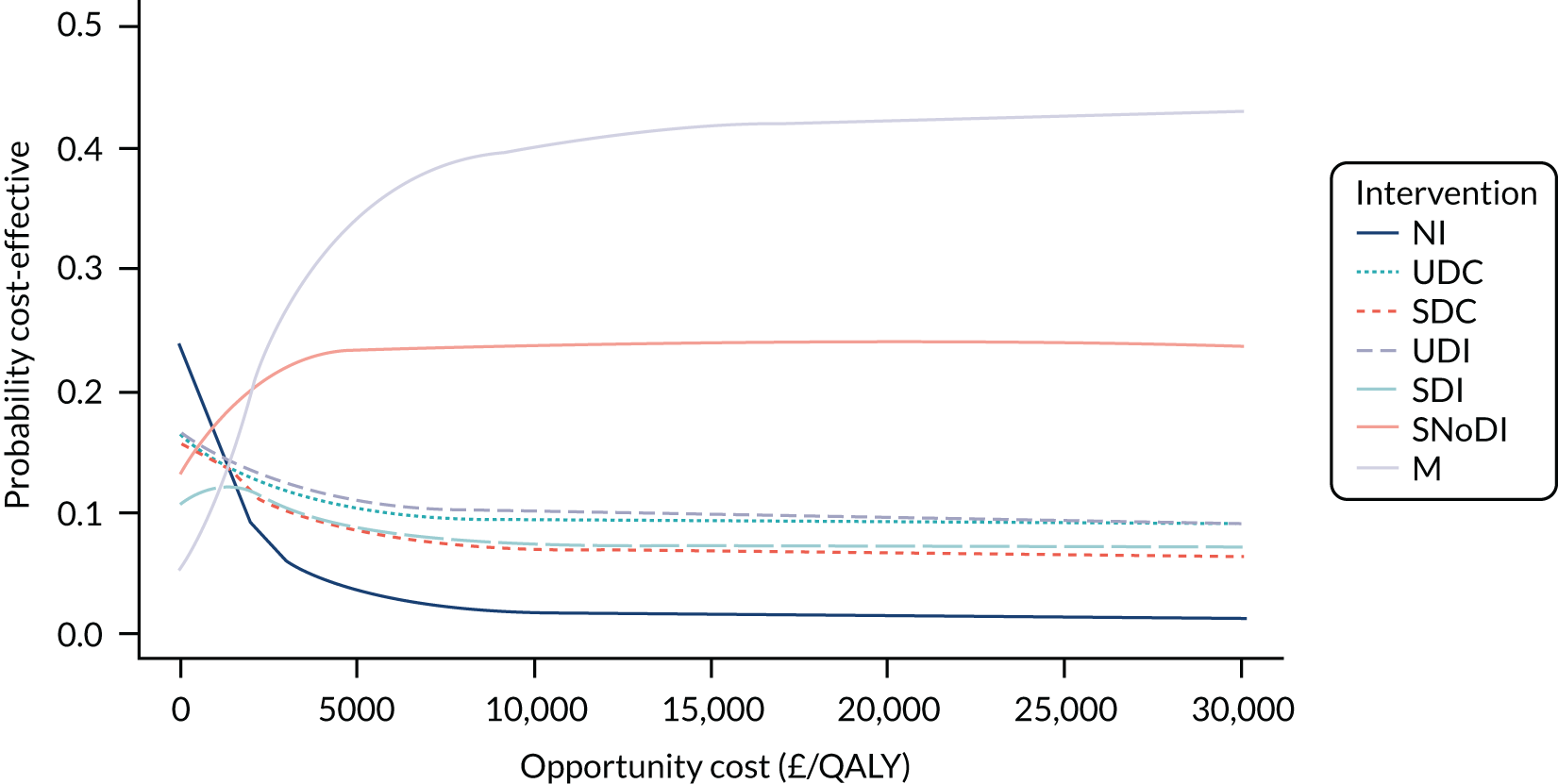

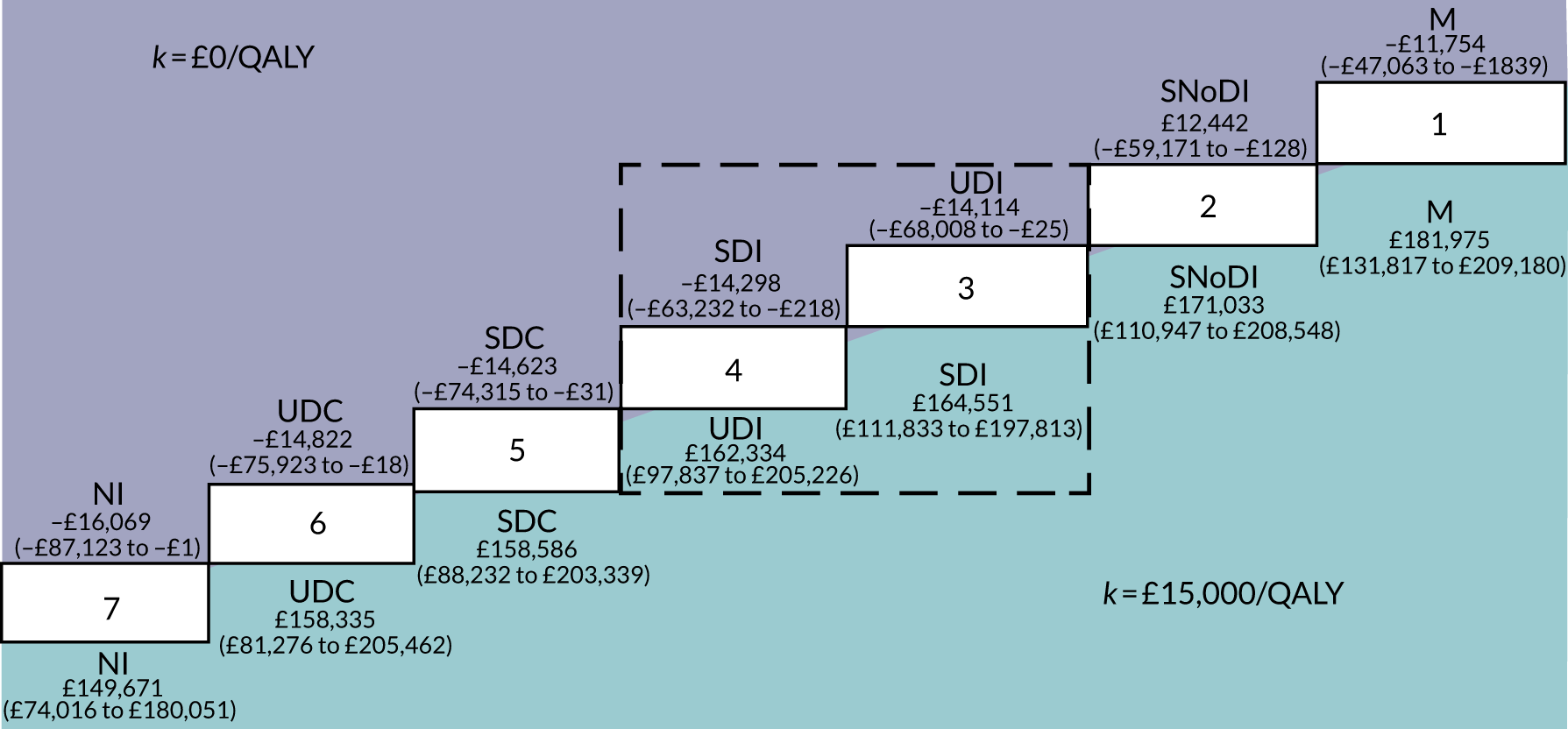

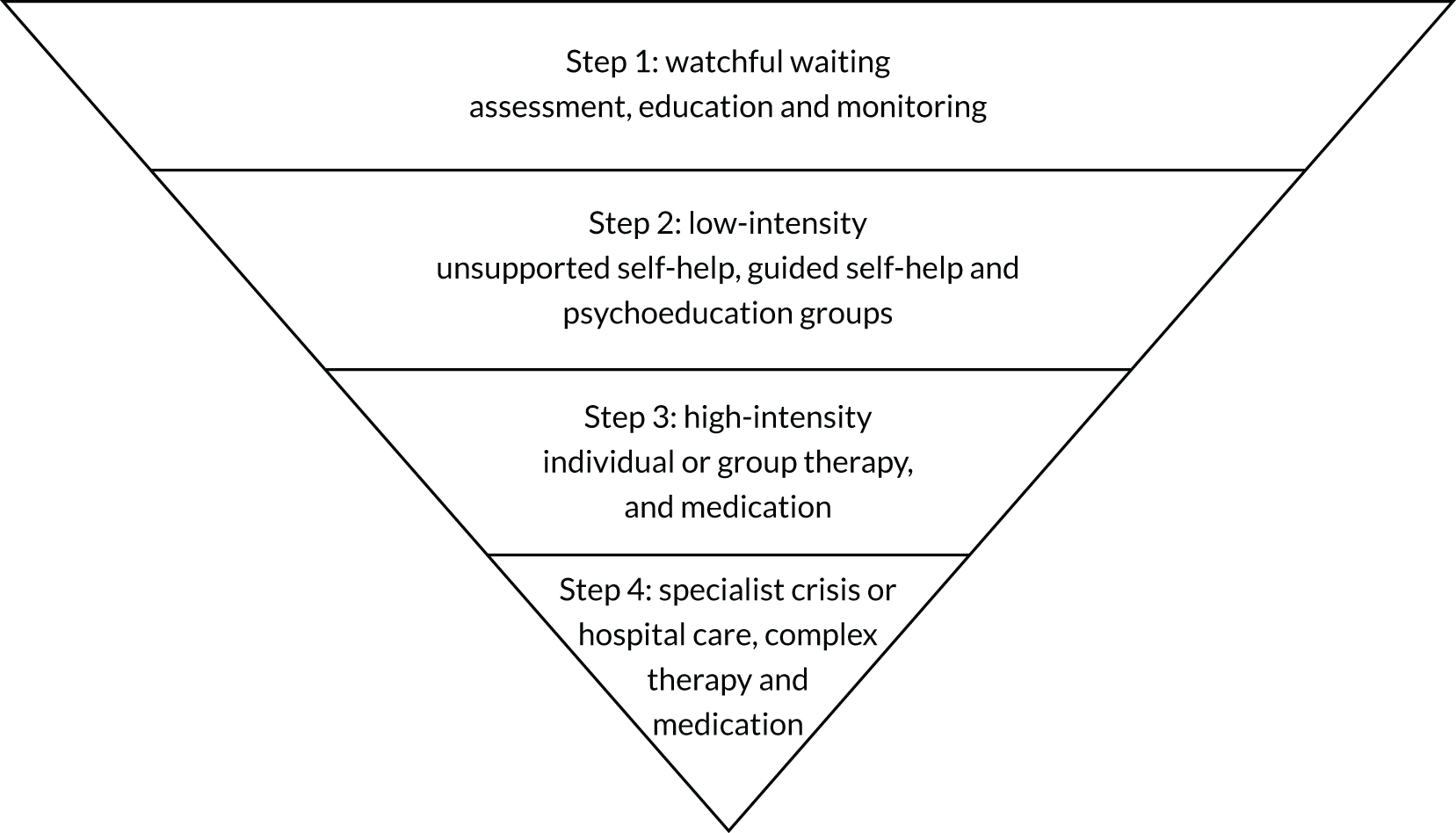

We used three criteria, as shown in Figure 3, to classify each comparison group: (1) whether the group was an intervention or a control, where the intervention could be either psychosocial/behavioural (I) or medication (M), and the control could be either a non-therapeutic control (C) or no intervention (NI); (2) whether the intervention/control was digital (D) or non-digital (NoD); and (3) whether the intervention/control was supported (S) or unsupported (U). The criteria are defined in Table 3.

FIGURE 3.

Classification of DIs and controls.

| Classification criterion | Definition |
|---|---|
| Psychosocial/behavioural intervention (I) | An activity offered as part of a research protocol for therapeutic purposes (i.e. we expect it to make a difference in a mental health problem by improving clinical symptoms and functioning, based on psychological, behavioural, social or educational theories, evidence and/or experience) |
| Medication (M) | A pharmacological agent (pills, injections, etc.) offered as part of a research protocol |
| Non-therapeutic control (C) | An activity offered as part of a research protocol that we do not expect to make a clinically important difference to a mental health problem. This may be a psychological placebo, an attention control, or a change in usual care introduced by the research team to keep participants safe and minimise attrition |
| Digital (D) | Interventions/controls that include software processing of patient information to guide an activity |
| Non-digital (NoD) | Interventions/controls that do not involve any technology; for example, they are delivered by printed materials or during face-to-face meetings, or involve telecommunications technology without software-led activities (e.g. consultations by e-mail, Skype or telephone) |
| No intervention (NI) | No protocolled research activity and no changes in usual care introduced by the research team; this is typically when participants are placed on a WL or receive usual care. We used NI rather than 'WL/usual care' to differentiate from a 'non-therapeutic control' in which WL/usual care is enhanced by research activities (e.g. weekly contact for assessment) |
| Supported (S) | Interventions/controls that include scheduled or regular reciprocal/two-way person-to-person interactions (e.g. between service user and clinician or researcher, or peer to peer) |
| Unsupported (U) | Interventions/controls with no person-to-person interaction or ad hoc interaction (e.g. telephoning a helpline if any problems as a one-off) or non-reciprocal communication (e.g. reminders, posted or telephone messages without the expectation that there will be a conversation with the patient) |
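Read together, the criteria in Table 3 behave like a small decision procedure. The Python sketch below illustrates this under stated assumptions: each comparison group is taken to have already been coded by a reviewer into three hypothetical fields (`role`, `software_guided` and `reciprocal_scheduled_contact`), and the function only combines those judgements into a group code. It is not a tool used in the review, and it leaves out the contextual judgement that the criteria require.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Therapeutic intent of a comparison group (criterion 1)."""
    INTERVENTION = "I"      # psychosocial/behavioural intervention
    MEDICATION = "M"        # pharmacological agent offered by the protocol
    CONTROL = "C"           # non-therapeutic control
    NO_INTERVENTION = "NI"  # WL or usual care with no added research activity


@dataclass
class Comparator:
    # Hypothetical coding of one comparison group; field names are illustrative.
    role: Role
    software_guided: bool               # software processes patient information to guide an activity
    reciprocal_scheduled_contact: bool  # scheduled/regular two-way person-to-person interaction


def classify(c: Comparator) -> str:
    """Return a classification group code such as 'SDI', 'UNoDC', 'M' or 'NI'."""
    # Medication and no intervention are not split by the other two criteria.
    if c.role in (Role.MEDICATION, Role.NO_INTERVENTION):
        return c.role.value
    support = "S" if c.reciprocal_scheduled_contact else "U"  # criterion 3
    medium = "D" if c.software_guided else "NoD"              # criterion 2
    return f"{support}{medium}{c.role.value}"
```

For example, computerised CBT with weekly telephone support coded as `Comparator(Role.INTERVENTION, True, True)` returns `'SDI'`, while general health leaflets coded as `Comparator(Role.CONTROL, False, False)` return `'UNoDC'`.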

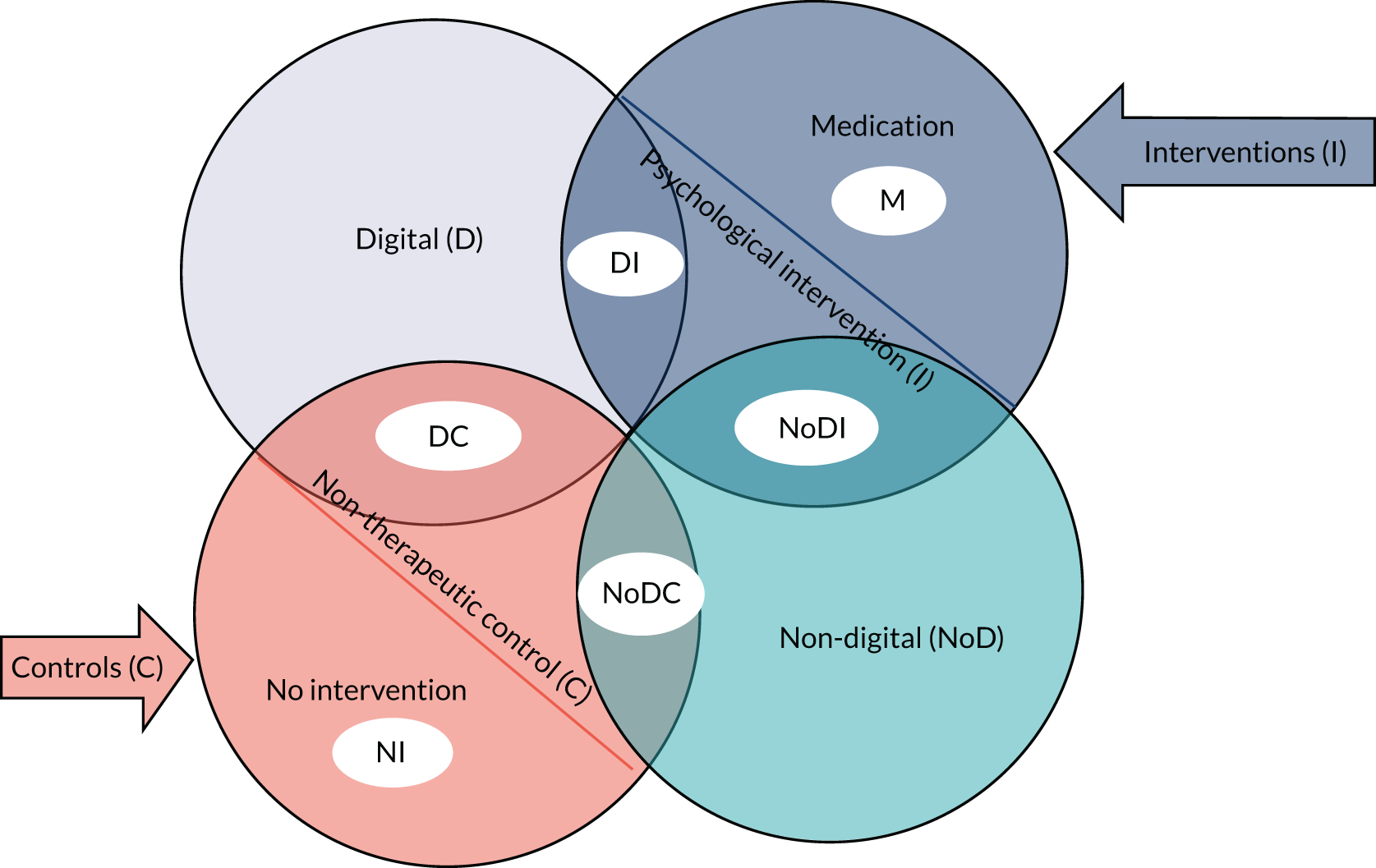

Using a combination of two criteria (intervention vs. control and digital vs. non-digital), we arrived at six comparison groups: DI, digital control (DC), non-digital intervention (NoDI), non-digital control (NoDC), NI and medication (Figure 4). In the context of this report, NoDI implies that the intervention is psychosocial/behavioural in nature. It is worth reiterating that we merged WL and usual care into 'no intervention', meaning that no additional activities or input were offered as part of a research study over and above the interventions and resources routinely accessible to all participants, irrespective of group allocation. Conversely, we grouped usual-care or WL arms into active controls when they included activities over and above what would be expected in routine care, or when usual-care activities were not accessible to the intervention group.

FIGURE 4.

Combination of interventions vs. controls and digital vs. non-digital. M, medication.

Using a combination of the three criteria (i.e. intervention vs. control, digital vs. non-digital and supported vs. unsupported), we arrived at 10 classification groups: medication (M), supported non-digital intervention (SNoDI), supported digital intervention (SDI), unsupported digital intervention (UDI), supported digital control (SDC), unsupported digital control (UDC), NI, unsupported non-digital intervention (UNoDI), unsupported non-digital control (UNoDC) and supported non-digital control (SNoDC) (Figure 5).

FIGURE 5.

Taxonomy groups based on combinations of intervention vs. control, digital vs. non-digital and supported vs. unsupported. M, medication.
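The group counts in Figures 4 and 5 follow directly from crossing the criteria: two binary criteria give 2 × 2 = 4 groups and three give 2 × 2 × 2 = 8, to which medication and no intervention are added as groups that the taxonomy does not subdivide. A minimal Python sketch, using the report's acronyms as string codes (the function names are illustrative), enumerates both sets:

```python
from itertools import product

# Criterion levels, encoded with the report's acronyms.
SUPPORT = ("S", "U")   # supported / unsupported
MEDIUM = ("D", "NoD")  # digital / non-digital
ROLE = ("I", "C")      # psychosocial/behavioural intervention / non-therapeutic control


def comparison_groups() -> list[str]:
    """Role x medium, plus the two unsplit groups: six groups (Figure 4)."""
    return [m + r for m, r in product(MEDIUM, ROLE)] + ["NI", "M"]


def classification_groups() -> list[str]:
    """Support x medium x role, plus NI and M: ten groups (Figure 5)."""
    return [s + m + r for s, m, r in product(SUPPORT, MEDIUM, ROLE)] + ["NI", "M"]


if __name__ == "__main__":
    print(len(comparison_groups()), comparison_groups())
    print(len(classification_groups()), classification_groups())
```

Keeping NI and M outside the crossed criteria mirrors the report's choice not to split them by delivery medium or support.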

Table 4 provides examples for each classification group.

| Acronym | Classification of intervention/control | Examples |
|---|---|---|
| M | Medication | Antidepressants, anxiolytics |
| SDI | Supported digital intervention | Computerised CBT with weekly telephone support, clinician-delivered therapy with VR, mobile app with SMS communication with a facilitator |
| UDI | Unsupported digital intervention | Internet self-help without any clinician contact, mobile app with automated reminders but without any two-way interaction with a facilitator |
| SNoDI | Supported non-digital intervention | Individual or group therapy, telephone brief therapy |
| UNoDI | Unsupported non-digital intervention | Stand-alone self-help using a treatment manual, bibliotherapy |
| SDC | Supported digital control | Access to a general health education website with weekly check-in calls, computer-delivered 'sham' experiment |
| UDC | Unsupported digital control | Access to an educational website with reminder e-mails |
| SNoDC | Supported non-digital control | WL with weekly check-in communication with a person, usual care with weekly researcher assessments |
| UNoDC | Unsupported non-digital control | General self-help (e.g. leaflets with health advice not specific to the mental health problem targeted) |
| NI | No intervention | WL, usual care |

Challenges with classification of comparators within studies of digital interventions

We resolved discrepancies between reviewers who attributed the same comparators to different classification groups by refining and expanding the definitions of our classification criteria until two reviewers could independently arrive at the same classification for every comparator within the selected studies. These discrepancies highlighted some of the difficulties in classifying complex interventions, and some gaps in the reporting of comparators, which were not always described in sufficient detail for us to classify them appropriately. Below, we discuss some of the challenges in classifying comparators across the economic evaluations reviewed in WP1 and the RCTs reviewed in WP2.

When a waiting list goes beyond ‘no intervention’

By default, we have classified WL as 'no intervention', assuming no regular input from the research team and no changes in usual care because of the research study. If a WL (or usual care) was enhanced through regular contact or therapeutic materials from the research team, then we classified it as a 'non-therapeutic active control'. For example, in Johansson et al.,37 participants on the WL received weekly assessments and substantial non-directive support from therapists via an online system, so this was classified as SDC. Teng et al.38 invited all WL participants to a weekly face-to-face assessment with a research assistant in a laboratory, which was classified as SNoDC.

In Pham et al.,5 patients on the WL also received a weekly newsletter with curated content on breathing retraining exercises, matching the content of the intervention they were to receive after coming off the WL, as well as e-mail reminders to complete assessments, albeit without any personal interaction. This was classified as UDC, although it could be argued that the extent and therapeutic content of the information were on a par with an intervention. As the authors called this a 'waiting list', and the participants knew that they were being given information while waiting to receive an intervention, it could be classified only as a control, irrespective of the potential therapeutic effect of the WL.

In a study by Lovell et al.,39 WL was a period of NI followed by individual high-intensity CBT. As most participants randomised to WL/usual care received treatment within the duration of the study, the comparator for internet CBT was more akin to a standard therapy than to NI. This highlights the importance of monitoring and reporting what usual care comprises within RCTs, so that sensitivity analyses can take into account the interventions actually received by the control group rather than relying on assumptions about what it received.

There are occasions40,41 when participants on the WL engage in a research activity that would not be available to those receiving usual care (e.g. providing weekly online ratings or having the option to telephone the research team if they encounter problems). In this case, 'no intervention' is still the appropriate classification, because participants in such a group receive no regular input from the research team (e.g. measurement of outcomes or scheduled 'check-ins' with participants).
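The waiting-list rules in this subsection amount to a default with one override. The Python sketch below encodes them for a hypothetical `WaitingListArm` record whose three fields stand for reviewer judgements. It does not capture cases such as the Lovell et al. study, where most WL participants went on to receive treatment during the study, which need separate handling.

```python
from dataclasses import dataclass


@dataclass
class WaitingListArm:
    # Hypothetical coding of a WL or usual-care arm; field names are illustrative.
    regular_research_input: bool  # scheduled assessments, check-ins or materials from the research team
    software_guided: bool         # that input is delivered with software processing of patient information
    two_way_contact: bool         # that input includes scheduled reciprocal person-to-person contact


def classify_waiting_list(arm: WaitingListArm) -> str:
    """Default WL/usual care to 'NI' unless the research team adds regular input."""
    # Ad hoc options (e.g. phoning the team if problems arise) are not regular input,
    # so such arms remain 'no intervention'.
    if not arm.regular_research_input:
        return "NI"
    # Otherwise the arm is a non-therapeutic control, split by the other two criteria.
    support = "S" if arm.two_way_contact else "U"
    medium = "D" if arm.software_guided else "NoD"
    return f"{support}{medium}C"
```

With the fields set to match the report's judgements, the Johansson et al., Teng et al. and Pham et al. arms classify as `'SDC'`, `'SNoDC'` and `'UDC'`, respectively.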

When a comparator labelled as ‘therapy’ is better classified as a ‘control’

It can be difficult to classify an activity as 'therapy' or 'control' when the same activity could be therapeutic in some contexts but not in others. For example, in a study reported by McCrone et al.,42 one of the randomisation arms was a computerised relaxation programme, delivered in the same way as the intended intervention (i.e. cCBT for OCD): as a self-administered software-based programme in a clinic. Although applied relaxation can be used as a treatment for some conditions, such as GAD, there is no evidence or indication that it is a bona fide treatment for OCD, so the study used it as a psychological placebo.

In another study by Andersson et al.,43 internet CBT was compared with 'internet support therapy', which was intended as a 'control over attention effects and possible alleviating effects in having contact with a professional therapist'. The internet CBT was a self-help intervention that included 100 pages of materials and worksheets with established therapy components, as well as weekly homework and written feedback from therapists through an integrated treatment platform. Internet support therapy included supportive communication with a therapist via the same integrated treatment platform but without the CBT content or homework. Interacting with a therapist via an online platform in a supportive way could have been classified as a therapeutic activity for a condition such as depression but not for OCD; therefore, on this occasion the internet support therapy was a control rather than an intervention.

When a technology-enabled intervention is classified as ‘non-digital’

We have made a distinction between DIs and telecommunication media that may use a digital interface (e.g. a computer or a mobile phone) without information processing. For example, short message service (SMS) texts or e-mails between a therapist and a user are not classified as DIs, whereas the online or telephone-based messaging system used in Johansson et al.,37 which includes specially constructed back-end software to provide a bespoke therapy environment with message encryption and centralised monitoring, is classified as a DI. Similarly, videoconferencing is not a DI unless it is part of a platform with software processing that guides some elements of the intervention.

When unsupported interventions/controls include some type of ‘contact’

In their most basic form, unsupported interventions do not involve any interpersonal contact but are entirely self-administered. Some unsupported interventions may include one-way reminders, postcards or telephone messages, or a helpline to call if there are any problems. What differentiates these from supported interventions is that, in the case of supported interventions, there is a regular and expected two-way interaction between the user and a facilitator or between peers.
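The rules on what counts as digital and what counts as supported can both be derived from a list of delivery components. The Python sketch below assumes a hypothetical `Component` record for each channel of an intervention: an intervention is treated as digital only if some component involves software processing, and as supported only if some component provides scheduled two-way contact.

```python
from dataclasses import dataclass


@dataclass
class Component:
    # Hypothetical description of one delivery channel; field names are illustrative.
    name: str
    software_processing: bool  # software processes user information to guide the activity
    two_way: bool = False      # reciprocal person-to-person communication
    scheduled: bool = False    # contact is scheduled or regular rather than ad hoc


def is_digital(components: list[Component]) -> bool:
    # A telecommunication channel alone (SMS, e-mail, video call) does not make
    # an intervention digital; some component must involve software-led processing.
    return any(c.software_processing for c in components)


def is_supported(components: list[Component]) -> bool:
    # Supported requires regular, expected two-way interaction; one-way reminders
    # or an ad hoc helpline leave the intervention unsupported.
    return any(c.two_way and c.scheduled for c in components)


self_help = [
    Component("self-help website", software_processing=True),
    Component("e-mail reminders", software_processing=False),
    Component("helpline if problems", software_processing=False, two_way=True),
]
video_therapy = [
    Component("weekly video sessions", software_processing=False, two_way=True, scheduled=True),
]
```

Under these assumptions, the self-help website with reminders and an ad hoc helpline is digital but unsupported (UDI), whereas weekly video sessions with a therapist are supported but non-digital (SNoDI).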

Mixed interventions

Interventions could be a mix of medication and psychosocial/behavioural interventions (e.g. when a DI is used to assist a participant in taking their medication and to monitor adherence). Medication that is a usual/routine care intervention may still be part of a ‘psychosocial intervention’ group, a ‘no intervention’ group or an ‘active control’ group. If participants are offered psychosocial support as part of a controlled medication trial, then the intervention is classified as ‘medication’. Participants may still be offered medication as part of usual care in addition to the psychosocial intervention (the medication is not a ‘research intervention’).

Granularity of taxonomy

Digital interventions are complex and may include different therapeutic components (e.g. psychoeducation, cognitive techniques, behavioural techniques and motivational interviewing) and different layers of intensity (e.g. weekly 1-hour sessions or brief interventions). In addition, DIs increasingly follow a mixed model in which one intervention may include different types of technologies (e.g. telephone, website, biofeedback) and different types of personal support (e.g. face-to-face sessions with a therapist, telephone calls for technical support or standardised texts and e-mails from an assistant).

Conclusions

We propose a classification system for DIs and their alternatives based on three criteria: therapeutic intent (intervention vs. control), software processing (digital vs. non-digital) and interpersonal communication (supported vs. unsupported). Such classification requires a judgement based not only on predefined criteria, but also on an understanding of the nuances of technology-enabled interventions in the context of specific clinical applications. Distinguishing between digital and non-digital interventions is not always straightforward, especially as technologies can be used for patient–clinician telecommunication, for patient-directed activities or for a blend of both. Classifying a DI as an intervention or a control can also be complicated when an intervention for one clinical condition may not be considered therapeutic for another. WLs and usual care are classified by default as 'no intervention'; however, they may be more accurately described as 'active controls' (when the research design introduces additional processes such as monitoring) or as 'interventions' (when participants receive routine treatments such as medication or face-to-face therapy). The relative effects of DIs depend on how strong their comparators are (e.g. DIs may perform 'better' than 'no intervention' but less well against 'gold standard' treatments). To enable the appropriate analysis and meaningful interpretation of evidence syntheses, research studies need to describe the comparators of DIs in detail, in accordance with existing frameworks for reporting complex interventions, including any support that participants received on a WL or in usual care.

Chapter 3 Review of economic studies

Introduction

To inform decision-makers about which DIs (under what circumstances) may offer good value for money, we needed to review the relevant body of economic evidence that currently exists in the form of economic evaluations. In addition, we needed to critically appraise whether or not the economic evidence takes into account all relevant costs and outcomes, for the full range of possible alternative interventions and care options, and over an appropriate time horizon, for the mental health conditions of interest. 44

This systematic review aimed to identify all economic evaluations of DIs and extract common themes, focusing on the methods used in the economic analyses and their appropriateness for decision-making. The review also considered whether methods have yet to be developed or utilised, and what further research is needed to inform economic analysis in the context of DIs.

Methods

Searches

Material in this section has been reproduced with permission from Jankovic et al. , Systematic review and critique of methods for economic evaluation of digital mental health interventions, Applied Health Economics and Health Policy, published 2020. 45 Copyright © 2020, Springer Nature Switzerland AG.

In December 2018, the following databases were searched to identify published and unpublished studies: MEDLINE, PsycInfo® (American Psychological Association, Washington, DC, USA), Cochrane Central Register of Controlled Trials (CENTRAL), Cochrane Database of Systematic Reviews (CDSR), Cumulative Index to Nursing and Allied Health Literature (CINAHL) Plus, Database of Abstracts of Reviews of Effects (DARE), EMBASE™ (Elsevier, Amsterdam, the Netherlands), Web of Science™ (Clarivate Analytics, Philadelphia, PA, USA) Core Collection, NHS Economic Evaluation Database (NHS EED), the Health Technology Assessment database, the National Institute for Health Research (NIHR) Journals Library and the Database of Promoting Health Effectiveness Reviews (DoPHER). The full search strategy is presented in Report Supplementary Material 1.

We also searched two clinical trial registries for ongoing studies (ClinicalTrials.gov and the WHO's International Clinical Trials Registry Platform portal), searched the NIHR portfolio and ran web searches in Google (Google Inc., Mountain View, CA, USA) and Google Scholar (Google Inc.) using simplified search terms. After the searches were completed, we screened the studies included in relevant systematic reviews identified by the searches, checked the references cited in the included studies and conducted forward citation chasing on all identified protocols and conference abstracts. We also contacted researchers in the field.

The searches were conducted from 1997, as no relevant studies of DIs could have been published before this date. Searches were restricted to studies written in English, as we anticipated that most economic studies written in other languages would also have a version published in English (e.g. the South Asia Cochrane Group). 46

Selection criteria

Material in this section has been reproduced with permission from Jankovic et al. , Systematic review and critique of methods for economic evaluation of digital mental health interventions, Applied Health Economics and Health Policy, published 2020. 45 Copyright © 2020, Springer Nature Switzerland AG.

Eligible studies included participants with symptoms of, or at risk of, mental health problems as defined by the International Classification of Diseases, Eleventh Revision,1 criteria for mental, behavioural or neurodevelopmental disorders, with the exception of the conditions listed under the categories of neurodevelopmental, neurocognitive and disruptive behaviour or dissocial disorders. Studies were also excluded if the participants' primary diagnosis was a physical condition or another condition not covered by these criteria (e.g. cancer or insomnia). All DIs that expressly targeted mental health outcomes and involved patient-facing therapeutic activities were included, with the exception of those that were simply a communication medium (e.g. telephones or videoconferencing). A broad range of study designs was considered, including economic evaluations conducted alongside trials, modelling studies and analyses of administrative databases. Only full economic evaluations that compared two or more options and considered both costs and consequences (i.e. CMA, CEA, CUA and CBA) were included in the review. No studies were excluded on the basis of their comparator group. Study protocols, abstracts and reviews were marked to facilitate follow-up, as described in Searches.

Study selection

Two reviewers, one of whom had expertise in health economics, independently assessed all titles and abstracts for eligibility. If either reviewer indicated that a study could be relevant, the full text for that study was sought and again assessed independently by two reviewers, with disagreements resolved through discussion or with a third reviewer.
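The two-stage screening rule can be expressed compactly. In the Python sketch below (function and type names are illustrative, not taken from the review), a record progresses to full-text review if either reviewer flags it, whereas at the full-text stage agreement stands and a disagreement needs a third reviewer; resolution by discussion is represented simply as an error that the caller must settle.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"


def retrieve_full_text(reviewer_a: Decision, reviewer_b: Decision) -> bool:
    """Title/abstract stage: seek the full text if either reviewer flags the record."""
    return Decision.INCLUDE in (reviewer_a, reviewer_b)


def full_text_decision(reviewer_a: Decision, reviewer_b: Decision,
                       third_reviewer: Optional[Decision] = None) -> Decision:
    """Full-text stage: agreement stands; a disagreement goes to a third reviewer."""
    if reviewer_a == reviewer_b:
        return reviewer_a
    if third_reviewer is None:
        raise ValueError("Reviewers disagree: resolve by discussion or add a third reviewer")
    return third_reviewer
```

The asymmetry is deliberate: the inclusive 'either reviewer' rule at the first stage reduces the risk of discarding relevant studies on the basis of a title or abstract alone.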

Data extraction

The purpose of data extraction was to summarise methodology and identify challenges common to the evaluation of DIs. To do so, we extracted information reported in the studies on the population; intervention (underpinning principles, delivery mode, level of support and treatment duration); comparators; outcomes (clinical and economic outcomes, and the economic end point); study design; analytical approach (within-trial analysis, decision model, statistical model or epidemiological study); analysis time horizon; setting (country and analytical perspective); analytical framework (CMA, CEA, CUA, CCA or CBA); and methods used to characterise uncertainty.

Critical analysis

Material in this section has been reproduced with permission from Jankovic et al. , Systematic review and critique of methods for economic evaluation of digital mental health interventions, Applied Health Economics and Health Policy, published 2020. 45 Copyright © 2020, Springer Nature Switzerland AG.

The identified studies were critically reviewed, to assess whether or not the existing evidence meets the requirements for decision-making in health care44 and to assess the challenges in generating cost-effectiveness evidence in this context. The following questions were asked of the methods used in the included studies:

- Does the economic analysis estimate both costs and effects?
- Does the analysis appropriately synthesise all of the available evidence?
- Is the full range of possible alternative interventions and clinical strategies included?
- Are costs and outcomes considered over an appropriate time horizon?

Quality assessment

Checklists are useful tools for assessing the quality and applicability of economic evaluations. They also provide a framework for summarising the methods and results of economic evaluations in a consistent manner. An appropriate quality assessment checklist for economic evaluations of DIs in mental health can improve reporting standards and encourage harmonisation of the key methods that are likely to drive heterogeneity in results across economic evaluations. Following a checklist can help to structure the narrative synthesis of results and the critique of the methods used. It also makes results easier to compare across studies, as differences in results are likely to be driven by the methods used to determine cost-effectiveness.

There are several checklists used in reviews of health economic evaluations, as summarised by Watts and Li. 47 Their paper notes that the Drummond48 checklist [or BMJ (British Medical Journal) checklist, as Watts and Li call it] and the Philips49 checklist are the most commonly used and are well regarded by the health economics community. Using the Drummond48 checklist, we assessed the included studies according to the clarity of their research questions, the quality and completeness of data used in the economic evaluation, the methods used to characterise uncertainty in the evaluation model, and the interpretation of their results. We have also used the Philips49 checklist, which is specific to model-based economic evaluations and describes attributes of good practice and questions for critical appraisal according to the model’s structure, data and consistency.

Results

Summary of available economic evaluations of digital interventions

Material in this section has been reproduced with permission from Jankovic et al., Systematic review and critique of methods for economic evaluation of digital mental health interventions, Applied Health Economics and Health Policy, published 2020.45 Copyright © 2020, Springer Nature Switzerland AG.

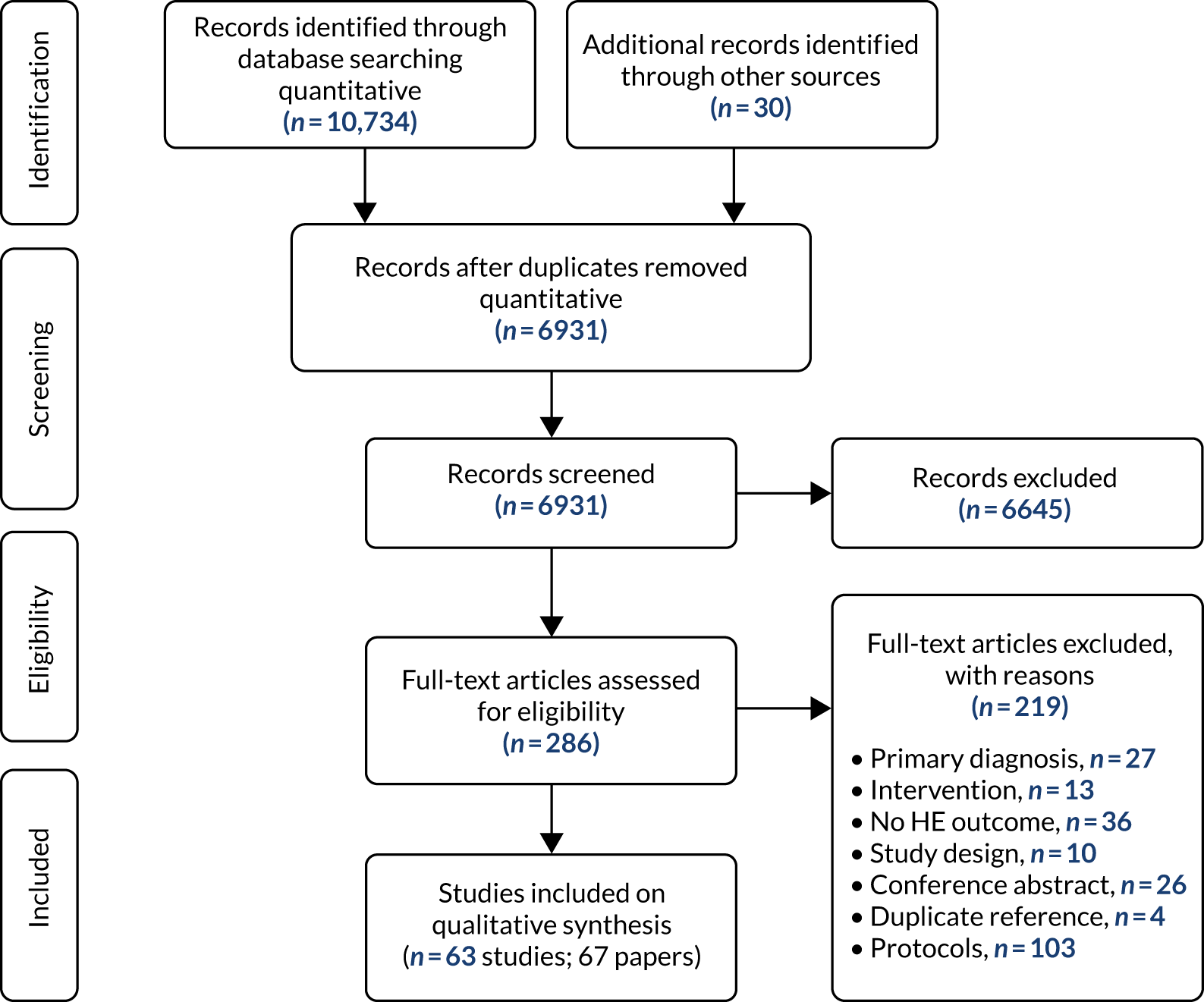

Our systematic literature search and study selection identified 63 primary studies,15,39,42,43,50–108 whose results were reported in 67 papers,15,39,42,43,50–112 as shown in Figure 6. After the removal of duplicates, 6931 of the 10,764 records originally identified remained and were screened. Of these, 6645 were excluded on title and abstract, and 286 were assessed for eligibility on their full text. A total of 219 full-text papers were excluded because:

- the primary diagnosis was not a mental health problem (n = 27);
- the intervention was not a mental health intervention (n = 13);
- the study did not include health economic outcomes (n = 36);
- it was not an economic evaluation, or it was a review rather than a primary study (n = 10);
- it was a conference abstract rather than a peer-reviewed paper (n = 26);
- it was a duplicate reference (n = 4);
- or it was a protocol (n = 103).

Report Supplementary Material 2 gives the references of the excluded studies and the reasons for exclusion.
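The flow counts above are internally consistent, and this short Python sketch makes the arithmetic explicit. The only inputs are the figures stated in the text. The variable names are illustrative, and the number of duplicates removed is derived rather than reported.

```python
# Record counts reported for search 1 (up to November 2018).
records_identified = 10_764
records_screened = 6931            # remaining after removal of duplicates
excluded_title_abstract = 6645
full_text_assessed = records_screened - excluded_title_abstract

# Reasons for excluding full-text papers, with the counts given in the text.
full_text_exclusions = {
    "primary diagnosis not a mental health problem": 27,
    "intervention not a mental health intervention": 13,
    "no health economic outcomes": 36,
    "not an economic evaluation, or a review": 10,
    "conference abstract": 26,
    "duplicate reference": 4,
    "protocol": 103,
}
excluded_full_text = sum(full_text_exclusions.values())
papers_included = full_text_assessed - excluded_full_text
duplicates_removed = records_identified - records_screened  # derived, not reported

print(duplicates_removed, full_text_assessed, excluded_full_text, papers_included)
# 3833 286 219 67
```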

FIGURE 6.

The PRISMA flow diagram for the identification and selection of cost-effectiveness studies relating to DIs in mental health (search 1: up to November 2018). HE, health economic. Reproduced with permission from Jankovic et al., Systematic review and critique of methods for economic evaluation of digital mental health interventions, Applied Health Economics and Health Policy, published 2020.45 Copyright © 2020, Springer Nature Switzerland AG.

Two papers89,109 reported the results of identical analyses from the same study. Therefore, the paper by Duarte et al.109 was incorporated in the summary with that by Littlewood et al.89 to avoid duplicate reporting. Two papers62,112 reported results from the same economic evaluation using different perspectives: an employer perspective62 and a societal perspective.112

Three papers69,110,111 used the same sample and interventions but different economic evaluation methods:

- El Alaoui et al.’s110 paper was based on the provider’s perspective and a 4-year time horizon;
- Hedman et al.69 adopted a societal perspective and a 6-month time horizon;
- Hedman et al.’s111 paper was based on a societal perspective and a 4-year time horizon.

Two papers43,51 were considered to report separate studies even though they used the same sample, because the study reported in the second paper51 was an extension of that reported in the first.43 In total, there were 66 papers with unique economic analyses and 63 studies with separate samples and interventions (hereafter referred to as 66 economic evaluations and 63 studies).
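The counting rule in this paragraph can be sketched in Python. The reference numbers are those cited above. The two dictionaries are an illustrative encoding, not part of the review's methods, of which papers were merged with which:

- A paper reporting an identical analysis is dropped from the count of economic evaluations.
- A paper reporting a different analysis of the same sample is dropped only from the count of studies.

```python
# Reference numbers of the 67 included papers: 15, 39, 42, 43 and 50-112.
papers = [15, 39, 42, 43] + list(range(50, 113))

# Paper reporting an analysis identical to another paper (Duarte et al. duplicates Littlewood et al.).
identical_analysis = {109: 89}

# Papers with a distinct analysis of the same sample and interventions as another paper.
same_sample = {112: 62, 110: 69, 111: 69}

# Unique economic analyses: drop papers that only repeat another paper's analysis.
evaluations = [p for p in papers if p not in identical_analysis]
# Separate studies: additionally collapse analyses that share a sample.
studies = [p for p in evaluations if p not in same_sample]

print(len(papers), len(evaluations), len(studies))  # 67 66 63
```

Papers 43 and 51 are deliberately absent from both dictionaries, so they remain two separate studies, as in the text.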

Approximately two-thirds of the economic evaluations (45/66) assessed interventions that target anxiety and/or depression. Other conditions included suicidal ideation (n = 1), child disruptive behaviour (n = 1), eating disorders (n = 3) and schizophrenia (n = 3). The remainder addressed addiction, including drug addiction (n = 3), alcohol addiction (n = 2) and smoking cessation (n = 8) (Table 5).
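Summing the counts per condition is a quick consistency check. They add up to 66, which matches the number of economic evaluations rather than the 63 studies, so the anxiety and/or depression share is about 68% of evaluations. The dictionary labels in this Python sketch are illustrative.

```python
# Number of economic evaluations per condition, as reported in the text.
anxiety_or_depression = 45
other_conditions = {
    "suicidal ideation": 1,
    "child disruptive behaviour": 1,
    "eating disorders": 3,
    "schizophrenia": 3,
    "drug addiction": 3,
    "alcohol addiction": 2,
    "smoking cessation": 8,
}

total = anxiety_or_depression + sum(other_conditions.values())
share = anxiety_or_depression / total
print(total, round(share, 2))  # 66 0.68
```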

| Study (first author and year) | Design | Condition | Country | Target age group | Sample age: meana (SD), range (years) | Number randomised (number of men) | Number lost to follow-up/number completed | Perspective | Time horizon |
|---|---|---|---|---|---|---|---|---|---|
| Aardoom 201650 | 4-arm RCT | Eating disorder | The Netherlands | ≥ 16 years | None reported | 354 (4) | 152/202 | Societal | 3 months |
| Andersson 201543 | 2-arm RCT | OCD | Sweden | Adults | (Mean, SD not reported), 18–67 | 101 (34) | 2/99 | Societal | 10 weeks (for economic outcomes, 4 months for clinical outcome) |
| Andersson 201551 | 2-arm RCT | OCD | Sweden | Adults | (Mean, SD not reported), 20–70 | 93 (32) | 11/82 | Societal | 24 months |
| Axelsson 201852 | 4-arm RCT | Health anxiety | Sweden | Adults | 38 (13), 20–72 | 132 (34) | 5/127 | Health system, societal | 12 weeks (post treatment) |
| Bergman Nordgren 201453 | 2-arm RCT | Anxiety (not specified) | Sweden | Adults | 35 (SD not reported), 19–68 | 100 (37) | 21/79 | Societal | 1 year |
| Bergström 201054 | 2-arm RCT | Panic disorder | Sweden | Adults | Not reported | 113 (40) | 26/87 | Health system | 6 months |
| Bolier 201455 | 2-arm RCT | Depression | The Netherlands | ≥ 21 years | 43 (12), (range not reported) | 264 (58) | 86/198 | Societal | 6 months |
| Brabyn 201656 | 2-arm RCT | Depression | UK | Adults | 41 (14), 8–77 | 369 (131) | 95/274 | Health system | 12 months |
| Budney 201557 | 3-arm RCT | Cannabis addiction | USA | Adults | None reported | 75 (gender not reported) | 30/45 | Provider | 9 months |
| Buntrock 201758 | 2-arm RCT | Depression | Germany | Adults | 45 (12) (range not reported) | 406 (106) | 118/288 | Health system, societal | 12 months |
| Burford 201359 | 2-arm RCT | Smoking | Australia | Adults | (Mean, SD not reported), 18–30 | 160 (60) | 38/122 | Health system | 6 months |
| Calhoun 201660 | 2-arm RCT | Smoking | USA | Not specified | 43 (14), (range not reported) | 413 (343) | 105/308 | Health-care provider | 12 months follow-up (lifelong QALY gain modelled) |
| Dear 201561 | 2-arm RCT | Stress, anxiety, worry | Australia | ≥ 60 years | (Mean, SD not reported), 60–81 | 72 (28) | 10/62 | Health system | 12 weeks |
| Ebert 201862 (linked with Kählke 2019112) | 2-arm RCT | Stress | Germany | Adults | 43 (10), (range not reported) | 264 (71) | 26/87 | Employer | 6 months |
| El Alaoui 2017110 | 2-arm RCT | Social anxiety | Sweden | Adults | (Mean, SD not reported), 18–64 | 126 (81) | 25/101 | Provider | 4 years |
| Garrido 201763 | 2-arm RCT | Schizophrenia | Spain | Adults | 33 (SD not reported), 18–55 | 67 (49) | 34/33 | Health system | 36 months |
| Geraedts 201564 | 2-arm RCT | Depression | The Netherlands | Adults | None reported | 231 (87) | 106/125 | Societal, employer | 12 months |
| Gerhards 201065 | 3-arm RCT | Depression | The Netherlands | 18- to 65-year olds | None reported | 303 (131) | 28/275 | Societal | 12 months |
| Graham 201366 | 3-arm RCT | Smoking | USA | Adults | 36 (11), (range not reported) | 2005 (981) | 637/1368 | Payer | 18 months |
| Guerriero 201367 | Modelling study based on pilot RCT | Smoking | UK | Not specified | Not applicable | 200 (gender not reported) | 16/184 | Health system | 31 weeks |
| Hedman 201169 | 2-arm RCT | Social anxiety | Sweden | Adults | (Mean, SD not reported), 18–64 | 126 (81) | 25/101 | Societal | 6 months |
| Hedman 201368 | 2-arm RCT | Health anxiety | Sweden | Adults | (Mean, SD not reported), 25–69 | 81 (21) | 6/75 | Societal | 12 weeks (post treatment) |
| Hedman 2014111 | 2-arm RCT | Social anxiety | Sweden | Adults | (Mean, SD not reported), 18–64 | 126 (81) | Not reported | Societal | 4 years |
| Hedman 201670 | 2-arm RCT | Health anxiety | Sweden | Adults | (Mean, SD not reported), 21–75 | 158 (33) | 16/142 | Societal | 12 weeks (post treatment) |
| Hollinghurst 201071 | 2-arm RCT | Depression | UK | 18- to 75-year-olds | 35 (12), (range not reported) | 297 (95) | 87/210 | Health system | 8 months |
| Holst 201872 | 2-arm RCT | Depression | Sweden | Adults | 37 (11), (range not reported) | 90 (38) | 25/65 | Health system, societal | 9 months |
| Hunter 201773 | 2-arm RCT | Alcohol addiction | Italy | Adults | None reported | 763 (469) | 143/620 | Health system | 12 months |
| Joesch 201274 | 2-arm RCT | Anxiety (mixed) | USA | Adults | None reported | 690 (195) | Not reported | Health system | 18 months (including treatment) |
| Jolstedt 201875 | 2-arm RCT | Anxiety (mixed) | Sweden | 8- to 12-year-olds | 10 (1), (range not reported) | 131 (61) | 18/113 | Societal | 12 weeks (3 month follow-up but with crossover) |
| Jones 200177 | 3-arm RCT | Schizophrenia | UK | Adults aged < 65 years | (Mean, SD not reported), 18–65 | 112 (gender not reported) | 56/66 | Societal | 3 months |
| Jones 201476 | 2-arm RCT | Child disruptive disorder | USA | Children aged 3–8 years | 6 (SD, range not reported) | 22 (gender not reported) | 7/15 | Provider | Not reported |
| Kählke 2019112 | 2-arm RCT | Stress | Germany | Adults | 43 (10), (range not reported) | 264 (71) | 26/87 | Employer | 6 months |
| Kass 201778 | Modelling study | Eating disorder | USA | Not specified | Not applicable | Not applicable | Not applicable | Payer | Unclear (lifetime?) |
| Kenter 201579 | 2-arm observational study | Depression and anxiety | The Netherlands | Adults | None reported | 4448 (2006) | Not reported | Provider | Varied (observational data) |
| Kiluk 201680 | 3-arm RCT | Alcohol addiction | USA | Adults | 43 (12), (range not reported) | 68 (44) | 4/62 | Not reported | 6 months |
| Koeser 201381 | Modelling study based on multiple studies | Depression | UK | Not specified | Not applicable | Not applicable | Not applicable | Health system | 8 months |
| Kolovos 201682 | 2-arm RCT | Depression | The Netherlands | Adults | 38 (11), (range not reported) | 269 (124) | 158/111 | Health system, provider, societal | 12 months |
| König 201883 | 2-arm RCT | Binge eating disorder | Germany and Switzerland | Adults | None reported | 178 (gender not reported) | 31/147 | Societal | 18 months |
| Kraepelien 201884 | 3-arm RCT | Depression | Sweden | Adults | (Mean, SD not reported), 18–67 | 945 (250) | 717/228 | Health system, societal | Health-care perspective: 3 months. Societal perspective: 12 months |
| Kumar 201885 | Modelling study based on multiple studies | GAD | USA | Adults | Not applicable | Not applicable | Not applicable | Payer, societal | Not reported |
| Lee 201787 | Modelling study from multiple sources | Depression | Australia | Adolescents aged 11–17 years | Not applicable | Not applicable | Not applicable | Health system | 10 years |
| Lee 201786 | Modelling study from multiple sources | Depression and anxiety | Australia | Adults aged < 60 years | None reported | Not applicable | Not applicable | Health system | 12 months |
| Lenhard 201788 | 2-arm RCT | OCD | Sweden | Adolescents aged 12–17 years | 15 (2), (range not reported) | 67 (36) | 7/60 | Societal | 12 weeks |
| Littlewood 201589 and Duarte 2017109 | 3-arm RCT | Depression | UK | Adults | 40 (13), (range not reported) | 691 (229) | 230/461 | Health system | 24 months |
| Lovell 201739 | 3-arm RCT | OCD | UK | Adults | 33 (SD not reported), 18–77 | 475 (178) | 141/334 | Health system, societal | 12 months |
| McCrone 200415 | 2-arm RCT | Depression and anxiety | UK | Adults | (Mean, SD not reported), 18–75 | 274 (72) | 12/262 | Health system, societal | 6 months |
| McCrone 200742 | 3-arm RCT | OCD | USA and Canada | Adults | None reported | 218 (gender not reported) | 42/176 | Provider | Not reported |
| McCrone 200990 | 3-arm RCT | Panic disorder | UK | Adults | 38 (13), (range not reported) | 90 (28) | 30/60 | Health system | 1 month |
| Mihalopoulos 200591 | Modelling study from multiple sources | Panic disorder | Australia | Not specified | None reported | Not applicable | Not applicable | Health system | 12 months (reported in original model paper) |
| Murphy 201692 | 2-arm RCT | Addiction to substances (mixed) | USA | Adults | 35 (11), (range not reported) | 507 (315) | Not reported | Payer, provider | 36 weeks |
| Naughton 201793 | 2-arm RCT | Smoking | UK | Not specified | 27 (6), (range not reported) | 407 (gender not reported) | 146/261 | Payer | 11–36 weeks (final follow-up at 36 weeks of gestation) |
| Naveršnik 201394 | Modelling study based on pilot trial | Depression | Slovenia | Adults | Not applicable | 46 (gender not reported) | 24/22 | Health system | 6 months |
| Olmstead 201095 | 2-arm RCT | Addiction (mixed) | USA | Adults | 42 (10) (range not reported) | 77 (46) | 23/54 | Payer | Unclear (appears to be 8 weeks) |
| Phillips 201496 | 2-arm RCT | Depression and/or anxiety | UK | Adults | None reported | 637 (296) | 406/231 | Not reported | 12 weeks (total) |
| Romero-Sanchiz 201797 | 3-arm RCT | Depression | Spain | 18- to 65-year-olds | 43 (SD, range not reported) | 296 (70) | 83/203 | Societal | 12 months |
| Smit 201398 | 3-arm RCT | Depression | Australia | Adults | None reported | 414 (165) | 183/231 | Health system | 6 months |
| Solomon 201599 | Modelling study from multiple sources | Smoking | Australia | Adults | Not applicable | Not applicable | Not applicable | Societal | 12 months |
| Španiel 2012100 | 2-arm RCT | Schizophrenia | Czech Republic and Slovakia | 18- to 60-year-olds | None reported | 158 (90) | 63/95 | Not reported | 12 months |
| Stanczyk 2014101 | 3-arm RCT | Smoking | The Netherlands | Adults | None reported | 2551 (1273) | 1342/1209 | Health system, societal | 12 months |
| Titov 2009102 | 2-arm RCT | Social anxiety | Australia | Adults | None reported | 193 (gender not reported) | 22/171 | Other | 10 weeks |
| Titov 2015103 | 2-arm RCT | Depression | Australia | Elderly (no age specified) | (Mean, SD not reported), 61–78 | 54 (14) | 2/52 | Health system | 8 weeks |
| van Spijker 2012104 | 2-arm RCT | Suicidal ideation | The Netherlands | Adults | 41 (14), (range not reported) | 236 (80) | 25/111 | Societal | 6 weeks |
| Warmerdam 2010105 | 3-arm RCT | Depression | The Netherlands | Adults | 45 (12), (range not reported) | 263 (76) | 112/151 | Societal | 12 weeks |
| Wijnen 2018106 | 2-arm RCT | Depression | The Netherlands | Adults | (Mean, SD not reported), 18–81 | 329 (80) | 92/237 | Health system, societal | 3 months |
| Wright 2017107 | 2-arm RCT | Depression | UK | 12- to 18-year-olds | None reported | 91 (gender not reported) | 36/55 | Provider, societal | 4 months |
| Wu 2014108 | Modelling study based on RCT | Smoking | UK | Adults | 45 (12), (range not reported) | 6911 (3163) | 1737/5174 | Health system | Lifetime |

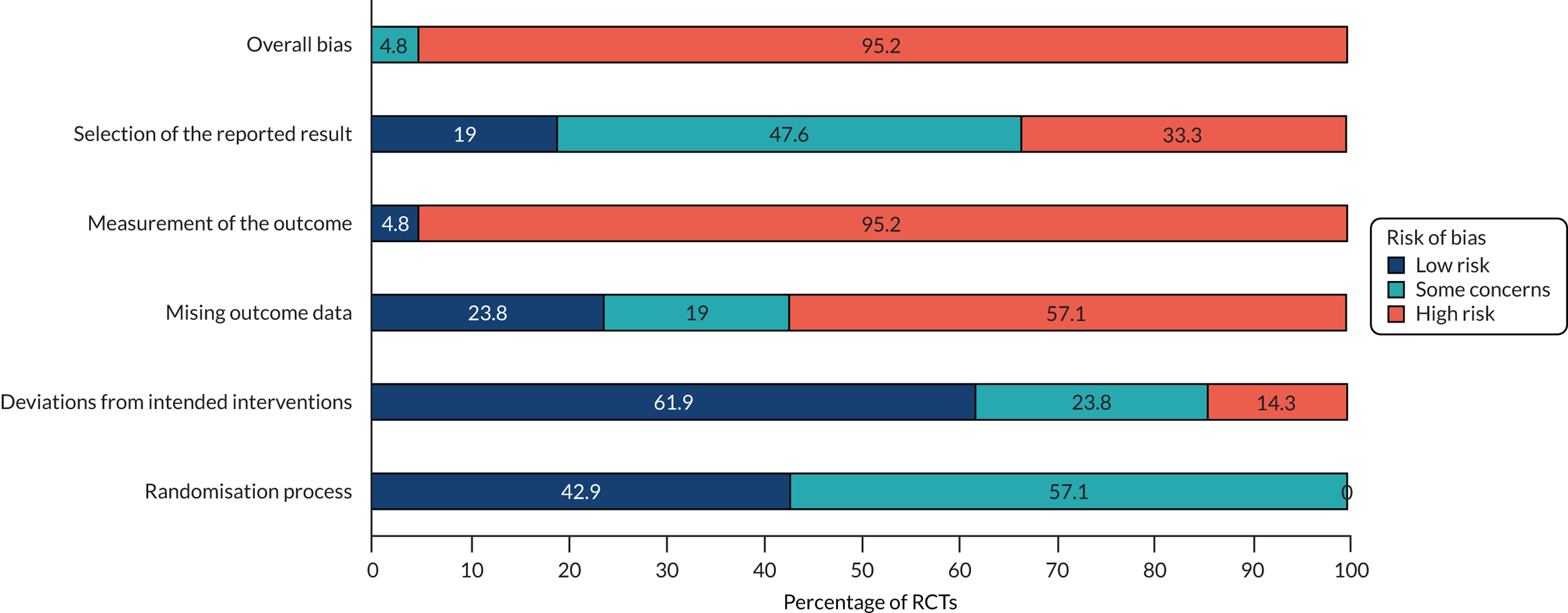

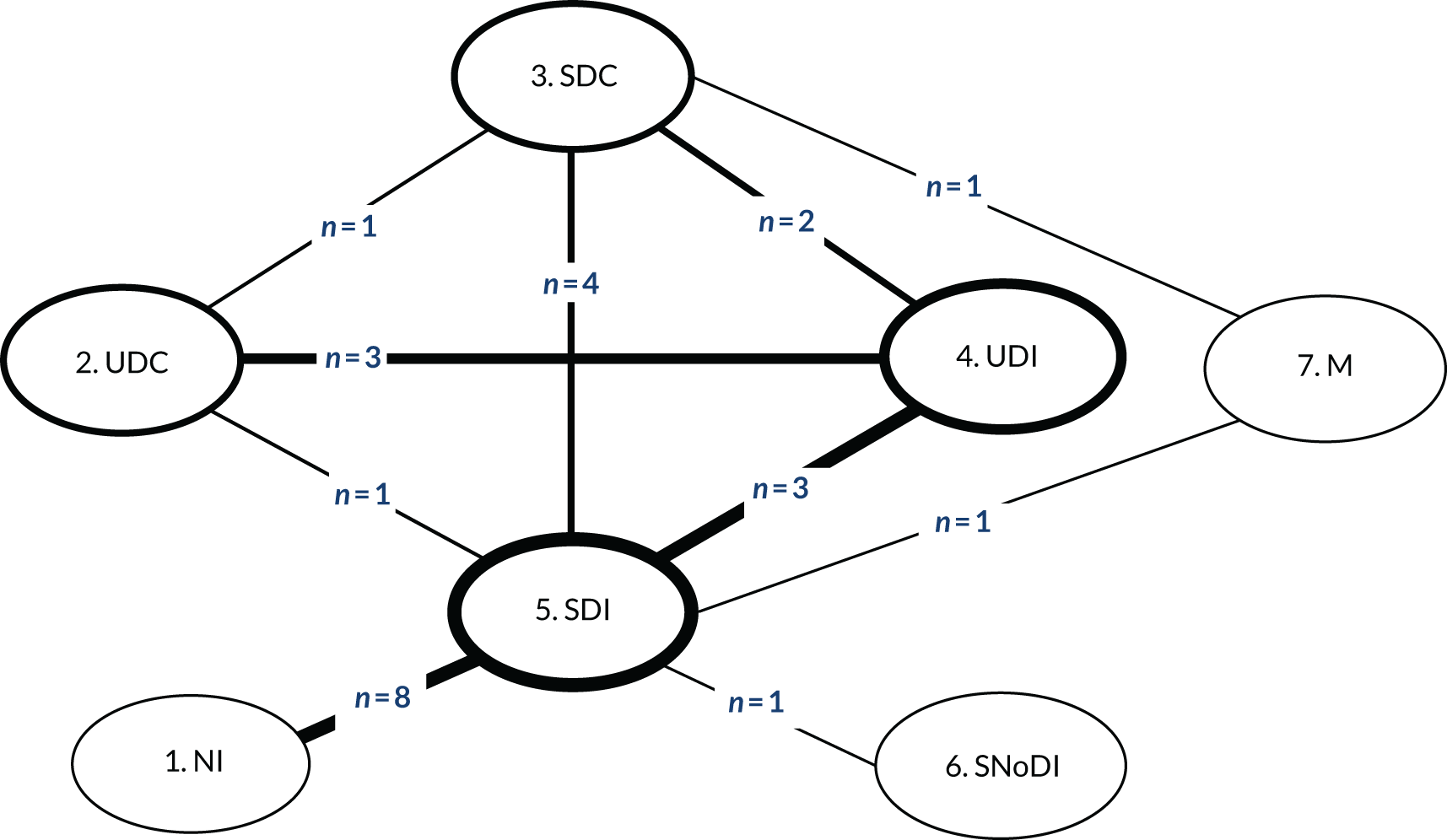

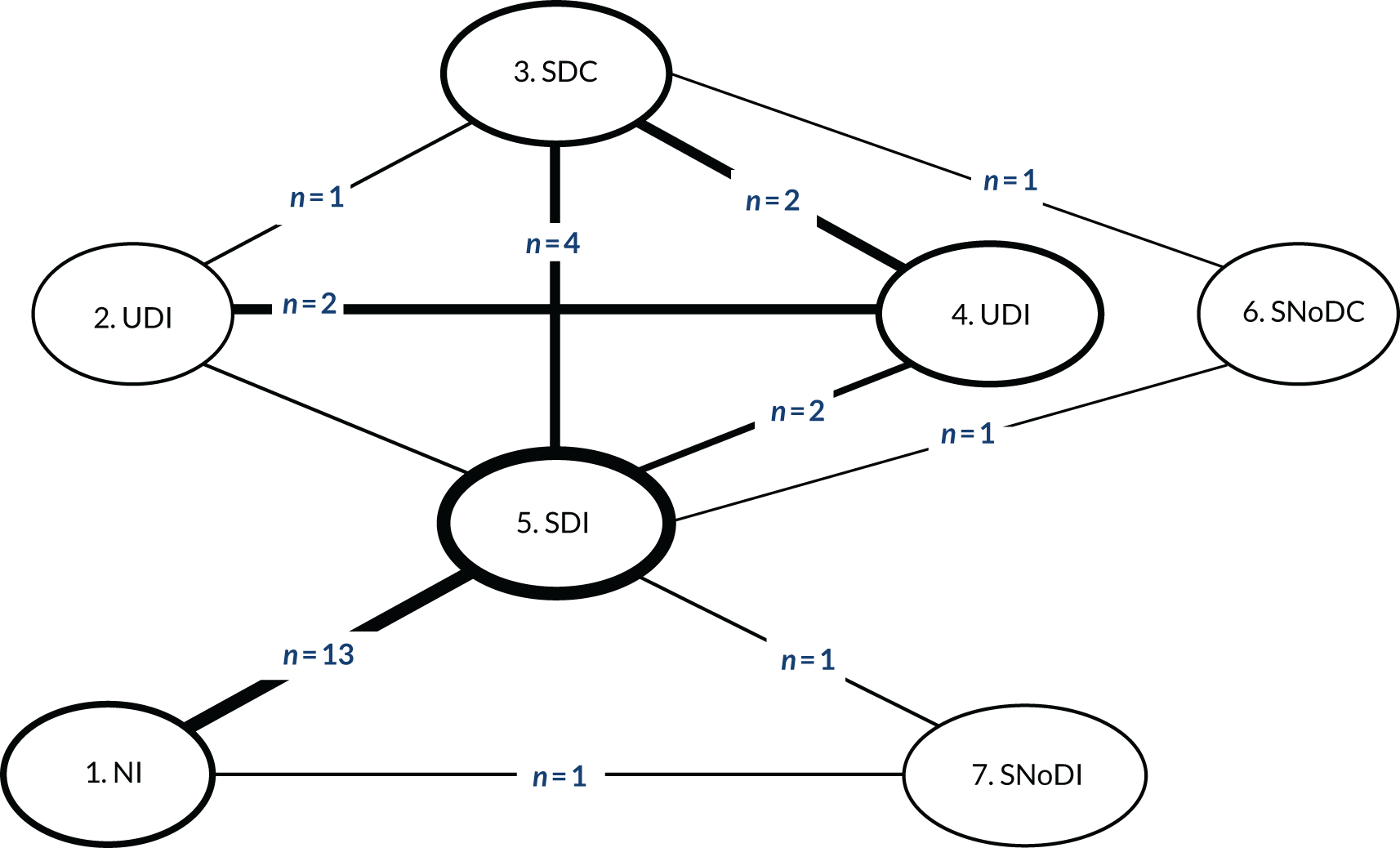

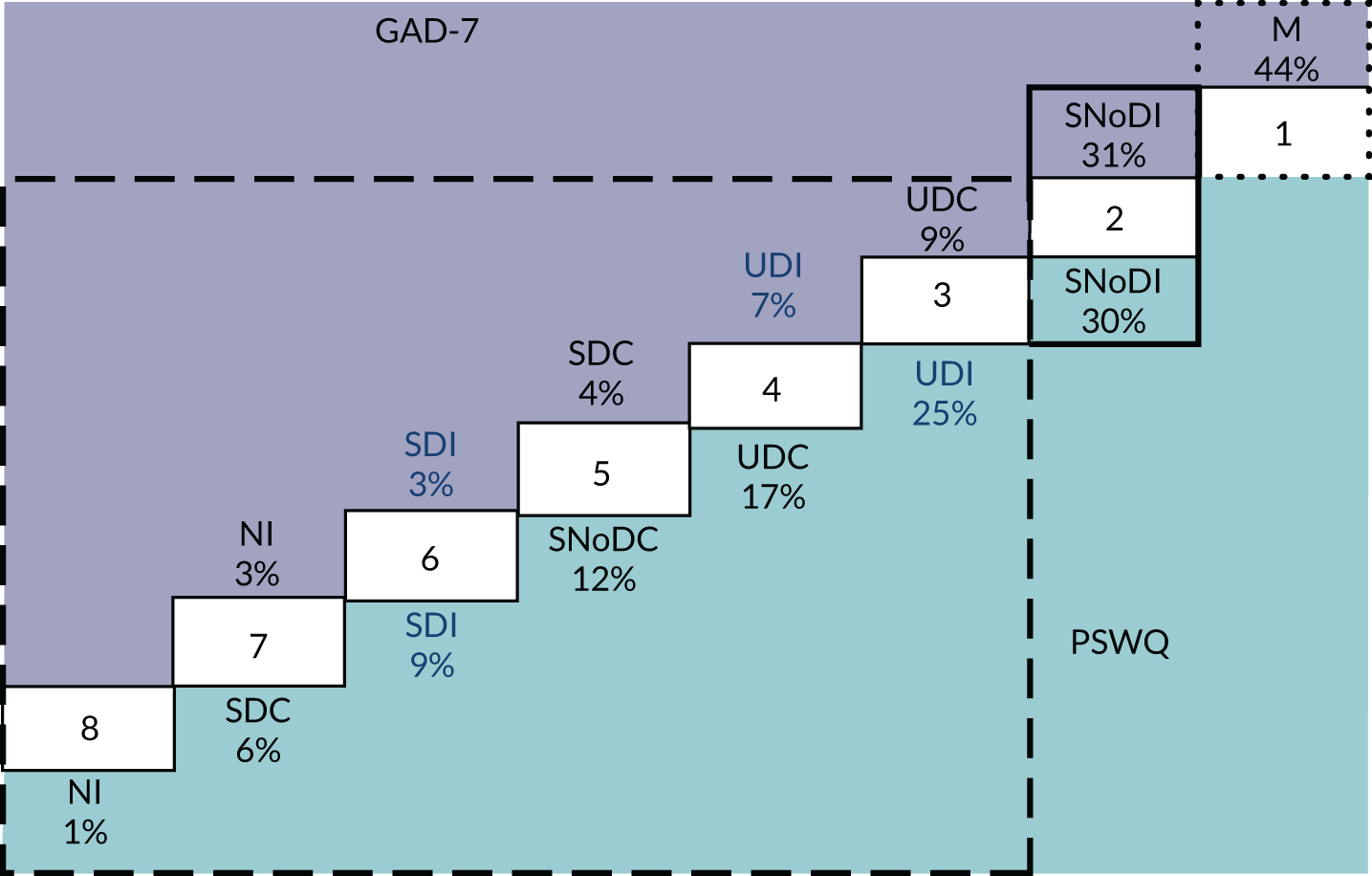

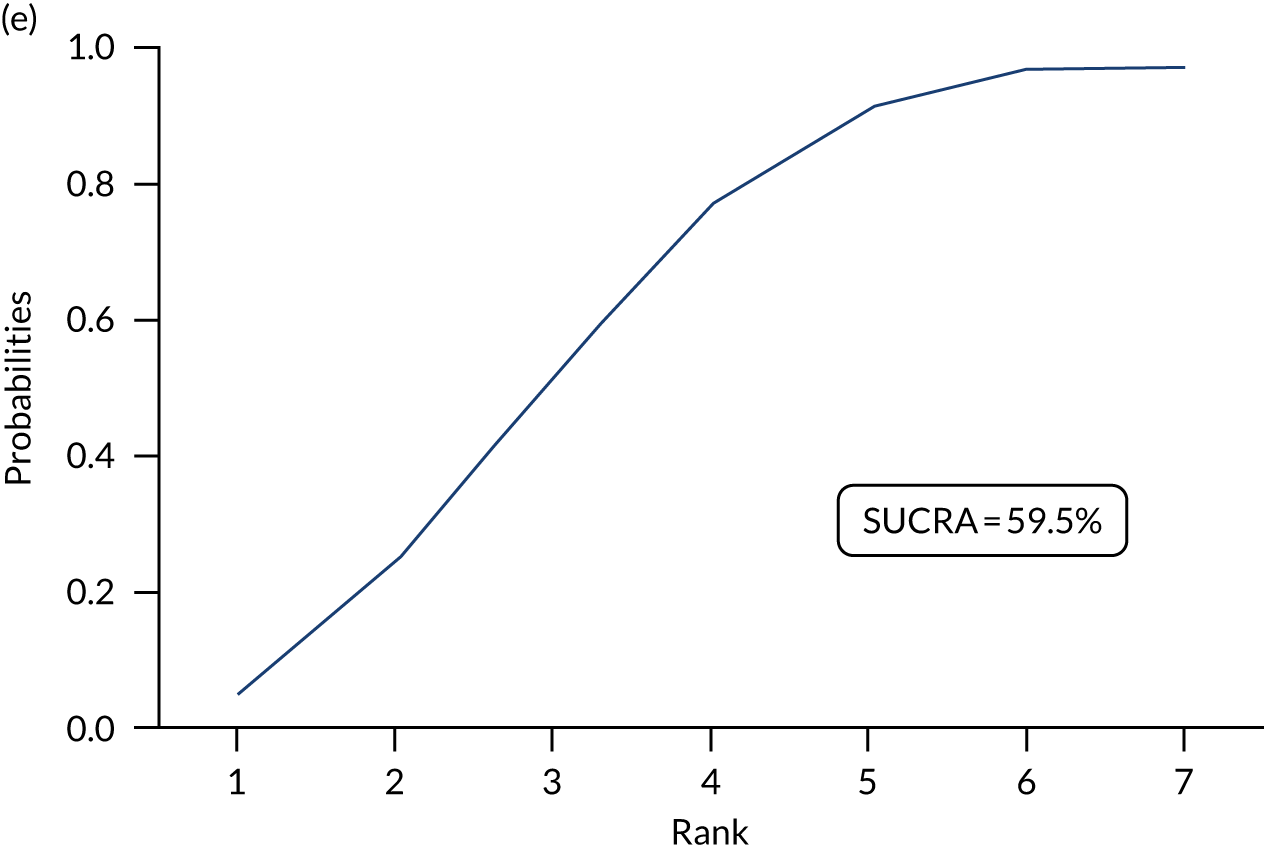

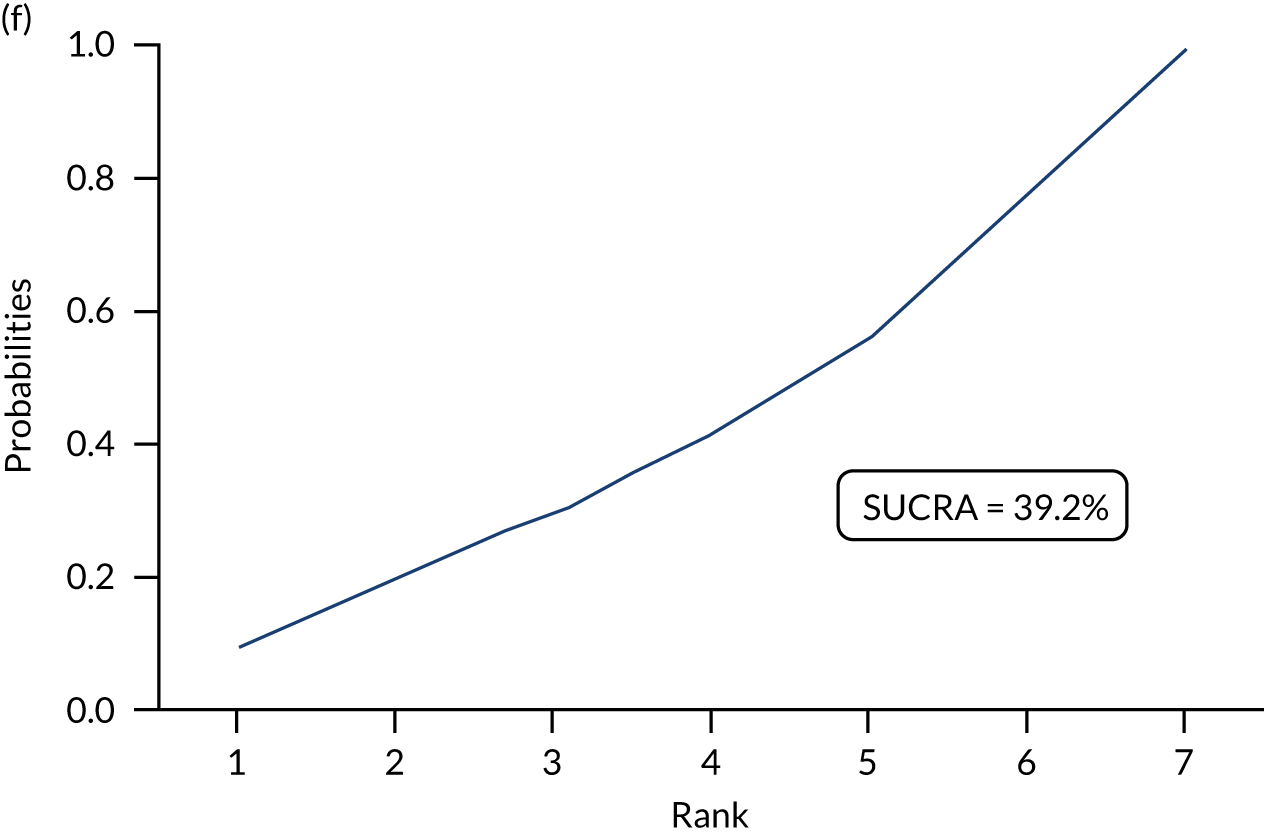

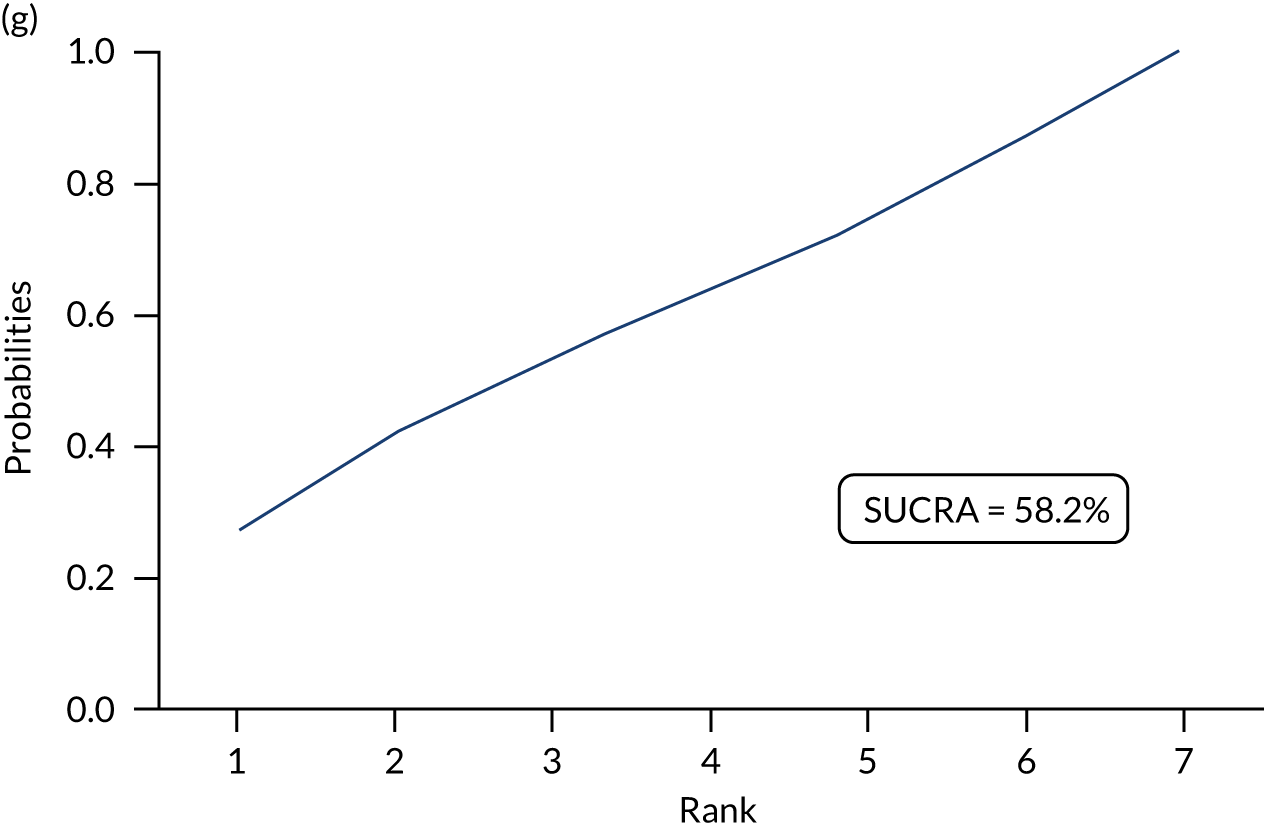

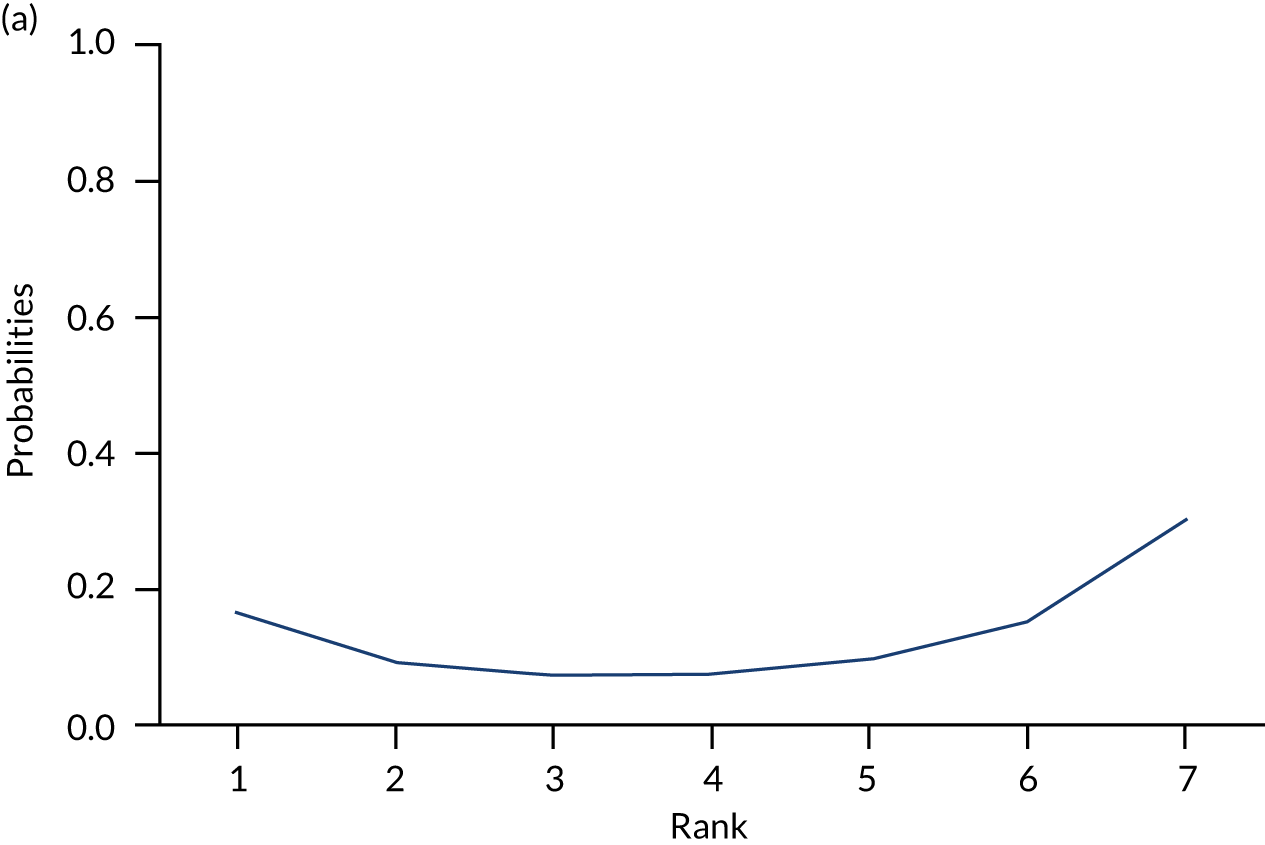

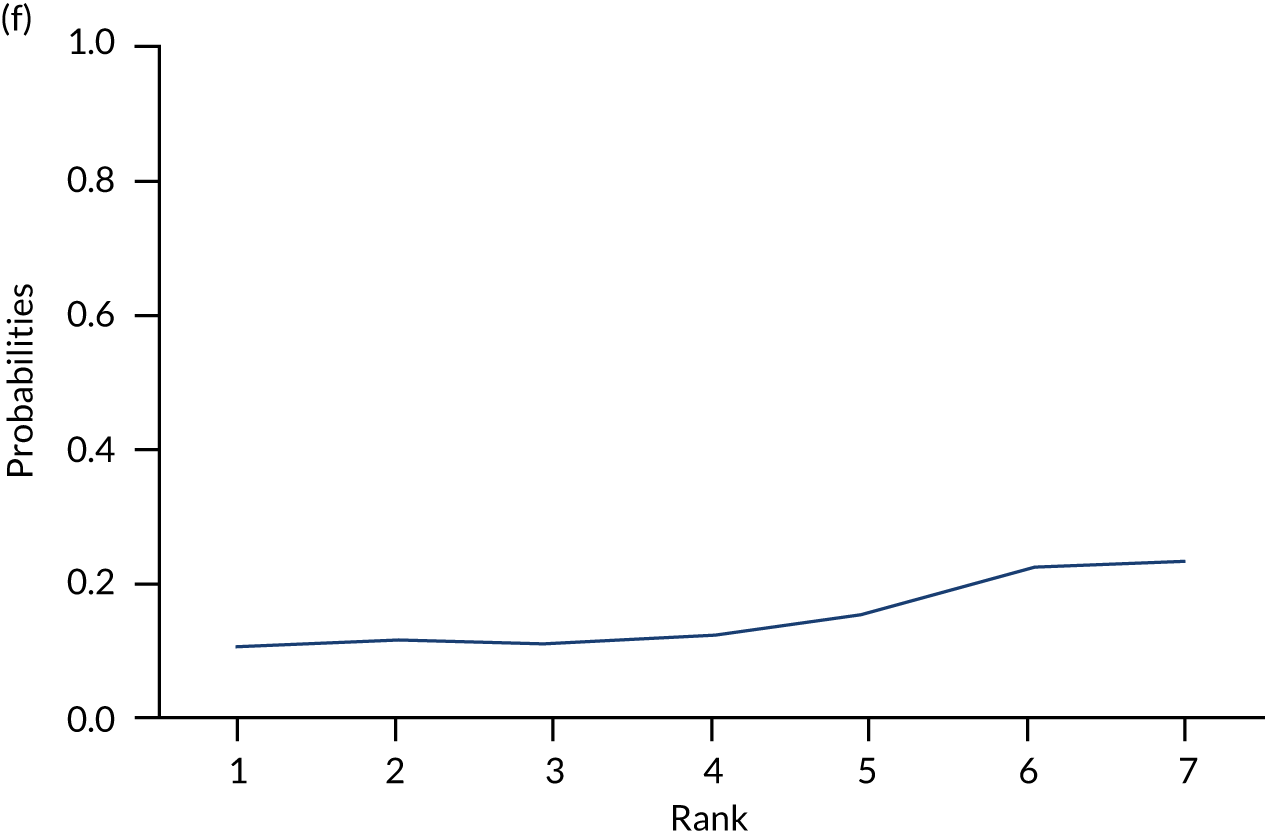

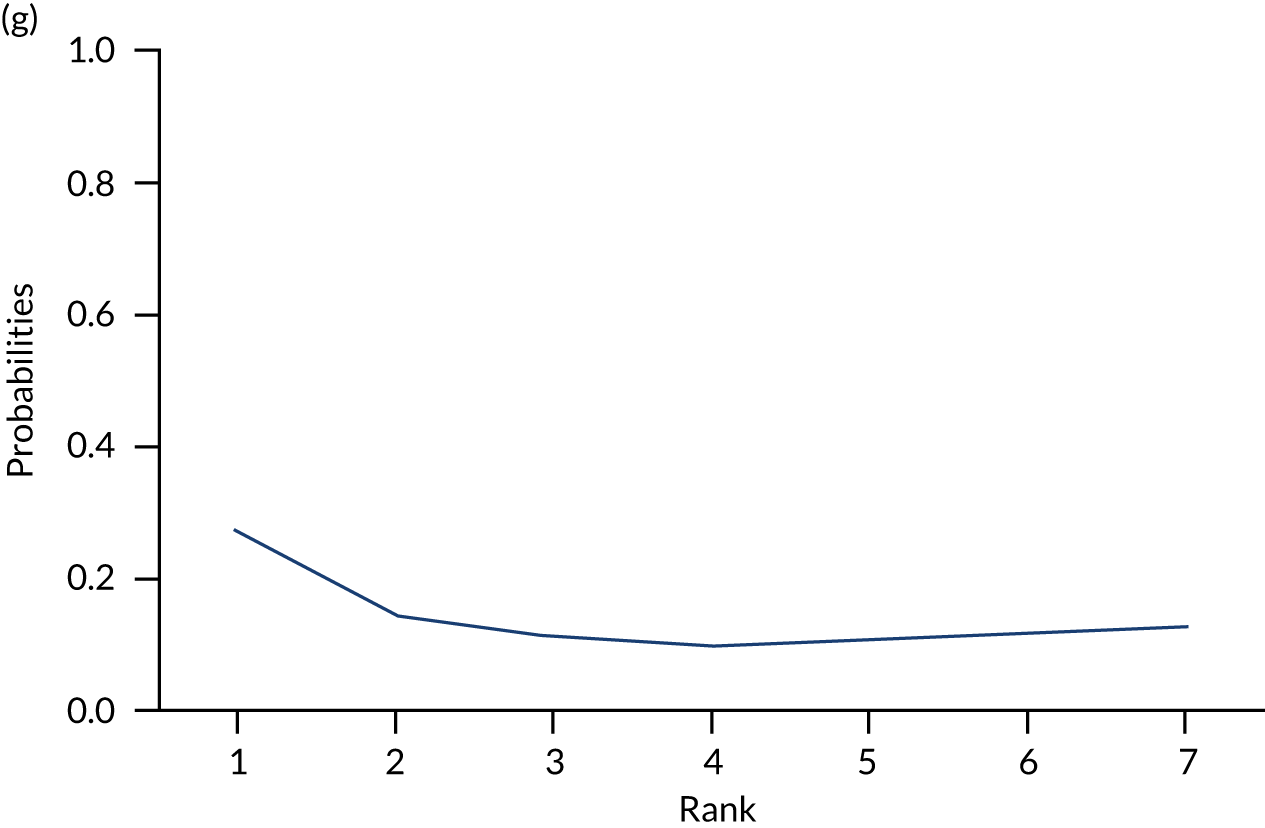

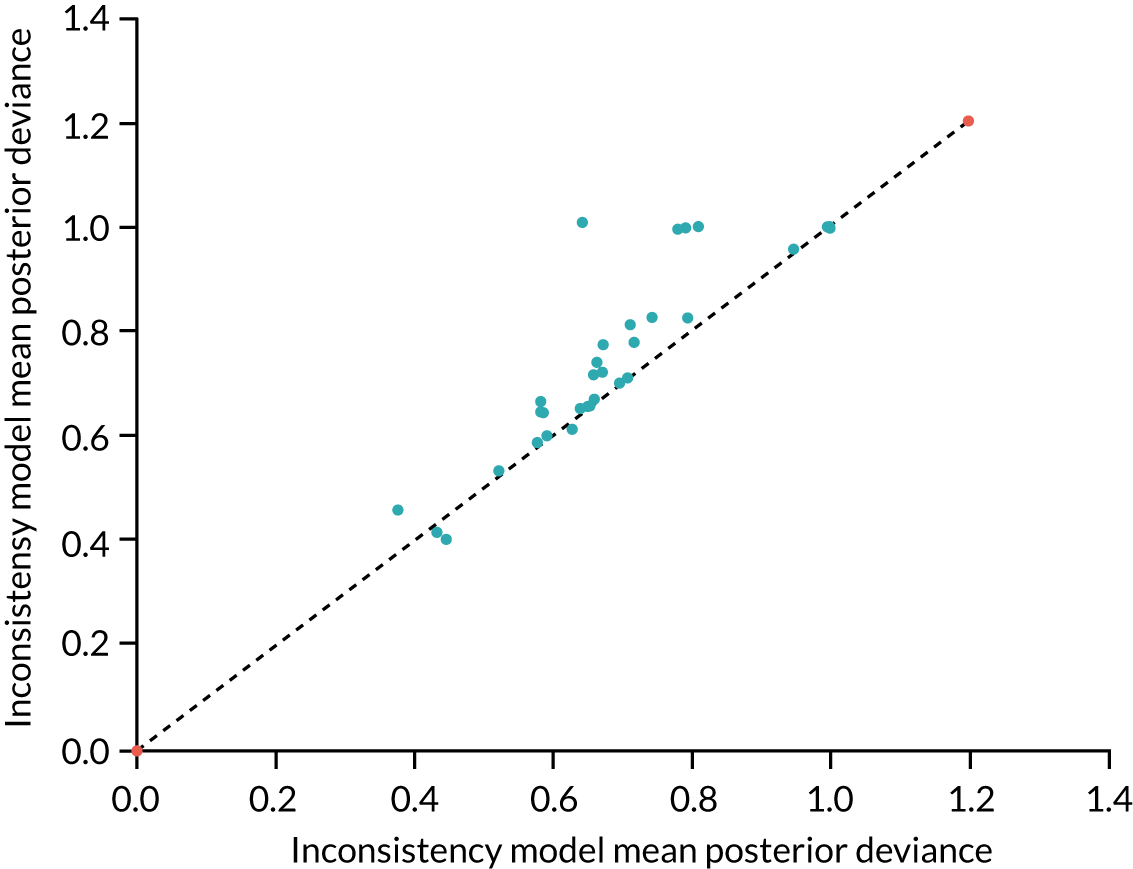

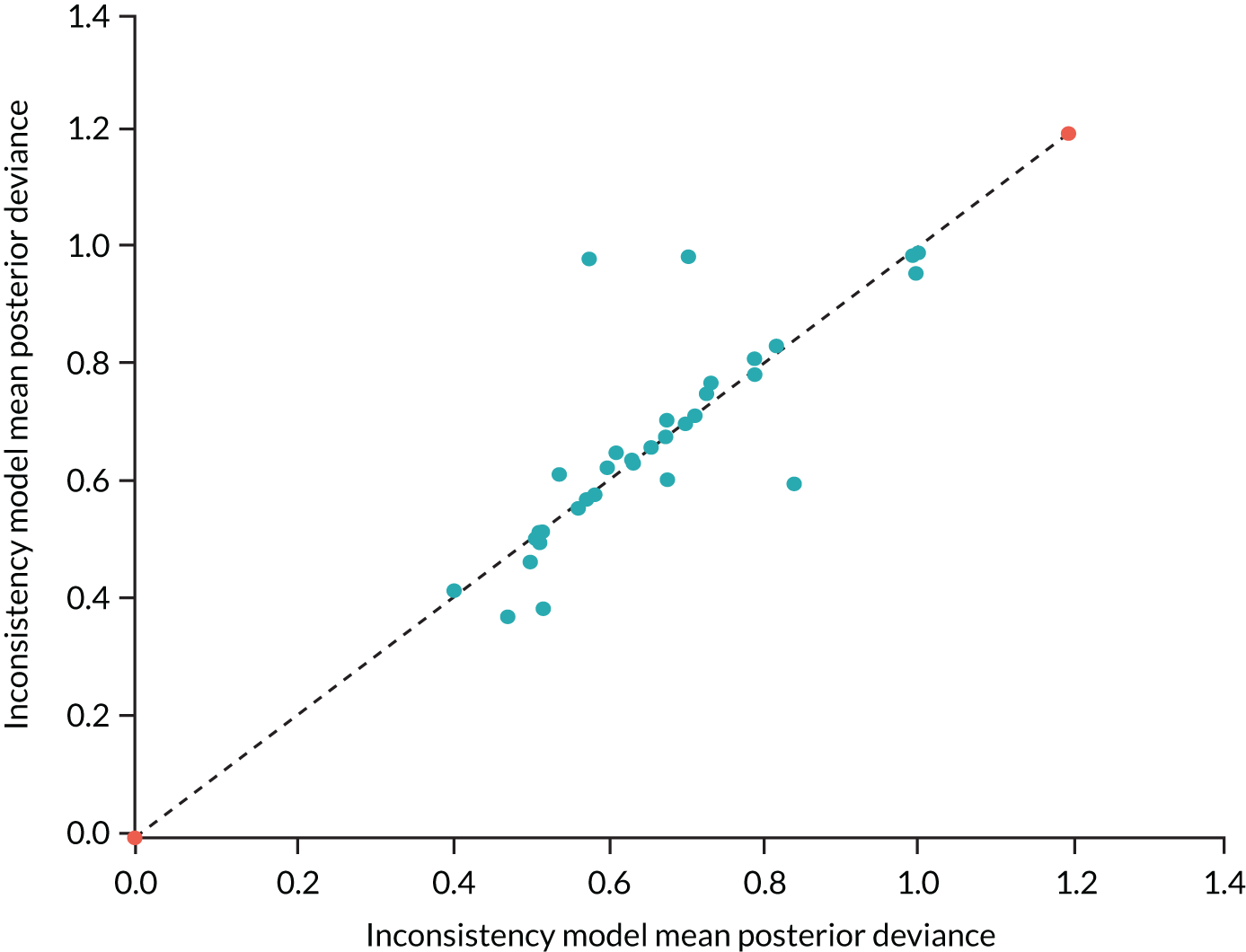

The interventions varied both between and within individual conditions, in terms of their underlying principles (e.g. CBT, guided relaxation, self-help, exposure therapy, motivational support for smoking cessation), content (e.g. the number of modules), mode of delivery (e.g. mobile, computer or text based, or completed at home or at clinic), type of support (e.g. online chat, telephone, face to face), frequency of support (e.g. weekly, ad hoc), person delivering support (e.g. clinician, assistant, lay person) and extent of support (e.g. administrative support only, additional counselling) (see Table 10).
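To compare interventions systematically, the seven dimensions listed above could be coded as one record per intervention. The Python sketch below illustrates this. The class `InterventionProfile`, the helper `differing_dimensions` and the example values (including "8 modules") are hypothetical and not drawn from any included study. Support-related fields are optional so that unsupported self-help can be represented.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass(frozen=True)
class InterventionProfile:
    """Hypothetical record of the dimensions along which the DIs varied."""
    principle: str                      # e.g. "CBT", "exposure therapy", "self-help"
    content: str                        # e.g. number of modules
    delivery_mode: str                  # e.g. "mobile", "computer at home", "text"
    support_type: Optional[str]         # e.g. "online chat", "telephone"; None if unsupported
    support_frequency: Optional[str]    # e.g. "weekly", "ad hoc"
    support_provider: Optional[str]     # e.g. "clinician", "assistant", "lay person"
    support_extent: Optional[str]       # e.g. "administrative only", "additional counselling"


def differing_dimensions(a: InterventionProfile, b: InterventionProfile) -> List[str]:
    """Return the names of the dimensions on which two interventions differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Hypothetical guided and unguided versions of the same computerised CBT programme.
guided = InterventionProfile("CBT", "8 modules", "computer at home",
                             "telephone", "weekly", "clinician", "additional counselling")
self_help = InterventionProfile("CBT", "8 modules", "computer at home",
                                None, None, None, None)
print(differing_dimensions(guided, self_help))
# ['support_type', 'support_frequency', 'support_provider', 'support_extent']
```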