Notes

Article history

The research reported in this issue of the journal was commissioned and funded by the Evidence Synthesis Programme on behalf of NICE as award number NIHR135755. The protocol was agreed in November 2022. The draft manuscript began editorial review in February 2023 and was accepted for publication in December 2023. The authors have been wholly responsible for all data collection, analysis and interpretation, and for writing up their work. The HTA editors and publisher have tried to ensure the accuracy of the authors’ manuscript and would like to thank the reviewers for their constructive comments on the draft document. However, they do not accept liability for damages or losses arising from material published in this article.

Permissions

Copyright statement

Copyright © 2024 Colquitt et al. This work was produced by Colquitt et al. under the terms of a commissioning contract issued by the Secretary of State for Health and Social Care. This is an Open Access publication distributed under the terms of the Creative Commons Attribution CC BY 4.0 licence, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. See: https://creativecommons.org/licenses/by/4.0/. For attribution the title, original author(s), the publication source – NIHR Journals Library, and the DOI of the publication must be cited.

Chapter 1 Introduction

Sections of this chapter have been reproduced from the protocol,1 available from the National Institute for Health and Care Excellence (NICE) website. © NICE 2022 Early Value Assessment Report Commissioned by the NIHR Evidence Synthesis Programme on Behalf of the National Institute for Health and Clinical Excellence – Protocol. Title of Project: Artificial Intelligence Software for Analysing Chest X-ray Images to Identify Suspected Lung Cancer. Available from www.nice.org.uk/guidance/hte12/documents/final-protocol All rights reserved. Subject to Notice of rights.

National Institute for Health and Care Excellence guidance is prepared for the NHS in England. All NICE guidance is subject to regular review and may be updated or withdrawn. NICE accepts no responsibility for the use of its content in this product/publication.

Purpose of the decision to be made

Lung cancer is one of the most common types of cancer in the UK. 2 In the early stages of the disease, people usually do not have symptoms, which means that lung cancer is often diagnosed late. 3 The 5-year survival rate for lung cancer is low, at below 10%. 2 Early diagnosis may improve survival. 3 NICE has identified software that has an artificial intelligence (AI)-developed algorithm (referred to hereafter as AI software) as potentially useful in assisting with the identification of suspected lung cancer.

The purpose of this early value assessment (EVA) is to assess the evidence on adjunct AI software for analysing chest X-rays (CXRs) for suspected lung cancer and identify evidence gaps to help direct data collection and further research. A conceptual modelling process will be undertaken to inform discussion of what would be required to develop a fully executable cost-effectiveness model for future economic evaluation.

Population

There are two populations of interest in this EVA: (1) people referred for a CXR from primary care because they have symptoms suggestive of lung cancer (symptomatic population) and (2) people referred for a CXR from primary care for reasons unrelated to lung cancer (incidental population).

Condition

Approximately 43,000 new cases of lung cancer are diagnosed annually in the UK. 2 The incidence of lung cancer is highest among older people. 4 It is rare in people aged < 40 years, and > 40% of people diagnosed with lung cancer are aged ≥ 75 years. 3

Lung cancer occurs when abnormal cells multiply in an uncontrolled way to form a tumour in the lung. 5 Cancer that begins in the lungs is called primary lung cancer. Cancer that begins elsewhere and spreads to the lungs is called secondary lung cancer. There are two main forms of primary lung cancer: non-small-cell lung cancer and small-cell lung cancer. These are named after the type of cell in which the cancer started growing. Non-small-cell lung cancer is the more common type (80–85% of cases) and can be classified into one of three kinds: squamous cell carcinoma, adenocarcinoma or large-cell carcinoma. Small-cell lung cancer is less common but usually spreads faster than non-small-cell lung cancer. 3 Most cases of lung cancer are caused by smoking. Although people who have never smoked can also develop the condition, smoking cigarettes is responsible for > 70% of cases. 3 People who smoke are 25 times more likely to get lung cancer than people who do not smoke. Other exposures can also increase the risk of lung cancer. These include radon gas (naturally occurring), occupational exposure to certain chemicals and substances, and pollution. 3

Symptoms of lung cancer include persistent cough, coughing up blood and shortness of breath. However, in the early stages of the disease people usually do not have symptoms. 3 This means that lung cancer is often diagnosed late. In 2018, > 65% of lung cancers in England were diagnosed at stage 3 or 4. Survival rates for lung cancer are very low. Recent estimates suggest 5-year survival rates of 10%. 3 The NHS Long Term Plan sets out the NHS’s ambition to diagnose 75% of all cancers at an early stage by 2028. 6

Technologies under assessment

Artificial intelligence combines computer science and data sets to enable problem-solving. Machine learning and deep learning are subfields of AI. They comprise AI algorithms that seek to create expert systems to make predictions or classifications based on data input. 7 Many paradigms of deep learning have been developed, but the most used of these is the convolutional neural network. 8

This assessment covers the use of AI software as an adjunct to an appropriate radiology specialist to assist in the identification of suspected lung cancer. AI technologies subject to this assessment are standalone software platforms developed with deep-learning algorithms to interpret CXRs. The algorithms are fixed but updated periodically. The AI software automatically interprets radiology images from the CXR to identify abnormalities or suspected abnormalities. The abnormalities detected and the methods of flagging the location and type of abnormalities differ between different AI technologies. For example, a CXR may be flagged as suspected lung cancer when a lung nodule, lung mass or hilar enlargement, or a combination of these, is identified. A technology may classify CXRs into those with and those without a nodule, or it may identify several different abnormalities or lung diseases. Fourteen companies producing AI software for analysing CXR images were included in the NICE scope. 9

Comparators

The comparator for this assessment is CXR images reviewed by an appropriate radiology specialist (e.g. radiologist or radiographer) without assistance from AI software.

Reference standards

Following CXR, people with suspected lung cancer should be offered a contrast-enhanced chest computed tomography (CT) scan to diagnose and stage the disease (contrast medium should only be given with caution to people with known renal impairment). The liver, adrenals and lower neck should also be included in the scan. 10 If the CT scan indicates that there may be cancer, the type and sequence of investigations may vary but typically include a positron emission tomography and computed tomography (PET-CT) scan and an image-guided biopsy. Other methods that may be used include magnetic resonance imaging, endobronchial ultrasound-guided transbronchial needle aspiration, and endoscopic ultrasound-guided fine-needle aspiration. 10 The PET-CT scan can show where there are active cancer cells, which can help with diagnosis and choosing the best treatment. 3

Care pathway

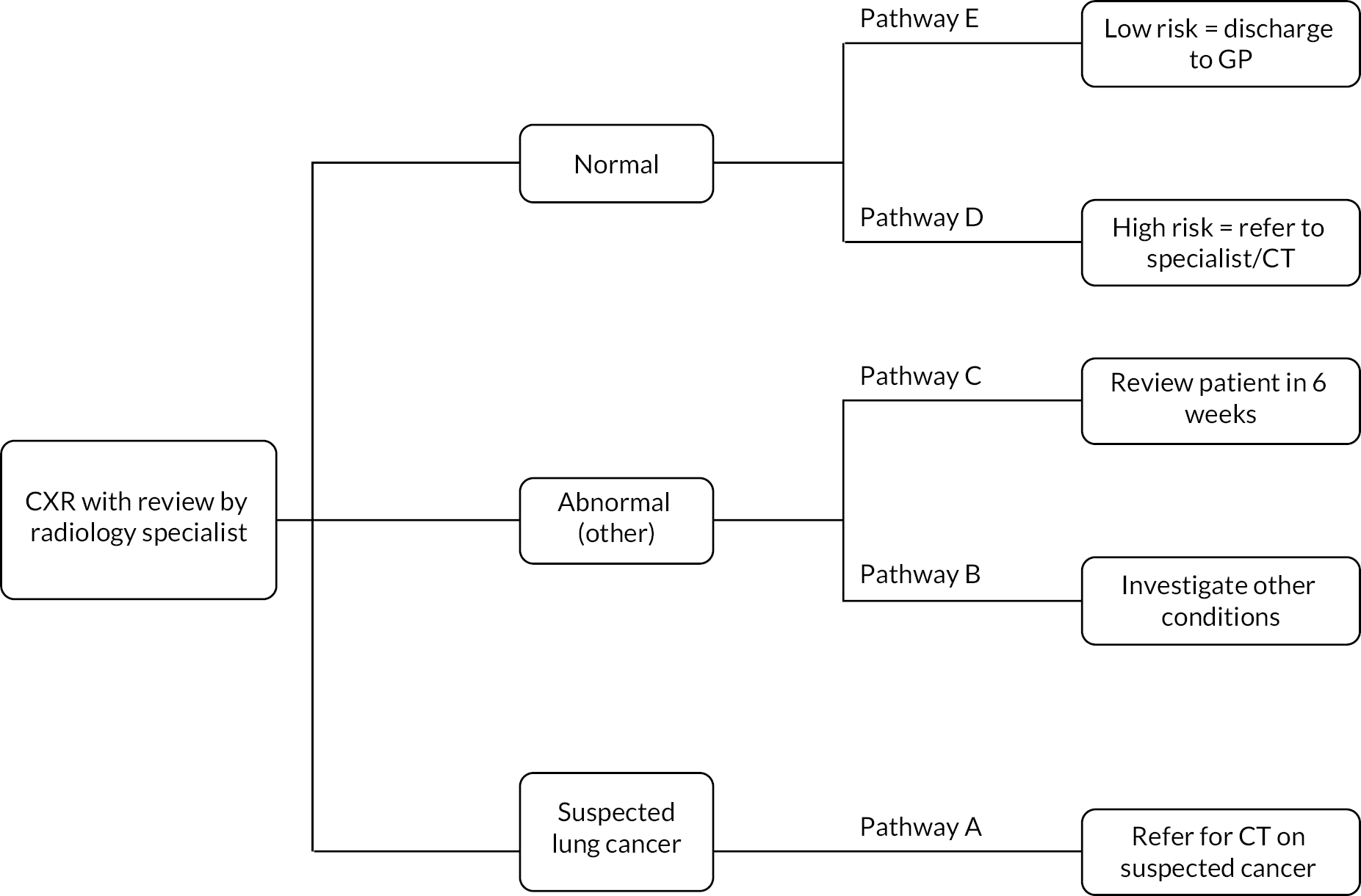

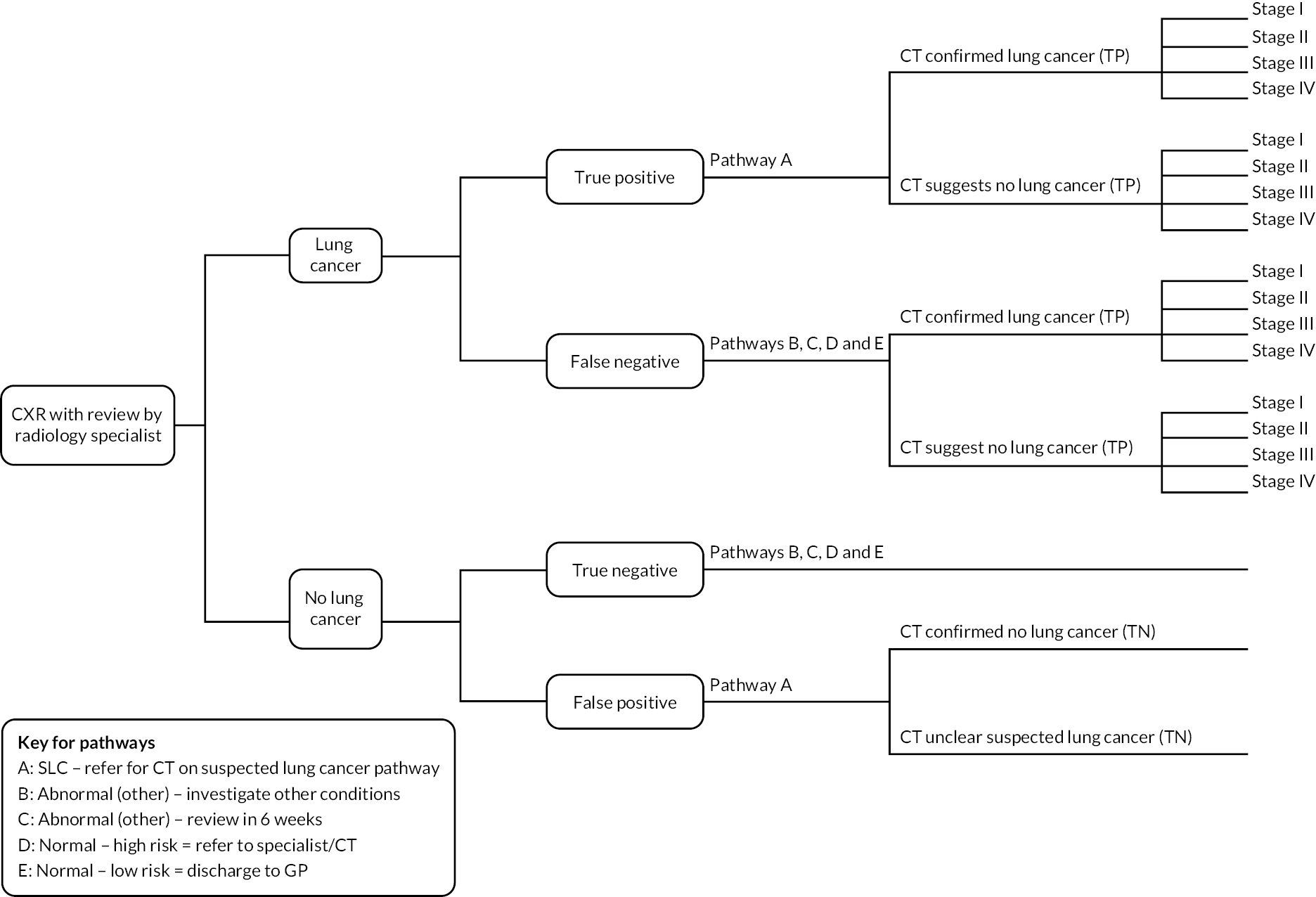

Figure 1 depicts the care pathway for the recognition and referral of suspected lung cancer as outlined in NICE Guideline NG12. 11 The identification of people with signs and symptoms suggestive of lung cancer often happens in primary care. The NICE guideline on recognition and referral for suspected lung cancer recommends that people aged ≥ 40 years be offered an urgent CXR (within 2 weeks of referral) if they have two or more symptoms of lung cancer, or if they have ever smoked and have at least one of the following unexplained symptoms: cough, fatigue, shortness of breath, chest pain, weight loss and appetite loss. 11 An urgent CXR should also be considered for people aged ≥ 40 years if they have a persistent or recurrent chest infection, finger clubbing, enlarged lymph nodes near the collarbone or in the neck (supraclavicular lymphadenopathy or persistent cervical lymphadenopathy), chest signs consistent with lung cancer or an increased platelet count (thrombocytosis). If the CXR findings suggest lung cancer, referral to secondary care should be made using a suspected cancer pathway referral for an appointment within 2 weeks. If the CXR is normal (without any clinically relevant lung abnormalities), high-risk patients, that is those who present with ongoing, unexplained symptoms, are referred to secondary care. Low-risk patients are discharged. In this EVA, AI software is applied to CXRs of patients who are referred for CXR from primary care. Referrals for CXR outside primary care are beyond the scope of this project.

FIGURE 1. Care pathway for the recognition and referral of suspected lung cancer.
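
The criteria for offering an urgent CXR described above can be written as a simple rule. The Python sketch below is illustrative only. It encodes the 'offer' criteria as summarised in this chapter, assumes that 'two or more symptoms' refers to the same list of unexplained symptoms, and omits the separate 'consider an urgent CXR' criteria. The names `Patient` and `offer_urgent_cxr` are hypothetical and do not come from NG12 or from any of the AI technologies.

```python
from dataclasses import dataclass, field

# Unexplained symptoms listed in the care pathway text (assumed to be the
# reference list for both the 'two or more' and the 'at least one' criteria).
UNEXPLAINED_SYMPTOMS = frozenset({
    "cough", "fatigue", "shortness of breath",
    "chest pain", "weight loss", "appetite loss",
})


@dataclass
class Patient:
    """Hypothetical minimal patient record used only for this illustration."""
    age: int
    ever_smoked: bool
    symptoms: set = field(default_factory=set)


def offer_urgent_cxr(patient: Patient) -> bool:
    """Return True if the 'offer an urgent CXR' criteria in the text are met."""
    if patient.age < 40:
        return False
    n_symptoms = len(patient.symptoms & UNEXPLAINED_SYMPTOMS)
    # Two or more symptoms, or ever smoked with at least one symptom.
    return n_symptoms >= 2 or (patient.ever_smoked and n_symptoms >= 1)


if __name__ == "__main__":
    print(offer_urgent_cxr(Patient(age=62, ever_smoked=True, symptoms={"cough"})))
```

A rule of this kind describes only who enters the pathway; it says nothing about how the resulting CXR is read, which is where the AI software under assessment would be applied.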

Chapter 2 Decision questions and objectives

Sections of this chapter have been reproduced from the protocol,1 available from the NICE website. © NICE 2022 Early Value Assessment Report Commissioned by the NIHR Evidence Synthesis Programme on Behalf of the National Institute for Health and Clinical Excellence – Protocol. Title of Project: Artificial Intelligence Software for Analysing Chest X-ray Images to Identify Suspected Lung Cancer. Available from www.nice.org.uk/guidance/hte12/documents/final-protocol All rights reserved. Subject to Notice of rights.

The overall aim of this project was to identify evidence on adjunct AI software for analysing CXRs for suspected lung cancer, and identify evidence gaps to help direct data collection and further research. A conceptual modelling process was undertaken to inform discussion of what would be required to develop a fully executable cost-effectiveness model for future economic evaluation. The available evidence base was examined via an EVA. The assessment was not intended to replace the need for a full assessment (Diagnostic Assessment Report) or to provide sufficient detail or synthesis to enable a recommendation to be made about whether AI software can be implemented in clinical practice at the present time.

Based on the scope produced by NICE,9 we defined the following questions to inform future assessment of the benefits, harms and costs of adjunct AI for analysing CXRs for suspected lung cancer compared with human reader alone:

- What is the test accuracy and test failure rate of adjunct AI software to detect lung cancer on CXRs?

- What are the practical implications of adjunct AI to detect lung cancer on CXRs?

- What is the clinical effectiveness of adjunct AI software applied to CXRs?

- What would a health economic model to estimate the cost-effectiveness of adjunct AI to detect lung cancer look like?

- What are the cost and resource use considerations relating to the use of adjunct AI to detect lung cancer?

Chapter 3 Methods

This report contains reference to confidential information provided as part of the NICE Diagnostic Assessment Process. This information has been removed from the report and the results, discussions and conclusions of the report do not include the confidential information. These sections are clearly marked in the report.

Sections of this chapter have been reproduced from the protocol,1 available from the NICE website.

The review is registered on PROSPERO (registration number CRD42023384164), and the protocol is available from the NICE website (www.nice.org.uk/guidance/hte12/history).

The timeline to produce this EVA report was 10 weeks, which is substantially shorter than a typical systematic review or rapid review. To achieve the aims within the timeline, pragmatic decisions regarding the methods were made in collaboration with NICE and clinical experts.

Methods for assessing test accuracy, practical implications and clinical effectiveness

Search strategy

An iterative approach was taken to develop the search strategy, making use of relevant records identified during initial scoping searches and from relevant reviews. 12,13 The strategy was developed by an information specialist, with input from team members, aiming for a reasonable balance of sensitivity and specificity. Based on scoping work already undertaken, a series of complementary, targeted searches were favoured over a single search to retrieve a manageable number of records to screen (Appendix 1). Searches were run in a range of relevant bibliographic databases covering the fields of medicine and computer science. Searches were limited to studies published in English because studies published in other languages were likely to be difficult to assess in the timescale of this EVA. Non-human studies, letters, editorials, communications and conference abstracts were removed during the searches. No date limit was applied to the searches, but only records published in or after 2012 were screened. Database search strings were developed for MEDLINE and appropriately translated for each of the other databases, considering differences in thesaurus terms and syntax. The following bibliographic databases were searched: MEDLINE All (via Ovid), EMBASE (via Ovid), Cochrane Database of Systematic Reviews (via Wiley), Cochrane CENTRAL (via Wiley), Epistemonikos and ACM Digital Library.

A search for ongoing trials was conducted in the World Health Organization International Clinical Trials Registry Platform (WHO ICTRP). A search for ongoing systematic reviews was undertaken in the PROSPERO database.

The full record of searches is provided in Appendix 1.

Records were exported into EndNote X9.3 (Clarivate Analytics, Philadelphia, PA, USA), where duplicates were systematically identified and removed. Reference lists of included studies and a selection of relevant reviews were checked. Experts and team members were consulted and encouraged to share relevant studies.

Company submissions

As part of the Diagnostics Assessment Programme (DAP) process, 14 companies producing AI software were identified by NICE and invited to participate in the EVA and to submit evidence. 9 The External Assessment Group (EAG) assessed company submissions in exactly the same way as it assessed published evidence, and the reference lists of the submissions were examined for relevant studies.

Eligibility criteria

The eligibility criteria for the test accuracy, practical implications and clinical effectiveness questions are presented in Table 1.

| | Key question 1. What are the test accuracy and test failure rates of adjunct AI software to detect lung cancer on CXRs? Subquestions: 1a. What is the test accuracy of adjunct AI software to detect lung nodules? 1b. What is the concordance in lung nodule detection between radiology specialist with and without adjunct AI software? | Key question 2. What are the practical implications of adjunct AI software to detect lung cancer on CXRs?a | Key question 3. What is the clinical effectiveness of adjunct AI software applied to CXRs? |

|---|---|---|---|

| Population | Adults referred from primary care who are: (1) referred for a CXR because they have symptoms suggestive of lung cancer (symptomatic population) or (2) referred for a CXR for reasons unrelated to lung cancer (incidental population). Where data permit, subgroups will be considered based on: | | |

| Target condition | Lung cancer | | |

| Intervention | CXR interpreted by radiology specialist (e.g. radiologist or radiographer) in conjunction with the following AI software: AI-Rad Companion CXR (Siemens Healthineers), Annalise CXR (annalise.ai), Auto Lung Nodule Detection (Samsung), ChestLink Radiology Automation (Oxipit), ChestView (GLEAMER), CXR (Rayscape), ClearRead Xray – Detect (Riverain Technologies), InferRead DR Chest (Infervision), Lunit INSIGHT CXR (Lunit), Milvue Suite (Milvue), qXR (Qure.ai), red dot (behold.ai), SenseCare-Chest DR Pro (SenseTime), VUNO Med-CXR (VUNO) | | |

| Comparator | CXR interpreted by radiology specialist without the use of AI software | | |

| Reference standard | For accuracy of lung cancer detection: lung cancer confirmed by histological analysis of lung biopsy, or diagnostic methods specified in NICE Guideline NG122,10 where biopsy is not applicable. For accuracy of nodule detection: radiology specialist (single reader or consensus of more than one reader) | N/A | N/A |

| Outcome | Test accuracy for the detection of lung cancer (sensitivity, specificity, positive predictive value, numbers of true-positive, false-positive, true-negative and false-negative results, number of lung cancers diagnosed). Test failures (rates, and data on inconclusive, indeterminate and excluded samples, failure for any other reason). Characteristics of discordant cancer cases. Test accuracy for the detection of lung nodules. Concordance in lung nodule detection between radiology specialist with and without adjunct AI software | Practical implicationsa [time to X-ray report, CT scan, diagnosis, turnaround time (image review to radiology report), acceptability of software to clinicians, impact on clinical decision-making, impact of false positives on workflow] | Mortality, morbidity, health-related quality of life |

| Study design | Comparative study designs | | |

| Publication type | Peer-reviewed papers | | |

| Language | English | | |

| Exclusion | Versions of AI software that are not commercially available, are not named in the protocol or are not specified in the study publication. Computer-aided detection that does not include AI software. Non-human studies. Letters, editorials, communications, conference abstracts, qualitative studies. People with a known diagnosis of lung cancer at the time of CXR. Studies of children. Study designs that do not include a control/comparator arm. Simulation studies or studies using synthetic images. Studies not applicable to primary care patients, for example neurosurgery, transplant or plastic surgery patients, people in secure forensic mental health services. Studies where more than 10% of the sample does not meet our inclusion criteria. Studies without extractable numerical data. Studies that provided insufficient information for assessment of methodological quality/risk of bias. Articles not available in the English language. Studies using index tests or reference standards other than those specified in the inclusion criteria. Studies of people who do not have signs and symptoms of cancer or a suspected condition or trauma (i.e. people undergoing health screening). Studies where it cannot be determined if the inclusion criteria are met | | |
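
The test accuracy outcomes listed in Table 1 are all derived from the 2 × 2 cross-classification of the index test against the reference standard. The following Python sketch shows how sensitivity, specificity and positive predictive value would be calculated from the four cell counts. The function name `accuracy_metrics` and the example counts are hypothetical and are not data from any study considered in this report.

```python
def accuracy_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Derive test accuracy measures from 2 x 2 table counts.

    tp, fp, tn, fn are the numbers of true-positive, false-positive,
    true-negative and false-negative results against the reference standard.
    A measure is returned as None when its denominator is zero.
    """
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),  # cancers correctly flagged
        "specificity": ratio(tn, tn + fp),  # non-cancers correctly cleared
        "ppv": ratio(tp, tp + fp),          # flagged cases that are cancers
    }


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    for name, value in accuracy_metrics(tp=40, fp=10, tn=140, fn=10).items():
        print(f"{name}: {value:.3f}")
```

For a comparison of reader with AI against reader alone, the same calculation would be applied separately to each arm using the same reference standard.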

Review strategy

Titles and abstracts of records identified by the searches were screened by one reviewer, with a random 20% assessed independently by a second reviewer. Records considered potentially relevant by either reviewer were retrieved for further assessment. Full-text articles were assessed against the full inclusion/exclusion criteria by one reviewer. A random 20% sample was assessed independently by a second reviewer. Disagreements were resolved by consensus or through discussion with a third reviewer. Records rejected at full-text stage (including reasons for exclusion) are reported in Report Supplementary Material 2.
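
A random 20% sample for independent second review, as described above, could be drawn reproducibly as in the Python sketch below. This is an assumed illustration rather than the procedure actually used by the review team: the function name `second_reviewer_sample`, the fixed seed and the choice to round the sample size up are all assumptions.

```python
import math
import random


def second_reviewer_sample(record_ids, fraction=0.2, seed=2023):
    """Draw a reproducible random sample of records for second review.

    The seed and the rounding-up rule are assumptions for this sketch;
    the report does not state how the 20% sample was generated.
    """
    ids = list(record_ids)
    sample_size = math.ceil(len(ids) * fraction)
    rng = random.Random(seed)  # local generator, so global state is untouched
    return sorted(rng.sample(ids, sample_size))


if __name__ == "__main__":
    # Hypothetical record identifiers 1..3149.
    sample = second_reviewer_sample(range(1, 3150))
    print(len(sample))
```

Fixing the seed allows the same sample to be regenerated later, which helps when the screening decisions are audited.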

Data extraction

We planned to extract data into a piloted electronic data collection form. Data were to be extracted by one reviewer, with a random 20% checked by a second reviewer, and disagreements resolved by consensus or discussion with a third reviewer. However, no studies met the inclusion criteria.

Risk of bias

We planned to assess the risk of bias of included studies using tools appropriate to the study design, such as those produced by the Joanna Briggs Institute. 14 Risk of bias was to be assessed by one reviewer, with a random 20% assessed by a second reviewer and disagreements resolved through consensus or discussion with a third reviewer. As no studies met the inclusion criteria, no formal risk-of-bias assessment was undertaken.

Analysis and synthesis

Methods of analysis and synthesis were described a priori in the research protocol. 1 However, no studies met the inclusion criteria, so no data synthesis was undertaken.

Post hoc methods

No studies meeting the inclusion criteria were identified. Following discussions with the NICE technical team for this project, we examined the list of excluded studies to identify those that were closest to the review inclusion criteria (see Table 1), that is:

- Interventions: CXRs interpreted by radiology specialist in conjunction with eligible AI software versus radiologists alone and/or reference standard.

- Population: no details provided on the referral status or symptom status [studies that had an explicitly excluded population, for example health screening, preoperative CXRs, inpatients, accident and emergency (A&E), were not selected].

- Outcomes: as defined in Table 1.

Selected studies were tabulated using the approach described in Review strategy and key biases were noted. Results were summarised narratively.

Methods for developing a conceptual cost-effectiveness model

This section describes the process, methods and rationale for the development of a conceptual15 decision-analytic model to inform a potential full cost-effectiveness evaluation of adjunct AI software for analysing CXR images to identify suspected lung cancer.

The conceptual modelling process explored both the structure and evidence requirements for parameter inputs, for future model development. This was to facilitate the identification of cost outcomes, potential value drivers of AI software for this indication, and evidence linkage requirements for longer-term outcomes. Costs associated with implementing AI software were also considered.

Information to inform the conceptual model was obtained from a variety of sources including a literature review, current clinical guidelines, discussion with specialist clinical experts and the companies submitting AI software for assessment.

Literature review

A pragmatic search of the literature was used to identify existing methods of cost-effectiveness modelling for AI software in CXRs and inform parameterisation of the conceptual model. It was not intended as a substitute for a systematic literature review or to provide a definitive summary of evidence gaps; a systematic review will be required for any future development of an executable cost-effectiveness model.

Following initial scoping searches, we did not expect to find any full economic evaluations of AI software as an adjunct to radiology specialist review of CXRs, particularly in the primary care population. For this reason, a broad search strategy was used across two databases (MEDLINE and Tufts CEA), and broad screening criteria were applied. The primary inclusion criterion was ‘lung cancer studies’, but, following this, any study that could inform the structure or parameters of a conceptual model was identified at title/abstract level. Full-text assessment of these papers was used to refine the screening criteria further, to studies that (1) covered the primary care referral population, (2) had a specific intention of diagnosis or screening and (3) were most relevant to the UK setting. Reference lists of these studies and publication lists of authorship groups were also screened for any further potentially relevant papers. Studies identified in these targeted reviews were not subject to a formal assessment but were discussed narratively. The discussion focused on the methods used, the assumptions made, the availability of evidence to support evidence linkage approaches, and considerations for future modelling and research.

Clinical guidelines

The structure of the decision-analytic model is intrinsically linked to current clinical pathways. Key points throughout the clinical pathway for the detection and management of lung cancer, and the positioning of AI software within this pathway (for adults referred for CXR from primary care), were identified with reference to Figure 1 in the final NICE scope for this topic,9 existing guidelines on the diagnostic and care pathway10,11,16,17 and close collaboration with clinical experts.

Company and clinical expert involvement

Information on the relevant AI technologies under review was obtained from company submissions, with requests for additional information sent to companies that registered as stakeholders (Annalise AI, Behold AI, Infervision, Lunit Inc. and Siemens Healthineers).

Using the information gathered from these sources, an iterative process was used to achieve a model structure that is pragmatic in its representation of the complex clinical pathways that adults from primary care populations may follow to arrive at a diagnosis of lung cancer.

Given the time available to conduct this EVA, the primary focus of this report was the diagnostic component of the model. Priority was given to the following:

- input parameters to populate the model – including consideration of the type of evidence required, sources available and gaps in the evidence

- relevant outcome measures to compare the cost-effectiveness and clinical effectiveness of AI software in the detection of lung cancer

- identification of potential value drivers of the model – with recommendations of how these can be measured for inclusion in a cost-effectiveness model.

Once diagnosis is achieved in the model, evidence linkage between intermediate outcomes and long-term outcomes is required to assess cost-effectiveness over a clinically appropriate time horizon. These mainly relate to the mapping of the disease state (i.e. lung cancer) and are not specific to the diagnostic technology being assessed (e.g. utilities, costs and effects of current treatments). Potential sources for the main longer-term outcomes were identified during the literature search, with a focus on those relevant to the UK setting and in line with the requirements of the NICE reference case. 18 An overview is presented in this report as an example of current practices in modelling lung cancer.

Methods to assess potential budget impact

Estimates of the potential budget impact of introducing AI software as an adjunct to radiology specialist review of CXRs were calculated based on methods for a budget impact analysis (BIA) outlined in the NICE evidence standards framework for digital health technologies19 and International Society for Pharmacoeconomics and Outcomes Research Task Force recommendations. 20 These identify six key elements that require inputs for the modelling framework of a BIA:

- size and characteristics of affected population

- current intervention mix without the new intervention

- costs of current intervention mix

- new intervention mix with the new intervention

- cost of the new intervention mix

- use and cost of other health condition-related and treatment-related healthcare services. 20

Given the limitation of time and scope, a fully comprehensive BIA was not attempted as this would have required data on any changes in resource use and associated cost. The intended outcome of this report was a conceptual model where no outcome data were run and no results were produced. Therefore, estimates on this element were not included. The aim was to approximate the budget impact at an individual institution level, with information sourced from the literature and supplemental information provided by representatives of the institution used as an example.
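
The core arithmetic of a BIA built from the elements listed above is the difference between the cost of the new intervention mix and the cost of the current intervention mix for the affected population. The Python sketch below shows that calculation under stated assumptions. The function names, the population size, the uptake share and the unit costs are all hypothetical, and the optional `other_service_cost_change` argument stands in for the sixth element, which was not estimated in this report.

```python
def mix_cost(population: float, mix: dict, unit_costs: dict) -> float:
    """Cost of an intervention mix: population x share x unit cost, summed."""
    return sum(population * share * unit_costs[name] for name, share in mix.items())


def budget_impact(population, current_mix, new_mix, unit_costs,
                  other_service_cost_change=0.0):
    """Cost of the new mix minus cost of the current mix.

    other_service_cost_change is the net change in the cost of other
    healthcare services; it defaults to zero because that element was
    not estimated here.
    """
    return (mix_cost(population, new_mix, unit_costs)
            - mix_cost(population, current_mix, unit_costs)
            + other_service_cost_change)


if __name__ == "__main__":
    # All figures below are hypothetical and for illustration only.
    unit_costs = {"reader_alone": 20.0, "reader_plus_ai": 21.5}  # cost per CXR
    current_mix = {"reader_alone": 1.0}
    new_mix = {"reader_alone": 0.25, "reader_plus_ai": 0.75}
    print(budget_impact(10_000, current_mix, new_mix, unit_costs))
```

Keeping the intervention mix as explicit shares makes it straightforward to vary the assumed uptake of AI software at an individual institution.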

Company submissions to NICE as part of the DAP request for information were screened for cost data. Clarifying questions were sent to all companies (whether or not costings were already submitted) to obtain more granular detail for the purpose of BIA.

Records retrieved from the broad cost search were screened at title/abstract level by one reviewer (MJ) to identify any studies that may have been applicable. These were then retrieved as full text and their suitability for use was assessed. Studies that yielded relevant information were retained, data extracted and authors contacted to obtain further context-specific information.

Chapter 4 Results: test accuracy, practical implications and clinical effectiveness

Results of literature searches

Six of the companies contacted by NICE agreed to participate in the EVA, and five of these provided evidence (Annalise AI, Behold AI, Infervision, Lunit Inc. and Siemens Healthineers). None of the clinical evidence in the company submissions met the review eligibility criteria. An examination of reference lists in the company submissions identified 22 references.

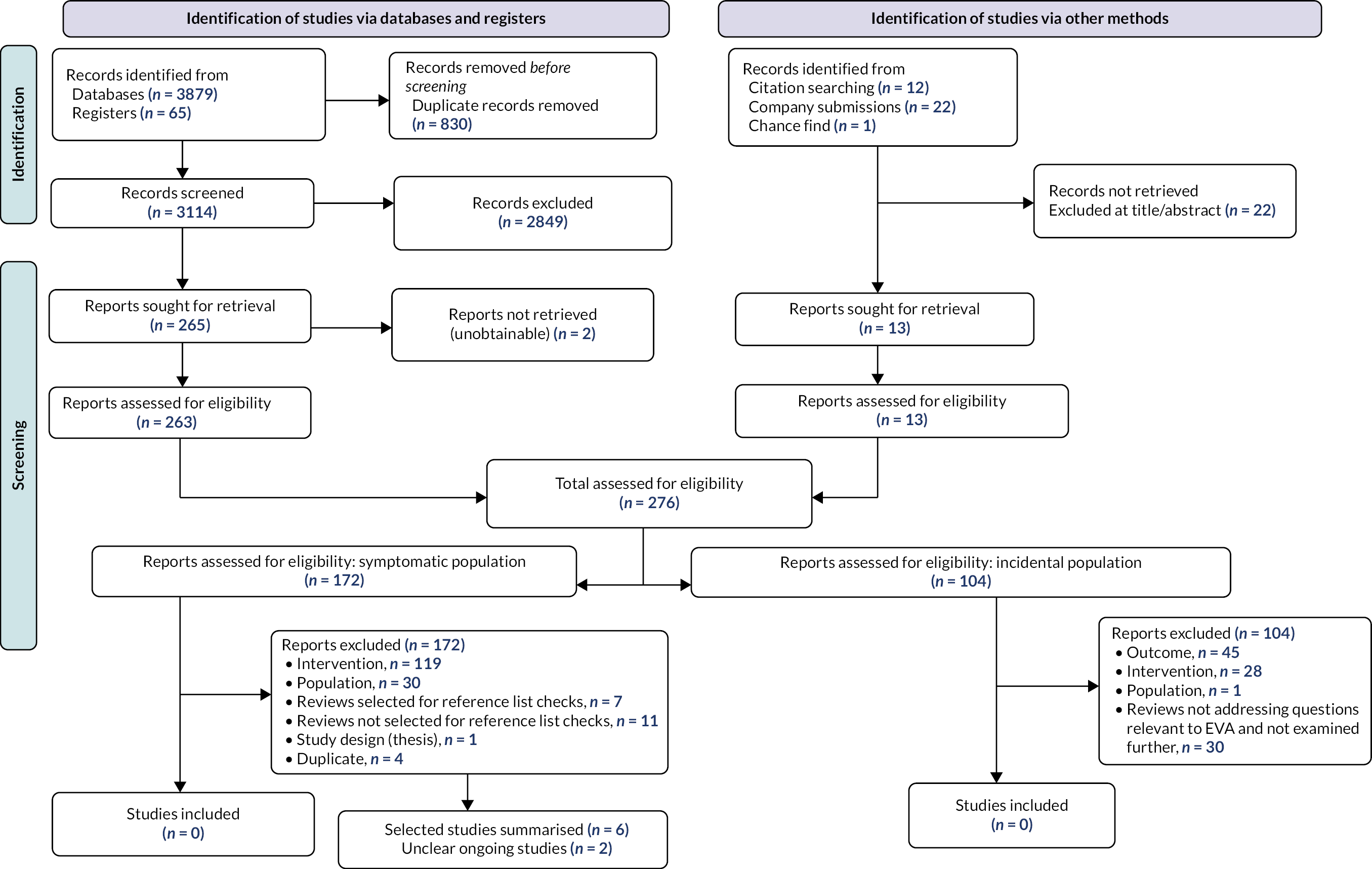

Figure 2 shows the flow of studies through this review. Searches identified a total of 3149 records. Of these, 172 were identified as potentially relevant to the symptomatic population and 104 were identified as potentially relevant to the incidental population. Full texts were obtained and screened. None of the studies met the inclusion criteria specified in Table 1. The eligibility of two ongoing studies was unclear, and these studies are summarised in Summary of ongoing trials.

FIGURE 2. Preferred Reporting Items for Systematic Reviews and Meta-Analyses flow diagram.

Reasons for exclusion are described in Report Supplementary Material 2. Among the studies that were potentially relevant to the symptomatic population, the main reasons for exclusion were no eligible AI software or AI not used in conjunction with radiology specialist (n = 119), and population not referred from primary care (n = 30). Only one identified study was conducted in a population referred from primary care; however, the comparison was not relevant (AI software alone vs. radiologist alone). 21 Among the studies potentially relevant to the incidental population, the main reasons for exclusion were no relevant outcome (n = 45) and no eligible AI software (n = 28).

As described in Post hoc methods, to provide the closest available evidence to that required in Table 1, we looked for excluded studies that (1) had eligible AI software and (2) compared radiology specialist in conjunction with AI software with radiology specialist alone, but where the referral status of the population was unclear. Studies that had an explicitly excluded population (e.g. a health screening population, preoperative CXRs, inpatients, A&E) remained excluded. Six such studies were identified (Table 2).

| Study (first author and year) | Country | Study design | Population | Index test | Comparator | Reference standard |
|---|---|---|---|---|---|---|
| Dissez 202222 | UK | Retrospective cohort study, one centre | 400 CXRs from 400 adults | Red Dot (Behold.ai) + radiologists | Radiologists, radiographers | Blind reads of CXRs by two consultant radiologists |
| Nam 202023 | Republic of Korea | Retrospective cohort study, one centre | 218 CXRs from 218 adults | Lunit INSIGHT version 1.0.1.1 + radiologists | Radiologists | CT scan |
| Jang 202024 | Republic of Korea | Retrospective cohort study, one centre | 351 CXRs from 351 adults | Lunit INSIGHT version 1.2.0.0 + radiologists | Radiologists | CXR and CT images |
| Koo 202125 | Republic of Korea | Retrospective cohort study, one centre | 434 CXRs from 378 adults | Lunit INSIGHT CXR version 1.00 + radiologist | Radiologists | Consensus from two thoracic radiologists using CXR or CT |
| Homayounieh 202126 | Germany; USA | Retrospective cohort study, two centres | 100 CXRs from 100 adults | AI-Rad Companion CXR (Siemens Healthineers) + radiologist | Radiologists | Consensus from two thoracic radiologists using all available clinical data |
| Siemens 2022 | Confidential information has been removed | Confidential information has been removed | Confidential information has been removed | Prototype AI-Rad Companion CXR algorithm (Siemens Healthineers) + radiologist | Radiologists | Consensus from two thoracic radiologists using CXR or CT |

Study characteristics and key biases of selected excluded studies

Characteristics of the summarised studies are described in Table 2. In brief, six studies were summarised22–26 (Siemens 2022, unpublished academic in confidence submission from Siemens Healthineers). The studies employed retrospective designs22–26 and (confidential information has been removed) (Siemens 2022). Four studies were published; the other two were provided by the companies and were not peer reviewed: one is a preprint22 and the other is ongoing (Siemens 2022). The studies were carried out in the USA,26 (Siemens 2022) Germany,26 Republic of Korea23–25 and the UK. 22

Chest X-ray images were obtained from hospital databases,22–25 the Lung Image Database Consortium,26 a health centre database26 or (confidential information has been removed) (Siemens 2022). The number of CXR images included in the studies ranged from 100 (Homayounieh et al. 26) to 434 (Koo et al. 25), and the number of participants who provided CXR data ranged from 100 (Homayounieh et al. 26) to 400 (Dissez et al. 22). No information was provided in any of the studies about the referral route of patients who provided CXR data. It is plausible that the studies include both symptomatic patients and those who underwent CXR for reasons unrelated to lung cancer, as well as those from excluded populations such as people referred from other healthcare settings.

The characteristics of the CXRs assessed differed both within and across studies (Table 2; more detailed descriptions are provided in Report Supplementary Material 1). The UK study22 identified random samples of patients who had a clinical text report indicating potentially malignant CXR and a follow-up CT and those with a clinical text report of no urgent findings. Nam et al. 23 and Jang et al. 24 both included a large proportion of confirmed cancer cases with false-negative CXRs prior to diagnosis. Homayounieh et al. 26 selected CXRs to ensure that negative and positive cases with different levels of difficulty in detection were included. Siemens 2022 (confidential information has been removed). Koo et al. 25 included adults with three or fewer nodules on both CXR and CT with at least one nodule pathologically confirmed on biopsy as either benign or malignant.

Images were assessed by a mix of consultant radiologists, board-certified radiologists, radiology trainees and reporting radiographers,22 experienced radiologists,23 experienced radiologists and radiology residents24,25 and senior and junior radiologists. 26 This information was not reported in one study (Siemens 2022). The readers had between 1 year (Jang et al. 24) and 35 years (Homayounieh et al. 26) of experience of reporting CXRs. 22–26 One study reported the number of readers with fewer or more than 4 years of experience (Siemens 2022). The number of clinicians included in the studies ranged from 4 (Nam et al. 23 and Koo et al. 25) to 11 (Dissez et al. 22). The accuracy of readers in detecting nodules or lung cancer with and without AI software was compared with a ground-truth or reference standard, and these varied between the studies (Table 3). The threshold for defining a positive index test result (i.e. what was considered to be a nodule on CXR) was not defined in any of the studies.

| Study name | AI name | Number of patients | Number of CXRs | Number of cancers/nodules | Group | TP | FP | FN | TN | Sensitivity (95% CI) | Specificity (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Lung cancer detection | | | | | | | | | | | |
| Dissez 202222 | Red Dot (Behold.ai) | 400 | 400 | 72 | With AI | 55a | 82a | 17a | 246a | 77% (75% to 80%) | 75% (71% to 77%) |
| | | | | | Without AI | 48a | 62a | 24a | 266a | 66% (59% to 71%) | 81% (77% to 85%) |
| Nodule detection | | | | | | | | | | | |
| Nam 202023,b | Lunit INSIGHT version 1.0.1.1 | N/R | N/R | N/R | With AI | 357 | 36 | 315 | 164 | 53% (49% to 57%) | 82% (77% to 87%) |
| | | | | | Without AI | 316 | 44 | 356 | 156 | 47% (43% to 51%) | 78% (72% to 84%) |
| Jang 202024,b | Lunit INSIGHT version 1.2.0.0 | 351 | 351 | 117 | With AI | 66 | 19 | 51 | 215 | 56% (47% to 65%) | 92% (88% to 95%) |
| | | | | | Without AI | 50 | 24 | 67 | 210 | 43% (34% to 52%) | 90% (86% to 94%) |
| Koo 202125 – per patient any nodule | Lunit INSIGHT version 1.0.0.0 | 378 | 434 | 165 | With AI | 157a | 6a | 8a | 207a | 95% (91% to 98%) | 97% (94% to 99%) |
| | | | | | Without AI | 152a | 15a | 13a | 198a | 92% (87% to 96%) | 93% (89% to 96%) |
| Koo 202125 – per nodule | | N/R | N/R | N/R | With AI | N/R | N/R | N/R | N/R | 94% (N/R) | N/R (N/R) |
| | | | | | Without AI | N/R | N/R | N/R | N/R | 89% (N/R) | N/R (N/R) |
| Homayounieh 202126 | AI-Rad Companion CXR | 100 | 100 | N/R | With AI | 26.4 | 2.5 | 23.6 | 47.5 | 55% (48% to 63%) | 95% (91% to 9%) |
| | | | | | Without AI | 23.6 | 4.1 | 26.4 | 45.5 | 45% (38% to 53%) | 93% (89% to 96%) |
| Siemens 2022 | Prototype AI-Rad Companion CXR algorithm | Confidential information has been removed | Confidential information has been removed | N/R | With AI | N/R | N/R | N/R | N/R | Confidential information has been removed (N/R) | Confidential information has been removed (N/R) |
| | | | | | Without AI | N/R | N/R | N/R | N/R | Confidential information has been removed (N/R) | Confidential information has been removed (N/R) |
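As a worked check on how the accuracy columns relate to the 2 × 2 counts, the sketch below recomputes sensitivity and specificity for one row of Table 3 (Jang 2020, readers with AI). The Wilson score interval is an assumption made here for illustration: the studies do not all state their interval method, and some report intervals across readers, so other rows may not be reproduced by this formula. The function names are illustrative only.

```python
from statistics import NormalDist


def wilson_interval(successes, total, level=0.95):
    """Wilson score interval for a binomial proportion (assumed method)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * (p * (1 - p) / total + z ** 2 / (4 * total ** 2)) ** 0.5 / denom
    return centre - half, centre + half


def accuracy_from_counts(tp, fp, fn, tn):
    """Return (estimate, (lower, upper)) for sensitivity and specificity."""
    return {
        "sensitivity": (tp / (tp + fn), wilson_interval(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_interval(tn, tn + fp)),
    }


# Counts taken from the Jang 2020 'With AI' row of Table 3.
jang_with_ai = accuracy_from_counts(tp=66, fp=19, fn=51, tn=215)
for name, (estimate, (low, high)) in jang_with_ai.items():
    print(f"{name}: {estimate:.0%} ({low:.0%} to {high:.0%})")
```

For this row the printed values should round to the figures reported in the table; agreement for other rows is not guaranteed, for the reasons given above.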

Three studies assessed Lunit INSIGHT,23–25 one assessed Red Dot (Behold.ai)22 and two assessed AI-Rad Companion CXR (Siemens Healthineers)26 (Siemens 2022). It is unclear whether the prototype AI software described in Siemens 2022 is commercially available.

Only a small number of outcomes relevant to the present review were assessed: test accuracy (lung cancer),22 test accuracy (lung nodules)23–26 (Siemens 2022), CT referrals,22,24 acceptability of AI to clinicians22 and CXR reading times. 24,25

The following risks of bias and applicability concerns were present in the papers:

- Retrospective study designs were used. There is, therefore, the potential for selection bias, missing data and confounding.
- Assessments were conducted on test sets of data interpreted outside clinical practice. Caution is needed in extrapolating from these types of studies, as prior evidence suggests little to no association between performance in this environment and that seen in clinical practice. 27
- Only one study was conducted in the UK;22 however, it is unclear if the population from whom the CXRs were taken is reflective of people who would be referred from primary care in a real-world setting. The generalisability of results from the other five studies is similarly limited in this way, and also because populations from the USA and Republic of Korea may differ from the UK population in disease prevalence rates, age and comorbidities, and ethnic diversity. 28 There may also be differences in treatment settings and in the training and expertise of radiologists.
- Artificial intelligence software manufacturers were involved in three of the six studies [financial support, n = 2 (Homayounieh et al. 26 and Siemens 2022); employees as authors, n = 1 (Dissez et al. 22)]. Prior evidence suggests that studies conducted by drug/device manufacturers tend to report more favourable results than non-industry studies. 29 Caution in interpretation of these studies is warranted until independent assessment of the AI software is obtained.
- Each radiologist interpreted each CXR with and without AI software. In three studies22,24 (Siemens 2022), there was a washout period between readings, whereas in others,23,26 the radiologist was aware of their initial decision at the second reading. This is not reflective of UK clinical practice, and there is concern that the first reading could influence the second reading.
- The threshold for defining a positive index test result was not defined in the studies; therefore, it is not possible to know whether the results of these studies are reflective of how AI would perform under clinical practice conditions, nor is it possible to know whether the results are comparable between studies.
- Where CT referrals were reported, these were hypothetical referrals rather than actual referrals and may not reflect real-world practice.

What are the test accuracy and test failure rates of adjunct artificial intelligence software to detect lung cancer on chest X-rays?

We did not identify any studies that met the inclusion criteria for this question.

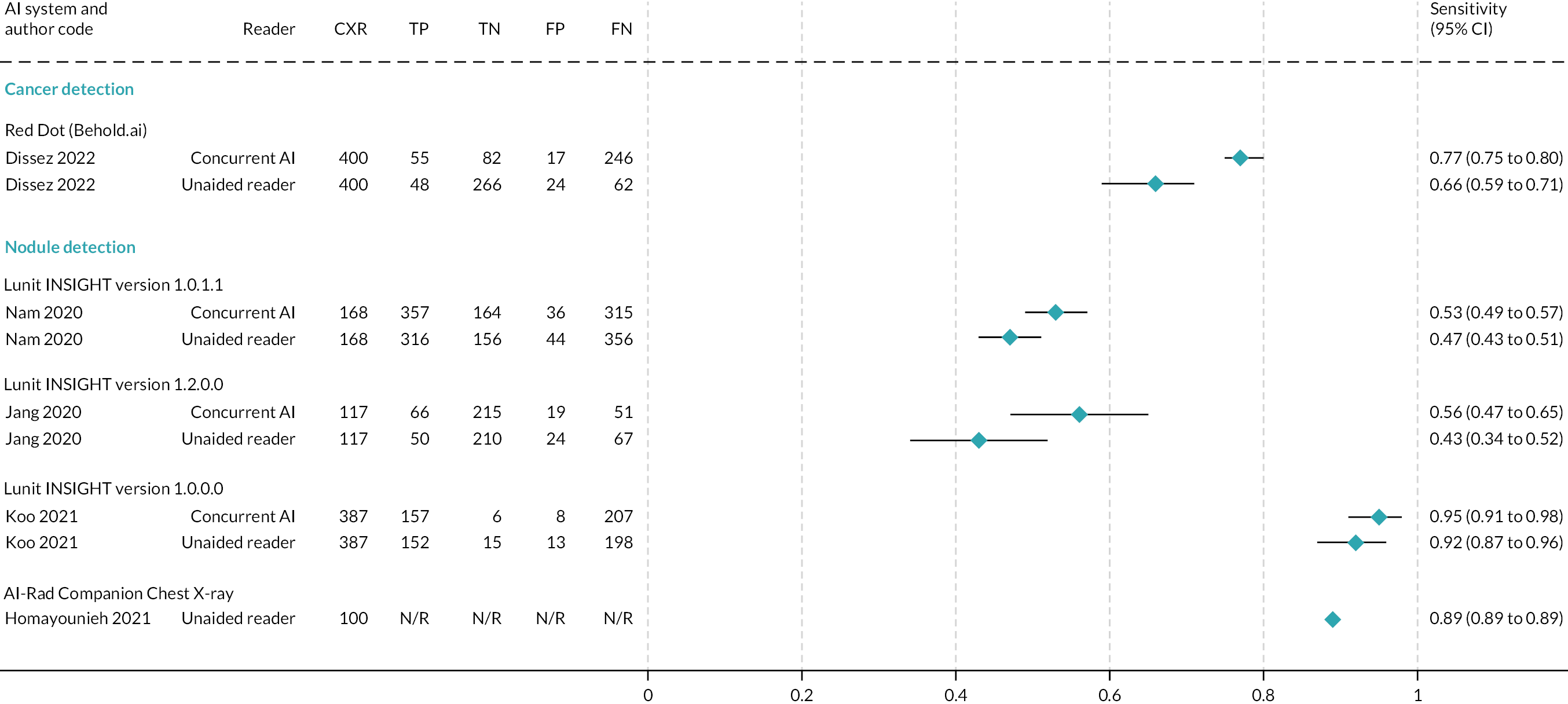

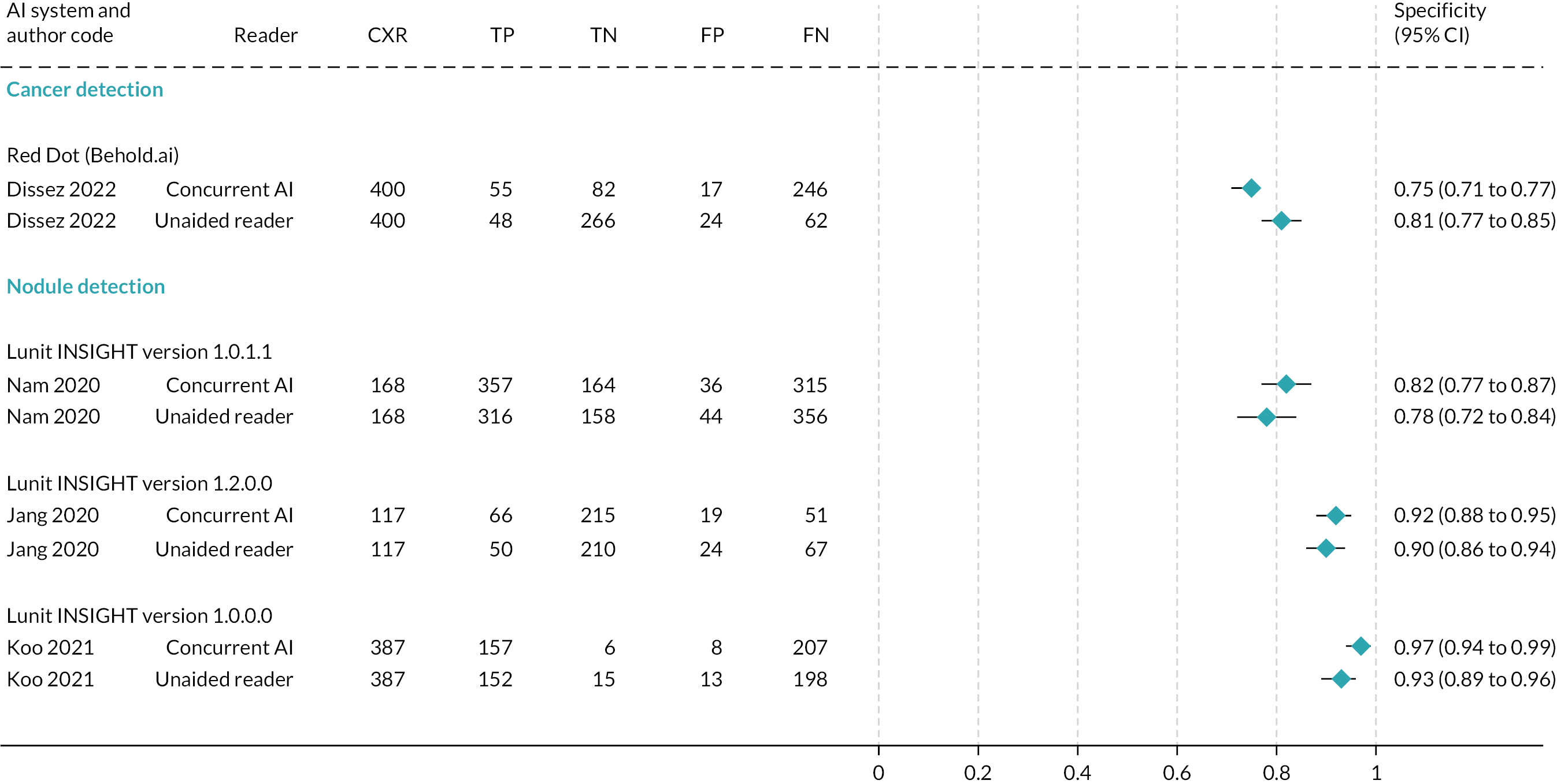

Results of six summarised (but ineligible) studies are reported in Table 3. Studies reported test accuracy for individual readers and/or mean values for all readers; the data summarised in Table 3 are the mean values across readers. Forest plots of test accuracy metrics are given in Figure 3 (sensitivity) and Figure 4 (specificity) for studies containing unredacted data.

FIGURE 3. Forest plot of sensitivity. Note: it was not possible to calculate CIs for Homayounieh et al. 26

FIGURE 4. Forest plot of specificity.

One study examined the test accuracy of AI software to detect lung cancer on CXRs. 22 In this UK study of Red Dot (Behold.ai), sensitivity was significantly higher for the interpretation of CXRs with AI (77%, 95% CI 75% to 80%) than without AI (66%, 95% CI 59% to 71%). No difference was observed for specificity (Table 3).

Five studies examined the test accuracy of AI software to detect lung nodules on CXRs23–26 (Siemens 2022). Three studies from Republic of Korea23–25 assessed different versions of the Lunit INSIGHT AI software. No statistically significant differences were observed in sensitivity or specificity between readers with and readers without AI in the studies by Nam et al. 23 and Jang et al. 24 (Table 3). In the third paper,25 an assessment of test accuracy was conducted for any nodule and each nodule. In the analysis of any nodule, sensitivity was 95.1% for readers with AI software and 92.4% for readers without AI software, and specificity was 97.2% for readers with AI software and 93.1% for readers without AI software. In the analysis of each nodule, sensitivity was 93.9% for readers with AI software and 88.6% for readers without AI software. Specificity was not reported. Instead, false-positive rates were reported to be 3.2% for readers with AI software and 6.3% for readers without AI software. Caution is required in the interpretation of false-positive data, as the paper reports that the false-positive rate is the total number of false positives divided by the number of CXRs, which is a non-standard calculation. It is not possible to know if the above estimates reflect true differences between assessment with/without AI software as no statistical analyses were presented in the paper for any of the above test accuracy metrics, and there were insufficient data to allow us to conduct our own analyses.

Two studies (Homayounieh et al. 26 and Siemens 2022) assessed versions of the Siemens Healthineers AI-Rad Companion CXR in US and German populations (one study) or in (confidential information has been removed) populations alone (Table 3). No statistically significant differences were observed in sensitivity or specificity between radiologists with and radiologists without AI software in the study by Homayounieh et al. 26 In the Siemens 2022 study (confidential information has been removed).

One study22 reported the mean number of cancers detected and found no significant differences with and without AI software (54 cancers, 95% CI 42 to 59 cancers, and 46 cancers, 95% CI 38 to 51 cancers, respectively).

None of the six studies reported AI software test failure.

What are the practical implications of adjunct artificial intelligence software to detect lung cancer on chest X-rays?

We did not identify any studies that met the inclusion criteria for this question.

Two of the summarised (but ineligible) studies22,24 provided information on the potential referrals for CT. No statistically significant differences were observed in the number of people who might be recommended for CT follow-up between readers with and readers without AI: Red Dot (Behold.ai) 144 out of 400 (36%) (95% CI 119 to 172) potential referrals with AI and 117 out of 400 (29%) (95% CI 93 to 147) potential referrals without AI;22 Lunit INSIGHT 96 out of 351 (27%, 95% CI 22.8% to 32.3%, calculated by the EAG) with AI and 80 out of 351 (23%, 95% CI 18.5% to 27.5%, calculated by the EAG) patients without AI. 24 It is important to note that these are hypothetical referrals. We found no evidence on the impact of AI on the readers’ behaviour in real-world clinical practice.
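The text does not state how the EAG calculated the intervals for the Lunit INSIGHT referral proportions. One common choice for a single proportion is the exact (Clopper-Pearson) interval; the sketch below applies it to the reported counts as an assumption, not as the EAG's documented method. The function names are illustrative.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), by direct summation."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))


def clopper_pearson(k, n, alpha=0.05, iterations=60):
    """Exact two-sided interval for k events in n, found by bisection."""
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0
    if k > 0:
        lo, hi = 0.0, 1.0
        for _ in range(iterations):
            mid = (lo + hi) / 2
            if 1 - binom_cdf(k - 1, n, mid) < alpha / 2:
                lo = mid
            else:
                hi = mid
        lower = (lo + hi) / 2
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0
    if k < n:
        lo, hi = 0.0, 1.0
        for _ in range(iterations):
            mid = (lo + hi) / 2
            if binom_cdf(k, n, mid) > alpha / 2:
                lo = mid
            else:
                hi = mid
        upper = (lo + hi) / 2
    return lower, upper


# Potential CT referrals reported for Lunit INSIGHT (with and without AI).
for label, referrals in (("with AI", 96), ("without AI", 80)):
    low, high = clopper_pearson(referrals, 351)
    print(f"{label}: {referrals / 351:.1%} ({low:.1%} to {high:.1%})")
```

Other interval methods (for example Wald or Wilson) give slightly different limits, so small discrepancies from the reported intervals would not in themselves indicate an error.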

Two studies24,25 reported information on reading times. No statistically significant differences were observed in average image reading times between readers with and readers without AI: Lunit INSIGHT (Jang et al. 24) 22.5 [standard deviation (SD) 40.3] seconds with AI, 24.3 (SD 27.4) seconds without AI, per image; Lunit INSIGHT (Koo et al. 25) 171 (SD 33.8) minutes with AI, 211.25 (SD 38.4) minutes without AI, to read 434 CXRs.

One study22 reported on the acceptability of Red Dot (Behold.ai) among 10 out of 11 study clinicians. Eight clinicians indicated that reporting was not slowed down by AI, and nine stated that ‘the heatmaps (visual display of findings suspicious of lung cancer on CXRs) produced by the AI model were helpful to understand the algorithm’s attention points’. 22

What is the clinical effectiveness of adjunct artificial intelligence software applied to chest X-rays?

We did not identify any studies that met the inclusion criteria for this question. None of the six summarised (but ineligible) studies reported clinical effectiveness outcomes.

Summary of ongoing trials

No ongoing trials meeting the inclusion criteria were identified. As described in Analysis and synthesis, we looked for ongoing trials assessing eligible comparisons.

We identified one ongoing trial (KCT0005466)30 comparing Lunit INSIGHT in conjunction with a radiologist with radiologist alone; however, the population is those undergoing CXR for any reason in the outpatient department. It is not known whether the participants underwent CXR for symptoms of cancer or for reasons other than cancer, or if they were referred from primary care.

Details of one ongoing study (NCT05489471),31 identified from the Lunit company submission, are unclear. The proportion of general practitioner (GP) referrals, A&E attendances and inpatients is not known, the AI software is not named (but the study is funded by Lunit) and it is not clear whether the comparison is AI software in conjunction with a radiologist versus radiologist alone. This UK-based study is not yet recruiting and has an estimated primary end date of July 2023.

In addition, the Siemens 2022 study provided in the Siemens Healthineers’ company submission summarised above is ongoing (Table 4).

| Heading | Details |
|---|---|
| Trial identifier number | KCT000546630 |
| Title of project | Prospective evaluation of deep-learning-based detection model for chest radiographs in outpatient respiratory clinic |
| Trial completion date | 31 May 2021 (no results posted) |
| Trial identifier number | NCT0548947131 |
| Title of project | A study to assess the impact of an AI system on CXR reporting |
| Trial completion date | Estimated primary end date of July 2023 |

Chapter 5 Cost-effectiveness

Results of literature searches

A total of 1120 studies were identified through database searches (817 in MEDLINE and 303 in Tufts CEA). Of these, 29 studies were retrieved for full-text assessment (25 from MEDLINE and 4 from Tufts CEA). These covered a wide range of methodologies and research questions. Reference lists of these studies returned four further studies of relevance to this review.

We did not identify any cost-effectiveness studies that directly compared CXR review by a radiology specialist with adjunct AI against radiology specialist review without adjunct AI. However, two economic evaluation studies from the database search32,33 and an updated analysis of one of these34 found through an authorship search were identified as useful to inform modelling techniques and parameter input sources. Similarly, four studies (one from the database search35 and three from author searches36–38) provided detailed information on radiological and clinical pathways to lung cancer diagnosis in the UK. A systematic review and meta-analysis on the diagnostic performance of CXRs in symptomatic primary care populations39 was also retrieved from the search.

These studies were retained and summarised narratively to capture information relevant to populating the conceptual model. No formal data extraction or quality appraisal was conducted. The studies by Snowsill et al. 33 and the Exeter Test Group and Health Economics Group34 were not summarised. Information on the diagnostic component of the conceptual model was prioritised due to project time constraints, whereas these studies33,34 pertained more to the longer-term treatment costs and utilities.

Description of the evidence

Bajre et al.32

Bajre et al. 32 used a decision tree structured model to assess the cost-effectiveness of trained radiographers compared with radiologists for the reporting of CXR in people suspected of having lung cancer. The model simulated a pathway for a hypothetical cohort of 1000 people undergoing CXR for suspected lung cancer, with cost-effectiveness calculations concluding at 5 years. The model started with a cohort of people receiving either a radiologist-reported CXR or a radiographer-reported CXR. The pathway for both strategies was the same. The proportion of those with true disease status was known, characterised by the prevalence of lung cancer. People with lung cancer who had a positive CXR result received a confirmatory test of a CT scan, which also provided staging. The authors included stages I, II, III and IV lung cancers. People with a false-negative result presented later to the A&E department, where they were diagnosed with lung cancer and staged. People who had a false-positive result following CXR received a CT scan that confirmed no lung cancer was present. People who had no lung cancer and had been correctly identified as negative by the CXR received no further testing/imaging.
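The pathway described above can be sketched as a simple decision tree that splits the cohort by true disease status and CXR result. This is a minimal illustration, not the authors' model: the prevalence, sensitivity and specificity values are hypothetical placeholders, and the names `Strategy` and `run_tree` are introduced here only for the example.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    sensitivity: float  # hypothetical value, not taken from Bajre et al.
    specificity: float  # hypothetical value, not taken from Bajre et al.


def run_tree(strategy, cohort=1000, prevalence=0.05):
    """Split a cohort into the four branches of the CXR pathway."""
    with_cancer = cohort * prevalence
    without_cancer = cohort - with_cancer
    tp = with_cancer * strategy.sensitivity      # positive CXR, then CT confirms and stages
    fn = with_cancer - tp                        # missed, later diagnosed and staged via A&E
    tn = without_cancer * strategy.specificity   # no further testing or imaging
    fp = without_cancer - tn                     # CT confirms no lung cancer
    return {"TP": tp, "FN": fn, "FP": fp, "TN": tn, "CT after CXR": tp + fp}


# Both strategies follow the same pathway; only the reader accuracy differs.
for strategy in (
    Strategy("radiologist-reported CXR", sensitivity=0.80, specificity=0.90),
    Strategy("radiographer-reported CXR", sensitivity=0.82, specificity=0.90),
):
    branches = run_tree(strategy)
    print(strategy.name, {key: round(value, 1) for key, value in branches.items()})
```

Costs and QALYs would then be attached to each branch; those inputs are described in the paragraphs that follow.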

Information required to populate the model was obtained from the literature and NHS reference costs. The model required information about the prevalence of lung cancer, sensitivity and specificity of radiologist-reported and radiographer-reported CXR to identify lung cancer, and sensitivity and specificity for radiologist-reported CT scan to confirm lung cancer diagnosis and probabilities. Although not explicitly stated, a confirmatory diagnosis was made by the radiologist. The proportion of people diagnosed at first presentation was obtained from statistics published by Cancer Research UK in 2013 (Bajre et al. 32). Additionally, information was required about the probability of lung cancer by stage at second presentation following misdiagnosis. All costs included in the model were reported at 2014–5 prices. Costs were required for radiologist and radiographer reading of CXRs, cost of CT scans and total costs of treatment by stage. The authors were not explicit about which treatment people received. The benefit of the strategies was reported in terms of cases detected at first presentation and quality-adjusted life-years (QALYs) yielded. Utility values by stage of diagnosis were obtained from Naik et al. 40

Several simplifying assumptions were made to give a workable model structure:32

- Time taken to report CXR is 2 minutes for both radiographers and radiologists.
- False negatives present at A&E at a later date, at which point disease may have advanced a stage (for patients at stages I to III).
- Sensitivity and specificity of radiographer reporting of CXR and radiologist reporting of both CXR and CT scan are independent of disease stage or other patient characteristics such as age.
- Quality of life (QoL) in the year following diagnosis (according to stage at diagnosis) is maintained in subsequent years.
- There is no QoL impact arising from false-positive reporting.
- Findings for non-small-cell lung cancer are representative of lung cancers in general.

The perspective and setting of the economic analysis were not clearly defined, but the analysis appears to be from the NHS and Personal Social Services (PSS) in a secondary care setting, based on the cost inputs. The results of the analysis were presented in terms of an incremental cost-effectiveness ratio (ICER), expressed as cost per QALY. The authors undertook a probabilistic sensitivity analysis (PSA) to assess the joint uncertainty in key model input parameters: prevalence of lung cancer, sensitivity and specificity of radiologist and radiographer reporting of CXRs, lung cancer stage distribution at initial CXR and stage progression following misdiagnosis. The authors stated the sampling distributions for the parameters included in the PSA but have not reported their parameters. The authors undertook a threshold analysis but not a one-way sensitivity analysis.
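For readers less familiar with the terminology, the sketch below shows how an ICER is formed from incremental costs and QALYs, and when a strategy is described as dominant instead of being given a ratio. The function name and all input values are hypothetical and are not taken from Bajre et al.

```python
def compare(cost_new, qaly_new, cost_ref, qaly_ref):
    """Classify a new strategy against a reference strategy.

    Returns a (label, icer) pair; icer is None unless a ratio is meaningful.
    """
    d_cost = cost_new - cost_ref
    d_qaly = qaly_new - qaly_ref
    # Dominant: no more costly and no less effective, and strictly better on one.
    if d_cost <= 0 and d_qaly >= 0 and (d_cost < 0 or d_qaly > 0):
        return "dominant", None
    # Dominated: no less costly and no more effective, and strictly worse on one.
    if d_cost >= 0 and d_qaly <= 0 and (d_cost > 0 or d_qaly < 0):
        return "dominated", None
    if d_qaly == 0:
        return "equivalent", None
    # Otherwise report incremental cost per QALY.
    return "icer", d_cost / d_qaly


# Hypothetical per-person totals, for illustration only.
print(compare(cost_new=900.0, qaly_new=5.2, cost_ref=1000.0, qaly_ref=5.0))
print(compare(cost_new=1200.0, qaly_new=5.1, cost_ref=1000.0, qaly_ref=5.0))
```

The first call illustrates dominance (cheaper and more effective); the second yields a cost per QALY gained that would be compared with a willingness-to-pay threshold.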

The authors reported disaggregated results for both strategies. Results were reported on the number of people expected to be diagnosed with lung cancer, QALYs yielded and treatment costs, all by stage. The QALYs yielded appeared to be high, with stage IV expected to yield more QALYs than stage III, and both of these higher than with stage II. There were modest QALY gains by strategy and by stage, with stage I having the greatest expected gain of 2.4 QALYs, favouring radiographer reporting. Radiographer reporting yielded more overall QALYs, but it was unclear from the inputs reported why QALYs yielded by radiologist reporting were greater for stage II and stage IV. Radiographer reporting diagnostic and treatment costs were lower than radiologist reporting costs. Overall results showed that radiographer reporting of CXR dominated radiologist reporting. The PSA results showed that radiographer reporting continued to dominate radiologist reporting in 98% of the iterations. Based on the model structure, its inputs and assumptions, the authors concluded that the use of trained radiographers to report CXR is cost-effective and an increased role for radiographers in the diagnostic pathway would be beneficial to meet hospital waiting time targets for lung cancer diagnosis.
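A statement such as 'dominated in 98% of the iterations' comes from repeating the model with inputs drawn from their sampling distributions and counting how often one strategy is both cheaper and more effective. The sketch below illustrates that mechanism only. Because the authors did not report their distribution parameters, every Beta parameter, unit cost and QALY weight here is hypothetical, and the output is not an attempt to reproduce the published figure.

```python
import random


def expected_outcomes(prev, sens, spec, read_cost, n=1000):
    """Total cost and QALYs for one strategy under one set of sampled inputs."""
    # Hypothetical unit costs and QALY weights, for illustration only.
    ct_cost = 100.0
    cost_first, cost_late = 8000.0, 10000.0   # treatment cost if detected first time vs later
    qaly_first, qaly_late = 3.0, 2.0          # QALYs if detected first time vs later
    cancers = n * prev
    tp = cancers * sens
    fn = cancers - tp
    fp = (n - cancers) * (1 - spec)
    cost = n * read_cost + (tp + fp) * ct_cost + tp * cost_first + fn * cost_late
    qalys = tp * qaly_first + fn * qaly_late  # no QoL impact assumed for false positives
    return cost, qalys


def share_dominant(iterations=5000, seed=1):
    """Proportion of PSA iterations in which the new strategy dominates."""
    rng = random.Random(seed)
    dominant = 0
    for _ in range(iterations):
        prev = rng.betavariate(50, 950)        # hypothetical prevalence distribution
        spec = rng.betavariate(90, 10)         # shared by both strategies in this sketch
        sens_ref = rng.betavariate(80, 20)     # hypothetical radiologist sensitivity
        sens_new = rng.betavariate(82, 18)     # hypothetical radiographer sensitivity
        cost_ref, qaly_ref = expected_outcomes(prev, sens_ref, spec, read_cost=3.0)
        cost_new, qaly_new = expected_outcomes(prev, sens_new, spec, read_cost=2.0)
        if cost_new < cost_ref and qaly_new > qaly_ref:
            dominant += 1
    return dominant / iterations


print(f"Share of iterations in which the new strategy dominates: {share_dominant():.1%}")
```

Fixing the seed makes the run repeatable; a fuller analysis would also sample stage distribution and stage progression, which are omitted here to keep the sketch short.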

Foley et al.35

Foley et al. 35 conducted a retrospective review of trust audit data (Royal United Hospitals Bath NHS Foundation Trust) to analyse the use of CXR as the first-line investigation in primary care patients with suspected lung cancer. In total, 1488 of the 16,495 primary care referrals received between 1 June 2018 and 31 May 2019 were for suspected lung cancer. CXRs were coded by result as CX1, normal but a CT scan is recommended to exclude malignancy; CX2, alternative diagnosis; or CX3, suspicious for cancer. Outcomes for the study cohort were stratified by CX code and included patient characteristics, number undergoing CT scan, number of lung cancers diagnosed, stage at diagnosis, time from initial CXR to CT scan, time from CT request to CT scan, time to diagnosis, treatment strategy taken and mortality (over an average follow-up period of 322 days in the total cohort). Table 5 shows the results of key outcomes.

| Outcome | CXR report code CX1 (normal but CT scan recommended to exclude malignancy) | CXR report code CX2 (alternative diagnosis) | CXR report code CX3 (suspicious for malignancy) | Statistical significance (p < 0.05) |
|---|---|---|---|---|
| Total number of CXRs (%) | 1056 (75) | 288 (20) | 72 (5) | – |
| Number referred for CT (%) | 107 (10) | 107 (37) | 66 (92) | – |
| Number of lung cancers diagnosed (%) | 10 (1) | 29 (10) | 49 (68) | – |
| Number diagnosed at advanced stage IIIc/IV (%) | 5 (50) | 11 (38) | 28 (57) | p = 0.26 |
| Number of days from CXR to CTa | 34.6 | 19.6 | 1.9 | p < 0.001 |
| Number of days from CXR to diagnosisa | 89.7 | 65.3 | 30.2 | p < 0.001 |
| Number receiving treatment with curative intent (%) | 4 (40) | 14 (48) | 13 (27) | p = 0.14 |
| Number of deaths in follow-up period (all-cause mortality) (%) | 5 (50) | 10 (34.5) | 27 (55.1) | p = 0.42 |

Based on these findings, the authors concluded that there was significant delay in lung cancer diagnosis in patients who received a CX1 ‘normal’ initial CXR result (p < 0.001) and the majority of patients with a ‘normal’ or an ‘abnormal’ CXR are diagnosed at an advanced disease stage (p = 0.26) with no difference in survival outcomes based on the CXR findings (p = 0.42). 35

Bradley et al.36

Bradley et al. 36 undertook a retrospective observational study using routinely collected healthcare data from Leeds Teaching Hospitals NHS Trust.

All patients diagnosed with primary lung cancer between January 2008 and December 2015 with a GP-requested CXR in the year before diagnosis were coded based on the result of the earliest CXR in that period. CXR report codes were assigned: (1) suspicion of lung cancer identified/urgent investigation needed; (2) abnormality identified/non-urgent investigation indicated, including diagnoses of pneumonia or consolidation even if repeat imaging was not explicitly suggested; (3) abnormality identified but no further investigation/assessment indicated; and (4) normal CXR, no abnormalities identified.

The sensitivity of CXR was calculated and analyses were performed on time to diagnosis, stage at diagnosis and survival outcomes. Statistical analysis on these outcomes was performed by combining CXR codes 1 and 2 to form a ‘positive’ result group and codes 3 and 4 to form a ‘negative’ result group. However, the authors present numerical outcome data for all codes separately as well as for combined groups. Table 6 shows a summary of the key data by individual codes.

| Outcome | Initial CXR code 1 | Initial CXR code 2 | Initial CXR code 3 | Initial CXR code 4 | Total |
|---|---|---|---|---|---|
| Number of CXRs (%) | 1383 (65) | 370 (17.4) | 230 (10.8) | 146 (6.9) | 2129 |
| Time from CXR to diagnosis, median days (IQR) | 36 (23–63) | 93 (55–154) | 211 (181–296) | 193 (87–279) | 51 (29–107) |
| Survival from CXR, median days (IQR) | 313 (126–877) | 400 (163–964) | 408 (238–958) | 420 (214–1117) | 345 (148–920) |
| Stage I/II at diagnosis, n (%) [95% CI] | 397 (28.7) [26.4 to 31.2] | 111 (30) [25.4 to 35.0] | 83 (36.1) [30.0 to 42.7] | 43 (29.5) [22.4 to 37.7] | 634 (29.8) [27.9 to 31.8] |
| Stage III/IV at diagnosis, n (%) [95% CI] | 981 (70.9) [68.4 to 73.3] | 259 (70) [65.0 to 74.5] | 147 (63.9) [57.3 to 70.1] | 103 (70.5) [62.4 to 77.7] | 1490 (70) [68.0 to 71.0] |
| Stage unknown at diagnosis, n (%) [95% CI] | 5 (0.4) | 0 | 0 | 0 | 5 (0.2) |

Data were also presented on the number of people who had further CXRs requested by their GPs, with median time to second CXR and median times to diagnosis from initial CXR. Of 376 patients with an initial CXR that was ‘negative’ (codes 3 and 4), 98 (26.1%) had at least one further CXR. Sensitivity calculated based on initial CXR (codes 1 and 2) was 82.3% (95% CI 80.6% to 84.1%).
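Because every patient in this cohort had a lung cancer diagnosis, the sensitivity of the initial CXR is simply the share of CXRs coded as 'positive' (codes 1 and 2). The short check below reproduces that arithmetic from the counts in Table 6; the variable names are illustrative.

```python
# Number of initial CXRs by report code, from Table 6.
codes = {1: 1383, 2: 370, 3: 230, 4: 146}

total = sum(codes.values())
positive = codes[1] + codes[2]   # suspicion of cancer or abnormality needing investigation
negative = codes[3] + codes[4]   # no further investigation indicated, or normal

sensitivity = positive / total
repeat_share = 98 / negative     # 98 patients had at least one further CXR

print(f"total={total}, positive={positive}, negative={negative}")
print(f"sensitivity={sensitivity:.1%}, further CXR after negative={repeat_share:.1%}")
# total=2129, positive=1753, negative=376
# sensitivity=82.3%, further CXR after negative=26.1%
```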

The authors concluded that the sensitivity results supported previous systematic review findings. 41 Although those with a ‘positive’ initial CXR finding had a median of 43 days to diagnosis compared with 204 days for those with ‘negative’ findings, no direct association was found in this study between time to diagnosis and either stage at diagnosis or survival.

Woznitza et al. (2018)

Woznitza et al. 37 conducted a 4-month feasibility study (November 2016 to March 2017) at a single radiology department at an acute general hospital (Homerton University Hospital, London). The primary outcome was to establish the feasibility of an immediate reporting service for CXRs. Comparison between CXR referrals from general practice that received an immediate and routine report was made to determine the number of lung cancers diagnosed, time to diagnosis, time to CT and number of urgent referrals to respiratory medicine.

From 1687 CXRs of people referred from general practice over the 26-week study period, 36 patients (22 immediate CXR report, 14 routine CXR report) had a CT scan arranged by radiology following a suspicious CXR. This equated to less than one additional unplanned patient per week (mean 0.8 scans per week) accommodated by the CT department. Time from CXR to CT was shorter in the immediate report group, with a mean of 0.9 (SD 2.3) days, than in the routine reporting group, at 10.6 (SD 4.5) days (p < 0.0001). No apparent difference was found in time to discussion at the multidisciplinary team (MDT) meeting.

The study also gave a detailed description of the radiology department demographics and processes for reporting and referral. The results of all CXRs included in the study and pathways taken were explained, including 17 patients with a normal or non-cancer diagnosis at CXR who were subsequently diagnosed with lung cancer.

The authors concluded that it was feasible to introduce a radiographer-led immediate CXR reporting service, but a definitive study assessing outcomes would be needed to determine whether this would have an impact on patient mortality and morbidity.

Woznitza et al. (2022)

Woznitza et al. 38 conducted a prospective, block-randomised controlled trial (RadioX) at a single acute district general hospital in London (Homerton University Hospital). People referred for CXR from primary care attended sessions that were pre-randomised to either immediate radiographer (IR) reporting or standard radiographer (SR) reporting within 24 hours. Those who received SR reporting were the control group, as this was usual practice in the department. In the intervention group, CXRs were reported while the patient was still in the department, with all patients with CXR findings suggestive of lung cancer offered a same-day CT scan. Those who declined were scheduled for another day.

In total, 8682 CXRs were performed between 21 June 2017 and 4 August 2018, 4096 (47.2%) for IR and 4586 (52.8%) for SR. Lung cancer was diagnosed in 49 patients. Table 7 shows the summary outcome data from trial reporting arms.

| Outcome | Immediate reporting | Standard reporting |
|---|---|---|
| Total patients | 4096 | 4586 |
| Previous CXR, n (%) | | |
| Yes | 2297 (56.1) | 2583 (56.3) |
| No | 1799 (43.9) | 2003 (43.7) |
| Previous CT, n (%) | | |
| Yes | 307 (7.5) | 334 (7.3) |
| No | 3789 (92.5) | 4252 (92.7) |
| Lung cancer suspected, n (%) | | |
| Yes | 1326 (32.4) | 1511 (33.0) |
| No | 2757 (67.3) | 3062 (66.7) |
| Known | 13 (0.3) | 13 (0.3) |
| Total cancers diagnosed, n (%) | 27 (0.7) | 22 (0.5) |
| 2WW referral | | |
| Yes | 150 (3.7) | 189 (4.1) |
| No | 3946 (96.3) | 4397 (95.9) |
| Time from CXR to diagnosis (days) | | |
| Median (IQR) | 32 (19, 70) | 63 (29, 78)a |
| Mean (SD) | 47.2 (35.8) | 81.6 (78.5) |
| Time from CXR to discharge (days) (no cancer diagnosis) | | |
| Median (IQR) | 30 (17, 64) | 27 (14, 61) |
| Mean (SD) | 54.4 (60.4) | 50.3 (63.7) |

The authors stated that a health economic evaluation based on their RadioX trial was to be reported separately. 38 The corresponding author was contacted and confirmed that analysis of the data was still under way and they were unable to share any usable information at that time (Nicholas Woznitza, consultant radiographer, University College Hospital London NHS Foundation Trust, 29 November 2022, personal communication).

Dwyer-Hemmings and Fairhead39

The authors performed a systematic review of evidence to inform the diagnostic accuracy of CXR to detect lung malignancy in symptomatic patients presenting to primary care. Nine databases were searched, and data from included studies were extracted to calculate the sensitivity and specificity of CXR where possible. Risk of bias was assessed using the QUADAS-2 tool, with analyses conducted by means of random-effects meta-analyses. Ten studies were included in this review. Summary sensitivity of five studies (those not at high risk of bias) was 81% (95% CI 74% to 87%). Specificity of five studies was 68% (95% CI 49% to 87%). The authors concluded that there was good evidence regarding sensitivity because they included only those studies that were of similar design and were not at high risk of bias. By contrast, they considered the evidence on specificity to be weaker due to differences in study designs and variability in reported outcomes. 39
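To illustrate what a random-effects pooled sensitivity involves, the sketch below applies the DerSimonian-Laird estimator to logit-transformed sensitivities. Both the choice of estimator and the transformation are assumptions made for this example, since the summary above says only that random-effects meta-analyses were used. The per-study counts are hypothetical and are not the data from the review.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def inv_logit(x):
    return 1 / (1 + math.exp(-x))


def dersimonian_laird(effects, variances):
    """Random-effects pooled estimate, its standard error and tau squared."""
    weights = [1 / v for v in variances]
    fixed = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Cochran's Q measures heterogeneity around the fixed-effect estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    re_weights = [1 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    return pooled, math.sqrt(1 / sum(re_weights)), tau2


# Hypothetical (true positives, false negatives) for five studies.
studies = [(45, 10), (80, 22), (30, 6), (120, 25), (60, 18)]
effects = [logit(tp / (tp + fn)) for tp, fn in studies]
variances = [1 / tp + 1 / fn for tp, fn in studies]  # approximate variance of a logit

pooled, se, tau2 = dersimonian_laird(effects, variances)
low, high = inv_logit(pooled - 1.96 * se), inv_logit(pooled + 1.96 * se)
print(f"pooled sensitivity {inv_logit(pooled):.1%} ({low:.1%} to {high:.1%}), tau^2 = {tau2:.3f}")
```

Diagnostic accuracy reviews often use bivariate models that pool sensitivity and specificity jointly; the univariate approach here is a simplification chosen to keep the example short.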

Clinical pathway for representation in model

The clinical pathway illustrated in Figure 5 was agreed in the NICE final scope.9

The development of this pathway was supported by existing guidelines on the diagnostic and care pathway10,11,16,17 and collaboration with specialist committee members (SCMs) during the scoping process. Subsequent feedback from SCMs and clinical experts generally supported this as a representation of the multiple pathways patients may follow after primary care referral for a CXR. All emphasised that this was an aspirational pathway, with many alternative routes both in and out through to diagnosis, and that it was not particularly accurate in several trusts.

When critical pathway events were mapped based on the early stages of the National Optimal Lung Cancer Pathway17 using large cancer databases from two trusts, 83 individual combinations of early pathway events in 1018 suspected lung cancer patients were found.42 This highlights the complexity of defining a realistic structure on which to base the clinical component of an economic model. All models are, by their nature, simplifications of real practice. The aim is to represent the clinical pathway in sufficient detail to capture the main elements, while producing a model that is feasible to construct.

The availability of evidence to inform model parameters also influences the model structure. Where evidence is severely limited, a simpler model reduces the number of assumptions needed to achieve an executable model and so reduces the uncertainty introduced.

Two studies identified in the literature search35,36 reported data for parameters that had the potential to support multiple differential pathways after CXR results, rather than just a ‘lung cancer suspected’ and a ‘no lung cancer suspected’ route through the model. However, there were limitations in how the data reported from both sources might be applied.

Overall, the EAG determined that the clinical pathway developed during the NICE scoping process was a realistic representation on which to base the conceptual model. Although concerns remained around the feasibility of parameterising the model due to a lack of available evidence and differences in outcome reporting, five differential pathways (A, B, C, D and E) were formulated with feedback from clinical experts and reference to the clinical guidelines.10,11,16,17

Figure 6 shows where each pathway is situated, and each pathway is described in detail below.

FIGURE 6.

Clinical pathways for conceptual model for people referred from primary care.

Pathway A

When CXR findings are suggestive of malignancy, a referral for urgent CT on the suspected lung cancer pathway is made. Practice varies across trusts, but in many institutions highly suspicious CXR findings are flagged to secondary care lung cancer teams, who request the CT scan and await referral to the suspected lung cancer clinic from the GP. Once reported, CT scans are triaged by lung cancer team consultants. If they suggest probable lung cancer, an urgent lung cancer team appointment is arranged with appropriate tests, for example spirometry and planned biopsy (endobronchial ultrasound) (Alberto Alonso, consultant radiologist, Manchester Hospital NHS Foundation Trust, 19 November 2022, personal communication), for histopathological staging and to inform treatment options at the fast-track lung cancer clinic.16

If the CT scan appears reasonably normal (despite CXR appearances), then the lung cancer team writes to the patient to inform them of their relatively normal CT appearances and to arrange a non-urgent general respiratory clinic (not lung cancer clinic) outpatient appointment (Vidan Masani, consultant respiratory physician and lead for lung cancer, Royal United Hospitals Bath NHS Foundation Trust, 1 February 2023, personal communication). This also includes those who require investigation and management of pulmonary nodules in accordance with British Thoracic Society guidelines.16,17

Pathways B and C

If CXR results are reported as ‘abnormal’ where findings are indeterminate or suggestive of an alternative diagnosis, people may follow pathway B or C. Here, findings are not sufficient to warrant further urgent investigation, but additional clinical enquiry is required.

Pathway B is taken when an alternative diagnosis is suspected and referral is made by the GP to a secondary care outpatient clinic with relevant expertise for that clinical finding, for example a non-urgent respiratory clinic.

Pathway C is followed when a 6-week repeat CXR is advised in the report. The referral for repeat CXR is made by the GP, and a radiologist or reporting radiographer compares the new image with the previous one. If the abnormality is resolved, then no further action or follow-up is required. If abnormal and suspicious, these cases are ‘red-alerted’ or ‘upgraded’ and the lung cancer team and referring GP are notified as per pathway A. The 6-week repeat CXR is used in cases where there is need to exclude infection, try a course of treatment and reassess before considering CT (Jonathan Rodrigues, consultant radiologist, Royal United Hospitals Bath NHS Foundation Trust, 13 November 2022, personal communication).

Pathways D and E

Where CXRs are reported as ‘normal’, findings may be unremarkable, but several trusts (including Royal United Hospitals Bath NHS Foundation Trust and Manchester University NHS Foundation Trust) include the following automatic caveat in the report: ‘please note that a normal CXR does not exclude malignancy. If there is still a strong suspicion of malignancy (weight loss/unresolved cough/significant or unresolved haemoptysis), referral for a CT scan is advised’. This is to counter false reassurance in a case where clinical suspicion remains high.

People with normal results may, therefore, proceed along pathway D, where their GP considers them at high risk of lung cancer despite nothing being detected on CXR and refers the patient for CT scan and specialist review.

Pathway E is taken when the GP has no further concerns, no further diagnostic testing is requested and management is continued under primary care.
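The routing described for pathways A to E can be summarised as a small decision function. The following is a minimal illustrative sketch only, not the EAG's model: the names `CxrReport`, `assign_pathway` and `gp_high_suspicion` are hypothetical labels introduced here, and the report categories are a simplification of the descriptions above.

```python
from enum import Enum


class CxrReport(Enum):
    """Hypothetical report categories, simplified from the pathway descriptions."""
    SUSPECTED_CANCER = "findings suggestive of malignancy"
    ABNORMAL_ALTERNATIVE_DIAGNOSIS = "abnormal, alternative diagnosis suspected"
    ABNORMAL_REPEAT_CXR = "abnormal, 6-week repeat CXR advised"
    NORMAL = "normal"


def assign_pathway(report: CxrReport, gp_high_suspicion: bool = False) -> str:
    """Return the initial pathway letter (A to E) for a CXR report.

    gp_high_suspicion only matters for normal reports: it represents the GP
    still considering the person at high risk despite a normal CXR.
    """
    if report is CxrReport.SUSPECTED_CANCER:
        return "A"  # urgent CT on the suspected lung cancer pathway
    if report is CxrReport.ABNORMAL_ALTERNATIVE_DIAGNOSIS:
        return "B"  # GP referral to a relevant secondary care outpatient clinic
    if report is CxrReport.ABNORMAL_REPEAT_CXR:
        return "C"  # GP refers for a 6-week repeat CXR
    # Normal report: CT and specialist review (D) or primary care management (E)
    return "D" if gp_high_suspicion else "E"


if __name__ == "__main__":
    for report in CxrReport:
        print(report.value, "->", assign_pathway(report, gp_high_suspicion=True))
```

The sketch assigns only the initial pathway. It does not represent movement between pathways, such as a pathway C case being upgraded to pathway A after the repeat CXR.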

Discussion of inputs to inform model structure

To formulate a final conceptual model, an iterative process was used. This included identifying relevant intermediate and long-term outcome measures for parameterisation and selecting a structure that is most appropriate to support their inclusion.

This section describes the available evidence, gaps in evidence and recommendations for appropriate evidence generation for a range of outcome measures. In this report, these will be classified into intermediate measures (short- to medium-term clinical outcomes encountered during the diagnostic process), long-term clinical outcomes and cost inputs.

Intermediate measures for consideration

- Accuracy in detecting lung cancer

No eligible studies were found in the clinical effectiveness review, but one of the six ineligible studies summarised examined the test accuracy of AI software in detecting lung cancer on CXR.22 In this UK study of Red Dot (Behold.ai), sensitivity was significantly higher for the interpretation of CXR with AI (77%, 95% CI 75% to 80%) than without AI (66%, 95% CI 59% to 71%). No difference was observed for specificity (with AI: 75%, 71% to 77%; without AI: 81%, 77% to 85%) (Table 3).

A systematic review and meta-analysis identified in the cost-effectiveness literature review39 provided evidence on the test accuracy of CXR to detect lung cancer in symptomatic patients presenting to primary care. In this population, specifically relevant to this review, summary sensitivity of 81% (95% CI 74% to 87%) was calculated from five studies not at high risk of bias. Summary specificity of 68% (95% CI 49% to 87%) was also obtained from five studies, but evidence was weaker due to their heterogeneous design and variation in reported outcomes.39 Findings of this systematic review were supported by two other studies from the cost-effectiveness search.36,41 A retrospective database study of all primary care referrals for CXR conducted by Bradley et al. reported sensitivity of 82.3% (95% CI 80.6% to 84.1%). This was calculated based on an initial CXR coding system that included results suggestive of lung cancer and those with an abnormality identified but no urgent investigation indicated as a ‘positive’ result for CXRs.

- Turnaround time (TAT; time from start of image review to radiology report)

Turnaround time was identified in the final scope9 as a potentially useful outcome measure in this assessment. From a modelling perspective, the review time occurs on the pathway before the diagnostic decision outcome. It would be captured in a model as a resource use parameter used to calculate the cost per image, where the radiology specialist’s rate of pay is multiplied by the length of time taken to review the image.

A reduction in cost may be expected where TAT is decreased. However, the direction and magnitude of this relationship are highly uncertain given the lack of evidence found on TAT with AI software assistance and the variation of estimates given for TAT without AI from the literature and clinical expert feedback.

Estimated TAT for CXR varies considerably. As discussed in ‘What are the practical implications of adjunct artificial intelligence software to detect lung cancer on chest X-rays?’, two of the ineligible studies from the clinical search24,25 presented information on reading times. No statistically significant differences were observed in average image reading times between readers with and readers without AI: Siemens Healthineers AI-Rad Companion 22.5 (SD 40.3) seconds with AI, 24.3 (SD 27.4) seconds without AI, per image;24 Lunit INSIGHT 171 (SD 33.8) minutes with AI, 211.25 (SD 38.4) minutes without AI, to read 434 CXRs,25 which equates to an average of 23.6 seconds per image with AI, and 29.2 seconds without AI (calculated by the EAG).
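The per-image averages attributed to the EAG above follow from dividing the total session time by the number of CXRs read. A minimal sketch of that arithmetic is given below; the function name `seconds_per_image` is a hypothetical label, and the inputs are the session totals quoted in the text.

```python
def seconds_per_image(total_minutes: float, n_images: int) -> float:
    """Convert a total reading time in minutes into mean seconds per image."""
    if n_images <= 0:
        raise ValueError("n_images must be a positive integer")
    return total_minutes * 60.0 / n_images


# Session totals quoted in the text: 434 CXRs read with and without AI
with_ai = seconds_per_image(171, 434)
without_ai = seconds_per_image(211.25, 434)

print(f"With AI:    {with_ai:.1f} seconds per image")     # 23.6
print(f"Without AI: {without_ai:.1f} seconds per image")  # 29.2
```

These are simple means over the whole session, so they carry no information about the variation in reading time between individual images.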

No information was given on the methods used for timing. With regard to context, timings were recorded during specified reading sessions under study conditions, so how this would translate to reading times in clinical practice is unknown.

The Royal College of Radiologists (RCR) describes comprehensively the methods used to derive its guidance on reporting output figures.43 The expected average is 80 reports for plain CXRs per hour (45 seconds per image), over a minimum 6-month period, per in-hours, on-site, non-acute 4-hour reporting session in the NHS.43

Specialist committee members advised average reading times of < 1 to 5 minutes, with an assumption of 2 minutes used in the economic evaluation by Bajre et al.32
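To illustrate how sensitive the cost per image is to the reading-time assumption, the calculation described earlier (rate of pay multiplied by reading time) can be sketched for the reading times quoted above. The hourly pay figure below is an arbitrary placeholder chosen only to make the arithmetic visible; it is not taken from this report or from any pay scale, and the names used are hypothetical.

```python
# ASSUMPTION: placeholder hourly pay for a radiology specialist (GBP).
# This value is illustrative only and is not taken from the report.
HYPOTHETICAL_HOURLY_PAY_GBP = 60.0


def cost_per_image(hourly_pay: float, seconds_per_image: float) -> float:
    """Staff cost of reading one image: pay rate multiplied by reading time."""
    return hourly_pay * seconds_per_image / 3600.0


# Reading times quoted in the text, expressed in seconds per image
reading_times_s = {
    "RCR expected output (80 reports per hour)": 3600 / 80,
    "Bajre et al. assumption (2 minutes)": 120,
    "Upper SCM estimate (5 minutes)": 300,
}

costs = {
    label: cost_per_image(HYPOTHETICAL_HOURLY_PAY_GBP, seconds)
    for label, seconds in reading_times_s.items()
}

for label, cost in costs.items():
    print(f"{label}: GBP {cost:.2f} per image")
```

Whatever pay rate is used, the cost scales linearly with reading time, so the spread between the 45-second and 5-minute estimates translates into a more than sixfold difference in the staff cost per image.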

Many factors have an impact on reporting output and are well outlined by the RCR.43 Therefore, focusing on this as an outcome measure, without appreciation of real-world context, is of little use unless a reduction in TAT can be shown to have an impact on efficiency of workflow over a sustained period in the NHS environment. This needs to be considered when designing future studies.

Another anticipated benefit of reducing TAT is an increase in the output of radiology specialists performing CXR reviews, thereby addressing the high demand for image reading and inherent limitations on workforce capacity. This is a potential value driver of AI software but would not be captured within the conceptual cost-effectiveness model. The potential value here would be recognised at a system level rather than at the patient level represented in the conceptual model.

- Technical failure rate

Technical failure rate was identified in the final scope9 as a potential measure of interest. None of the six studies summarised in the clinical effectiveness review reported any information on technical failure rate in CXR.

- Impact of software output on clinical decision-making

Impact of software on clinical decision-making is the primary measure of importance as the final CXR result is determined by a radiology specialist whether or not AI software is used. Even if the diagnostic accuracy of AI software alone is higher, the outcomes are mediated by human input. The results then determine which clinical pathway a patient will proceed down, affecting the quantity and type of further tests.

No evidence was found on this. The only data from which it could be extrapolated came from two studies22,24 that provided information on hypothetical referrals to CT. No statistically significant differences were observed in the number of people who might be recommended for CT follow-up between readers with and readers without AI: Red Dot (Behold.ai) 144 out of 400 (36%) (95% CI 119 to 172) potential referrals with AI and 117 out of 400 (29%) (95% CI 93 to 147) potential referrals without AI;22 Lunit INSIGHT 96 out of 351 (27%, 95% CI 22.8% to 32.3%, calculated by the EAG) patients with AI and 80 out of 351 (23%, 95% CI 18.5% to 27.5%, calculated by the EAG) patients without AI.24 It is important to note that these are hypothetical referrals, as CXRs were retrospectively selected from databases in these studies. We found no evidence of the impact of AI on the readers’ behaviour in real-world clinical practice.
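A confidence interval for a referral proportion such as 96 out of 351 can be approximated as sketched below. The report does not state which interval method the EAG used, so the Wilson score interval is assumed here purely for illustration; its bounds may differ slightly in the final decimal place from the figures quoted above. The function name `wilson_interval` is a hypothetical label.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.959964) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (default approx. 95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("require n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2.0 * n)) / denom
    half_width = z * math.sqrt(p * (1.0 - p) / n + z * z / (4.0 * n * n)) / denom
    return centre - half_width, centre + half_width


# Hypothetical CT referrals quoted in the text (out of 351 patients)
for label, referred in (("With AI", 96), ("Without AI", 80)):
    low, high = wilson_interval(referred, 351)
    print(f"{label}: {referred / 351:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The two intervals overlap substantially, which is consistent with the statement that no statistically significant difference was observed, although overlap of intervals is not itself a formal test.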

- Number of people referred for a CT scan